Abstract

Most organizational researchers understand the detrimental effects of measurement errors in testing relationships among latent variables and hence adopt structural equation modeling (SEM) to control for measurement errors. Nonetheless, many of them revert to regression-based approaches, such as moderated multiple regression (MMR), when testing for moderating and other nonlinear effects. The predominance of MMR is likely due to the limited evidence showing the superiority of latent interaction approaches over regression-based approaches combined with the previous complicated procedures for testing latent interactions. In this teaching note, we first briefly explain the latent moderated structural equations (LMS) approach, which estimates latent interaction effects while controlling for measurement errors. Then we explain the reliability-corrected single-indicator LMS (RCSLMS) approach to testing latent interactions with summated scales and correcting for measurement errors, yielding results which approximate those from LMS. Next, we report simulation results illustrating that LMS and RCSLMS outperform MMR in terms of accuracy of point estimates and confidence intervals for interaction effects under various conditions. Then, we show how LMS and RCSLMS can be implemented with Mplus, providing an example-based tutorial to demonstrate a 4-step procedure for testing a range of latent interactions, as well as the decisions at each step. Finally, we conclude with answers to some frequently asked questions when testing latent interactions. As supplementary files to support researchers, we provide a narrated PowerPoint presentation, all Mplus syntax and output files, data sets for numerical examples, and Excel files for conducting the loglikelihood values difference test and plotting the latent interaction effects.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

The assessment of interactions between variables is critical to theoretical and empirical advances in business and psychological research. An interaction indicates that the relationship between two variables is either contingent upon or varies across levels of another variable, with such interactions commonly proposed in business and psychological theories. While most researchers report results of their measurement models for latent variables based on multiple-item surveys used to collect data, and employ structural equation modeling (SEM) with latent variables to control for measurement errors when estimating path coefficients in models without latent interaction, the majority of them revert to regression analysis (such as moderated multiple regression (MMR)) or path analysis with observed variables (such as PROCESS; Hayes, 2013) when estimating interaction effects. In their recent review, Cortina, Markell-Goldstein, Green, and Chang (2019) confirmed this, showing that two-thirds of tests for interaction effects adopted non-latent approaches, predominantly MMR.

One major concern with using MMR to test for interaction effects is the detrimental influence of measures with low reliability (Aguinis, 1995). While most researchers pay attention to the reliability of their variables, few have paid attention to the reliability of the interaction term that can be much lower than the reliability of the two variables forming the interaction (Aguinis, Edwards, & Bradley, 2017; Busemeyer & Jones, 1983), and which results in larger bias in the estimated interaction effect. Consequently, researchers have focused on developing methods for estimating interaction effects using SEM (for example, Kenny & Judd, 1984; Marsh, Wen, & Hau, 2004). Prior proposed SEM methods, such as the product-indicator approach (PI; Kenny & Judd, 1984), require creating a latent interaction term from the indicators and imposing sophisticated nonlinear constraints. Such complexity in creating and testing interaction effects with SEM has likely limited its adoption. Contrasting this, the interaction term in MMR is simply computed from the product of observed variables.

Recently, Cortina et al. (2019) presented a flowchart with a list of estimation approaches for testing latent interaction effects, starting with the most accurate estimation method and moving down to less accurate ones. Notably, they place the PI approach and the latent moderated structural equations (LMS) approach (Klein & Moosbrugger, 2000) at the top of the list. While conceptually easier to understand, PI has a number of drawbacks including the complexity of creating numerous nonlinear constraints among latent variables and indicator residuals; violation of the normal distribution assumption for latent variables and their indicators resulting in incorrect estimates; and lack of information on how to use PI with more complex models, such as models with multiple interactions or a three-way interaction.

On the other hand, recent developments for implementing LMS in Mplus allow users to estimate latent interaction effects easily with relatively simple syntax, even when the number of indicators of the latent variables forming the latent interaction is large, and for complicated models such as those with a curvilinear three-way interaction. Several simulation studies have demonstrated the superiority of LMS over PI in terms of higher accuracy of estimates, smaller standard errors, and higher power (Kelava et al., 2011; Klein & Moosbrugger, 2000). Yet a limitation of LMS is that, because it requires a more complex estimation method, a model with multiple latent interactions and a large sample size may require a long time to run or computing resources that go beyond ordinary personal computers. In such cases, reliability-corrected single-indicator LMS (RCSLMS) provides an approximation approach that adopts LMS with summated scales and correction of measurement errors (Cheung & Lau, 2017).

Despite a number of studies explaining the benefits of LMS when estimating latent interaction effects (for example, Kelava et al., 2011; Klein & Moosbrugger, 2000), and a number of teaching notes elaborating the procedures (for example, Cheung & Lau, 2017; Maslowsky, Jager, & Hemken, 2015; Muthén, 2012; Sardeshmukh & Vandenberg, 2017), only 6% of the analyses in Cortina et al.’s (2019) review applied LMS. Moreover, MMR remains the prevalent approach for assessing interaction effects in nonexperimental studies (Cortina et al., 2019) in spite of evidence that various methodological artifacts negatively affect MMR when testing for interaction effects (for example, Aguinis, 1995; Aguinis et al., 2017).

To address this situation, the aim of this teaching note is to motivate and enable researchers to employ LMS when investigating latent interaction effects, including RCSLMS when appropriate. The remaining paper is divided into four sections. In the first section, we briefly explain the conceptual basis of LMS and RCSLMS approaches to estimating latent interactions since one possible reason for the low utilization of LMS is that it is more difficult to understand than the PI approach (Cortina et al., 2019). In the second section, we establish the superiority of LMS and RCSLMS compared with MMR by providing the results of two simulation studies. In the third section, we demonstrate a 4-step procedure to facilitate the easy implementation of LMS for testing latent interaction effects using Mplus. Notwithstanding the advantages of LMS, a further possible reason for its low adoption rate may be the practical concerns raised in previous LMS-oriented teaching notes, such as challenges in computing time, estimating the model fit and the effect size of the interaction term, and obtaining standardized coefficients (Maslowsky et al., 2015; Sardeshmukh & Vandenberg, 2017). More specifically, while Maslowsky et al. (2015) provided a tutorial on applying LMS, we demonstrate how the three limitations they raised can be resolved, and show how moderating effects can be better probed by the Johnson-Neyman approach (Johnson & Neyman, 1936). Building upon the work of Dawson (2014) on moderation in management research, we also demonstrate LMS for simple slope tests, examining three-way interaction and other curvilinear effects. In the final section, we provide answers to some frequently asked questions regarding the application of LMS, including tips that increase the computing capacity and lower the computing requirements of LMS.

Early SEM Approaches to Estimating Interaction Effects

Estimating interaction effects using MMR assumes that observed variables are measured without errors (Kline, 2016).Footnote 1 As such, an interaction term constructed from observed variables includes not only the true score of the latent interaction, but also random measurement errors. Because SEM isolates indicator residuals from latent constructs (Bollen, 1989), estimating interaction effects from the latent constructs controls for measurement errors; this substantially reduces any bias in estimates caused by measurement errors.

Researchers have developed various approaches for estimating the effects of interactions between latent variables in SEM. Early work on PI, referred to as the constrained approach, proposed using the products of all possible pairs of indicators of two latent variables as indicators of the latent interaction term (Algina & Moulder, 2001; Bollen, 1989, pp. 405–406; Jöreskog & Yang, 1996; Kenny & Judd, 1984). While this PI approach is straightforward conceptually, Cortina et al. (2019) highlight a major limitation in that it requires researchers to define numerous nonlinear constraints for the loadings, intercepts, and residual variances of the products of indicators. Typically, when one product indicator is added, five additional concomitant constraints are required (Jöreskog & Yang, 1996). This process rapidly becomes unwieldy when the two latent variables have more than two or three indicators. Hence, Cortina et al. (2019) recommend creating two to three parcels for each latent variable before creating the product indicators and provide R-code accordingly. Nonetheless, the results are unstable as different combinations of the indicators in parcels will yield different results (Hall, Snell, & Foust, 1999).

Unlike the traditional constrained approach, which imposes complicated nonlinear constraints, the unconstrained approach introduced by Marsh et al. (2004) imposes no nonlinear constraints. However, the unconstrained approach also raises concerns, one being that the indicators for the latent interaction are created with matched pair products, implying that different pairings of the indicators of the two latent variables may produce different results. In addition, it is unclear how to form the matched pair product indicators when the two latent variables have different numbers of indicators. While the two-stage least square (2SLS) method proposed by Bollen (1995) and Bollen and Paxton (1998) avoids employing complicated nonlinear constraints, as only one of the indicators of the outcome variable is used, the results may vary when different indicators are used. A second issue with PI, together with its variations, is that the maximum likelihood (ML) estimator assumes that latent variables and their indicators are normally distributed. However, when nonlinear effects exist, the endogenous latent variable (dependent variable) and its indicators are not normally distributed. As a result, PI may provide not only incorrect chi-square values but also biased standard errors and significance tests for interaction effects (Bollen, 1989, p. 406; Cortina, Chen, & Dunlap, 2001; Kelava et al., 2011; Klein & Moosbrugger, 2000; Sardeshmukh & Vandenberg, 2017). A final issue with PI is that, to date, there is no explanation of how it should be implemented for more complicated models, including those with multiple moderators, three-way interaction, moderated quadratic effects, and polynomial regression.

Cortina et al. (2001) provided a comprehensive review of the problems of various early approaches to modeling latent interactions, while Cortina et al. (2019) and Sardeshmukh and Vandenberg (2017) provided brief reviews on PI and more recent approaches. Interested readers are referred to these reviews outlining the issues inherent to various approaches.

Conceptual Basis of Latent Moderated Structural Equations

Investigations of interaction effects stem from the same conceptual foundation. Equation 1 below shows the general equation that specifies the relationships among two exogenous latent variables (independent variables) X and Z, and one endogenous latent variable (dependent variable) Y with both interaction and quadratic terms (Kelava et al., 2011, p. 467).Footnote 2

where α is the intercept of the regression equation, γ1 and γ2 are the main effects of X and Z respectively, ω12 is the interaction effect of XZ, ω11 and ω22 are the quadratic effects of X and Z respectively, and ζ is the residual of the regression equation. An important development in testing for latent interactions was the introduction of LMS by Klein and Moosbrugger (2000). LMS estimates the regression coefficient ωij of all freely estimated two-way interactions of exogenous latent variables with the following equation (Klein & Moosbrugger, 2000, p. 460):

where η is the latent endogenous variable, α is the intercept, ξ are the latent exogenous variables, Γ are the main effects of ξ on η, Ω are the nonlinear effects of ξ on η, and ζ is the residual of η.

Hence, Eq. 1 can be expressed as follows (Kelava et al., 2011, p. 467):

While PI treats the product term XZ and the quadratic terms X 2 and Z 2 in Eq. 1 as latent variables and therefore requires the creation of product indicators to “measure” these latent variables, Eq. 3 shows that LMS uses matrix multiplication of X and Z to estimate the interaction and quadratic effects on Y without creating latent variables to represent the product term XZ and quadratic terms X 2 and Z 2. Thus, LMS does not require the creation of product indicators to represent the latent interaction and quadratic terms, which avoids the need to impose complicated nonlinear constraints. This differentiates LMS as superior, contrasting with PI which uses product indicators and nonlinear constraints.

Technical explanations of how LMS estimates model parameters are beyond the scope of this teaching note. Interested readers are referred to Kelava et al. (2011), Klein and Moosbrugger (2000), and Sardeshmukh and Vandenberg (2017) for more details. In short, LMS employs distribution analytic methods to resolve the problems encountered by PI that result from a nonnormally distributed endogenous latent variable and its indicators, and uses an iterative estimation procedure—the expectation-maximization (EM; Dempster, Laird, & Rubin, 1977) algorithm—to provide an ML estimation of model parameters (Kelava et al., 2011). In LMS, only error variables and latent independent variables, but not latent dependent variables, are assumed to be normally distributed. Previous simulation investigations have provided evidence that LMS offers unbiased standard errors and efficient parameter estimates even for data that deviate slightly from a normal distribution (Klein & Moosbrugger, 2000).

Reliability-Corrected Single-Indicator Latent Moderated Structural Equations

While it is advantageous to estimate latent interaction effects with LMS, there are various conditions (which will be explained later) under which LMS is not ideal. Under such conditions, and if all indicators of the construct are measured with the same response scale, we recommend adopting reliability-corrected single-indicator latent moderated structural equations (RCSLMS) to test latent interactions (Cheung & Lau, 2017). RCSLMS is a modified version of LMS in which the values of all indicators for a latent variable are averaged to form a scale score such that each latent variable in the model has only one indicator. The factor loading between the latent variable and the single indicator is then fixed to 1. Instead of assuming no measurement errors as in MMR, or estimating the residual variances as in LMS, RCSLMS fixes the residual variance of the single indicator to (1 − reliability) times the variance of the observed scale score (Bollen, 1989; Hayduk, 1987). One convenient approach is to use Cronbach’s alpha as a measure of reliability. For example, if the scale has Cronbach’s alpha of 0.8 and the variance of the average score is 1.5, the residual variance for this latent variable is fixed to (1 − 0.8)(1.5) = 0.3. Finally, LMS is employed to estimate the interaction effects of latent variables with single indicators. RCSLMS has been shown to provide estimates that are good approximations to those produced by LMS with all indicators and superior to those provided by regression-based approaches (Cheung & Lau, 2017; Su, Zhang, Liu, & Tay, 2019).

Two Simulations Comparing MMR, LMS, and RCSLMS

In this section, we report the results of two simulations that compared the performance of MMR, LMS, and RCSLMS for estimating latent interaction effects. Similar simulations have been conducted in specific contexts including moderated-mediation (Cheung & Lau, 2017) and polynomial regression (Su et al., 2019). The two simulations in this teaching note examined the impact of various factors on the performance of these three approaches to test for interaction effects in a more general context, in terms of the accuracy and precision of estimated parameters, confidence interval (CI) coverage rates, type I error rates, and statistical power. These simulations were conducted based on the interaction model in Eq. 4, which has been employed in previous LMS simulation studies (Klein & Moosbrugger, 2000; Klein & Muthén, 2007).

where α is the intercept, γ1 is the main effect of X, γ2 is the main effect of Z, ω12 is the interaction effect of XZ, and ζ is the residual of Y.

Simulation 1—Effects of Model Parameters on Performance of MMR, LMS, and RCSLMS

This first simulation examined accuracy and precision of estimates, coverage, and completion rates comparing MMR, LMS, and RCSLMS. Four indicators for each of the three latent variables, X, Z, and Y were created. The levels of model parameters were chosen to approximate models observed in empirical research. In the first simulation, three different sample sizes (N = 100, 200, and 500) and three levels of correlation between X and Z (rXZ = 0.1, 0.3, and 0.5) were manipulated to represent small, medium, and large correlations between X and Z (Cohen, 1988, pp. 79–80). Four levels of interaction effects (ω12 = 0, 0.12, 0.3, and 0.42) were used. Note that the condition of ω12 = 0 was used to examine the type I error rate for the interaction effect. Cohen (1988, pp. 413–414) defined small, medium, and large effect sizes for regression as f 2 = 0.02, 0.15, and 0.35 respectively, which can be translated to ω12 = 0.12, 0.3, and 0.42, respectively when rXZ = 0.3. Cohen’s f 2 for the same interaction effects are larger when rXZ = 0.5 and are smaller when rXZ = 0.1. Since the objective of the first simulation was to examine the accuracy of parameter estimates, we manipulated ω12 instead of f 2. The average effect sizes across all rXZ conditions were f 2 = 0, 0.02, 0.16, and 0.36. Three levels of residual variance δ of each indicator were set based on Cronbach’s alpha of the aggregated score of each latent variable (α = 0.7, 0.8, and 0.9). Finally, three patterns of factor loadings for indicators of Z were adopted (z1 = 1, z2 = 1, z3 = 1, z4 = 1; z1 = 1, z2 = 1, z3 = 0.8, z4 = 1.2; z1 = 1, z2 = 1, z3 = 0.8, z4 = 0.8) to examine the effect of unequal factor loadings on the performance of the three approaches. As a result, the standardized factor loadings, which vary as functions of unstandardized factor loadings and size of residual variance, covered a wide range of values from 0.49 to 0.91 in the population parameters. Factor loadings for all indicators for X and Y were set at 1. In total, there were 324 population conditions. Similar to Klein and Moosbrugger (2000) and Klein and Muthén (2007), the values of the main effects were set to γ1 = 0.4 and γ2 = 0.2. The residual variances ζ for Y were fixed based on the interaction effects. All intercepts in the model, including the indicator intercepts and intercept for Y, were set to 0. All latent variables and indicators were set to be normally distributed with variance equal to 1. Five hundred data sets were simulated with Mplus 8.4 (Muthén & Muthén, 1998-2014) for each of the 324 conditions, giving a total of 162,000 simulated data sets.

Each simulated data set was analyzed with the three approaches for testing interaction effects. For MMR, the values of the four indicators for each latent variable were averaged to form the aggregate score for the analysis. For LMS, the raw data (indicator values) were analyzed with the latent variable interaction function in Mplus 8.4. For RCSLMS, the aggregated scores (instead of indicator values) were analyzed with the latent variable interaction function in Mplus 8.4. Also for RCSLMS, the R-package was used to calculate the variance and Cronbach’s alpha of each variable and to create the Mplus input file in which the residual variance of each variable was fixed at (1 − reliability) times the variance of the aggregated score.

When comparing the performance of each approach, we first examined the average estimated interaction effects across 500 simulated data sets for each population condition, which indicates the accuracy of the estimated interaction effect. Then absolute bias was calculated as the average of the absolute difference between the point estimate and the population value (Ledgerwood & Shrout, 2011). Because the standard error does not reflect the deviation of the point estimate from the population value, the absolute bias is a better representation of the precision of the estimate produced by each approach. The coverage of the 95% CI was then examined. The coverage was calculated as the proportion of the 500 simulated samples for which the population value was included in the 95% CI, thus serving as a measure of CI accuracy. It is expected that 95% of the simulated samples have estimated 95% CI that contain the true value. Finally, one frequently raised concern inherent in LMS is the higher chance of nonconvergence when the sample size is small because a large number of parameters are estimated (Sardeshmukh & Vandenberg, 2017). Therefore, we examined the completion rates of the simulated samples.

Results—Simulation 1 on the Effects of Model Parameters Footnote 3

Accuracy of Estimates

The average estimated interaction effects of each approach are shown in Table 1. On average, LMS produced very accurate estimates irrespective of the population conditions. RCSLMS also produced very accurate estimates, with average estimated interaction effects deviating from population values by less than 1% even under the unequal factor loading conditions. While MMR produced very accurate estimates when there was no interaction effect in the population, on average, the interaction effects were underestimated by -21.62%. The attenuation for MMR was more serious when reliability was lower, with the interaction effects underestimated by -33.33% when α = 0.7. For all three approaches, increased sample size did not result in more accurate estimates.

Precision of Estimates

The average absolute biases are presented in Table 2. Precision is the combined effect of accuracy and variation of estimates. In general, the estimated interaction effect was more precise when both reliability and sample size increased because the standard error of estimates was smaller. Because LMS and RCSLMS include measurement errors in the calculation of standard error of estimates, LMS had the largest standard error of estimates, closely followed by RCSLMS, whereas MMR resulted in the smallest standard error of estimates (as presented in Table S3 in the supplementary files). When there was no interaction effect or a small interaction effect, MMR produced the most precise estimates because of the smaller standard errors and only small attenuation of estimated parameters. With medium and large interaction effects, both LMS and RCSLMS produced more precise estimates, whereas the attenuation of estimated parameters in MMR became more severe.

Coverage of 95% Confidence Interval

LMS resulted in the most accurate 95% CI, with an average coverage of 94.30%. This was closely followed by RCSLMS with an average coverage of 92.8%. On the other hand, the attenuation of estimated parameters and the smaller standard error of estimates produced by MMR together resulted in inaccurate CIs, with an average coverage of only 69.7%. Since increased sample size led to smaller standard errors but not more accurate estimates by MMR, the coverage of CI was lowered when sample size was larger. This was most obvious when N = 200 or 500, reliability was at 0.7, and the interaction effect was medium or large. In such conditions, the average coverage of the 95% CI produced by MMR was only 14.0%. This is of particular concern because an increasing number of studies, in particular moderated-mediation studies, report CIs generated from MMR or related regression approaches (such as PROCESS; Hayes, 2013) which are likely to be inaccurate. Coverages of CI are presented in Table S5 in the supplementary files.

Completion Rates

In the first simulation, MMR completed estimation for all 162,000 simulated data sets. Both LMS and RCSLMS also had very high completion rates, probably because there are only twelve indicators and one latent interaction in the model. More specifically, out of the 162,000 simulated data sets, LMS completed all but 14 cases, and RCSLMS completed all but six cases. All incomplete estimations were under conditions with N = 100.

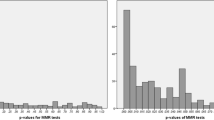

Simulation 2—Comparing Type I Error Rates and Power of MMR, LMS, and RCSLMS

We conducted a second simulation to examine type I error rates and power. In this simulation, we only used the condition of equal factor loadings, and all population parameters were the same as those in the first simulation except for the sample size and the magnitude of interaction effects. We manipulated six levels of sample size (N = 100, 150, 200, 300, 400, and 500) and four levels of f 2 (0, 0.02, 0.15, and 0.35). We manipulated f 2 instead of ω12 such that readers can find the power and required sample size for small, medium, and large effect sizes from the results. The second simulation included 216 population conditions, giving a total of 108,000 simulated data sets. We calculated the proportion of the 500 simulated samples in which the 95% CI did not include zero. This proportion represented a type I error when the population interaction effect was zero and represented power when the population interaction effects were at f 2 = 0.02, 0.15, and 0.35.

Results—Simulation 2 Comparing Type I Error Rates and Power

Type I Error and Power

Table 3 shows the results of simulation 2. Columns on the left show the type I error rates of the three approaches when the population interaction effect f 2 = 0. The average type I error rates for MMR, RCSLMS, and LMS across all samples were 0.070, 0.057, and 0.070 respectively. The type I error rates for MMR were higher than 0.080 when N = 100 and 150 (0.085 and 0.083 respectively), and for RCSLMS when N = 100 (0.091). It should be noted that the type I error rates for LMS were above 0.08 when there was high reliability (α = 0.9), high rXZ (0.5), and N = 200 or smaller. None of the approaches had adequate power (0.8) for testing a small interaction effect except under the most favorable conditions in which N = 400 or higher, rXZ = 0.5, and α = 0.9. Power increased with larger sample size and higher reliability. For the most part, all three approaches produced adequate power for testing medium and large interaction effects with N = 150 or higher when α = 0.8 or higher.

When to Adopt MMR, LMS, or RCSLMS

Given the simulation results reported above, and from previous studies (Cheung & Lau, 2017; Su et al., 2019), we provide some general rules for choosing between MMR, LMS, and RCSLMS when testing interaction effects, which are summarized in Fig. 1. In general, similar to the advice by Su et al. (2019), we recommend using LMS whenever possible, which is also in line with Cortina et al.’s (2019) recommendation to test interactions with a fully latent approach. The two key advantages of LMS are that it provides the best estimates of both the size of measurement errors and the relationships between the indicators and latent variables by allowing factor loadings to vary across indicators. In turn, LMS produces the most accurate estimated parameters and CIs. LMS also allows higher model complexities. One shortcoming of LMS indicated by the simulations is that, when N = 100, it yields large standard errors, large absolute differences, and low power. Nevertheless, this shortcoming can be resolved by increasing the sample size. If LMS is not feasible, then RCSLMS is usually the next best option because it provides more accurate estimates and better CI coverage than MMR.

However, MMR is the only choice when both X and Z are measured directly without errors, because both LMS and RCSLMS require at least one of the variables to be latent. There may be instances where X and Z are measured directly but the researcher suspects there are measurement errors, yet Cronbach’s alpha cannot be estimated from the sample. The best solution is to use RCSLMS with reliability estimated from other methods, such as split-half and test-retest reliabilities. If reliability cannot be estimated from the sample, RCSLMS can be used with reliability taken from previous studies. In such cases, measurement errors should be fixed with slightly overestimated reliability (by + 0.05) to avoid over-adjustment of measurement errors (Savalei, 2019).Footnote 4 If either X or Z is a categorical variable and the other is a continuous latent variable, multiple group analysis should be adopted and interaction effects tested by comparing the effects on Y using a direct comparison method (Lau & Cheung, 2012).

If either or both X and Z are latent, then LMS should be used when feasible because it provides greater modeling flexibility. When one of the variables is observed, that variable should be modeled as a single indicator latent variable by fixing the factor loading to 1 and residual variance to 0 (or 0.01 when Mplus gives an error message since residual variance at 0 may sometimes cause problems in estimating the standard errors). This converts the exogenous observed variable into a latent variable with the benefit that, by default, Mplus estimates all the covariances among exogenous latent variables, whereas this is not the case for observed variables, for which covariances would have to be coded. A further benefit of converting the observed variable into a single indicator latent variable is that, by default, Mplus centers the variable.

LMS may not be feasible when:

-

(a)

The sample size is smaller than the number of estimated parameters. Models in empirical studies frequently involve many indicators for multiple variables, but sample size may be limited, such as in experiments or studies at group or organization levels.

-

(b)

The model does not converge, usually due to model complexities and small sample size.

-

(c)

Complex models that require greater computing capacities than found in most personal computers. The larger the number of latent variables involved in one or more latent interactions, the greater the number of numerical integration points required. Mplus will provide a warning message when computing capacities are exceeded, which is likely to occur when five or more latent variables are involved in multiple interactions.

-

(d)

Models require excessive amounts of time for estimation. This is normally the case when bootstrapping is required, such as in moderated-mediation models or in testing simple slope effects. For example, when the number of indicators is large or the sample size is large, it may take about 1 minute to estimate a model. Multiplying 1 minute by 2000 bootstrap samples gives over 30 hours required for estimation, indicating the excessive amount of time for testing a model.

-

(e)

When Mplus is not available. Currently, among the most commonly used SEM software programs, LMS is only available with Mplus. The R-based lavaan package does not provide LMS estimation. Although the R-based nlsem package (Umbach, Naumann, Brandt, & Kelava, 2017) supports fitting nonlinear structural equation models with LMS, results from nlsem are very sensitive to the starting values used, and hence, even the factor loadings may not be comparable with results from lavaan or Mplus. When using nlsem, the recommendation is to run the model 100 times with different starting values and then average the results (private communication with N. Umbach). Hence, until this problem with nlsem has been resolved, it is not recommended for implementing LMS. When the full version of Mplus is not available, one may consider estimating the model with RCSLMS using the Mplus demo version, which can be downloaded at no cost from the Mplus homepage (https://www.statmodel.com/demo.shtml). However, one limitation of estimating latent interactions with RCSLMS using the Mplus demo version is that only six variables are allowed in the model, although that should be enough for many empirical studies. If there are more than six variables in the model, then the full version of Mplus should be acquired to test the model.

Compared to LMS, RCSLMS involves fewer parameter estimates. This simpler model yields several benefits, namely that more complex models can be estimated when the sample size is small, and that the elapsed time for estimating the model is much shorter compared with the LMS approach. Several simulation studies have shown RCSLMS performs equally well or even slightly better than LMS (Cheung & Lau, 2017; Su et al., 2019) when there are equal factor loadings across indicators, and our simulation results show that RCSLMS performs equally well in conditions with slightly unequal factor loadings. However, we also find that RCSLMS results in inflated type I error when sample size is small and rXZ is high. In summary, although RCSLMS mostly performs well, it is not appropriate under the following conditions:

-

(a)

When sample size is 100 or smaller.

-

(b)

Response scales for the indicators of a variable are not the same.

-

(c)

The latent variable is not unidimensional, such as in higher-order constructs, in which case LMS should be used because it can model latent interactions with higher-order constructs.

-

(d)

When the same or similar indicators are used for measuring both X and Z, in which case measurement errors for the same indicators are likely to be correlated across X and Z; this can only be modeled in LMS. An example of this is congruence research, which usually uses similar measures for person values (X) and organization values (Z).

These four limitations for RCSLMS also apply to MMR and PI.

The simulation results presented in Table 3 also suggest the need to adopt specific criteria for reliability. Despite accounting for measurement errors, LMS and RCSLMS have lower power when reliability is low. Although the manipulations in our simulations were based on Cronbach’s alpha, the values of construct reliability are usually very similar to the values of Cronbach’s alpha (Hair, Black, Babin, & Anderson, 2009). Hence, we recommend a minimum level of construct reliability at 0.7 for LMS and a minimum level of Cronbach’s alpha at 0.7 for RCSLMS. At this level of reliability, there is still adequate power for testing a moderate interaction effect with a sample size between 200 and 300. This recommended level is also suggested by Hair et al. (2009, p. 619) as denoting good reliability.

Numerical Examples Demonstrating LMS and RCSLMS Implementation

In this section, we demonstrate a 4-step procedure for estimating latent interaction effects with LMS using Mplus 8.4 on simulated data developed from Study 1 in Du, Derks, Bakker, and Lu (2018). A summary of the 4-step procedure, including the decisions and actions in each step, is provided in Fig. 2. Most interaction effects can be tested by estimating one SEM model in each step, giving rise to Model 1, Model 2, Model 3, and Model 4. Hence, for Example A, the models are Model A1 to Model A4. For more complex interactions, a further model is added in Step 3 (Model 3b) to estimate the effect size. Mplus output files for all models, as well as data sets used in the numerical examples, are available in the supplementary files. The Mplus syntax can be found under “Input Instructions” at the beginning of each output file. Key results data are highlighted in each output file. Note that the supplementary files include also a narrated PowerPoint presentation working through the examples presented below.

The original study by Du et al. (2018) examined the moderating effects of homesickness on the relationships between job resources and both task performance and safety behavior using MMR. They also hypothesized the moderating effect of homesickness was moderated by emotional stability and openness to experience. For simplicity of exposition, we demonstrate the model with only task performance as the dependent variable, as well as the interactions among job resources, homesickness, and emotional stability. In the first example, we demonstrate a model testing the two-way interaction between job resources and homesickness (Du et al., 2018, p. 102 – Step 3). Then we illustrate the models for testing three-way interaction (Du et al., 2018, p. 102 – Step 6), followed by testing for quadratic effects and moderated quadratic effects.

The original data included responses from a two-wave survey of 442 employees in a Chinese manufacturing company. Job resources were measured using Karasek’s (1985) three-item feedback scale and Van Veldhoven, de Jonge, Broersen, Kompier, and Meijman’s (2002) three-item social support scale. Homesickness was measured with the 20-item Utrecht Homesickness Scale (Stroebe, van Vliet, Hewstone, & Wills, 2002). The six-item scale for measuring emotional stability and the six-item scale for measuring openness to experience were both from Judge, Rodell, Klinger, Simon, and Crawford (2013). Task performance was measured at time 2 with the three-item scale from Goodman and Svyantek (1999). We included only one control variable, job demands, measured using the three-item scale from Peterson et al. (1995). Except for emotional stability and openness to experience, which were measured on 7-point response scales, all other variables were measured on 5-point response scales. Interested readers are referred to Du et al. (2018, pp. 99–100) for the conceptual model, sample, and measures. The data set (Example1.dat in the supplementary files) was simulated based on the reported descriptive statistics, correlations, and results of estimated effects with N = 422 (Du et al., 2018, pp. 101–102). LMS works only on raw data but not summary statistics (means and covariance matrix).

Example A: Two-Way Interactions (Models A1, A2, A3, and A4)

Step 1: Measurement Model Estimation (Model 1)

As in all other SEM analyses, the first step is conducting a confirmatory factor analysis (CFA) by estimating the measurement model; this assesses the quality of measures by examining their convergent and discriminant validity. We assessed the fit of the six-factor measurement model (Model A1), in which the 44 observed indicators loaded on six defined factors. Indicators for the dependent variable are likely nonnormally distributed because of the interaction effects; hence, we recommend and used the maximum likelihood with robust standard error (MLR) estimator due to its higher robustness to nonnormality. In the supplementary Mplus files, the indicators for job resources (JOBRES) are denoted as JobRes1 − JobRes6; those for homesickness (HOMES) are denoted as Homes1 − Homes20; those for emotional stability (EMOSTAB) are denoted as EmoStab1 − EmoStab6; those for openness (OPEN) are denoted as Open1 − Open6; and those for task performance (TASKP) are denoted as TaskP1 − TaskP3. The indicators for the control variable, job demands (JOBD), are denoted as JobD1 − JobD3. To set the scale for latent variables, the factor loading of the first indicator for each latent variable is set to 1, and the means of all latent variables are set to 0, which centers all latent variables (these are the defaults in Mplus). This centering facilitates the interpretation of the main effects when there is a statistically significant interaction effect (Aguinis & Gottfredson, 2010).Footnote 5

For this empirical example, the measurement model generated χ2 = 946.54 (df = 887). Other fit indices—RMSEA = 0.013, CFI = 0.982, and SRMR = 0.040—indicated that the measurement model fit the data well. Unstandardized factor loadings of all indicators were statistically significant (p < 0.01), and standardized factor loadings were all greater than the threshold value of 0.40 suggested by Ford, MacCallum, and Tait (1986). Moreover, the correlation coefficients among all latent variables ranged from -0.382 to 0.463, indicating that they were six distinct variables because the absolute value of all correlation coefficients were significantly lower than 1. Construct reliability of the six variables was estimated using the following formula (Fornell & Larcker, 1981, p. 45):

where ρ is the construct reliability, λi is the standardized factor loading, and Var(εi) is the residual variance of indicator i obtained from the Mplus output. As noted above, construct reliability should be at least 0.70; this criterion was met in our numerical example, with values ranging from 0.70 to 0.86. These results provided evidence for adequate reliability, as well as both convergent validity and discriminant validity. If the measurement model does not provide a good fit to the data, one should review the measurement model for any coding errors, for example, higher-order constructs or omitted correlated errors for the same items across time or across sources. If there is insufficient evidence for the appropriateness of the measures, testing for latent interactions should not proceed further with this data set.

Step 2: SEM Without Latent Interaction (Model 2)

The second step is to estimate the structural equation model with only hypothesized linear effects. The objective of this step is to provide baseline model fit indices to allow a subsequent comparative evaluation of overall model fit for the model with latent interactions. More specifically, because the nonlinear effects of a latent interaction model result in biases to the chi-square value and other fit indices derived from the chi-square value (Kelava et al., 2011), Mplus does not provide conventional fit indices for evaluating the overall model fit when estimating an LMS model. Thus, we followed the procedure described in Muthén (2012) to evaluate model fit of an LMS model. First, we estimated a structural model without the latent interaction term (Model A2), that is, we estimated only the main effects of job resources, homesickness, emotional stability, openness, and job demands on task performance, whereas we excluded from the model the interaction effect between job resources and homesickness on task performance. If Model 2 provides an adequate fit to the data, one may proceed to estimate the structural equation model with the latent interaction (Model A3 in the next step) and examine if inclusion of the latent interaction effect improves model fit significantly.Footnote 6 If Model 2 does not provide adequate fit to the data, one may want to revise the model based on alternative theories, noting that modification of model parameters based on modification indices is exploratory and sample specific, and hence requires a separate sample for confirmation. Since we wanted to compare the model fit of Model 2 against the model with the latent interaction using the MLR estimator, that is, Model 3, we estimated both Model 2 and Model 3 with the MLR estimator. In our example, this structural equation model (Model A2) resulted in χ2 (887) = 946.54, RMSEA = 0.013, CFI = 0.982, and SRMR = 0.040. Hence, we concluded that this structural equation model without the latent interaction fitted the data well. The results showed that only the main effect of job resources (b = 0.287, p < 0.01, β = 0.323) on task performance was statistically significant. Significance tests for regression coefficients should be based on unstandardized coefficients because the estimated standard errors for standardized coefficients may be biased (Cudeck, 1989). All five independent variables together accounted for 17.4% of the variance in task performance.

Step 3: LMS Model Estimation (Model 3)

Because the structural equation model without the latent interaction in Step 2 fitted the data well, we proceeded to the next step which was to estimate the 2-way interaction effect between job resources and homesickness on task performance (Model A3). In Mplus, under the ANALYSIS command, the statement “TYPE = RANDOM;” was added to request estimates of the random slopes, and the statement “ALGORITHM = INTEGRATION;” was added to request the numerical integration for the estimation of interaction effects. The latent interaction term was defined under the MODEL command with the statement “JRxHS | JOBRES xwith HOMES;” where JRxHS denotes the latent interaction between job resources and homesickness.

Model Fit of the LMS Model

We followed the procedure in Muthén (2012) to evaluate the model fit of the LMS model by comparing the model fit of the models with and without the latent interaction term. Although Sardeshmukh and Vandenberg (2017) suggested comparing the Akaike information criterion (AIC) between the two models, Klein and Moosbrugger (2000) and Muthén (2012) recommended comparing the loglikelihood values because the two models are nested, while AIC is more appropriate for comparing nonnested models. While Maslowsky et al. (2015) suggested comparing the scaled loglikelihood values directly, Bryant and Satorra (2012) pointed out that the difference between two scaled loglikelihood values is not chi-square distributed. The proper comparison is a chi-square difference test based on the loglikelihood values and scaling correction factors estimated from the two models both estimated with the MLR estimator (Asparouhov & Muthén, 2013, p. 2; Satorra & Bentler, 2010):

where TRd is the chi-square distributed difference in scaled loglikelihood ratios with degrees of freedom equals to (p1 − p0) and, for the model with latent interaction (Model A3), L1 is the loglikelihood H0 value, c1 is the H0 scaling correction factor for MLR, and p1 is the number of free parameters. Similarly, for the model without latent interaction (Model A2) estimated in Step 2, L0 is the loglikelihood H0 value, c0 is the H0 scaling correction factor for MLR, and p0 is the number of free parameters. In our example, L1 = − 23,453.387, c1 = 0.9767, p1 = 148 (from output of Model A3), L0 = − 23,457.537, c0 = 0.9780, and p0 = 147 (from output of Model A2); therefore, TRd(df = 1) = 10.565 (p = 0.0012) which indicated that the improvement in model fit through inclusion of the latent interaction effect was statistically significant. An Excel spreadsheet (Loglikelihood Values Difference.xlsx) is provided in the supplementary files to facilitate calculation of the difference in scaled loglikelihood values.

Testing an Interaction Effect

The results of Model A3 showed that the regression coefficient of JRxHS on TASKP (task performance), that is, ω12 in Eq. 4, was -0.426 (p < 0.01, β = -0.177). Thus, we conclude that the interaction effect was statistically significant and the effect of job resources on task performance depended on the level of homesickness.

Standardized Coefficients and R 2

Standardized coefficients and R2 for all endogenous variables can be obtained by including “OUTPUT: STDYX;” under the OUTPUT command in the Mplus syntax. Results from Model A3 show that the R2 for task performance was 0.217, that is, all the independent variables in the model together explained 21.7% of variance in task performance.

Effect Size

For a significant interaction, it is valuable to know the effect size of the interaction effect, with Cohen’s (1988) f 2 recommended (Dawson, 2014). The effect size f 2 represents the proportion of residual variance in the dependent variable accounted for by the latent interaction, over and above what is accounted for by the main effects and other control variables. The effect size f 2 of the interaction term can be obtained with the following formula (Cohen, 1988, p. 410; Dawson, 2014, p. 14):

where \( {R}_2^2 \) and \( {R}_1^2 \) are the variance of the dependent variable explained in the models with and without the latent interaction (Model A3 and Model A2), respectively. In our example, \( {R}_2^2 \) = 0.217 and \( {R}_1^2 \) = 0.174. Hence, the change in R2 is 0.043, and thus, inclusion of the latent interaction accounted for an additional 4.3% of the variance in task performance with an effect size f 2 = 0.055.

Step 4: Interpreting the Interaction Effect (Model 4)

The objective of Step 4 is to interpret the latent interaction effect by conducting simple slope tests and probing the latent interaction effects via two different figures. While this step involves post-hoc analyses conducted only if an interaction effect is statistically significant, researchers are encouraged to specify the form of all interactions a priori in their hypotheses, and these tools then “test” the hypothesized forms of interactions (Dawson, 2014). For example, when hypothesizing that the positive relationship between X and Y is stronger when Z is higher, one can further specify a boundary condition that the positive relationship only exists when Z is higher than 3 (on a 7-point scale). An alternative example is to hypothesize a positive relationship between X and Y when Z is higher than 5 (on a 7-point scale) and a negative relationship when Z is lower than 2. Step 4 requires running Model A4, which is a modified version of Model A3 in Step 3, in which one removes the request for standardized outputs, and adds estimation of simple main effects and a request for bootstrapping the results.

Simple Slope Tests

If the latent interaction effect estimated in Step 3 using Model A3 is statistically significant, one can conduct simple slope tests in a new model (Model A4) to examine the statistical significance of the simple main effect of X on Y at various levels of the moderator Z (Aiken & West, 1991). In our example, we examined homesickness moderating the relationship between job resources and task performance. Based on Eq. 4, the relationship between job resources and task performance can be expressed as follows:

By default in Mplus, the means of latent variables are set to zero and the variances of exogenous variables are estimated. The value of one standard deviation of the moderator, in this case homesickness, can be obtained by first labeling the estimated variance of homesickness (varHS) and then creating a new variable (stdHS) for the standard deviation of homesickness and defining that as the square root of the variance of homesickness using the MODEL CONSTRAINT option in Mplus. For example (and as per Model A4),

-

$$ \mathrm{HOMES}\ \left(\mathrm{varHS}\right); $$

-

$$ \mathrm{MODEL}\ \mathrm{CONSTRAINT}: $$

-

$$ \mathrm{NEW}\ \left(\mathrm{stdHS}\right); $$

-

$$ \mathrm{stdHS}=\mathrm{SQRT}\left(\mathrm{varHS}\right); $$

The common method for examining the simple main effects, as suggested by Aiken and West (1991), is to assess these at two levels of the moderator by simply substituting the values of mean plus 1 standard deviation and mean minus 1 standard deviation of homesickness into Eq. 8. Instead, to provide greater detail, we estimate the simple main effects of job resources on task performance at five levels of homesickness as follows: (a) 2 standard deviations below the mean, (b) 1 standard deviation below the mean, (c) at the mean, (d) 1 standard deviation above the mean, and (e) 2 standard deviations above the mean.Footnote 7 Estimating the conditional main effects at five or more levels enables probing of the interaction effects in a figure using the Johnson-Neyman approach as described below. In order to substitute different levels of homesickness into Eq. 8, in the Mplus syntax (Model A4), we first labeled γ1 and ω12 in Eq. 8 as b1 and b3, and then created new variables by using the MODEL CONSTRAINT option. For example:

-

$$ \mathrm{TASKP}\ \mathrm{ON}\ \mathrm{JOBRES}\ \left(\mathrm{b}1\right); $$

-

$$ \mathrm{TASKP}\ \mathrm{ON}\ \mathrm{JRxHS}\ \left(\mathrm{b}3\right); $$

-

$$ \mathrm{MODEL}\ \mathrm{CONSTRAINT}: $$

-

$$ \mathrm{NEW}\ \left(\mathrm{Slope}\_2\mathrm{L}\ \mathrm{Slope}\_\mathrm{L}\ \mathrm{Slope}\_\mathrm{M}\ \mathrm{Slope}\_\mathrm{H}\ \mathrm{Slope}\_2\mathrm{H}\right); $$

-

$$ \mathrm{Slope}\_2\mathrm{L}=\mathrm{b}1+\mathrm{b}3\ast \left(-2\ast \mathrm{stdHS}\right); $$

-

$$ \mathrm{Slope}\_\mathrm{L}=\mathrm{b}1+\mathrm{b}3\ast \left(-1\ast \mathrm{stdHS}\right); $$

-

$$ \mathrm{Slope}\_\mathrm{M}=\mathrm{b}1+\mathrm{b}3\ast \left(0\ast \mathrm{stdHS}\right); $$

-

$$ \mathrm{Slope}\_\mathrm{H}=\mathrm{b}1+\mathrm{b}3\ast \left(1\ast \mathrm{stdHS}\right); $$

-

$$ \mathrm{Slope}\_2\mathrm{H}=\mathrm{b}1+\mathrm{b}3\ast \left(2\ast \mathrm{stdHS}\right); $$

Then a statistical test was conducted for the simple main effect of job resources on task performance at each specified level of homesickness by estimating the CI of each simple main effect using bootstrapping. Since Mplus does not allow for bootstrapping with the MLR estimator, the ML estimator is used instead. Note that both the MLR and ML estimators give the same estimated parameters, and because bootstrapping is used to estimate the standard errors of the estimated parameters, there is no need to adjust the estimated standard errors with the MLR estimator. The ML estimator is specified by including “ESTIMATOR = ML;” under the ANALYSIS command. We suggest generating 2000 bootstrap samples by including “BOOTSTRAP = 2000;” under the ANALYSIS command. Bias-corrected confidence intervals (BCCI) are obtained by including “OUTPUT: CINTERVAL(BCBOOTSTRAP);” under the OUTPUT command. The results from the output file of Model A4 show that when homesickness was one standard deviation below the mean, the effect of job resources on task performance was statistically significant (b = 0.463; p < 0.01; 95% CI [0.257, 0.771]). When homesickness was one standard deviation above the mean, the effect of job resources on task performance was not statistically significant (b = 0.144; p > 0.10; 95% CI [-0.044, 0.348]).

Plotting the Interaction Effect with the Johnson-Neyman Technique

For a moderator which is a continuous variable, such as homesickness, the moderating effect can be displayed graphically using the Johnson-Neyman technique (Johnson & Neyman, 1936) which plots the unstandardized simple main effects (Y-axis) at various levels of the moderator (X-axis). We provide an Excel file in the supplementary files (Johnson Neyman Figure.xlsx), which plots the unstandardized effects of job resources on task performance at various levels of homesickness as per Fig. 3. The entries in the highlighted cells were the estimated standard deviation of homesickness (moderator), the estimated simple main effects, and the corresponding 95% BCCI that can be found under “New/Additional Parameters” of the Mplus output file of Model A4. This Excel file can be adapted by researchers using their own Mplus output values. Draw a horizontal line at the value of zero on the Y-axis; note where this line intercepts with the CI (either the lower limit or the upper limit) and draw a vertical line down to the X-axis. This indicates the boundary between significant and nonsignificant regions. The region where the horizontal line is outside the CI is significant, and the region where the horizontal line is within the CI is nonsignificant. Figure 3 reveals that the higher the level of homesickness, the lower was the positive effect of job resources on task performance. Figure 3 also shows that we have identified a boundary condition for the effect of job resources on task performance. That is, job resources only had a statistically significant positive effect on task performance when the level of homesickness was lower than 0.3 standard deviations above the mean. However, one should interpret the level of the moderator that defines the boundary conditions cautiously because this is sample specific and sample size will affect the width of the CIs and hence the boundary conditions.

Plotting the Standardized Effects at Various Levels of Moderator

While the graph using the Johnson-Neyman technique is superior (Fig. 3) because it provides substantially more information, reviewers may still request the standard graph plotting the simple main effects of the moderator at one standard deviation above and below the mean. Hence, we also plotted the standardized effects of job resources on task performance at homesickness values of one standard deviation above and below the mean to illustrate the nature of the interaction effect (Fig. 4). The standardized coefficients can be obtained from the output of Model A3. The results show that the standardized form of Eq. 8 can be expressed as follows:

where TASKP*, HOMES*, and JOBRES* are the standardized values of TASKP, HOMES, and JOBRES, respectively. We plotted two lines in Fig. 4. The first line was plotted by substituting HOMES* with 1 and JOBRES* with values from − 3 to 3, and the second line was plotted by substituting HOMES* with − 1 and JOBRES* with values from − 3 to 3. To facilitate this, we provide an Excel file (Standardized Effects Figure.xlsx) in the supplementary files that plots the standardized effects in Fig. 4. Researchers can adapt this Excel file by inputting the values from their Mplus output into the relevant highlighted boxes. Note that this graph is more meaningful if the moderator is a dichotomous variable. If the moderator is a continuous variable, one should interpret this graph cautiously because the two levels of moderator chosen may not meaningfully represent the sample (Dawson, 2014).

We next present Examples B to G covering a range of other interactions researchers may wish to investigate.

Example B: Three-Way Interactions (Models B3, B3b, and B4)

Du et al. (2018) also hypothesized that the moderating effect of homesickness was moderated by emotional stability. The test of this hypothesis involves three two-way interactions and one three-way interaction. For this example, the measurement model in Step 1 (Model A1), and the model with only linear effects in Step 2 (Model A2) are the same as those in Example A described above; therefore, we do not repeat these first two steps.

In Step 3, we added to Model A2 the three-way interaction among job resources, homesickness, and emotional stability, as well as the three two-way interactions, to produce Model B3. Since Mplus 8.4 does not provide standardized outputs for three-way interactions yet, the request for standardized outputs on the OUTPUT command was removed. Comparing the loglikelihood values between Model A2 (L0 = − 23,457.537, c0 = 0.9780, and p0 = 147) and Model B3 (L1 = − 23,450.593, c1 = 0.9739, and p1 = 151) resulted in TRd(df = 4) = 16.870 (p < 0.01), which indicated that adding the three-way interaction and three two-way interactions improved the model fit significantly. Results from the output of Model B3 show that the effect of the three-way interaction among job resources, homesickness, and emotional stability on task performance was statistically significant (b = -0.430, p = 0.02). Following Muthén (2012, p. 7), the standardized coefficient can be estimated by dividing the unstandardized coefficient by the standard deviation of the dependent variable and then multiplying it by the standard deviations of all the components of the interaction term. The standard deviations of the latent variables can be obtained from the measurement model in Model A1 and the unstandardized coefficient from Model B3. Thus, the standardized effect of the three-way interaction on task performance equaled to \( \frac{-0.430\ast \sqrt{0.160}\ast \sqrt{0.144}\ast \sqrt{0.765}}{\sqrt{0.126}}=-0.161 \).

Since the R2 for the three-way interaction model is not provided by Mplus, it is estimated by (1 − residual variance of Y/total variance of Y) where residual variance of Y is obtained from Model B3 and total variance of Y from Model A1. Hence, the R2 for task performance in Model B3 is estimated as (\( 1-\frac{0.094}{0.126} \)) = 0.254. In order to estimate the R2 change and effect size Cohen’s f 2 attributable to the three-way interaction, an additional model needs estimating without the three-way interaction effect. This model (Model B3b) has all the linear effects and three two-way interactions; the R2 for task performance in this model is 0.220. The R2 change in task performance attributable to the three-way interaction is (0.254 − 0.220) = 0.034 and Cohen’s f 2 = \( \left(\frac{0.034}{1-0.254}\right) \) = 0.046.

To conduct Step 4, and following Dawson (2014, p. 5), four different moderating effects with different combinations of high and low levels of homesickness and emotional stability on the job resources–task performance relationship were estimated for the simple slope tests for the three-way interaction. The three-way interaction also requires six pairwise comparisons for differences between slopes. Although Dawson (2014, pp. 5–7) provided the standard error for the simple slope tests and the comparisons between slopes, formulae for those standard errors are complicated and the test statistics likely have nonnormal distributions. Hence, we suggest using bootstrapping to conduct the simple slope test and the comparisons between slopes. Model B4 in the supplementary files shows the Mplus syntax for conducting simple slope tests with bootstrapping using LMS. As it took about 5 minutes to run Model B3, we ran Model B4 with just five bootstrap samples (instead of the usual 2000 samples) to estimate the elapsed time. It took 17.25 minutes to run Model B4 and hence it is estimated that running Model B4 with 2000 bootstrap samples may take up to 4 days. Where researchers find this too long to run a model, we recommend RCSLMS as an alternative approach to testing interaction effects and illustrate this in Example C below.

Example C: Three-Way Interaction with RCSLMS (Models C2, C3, C3b, and C4)

Since RCSLMS has much reduced computational demands compared to LMS, RCSLMS is recommended for testing more complex interactions when bootstrapping is required to conduct the simple slope test and the comparisons between slopes. We next demonstrate how to use RCSLMS to test for a three-way interaction. Identical to Step 1 in LMS, Step 1 in RCSLMS is to conduct a CFA by estimating the measurement model with all items to assess measurement quality. That was completed in Model A1 in our numerical example. We estimated all subsequent models in Example C with the Mplus demo version.

Step 2 of the RCSLMS approach was to create a new data set to represent each variable using the simple average of item scores (Example2.dat in the supplementary files). Then the variance and Cronbach’s alpha for each variable were estimated with R. An initial model with only linear effects (Model C2) was estimated to provide baseline fit indices against which to evaluate the fit of models with latent interactions. Thus, Model C2 was created by replacing each latent variable in Model A2 with the simple average score as a single indicator, fixing the factor loading to 1, and the residual variance to (1 − reliability) times the variance of the variable. For example, the simple average of the indicators for job resources was labeled as SJobRes, which had a variance at 0.289 and a reliability at 0.735. Hence, JOBRES was defined as:

Since the variables are modeled as latent variables with single indicators in RCSLMS, the variables will be centered by default in Mplus. All the fit indices for Model C2 indicated the model fitted the data perfectly. However, since the fit indices are not sensitive to missing nonlinear effects (Mooijaart & Satorra, 2009), we examine if the inclusion of the latent interactions can improve model fit using the loglikelihood values difference test.

Step 3 comprises estimating a model (Model C3) in which the three two-way latent interactions and the three-way interaction are added to Model C2, which parallels the LMS process via Model B3. Comparison of the loglikelihood values between Model C3 and Model C2 resulted in TRd(df = 4) = 18.514 (p < 0.001), which indicated that adding the three two-way interactions and the three-way interaction improved the model fit significantly. Results of Model C3 were comparable with those of Model B3. The effects of the interaction between job resources and homesickness, and the three-way interaction among job resources, homesickness, and emotional stability on task performance, were -0.453 (p = 0.001) and -0.436 (p = 0.011), respectively, from Model C3 and were -0.446 (p = 0.008) and -0.430 (p = 0.020), respectively from Model B3. Latent variance of a variable in RCSLMS equals to observed variance minus residual variance, which were all obtained in Step 2. Thus, the standardized three-way interaction effect on task performance equaled to \( \frac{-0.436\ast \sqrt{0.212}\ast \sqrt{0.148}\ast \sqrt{0.710}}{\sqrt{0.161}}=-0.162 \).

The residual variance for task performance in Model C3 was 0.121. Hence, the R2 for task performance in Model C3 is estimated as (\( 1-\frac{0.121}{0.161} \)) = 0.251. As before, estimation of the additional model (Model C3b) with only the linear effects and three two-way interactions, but not the three-way interaction effect, was required to find the R2 change and effect size Cohen’s f 2 attributable to the three-way interaction. R2 for task performance in Model C3b was 0.223. The R2 change in task performance brought by the three-way interaction is (0.251 − 0.223) = 0.028 and Cohen’s f 2 = \( \left(\frac{0.028}{1-0.251}\right) \) = 0.037.

Step 4 required conducting simple slope tests of the three-way interaction and comparisons between slopes with Model C4, which added the bootstrapping function to Model C3 with 2000 bootstrap samples to estimate the CIs. Whereas Model C3 required 47 seconds to run, Model C4 took about 26 hours to run. Results show that the relationships between job resources and task performance were not statistically significant when both homesickness and emotional stability were high (b = -0.116, p > 0.10, 95% BCCI = [-0.435, 0.150]) and when both homesickness and emotional stability were low (b = 0.283, p > 0.05, 95% BCCI = [-0.050, 0.613]). However, the relationships between job resources and task performance were statistically significant when homesickness was high and emotional stability was low (b = 0.218, p < 0.05, 95% BCCI = [0.008, 0.422]), and when homesickness was low and emotional stability was high (b = 0.515, p < 0.01, 95% BCCI = [0.262, 0.780]). Based on Dawson (2014), Jeremy Dawson has provided Excel files on his personal web page for probing two-way and three-way interactions (www.jeremydawson.com/slopes.htm), which are also applicable for results obtained from RCSLMS.

Other Models with More Complex Interactions

In this section, analytical procedures for models with more complex interactions are presented based on LMS. All analyses should go through the 4-step procedure described above. Note that Step1 (measurement model), Step 2 (model with linear effects only), and Step 4 (bootstrapping and probing with figures) remain the same when testing various nonlinear effects; only the models for Step 3 vary and therefore will be briefly discussed, with Examples D to G below representing a range of interactions that researchers may want to investigate. The tests for interactions should be based on theoretically- derived hypotheses and here we posit possible interactions for the sake of demonstration only.

Example D: Quadratic Effect (Model D3)

Besides moderating effects, U-shaped or inverted U-shaped relationships representing quadratic effects are also commonly examined in business and psychology studies (Dawson, 2014, Equation 7). LMS can be used to examine the quadratic effect of a latent variable by simply creating a latent interaction term with the latent variable itself. For example, a latent quadratic term for job resources was defined in Model D3 under the MODEL command with the statement “JRxJR | JOBRES xwith JOBRES;”. Comparing the loglikelihood values between Model D3 and Model A2 gave TRd(df = 1) = 6.985 (p = 0.0082), indicating that adding the latent quadratic effect made a statistically significant improvement to model fit. The results showed that the regression coefficient of JRxJR on TASKP (task performance), that is ω11, was -0.286 (p < 0.05, β = − 0.124), and \( {R}_2^2 \) = 0.212. Comparing these with results of Model A2 showed the latent quadratic effect of job resources accounted for an additional 3.8% of the variance in task performance with an effect size of f 2 = 0.048.

Example E: Moderated Quadratic Effect (Model E3)

The quadratic effect of job resources on task performance might be moderated by homesickness. Thus, both the latent interaction between job resources and homesickness, and that between the quadratic term of job resources and homesickness, were added to Model D3 to produce Model E3 (Dawson, 2014, Equation 8). Comparing the loglikelihood value of Model E3 with that of Model A2 gave TRd(df = 3) = 11.584 (p < 0.01), suggesting that this model fits the data significantly better than the model without latent interaction. However, the moderating effect of homesickness on the curvilinear relationship between job resources and task performance was -0.034 (p = 0.903), which is not statistically significant. The R2 for task performance in Model E3 is estimated as (\( 1-\frac{0.099}{0.126} \)) = 0.214. Calculation of the R2 change in task performance brought by the moderated quadratic effect required estimation of Model E3b that dropped the moderated quadratic effect from Model E3. The R2 for task performance in Model E3b is also 0.214, the same as Model E3, indicating that including the moderated quadratic effect explained no additional variance in task performance.

Example F: Polynomial Regression (Model F3)

A simple extension of the quadratic effect is the polynomial regression model shown in Eq. 1. Su et al. (2019) recently used simulation to show that using LMS and RCSLMS to model polynomial regression when examining congruence is superior to the regression approach that ignores measurement errors. In this polynomial regression model (Model F3), the latent quadratic term of job resources, latent quadratic term of homesickness, and the latent interaction between job resources and homesickness were added to Model A2. Comparing the loglikelihood value of Model F3 with that of Model A2 gave TRd(df = 3) = 13.476 (p = 0.0037), indicating that the inclusion of the latent quadratic terms and the latent interaction improved the model fit significantly. However, the results of Model F3 showed that none of these additional terms had statistically significant effects on task performance. We note a debate between researchers about including quadratic terms. Some researchers (e.g., Cortina, 1993; Edwards, 2009) suggest that whenever an interaction effect is estimated, one should also include the quadratic terms in the model such that the estimated interaction effect will not include spurious effects from omitted quadratic terms. Yet other researchers (e.g., Dawson, 2014) suggest that inclusion of the quadratic terms is only essential when the correlation between X and Z is above 0.5 to provide a more conservative test for the interaction effect. However, Su et al. (2019) found in their simulation that when quadratic effects did not exist in the population, inclusion of the quadratic terms in the model resulted in higher type I error for the quadratic effects and lower power for testing the interaction effects; this was due to attenuation in the estimates of the interaction effects. Hence, joining with other researchers (e.g., Aiken & West, 1991; Cheung, 2009; Harring, Weiss, & Li, 2015; Su et al., 2019), we recommend that the decision as to whether quadratic effects should be included or excluded in a model testing interaction effects should be based on theory and related hypotheses.

Example G: Curvilinear Three-Way Interaction (Model G3)

Finally, the moderating effect of homesickness on the curvilinear (quadratic effect) relationship between job resources and task performance might also be moderated by emotional stability, leading to a curvilinear three-way interaction (Dawson, 2014, Equation 13). This final model, Model G3, combined the three-way interaction and the quadratic effect of job resources, and can be created by inclusion of a second moderator W to the moderated quadratic effect model (Model E3) discussed above. Specifically, the additional linear effect of W, two-way interactions of XW and ZW, three-way interaction of XZW, and the moderated quadratic effects of X2W and X2ZW were added to Model E3. Comparing the loglikelihood value of Model G3 with that of Model A2 gave TRd(df = 8) = 19.758 (p < 0.05), indicating that the model fits the data significantly better than the model with only linear effects. Results of Model G3 showed that the linear three-way interaction (b = -0.402, p < 0.05) had statistically significant effects on task performance. The R2 for task performance in Model G3 is estimated as (\( 1-\frac{0.093}{0.126} \)) = 0.262; comparison of the R2 obtained in Model E3 (which serves as Model G3b) gives an R2 change at 0.048 and Cohen’s f 2 = \( \left(\frac{0.048}{1-0.262}\right) \) = 0.065.

Some Frequently Asked Questions About Modeling Interactions with LMS and RCSLMS

In this final section, we provide answers to questions that we suspect might otherwise puzzle researchers and restrict their ability to implement LMS and RCSLMS appropriately.

What Sample Size Is Required for LMS and RCSLMS to Test Latent Interactions?

The appropriate sample size to test interactions using LMS or RCSLMS depends on the complexity of the model and effect size being tested. At a minimum, the sample size must be larger than the number of estimated parameters; otherwise, the model will be unidentified and no estimated parameters will be provided. Mplus will provide an error message under such circumstances. In this regard, RCSLMS requires a smaller sample size than LMS because fewer parameters are estimated. Simulation results in Table 3 show that, for both LMS and RCSLMS, N = 400 is required to achieve adequate power for testing a small interaction effect and N = 150 is required for testing a medium interaction effect. Although in general N = 100 provides adequate power for testing a large interaction effect for both LMS and RCSLMS, in particular when α is 0.8 or higher, our simulation results also show that using RCSLMS with a small sample size yields inflated type I error rates. Hence, our overall recommendation is to use a minimum sample size of 150 when testing latent interactions.

How Can the Required Computing Resources Be Reduced?

A common criticism of LMS is that it takes a long time to estimate the model parameters (e.g., Kelava et al., 2011; Klein & Moosbrugger, 2000; Sardeshmukh & Vandenberg, 2017). With ongoing improvements in computing speed, this concern should gradually diminish. Among the models we demonstrated, Model G3 is the most complex, containing eight quadratic and interaction terms; the elapsed time for that model was about 16 minutes on a desktop computer with an Intel Core i7-6700T CPU @ 2.80GHz and 16GB RAM. While elapsed time may be acceptable for a single model, combining bootstrapping and complex models with LMS may take a much longer time to run. There are a few tricks one can use to shorten the elapsed time. Our first suggestion is to increase the number of processors for Mplus by specifying “PROCESSORS = 8;” under the ANALYSIS command if the computer has eight core processors. While most modern computers have multiple processors (normally eight), Mplus uses only one processor by default. The above syntax instructs Mplus to use multiple processors, shortening the elapsed time by more than 50%.

The second trick is relevant to highly complex models; for these, use RCSLMS to estimate the parameters instead of a full model with all indicators. Multiple simulation studies (Cheung & Lau, 2017; Su et al., 2019), including those in this teaching note, find that RCSLMS provides similar results to those from LMS. Further, unlike PI, RCSLMS does not have the problem of item parceling that gives different results depending on how the items are combined into parcels.

Finally, LMS adopts numerical integration to approximate the mixture of multivariate normal distributions. The most commonly encountered warning and error messages when running LMS with Mplus are “this model requires a large amount of memory and disk space. It may need a substantial amount of time to complete” and “there is not enough memory space to run Mplus on the current input file.” The higher the number of integration points, the longer elapsed time it will take and the more accurate the results are. While the Mplus default number of integration points is 15, one may try to reduce the elapsed time by using a smaller number of integration points by specifying, for example, “INTEGRATION = 8;” under the ANALYSIS command. Researchers can then gradually increase the number of integration points, although usually the results show minimal change at 8 or more integration points. However, we do not recommend researchers use small numbers of integration points, such as 4 as suggested by Preacher, Zhang, and Zyphur (2016), as doing so will usually lead to nonconvergent solutions or inaccurate estimates.

What Should I Do with Missing Data?