Abstract

Effective treatment of HIV hinges upon maintaining adequate antiretroviral therapy (ART) adherence. Accurate, cost-effective measurement of medication adherence is needed to respond effectively to the HIV pandemic. The visual analogue scale (VAS) appears to be a simple, easy-to-use measure of adherence, but the current literature on its use is mixed. This meta-analysis (1) describes VAS concordance with other measures of medication adherence and with viral load; and (2) examines how research methods moderate the reported strength of the VAS–viral load relationship. Electronic and hand searches of the literature identified 20 studies for inclusion. The VAS showed large associations with most other measures of adherence and a smaller association with viral load. Higher methodological quality was associated with significantly larger VAS–viral load effect sizes. We conclude with recommendations for optimizing VAS use in clinical practice and research design.

Introduction

Antiretroviral therapy (ART) can reduce viral load to undetectable levels in people living with HIV (PLWH), forestalling progression to AIDS (Chan, Wong, & Lee, 2006; Herbst et al., 2009; Palella et al., 1998) and reducing the risk of HIV transmission (Krakower, Jain, & Mayer, 2015; World Health Organization, 2012). Patient behavior forms the foundation of this success and also serves as its rate-limiting step. Suboptimal ART adherence leads to AIDS-related morbidity and increased risk of mortality (Sherr et al., 2010; Bangsberg et al., 2001), creates opportunities for transmission through sexual and drug-injection networks (Friedman et al., 2007; Johnson et al., 2014), and promotes the emergence of drug-resistant viral strains (Assoumou et al., 2013; Ferreira et al., 2013; Sinha et al., 2012). Adequate adherence helps preserve the effectiveness of this class of treatments and protects both individual and public health.

Clinicians try to identify patients with suboptimal medication adherence. In a global healthcare context of limited resources and high patient-to-provider ratios (Park-Wyllie, Kam, & Bayoumi, 2009; World Health Organization, 2006), adherence monitoring methods need to be simple, efficient, and cost-effective. Unannounced pill counts, electronic drug monitoring, pharmacy refill records, and direct measurement of therapeutic drug levels each offer third-party objectivity but carry a heavy financial and person-hour burden; these methods may be unrealistic to scale up in many of the areas where routine adherence monitoring is needed most. Self-report measures are more expedient and cost-effective, but they are subject to recall, reporting, and social desirability biases. Some estimates suggest rates of “adherence inflation” as much as 15 % greater than those obtained with objective measures of adherence (Shi et al., 2010).

The visual analogue scale (VAS) is a type of rating system in which the respondent is presented with a line that visually represents a range of possible ratings or responses to a question. The respondent is instructed to place a mark at a point on the line that represents their rating or response. The VAS line can be presented horizontally or vertically; lines can vary in length, but the accepted standard length of 10 cm is commonly used (Wewers & Lowe, 1990). Labeled endpoints anchor the scale boundaries and can be quantitative (e.g., 1–10, 0–100 %) or qualitative (e.g., best–worst, severe–slight). The VAS typically assists in measuring subjective clinical phenomena (e.g., pain, dizziness) that are otherwise difficult to describe (Torrance, Feeny, & Furlong, 2001; Wewers & Lowe, 1990).

Marking a numbered line appears to be a simple, language-independent task, but there are both conceptual and practical concerns about using the VAS to measure patient medication adherence. In HIV, for example, the VAS is typically presented as a numerical scale of doses taken, anchored by 0 and 100 % at either end of the line. Respondents are then asked to mark the point on the line that represents their adherence over the past 30 days. While simple on its face, this process may require a burdensome level of conceptual abstraction. Researchers conducting cognitive interviews observed that the mathematical manipulation needed to respond to this standard VAS prompt led to more errors than other self-report measures did (Wilson et al., 2014). Additional concerns relate to VAS data management. Despite its pretensions to quantification, the VAS is as prone to over-reporting of adherence as other, more qualitatively framed self-report measures (Wilson, Carter, & Berg, 2009). In most samples, VAS score distributions exhibit a pronounced negative skew, violating the distributional assumptions of parametric tests on this outcome. Transforming the variable to correct the skew can, in turn, complicate interpretation (Cohen et al., 2003).
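To illustrate the skew problem, the sketch below computes moment-based skewness for a set of hypothetical VAS scores and then applies a reflect-and-log transformation. That transformation is one common remedy for negative skew, assumed here purely for illustration; the text does not say which transformation the included studies used. Note how reflection reverses the direction of the scale, which is one way a transformation complicates interpretation.

```python
import math


def sample_skewness(values):
    """Moment-based skewness g1 = m3 / m2**1.5 (negative = long left tail)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    return m3 / m2 ** 1.5


def reflect_log(score, max_score=100):
    """Reflect a 0-100 VAS score, then log-transform it (assumed remedy).

    After reflection, HIGHER transformed values mean LOWER adherence,
    so results must be re-interpreted on a reversed scale."""
    return math.log(max_score + 1 - score)


# Hypothetical VAS adherence scores (percent of doses taken), not study data
vas_scores = [100, 100, 100, 100, 100, 100, 95, 90, 80, 50]
print(round(sample_skewness(vas_scores), 2))
print([round(reflect_log(s), 2) for s in vas_scores])
```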

However, several factors might also incline researchers and clinicians toward using the VAS in medication adherence research and care. Because it yields data on a continuous rating scale, the VAS permits more sophisticated analyses than data from categorical response sets or Likert scales (Treiblmaier & Filzmoser, 2009). With its single-item structure and visual/graphical format, the VAS appears simpler and briefer to administer than a standard HIV medication adherence questionnaire (Feldman et al., 2013; Kerr et al., 2012). These same physical characteristics also make the VAS well suited to the touch screen interface of today’s handheld devices and to the burgeoning field of mHealth (Muessig et al., 2015). Web-based survey design platforms typically offer a VAS option for presenting questionnaire items, and studies have demonstrated the feasibility and acceptability of using patients’ own communication devices for adherence monitoring (Bastawrous & Armstrong, 2013; Brinkel et al., 2014; Furberg et al., 2012). All this makes the VAS a potential option for the next wave of mHealth surveillance and interventions, including those targeting medication adherence. Over the last 10 years, researchers have compared VAS measurement of ART adherence with other standard measurement instruments. The resulting literature is mixed, and how well the VAS measures ART adherence remains unclear. As the emphasis on monitoring and promoting medication adherence continues to grow, there is a pressing need to assess the value of this ostensibly simple and expedient self-report measure.

The present study provides a quantitative review and meta-analysis of the VAS used to measure ART adherence. We seek to address the following research questions: (1) What is the average strength of association between the VAS and other measures of medication adherence? (2) How well do VAS scores predict patient viral load? (3) Do methodological factors of VAS administration and study design influence the strength of the VAS–viral load relationship? We hypothesized a priori that studies with stricter adherence to standards of methodological quality would report greater concordance between VAS and viral load values in their samples.

Methods

Data collection

Multiple strategies were used to identify relevant studies. Two independent researchers conducted Boolean searches of the PubMed/Medline, PsycINFO, and CINAHL electronic databases. Unpublished or “gray” literature was sought through the ProQuest Dissertations and Theses database and through hand searches of recent oral and poster abstracts from the archives of the International AIDS Conference and the International Conference on HIV Treatment and Prevention Adherence (2012–2014). Search terms included permutations of visual analog scale and adherence (e.g., [“VISUAL ANALOG SCALE” OR “VISUAL ANALOGUE SCALE” OR VAS] AND [“ADHERENCE” OR “MEDICATION ADHERENCE”]). Studies meeting the following a priori criteria were included in this review: (1) use of the VAS to measure antiretroviral adherence among PLWH; (2) a comparison of the VAS with at least one other measure, biomarker, or clinical outcome of antiretroviral adherence; and (3) sufficient data to calculate an effect size. Whenever a study met criteria (1) and (2) but did not report sufficient data for effect size calculation, we contacted the corresponding author to request the necessary information.

Coding of studies

Using a standardized, pilot-tested coding form (available upon request), two reviewers independently abstracted study data, including general information (e.g., study location, year of data collection), participant characteristics (e.g., age, gender, race/ethnicity), design elements, and the comparison measures used in addition to the VAS. Coders showed an acceptable level of agreement (agreement rate: 93.75 %; weighted κ = 0.817, p < 0.001). Discrepancies were reconciled through discussion.
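The agreement statistics above can be illustrated with Cohen’s weighted kappa. This is a minimal sketch, not the authors’ procedure: the report does not state which weighting scheme was used, so linear disagreement weights are assumed, and the three-level codes and coder ratings below are hypothetical.

```python
def weighted_kappa(rater_a, rater_b, categories):
    """Cohen's weighted kappa with linear disagreement weights (assumed)."""
    k = len(categories)
    idx = {c: i for i, c in enumerate(categories)}
    n = len(rater_a)
    # Joint distribution of paired ratings
    obs = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        obs[idx[a]][idx[b]] += 1.0 / n
    # Marginal distribution of each rater
    row = [sum(obs[i]) for i in range(k)]
    col = [sum(obs[i][j] for i in range(k)) for j in range(k)]
    # Linear weights: 0 for exact agreement, 1 for maximal disagreement
    weight = [[abs(i - j) / (k - 1) for j in range(k)] for i in range(k)]
    observed_dis = sum(weight[i][j] * obs[i][j]
                       for i in range(k) for j in range(k))
    expected_dis = sum(weight[i][j] * row[i] * col[j]
                       for i in range(k) for j in range(k))
    return 1.0 - observed_dis / expected_dis


def percent_agreement(rater_a, rater_b):
    """Share of items on which both coders gave the identical code."""
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


# Hypothetical ordinal codes from two coders
levels = ["-", "+", "++"]
coder_1 = ["-", "+", "++", "+", "++", "-"]
coder_2 = ["-", "+", "++", "++", "++", "-"]
print(percent_agreement(coder_1, coder_2), weighted_kappa(coder_1, coder_2, levels))
```

Linear weights give partial credit to adjacent disagreements (for example “+” vs “++”); quadratic weights are a common alternative and would change the value.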

Risk of bias

To assess the risk of bias within individual studies (Higgins & Green, 2011), we used a methodological quality (MQ) rating form developed by the United Kingdom’s National Institute for Health and Care Excellence (NICE) specifically for appraising “quantitative studies reporting correlations and associations” (NICE, 2012). This 19-item instrument evaluates the extent to which bias may be present by appraising factors such as population sampling (selection bias), methodological/analytical design (measurement bias), and statistical power. Raters score each item with a minus sign (“−”) indicating a high risk of bias, a plus sign (“+”) indicating a moderate risk of bias, or a double plus sign (“++”) indicating a low risk of bias for that parameter. In accordance with these guidelines, we report internal and external validity summary ratings categorically, converting them to numerical scores where necessary to test methodological quality as a moderator.

We also assessed the risk of publication bias favoring studies that demonstrated greater concordance between the VAS and other measures or outcomes, as well as any asymmetry in effect sizes across studies. In addition to plotting a standard funnel plot (Fig. 1), we calculated its statistical equivalent, Begg’s rank correlation between observed effect sizes and their variances (Begg & Mazumdar, 1994). The resulting correlation was not significantly different from zero (z = −0.10; p = 0.7), and the only notable asymmetry in the funnel plot was one highly weighted study that reported null effects (Gionatti et al., 2013). These findings suggest a low risk of publication bias.
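A minimal sketch of the Begg and Mazumdar rank correlation test follows, assuming effect sizes on the Fisher Zr scale with known sampling variances. It uses Kendall’s S with the normal approximation and omits the correction for ties. The function name and inputs are illustrative and do not reproduce the software the authors used.

```python
import math


def begg_rank_test(effects, variances):
    """Begg & Mazumdar (1994) rank correlation test for funnel-plot asymmetry."""
    k = len(effects)
    weights = [1.0 / v for v in variances]
    sum_w = sum(weights)
    # Fixed-effect (inverse-variance weighted) pooled estimate
    pooled = sum(w * t for w, t in zip(weights, effects)) / sum_w
    # Standardized deviates: (t_i - pooled) / sqrt(v_i - 1 / sum_w)
    std_dev = [(t - pooled) / math.sqrt(v - 1.0 / sum_w)
               for t, v in zip(effects, variances)]
    # Kendall's S: concordant minus discordant pairs of (deviate, variance)
    s = 0
    for i in range(k):
        for j in range(i + 1, k):
            sign = (std_dev[i] - std_dev[j]) * (variances[i] - variances[j])
            s += (sign > 0) - (sign < 0)
    tau = s / (k * (k - 1) / 2)
    # Normal approximation to the null distribution of S (no tie correction)
    z = s / math.sqrt(k * (k - 1) * (2 * k + 5) / 18)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return tau, z, p


# Hypothetical Zr values where larger effects come from noisier studies
print(begg_rank_test([0.1, 0.2, 0.3, 0.4], [0.01, 0.02, 0.03, 0.04]))
```

With only a handful of studies the test has low power, so a non-significant result is weak evidence of the absence of publication bias.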

Analytic approach

In this review, effect sizes (ESs) were estimated using the correlation coefficient r (Card, 2012; Cooper & Hedges, 1994; Hedges & Olkin, 1985), which we converted to Fisher’s standardized Z (Zr) before applying inverse variance weighting. For reporting purposes, we back-transformed all results from Zr to the more familiar r coefficient (Field, 2005), with 95 % confidence intervals, on a scale from −1 to 1. Positive values indicate a positive correlation (i.e., stronger concordance between adherence measures), and negative values indicate a negative correlation. An important exception is viral load, which typically correlates negatively with measures of adherence. To maintain consistency across adherence measures and aid comparison and interpretation, we present viral load–VAS correlations with the sign reversed. Confidence intervals that contain zero indicate a correlation that is not statistically significant. Throughout this report we use the descriptors proposed by Cohen (1992) to characterize small (r = 0.1), medium (r = 0.3), and large (r = 0.5) effect sizes. This meta-analysis is reported in accordance with the PRISMA guidelines (Moher et al., 2009).
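The conversion and sign convention described above can be sketched as follows. The helper names, the comparison label, and the hypothetical study values (r = −0.25, n = 200) are illustrative assumptions; the variance 1/(n − 3) is the standard large-sample variance of Zr.

```python
import math


def r_to_z(r):
    """Fisher's r-to-Z transformation: Zr = 0.5 * ln((1 + r) / (1 - r))."""
    return math.atanh(r)


def z_to_r(z):
    """Back-transformation from Zr to r."""
    return math.tanh(z)


def z_variance(n):
    """Sampling variance of Zr for a correlation based on n participants."""
    return 1.0 / (n - 3)


def reported_r(r, comparison):
    """Reverse the sign of VAS-viral load correlations so that positive
    values always mean better concordance ('viral_load' label is hypothetical)."""
    return -r if comparison == "viral_load" else r


def ci_95(z, variance):
    """95 % CI computed on the Zr scale, then back-transformed to r."""
    half_width = 1.96 * math.sqrt(variance)
    return z_to_r(z - half_width), z_to_r(z + half_width)


# Hypothetical study: VAS vs viral load, r = -0.25, n = 200
r = reported_r(-0.25, "viral_load")
z = r_to_z(r)
low, high = ci_95(z, z_variance(200))
print(round(r, 2), round(low, 2), round(high, 2))
```

Building the interval on the Zr scale and back-transforming it is why reported CIs for r are asymmetric around the point estimate.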

Many studies reported using multiple adherence measures (e.g., self-report, pill count, electronic data monitoring) in addition to the VAS. A multivariate approach (Becker et al., 2000; Gleser & Olkin, 1994) was followed for sensitivity analysis when more than five comparisons were available. However, because comparison measures varied widely and few studies used any single type of comparison measure, average ESs were calculated separately by type of comparison measure, so that each study contributed only one outcome to each average ES and the assumption of independence was upheld. When a study reported multiple measurements within a given type of measure, such as several self-reported adherence measures other than the VAS, ESs were averaged within that study before ESs were combined across studies. For analyses, correlation coefficients were standardized using the Fisher Zr transformation (Field, 2001), and inverse variance weights were calculated for each outcome. Final meta-analytic tests of the derived ESs were performed using SPSS version 21 (IBM Corp., 2012) and the R package metafor (Viechtbauer, 2010). Weighted mean ESs were calculated to estimate the overall strength of association between the VAS and each comparison variable. ESs were analyzed under random-effects assumptions, and the magnitude of heterogeneity across ESs was assessed using the I² statistic and its confidence interval (Huedo-Medina et al., 2006; Higgins, Thompson, Deeks, & Altman, 2003). Analyses using the Q statistic in a meta-analytic analog to the one-way ANOVA (Wilson, 2002) assessed whether study characteristics explained variability across studies in the ability of the VAS to predict viral load. Methodological quality ratings have been identified as an under-analyzed element of the data reported in meta-analyses (Johnson et al., 2014).
Derived internal and external validity scores were entered into a series of weighted least squares regression models under random-effects assumptions (Schmidt, Oh, & Hayes, 2009; Wilson, 2002), and the moving constant technique (Johnson & Huedo-Medina, 2011) was used to produce estimates at meaningful levels of the moderators.
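The moving constant technique can be illustrated with a single-moderator weighted least squares meta-regression. This is a simplified sketch under stated assumptions: the authors’ models also controlled for year of data collection and sample size, whereas only one moderator is used here; the between-study variance `tau2` is assumed to have been estimated elsewhere; and the validity scores and their 0/1/2 coding are hypothetical. Re-centering the moderator at a chosen value makes the intercept the predicted effect size at that value.

```python
import math


def wls_meta_regression(zs, vs, x, tau2=0.0, center=0.0):
    """Weighted least squares meta-regression with one moderator.

    Moving constant technique: the moderator is re-centred at `center`,
    so the intercept estimates the effect size (Zr) at that moderator value."""
    w = [1.0 / (v + tau2) for v in vs]  # random-effects weights
    xc = [xi - center for xi in x]
    sum_w = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, xc)) / sum_w
    z_bar = sum(wi * zi for wi, zi in zip(w, zs)) / sum_w
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, xc))
    sxz = sum(wi * (xi - x_bar) * (zi - z_bar) for wi, xi, zi in zip(w, xc, zs))
    slope = sxz / sxx
    intercept = z_bar - slope * x_bar
    # Standard errors treating the weights as known
    se_slope = math.sqrt(1.0 / sxx)
    se_intercept = math.sqrt(1.0 / sum_w + x_bar ** 2 / sxx)
    return intercept, slope, se_intercept, se_slope


# Hypothetical Zr effect sizes, variances, and validity scores (0 = "-", 1 = "+", 2 = "++")
z_values = [0.10, 0.22, 0.35, 0.41]
variances = [0.010, 0.008, 0.012, 0.009]
validity = [0, 1, 2, 2]
b0, b1, se0, se1 = wls_meta_regression(z_values, variances, validity,
                                       tau2=0.004, center=2)
print("slope:", round(b1, 3), "predicted r at score 2:", round(math.tanh(b0), 3))
```

Moving the constant changes only the intercept and its standard error; the slope is the same at every centering value.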

Results

Literature search outcomes

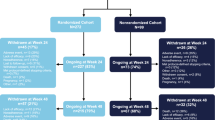

Our search yielded 235 articles. After title and abstract review, 139 were excluded as false hits or duplicates (duplicates: k = 26). Of the 96 studies initially retained, 73 used the VAS but did not measure ART adherence, did not report analyses directly comparing VAS scores with other measures or outcomes, or relied on caregiver report. The remaining 23 full-text articles met inclusion criteria; however, eight did not report sufficient data to calculate effect sizes. We contacted the primary authors of these studies to request additional data. Five authors responded, and their studies (Graham et al., 2012; Gionatti et al., 2013; Kagee & Nell, 2012; Mbaugbaw et al., 2012; Segeral et al., 2010) are therefore included (see Fig. 2). Lists of excluded studies, with reasons for exclusion, are available upon request. The final set comprised 20 studies that examined the use of the VAS to measure ART adherence in samples of PLWH. Table 1 reports study characteristics and findings.

Study characteristics

These 20 peer-reviewed publications represent samples collected over an 18-year period (1996–2014; median = 2006). Included studies were distributed across four continents, representing eight countries in sub-Saharan Africa (k = 6), East and Southeast Asia (k = 6), North America (k = 6), and Western Europe (k = 2).

Participant characteristics

This meta-analysis represents a total of 6138 participants across the included studies, with an aggregate completion rate of 94.13 % (range 71.4–100 %) among consented participants. East or Southeast Asian participants constituted 27.5 % (n = 1688) of the total sample, sub-Saharan African participants 18.9 % (n = 1162), North American participants 17.9 % (n = 1096), and European participants 35.7 % (n = 2192). The mean participant age was 38.9 years, and 39.5 % of the sample identified as female. Among studies reporting socio-economic indicators (k = 13), a majority of participants reported limited educational attainment (i.e., less than a high school diploma or its equivalent) and met poverty guidelines for their respective countries. Ten studies reported participants’ prior experience with antiretrovirals; across these studies, 46.8 % of participants were ART naïve (i.e., within 3 months of having initiated ART).

Design characteristics

Most studies (k = 12) reported comparing and testing adherence measures as a primary methodological aim, whereas the remaining 40 % identified other aims (e.g., an STD surveillance study or an adherence intervention study). Studies varied in the number of comparison measures used to evaluate the VAS (mean 1.6; range 1–4). The most frequent comparison was of the VAS with viral load (k = 12), followed by self-report measures (k = 9). Fewer studies compared the VAS with pill count (k = 6), electronic data monitoring (k = 4), or pharmacy refill records (k = 3). Nine studies (Berg et al., 2012; Buscher et al., 2011; Gill et al., 2010; Gionatti et al., 2013; Graham et al., 2012; Hong et al., 2013; Oyugi et al., 2004; Segeral et al., 2010; Walsh et al., 2002) reported administering the VAS as a self-report questionnaire. Eight studies (Giordano et al., 2004; Kagee & Nell, 2012; Kerr et al., 2012; Maneesriwongul et al., 2006; Mbaugbaw et al., 2012; Peltzer et al., 2010; Wang et al., 2008) reported administering the VAS through a face-to-face interview, and four studies used audio computer-assisted self-interview (ACASI) (Amico et al., 2006; Do et al., 2013; Kalichman et al., 2009; Pellowski et al., 2015). Four studies reported normalizing patients’ experience of missed doses to reduce reporting and social desirability biases (Amico et al., 2006; Berg et al., 2012; Kalichman et al., 2009; Walsh et al., 2002).

VAS concordance with other adherence measures and viral load

Average associations between the VAS and other measures varied in strength depending on the comparison measure; these data are summarized in Table 2. Comparisons of the VAS with other subjective self-report measures (k = 9; r = 0.61; 95 % CI 0.44, 0.74), with objective pill count data (k = 6; r = 0.72; 95 % CI 0.54, 0.85), and with objective data from electronic drug monitors (k = 4; r = 0.51; 95 % CI 0.19, 0.73) each revealed a large effect size. Aggregating the three studies comparing the VAS with pharmacy refill data (k = 3; r = 0.02; 95 % CI −0.54, 0.56) yielded no meaningful association. The average association between the VAS and viral load was small (k = 12; r = 0.25; 95 % CI 0.16, 0.36) but significant. Effect sizes for every comparison measure showed substantial between-study heterogeneity, as reflected in their I² values. Of these, only viral load had enough studies (k = 12) to warrant investigating moderators that might account for this variation. Figure 3 displays the forest plot of VAS–viral load effect sizes with 95 % confidence intervals.
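Pooled estimates and I² values of this kind come from random-effects synthesis on the Zr scale. The sketch below shows one such computation using the DerSimonian–Laird estimator of between-study variance. That estimator is an assumption for illustration: metafor’s `rma()` defaults to REML, and the report does not name the estimator used. The sketch also includes a helper for averaging same-type ESs within a study before pooling, as the Methods describe. All input values are hypothetical.

```python
import math


def average_within_study(zs):
    """Average several Zr values of the same measure type from one study,
    so each study contributes a single effect size per synthesis."""
    return sum(zs) / len(zs)


def random_effects(zs, vs):
    """DerSimonian-Laird random-effects pooling of Zr values with Q and I^2."""
    k = len(zs)
    w = [1.0 / v for v in vs]
    sum_w = sum(w)
    z_fixed = sum(wi * zi for wi, zi in zip(w, zs)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (zi - z_fixed) ** 2 for wi, zi in zip(w, zs))
    df = k - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)  # between-study variance
    i2 = 100.0 * max(0.0, (q - df) / q) if q > 0 else 0.0
    # Random-effects weights add tau^2 to every within-study variance
    w_re = [1.0 / (v + tau2) for v in vs]
    z_re = sum(wi * zi for wi, zi in zip(w_re, zs)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return {"r": math.tanh(z_re),
            "ci": (math.tanh(z_re - 1.96 * se), math.tanh(z_re + 1.96 * se)),
            "Q": q, "tau2": tau2, "I2": i2}


# Hypothetical studies; the second reported two self-report comparisons
study_z = [math.atanh(0.30),
           average_within_study([math.atanh(0.10), math.atanh(0.20)]),
           math.atanh(0.35)]
study_v = [1 / (120 - 3), 1 / (400 - 3), 1 / (90 - 3)]
print(random_effects(study_z, study_v))
```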

Did methodological factors moderate the effect size of the VAS–viral load relationship?

To account for the heterogeneity in the VAS adherence–viral load relationship across studies, we examined methodological parameters as categorical predictors of effect size. These results are summarized in Table 3. First, we considered whether the researchers’ stated aim was specifically to assess VAS methodology. Measurement studies of the VAS reported larger effect sizes (k = 6; r = 0.31; 95 % CI 0.17, 0.44) than other studies using the VAS (k = 6; r = 0.20; 95 % CI 0.07, 0.32). Because the VAS asks participants to recall their adherence over a 30-day period, we also compared cross-sectional with longitudinal study designs, defining a longitudinal study as one lasting 30 days or more. Longitudinal studies reported a larger overall effect size (k = 5; r = 0.35; 95 % CI 0.20, 0.48) than cross-sectional studies (k = 7; r = 0.19; 95 % CI 0.08, 0.30).
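Each categorical moderator comparison of this kind is a meta-analytic analog to the one-way ANOVA. A minimal sketch of the between-groups Q statistic follows. It assumes a common between-study variance `tau2` supplied from a prior random-effects fit, which the report does not specify. The p-value helper covers only two-level moderators (df = 1), matching the comparisons reported here, and all inputs are hypothetical.

```python
import math


def subgroup_mean(zs, vs, tau2):
    """Random-effects mean Zr and its variance within one subgroup."""
    w = [1.0 / (v + tau2) for v in vs]
    sum_w = sum(w)
    return sum(wi * zi for wi, zi in zip(w, zs)) / sum_w, 1.0 / sum_w


def q_between(groups, tau2=0.0):
    """Meta-analytic analog to one-way ANOVA: Q_between with G - 1 df."""
    means = [subgroup_mean(zs, vs, tau2) for zs, vs in groups]
    w = [1.0 / var for _, var in means]
    grand = sum(wi * m for wi, (m, _) in zip(w, means)) / sum(w)
    q_b = sum(wi * (m - grand) ** 2 for wi, (m, _) in zip(w, means))
    return q_b, len(groups) - 1


def p_value_df1(q):
    """Chi-square upper tail for df = 1 (two-level moderators only)."""
    return math.erfc(math.sqrt(q / 2.0))


# Hypothetical Zr values and variances for two design subgroups
longitudinal = ([math.atanh(0.40), math.atanh(0.30)], [1 / 97, 1 / 147])
cross_sectional = ([math.atanh(0.20), math.atanh(0.15)], [1 / 197, 1 / 297])
q, df = q_between([longitudinal, cross_sectional], tau2=0.005)
print(q, df, p_value_df1(q))
```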

We also considered elements of the VAS administration protocol as moderators of the VAS–viral load relationship. One such factor was mode of administration. Studies using an interview format showed larger effect sizes (k = 4; r = 0.35; 95 % CI 0.22, 0.46) than studies relying on a self-report questionnaire (k = 8; r = 0.18; 95 % CI 0.07, 0.28). Another factor was whether researchers attempted to counter participants’ tendency to over-report their adherence. Studies that sought to normalize the experience of missing doses showed larger effect sizes (k = 3; r = 0.34; 95 % CI 0.16, 0.50) than those that did not (k = 9; r = 0.22; 95 % CI 0.11, 0.32).

Other study characteristics did not account for variance in effect size across studies and thus constitute “pertinent negatives” for this analysis. Estimates of the VAS–viral load relationship from studies that sampled U.S. and Western European populations (k = 5; r = 0.28; 95 % CI 0.15, 0.34) did not differ significantly from those of studies that sampled African or East and Southeast Asian populations (k = 7; r = 0.22; 95 % CI 0.09, 0.87). Studies that abstracted viral load data from participant medical records (k = 6; r = 0.26; 95 % CI 0.12, 0.39) did not show a significantly different average effect size from those that performed blood draws and assessed viral load as part of study procedures (k = 6; r = 0.24; 95 % CI 0.09, 0.37).

This meta-analysis also operationalized methodological quality as continuous variables; these results are summarized in Table 4. In multivariate meta-regression controlling for year of data collection and study sample size, internal validity scores were positively associated with VAS–viral load effect sizes (B = 0.1634; 95 % CI 0.0581, 0.2686; β = 0.5400; p = 0.0067). In contrast, a similar model showed that external validity scores were not associated with effect size (B = 0.1595; 95 % CI −0.0885, 0.1378; β = 0.1618; p = 0.6691) among studies reporting an association between VAS and viral load.

Discussion

This paper summarizes the literature evaluating visual analogue scales as measures of ART adherence and meta-analyzes how study characteristics and methodological quality moderate the VAS–viral load relationship. Overall, the VAS showed large associations (r = 0.5–0.7) with other self-report measures and with objective pill count and electronic drug monitoring (EDM) data. Significant correlations between the VAS and these comparison measures have been observed previously (Hugen et al., 2002; Liu et al., 2001). In contrast, the three studies using pharmacy data yielded no aggregate relationship. The small number of included studies analyzing the relationship between pharmacy claim data and the VAS makes this finding difficult to interpret; it may reflect the difficulty researchers face in accessing complete and valid pharmacy administration records (Farmer, 1999; Hess et al., 2006) for use in adherence research.

In medication adherence research and clinical practice, measures that reliably predict a biological outcome are particularly valuable. This meta-analysis reports an aggregate VAS–viral load effect size that is statistically significant but small, leaving more than 90 % of the variance in viral load unaccounted for by VAS scores. Biological and behavioral factors likely affect viral load independently of self-reported ART adherence (Abioye et al., 2015; Maldonado-Martinez et al., 2016). It is therefore important to explore the potentially modifiable factors that influence how well a self-report adherence measure predicts a biological outcome. We approached this by comparing the different ways researchers administer and study the VAS. The modifications we identified (i.e., using face-to-face interviews, normalizing non-adherence, greater study internal validity) appear to substantially strengthen the VAS–viral load relationship; although the effects remain modest, these moderators approximately doubled the variance accounted for in the effect size estimates across studies.
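As a worked check on the proportion of unexplained variance, squaring the pooled VAS–viral load correlation from the Results (r = 0.25) gives the share of variance the two measures have in common; the second line applies the same arithmetic to the largest moderator-level estimate (r = 0.35):

```latex
r^2 = 0.25^2 = 0.0625 \approx 6\,\%, \qquad 1 - r^2 = 0.9375 \approx 94\,\%
r^2 = 0.35^2 = 0.1225 \approx 2 \times 0.0625
```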

As a group, studies designed specifically to investigate the VAS as a measure of ART adherence showed larger effect sizes than other studies using the VAS. Methodological research aiming to validate the VAS as a credible tool may apply greater rigor in administering it. Longitudinal studies also showed significantly larger effects (r = 0.35) than cross-sectional studies (r = 0.19). Cross-sectional research is generally limited in its ability to establish directionality or causation among variables. In this meta-analysis, cross-sectional studies relied more frequently (5:1) on retrospective, chart-abstracted viral load data. These measurements may predate the typical 30-day VAS recall period, making the comparison less valid and perhaps explaining the weaker performance of the VAS in cross-sectional studies. In this sample of studies, the source of viral load data did not appear to affect effect sizes; however, we were limited to bivariate analyses of categorical moderators. A useful future analysis would compare the relative contributions of viral load data source and study design.

How researchers administered the VAS also appeared to influence the VAS–viral load relationship. Interviews, whether face-to-face or audio computer-assisted (ACASI), may confer an advantage when using the VAS to measure medication adherence. One possible reason is the opportunity to provide detailed or individually tailored instructions. Because the VAS requires a solid grasp of numeracy, such instruction could make a difference in this population, as the modern HIV pandemic disproportionately affects people with lower educational and socio-economic attainment (Pellowski et al., 2013).

In this review, two raters independently assessed the methodological quality of included studies, with high interrater reliability. Methodological quality ratings separately addressed studies’ internal validity (e.g., accounting for covariates, using appropriate design and analysis) and external validity (e.g., sampling techniques, population descriptions). Moderator analyses showed that studies with higher internal validity scores reported larger VAS–viral load effect sizes, whereas external validity scores did not moderate this relationship. Taken together, these analyses support the notion that researchers and clinicians alike may be able to optimize VAS measurement through a set of best practices. They also tentatively suggest that mode of recruitment and population sampling do not influence the VAS–viral load relationship as reported in the current literature.

While these findings add to what is known about the VAS as a medication adherence measure, there are limitations worth noting. Included studies reported a variety of measures alongside the VAS, yet the number of studies using any one type of comparison measure was low, with the exception of viral load (k = 12). This posed the challenge of how best to integrate and present the data while upholding the necessary statistical assumptions. One approach would have been to average effect sizes across measures within each study first and then estimate an overall mean effect size using the full sample (k = 20). We decided against this approach, judging that the strength of association between the VAS and each comparison measure was more meaningful when considered separately, thereby avoiding an “apples and oranges” problem. We also could not statistically test differences between ESs from different comparison measures (i.e., this meta-analysis does not answer whether the VAS or a questionnaire is superior in predicting viral load) because of the stochastic dependence (Gleser & Olkin, 1994) that arises when analyzing studies with multiple outcomes. Another limitation is the small number of included studies (k = 20). Our literature searches identified studies that met inclusion criteria but did not report data sufficient to calculate an effect size, and the authors of these studies were unable to supply these data upon request. As more studies emerge, it will be important to revisit these analyses and further refine our understanding of the VAS and its relevance to adherence measurement.

In conclusion, the VAS shows high concordance with many other measures of adherence, but its ability to predict viral load in samples of PLWH is comparatively weak. Deceptively simple in design and easy to deploy, the VAS is nonetheless subject to cognitive biases and imposes conceptual burdens on the respondent. The VAS appears to perform differently under different methodological conditions, performing better in studies with longitudinal designs and greater internal validity. Administration procedures can also improve the ability of the VAS to predict viral load: using an interview format and informing respondents that missed doses are a normal patient experience are two elements of VAS administration that appear to enhance its utility. Future studies should carefully consider these design and implementation factors when planning to use the VAS to measure medication adherence.

References

Abioye, A. I., Siope, A., Salako, A. A., Odesanya, M. O., Okuneye, T. A., Abioye, A. I., et al. (2015). Are there differences in disease progression and mortality among male and female HIV patients on antiretroviral therapy? A meta-analysis of observational cohorts. AIDS Care, 27, 1468–1486. doi:10.1080/09540121.2015.1114994

Amico, K. R., Fisher, W. A., Cornman, D. H., Shuper, P. A., Redding, C. G., Konkle-Parker, D. J., et al. (2006). Visual analog scale of ART adherence: Association with 3-day self-report and adherence barriers. Journal of Acquired Immune Deficiency Syndromes, 42, 445–449.

Assoumou, L., Descamps, D., Yerly, S., Dos Santos, G., Marcelin, A. G., Delaugerre, C., et al. (2013). Prevalence of HIV-1 drug resistance in treated patients with viral load > 50 copies/mL in 2009: A French nationwide study. Journal of Antimicrobial Chemotherapy. doi:10.1093/jac/dkt033

Bangsberg, D. R., Sharon, P., Charlebois, E. D., Clark, R. A., Roberston, M., et al. (2001). Non-adherence to highly active antiretroviral therapy (HAART) predicts progression to AIDS. AIDS, 15, 1181–1183. doi:10.1097/00002030-200106150-00015

Bastawrous, A., & Armstrong, M. J. (2013). Mobile health use in low- and high-income countries: An overview of the peer-reviewed literature. Journal of the Royal Society of Medicine, 106, 130–142. doi:10.1177/014107681247262

Becker, B. J., et al. (2000). Multivariate meta-analysis. San Diego, CA: Academic Press.

Begg, C. B., & Mazumdar, M. (1994). Operating characteristics of a rank correlation test for publication bias. Biometrics, 50(4), 1088–1101.

Berg, K. M., Wilson, I. B., Li, X., & Arnsten, J. H. (2012). Comparison of antiretroviral adherence questions. AIDS and Behavior, 16, 461–468.

Brinkel, J., Krämer, A., Krumkamp, R., May, J., & Fobil, J. (2014). Mobile phone-based mHealth approaches for public health surveillance in sub-Saharan Africa: A systematic review. International Journal of Environmental Research and Public Health, 11, 11559–11582. doi:10.3390/ijerph111111559

Buscher, A., Hartman, C., Kallen, M. A., & Giordano, T. P. (2011). Validity of self-report measures in assessing antiretroviral adherence of newly diagnosed, HAART-naive HIV patients. HIV Clinical Trials, 12, 244–254. doi:10.1310/hct1205-244

Card, N. (2012). Applied meta-analysis for social science research. New York: The Guilford Press.

Chan, K. C., Wong, K. H., & Lee, S. S. (2006). Universal decline in mortality in patients with advanced HIV-1 disease in various demographic subpopulations after the introduction of HAART in Hong Kong, from 1993 to 2002. HIV Medicine, 7, 186–192.

Cohen, J. (1992). A power primer. Psychological Bulletin, 112, 155–159.

Cohen, J., Cohen, P., West, S. G., & Aiken, L. S. (2003). Applied multiple regression/correlation analysis for the behavioral sciences (3rd ed.). Hillsdale, NJ: Lawrence Erlbaum.

Cooper, H. M., & Hedges, L. V. (Eds.). (1994). The handbook of research synthesis and meta-analysis (2nd ed.). New York, NY: Russell Sage.

Do, H. M., Dunne, M. P., Kato, M., Pham, C. V., & Nguyen, K. V. (2013). Factors associated with suboptimal adherence to antiretroviral therapy in Viet Nam: A cross-sectional study using audio computer-assisted self-interview (ACASI). BMC Infectious Diseases, 13, 154.

Farmer, K. C. (1999). Methods for measuring and monitoring medication regimen adherence in clinical trials and clinical practice. Clinical Therapeutics, 21, 1074–1090.

Feldman, B. J., Fredericksen, R. J., Crane, P. K., Safren, S. A., Mugavero, M. J., Willig, J. H., et al. (2013). Evaluation of the single-item self-rating adherence scale for use in routine clinical care of people living with HIV. AIDS and Behavior, 17, 307–318. doi:10.1007/s10461-012-0326-7

Ferreira, J. L., Rodrigues, R., Lanca, A. M., Correia de Almeida, V., Rocha, S. Q., Ragazzo, T. G., et al. (2013). Transmitted drug resistance among people living with HIV/AIDS at major cities of Sao Paulo State, Brazil. Advances in Virology, 2013, 878237. doi:10.1155/2013/878237

Field, A. P. (2001). Meta-analysis of correlation coefficients: A Monte Carlo comparison of fixed and random effects methods. Psychological Methods, 6, 161–180.

Field, A. P. (2005). Is the meta-analysis of correlation coefficients accurate when population correlations vary? Psychological Methods, 10, 444–467.

Friedman, S. R., de Jong, W., Rossi, D., Touze, G., Rockwell, R., Des Jarlais, D. C., & Elovich, R. (2007). Harm reduction theory: Users’ culture, micro-social indigenous harm reduction, and the self-organization and outside-organizing of users’ groups. International Journal of Drug Policy, 18(2), 107–117.

Furberg, R. D., Uhrig, J. D., Bann, C. M., Lewis, M. A., Harris, J. L., Williams, P., et al. (2012). Technical implementation of a multi-component, text message-based intervention for persons living with HIV. JMIR Research Protocols, 1(2), e17. doi:10.2196/resprot.2017

Gill, C. J., Sabin, L. L., Hamer, D. H., Keyi, X., Jianbo, Z., Li, T., et al. (2010). Importance of dose timing to achieving undetectable viral loads. AIDS and Behavior, 14, 785–793. doi:10.1007/s10461-009-9555-9

Gianotti, N., Galli, L., Bocchiola, B., Cahua, T. R., Panzini, P., Zandona, D., et al. (2013). Number of daily pills, dosing schedule, self-reported adherence and health status in 2010: A large cross-sectional study of HIV-infected patients on antiretroviral therapy. HIV Medicine, 14, 153–160.

Giordano, T. P., Guzman, D., Clark, R., Charlebois, E. D., & Bangsberg, D. R. (2004). Measuring adherence to antiretroviral therapy in a diverse population using a visual analogue scale. HIV Clinical Trials, 5, 74–79.

Gleser, L. J., & Olkin, I. (1994). Stochastically dependent effect sizes. In H. M. Cooper & L. V. Hedges (Eds.), The handbook of research synthesis and meta-analysis (2nd ed., pp. 357–377). New York, NY: Russell Sage.

Graham, S. M., Jalalian-Lechak, Z., Shafi, J., Chohan, V., Deya, R. W., Jaoko, W., et al. (2012). Antiretroviral treatment interruptions predict female genital shedding of genotypically resistant HIV-1 RNA. Journal of Acquired Immune Deficiency Syndromes, 60, 511–518.

Hedges, L. V., & Olkin, I. (1985). Statistical methods for meta-analysis. San Diego, CA: Academic Press.

Herbst, A. J., Cooke, G. S., Barnighausen, T., Kany Kany, A., Tanser, F., & Newell, M. L. (2009). Adult mortality and antiretroviral treatment roll-out in rural KwaZulu-Natal, South Africa. Bulletin of the World Health Organization, 87, 754–762.

Hess, L. M., Raebel, M. A., Conner, D. A., & Malone, D. C. (2006). Measurement of adherence in pharmacy administrative databases: A proposal for standard definitions and preferred measures. Annals of Pharmacotherapy, 40, 1280–1288.

Higgins, J. P. T., & Green, S. (Eds.). (2011). Cochrane handbook for systematic reviews of interventions (Version 5.1.0, updated March 2011). The Cochrane Collaboration. Available from www.cochrane-handbook.org

Higgins, J. P. T., Thompson, S. G., Deeks, J. J., & Altman, D. G. (2003). Measuring inconsistency in meta-analysis. British Medical Journal, 327, 557–560.

Hong, S. Y., Jerger, L., Jonas, A., Badi, A., Cohen, S., Nachega, J. B., et al. (2013). Medication possession ratio associated with short-term virologic response in individuals initiating antiretroviral therapy in Namibia. PLoS One, 8, e56307. doi:10.1371/journal.pone.0056307

Huedo-Medina, T. B., Sánchez-Meca, J., Marín-Martínez, F., & Botella, J. (2006). Assessing heterogeneity in meta-analysis: Q statistic or I² index? Psychological Methods, 11, 193–206.

Hugen, P. W., Langebeek, M., Burger, D. M., Zomer, B., van Leusen, R., Schuurman, R., et al. (2002). Assessment of adherence to HIV protease inhibitors: Comparison and combination of various methods, including MEMS (electronic monitoring), patient and nurse report, and therapeutic drug monitoring. Journal of Acquired Immune Deficiency Syndromes, 30, 324–334.

Johnson, B. T., & Huedo-Medina, T. B. (2011). Depicting estimates using the intercept in meta-regression models: The moving constant technique. Research Synthesis Methods, 2, 204–220.

Johnson, B. T., Low, R. E., & MacDonald, H. V. (2014). Panning for the gold in health research: Incorporating studies’ methodological quality in meta-analysis. Psychology and Health. doi:10.1080/08870446.2014.953533

Kagee, A., & Nell, A. (2012). Assessing the association between self-report items for HIV pill adherence and biological measures. AIDS Care, 24(11), 1448–1452. doi:10.1080/09540121.2012.687816

Kalichman, S. C., Amaral, C. M., Swetzes, C., Jones, M., Macy, R., Kalichman, M. O., et al. (2009). A simple single-item rating scale to measure medication adherence: Further evidence for convergent validity. Journal of the International Association of Physicians in AIDS Care, 8, 367–374. doi:10.1177/1545109709352884

Kerr, S. J., Avihingsanon, A., Putcharoen, O., Chetchotisakd, P., Layton, M., Ubolyam, S., et al. (2012). Assessing adherence in Thai patients taking combination antiretroviral therapy. International Journal of STD and AIDS, 23, 160–165. doi:10.1258/ijsa.2009.009152

Krakower, D. S., Jain, S., & Mayer, K. H. (2015). Antiretrovirals for primary HIV prevention: The current status of pre- and post-exposure prophylaxis. Current HIV/AIDS Reports, 12(1), 127–138. doi:10.1007/s11904-014-0253-5

Liu, H., Golin, C. E., Miller, L. G., Hays, R. D., Beck, C. K., Sanandaji, S., et al. (2001). A comparison study of multiple measures of adherence to HIV protease inhibitors. Annals of Internal Medicine, 134, 968–977.

Maldonado-Martinez, G., Hunter-Mellado, R. F., Fernandez-Santos, D., & Rios-Olivares, E. (2016). Persistent HIV viremia: Description of a cohort of HIV infected individuals with ART failure in Puerto Rico. International Journal of Environmental Research and Public Health, 13, 50. doi:10.3390/ijerph13010050

Maneesriwongul, W. L., Tulathong, S., Fennie, K. P., & Williams, A. B. (2006). Adherence to antiretroviral medication among HIV-positive patients in Thailand. Journal of Acquired Immune Deficiency Syndromes, 43, S119–S122. doi:10.1097/01.qai.0000248346.79888.78

Mbuagbaw, L., Thabane, L., Ongolo-Zogo, P., Lester, R. T., Mills, E. J., Smieja, M., et al. (2012). The Cameroon mobile phone SMS (CAMPS) trial: A randomized trial of text messaging versus usual care for adherence to antiretroviral therapy. PLoS One, 7, e46909. doi:10.1371/journal.pone.0046909

Moher, D., Liberati, A., Tetzlaff, J., & Altman, D. G. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Medicine, 6(7), e1000097. doi:10.1371/journal.pmed.1000097

Muessig, K., Nekkanti, M., Bauermeister, J., Bull, S., & Hightow-Weidman, L. (2015). A systematic review of recent smartphone, Internet and Web 2.0 interventions to address the HIV continuum of care. Current HIV/AIDS Reports, 12, 173. doi:10.1007/s11904-014-0239-3

National Institute for Health and Care Excellence. (2012). Appendix G: Quality appraisal checklist: Quantitative studies reporting correlations and associations. In Methods for the development of NICE public health guidance (3rd ed.). Retrieved from http://www.nice.org.uk/article/pmg4/chapter/appendix-g-quality-appraisal-checklist-quantitative-studies-reporting-correlations-and-associations

Oyugi, J. H., Byakika-Tusiime, J., Charlebois, E. D., Kityo, C., Mugerwa, R., Mugyenyi, P., et al. (2004). Multiple validated measures of adherence indicate high levels of adherence to generic HIV antiretroviral therapy in a resource-limited setting. Journal of Acquired Immune Deficiency Syndromes, 36, 1100–1102.

Palella, F. J., Jr., Delaney, K. M., Moorman, A. C., Loveless, M. O., Fuhrer, J., Satten, G., et al. (1998). Declining morbidity and mortality among patients with advanced human immunodeficiency virus infection. New England Journal of Medicine, 338, 853–860.

Park-Wyllie, L. Y., Kam, D., & Bayoumi, A. M. (2009). The adherence support gap: The ‘ideal’ versus ‘reality’ of antiretroviral adherence support provided by HIV health providers in clinical practice. Annals of Pharmacotherapy, 43, 1036–1044. doi:10.1345/aph.1l663

Pellowski, J. A., Kalichman, S. C., & Finitsis, D. J. (2015). Reliability and validity of a single item rating scale to monitor medication adherence for people living with HIV and lower health literacy. HIV Clinical Trials, 16, 1–9.

Pellowski, J. A., Kalichman, S. C., Matthews, K. A., & Adler, N. (2013). A pandemic of the poor: Social disadvantage and the U.S. HIV epidemic. American Psychologist, 68, 197–209. doi:10.1037/a0032694

Peltzer, K., Friend-du Preez, N., Ramlagan, S., & Anderson, J. (2010). Antiretroviral treatment adherence among HIV patients in KwaZulu-Natal, South Africa. BMC Public Health, 10, 111. Retrieved from http://www.biomedcentral.com/1471-2458/10/111

IBM Corp. (2012). IBM SPSS Statistics for Windows (Version 21.0) [Computer software]. Armonk, NY: IBM Corp.

Schmidt, F. L., Oh, I. S., & Hayes, T. L. (2009). Fixed- versus random-effects models in meta-analysis: Model properties and an empirical comparison of differences in results. British Journal of Mathematical and Statistical Psychology, 62, 97–128.

Segeral, O., Madec, Y., Ban, B., Ouk, V., Hak, C. R., Le Tiec, C., et al. (2010). Simplified assessment of antiretroviral adherence and prediction of virological efficacy in HIV-infected patients in Cambodia. AIDS Research and Treatment, 2010, 142076. doi:10.1155/2010/142076

Sherr, L., Lampe, F., Clucas, C., Johnson, M., Fisher, M., Leake Date, H., et al. (2010). Self-reported non-adherence to ART and virological outcome in a multiclinic UK study. AIDS Care, 22, 939–945.

Shi, L., Liu, J., Koleva, Y., Fonseca, V., Kalsekar, A., & Pawaskar, M. (2010). Concordance of adherence measurement using self-reported adherence questionnaires and medication monitoring devices. Pharmacoeconomics, 28, 1097–1107. doi:10.2165/11537400-000000000-00000

Sinha, S., Ahmad, H., Shekhar, R. C., Kumar, N., Dar, L., Samantaray, J. C., et al. (2012). Prevalence of HIV drug resistance mutations in HIV type 1 isolates in antiretroviral therapy naive population from northern India. AIDS Research and Treatment, 2012, 905823. doi:10.1155/2012/905823

Torrance, G. W., Feeny, D., & Furlong, W. (2001). Visual analog scales: Do they have a role in the measurement of preferences for health states? Medical Decision Making, 21, 329–334.

Treiblmaier, H., & Filzmoser, P. (2009). Benefits from using continuous rating scales in online survey research (Research Report SM-2009-4). Institut für Statistik und Wahrscheinlichkeitstheorie, November 2009.

Viechtbauer, W. (2010). Conducting meta-analyses in R with the metafor package. Journal of Statistical Software, 36, 1–48. http://www.jstatsoft.org/v36/i03/

Walsh, J. C., Mandalia, S., & Gazzard, B. G. (2002). Responses to a 1 month self-report on adherence to antiretroviral therapy are consistent with electronic data and virological treatment outcome. AIDS, 16, 269–277.

Wang, H., He, G., Li, X., Yang, A., Chen, X., Fennie, K. P., et al. (2008). Self-reported adherence to antiretroviral treatment among HIV-infected people in Central China. AIDS Patient Care and STDs, 22, 71–80. doi:10.1089/apc.2007.0047

Wewers, M. E., & Lowe, N. K. (1990). A critical review of visual analogue scales in the measurement of clinical phenomena. Research in Nursing and Health, 13, 227–236.

Wilson, D. B. (2002). Meta-analysis macros for SAS, SPSS, and Stata [Computer Software]. Available: http://mason.gmu.edu/~dwilsonb/ma.html. Accessed 7 April 2012.

Wilson, I. B., Carter, A. E., & Berg, K. M. (2009). Improving the self-report of HIV antiretroviral medication adherence: Is the glass half full or half empty? Current HIV/AIDS Reports, 6, 177–186.

Wilson, I. B., Fowler, F. J., Jr., Cosenza, C. A., Michaud, J., Bentkover, J., Rana, A., et al. (2014). Cognitive and field testing of a new set of medication adherence self-report items for HIV care. AIDS and Behavior, 18, 2349–2358. doi:10.1007/s10461-013-0610-1

World Health Organization. (2006). Working together for health: The world health report. Geneva: WHO Press.

World Health Organization. (2012). Guidance on pre-exposure oral prophylaxis (PrEP) for serodiscordant couples, men and transgender women who have sex with men at high risk of HIV: Recommendations for use in the context of demonstration projects. Geneva, Switzerland: WHO Document Production Services.

Acknowledgments

This research was supported by U.S. Public Health Service Institutional National Research Service Award T32-MH074387 (PI: Seth C. Kalichman; trainees: David J. Finitsis and Jennifer A. Pellowski). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Ethics declarations

Conflict of interest

David J. Finitsis, Jennifer A. Pellowski, Tania B. Huedo-Medina, Matthew C. Fox and Seth C. Kalichman declare that they have no conflict of interest.

Human and animal rights and Informed consent

All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2000. Informed consent was obtained from all patients for being included in the study.

About this article

Cite this article

Finitsis, D.J., Pellowski, J.A., Huedo-Medina, T.B. et al. Visual analogue scale (VAS) measurement of antiretroviral adherence in people living with HIV (PLWH): a meta-analysis. J Behav Med 39, 1043–1055 (2016). https://doi.org/10.1007/s10865-016-9770-6

DOI: https://doi.org/10.1007/s10865-016-9770-6