Abstract

Little is known about the importance of dose timing to successful antiretroviral therapy (ART). In a cohort of Chinese patients with HIV/AIDS, we measured adherence for 6 months using three methods in parallel: self-report using a visual analog scale (SR-VAS), pill count, and electronic drug monitors (EDM). We calculated two adherence metrics from the EDM data. The first was the proportion of doses taken; the second credited a dose as adherent only if it was taken within 1 h of a pre-specified dose time (EDM ‘proportion taken within dose time’). Of the adherence measures, EDM had the strongest associations with viral suppression, and of the two EDM metrics, the one incorporating dose timing had the stronger association. We conclude that dose timing is also an important determinant of successful ART and should be considered an additional dimension of overall adherence.


Introduction

The importance of adherence to successful antiretroviral therapy (ART) is well established. Poor adherence predicts continued progression to AIDS (Bangsberg et al. 2001), encourages drug resistance (Bangsberg et al. 2006), and increases the risk of death (de Olalla et al. 2002). Conversely, seminal studies using electronic drug monitors (EDM) to measure adherence showed that patients who maintained an average of ≥95% adherence achieved the highest likelihood of reaching an undetectable viral load (UDVL; Gross et al. 2001; Mannheimer et al. 2002; McNabb et al. 2001; Paterson et al. 2000). Of note, these earlier studies were conducted among highly treatment-experienced patients, most receiving first-generation protease inhibitor-based regimens. Recent evidence suggests that non-nucleoside reverse transcriptase inhibitor (NNRTI) based regimens may be more forgiving of minor deviations in adherence (Bangsberg 2006). Nevertheless, the essential relationship between adherence and UDVL remains: superb adherence to NNRTIs is rewarded by the highest rates of virologic success (Nachega et al. 2007; San Lio et al. 2008).

A core question in the NNRTI/adherence debate is whether the commonly accepted definition of ‘optimal adherence’ based on the ≥95% threshold is appropriately strict or overly strict. Implicitly, this debate presupposes that an even stricter definition of adherence would be meaningless. However, the standard approach, which defines adherence solely in terms of the proportion of pills taken, can be quite misleading because it fails to account for when the doses were taken. An extreme example is a patient who ingests his/her entire month’s worth of medications in a single day. Under the standard definition, this patient would be 100% adherent, despite being demonstrably non-adherent on 97% (29/30) of days. In qualitative investigations of adherence, we found that patients frequently admitted to mistiming their medications, even when not missing doses outright (Gill et al. 2006; Sabin et al. 2008).
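The extreme case above can be worked through explicitly. The sketch below is purely illustrative: it assumes a 30-day month on a twice-daily regimen (60 scheduled doses), represents each day by a hypothetical count of doses taken, and contrasts the standard proportion-taken metric with the share of days on which fewer than the prescribed doses were taken. The function names are hypothetical.

```python
# Hypothetical illustration: a twice-daily regimen over a 30-day month.
DOSES_PER_DAY = 2
DAYS = 30

def proportion_taken(doses_by_day: list[int], prescribed_per_day: int) -> float:
    """Standard metric: total doses taken / total doses prescribed."""
    return sum(doses_by_day) / (prescribed_per_day * len(doses_by_day))

def proportion_days_underdosed(doses_by_day: list[int], prescribed_per_day: int) -> float:
    """Share of days on which fewer than the prescribed doses were taken."""
    short_days = sum(1 for n in doses_by_day if n < prescribed_per_day)
    return short_days / len(doses_by_day)

# The extreme case from the text: the whole month's supply swallowed on day 1.
binge = [DOSES_PER_DAY * DAYS] + [0] * (DAYS - 1)

print(f"proportion taken: {proportion_taken(binge, DOSES_PER_DAY):.0%}")            # 100%
print(f"days under-dosed: {proportion_days_underdosed(binge, DOSES_PER_DAY):.0%}")  # 97%
```

The first metric is blind to when doses were taken; the second exposes the problem but still ignores time of day, which is the gap that EDM time stamps can fill.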

A useful but little-exploited feature of EDM is their ability to assess dose timing. This feature is virtually unique to EDM: self-report tools incorporating dose timing have not been evaluated in the HIV literature, and other surrogate markers, such as pill counts, pharmacy refills, and therapeutic drug-level measurements, cannot by their nature assess dose timing. Surprisingly, little has been published about the relationship between dose mistiming and HIV clinical outcomes (Liu et al. 2007).

In the China Adherence for Life study, we measured adherence longitudinally using EDM among a cohort of Chinese patients receiving ART. The additional information in the EDM data allowed us to address two questions. The first was whether a stricter definition of adherence that incorporated dose timing would more accurately predict which patients had UDVL at the end of 6 months of observation than the standard definition based solely on the proportion of doses taken. Because EDM measurements were obtained in parallel with other adherence assessment methods (pill count and self-report), the second objective was to compare the accuracy of these different surrogate measures of adherence against an objective clinical outcome (UDVL at the end of 6 months of observation).

Methods

Overview

This analysis used longitudinal observational data from a three-phase adherence study conducted among HIV-positive patients receiving ART at the Dermatology and STD clinic, Dali Second People’s Hospital (DSPH) in Dali, Yunnan Province, China. Dali has a growing HIV epidemic due to its location on heroin trafficking routes from the ‘Golden Triangle’ (the border region of Myanmar, Laos, and Thailand). All patients took twice-daily regimens consisting of nevirapine or efavirenz, plus either zidovudine and lamivudine or lamivudine and stavudine. Patients were eligible for participation if they were aged ≥18 years and agreed to all study procedures. Of 97 eligible patients at the clinic, 80 agreed to participate. The study was approved by the Institutional Review Boards of Boston University Medical Center and Ditan Hospital, Beijing. All participants provided written informed consent.

Upon enrollment, each participant was given a single EDM bottle (Med-ic®, Ottawa, ON, Canada). We chose to monitor only one component of each patient’s regimen, based on research showing that patients tend either to take all, or miss all, of their medications as a group, obviating the need to monitor all components of a regimen separately (Wilson et al. 2001).

Study Procedures and Data Collection

We collected demographic data at baseline. In addition, patients completed a longitudinal adherence assessment form at each of the 6 monthly clinic visits, at which time they also refilled their medications. Each participant was given 150 yuan (~US $19) as a monthly incentive.

We assessed adherence using three methods in parallel. First, the monthly follow-up forms included a self-report measure using a visual analog scale (SR-VAS), on which patients marked an ‘X’ on a line labeled in 10% increments from 0 to 100% to indicate the ‘proportion taken’ in the preceding month. The SR-VAS was self-administered, although the study coordinator remained available to answer questions about how to complete it. Second, we conducted a monthly pill count (PC) of one of the three medications, which was also expressed as ‘proportion taken’ (see Table 1, Formula 1). Third, we used EDM to monitor the same medication assessed by the pill count. Each of these methods therefore allowed adherence to be expressed as the proportion of doses taken by a subject; for SR-VAS, PC, and EDM alike, a subject’s adherence was the average proportion taken across all observation periods for which that subject provided data. Note that for SR-VAS, subjects provided an overall estimate of their adherence without reference to a specific medication. By contrast, PC and EDM measurements were taken from only one of the three medication bottles in each patient’s regimen, on the assumption that this would reflect adherence to the overall regimen. PC and EDM measured adherence from the same bottle; in all cases this was nevirapine.
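Because Table 1 is not reproduced in this text, the exact form of Formula 1 is not shown here. A common pill-count definition, assumed for the sketch below, divides the pills apparently consumed (dispensed minus remaining at the next visit) by the pills prescribed for the interval, then averages across the monthly periods for which the subject has data. The function names and numbers are hypothetical.

```python
def pill_count_adherence(dispensed: int, remaining: int, days: int,
                         pills_per_day: int) -> float:
    """Pill-count 'proportion taken' for one monthly interval.

    Assumed form (hypothetical; the study's Formula 1 is not shown here):
    pills apparently consumed / pills prescribed for the interval.
    """
    if not 0 <= remaining <= dispensed:
        raise ValueError("remaining must be between 0 and dispensed")
    prescribed = days * pills_per_day
    return (dispensed - remaining) / prescribed

def mean_adherence(per_period: list[float]) -> float:
    """Average over the observation periods for which the subject has data."""
    return sum(per_period) / len(per_period)

# Invented example: 70 pills dispensed for a 30-day, twice-daily interval.
print(pill_count_adherence(70, 10, 30, 2))  # 1.0: exactly as expected
print(pill_count_adherence(70, 4, 30, 2))   # about 1.1: fewer pills returned than expected
```

Under this assumed form, returning fewer pills than expected pushes the ratio above 1.0, which is how apparent adherence of more than 100% can arise.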

The EDM time/date data were downloaded each month, but the results were not provided to clinicians or patients. Each bottle opening was interpreted as a dose taken at that time, and repeated openings within the same scheduled dose period were counted only once. We derived two adherence definitions from the EDM data. The first was ‘proportion taken’, the definition used most commonly in the literature (Table 1, Formula 2). The second, stricter definition incorporated dose timing: EDM ‘proportion taken within dose time’ (Table 1, Formula 3). This required us first to establish a scheduled dosing time for each patient. Patients selected their dose times in consultation with their clinician and were allowed to change them later if necessary; however, the two daily doses had to be spaced 12 h apart to optimize the pharmacokinetics of the drugs. Thus a patient could choose 9 a.m./9 p.m. as dose times, but not 9 a.m./8:30 p.m. A dose was considered on time only if it was taken within ±1 h of its scheduled time; any dose taken outside this window was considered non-adherent. For example, a dose scheduled for 9 a.m. but taken at 10:01 a.m. would be classified as non-adherent. Stated another way, a patient on a 9 a.m./9 p.m. schedule would be considered on time for both doses if they were taken between 8 and 10 a.m. and between 8 and 10 p.m., respectively. Because there is no consensus in the literature about what constitutes on-time dosing, we selected the ±1-h compliance window a priori.
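The two EDM definitions can be sketched as follows. This is not the study's code (Table 1, Formulas 2 and 3 are not reproduced here); it assumes that each opening is credited to the nearest scheduled dose, that repeat openings for the same dose count once, and that ‘on time’ means within ±1 h inclusive, so a 9 a.m. dose opened at 10:00 counts but one opened at 10:01 does not. All dates, openings, and function names are hypothetical.

```python
from datetime import date, datetime, time, timedelta

def scheduled_slots(start: date, days: int, dose_times: list[time]) -> list[datetime]:
    """Every scheduled dose datetime in the observation period."""
    return [datetime.combine(start + timedelta(days=d), t)
            for d in range(days) for t in dose_times]

def edm_metrics(openings: list[datetime], slots: list[datetime],
                window: timedelta = timedelta(hours=1)) -> tuple[float, float]:
    """Return (proportion taken, proportion taken within dose time).

    Hypothetical assignment rule: each opening is credited to its nearest
    scheduled slot, provided it lies within 6 h (half of the 12-h dosing
    interval). Repeated openings in one slot count as a single dose.
    """
    half_interval = timedelta(hours=6)
    taken = set()    # slots with at least one opening
    on_time = set()  # slots with an opening within +/- window
    for opened in openings:
        nearest = min(slots, key=lambda s: abs(opened - s))
        gap = abs(opened - nearest)
        if gap > half_interval:
            continue  # opening falls outside the observation period
        taken.add(nearest)
        if gap <= window:
            on_time.add(nearest)
    return len(taken) / len(slots), len(on_time) / len(slots)

# Invented data: two days on a 9 a.m./9 p.m. schedule (four scheduled doses).
slots = scheduled_slots(date(2006, 6, 1), 2, [time(9), time(21)])
openings = [
    datetime(2006, 6, 1, 9, 30),   # on time
    datetime(2006, 6, 1, 9, 35),   # repeat opening, same slot: not a new dose
    datetime(2006, 6, 1, 22, 30),  # 90 min late: taken, but not on time
    datetime(2006, 6, 2, 10, 1),   # 61 min late: taken, but not on time
]                                  # day-2 evening dose missed entirely
print(edm_metrics(openings, slots))  # (0.75, 0.25)
```

Crediting each opening to the nearest slot is one reasonable way to operationalize ‘the same scheduled dose time’; the study may have used a different rule, such as fixed clock-time bins.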

We measured CD4-cell counts and HIV viral load measures at baseline and 6 months. CD4-cell counts were measured by flow cytometry (FACSCalibur, Becton-Dickinson, San Carlos, California). Viral loads were measured with an Organon Teknica NucliSens analyzer (BioMerieux, Boxtel, Netherlands), with a threshold of detection of 400 copies/ml.

Statistical Analyses

SAS v. 9.0 (SAS Institute, Cary, NC) was used for all analyses. The analysis set included the subset of patients who completed 6 months of follow-up and had a 6-month blood draw for viral load. Our analyses focused on how useful each adherence measure was for predicting whether a patient achieved UDVL at the end of the 6 months of observation. We assumed that this usefulness would be reflected in the strength of the statistical association between each measure and UDVL, as defined below.

Although strength of association can be measured in several ways, we assessed accuracy using two methods, both pre-specified in the analysis plan of the protocol. First, following the approach of Liu et al. in their comparative analysis of adherence measures taken in parallel (Liu et al. 2001), we used logistic regression to calculate an odds ratio (OR) for each measure, with UDVL as the dependent variable and ‘optimal adherence’ according to each measure, considered individually, as the predictor variable. We defined ‘optimal adherence’ as ≥95% adherence as recorded by each measure, averaged over the entire 6 months, and entered it into the regressions as a dichotomous variable (‘optimal adherence’ vs. not). Essentially, this analysis asked: ‘What are the odds that a patient will have UDVL if optimally adherent according to measure X?’, where X could be SR-VAS, pill count, or either of the two EDM measures. Our reasoning was that the OR should increase with the strength of association for each method. Where cells were sparse, Fisher’s exact test was used instead of the chi-square test. Because of the small number of patients in the study, we were unable to conduct multivariate analyses.
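The 2×2 analysis described above can be sketched without a statistics package. For a single dichotomous predictor, the maximum-likelihood OR from logistic regression equals the cross-product ratio ad/bc of the 2×2 table, so the sketch computes it directly. It also implements a two-sided Fisher’s exact test by summing the hypergeometric probabilities of all tables that are no more probable than the observed one, a convention used by common statistics packages. The helper names and the example table are illustrative, not study data.

```python
from math import comb

def two_by_two(adherence: list[float], udvl: list[int],
               threshold: float = 0.95) -> tuple[int, int, int, int]:
    """Cross-classify patients as (a, b, c, d):
                     UDVL   detectable
       optimal         a         b
       not optimal     c         d
    where 'optimal' means mean adherence >= threshold."""
    a = b = c = d = 0
    for x, y in zip(adherence, udvl):
        optimal = x >= threshold
        if optimal and y:
            a += 1
        elif optimal:
            b += 1
        elif y:
            c += 1
        else:
            d += 1
    return a, b, c, d

def odds_ratio(a: int, b: int, c: int, d: int) -> float:
    """Cross-product ratio ad/bc; infinite or undefined when b or c is empty."""
    if b * c == 0:
        return float("inf") if a * d > 0 else float("nan")
    return (a * d) / (b * c)

def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact p-value with all margins held fixed."""
    row1, col1, n = a + b, a + c, a + b + c + d

    def prob(x: int) -> float:
        # Hypergeometric P(top-left cell = x) given the fixed margins
        return comb(col1, x) * comb(n - col1, row1 - x) / comb(n, row1)

    p_obs = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    probs = [prob(x) for x in range(lo, hi + 1)]
    # Small relative tolerance so tables tied with the observed one are included
    return min(1.0, sum(p for p in probs if p <= p_obs * (1 + 1e-7)))

# Small illustrative table (not study data)
print(odds_ratio(3, 1, 1, 3))              # 9.0
print(fisher_exact_two_sided(3, 1, 1, 3))  # approximately 0.486 (= 34/70)
```

With SR-VAS, all eight patients with detectable viremia were classified as optimally adherent, so the ‘not optimal, detectable’ cell (d) is empty; the sample OR then collapses to 0, which illustrates why sparse cells limit the interpretability of these ORs.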

Our second method used summary receiver operating characteristic (SROC) curves. We selected the SROC method because it offers robust and interpretable global assessments of the precision of diagnostic or discriminatory tests and is widely accepted in the literature for this purpose. SROC curves were plotted for each measure across the full range of sensitivity vs. 1 − specificity for correctly classifying UDVL. In this approach, we assumed that a stronger association between a given measure and UDVL at 6 months would be reflected by a larger area under the curve (AUC). Specifically, we report the c-statistic from SAS PROC LOGISTIC, which is equivalent to the nonparametric estimate of the AUC and describes how well a fitted model discriminates between the two outcome groups (Harrell et al. 1996; Hosmer and Lemeshow 2000). As a guide to interpretation, an AUC of 0.5 denotes a worthless test that provides no information about the relationship between the test and the outcome of interest, while an AUC of 1.0 represents a perfect test (Walter 2002).
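The c-statistic reported by PROC LOGISTIC is the proportion of all (UDVL, detectable) patient pairs in which the UDVL patient has the higher predicted probability, with ties counted as one half. With a single predictor and a positive coefficient, ranking by predicted probability is the same as ranking by the adherence score itself, so the sketch below works on the scores directly. It is an illustrative stand-in under that assumption, not SAS output, and the scores are invented.

```python
def c_statistic(scores: list[float], outcome: list[int]) -> float:
    """Nonparametric AUC (concordance): over all pairs of one patient with the
    outcome (1 = UDVL) and one without (0 = detectable), the share in which the
    UDVL patient has the higher score; tied pairs count 1/2."""
    pos = [s for s, y in zip(scores, outcome) if y == 1]
    neg = [s for s, y in zip(scores, outcome) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one patient in each outcome group")
    concordant = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                concordant += 1.0
            elif p == n:
                concordant += 0.5
    return concordant / (len(pos) * len(neg))

# Invented example: mean adherence scores and 6-month outcomes for five patients.
scores = [0.98, 0.91, 0.97, 0.62, 0.91]
udvl = [1, 1, 1, 0, 0]
print(c_statistic(scores, udvl))  # 5.5 / 6, about 0.917
```

A predictor with little variability, such as a measure on which nearly every patient reports perfect adherence, produces mostly tied pairs and therefore a c-statistic near 0.5.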

Results

Data for this analysis were collected between June 2006 and May 2007. We enrolled 80 patients, of whom 69 completed the 6 months of observation (Fig. 1). Heroin use was the most common reason for study discontinuation, including death from drug overdose and compulsory incarceration at a state-controlled mandatory drug rehabilitation center. Key baseline participant characteristics are summarized in Table 2. Most participants were male, had limited schooling, and were unemployed. Nearly equal numbers of participants came from the Bai ethnic group and from the Han Chinese, who comprise the majority nationally. Two-thirds had a history of injection drug use (IDU), and most had previously been held at a compulsory drug rehabilitation center. Most had only recently started ART (median duration 2.3 months, range 0–29 months, interquartile range 0.2–8.8 months), and 25% were treatment naïve.

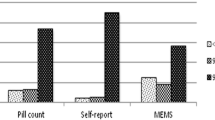

Figure 2 summarizes the mean adherence rates over the 6-month period according to each adherence measure. Apparent adherence rates varied considerably among the methods. SR-VAS recorded the highest adherence rates, with the overwhelming majority of participants reporting perfect adherence during the 6 months of observation. Pill count and EDM ‘proportion taken’ recorded somewhat lower rates of achieving ≥95% adherence, though these were still very high. By contrast, adherence according to EDM ‘proportion taken within dose time’ was substantially lower than by any of the other methods and showed the greatest variability. Surprisingly, pill counts suggested more than 100% adherence in 42.6% of participants.

The contrast in the distributions of adherence rates between the different measures was most apparent in comparing the method with the highest apparent adherence (SR-VAS), and the measure with the lowest apparent adherence rates (EDM ‘proportion taken within dose time’) (Fig. 3). SR-VAS adherence rates displayed almost no variability, with 66 of 69 (95.6%) patients reporting ≥95% adherence throughout the 6 months of observation (Fig. 3a). By contrast, according to EDM ‘proportion taken within dose time’, fewer than half achieved ‘optimal adherence’, and approximately one-sixth had measured adherence below 70% (Fig. 3b). The difference in adherence assessments between the two methods was, not surprisingly, highly significant (P < 0.001), and the two methods had no significant correlation (r = 0.14, P = 0.3).

Of the 69 patients who completed 6 months of observation, 8 had detectable viral loads, 57 had UDVL, and 4 patients’ samples could not be analyzed for technical reasons. Table 3 shows the likelihood of having UDVL if ‘optimally adherent’ according to each measure. For pill counts, and particularly for SR-VAS, the ORs for UDVL were small, with wide confidence intervals that overlapped 1.0. Notably, SR-VAS classified all eight patients with detectable viremia at the end of 6 months as optimally adherent. By contrast, EDM ‘proportion taken within dose time’ had the largest OR for UDVL and the strongest statistical association with UDVL (P = 0.03); EDM ‘proportion taken’ had the next largest OR (P = 0.09). The OR for EDM ‘proportion taken within dose time’ was approximately twice that for EDM ‘proportion taken’, which was in turn approximately twice that for the next highest measure (pill count).

Table 4 summarizes the results of the SROC analyses for each adherence measure. EDM ‘proportion taken within dose time’ had the largest AUC, followed by pill count and EDM ‘proportion taken’. By contrast, with an area under the SROC curve of 0.55, SR-VAS had essentially no predictive relationship with UDVL.

Discussion

In this longitudinal adherence study, the strongest relationship between adherence measures and UDVL was the EDM metric that incorporated dose timing. While pill count appeared useful in our SROC analysis, it performed poorly in the logistic regression analysis. Thus, overall, EDM ‘proportion taken within dose time’ was the measure that most accurately predicted UDVL rates at the end of the 6 months of follow-up.

In both the logistic regression and SROC analyses, adopting a stricter definition of adherence that incorporated dose timing strengthened the statistical relationship with UDVL beyond that of the commonly used metric based on the proportion of doses taken. This indicates that dose timing is important for successful ART, which is noteworthy for several reasons. First, adherence rates and UDVL rates were quite high in this population. Second, all of the patients were receiving NNRTI-based regimens, which are considered relatively tolerant of non-adherence (Bangsberg 2006; Bangsberg et al. 2006), presumably because of the very long plasma half-lives of NNRTIs (nevirapine and efavirenz, 30 and 50 h, respectively) (Sanford 2005). However, NNRTIs were only one of the three drugs in our patients’ regimens. All of our patients also received two nucleoside analogs with comparatively short plasma half-lives (zidovudine, 1.1 h; lamivudine, 6 h; stavudine, 1.4 h), which could render these components more sensitive to dose timing errors. This raises the question of whether dose timing would be even more important for regimens based not on NNRTIs but on drugs with much shorter plasma half-lives, such as early-generation protease inhibitors (nelfinavir, 4 h; saquinavir, 1.4 h; indinavir, 1.8 h), integrase inhibitors (raltegravir, 9 h), fusion inhibitors (enfuvirtide, 3.8 h), or triple nucleoside combinations (abacavir, 1.5 h) (Sanford 2005).
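The half-life argument above can be made concrete under a deliberately simple assumption: first-order plasma elimination, so that concentration falls by a factor of 2^(−Δ/t½) over any extra delay of Δ hours. The sketch applies this to a 1-h delay using the plasma half-lives quoted above. It ignores absorption, accumulation across doses, and intracellular drug levels, which can differ substantially from plasma levels, so it indicates only relative sensitivity, not clinical thresholds.

```python
# Plasma half-lives (hours) as quoted in the text (Sanford 2005).
HALF_LIVES_H = {
    "nevirapine": 30.0,
    "efavirenz": 50.0,
    "zidovudine": 1.1,
    "lamivudine": 6.0,
    "stavudine": 1.4,
}

def fraction_remaining(delay_h: float, half_life_h: float) -> float:
    """Factor by which plasma concentration falls over an extra delay,
    assuming simple first-order (exponential) elimination."""
    return 0.5 ** (delay_h / half_life_h)

for drug, t_half in HALF_LIVES_H.items():
    remaining = fraction_remaining(1.0, t_half)
    print(f"{drug}: {remaining:.2f} of the concentration remains after a 1-h delay")
```

Under these assumptions, a 1-h delay barely changes NNRTI levels but roughly halves zidovudine levels, consistent with the concern that the short-half-life components are more sensitive to mistiming.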

Our analysis supports earlier findings that established a hierarchy in the accuracy of different adherence measures (Arnsten et al. 2001; George et al. 2000; Liu et al. 2001): in the current analysis, EDM measures were the most accurate, followed by pill count. By contrast, the SR-VAS method had essentially no statistical association with UDVL and was not a useful measure of adherence in this population. With close to 100% of patients reporting perfect adherence, the lack of variability in SR-VAS scores precluded any explanatory relationship with clinical outcomes. More generally, this invariance created ‘sparse cells’ that greatly limited the interpretability of the ORs derived from the logistic regressions. This limitation could be only partially addressed by modifying the statistical approach (i.e., using Fisher’s exact test rather than chi-square tests); ideally, it calls for repeating this study with a larger cohort.

Thus, although our data provided no support for SR-VAS, given the small sample size of this study these findings should not be construed as a universal condemnation of the validity of the SR-VAS approach. Different approaches to self-report may vary greatly in their ability to measure adherence accurately (Simoni et al. 2006), and different measures may function differently depending on the cultural context. Lu et al. recently compared several versions of self-report in parallel, using UDVL as the outcome standard. The most important aspect of self-report proved to be the dimension in which patients were asked to estimate their adherence: as a percentage (0–100% of doses taken), as a relative frequency (‘I take my medicines: all the time… some of the time…’, etc.), or as a qualitative self-efficacy rating (‘my ability to adhere is: excellent… good…’, etc.). Surprisingly, the least accurate approach was the one that asked patients to quantify the percentage of doses they had taken, an approach conceptually analogous to the SR-VAS (Lu et al. 2008). By contrast, the most accurate self-report measure defined adherence through qualitative self-efficacy ratings. This seems counterintuitive, insofar as the rating method is the most subjective of the three and the least useful for quantifying adherence as a proportion. Yet perhaps a qualitative rating that still allows a patient to feel that his/her performance is ‘good’ encourages greater honesty than a quantitative rating that, while implying an equivalent degree of success (say, ‘70%’), emphasizes the distance from perfection. Unfortunately, this longing for perfection, particularly under the watchful eyes of their clinicians, may encourage patients to overstate their adherence.

Our data raise concerns about the validity of pill counts in this population, since nearly half of our patients had apparent adherence exceeding 100% by this method. This occurred when participants presented for follow-up visits with fewer doses remaining in their bottle than expected based on their scheduled refill time. Because one cannot actually exceed 100% adherence, we considered several explanations for this paradoxical result. The most benign were that patients lost residual tablets or simply ingested more than their prescribed number of pills (i.e., more than two doses per day). More worrisome explanations were that participants were sharing pills with another person or selling them on the black market. Another possibility was ‘pill dumping’, the practice of discarding leftover tablets before coming in for the pill count in order to feign higher than actual adherence, which is one of the principal threats to the validity of pill counts (Rudd et al. 1989). Given that pill counts fared so poorly in our logistic regression analyses, we concluded that regularly scheduled pill counts were not a reliable adherence measure in this population.

The most important strengths of this analysis were its longitudinal design and low rate of loss to follow-up. Our principal limitation was the small sample size. This is mitigated to some extent by our use of repeated measures for the adherence metrics, which increased the precision of these values at the individual subject level. Nevertheless, the sample size precluded multivariate analyses to examine which factors influenced rates of mistimed dosing, and may have reduced the precision of our estimated associations with UDVL. This issue was perhaps most pronounced in the analysis of the association between SR-VAS and UDVL, which could affect the generalizability of that result. Similarly, it could have obscured the comparative accuracy of pill counts vs. EDM ‘proportion taken’. While not a weakness of our study per se, the unique cultural context in which the study was conducted may limit its generalizability and supports the need for similar investigations in other contexts and settings.

In conclusion, our data show that incorporating dose timing into EDM-based adherence estimates improved the accuracy of predicting UDVL. Even though all of the patients were receiving NNRTI-based ART, considered relatively forgiving of non-adherence, dose timing still proved to be an important factor in clinical success. This suggests that dose timing should be addressed in adherence counseling sessions. Further research is needed to determine whether the ±1-h compliance window was optimal. A related question is whether dose timing will prove even more important for ART regimens that do not include NNRTIs but rather drugs with comparatively short half-lives, such as most currently available protease inhibitors. Lastly, the difficulties we encountered in deriving meaningful data from the SR-VAS tool suggest a need for improved self-report tools, perhaps based on more qualitative assessments, but ideally designed to capture dose timing.

References

Arnsten, J. H., Demas, P. A., Farzadegan, H., Grant, R. W., Gourevitch, M. N., Chang, C. J., et al. (2001). Antiretroviral therapy adherence and viral suppression in HIV-infected drug users: Comparison of self-report and electronic monitoring. Clinical Infectious Diseases, 33(8), 1417–1423. doi:10.1086/323201.

Bangsberg, D. R. (2006). Less than 95% adherence to nonnucleoside reverse-transcriptase inhibitor therapy can lead to viral suppression. Clinical Infectious Diseases, 43(7), 939–941. doi:10.1086/507526.

Bangsberg, D. R., Acosta, E. P., Gupta, R., Guzman, D., Riley, E. D., Harrigan, P. R., et al. (2006). Adherence-resistance relationships for protease and non-nucleoside reverse transcriptase inhibitors explained by virological fitness. AIDS (London, England), 20(2), 223–231. doi:10.1097/01.aids.0000199825.34241.49.

Bangsberg, D. R., Perry, S., Charlebois, E. D., Clark, R. A., Roberston, M., Zolopa, A. R., et al. (2001). Non-adherence to highly active antiretroviral therapy predicts progression to AIDS. AIDS (London, England), 15(9), 1181–1183. doi:10.1097/00002030-200106150-00015.

de Olalla, P. G., Knobel, H., Carmona, A., Guelar, A., Lopez-Colomez, J. L., & Cayla, J. A. (2002). Impact of adherence and highly active antiretroviral therapy on survival in HIV-infected patients. Journal of Acquired Immune Deficiency Syndromes, 30(1), 105–110. doi:10.1097/00126334-200205010-00014.

Gardner, E. M., Sharma, S., Peng, G., Hullsiek, K. H., Burman, W. J., Macarthur, R. D., et al. (2008). Differential adherence to combination antiretroviral therapy is associated with virological failure with resistance. AIDS (London, England), 22(1), 75–82. doi:10.1097/QAD.0b013e3282f366ff.

George, C. F., Peveler, R. C., Heiliger, S., & Thompson, C. (2000). Compliance with tricyclic antidepressants: The value of four different methods of assessment. British Journal of Clinical Pharmacology, 50(2), 166–171. doi:10.1046/j.1365-2125.2000.00244.x.

Gill, C. J., Hamer, D. H., Bachman-DeSilva, M., Heggenhougen, H. K., Keyi, X., Yuan, Y., et al. (2006). Divergent views regarding adherence to antiretroviral therapy between clinicians and their patients in Dali, Yunnan Province, China (Abstract: WEPE0103). Paper presented at the XVI International AIDS Conference, Toronto, Canada.

Gross, R., Bilker, W. B., Friedman, H. M., & Strom, B. L. (2001). Effect of adherence to newly initiated antiretroviral therapy on plasma viral load. AIDS (London, England), 15(16), 2109–2117. doi:10.1097/00002030-200111090-00006.

Harrell, F. E., Jr, Lee, K. L., & Mark, D. B. (1996). Multivariable prognostic models: Issues in developing models, evaluating assumptions and adequacy, and measuring and reducing errors. Statistics in Medicine, 15(4), 361–387. doi:10.1002/(SICI)1097-0258(19960229)15:4<361::AID-SIM168>3.0.CO;2-4.

Hosmer, D. W., & Lemeshow, S. (2000). Applied logistic regression. New York: Wiley.

Liu, H., Golin, C. E., Miller, L. G., Hays, R. D., Beck, C. K., Sanandaji, S., et al. (2001). A comparison study of multiple measures of adherence to HIV protease inhibitors. Annals of Internal Medicine, 134(10), 968–977.

Liu, H., Miller, L. G., Golin, C. E., Hays, R. D., Wu, T., Wenger, N. S., et al. (2007). Repeated measures analyses of dose timing of antiretroviral medication and its relationship to HIV virologic outcomes. Statistics in Medicine, 26(5), 991–1007. doi:10.1002/sim.2592.

Lu, M., Safren, S. A., Skolnik, P. R., Rogers, W. H., Coady, W., Hardy, H., et al. (2008). Optimal recall period and response task for self-reported HIV medication adherence. AIDS and Behavior, 12(1), 86–94. doi:10.1007/s10461-007-9261-4.

Mannheimer, S., Friedland, G., Matts, J., Child, C., & Chesney, M. (2002). The consistency of adherence to antiretroviral therapy predicts biologic outcomes for human immunodeficiency virus-infected persons in clinical trials. Clinical Infectious Diseases, 34(8), 1115–1121. doi:10.1086/339074.

McNabb, J., Ross, J. W., Abriola, K., Turley, C., Nightingale, C. H., & Nicolau, D. P. (2001). Adherence to highly active antiretroviral therapy predicts virologic outcome at an inner-city human immunodeficiency virus clinic. Clinical Infectious Diseases, 33(5), 700–705. doi:10.1086/322590.

Nachega, J. B., Hislop, M., Dowdy, D. W., Chaisson, R. E., Regensberg, L., & Maartens, G. (2007). Adherence to nonnucleoside reverse transcriptase inhibitor-based HIV therapy and virologic outcomes. Annals of Internal Medicine, 146(8), 564–573.

Paterson, D. L., Swindells, S., Mohr, J., Brester, M., Vergis, E. N., Squier, C., et al. (2000). Adherence to protease inhibitor therapy and outcomes in patients with HIV infection. Annals of Internal Medicine, 133(1), 21–30.

Rudd, P., Byyny, R. L., Zachary, V., LoVerde, M. E., Titus, C., Mitchell, W. D., et al. (1989). The natural history of medication compliance in a drug trial: Limitations of pill counts. Clinical Pharmacology and Therapeutics, 46(2), 169–176.

Sabin, L., Bachman-DeSilva, M., Hamer, D. H., Keyi, X., Yuan, Y., Fan, W., et al. (2008). Barriers to adherence among patients on antiretroviral medications in southern China: A qualitative study. AIDS Care.

Sanford, J. P. (2005). The Sanford guide to HIV/AIDS therapy 2005 (14th ed.). Sperryville, VA: Antimicrobial Therapy Inc.

San Lio, M. M., Carbini, R., Germano, P., Guidotti, G., Mancinelli, S., Magid, N. A., et al. (2008). Evaluating adherence to highly active antiretroviral therapy with use of pill counts and viral load measurement in the drug resources enhancement against AIDS and malnutrition program in Mozambique. Clinical Infectious Diseases, 46(10), 1609–1616. doi:10.1086/587659.

Simoni, J. M., Kurth, A. E., Pearson, C. R., Pantalone, D. W., Merrill, J. O., & Frick, P. A. (2006). Self-report measures of antiretroviral therapy adherence: A review with recommendations for HIV research and clinical management. AIDS and Behavior, 10(3), 227–245. doi:10.1007/s10461-006-9078-6.

Walter, S. D. (2002). Properties of the summary receiver operating characteristic (SROC) curve for diagnostic test data. Statistics in Medicine, 21(9), 1237–1256. doi:10.1002/sim.1099.

Wilson, I. B., Tchetgen, E., & Spiegelman, D. (2001). Patterns of adherence with antiretroviral medications: An examination of between-medication differences. Journal of Acquired Immune Deficiency Syndromes, 28(3), 259–263.

Acknowledgments

This work was supported by a cooperative agreement (GHS-A-00-03-00030-00) between Boston University and the Office of Health and Nutrition of the United States Agency for International Development (USAID), with additional support from the World Health Organization (WHO) and CDC-GAP/China. Dr. Gill’s work was supported by NIH/NIAID K23 AI 62208. We wish to thank Mary Jordan and Lois Bradshaw at USAID; Jonathan Simon, Donald Thea, and Deirdre Pierotti at Boston University; our Boston-based project managers Mini Singh and Anna Knapp; our China-based field managers James Chen, Guo Jinhua, and Matt Bobo; Cheng Feng at FHI; Ray Yip at CDC-GAP; and Connie Osborne at the WHO-Beijing office.


Cite this article

Gill, C.J., Sabin, L.L., Hamer, D.H. et al. Importance of Dose Timing to Achieving Undetectable Viral Loads. AIDS Behav 14, 785–793 (2010). https://doi.org/10.1007/s10461-009-9555-9


DOI: https://doi.org/10.1007/s10461-009-9555-9