Abstract

We trained six special education staff members in groups of three to conduct differential reinforcement of alternative behavior and differential reinforcement of other behavior procedures using a self-instructional package. Our self-instructional packages consisted of written instructions and PowerPoint™ presentations that incorporated embedded text, video modeling, and voiceover instruction. After training, we evaluated each staff member’s implementation of the reinforcement strategies with a simulated student who engaged in problem behavior. After multiple exposures to the self-instructional package in a group training format, two participants mastered both procedures, two participants mastered one procedure, and two participants did not master either procedure. We discuss the clinical implications of the findings and the utility of self-instructional packages in a school-consulting role.

Introduction

Special education teachers and paraprofessionals can be trained to use differential reinforcement procedures as integral features of behavior intervention plans (Countenance et al. 2014; DiGennaro et al. 2007; Plavnick et al. 2010; Petscher and Bailey 2006). For example, DiGennaro et al. (2007) used performance feedback to train four change agents to implement behavior support plans that incorporated differential reinforcement of alternative behavior (DRA) for one participant and differential reinforcement of other behavior (DRO) for another participant. More broadly, skilled practitioners have used behavioral skills training (BST) and pyramidal training to teach support staff to correctly implement discrete-trial teaching (Sarokoff and Sturmey 2004), preference assessments (Pence et al. 2012), functional analyses (Pence et al. 2014), and other behavioral programming (e.g., Kuhn et al. 2003). Although BST and pyramidal training both improve staff performance, these training modalities may not be widely embraced in school settings because of the substantial cost of hiring a skilled practitioner in behavior analysis (Parsons et al. 2012). In particular, public schools may lack the funds to conduct optimal training.

As previously noted, the ability to implement differential reinforcement procedures should be a core skill set for change agents employed in school settings (Wong et al. 2015). When change agents implement DRA and DRO procedures with high procedural integrity, these procedures can increase appropriate behavior, decrease problem behavior, or both (Codding et al. 2005; St. Peter Pipkin et al. 2010; Vollmer et al. 1999). However, treatment errors, particularly those made early in the treatment process, could lead to the reemergence of problem behavior and impede later treatment progress (e.g., St. Peter Pipkin et al. 2010). Thus, further research is needed to identify instructional strategies that effectively train change agents in school settings to implement common behavioral reduction interventions.

One training method, self-instructional packages (SIPs), does not require a skilled practitioner (Graff and Karsten 2012) and might offer practical advantages in settings such as public schools. By design, SIPs are antecedent-only strategies that can be used to train change agents without an expert trainer. Studies have shown that SIPs can teach change agents to correctly implement preference assessments (Deliperi et al. 2015; Delli Bovi et al. 2017; Graff and Karsten 2012; Hansard and Kazemi 2018; Lipschultz et al. 2015; Nottingham et al. 2017; Ramon et al. 2015; Weldy et al. 2014), most-to-least prompting (Giannakakos et al. 2016), discrete-trial instruction (Cardinal et al. 2017; Thiessen et al. 2009; Vladescu et al. 2012), motor imitation programs (Du et al. 2016), picture-exchange communication systems (Martocchio and Rosales 2017), and three-step prompting (Spiegel et al. 2016).

Specifically, Spiegel et al. (2016) evaluated the extent to which a SIP trained three caregivers of children with ASD to implement three-step prompting during tasks in which their children were typically noncompliant. The SIP, which could be completed in 12 min 36 s, improved caregivers’ implementation of three-step prompting with their children and promoted their application of the skills to novel tasks and settings. Notably, researchers remained within 3 m of participants while the participants viewed the SIP, consistent with prior investigations in which researchers remained in close proximity (e.g., Delli Bovi et al. 2017).

Although the findings of the Spiegel et al. (2016) study are promising, additional research is needed to evaluate the extent to which SIPs can train change agents to implement behavioral interventions in settings such as schools. School consultation models may rely on group trainings provided by noncredentialed personnel. If SIPs delivered in a group format can promote change agents’ acquisition of behavioral reduction strategies, practitioners in school settings could train more change agents to deliver empirically supported behavioral interventions in less time.

The current study aimed to evaluate the extent to which change agents learned to implement DRA and DRO procedures after viewing, in a group, written instructions and PowerPoint™ presentations containing voiceover instruction and video modeling. When delivered in a group, this SIP method has the potential to decrease training costs and increase the probability that children in special education receive their prescribed behavioral services.

Methods

Participants

As part of a week-long in-service training on evidence-based practices for students with special needs, we recruited six special education staff members (five females and one male) to participate in this study. Participants’ mean age was 35 years (range 22–66 years), and their experience in special education as a teacher or paraprofessional ranged from less than 1 year to 10 years. Table 1 displays demographic information for each participant. The first author obtained informed consent from participants at the start of the training. Sessions took place in a conference room at a public school’s administrative building. All participants reported some experience with behavioral intervention strategies but no formal training with DRA or DRO. Additionally, participants reported that they had worked or were currently working with children in special education who engaged in problem behavior.

Setting and Materials

Participants used the following materials across all phases of the study: a MotivAider® (to monitor the 30-s interval in the DRO procedure); flash cards depicting common special education targets (e.g., letters, numbers, shapes, animals, and colors); edibles to use as reinforcers (e.g., Skittles, crackers); and a token board with four tokens. The first author created the PowerPoint™ SIP in approximately 10 h. This time included filming and editing the video clips, recording the voiceover instruction, and creating the final presentation.

Experimental Design

We used a nonconcurrent multiple probe design across participants to evaluate the effects of the SIP training on staff members’ implementation of DRO and DRA procedures (e.g., Jenkins et al. 2015; LeGray et al. 2010). Participants watched the SIP in triads. We assessed skills for each participant individually (i.e., without the other participants present). Although we conducted sessions for all participants in close temporal proximity, we did not systematically alternate sessions across participants and tiers as required for a concurrent multiple baseline design (e.g., Carr 2005; Coon and Rapp 2018).

Training Simulated Students and Scripts

Prior to conducting the study, the first author used BST to train the second and third authors to act as simulated students. During the instructions component, the first author reviewed the intervention and data collection procedures and then outlined the programmed behaviors (i.e., five instances of disruptive and self-injurious behavior; four instances of compliance). Following instructions, the first author modeled acting as the simulated student. During the role-play component, simulated students practiced complying with demands and engaging in problem behavior while collecting data. The first author delivered feedback following each role-play simulation.

The first author trained the simulated students to engage in five instances of disruptive and self-injurious behavior (defined below), spaced a minimum of 15–30 s apart, during each 5-min session. In addition, the first author trained the simulated students to engage in appropriate behavior that gave the participant four opportunities to deliver a token during each session. For example, for the DRO 30-s procedure, simulated students refrained from problem behavior during four 30-s periods. For the DRA procedure, simulated students engaged in independent compliance four times. For the remaining trials, the first author trained the simulated students to comply with either a gestural or a physical prompt. The first author trained the simulated students to a 90% accuracy criterion on all of these behaviors.

Following training, the simulated students engaged in target behavior, which included self-injurious behavior and disruptive behavior, during the sessions with each participant. Self-injurious behavior was defined as any instance of the simulated student contacting her own body with an open or closed hand from a distance of 15 cm or greater, or biting any part of her own body. Disruptive behavior was defined as any instance of the simulated student throwing an object, swiping objects off a surface, ripping or tearing items, or contacting hands or feet to a surface from a distance of 15 cm or greater. Simulated students did not display target behavior while simultaneously engaging in compliance. Because the broad goal of the in-service training was to increase staff members’ skills in implementing evidence-based behavioral interventions, we selected these target behaviors on the basis of the district’s history of referring students who engaged in them for behavior-analytic services.

Response Measurement

The instructor (OL) and simulated students collected data on each participant’s correct and incorrect implementation of each component using procedure-specific data sheets for DRA and DRO. The dependent measure was the percentage of correct responses per session. We calculated it by dividing the number of correct responses by the total number of opportunities to respond and multiplying by 100%. For both the DRA and DRO procedures, participants were required to implement the six components listed in Table 2. To be scored as correct on a component, the participant had to emit the correct response within 5 s.

Observers scored component #1 (use three-step prompting including a directive, gesture, and guidance) as correct if the participant followed a no response or incorrect response with a gesture and then physical guidance within 5 s. If the participant continued to repeat the vocal directive without moving up the prompt hierarchy, observers scored this as incorrect. Observers also scored the response as incorrect if the participant did not follow the prescribed sequence (e.g., going from vocal directive directly to guidance) or repeated a prompt (e.g., multiple gestures). Observers scored component #2 (“providing behavior-specific praise following compliance”) as correct if the participant stated the task the simulated student completed before 5 s elapsed (e.g., “Excellent job finding the letter ‘A’”). Observers scored the response as incorrect if the participant stated, “Good!” without naming an observable behavior or delivered the praise after 5 s had elapsed. Observers scored component #3 as correct under either of two conditions. For the DRA procedure, the participant had to deliver a token within 5 s of an independent response (i.e., one that did not require gestural or physical prompting). For the DRO procedure, the participant had to deliver a token after the simulated student had not engaged in problem behavior for 30 s. For component #4 (refrain from commenting or changing facial expressions following the occurrence of problem behavior), observers scored the component as correct only if the participant continued presenting demands without altering their facial expression (e.g., grimacing) or commenting on the problem behavior (e.g., “Don’t do that!”). For component #5, observers scored a correct response if the participant delivered a demand following the occurrence of problem behavior.
Finally, for component #6 (allowing for the token exchange for edibles), observers scored a correct response if the simulated student earned four tokens and the participant prompted the student to exchange them (e.g., saying “You can trade in,” gesturing to the board, or saying “Remember you earned all your tokens.”).
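The prompt-sequence rule for component #1 can be written as a small scoring function. This is a hypothetical illustration, not the authors’ data sheet. It assumes each trial is recorded as an ordered list of the participant’s prompts with timestamps. It also approximates the 5-s rule by checking the gap between successive prompts rather than the latency from the student’s absent or incorrect response.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical encoding of the three-step prompting rule (component #1).
HIERARCHY = ("directive", "gesture", "guidance")
LATENCY_LIMIT_S = 5.0  # each response must occur within 5 s


@dataclass
class Prompt:
    kind: str      # "directive", "gesture", or "guidance"
    time_s: float  # seconds from the start of the trial


def score_three_step(prompts: list[Prompt], complied_on: str) -> bool:
    """Return True if a trial's prompting would be scored correct.

    `complied_on` names the prompt level at which the simulated student
    complied. The participant must deliver exactly the hierarchy up to that
    level: no repeated prompts (e.g., two gestures), no skipped levels
    (e.g., directive straight to guidance), and nothing out of order.
    """
    required = HIERARCHY[: HIERARCHY.index(complied_on) + 1]
    if tuple(p.kind for p in prompts) != required:
        return False
    # Assumption: the 5-s window is checked between successive prompts.
    gaps = (b.time_s - a.time_s for a, b in zip(prompts, prompts[1:]))
    return all(gap <= LATENCY_LIMIT_S for gap in gaps)


# Directive, gesture, then guidance, each within 5 s: scored correct.
print(score_three_step(
    [Prompt("directive", 0), Prompt("gesture", 4), Prompt("guidance", 8)],
    complied_on="guidance",
))  # True
# Directive followed directly by guidance skips the gesture: scored incorrect.
print(score_three_step(
    [Prompt("directive", 0), Prompt("guidance", 3)],
    complied_on="guidance",
))  # False
```

Comparing the whole prompt sequence against a prefix of the hierarchy handles repeated, skipped, and out-of-order prompts with a single check.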

We planned to have at least five opportunities in each session for components 1, 2, 4, and 5 in both DRA and DRO sessions. We also planned for four opportunities for component 3 and one opportunity for component 6.
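With the planned minimums, a session offers at least 5 + 5 + 4 + 5 + 5 + 1 = 25 opportunities to respond. The sketch below pools (correct, opportunities) pairs across components into the session percentage described under Response Measurement. The per-component scores in the usage line are hypothetical.

```python
from __future__ import annotations

# Planned minimum opportunities per session, keyed by component number (Table 2).
PLANNED_OPPORTUNITIES = {1: 5, 2: 5, 3: 4, 4: 5, 5: 5, 6: 1}


def percent_correct(scores: dict[int, tuple[int, int]]) -> float:
    """Session score: total correct / total opportunities x 100.

    `scores` maps a component number to (correct, opportunities). A component
    with zero opportunities (e.g., no token exchange occurred) adds nothing
    to either sum.
    """
    for component, (correct, opportunities) in scores.items():
        if not 0 <= correct <= opportunities:
            raise ValueError(f"component {component}: correct exceeds opportunities")
    total_correct = sum(c for c, _ in scores.values())
    total_opportunities = sum(n for _, n in scores.values())
    if total_opportunities == 0:
        raise ValueError("no opportunities to respond were recorded")
    return total_correct * 100.0 / total_opportunities


# Hypothetical session: two missed praise statements (component 2) out of 25.
session = {1: (5, 5), 2: (3, 5), 3: (4, 4), 4: (5, 5), 5: (5, 5), 6: (1, 1)}
print(percent_correct(session))  # 92.0
```

Because the score pools all components, a session can exceed 90% even when one component is performed poorly. Table 3’s component-level breakdown exists to reveal exactly that.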

During each 5-min session, simulated students (a) displayed five instances of problem behavior spaced a minimum of 15–30 s apart, (b) produced four opportunities to earn a token by either engaging in an independent compliant response in the DRA or refraining from problem behavior in the DRO, and (c) produced one opportunity for the participant to allow a token exchange for edibles. The fluency with which participants implemented the procedure determined whether all the opportunities for token delivery and token exchange could occur within the 5-min session. For example, if a participant responded slowly to problem behavior, the delay could have prevented the simulated student from engaging in appropriate behavior. This would have precluded the opportunity for her to earn all the tokens needed for an exchange.
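A short simulation can check whether the script itself leaves room for four DRO tokens. The sketch assumes a resetting 30-s DRO, in which the timer restarts at each problem behavior, as in the SIP’s final video model (“resetting the timer”). It also assumes the participant responds instantly and that counting stops once the four-token board is full. The problem-behavior times in the usage lines are hypothetical.

```python
from __future__ import annotations

SESSION_S = 300.0       # 5-min session
DRO_INTERVAL_S = 30.0   # DRO 30-s interval
TOKENS_TO_EXCHANGE = 4  # four-token board


def dro_token_times(
    problem_times: list[float],
    session_s: float = SESSION_S,
    interval_s: float = DRO_INTERVAL_S,
    max_tokens: int = TOKENS_TO_EXCHANGE,
) -> list[float]:
    """Times (s) at which tokens come due under a resetting DRO schedule.

    Assumption: the interval restarts at the moment of each problem behavior,
    and a token is delivered as soon as an interval elapses without one.
    """
    events = sorted(problem_times)
    tokens: list[float] = []
    interval_start = 0.0
    i = 0
    while len(tokens) < max_tokens:
        due = interval_start + interval_s
        if due > session_s:
            break  # the session ends before the next token can be earned
        if i < len(events) and events[i] < due:
            interval_start = events[i]  # problem behavior resets the timer
            i += 1
        else:
            tokens.append(due)
            interval_start = due  # next interval starts at token delivery


    return tokens


print(dro_token_times([20, 65, 110, 155, 200]))   # [50.0, 95.0, 140.0, 185.0]
# Worst case: each problem behavior occurs just before a token is due.
print(dro_token_times([29, 58, 87, 116, 145]))    # [175.0, 205.0, 235.0, 265.0]
```

Under these assumptions, each of the five programmed problem behaviors can postpone the schedule by less than 30 s. The four tokens are therefore due by about 5 × 30 + 4 × 30 = 270 s, inside the 300-s session. This is consistent with the point above that participant fluency, not the script, determined whether every opportunity occurred.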

One of the major goals of the broader district training was to instruct special education staff members (teachers and paraprofessionals) to interact with special education students with behavioral challenges during instructional time. Thus, for both DRA and DRO, we included demand delivery (e.g., discrete trials of identifying letters, numbers, shapes, animals, and colors and one-step motor directives).

Interobserver Agreement

Simulated students also collected data in vivo during baseline and SIP training for 18.5% of sessions across the DRO and DRA procedures. They were not blind to whether participants were in baseline or treatment. We calculated interobserver agreement (IOA) on a step-by-step basis for each participant by dividing the number of agreements by the number of agreements plus disagreements and multiplying by 100%. Mean IOA scores across participants for DRA and DRO were 79.4% and 83.9%, respectively. IOA may have been lower than expected because of the simulated students’ multiple roles: they collected data on participant performance while simulating a child with problem behavior.
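The step-by-step IOA calculation can be sketched as follows. The sketch assumes each observer’s record is a list with one entry per scored step opportunity (True = scored correct), aligned in the same order. The two records in the usage line are hypothetical.

```python
def step_by_step_ioa(observer_a: list[bool], observer_b: list[bool]) -> float:
    """Agreements / (agreements + disagreements) x 100, compared step by step.

    Assumption: both records list the same step opportunities in the same order.
    """
    if len(observer_a) != len(observer_b):
        raise ValueError("both observers must score the same opportunities")
    if not observer_a:
        raise ValueError("no scored opportunities")
    agreements = sum(a == b for a, b in zip(observer_a, observer_b))
    disagreements = len(observer_a) - agreements
    return agreements * 100.0 / (agreements + disagreements)


# Hypothetical records for eight step opportunities: 6 agreements, 2 disagreements.
instructor = [True, True, False, True, True, False, True, True]
simulated_student = [True, False, False, True, True, True, True, True]
print(step_by_step_ioa(instructor, simulated_student))  # 75.0
```

Comparing step by step, rather than comparing two session totals, keeps offsetting errors from masking disagreement. Two observers can reach the same session percentage while disagreeing on individual steps.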

Procedures

Prior to conducting baseline sessions, the first author provided each participant with the materials described above and one-page written instructions for the DRA and DRO procedures (“Appendix A”; Flesch Reading Ease scores of 46.9 and 45.1, respectively). She then instructed the participants to read the materials and allowed 10 min for them to do so. The first author did not deliver feedback to or answer questions from the participants. Instead, she redirected all questions with nonspecific statements (e.g., “Just try your best.”). All baseline and SIP training sessions occurred across two training days. As mentioned above, we conducted this study within a larger school district training on using evidence-based practices with children with special needs.
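For readers unfamiliar with the metric, the Flesch Reading Ease score is computed from average sentence length and average syllables per word, and higher scores indicate easier text. The text does not say which tool produced the Appendix A scores, and tools differ in how they count syllables. The sketch below therefore takes pre-counted words, sentences, and syllables as inputs. The interpretation bands follow Flesch’s conventional table.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)."""
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_band(score: float) -> str:
    """Conventional interpretation bands (higher score = easier text)."""
    bands = ((90, "very easy"), (80, "easy"), (70, "fairly easy"),
             (60, "standard"), (50, "fairly difficult"), (30, "difficult"))
    for floor, label in bands:
        if score >= floor:
            return label
    return "very difficult"


# The reported scores for the DRA and DRO instructions both fall in one band.
print(flesch_band(46.9), flesch_band(45.1))  # difficult difficult
```

By this conventional reading, both instruction sheets fall in the "difficult" range. This is relevant context for how well the written materials alone could support implementation in baseline.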

Baseline

Following the 10-min reading period, the authors instructed participants to implement the DRA and DRO procedures with a simulated student. The simulated student terminated the session if the participant indicated that she or he was done (e.g., a participant stating “I’m done.”) or after 5 min elapsed. No participant terminated the baseline sessions, and each participant engaged with the simulated student for the duration of each 5-min session. Neither the instructor nor the simulated students provided feedback to participants. The instructor did not prompt the participants to read the procedures prior to each baseline session. However, participants did have access to the written procedures between baseline sessions.

SIP Training

Prior to each SIP training session, the instructor set up the laptop for the two groups of three participants. The instructor stated to the participants:

It’s time to watch the presentation. Please do not skip ahead. The presentation will change slides by itself. After it is complete, one of you please raise your hand, and we will begin practicing the procedure.

The instructor began the presentation, then stepped away from the participants. She returned to the participants when someone raised their hand.

The participants viewed a PowerPoint™ presentation SIP (16 slides in total) on a laptop in their assigned groups. Each PowerPoint™ included a corresponding video model for every step of the task analysis for the designated reinforcement procedure. For example, on the seventh slide of the DRA-SIP, the audio stated “provide behavior-specific praise following compliance,” and the subsequent slide provided a brief video model of this component. In each brief video model, the teacher demonstrated at least one exemplar of the component. For example, following the verbal explanation of the component “use three-step prompting,” the teacher demonstrated the use of this prompting hierarchy two times.

After each step was reviewed and modeled, the final video depicted all the steps required to complete a 5-min session. For the DRO-SIP’s final video model, the teacher demonstrated the use of three-step prompting 35 times; providing behavior-specific praise following compliance 26 times; delivering a token following 30 s in the absence of problem behavior 5 times; refraining from commenting on problem behavior, resetting the timer, and placing a demand 9 times; and exchanging four tokens 1 time. For the DRA-SIP’s final video model, the teacher demonstrated the use of three-step prompting 28 times; providing behavior-specific praise following compliance 18 times; delivering the token following independent compliance 7 times; refraining from commenting on problem behavior and placing a demand 9 times; and exchanging four tokens 1 time.

In the SIPs, the first author served as the teacher and the third author served as the student. Participants watched either the DRA-SIP or the DRO-SIP in a group format following baseline sessions. Each SIP lasted approximately 12 min. “Appendix B” contains an example of a PowerPoint™ slide and the transcribed text of the corresponding voiceover instruction.

Following each presentation viewing, the authors conducted role-play simulations with each participant, individually (i.e., other group members were not present), to assess skill acquisition. During the simulations, the first author instructed the participant to conduct either the DRA or DRO procedure with the simulated student. The simulated student’s behavior during the role-play assessment matched what the student did during the video models. The authors did not provide performance feedback.

The mastery criterion was one session with 90% or higher correct implementation of the components for each procedure. Researchers allowed a maximum of four group viewings of each SIP. To avoid providing indirect performance feedback, researchers continued running sessions with participants who had reached the mastery criterion. This practice ensured participants in a group could not determine who had performed correctly on the basis of who was not required to view the SIP again. Following mastery of the DRA or DRO procedure, the authors assessed the untrained procedure before introducing its corresponding SIP. If a participant’s performance fell below mastery level in a subsequent assessment session, the skill was still considered mastered on the basis of her prior performance (e.g., Cathy’s DRO performance). However, she continued to be exposed to additional assessment and training sessions. The fourth SIP viewing occurred on the second day of training and did not differ from the procedures described above. Given the time constraints of the broader school training, we could not devote more time to additional SIP presentations or assessment sessions with the simulated student.
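The mastery rule can be expressed as a function over a participant’s sequence of session scores. The defaults mirror this study: one session at 90% or higher, and a later drop does not undo mastery. The `consecutive` parameter is a hypothetical addition that allows comparison with stricter criteria such as 100% across three consecutive sessions. The scores in the usage lines are hypothetical.

```python
from __future__ import annotations


def first_mastery_session(
    session_scores: list[float],
    threshold: float = 90.0,
    consecutive: int = 1,
) -> int | None:
    """Index of the session at which mastery is first met, or None if never.

    Once met, mastery is not revoked by later lower scores, matching how
    mastery was treated in this study.
    """
    run = 0  # current streak of sessions at or above threshold
    for i, score in enumerate(session_scores):
        run = run + 1 if score >= threshold else 0
        if run >= consecutive:
            return i
    return None


scores = [60.0, 85.0, 92.0, 80.0, 95.0]  # hypothetical percent-correct series
print(first_mastery_session(scores))              # 2  (this study's criterion)
print(first_mastery_session(scores, 100.0, 3))    # None (stricter criterion)
```

Running the same session series under both criteria gives one way to examine the trade-off discussed in the limitations: the single-session rule reaches mastery sooner but tolerates repeated errors on individual steps.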

Results

Figure 1 shows the results for the six participants. The left column of panels depicts the percentage of correctly implemented components for each participant when implementing the DRO procedure. During baseline, none of the participants demonstrated mastery of the DRO procedure; however, Cathy’s performance improved across sessions. Following the DRO-SIP, Brittany, Callie, Brandy, and Cathy reached the mastery criterion. Abby and David did not achieve mastery of the DRO procedure; however, both participants’ performance improved markedly in the final session.

The right column of panels depicts Brandy’s, Cathy’s, David’s, Abby’s, Brittany’s, and Callie’s performance when implementing the DRA procedure. During baseline, none of the participants demonstrated mastery of the DRA procedure, although Brandy’s, David’s, and Abby’s performance increased. Following the DRA-SIP, Brandy, Abby, and Brittany met the mastery criterion, whereas Cathy, Callie, and David improved following the SIP training but did not reach mastery level.

Table 3 displays each participant’s average percent correct on component skills 1–6 (defined in Table 2) during baseline sessions and following the SIP training for both DRO and DRA procedures. To aid visual analysis of component skills (e.g., Higgins et al. 2017), the boxes are coded as follows:

- black: the participant performed the component with an average of 100% accuracy;
- gray: average accuracy of 70.0–99.9%;
- white: average accuracy below 70%;
- "X": the simulated student did not provide an opportunity to demonstrate the skill.
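The shading rule for Table 3 can be sketched as a function from per-session percent correct on one component to a cell code. The sketch assumes that sessions with no opportunity for the component are marked `None` and left out of the average. Under that assumption, a cell is "X" only when no session provided an opportunity.

```python
from __future__ import annotations


def table3_shade(session_percents: list[float | None]) -> str:
    """Cell code for one participant x component x phase in Table 3.

    Assumption: None marks a session without an opportunity to perform the
    component, and such sessions are excluded from the average.
    """
    scored = [p for p in session_percents if p is not None]
    if not scored:
        return "X"       # no opportunity in any session
    mean = sum(scored) / len(scored)
    if mean >= 100.0:
        return "black"   # average of 100% accuracy
    if mean >= 70.0:
        return "gray"    # average of 70.0-99.9% accuracy
    return "white"       # average below 70% accuracy


print(table3_shade([100.0, None, 100.0]))  # black
print(table3_shade([80.0, 60.0]))          # gray
```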

For Abby, average percent correct responding on all component skills improved following the DRO-SIP, with the highest levels on components four and six. Following the DRA-SIP, Abby’s average percent correct responding across sessions also increased for all components, with the highest levels for components one, three, four, and six. Following the DRO-SIP, Brittany’s average percent correct responding improved for five component skills, with components one, three, four, and five averaging over 90% correct. Following the DRA-SIP, Brittany’s performance on all component skills increased, with notable increases on components one, three, four, and six. Callie’s performance on most DRO and DRA component skills increased following the SIP trainings. Specifically, it improved to high levels for component one (DRO only) and components three, four, and five (both DRO and DRA).

For Brandy, average percent correct responding on five of the six component skills improved or remained stable following the DRO-SIP. Following the DRA-SIP, Brandy’s average percent correct responding increased for components one, three, four, and five. Following the DRO-SIP, Cathy’s average percent correct responding increased on five component skills, although her performance on component three remained below mastery level. For the DRA procedure, Cathy’s performance increased on five component skills; the exception was a slight decrease for component one. Following the DRO-SIP, David’s performance increased on five component skills (the exception was a minimal decrease for component one). However, only one of these component skills (component six) increased to a high level. On average, David’s performance on the DRA procedure improved across all component skills, with the highest levels achieved for components one, four, and six.

As shown in Table 1, Abby and Brandy required several SIP views, and Brittany required only one view to achieve mastery for the DRA procedures. Callie, Brittany, and Brandy needed three to four SIP views to achieve mastery on the DRO procedures, whereas Cathy required only one SIP view to perform at mastery levels.

Discussion

Overall, the SIPs produced mastery performance for seven of 12 training opportunities across participants and differential reinforcement procedures. Notably, two participants (Brittany and Brandy) mastered both procedures and every participant displayed improved performance for both procedures following SIP training. By contrast, two participants (Abby and David) did not master either procedure with the SIP training, though their performance increased across sessions in the SIP phases. Interestingly, most participants’ performance for providing behavior-specific praise for compliance (component two) did not improve appreciably (with average increases for 3 of the 12 training opportunities). Relatedly, several training opportunities had at least one session in which there was no opportunity to score component six. It is possible that participants’ slow responding contributed to fewer opportunities to observe token exchanges.

As a whole, findings from this study are important for two reasons. First, this investigation extends prior literature by evaluating a SIP under environmental arrangements that a school consultant may encounter. As in the Weldy et al. (2014) study, we presented the SIP to groups of participants without oversight from a trainer. By decreasing the time demands on qualified trainers, SIPs may make broader training more feasible in settings (e.g., public schools) that have limited access to qualified trainers. Although not all participants reached mastery for both procedures, we extended prior studies (Deliperi et al. 2015; Delli Bovi et al. 2017; Giannakakos et al. 2016; Lipschultz et al. 2015; Spiegel et al. 2016) by having participants view the SIP in groups, thereby increasing the efficiency of this training approach. Given our outcomes, future research should continue to determine whether SIPs are effective training tools when presented to groups of individuals simultaneously.

It is worth noting that the differential reinforcement procedures taught in the current study were likely more complicated than the procedures taught in prior studies. For example, preference assessments involve a sequence of discrete responses in which completion of one step sets the occasion for the next. To illustrate, Weldy et al. (2014) reported seven steps for setting up and conducting the multiple-stimulus preference assessment, and the simulated students’ behavior determined whether the participant (change agent) conducted four of the seven steps. With the DRA and DRO procedures, participants implemented six steps; however, simulated student behavior influenced whether and when the change agent implemented every step. During our procedure, a participant’s implementation of the three-step prompting procedure depended on whether the simulated student complied with a vocal directive, complied with a gesture prompt, or engaged in problem behavior. In addition, participants needed to implement multiple components (e.g., refraining from commenting or changing facial expression paired with placing a demand following problem behavior) in close temporal proximity. In short, teaching change agents the intricacies of DRA and DRO procedures might require some individualized training time with a skilled practitioner.

Some limitations of the current study warrant discussion. First, the SIP presentation may have had carryover effects on the untrained differential reinforcement procedure. Specifically, Brandy’s performance increased during the DRA baseline, and Cathy’s performance increased during the DRO baseline. The similarity of some DRA and DRO components may have contributed to increased levels of responding in baseline for these participants. Future research should investigate the extent to which teaching one behavioral reduction strategy facilitates response generalization to novel behavioral reduction strategies. Researchers conducted this study in an applied, in-service training setting with the ultimate goal of exposing all participants to both SIPs to promote acquisition.

Second, observers produced some suboptimal IOA scores. This may be due to participants’ frequent, incorrect delivery of nonspecific praise, both contingent and noncontingent. Although providing noncontingent attention is not as problematic as delivering contingent attention for problem behavior, this error may have contributed to some participants’ failure to improve on component two for the DRA and DRO procedures. Beyond this training, it is unclear whether this error would have undermined the effects of DRA, DRO, or both when aiming to reduce problem behavior in school settings.

A third limitation is that we set the mastery criterion at one session at 90%. This criterion does not demonstrate steady-state responding and provides weaker evidence that the skill is in the participant’s repertoire. Global measures of staff performance may mask repeated errors on specific steps (Cook et al. 2015). Our analysis of participants’ component skills showed that participants did make repeated errors on specific steps while still meeting the mastery criterion. Previous staff training studies have addressed this concern in one or both of two ways (e.g., Lipschultz et al. 2015; Weldy et al. 2014). Some required 100% correct implementation. Others scored a step as correct only if the participant implemented it correctly across all possible opportunities in a session. Although 100% correct implementation across three consecutive sessions is ideal, school consultants may have to train 20 individuals within 2 h, potentially making such performance difficult to achieve. Future research should evaluate the efficiency of differing mastery criteria for trainee performance within specific time frames and the extent to which these criteria influence change agents’ performance in the school setting.

Another limitation of this study is that we did not evaluate participants’ performance with actual consumers of behavior-analytic services. Nevertheless, simulated consumers provided the opportunity to expose trainees to a wide range of possible consumer responses. In turn, trainees may be in a better position to implement the technologies correctly when actual consumers engage in similar responses. Moreover, a number of studies have demonstrated generalization of acquired skills from simulated to actual consumers following training with SIPs (Deliperi et al. 2015; Delli Bovi et al. 2017; Du et al. 2016; Giannakakos et al. 2016; Lipschultz et al. 2015; Martocchio and Rosales 2017; Vladescu et al. 2012). However, very few studies have included such generalization measures when training behavioral reduction strategies (Spiegel et al. 2016). Without such data, school consultants should use caution when considering this training strategy for instructing school personnel. Future researchers should study the specific parameters of SIPs that are necessary to produce correct responding when change agents implement behavioral reduction programs (e.g., duration of the package, use of colloquial language, number of procedure components). From there, researchers could evaluate the efficiency, preference, maintenance, and generalization of SIPs compared with more time- and resource-intensive methods (e.g., BST) in school settings.

It is also worth noting that Cathy’s correct responding during one DRO session decreased after she had demonstrated mastery-level performance in prior sessions. She ultimately recovered mastery-level performance; however, this finding suggests that skills acquired from a SIP might not maintain over time without feedback. That is, change agents’ correctly imitated behavior may require a supporting consequence (e.g., praise from a supervisor) to maintain. For example, Nottingham et al. (2017) found that participants mastered preference assessments and generalized their skills to consumers when experimenters delivered brief feedback. Alternatively, brief group feedback (see Luna et al. 2018) would have been a relatively easy way to enhance performance without much additional time. Future researchers should continue to investigate how best to deliver feedback when training groups.

This study examined whether a SIP would increase special education staff members’ correct implementation of differential reinforcement strategies, which are arguably more complex than the preference assessments trained with SIPs in prior investigations (Giannakakos et al. 2016; Graff and Karsten 2012; Weldy et al. 2014). School consultants are often tasked with training novice staff members to implement behavior plans (Hogan et al. 2015). Consequently, it is important to continue evaluating cost-efficient training strategies, regardless of procedural complexity, so that students in special education receive quality behavioral services.

References

Cardinal, J. R., Gabrielsen, T. P., Young, E. L., Hansen, B. D., Kellems, R., Hoch, H., et al. (2017). Discrete trial teaching interventions for students with Autism: Web-based video modeling for paraprofessionals. Journal of Special Education Technology, 32, 138–148. https://doi.org/10.1177/0162643417704437.

Carr, J. E. (2005). Recommendations for reporting multiple-baseline designs across participants. Behavioral Interventions, 20, 219–224. https://doi.org/10.1002/bin.191.

Codding, R. S., Feinberg, A. B., Dunn, E. K., & Pace, G. M. (2005). Effects of immediate performance feedback on implementation of behavior support plans. Journal of Applied Behavior Analysis, 38, 205–219. https://doi.org/10.1901/jaba.2005.98-04.

Cook, J. E., Subramaniam, S., Brunson, L. Y., Larson, N. A., Poe, S. G., & St. Peter, C. (2015). Global measures of treatment integrity may mask important errors in discrete-trial training. Behavior Analysis in Practice, 8, 37–47. https://doi.org/10.1007/s40617-014-0039-7.

Coon, J. C., & Rapp, J. T. (2018). Application of multiple baseline designs in behavior analytic research: Evidence for the influence of new guidelines. Behavioral Interventions, 33, 160–172. https://doi.org/10.1002/bin.1510.

Countenance, A., Sheldon, J., Sherman, J., Schroeder, S., Bell, A., & House, R. (2014). Assessing the effects of a staff training package on the treatment integrity of an intervention for self-injurious behavior. Journal of Developmental and Physical Disabilities, 26, 371–389. https://doi.org/10.1007/s10882-014-9372-6.

Deliperi, P., Vladescu, J. C., Reeve, K. F., Reeve, S. A., & DeBar, R. M. (2015). Training staff to implement a paired-stimulus preference assessment using video modeling with voiceover instruction. Behavioral Interventions, 30, 314–332. https://doi.org/10.1002/bin.1421.

Delli Bovi, G. M. D., Vladescu, J. C., DeBar, R. M., Carroll, R. A., & Sarokoff, R. A. (2017). Using video modeling with voice-over instruction to train public school staff to implement a preference assessment. Behavior Analysis in Practice, 10, 72–76. https://doi.org/10.1007/s40617-016-0135-y.

DiGennaro, F. D., Martens, B. K., & Kleinmann, A. E. (2007). A comparison of performance feedback procedures on teachers’ treatment implementation integrity and students’ inappropriate behavior in special education classrooms. Journal of Applied Behavior Analysis, 40, 447–461. https://doi.org/10.1901/jaba.2007.40-447.

Du, L., Nuzzolo, R., & Alonso-Álvarez, B. (2016). Potential benefits of video training on fidelity of staff protocol implementation. Behavioral Development Bulletin, 21, 110–121. https://doi.org/10.1037/bdb0000019.

Giannakakos, A. R., Vladescu, J. C., Kisamore, A. N., & Reeve, S. A. (2016). Using video modeling with voiceover instruction plus feedback to train staff to implement direct teaching procedures. Behavior Analysis in Practice, 9, 126–134. https://doi.org/10.1007/s40617-015-0097-5.

Graff, R. B., & Karsten, A. M. (2012). Evaluation of a self-instruction package for conducting stimulus preference assessments. Journal of Applied Behavior Analysis, 45, 69–82. https://doi.org/10.1901/jaba.2012.45-69.

Hansard, C., & Kazemi, E. (2018). Evaluation of video self-instruction for implementing paired-stimulus preference assessments. Journal of Applied Behavior Analysis, 51, 675–680. https://doi.org/10.1002/jaba.476.

Higgins, W. J., Luczynski, K. C., Carroll, R. A., Fisher, W. W., & Mudford, O. C. (2017). Evaluation of a telehealth training package to remotely train staff to conduct a preference assessment. Journal of Applied Behavior Analysis, 50, 238–251. https://doi.org/10.1002/jaba.37.

Hogan, A., Knez, N., & Kahng, S. (2015). Evaluating the use of behavioral skills training to improve school staffs’ implementation of behavior intervention plans. Journal of Behavioral Education, 24, 242–254. https://doi.org/10.1007/s10864-014-9213-9.

Jenkins, S. R., Hirst, J. M., & Reed, F. D. D. (2015). The effects of discrete-trial training commission errors on learner outcomes: An extension. Journal of Behavioral Education, 24, 196–209. https://doi.org/10.1007/s10864-014-9215.

Kuhn, S. A., Lerman, D. C., & Vorndran, C. M. (2003). Pyramidal training for families of children with problem behavior. Journal of Applied Behavior Analysis, 36, 77–88. https://doi.org/10.1901/jaba.2003.36-77.

LeGray, M. W., Dufrene, B. A., Sterling-Turner, H., Olmi, D. J., & Bellone, K. (2010). A comparison of function-based differential reinforcement interventions for children engaging in disruptive classroom behavior. Journal of Behavioral Education, 19, 185–204. https://doi.org/10.1007/s10864-010-9109-2.

Lipschultz, J. L., Vladescu, J. C., Reeve, K. F., Reeve, S. A., & Dipsey, C. R. (2015). Using video modeling with voiceover instruction to train staff to conduct stimulus preference assessments. Journal of Developmental and Physical Disabilities, 27, 505–532. https://doi.org/10.1007/s10882-015-9434-4.

Luna, O., Petri, J. M., Palmier, J., & Rapp, J. T. (2018). Comparing accuracy of descriptive assessment methods following a group training and feedback. Journal of Behavioral Education. https://doi.org/10.1007/s10864-018-9297-8.

Martocchio, N., & Rosales, R. (2017). Video modeling with voice-over instructions to teach implementation of the picture exchange communication system. Behavior Analysis: Research and Practice, 17, 142–154. https://doi.org/10.1037/bar0000069.

Nottingham, C. L., Vladescu, J. C., Giannakakos, A. R., Schnell, L. K., & Lipschultz, J. L. (2017). Using video modeling with voiceover instruction plus feedback to train implementation of stimulus preference assessments. Learning and Motivation, 58, 37–47. https://doi.org/10.1016/j.lmot.2017.01.008.

Parsons, M. B., Rollyson, J. H., & Reid, D. H. (2012). Evidence-based staff training: A guide for practitioners. Behavior Analysis in Practice, 5(2), 2–11.

Pence, S. T., St. Peter, C. C., & Giles, A. F. (2014). Teacher acquisition of functional analysis methods using pyramidal training. Journal of Behavioral Education, 23, 132–149. https://doi.org/10.1007/s10864-013-9182-4.

Pence, S. T., St. Peter, C. C., & Tetreault, A. S. (2012). Increasing accurate preference assessment implementation through pyramidal training. Journal of Applied Behavior Analysis, 45, 345–359. https://doi.org/10.1901/jaba.2012.45-345.

Petscher, E. S., & Bailey, J. S. (2006). Effects of training, prompting, and self-monitoring on staff behavior in a classroom for students with disabilities. Journal of Applied Behavior Analysis, 39, 215–226. https://doi.org/10.1901/jaba.2006.02-05.

Plavnick, J. B., Ferreri, S. J., & Maupin, A. N. (2010). The effects of self-monitoring on the procedural integrity of a behavioral intervention for young children with developmental disabilities. Journal of Applied Behavior Analysis, 43, 315–320. https://doi.org/10.1901/jaba.2010.43-315.

Ramon, D., Yu, C. T., Martin, G. L., & Martin, T. (2015). Evaluation of a self-instructional manual to teach multiple-stimulus without replacement preference assessments. Journal of Behavioral Education, 3, 289–303. https://doi.org/10.1007/s10864-015-9222-3.

Sarokoff, R. A., & Sturmey, P. (2004). The effects of behavioral skills training on staff implementation of discrete-trial teaching. Journal of Applied Behavior Analysis, 37, 535–538. https://doi.org/10.1901/jaba.2004.37-535.

Spiegel, H. J., Kisamore, A. N., Vladescu, J. C., & Karsten, A. M. (2016). The effects of video modeling with voiceover instruction and on-screen text on parent implementation of guided compliance. Child & Family Behavior Therapy, 38, 299–317. https://doi.org/10.1080/07317107.2016.1238690.

St. Peter Pipkin, C., Vollmer, T. R., & Sloman, K. N. (2010). Effects of treatment integrity failures during differential reinforcement of alternative behavior: A translational model. Journal of Applied Behavior Analysis, 43, 47–70. https://doi.org/10.1901/jaba.2010.43-47.

Thiessen, C., Fazzio, D., Arnal, L., Martin, G. L., Yu, C. T., & Keilback, L. (2009). Evaluation of a self-instructional manual for conducting discrete-trials teaching with children with autism. Behavior Modification, 33, 360–373. https://doi.org/10.1177/0145445508327443.

Vladescu, J. C., Carroll, R., Paden, A., & Kodak, T. M. (2012). The effects of video modeling with voiceover instruction on accurate implementation of discrete-trial instruction. Journal of Applied Behavior Analysis, 45, 419–423. https://doi.org/10.1901/jaba.2012.45-419.

Vollmer, T., Roane, H., Ringdahl, J., & Marcus, B. (1999). Evaluating treatment challenges with differential reinforcement of alternative behavior. Journal of Applied Behavior Analysis, 32, 9–23. https://doi.org/10.1901/jaba.1999.32-9.

Weldy, C. R., Rapp, J. T., & Capocasa, K. (2014). Training staff to implement brief stimulus preference assessments. Journal of Applied Behavior Analysis, 47, 214–218. https://doi.org/10.1002/jaba.98.

Wong, C., Odom, S. L., Hume, K. A., Cox, A. W., Fettig, A., Kucharczyk, S., et al. (2015). Evidence-based practices for children, youth, and young adults with autism spectrum disorder: A comprehensive review. Journal of Autism and Developmental Disorders, 45, 1951–1966. https://doi.org/10.1007/s10803-014-2351-z.

Acknowledgement

We would like to thank Sarah Bedell for allowing us to use her materials (tokens, token board, and flashcards) for the current study.

Ethics declarations

Conflict of interest

All authors declare that there are no conflicts of interest.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

Differential Reinforcement of Alternative (DRA) Behavior Intervention Plan

Problem Behaviors

Self-injurious behavior: Any instance of the student contacting her own body with an open or closed hand from a distance of 6 in or greater, or any instance of the student biting any part of her own body.

Disruption: Any instance of the student throwing an object, swiping objects off a surface, ripping or tearing items, or contacting a surface with her hands or feet from a distance of 6 in or greater.

1. Directions should be clear and concise (e.g., “Touch the letter A”).

2. Use three-step prompting to gain compliance.
   a. Deliver the instruction.
   b. Wait 5 s, then gesture or model the correct response.
   c. Wait 5 s, then physically guide the correct response.

Reinforcement Procedures

3. Deliver a token and behavior-specific praise (e.g., “Great job finding the letter A!”) within 5 s of each instance of independent compliance with an instruction.

4. Deliver behavior-specific praise (e.g., “Awesome touching the letter C!”) within 5 s of each instance of compliance following a gesture/model prompt.

5. After the student earns 4 tokens, say “You earned all your tokens! What do you want to trade for?” and allow the student to put the tokens into your hand before delivering the edible item. Allow the student 15 s to consume the edible before placing a new demand.

6. Refrain from commenting or reacting facially if self-injurious behavior or disruption occurs.

7. Following any instance of self-injurious behavior or disruption, immediately (within 5 s of the behavior) use physical guidance to complete the demand. If no demand was in place, place a new demand and deliver immediate physical guidance to gain compliance with it.

8. Refrain from delivering praise or a token following physical guidance. Following physical guidance, deliver a new demand.
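The consequence rules in steps 3 through 8 can be read as a small state machine around the token board. The Python sketch below is a simplified, hypothetical model of those rules (the class and outcome names are ours, not part of the plan); it records the 5-s and 15-s values only as labels rather than timing anything, and it does not model the case in step 7 in which no demand is in place.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List

TOKENS_FOR_EXCHANGE = 4  # step 5: exchange after 4 tokens
CONSUMPTION_TIME_S = 15  # step 5: time allowed to consume the edible


class Outcome(Enum):
    INDEPENDENT = 1        # complied with the instruction alone (step 3)
    GESTURE_MODEL = 2      # complied after a gesture/model prompt (step 4)
    PHYSICAL_GUIDANCE = 3  # compliance required physical guidance (step 8)
    PROBLEM_BEHAVIOR = 4   # self-injurious behavior or disruption (steps 6-8)


@dataclass
class TokenBoard:
    """Hypothetical model of the DRA token board; not software used in the study."""
    tokens: int = 0
    exchanges: int = 0

    def consequences(self, outcome: Outcome) -> List[str]:
        """Staff actions the DRA plan calls for after one trial outcome."""
        if outcome is Outcome.INDEPENDENT:
            self.tokens += 1
            actions = ["token", "behavior-specific praise"]
            if self.tokens == TOKENS_FOR_EXCHANGE:
                # Step 5: exchange the full board for an edible, then clear it.
                self.tokens = 0
                self.exchanges += 1
                actions += ["ask what the student wants to trade for",
                            "collect tokens, then deliver edible",
                            f"wait {CONSUMPTION_TIME_S} s before the next demand"]
            return actions
        if outcome is Outcome.GESTURE_MODEL:
            # Step 4: praise only; the token count is unchanged.
            return ["behavior-specific praise"]
        if outcome is Outcome.PHYSICAL_GUIDANCE:
            # Step 8: no praise or token, then a new demand.
            return ["no praise or token", "new demand"]
        # Steps 6-8: no comment or facial reaction, physical guidance within 5 s
        # to complete the demand, no praise or token, then a new demand.
        return ["no comment or facial reaction", "physical guidance within 5 s",
                "no praise or token", "new demand"]
```

In this sketch only independent compliance advances the board, so three independent responses followed by a gesture-prompted response leave the count at three, and the next independent response triggers the exchange.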

Differential Reinforcement of Other (DRO) Behavior Intervention Plan

Problem Behaviors

Self-injurious behavior: Any instance of the student contacting her own body with an open or closed hand from a distance of 6 in or greater, or any instance of the student biting any part of her own body.

Disruption: Any instance of the student throwing an object, swiping objects off a surface, ripping or tearing items, or contacting a surface with her hands or feet from a distance of 6 in or greater.

1. Directions should be clear and concise (e.g., “Touch the letter A”).

2. Use three-step prompting to gain compliance.
   a. Deliver the instruction.
   b. Wait 5 s, then gesture or model the correct response.
   c. Wait 5 s, then physically guide the correct response.

Reinforcement Procedures

3. When the 30-s timer goes off, deliver a token and behavior-specific praise (e.g., “Great job working!”) if the student has refrained from self-injurious behavior and disruption.

4. Deliver behavior-specific praise (e.g., “Awesome touching the letter C!”) within 5 s of each instance of compliance following a gesture/model prompt.

5. After the student earns 4 tokens, say “You earned all your tokens! What do you want to trade for?” and allow the student to put the tokens into your hand before delivering the edible item. Allow the student 15 s to consume the edible before placing a new demand.

6. Refrain from commenting or reacting facially if self-injurious behavior or disruption occurs.

7. Following any instance of self-injurious behavior or disruption, immediately (within 5 s of the behavior) use physical guidance to complete the demand. If no demand was in place, place a new demand and deliver immediate physical guidance to gain compliance with it.

8. Refrain from delivering praise or a token following physical guidance. Following physical guidance, deliver a new demand and reset the timer.
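The main difference from the DRA plan is the 30-s interval in steps 3 and 8, which resets after problem behavior. The Python sketch below computes when tokens would be due in a session under such a resetting schedule. It assumes, beyond what the plan states, that the timer also restarts after each token, that the reset occurs at the moment of problem behavior (time spent on physical guidance and token exchanges is ignored), and that `dro_token_times` is a hypothetical helper rather than any published tool.

```python
from typing import List

DRO_INTERVAL_S = 30.0  # step 3 of the DRO plan


def dro_token_times(problem_behavior_times: List[float], session_length_s: float,
                    interval_s: float = DRO_INTERVAL_S) -> List[float]:
    """Times (in s) at which a token is due under a resetting DRO schedule.

    Assumptions (not stated in the plan): the timer restarts after each token,
    and it resets at the moment of any problem behavior, ignoring the time
    taken by physical guidance or token exchanges.
    """
    tokens: List[float] = []
    interval_start = 0.0
    events = sorted(problem_behavior_times)
    i = 0
    while True:
        due = interval_start + interval_s
        if due > session_length_s:
            break  # the interval cannot finish before the session ends
        if i < len(events) and events[i] < due:
            # Problem behavior before the timer went off: no token; reset.
            interval_start = events[i]
            i += 1
        else:
            # Interval completed without problem behavior: token is due.
            tokens.append(due)
            interval_start = due
    return tokens
```

For example, with a single instance of problem behavior at 45 s in a 120-s session, `dro_token_times([45.0], 120.0)` returns `[30.0, 75.0, 105.0]`: the instance at 45 s cancels the interval that would have ended at 60 s and starts a new one.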

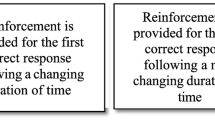

Appendix B

Sample Slide for DRA-SIP and Corresponding Voiceover Script

Voiceover Instruction (each bullet animates one by one).

- “When implementing a DRA, use 3-step prompting to gain compliance with the child.
- First, provide a clear, short instruction like ‘Touch 4.’
- Wait 3–5 s; if the child engages in the correct response, provide behavior-specific praise and a token: ‘Nice job, touching four!’
- If the child is incorrect or doesn’t respond, provide a gesture prompt, or point to the correct response.
- Following the gesture prompt, if the child is correct, provide behavior-specific praise only.
- If the child is still incorrect, provide physical guidance by placing your hand on top of the child’s hand to guide the correct response.
- Following physical guidance, do not provide praise or comment.”

After 3 s, the slide switches to a 30-s video model of 3-step prompting.
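The branching in this script can be summarized as a short function that lists, in order, what the staff member does on a single trial. This is a hypothetical Python sketch of the voiceover script only; it encodes the 3–5 s wait from the slide rather than the 5-s waits listed in the written plans, and the function and parameter names are illustrative.

```python
from typing import List


def three_step_trial(correct_after_instruction: bool,
                     correct_after_gesture: bool = False) -> List[str]:
    """Ordered staff actions for one DRA trial using three-step prompting.

    correct_after_instruction: the child responds correctly within 3-5 s of
        the instruction.
    correct_after_gesture: the child responds correctly after the gesture
        prompt (only consulted when the first response was incorrect or absent).
    """
    actions = ["give a clear, short instruction", "wait 3-5 s"]
    if correct_after_instruction:
        # Independent correct response: praise plus a token.
        return actions + ["behavior-specific praise", "token"]
    actions.append("gesture prompt (point to the correct response)")
    if correct_after_gesture:
        # Correct response after the gesture prompt: praise only, no token.
        return actions + ["behavior-specific praise only"]
    # Still incorrect: hand-over-hand guidance, then no praise or comment.
    return actions + ["physical guidance (hand on top of the child's hand)",
                      "no praise or comment"]
```

For example, `three_step_trial(False, True)` ends with praise only, which mirrors the script’s distinction between independent and prompted responses.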

About this article

Cite this article

Luna, O., Nuhu, N.N., Palmier, J. et al. Using a Self-Instructional Package to Train Groups to Implement Reinforcement Strategies. J Behav Educ 28, 389–407 (2019). https://doi.org/10.1007/s10864-018-09319-0

DOI: https://doi.org/10.1007/s10864-018-09319-0