Abstract

In this paper, a new variational image denoising model is proposed. The new model could be seen to be a two-step method. In the first step, structure tensor analysis is used to infer something about the local geometry. The eigenvectors and the eigenvalues of the structure tensor are used in the construction of the denoising energy. In the second step, the actual variational denoising takes place. The steps are coupled in the sense that the energy expression is built using the underlying image, not the data. Two variable exponents are incorporated into the regularizer in order to reduce the staircasing effect, which is often present in the methods based on the first-order partial derivatives, and to increase smoothing along the image boundaries. In addition, two pointwise weight functions try to help to preserve small-scale details. In the theoretical part, the existence of a minimizer of a weak form of the original energy is considered. In the numerical part, an algorithm based on iterative minimization is presented and the numerical experiments demonstrate the possible advantages of the new model over some existing variational and partial differential equations methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Variational image denoising methods have attracted much attention since the model based on the minimization of the total variation was proposed by Rudin, Osher and Fatemi (ROF) [27]. Despite its success, some drawbacks of the ROF method are its tendency to produce piecewise constant images and loss of contrast. Since the introduction of the model, several modifications have been proposed.

In this paper, it is assumed that the noise is additive and white. Mathematically,

where \(f_0:{\varOmega }\rightarrow \mathbb {R}\) is the original noise-free image, \(n:{\varOmega }\rightarrow \mathbb {R}\) is the white noise and \(f:{\varOmega }\rightarrow \mathbb {R}\) is the noisy image or the measurement which is available. Variational models to find approximation of \(f_0\) are formulated as energy minimization problems.

Methods, which are based on some kind of orientation matching, could be seen to form one group which tries to improve the ROF method. In these methods, some smoothing process is applied on \(\nabla f/|\nabla f|\) in order to get an approximation v of \(\nabla f_0/|\nabla f_0|\). In the second step, this vector field v is used to guide the variational denoising in such a way that the energy minimization favours images u such that \(\nabla u\) is approximately pointwise parallel to v. For methods belonging to this group, see e.g. the Lysaker–Osher–Tai model [25], the TV-Stokes model [26] and the model proposed in [18].

One group of denoising methods which avoids the staircasing effect is based on the use of the higher-order partial derivatives. For instance in [5], the concept of total generalized variation was introduced where the regularizer automatically balances between the first- and higher-order derivatives. In [23], an adaptive, directionally dependent regularizer based on the first- and second-order derivatives was proposed. Article [11] presents a general framework for higher integer-order variational methods and non-linear diffusion filtering for image denoising. Higher-order methods are not limited to integer-order differentiability, e.g. in [10] fractional-order derivatives appear in the proposed regularizer to handle one-dimensional signals. Earlier, the use of fractional derivatives had appeared in differential equations form, see e.g. [12]. The work [19] on higher degree total variation contains also an interesting discussion regarding the separability of the total variation which is relevant also for our work in this paper.

The methods based on the use of variable exponents are also intended to reduce the staircasing. Edge-preserving regularization is used in the areas where the gradient of the noise-free image is probably large and in the areas where the gradient is probably small, isotropic smoothing is used. The first method on this area was [2] where the variable exponent in the regularizer was based on the underlying image. Due to the non-convexity of the method, in [8] a convex variant of [2] was proposed where the exponent is based solely on the noisy image. See also the subsequent papers [3, 16, 24] on denoising which are based on the use of variable exponents and first-order partial derivatives.

Studying image structures can be challenging due to noise. It could be preferable to use operations which are based on local neighbourhoods, since they could be more robust to noise than operations which are just pointwise based. For instance, structure tensor (see e.g. [14] for a pioneering work on the concept) provides a way to study the dominant orientation of the gradient in a neighbourhood. In the partial differential equations and calculus of variations approaches to image processing, the early works which utilize the structure tensor, are formulated in the partial differential equations framework, see Weickert [32] and Tschumperlé [30]. Currently, there are also variational methods which utilize the structure tensor.

In [15], Grasmair and Lenzen considered an anisotropic total variation model based on the minimization of the energy

where the pointwise anisotropy matrix A(u) is constructed from the linear structure tensor of u. In this model, the approximation of the unit vector field parallel to \(\nabla u\) and the actual denoising step are coupled in the sense that the unit vector field is estimated from the underlying image u, not from the data.

In [28], a two-step method was proposed for image denoising. In the first step, the orientations of the image structures are estimated from the data. In the second step, this directional information is used to build an energy functional to be minimized. One of the regularizers in that paper has the form

in the continuous domain where \(r_1\) and \(r_2\) are the pointwise directions derived in the step 1.

In [13], an iterative two-step method is proposed for image reconstruction where in the first step the structure tensor of the original image is estimated. In the second step, this information is used to construct an energy expression whose minimization favours solutions respecting the local geometry obtained by the structure tensor analysis. In [21], the so-called structure tensor total variation is proposed to solve inverse problems in imaging. The regularizer is based on penalizing the eigenvalues of the structure tensor. The work [20] extends [21] in the sense that in [20] the regularizer is based on the eigenvalues of a non-local version of the structure tensor. In [22], solution adaptive variants of the total variation were considered where the adaptivity is modelled as a fixed point problem. In [36], a gradient energy total variation is proposed using the gradient energy tensor.

The linear structure tensor \(K_\rho *(\nabla u_\sigma \nabla u_\sigma ^\mathrm{T})\), where \(\rho >0\) and \(\sigma >0\) are fixed and K is the Gaussian kernel, can also be obtained by applying the linear isotropic heat equation to the elements of the matrix \(\nabla u_\sigma \nabla u_\sigma ^\mathrm{T}\) where the stopping time depends on \(\rho \). There are also non-linear methods to smooth the tensor field \(\nabla u_\sigma \nabla u_\sigma ^\mathrm{T}\) which aim at better edge preservation, see e.g. Brox et al. [6] and Hahn and Lee [17].

Regarding the works which have some resemblance to our work we also mention [1] where a directional total variation was considered and [35] where an adaptive directional total variation model was proposed for latent fingerprint segmentation.

In this paper, we consider a new adaptive variational image denoising model which is mostly inspired by the works [3, 15]. The regularizer has a formulation which should help to preserve the edges in the estimated directions given by the structure tensor but the regularizer should also encourage smoothing along the edges. On the other hand, in the estimated smooth areas, the regularizer simplifies to isotropic smoothing. The pointwise positive weights in the regularizer also try to help to preserve small-scale details.

In [3], the authors considered variants of [2] for denoising and the regularizer was an interpolation between isotropic total variation and Tikhonov regularization. On the other hand, we consider in this paper a regularizer which in some sense is an interpolation between directional varying anisotropic total variation and Tikhonov regularization.

In the new model we consider in this paper, similar to [15], the approximation of the unit vector field parallel to \(\nabla u\) and the actual denoising step are coupled in the sense that the directional vector field is computed from the underlying image. We utilize both the eigenvectors and the eigenvalues of the structure tensor in the construction of the regularizer. Compared to the energy in (1), our regularizer has a pointwise separable formulation regarding the smoothing along the level lines and in the direction orthogonal to them. This separability is similar to the regularizer in (2). This separability property combined with the local coordinate system, whose axes are the eigenvectors of the structure tensor, is used to design the smoothing behaviour along the level lines and in the direction orthogonal to them. Roughly speaking, one can use locally linear or non-linear smoothing along the edges, whereas in the direction orthogonal to the edges, the smoothing is minor and of non-linear character in order to prevent the edges from getting blurred.

2 The New Model

Let \({\varOmega }\subset \mathbb {R}^2\) be an open and bounded rectangle and \(f\in L^2({\varOmega })\). In this paper, we study variational image denoising of an image f based on the minimization of the energy

where the regularizer R is

and \(\lambda >0\) is the global tuning parameter. In the regularizer, \((\xi (u)(x),\xi (u)^\perp (x))\) is a pointwise, smoothly varying orthonormal coordinate system computed from the image u such that \(\xi (u)(x)\) is approximately parallel to \(\nabla u(x)\) provided \(\nabla u(x)\) is non-vanishing. The choices of the pointwise weights \(\alpha _1(u)(x)\) and \(\alpha _2(u)(x)\) and the varying integrabilities \(p_1(u)(x)\) and \(p_2(u)(x)\) aim at preserving the edges, reducing noise also along the edges of u and preserving small-scale details.

For simplicity, at times we may omit the explicit dependency of \(\alpha _1\), \(\alpha _2\), \(p_1\), \(p_2\), \(\xi \) and \(\xi ^\perp \) on u.

The key features regarding the choices of the parameter functions are:

-

1.

\(0<{\varepsilon }\le \alpha _1,~\alpha _2\le 1\), \(1\le p_1,~p_2\le 2\). Here \({\varepsilon }>0\) is small. This assumption is needed when the existence of a minimizer of the model is considered.

-

2.

In the nearly constant, homogeneous areas, we have for the pointwise weights \(\alpha _1(x)\approx \alpha _2(x)\approx 1\) and for the integrabilities \(p_1(x)\approx p_2(x)\approx 2\) , so \(\alpha _1(u)|\nabla u\cdot \xi (u)|^{p_1(u)}+\alpha _2(u)|\nabla u\cdot \xi (u)^\perp |^{p_2(u)}\approx |\nabla u|^2\) by the Pythagorean theorem. This aims at reducing the staircasing effect.

We mention that if \(\alpha _1=\alpha _2=1\), \(p_1=p_2=2\), then one can actually solve the minimization of the energy (3) in the case \({\varOmega }=\mathbb {R}^2\). The solution is obtained by applying a linear low-pass filter on f. This could be considered to correspond to averaging f locally in a linear way. The derivation of the low-pass filter in the Fourier domain is made as e.g. in [10].

-

3.

In the areas where the structure is one-dimensional, the choices \(\alpha _1(x)\approx 0\), \(p_1(x)\approx 1\) and \(p_2(x)\approx 2\) try to preserve the edges and at the same time encourage smoothing along the edges. Another possibility is to use also \(p_2(x)\approx 1\).

Again, if \(\alpha _1=0\), \(p_2=2\) and \(\alpha _2\), \(\xi \) are constant, then one could solve the minimization of the energy (3) in the case \({\varOmega }=\mathbb {R}^2\). In the Fourier domain, the solution is obtained by applying the linear filter

$$\begin{aligned} \frac{2\lambda }{2\lambda +8\pi ^2\alpha _2|\xi ^\perp \cdot w|^2} \end{aligned}$$on \(\hat{f}(w)\). From the expression of the filter, we see that the filter attenuates all sinusoidal components except those oscillations which in the spatial domain are constant along \(\xi ^\perp \). Components which in the spatial domain oscillate in the direction \(\xi ^\perp \) are attenuated the most. In the spatial domain, along \(\xi ^\perp \), the filter has a low-pass character.

-

4.

In the textured areas, where the structure is clearly not one-dimensional, or at the corners, \(p_1(x)\approx p_2(x)\approx 1\) which tries to preserve the small-scale details along several directions.

To prove the existence of a minimizer of the new model we make the following assumptions on the pointwise varying coordinate system, the pointwise weights and the integrabilities:

3 Strong and Weak Forms

We denote the regularizer R in (3) by \(E_{\mathrm {strong}}\),

which is defined at least if u is differentiable.

We aim to define a suitable weak form \(E_{\mathrm {weak}}\) such that \(E_{\mathrm {weak}}(u)=E_{\mathrm {strong}}(u)\) if u is smooth enough and if \(f\in L^2({\varOmega })\) and \(\lambda >0\), then the energy \(\lambda ||f-u||_{L^2({\varOmega })}+E_{\mathrm {weak}}(u)\) has a minimizer in the space of functions of bounded variation. The choice of the weak form is motivated by the analysis made in [3] where a weak form of the regularizer of the form \(\int _{\varOmega }|\nabla u|^{p(u)} \, \mathrm{d}x\) or \(\int _{\varOmega }|\nabla u|^{p(f)} \, \mathrm{d}x\) was considered. We use the results from [3] in many places in this section.

We aim to express the energy \(E_{\mathrm {strong}}\) in such a way that in the energy, the dependency on u does not involve the derivatives of u. In the same way, the total variation can be obtained starting with the expression \(\int _{\varOmega }|\nabla u| \, \mathrm{d}x\). First, we proceed formally to show how \(E_{\mathrm {weak}}\) is obtained. Since for \(t\in \mathbb {R}\) and \(1\le p\le 2\), we have

then we get the expression (5) for \(|\nabla u\cdot \xi (u)|^{p_1(u)}\).

Analogously, we get (6) for \(|\nabla u\cdot \xi (u)^\perp |^{p_2(u)}.\)

If it is possible to take the supremum outside of the integral, then using the integration by parts, if \(\xi ^\perp =(-(\xi )_2,(\xi )_1)\), and if

we see that we have at least the approximate expression (8) for \(E_{\mathrm {strong}}(u)\) where the occurrences of \(\nabla u\) have vanished. We can now define

as in (8) where we use the notation

Next we intend to show that \(E_{\mathrm {weak}}\) is minorized by a multiple of the total variation plus a constant. This result is similar to [3, p. 75] and the result is needed later when the existence of a minimizer is considered. We begin with a short technical lemma.

Lemma 1

The function

is a restriction of a \(C^1\) function g defined in \((0,+\infty )\). Also, g is increasing on (1, 2) and thus \(g\ge e^{-1}\) on [1, 2].

Proof

Let \(g:(0,+\infty )\rightarrow \mathbb {R}\) be defined by

It is clear that g is a \(C^1\) function on \((0,1)\cup (1,+\infty )\). It is also true that

and

The claim follows. \(\square \)

Next we prove that \(E_{\mathrm {weak}}\) is minorized by a multiple of the total variation plus a constant.

Proposition 1

Let \({\varepsilon }\in (0,1)\) be such that \(\alpha _1(u)\), \(\alpha _2(u)\in [{\varepsilon },1]\) for all \(u\in L^1({\varOmega })\). Then

for all \(u\in L^1({\varOmega })\) where the constant C does not depend on u.

Proof

Now

where \(v_1\), \(v_2\in C^1(\overline{{\varOmega }})\), \(\inf v_1\), \(\inf v_2>0\), \(\sigma _1\), \(\sigma _2\in C_c^1({\varOmega })\), \(\sigma _1^2+\sigma _2^2\le 1\). Since the columns of A are linearly independent, A is of full rank and invertible. Actually,

Let \(v_1:=g\circ p_1(u)\) where g is as in Lemma 1. Then \(v_1\in C^1(\overline{{\varOmega }})\), \(p_1 v_1^{p_1-1}=1\), \(\inf _{x\in {\varOmega }} v_1(x)\ge e^{-1}>0\) and \(v_1\) is bounded. Similarly, we select \(v_2:=g\circ p_2(u).\) Then

Let \((\sigma _1',\sigma _2')\in C_c^1({\varOmega };\mathbb {R}^2)\), \((\sigma _1')^2+(\sigma _2')^2\le 1\), be given. We select \((\sigma _1,\sigma _2)\) such that

Then \((\sigma _1,\sigma _2)\in C_c^1({\varOmega };\mathbb {R}^2)\) and since \({\varepsilon }\le \alpha _1, \alpha _2\le 1\) and \(A^{-1}\) is length-preserving, we see that \(\sigma _1^2+\sigma _2^2\le \frac{1}{{\varepsilon }^2}\) and thus \(({\varepsilon }\sigma _1)^2+({\varepsilon }\sigma _2)^2\le 1\). Now

and thus

We mention that by a similar reasoning, it also follows that

Since \(0<{\varepsilon }\le \alpha _1, \alpha _2\le 1\), \(1\le p_1, p_2\le 2\), \(v_i=g\circ p_i(u)\) where g is bounded on [1, 2] by Lemma 1, we also get an estimate for the constant C. \(\square \)

4 Equivalence of the Strong and Weak Forms for Smooth Functions

In this section, we show that if \(u\in C^1(\overline{{\varOmega }})\), then \(E_{\mathrm {strong}}(u)=E_{\mathrm {weak}}(u)\).

Lemma 2

If \(u\in C^1(\overline{{\varOmega }})\), then

Proof

Let \(v_1\), \(v_2\in C^1(\overline{{\varOmega }})\), \((\sigma _1,\sigma _2)\in C_c^1({\varOmega };\mathbb {R}^2)\) be fixed, where \(\inf v_1\), \(\inf v_2>0\) and \(||\sigma _1||_{L^\infty }\), \(||\sigma _2||_{L^\infty }\le 1\). If \(u\in C^1(\overline{{\varOmega }})\), then using the fact that \(|t|^{p_1}=\max _{v\ge 0}(p_1 v^{p_1-1}|t|-(p_1-1)v^{p_1})\), we see that \(|\nabla u\cdot \xi |^{p_1(u)}\ge p_1 v_1^{p_1-1}|\nabla u\cdot \xi |-(p_1-1) v_1^{p_1}\ge p_1 v_1^{p_1-1}\sigma _1 \nabla u\cdot \xi -(p_1-1) v_1^{p_1}\). Using this and the integration by parts we see that

and since \((\sigma _1,\sigma _2)\in C_c^1({\varOmega };\mathbb {R}^2)\), \(||\sigma _1||_{L^\infty }\), \(||\sigma _2||_{L^\infty }\le 1\) and \(v_1\in C^1(\overline{{\varOmega }}),\) \(v_2\in C^1(\overline{{\varOmega }}),\) where \(\inf v_1\), \(\inf v_2>0\), are arbitrary, we get \(E_{\mathrm {strong}}(u)\ge E_{\mathrm {weak}}(u)\). \(\square \)

Lemma 3

Let \(u\in C^1(\overline{{\varOmega }})\) and \({\varepsilon }>0\). Then there exists \(\sigma _1\in C_c^1({\varOmega })\) such that \(||\sigma _1||_\infty \le 1\) and

An analogous estimate holds for the triplet \(\alpha _2\), \(p_2\) and \(\xi ^\perp \).

Proof

Set \(w_1(x):=\frac{\nabla u\cdot \xi }{|\nabla u\cdot \xi |}\) if \(|\nabla u\cdot \xi |\ne 0\) and \(w_1(x)=0\) otherwise. Then \(\alpha _1 |\nabla u\cdot \xi |^{p_1}=\alpha _1|\nabla u\cdot \xi |^{p_1-1} w_1\nabla u\cdot \xi \).

Select \(\sigma _1\in C_c^1({\varOmega })\) such that

Since \(\sigma _1\) can be obtained by convolving with the standard mollifier a function whose absolute value is less than or equal to one, it follows that \(\sigma _1\) can be chosen such that \(||\sigma _1||_\infty \le 1\). Then

Since \(u\in C^1(\overline{{\varOmega }})\), then \(|\nabla u|\in L^\infty ({\varOmega })\). By choosing n in (9) large enough, we see that \((*)<{\varepsilon }\). \(\square \)

Lemma 4

Let \(u\in C^1(\overline{{\varOmega }})\) and \({\varepsilon }>0\). Then there exist \(\sigma _1\in C_c^1({\varOmega })\) and \(v_1\in C^1(\overline{{\varOmega }})\) such that \(|\sigma _1|\le 1\), \(\inf v_1>0\) and

An analogous estimate holds for the triplet \(\alpha _2\), \(p_2\) and \(\xi ^\perp \).

Proof

Select \(\sigma _1\) by Lemma 3 corresponding to the tolerance \(\frac{{\varepsilon }}{2}\). Since \(u\in C^1(\overline{{\varOmega }})\), then \(|\nabla u|\in L^\infty ({\varOmega })\). Let \(\rho _n:=\eta _{1/n}*|\nabla u\cdot \xi |\) where it is assumed that \(|\nabla u\cdot \xi |=0\) outside \({\varOmega }\) and \(\eta \) is the standard mollifier. Let \(x\in {\varOmega }\). Choose n so large that \(B(x,1/n)\subset {\varOmega }\). Then

It follows from the uniform continuity of \(\nabla u\cdot \xi \) that \(\rho _n(x)\rightarrow |\nabla u\cdot \xi (x)|\) as \(n\rightarrow \infty \).

Let \(v_n:=\rho _n+\frac{1}{n}\). Then \(v_n\in C^1(\overline{{\varOmega }})\), \(\inf v_n>0\) for each n and \(v_n\rightarrow |\nabla u\cdot \xi |\) pointwise as \(n\rightarrow +\infty \). The dominated convergence theorem implies that

as \(n\rightarrow \infty \). We set \(v_n\) in the place of \(v_1\) in \((**)\). By adding and subtracting the term \(\int _{\varOmega }\alpha _1 p_1 |\nabla u\cdot \xi |^{p_1-1}\sigma _1\nabla u\cdot \xi -\alpha _1(p_1-1)|\nabla u\cdot \xi |^{p_1} \, \mathrm{d}x\) and by using Lemma 3 we see that

provided n is large enough. \(\square \)

The next corollary follows directly from Lemmas 2 and 4.

Corollary 1

If \(u\in C^1(\overline{{\varOmega }})\), then \(E_{\mathrm {weak}}(u) = E_{\mathrm {strong}}(u)\).

5 Existence of a Minimizer

The goal of this section is to show that the energy \(E_{\mathrm {weak}}(u)+\lambda ||f-u||_{L^2({\varOmega })}^2\), \(u\in L^2({\varOmega })\), has a minimizer in \(\mathrm {BV}({\varOmega })\). We begin with a technical lemma.

Lemma 5

Let \(v_1\), \(v_2\in C^1(\overline{{\varOmega }})\), \(\inf v_1\), \(\inf v_2>0\) and \(\sigma _1,\) \(\sigma _2\in C_c^1({\varOmega })\) be fixed. Let A be as in (7). Then the map

is continuous from \(L^1({\varOmega })\) to \(C_c^1({\varOmega };\mathbb {R}^2)\).

Proof

Now for G(u) we have the expression (10).

Since \(v_1\in C^1(\overline{{\varOmega }})\), \(\inf v_1>0\) and \(p_1(u)\in C^1(\overline{{\varOmega }})\), it follows that the function \(x\mapsto v_1(x)^{p_1(u)(x)-1}\) is a \(C^1(\overline{{\varOmega }})\) function. This can be seen by writing \(v^{p-1}=e^{(p-1)\log v}\) and applying the mean value theorem. Similarly for \(v_2\) and \(p_2\). Then using Assumption (A) on page 3, we see that \(G(u)\in C^1(\overline{{\varOmega }};\mathbb {R}^2)\). By using [3, Lemma 5] and Assumption (B) it follows that \(u\mapsto v_1^{p_1(u)-1}\) and \(u\mapsto v_2^{p_2(u)-1}\) are continuous maps from \(L^1({\varOmega })\) to \(C^1(\overline{{\varOmega }})\).

By Assumption (B) on page 3, \(u\mapsto \xi _i(u)\), \(u\mapsto p_i(u)\) and \(u\mapsto \alpha _i(u)\) are continuous from \(L^1({\varOmega })\) to \(C^1(\overline{{\varOmega }})\). The claim then follows using the Leibniz rule as in [3, p. 80]. \(\square \)

Theorem 1

Let \(v_1\), \(v_2\), \(\sigma _1\), \(\sigma _2\) and G be as in Lemma 5. Let A be as in (7). Then

is continuous from \(L^1({\varOmega })\) to \(\mathbb {R}\).

Proof

Let \(u_n\rightarrow u\) in \(L^1({\varOmega })\). Using [3, Lemma 7] and Assumption (B) on page 3 we see that \((p_1(u_n)-1)v_1^{p_1(u_n)}\rightarrow (p_1(u)-1)v_1^{p_1(u)}\) in \(L^1({\varOmega })\) as \(n\rightarrow \infty \) and an analogous result holds for \(p_2\) and \(v_2\). By Assumption (B) on page 3, \(\alpha _1(u_n)\rightarrow \alpha _1(u)\) and \(\alpha _2(u_n)\rightarrow \alpha _2(u)\) in \(C^1(\overline{{\varOmega }})\). We get

as \(n\rightarrow \infty \). Next we show that

Now

Here \((*)\rightarrow 0\) as \(n\rightarrow \infty \), since \(||u_n-u||_{L^1({\varOmega })}\rightarrow 0\) as \(n\rightarrow \infty \) and G(u) is a \(C_c^1({\varOmega };\mathbb {R}^2)\) function by Lemma 5.

Since \(u_n\rightarrow u\) in \(L^1({\varOmega })\), then \(u_n\) is bounded in \(L^1({\varOmega })\). On the other hand, by Lemma 5,

as \(n\rightarrow \infty \) and thus \((**)\rightarrow 0\) as \(n\rightarrow \infty \). \(\square \)

Corollary 2

The functional \(E_{\mathrm {weak}}\) is lower-semicontinuous on \(L^1({\varOmega })\).

Proof

By the definition of \(E_{\mathrm {weak}}\) and using Theorem 1 we see that \(E_{\mathrm {weak}}\) is a supremum of continuous functions so then \(E_{\mathrm {weak}}\) is lower-semicontinuous. \(\square \)

Now we come to the main result of this section.

Theorem 2

Let \({\varOmega }\subset \mathbb {R}^2\) be an open rectangle, \(f\in L^2({\varOmega })\), \(\lambda >0\) and set

for \(u\in L^2({\varOmega })\). Then there exists \(u\in \mathrm {BV}({\varOmega })\) which is a minimizer of E.

Proof

Let \((u_n)\subset L^2({\varOmega })\) be a minimizing sequence for E. Since \(E(0)=\lambda ||f||_{L^2({\varOmega })}^2<\infty \), we see that \(E(u_n)\) is bounded from above. Then by Proposition 1, \({\text {TV}}(u_n)\le c\) for each n where \(c>0\) does not depend on n. Since \(L^2({\varOmega })\hookrightarrow L^1({\varOmega })\), we see that \(||u_n||_{L^1({\varOmega })}\le C ||u_n||_{L^2({\varOmega })}\le C(||f-u_n||_{L^2({\varOmega })}+||f||_{L^2({\varOmega })}).\) We see that \(u_n\in \mathrm {BV}({\varOmega })\) and

for each n. Thus there exists \(u\in \mathrm {BV}({\varOmega })\) and a subsequence \(n_k\) such that \(u_{n_k}\rightharpoonup u\) weakly* in \(\mathrm {BV}({\varOmega })\) as \(k\rightarrow \infty \). Thus \(u_{n_k}\rightarrow u\) in \(L^1({\varOmega })\).

Using Corollary 2 we see that

Since \(u_n\) is bounded in \(L^2({\varOmega })\) we can also assume by selecting again a subsequence if necessary that \(u_{n_k}\rightharpoonup u\) in \(L^2({\varOmega })\) and thus \(||f-u||_{L^2({\varOmega })}\le \liminf _{k\rightarrow \infty } ||f-u_{n_k}||_{L^2({\varOmega })}\) which combined with the previous gives that

and the proof is complete. \(\square \)

6 Parameter Functions

In this section, we discuss a possible choice of the pointwise varying coordinate system satisfying the assumptions (A) and (B) on page 3. The choice is based on smoothing of a matrix representation of the image gradient field. We also discuss how the pointwise weights and the varying integrabilities can be chosen in general.

We begin by discussing the concept of the structure tensor. In general, structure tensor can be used to infer something about the local geometry of an image. By a tensor field we understand a function from \({\varOmega }\) to the space of symmetric, positive semidefinite matrices of size \(2\times 2\).

Let \(u\in L^1({\varOmega })\) be an arbitrary image. First, the Gaussian kernel \(G_\sigma \), where \(\sigma >0\) is fixed, is applied on u in order to make the pointwise partial derivatives well defined. Let

Let \(\rho >0\) denote the size of the neighbourhood where the average of the tensor field \(S_\sigma (u)\) is considered and set

The obtained tensor field is called the structure tensor of u. The eigenvectors and eigenvalues of S(u)(x) can be used to infer something about the local geometry of u. This is based on a variational characterization of the eigenvalues of a positive semidefinite matrix. We follow [32]. Let

be an eigenvalue decomposition of S(u)(x). Here \(\lambda _1\ge \lambda _2\ge 0\) since S(u)(x) is positive semidefinite. If \(\lambda _1\approx \lambda _2\approx 0\), it is assumed that x is in the nearly constant intensity area. If \(\lambda _1\gg \lambda _2\approx 0\), then around x the image structure is nearly one-dimensional. If \(\lambda _1\ge \lambda _2\gg 0\), then x is in the corner area or in the texture area where the texture is not locally one-dimensional.

In (12), S(u) can also be obtained by applying the linear, isotropic heat equation to the tensor field \(S_\sigma (u)\). There are also non-linear smoothing processes which can be applied to \(S_\sigma (u)\). Let us discuss the smoothing proposed in [17]. Let \(A(t):{\varOmega }\rightarrow \mathbb {R}^{2\times 2}\), \(A(t):= \left[ {\begin{matrix} a_{11}(t)&{}a_{12}(t)\\ a_{21}(t)&{}a_{22}(t) \end{matrix}} \right] \) such that \(A(0)(x)=S_\sigma (u)(x)\) and

Here \(g(M)=\tilde{g}({\varLambda })v_{{\varLambda }}v_{{\varLambda }}^T+\tilde{g}(\lambda ) v_{\lambda } v_{\lambda }^T\) where it is assumed that \({\varLambda }v_{\varLambda }v_{\varLambda }^T+\lambda v_\lambda v_\lambda ^T\) is an eigenvalue decomposition of M and \(\tilde{g}(s)=\frac{1}{\sqrt{s+1}}\), \(s\ge 0\). In [17] it is proved that if A(0) is pointwise positive semidefinite, then also A(t) is pointwise positive semidefinite for \(t\in (0,T]\).

In (12), if \(u_n\rightarrow u\) in \(L^1({\varOmega })\), then quite similarly as in [15], it follows from Young’s inequality for convolution that we have \(S_{\sigma }(u_n)\rightarrow S_\sigma (u)\) in \(L^2({\varOmega };\mathbb {R}^{2\times 2})\). Since S(u) is obtained from \(S_{\sigma }(u)\) by convolution where the convolution kernel is smooth, then again by Young’s inequality for convolution we see that \(S(u_n)\rightarrow S(u)\) in \(C^1(\overline{{\varOmega }},\mathbb {R}^{2\times 2})\). If the matrix elements in (12) are considered, we have shown that \(u_n\rightarrow u\) in \(L^1({\varOmega })\) implies that \(a(u_n)\rightarrow a(u)\), \(b(u_n)\rightarrow b(u)\) and \(c(u_n)\rightarrow c(u)\) in \(C^1(\overline{{\varOmega }})\).

In (13), by working as in [33, Theorem 1] and using [17, Lemmas 1-2] one could prove an \(L^2\) stability result: If A(t) and \(A_n(t)\) are tensor fields obtained by the diffusion such that \(A_n(0)\rightarrow A(0)\) in \(L^2({\varOmega };\mathbb {R}^{2\times 2})\) as \(n\rightarrow \infty \), and if \(\rho >0\) is a fixed time instant, then \(A_n(\rho )\rightarrow A(\rho )\) in \(L^2({\varOmega };\mathbb {R}^{2\times 2})\) as \(n\rightarrow \infty \). As in the linear case, \(u_n\rightarrow u\) in \(L^1({\varOmega })\) as \(n\rightarrow \infty \) implies that \(S_{\sigma }(u_n)\rightarrow S_\sigma (u)\) in \(L^2({\varOmega };\mathbb {R}^{2\times 2})\) which in turn implies that \(A_n(\rho )\rightarrow A(\rho )\) in \(L^2({\varOmega };\mathbb {R}^{2\times 2})\) where \(A_n(0)=S_{\sigma }(u_n)\) and \(A(0)=S_\sigma (u)\). If in the non-linear case, we call the structure tensor S(u) the tensor field \(G_a*A(\rho )\), where \(a>0\), then as in the linear case we see that \(S(u_n)\rightarrow S(u)\) in \(C^1(\overline{{\varOmega }},\mathbb {R}^{2\times 2})\). In the numerical experiments, we assume that the amount of this smoothing is negligible and we actually use \(a=0\) and in the non-linear case we write

If u is differentiable, then in the limiting case \(\sigma =\rho =0\) we have e.g. in (12) that \(S(u)=\nabla u(\nabla u)^T\) and

We can then consider that the largest eigenvalue of the structure tensor S(u)(x) is approximately equal to \(|\nabla u|^2\).

The matrix S(u)(x) in (12) or in (14) has eigenvalues

and

Since S(u)(x) is positive semidefinite, \(\lambda _1(x)\), \(\lambda _2(x)\ge 0\). The unit eigenvector corresponding to the eigenvalue \(\lambda _1\) is parallel to the vector

provided \(b\ne 0\).

Next we consider how \((\xi ,\xi ^\perp )\) can be chosen in order to fulfil the assumptions (A) and (B) on page 3. We begin with a short technical result.

Proposition 2

Let \(h_n\rightarrow h\) in \(C^1(\overline{{\varOmega }},\mathbb {R}^n)\) and \(S>0\) such that \(||h||_{C^1({\varOmega })}, ||h_n||_{C^1({\varOmega })}<\frac{S}{2}\) for all n. Let \(G\in C^2(\overline{B(0,S)},\mathbb {R})\). Then \(G\circ h_n\rightarrow G\circ h\) in \(C^1(\overline{{\varOmega }},\mathbb {R})\) as \(n\rightarrow +\infty \).

Proof

Using the mean value theorem we see that

which tends to zero as \(n\rightarrow \infty \). Now

Using this and the mean value theorem we get

which tends to zero as \(n\rightarrow \infty \). Then the claim follows. \(\square \)

Now we come to the choice of \((\xi ,\xi ^\perp )\). Our choice is based, as in anisotropic diffusion filtering [32], on the eigenvectors of the structure tensor. We use in addition some regularization in order to get smooth dependencies. We can replace in (17) the square root by its regularization \(s\mapsto \sqrt{s+\delta ^2}\) where \(\delta >0\) is small. Let \(G:\mathbb {R}^3\rightarrow \mathbb {R}^2\),

and set

Let \(\xi ^\perp (u)(x):=(-(\xi (u)(x))_2,(\xi (u)(x))_1)\). Then if \(u_n\rightarrow u\) in \(L^1({\varOmega })\) implies that \(a(u_n)\rightarrow a(u)\), \(b(u_n)\rightarrow b(u)\) and \(c(u_n)\rightarrow c(u)\) in \(C^1(\overline{{\varOmega }})\), it follows by applying Proposition 2 componentwise on the function G that we have \(\xi (u_n)\rightarrow \xi (u)\) in \(C^1(\overline{{\varOmega }};\mathbb {R}^2)\).

We can do analogous regularization in (15) and (16), and obtain regularized eigenvalues \(\lambda _1\) and \(\lambda _2\). If also the pointwise weights \(\alpha _1\) and \(\alpha _2\) and the varying integrabilities \(p_1\) and \(p_2\) are obtained by applying a smooth function on the regularized eigenvalues of S(u)(x) or on \(|\nabla G_\sigma *u|^2\), then the assumptions (A) and (B) on page 3 are valid.

7 Discretization

7.1 General Algorithm

In this section, we consider the numerical aspects of the new model. Since in general the energy (3) is not convex with respect to u, the minimization is challenging. As in [15], we resort to iterative minimization. In [31], a lagged diffusivity fixed point iteration, where the diffusivity is based on the previous iterate, was proposed for the numerical solution of the total variation denoising.

We use two nested loops in the algorithm. In the outer loop we update the pointwise coordinate system \((\xi ,\xi ^\perp )\), the pointwise weights \(\alpha _1\), \(\alpha _2\) and the pointwise integrabilities \(p_1\), \(p_2\) using the previous iterate of u. We use the inner loop to solve a non-quadratic minimization problem with respect to u. There is no guarantee that the algorithm we use finds an actual local minimizer of the total energy.

First, we regularize the energy (3) and instead consider the energy

where \(\delta >0\) is small. If \(\xi \), \(\xi ^\perp \), \(\alpha _1\), \(\alpha _2\), \(p_1\) and \(p_2\) are fixed, let

The functional gradient of \(E^{\xi ,\alpha ,p}\) is

Let

and

Then

We summarize the numerical steps in the algorithm below. In the inner part of the algorithm, a fixed point iteration is used to solve the equation \(2\lambda (u-f)-{\text {div}}(A(u)\nabla u)=0\) for u.

7.2 Update of u

If h denotes the grid size, we assume that we know the noisy image f at the points (ih, jh) where i, \(j\in \mathbb {Z}\). Let \(f_{ij}:=f(ih,jh)\) and similarly for u and the other functions. When we update u, we assume that \(\xi \), \(\xi ^\perp \), \(\alpha _1\), \(\alpha _2\), \(p_1\) and \(p_2\) are available at the locations (ih, jh). We discuss in section 7.3 how they are obtained. In \(\lambda _1\) of (18), the product \(\nabla u\cdot \xi =\partial _x u \, \xi _1+\partial _y u \, \xi _2\) at (ih, jh) is computed as

where m denotes the minmod function. Analogously \((\nabla u\cdot \xi ^\perp )_{ij}\) is computed in \(\lambda _2\) of (19).

In the fixed-point algorithm, A is based on the previous iterate of u. When A is fixed, we solve the equation of the form \(2\lambda (u-f)-{\text {div}}(A \nabla u)=0\) as an evolution equation by applying the AOS (additive operator splitting) scheme on the version involving the time. For more on the AOS scheme in image processing, see [34].

So we assume that A is fixed, \(A:=\left[ {\begin{matrix} a&{}b\\ b&{}c \end{matrix}} \right] \). For \({\text {div}}(A \nabla u)\) we use the discretization described at the end of [32, Chapter 3.4]. Then we can write

where \(A_{i,j,k}\) is constructed from a, \(C_{i,j,l}\) is constructed from c and \(B_{i,j,k,l}\) is constructed from b. Referring to [32, Chapter 3.4], we have e.g. \(A_{i,j,-1}=\frac{a_{i-1,j}+a_{i,j}}{2 h_1^2}\), \(C_{i,j,1}=\frac{c_{i,j+1}+c_{i,j}}{2h_2^2}\) and \(B_{i,j,1,1}=\frac{|b_{i+1,j+1}|+b_{i+1,j+1}}{4h_1 h_2}\). To solve the equation \(2\lambda (u-f)-{\text {div}}(A \nabla u)=0\) we introduce an artificial time variable and find the steady state of \(\partial _t u+2\lambda (u-f)-{\text {div}}(A \nabla u)=0\). If we discretize the previous equation we get

where

If \(\tau \) denotes the timestep, let \(u_{ij}^{t+1}=\frac{v_{ij}^{t+1}+w_{ij}^{t+1}}{2}\) where

and

which can be solved rapidly by using the tridiagonal matrix algorithm as in [34].

7.3 Structure Tensor Computations

In (11), the partial derivatives \(\partial _x\) and \(\partial _y\) of \(v:=G_\sigma *u\) are computed at the locations \(((i+1/2)h,(j+1/2)h)\) using the formulas \(\frac{v_{i+1,j}-v_{ij}+v_{i+1,j+1}-v_{i,j+1}}{2h}\) and \(\frac{v_{i,j+1}-v_{ij}+v_{i+1,j+1}-v_{i+1,j}}{2h}\). After the smoothing of \(S_\sigma (u)\) by \(G_\rho \) or by (13), we have S(u) defined at the locations \(((i+1/2)h,(j+1/2)h)\). We use \(\nabla (G_\sigma *u)\) and the eigenvalues and eigenvectors of S(u) to compute \(\xi \), \(\xi ^\perp \), \(\alpha _1\), \(\alpha _2\), \(p_1\) and \(p_2\) at the locations \(((i+1/2)h,(j+1/2)h)\). These functions can then be computed at the locations (ih, jh) as the averages at the four locations \(((i\pm 1/2)h,(j\pm 1/2)h)\).

8 Numerical Experiments

We begin this section by describing the choices of the weight functions \(\alpha _1\) and \(\alpha _2\) and the integrabilities \(p_1\) and \(p_2\) in (3) used in the experiments. The choices are motivated by the key features mentioned in section 2.

We begin by constructing an auxiliary function which changes its value smoothly from one to zero. Let \(\gamma (x)=ax^5+bx^4+cx^3+dx^2+ex+f\) where the constants a, b, c, d, e, \(f\in \mathbb {R}\). Let \(A>0\) and suppose we require that \(\gamma (0)=1\), \(\gamma (A)=0\), \(\gamma '(0)=\gamma ''(0)=\gamma '(A)=\gamma ''(A)=0\). Then after some calculations we see that \(a=-6/A^5\), \(b=15/A^4\), \(c=-10/A^3\), \(d=e=0\) and \(f=1\). Then \(\gamma \) changes its value smoothly from 1 at \(x=0\) to 0 at \(x=A\).

Let M, \(N\in \mathbb {R}^+\), \(0<M<N\) such that if \(|\nabla u(x)|<M\) we assume that x is in the smooth area and if \(|\nabla u(x)|>N\), x is near an edge. Let \(A:=N-M\) and \(\rho :[0,\infty )\rightarrow [0,1]\) where \(\rho (s)=0\) if \(0\le s\le M\), \(\rho (s)=1\) if \(s\ge N\) and \(\rho (s)=1-\gamma (s-M)\) if \(M<s<N\). Now \(\rho '(M)=\rho ''(M)=\rho '(N)=\rho ''(N)=0\). Then \(\rho \) changes its value smoothly from 0 at \(s=M\) to 1 at \(s=N\).

Let

where \(\lambda _1\) denotes the largest eigenvalue of S(u). Then g works as an edge detector such that \(g\approx 0\) in the near constant areas and \(g\approx 1\) in the non-smooth areas. We use the ratio of the eigenvalues of S(u) to split the edges found by g into one-dimensional edges and the rest, which consists of corners and texture which is not one-dimensional. Let

which measures the pointwise anisotropy such that \(\psi \approx 1\) if \(\lambda _1\approx \lambda _2\) and \(\psi \approx 0\) if \(\lambda _1>>\lambda _2\). See also [29] for discussions on measuring the anisotropy in kernel regression approach to denoising. The function \(\psi \) is used to split the edge areas detected by g. Let

and

Then \(d_a\approx 1\) in the one-dimensional edge areas and \(d_a\approx 0\) otherwise. Analogously, \(d_r\approx 1\) in the corners and texture areas where the texture is not one-dimensional, and \(d_r\approx 0\) otherwise.

In the numerical experiments of this paper, the pointwise weights \(\alpha _1\) and \(\alpha _2\) are of the form

and

where \(k_1\), \(k_2\), \(k_3>0\) are constants.

Next we consider the choices of the integrabilities. Let again \(A=N-M\). Recalling how the function \(\gamma \) was constructed earlier in this section, let \(\varphi :[0,\infty )\rightarrow [1,2]\) such that \(\varphi (s)=2\) if \(s\le M\), \(\varphi (s)=1\) if \(s\ge N\) and \(\varphi (s)=1+\gamma (s-M)\) for \(M<s<N\). Then \(\varphi '(M)=\varphi ''(M)=\varphi '(N)=\varphi ''(N)=0\) and \(\varphi \) changes its value smoothly from 2 at \(s=M\) to 1 at \(s=N\). In the experiments of this paper, we use

where t corresponds to the time needed to blur u using the linear, isotropic heat equation such that the result equals to the convolution with the Gaussian kernel \(G_\sigma \). For the second integrability we use

In Algorithm of Sect. 7.1, the maximum number of outer iterations is set to be 25 in each experiment. We use 5 inner iterations to solve \(2\lambda (u-f)-{\text {div}}(A(u)\nabla u)=0\) for u. We use 10 AOS iterations with the timestep 0.1 to find a candidate for the solution of the equation of the form \(2\lambda (v-f)-{\text {div}}(A\nabla v)=0\).

We use the non-linear structure tensor obtained by (13) in the experiments. We did not see much difference in terms of the PSNR values when the linear structure tensor was used. But visually, the non-linear version seemed to give somewhat better results near the edges and reduced rounding of the corners. In \(G_\varsigma \) of (13), we always use \(\varsigma =0.3\). In the discretization of (13), we use the AOS scheme with the timestep 1.0.

In the numerical experiments, M and N refer to the values used in the construction of g in (20) and \(p_1\) in (24). The parameters \(k_1\), \(k_2\) and \(k_3\) refer to \(\alpha _1\) and \(\alpha _2\) in (22) and (23); \(t_1\) refers to the time in (24) and L refers to the number of the AOS iterations in (13).

In the experiments, we also consider the classical total variation regularization which is based on the minimization of the energy

We solve the corresponding Euler–Lagrange equation using the minmod scheme with explicit time.

For each numerical comparison with the total variation regularization, we minimized the energy (25) for several values of \(\mu \) and then we selected the \(\mu \) for which the best \(\mathrm {PSNR}\) value was obtained. The \(\mathrm {PSNR}\) (peak signal-to-noise ratio) of an \(M\times N\) pixel image u against the ground truth image \(f_0\) is defined by

The noise-free images used in the experiments have their intensity values in the range [0, 255].

We also consider denoising results obtained by the edge-enhancing diffusion method proposed by Weickert [32]. The method is based on the diffusion equation

Here \(D=g(|\nabla u_\sigma |^2)\theta _1\theta _1^T+\theta _2\theta _2^T\), \(g(s)=1-\exp \left( \frac{-C_4}{(s/\lambda ^2)^4}\right) \) for \(s>0\), \(g(s)=1\) for \(s\le 0\), \(\theta _2||\nabla u_\sigma \), \(|\theta _2|=1\), \(\theta _2\perp \theta _1\), \(\lambda >0\) and \(C_4=3.31488\). We use \(t_1\) to denote the time needed to blur u using the linear heat equation such that the result corresponds to the convolution of u with the Gaussian kernel \(G_\sigma \). We used the explicit time scheme and the discretization [32, Chapter 3.4] for \({\text {div}}(A\nabla u)\). We ran the diffusion (26) for several values of \(\lambda \) and \(t_1\) and then selected the stopping time T such that the best PSNR value was obtained. We then selected \(\lambda \), \(t_1\) and T corresponding to the overall best PSNR value.

In the experiments, we also used the second-order total generalized variation regularizer [5] which is defined as

where \(S^{2\times 2}\) denotes the space of symmetric \(2\times 2\) matrices. To minimize the total energy, we used the numerical implementation described in [4].

For comparisons, we also consider results obtained by the non-local means filter [7] and the BM3D method [9]. Regarding the BM3D, we used the matlab code available at http://www.cs.tut.fi/~foi/GCF-BM3D/. We ran this algorithm for several parameter values and selected the one for which the best PSNR value was obtained.

Regarding the implementation of the non-local means filter, we used

where

for \((r,s)\ne (i,j)\). For the centre pixel of a patch, \(C_{i,j}(i,j)=\max _{(r,s)\ne (i,j)} C_{i,j}(r,s)\) is used. In (27) and (28), \(\sigma \) is the standard deviation of the noise, f is the noisy image, \(a\in \mathbb {Z}^+\) is such that \((2a+1)^2\) is the size of the search window, \(b\in \mathbb {Z}^+\) is such that \((2b+1)^2\) is the patch size, P((i, j), b) is a patch of f such that (i, j) is the centre of the patch and \((2b+1)^2\) is the size of the patch, and \(h>0\) is a regularization parameter. In the denominator of (27), \(C_{i,j}\) is a normalization factor,

In the experiments, we ran the algorithm for several values of a, b and h and then we selected the parameter values for which the best PSNR value was obtained.

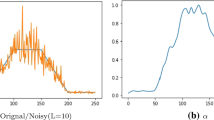

First, we consider the denoising of a synthetic image. In Fig. 1, we see typical differences between the total variation regularization and the new method. In the solution obtained by the total variation regularization, some staircasing and loss of contrast are present. The new method aims at reducing these artefacts. In the new method, we used \(\lambda =0.05\), \(M=4\), \(N=5\), \(k_1=1.0\), \(k_2=0.0001\), \(k_3=0.0001\), \(t_1=0.1\) and \(L=25\).

Next we consider the denoising of four standard test images: cameraman, boat, Lena and lighthouse, each with two different levels of noise. The parameter values used in the edge-enhancing diffusion, the non-local means and the new method are seen in Tables 1, 2 and 3.

First, the cameraman image. The solutions corresponding to different noise levels are seen in Figs. 2 and 3. In Fig. 2c we used \(\mu =0.044\), whereas in Fig. 3c, \(\mu =0.014\). In the total generalized variation results, we used \(\alpha _0=27\), \(\alpha _1=13.5\) in Fig. 2e and \(\alpha _0=92\), \(\alpha _1=41.4\) in Fig. 3e.

Next we consider the denoising of the boat test image in Figs. 4 and 5. We used \(\mu =0.041\) in Fig. 4c and \(\mu =0.018\) in Fig. 5c. Regarding the total generalized variation results we used \(\alpha _0=19\), \(\alpha _1=14.25\) in Fig. 4e and \(\alpha _0=50\), \(\alpha _1=32.5\) in Fig. 5e.

Next we consider the denoising of the Lena test image in Figs. 6 and 7. We see two noisy versions of this test image in Figs. 6b and 7b. In the total variation regularization results we used \(\mu =0.026\) in Fig. 6c and \(\mu =0.014\) in Fig. 7c. In the total generalized variation results we used \(\alpha _0=38\), \(\alpha _1=22.8\) in Fig. 6e and \(\alpha _0=100\), \(\alpha _1=60\) in Fig. 7e.

Finally, we consider the denoising of the lighthouse test image in Figs. 8 and 9. In the solutions obtained by the total variation regularization we used \(\mu =0.027\) in Fig. 8c and \(\mu =0.015\) in Fig. 9c. In the total generalized variation results, we used \(\alpha _0=35\), \(\alpha _1=21\) in Fig. 8e and \(\alpha _0=87\), \(\alpha _1=39.15\) in Fig. 9e.

We see that in all the experiments in Figs. 2, 3, 4, 5, 6, 7, 8 and 9, in terms of the \(\mathrm {PSNR}\) values, the new method gives better results than the total variation regularization, edge-enhancing diffusion and total generalized variation. Nevertheless, just higher \(\mathrm {PSNR}\) values do not necessarily mean visually better. It also seems that the criterion we used to select the ’best’ parameter values in the methods proposed earlier in the literature may leave some noise especially in the results obtained by the edge-enhancing diffusion method. Somewhat the same is with the results obtained by the second-order total generalized variation.

From the experiments, it also seems that using the minimization of the energy (3), the edges are still sharp but are not as grainy as in the results produced by the total variation regularization or the total generalized variation regularization. In this aspect, the new method is quite similar to the edge-enhancing diffusion method. The observation that the new method can reduce noise efficiently along the boundary is also quite expected from the key feature 3 in Sect. 2 since provided the edge direction is estimated correctly, the linear averaging along the edge reduces noise efficiently. This is clearly seen in Fig. 7.

Compared to the total variation regularization, the new method hardly suffers from the staircasing. The new method is also quite as good as total variation at preserving edges except very weak edges which our method tends to blur, since in those areas our regularizer may simplify to isotropic smoothing, but on the other hand, the total variation may not place the weak edges correctly as can be seen with the cameraman experiments. Also, the new method gives higher or the same \(\mathrm {PSNR}\) values than the implementation we used of the NL means algorithm, except with the last image with strong noise, which is not a surprise considering that the lighthouse image has lot of repetitive components. In all the experiments, the best results are obtained by the B3MD method, which is also a non-local method, contrary to our method which is local.

9 Conclusion

In this paper, a new variational image denoising method is proposed. The new method could be seen to be a two-step method in which the steps are in some sense coupled. Structure tensor analysis is used to guide the denoising in such a way that the energy minimization favours solutions whose gradient fields respect the approximation obtained by the structure tensor analysis. In the theoretical part, the existence of a minimizer of a weak form was considered. In the numerical part, an algorithm was proposed based on iterative minimization. The numerical experiments demonstrated the possible advantages of the new method over some existing variational and partial differential equations methods. The future considerations involve extending the method to the non-local framework.

References

Bayram, I., Kamasak, M.E.: Directional total variation. IEEE Signal Process. Lett. 19, 781–784 (2012)

Blomgren, P., Mulet, P., Chan, T., Wong, C.: Total variation image restoration: Numerical methods and extensions. In: Proceedings of the IEEE International Conference on Image Processing, vol. 3, pp. 384–387. IEEE Computer Society Press, Piscataway (1997)

Bollt, E.M., Chartrand, R., Esedoglu, S., Schultz, P., Vixie, K.R.: Graduated, adaptive image denoising: local compromise between total-variation and isotropic diffusion. Adv. Comput. Math. 31, 61–85 (2009)

Bredies, K.: Recovering piecewise smooth multichannel images by minimization of convex functionals with total generalized variation penalty. In: Efficient Algorithms for Global Optimization Methods in Computer Vision, pp. 44–77 (2014)

Bredies, K., Kunisch, K., Pock, T.: Total generalized variation. SIAM J. Imaging Sci. 3, 492–526 (2010)

Brox, T., Weickert, J., Burgeth, B., Mrazek, P.: Nonlinear structure tensors. Image Vis. Comput. 24, 41–55 (2006)

Buades, A., Coll, B., Morel, J.M.: A review of image denoising algorithms, with a new one. Multiscale Model. Simul. 4, 490–530 (2005)

Chen, Y., Levine, S., Rao, M.: Variable exponent, linear growth functionals in image restoration. SIAM J. Appl. Math. 66, 1383–1406 (2006)

Dabov, K., Foi, A., Katkovnik, V., Egiazarian, K.: Image denoising by sparse 3D transform-domain collaborative filtering. IEEE Trans. Image Process. 16, 2080–2095 (2007)

Didas, S., Burgeth, B., Imiya, A., Weickert, J.: Regularity and scale-space properties of fractional high order linear filtering. In: Kimmel, R., Sochen, N.A., Weickert, J. (eds.) Scale Space and PDE Methods in Computer Vision, 5th International Conference, Scale-Space, pp. 13–25. Hofgeismar, Germany (2005)

Didas, S., Weickert, J., Burgeth, B.: Properties of higher order nonlinear diffusion filtering. J. Math. Imaging Vision 35, 208–226 (2009)

Duits, R., Florack, L., de Graaf, J., ter Haar Romeny, B.: On the axioms of scale space theory. J. Math. Imaging Vision 20, 267–298 (2004)

Estellers, V., Soatto, S., Bresson, X.: Adaptive regularization with the structure tensor. IEEE Trans. Image Process. 24, 1777–1790 (2015)

Förstner, W., Gülch, E.: A fast operator for detection and precise location of distinct points, corners and centres of circular features. In: Proceedings of the ISPRS Intercommission Conference on Fast Processing of Photogrammetric Data, Interlaken, Switzerland, pp. 281–305 (1987)

Grasmair, M., Lenzen, F.: Anisotropic total variation filtering. Appl. Math. Optim. 62, 323–339 (2010)

Guo, Z., Sun, J., Zhang, D., Wu, B.: Adaptive Perona–Malik model based on the variable exponent for image denoising. IEEE Trans. Image Process. 21, 958–967 (2012)

Hahn, J., Lee, C.-O.: A nonlinear structure tensor with the diffusivity matrix composed of the image gradient. J. Math. Imaging Vis. 34, 137–151 (2009)

Hahn, J., Tai, X.-C., Borok, S., Bruckstein, A.M.: Orientation-matching minimization for image denoising and inpainting. Int. J. Comput. Vis. 92, 308–324 (2011)

Hu, Y., Jacob, M.: Higher degree total variation (HDTV) regularization for image recovery. IEEE Trans. Image Process. 21, 2559–2571 (2012)

Lefkimmiatis, S., Osher, S.: Non-local structure tensor functionals for image regularization. IEEE Trans. Comput. Imaging 1, 16–29 (2015)

Lefkimmiatis, S., Roussos, A., Maragos, P., Unser, M.: Structure tensor total variation. SIAM J. Imaging Sci. 8, 1090–1122 (2015)

Lenzen, F., Berger, J.: Solution-driven adaptive total variation regularization. In: SSVM Proceedings, pp. 203–215 (2015)

Lenzen, F., Becker, F., Lellmann, J.: Adaptive second-order total variation: an approach aware of slope discontinuities. In: Proceedings of the Fourth International Conference on Scale Space and Variational Methods in Computer Vision (SSVM), pp. 61–73 (2013)

Li, F., Li, Z., Pi, L.: Variable exponent functionals in image restoration. Appl. Math. Comput. 216, 870–882 (2010)

Lysaker, M., Osher, S., Tai, X.-C.: Noise removal using smoothed normals and surface fitting. IEEE Trans. Image Process. 13, 1345–1357 (2004)

Rahman, T., Tai, X-C., Osher, S.: A TV-Stokes denoising algorithm. In: Proceedings of Scale Space and Variational Methods in Computer Vision (SSVM), pp. 473–482 (2007)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Physica D 60, 259–268 (1992)

Steidl, G., Teuber, T.: Anisotropic smoothing using double orientations. In: Proceedings of the Second International Conference Scale Space and Variational Methods in Computer Vision, pp. 477–489 (2009)

Takeda, H., Farsiu, S., Milanfar, P.: Kernel regression for image processing and reconstruction. IEEE Trans. Image Process. 16, 349–366 (2007)

Tschumperlé, D.: PDE’s based regularization of multivalued images and applications, PhD thesis, University of Nice Sophia Antipolis (2002)

Vogel, C.R., Oman, M.E.: Iterative methods for total variation denoising. SIAM J. Sci. Comput. 17, 227–238 (1996)

Weickert, J.: Anisotropic Diffusion in Image Processing. Teubner-Verlag, Stuttgart (1998)

Weickert, J.: Coherence-enhancing diffusion filtering. Int. J. Comput. Vis. 31, 111–127 (1999)

Weickert, J., Romeny, B.M.T.H., Viergever, M.A.: Efficient and reliable schemes for nonlinear diffusion filtering. IEEE Trans. Image Process. 7, 398–410 (1998)

Zhang, J., Lai, R., Kuo, C.J.: Adaptive directional total-variation model for latent fingerprint segmentation. IEEE Trans. Inf. Forensics Secur. 8, 1261–1273 (2013)

Åstöm, F., Baravdish, G., Felsberg, M.: A Tensor variational formulation of gradient energy total variation. In: Proceedings of the 10th International Conference EMMCVPR, pp. 307–320 (2015)

Acknowledgments

This work was supported by the Academy of Finland and the Vilho, Yrjö and Kalle Väisälä Foundation.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Tiirola, J. Image Denoising Using Directional Adaptive Variable Exponents Model. J Math Imaging Vis 57, 56–74 (2017). https://doi.org/10.1007/s10851-016-0666-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10851-016-0666-4