Abstract

In this paper, we present and analyze a new set of low-rank recovery algorithms for linear inverse problems within the class of hard thresholding methods. We provide strategies on how to set up these algorithms via basic ingredients for different configurations to achieve complexity vs. accuracy tradeoffs. Moreover, we study acceleration schemes via memory-based techniques and randomized, ϵ-approximate matrix projections to decrease the computational costs in the recovery process. For most of the configurations, we present theoretical analysis that guarantees convergence under mild problem conditions. Simulation results demonstrate notable performance improvements as compared to state-of-the-art algorithms both in terms of reconstruction accuracy and computational complexity.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this work, we consider the general affine rank minimization (ARM) problem, described as follows:

The ARM Problem: Assume \(\boldsymbol {X}^{\ast}\in \mathbb{R}^{m \times n} \) is a rank-k matrix of interest (k≪min{m,n}) and let \(\boldsymbol {\mathcal {A}}: \mathbb {R}^{m \times n} \rightarrow\mathbb{R}^{p}\) be a known linear operator. Given a set of observations as \(\boldsymbol {y}= \boldsymbol {\mathcal {A}}\boldsymbol {X}^{\ast}+ \boldsymbol {\varepsilon }\in\mathbb{R}^{p}\), we desire to recover X ∗ from y in a scalable and robust manner.

The challenge in this problem is to recover the true low-rank matrix in subsampled settings where p≪m⋅n. In such cases, we typically exploit the prior information that X ∗ is low-rank and thus, we are interested in finding a matrix X of rank at most k that minimizes the data error \(f(\boldsymbol {X}) := \Vert \boldsymbol {y}- \boldsymbol {\mathcal {A}}\boldsymbol {X}\Vert_{2}^{2} \) as follows:

The ARM problem appears in many applications; low dimensional embedding [1], matrix completion [2], image compression [3], function learning [4, 5] just to name a few. We present below important ARM problem cases, as characterized by the nature of the linear operator \(\boldsymbol {\mathcal {A}}\).

General linear maps: In many ARM problem cases, \(\boldsymbol {\mathcal {A}}\) or \(\boldsymbol {\mathcal {A}}^{\ast}\) has a dense range, satisfying specific incoherence or restricted isometry properties (discussed later in the paper); here, \(\boldsymbol {\mathcal {A}}^{\ast}\) is the adjoint operator of \(\boldsymbol {\mathcal {A}}\). In Quantum Tomography, [6] studies the Pauli operator, a compressive linear map \(\boldsymbol {\mathcal {A}}\) that consists of the Kronecker product of 2×2 matrices and obeys restricted isometry properties, defined later in the paper. Furthermore, recent developments indicate connections of ridge function learning [4, 7] and phase retrieval [8] with the ARM problem where \(\boldsymbol {\mathcal {A}}\) is a Bernoulli and a Fourier operator, respectively.

Matrix Completion (MC): Let Ω be the set of ordered pairs that represent the coordinates of the observable entries in X ∗. Then, the set of observations satisfy \(\boldsymbol {y}= \boldsymbol {\mathcal {A}}_{\varOmega} \boldsymbol{X}^{\ast}+ \boldsymbol {\varepsilon }\) where \(\boldsymbol {\mathcal {A}}_{\varOmega} \) defines a linear mask over the observable entries Ω. To solve the MC problem, a potential criterion is given by (1) [2]. As a motivating example, consider the famous Netflix problem [9], a recommender system problem where users’ movie preferences are inferred by a limited subset of entries in a database.

Principal Component Analysis: In Principal Component Analysis (PCA), we are interested in identifying a low rank subspace that best explains the data in the Euclidean sense from the observations \(\boldsymbol {y}= \boldsymbol {\mathcal {A}}\boldsymbol{X}^{\ast}\) where \(\boldsymbol {\mathcal {A}}: \mathbb {R}^{m \times n} \rightarrow\mathbb{R}^{p}\) is an identity linear map that stacks the columns of the matrix X ∗ into a single column vector with p=m⋅n. We observe that the PCA problem falls under the ARM criterion in (1). While (1) is generally NP-hard to solve optimally, PCA can be solved in polynomial time using the truncated Singular Value Decomposition (SVD) of \(\boldsymbol {\mathcal {A}}^{\ast} \boldsymbol {y}\). As an extension to the PCA setting, [10] considers the Robust PCA problem where y is further corrupted by gross sparse noise. We extend the framework proposed in this paper for the RPCA case and its generalizations in [11].

For the rest of the paper, we consider only the low rank estimation case in (1). As running test cases to support our claims, we consider the MC setting as well as the general ARM setting where \(\boldsymbol {\mathcal {A}}\) is constituted by permuted subsampled noiselets [12].

1.1 Two Camps of Recovery Algorithms

Convex relaxations: In [13], the authors study the nuclear norm \(\Vert \boldsymbol {X}\Vert_{\ast} := \sum_{i = 1}^{\operatorname{rank}(\boldsymbol {X})} \sigma _{i} \) as a convex surrogate of \(\operatorname{rank}(\boldsymbol {X}) \) operator so that we can leverage convex optimization approaches, such as interior-point methods—here, σ i denotes the i-th singular value of X. Under basic incoherence properties of the sensing linear mapping \(\boldsymbol {\mathcal {A}}\), [13] provides provable guarantees for unique low rank matrix recovery using the nuclear norm.

Once (1) is relaxed to a convex problem, decades of knowledge on convex analysis and optimization can be leveraged. Interior point methods find a solution with fixed precision in polynomial time but their complexity might be prohibitive even for moderate-sized problems [14, 15]. More suitable for large-scale data analysis, first-order methods constitute low-complexity alternatives but most of them introduce complexity vs. accuracy tradeoffs [16–19].

Non-convex approaches: In contrast to the convex relaxation approaches, iterative greedy algorithms maintain the nonconvex nature of (1). Unfortunately, solving (1) optimally is in general NP-hard [20]. Due to this computational intractability, the algorithms in this class greedily refine a rank-k solution using only “local” information available at the current iteration [21–23].

1.2 Contributions

In this work, we study a special class of iterative greedy algorithms known as hard thresholding methods. Similar results have been derived for the vector case [24]. Note that the transition from sparse vector approximation to ARM is non-trivial; while s-sparse signals “live” in the union of finite number of subspaces, the set of rank-k matrices expands to infinitely many subspaces. Thus, the selection rules do not generalize in a straightforward way.

Our contributions are the following:

Ingredients of hard thresholding methods: We analyze the behaviour and performance of hard thresholding methods from a global perspective. Five building blocks are studied: (i) step size selection μ i , (ii) gradient or least-squares updates over restricted low-rank subspaces (e.g., adaptive block coordinate descent), (iii) memory exploitation, (iv) active low-rank subspace tracking and, (v) low-rank matrix approximations (described next). We highlight the impact of these key pieces on the convergence rate and signal reconstruction performance and provide optimal and/or efficient strategies on how to set up these ingredients under different problem conditions.

Low-rank matrix approximations in hard thresholding methods: In [25], the authors show that the solution efficiency can be significantly improved by ϵ-approximation algorithms. Based on similar ideas, we analyze the impact of ϵ-approximate low rank-revealing schemes in the proposed algorithms with well-characterized time and space complexities. Moreover, we provide extensive analysis to prove convergence using ϵ-approximate low-rank projections.

Hard thresholding-based framework with improved convergence conditions: We study hard thresholding variants that provide salient computational tradeoffs for the class of greedy methods on low-rank matrix recovery. These methods, as they iterate, exploit the non-convex scaffold of low rank subspaces on which the approximation problem resides. Using simple analysis tools, we derive improved conditions that guarantee convergence, compared to state-of-the-art approaches.

The organization of the paper is as follows. In Sect. 2, we set up the notation and provide some definitions and properties, essential for the rest of the paper. In Sect. 3, we describe the basic algorithmic frameworks in a nutshell, while in Sect. 4 we provide important “ingredients” for the class of hard-thresholding methods; detailed convergence analysis proofs are provided in Sect. 5. The complexity analysis of the proposed algorithms is provided in Sect. 6. We study two acceleration schemes in Sects. 7 and 8, based on memory utilization and ϵ-approximate low-rank projections, respectively. We further improve convergence speed by exploiting randomized low rank projections in Sect. 9, based on power iteration-based subspace finder tools [26]. We provide empirical support for our claims through experimental results on synthetic and real data in Sect. 10. Finally, we conclude with future work directions in Sect. 11.

2 Elementary Definitions and Properties

We reserve lower-case and bold lower-case letters for scalar and vector variable representation, respectively. Bold upper-case letters denote matrices while bold calligraphic upper-case letters represent linear operators. We use calligraphic upper-case letters for set representations. We use X(i) to represent the matrix estimate at the i-th iteration.

The rank of X is denoted as \(\operatorname{rank}(\boldsymbol {X}) \leq \min\lbrace m, n \rbrace\). The empirical data error is denoted as \(f(\boldsymbol {X}) := \Vert \boldsymbol {y}- \boldsymbol {\mathcal {A}}\boldsymbol {X}\Vert_{2}^{2} \) with gradient \(\nabla f(\boldsymbol {X}) := -2 \boldsymbol {\mathcal {A}}^{\ast}(\boldsymbol {y}- \boldsymbol {\mathcal {A}}\boldsymbol {X})\), where ∗ is the adjoint operation over the linear mapping \(\boldsymbol {\mathcal {A}}\). The inner product between matrices \(\boldsymbol {A},~\boldsymbol{B} \in\mathbb{R}^{m \times n} \) is denoted as 〈A,B〉=trace(B T A), where T represents the transpose operation. I represents an identity matrix with dimensions apparent from the context.

Let \(\mathcal{S} \) be a set of orthonormal, rank-1 matrices that span an arbitrary subspace in \(\mathbb{R}^{m \times n}\). We reserve \(\operatorname{span}(\mathcal{S}) \) to denote the subspace spanned by \(\mathcal{S}\). With slight abuse of notation, we use:

to denote the maximum rank a matrix \(\boldsymbol{X} \in \mathbb {R}^{m \times n}\) can have such that X lies in the subspace spanned by the set \(\mathcal{S} \). Given a finite set \(\mathcal{S} \), \(|\mathcal{S}| \) denotes the cardinality of \(\mathcal{S} \). For any matrix X, we use R(X) to denote its range.

We define a minimum cardinality set of orthonormal, rank-1 matrices that span the subspace induced by a set of rank-1 (and possibly non-orthogonal) matrices \(\mathcal{S}\) as:

where \(\mathcal{U}\) denotes the superset that includes all the sets of orthonormal, rank-1 matrices in \(\mathbb{R}^{m \times n}\) such that 〈T i , T j 〉=0, i≠j, ∀T i , \(\boldsymbol{T}_{j} \in\mathcal{T} \) and, ∥T i ∥ F =1, ∀i. In general, \(\text{ortho}(\mathcal{S}) \) is not unique.

A well-known lemma used in the convergence rate proofs of this class of greedy hard thresholding algorithms is defined next.

Lemma 1

[27] Let \(\mathcal{J} \subseteq\mathbb{R}^{m \times n} \) be a closed convex set and \(f: \mathcal{J} \rightarrow\mathbb{R} \) be a smooth objective function defined over \(\mathcal{J} \). Let \(\boldsymbol {X}^{\ast}\in\mathcal{J} \) be a local minimum of the objective function f over the set \(\mathcal{J} \). Then

2.1 Singular Value Decomposition (SVD) and Its Properties

Definition 1

[SVD] Let \(\boldsymbol {X}\in\mathbb{R}^{m \times n} \) be a rank-l (l<min{m,n}) matrix. Then, the SVD of X is given by:

where \(\boldsymbol{U}_{\alpha} \in\mathbb{R}^{m \times l}\), \(\boldsymbol{U}_{\beta} \in\mathbb{R}^{m \times(m - l)}\), \(\boldsymbol{V}_{\alpha} \in\mathbb{R}^{n \times l}\), \(\boldsymbol {V}_{\beta} \in\mathbb{R}^{n \times(n - l)} \) and \(\widetilde {\boldsymbol{\varSigma}} = \text{diag}(\sigma_{1}, \dots, \sigma_{l}) \in\mathbb{R}^{l \times l} \) for \(\sigma_{1}, \dots, \sigma_{l} \in\mathbb{R}_{+} \). Here, the columns of U,V represent the set of left and right singular vectors, respectively, and σ 1,…,σ l denote the singular values.

For any matrix \(\boldsymbol{X} \in\mathbb{R}^{m \times n} \) with arbitrary \(\operatorname{rank}(\boldsymbol{X}) \leq\min\lbrace m, n \rbrace\), its best orthogonal projection \(\mathcal{P}_{k}(\boldsymbol {X}) \) onto the set of rank-k (k<rank(X)) matrices \(\mathcal{C}_{k}:= \lbrace \boldsymbol{A} \in\mathbb{R}^{m \times n}: \operatorname{rank}(\boldsymbol {A}) \leq k \rbrace\) defines the optimization problem:

According to the Eckart-Young theorem [28], the best rank-k approximation of a matrix X corresponds to its truncated SVD: if X=UΣV T, then \(\mathcal {P}_{k}(\boldsymbol{X}) := \boldsymbol{U}_{k} \boldsymbol{\varSigma}_{k} \boldsymbol{V}_{k}^{T} \) where \(\boldsymbol{\varSigma}_{k} \in\mathbb {R}^{k\times k} \) is a diagonal matrix that contains the first k diagonal entries of Σ and U k , V k contain the corresponding left and right singular vectors, respectively. Moreover, this projection is not always unique. In the case of multiple identical singular values, the lexicographic approach is used to break ties. In any case, \(\Vert\mathcal{P}_{k}(\boldsymbol{X}) - \boldsymbol{X} \Vert_{F} \leq\Vert\boldsymbol{W} - \boldsymbol{X} \Vert_{F}\) for any rank-k \(\boldsymbol{W} \in\mathbb{R}^{m \times n}\).

2.2 Subspace Projections

Given a set of orthonormal, rank-1 matrices \(\mathcal{S} \), we denote the orthogonal projection operator onto the subspace induced by \(\mathcal{S} \) as \(\mathcal{P}_{\mathcal{S}} \) Footnote 1 which is an idempotent linear transformation; furthermore, we denote the orthogonal projection operator onto the orthogonal subspace of \(\mathcal{S} \) as \(\mathcal{P}_{\mathcal{S}^{\bot}} \). We can always decompose a matrix \(\boldsymbol {X}\in\mathbb{R}^{m \times n} \) into two matrix components, as follows:

If \(\boldsymbol {X}\in\operatorname{span}(\mathcal{S}) \), the best projection of X onto the subspace induced by \(\mathcal{S} \) is the matrix X itself. Moreover, \(\Vert\mathcal{P}_{\mathcal {S}}\boldsymbol {X}\Vert_{F} \leq\Vert \boldsymbol {X}\Vert_{F} \) for any \(\mathcal {S}\) and X.

Definition 2

[Orthogonal projections using SVD] Let \(\boldsymbol {X}\in\mathbb{R}^{m \times n} \) be a matrix with arbitrary \(\operatorname{rank} \) and SVD decomposition given by (4). Then, \(\mathcal{S}:= \lbrace\boldsymbol{u}_{i} \boldsymbol{v}_{i}^{T}: i = 1,\dots, k\rbrace\) (k≤rank(X)) constitutes a set of orthonormal, rank-1 matrices that spans the best k-rank subspace in R(X) and R(X T); here, u i and v i denote the i-th left and right singular vectors, respectively. The orthogonal projection onto this subspace is given by [2]:

where \(\mathcal{P}_{\mathcal{U}} = \boldsymbol{U}_{:, 1:k} \boldsymbol{U}_{:, 1:k}^{T}\) and \(\mathcal{P}_{\mathcal{V}} = \boldsymbol{V}_{:, 1:k} \boldsymbol{V}_{:, 1:k}^{T}\) in Matlab notation. Moreover, the orthogonal projection onto the \(\mathcal{S}^{\bot}\) is given by:

In the algorithmic descriptions, we use \(\mathcal{S} \leftarrow \mathcal{P}_{k} (\boldsymbol {X})\) to denote the set of rank-1, orthonormal matrices as outer products of the k left u i and right v i principal singular vectors of X that span the best rank-k subspace of X; e.g. \(\mathcal{S} = \lbrace\boldsymbol{u}_{i} \boldsymbol {v}_{i},~i = 1,\dots,k\rbrace\). Moreover, \(\widehat{\boldsymbol {X}} \leftarrow\mathcal{P}_{k} (\boldsymbol {X})\) denotes a/the best rank-k projection matrix of X. In some cases, we use \(\lbrace\mathcal{S},~\widehat{\boldsymbol {X}} \rbrace\leftarrow \mathcal{P}_{k} (\boldsymbol {X})\) when we compute both. The distiction between these cases is apparent from the context.

2.3 Restricted Isometry Property

Many conditions have been proposed in the literature to establish solution uniqueness and recovery stability such as null space property [29], exact recovery condition [30], etc. For the matrix case, [13] proposed the restricted isometry property (RIP) for the ARM problem.

Definition 3

[Rank Restricted Isometry Property (R-RIP) for matrix linear operators [13]] A linear operator \(\boldsymbol {\mathcal {A}}: \mathbb{R}^{m \times n} \rightarrow \mathbb {R}^{p} \) satisfies the R-RIP with constant \(\delta_{k}(\boldsymbol {\mathcal {A}}) \in(0,1)\) if and only if:

\(\forall\boldsymbol{X} \in\mathbb{R}^{m \times n}\) such that \(\operatorname{rank}(\boldsymbol {X}) \leq k\). We write δ k to mean \(\delta_{k}(\boldsymbol {\mathcal {A}})\), unless otherwise stated.

[6] shows that Pauli operators satisfy the rank-RIP in compressive settings while, in function learning, the linear map \(\boldsymbol {\mathcal {A}}\) is designed specifically to satisfy the rank-RIP [7].

2.4 Some Useful Bounds Using R-RIP

In this section, we present some lemmas that are useful in our subsequent developments—these lemmas are consequences of the R-RIP of \(\boldsymbol {\mathcal {A}}\).

Lemma 2

[21] Let \(\boldsymbol {\mathcal {A}}: \mathbb{R}^{m \times n} \rightarrow\mathbb {R}^{p} \) be a linear operator that satisfies the R-RIP with constant δ k . Then, \(\forall\boldsymbol{v} \in \mathbb{R}^{p} \), the following holds true:

where \(\mathcal{S} \) is a set of orthonormal, rank-1 matrices in \(\mathbb{R}^{m \times n}\) such that \(\operatorname{rank}(\mathcal {P}_{\mathcal{S}}\boldsymbol {X}) \leq k\), \(\forall \boldsymbol {X}\in\mathbb {R}^{m \times n} \).

Lemma 3

[21] Let \(\boldsymbol {\mathcal {A}}: \mathbb{R}^{m \times n} \rightarrow\mathbb {R}^{p} \) be a linear operator that satisfies the R-RIP with constant δ k . Then, \(\forall \boldsymbol {X}\in\mathbb {R}^{m \times n} \), the following holds true:

where \(\mathcal{S} \) is a set of orthonormal, rank-1 matrices in \(\mathbb{R}^{m \times n}\) such that \(\operatorname{rank}(\mathcal {P}_{\mathcal{S}}\boldsymbol {X}) \leq k\), \(\forall \boldsymbol {X}\in\mathbb {R}^{m \times n} \).

Lemma 4

[22] Let \(\boldsymbol {\mathcal {A}}: \mathbb{R}^{m \times n} \rightarrow\mathbb {R}^{p} \) be a linear operator that satisfies the R-RIP with constant δ k and \(\mathcal{S} \) be a set of orthonormal, rank-1 matrices in \(\mathbb{R}^{m \times n}\) such that \(\operatorname{rank}(\mathcal{P}_{\mathcal{S}}\boldsymbol {X}) \leq k\), \(\forall \boldsymbol {X}\in\mathbb{R}^{m \times n} \). Then, for μ>0, \(\boldsymbol {\mathcal {A}}\) satisfies:

where \(\lambda(\boldsymbol{\mathcal{B}}) \) represents the range of eigenvalues of the linear operator \(\boldsymbol{\mathcal{B}}: \mathbb{R}^{p} \rightarrow\mathbb{R}^{m \times n} \). Moreover, \(\forall \boldsymbol {X}\in\mathbb{R}^{m \times n} \), it follows that:

Lemma 5

[22] Let \(\boldsymbol {\mathcal {A}}: \mathbb{R}^{m \times n} \rightarrow\mathbb {R}^{p} \) be a linear operator that satisfies the R-RIP with constant δ k and \(\mathcal{S}_{1}, \mathcal{S}_{2} \) be two sets of orthonormal, rank-1 matrices in \(\mathbb{R}^{m \times n}\) such that

Then, the following inequality holds:

3 Algebraic Pursuits in a Nutshell

Explicit descriptions of the proposed algorithms are provided in Algorithms 1 and 2. Algorithm 1 follows from the ALgrebraic PursuitS (ALPS) scheme for the vector case [31]. Matrix ALPS I provides efficient strategies for adaptive step size selection and additional signal estimate updates at each iteration (these motions are explained in detail in the next subsection). Algorithm 2 (ADMiRA) [21] further improves the performance of Algorithm 1 by introducing least squares optimization steps on restricted subspaces—this technique borrows from a series of vector reconstruction algorithms such as CoSaMP [32], Subspace Pursuit (SP) [33] and Hard Thresholding Pursuit (HTP) [34].

In a nutshell, both algorithms simply seek to improve the subspace selection by iteratively collecting an extended subspace \(\mathcal {S}_{i} \) with \(\operatorname{rank}(\operatorname{span}(\mathcal{S}_{i})) \leq2k\) and then finding the rank-k matrix that fits the measurements in this restricted subspace using least squares or gradient descent motions.

At each iteration, the Algorithms 1 and 2 perform motions from the following list:

(1) Best rank-k subspace orthogonal to \(\mathcal{X}_{i} \) and active subspace expansion: We identify the best rank-k subspace of the current gradient ∇f(X(i)), orthogonal to \(\mathcal{X}_{i} \) and then merge this low-rank subspace with \(\mathcal{X}_{i} \). This motion guarantees that, at each iteration, we expand the current rank-k subspace estimate with k new, rank-1 orthogonal subspaces to explore.

(2a) Error norm reduction via greedy descent with adaptive step size selection (Algorithm 1): We decrease the data error by performing a single gradient descent step. This scheme is based on a one-shot step size selection procedure (Step size selection step)—detailed description of this approach is given in Sect. 4.

(2b) Error norm reduction via least squares optimization (Algorithm 2): We decrease the data error f(X) on the active O(k)-low rank subspace. Assuming \(\boldsymbol {\mathcal {A}}\) is well-conditioned over low-rank subspaces, the main complexity of this operation is dominated by the solution of a symmetric linear system of equations.

(3) Best rank-k subspace selection: We project the constrained solution onto the set of rank-k matrices \(\mathcal {C}_{k}:= \lbrace\boldsymbol{A} \in\mathbb{R}^{m \times n}: \operatorname{rank}(\boldsymbol{A}) \leq k \rbrace\) to arbitrate the active support set. This step is calculated in polynomial time complexity as a function of m×n using SVD or other matrix rank-revealing decomposition algorithms—further discussions about this step and its approximations can be found in Sects. 8 and 9.

(4) De-bias using gradient descent (Algorithm 1): We de-bias the current estimate W(i) by performing an additional gradient descent step, decreasing the data error. The step size selection procedure follows the same motions as in (2a).

4 Ingredients for Hard Thresholding Methods

4.1 Step Size Selection

For the sparse vector approximation problem, recent works on the performance of the IHT algorithm provide strong convergence rate guarantees in terms of RIP constants [35]. However, as a prerequisite to achieve these strong isometry constant bounds, the step size is set μ i =1,∀i, given that the sensing matrix satisfies \(\Vert\boldsymbol{\varPhi}\Vert_{2}^{2} < 1 \) where ∥⋅∥2 denotes the spectral norm [34]; similar analysis can be found in [3] for the matrix case. From a different perspective, [36] proposes a constant step size μ i =1/(1+δ 2K ), ∀i, based on a simple but intuitive convergence analysis of the gradient descent method.

Unfortunately, most of the above problem assumptions are not naturally met; the authors in [37] provide an intuitive example where IHT algorithm behaves differently under various scalings of the sensing matrix; similar counterexamples can be devised for the matrix case. Violating these assumptions usually leads to unpredictable signal recovery performance of the class of hard thresholding methods. Therefore, more sophisticated step size selection procedures should be devised to tackle these issues during actual recovery. On the other hand, the computation of R-RIP constants has exponential time complexity for the strategy of [3].

To this end, existing approaches broadly fall into two categories: constant and adaptive step size selection. In this work, we present efficient strategies to adaptively select the step size μ i that implies fast convergence rate, for mild R-RIP assumptions on \(\boldsymbol {\mathcal {A}}\). Constant step size strategies easily follow from [24] and are not listed in this work.

Adaptive step size selection. There is limited work on the adaptive step size selection for hard thresholding methods. To the best of our knowledge, apart from [24, 37, 38] are the only studies that attempt this via line searching for the vector case. At the time of review process, we become aware of [39] which implements ideas presented in [37] for the matrix case.

According to Algorithm 1, let X(i) be the current rank-k matrix estimate spanned by the set of orthonormal, rank-1 matrices in \(\mathcal{X}_{i} \). Using regular gradient descent motions, the new rank-k estimate W(i) can be calculated through:

We highlight that the rank-k approximate matrix may not be unique. It then holds that the subspace spanned by \(\mathcal{W}_{i} \) originates: (i) either from the subspace of \(\mathcal{X}_{i} \), (ii) or from the best subspace (in terms of the Frobenius norm metric) of the current gradient ∇f(X(i)), orthogonal to \(\mathcal{X}_{i} \), (iii) or from the combination of orthonormal, rank-1 matrices lying on the union of the above two subspaces. The statements above can be summarized in the following expression:

for any step size μ i and \(\mathcal{D}_{i} \leftarrow\mathcal {P}_{k} ( \mathcal{P}_{\mathcal{X}_{i}^{\bot}} \nabla f(\boldsymbol {X}(i)) )\). Since \(\operatorname{rank}(\operatorname{span}(\mathcal {W}_{i})) \leq k\), we easily deduce the following key observation: let \(\mathcal{S}_{i} \leftarrow\mathcal{D}_{i} \cup\mathcal{X}_{i} \) be a set of rank-1, orthonormal matrices where \(\operatorname{rank}(\text {span}(\mathcal{S}_{i})) \leq2k\). Given \(\mathcal{W}_{i} \) is unknown before the i-th iteration, \(\mathcal{S}_{i} \) spans the smallest subspace that contains \(\mathcal {W}_{i} \) such that the following equality

necessarily holds.Footnote 2

To compute step-size μ i , we use:

i.e., μ i is the minimizer of the objective function, given the current gradient ∇f(X(i)). Note that:

due to R-RIP—i.e., we select 2k subspaces such that μ i satisfies (18). We can derive similar arguments for the additional step size selection ξ i in Step 6 of Algorithm 1.

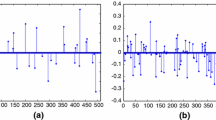

Adaptive μ i scheme results in more restrictive worst-case isometry constants compared to [3, 34, 41], but faster convergence and better stability are empirically observed in general. In [3], the authors present the Singular Value Projection (SVP) algorithm, an iterative hard thresholding algorithm for the ARM problem. According to [3], both constant and iteration dependent (but user-defined) step sizes are considered. Adaptive strategies presented in [3] require the computation of R-RIP constants which has exponential time complexity. Figures 1(a)–(b) illustrate some characteristic examples. The performance varies for different problem configurations. For μ>1, SVP diverges for various test cases. We note that, for large fixed matrix dimensions m,n, adaptive step size selection becomes computationally expensive compared to constant step size selection strategies, as the rank of X ∗ increases.

Median error per iteration for various step size policies and 20 Monte-Carlo repetitions. In brackets, we present the median time consumed for convergence in seconds. (a) m=n=2048, p=0.4n 2, and rank k=70—\(\boldsymbol {\mathcal {A}}\) is formed by permuted and subsampled noiselets [40]. (b) n=2048, m=512, p=0.4n 2, and rank k=50—we use underdetermined linear map \(\boldsymbol {\mathcal {A}}\) according to the MC problem (c) n=2048, m=512, p=0.4n 2, and rank k=40—we use underdetermined linear map \(\boldsymbol {\mathcal {A}}\) according to the MC problem

4.2 Updates on Restricted Subspaces

In Algorithm 1, at each iteration, the new estimate \(\boldsymbol {W}(i) \leftarrow\mathcal{P}_{k} (\boldsymbol{V}(i) ) \) can be further refined by applying a single or multiple gradient descent updates with line search restricted on \(\mathcal{W}_{i} \) [34] (Step 7 in Algorithm 1):

where \(\xi_{i} = \frac{\Vert\mathcal{P}_{\mathcal{W}_{i}} \nabla f(\boldsymbol{W}(i))\Vert_{F}^{2}}{\Vert \boldsymbol {\mathcal {A}}\mathcal {P}_{\mathcal{W}_{i}} \nabla f(\boldsymbol{W}(i))\Vert_{2}^{2}}\). In spirit, the gradient step above is the same as block coordinate descent in convex optimization where we find the subspaces adaptively. Figure 1(c) depicts the acceleration achieved by using additional gradient updates over restricted low-rank subspaces for a test case.

4.3 Acceleration via Memory-Based Schemes and Low-Rank Matrix Approximations

Memory-based techniques can be used to improve convergence speed. Furthermore, low-rank matrix approximation tools overcome the computational overhead of computing the best low-rank projection by inexactly solving (5). We keep the discussion on memory utilization for Sect. 7 and low-rank matrix approximations for Sects. 8 and 9 where we present new algorithmic frameworks for low-rank matrix recovery.

4.4 Active Low-Rank Subspace Tracking

Per iteration of Algorithms 1 and 2, we perform projection operations \(\mathcal{P}_{\mathcal{S}}\boldsymbol {X}\) and \(\mathcal{P}_{\mathcal {S}^{\bot}} \boldsymbol {X}\) where \(\boldsymbol {X}\in\mathbb{R}^{m \times n}\), as described by (6) and (7), respectively. Since \(\mathcal{S} \) is constituted by outer products of left and right singular vectors as in Definition 2, \(\mathcal{P}_{\mathcal{S}}\boldsymbol {X}\) (resp. \(\mathcal{P}_{\mathcal {S}^{\bot}} \boldsymbol {X}\)) projects onto the (resp. complement of the) best low-rank subspace in R(X) and R(X T). These operations are highly connected with the adaptive step size selection and the updates on restricted subspaces. Unfortunately, the time-complexity to compute \(\mathcal{P}_{\mathcal{S}}\boldsymbol {X}\) is dominated by three matrix-matrix multiplications which decelerates the convergence of the proposed schemes in high-dimensional settings. To accelerate the convergence in many test cases, it turns out that we do not have to use the best projection \(\mathcal{P}_{\mathcal{S}}\) in practice.Footnote 3 Rather, employing inexact projections is sufficient to converge to the optimal solution: either (i) \(\mathcal{P}_{\mathcal{U}}\boldsymbol {X}\) onto the best low-rank subspace in R(X) only (if m≪n) or (ii) \(\boldsymbol {X}\mathcal{P}_{\mathcal{V}}\) onto the best low-rank subspace in R(X T) only (if m≫n);Footnote 4 \(\mathcal{P}_{\mathcal{U}}\) and \(\mathcal{P}_{\mathcal{V}}\) are defined in Definition 2 and require only one matrix-matrix multiplication.

Figure 2 shows the time overhead due to the exact projection application \(\mathcal{P}_{\mathcal{S}}\) compared to \(\mathcal {P}_{\mathcal{U}}\) for m≤n. In Fig. 2(a), we use subsampled and permuted noiselets for linear map \(\boldsymbol {\mathcal {A}}\) and in Figs. 2(b)–(c), we test the MC problem. While in the case m=n the use of (6)–(7) has a clear advantage over inexact projections using only \(\mathcal{P}_{\mathcal{U}}\), the latter case converges faster to the desired accuracy 5×10−4 when m≪n as shown in Figs. 2(a)–(b). In our derivations, we assume \(\mathcal{P}_{\mathcal{S}}\) and \(\mathcal{P}_{\mathcal{S}^{\bot}}\) as defined in (6) and (7).

Median error per iteration for Matrix ALPS I and Matrix ALPS II variants over 10 Monte-Carlo repetitions. In brackets, we present the median time consumed for convergene in seconds. (a) n=2048, m=512, p=0.25n 2, and rank k=40. (b) n=2000, m=1000, p=0.25n 2, and rank k=50. (c) n=m=1000, p=0.25n 2, and rank k=50

5 Convergence Guarantees

In this section, we present the theoretical convergence guarantees of Algorithms 1 and 2 as functions of R-RIP constants. To characterize the performance of the proposed algorithms, both in terms of convergence rate and noise resilience, we use the following recursive expression:

In (19), γ denotes the approximation guarantee and provides insights into algorithm’s reconstruction capabilities when additive noise is present; ρ<1 expresses the convergence rate towards a region around X ∗, whose radius is determined by \(\frac{\gamma}{1-\rho} \Vert \boldsymbol {\varepsilon }\Vert_{2} \). In short, (19) characterizes how the distance to the true signal X ∗ is decreased and how the noise level affects the accuracy of the solution, at each iteration.

5.1 Matrix ALPS I

An important lemma for our derivations below is given next:

Lemma 6

[Active subspace expansion]

Let X(i) be the matrix estimate at the i-th iteration and let \(\mathcal{X}_{i} \) be a set of orthonormal, rank-1 matrices such that \(\mathcal{X}_{i} \leftarrow\mathcal{P}_{k}(\boldsymbol {X}(i)) \). Then, at each iteration, the Active Subspace Expansion step in Algorithms 1 and 2 identifies information in X ∗, such that:

where \(\mathcal{S}_{i} \leftarrow\mathcal{X}_{i} \cup\mathcal{D}_{i} \) and \(\mathcal{X}^{\ast}\leftarrow\mathcal{P}_{k}(\boldsymbol {X}^{\ast}) \).

Lemma 6 states that, at each iteration, the active subspace expansion step identifies a 2k rank subspace such that the amount of unrecovered energy of X ∗—i.e., the projection of X ∗ onto the orthogonal subspace of \(\operatorname{span}(\mathcal{S}_{i}) \)—is bounded by (20).

Then, Theorem 1 characterizes the iteration invariant of Algorithm 1 for the matrix case:

Theorem 1

[Iteration invariant for Matrix ALPS I]

The (i+1)-th matrix estimate X(i+1) of Matrix ALPS I satisfies the following recursion:

where \(\rho:= ( \frac{1 + 2\delta_{2k}}{1-\delta_{2k}} ) (\frac{4\delta_{2k}}{1-\delta_{2k}} + (2\delta_{2k} + 2\delta_{3k})\frac{2\delta_{3k}}{1-\delta_{2k}} ) \) and \(\gamma:= ( \frac{1 + 2\delta_{2k}}{1-\delta_{2k}} ) (\frac{2\sqrt{1+\delta _{2k}}}{1 - \delta_{2k}} + \frac{2\delta_{3k}}{1-\delta_{2k}} \sqrt{2(1+\delta_{2k})} ) + \frac {\sqrt{1+\delta_{k}}}{1-\delta_{k}}\). Moreover, when δ 3k <0.1235, the iterations are contractive.

To provide some intuition behind this result, assume that X ∗ is a rank-k matrix. Then, according to Theorem 1, for ρ<1, the approximation parameter γ in (21) satisfies:

Moreover, we derive the following:

which is a stronger R-RIP condition assumption compared to state-of-the-art approaches [21]. In the next section, we further improve this guarantee using Algorithm 2.

Unfolding the recursive formula (21), we obtain the following upper bound for ∥X(i)−X ∗∥ F at the i-th iteration:

Then, given X(0)=0, Matrix ALPS I finds a rank-k solution \(\widehat{\boldsymbol {X}} \in\mathbb {R}^{m \times n} \) such that \(\Vert\widehat{\boldsymbol {X}} - \boldsymbol{X}^{\ast}\Vert_{F} \leq\frac{\gamma+ 1 -\rho }{1-\rho} \Vert \boldsymbol {\varepsilon }\Vert_{2} \) after \(i:= \lceil \frac{\log(\Vert\boldsymbol{X}^{\ast}\Vert_{F}/\Vert \boldsymbol {\varepsilon }\Vert _{2})}{\log(1/\rho)} \rceil\) iterations.

If we ignore steps 5 and 6 in Algorithm 1, we obtain another projected gradient descent variant for the affine rank minimization problem, for which we obtain the following performance guarantees—the proof follows from the proof of Theorem 1.

Corollary 1

[Matrix ALPS I Instance]

In Algorithm 1, we ignore steps 5 and 6 and let \(\lbrace \mathcal {X}_{i+1},~\boldsymbol {X}(i+1) \rbrace\leftarrow\mathcal{P}_{k}(\boldsymbol{V}_{i}) \). Then, by the same analysis, we observe that the following recursion is satisfied:

for \(\rho:= (\frac{4\delta_{2k}}{1-\delta_{2k}} + (2\delta_{2k} + 2\delta_{3k})\frac{2\delta_{3k}}{1-\delta_{2k}} ) \) and \(\gamma:= (\frac{2\sqrt{1+\delta _{2k}}}{1 - \delta_{2k}} + \frac{2\delta_{3k}}{1-\delta_{2k}} \sqrt{2(1+\delta_{2k})} ) \). Moreover, ρ<1 when δ 3k <0.1594.

We observe that the absence of the additional estimate update over restricted support sets results in less restrictive isometry constants compared to Theorem 1. In practice, additional updates result in faster convergence, as shown in Fig. 1(c).

5.2 ADMiRA Instance

In Matrix ALPS I, the gradient descent steps constitute a first-order approximation to least-squares minimization problems. Replacing Step 4 in Algorithm 1 with the following optimization problem:

we obtain ADMiRA (furthermore, we remove the de-bias step in Algorithm 1). Assuming that the linear operator \(\boldsymbol {\mathcal {A}}\), restricted on sufficiently low-rank subspaces, is well conditioned in terms of the R-RIP assumption, the optimization problem (24) has a unique optimal minimizer. By exploiting the optimality condition in Lemma 1, ADMiRA instance in Algorithm 2 features the following guarantee:

Theorem 2

[Iteration invariant for ADMiRA instance]

The (i+1)-th matrix estimate X(i+1) of ADMiRA answers the following recursive expression:

\(\rho:= (2\delta_{2k} + 2\delta_{3k} )\sqrt{\frac {1+3\delta_{3k}^{2}}{1-\delta_{3k}^{2}}}\), and \(\gamma := \sqrt{\frac{1+3\delta_{3k}^{2}}{1-\delta_{3k}^{2}}} \sqrt {2(1+\delta_{3k})} + (\frac{\sqrt{1+3\delta_{3k}^{2}}}{1-\delta_{3k}} + \sqrt{3} )\sqrt{1+\delta_{2k}}\). Moreover, when δ 3k <0.2267, the iterations are contractive.

Similarly to Matrix ALPS I analysis, the parameter γ in Theorem 2 satisfies:

Furthermore, to compare the approximation guarantees of Theorem 2 with [21], we further observe:

We remind that [21] provides convergence guarantees for ADMiRA with δ 4k <0.04 for ρ=1/2.

6 Complexity Analysis

In each iteration, computational requirements of the proposed hard thresholding methods mainly depend on the total number of linear mapping operations \(\boldsymbol {\mathcal {A}}\), gradient descent steps, least-squares optimizations, projection operations and matrix decompositions for low rank approximation. Different algorithmic configurations (e.g. removing steps 6 and 7 in Algorithm 1) lead to hard thresholding variants with less computational complexity per iteration and better R-RIP conditions for convergence but a degraded performance in terms of stability and convergence speed is observed in practice. On the other hand, these additional processing steps increase the required time-complexity per iteration; hence, low iteration counts are desired to tradeoff these operations.

A non-exhaustive list of linear map examples includes the identity operator (Principal component analysis (PCA) problem), Fourier/Wavelets/Noiselets transformations and the famous Matrix Completion problem where \(\boldsymbol {\mathcal {A}}\) is a mask operator such that only a fraction of elements in X is observed. Assuming the most demanding case where \(\boldsymbol {\mathcal {A}}\) and \(\boldsymbol {\mathcal {A}}^{\ast}\) are dense linear maps with no structure, the computation of the gradient ∇f(X(i)) at each iteration requires O(pkmn) arithmetic operations.

Given a set \(\mathcal{S} \) of orthonormal, rank-1 matrices, the projection \(\mathcal{P}_{\mathcal{S}}\boldsymbol {X}\) for any matrix \(\boldsymbol {X}\in\mathbb{R}^{m \times n} \) requires time complexity O(max{m 2 n,mn 2}) as a sequence of matrix-matrix multiplication operations.Footnote 5 In Matrix ALPS I, the adaptive step size selection steps require O(max{pkmn,m 2 n}) time complexity for the calculation of μ i and ξ i quantities. In ADMiRA solving a least-squares system restricted on rank-2k and rank-k subspaces requires O(pk 2) complexity; according to [21, 32], the complexity of this step can be further reduced using iterative techniques such as the Richardson method or conjugate gradients algorithm.

Using the Lanczos method, we require O(kmn) arithmetic operations to compute a rank-k matrix approximation for a given constant accuracy; a prohibitive time-complexity that does not scale well for many practical applications. Sections 8 and 9 describe approximate low rank matrix projections and how they affect the convergence guarantees of the proposed algorithms.

Overall, the operation that dominates per iteration requires O(max{pkmn,m 2 n,mn 2}) time complexity in the proposed schemes.

7 Memory-Based Acceleration

Iterative algorithms can use memory to gain momentum in convergence. Based on Nesterov’s optimal gradient methods [42], we propose a hard thresholding variant, described in Algorithm 3 where an additional update on X(i+1) with momentum step size τ i is performed using previous matrix estimates.

Similar to μ i strategies, τ i can be preset as constant or adaptively computed at each iteration. Constant momentum step size selection has no additional computational cost but convergence rate acceleration is not guaranteed for some problem formulations in practice. On the other hand, empirical evidence has shown that adaptive τ i selection strategies result in faster convergence compared to zero-memory methods with similar complexity.

For the case of strongly convex objective functions, Nesterov [43] proposed the following constant momentum step size selection scheme: \(\tau_{i} = \frac{\alpha_{i}(1-\alpha_{i})}{\alpha_{i}^{2} + \alpha_{i+1}} \), where α 0∈(0,1) and α i+1 is computed as the root ∈(0,1) of

where \(\kappa(\boldsymbol {\mathcal {A}}) \) denotes the condition number of \(\boldsymbol {\mathcal {A}}\). In this scheme, exact calculation of q parameter is computationally expensive for large-scale data problems and approximation schemes are leveraged to compensate this complexity bottleneck.

Based upon adaptive μ i selection, we propose to select τ i as the minimizer of the objective function:

where \(\boldsymbol {\mathcal {A}}\boldsymbol {X}(i), \boldsymbol {\mathcal {A}}\boldsymbol {X}(i-1) \) are already pre-computed at each iteration. According to (26), τ i is dominated by the calculation of a vector inner product, a computationally cheaper process than q calculation.

Theorem 3 characterizes Algorithm 3 for constant momentum step size selection. To keep the main ideas simple, we ignore the additional gradient updates in Algorithm 3. In addition, we only consider the noiseless case for clarity. The convergence rate proof for these cases is provided in the Appendix.

Theorem 3

[Iteration invariant for Matrix ALPS II]

Let \(\boldsymbol {y}= \boldsymbol {\mathcal {A}}\boldsymbol{X}^{\ast}\) be a noiseless set of observations. To recover X ∗ from y and \(\boldsymbol {\mathcal {A}}\), the (i+1)-th matrix estimate X(i+1) of Matrix ALPS II satisfies the following recursion:

where \(\alpha:= \frac{4\delta_{3k}}{1-\delta_{3k}} +(2\delta_{3k} + 2\delta_{4k})\frac{2\delta_{3k}}{1-\delta_{3k}} \). Moreover, solving the above second-order recurrence, the following inequality holds true:

for \(\rho:= \frac{\alpha(1+\tau_{i}) + \sqrt{\alpha^{2}(1+\tau_{i})^{2} + 4\alpha\tau_{i}}}{2}\).

Theorem 3 provides convergence rate behaviour proof for the case where τ i is constant ∀i. The more elaborate case where τ i follows the policy described in (26) is left as an open question for future work. To provide some insight for (28), for τ i =1/4, ∀i and τ i =1/2, ∀i, δ 4k <0.1187 and δ 4k <0.095 guarantee convergence in Algorithm 3, respectively. While the RIP requirements for memory-based Matrix ALPS II are more stringent than the schemes proposed in the previous section, it outperforms Algorithms 1 and 2. Figure 2 shows the acceleration achieved in Matrix ALPS II by using inexact projections \(\mathcal{P}_{\mathcal{U}}\). Using the proper projections (6)–(7), Fig. 3 shows acceleration in practice when using the adaptive momentum step size strategy: while a wide range of constant momentum step sizes leads to convergence, providing flexibility to select an appropriate τ i , adaptive τ i avoids this arbitrary τ i selection while further decreases the number of iterations needed for convergence in most cases.

Median error per iteration for various momentum step size policies and 10 Monte-Carlo repetitions. Here, n=1024, m=256, p=0.25n 2, and rank k=40. We use permuted and subsampled noiselets for the linear map \(\boldsymbol {\mathcal {A}}\). In brackets, we present the median time for convergence in seconds

8 Accelerating Matrix ALPS: ϵ-Approximation of SVD via Column Subset Selection

A time-complexity bottleneck in the proposed schemes is the computation of the singular value decomposition to find subspaces that describe the unexplored information in matrix X ∗. Unfortunately, the computational cost of regular SVD for best subspace tracking is prohibitive for many applications.

Based on [44, 45], we can obtain randomized SVD approximations of a matrix X using column subset selection ideas: we compute a leverage score for each column that represents its “significance”. In particular, we define a probability distribution that weights each column depending on the amount of information they contain; usually, the distribution is related to the ℓ 2-norm of the columns. The main idea of this approach is to compute a surrogate rank-k matrix \(\mathcal{P}_{k}^{\epsilon}(\boldsymbol {X}) \) by subsampling the columns according to this distribution. It turns out that the total number of sampled columns is a function of the parameter ϵ. Moreover, [46, 47] proved that, given a target rank k and an approximation parameter ϵ, we can compute an ϵ-approximate rank-k matrix \(\mathcal{P}_{k}^{\epsilon}(\boldsymbol {X}) \) according to the following definition.

Definition 4

[ϵ-approximate low-rank projection] Let X be an arbitrary matrix. Then, \(\mathcal{P}_{k}^{\epsilon}(\boldsymbol {X}) \) projection provides a rank-k matrix approximation to X such that:

where \(\mathcal{P}_{k}(\boldsymbol {X}) \in \operatorname{arg\,min}_{\boldsymbol{Y}: \operatorname{rank}(\boldsymbol{Y}) \leq k} \Vert \boldsymbol {X}- \boldsymbol {Y}\Vert_{F} \).

For the following theoretical results, we assume the following condition on the sensing operator \(\boldsymbol {\mathcal {A}}\): \(\Vert \boldsymbol {\mathcal {A}}^{\ast} \boldsymbol{\beta} \Vert_{F} \leq\lambda\), \(\forall \boldsymbol{\beta} \in\mathbb{R}^{p}\) where λ>0. Using ϵ-approximation schemes to perform the Active subspace selection step, the following upper bound holds. The proof is provided in the Appendix:

Lemma 7

[ϵ-approximate active subspace expansion]

Let X(i) be the matrix estimate at the i-th iteration and let \(\mathcal{X}_{i} \) be a set of orthonormal, rank-1 matrices in \(\mathbb{R}^{m \times n}\) such that \(\mathcal{X}_{i} \leftarrow\mathcal{P}_{k}(\boldsymbol {X}(i)) \). Furthermore, let

be a set of orthonormal, rank-1 matrices that span rank-k subspace such that (29) is satisfied for \(\boldsymbol{X} := \mathcal{P}_{\mathcal{X}_{i}^{\bot}} \nabla f(\boldsymbol {X}(i)) \). Then, at each iteration, the Active Subspace Expansion step in Algorithms 1 and 2 captures information contained in the true matrix X ∗, such that:

where \(\mathcal{S}_{i} \leftarrow\mathcal{X}_{i} \cup\mathcal {D}_{i}^{\epsilon} \) and \(\mathcal{X}^{\ast}\leftarrow\mathcal {P}_{k}(\boldsymbol{X}^{\ast}) \).

Furthermore, to prove the following theorems, we extend Lemma 10, provided in the Appendix, as follows. The proof easily follows from the proof of Lemma 10, using Definition 4:

Lemma 8

[ϵ-approximation rank-k subspace selection] Let V(i) be a rank-2k proxy matrix in the subspace spanned by \(\mathcal{S}_{i} \) and let \(\widehat{\boldsymbol{W}}(i) \leftarrow \mathcal{P}_{k}^{\epsilon}(\boldsymbol{V}(i)) \) denote the rank-k ϵ-approxi- mation to V(i), according to (5). Then:

where \(\boldsymbol{W}(i) \leftarrow\mathcal{P}_{k}(\boldsymbol {V}(i)) \).

8.1 Matrix ALPS I Using ϵ-Approximate Low-Rank Projection via Column Subset Selection

Using ϵ-approximate SVD in Matrix ALPS I, the following iteration invariant theorem holds:

Theorem 4

[Iteration invariant with ϵ-approximate projections for Matrix ALPS I]

The (i+1)-th matrix estimate X(i+1) of Matrix ALPS I with ϵ-approximate projections \(\mathcal{D}_{i}^{\epsilon}\leftarrow\mathcal{P}_{k}^{\epsilon} ( \mathcal {P}_{\mathcal{X}_{i}^{\bot}} \nabla f(\boldsymbol {X}(i)) ) \) and \(\widehat {\boldsymbol{W}}(i) \leftarrow\mathcal{P}_{k}^{\epsilon}(\boldsymbol{V}(i)) \) in Algorithm 1 satisfies the following recursion:

where \(\rho:= (1 + \frac{3\delta_{k}}{1-\delta_{k}} ) (2 + \epsilon) [ ( 1+ \frac{\delta _{3k}}{1-\delta_{2k}})4\delta_{3k} + \frac{2\delta _{2k}}{1-\delta_{2k}} ]\), \(\beta:= (1 + \frac {3\delta_{k}}{1-\delta_{k}} ) (2 + \epsilon ) (1+ \frac{\delta_{3k}}{1-\delta_{2k}} )2\sqrt{\epsilon}\), and \(\gamma:= (1 + \frac{3\delta_{k}}{1-\delta_{k}} ) (2 + \epsilon) [ (1+ \frac{\delta _{3k}}{1-\delta_{2k}} )\sqrt{2(1+\delta_{2k})} + 2\frac{\sqrt{1+\delta_{2k}}}{1-\delta_{2k}} ]\).

Similar analysis can be conducted for the ADMiRA algorithm. To illustrate the impact of SVD ϵ-approximation on the signal reconstruction performance of the proposed methods, we replace the best rank-k projections in steps 1 and 5 of Algorithm 1 by the ϵ-approximation SVD algorithm, presented in [47]. In this paper, the column subset selection algorithm satisfies the following theorem:

Theorem 5

Let \(\boldsymbol {X}\in\mathbb{R}^{m \times n} \) be a signal of interest with arbitrary \(\operatorname{rank} < \min\lbrace m, n \rbrace\) and let X k represent the best rank-k approximation of X. After 2(k+1)(log(k+1)+1) passes over the data, the Linear Time Low-Rank Matrix Approximation algorithm in [47] computes a rank-k approximation \(\mathcal{P}_{k}^{\epsilon}(\boldsymbol {X}) \in\mathbb {R}^{m \times n} \) such that Definition 4 is satisfied with probability at least 3/4.

The proof is provided in [47]. In total, Linear Time Low-Rank Matrix Approximation algorithm [47] requires O(mn(k/ϵ+k 2logk)+(m+n)(k 2/ϵ 2+k 3logk/ϵ+k 4log2 k)) and O(min{m,n}(k/ϵ+k 2logk)) time and space complexity, respectively. However, while column subset selection methods such as [47] reduce the overall complexity of low-rank projections in theory, in practice this applies only in very high-dimensional settings. To strengthen this argument, in Fig. 4 we compare SVD-based Matrix ALPS II with Matrix ALPS II using the ϵ-approximate column subset selection method in [47]. We observe that the total number of iterations for convergence increases due to ϵ-approximate low-rank projections, as expected. Nevertheless, we observe that, on average, the column subset selection process [47] is computationally prohibitive compared to regular SVD due to the time overhead in the column selection procedure—fewer passes over the data are desirable in practice to tradeoff the increased number of iterations for convergence. In the next section, we present alternatives based on recent trends in randomized matrix decompositions and how we can use them in low-rank recovery.

Performance comparison using ϵ-approximation SVD [47] in Matrix ALPS II. m=n=256, p=0.4n 2, rank of X ∗ equals 2 and \(\boldsymbol {\mathcal {A}}\) constituted by permuted noiselets. The non-smoothness in the error curves is due to the extreme low rankness of X ∗ for this setting

9 Accelerating Matrix ALPS: SVD Approximation Using Randomized Matrix Decompositions

Finding low-cost SVD approximations to tackle the above complexity issues is a challenging task. Recent works on probabilistic methods for matrix approximation [26] provide a family of efficient approximate projections on the set of rank-deficient matrices with clear computational advantages over regular SVD computation in practice and attractive theoretical guarantees. In this work, we build on the low-cost, power-iteration subspace tracking scheme, described in Algorithms 4.3 and 4.4 in [26]. Our proposed algorithm is described in Algorithm 4.

The convergence guarantees of Algorithm 4 follow the same motions described in Sect. 8, where ϵ is a function of m,n,k and q.

10 Experiments

10.1 List of Algorithms

In the following experiments, we compare the following algorithms: (i) the Singular Value Projection (SVP) algorithm [3], a non-convex first-order projected gradient descent algorithm with constant step size selection (we study the case where μ=1), (ii) the inexact ALM algorithm [18] based on augmented Langrance multiplier method, (iii) the OptSpace algorithm [48], a gradient descent algorithm on the Grassmann manifold, (iv) the Grassmannian Rank-One Update Subspace Estimation (GROUSE) and the Grassmannian Robust Adaptive Subspace Tracking methods (GRASTA) [49, 50], two stochastic gradient descent algorithms that operate on the Grassmannian—moreover, to allay the impact of outliers in the subspace selection step, GRASTA incorporates the augmented Lagrangian of ℓ 1-norm loss function into the Grassmannian optimization framework, (v) the Riemannian Trust Region Matrix Completion algorithm (RTRMC) [51], a matrix completion method using first- and second-order Riemannian trust-region approaches, (vi) the Low rank Matrix Fitting algorithm (LMatFit) [52], a nonlinear successive over-relaxation algorithm and (vii) the algorithms Matrix ALPS I, ADMiRA [21], Matrix ALPS II and Randomized Matrix ALPS II with QR Factorization (referred shortly as Matrix ALPS II with QR) presented in this paper.

10.2 Implementation Details

To properly compare the algorithms in the above list, we preset a set of parameters that are common. We denote the ratio between the number of observed samples and the number of variables in X ∗ as SR:=p/(m⋅n) (sampling ratio). Furthermore, we reserve FR to represent the degree of freedom in a rank-k matrix to the number of observations—this corresponds to the following definition FR:=(k(m+n−k))/p. In most of the experiments, we fix the number of observable data p=0.3mn and vary the dimensions and the rank k of the matrix X ∗. This way, we create a wide range of different problem configurations with variable FR.

Most of the algorithms in comparison as well as the proposed schemes are implemented in Matlab. We note that the LMaFit software package contains parts implemented in C that reduce the per iteration computational time. This provides insights for further time savings in our schemes; we leave a fully optimized implementation of our algorithms as future work. In this paper, we mostly test cases where m≪n. Such settings can be easily found in real-world problems such as recommender systems (e.g. Netflix, Amazon, etc.) where the number of products, movies, etc. is much greater than the number of active users.

In all algorithms, we fix the maximum number of iterations to 500, unless otherwise stated. To solve a least squares problem over a restricted low-rank subspace, we use conjugate gradients with maximum number of iterations given by \(\rm{cg\_maxiter}:= 500\) and tolerance parameter \(\rm{cg\_tol} := 10^{-10}\). We use the same stopping criteria for the majority of algorithms under consideration:

where X(i), X(i−1) denote the current and the previous estimate of X ∗ and \(\rm{tol} := 5\times10^{-5}\). If this is not the case, we tweak the algorithms to minimize the total execution time and achieve similar reconstruction performance as the rest of the algorithms. For SVD calculations, we use the \(\rm{lansvd}\) implementation in PROPACK package [53]—moreover, all the algorithms in comparison use the same linear operators \(\boldsymbol {\mathcal {A}}\) and \(\boldsymbol {\mathcal {A}}^{\ast}\) for gradient and SVD calculations and conjugate-gradient least-squares minimizations. For fairness, we modified all the algorithms so that they exploit the true rank. Small deviations from the true rank result in relatively small degradation in terms of the reconstruction performance. In case the rank of X ∗ is unknown, one has to predict the dimension of the principal singular space. The authors in [3], based on ideas in [48], propose to compute singular values incrementally until a significant gap between singular values is found. Similar strategies can be found in [18] for the convex case.

In Matrix ALPS II and Matrix ALPS II with QR, we perform \(\mathcal{Q}_{i} \leftarrow\text{ortho}(\mathcal{X}_{i} \cup \mathcal{X}_{i+1})\) to construct a set of orthonormal rank-1 matrices that span the subspace, spanned by \(\mathcal{X}_{i} \cup\mathcal{X}_{i+1}\). While such operation can be implemented using factorization procedures (such as SVD or QR decompositions), in practice this degrades the time complexity of the algorithm substantially as the rank k and the problem dimensionality increase. In our implementations, we simply union the set of orthonormal rank-1 matrices, without further orthogonalization. Thus, we employ inexact projections for computational efficiency which results in faster convergence. Figure 5 shows the time overhead due to the additional orthogonalization process. We compare three algorithms: Matrix ALPS II (no orthogonalization step), Matrix ALPS II using SVD for orthogonalization and, Matrix ALPS II using QR for orthogonalization. In Figs. 5(a)–(b), we use subsampled and permuted noiselets for linear map \(\boldsymbol {\mathcal {A}}\) and in Fig. 5(c), we test the MC problem. In all the experimental cases considered in this work, we observed identical performance in terms of reconstruction accuracy for the three variants, as can be also seen in Fig. 5. To this end, for the rest of the paper, we use Matrix ALPS II where \(\mathcal {Q}_{i} \leftarrow\mathcal{X}_{i} \cup\mathcal{X}_{i+1}\).

10.3 Limitations of ∥⋅∥∗-Based Algorithms: A Toy Example

While nucluear norm heuristic is widely used in solving the low-rank minimization problem, [54] presents simple problem cases where convex, nuclear norm-based, algorithms fail in practice. Using the ∥⋅∥∗-norm in the objective function as the convex surrogate of the \(\operatorname{rank}(\cdot)\) metric might lead to a candidate set with multiple solutions, introducing ambiguity in the selection process. Borrowing the example in [54], we test the list of algorithms above on a toy problem setting that does not satisfy the rank-RIP. To this end, we design the following problem: let \(\boldsymbol{X}^{\ast}\in\mathbb{R}^{5 \times 4}\) be the matrix of interest with \(\operatorname{rank}(\boldsymbol{X}^{\ast}) = 2\), as shown in Fig. 6(a). We consider the case where we have access to X ∗ only through a subset of its entries, as shown in Fig. 6(b).

In Fig. 7, we present the reconstruction performance of various matrix completion solvers after 300 iterations. Although there are multiple solutions that induce the recovered matrix and have the same rank as X ∗, most of the algorithms in comparison reconstruct X ∗ successfully. We note that, in some cases, the inadequancy of an algorithm to reconstruct X ∗ is not because of the (relaxed) problem formulation but due to its fast—but inaccurate—implementation (fast convergence versus reconstruction accuracy tradeoff).

10.4 Synthetic Data

General affine rank minimization using noiselets: In this experiment, the set of observations \(\boldsymbol {y}\in\mathbb{R}^{p}\) satisfy:

Here, we use permuted and subsampled noiselets for the linear operator \(\boldsymbol {\mathcal {A}}\) [12]. The signal X ∗ is generated as the multiplication of two low-rank matrices, \(\mathbf{L} \in\mathbb {R}^{m \times k} \) and \(\mathbf{R} \in\mathbb{R}^{n \times k} \), such that X ∗=LR T and ∥X ∗∥ F =1. Both L and R have random independent and identically distributed (iid) Gaussian entries with zero mean and unit variance. In the noisy case, the additive noise term \(\boldsymbol {\varepsilon }\in\mathbb{R}^{p}\) contains entries drawn from a zero mean Gaussian distribution with ∥ε∥2∈{10−3,10−4}.

We compare the following algorithms: SVP, ADMiRA, Matrix ALPS I, Matrix ALPS II and Matrix ALPS II with QR for various problem configurations, as depicted in Table 1 (there is no available code with arbitrary sensing operators for the rest algorithms). In Table 1, we show the median values of reconstruction error, number of iterations and execution time over 50 Monte Carlo iterations. For all cases, we assume SR=0.3 and we set the maximum number of iterations to 500. Bold font denotes the fastest execution time. Furthermore, Fig. 8 illustrates the effectiveness of the algorithms for some representative problem configurations.

Low rank signal reconstruction using noiselet linear operator. The error curves are the median values across 50 Monte-Carlo realizations over each iteration. For all cases, we assume p=0.3mn. (a) m=256, n=512, k=10 and ∥ε∥2=10−3. (b) m=256, n=512, k=10 and ∥ε∥2=10−4. (c) m=256, n=512, k=20 and ∥ε∥2=0. (d) m=512, n=1024, k=30 and ∥ε∥2=0. (e) m=512, n=1024, k=40 and ∥ε∥2=0. (f) m=1024, n=2048, k=50 and ∥ε∥2=0

In Table 1, Matrix ALPS II and Matrix ALPS II with QR obtain accurate low-rank solutions much faster than the rest of the algorithms in comparison. In high dimensional settings, Matrix ALPS II with QR scales better as the problem dimensions increase, leading to faster convergence. Moreover, its execution time is at least a few orders of magnitude smaller compared to SVP, ADMiRA and Matrix ALPS I implementations.

Robust matrix completion: We design matrix completion problems in the following way. The signal of interest \(\boldsymbol {X}^{\ast}\in \mathbb{R}^{m \times n}\) is synthesized as a rank-k matrix, factorized as X ∗:=LR T with ∥X ∗∥ F =1 where \(\mathbf{L} \in\mathbb {R}^{m \times k} \) and \(\mathbf{R} \in\mathbb{R}^{n \times k} \) as defined above. In sequence, we subsample X ∗ by observing p=0.3mn entries, drawn uniformly at random. We denote the set of ordered pairs that represent the coordinates of the observable entries as Ω={(i,j):[X ∗] ij is known}⊆{1,…,m}×{1,…,n} and let \(\boldsymbol{\mathcal {A}}_{\varOmega}\) denote the linear operator (mask) that samples a matrix according to Ω. Then, the set of observations satisfies:

i.e., the known entries of X ∗ are structured as a vector \(\boldsymbol {y}\in\mathbb{R}^{p}\), disturbed by a dense noise vector \(\boldsymbol {\varepsilon }\in\mathbb{R}^{p}\) with fixed-energy, which is populated by iid zero-mean Gaussians.

To demonstrate the reconstruction accuracy and the convergence speeds, we generate various problem configurations (both noisy and noiseless settings), according to (35). The energy of the additive noise takes values ∥ε∥2∈{10−3,10−4}. All the algorithms are tested for the same signal-matrix-noise realizations. A summary of the results can be found in Tables 2, 3 and, 4 where we present the median values of reconstruction error, number of iterations and execution time over 50 Monte Carlo iterations. For all cases, we assume SR=0.3 and set the maximum number of iterations to 700. Bold font denotes the fastest execution time. Some convergence error curves for specific cases are illustrated in Figs. 9 and 10.

Low rank matrix recovery for the matrix completion problem. The error curves are the median values across 50 Monte-Carlo realizations over each iteration. For all cases, we assume p=0.3mn. (a) m=700, n=1000, k=30 and ∥ε∥2=0. (b) m=700, n=1000, k=50 and ∥ε∥2=10−3. (c) m=700, n=1000, k=110 and ∥ε∥2=0. (d) m=500, n=2000, k=10 and ∥ε∥2=0. (e) m=500, n=2000, k=50 and ∥ε∥2=10−3. (f) m=500, n=2000, k=80 and ∥ε∥2=10−4

In Table 2, LMaFit [52] implementation has the fastest convergence for small scale problem configuration where m=300 and n=600. We note that part of LMaFit implementation uses C code for acceleration. GROUSE [49] is a competitive low-rank recovery method with attractive execution times for the extreme low rank problem settings due to stochastic gradient descent techniques. Nevertheless, its execution time performance degrades significantly as we increase the rank of X ∗. Moreover, we observe how randomized low rank projections accelerate the convergence speed where Matrix ALPS II with QR converges faster than Matrix ALPS II. In Tables 3 and 4, we increase the problem dimensions. Here, Matrix ALPS II with QR has faster convergence for most of the cases and scales well as the problem size increases. We note that we do not exploit stochastic gradient descent techniques in the recovery process to accelerate convergence which is left for future work.

10.5 Real Data

We use real data images to highlight the reconstruction performance of the proposed schemes. To this end, we perform grayscale image denoising from an incomplete set of observed pixels—similar experiments can be found in [52]. Based on the matrix completion setting, we observe a limited number of pixels from the original image and perform a low rank approximation based only on the set of measurements. While the true underlying image might not be low-rank, we apply our solvers to obtain low-rank approximations.

Figures 11 and 12 depict the reconstruction results. In the first test case, we use a 512×512 grayscale image as shown in the top left corner of Fig. 11. For this case, we observe only the 35 % of the total number of pixels, randomly selected—a realization is depicted in the top right plot in Fig. 11. In sequel, we fix the desired rank to k=40. The best rank-40 approximation using SVD is shown in the top middle of Fig. 11 where the full set of pixels is observed. Given a fixed common tolerance and the same stopping criteria, Fig. 11 shows the recovery performance achieved by a range of algorithms under consideration for 10 Monte-Carlo realizations. We repeat the same experiment for the second image in Fig. 12. Here, the size of the image is 256×256, the desired rank is set to k=30 and we observe the 33 % of the image pixels. In contrast to the image denoising procedure above, we measure the reconstruction error of the computed solutions with respect to the best rank-30 approximation of the true image. In both cases, we note that Matrix ALPS II has a better phase transition performance as compared to the rest of the algorithms.

Reconstruction performance in image denoising settings. The image size is 256×256 and the desired rank is preset to k=30. We observe 33 % of the pixels of the best rank-30 approximation of the image. We depict the median reconstruction with respect to the best rank-30 approximation in dB over 10 Monte Carlo realizations

11 Discussion

In this paper, we present new strategies and review existing ones for hard thresholding methods to recover low-rank matrices from dimensionality reducing, linear projections. Our discussion revolves around four basic building blocks that exploit the problem structure to reduce computational complexity without sacrificing stability.

In theory, constant μ i selection schemes are accompanied with strong RIP constant conditions but empirical evidence reveal signal reconstruction vulnerabilities. While convergence derivations of adaptive schemes are characterized by weaker bounds, the performance gained by this choice in terms of convergence rate, is quite significant. Memory-based methods lead to convergence speed with (almost) no extra cost on the complexity of hard thresholding methods—we provide theoretical evidence for convergence for simple cases but more theoretical justification is needed to generalize this part as future work. Lastly, further estimate refinement over low rank subspaces using gradient update steps or pseudoinversion optimization techniques provides signal reconstruction efficacy, but more computational power is needed per iteration.

We connect ϵ-approximation low-rank revealing schemes with first-order gradient descent algorithms to solve general affine rank minimization problems; to the best of our knowledge, this is the first attempt to theoretically characterize the performance of iterative greedy algorithms with ϵ-approximation schemes. In all cases, experimental results illustrate the effectiveness of the proposed schemes on different problem configurations.

Notes

The distinction between \(\mathcal{P}_{\mathcal{S}} \) and \(\mathcal {P}_{k} \) for k positive integer is apparent from context.

In the case of multiple identical singular values, any ties are lexicographically dissolved.

From a different perspective and for a different problem case, similar ideas have been used in [18].

We can move between these two cases by a simple transpose of the problem.

While such operation has O(max{m 2 n,mn 2}) complexity, each application of \(\mathcal{P}_{\mathcal{S}} \boldsymbol {X}\) requires three matrix-matrix multiplications. To reduce such computational cost, we relax this operation in Sect. 10 where in practice we use only \(\mathcal{P}_{\mathcal{U}}\) that needs one matrix-matrix multiplication.

References

Baraniuk, R.G., Cevher, V., Wakin, M.B.: Low-dimensional models for dimensionality reduction and signal recovery: a geometric perspective. Proc. IEEE 98(6), 959–971 (2010)

Candès, E.J., Recht, B.: Exact matrix completion via convex optimization. Found. Comput. Math. 9(6), 717–772 (2009)

Meka, R., Jain, P., Dhillon, I.S.: Guaranteed rank minimization via singular value projection. In: NIPS Workshop on Discrete Optimization in Machine Learning (2010)

Tyagi, H., Cevher, V.: Learning ridge functions with randomized sampling in high dimensions. In: IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 2025–2028. IEEE Press, New York (2012)

Tyagi, H., Cevher, V.: Learning non-parametric basis independent models from point queries via low-rank methods. Technical report, EPFL (2012)

Liu, Y.K.: Universal low-rank matrix recovery from Pauli measurements (2011)

Tyagi, H., Cevher, V.: Active learning of multi-index function models. In: Advances in Neural Information Processing Systems, vol. 25, pp. 1475–1483 (2012)

Candes, E.J., Li, X.: Solving quadratic equations via phaselift when there are about as many equations as unknowns (2012). Preprint arXiv:1208.6247

Bennett, J., Lanning, S.: The netflix prize. In: KDD Cup and Workshop in Conjunction with KDD (2007)

Candes, E.J., Li, X., Ma, Y., Wright, J.: Robust principal component analysis? J. ACM 58(3) (2011)

Kyrillidis, A., Cevher, V.: Matrix alps: Accelerated low rank and sparse matrix reconstruction. Technical report, EPFL (2012)

Waters, A.E., Sankaranarayanan, A.C., Baraniuk, R.G.: Sparcs: recovering low-rank and sparse matrices from compressive measurements. In: NIPS (2011)

Fazel, M., Recht, B., Parrilo, P.A.: Guaranteed minimum rank solutions to linear matrix equations via nuclear norm minimization. SIAM Rev. 52(3), 471–501 (2010)

Liu, Z., Vandenberghe, L.: Interior-point method for nuclear norm approximation with application to system identification. SIAM J. Matrix Anal. Appl. 31, 1235–1256 (2009)

Mohan, K., Fazel, M.: Reweighted nuclear norm minimization with application to system identification. In: American Control Conference (ACC). IEEE Press, New York (2010)

Cai, J.-F., Candès, E.J., Shen, Z.: A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 20, 1956–1982 (2010)

Recht, B., Re, C.: Parallel stochastic gradient algorithms for large-scale matrix completion. Preprint (2011)

Lin, Z., Chen, M., Ma, Y.: The augmented Lagrange multiplier method for exact recovery of corrupted low-rank matrices (2010). preprint arXiv:1009.5055

Wright, J., Wu, L., Chen, M., Lin, Z., Ganesh, A., Ma, Y.: Fast convex optimization algorithms for exact recovery of a corrupted low-rank matrix. UIUC Technical Report UILU-ENG-09-2214

Natarajan, B.K.: Sparse approximate solutions to linear systems. SIAM J. Comput. 24(2), 227–234 (1995)

Lee, K., Bresler, Y.: Admira: atomic decomposition for minimum rank approximation. IEEE Trans. Inf. Theory 56(9), 4402–4416 (2010)

Goldfarb, D., Ma, S.: Convergence of fixed-point continuation algorithms for matrix rank minimization. Found. Comput. Math. 11, 183–210 (2011)

Beck, A., Teboulle, M.: A linearly convergent algorithm for solving a class of nonconvex/affine feasibility problems. In: Fixed-Point Algorithms for Inverse Problems in Science and Engineering, pp. 33–48 (2011)

Kyrillidis, A., Cevher, V.: Recipes on hard thresholding methods. In: Computational Advances in Multi-Sensor Adaptive Processing, Dec. 2011

Kyrillidis, A., Cevher, V.: Combinatorial selection and least absolute shrinkage via the Clash algorithm. In: IEEE International Symposium on Information Theory, July 2012

Halko, N., Martinsson, P.G., Tropp, J.A.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53, 217–288 (2011)

Bertsekas, D.: Nonlinear Programming. Athena Scientific, Nashua (1995)

Horn, R.A., Johnson, C.R.: Matrix Analysis. Cambridge Univ. Press, Cambridge (1990)

Cohen, A., Dahmen, W., DeVore, R.: Compressed sensing and best k-term approximation. J. Am. Math. Soc. 22(1), 211–231 (2009)

Tropp, J.A.: Greed is good: algorithmic results for sparse approximation. IEEE Trans. Inf. Theory 50(10), 2231–2242 (2004)

Cevher, V.: An alps view of sparse recovery. In: IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5808–5811. IEEE Press, New York (2011)

Needell, D., Tropp, J.A.: Cosamp: iterative signal recovery from incomplete and inaccurate samples. Appl. Comput. Harmon. Anal. 26(3), 301–321 (2009)

Dai, W., Milenkovic, O.: Subspace pursuit for compressive sensing signal reconstruction. IEEE Trans. Inf. Theory 55, 2230–2249 (2009)

Foucart, S.: Hard thresholding pursuit: an algorithm for compressed sensing. SIAM J. Numer. Anal. 49(6), 2543–2563 (2011)

Blumensath, T., Davies, M.E.: Iterative hard thresholding for compressed sensing. Appl. Comput. Harmon. Anal. 27(3), 265–274 (2009)

Garg, R., Khandekar, R.: Gradient descent with sparsification: an iterative algorithm for sparse recovery with restricted isometry property. In: ICML. ACM Press, New York (2009)

Blumensath, T., Davies, M.E.: Normalized iterative hard thresholding: guaranteed stability and performance. IEEE J. Sel. Top. Signal Process. 4(2), 298–309 (2010)

Blumensath, T.: Accelerated iterative hard thresholding. Signal Process. 92, 752–756 (2012)

Tanner, J., Wei, K.: Normalized iterative hard thresholding for matrix completion. Preprint (2012)

Coifman, R., Geshwind, F., Meyer, Y.: Noiselets. Appl. Comput. Harmon. Anal. 10(1), 27–44 (2001)

Foucart, S.: Sparse recovery algorithms: sufficient conditions in terms of restricted isometry constants. In: Proceedings of the 13th International Conference on Approximation Theory (2010)

Nesterov, Y.: Gradient methods for minimizing composite objective function. core discussion papers 2007076, université catholique de louvain. Center for Operations Research and Econometrics (CORE) (2007)

Nesterov, Y.: Introductory Lectures on Convex Optimization. Kluwer Academic, Dordrecht (1996)

Drineas, P., Frieze, A., Kannan, R., Vempala, S., Vinay, V.: Clustering large graphs via the singular value decomposition. Mach. Learn. 56(1), 9–33 (2004)

Drineas, P., Kannan, R., Mahoney, M.W.: Fast Monte Carlo algorithms for matrices ii: computing a low-rank approximation to a matrix. SIAM J. Comput. 36, 158–183 (2006)

Deshpande, A., Rademacher, L., Vempala, S., Wang, G.: Matrix approximation and projective clustering via volume sampling. In: Proceedings of the Seventeenth Annual ACM-SIAM Symposium on Discrete Algorithm, SODA ’06, New York, NY, USA, pp. 1117–1126. ACM Press, New York (2006)

Deshpande, A., Vempala, S.: Adaptive sampling and fast low-rank matrix approximation. Electron. Colloq. Comput. Complex. 13, 042 (2006)

Keshavan, R.H., Montanari, A., Oh, S.: Matrix completion from a few entries. IEEE Trans. Inf. Theory 56(6), 2980–2998 (2010)

Balzano, L., Nowak, R., Recht, B.: Online identification and tracking of subspaces from highly incomplete information. In: 48th Annual Allerton Conference on Communication, Control, and Computing (Allerton), pp. 704–711. IEEE Press, New York (2010)

He, J., Balzano, L., Lui, J.C.S.: Online robust subspace tracking from partial information (2011). arXiv:1109.3827

Boumal, N., Absil, P.A.: Rtrmc: a Riemannian trust-region method for low-rank matrix completion. In: NIPS (2011)

Wen, Z., Yin, W., Zhang, Y.: Solving a low-rank factorization model for matrix completion by a nonlinear successive over-relaxation algorithm. Rice University CAAM Technical Report TR10-07 (2010) Submitted

Larsen, R.M.: Propack: Software for large and sparse svd calculations. http://soi.stanford.edu/~rmunk/PROPACK

Shi, X., Yu, P.S.: Limitations of matrix completion via trace norm minimization. ACM SIGKDD Explor. Newsl. 12(2), 16–20 (2011)

Acknowledgements

This work was supported in part by the European Commission under Grant MIRG-268398, ERC Future Proof, SNF 200021-132548 and DARPA KeCoM program #11-DARPA-1055. VC also would like to acknowledge Rice University for his Faculty Fellowship.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

Remark 1

Let \(\boldsymbol {X}\in\mathbb{R}^{m \times n}\) with SVD: X=UΣV T, and \(\boldsymbol{Y} \in\mathbb{R}^{m \times n}\) with SVD: \(\boldsymbol {Y} = \widetilde{\boldsymbol{U}} \widetilde{\boldsymbol{\varSigma}} \widetilde{\boldsymbol{V}}^{T}\). Assume two sets: (i) \(\mathcal{S}_{1} = \lbrace\boldsymbol{u}_{i}\boldsymbol{u}_{i}^{T}: i \in\mathcal {I}_{1} \rbrace\) where u i is the i-th singular vector of X and \(\mathcal{I}_{1} \subseteq\lbrace1, \dots,\allowbreak \operatorname{rank}(\boldsymbol {X}) \rbrace\) and, (ii) \(\mathcal{S}_{2} = \lbrace \boldsymbol{u}_{i}\boldsymbol{u}_{i}^{T}, \tilde{\boldsymbol{u}_{j}} \tilde {\boldsymbol{u}_{j}}^{T}: i \in\mathcal{I}_{2},~j \in\mathcal{I}_{3} \rbrace\) where \(\tilde{\boldsymbol{u}_{i}} \) is the i-th singular vector of Y, \(\mathcal{I}_{1} \subseteq\mathcal{I}_{2} \subseteq\lbrace1, \dots, \operatorname{rank}(\boldsymbol {X}) \rbrace\) and, \(\mathcal{I}_{3} \subseteq\lbrace1, \dots, \operatorname{rank}(\boldsymbol {Y}) \rbrace\). We observe that the subspaces defined by \(\boldsymbol {u}_{i}\boldsymbol{u}_{i}^{T}\) and \(\tilde{\boldsymbol{u}_{j}} \tilde {\boldsymbol{u}_{j}}^{T}\) are not necessarily orthogonal.

To this end, let \(\widehat{\mathcal{S}}_{2} = \text{ortho}(\mathcal {S}_{2})\); this operation can be easily computed via SVD. Then, the following commutativity property holds true for any matrix \(\boldsymbol {W} \in\mathbb{R}^{m \times n} \):

1.1 A.1 Proof of Lemma 6

Given \(\mathcal{X}^{\ast}\leftarrow\mathcal{P}_{k}(\boldsymbol {X}^{\ast}) \) using SVD factorization, we define the following quantities: \(\mathcal{S}_{i} \leftarrow\mathcal{X}_{i} \cup\mathcal{D}_{i}\), \(\mathcal {S}^{\ast}_{i} \leftarrow\text{ortho} (\mathcal{X}_{i} \cup\mathcal {X}^{\ast}) \). Then, given the structure of the sets \(\mathcal {S}_{i}\) and \(\mathcal{S}_{i}^{\ast}\)

and

Since the subspace defined in \(\mathcal{D}_{i} \) is the best rank-k subspace, orthogonal to the subspace spanned by \(\mathcal {X}_{i} \), the following holds true: