Abstract

Real-time Action Recognition (ActRgn) of assembly workers can help manufacturers correct human mistakes promptly and improve task performance. Yet, reliably recognizing worker actions in assembly is challenging because such actions are complex and fine-grained, and workers are heterogeneous. This paper proposes an individualized system of Convolutional Neural Networks (CNNs) for action recognition using human skeletal data. The system comprises six 1-channel CNN classifiers, each built with one unique posture-related feature vector extracted from the time series skeletal data. The six classifiers are then adapted to any new worker through transfer learning and iterative boosting. After that, an individualized fusion method named Weighted Average of Selected Classifiers (WASC) integrates the adapted classifiers into an ActRgn system that outperforms its constituent classifiers. A stream data analysis algorithm further differentiates assembly actions from the background and corrects misclassifications based on the temporal relationship of the actions in assembly. Compared to the CNN classifier directly built with the skeletal data, the proposed system improves the accuracy of action recognition by 28%, reaching 94% accuracy on the tested group of new workers. The study also builds a foundation for immediate extensions that adapt the ActRgn system to current workers performing new tasks and, then, to new workers performing new tasks.

Introduction

Assembly is a process of coupling multiple workpieces together to produce a product of full functionality. It accounts for 20% of total production cost and 50% of total production time. In the automotive industry, the direct labor cost spent on assembly ranges from 20 to 70% (ElMaraghy and ElMaraghy 2016). Therefore, the efficiency and quality of assembly are critical to manufacturers. The ability to recognize actions of assembly workers in real time provides an opportunity to correct human mistakes promptly and help workers operate effectively based on their particular needs (Zhou et al. 2013; Wang et al. 2021). Production innovations are occurring faster than ever, so manufacturing workers need to frequently learn new methods and skills. While vigorous efforts have been devoted to human action recognition (ActRgn) for various purposes (e.g., Pham et al. 2018; Moniruzzaman et al. 2021), action recognition for manufacturing assembly is rather limited for a few reasons. Such actions are complex, involve many fine motions, require interactions with various tools and parts, and have between-action similarity. Recognizing the details of such actions with high accuracy is challenging. The lack of publicly available datasets on worker actions in manufacturing assembly is another obstacle facing the manufacturing research community (Al-Amin et al. 2020b).

RGB image-based action recognition has some limitations such as occlusion, sensitivity to luminosity, and privacy concerns (Chen et al. 2017). Therefore, wearable sensors have been predominantly used to recognize actions in manufacturing (Stiefmeier et al. 2008; Tao et al. 2018; Kong et al. 2019). To capture the movement of different body parts, a worker may need to wear multiple wearable devices on the body. This can cause discomfort and pressure to workers in some circumstances, negatively impacting their productivity. Some depth sensors such as the Microsoft Kinect can extract the 3D skeletal data of humans from the depth images they capture, thus being an alternative when RGB and wearable sensors are limited for use. Skeletal data provide a lower-dimensional representation of actions than other sensor data, which makes action recognition faster, more computationally efficient, and better suited to real-time inference (Pham et al. 2018). The spatially distributed body joints of a worker indicate the posture of the worker. The temporal dynamics of the posture contain features of worker actions (Du et al. 2015). Various approaches were proposed to capture human actions from 3D skeletal data, including hidden Markov models (Rude et al. 2018), dividing the posture into body parts and encoding them into images (Khaire et al. 2018), using the coordinates of body joints directly (Pham et al. 2018), and extracting statistical features from skeletal data (Shen et al. 2020). These methods nonetheless neglect some useful information that can be extracted from skeletal data.

Following the success of Convolutional Neural Network (CNN) in image analysis (Krizhevsky et al. 2012), skeletal data are presented as images and processed by ActRgn CNNs (e.g., Al-Amin et al. 2019; Li et al. 2017; Kamel et al. 2019). Compared to Multilayer Perceptrons (MLP) and Recurrent Neural Networks (RNN), CNN can automatically extract discriminative features of subtle, complex actions from the spatial and temporal relations of body joints, which can be obtained from the time series skeletal data (Al-Amin et al. 2020b). Thus, CNN is an attractive candidate classifier for skeletal data-based action recognition. Yet, challenges are also identified from those pioneer studies. First, how to translate the time series skeletal data into a set of images that capture temporal and spatial cues for action recognition? Second, how to address the negative impact of human heterogeneity on the ActRgn performance, including both the within-subject and the between-subject variances? Third, how to fuse multiple features or classifiers that can be developed from the skeletal data to provide more reliable ActRgn performance? Last but not least, how to address the limitation of CNN classifiers in analyzing the stream data in real time? Answers to these questions will advance the knowledge of skeletal data-based human action recognition in manufacturing assembly.

To address the above-discussed challenges, this paper proposes an individualized system of skeletal data-based CNN classifiers for recognizing worker actions in manufacturing assembly. Efforts to create this system are the development and integration of the following capabilities:

- System architecting that involves extracting feature images from the time series skeletal data to build independent, complementary constituent classifiers and fusing them as an ActRgn system;

- A method to adapt ActRgn classifiers to individual workers, which addresses the issues of between-subject heterogeneity and within-subject variance;

- A fusion method named Weighted Average of Selected Classifiers (WASC) which maximizes the ActRgn performance at the system’s level for any individual worker;

- A data analysis algorithm that improves the ActRgn result from analyzing untrimmed stream data.

The remainder of the paper is organized as follows. The Literature section summarizes the related literature, followed by an elaborated description of the proposed approach to developing the ActRgn system in the Methodology section. Then, an illustrative example and the assessment of the proposed ActRgn system are presented. The conclusion from this study and future work are summarized at the end.

The literature

The prior work on image analysis using CNN, transfer learning, classifier fusion, and temporal coherence information builds the foundation for the proposed ActRgn system. Gaps in the literature inspire the technical approach to creating the system.

CNN is a feed-forward neural network that works well in image analysis. When using it for action recognition, spatial features of the skeleton in actions are presented as images and a CNN is trained to classify the images (Khaire et al. 2018). The skeleton optical spectra (Li et al. 2019) and the graph convolution (Hou et al. 2018) were proposed for learning dynamic features of the skeleton in actions. Moreover, to incorporate both the spatial and temporal information of actions, a multi-task learning network for action recognition was also developed to jointly process images in parallel (Ke et al. 2017). Long Short-Term Memory (LSTM) is an advanced version of recurrent neural networks, which models long-term dependencies with memory cells. It has been applied to skeletal-based action recognition as well (Han et al. 2018). The body joints in each frame are of unequal importance, and so are the frames in a sequence. Therefore, weights are automatically assigned to dominant joints and frames (Song et al. 2017; Liu et al. 2017). CNN and LSTM were also used simultaneously through score fusion (Li et al. 2017; Nunez et al. 2018). In this approach, spatial domain features and temporal domain features can be extracted and fed to the CNN and the LSTM, respectively. If the input to a CNN classifier captures the spatio-temporal features of the skeleton, the CNN by itself can learn features of actions well. This approach is simple, but not explored thoroughly in the literature.

Knowledge transfer is critical for action recognition due to the inevitable differences between the source and the target population of workers (Cook et al. 2013). Zhao et al. (2011) proposed a transfer learning algorithm named TransEMDT that integrates the decision tree with the k-means clustering algorithm to achieve personalized activity recognition. The use of transfer learning coupled with deep learning for action recognition is still limited. Recently, Al-Amin et al. (2020b) transferred an ActRgn CNN to new subjects through fine-tuning the model with a small amount of data from new subjects. While transfer learning is shown to work, room for improvement remains.

Classical methods of classifier fusion such as majority voting, Naive Bayes, Dempster-Shafer theory, average fusion, and random forests usually treat classifiers equally. Therefore, they overlook the strengths and weaknesses of different classifiers. To overcome this limitation, classifiers may be assigned weights based on their abilities in a variety of approaches. For instance, Ward et al. (2006) ranked classifiers according to the highest rank, Borda count, and logistic regression. Hierarchical fusion is another approach (Banos et al. 2013), and Guo et al. (2019) developed it based on the entropy weight. Weighted linear opinion pools and weighted logarithmic opinion pools were also implemented for the classifier fusion (Guo et al. 2012). Weights for classifiers are determined in various ways, including genetic algorithms (Chernbumroong et al. 2015) and classifier performance measurements such as the overall accuracy of classifiers (Chung et al. 2019) and class-level recall values (Tsanousa et al. 2019). However, the strengths and weaknesses of individual classifiers are not consistent among workers.

The temporal information of objects in successive images can help improve the object detection from video data. Examples include the use of temporal and contextual information from tubelets obtained from videos (Kang et al. 2018), the propagation of deep feature maps from key frames to other frames (Zhu et al. 2017b), and the flow-guided feature aggregation that integrates features from nearby frames (Zhu et al. 2017a). Likewise, the temporal coherence information of sequential actions in assembly can be used to improve the ActRgn accuracy. This method has not been thoroughly explored.

Methodology

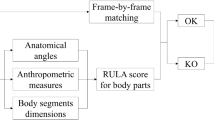

The proposed approach to creating the ActRgn system is illustrated in Fig. 1. First, Skeletal Feature Images (SFIs) extracted from a group of existing workers are used to train a set of CNN classifiers that each captures a unique aspect of assembly actions. Then, these classifiers are refined with the SFIs of a new worker to adapt to that worker through transfer learning and iterative boosting. After that, the adapted classifiers are fused as a system for recognizing the actions of the new worker. The stream data analysis algorithm corrects possible mistakes that the ActRgn system made in analyzing the stream data in real-time. The description of the symbols used in this paper is presented in Table 1.

Data preparation

The study collects data from two mutually exclusive groups of subjects. The first group is a sample of workers currently assigned to perform the assembly operation of the study. The second group is a sample of new workers who will be joining the assembly line to perform this operation. To capture the within-subject variance in the operation, subjects are asked to repeat the operation during the data collection. Each time of operation by a subject is considered as one experiment.

Microsoft Kinect, an infrared light sensor, is used for collecting data of individual workers in assembly operations at a frequency of 30 frames per second. The Kinect outputs the time series 3D coordinates of human body joints in a Euclidean space. The number of joints tracked in this study is J, and each joint has a unique index label, as Fig. 2 illustrates. Let i be the index of frames captured sequentially over time and j be the index of body joints. In any frame i, \(\mathbf {b}_{j,i}=(x_{j,i},y_{j,i},z_{j,i})\) represents the 3D coordinates of joint j.

The assembly operation involves K classes of sequential actions, indexed by k. Therefore, the time series of body joint coordinates collected from each experiment is trimmed into K sequential segments, each pertaining to one and only one action class. Then, a sliding window with a length of W frames moves along the timeline with a stride of \(\delta W\) frames to extract skeletal data pieces, named Skeletal Data Images (SDIs), from each time series segment. SDIs extracted from a segment are labeled with the action class of the segment. SDIs are of size \(W\times J\times 3\) since each contains the 3D coordinates of the J joints over W successive frames. The selection of the window size W is crucial. SDIs with a very short time span lack sufficient information to capture features of the performed action. Those with a very long time span may contain data of more than one action. \(\delta \) is chosen to be a positive fraction less than 1 so that two successive SDIs extracted from a segment overlap with each other to capture their temporal connectivity.
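The sliding-window extraction can be sketched in a few lines of Python. This is a minimal illustration rather than the study's code: the function name `extract_sdis`, the list-of-frames layout, and the rounding of the stride \(\delta W\) to a whole number of frames are all assumptions made for the example.

```python
def extract_sdis(segment, window, delta):
    """Slice one trimmed action segment into overlapping SDIs.

    segment: list of frames; each frame is a list of J (x, y, z) tuples.
    window:  W, the number of frames per SDI.
    delta:   stride as a fraction of W (0 < delta <= 1).
    """
    # Assumption: the stride delta * W is rounded to a whole number of frames.
    stride = max(1, round(delta * window))
    return [segment[start:start + window]
            for start in range(0, len(segment) - window + 1, stride)]


# Toy segment: 90 frames, 2 joints, coordinates derived from the frame index.
segment = [[(float(i), 0.0, 0.0), (0.0, float(i), 0.0)] for i in range(90)]
sdis = extract_sdis(segment, window=30, delta=0.5)
print(len(sdis), len(sdis[0]), len(sdis[0][0]))  # 5 30 2
```

Each returned SDI holds W frames of J joints with three coordinates, matching the \(W\times J\times 3\) size; with \(\delta <1\), consecutive SDIs share frames.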

Feature extraction from SDIs

This study calculates two categories of geometric features to capture the posture of workers in assembly. Derivatives of the features are further calculated to capture the temporal dynamics of the posture. Normalization is taken in calculating the features to make them invariant to the variations of the location and view of the Kinect in data collection and to the varied body size of subjects.

Geometric features of posture

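Given J joints, \(L=\left( {\begin{array}{c}J\\ 2\end{array}}\right) \) joint-to-joint distances can be calculated, indexed by l. The distance features recorded over time form a time series of feature vectors,

\[\mathbf {D}_i=\left[ d_{1,i},\dots ,d_{L,i}\right] , \qquad (1)\]

and the \(l^{\text {th}}\) distance, \(d_{l,i}\), is calculated as

\[d_{l,i}=\frac{\Vert \mathbf {b}_{j,i}-\mathbf {b}_{j',i}\Vert }{\bar{d}_i}, \qquad (2)\]

where \(j=\lceil l/J\rceil \) and \(j'=l-\lfloor l/J\rfloor J\). \(\bar{d}_i\) in Eq. (2) is the sum of three distances: the left shoulder (#11) to the right shoulder (#6), the spine shoulder (#3) to the spine mid (#4), and the spine mid to the spine base (#5),

\[\bar{d}_i=\Vert \mathbf {b}_{11,i}-\mathbf {b}_{6,i}\Vert +\Vert \mathbf {b}_{3,i}-\mathbf {b}_{4,i}\Vert +\Vert \mathbf {b}_{4,i}-\mathbf {b}_{5,i}\Vert , \qquad (3)\]

which is used for normalizing the distance features.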
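As a rough illustration of the normalized distance features, the sketch below computes all pairwise joint distances in one frame and divides them by the sum of the three reference distances named above. The function name `distance_features`, the dictionary layout, and the ordering of joint pairs are assumptions for this example; the pair ordering in particular does not reproduce the paper's index mapping from l to \((j,j')\).

```python
import math
from itertools import combinations

# Reference joint pairs from the text: shoulders (#11, #6),
# spine shoulder to spine mid (#3, #4), spine mid to spine base (#4, #5).
REF_PAIRS = [(11, 6), (3, 4), (4, 5)]


def distance_features(frame, ref_pairs=REF_PAIRS):
    """Normalized joint-to-joint distances for a single frame.

    frame: dict mapping joint label -> (x, y, z).
    Pairs are enumerated in sorted-label order (an assumption).
    """
    d_bar = sum(math.dist(frame[a], frame[b]) for a, b in ref_pairs)
    labels = sorted(frame)
    return [math.dist(frame[a], frame[b]) / d_bar
            for a, b in combinations(labels, 2)]


# Toy frame containing only the five joints used by the normalizer.
frame = {3: (0, 2, 0), 4: (0, 1, 0), 5: (0, 0, 0), 6: (1, 2, 0), 11: (-1, 2, 0)}
features = distance_features(frame)
print(len(features), features[0])
```

Because every distance is divided by a body-size reference, uniformly scaling the skeleton leaves the features unchanged, which is the purpose of the normalization.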

Angle features are also calculated to supplement distance features. With J body joints, \(\tilde{L}=J\left( {\begin{array}{c}J-1\\ 2\end{array}}\right) \) angle features can be calculated, indexed by \(\tilde{l}\). The angle features recorded over time form a time series of feature vector,

and the \(\tilde{l}^{\text {th}}\) angle, \(a_{\tilde{l},i}\), is calculated as

where \(\mathbf {b}_{j,i}\), \(\mathbf {b}_{j',i}\), and \(\mathbf {b}_{j'',i}\) are three different body joints in frame i. \((j, j', j'')\rightarrow \tilde{l}\) is a bijection.
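One plausible way to compute such angle features is sketched below. It assumes that each angle is measured at one joint (the vertex) between the directions to two other joints, and that it is obtained from the arccosine of the normalized dot product; the function names and the enumeration order are hypothetical, and any normalization the study applies to the angles is not reproduced.

```python
import math
from itertools import combinations


def angle_at(vertex, p, q):
    """Angle in radians at `vertex` between the directions to p and q.

    Assumes the three points are distinct, so neither vector has zero length.
    """
    u = [a - b for a, b in zip(p, vertex)]
    v = [a - b for a, b in zip(q, vertex)]
    cos = sum(a * b for a, b in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp against rounding error


def angle_features(frame):
    """All J * C(J-1, 2) angles for one frame (dict: joint label -> (x, y, z))."""
    labels = sorted(frame)
    feats = []
    for j in labels:
        others = [label for label in labels if label != j]
        for j1, j2 in combinations(others, 2):
            feats.append(angle_at(frame[j], frame[j1], frame[j2]))
    return feats


# Toy frame: a right triangle, so the three angles sum to pi.
triangle = {1: (0, 0, 0), 2: (1, 0, 0), 3: (0, 1, 0)}
angles = angle_features(triangle)
print(len(angles))  # 3
```

Choosing one vertex joint and an unordered pair of other joints gives exactly \(J\left( {\begin{array}{c}J-1\\ 2\end{array}}\right) \) angles, which matches the count stated in the text.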

Temporal dynamics of the geometric features

The study calculates the first-order and second-order derivatives of the distance features to capture the temporal dynamics (linear speed and acceleration) of any subject’s posture in the operation. The speed of distance change is approximated by the first-order difference equations:

where \(i_s\) and \(i_e (=i_s+W-1)\) are the indices of the first frame and the last frame of any SDI, and \(\varDelta i\) is the interval of frames for calculating changes in distance features.

The acceleration of distance change is approximated by the second-order difference equations:

Angle related dynamic feature vectors, \(\mathbf{A'}_{i}\) and \(\mathbf{A''}_i\), are similarly calculated. Therefore, six Skeletal Feature Images (SFIs) are calculated from each SDI, and each SFI is one feature vector that spans W frames. The width of SFIs is equal to W and the height, denoted by H, is the dimension of the feature vector. H is equal to L for the distance related feature vectors and \(\tilde{L}\) for the angle related feature vectors.
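The difference-based dynamics can be illustrated with a small sketch. The exact difference equations are not reproduced here; the version below is an assumption that uses a forward difference over `step` frames (standing in for \(\varDelta i\)) wherever the later frame lies inside the SDI and a backward difference near the end, so that the output still spans all W frames. The function names are hypothetical.

```python
def first_difference(series, step):
    """Approximate the rate of change of one feature across the frames of an SDI.

    series: values of one feature over W frames (W > step).
    step:   frame interval used for the difference.
    """
    last = len(series) - 1
    out = []
    for i in range(len(series)):
        if i + step <= last:
            out.append((series[i + step] - series[i]) / step)  # forward difference
        else:
            out.append((series[i] - series[i - step]) / step)  # backward difference
    return out


def second_difference(series, step):
    """Approximate acceleration by differencing the first difference again."""
    return first_difference(first_difference(series, step), step)


# Toy feature that grows quadratically with the frame index.
d = [i * i for i in range(6)]
print(first_difference(d, 1))   # [1.0, 3.0, 5.0, 7.0, 9.0, 9.0]
print(second_difference(d, 1))  # [2.0, 2.0, 2.0, 2.0, 0.0, 0.0]
```

Applying the same two functions to every distance and angle feature would yield the four dynamic feature vectors, each with the same height and width as its static counterpart.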

Training CNNs for action recognition

This study trains six CNNs for recognizing worker actions in assembly. Each reads one of the six SFIs extracted from an SDI to predict the action class of the SDI. The six CNNs share the same architecture illustrated in Fig. 3, which is composed of three blocks in sequence. Each of the first two blocks contains two convolutional layers followed by a max-pooling layer. The kernel size (Kr), stride size (St), padding size (Pd), and the number of filters (Ft) for each convolution and pooling operation are displayed in Fig. 3. A feature map is generated using the ReLU function from each of these layers. The last feature map generated by the second block is flattened and densified into a \(1\times K\) score vector in the third block, which is converted to a probabilistic prediction of the action class for the SDI using the softmax function. To prevent over-fitting, the dropout technique is applied to drop 50% of the neurons.

The six SFIs extracted from each SDI, indexed by m, are respectively entered into the six CNNs to generate six probabilistic predictions of the action class for the SDI. Let \(\{p_{m,k}|k=1,\dots ,K\}\) be the probabilistic prediction made by the CNN classifier that analyzes the \(m{\text {th}}\) SFI, where \(p_{m,k}\) is the probability that the SDI would be action class k.

Adapting the CNNs to individual new workers

When new workers join the assembly line, the trained ActRgn CNN classifiers need to adapt to each of the new workers using transfer learning followed by iterative boosting. The approach is summarized in Algorithm 1 and discussed below.

For each new worker, the study collects a training dataset \(\varGamma _T\) for adapting the classifiers to the worker and a validation dataset \(\varGamma _V\) for evaluating the CNNs during the adaption process. The training dataset is split into a number of smaller mutually exclusive and collectively exhaustive subsets that each contains data from a few experiments:

\[\varGamma _T=\gamma _0\cup \gamma _1\cup \dots \cup \gamma _N,\]

where \(\gamma _0\) is used for transfer learning and \(\gamma _n\) is for the nth iteration of the boosting process, for \(n=1,\dots , N\).

Initial adaption by transfer learning

Transfer learning can adapt CNN classifiers to a new worker who performs the same actions. The experimental study of this paper found that low- and medium-level features of assembly actions are well captured by the first two blocks of the ActRgn CNNs in Fig. 3, and distinct features of heterogeneous workers are mainly captured by the third block. Therefore, during transfer learning, the first two blocks of any CNN\(_m\) are frozen and the third block is retrained using the SFIs extracted from the training dataset \(\gamma _0\), denoted as {SFI\(_{m,0}\)}. After the initial adaption through transfer learning, the classifiers become {CNN\(_{m,0}\)}, which are evaluated using the SFIs extracted from the validation dataset \(\varGamma _V\), designated by {SFI\(_{m,V}\)}, to find their classification accuracy {\(\alpha _{m,0}\)}. A study by Al-Amin et al. (2020b) showed that an initial adaption is not sufficient for achieving a satisfactory result because of the within-subject variance.

Improving accuracy through iterative boosting

The performance of the initially adapted CNNs from transfer learning, {CNN\(_{m,0}\)}, can be further boosted iteratively. Let {SFI\(_{m,1}\)} be the SFIs extracted from the training dataset \(\gamma _1\) for the 1st iteration of boosting. {CNN\(_{m,0}\)} are tested on {SFI\(_{m,1}\)}, and misclassified SFIs are denoted by {SFI\(_{m,1}^f\)}. The training dataset for the 1st iteration of boosting, Tr\(_{m,1}\), is the union of {SFI\(_{m,1}^f\)} and the initial training dataset Tr\(_{m,0}\)={SFI\(_{m,0}\)} that has been used for transfer learning. {CNN\(_{m,0}\)} are refined with the updated training dataset Tr\(_{m,1}\) to obtain the updated classifiers {CNN\(_{m,1}\)}. Evaluated on {SFI\(_{m,V}\)}, the performance of the boosted classifiers from this iteration, \(\{\alpha _{m,1}\}\), is determined. This approach aims to boost the performance of classifiers by letting them learn from their weaknesses. This process continues for a sufficient number of iterations to ensure that a satisfactory performance of the classifiers has been attained. The classifiers achieving the best performance from the boosting process are chosen as the final constituent classifiers of the ActRgn system for the worker, denoted by {CNN\(_{m}^*\)}. That is,

\[\text {CNN}_{m}^*=\text {CNN}_{m,n^*},\quad n^*=\arg \max _{n}\,\alpha _{m,n}.\]
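The adaptation loop for one classifier can be sketched as follows. This is a schematic under stated assumptions, not Algorithm 1 itself: `fit` and `predict` are hypothetical callables standing in for CNN fine-tuning and inference, misclassified samples are assumed to accumulate in the training set across iterations, and the initially adapted model is assumed to be a candidate when the best model is selected. The toy lookup-table "model" exists only to make the sketch runnable.

```python
def adapt_by_boosting(model, subsets, validation, fit, predict):
    """Adapt one classifier to a new worker and keep the best version found.

    subsets:    [gamma_0, gamma_1, ..., gamma_N], each a list of (x, y) samples.
    validation: list of (x, y) samples used only for evaluation.
    fit(model, data) must return a new model; predict(model, x) returns a label.
    """
    def accuracy(m):
        return sum(predict(m, x) == y for x, y in validation) / len(validation)

    train = list(subsets[0])
    model = fit(model, train)                  # initial adaption on gamma_0
    best_model, best_acc = model, accuracy(model)
    for subset in subsets[1:]:
        missed = [(x, y) for x, y in subset if predict(model, x) != y]
        train = train + missed                 # add only the misclassified samples
        model = fit(model, train)
        acc = accuracy(model)
        if acc > best_acc:
            best_model, best_acc = model, acc
    return best_model, best_acc


# Toy stand-in for a CNN: a lookup table that predicts 0 for unseen inputs.
fit = lambda model, data: {**model, **dict(data)}
predict = lambda model, x: model.get(x, 0)
subsets = [[(1, 1)], [(1, 1), (2, 2)], [(3, 3)]]
validation = [(1, 1), (2, 2), (3, 3)]
best, acc = adapt_by_boosting({}, subsets, validation, fit, predict)
print(best, acc)
```

Retraining only on the samples the current model gets wrong keeps each boosting step focused on the worker-specific cases the classifier has not yet learned.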

The fusion of classifiers

The method to fuse the results of the six already adapted CNN classifiers is critical because it directly impacts the performance of the ActRgn system. This study proposes a fusion method, named Weighted Average of Selected Classifiers (WASC).

For an SDI, CNN\(_m^*\) classifies it as action class k with the probability \(p_{m,k}\), for any class k. The six classifiers have unequal abilities to predict an action. Therefore, an individualized weight matrix is developed, where the element \(w_{m,k}\) is the weight for \(p_{m,k}\). Let \(r_{m,k}\) be the recall value of CNN\(_m^*\) in recognizing action class k of the worker, obtained from evaluating {SFI\(_{m,V}\)}. \(r_{m,k}\) measures the ability of CNN\(_m^*\) in recognizing action class k, which varies across the six classifiers. First, the study normalizes \(r_{m,k}\)’s across the six classifiers to determine the weight \(w_{m,k}\):

Then, the weighted probability of classification by CNN\(_m^*\) is:

For any action class k, a binary filter \(\xi \) is defined below to select classifiers that assign the largest weighted probability to it:

Finally, the ActRgn system predicts the SDI as action class k with the probability \(p_k\), which is the weighted average of the classification probabilities of selected classifiers:

for any action class k. The final classification result by the ActRgn system, \(\widehat{y}\), is:

\[\widehat{y}=\arg \max _{k}\,p_k.\]
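The WASC steps described in this section can be sketched in Python. The exact formulas are not reproduced here, so the sketch makes three explicit assumptions: the weight \(w_{m,k}\) is the recall \(r_{m,k}\) divided by the sum of recalls for class k over all classifiers; a classifier is selected for class k when k is the class with its largest weighted probability; and the fused probability is the weight-normalized average of \(p_{m,k}\) over the selected classifiers. The function name `wasc_fuse` and the toy numbers are hypothetical.

```python
def wasc_fuse(probs, recalls):
    """Fuse per-classifier class probabilities for one SDI.

    probs[m][k]:   probability assigned to class k by classifier m.
    recalls[m][k]: validation recall of classifier m on class k.
    Returns (fused probabilities per class, predicted class index).
    """
    n_clf, n_cls = len(probs), len(probs[0])
    # Step 1: normalize recall across classifiers, separately for each class.
    weights = []
    for m in range(n_clf):
        row = []
        for k in range(n_cls):
            total = sum(recalls[i][k] for i in range(n_clf))
            row.append(recalls[m][k] / total if total > 0 else 0.0)
        weights.append(row)
    # Step 2: weighted probabilities.
    weighted = [[weights[m][k] * probs[m][k] for k in range(n_cls)]
                for m in range(n_clf)]
    # Step 3: the class each classifier favours after weighting (binary filter).
    favoured = [max(range(n_cls), key=lambda k: weighted[m][k])
                for m in range(n_clf)]
    # Step 4: weighted average over the classifiers selected for each class.
    fused = []
    for k in range(n_cls):
        chosen = [m for m in range(n_clf) if favoured[m] == k]
        norm = sum(weights[m][k] for m in chosen)
        fused.append(sum(weighted[m][k] for m in chosen) / norm if norm > 0 else 0.0)
    return fused, max(range(n_cls), key=lambda k: fused[k])


# Toy case: two classifiers, two classes.
probs = [[0.9, 0.1], [0.4, 0.6]]
recalls = [[0.8, 0.5], [0.2, 0.5]]
fused, label = wasc_fuse(probs, recalls)
print(fused, label)
```

In the toy case the first classifier is both confident and reliable on class 0, so it alone determines the fused score for that class, while the second classifier alone determines the score for class 1.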

Stream data analysis

Besides taking the designated actions in the assembly, workers may be idle or do something else for a variety of reasons such as loss of attention, fatigue, lack of knowledge or information, and so on. SDIs that are irrelevant to the actions for assembly are background SDIs. In processing stream data, the ActRgn system may read background SDIs and mistakenly recognize them as action SDIs. The ActRgn system may have mistakes in classifying action SDIs too. This study develops Algorithm 2 below that analyzes the probabilistic classification result of the ActRgn system in processing stream data to attempt to correct these two types of mistakes.

From testing the ActRgn system with background SDIs, it is noticed that the highest probability of classification is not dominantly high, and quite a few action classes (2\(\sim \)4) are assigned a non-trivial classification probability. This pattern of background SDIs is quite different from that of action SDIs, where one action class usually receives a dominantly higher probability than the other classes do. Accordingly, this study establishes a method to detect background SDIs from untrimmed data. For any SDI, let \(p^{(k)}\) be the \(k^{\text {th}}\) highest classification probability. Using the probabilistic classification result of the background SDIs extracted from the validation dataset, a 99% confidence interval (CI) is established for the \((k)^{\text {th}}\) highest probability, named the \((k)^{\text {th}}\) CI and denoted as \([L^{(k)},U^{(k)}]\), for \((k)=1,\dots , 4\). An SDI is classified as a background SDI and labeled as “Background” if two or more of the top four classification probabilities fall in their respective CI of classification probabilities for background SDIs.
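The background test reduces to counting how many of the top four probabilities fall inside their confidence intervals. In the sketch below, the function name `is_background` and the interval bounds are hypothetical placeholders chosen for illustration; in the study the intervals are estimated from background SDIs in the validation dataset.

```python
def is_background(probs, intervals, min_hits=2):
    """Flag an SDI as background from its fused class probabilities.

    probs:     fused probabilities over the K action classes.
    intervals: [(L1, U1), ..., (L4, U4)] for the 1st..4th highest probability.
    min_hits:  number of ranked probabilities that must fall in their interval.
    """
    top = sorted(probs, reverse=True)[:len(intervals)]
    hits = sum(low <= p <= high for p, (low, high) in zip(top, intervals))
    return hits >= min_hits


# Hypothetical intervals, for illustration only.
intervals = [(0.35, 0.55), (0.20, 0.35), (0.10, 0.20), (0.02, 0.10)]
action_like = [0.93, 0.03, 0.01, 0.01, 0.01, 0.005, 0.005]
background_like = [0.45, 0.28, 0.15, 0.06, 0.03, 0.02, 0.01]
print(is_background(action_like, intervals))      # False
print(is_background(background_like, intervals))  # True
```

A dominant top probability, typical of an action SDI, falls outside every interval, whereas a flatter distribution lands inside several of them.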

Actions for assembly are sequential. Therefore, their temporal relationship may help correct some of the classification mistakes. If an SDI is classified as an action class different from that of the preceding SDI, this inconsistency may indicate either a transition to the next action or a mistake. Observing an inconsistency, the temporal analysis yields an alternative classification result \(\tilde{y}_t\), which is the action class with the second-highest probability. The assumption is that the alternative classification result may contain partial information of the SDI. If the alternative result is consistent with the classification of the preceding SDI, the temporal analysis considers the classification from the ActRgn system as a mistake and thus accepts the alternative classification result to rectify it. Otherwise, the classification result from the ActRgn system is accepted.
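The temporal correction rule can be written as a short function. This sketch covers only the rule stated in the paragraph above; the function name `temporal_correct` is hypothetical, and how Algorithm 2 treats a preceding SDI labeled “Background” is not specified here, so that case is simply passed through as no preceding label.

```python
def temporal_correct(probs, previous_label):
    """Apply the second-best fallback when the top class breaks continuity.

    probs:          fused probabilities over the action classes for the current SDI.
    previous_label: class index accepted for the preceding SDI, or None.
    """
    ranked = sorted(range(len(probs)), key=lambda k: probs[k], reverse=True)
    top, runner_up = ranked[0], ranked[1]
    if previous_label is not None and top != previous_label:
        # Inconsistent with the preceding SDI: accept the runner-up only if
        # it restores consistency; otherwise treat this as a real transition.
        if runner_up == previous_label:
            return runner_up
    return top


probs = [0.10, 0.50, 0.40]
print(temporal_correct(probs, previous_label=2))  # 2
print(temporal_correct(probs, previous_label=0))  # 1
```

In the first call the runner-up matches the preceding action, so the top prediction is treated as a mistake; in the second call neither candidate matches, so the change is accepted as a transition.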

Illustrative example and assessment

Experiment design

To demonstrate and assess the proposed ActRgn system, this study analyzes one step in assembling the Bukito 3D printer in a lab setting, which is “putting on the handle”. This step involves seven sequential actions (i.e., K=7) that are described in Fig. 4. A Microsoft Kinect is used to output the time series 3D coordinates of 17 body joints (i.e., J=17) displayed in Fig. 2 and the RGB images. The RGB images are annotated with corresponding frame numbers and are used as a reference for the data preparation described in the Methodology section.

Fifteen subjects are recruited, including both males and females. They are split into two mutually exclusive groups. The group CRT has 10 subjects who each perform the assembly step 10 times. Out of these, 8 times are used to create the dataset for training the base classifiers, whereas the remaining 2 times are for testing the classifiers. The group NEW has 5 subjects who represent new workers coming to perform the assembly. The group NEW repeats the assembly step 40 times. Among these, 20 times are training data for adapting the classifiers to new workers through transfer learning and iterative boosting (i.e., \(\varGamma _T\)); 10 times are the data for evaluating the classifiers during the adaption process (i.e., \(\varGamma _V\)); the remaining 10 times are used to create the dataset for testing the proposed ActRgn system. Partial data can be accessed at Al-Amin et al. (2020a).

To extract SDIs from the time series of skeletal data, a sliding window with a length of 30 frames (i.e., W=30) and a stride of 15 frames (i.e., \(\delta \)=0.5) is used. That is, every 0.5 seconds the ActRgn system reads the most recent 30 frames to classify the action of the worker during the past one second. Table 2 summarizes the distribution of SDIs in groups CRT and NEW.

The assembly operation mainly involves the 17 joints of the upper body shown in Fig. 2. Given 17 joints, \(L=\left( {\begin{array}{c}17\\ 2\end{array}}\right) =136\) distance features can be calculated. Angles that can be formed by the 10 joints of the upper extremity (i.e., joints #6-#15) are calculated because the assembly operation mainly involves the worker’s upper extremity. The 10 joints provide \(\tilde{L}=10\left( {\begin{array}{c}9\\ 2\end{array}}\right) =360\) angle features. Therefore, the dimension of the three distance related SFIs (i.e., SFI\(_D\), SFI\(_{D'}\), and SFI\(_{D''}\)) is \(30\times 136\). The dimension of three angle related SFIs (i.e., SFI\(_A\), SFI\(_{A'}\), and SFI\(_{A''}\)) is \(30\times 360\). SFIs are all normalized to take values within [\(-1\),1] before being used for training, validating, and testing the ActRgn CNN classifiers.
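The feature counts quoted above follow directly from the two combinatorial formulas and can be checked with the standard library. The variable names below are chosen only for this check.

```python
import math

J_DISTANCE = 17   # joints used for distance features
J_ANGLE = 10      # upper-extremity joints used for angle features
W = 30            # frames per SDI

n_distances = math.comb(J_DISTANCE, 2)             # L = C(17, 2)
n_angles = J_ANGLE * math.comb(J_ANGLE - 1, 2)     # L~ = 10 * C(9, 2)

print((W, n_distances))  # (30, 136)
print((W, n_angles))     # (30, 360)
```

The two tuples are the dimensions of the distance related and angle related SFIs, respectively.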

In training the ActRgn CNN classifiers, the adaptive learning rate optimizer (Adam) along with the cross-entropy loss function is used. Initially, the learning rate of Adam is set as 0.001. It is dynamically decreased over iterations. To avoid the issue of overfitting, L2 and dropout regularization are implemented.

An illustrative example

Using a worker in the group NEW (Sub-1) as an example, the ActRgn system individualized for this worker achieves 97.4% accuracy on the testing dataset. Figure 5 describes how this ActRgn performance is achieved. The six base classifiers, trained on the dataset of group CRT, achieve an accuracy ranging from 48.6% (classifier \(A'\)) to 76.5% (classifier \(A''\)) in recognizing the actions of this worker. The performances of the base classifiers are far from satisfactory and vary widely. Transfer Learning (TL) is implemented for achieving an initial adaption of the base classifiers to the worker, which increases the accuracy of the six classifiers by 5.3% (classifier \(A'\)) to 29.4% (classifier A). Then, the initially adapted classifiers are further improved through Iterative Boosting (IB), achieving an accuracy ranging from 75.3% (classifier \(D'\)) to 96.0% (classifier D). While the ActRgn accuracy of the adapted classifiers still varies widely, four out of six classifiers have an accuracy higher than 90%. The ActRgn system, as an ensemble of the already adapted classifiers using the WASC fusion, achieves 97.4% accuracy, higher than the accuracy of any constituent classifier of the system by 1.4% (compared to classifier D) to 22.1% (compared to classifier \(D'\)).

The study further reviews the recall and precision matrices in Fig. 6 to determine the class-level performance of the ActRgn system. For worker Sub-1, the SDIs of classes 2, 3, and 4 are perfectly recognized; the SDIs of classes 1, 6, and 7 are recognized with a recall value greater than 96%; only class 5 has a relatively low recall value, 87.1%. The incorrectly classified class 5 SDIs are all recognized as class 7, which is a major reason for the low precision for class 7. Some action classes share a certain similarity, thus causing confusion. For example, action classes 5 and 7 both involve taking the tool from, or returning it to, a similar location using the same hand. The confusion matrix is found to vary among the tested subjects in this study, because different workers may perform the same action in a slightly different way.

Figure 7 illustrates the result of the ActRgn system in analyzing the untrimmed stream data of the new worker Sub-1 in an experiment. The experiment lasts 41 seconds and 81 SDIs in total are extracted from the stream data, with 66 action SDIs (action classes 1 to 7) and 15 background SDIs (labeled as “Background”). 63 out of 66 (95.5%) action SDIs are correctly recognized, as are 8 out of 15 (53.3%) background SDIs. Among the 7 misclassified background SDIs, 5 occur during the transition from one action to the next and the other 2 occur 1.5\(\sim \)2 seconds before Act-1 takes place. Moreover, 5 out of the 7 misclassified background SDIs are classified as the preceding or the succeeding action. This is because SDIs during the transition may contain data from either the preceding or the succeeding action.

Comparison of basic ActRgn classifiers

This study chose CNN as the basic classifier for building the ActRgn system. To verify the rationale of choosing CNN, this study compares the performances of CNN, MLP, and LSTM as the basic classifiers. Here, both the SDIs and the six types of SFIs are considered as the input to the classifiers. The experimental result in Table 3 shows that CNN outperforms MLP and LSTM in analyzing any of the seven inputs on the CRT testing dataset. The result on the NEW testing dataset is similar with one exception: the accuracy of CNN in analyzing SFI\(_{D'}\) is 0.56% lower than that of LSTM. This comparative study verifies the advantage of using CNN as the underlying classifier for building the ActRgn system.

Advantages of the ActRgn system architecture

This study proposes extracting six posture-related feature vectors to respectively create six 1-channel (1-C) ActRgn CNN classifiers and then fusing them as an ActRgn system. This architecture is based on two hypotheses. On one hand, each of the six feature vectors conveys unique information about actions and can independently support action recognition to a certain degree. On the other hand, the six individual classifiers have complementary strengths. To demonstrate its advantage, the proposed system architecture is compared to a single CNN built on SDIs (i.e., the raw data) and a system of two 3-channel (3-C) CNNs, with one built with the three distance-related features [SFI\(_D\), SFI\(_{D'}\), SFI\(_{D''}\)] and the other built with the three angle-related features [SFI\(_A\), SFI\(_{A'}\), SFI\(_{A''}\)]. Table 4 shows the ActRgn accuracy of the raw data CNN, the six 1-C CNNs, the two 3-C CNNs, and the 1-C and 3-C ActRgn systems respectively built with four fusion methods: maximum, average, product, and majority voting (Maj Vot). All CNNs in Table 4 are base classifiers that have not been individualized yet. They are first tested on the CRT testing dataset and then on the NEW testing dataset to verify the challenge that worker heterogeneity poses to action recognition. When tested on the group of new workers, both the subject-level and the group-level accuracy are provided.

In recognizing the actions of any group or any individual in Table 4, at least one 1-C CNN outperforms the raw data CNN, and at least one 3-C CNN is as good as, or better than, the raw data CNN. For example, the six 1-C CNNs and the two 3-C CNNs all outperform the raw data CNN in recognizing the actions of Sub-3. For Sub-2, the 1-C CNN built with SFI\(_{A''}\) is better than the raw data CNN and the 3-C CNN with [SFI\(_A\), SFI\(_{A'}\), SFI\(_{A''}\)] is as good as the raw data CNN. The observation suggests that some of the feature vectors calculated from SDIs convey information more useful for action recognition than SDIs.

No single feature-based classifier dominates the others for all five individual subjects. For example, the best 1-C CNN for Sub-1 is the classifier built with SFI\(_{A''}\) and the best 3-C CNN is the classifier built with [SFI\(_D\), SFI\(_{D'}\), SFI\(_{D''}\)]. But for Sub-3, the best 1-C CNN is the classifier built with SFI\(_A\) and the best 3-C CNN is the one built with [SFI\(_A\), SFI\(_{A'}\), SFI\(_{A''}\)]. Therefore, a system of fused classifiers is more robust to worker heterogeneity than an individual classifier.

Both the 1-C CNN system and the 3-C CNN system can be built through the maximum fusion, average fusion, and product fusion. The 1-C CNN system can also be built through the majority voting. These seven configurations have varied performance, displayed in the seven rows from the bottom in Table 4. At the group level, the seven configurations all outperform the raw data CNN. At the individual level, all the seven configurations are better than the raw data CNN in recognizing the actions of Sub-1, -3, and -4. For Sub-2, the 1-C CNN systems with the average fusion, product fusion, and majority voting outperform the raw data CNN. For Sub-5, the 1-C CNN systems with the average fusion and product fusion achieve higher accuracy than the raw data CNN. This comparison further confirms the advantage of posture-related feature images over the raw data images.
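The four fusion rules compared in Table 4 can be sketched as follows. This is a generic illustration under the assumption that each classifier outputs a class-probability vector; the function name `fuse`, the tie-breaking behavior, and the example probabilities are hypothetical and not taken from the paper.

```python
import numpy as np

def fuse(probs, method="average"):
    """Fuse class-probability vectors from several classifiers.

    probs: array of shape (n_classifiers, n_classes).
    Returns the index of the predicted class.
    """
    probs = np.asarray(probs, dtype=float)
    if method == "maximum":
        scores = probs.max(axis=0)        # highest probability any classifier gives a class
    elif method == "average":
        scores = probs.mean(axis=0)       # mean probability per class
    elif method == "product":
        scores = probs.prod(axis=0)       # product of probabilities per class
    elif method == "majority":
        votes = probs.argmax(axis=1)      # each classifier votes for its top class
        scores = np.bincount(votes, minlength=probs.shape[1])
    else:
        raise ValueError(f"unknown fusion method: {method}")
    # np.argmax breaks ties in favor of the lowest class index (an assumption here).
    return int(np.argmax(scores))

# Hypothetical outputs of three classifiers over three classes.
probs = [[0.6, 0.3, 0.1],
         [0.1, 0.8, 0.1],
         [0.5, 0.4, 0.1]]
for method in ("maximum", "average", "product", "majority"):
    print(method, fuse(probs, method))
```

In this toy input, two of the three classifiers prefer class 0 by a small margin while one strongly prefers class 1, so majority voting selects class 0 and the other three rules select class 1. It illustrates why the configurations in Table 4 can differ in accuracy on the same constituent classifiers.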

At least one fusion method can build a 1-C CNN system better than all the constituent classifiers, but this is not true for the 3-C CNN system. For example, no 3-C CNN system outperforms all its constituent classifiers for Sub-1 and Sub-2. This indicates that the fusion of six 1-C CNN classifiers is a better system architecture than the fusion of two 3-C CNN classifiers. The 1-C CNN system is indeed a better ActRgn system than the 3-C CNN system, as evidenced by the comparison in Table 4. When tested on the group CRT, the 1-C CNN system always achieves higher accuracy than the 3-C CNN system, with the margin ranging from 5.7% (maximum fusion) to 8.8% (average fusion). Similarly, on the group NEW, the 1-C CNN system has higher accuracy than the 3-C CNN system built with any fusion method, and the improvement is up to 6.1% (product fusion). At the individual level, the 1-C CNN system outperforms the 3-C CNN system built with any fusion method, with only one exception: the 1-C CNN system for Sub-2 is outperformed by the 3-C CNN system if the maximum fusion is used. The comparative study in Table 4 supports the use of the ActRgn CNN architecture proposed in this paper.

Effectiveness of transfer learning and iterative boosting

Table 5 evaluates and confirms the effectiveness of transfer learning and iterative boosting for adapting the six classifiers to individual workers. The group-level assessment is also provided at the bottom. The ActRgn accuracy before the adaption (Bfr) is the accuracy of the base classifiers in Table 4. Then, the increment due to transfer learning (TL) and that due to iterative boosting (IB) are determined. The accuracy after the adaption (Aft) and the corresponding change in accuracy (chg) due to the classifier adaption are calculated too.

Using the new worker Sub-1 as an example, transfer learning increases the ActRgn accuracy by an amount ranging from 5.3% (classifier of SFI\(_{A'}\)) to 29.4% (classifier of SFI\(_A\)). Iterative boosting contributes an additional 6.3% (classifier of SFI\(_{A''}\)) to 31.8% (classifier of SFI\(_{A'}\)). The classifier adaption improves the accuracy by 17.6% (classifier of SFI\(_{A''}\)) to 40.3% (classifier of SFI\(_A\)). Helped by transfer learning and iterative boosting, the classifier \(D\) has reached 96% accuracy, becoming the best 1-C CNN classifier for Sub-1. The classifier \(D'\), though being the least capable classifier for Sub-1, still reaches 75.3% accuracy. Both transfer learning and iterative boosting effectively adapt the six base classifiers to other new workers too. Yet their contributions vary among workers and classifiers. The most improved classifier is the classifier of SFI\(_A\) for Sub-1, whose accuracy is increased by 40.3%. The least improved classifier is the classifier of SFI\(_D\) for Sub-4, with an increase of 12.8%. At the group level, the classifier adaption increases the ActRgn accuracy by 17.8% to 25.1%. Among the six already adapted classifiers, the classifiers built on the velocity features (i.e., SFI\(_{D'}\) and SFI\(_{A'}\)) are usually not among the top classifiers in terms of accuracy.

Effectiveness of the WASC fusion

To verify the advantage of the proposed Weighted Average of Selected Classifiers (WASC) fusion, it is compared to five other methods: maximum, product, average, majority voting (Maj Vot), and weighted average (Wgt Avg). The weighted average fusion is similar to the proposed WASC fusion except that it does not implement the filter in Eq. (12) to select classifiers. The comparative study is performed at both the individual level and the group level, with the result summarized in Table 6 and visualized in Fig. 8. Figure 8 shows that only the weighted average fusion and the WASC fusion outperform all the constituent classifiers across the five tested workers. This verifies the helpfulness of discriminating individual classifiers by their strength at the class level. Furthermore, the WASC is better than the weighted average fusion for recognizing the actions of every individual in the group NEW, increasing the accuracy by 0.4% (Sub-1) to 2.5% (Sub-5). The higher accuracy of the WASC method over the weighted average method confirms the unique strength of the WASC fusion.
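The difference between a plain weighted average and a weighted average over selected classifiers can be illustrated with the rough sketch below. It is not the paper's WASC: Eq. (12) is not reproduced in this section, so the selection filter here (keep a classifier for a class only if its class-level weight is at least `keep_ratio` times the best weight for that class) is a stand-in assumption, as are the weights, probabilities, and function name.

```python
import numpy as np

def weighted_average_fusion(probs, weights, keep_ratio=None):
    """Weighted-average fusion with an optional, hypothetical selection filter.

    probs, weights: arrays of shape (n_classifiers, n_classes).
    weights[k, c] is an assumed class-level strength of classifier k on
    class c (for example a validation score); weights must be positive.
    keep_ratio: if given (0 < keep_ratio <= 1), classifiers whose weight for
    a class falls below keep_ratio * best weight for that class are dropped
    for that class. This filter is a placeholder, not Eq. (12).
    """
    probs = np.asarray(probs, dtype=float)
    w = np.array(weights, dtype=float)          # copy so the input is not modified
    if keep_ratio is not None:
        best = w.max(axis=0, keepdims=True)
        w[w < keep_ratio * best] = 0.0          # the best classifier per class always stays
    scores = (w * probs).sum(axis=0) / w.sum(axis=0)
    return int(np.argmax(scores))

# Hypothetical example: classifier 0 is strong on both classes, the others are weak.
probs = [[0.7, 0.3],
         [0.2, 0.8],
         [0.2, 0.8]]
weights = [[0.95, 0.90],
           [0.50, 0.50],
           [0.50, 0.50]]
print(weighted_average_fusion(probs, weights))                  # plain weighted average
print(weighted_average_fusion(probs, weights, keep_ratio=0.9))  # with the stand-in filter
```

In this toy input the two weak classifiers outvote the strong one under the plain weighted average, whereas the filtered version relies on the strong classifier alone. The example only shows the mechanism of selection; it makes no claim about which choice is more accurate in general.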

Stream data analysis

This paper performs a before-after study to demonstrate that Algorithm 2 can improve the results of the ActRgn system in analyzing stream data. Table 7 computes the accuracy (%) in recognizing all the testing SDIs (All), background SDIs (Bdg), action SDIs (Acts), and individual action classes (Act-1,..., -7). The study is performed using the untrimmed stream test data of the NEW group. At both the subject level and the group level, the comparison shows the ActRgn accuracy before implementing Algorithm 2 (Bfr) and after (Aft). The volume of testing SDIs (size) for the accuracy calculation is provided too as a reference. Although the ActRgn system has a good capability in classifying action SDIs, its accuracy in recognizing background SDIs is zero. This is because the ActRgn system is designed to classify SDIs into one of the seven action classes.

Using the new worker Sub-1 as an example, the accuracy in recognizing action SDIs is 97.2% whereas the accuracy in recognizing background SDIs is 0%. The accuracy in recognizing all the SDIs extracted from the stream data is only 85.4%. After implementing Algorithm 2, the accuracy in recognizing background SDIs is 68.7% and the accuracy in recognizing actions drops about 0.3%, to 96.9%. The accuracy in analyzing the stream data, including both the background and action SDIs, is 93.4%, which is an 8% increase compared to the accuracy before implementing the stream data analysis algorithm. For the other four subjects, the improved accuracy in analyzing the stream data is 6.7% (=90.9-84.2), 6.7% (=85.2-78.5), 4.5% (=90.7-86.2), and 6.6% (=88.6-82.0), respectively. At the group level, the accuracy is improved by 6.4%, from 83.2% to 89.6%.
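The relation between the three accuracies for Sub-1 can be checked with a short calculation. The sketch assumes that the overall accuracy is the count-weighted mean of the action and background accuracies, and that the Sub-1 stream contains 604 action SDIs and 83 background SDIs (the counts reported for Sub-1 in the occlusion study); the sizes used in Table 7 are not restated here, so these counts are an assumption.

```python
def overall_accuracy(acc_action, acc_background, n_action, n_background):
    """Accuracy (%) over all SDIs as the count-weighted mean of two sub-accuracies."""
    total = n_action + n_background
    return (acc_action * n_action + acc_background * n_background) / total

# Assumed Sub-1 counts: 604 action SDIs and 83 background SDIs.
N_ACTION, N_BACKGROUND = 604, 83

before = overall_accuracy(97.2, 0.0, N_ACTION, N_BACKGROUND)   # no background detection
after = overall_accuracy(96.9, 68.7, N_ACTION, N_BACKGROUND)   # with Algorithm 2

print(f"before: {before:.1f}%  after: {after:.1f}%  gain: {after - before:.1f}")
```

Under these assumptions the results land within about 0.1 percentage point of the reported 85.4% and 93.4%, with the small gap attributable to rounding of the inputs. The calculation also makes explicit why a system that never outputs the background class is capped at the action share of the stream, however well it classifies actions.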

The capability of the stream data analysis algorithm in detecting the background SDIs varies among the tested subjects. The accuracy is the highest in detecting the background SDIs of Sub-1 (68.7%) and it is the lowest for Sub-5 (38.6%). This variation is mainly caused by the heterogeneity of individual workers. At the group level, the algorithm detected 48.8% of background SDIs correctly, and other background SDIs are recognized as actions. The background SDIs recognized as actions are mainly in the transition of two successive actions.

Although the accuracy in recognizing actions drops about 0.3% for Sub-1, the accuracy in recognizing the actions for the other four subjects increases. Therefore, at the group level the accuracy in recognizing actions increases by 1.8%, from 92.2% to 94.1%. This indicates that the temporal analysis in Algorithm 2 helps improve the accuracy in recognizing worker actions. By comparing the change in accuracy at the class level in Table 7, it is noticed that the accuracy improvement for some classes may come at the cost of lowering the accuracy of others. Using the new worker Sub-5 as an example, the temporal analysis increases the accuracy in recognizing Act-3, -5, -6, and -7, but decreases the accuracy in recognizing Act-1.

Impact of occlusion

Occlusion is one of the key challenges for skeletal data-based human action recognition, and several factors determine how severe its impact is. To what extent does occlusion affect skeletal data-based action recognition? To investigate this question, an experimental study is performed in which three factors, each with two levels, are considered:

- The level of occlusion (Level): partial (the left hand) vs. full (the entire human body)

- The number of occlusions in each time of operation (No.): 2 vs. 3

- The duration of each occlusion (Duration (sec)): 3 vs. 6
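The three two-level factors above define a full factorial design. A minimal sketch of enumerating its conditions is given below; the dictionary keys follow the factor labels in the text, while the variable names are illustrative.

```python
from itertools import product

# Factor levels as listed in the text.
levels = {
    "Level": ["partial", "full"],
    "No.": [2, 3],
    "Duration (sec)": [3, 6],
}

# Full factorial design: every combination of one level per factor.
conditions = [dict(zip(levels, combo)) for combo in product(*levels.values())]

for i, condition in enumerate(conditions, start=1):
    print(i, condition)
```

The enumeration yields \(2^3\) conditions, one per combination of occlusion level, number of occlusions, and occlusion duration.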

The untrimmed streaming data of Sub-1 from the NEW group are assessed in the eight experiments (\(2^3\)) defined above. The data comprise 687 SFIs in total, with 604 action SFIs and 83 background SFIs, obtained from ten times of operation by the subject. Table 8 summarizes the accuracy in recognizing actions, the background, and all of them, before (Bfr) and after (Aft) the stream data analysis (SDA) is applied to the fusion result from the Weighted Average of Selected Classifiers (WASC). From the table it can be seen that:

- The impact of full occlusion is more severe than that of partial occlusion. When a full occlusion happens, input SFIs contain no skeletal data. The ActRgn system lacks the ability to recognize them, which is reflected in the reduced overall accuracy, the reduced accuracy in detecting the background (fully blocked SFIs weaken the SDA), and the reduced accuracy in action recognition.

- When a partial occlusion happens, missing features in the affected SFIs are estimated as the average of the remaining features. The ActRgn system can still recognize some of the partially occluded SFIs.

- The accuracy declines when the number of occlusions and/or the duration of occlusion increases.

- Occlusion impairs the ability of the stream data analysis on top of WASC. But the stream data analysis still helps improve the overall performance slightly, mainly due to its ability to detect the background.
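The handling of partial occlusion noted above (missing features estimated as the average of the remaining features) can be sketched as follows. The array layout (features by frames), the use of NaN to mark occluded entries, the per-frame averaging, and the function name are all assumptions made for illustration; the paper's exact procedure is not detailed in this section.

```python
import numpy as np

def impute_partial_occlusion(sfi):
    """Fill occluded entries with the mean of the visible features in the same frame.

    sfi: array of shape (n_features, n_frames); NaN marks an occluded feature.
    Frames in which every feature is missing (full occlusion) are left as NaN.
    """
    sfi = np.array(sfi, dtype=float)
    out = sfi.copy()
    for t in range(sfi.shape[1]):
        column = sfi[:, t]
        missing = np.isnan(column)
        if missing.any() and not missing.all():
            out[missing, t] = column[~missing].mean()
    return out

# Hypothetical 3-feature, 3-frame example; the last frame is fully occluded.
sfi = [[1.0, np.nan, np.nan],
       [3.0, 4.0, np.nan],
       [np.nan, 6.0, np.nan]]
filled = impute_partial_occlusion(sfi)
print(filled)
```

A fully occluded frame has no remaining features to average, which is consistent with the observation that full occlusion leaves the system with nothing to recognize.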

Conclusion and future work

This paper proposes a system of skeletal data-based CNN classifiers for action recognition, which is individualized for heterogeneous workers to recognize their actions in assembly reliably. The paper demonstrates the advantage of the proposed system architecture, which computes posture-related feature vectors using the skeletal data extracted from depth images, builds the constituent classifiers using individual feature vectors, and fuses them into a system. The study further verifies the importance of individualizing the system for heterogeneous workers, which adapts the ActRgn system to individual workers through transfer learning, iterative boosting, and the WASC fusion method. The algorithm of stream data analysis not only improves the accuracy of the individualized system in recognizing the worker’s actions but also differentiates background data from actions to some extent.

The paper builds a foundation for important extensions and future explorations. A revision or update of the assembly process usually introduces additional actions, so adding new action classes to an existing ActRgn system is an important research problem. We plan to explore this problem by developing a class incremental learning (Class-IL) strategy (Tao et al. 2020). When the classifiers learn to recognize new action classes, they might suffer from catastrophic forgetting, a long-standing challenge in Class-IL in which knowledge of the previous classes tends to be overwritten when the model is confronted with new classes. To address this challenge, multiple crucial components of a Class-IL algorithm will be explored, including a memory buffer to store a few exemplars of old classes, a constraint on keeping previous knowledge in learning new classes, and a learning system that balances old and new classes (Mittal et al. 2021). Another immediate extension of the study is to adapt the ActRgn system to existing workers performing new tasks and then to new workers performing new tasks. This extension is critical to the scale-up of system implementation. While transfer learning and iterative boosting effectively adapt the constituent classifiers to individuals, a faster adaption is desired to accommodate the quickly changing, highly unpredictable conditions of future manufacturing. One hypothesis is that the layers of the neural networks that need refining depend on the new subject or new task that the system will adapt to. A method that can optimize the classifier refining process is needed. The current stream data analysis algorithm can detect some background data, but not all of them. The ability to detect background with high accuracy and to differentiate different types of background data is useful in applications. These exciting opportunities call for future research.

References

Al-Amin, M., Qin, R., Moniruzzaman, M., Yin, Z., Tao, W., & Leu, M. C. (2020). Data for the individualized system of skeletal data-based CNN classifiers for action recognition in manufacturing assembly.

Al-Amin, M., Qin, R., Tao, W., Doell, D., Lingard, R., Yin, Z., & Leu, M. C. (2020). Fusing and refining convolutional neural network models for assembly action recognition in smart manufacturing. Proceedings of the Institution of Mechanical Engineers, Part C: Journal of Mechanical Engineering Science, page NA.

Al-Amin, M., Tao, W., Doell, D., Lingard, R., Yin, Z., Leu, M. C., et al. (2019). Action recognition in manufacturing assembly using multimodal sensor fusion. Procedia Manufacturing, 39, 158–167.

Banos, O., Damas, M., Pomares, H., Rojas, F., Delgado-Marquez, B., & Valenzuela, O. (2013). Human activity recognition based on a sensor weighting hierarchical classifier. Soft Computing, 17(2), 333–343.

Chen, C., Jafari, R., & Kehtarnavaz, N. (2017). A survey of depth and inertial sensor fusion for human action recognition. Multimedia Tools and Applications, 76(3), 4405–4425.

Chernbumroong, S., Cang, S., & Yu, H. (2015). Genetic algorithm-based classifiers fusion for multisensor activity recognition of elderly people. IEEE Journal of Biomedical and Health Informatics, 19(1), 282–289.

Chung, S., Lim, J., Noh, K. J., Kim, G., & Jeong, H. (2019). Sensor data acquisition and multimodal sensor fusion for human activity recognition using deep learning. Sensors, 19(7), 1716.

Cook, D., Feuz, K. D., & Krishnan, N. C. (2013). Transfer learning for activity recognition: A survey. Knowledge and Information Systems, 36(3), 537–556.

Du, Y., Fu, Y., & Wang, L. (2015). Skeleton based action recognition with convolutional neural network. In 3rd IAPR Asian conference on pattern recognition (ACPR), pp. 579–583.

ElMaraghy, H., & ElMaraghy, W. (2016). Smart adaptable assembly systems. Procedia CIRP, 44, 4–13.

Guo, M., Wang, Z., Yang, N., Li, Z., & An, T. (2019). A multisensor multiclassifier hierarchical fusion model based on entropy weight for human activity recognition using wearable inertial sensors. IEEE Transactions on Human-Machine Systems, 49(1), 105–111.

Guo, Y., He, W., & Gao, C. (2012). Human activity recognition by fusing multiple sensor nodes in the wearable sensor systems. Journal of Mechanics in Medicine and Biology, 12(05), 1250084.

Han, Y., Chung, S. L., Chen, S. F., & Su, S. F. (2018). Two-stream LSTM for action recognition with RGB-D-based hand-crafted features and feature combination. In IEEE International Conference on Systems, Man, and Cybernetics (SMC), pp. 3547–3552. IEEE.

Hou, Y., Li, Z., Wang, P., & Li, W. (2018). Skeleton optical spectra-based action recognition using convolutional neural networks. IEEE Transactions on Circuits and Systems for Video Technology, 28(3), 807–811.

Kamel, A., Sheng, B., Yang, P., Li, P., Shen, R., & Feng, D. D. (2019). Deep convolutional neural networks for human action recognition using depth maps and postures. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 49(9), 1806–1819.

Kang, K., Li, H., Yan, J., Zeng, X., Yang, B., Xiao, T., et al. (2018). T-CNN: Tubelets with convolutional neural networks for object detection from videos. IEEE Transactions on Circuits and Systems for Video Technology, 28(10), 2896–2907.

Ke, Q., Bennamoun, M., An, S., Sohel, F., & Boussaid, F. (2017). A new representation of skeleton sequences for 3D action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3288–3297. IEEE.

Khaire, P., Kumar, P., & Imran, J. (2018). Combining CNN streams of RGB-D and skeletal data for human activity recognition. Pattern Recognition Letters, 115, 107–116.

Kong, X. T., Luo, H., Huang, G. Q., & Yang, X. (2019). Industrial wearable system: The human-centric empowering technology in Industry 4.0. Journal of Intelligent Manufacturing, 30(8), 2853–2869.

Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems, 25, 1097–1105.

Li, B., Li, X., Zhang, Z., & Wu, F. (2019). Spatio-temporal graph routing for skeleton-based action recognition. Proceedings of the AAAI Conference on Artificial Intelligence, 33, 8561–8568.

Li, C., Wang, P., Wang, S., Hou, Y., & Li, W. (2017). Skeleton-based action recognition using LSTM and CNN. In IEEE International conference on multimedia and expo workshops (ICMEW), pp. 585–590. IEEE.

Liu, J., Shahroudy, A., Xu, D., Kot, A. C., & Wang, G. (2017). Skeleton-based action recognition using spatio-temporal LSTM network with trust gates. IEEE Transactions on Pattern Analysis and Machine Intelligence, 40(12), 3007–3021.

Mittal, S., Galesso, S., & Brox, T. (2021). Essentials for class incremental learning. arXiv preprint arXiv:2102.09517.

Moniruzzaman, M., Yin, Z., He, Z. H., Qin, R., & Leu, M. (2021). Human action recognition by discriminative feature pooling and video segmentation attention model. IEEE Transactions on Multimedia.

Nunez, J. C., Cabido, R., Pantrigo, J. J., Montemayor, A. S., & Velez, J. F. (2018). Convolutional neural networks and long short-term memory for skeleton-based human activity and hand gesture recognition. Pattern Recognition, 76, 80–94.

Pham, H. H., Khoudour, L., Crouzil, A., Zegers, P., & Velastin, S. A. (2018). Exploiting deep residual networks for human action recognition from skeletal data. Computer Vision and Image Understanding, 170, 51–66.

Rude, D. J., Adams, S., & Beling, P. A. (2018). Task recognition from joint tracking data in an operational manufacturing cell. Journal of Intelligent Manufacturing, 29(6), 1203–1217.

Shen, C., Chen, Y., Yang, G., & Guan, X. (2020). Toward hand-dominated activity recognition systems with wristband-interaction behavior analysis. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 50(7), 2501–2511.

Song, S., Lan, C., Xing, J., Zeng, W., & Liu, J. (2017). An end-to-end spatio-temporal attention model for human action recognition from skeleton data. In Proceedings of AAAI Conference on Artificial Intelligence, pp. 4263–4270.

Stiefmeier, T., Roggen, D., Ogris, G., Lukowicz, P., & Tröster, G. (2008). Wearable activity tracking in car manufacturing. IEEE Pervasive Computing, 7(2), 42–50.

Tao, W., Lai, Z.-H., Leu, M. C., & Yin, Z. (2018). Worker activity recognition in smart manufacturing using IMU and sEMG signals with convolutional neural networks. Procedia Manufacturing, 26, 1159–1166.

Tao, X., Hong, X., Chang, X., Dong, S., Wei, X., & Gong, Y. (2020). Few-shot class-incremental learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 12183–12192.

Tsanousa, A., Meditskos, G., Vrochidis, S., & Kompatsiaris, I. (2019). A weighted late fusion framework for recognizing human activity from wearable sensors. In 10th international conference on information, intelligence, systems and applications (IISA), pp. 1–8. IEEE.

Wang, K.-J., Rizqi, D. A., & Nguyen, H.-P. (2021). Skill transfer support model based on deep learning. Journal of Intelligent Manufacturing, 32(4), 1129–1146.

Ward, J. A., Lukowicz, P., Troster, G., & Starner, T. E. (2006). Activity recognition of assembly tasks using body-worn microphones and accelerometers. IEEE Transactions on Pattern Analysis and Machine Intelligence, 28(10), 1553–1567.

Zhao, Z., Chen, Y., Liu, J., Shen, Z., & Liu, M. (2011). Cross-people mobile-phone based activity recognition. In Twenty-second International Joint Conference on Artificial Intelligence, pp. 2545–2550.

Zhou, F., Ji, Y., & Jiao, R. J. (2013). Affective and cognitive design for mass personalization: Status and prospect. Journal of Intelligent Manufacturing, 24(5), 1047–1069.

Zhu, X., Wang, Y., Dai, J., Yuan, L., & Wei, Y. (2017). Flow-guided feature aggregation for video object detection. Proceedings of the IEEE International Conference on Computer Vision, 1, 408–417.

Zhu, X., Xiong, Y., Dai, J., Yuan, L., & Wei, Y. (2017). Deep feature flow for video recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4141–4150.

Acknowledgements

All the authors of this paper received financial support from the National Science Foundation through the Award CMMI-1646162. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Cite this article

Al-Amin, M., Qin, R., Moniruzzaman, M. et al. An individualized system of skeletal data-based CNN classifiers for action recognition in manufacturing assembly. J Intell Manuf 34, 633–649 (2023). https://doi.org/10.1007/s10845-021-01815-x
