Abstract

Road owners are concerned with the state of the road surface and they try to reduce noise coming from the road as much as possible. Using sound level measuring equipment installed inside a car, we can indirectly measure the road pavement state. Noise inside a car is made up of rolling noise, engine noise and other confounding factors. Rolling noise is influenced by noise modifiers such as car speed, acceleration, temperature and road humidity. Engine noise is influenced by car speed, acceleration, and gear shifts. Techniques need to be developed which compensate for these modifying factors and filter out the confounding noise. This paper presents a hierarchical clustering method resulting in a mapping of the road pavement quality. We present the method using a dataset recorded in multiple cars under different circumstances. The data has been retrieved by placing a Raspberry Pi device within these cars and recording the sound and location during various trips at different times. The sound data of our dataset was then corrected for correlation with speed and acceleration. Furthermore, clustering techniques were used in order to estimate the type and condition of the pavement using this set of noise measurements. The algorithms were run on a small dataset and compared to a ground truth which was derived from visual observations. The results were best for a combination of Generalised Additive Model (GAM) correction on the data combined with hierarchical clustering. A connectivity matrix merging points close to each other further enhances the results for road pavement quality detection, and results in a road type detection rate around 90%.


1 Introduction

Damaged road surfaces and potholes can damage vehicles and their freight and ultimately lead to damage claims. Damaged roads also lead to more traffic noise and possible noise annoyance (Ouis 2001; Lex Brown 2015). Therefore, the surface condition of all major roads in many regions of the world is periodically screened with statistical procedures, while regional roads are often monitored by visual inspection. Specialised equipment has to be bought and calibrated for these procedures, and people need to be trained to use it. This also makes it difficult to monitor the state of the road continuously and to detect unexpected changes in the road structure caused by unexpected events (Singh et al. 2017). In this article, a new method is proposed in which a car is equipped with a sound intensity sensor and a GPS sensor, and the measurements are analysed using machine learning methods. The method compensates for statistical and deterministic noise level fluctuations using knowledge about the confounding factors in the road data, and uses statistical clustering methods to group the measurements by road pavement type. This is a step towards continuous road monitoring using existing devices such as smartphones.

The remainder of this article consists of six sections. Section 2 lists related work for analysing the road structure. Section 3 gives an overview of the method to analyse the road structure used in this paper. Section 4 shows how the data is analysed to correct for unwanted speed and acceleration influence in the data. Section 5 describes the different techniques used to classify the data into different road types. Section 6 defines the metrics to evaluate the clustering and compare the different techniques. Section 7 concludes the article.

2 Related work

There are a few existing methods for road surface monitoring. The state-of-the-art Statistical Pass-By (SPB) method (ISO 1997) is an ISO standard in which sound is recorded at the side of the road: the median noise level and the speed of passing cars are measured, and the correlation of car speed with the emitted rolling noise is compensated for. The measured value is representative of the noise produced by the average traffic on the road. The Close Proximity (CPX) method (ISO 2017), also an ISO standard, is a complementary approach in which the road surface is evaluated using a driving vehicle and an accurate GPS sensor. Two microphones directed towards the tire monitor the road noise close to the tire-road contact surface. They are placed inside an acoustically isolated housing built into the chassis of a trailer that is driven at a specific reference speed, and the vehicle location is monitored with accurate GPS measurements. The CPX method is easier to execute and more practical than the SPB method, but it only takes into account the rolling noise: because the measurements are done within the acoustically isolated housing, the absorption of propulsion noise by the road surface is not taken into account. The CPX method can be used for applications such as:

Determining the compliance of existing road types with a road specification;

Checking the acoustic effect of road maintenance and cleaning or ageing and damaging of the road;

Checking the homogeneity of a road section, both along the length of the road but also on separate driving lanes;

The development and research of new or existing road types.

Both methods described above can only be applied at intervals, and they require specific equipment and specially trained workers. Moreover, measurements can only be done under certain conditions, which makes it difficult to monitor the process of road surface deterioration.

Road classification using less specialised equipment and post-processing with neural networks has been researched before, for example with supervised classification using artificial neural networks (Masino et al. 2017). Methods based on the CPX method have been used for road surface measurements, compensating for speed variation with a speed correction formula (Paje et al. 2007, 2009). A technique based on acoustic characterisation with a spectrogram and Principal Component Analysis (PCA) was proposed for characterising pavement texture (Zhang et al. 2014). Acoustic classification of the road surface condition depending on the weather, using tire-road noise from a vehicle, has also been tested (Kongrattanaprasert et al. 2009). Eriksson et al. (2008) describe a method called Pothole Patrol, which also exploits the inherent mobility of vehicles, in this case taxis operating in the same area.

3 Method overview

Car noise is measured with a microphone placed in the trunk of the car and pointed toward the wheel base. The microphone records the rolling noise of the car while it is moving. Additionally, a GPS sensor records the speed and location of the car. A Raspberry Pi device stores and synchronises both signals. The data is then transferred to a database and processed. The acquired data consists of the one-third octave band levels in dB, with centre frequencies ranging from 20 Hz up to and including 20 kHz (31 bands in total), the accelerometer data accumulated over 1 second, and the GPS data. The trajectory evaluated in this paper is shown and described in Fig. 9. The different road types were also recorded manually by driving over the road and noting where the road type visibly changes.
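For reference, the 31 band centres follow from the base-10 definition of one-third octave bands, \( f_c = 1000 \cdot 10^{n/10} \) Hz; the nominal values (20, 25, 31.5, ... Hz) are rounded versions of these. This is only a sketch of the band layout, not the recording firmware.

```python
import numpy as np


def third_octave_centres(f_min=20.0, f_max=20_000.0):
    """Exact base-10 one-third octave mid-band frequencies, 1000 * 10**(n/10) Hz,
    for the bands whose nominal centres lie between f_min and f_max."""
    n_lo = int(round(10 * np.log10(f_min / 1000.0)))  # -17 for 20 Hz
    n_hi = int(round(10 * np.log10(f_max / 1000.0)))  # 13 for 20 kHz
    n = np.arange(n_lo, n_hi + 1)
    return 1000.0 * 10.0 ** (n / 10.0)


centres = third_octave_centres()
print(len(centres))  # 31
```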

The model we use for speed and acceleration correction is based on theoretical assumptions and on earlier experiments described in the literature, stated below:

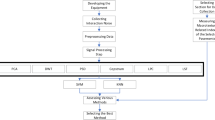

We will execute the following steps:

Compensate for speed and acceleration influences using statistical regression techniques (Ejsmont and Sandberg 2002; Paje et al. 2007).

Assign each measurement to the most likely road segment using a standard map-matching algorithm (Newson et al. 2009).

Use the remaining data for clustering-based classification, clustering road types with similar properties together.

The novelties of this study are the use of a regression filter to remove unwanted speed and acceleration correlations before clustering the sound data, and the use of Ward's hierarchical clustering algorithm with spatial constraints that represent the connectivity of road segments on the map. This way, longer stretches of road with varying quality can still be marked as one road segment of poor quality, which makes it more intuitive for road owners to identify worn-out stretches of road.

4 Speed and acceleration corrections

4.1 Theoretical model

The data is corrected for the influence of the speed and acceleration of the car, using statistical regression based on the theoretical model from the Harmonoise project and also more advanced model-fitting techniques. The Harmonoise sound propagation model predicts long-term average sound levels in road and railway situations. The calculation of outdoor sound propagation based on the Harmonoise model is described in Defrance et al. (2007) and Salomons et al. (2011).

The Imagine project (Peeters and van Blokland 2007) and the CNOSSOS-EU project (Pallas et al. 2016) both model vehicle noise as the combination of a rolling noise contribution \( L_{W,r} \) and a motor or propulsion noise contribution \( L_{W,p} \). This section repeats some of the results from these papers and applies them to a car driving over the road in real-life conditions. The acoustic emission of both components is strongly positively correlated with the speed.

4.1.1 Rolling noise

The rolling noise component \( L_{W,r}(v,f) \) is formulated in relation to the reference speed \( v_{\mathrm{ref}} = 70 \) km/h:

where the coefficients \( a_r \) and \( b_r \) depend on the frequency of the one-third octave band and on the vehicle category. These coefficients hold for a single vehicle measured at the side of the road under certain reference conditions. The CNOSSOS-EU project proposes correction coefficients for conditions that deviate from the reference conditions. Because we measure car trips in all conditions, there will be many deviations from these reference conditions, and our model needs to compensate for those factors that vary in our dataset.
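The rolling noise equation itself is not reproduced in this text. For orientation only: the CNOSSOS-EU road emission model uses a logarithmic speed term for rolling noise, and assuming the paper follows that form, it would read in this section's notation

\[ L_{W,r}(v,f) = a_r(f) + b_r(f)\,\log_{10}\!\left(\frac{v}{v_{\mathrm{ref}}}\right) + \Delta L_{W,r}(f) \]

so that at \( v = v_{\mathrm{ref}} \) the uncorrected rolling level equals \( a_r(f) \), and \( \Delta L_{W,r}(f) \) collects the correction terms.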

The rolling noise correction factors \( \Delta L_{W,r}(f) \) are defined as follows:

where \( \Delta L_{W,r,\mathrm{road}}(v,f) \) is a correction factor for the road surface type, which depends on the vehicle location, and \( \Delta L_{W,r,\mathrm{acc}}(a) \) is the acceleration correction factor. The other factors are not related to the location of the car, and the clustering model should take care of them.

4.1.2 Propulsion noise

The propulsion noise component can be formulated as a linear relation to the reference speed \( v_{\mathrm{ref}} = 70 \) km/h:

with the coefficients \( a_p \) and \( b_p \) depending on the frequency of the one-third octave band and on the vehicle category.
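Assuming the CNOSSOS-EU form here as well, a propulsion component that is linear in the speed relative to \( v_{\mathrm{ref}} \) would read

\[ L_{W,p}(v,f) = a_p(f) + b_p(f)\,\frac{v - v_{\mathrm{ref}}}{v_{\mathrm{ref}}} + \Delta L_{W,p}(v,f) \]

For example, at \( v = 35 \) km/h the speed term equals \( -0.5\, b_p(f) \), whereas the rolling term of the logarithmic form changes by \( b_r(f)\log_{10}(0.5) \approx -0.30\, b_r(f) \).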

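The propulsion noise correction factors \( \Delta L_{W,p}(v,f) \) can be defined as follows:

\[ \Delta L_{W,p}(v,f) = \Delta L_{W,p,\mathrm{acc}}(f) + \Delta L_{W,p,\mathrm{road}}(f) + \Delta L_{W,p,\mathrm{grad}}(v,f) \]

with \( \Delta L_{W,p,\mathrm{acc}}(f) = c_p \cdot a \) the acceleration correction factor, \( \Delta L_{W,p,\mathrm{road}}(f) \) the correction factor for the type of road surface, and \( \Delta L_{W,p,\mathrm{grad}}(v,f) \) the correction factor for road gradients.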

4.1.3 Total noise

We are mainly interested in the frequencies at which the monitored noise consists mostly of rolling noise. We suppose that the total noise level \( L_{\mathrm{tot}}(v,a) \) inside the trunk of the car is given by the following equation (the dependency on f is omitted because the calculation is done for each frequency band separately):
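The total noise equation is not reproduced in this text either. A common assumption, and the way CNOSSOS-EU combines the two components, is an energetic sum of incoherent sources; any transfer path from the sources to the microphone in the trunk is ignored in this sketch:

\[ L_{\mathrm{tot}}(v,a) = 10\,\log_{10}\!\left(10^{L_{W,r}(v,a)/10} + 10^{L_{W,p}(v,a)/10}\right) \]

As a worked example, two equal contributions of 80 dB combine to \( 10\log_{10}(2\cdot 10^{8}) \approx 83.0 \) dB, while a contribution 10 dB below the louder one adds only \( 10\log_{10}(1.1) \approx 0.4 \) dB. This is why a band is useful for road monitoring only where the rolling term clearly exceeds the propulsion term.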

4.1.4 Fitting and conclusions

We can fit our measured noise signals with the above equation and obtain the coefficients for each car. The theoretical values of \( a_r \), \( b_r \), \( a_p \) and \( b_p \) are given in the Harmonoise model, but they vary between driving conditions and between cars. We use the above equations in a least squares (LS) fitting problem that minimises the difference between the measured data and the theoretical function. Because this fitting problem has multiple local minima, we use the parameters from the Harmonoise model as the starting point. Fig. 1 plots the noise emission of both the rolling and the propulsion noise sources in the ideal Harmonoise model with the theoretical coefficients inserted.

The fitting reduces the variance of the dataset and removes much of the speed dependency of the data. The \( R^2 \) score (coefficient of determination) of the resulting model with respect to the initial data is 0.699 for the training set and 0.685 for the test set. Fig. 2 illustrates the LS fit, started from the theoretical parameters, for measurements in which one car drove in multiple conditions. The \( R^2 \) score is defined as

\[ R^2 = 1 - \frac{\sum_i (y_i - f_i)^2}{\sum_i (y_i - \bar{y})^2} \tag{6} \]

where \( y_i \) is the measured value with mean \( \bar{y} \), and \( f_i \) is the value predicted by the Harmonoise model. The closer the \( R^2 \) score is to 1, the better the fit.
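A minimal sketch of the LS fit and the \( R^2 \) score of (6) for a single band. It assumes the CNOSSOS-EU-style forms (logarithmic rolling term, linear propulsion term, energetic sum), which the text does not reproduce; the coefficient values, the synthetic data and the start vector `p0`, standing in for the Harmonoise values, are hypothetical. SciPy's `curve_fit` performs the nonlinear least squares fit.

```python
import numpy as np
from scipy.optimize import curve_fit

V_REF = 70.0  # km/h, reference speed from the text


def total_level(v, a_r, b_r, a_p, b_p):
    """Energetic sum of a log-speed rolling term and a linear-speed propulsion
    term for one band (assumed CNOSSOS-EU-style forms)."""
    l_r = a_r + b_r * np.log10(v / V_REF)
    l_p = a_p + b_p * (v - V_REF) / V_REF
    return 10.0 * np.log10(10.0 ** (l_r / 10.0) + 10.0 ** (l_p / 10.0))


def r_squared(y, f):
    """Coefficient of determination as in (6)."""
    ss_res = np.sum((y - f) ** 2)
    ss_tot = np.sum((y - np.mean(y)) ** 2)
    return 1.0 - ss_res / ss_tot


# Synthetic measurements for one band (hypothetical coefficients).
rng = np.random.default_rng(0)
speed = rng.uniform(20.0, 120.0, size=600)
true_params = (95.0, 30.0, 90.0, 5.0)
level = total_level(speed, *true_params) + rng.normal(0.0, 0.3, size=speed.size)

# The fit has several local minima, so start from "textbook" values.
p0 = (93.0, 28.0, 88.0, 6.0)  # hypothetical stand-in for Harmonoise coefficients
params, _ = curve_fit(total_level, speed, level, p0=p0)
print(np.round(params, 1))
print(round(r_squared(level, total_level(speed, *params)), 3))
```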

Fig. 2 LS fitting of the Harmonoise parameters to the car measurements for four different one-third octave bands; the centre frequency is indicated by fc. Lr, Lp and Lt indicate the rolling noise, propulsion (engine) noise, and total noise contributions, respectively. According to this model, the band with fc = 1600 Hz contains the most rolling noise.

The resulting adapted Harmonoise model is shown in Fig. 3. As this figure shows, at 50 km/h the rolling noise is most prominent between roughly 500 Hz and 5000 Hz. To assess the state of roads where the speed is 50 km/h or more, our data should exclude the engine noise of the car as far as possible. Using the adapted Harmonoise model and the fitted parameters, we therefore check for each band whether, at speeds of 50 km/h or more, the rolling noise contributes more to the total noise than the engine noise. If it does, the band is included in the data used for clustering in the next step. For our data, the frequencies between approximately 400 Hz and 6300 Hz contain mostly rolling noise, while the other frequencies mostly contain engine noise.
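The band selection rule can be sketched as follows, again assuming the CNOSSOS-EU-style component forms. The per-band coefficients are hypothetical, and the upper speed of 130 km/h is an assumption, since the text only specifies 50 km/h or more. A band is kept when the fitted rolling term exceeds the propulsion term over the whole speed range.

```python
import numpy as np

V_REF = 70.0  # km/h


def rolling_dominated_bands(centres, a_r, b_r, a_p, b_p, v_min=50.0, v_max=130.0):
    """Return the centre frequencies of the bands in which the rolling term
    exceeds the propulsion term at every speed in [v_min, v_max]."""
    v = np.linspace(v_min, v_max, 81)[:, None]  # shape (speeds, 1), broadcast over bands
    l_r = np.asarray(a_r) + np.asarray(b_r) * np.log10(v / V_REF)
    l_p = np.asarray(a_p) + np.asarray(b_p) * (v - V_REF) / V_REF
    keep = np.all(l_r > l_p, axis=0)  # one flag per band
    return np.asarray(centres)[keep]


# Hypothetical fitted coefficients for four bands.
centres = [125, 500, 1600, 8000]
selected = rolling_dominated_bands(
    centres,
    a_r=[80.0, 92.0, 98.0, 85.0], b_r=[30.0] * 4,
    a_p=[95.0, 85.0, 80.0, 90.0], b_p=[10.0] * 4,
)
print(selected.tolist())  # [500, 1600]
```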

4.2 Machine learning models

We compare the speed correction technique above with a machine learning approach: support vector regression (SVR). SVR performs a regression that is linear in a (kernel-induced) feature space and finds a function describing the correlation between the recorded sound levels and the car speed.

For SVR, the following error function is minimised:

\[ C \sum_{n=1}^{N} E_{\epsilon}\big(y(\mathbf{x}_n) - t_n\big) + \frac{1}{2}\lVert \mathbf{w} \rVert^2, \qquad y(\mathbf{x}) = \mathbf{w}^{\mathsf{T}} \phi(\mathbf{x}) + b \]

where \( t_n \) is the target value, \( C \) is the regularisation coefficient, \( E_{\epsilon} \) is the \( \epsilon \)-insensitive error function, whose parameter \( \epsilon \) sets the width of the error-insensitive region, \( \phi(\mathbf{x}) \) is a fixed feature-space transformation with an explicit bias parameter \( b \), and \( \mathbf{w} \) is the weight vector (Bishop 2006). We use the squared-exponential kernel from the scikit-learn package (Pedregosa et al. 2011) as the feature transformation to fit a function to the noise data with SVR. For each frequency band, we fit a separate model in which \( \mathbf{x} \) is the vector of speed and acceleration and the output is the predicted noise level in that band. The fitted function is then used to compensate for noise fluctuations caused by differences in speed and acceleration.
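A minimal per-band sketch of the SVR correction with scikit-learn, whose `kernel="rbf"` option is the squared-exponential kernel. Standardising the inputs and the values of `C` and `epsilon` are assumptions, not settings reported in the text; the data are synthetic.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR


def svr_correct(speed, acc, level, C=10.0, epsilon=0.5):
    """Fit level ~ f(speed, acceleration) for one band with an RBF-kernel SVR
    and return the residuals, i.e. the band level with that trend removed."""
    X = np.column_stack([speed, acc])
    model = make_pipeline(StandardScaler(), SVR(kernel="rbf", C=C, epsilon=epsilon))
    model.fit(X, level)
    return level - model.predict(X)


# Synthetic example (hypothetical numbers): the level grows with log(speed).
rng = np.random.default_rng(1)
speed = rng.uniform(20.0, 120.0, size=500)
acc = rng.normal(0.0, 1.0, size=500)
level = 60.0 + 20.0 * np.log10(speed) + 0.8 * acc + rng.normal(0.0, 1.0, size=500)

corrected = svr_correct(speed, acc, level)
print(np.var(level), np.var(corrected))
```

Because the fitted trend is subtracted, the corrected levels are centred around 0 dB, so only deviations from the expected level at a given speed and acceleration remain for clustering.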

We compare the SVR method with another machine learning method: Generalised Additive Model (GAM) fitting. A GAM relates a univariate response variable, here the noise level in one band, to the predictor variables by linking its expected value to a sum of separate smooth functions, one per feature. The general form of a GAM is given in (7) (Hastie et al. 2001):

\[ g\big(\mathbb{E}[Y]\big) = \beta_0 + \sum_{j=1}^{p} f_j(x_j), \qquad f_j(x_j) = \sum_{k} \beta_{jk}\, b_{jk}(x_j) \tag{7} \]

where the \( b_{jk} \) are known basis functions, in our case penalised B-splines. The coefficients are determined with the backfitting algorithm. To keep the fitted speed and acceleration functions smooth, we constrain the number of splines to 5. Fig. 4 compares the result of the GAM fitting with the SVR fitting.
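The text does not name the GAM implementation it used. The sketch below is a library-independent stand-in: backfitting with penalised cubic B-spline (P-spline) smoothers and five basis functions per feature, on synthetic data. The single interior knot, the second-order difference penalty and its weight `lam` are assumptions. The corrected level is the measurement minus the fitted speed and acceleration terms.

```python
import numpy as np
from scipy.interpolate import BSpline


def bspline_basis(x, n_basis=5, degree=3):
    """Clamped B-spline design matrix with n_basis functions on [min(x), max(x)]."""
    lo, hi = float(np.min(x)), float(np.max(x))
    n_inner = n_basis - degree - 1
    inner = np.linspace(lo, hi, n_inner + 2)[1:-1]
    knots = np.concatenate([[lo] * (degree + 1), inner, [hi] * (degree + 1)])
    # Identity coefficients evaluate all basis functions at once: shape (len(x), n_basis).
    return BSpline(knots, np.eye(n_basis), degree)(x)


def fit_additive(X, y, n_basis=5, lam=1.0, n_iter=50):
    """Backfitting for y = alpha + sum_j f_j(x_j), each f_j a P-spline
    (B-spline basis with a second-order difference penalty of weight lam)."""
    n, p = X.shape
    bases = [bspline_basis(X[:, j], n_basis) for j in range(p)]
    D = np.diff(np.eye(n_basis), n=2, axis=0)  # second-difference operator
    penalty = lam * D.T @ D
    alpha = float(np.mean(y))
    f = np.zeros((n, p))
    for _ in range(n_iter):
        for j, B in enumerate(bases):
            # Partial residual: what is left for f_j given the other functions.
            partial = y - alpha - f.sum(axis=1) + f[:, j]
            coef = np.linalg.solve(B.T @ B + penalty, B.T @ partial)
            fitted = B @ coef
            f[:, j] = fitted - fitted.mean()  # centre each f_j for identifiability
    return alpha, f


rng = np.random.default_rng(2)
speed = rng.uniform(20.0, 120.0, size=500)
acc = rng.normal(0.0, 1.0, size=500)
level = 60.0 + 20.0 * np.log10(speed) + 0.8 * acc + rng.normal(0.0, 1.0, size=500)

X = np.column_stack([speed, acc])
alpha, f = fit_additive(X, level)
corrected = level - alpha - f.sum(axis=1)  # speed and acceleration trend removed
print(np.var(level), np.var(corrected))
```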

To evaluate the speed correction, we use (8), which compares the within-segment variance with the total variance of the dataset, where s indicates a segment. A segment is defined as a stretch of road surface with a length of 20 m or less. For the circuit shown in Fig. 9, we compare these values for the dataset without correction, with GAM correction, and with SVR correction (Table 1).
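Equation (8) itself is not reproduced in this text. One plausible reading, used in the sketch below as an assumption, is the pooled within-segment sum of squares divided by the total sum of squares: the lower the ratio, the more of the remaining variation lies between segments (and thus presumably between road surfaces) rather than within them. The helper that cuts a route into 20 m segments by distance along the route is hypothetical.

```python
import numpy as np


def within_segment_variance_ratio(levels, segment_ids):
    """Pooled within-segment sum of squares divided by the total sum of squares.
    0 means all spread is between segments; 1 means none of it is."""
    levels = np.asarray(levels, dtype=float)
    segment_ids = np.asarray(segment_ids)
    ss_total = np.sum((levels - levels.mean()) ** 2)
    ss_within = 0.0
    for s in np.unique(segment_ids):
        seg = levels[segment_ids == s]
        ss_within += np.sum((seg - seg.mean()) ** 2)
    return ss_within / ss_total


def segment_ids_from_distance(dist_along_route_m, length_m=20.0):
    """Hypothetical helper: consecutive 20 m segments along the route."""
    return np.floor(np.asarray(dist_along_route_m) / length_m).astype(int)


print(within_segment_variance_ratio([0.0, 2.0, 0.0, 2.0], [0, 0, 1, 1]))  # 1.0
```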

5 Classification and clustering

We classify the data recorded with our own recording device using clustering techniques: K-means clustering and hierarchical clustering. Because we do not know how many road surfaces the road consists of and no database is available, a secondary goal of the clustering is to determine how many natural clusters the data contains, i.e. the number of road types with a discernibly different noise spectrogram. We can also use the fact that road surfaces are spatially coherent: the road surface does not change continually, but generally stays the same for locations close together on the map.

We compare the effectiveness of three clustering methods: K-means clustering, hierarchical clustering using Ward's variance minimisation algorithm, and hierarchical clustering constrained by a connectivity matrix. The features used from this dataset are the one-third octave frequency bands. The data is normalised before applying these algorithms so that no feature dominates merely because of its scale: for each feature, the mean value is subtracted and the result is divided by the standard deviation.

5.1 K-means clustering

To determine the different road types, we first use K-means clustering for K varying from 2 to 20 and evaluate the resulting clusterings with an elbow plot. The K-means algorithm can be seen as the hard-assignment limit of the EM algorithm (Dempster et al. 1977). It first initialises K cluster centres at random locations and then alternates between an assignment step and an update step. In the assignment step, each observation is assigned to the closest cluster centre in terms of Euclidean distance. In the update step, each cluster centre is recomputed as the centroid of all observations assigned to it. After a number of iterations, the algorithm converges and the cluster centres no longer change.

The elbow plot is generated by calculating the Mean Squared Error (MSE) between the data points and their respective cluster centres. As the number of clusters increases, this error decreases: the data points are closer to their cluster centre and the within-cluster variance decreases. When plotting the sum of squares against the number of clusters, we can often discern a point after which the MSE decreases more slowly. This point is the 'elbow' of the plot, the point of maximum curvature.

The result of this adapted elbow detection method is shown in Fig. 5, where K = 5 is chosen as the number of clusters, as indicated by the elbow detection.
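A sketch of normalisation plus K-means elbow detection with scikit-learn. `inertia_` is the within-cluster sum of squares, i.e. the MSE times the number of points, so it has the same elbow. The "maximum curvature" point is located with a simple distance-to-chord heuristic, which is an assumption rather than the authors' adapted method; the four-group 2-D data are a synthetic stand-in for the normalised band levels.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.preprocessing import StandardScaler


def elbow_k(X, k_values):
    """Choose K where the normalised inertia curve lies farthest below the
    straight line joining its end points (a simple maximum-curvature heuristic)."""
    k_values = np.asarray(list(k_values))
    inertia = np.array([
        KMeans(n_clusters=k, n_init=10, random_state=0).fit(X).inertia_
        for k in k_values
    ])
    x = (k_values - k_values[0]) / (k_values[-1] - k_values[0])
    y = (inertia - inertia[-1]) / (inertia[0] - inertia[-1])
    # The chord runs from (0, 1) to (1, 0); 1 - x - y is proportional to the distance below it.
    return int(k_values[np.argmax(1.0 - x - y)]), inertia


X_raw, _ = make_blobs(n_samples=400, centers=[[0, 0], [10, 0], [0, 10], [10, 10]],
                      cluster_std=0.5, random_state=0)
X = StandardScaler().fit_transform(X_raw)  # subtract the mean, divide by the std
k, inertia = elbow_k(X, range(2, 11))
print(k)
```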

Once the number of clusters is chosen, we visualise the mean and variance of each noise band per cluster. The clusters indicate which road surfaces have similar sound properties according to the measurements. The noise spectrograms have been compensated for speed and centred around 0 using GAM correction, as can be seen in Fig. 6.

Because of its random initialisation, the K-means method is susceptible to random variations and does not always produce the same clustering. Moreover, the result of K-means clustering is difficult to evaluate apart from visual inspection and the residual MSE per segment. In the next step, we therefore use an algorithm that does not rely on random initialisation.

5.2 Hierarchical clustering

Hierarchical clustering is such an algorithm: it does not rely on random initialisation. We cluster according to Ward's minimum variance algorithm (Ward 1963), in which the pair of clusters to merge is chosen based on the within-cluster variance after merging. Initially, every cluster consists of a single point. The following distance criterion is used as the merging cost between clusters A and B:

\[ \Delta(A,B) = \frac{n_A\, n_B}{n_A + n_B}\, \lVert \mathbf{m}_A - \mathbf{m}_B \rVert^2 \]

where \( \mathbf{m}_A \) and \( \mathbf{m}_B \) are the cluster centres and \( n_A \) and \( n_B \) the numbers of points in the two clusters. This results in clusters with a small intra-cluster distance. With Ward's method, we can use an inverse elbow method to determine the number of clusters: each time two clusters are merged, the mean square error between the points and their cluster centre is recomputed. This cost increases as the number of clusters decreases, and a big jump in merging cost shows up as an elbow in the inverse elbow plot. We pick K = 7, as indicated by the elbow detection method. The inverse elbow plot, clustering dendrogram, and spectrograms per cluster are shown in Fig. 7.
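A sketch of Ward clustering with an automated inverse elbow using SciPy. `linkage(..., method="ward")` records one merge per row, with merge heights that increase with the Ward merging cost; taking K just before the largest jump in those heights is one simple way to automate the inverse elbow (an assumption, since the text picks K from the plot). The three-group data are synthetic.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage


def ward_inverse_elbow(X):
    """Ward clustering; K is taken just before the largest jump in merge height."""
    Z = linkage(X, method="ward")  # one row per merge, heights in Z[:, 2]
    jumps = np.diff(Z[:, 2])
    j = int(np.argmax(jumps))      # biggest jump: between merge j and merge j + 1
    k = X.shape[0] - (j + 1)       # clusters remaining after merge j
    labels = fcluster(Z, t=k, criterion="maxclust")
    return k, labels


rng = np.random.default_rng(0)
group_centres = np.array([[0.0, 0.0], [10.0, 0.0], [5.0, 9.0]])
X = np.vstack([c + rng.normal(0.0, 0.5, size=(30, 2)) for c in group_centres])
k, labels = ward_inverse_elbow(X)
print(k)
```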

5.3 Hierarchical clustering with a connectivity matrix

A third version of the clustering algorithm is proposed, which uses the indirect information that 20 m road sections close to each other are usually of the same road type. We include this information through a connectivity matrix: road segments can only be merged by the hierarchical clustering technique if they are connected to each other. The clustering is thus constrained to spatially connected clusters, which gives a more coherent result on the map. The inverse elbow plot, clustering dendrogram with connectivity, and spectrograms per cluster for the clustering method with connectivity matrix are shown in Fig. 8.
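A sketch of connectivity-constrained Ward clustering with scikit-learn's `AgglomerativeClustering`, which accepts a sparse `connectivity` matrix. The chain connectivity, in which each 20 m segment is linked only to its predecessor and successor along one route, is a simplifying assumption; on a real road network the matrix would come from the map topology. The synthetic example has two stretches of the same surface separated by a different one.

```python
import numpy as np
from scipy.sparse import diags
from sklearn.cluster import AgglomerativeClustering


def chain_connectivity(n_segments):
    """Hypothetical connectivity for segments ordered along a single route:
    segment i is connected only to its neighbours i - 1 and i + 1."""
    return diags([1, 1], [-1, 1], shape=(n_segments, n_segments))


def connected_ward(features, n_clusters):
    model = AgglomerativeClustering(
        n_clusters=n_clusters,
        linkage="ward",
        connectivity=chain_connectivity(len(features)),
    )
    return model.fit_predict(features)


# Three consecutive stretches of 10 segments: surface A, surface B, surface A again.
rng = np.random.default_rng(0)
profile = np.repeat([0.0, 3.0, 0.0], 10)[:, None] * np.ones((1, 4))
features = profile + rng.normal(0.0, 0.1, size=(30, 4))
labels = connected_ward(features, n_clusters=3)
print(labels)
```

With the chain constraint, the two A stretches end up as separate, contiguous clusters because they are never adjacent, whereas unconstrained Ward clustering would be free to group them together regardless of where they lie on the route.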

5.4 Averaging per segment

Using the techniques described above, we will see in the next section that hierarchical clustering yields the road clustering with the highest precision. We extend this method to obtain good results on the larger dataset. Before applying the clustering algorithm, we assign each measurement to the nearest road segment using a map-matching algorithm and average the measurements per segment. This limits the number of measurements per segment and reduces the influence of outlying values caused by events on the road (such as road bumps). Running the hierarchical clustering algorithm again on this speed-corrected dataset gives results such as those shown in Fig. 10. This averaging proves useful for suppressing outlying values caused by environmental circumstances and for shrinking the dataset before clustering.
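A minimal pandas sketch of the per-segment averaging, assuming the map-matching step has already added a segment identifier to each measurement. The column names `segment_id` and `band_1000` and the threshold `min_count`, standing in for "sufficient passages", are hypothetical.

```python
import pandas as pd


def average_per_segment(df, band_cols, min_count=3):
    """Mean (speed-corrected) band levels per map-matched segment, keeping only
    segments with at least `min_count` measurements."""
    grouped = df.groupby("segment_id")
    counts = grouped.size()
    means = grouped[band_cols].mean()
    return means.loc[counts >= min_count]


df = pd.DataFrame({
    "segment_id": [1, 1, 1, 2, 2, 3, 3, 3],
    "band_1000": [-1.0, 1.0, 3.0, 5.0, 5.0, 0.0, 1.0, 2.0],
})
result = average_per_segment(df, ["band_1000"])
print(result)
```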

6 Validation

The techniques can also be applied to longer stretches of road. The proposed hierarchical clustering with a connectivity matrix clearly partitions the road into sections with different road surfaces. For validation, we use a separate road section, driven with cars equipped with the GPS and sound sensors described in Section 3.

The three cars drove around this road section, which is approximately 5 km long, six times in total, and we separately constructed a dataset of this section by photographing the road and analysing the road structure. From this analysis, we concluded that there are at least four different road types in this section, not taking into account that some road surfaces have aged differently depending on their circumstances. Fig. 9 gives an overview of the different road surface types on the test circuit. We cluster the measurements into the same number of clusters using our dataset in an unsupervised way and then calculate the accuracy (Fig. 10).

Fig. 9 An overview of the test track, with different colours indicating different road types. The total length of the circuit is about 5 km. The track was traversed by three cars, each of which traversed it twice in 30 minutes. The numbered road types are: (1) SMA type 1; (2) SMA type 2; (3) HMA; (4) Concrete plates; (5) Worn DAC; (6) Very worn DAC.

Fig. 10 Result of hierarchical clustering with GAM correction and Connectivity-Constrained hierarchical Clustering (CCC) with averaging per segment. The accuracy is indicated in Fig. 11. The numbering has been adapted to match that of Fig. 9. Road types 1 and 2 are estimated to be the same type of road. The other road types are well separated from each other, and the speed and acceleration effect appears to be compensated.

We compare the results of the three clustering methods on our test track. Each method is run on the uncorrected data and on the data corrected for speed dependence with SVR fitting and with GAM fitting. The following steps were executed in order:

Per vehicle, the speed and acceleration dependency was calculated using the SVR and GAM models and compensated for, so that only the road surface noise and other variations in the data remain.

The measurements were map-matched and assigned to the nearest road segment.

For each road segment with sufficient passages, the measurements were averaged.

The measurements were clustered using the three clustering methods described above: K-means clustering, hierarchical clustering without and with connectivity constraints.

We perform 100 runs of each method to account for random fluctuations in the clustering result. To check the robustness of the hierarchical clustering algorithm, each run uses a random subsample containing 90% of our data. Finally, before calculating the accuracy, we map each cluster found by the unsupervised method (whose label number is arbitrary) to the ground-truth road type that occurs most often within it.

We compare the Adjusted Rand Index (ARI), the accuracy, and the Balanced Accuracy (BA) for these datasets in Table 2 and Fig. 11. The results with GAM correction and with SVR correction are quite similar, and both agree well with the ground truth data. Without speed correction, the accuracy is much lower (about 60%) than with SVR or GAM regression (about 85%). On average, the hierarchical clustering constrained with a connectivity matrix gives slightly better results.
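The evaluation protocol can be sketched with scikit-learn's metrics: each unsupervised cluster is relabelled with its majority ground-truth road type before accuracy and balanced accuracy are computed, while the ARI is computed on the raw labels because it does not depend on label numbering. The toy labels are hypothetical; the 100 runs on 90% subsamples would repeat this evaluation once per run.

```python
import numpy as np
from sklearn.metrics import accuracy_score, adjusted_rand_score, balanced_accuracy_score


def majority_map(pred, truth):
    """Relabel each unsupervised cluster with the ground-truth road type that
    occurs most often inside it."""
    pred, truth = np.asarray(pred), np.asarray(truth)
    mapped = np.empty_like(truth)
    for c in np.unique(pred):
        members = pred == c
        types, counts = np.unique(truth[members], return_counts=True)
        mapped[members] = types[np.argmax(counts)]
    return mapped


def evaluate(pred, truth):
    mapped = majority_map(pred, truth)
    return {
        "ARI": adjusted_rand_score(truth, pred),  # invariant to label numbering
        "accuracy": accuracy_score(truth, mapped),
        "balanced_accuracy": balanced_accuracy_score(truth, mapped),
    }


truth = [1, 1, 1, 2, 2, 2, 3, 3]  # hypothetical ground-truth road types
pred = [0, 0, 0, 1, 1, 1, 1, 2]   # hypothetical cluster labels
scores = evaluate(pred, truth)
print(scores)
```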

Fig. 11 Comparison of the results using different clustering techniques and corrections. {1, 4, 7} K-means clustering; {2, 5, 8} hierarchical clustering; {3, 6, 9} hierarchical clustering using a connectivity matrix. The black and white dots indicate the maximum and minimum accuracy, respectively, over the 100 runs.

7 Conclusion

We proposed an approach to road surface clustering in which a car monitors the road using a noise sensor and a GPS sensor. The features extracted from the noise recordings are the noise bands and features related to the road roughness. Because the car noise depends on speed and acceleration as well as on the road type, we compensate for these influences using smooth fitting functions such as GAM and SVR regression. After this compensation, we cluster the features using different clustering techniques. The best-performing method in this research is the combination of GAM regression and hierarchical clustering with a connectivity matrix. The connectivity matrix ensures that measurements close together on the map are merged first, which results in a map of road segments with similar noise features and less scattered clustering results. When comparing the unsupervised clustering with the observations made on the road, we can determine the road surface with an accuracy of up to 88%. Possible future work includes extending this technique to a larger road area and using alternative clustering techniques to obtain similar results.

References

Bishop, C.M. (2006). Pattern recognition and machine learning (information science and statistics). Berlin: Springer. ISBN 0387310738.

Defrance, J., Salomons, E., Noordhoek, I., Heimann, D., Plovsing, B., Watts, G., Jonasson, H., Zhang, X., Premat, E., Schmich, I., Aballea, F., Baulac, M., de Roo, F. (2007). Outdoor sound propagation reference model developed in the European Harmonoise project. Acta Acustica United with Acustica, 93 (2), 213–227. ISSN 1610-1928.

Dempster, A.P., Laird, N.M., Rubin, D.B. (1977). Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society. Series B (Methodological), 39(1), 1–38. ISSN 00359246. http://www.jstor.org/stable/2984875.

Ejsmont, J., & Sandberg, U. (2002). Tyre/road noise. Reference book. Hisa: INFORMEX Ejsmont & Sandberg.

Eriksson, J., Girod, L., Hull, B., Newton, R., Madden, S., Balakrishnan, H. (2008). The pothole patrol: Using a mobile sensor network for road surface monitoring. In Proceedings of the 6th international conference on mobile systems, applications, and services, MobiSys ’08. ISBN 978-1-60558-139-2, http://doi.acm.org/10.1145/1378600.1378605 (pp. 29–39). New York: ACM.

Hastie, T., Tibshirani, R., Friedman, J. (2001). The elements of statistical learning. Springer series in statistics. New York: Springer.

Kongrattanaprasert, W., Nomura, H., Kamakura, T., Ueda, K. (2009). Detection of road surface conditions using tire noise from vehicles. IEEJ Transactions on Industry Applications, 129(7), 761–767. https://doi.org/10.1541/ieejias.129.761.

Lex Brown, A. (2015). Effects of road traffic noise on health: From burden of disease to effectiveness of interventions. Procedia Environmental Sciences, 30, 3–9. https://doi.org/10.1016/j.proenv.2015.10.001.

Masino, J., Foitzik, M.-J., Frey, M., Gauterin, F. (2017). Pavement type and wear condition classification from tire cavity acoustic measurements with artificial neural networks. The Journal of the Acoustical Society of America, 141(6), 4220–4229. https://doi.org/10.1121/1.4983757.

Newson, P., Krumm, J., Microsoft Research. (2009). Hidden markov map matching through noise and sparseness. In 17th ACM SIGSPATIAL international conference on advances in geographic information systems. https://www.microsoft.com/en-us/research/publication/hidden-markov-map-matching-noise-sparseness/ (pp. 336–343).

ISO. (1997). Acoustics - Measurement of the influence of road surfaces on traffic noise - Part 1: Statistical pass-by method. Standard, International Organization for Standardization.

ISO. (2017). Acoustics - Measurement of the influence of road surfaces on traffic noise - Part 2: The close-proximity method. Standard, International Organization for Standardization.

Ouis, D. (2001). Annoyance from road traffic noise: a review. Journal of Environmental Psychology, 21(1), 101–120. https://doi.org/10.1006/jevp.2000.0187. ISSN 02724944.

Paje, S.E., Bueno, M., Terán, F., Viñuela, U. (2007). Monitoring road surfaces by close proximity noise of the tire/road interaction. The Journal of the Acoustical Society of America, 122(5), 2636. https://doi.org/10.1121/1.2766777.

Paje, S.E., Bueno, M., Viñuela, U., Terán, F. (2009). Toward the acoustical characterization of asphalt pavements: Analysis of the tire/road sound from a porous surface. The Journal of the Acoustical Society of America, 125(1), 5–7. https://doi.org/10.1121/1.3025911.

Pallas, M.-A., Bérengier, M., Chatagnon, R., Czuka, M., Conter, M., Muirhead, M. (2016). Towards a model for electric vehicle noise emission in the european prediction method CNOSSOS-EU. Applied Acoustics, 113, 89–101. https://doi.org/10.1016/j.apacoust.2016.06.012.

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., et al. (2011). Scikit-learn: Machine learning in python. Journal of Machine Learning Research, 12 (Oct), 2825–2830.

Peeters, B., & van Blokland, G.J. (2007). The noise emission model for European road traffic. IMAGINE deliverable, 11(11).

Salomons, E., van Maercke, D., Defrance, J., de Roo, F. (2011). The harmonoise sound propagation model. Acta Acustica united with Acustica, 97(1), 62–74. https://doi.org/10.3813/aaa.918387.

Singh, G., Bansal, D., Sofat, S., Aggarwal, N. (2017). Smart patrolling: an efficient road surface monitoring using smartphone sensors and crowdsourcing. Pervasive and Mobile Computing, 40, 71–88. https://doi.org/10.1016/j.pmcj.2017.06.002.

Ward, J.H. (1963). Hierarchical grouping to optimize an objective function. Journal of the American Statistical Association, 58(301), 236–244. ISSN 01621459. http://www.jstor.org/stable/2282967.

Zhang, Y., Mcdaniel, J., Wang, M.L. (2014). Pavement macrotexture estimation using principal component analysis of tire/road noise. Proceedings of SPIE - The International Society for Optical Engineering, 9063. https://doi.org/10.1117/12.2045584.

Acknowledgements

This work was executed within the MobiSense research project. MobiSense is co-financed by IMEC and received support from Flanders Innovation & Entrepreneurship. Special thanks are given to the company ASAsense for providing measured road noise data.


Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

David, J., De Pessemier, T., Dekoninck, L. et al. Detection of road pavement quality using statistical clustering methods. J Intell Inf Syst 54, 483–499 (2020). https://doi.org/10.1007/s10844-019-00570-z


DOI: https://doi.org/10.1007/s10844-019-00570-z