Abstract

This study used eye-tracking to examine visual attention to faces and objects in adolescents with autism spectrum disorder (ASD) and typical peers. Point of gaze was recorded during passive viewing of images of human faces, inverted human faces, monkey faces, three-dimensional curvilinear objects, and two-dimensional geometric patterns. Individuals with ASD obtained lower scores on measures of face recognition and social-emotional functioning but exhibited similar patterns of visual attention. In individuals with ASD, face recognition performance was associated with social adaptive function. Results highlight heterogeneity in manifestation of social deficits in ASD and suggest that naturalistic assessments are important for quantifying atypicalities in visual attention.

Introduction

Autism spectrum disorders (ASDs) represent a continuum of neurodevelopmental disorders characterized by impairments in social interaction and communication and by restricted or repetitive interests and behaviors (American Psychiatric Association 2000). Social dysfunction is the hallmark of ASDs and their unifying diagnostic feature, with face perception representing a well-studied facet of this domain. Atypicalities in face perception have been noted early in the development of children with ASD, as children subsequently diagnosed with the disorder display reduced attention to faces during the first year of life in retrospective studies of home videos (Maestro et al. 2001; Osterling and Dawson 1994). Many studies of both children and adults indicate that individuals with ASD show selective difficulties with face recognition and discrimination (Boucher and Lewis 1992; Boucher et al. 1998; McPartland et al. 2004; Tantam et al. 1989), though it has been argued that these difficulties may be neither universal nor limited to faces (Behrmann et al. 2006; Jemel et al. 2006). Reduced face inversion (Langdell 1978) and face decomposition effects (Hobson et al. 1988; Tantam et al. 1989) have been observed in this population and have been interpreted as evidence of application of featural processing strategies (Joseph and Tanaka 2003) instead of the holistic approaches that characterize typical face perception (Elgar and Campbell 2001; Freire et al. 2000).

Much has been learned about visual attention to human faces in ASD through eye-tracking studies. Despite normal psychometrics in terms of basic eye movements (Goldberg et al. 2002; Minshew et al. 1999), individuals with ASD do not consistently demonstrate the pattern of preferential attention to the eyes and core facial features evident in typical development (Haith et al. 1977; Walker-Smith et al. 1977). Most studies examining patterns of gaze to faces in ASD reveal reduced attention to the central features of the face, especially the eyes (Corden et al. 2008; Falck-Ytter 2008; Hernandez et al. 2009; Jones et al. 2008; Klin and Jones 2008; Klin et al. 2002a, b; Pelphrey et al. 2002; Trepagnier et al. 2002). Several studies have, however, only partially replicated this pattern of results (Sterling et al. 2008) or have revealed looking patterns comparable to those of typical individuals (Anderson et al. 2006; Dapretto et al. 2006; van der Geest et al. 2002). This variability in results may reflect differences in task-related factors, such as deciphering emotional expressions versus viewing faces without explicit directions (Sterling et al. 2008), or differences in the visual stimuli employed, with dynamic facial stimuli being more likely to elicit atypical viewing patterns than static stimuli (Speer et al. 2007).

Despite consideration of task demands and the role of motion in visual attention to faces, the role of the visual properties of face stimuli as a potential influence on patterns of gaze in ASD remains unexplored. Most eye-tracking studies of static face perception have examined visual attention only to faces. This leaves open the possibilities that (a) individuals with ASD display atypical patterns of visual exploration to any object (e.g., preferentially inspecting the lower half) or (b) atypical looking patterns are elicited by the distinct visual properties of faces (rather than social or experiential factors). To our knowledge, only one study has examined visual attention in ASD to static non-face objects (Anderson et al. 2006). Group differences were observed in looking patterns to non-face stimuli (landscapes) that correlated with autistic symptomatology. Though these findings emphasize the importance of investigating visual attention to non-face stimuli, the study design limits inferential power and generalizability. First, because individual exemplars of a variety of heterogeneous control stimuli (e.g., non-human faces consisting of a dog, cat, lion, and fox face and toy stimuli consisting of a shape-sorter, ball, boat, and puzzle) were presented, the influence of the particular visual characteristics of a homogeneous stimulus class on viewing patterns could not be explored. Second, because this study featured an extremely wide developmental span among children with ASD, encompassing a range of 1–6 years of age, developmental effects cannot be extricated from the results.

The role of the visual form of the human face in perception is relevant for understanding visual attention. Neuroimaging studies suggest that the visual properties of faces, including shape, may influence their neural processing (Ishai et al. 1999). Individuals with face recognition problems also have difficulty perceiving stimuli that resemble faces in terms of being three-dimensional and curvilinear (Laeng and Caviness 2001). Behavioral studies of face and object recognition suggest that individuals with ASD display difficulties distinguishing among homogeneous sets of non-face objects (Scherf et al. 2008). The extant literature employing eye-tracking to study static faces cannot extricate “faceness”, per se, from these potential confounds.

The current study sought to systematically examine the influence of the visual properties of static stimuli on visual attention. To do so, four sets of homogeneous control stimuli varying in perceptual similarity to human faces were employed. First, human faces were compared to monkey faces. Both stimuli share a common visual configuration but differ in terms of social relevance and developmental exposure. It was predicted that the ASD group would differ from the typical group in terms of reduced attention to eyes and increased attention to mouths for the human faces only. Despite structurally similar visual properties, it was hypothesized that both groups, lacking experience with monkey faces, would scan them similarly. A second comparison contrasted looking patterns between upright human faces and inverted human faces. Given evidence that inversion of faces disrupts typical processing strategies and elicits object-like processing in typical individuals, it was predicted that individuals with ASD would exhibit anomalies relative to typical individuals for upright but not inverted faces. A third comparison examined visual attention to upright human faces versus three-dimensional, curvilinear objects (Greebles; Gauthier and Tarr 1997) and two-dimensional geometric patterns. Both Greebles and patterns were novel stimuli for both groups, but only Greebles, unlike angular geometric patterns, feature three-dimensional curvature. It was hypothesized that Greebles and patterns would elicit scan patterns in individuals with ASD similar to those observed in typical individuals. This study also examined interrelationships among patterns of visual attention to faces and social behavior, including face recognition ability. Consistent with the notion that face processing deficits are a manifestation of social disability, it was predicted that individual differences in visual attention to faces and in the ability to recognize faces would correlate with measures of social ability. In contrast, if social deficits are a manifestation of differences in basic sensory perception (Mottron et al. 2006), differences in viewing patterns to a broader array of non-face visual stimuli might relate to measures of social function.

Methods

Participants

Participants were recruited from the University of Washington Autism Center, local parent advocacy groups, public schools, clinics, hospitals, internet newsgroups, and flyers posted at the University of Washington. Exclusionary criteria included known neurological disorder or disease, genetic syndrome, medication affecting the oculomotor system, significant ocular pathology, and history of seizures, serious head injury, or neurological disease. Additional exclusionary criteria for typical participants included birth or developmental abnormalities, family history of autism spectrum disorder, and learning or language disability. All participants had a performance IQ of 80 or higher, as assessed using an abbreviated form of the Wechsler Intelligence Scale for Children—Third Edition (Block Design and Object Assembly subtests; WISC-III; Wechsler 1997). All participants in the ASD group had pre-existing diagnoses, which were confirmed with the Autism Diagnostic Interview—Revised (ADI-R; Lord et al. 1994), the Autism Diagnostic Observation Schedule—Generic (ADOS-G; Lord et al. 2000), and clinical diagnosis (JM) according to the Diagnostic and Statistical Manual of Mental Disorders: Fourth Edition (DSM-IV-TR; American Psychiatric Association 2000). Of 17 participants meeting inclusion criteria, adequate eyetracking data were collected from 15 individuals with ASD (1 subject’s eyeglasses prevented data collection, and 1 subject’s data were not collected due to equipment failure). Of 18 typically developing participants, 17 provided adequate eyetracking data (1 subject’s data were not collected due to equipment failure). Table 1 presents sample demographic and descriptive information, including sex, race, handedness, chronological age, and performance IQ for both groups. Groups did not significantly differ on any of these factors.

Behavioral Assessments

Face/Object memory

Non-face object recognition was assessed with the Cambridge Neuropsychological Test Automated Battery Pattern Recognition Subtest (Fray et al. 1996; Sahakian and Owen 1992). Twelve colored patterns were presented on a computer screen for 3 s each; after a 5 s pause following the 12th pattern, the participant was presented with 12 consecutive pairs of patterns, each consisting of one of the patterns he or she had just viewed and one unfamiliar pattern. For each pair, the subject selected (by touching the pressure-sensitive computer screen) the pattern that he or she had already seen. This procedure was repeated with a second set of patterns for a total of 24 trials. An analogous task, the Computerized Face Recognition Task, was designed using identical procedures and gray-scale faces digitally masked to conceal hair and background. Dependent variables were number of correct responses and average latency to response.

The Children’s Memory Scale Faces Subtest (CMS; Cohen 1997) was administered as an additional measure of face recognition, yielding a scaled score. In this task, 16 faces were presented for 2 s each and then identified among a larger, sequentially presented set of 48 faces. The Child Mind in the Eyes Test—Revised Version (Baron-Cohen et al. 2001) assessed ability to infer gender and emotion from the eye region. The task presented 36 exemplars twice, requiring participants to identify gender on one iteration and to select mental state from four choices on the second iteration. Number of correct responses for emotion and gender conditions were the resultant dependent variables.

Social function

The Vineland Adaptive Behavior Scales—Survey Form (Sparrow et al. 1984) Communication and Socialization domains provided standard scores reflecting adaptive social and communicative function based on parent report. The Social Competence Questionnaire (Sarason et al. 1985) is a 10-item parent-report questionnaire measuring social communication skill and comfort using a four-point scale to rate responses, ranging from 1 (“not at all like my child”) to 4 (“a great deal like my child”). It yields a social disability score, computed by summing across all items. The Social Responsiveness Scale (SRS; Constantino 2003) is a 65-item parent-report instrument assessing social function during the previous 6 months. A proportional score was calculated for each participant by assigning numerical values to the responses, summing across all items, and dividing by the maximum possible total.
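The proportional score described above can be illustrated with a minimal sketch. The function name, the 0 to 3 item coding, and the example responses are assumptions made for illustration only; the text states only that responses were assigned numerical values, summed, and divided by the maximum possible total.

```python
from typing import Sequence


def proportional_score(responses: Sequence[int], max_item_value: int) -> float:
    """Sum item responses and divide by the maximum possible total.

    `max_item_value` is the highest value a single item can take
    (an assumed coding; the source does not report the numeric values used).
    """
    if not responses:
        raise ValueError("at least one item response is required")
    if any(r < 0 or r > max_item_value for r in responses):
        raise ValueError("response outside the assumed item range")
    return sum(responses) / (len(responses) * max_item_value)


# Hypothetical respondent: 65 items, each coded 1 on an assumed 0-3 scale.
example_responses = [1] * 65
print(proportional_score(example_responses, max_item_value=3))
```

Expressing the total as a proportion keeps scores on a common 0 to 1 scale, which is convenient if any items are unanswered and the denominator has to be adjusted.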

Eye-tracking Procedure

Eye movements were recorded with the ISCAN Eye Tracking Laboratory (ETL-500) system, using the Headband Mounted Eye/Line of Sight Scene Imaging System (ISCAN Incorporated, Burlington, MA) with Polhemus FastTrack head tracking system. The Headband Mounted Imaging System consists of a lightweight headband built into a baseball cap that supports a solid-state infrared illuminator, two miniature video cameras, and a dichroic mirror that reflects infrared light to the cameras but is transparent to the subject. The dichroic mirror reflects an infrared image of the subject’s eye to the eye-imaging camera, which outputs directly to the ETL-500, a computer system running the RK-726PCI Pupil/Corneal Reflection Tracking System and the RK-620 PC Autocalibration System. Point of gaze was sampled at 240 Hz with an accuracy of 0.5 visual degrees. Co-registration of point of gaze with head position (measured by the Polhemus head tracker) preserved accuracy with tolerance for head movement.

Eye-tracking sessions were recorded in a sound-attenuated room. Participants were seated in a comfortable chair approximately 70 cm from a 21-inch flat screen monitor at a resolution of 800 by 600 pixels. Participants were familiarized with the testing setting prior to the experiment and were desensitized to the recording apparatus with a mock device comparable in structure and weight. An automated rapid calibration procedure took approximately 10–20 s, first computing a head vector at central fixation and then five on-screen calibration points at center and six visual degrees towards the top, bottom, left, and right of the monitor. Calibration was manually checked before and after presentation of each stimulus category by testing accuracy of gaze to a target subtending one visual degree at the extremities of the stimulus presentation portion of the monitor.

After calibration, participants were shown 10 stimuli from each of five stimulus categories. Each stimulus was displayed for 8 s and was preceded by a 2 s crosshair at the center of the screen. Participants were instructed to focus on the crosshair whenever it was present but to “look at the pictures as [he or she] wish[ed]”. Administration order of individual stimuli was randomized within each category, and the sequence of stimulus category administration was counterbalanced across participants in each group.
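A presentation schedule of this kind could be generated as in the sketch below. The rotation scheme used to counterbalance category order, the category labels, and the function names are assumptions; the text does not specify how counterbalancing was implemented.

```python
import random
from typing import List, Optional, Tuple

# Labels are illustrative stand-ins for the five stimulus categories.
CATEGORIES = ["upright_faces", "monkey_faces", "patterns", "greebles", "inverted_faces"]


def category_order(participant_index: int) -> List[str]:
    """Rotate the category list so each participant starts at a different category
    (a simple rotation; an assumed counterbalancing scheme)."""
    k = participant_index % len(CATEGORIES)
    return CATEGORIES[k:] + CATEGORIES[:k]


def presentation_schedule(
    participant_index: int, n_stimuli: int = 10, seed: Optional[int] = None
) -> List[Tuple[str, int]]:
    """Return (category, stimulus_number) pairs in presentation order.

    Stimuli are shuffled within each category; categories are blocked.
    """
    rng = random.Random(seed)
    schedule: List[Tuple[str, int]] = []
    for category in category_order(participant_index):
        stimuli = list(range(n_stimuli))
        rng.shuffle(stimuli)  # randomize order within the category
        schedule.extend((category, s) for s in stimuli)
    return schedule


schedule = presentation_schedule(participant_index=1, seed=0)
print(len(schedule), schedule[0][0])
```

With 50 trials of 2 s crosshair plus 8 s stimulus, such a schedule corresponds to 500 s of presentation time, excluding calibration checks between categories.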

Stimuli

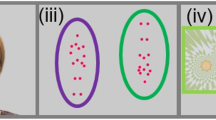

Visual stimuli consisted of digitized grayscale images standardized in terms of background color, size, and visual resolution. Stimuli subtended 15 horizontal visual degrees and 19 vertical visual degrees. The following categories of stimuli were presented: upright human faces, monkey faces, two-dimensional geometric patterns, Greebles, and inverted human faces. All stimuli were bilaterally symmetrical (except for the hair of human faces), and geometric designs were constructed so that, like human faces, monkey faces, and Greebles, most of the visual information was contained in the upper portion of the stimulus field. For each stimulus, ROIs reflecting upper and lower visual areas of stimuli were defined prior to data collection. ROI sizes were equivalent across stimulus categories, and onscreen position was constant across stimuli within a particular category. For upright faces, inverted faces, and monkey faces, upper and lower ROIs corresponded to eyes and mouth, respectively (i.e., for inverted faces the upper ROI corresponded to the upper face though it was displayed on the bottom half of the stimulus monitor). Because no a priori hypotheses existed with respect to regions of interest for geometric designs and Greebles, upper and lower ROIs for upright faces were applied, to allow most direct comparison with upright human faces. Figure 1 depicts on-screen presentation of stimuli with upper and lower ROIs shaded in light and dark, respectively. ROIs were not visible to participants.
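For readers who wish to relate the reported visual angles to physical stimulus size, the standard conversion between visual angle and on-screen extent can be sketched as follows. The viewing distance of 70 cm is taken from the procedure ("approximately 70 cm"); the function names are illustrative, and the resulting sizes are approximations rather than values reported by the study.

```python
import math


def size_from_visual_angle(angle_deg: float, distance_cm: float) -> float:
    """Physical extent (cm) subtending `angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def visual_angle_from_size(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by `size_cm` at `distance_cm`."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


VIEWING_DISTANCE_CM = 70.0  # approximate distance stated in the procedure

width_cm = size_from_visual_angle(15.0, VIEWING_DISTANCE_CM)
height_cm = size_from_visual_angle(19.0, VIEWING_DISTANCE_CM)
print(f"{width_cm:.1f} cm x {height_cm:.1f} cm")
```

Under these assumptions, stimuli subtending 15 by 19 visual degrees would occupy roughly 18.4 cm by 23.4 cm on the monitor.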

Data Processing

The ETL-500 Point of Regard Data Acquisition and Fixation Analysis software package managed the collection and retrieval of eye point of regard parameters. Fixations were predefined as consecutive eye gaze positions focused within an area of one visual degree for a period of 100 ms or greater (Manor and Gordon 2003). Blinks and off-screen gazes were automatically excluded from data analysis by the software program. For each stimulus category, software automatically output a sequential list of all fixations, specifying the duration and location (with respect to predefined ROIs) of each. From this information, proportion of fixations to each ROI and total duration of fixation time to each ROI were computed for each stimulus category.
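The fixation criterion and the two dependent variables can be illustrated with a minimal dispersion-based sketch. This is not the ETL-500 software's algorithm, which is not described in the text. It assumes gaze samples already expressed in visual degrees with blinks and off-screen samples removed, treats "an area of one visual degree" as a bounding box no wider or taller than one degree, assumes rectangular ROIs, and computes proportions relative to all fixations. All names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]               # gaze position in visual degrees
Rect = Tuple[float, float, float, float]  # x_min, y_min, x_max, y_max


@dataclass
class Fixation:
    x: float            # mean horizontal position (degrees)
    y: float            # mean vertical position (degrees)
    duration_ms: float


def _dispersion(window: Sequence[Point]) -> float:
    """Largest side of the bounding box around the samples (assumed criterion)."""
    xs = [p[0] for p in window]
    ys = [p[1] for p in window]
    return max(max(xs) - min(xs), max(ys) - min(ys))


def detect_fixations(samples: Sequence[Point], rate_hz: float = 240.0,
                     max_dispersion_deg: float = 1.0,
                     min_duration_ms: float = 100.0) -> List[Fixation]:
    """Group consecutive samples that stay within the dispersion limit
    for at least the minimum duration."""
    min_samples = math.ceil(min_duration_ms * rate_hz / 1000.0)
    fixations: List[Fixation] = []
    i, n = 0, len(samples)
    while i + min_samples <= n:
        j = i + min_samples
        if _dispersion(samples[i:j]) <= max_dispersion_deg:
            # Grow the window while the next sample keeps dispersion in bounds.
            while j < n and _dispersion(samples[i:j + 1]) <= max_dispersion_deg:
                j += 1
            window = samples[i:j]
            fixations.append(Fixation(
                x=sum(p[0] for p in window) / len(window),
                y=sum(p[1] for p in window) / len(window),
                duration_ms=len(window) * 1000.0 / rate_hz,
            ))
            i = j
        else:
            i += 1
    return fixations


def summarize_by_roi(fixations: Sequence[Fixation],
                     rois: Dict[str, Rect]) -> Dict[str, Dict[str, float]]:
    """Proportion of all fixations and total fixation time falling in each ROI."""
    counts = {name: 0 for name in rois}
    durations = {name: 0.0 for name in rois}
    for f in fixations:
        for name, (x0, y0, x1, y1) in rois.items():
            if x0 <= f.x <= x1 and y0 <= f.y <= y1:
                counts[name] += 1
                durations[name] += f.duration_ms
                break
    total = len(fixations)
    return {name: {"proportion": counts[name] / total if total else 0.0,
                   "duration_ms": durations[name]} for name in rois}
```

At a 240 Hz sampling rate, the 100 ms minimum corresponds to 24 consecutive samples, which is the smallest window the sketch will accept as a fixation.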

Results

Patterns of Visual Attention

Separate univariate repeated measures analyses of variance were conducted for each dependent variable (proportion of fixations, duration of fixations) for the following comparisons: (1) faces versus monkey faces, (2) faces versus inverted faces, and (3) faces versus Greebles versus patterns. For each analytic model, group was entered as a between-subjects factor, with ROI (upper, lower) and stimulus category as within-subjects factors. Tables 2 and 3 display the mean proportion of fixations and duration of fixations, respectively, to each ROI for each stimulus class for both groups.

Faces versus monkey faces

Main effects of ROI for both proportion of fixations, F (1, 30) = 157.53, p < .01, and duration of fixations, F (1, 30) = 131.83, p < .01, indicated that both groups devoted greater attention to the upper versus lower ROI for both human and monkey faces. No other effects and no between-group differences were detected.

Faces versus inverted faces

A main effect of ROI for both proportion of fixations, F (1, 30) = 159.06, p < .01, and duration of fixations, F (1, 30) = 99.20, p < .01, indicated that both groups devoted greater attentional resources to the upper relative to the lower ROI irrespective of orientation. A stimulus category by ROI interaction for both proportion of fixations, F (1, 30) = 12.30, p < .01, and duration of fixations, F (1, 30) = 9.69, p < .01, indicated that, for both groups, the difference between proportion of fixations to eyes and fixations to mouths was reduced during viewing of inverted faces relative to upright faces. Though participants in both groups looked more at the eyes in both stimulus categories, they showed relatively reduced attention to the eyes and increased attention to the mouth for inverted faces.

Faces versus Greebles versus patterns

A main effect of stimulus category for both proportion of fixations, F (2, 60) = 37.26, p < .01, and duration of fixations, F (2, 60) = 28.60, p < .01, indicated that participants in both groups looked more to ROIs when viewing faces than patterns or Greebles. A main effect of ROI for both proportion of fixations, F (1, 30) = 214.30, p < .01, and duration of fixations, F (1, 30) = 121.37, p < .01, showed that for both groups and across stimulus categories greater attention was allocated to the upper ROI relative to the lower ROI. A stimulus category by ROI interaction for both proportion of fixations, F (2, 60) = 35.02, p < .01, and duration of fixations, F (2, 60) = 25.97, p < .01, indicated that, for both groups, participants looked more to the upper ROI when viewing faces relative to patterns or Greebles. For duration of fixations, an ROI by group interaction, F (1, 30) = 7.47, p < .01, indicated that, across stimulus category, typical individuals spent less time looking to the upper ROI than individuals with ASD. Figure 2 depicts this interaction effect by displaying average duration of fixations to each ROI for each stimulus class for both groups.

Face Recognition and Social/Emotional Functioning

Table 4 shows means for each group on measures of face and object perception and social-emotional functioning, as well as p values for between-group t-tests comparing performance on each measure. Individuals with ASD obtained significantly lower scores on the computerized face recognition task and marginally lower scores on the CMS faces subtest. On measures of social function (Vineland social domain, Vineland communication domain, SCS, and SRS), the ASD group’s scores reflected significantly greater impairment.

Visual Attention, Face Recognition, and Social Function

To examine relations among patterns of visual fixation and social functioning in individuals with ASD, Pearson’s product moment correlations were computed among eye-tracking dependent variables for upright face stimuli, measures of social/emotional functioning, and face memory. Analysis revealed that higher scores on the Mind in the Eyes: Gender task were marginally associated with increased proportion of fixations to eyes (r = .51, p < .10); individuals with ASD who devoted greater attentional resources to the eyes were more likely to accurately identify gender by looking at the eyes. Higher Vineland social score was associated with improved performance on the CMS faces task (r = .53, p < .05); individuals with ASD who scored higher on this face recognition measure exhibited higher levels of adaptive social functioning.
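The correlational analysis can be sketched as follows: a Pearson product-moment coefficient and the t statistic used to test it against zero. The function names are illustrative, and the sample size of 15 used in the example is an assumption based on the number of ASD participants with adequate eye-tracking data; the text does not report n for each correlation.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two samples of equal length with at least 3 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))


# Assumed n = 15 for the reported Vineland social / CMS faces correlation.
print(round(t_statistic(0.53, 15), 2))
```

Under the assumed sample size, r = .53 corresponds to a t statistic of about 2.25 on 13 degrees of freedom.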

Discussion

This study examined visual attention to faces and a variety of control stimuli in individuals with ASD. Relations among fixations, face and pattern recognition, and social-emotional functioning were also explored. Visual fixations were measured while participants passively viewed five different homogeneous classes of stimuli including upright human faces, inverted human faces, monkey faces, three-dimensional curvilinear forms (Greebles), and two-dimensional geometric patterns. Typically developing individuals and those with ASD displayed comparable patterns of visual attention across stimulus categories. Both groups tended to devote more attention to the upper regions of visual stimuli and less attention to the lower regions, particularly for upright face stimuli. The only between-group difference to emerge was an interaction effect indicating that, when comparing upright faces, three-dimensional objects, and geometric patterns, individuals with ASD devoted proportionally greater attentional resources to the upper, relative to lower, portions of visual stimuli.

In contrast to our predictions, the sample of children with ASD in this study exhibited normative patterns of visual attention to human faces despite face recognition impairments and significant social deficits. These findings are consistent with other research investigating visual attention to static face stimuli in ASD (Sterling et al. 2008; van der Geest et al. 2002) and may reflect the diminished realism and consequently decreased ecological validity of grayscale, static stimuli (Speer et al. 2007). Dynamic in vivo social interactions may be better able to characterize the variability in eye gaze among individuals with ASD (Klin et al. 2003).

Details of the experimental design may also have influenced results. Extended viewing times of eight seconds may have affected viewing patterns, as previous studies using shorter viewing times (e.g., 2 s; Pelphrey et al. 2002) have detected differences while those employing longer viewing times (e.g., 10 s; van der Geest et al. 2002) have not. The large stimulus display size in the current study may also have impacted scan patterns. There is evidence that, when individuals with ASD are provided with sufficient time and visual resolution to process high spatial frequency information, they display not only more typical patterns of face recognition but also more typical patterns of brain activity associated with face processing (Hadjikhani et al. 2004). The absence of any competing on-screen stimuli may also have influenced results, as several studies documenting anomalous viewing patterns simultaneously displayed alternative sources of visual information (Klin et al. 2002b; Vivanti et al. 2008). Face scanning atypicalities as a consequence of difficulties in attention shifting or disengaging might only emerge under such circumstances.

The variability among results in research studies investigating visual attention to static faces in ASD mirrors that observed in the clinical phenotype. Although social deficits represent a pervasive and unifying feature of ASD, there is much variation in the manifestation of this characteristic. Indeed, considering that diagnosis requires that only 6 of 12 diagnostic criteria be met, children may qualify for identical diagnostic labels despite distinct symptom profiles. The diversity observed in clinical manifestation and evident in the present study has also been noted in face processing research using other methods. For example, findings of electrophysiological anomalies in the processing of faces (Dawson et al. 2002; McPartland et al. 2004; O’Connor et al. 2005, 2007; Webb et al. 2006) have failed to replicate in some samples (Kemner et al. 2006; Webb et al. 2009). Hypoactivation of the fusiform gyrus during face perception (Schultz 2005; Schultz et al. 2000) has also failed to manifest in some samples (Hadjikhani et al. 2004; Hadjikhani et al. 2007; Kleinhans et al. 2008, 2009; Pierce et al. 2004; Pierce and Redcay 2008). The current results do not clarify whether individuals with preserved face perception represent a meaningful subgroup within the autism spectrum or simply variation in one aspect of social behavior. This heterogeneity represents informative variance, offering descriptive information about individual differences at a level not yet permitted by extant diagnostic categories.

Differences in visual attention to faces may also reflect developmental variation. We have suggested elsewhere (Dawson et al. 2005) that atypical scan patterns to human faces may represent a developmental consequence of reduced attention to people in early childhood. It then follows that variability in social motivation would predict variability in scan patterns and that this developmentally-induced heterogeneity would be most evident in adolescents and adults. Thus, it is possible that preserved looking patterns in the current sample may reflect high levels of social motivation during development. Though formal quantification of social motivation was not obtained in the current study, clinical interviews suggested strong social interest despite underdeveloped social skills, corresponding to the “active but odd” classification of ASD described by Wing and Gould (1979). Individuals with these characteristics might obtain higher levels of developmental exposure to other people and consequently increased experience with human faces and thus display more normative looking patterns. It will be important in future research to examine face processing in ASD in light of social motivation. Indeed, in typically developing populations, personality characteristics associated with social motivation, such as extroversion and introversion, modulate neural responses to faces (Cheung et al. 2010, in press). In considering the influence of development on face scanning, evidence for eye-biased scan patterns in neonates suggests that, to some extent, the typical pattern of upper face preference may be present from birth (Farroni 2002; Farroni et al. 2005).

Our results may also reflect diagnostic distinctions within the autism spectrum. The symptom profile of all participants in the current study was consistent with DSM-IV-TR criteria for Asperger Syndrome. The absence of differential looking patterns between clinical and control groups suggests possible preservation of fixation patterns to faces in this diagnostic subgroup (but see Corden et al. 2008). The absence of clinical comparison groups in this study prevents analysis of fixation differences between Asperger Syndrome and other subtypes of ASD; however, the potential utility of fixation patterns as a diagnostic differentiator merits further research.

It is also possible that cognitive or experiential characteristics of the children in the current study contributed to their performance. The sample in the current study was extremely high-functioning, with cognitive abilities a full standard deviation above average. Prior research suggests that face processing anomalies in ASD may be more likely in individuals with lower intelligence (Teunisse and de Gelder 2003). Though, in the current study, recognition ability did not correlate with IQ, the influence of cognitive ability cannot be ruled out. Intervention history may have also impacted performance. Given that eye contact is a common objective in social therapies, it is possible that (a) genuine improvement in eye gaze secondary to intervention was reflected in more normative gaze patterns or (b) acquiescent response bias skewed participants towards more typical viewing patterns under circumstances in which they knew their fixations were being monitored. Parent report and clinical observation suggest that the latter may be more likely, as all participants were impaired in eye gaze in naturalistic contexts.

Despite typical looking patterns and comparable performance to typical counterparts on a measure of pattern recognition, individuals with ASD demonstrated selective impairment on measures of face recognition. Their poor performance on the computerized test of facial recognition is most striking, as this measure was identical in procedure and administration to the pattern recognition subtest, on which they performed comparably to peers. These results suggest a potential disconnect between patterns of visual attention and face recognition skill; face recognition difficulties were evident even among individuals with normative patterns of visual attention. Shortened exposure times in the face computer task and on the face memory test may have also contributed to poorer performance. Performance on the CMS faces task was associated with stronger adaptive social function, as measured by the Vineland social domain. This pattern of results is consistent with the prediction that individuals with stronger face recognition skills should show stronger social ability.

Despite difficulties on measures of identity recognition, children with ASD were comparable to typically developing children on measures of gender and emotion recognition, putatively the more challenging social-cognitive task. As described above, this finding may reflect “teaching to task” in the context of social skills training. This interpretation is also consistent with the observed marginal correlation in the ASD group between gender discrimination and increased attention to eyes. Because gender identification may be less focal in intervention programs for individuals with ASD, it may provide an “uncontaminated” measure of an individual’s ability to extract information from a human face; individuals may have thus been less likely to have had the opportunity or the inclination to develop and practice compensatory strategies for gender identification. When the likelihood of compensatory strategies was eliminated by a novel task, individuals with more typical patterns of attention (i.e., increased attention to eyes) were more proficient at interpreting the information contained in the human face.

One limitation of the current study is that measures of fixation were limited to theoretically-defined regions of interest. Though measures of looking time indicated that these regions captured a significant portion of visual attention in both groups, it is possible that between-group differences in visual attention might have been revealed with exploration of additional regions of interest (e.g., inner versus outer features or stimulus area versus non-stimulus on-screen area). An objective of ongoing work is to use empirically-derived regions of interest to compare viewers with ASD and typical counterparts (Jones and Klin 2010, submitted).

References

American Psychiatric Association. (2000). Diagnostic and statistical manual of mental disorders: DSM-IV-TR (4th ed.). Washington, DC: American Psychiatric Association.

Anderson, C., Colombo, J., & Shaddy, D. (2006). Visual scanning and pupillary responses in young children with autism spectrum disorder. Journal of Clinical and Experimental Neuropsychology, 28, 1238–1256.

Baron-Cohen, S., Wheelwright, S., Scahill, V., Lawson, J., & Spong, A. (2001). Are intuitive physics and intuitive psychology independent? A test with children with Asperger Syndrome. Journal of Developmental and Learning Disorders, 5, 47–78.

Behrmann, M., Thomas, C., & Humphreys, K. (2006). Seeing it differently: Visual processing in autism. Trends in Cognitive Sciences, 10(6), 258–264.

Boucher, J., & Lewis, V. (1992). Unfamiliar face recognition in relatively able autistic children. Journal of Child Psychology and Psychiatry, 33, 843–859.

Boucher, J., Lewis, V., & Collis, G. (1998). Familiar face and voice matching and recognition in children with autism. Journal of Child Psychology and Psychiatry, 39, 171–181.

Cheung, C., Mayes, L., Rutherford, H., & McPartland, J. (2010). Social personality traits predict early brain responses to human faces. Social Neuroscience (in press).

Cohen, M. J. (1997). Children’s memory scale. San Antonio, TX: The Psychological Corporation.

Constantino, J. (2003). Social responsiveness scale. Los Angeles, CA: Western Psychological Services.

Corden, B., Chilvers, R., & Skuse, D. (2008). Avoidance of emotionally arousing stimuli predicts social-perceptual impairment in Asperger’s syndrome. Neuropsychologia, 46(1), 137–147.

Dapretto, M., Davies, M. S., Pfeifer, J. H., Scott, A. A., Sigman, M., Bookheimer, S. Y., et al. (2006). Understanding emotions in others: Mirror neuron dysfunction in children with autism spectrum disorders. Nature Neuroscience, 9(1), 28–30.

Dawson, G., Carver, L., Meltzoff, A. N., Panagiotides, H., McPartland, J., & Webb, S. J. (2002). Neural correlates of face and object recognition in young children with autism spectrum disorder, developmental delay, and typical development. Child Development, 73(3), 700–717.

Dawson, G., Webb, S. J., & McPartland, J. (2005). Understanding the nature of face processing impairment in autism: Insights from behavioral and electrophysiological studies. Developmental Neuropsychology, 27(3), 403–424.

Elgar, K., & Campbell, R. (2001). Annotation: The cognitive neuroscience of face recognition: Implications for developmental disorders. Journal of Child Psychology and Psychiatry and Allied Disciplines, 42(6), 705–717.

Falck-Ytter, T. (2008). Face inversion effects in autism: A combined looking time and pupillometric study. Autism Research, 1(5), 297–306.

Farroni, T., Csibra, G., Simion, F., & Johnson, M. H. (2002). Eye contact detection in humans from birth. Proceedings of the National Academy of Sciences of the United States of America, 99(14), 9602–9605.

Farroni, T., Johnson, M. H., Menon, E., Zulian, L., Faraguna, D., & Csibra, G. (2005). Newborns’ preference for face-relevant stimuli: Effects of contrast polarity. Proceedings of the National Academy of Sciences of the United States of America, 102(47), 17245–17250.

Fray, P. J., Robbins, T. W., & Sahakian, B. J. (1996). Neuropsychiatric applications of CANTAB. International Journal of Geriatric Psychiatry, 11, 329–336.

Freire, A., Lee, K., & Symons, L. A. (2000). The face-inversion effect as a deficit in the encoding of configural information: Direct evidence. Perception, 29(2), 159–170.

Gauthier, I., & Tarr, M. J. (1997). Becoming a “Greeble” expert: Exploring mechanisms for face recognition. Vision Research, 37(12), 1673–1682.

Goldberg, M. C., Lasker, A. G., Zee, D. S., Garth, E., Tien, A., & Landa, R. J. (2002). Deficits in the initiation of eye movements in the absence of a visual target in adolescents with high functioning autism. Neuropsychologia, 40(12), 2039–2049.

Hadjikhani, N., Joseph, R. M., Snyder, J., Chabris, C. F., Clark, J., Steele, S., et al. (2004). Activation of the fusiform gyrus when individuals with autism spectrum disorder view faces. Neuroimage, 22(3), 1141–1150.

Hadjikhani, N., Joseph, R. M., Snyder, J., & Tager-Flusberg, H. (2007). Abnormal activation of the social brain during face perception in autism. Human Brain Mapping, 28(5), 441–449.

Haith, M. M., Bergman, T., & Moore, M. J. (1977). Eye contact and face scanning in early infancy. Science, 198(4319), 853–855.

Hernandez, N., Metzger, A., Magné, R., Bonnet-Brilhault, F., Roux, S., Barthelemy, C., et al. (2009). Exploration of core features of a human face by healthy and autistic adults analyzed by visual scanning. Neuropsychologia, 47(4), 1004–1012.

Hobson, P., Ouston, J., & Lee, A. (1988). What’s in a face? The case of autism. British Journal of Psychology, 79, 441–453.

Ishai, A., Ungerleider, L. G., Martin, A., Schouten, J. L., & Haxby, J. V. (1999). Distributed representation of objects in the human ventral visual pathway. Proceedings of the National Academy of Sciences of the United States of America, 96(16), 9379–9384.

Jemel, B., Mottron, L., & Dawson, M. (2006). Impaired face processing in autism: Fact or artifact? Journal of Autism and Developmental Disorders, 36(1), 91–106.

Jones, W., Carr, K., & Klin, A. (2008). Absence of preferential looking to the eyes of approaching adults predicts level of social disability in 2-year-old toddlers with autism spectrum disorder. Archives of General Psychiatry, 65(8), 946–954.

Jones, W., & Klin, A. (2010). Measuring visual salience with “funnels” of attention (submitted).

Joseph, R., & Tanaka, J. (2003). Holistic and part-based face recognition in children with autism. Journal of Child Psychology and Psychiatry, 44(4), 529–542.

Kemner, C., Schuller, A. M., & van Engeland, H. (2006). Electrocortical reflections of face and gaze processing in children with pervasive developmental disorder. Journal of Child Psychology and Psychiatry, 47(10), 1063–1072.

Kleinhans, N. M., Johnson, L. C., Richards, T., Mahurin, R., Greenson, J., Dawson, G., et al. (2009). Reduced neural habituation in the amygdala and social impairments in autism spectrum disorders. American Journal of Psychiatry, 166(4), 467–475.

Kleinhans, N. M., Richards, T., Sterling, L., Stegbauer, K. C., Mahurin, R., Johnson, L. C., et al. (2008). Abnormal functional connectivity in autism spectrum disorders during face processing. Brain, 131(Pt 4), 1000–1012.

Klin, A., & Jones, W. (2008). Altered face scanning and impaired recognition of biological motion in a 15-month-old infant with autism. Developmental Science, 11(1), 40–46.

Klin, A., Jones, W., Schultz, R. T., & Volkmar, F. (2003). “Defining and Quantifying the Social Phenotype in Autism”: Dr. Klin and colleagues reply. American Journal of Psychiatry, 160(7), 1359–1360.

Klin, A., Jones, W., Schultz, R., Volkmar, F., & Cohen, D. (2002a). Defining and quantifying the social phenotype in autism. American Journal of Psychiatry, 159(6), 895–908.

Klin, A., Jones, W., Schultz, R., Volkmar, F., & Cohen, D. (2002b). Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry, 59(9), 809–816.

Laeng, B., & Caviness, V. S. (2001). Prosopagnosia as a deficit in encoding curved surface. Journal of Cognitive Neuroscience, 13(5), 556–576.

Langdell, T. (1978). Recognition of faces: An approach to the study of autism. Journal of Child Psychology and Psychiatry, 19(3), 255–268.

Lord, C., Risi, S., Lambrecht, L., Cook, E. H., Jr., Leventhal, B. L., DiLavore, P. C., et al. (2000). The autism diagnostic observation schedule-generic: A standard measure of social and communication deficits associated with the spectrum of autism. Journal of Autism and Developmental Disorders, 30, 205–223.

Lord, C., Rutter, M., & Le Couteur, A. (1994). Autism diagnostic interview—revised: A revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. Journal of Autism and Developmental Disorders, 24, 659–685.

Maestro, S., Muratori, F., Barbieri, F., Casella, C., Cattaneo, V., Cavallaro, M., et al. (2001). Early behavioral development in autistic children: The first 2 years of life through home movies. Psychopathology, 34(3), 147–152.

Manor, B. R., & Gordon, E. (2003). Defining the temporal threshold for ocular fixation in free-viewing visuocognitive tasks. Journal of Neuroscience Methods, 128(1–2), 85–93.

McPartland, J., Dawson, G., Webb, S. J., Panagiotides, H., & Carver, L. J. (2004). Event-related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. Journal of Child Psychology and Psychiatry, 45(7), 1235–1245.

Minshew, N. J., Luna, B., & Sweeney, J. A. (1999). Oculomotor evidence for neocortical systems but not cerebellar dysfunction in autism. Neurology, 52(5), 917–922.

Mottron, L., Dawson, M., Soulieres, I., Hubert, B., & Burack, J. (2006). Enhanced perceptual functioning in autism: An update, and eight principles of autistic perception. Journal of Autism and Developmental Disorders, 36(1), 27–43.

O’Connor, K., Hamm, J. P., & Kirk, I. J. (2005). The neurophysiological correlates of face processing in adults and children with Asperger’s syndrome. Brain and Cognition, 59(1), 82–95.

O’Connor, K., Hamm, J. P., & Kirk, I. J. (2007). Neurophysiological responses to face, facial regions and objects in adults with Asperger’s syndrome: An ERP investigation. International Journal of Psychophysiology, 63(3), 283–293.

Osterling, J. A., & Dawson, G. (1994). Early recognition of children with autism: A study of first birthday home videotapes. Journal of Autism and Developmental Disorders, 24(3), 247–257.

Pelphrey, K. A., Sasson, N. J., Reznick, J. S., Paul, G., Goldman, B. D., & Piven, J. (2002). Visual scanning of faces in autism. Journal of Autism and Developmental Disorders, 32(4), 249–261.

Pierce, K., Haist, F., Sedaghat, F., & Courchesne, E. (2004). The brain response to personally familiar faces in autism: Findings of fusiform activity and beyond. Brain, 127(Pt 12), 2703–2716.

Pierce, K., & Redcay, E. (2008). Fusiform function in children with an autism spectrum disorder is a matter of “who”. Biological Psychiatry, 64(7), 552–560.

Sahakian, B. J., & Owen, A. M. (1992). Computerized assessment in neuropsychiatry using CANTAB. Journal of the Royal Society of Medicine, 85, 399–402.

Sarason, B. R., Sarason, I. G., Hacker, T. A., & Basham, R. B. (1985). Concomitants of social support: Social skills, physical attractiveness, and gender. Journal of Personality and Social Psychology, 49(2), 469–480.

Scherf, K. S., Behrmann, M., Minshew, N., & Luna, B. (2008). Atypical development of face and greeble recognition in autism. Journal of Child Psychology and Psychiatry, 49(8), 838–847.

Schultz, R. T. (2005). Developmental deficits in social perception in autism: The role of the amygdala and fusiform face area. International Journal of Developmental Neuroscience, 23(2–3), 125–141.

Schultz, R. T., Gauthier, I., Klin, A., Fulbright, R. K., Anderson, A. W., Volkmar, F., et al. (2000). Abnormal ventral temporal cortical activity during face discrimination among individuals with autism and Asperger syndrome. Archives of General Psychiatry, 57(4), 331–340.

Sparrow, S. S., Balla, D., & Cicchetti, D. V. (1984). Vineland adaptive behavior scales (Survey Form). Circle Pines, MN: American Guidance Service.

Speer, L. L., Cook, A. E., McMahon, W. M., & Clark, E. (2007). Face processing in children with autism: Effects of stimulus contents and type. Autism, 11(3), 265–277.

Sterling, L., Dawson, G., Webb, S., Murias, M., Munson, J., Panagiotides, H., et al. (2008). The role of face familiarity in eye tracking of faces by individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 38(9), 1666–1675.

Tantam, D., Monaghan, L., Nicholson, J., & Stirling, J. (1989). Autistic children’s ability to interpret faces: A research note. Journal of Child Psychology and Psychiatry, 30, 623–630.

Teunisse, J.-P., & de Gelder, B. (2003). Face processing in adolescents with autistic disorder: The inversion and composite effects. Brain and Cognition, 52(3), 285–294.

Trepagnier, C., Sebrechts, M. M., & Peterson, R. (2002). Atypical face gaze in autism. Cyberpsychology & Behavior, 5(3), 213–217.

van der Geest, J. N., Kemner, C., Verbaten, M. N., & van Engeland, H. (2002). Gaze behavior of children with pervasive developmental disorder toward human faces: A fixation time study. Journal of Child Psychology and Psychiatry and Allied Disciplines, 43(5), 669–678.

Vivanti, G., Nadig, A., Ozonoff, S., & Rogers, S. J. (2008). What do children with autism attend to during imitation tasks? Journal of Experimental Child Psychology, 101(3), 186–205.

Walker-Smith, G., Gale, A., & Findlay, J. (1977). Eye movement strategies involved in face perception. Perception, 6, 313–326.

Webb, S. J., Dawson, G., Bernier, R., & Panagiotides, H. (2006). ERP evidence of atypical face processing in young children with autism. Journal of Autism and Developmental Disorders, 36(7), 881–890.

Webb, S. J., Merkle, K., Murias, M., Richards, T., Aylward, E., & Dawson, G. (2009). ERP responses differentiate inverted but not upright face processing in adults with ASD. Social Cognitive and Affective Neuroscience. [Epub ahead of print]. doi:10.1093/scan/nsp002.

Wechsler, D. (1997). Wechsler intelligence scale for children (3rd ed.). San Antonio, TX: The Psychological Corporation.

Wing, L., & Gould, J. (1979). Severe impairments of social interaction and associated abnormalities in children: Epidemiology and classification. Journal of Autism and Developmental Disorders, 9(1), 11–29.

Acknowledgments

This project was supported by funding from NICHD U19 HD35465 to GD, the University of Washington Gatzert Child Welfare Fellowship and the Department of Psychology Bolles Fellowship to JM, and a Young Investigator’s Award from Cure Autism Now to SW; JM’s writing of the manuscript was supported by CTSA Grant Number UL1 RR024139 from the National Center for Research Resources (NCRR), a component of the National Institutes of Health (NIH), and NIH Roadmap for Medical Research. Its contents are solely the responsibility of the authors and do not necessarily represent the official view of NCRR or NIH. The authors gratefully acknowledge the contributions of Tisa Nalty, Josie Quemodo, Heracles Panagiotides, Robert Joseph, Corri Waitt, and the families who participated in the study. This paper is based on the doctoral dissertation of James McPartland. Portions of this work were presented at the International Meeting for Autism Research and the Biennial Meeting of the Society for Research in Child Development in 2005. The authors report no conflicts of interest.

Cite this article

McPartland, J.C., Webb, S.J., Keehn, B. et al. Patterns of Visual Attention to Faces and Objects in Autism Spectrum Disorder. J Autism Dev Disord 41, 148–157 (2011). https://doi.org/10.1007/s10803-010-1033-8

DOI: https://doi.org/10.1007/s10803-010-1033-8