Abstract

It has been shown that individuals with autism spectrum disorders (ASD) demonstrate normal activation in the fusiform gyrus when viewing familiar, but not unfamiliar faces. The current study utilized eye tracking to investigate patterns of attention underlying familiar versus unfamiliar face processing in ASD. Eye movements of 18 typically developing participants and 17 individuals with ASD were recorded while they passively viewed three face categories: unfamiliar non-repeating faces, a repeating highly familiar face, and a repeating previously unfamiliar face. Results suggest that individuals with ASD do not exhibit more normative gaze patterns when viewing familiar faces. A second task assessed facial recognition accuracy and response time for familiar and novel faces. The groups did not differ on accuracy or reaction times.

Introduction

Autism spectrum disorders (ASD) are characterized by impairments in social interaction and communication and a restricted repertoire of activities and interests. Individuals with ASD have specific deficits in the processing of social and emotional information (Davies et al. 1994; Dawson et al. 1998), which may lead to difficulties establishing social relationships and adapting to social norms. Kanner (1943) first noted that individuals with ASD fail to make appropriate eye contact and attend to faces. In young children, failure to attend to faces is a distinguishing feature and an indicator of ASD (Osterling and Dawson 1994). Reduced eye contact is a diagnostic marker of ASD (American Psychiatric Association 1994) and is often one of the first concerns among parents of children with ASD.

Role of Social Motivation in Face Processing Deficits

During typical development, human infants are socially motivated to attend to faces. This attention to faces provides the infants with opportunities to extract information about the face and its relation to external events (e.g., speech) and internal states (e.g., emotion). It has been hypothesized that the impairments in face processing observed in individuals with ASD may reflect reduced social interest in faces (Adrien et al. 1993; Dawson et al. 2005; Grelotti et al. 2002; Klin et al. 1999; Tantam et al. 1993). Deficits in social motivation in ASD may lead to a failure to attend to faces and a failure to develop expertise for faces, resulting in a disruption of the development of the brain systems dedicated to processing faces in a typical pattern (Dawson et al. 2005; Grelotti et al. 2002).

Neural Activation During Face Processing

Evidence from brain imaging studies indicates that the posterior lateral fusiform area activates more to faces and face-like stimuli than to other categories of stimuli (e.g., Downing et al. 2006; Puce et al. 1996). Currently, there are two divergent viewpoints regarding the specific role of the fusiform gyrus in face perception. The first suggests that the fusiform gyrus is specialized for face perception (e.g., Rhodes et al. 2004); for this reason, the posterior lateral fusiform gyrus is often referred to as the Fusiform Face Area (FFA; Kanwisher et al. 1997; Kanwisher and Yovel 2006). Evidence from numerous studies suggests that the FFA responds to faces more than any other class of visual stimulus (Haxby et al. 1994; Puce et al. 1995; Kanwisher et al. 1997; Kanwisher 2000). Lesion studies have confirmed that damage to this area of the brain results in prosopagnosia, or the inability to recognize faces (Damasio et al. 1990; Whiteley and Warrington 1977).

Alternatively, it has been suggested that the fusiform gyrus is specialized for processing, more generally, objects with which an individual has expertise; as such, fusiform gyrus activity reflects the development of face expertise in humans (Marcus and Nelson 2001; Nelson 2001). Gauthier et al. (2000) and others (e.g., Xu 2005) have demonstrated that fusiform activation increases when viewing stimuli with which the individual has expertise (e.g., cars or birds). Visual training can increase fusiform activation in naïve subjects trained to become experts in a new category of objects (e.g., “Greebles”; Gauthier et al. 1999; Gauthier and Tarr 2002). This would imply that the fusiform gyrus specializes for faces due to extensive attention to and experience with faces.

In individuals with ASD, however, the pattern of neural activation is not as consistent. Several studies have reported hypoactivation of the fusiform gyrus in response to faces (e.g., Critchley et al. 2000; Hall et al. 2003; Hubl et al. 2003; Piggot et al. 2004; Wang et al. 2004). Pierce et al. (2001) demonstrated that whereas the fusiform gyrus was activated in every typical participant when viewing faces, there were inconsistent, “unique” patterns of activation in all participants with ASD. In addition to inconsistent patterns of fusiform gyrus activation, the inferior temporal gyrus (the region of the brain that typical individuals recruit when viewing objects) has been implicated in face processing in individuals with ASD (Schultz et al. 2000).

Recently, however, it has been demonstrated that when an individual with an ASD views a highly familiar face (e.g., a picture of his or her mother), the fusiform gyrus is activated (Aylward et al. 2004; Pierce et al. 2004). Similarly, the fusiform gyrus in typical adults is involved in the discrimination between familiar and unfamiliar faces (Rossion et al. 2003). This suggests that individuals with ASD respond differentially to unfamiliar and highly familiar faces, such that their neural activation patterns appear more “normative” when viewing a personally familiar face. Further, this suggests that the lack of fusiform gyrus activation, which has sometimes been reported in individuals with ASD when viewing unfamiliar faces, may be due to their lack of familiarity and social involvement with such novel, unfamiliar faces, rather than an inherent abnormal functioning of this brain region.

Eye Tracking and Familiar Faces

What are the factors leading to more ‘normative’ face processing when individuals with ASD look at a highly familiar face? It is possible that individuals with ASD are more motivated to look at a highly familiar face, allowing them to gain more experience and affective involvement with the face, thus becoming better experts at processing a familiar face. This would suggest expertise for specific exemplars (e.g., mother’s face) instead of a general class or category of stimuli (e.g., faces). As a result, individuals with ASD may actually utilize different strategies when looking at a highly familiar versus unfamiliar face, contributing to differences in brain activation.

Eye tracking methodology provides a direct assessment of where and for how long a person attends when they view a stimulus such as a face. This non-invasive method can facilitate our understanding of underlying cognitive processes involved in face perception (Karatekin 2007) and the more complex challenges related to social functioning in ASD (Boraston and Blakemore 2007). For example, Dalton and colleagues (2005) demonstrated that decreased gaze fixation on the eye region of the face may account for fusiform gyrus hypoactivation and amygdala activation when individuals with ASD view faces. Their results suggest that reduced gaze fixation is directly related to fusiform hypoactivation and heightened emotional response during face perception in ASD. Other fMRI work has revealed that fusiform gyrus activity is modulated by voluntary visual attention to faces (Wojciulik et al. 1998) and that fusiform gyrus activation in particular is influenced by visual attention to faces, whereas the amygdala was activated by fearful expressions regardless of manipulation of attention (Vuilleumier et al. 2001). This suggests patterns of eye gaze influence regional brain response, specifically in the fusiform gyrus.

Prior work suggests that typical individuals attend to the eyes when scanning faces (Haith et al. 1977) as well as the internal features of the face (Janik et al. 1978; Walker-Smith et al. 1977). Furthermore, eye movements become more predictable with increased exposure and familiarity (Althoff and Cohen 1999), suggesting that exposure to a face can influence eye movements, or patterns of attention to faces. This implies that the way in which an individual scans a face varies, depending on his or her experience with that face.

Individuals with ASD, on the other hand, tend to have fewer fixations on the internal features of the face, particularly the eyes (Trepagnier et al. 2002; Pelphrey et al. 2002; Dalton et al. 2005; Klin et al. 2002). Klin et al. (2002) used eye tracking methodology to demonstrate that while viewing dynamic images via a movie depicting naturalistic social scenes, individuals with ASD tend to spend more time looking at the mouth region of the face than the eyes. When looking at static emotional faces, reports suggest that: (1) children with ASD have fixation patterns similar to those of typical children (Van der Geest et al. 2002); (2) adults with ASD rely more on information from the mouth than the eyes (Neumann et al. 2006; Spezio et al. 2007a); (3) adults with ASD show less specificity in fixation to the eyes and mouth, that is, they do not gaze more at the eyes and mouth even when more information is available in the eyes and mouth, respectively (Spezio et al. 2007b); and (4) adults with ASD make more saccades away from information in the eyes (Spezio et al. 2007b). A recent eye tracking study has also demonstrated that diminished eye gaze to their mother’s eyes during a ‘Still Face’ episode (neutral, expressionless face) distinguished a group of infant siblings at risk for ASD, suggesting that atypical patterns of eye gaze may be an early feature for the development of the disorder (Merin et al. 2007). Variability in terms of sample size, type of stimuli used (e.g., static versus dynamic; emotional versus neutral), and measurements used (e.g., amount of time versus number of fixations) makes it difficult to derive a consistent conclusion regarding patterns of attention to facial stimuli in individuals with ASD.

The current study sought to examine the gaze fixation patterns of individuals with ASD while viewing static, neutral familiar and unfamiliar faces. Our primary question was whether facial familiarity influences attention to faces in a manner similar to the pattern of altered neural activation observed in fMRI studies.

Method

Participants

Thirty adults (28 male; 2 female) with an autism spectrum disorder (ASD) and 29 typical adults (27 male; 2 female), ranging in age from 18 to 44 years participated in this study. The ASD group was 93% Non-Hispanic or White; the typical group was 79% Non-Hispanic or White. Diagnosis of an ASD was confirmed using a parent interview, the Autism Diagnostic Interview-Revised (ADI-R; Lord et al. 1994), and the Western Psychological Services version of the Autism Diagnostic Observation Schedule (ADOS-WPS; Lord et al. 2000). Diagnosis of autism, Asperger’s Disorder, or Pervasive Developmental Disorder-Not Otherwise Specified (PDD-NOS) was determined after careful consideration of ADI-R and ADOS-WPS scores, as well as clinical judgment of an expert based on criteria from the Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition (DSM-IV; American Psychiatric Association 1994). Participants with ASD were excluded if they reported having an identifiable genetic disorder (e.g., fragile X syndrome, Norrie syndrome, neurofibromatosis, tuberous sclerosis), significant sensory or motor impairment, seizures, major physical abnormalities, or taking benzodiazepines or anti-convulsant medication. Typical participants were excluded if they reported having a history of developmental disabilities, psychiatric, or neurological disorders. Written informed consent was obtained from all participants.

Thirty-five participants provided artifact-free eye tracking data. The final sample consisted of 18 typically developing participants (17 male; 1 female) and 17 participants with an ASD (16 male; 1 female). The ASD group was composed of individuals with a diagnosis of autism (n = 7), Asperger’s Disorder (n = 7), and PDD-NOS (n = 3). The ASD and typical groups did not differ in age (M age ASD group = 23.50, SD = 7.19; M age typical group = 24.24, SD = 6.86). Full-scale IQ was estimated using the Wechsler Adult Intelligence Scale-Third Edition (WAIS-III; Wechsler 1997a). The groups did not differ on Full Scale IQ scores (ASD group M = 107.12, SD = 13.31, Range = 85–126; typical group M = 109.94, SD = 13.25, Range = 91–139).

Twenty-four participants (13 ASD and 11 typical) were removed from final analyses for the following reasons: (1) For 12 participants, it was not possible to obtain a clear image of the pupil for the purpose of calibrating (e.g., due to droopy eyelids, watery eyes, dark eye color that does not allow for enough contrast between pupil and iris, or excessive blinks); (2) five participants experienced experimenter or equipment error (e.g., software, or difficulty calibrating/tracking due to problems with eye tracking equipment, resulting in unsatisfactory data quality); (3) three of the participants wore glasses or contact lenses, making it difficult to obtain accurate calibration and resulting in unsatisfactory data quality; (4) two participants did not cooperate with experiment instructions; and (5) two participants were disqualified from participation in the study for reasons unrelated to eye tracking (e.g., report of psychiatric disorder part-way through participation in the study).

Testing Apparatus

Eye saccades were recorded using the ISCAN Eye Tracking Laboratory (ETL-500) with the Headband Mounted Eye/Line of Sight Scene Imaging System (ISCAN Incorporated, Burlington, MA). The apparatus consists of a baseball cap supporting a solid-state infrared illuminator, two miniature video cameras, and a dichroic mirror that reflects an infrared image of the participant’s eye to the eye-imaging cameras but is transparent to the participant. These cameras send output directly to the Pupil/Corneal Reflection Tracking System and the Autocalibration system. The Pupil/Corneal Reflection Tracking System tracks the center of the participant’s pupil and a reflection from the surface of the cornea. The Autocalibration System uses the raw eye position data generated by the Pupil/Corneal Reflection System to calculate point of gaze information. Samples were collected at a rate of 240 Hz.

Recording Procedures

Participants were familiarized with the eye tracking equipment and room prior to the experimental session. Eye movements were recorded during viewing of the stimuli in a sound-attenuated room. The participants were seated in a chair approximately 75 centimeters from a 17-inch computer monitor that presented the digitalized, static images. The baseball cap containing the eye tracking system was fitted on the participant’s head and the experimenter monitored the participant’s behavior and eye movements. During calibration, the participant was required to hold his or her head still and look at calibration and fixation points on the monitor in front of them. Once calibration was successfully established, stimuli were presented. For participants requiring additional behavioral support during the eye tracking session, a staff research assistant sat to the side and out of view of the participant, providing verbal reminders, encouragement, and reiteration of the instructions as needed.

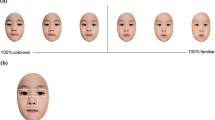

Eye Tracking Stimuli

Visual stimuli consisted of digitalized grayscale images of faces. Each image was 640 pixels in height and 480 pixels in width (6.67 × 5 inches). The highly familiar face consisted of pictures of the mother (ASD n = 14; Typical n = 0), father (ASD n = 2; Typical n = 1), or significant other (e.g., spouse, partner, roommate; ASD n = 1; Typical n = 17). Participants chose their familiar person based on who they lived with or spent the majority of their time with. Non-repeating Unfamiliar faces consisted of photos of middle-aged women. The repeating unfamiliar face, ‘New Friend’, was a randomly chosen repeating, previously unfamiliar (novel) face and was matched to the familiar face in terms of age, gender, and ethnicity. This made it possible to compare a repeating Familiar (emotionally salient) face, a repeating unfamiliar (neutral) face (New Friend), and unfamiliar (neutral) faces (Unfamiliar). This manipulation allows us to address questions of repetition versus (a priori) familiarity.
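For orientation, the visual angle subtended by each image can be estimated from the stated image size and the approximate viewing distance reported under Recording Procedures. The following is a minimal sketch and not part of the original analysis; the function name is hypothetical, and the result is only as precise as the approximate 75 cm distance.

```python
import math

CM_PER_INCH = 2.54
VIEWING_DISTANCE_CM = 75.0  # approximate distance stated in the text


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by an extent of `size_cm`
    centered on the line of sight at `distance_cm`."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


# Image extent from the text: 6.67 inches high by 5 inches wide.
height_deg = visual_angle_deg(6.67 * CM_PER_INCH, VIEWING_DISTANCE_CM)
width_deg = visual_angle_deg(5.0 * CM_PER_INCH, VIEWING_DISTANCE_CM)
print(f"{height_deg:.1f} x {width_deg:.1f} degrees")
```

Under these assumptions each face image spans on the order of 13 by 10 degrees of visual angle, which gives a sense of scale for a fixation criterion expressed in degrees.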

Passive Viewing of Stimuli

Eye tracking data were collected during passive viewing of facial stimuli. Each stimulus was presented for 8 s with a 2 s inter-stimulus interval (ISI). The Familiar face (e.g., mother, roommate) was presented 10 times throughout the experiment. The New Friend, which had not previously been shown to the participant prior to the passive viewing phase, was presented 10 times throughout the experiment. Ten different Unfamiliar faces were each presented 1 time throughout the experiment, for a total of 10 Unfamiliar face viewings. In total, 30 trials (Familiar face 10 times, New Friend 10 times, and 10 different Unfamiliar faces) were presented. Participants were given the following instructions: “Please look at the pictures in any way you want”. No decision or judgment regarding the stimulus was required from the participant during passive viewing. During the ISI, participants were instructed to look at a crosshair in the center of the screen; this allowed the examiner to verify that calibration remained accurate throughout the experiment and placed the participant’s initial fixation between the eyes.
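The trial structure described above can be illustrated with a short sketch. The presentation order is not specified in the text, so the shuffle below is an assumption, as are the function name and trial labels; the counts and timings are taken from the description.

```python
import random

STIM_S = 8  # stimulus duration in seconds (from the text)
ISI_S = 2   # inter-stimulus interval in seconds (from the text)


def build_trials(seed: int = 0) -> list:
    """Return 30 trial labels: 10 Familiar, 10 New Friend, and
    10 distinct Unfamiliar faces. Shuffling is an assumption."""
    trials = (
        ["Familiar"] * 10
        + ["NewFriend"] * 10
        + [f"Unfamiliar_{i + 1}" for i in range(10)]
    )
    random.Random(seed).shuffle(trials)
    return trials


trials = build_trials()
# Total run time, assuming one ISI accompanies every trial.
total_s = len(trials) * (STIM_S + ISI_S)
print(len(trials), "trials,", total_s, "seconds")
```

With one ISI per trial, the passive viewing phase would last about 300 s (5 min).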

Facial Recognition Task and Reaction Time

After completion of the passive viewing of the stimuli, all participants were immediately presented with seven faces randomly chosen from the passive viewing phase (Familiar, New Friend, 5 Unfamiliar faces), as well as seven novel faces. Due to an error in saving data, one typical participant was excluded from the facial recognition analyses, leaving 17 individuals in each group. Stimuli were presented in a random order during the facial recognition task. Participants were instructed to press a green button if they had seen the face before and a red button if they had not. Participant accuracy and reaction times were recorded via button press in custom LabVIEW stimulus display software using a National Instruments data acquisition card (PCI-6503), on a dedicated computer. No previous training was provided to the participants prior to the facial recognition task.

Eye Tracking Data

Fixations were defined as a gaze of at least 100 ms duration within 1 degree of visual angle. The ISCAN Point of Regard Data Acquisition software was used to define the regions of interest within the visual face stimuli. The following uniform regions of interest were defined on each stimulus: Head (including hair and ears), face (including the ‘internal’ features of the face, or the eyes, nose, and mouth), right eye, left eye, and mouth. The right eye and left eye regions were combined during data analysis to comprise a total ‘eye’ region. Because regions are mutually exclusive, fixations from all regions were combined to reflect total time on the face stimulus, designated ‘total face’ region. Raw data (number of recorded fixations and fixation durations for each region) were extracted with the ISCAN Fixation Analysis software and adapted to SQL and SPSS databases in order to further explore the data via statistical analyses.
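To make the fixation criterion concrete, the sketch below applies a generic dispersion-threshold rule to gaze samples. It is not the ISCAN Fixation Analysis algorithm, whose internals are not described here. It assumes gaze positions are already expressed in degrees of visual angle, that samples arrive at the stated recording rate, and that "within 1 degree" means the horizontal and vertical ranges of a window each stay at or below 1 degree; the function name is hypothetical.

```python
def detect_fixations(samples, rate_hz=240.0, min_dur_ms=100.0, max_disp_deg=1.0):
    """Group gaze samples into fixations.

    samples: list of (x_deg, y_deg) positions at a fixed sampling rate.
    Returns a list of (start_index, end_index_exclusive, duration_ms).
    """
    # Number of consecutive samples needed to reach the minimum duration.
    min_len = int(round(min_dur_ms * rate_hz / 1000.0))

    def dispersion(window):
        xs = [p[0] for p in window]
        ys = [p[1] for p in window]
        return max(max(xs) - min(xs), max(ys) - min(ys))

    fixations = []
    i, n = 0, len(samples)
    while i + min_len <= n:
        j = i + min_len
        if dispersion(samples[i:j]) <= max_disp_deg:
            # Extend the window while the samples stay within the threshold.
            while j < n and dispersion(samples[i:j + 1]) <= max_disp_deg:
                j += 1
            fixations.append((i, j, (j - i) * 1000.0 / rate_hz))
            i = j
        else:
            i += 1
    return fixations


# Synthetic example: two stable periods separated by a brief transition.
demo = [(0.0, 0.0)] * 30 + [(5.0, 5.0)] * 10 + [(10.0, 10.0)] * 48
print(detect_fixations(demo))
```

In the synthetic example, the 10-sample transition is too short to count as a fixation, so only the two stable periods are returned. Each detected fixation could then be assigned to a region of interest by its mean position.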

Statistical Analyses

The first set of analyses examines the degree to which individuals with ASD display different eye gaze behavior (measured by number of fixations and duration of fixations) across the different types of face stimuli (Familiar, Unfamiliar, and New Friend) when compared to typically developing individuals. Repeated measures ANOVAs with face category as a within-subject factor (Familiar, Unfamiliar, New Friend) and diagnosis as a between-group factor (ASD, typical) were conducted for the number of fixations and total fixation durations on the total face. Differences between face categories were examined with two contrasts, Familiar versus Unfamiliar, and Familiar versus New Friend.

The second set of analyses extends the question to investigate how the two groups look at specific regions of interest (eyes and mouth areas) across the different face categories (Familiar, Unfamiliar, and New Friend). For region of interest analyses, the dependent variables were percent of total number of fixations and percent of total fixation duration. Percentages were used to account for absolute differences in total fixation number and duration. A repeated measures ANOVA with face category (Familiar, Unfamiliar, New Friend) and region (eyes, mouth) as within-subject factors, and diagnosis (ASD, typical) as a between-group factor was conducted. Differences were again examined with two contrasts: Familiar versus Unfamiliar, and Familiar versus New Friend.
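The percentage measures used as dependent variables can be illustrated as follows. This is a minimal sketch under stated assumptions: each fixation carries exactly one region label, and the denominators are the totals across all regions on the stimulus (the 'total face'). The function name, region labels, and example values are hypothetical.

```python
from collections import defaultdict


def roi_percentages(fixations):
    """fixations: list of (region_label, duration_ms) for one stimulus.

    Returns {region: {"pct_fixations": ..., "pct_duration": ...}},
    with percentages taken relative to all fixations on the stimulus.
    """
    counts = defaultdict(int)
    durations = defaultdict(float)
    for region, dur_ms in fixations:
        counts[region] += 1
        durations[region] += dur_ms

    total_n = sum(counts.values())
    total_dur = sum(durations.values())
    if total_n == 0 or total_dur == 0:
        return {}

    return {
        region: {
            "pct_fixations": 100.0 * counts[region] / total_n,
            "pct_duration": 100.0 * durations[region] / total_dur,
        }
        for region in counts
    }


# Hypothetical fixations on a single stimulus.
example = [("eyes", 300.0), ("eyes", 200.0), ("mouth", 100.0), ("face", 400.0)]
print(roi_percentages(example))
```

Expressing each region as a share of the total keeps participants who make many short fixations comparable with those who make fewer, longer ones.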

The third set of analyses examined facial recognition accuracy rates and reaction times, described in more detail below. The significance value p < .05 was used for all analyses, unless otherwise noted.

Results

Eye Gaze Behavior

Number of Fixations on Total Face

Table 1 presents the total number of fixations and total fixation durations made on each stimulus type by each group. Results indicate that there was a significant group by category interaction for total number of fixations. Individuals in the typical group made a greater number of fixations when looking at the Unfamiliar faces compared to the Familiar faces, whereas the individuals with ASD showed a similar number of fixations for the Familiar and Unfamiliar faces. The typical and ASD groups did not exhibit significantly different gaze fixations when they viewed the Familiar versus New Friend faces.

Fixation Durations on Total Face

For both groups, it was found that the total duration of fixation to faces did not significantly differ when comparing the Familiar versus Unfamiliar faces and the Familiar versus New Friend faces.

Regions of Interest

Percent of Fixations in Regions of Interest

Table 2 presents number of fixations, percent number of fixations, fixation durations, and percent fixation durations for eye and mouth regions for each stimulus category by group. Typical individuals consistently made a significantly greater percentage of fixations on the eye and mouth regions across stimulus categories (see Fig. 1) when compared to the ASD group. Both groups made a significantly greater percentage of fixations in the eye region than the mouth region, across stimulus face categories, and a significantly greater percentage of fixations were made on the eyes and mouth of the Unfamiliar stimulus as compared to the Familiar stimulus. There were no differences between percentage of fixations made on the eyes and mouth when comparing the Familiar and New Friend faces.

Percent Fixation Duration in Region of Interest

Typical individuals consistently made a significantly greater percentage of fixation durations on the eye and mouth regions across stimulus categories (see Fig. 2). Both groups spent a significantly greater amount of time in the eye region compared to the mouth region across face stimulus categories. Both groups also spent significantly more time looking at the eyes and mouth of the Unfamiliar compared to the Familiar faces, whereas they spent a similar amount of time looking at the eyes and mouth of the Familiar and New Friend faces. Finally, a significant group by region interaction revealed that the typical group spent significantly more time than the ASD group looking at the eyes, whereas both groups spent a similar amount of time on the mouth region of the face across stimulus categories.

Facial Recognition

Facial Recognition Accuracy and Reaction Time

Both groups demonstrated fairly high accuracy rates when discriminating between faces they had previously seen in the experiment (termed ‘remembered’ in this task; ASD M = 84.87%, SD = 22.29%; Typical M = 87.40%, SD = 12.25%) and those that were new (ASD M = 91.60%, SD = 16.02%; Typical M = 84.15%, SD = 20.77%). Though not significant, it is notable that the group with ASD was more accurate in classifying a face as new versus correctly identifying a face they had seen previously, whereas individuals in the typical group showed almost no difference in terms of correctly classifying remembered versus new faces. Although typically developing individuals showed a tendency toward faster reaction times, the groups did not significantly differ in terms of reaction times (measured in seconds) or accuracy. Both groups were significantly faster (ASD: t(16) = −3.22, p = .005; Typical: t(16) = −2.50, p = .024) to respond to a remembered stimulus (ASD M = 2.19, SD = 1.60; Typical M = 1.45, SD = .72) than a new stimulus (ASD M = 3.01, SD = 1.86; Typical M = 2.50, SD = .84).
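The within-group comparison of reaction times to remembered versus new stimuli, with 16 degrees of freedom for 17 participants, has the form of a paired-samples t-test. The sketch below shows how such a statistic is computed; the function name and the data are hypothetical and do not reproduce the study's values.

```python
import math
import statistics


def paired_t(first, second):
    """Paired-samples t statistic for two equal-length lists of
    measurements from the same participants.

    Returns (t, degrees_of_freedom). A negative t means the first
    condition has the smaller mean.
    """
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1


# Hypothetical reaction times in seconds for four participants.
remembered = [1.0, 2.0, 3.0, 4.0]
new = [2.0, 2.5, 4.5, 5.0]
t_value, df = paired_t(remembered, new)
print(f"t({df}) = {t_value:.2f}")
```

Entering remembered stimuli as the first condition yields a negative t when responses to remembered faces are faster, matching the sign convention of the reported statistics.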

Discussion

It has been demonstrated through behavioral observation and clinical accounts that individuals with ASD exhibit reduced attention to faces in general, and eyes in particular (Trepagnier et al. 2002; Pelphrey et al. 2002; Dalton et al. 2005; Klin et al. 2002). Results from several neuroimaging studies, furthermore, have provided evidence for atypical patterns of neural activation during viewing of unfamiliar faces by individuals with ASD (Critchley et al. 2000; Hall et al. 2003; Hubl et al. 2003; Pierce et al. 2001; Piggot et al. 2004; Schultz et al. 2000; Wang et al. 2004), but normal patterns of neural activation for highly familiar faces (Aylward et al. 2004; Pierce et al. 2004). The current study utilized eye tracking technology to examine whether individuals with ASD show different gaze fixation patterns when viewing familiar and unfamiliar faces.

Consistent with previous eye tracking results, individuals in the typically developing group spent a greater percentage of time than those in the ASD group looking at the eyes, regardless of whether the face was familiar or unfamiliar. These results replicate clinical and research reports of reduced attention to eyes among individuals with ASD. Because this pattern of atypical attention to the eyes was consistent regardless of whether the faces were familiar or unfamiliar, this pattern appears to be general and not affected by familiarity of the face.

A novel result of the present study was the finding that, in contrast to typical individuals, individuals with ASD did not exhibit differential gaze patterns when viewing Familiar versus Unfamiliar faces. Specifically, it was found that typical individuals made significantly more frequent fixations when looking at the Unfamiliar faces versus the Familiar face, whereas the frequency of gaze fixations was not influenced by familiarity for the individuals with ASD. Interestingly, both groups made a similar number of fixations and spent about the same amount of time looking at the highly Familiar and New Friend faces. This suggests that within the context of this experiment, repeated exposure to a face (the New Friend) led to patterns of attention that were similar to those for the highly Familiar face. The social relevance of the face, therefore, did not appear to have an impact on patterns of attention for either group.

Examination of facial regions revealed that both groups made a significantly greater percentage of fixations and spent a larger percentage of time on the eyes than the mouth for all face categories. This was true for the ASD group despite overall reduced attention to the eye region compared to the typical group, and is in contrast to previous studies that found that individuals with ASD spend more time looking at the mouth than the eyes (Klin et al. 2002; Spezio et al. 2007a). This difference in findings across studies likely reflects the differences in face stimuli used. In Klin et al. (2002), participants viewed dynamic images of movie characters interacting and talking with each other; participants in Spezio et al. (2007a) viewed images of emotional faces. In both of these experiments, the task may have emphasized how faces are “used” to provide social and emotional information. This may have resulted in a reliance on the mouth region to gain verbal and expressive information. In the current experiment, the participants were presented with static pictures of faces exhibiting neutral expressions similar to those most used in fMRI and ERP (event-related potential) experiments. The participants were not required to interpret the stimuli or make judgments regarding the faces. Given the opportunity to view the face in any way they wished (per the instructions provided to them), participants in both groups spent more time looking at the eyes than the mouth.

Individuals in the typical and ASD groups made a greater percentage of fixations and spent a larger percentage of time on the eye and mouth regions of the Unfamiliar faces than the Familiar face, with similar fixation patterns for the Familiar and New Friend stimuli. One possible explanation for this pattern of results is that the less familiarity a person has with a face, the more time they spend examining its integral features, particularly the eyes and mouth. With increased familiarity and exposure, one may gain efficiency or need less time to investigate the central features of the face. Given that individuals with ASD did not spend more time on the central features of the Familiar versus Unfamiliar face, the current results call into question the hypothesis that there is a link between fusiform gyrus activation and fixation patterns.

A facial recognition task was included to examine whether facial recognition accuracy differed depending on familiarity with a face. Of note, this task was relatively easy in comparison to standardized tests such as the Wechsler Memory Scale face memory test (WMS-III; Wechsler 1997b), which utilizes memory for 24 new faces. In general, the ASD group in the current study took longer than the typical group to decide whether they had previously seen the face presented to them, although this difference was not significant. Accuracy also did not differ between groups.

There are limitations to the current study. While eye tracking technology is informative in terms of elucidating strategies used when viewing faces, static black-and-white images are not equivalent to real-life situations in which faces are interactive. In interpersonal situations, individuals are expected to interpret and integrate nonverbal information such as facial expressions and speech, and respond in a timely, reciprocal, and appropriate manner. This is particularly challenging given the complexity of subtle but meaningful alterations in eye gaze, emotional expression, and tone of voice that may fluctuate concurrently. Images in the current study, presented on a computer screen, lack this dynamic quality of human faces. One study has in fact demonstrated that individuals with ASD utilize different gaze patterns than their typical peers when viewing dynamic but not static social stimuli (Speer et al. 2007). Despite this limitation, individuals with ASD still spent significantly less time focusing on the eye region compared to the typical group in the current study.

Eye tracking provides objective, non-invasive, quantitative assessment of attention to faces in general and eyes in particular, an impairment in ASD previously documented through behavioral observation. In the current study, evaluation of eye gaze patterns informs understanding of the social nuances in ASD, specifically in terms of attention to familiar and unfamiliar faces. Results from the current study suggest that repeated exposure to a face, regardless of its social relevance, leads to patterns of eye gaze that resemble those used when looking at a familiar face. This suggests repeated contact with an unfamiliar face, in and of itself, facilitates development of familiarity, an important step in forming social relationships. More ecologically valid paradigms (e.g., naturalistic social encounters) are warranted to further explore the clinical implications of such findings, including how increased exposure to faces impacts other aspects of social interactions, such as nonverbal communication, conversation, and emotional reciprocity. It is possible that exposing individuals with ASD to less complex social stimuli (e.g., still images) prior to more complex interactions helps to support social processing. The utility of eye tracking is also relevant in light of new intervention techniques designed to improve face processing abilities and strategies in individuals with ASD through the use of computerized face training (e.g., Faja et al. 2008). Face training in autism has the potential to improve social interactions and relatedness; eye tracking allows for evaluation of strategies used and changes in gaze patterns as a function of intervention. Eye tracking may also capture individual differences in gaze patterns so that more targeted training can be provided.

In conclusion, individuals with ASD showed atypical attention to face stimuli regardless of whether the face was unfamiliar, highly familiar, or newly familiar. Their pattern of attention was characterized by less time focused on the eyes compared to typical individuals. Additionally, in contrast to the typical individuals, participants with ASD did not exhibit differential gaze patterns when viewing the highly familiar versus unfamiliar faces. These results suggest that differences in patterns of attention may not underlie the differential neural activation patterns observed when individuals with ASD view highly familiar versus unfamiliar faces.

References

Adrien, J. L., Lenoir, P., Martineau, J., Perrot, A., Hameury, L., Larmande, C. et al. (1993). Blind ratings of early symptoms of autism based upon family home movies. Journal of the American Academy of Child and Adolescent Psychiatry, 32, 617–626.

Althoff, R. R., & Cohen, N. J. (1999). Eye-movement-based memory effect: A reprocessing effect in face perception. Journal of Experimental Psychology: Learning, Memory and Cognition, 25(4), 997–1010.

American Psychiatric Association. (1994). Diagnostic and statistical manual of mental disorders (4th ed.). Washington, DC: Author.

Aylward, E., Bernier, R., Field, K., Grimme, A., & Dawson, G. (2004). Normal activation of fusiform gyrus in adolescents and adults with autism during viewing of familiar, but not unfamiliar, faces. Paper presented at the STAART/CPEA (Studies to Advance Autism Research and Treatment/Collaborative Programs for Excellence in Autism) NIH network meeting, May 17–20. Bethesda, MD.

Boraston, Z., & Blakemore, S. J. (2007). The application of eye-tracking technology in the study of autism. The Journal of Physiology, 581, 893–898.

Critchley, H. D., Daly, E. M., Bullmore, E. T., Williams, S. C., Van Amelsvoort, T., Robertson, D. M. et al. (2000). The functional neuroanatomy of social behaviour: Changes in cerebral blood flow when people with autistic disorder process facial expressions. Brain, 123, 2203–2212.

Dalton, K. M., Nacewicz, B. M., Johnstone, T., Schaefer, H. S., Gernsbacher, M. A., Goldsmith, H. H., Alexander, A. L., & Davidson, R. J. (2005). Gaze fixation and the neural circuitry of face processing in autism. Nature Neuroscience, 8(4), 519–526.

Damasio, A. R., Tranel, D., & Damasio, H. (1990). Face agnosia and the neural substrates of memory. Annual Review of Neuroscience, 13, 89–109.

Davies, S., Bishop, D., Manstead, A. S., & Tantam, D. (1994). Face perception in children with autism and Asperger’s syndrome. Journal of Child Psychology and Psychiatry, 35, 1033–1057.

Dawson, G., Meltzoff, A. N., Osterling, J., Rinaldi, J., & Brown, E. (1998). Children with autism fail to orient to naturally occurring social stimuli. Journal of Autism and Developmental Disorders, 28, 479–485.

Dawson, G., Webb, S. J., & McPartland, J. (2005). Understanding the nature of face processing impairment in autism: Insights from behavioral and electrophysiological studies. Developmental Neuropsychology, 27(3), 403–424.

Dawson, G., Webb, S. J., Wijsman, E., Schellenberg, G., Estes, A., Munson, J. et al. (2005). Neurocognitive and electrophysiological evidence of altered face processing in parents of children with autism: Implications for a model of abnormal development of social brain circuitry in autism. Development and Psychopathology, 17, 679–697.

Downing, P. E., Chan, A. W., Peelen, M. V., Dodds, C. M., & Kanwisher, N. (2006). Domain specificity in the visual cortex. Cerebral Cortex, 16(10), 1453–1461.

Faja, S., Aylward, E., Bernier, R., & Dawson, G. (2008). Becoming a face expert: A computerized face-training program for high-functioning individuals with autism spectrum disorders. Developmental Neuropsychology, 33, 1–24.

Gauthier, I., Skudlarski, P., Gore, J. C., & Anderson, A. W. (2000). Expertise for cars and birds recruits brain areas involved in face recognition. Nature Neuroscience, 3(2), 191–197.

Gauthier, I., & Tarr, M. J. (2002). Unraveling mechanisms for expert object recognition: Bridging brain activity and behavior. Journal of Experimental Psychology: Human Perception and Performance, 28(2), 431–446.

Gauthier, I., Tarr, M. J., Anderson, A. W., Skudlarski, P., & Gore, J. C. (1999). Activation of the middle fusiform ‘face area’ increases with expertise in recognizing novel objects. Nature Neuroscience, 2(6), 568–573.

Grelotti, D. J., Gauthier, I., & Schultz, R. T. (2002). Social interest and the development of cortical face specialization: What autism teaches us about face processing. Developmental Psychobiology, 40, 213–225.

Haith, M. N., Bergman, T., & Moore, M. J. (1977). Eye contact and face scanning in early infancy. Science, 198(4319), 853–855.

Hall, G. B., Szechtman, H., & Nahmias, C. (2003). Enhanced salience and emotion recognition in autism: A PET study. The American Journal of Psychiatry, 160, 1439–1441.

Haxby, J. V., Horwitz, B., Ungerleider, L. G., Maisog, J. M., Pietrini, P., & Grady, C. L. (1994). The functional organization of human extrastriate cortex: A PET-rCBF study of selective attention to faces and locations. The Journal of Neuroscience, 14, 6336–6353.

Hubl, D., Bolte, S., Feineis-Matthews, S., Lanfermann, H., Federspiel, A., Strik, W. et al. (2003). Functional imbalance of visual pathways indicates alternative face processing strategies in autism. Neurology, 61, 1232–1237.

Janik, S. W., Wellens, A. R., Goldberg, M. L., & Dell’Osso, L. F. (1978). Eyes as the center of focus in the visual examination of human faces. Perceptual and Motor Skills, 47, 857–858.

Kanner, L. (1943). Autistic disturbances of affective contact. Nervous Child, 2, 217–250.

Kanwisher, N. (2000). Domain specificity in face perception. Nature Neuroscience, 3(8), 759–763.

Kanwisher, N., McDermott, J., & Chun, M. M. (1997). The fusiform face area: A module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience, 17(11), 4302–4311.

Kanwisher, N., & Yovel, G. (2006). The fusiform face area: A cortical region specialized for the perception of faces. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, 361, 2109–2128.

Karatekin, C. (2007). Eye tracking studies of normative and atypical development. Developmental Review, doi:10.1016/j.dr.2007.06.006.

Klin, A., Jones, W., Schultz, R., Volkmar, F., & Cohen, D. (2002). Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry, 59(9), 809–816.

Klin, A., Sparrow, S. S., de Bildt, A., Cicchetti, D. V., Cohen, D. J., & Volkmar, F. R. (1999). A normed study of face recognition in autism and related disorders. Journal of Autism and Developmental Disorders, 29(6), 499–508.

Lord, C., Rutter, M., DiLavore, P. C., & Risi, S. (2000). Autism diagnostic observation schedule manual. Los Angeles, CA: Western Psychological Services.

Lord, C., Rutter, M., & LeCouteur, A. (1994). Autism diagnostic interview-revised: A revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. Journal of Autism and Developmental Disorders, 24, 659–685.

Marcus, D. J., & Nelson, C. A. (2001). Neural bases and development of face recognition in autism. CNS Spectrums, 6(1), 36–59.

Merin, N., Young, G. S., Ozonoff, S., & Rogers, S. J. (2007). Visual fixation patterns during reciprocal social interaction distinguish a subgroup of 6-month-old infants at-risk for autism from comparison infants. Journal of Autism and Developmental Disorders, 37(1), 108–121.

Nelson, C. A. (2001). The development and neural bases of face recognition. Infant and Child Development, 10, 3–18.

Neumann, D., Spezio, M. L., Piven, J., & Adolphs, R. (2006). Looking you in the mouth: Abnormal gaze in autism resulting from impaired top-down modulation of visual attention. Social Cognitive and Affective Neuroscience, 1(3), 194–202.

Osterling, J., & Dawson, G. (1994). Early recognition of children with autism: A study of first birthday home videotapes. Journal of Autism and Developmental Disorders, 24, 247–257.

Pelphrey, K. A., Sasson, N. J., Reznick, J. S., Paul, G., Goldman, B., & Piven, J. (2002). Visual scanning of faces in autism. Journal of Autism and Developmental Disorders, 32(4), 249–261.

Pierce, K., Haist, F., Sedaghat, F., & Courchesne, E. (2004). The brain response to personally familiar faces in autism: Findings of fusiform activity and beyond. Brain, 127(12), 2703–2716.

Pierce, K., Muller, R. A., Ambrose, G., Allen, G., & Courchesne, E. (2001). Face processing occurs outside the fusiform ‘face area’ in autism: Evidence from functional MRI. Brain, 124, 2059–2073.

Piggot, J., Kwon, H., Mobbs, D., Blasey, C., Lotspeich, L., Menon, V. et al. (2004). Emotional attribution in high-functioning individuals with autistic spectrum disorder: A functional imaging study. Journal of the American Academy of Child and Adolescent Psychiatry, 43, 473–480.

Puce, A., Allison, T., Asgari, M., Gore, J. C., & McCarthy, G. (1996). Differential sensitivity of human visual cortex to faces, letterstrings and textures: A functional magnetic resonance imaging study. The Journal of Neuroscience, 16(16), 5205–5215.

Puce, A., Allison, T., Gore, J. C., & McCarthy, G. (1995). Face-sensitive regions in human extrastriate cortex studied by functional MRI. Journal of Neurophysiology, 74, 1192–1199.

Rhodes, G., Byatt, G., Michie, P. T., & Puce, A. (2004). Is the fusiform face area specialized for faces, individuation, or expert individuation? Journal of Cognitive Neuroscience, 16(2), 189–203.

Rossion, B., Schiltz, C., & Crommelinck, M. (2003). The functionally defined right occipital and fusiform “face areas” discriminate novel from visually familiar faces. Neuroimage, 19, 877–883.

Schultz, R. T., Gauthier, I., Klin, A., Fulbright, R. K., Anderson, A. W., Volkmar, F. et al. (2000). Abnormal ventral temporal cortical activity during face discrimination among individuals with autism and Asperger syndrome. Archives of General Psychiatry, 57, 331–340.

Speer, L. L., Cook, A. E., McMahon, W. M., & Clark, E. (2007). Face processing in children with autism: Effects of stimulus contents and type. Autism, 11, 265–277.

Spezio, M. L., Adolphs, R., Hurley, R. S. E., & Piven, J. (2007a). Abnormal use of facial information in high-functioning autism. Journal of Autism and Developmental Disorders, 37, 929–939.

Spezio, M. L., Adolphs, R., Hurley, R. S. E., & Piven, J. (2007b). Analysis of face gaze in autism using “Bubbles”. Neuropsychologia, 45, 144–151.

Tantam, D., Holmes, D., & Cordess, C. (1993). Nonverbal expression in autism of Asperger type. Journal of Autism and Developmental Disorders, 23, 111–133.

Trepagnier, C., Sebrechts, M. M., & Peterson, R. (2002). Atypical face gaze in autism. Cyberpsychology and Behavior, 5(3), 213–217.

van der Geest, J. N., Kemner, C., Verbaten, M. N., & van Engeland, H. (2002). Gaze behavior of children with pervasive developmental disorder toward human faces: A fixation time study. Journal of Child Psychology and Psychiatry, 43(5), 669–678.

Vuilleumier, P., Armony, J. L., Driver, J., & Dolan, R. J. (2001). Effects of attention and emotion on face processing in the human brain: An event-related fMRI study. Neuron, 30(3), 829–841.

Walker-Smith, G. J., Gale, A. G., & Findlay, J. M. (1977). Eye movement strategies involved in face perception. Perception, 6(3), 313–326.

Wang, A. T., Dapretto, M., Hariri, A. R., Sigman, M., & Bookheimer, S. Y. (2004). Neural correlates of facial affect processing in children and adolescents with autism spectrum disorder. Journal of the American Academy of Child and Adolescent Psychiatry, 43, 481–490.

Wechsler, D. (1997a). Wechsler adult intelligence scale (3rd ed.). San Antonio, TX: The Psychological Corporation.

Wechsler, D. (1997b). Wechsler memory scale (3rd ed.). San Antonio, TX: The Psychological Corporation.

Whiteley, A. M., & Warrington, E. K. (1977). Prosopagnosia: A clinical, psychological, and anatomical study of three patients. Journal of Neurology, Neurosurgery, and Psychiatry, 40, 395–403.

Wojciulik, E., Kanwisher, N., & Driver, J. (1998). Covert visual attention modulates face-specific activity in the human fusiform gyrus: fMRI study. Journal of Neurophysiology, 79(3), 1574–1578.

Xu, Y. (2005). Revisiting the role of the fusiform face area in visual expertise. Cerebral Cortex, 15(8), 1234–1242.

Acknowledgments

We wish to thank the individuals and families who participated in this study. We also wish to thank James McPartland, Ph.D., who contributed his time and technical expertise during the initial stages of this project. This research was funded by a center grant from the National Institute of Mental Health (U54MH066399), which is part of the NIMH STAART Centers.

Cite this article

Sterling, L., Dawson, G., Webb, S. et al. The Role of Face Familiarity in Eye Tracking of Faces by Individuals with Autism Spectrum Disorders. J Autism Dev Disord 38, 1666–1675 (2008). https://doi.org/10.1007/s10803-008-0550-1

DOI: https://doi.org/10.1007/s10803-008-0550-1