Abstract

Let Γ denote a bipartite distance-regular graph with vertex set X and diameter D≥3. Fix x∈X and let L (resp., R) denote the corresponding lowering (resp., raising) matrix. We show that each Q-polynomial structure for Γ yields a certain linear dependency among RL 2, LRL, L 2 R, L. Define a partial order ≤ on X as follows. For y,z∈X let y≤z whenever ∂(x,y)+∂(y,z)=∂(x,z), where ∂ denotes path-length distance. We determine whether the above linear dependency gives this poset a uniform or strongly uniform structure. We show that except for one special case a uniform structure is attained, and except for three special cases a strongly uniform structure is attained.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In his thesis [12], Delsarte introduced the Q-polynomial property for a distance-regular graph Γ (see Sect. 2 for formal definitions). Since then the Q-polynomial property has been investigated by many authors, such as Bannai and Ito [1], Brouwer, Cohen and Neumaier [3], Caughman [4–9], Curtin [10, 11], Jurišić, Terwilliger, and Žitnik [14], Lang [15, 16], Lang and Terwilliger [17], Miklavič [18–21], Pascasio [22, 23], Tanaka [24, 25], Terwilliger [26, 27, 30, 32], and Weng [33, 34].

To simplify this investigation, it is sometimes assumed that Γ is bipartite [4–9, 15, 16, 19, 20] and this is the point of view taken in the present paper. For the rest of this Introduction, assume Γ is bipartite and Q-polynomial. To avoid trivialities, assume Γ has diameter D≥3 and valency k≥3.

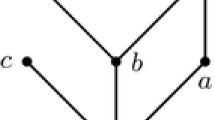

In [28], Terwilliger introduced the subconstituent algebra of Γ. For each vertex x of Γ, the corresponding subconstituent algebra T=T(x) is generated by the adjacency matrix A and a certain diagonal matrix A ∗=A ∗(x). The eigenspaces of A ∗ are the subconstituents of Γ with respect to x. The matrices A and A ∗ satisfy two relations called the tridiagonal relations [29, Lemma 5.4], [31]. The first (resp., second) tridiagonal relation is of degree 3 in A (resp., A ∗) and of degree 1 in A ∗ (resp., A). In [29], the tridiagonal relations are used to describe the combinatorics of Γ. In this description, it is natural to view Γ as the Hasse diagram for a ranked poset. The partial order ≤ is defined as follows. For vertices y,z of Γ, let y≤z whenever ∂(x,y)+∂(y,z)=∂(x,z), where ∂ denotes path-length distance. The poset structure induces a decomposition A=L+R, where L=L(x) (resp., R=R(x)) is the lowering matrix (resp., raising matrix) of Γ with respect to x. For vertices y,z of Γ, the (y,z)-entry of L is 1 if z covers y, and 0 otherwise. The matrix R is the transpose of L. In the first tridiagonal relation, if one eliminates A using A=L+R, one finds that on each x-subconstituent of Γ the elements

are linearly dependent. The coefficients in this linear dependence depend on the subconstituent. We call this collection of dependencies an R/L dependency structure.

Motivated by these R/L dependency structures, in [27] Terwilliger introduced the uniform property for a partially ordered set. In that work, he described the algebraic structure of the uniform posets and displayed eleven infinite families of examples.

In spite of the known connection between the Q-polynomial property and uniform posets, a careful study of this connection was not completed until now. The goal of the present paper is to provide this study. As part of this study we introduce a variation on the uniform property called strongly uniform. Strongly uniform implies uniform. For each Q-polynomial structure on Γ we determine precisely when the corresponding R/L dependency structure is uniform or strongly uniform. To describe our results, let \(\{\theta_{i}\}_{i=0}^{D}\) denote the ordering of the eigenvalues of Γ for the given Q-polynomial structure. Consider the following cases:

-

(i)

Γ is the hypercube H(D,2) with D even and θ i =(−1)i(D−2i) for 0≤i≤D;

-

(ii)

Γ is the antipodal quotient \(\overline{H}(2D,2)\) and θ i =2D−4i for 0≤i≤D;

-

(iii)

D=3 and Γ is of McFarland type with parameters (1,t) for some integer t≥2, and θ 0,θ 1,θ 2,θ 3 are t(t+1),t,−t,−t(t+1) respectively.

(See Sect. 4 for the meaning of McFarland type.) In Case (i), the corresponding R/L dependency structure is not uniform. In Cases (ii) and (iii), this structure is uniform but not strongly uniform. In all other cases, this structure is strongly uniform.

The paper is organized as follows. In Sects. 2 and 3, we discuss the Bose–Mesner algebra and the dual Bose–Mesner algebra of a distance-regular graph. In Sects. 4 and 5, we consider the bipartite case and discuss the associated poset structure. In Sect. 6, we consider R/L dependency structures. In Sect. 7, we review the uniform property and define the strongly uniform property. In Sects. 8–11, we consider a given Q-polynomial structure for our graph. We determine precisely when the corresponding R/L dependency structure is uniform or strongly uniform. Our main result is Theorem 11.9.

2 Preliminaries

Let X denote a nonempty finite set. Let \({\rm Mat}_{X}({\mathbb{R}})\) denote the ℝ-algebra consisting of the matrices with entries in ℝ, and rows and columns indexed by X. Let V=ℝX denote the vector space over ℝ consisting of the column vectors with entries in ℝ and rows indexed by X. Observe that \({\rm Mat}_{X}({\mathbb{R}})\) acts on V by left multiplication. We refer to V as the standard module of \({\rm Mat}_{X}({\mathbb{R}})\). We endow V with the bilinear form \(\langle\: , \rangle: V \times V \to {\mathbb{R}}\) that satisfies 〈u,v〉=u t v for u,v∈V, where t denotes transpose. For y∈X let \(\hat {y}\) denote the vector in V that has y-coordinate 1 and all other coordinates 0. Observe that \(\{\hat{y}\,|\,y \in X\}\) is an orthonormal basis for V.

Throughout the paper, let \(\varGamma =(X,\mathcal {R})\) denote a finite, undirected, connected graph, without loops or multiple edges, with vertex set X, edge set \(\mathcal {R}\), path-length distance function ∂, and diameter D:=max{∂(x,y) | x,y∈X}. For x∈X and an integer i, let Γ i (x)={y∈X | ∂(x,y)=i}. We abbreviate Γ(x)=Γ 1(x). For an integer k≥0, we say Γ is regular with valency k whenever |Γ(x)|=k for all x∈X. We say Γ is distance-regular whenever for all integers 0≤h,i,j≤D and all x,y∈X with ∂(x,y)=h the number \(p_{ij}^{h} := | \varGamma _{i}(x) \cap \varGamma _{j}(y) |\) is independent of x,y. The constants \(p_{ij}^{h}\) are known as the intersection numbers of Γ. For convenience, set \(c_{i}:=p_{1, i-1}^{i} \, (1 \le i \le D)\), \(a_{i}:=p_{1i}^{i} \, (0 \le i \le D)\), \(b_{i}:=p_{1, i+1}^{i} \, (0 \le i \le D-1)\), \(k_{i}:=p_{ii}^{0} \, (0 \le i \le D)\), and c 0:=0, b D :=0. For the rest of this paper, assume Γ is distance-regular with diameter D≥3. By the triangle inequality, for 0≤h,i,j≤D we have \(p_{ij}^{h} = 0\) (resp., \(p_{ij}^{h} \ne0\)) whenever one of h,i,j is greater than (resp., equal to) the sum of the other two. In particular, c i ≠0 for 1≤i≤D and b i ≠0 for 0≤i≤D−1. Observe that Γ is regular with valency k=b 0=k 1 and that c i +a i +b i =k for 0≤i≤D.

We recall the Bose–Mesner algebra of Γ. For 0≤i≤D, let A i denote the matrix in \({\rm Mat}_{X}({\mathbb{R}})\) with (y,z)-entry

We call A i the ith distance matrix of Γ. We abbreviate A:=A 1 and call this the adjacency matrix of Γ. We observe (ai) A 0=I; (aii) \(J = \sum_{i=0}^{D} A_{i}\); (aiii) \(A_{i}^{t} = A_{i}\ (0 \le i \le D)\); (aiv) \(A_{i}A_{j} = \sum_{h=0}^{D} p_{ij}^{h} A_{h} \ (0 \le i,j \le D)\), where I (resp., J) denotes the identity matrix (resp., all 1s matrix) in \({\rm Mat}_{X}({\mathbb{R}})\). Using these facts we find \(\{A_{i}\}_{i=0}^{D}\) is a basis for a commutative subalgebra M of \({\rm Mat}_{X}({\mathbb{R}})\). We call M the Bose–Mesner algebra of Γ. By [1, p. 190], A generates M. By [3, p. 45], M has a basis \(\{E_{i}\}_{i=0}^{D}\) such that (ei) E 0=|X|−1 J; (eii) \(I = \sum_{i=0}^{D} E_{i}\); (eiii) \(E_{i}^{t} =E_{i} \ (0 \le i \le D)\); (eiv) E i E j =δ ij E i (0≤i,j≤D). We call \(\{E_{i}\}_{i=0}^{D}\) the primitive idempotents of Γ. The primitive idempotent E 0 is said to be trivial.

We recall the eigenvalues of Γ. Since \(\{E_{i}\}_{i=0}^{D}\) form a basis for M, there exist scalars \(\{\theta_{i}\}_{i=0}^{D}\) in ℝ such that \(A = \sum_{i=0}^{D} \theta_{i} E_{i}\). Combining this with (eiv), we find

We call θ i the eigenvalue of Γ associated with E i . The \(\{\theta_{i}\}_{i=0}^{D}\) are mutually distinct since A generates M. By (ei) we have θ 0=k. By (eii)–(eiv),

For 0≤i≤D the space E i V is the eigenspace of A associated with θ i . Let m i denote the rank of E i and note that m i is the dimension of E i V. We call m i the multiplicity of θ i .

We recall the Krein parameters of Γ. Let ∘ denote the entrywise product in \({\rm Mat}_{X}({\mathbb{R}})\). Observe that A i ∘A j =δ ij A i for 0≤i,j≤D, so M is closed under ∘. Thus there exist scalars \(q^{h}_{ij} \in {\mathbb{R}}\) (0≤h,i,j≤D) such that

The parameters \(q^{h}_{ij}\) are called the Krein parameters of Γ. By [3, Proposition 4.1.5], these parameters are nonnegative. The given ordering \(\{E_{i}\}_{i=0}^{D}\) of the primitive idempotents is said to be Q-polynomial whenever for 0≤h,i,j≤D the Krein parameter \(q^{h}_{ij}=0\) (resp., \(q^{h}_{ij} \ne0\)) whenever one of h,i,j is greater than (resp., equal to) the sum of the other two. Let E denote a nontrivial primitive idempotent of Γ and let θ denote the corresponding eigenvalue. We say that Γ is Q-polynomial with respect to E (or θ) whenever there exists a Q-polynomial ordering \(\{E_{i}\}_{i=0}^{D}\) of the primitive idempotents of Γ such that E 1=E.

3 The dual Bose–Mesner algebra

We continue to discuss the distance-regular graph Γ from Sect. 2. In this section, we recall the dual Bose–Mesner algebra of Γ. For the rest of the paper, fix x∈X. For 0≤i≤D let \(E_{i}^{*}=E_{i}^{*}(x)\) denote the diagonal matrix in \({\rm Mat}_{X}({\mathbb{R}})\) with (y,y)-entry

We call \(E_{i}^{*}\) the ith dual idempotent of Γ with respect to x [28, p. 378]. For convenience, set \(E^{*}_{i} = 0\) for i<0 or i>D. We observe (esi) \(I = \sum_{i=0}^{D} E^{*}_{i}\); (esii) \(E_{i}^{*t} = E_{i}^{*} \ (0 \le i \le D)\); (esiii) \(E_{i}^{*} E_{j}^{*} = \delta_{ij} E_{i}^{*}\ (0 \le i,j \le D)\). By these facts, \(\{E^{*}_{i}\}_{i=0}^{D}\) forms a basis for a commutative subalgebra M ∗=M ∗(x) of \({\rm Mat}_{X}({\mathbb{R}})\). We call M ∗ the dual Bose–Mesner algebra of Γ with respect to x [28, p. 378]. By (esi)–(esiii),

For 0≤i≤D the subspace \(E^{*}_{i}V\) has basis \(\{\hat{y} \, | \, y \in \varGamma _{i}(x)\}\). Moreover, the dimension of \(E^{*}_{i}V\) is k i .

The algebras M and M ∗ are related as follows. By [28, Lemma 3.2],

Let E denote a nontrivial primitive idempotent of Γ and assume Γ is Q-polynomial with respect to E. Let A ∗=A ∗(x) denote the diagonal matrix in \({\rm Mat}_{X}({\mathbb{R}})\) with (y,y)-entry

We call A ∗ the dual adjacency matrix of Γ that corresponds to E and x. By [28, Lemma 3.11(ii)], A ∗ generates M ∗. We recall the dual eigenvalues for our Q-polynomial structure. Since \(\{E^{*}_{i}\}_{i=0}^{D}\) forms a basis for M ∗ there exist scalars \(\{\theta^{*}_{i}\}_{i=0}^{D}\) in ℝ such that \(A^{*}= \sum_{i=0}^{D} \theta^{*}_{i} E_{i}^{*}\). Combining this with (esiii), we find

We call \(\{\theta^{*}_{i}\}_{i=0}^{D}\) the dual eigenvalue sequence for the given Q-polynomial structure. The \(\{\theta^{*}_{i}\}_{i=0}^{D}\) are mutually distinct since A ∗ generates M ∗. For 0≤i≤D the space \(E^{*}_{i}V\) is the eigenspace of A ∗ associated with \(\theta^{*}_{i}\). By [1, Proposition 3.4.(iv)], we have that \(\theta^{*}_{0} = {\rm rank}(E)\). Let θ denote the eigenvalue of Γ associated with E. By [3, p. 128],

where \(\theta^{*}_{-1}\) and \(\theta^{*}_{D+1}\) are indeterminants.

Lemma 3.1

([29, Lemma 5.4])

Let \(\{E_{i}\}_{i=0}^{D}\) denote a Q-polynomial ordering of the primitive idempotents of Γ and for 0≤i≤D let θ i denote the eigenvalue of Γ for E i . Let \(\{\theta^{*}_{i}\}_{i=0}^{D}\) denote the dual eigenvalue sequence for the given Q-polynomial structure. Then the following (i)–(iii) hold.

-

(i)

There exists β∈ℝ such that

$$ \beta+1 = \frac{\theta_{i-2} - \theta _{i+1}}{\theta_{i-1} - \theta_i} = \frac{\theta^*_{i-2} - \theta^*_{i+1}}{\theta^*_{i-1} - \theta^*_i} $$(8)for 2≤i≤D−1.

-

(ii)

There exist γ,γ ∗∈ℝ such that both

$$ \gamma= \theta_{i-1} - \beta\theta_i + \theta_{i+1}, \qquad\gamma^* = \theta^*_{i-1} - \beta \theta^*_i + \theta^*_{i+1} $$(9)for 1≤i≤D−1.

-

(iii)

There exist ϱ,ϱ ∗∈ℝ such that both

(10)

(10)for 1≤i≤D.

Lemma 3.2

([29, Lemma 5.4])

Let E denote a Q-polynomial primitive idempotent of Γ and let A ∗=A ∗(x) denote the corresponding dual adjacency matrix. Then both

where [r,s]=rs−sr and β,γ,γ ∗,ϱ,ϱ ∗ are from Lemma 3.1.

4 Bipartite distance-regular graphs

We continue to discuss the distance-regular graph Γ from Sect. 2. Recall that Γ is bipartite whenever a i =0 for 0≤i≤D. For Γ bipartite, \(p^{h}_{ij}=0\) if h+i+j is odd (0≤h,i,j≤D). In this case,

The case in which Γ is bipartite with D=3 will play an important role.

By [3, Theorem 1.6.1.], Γ is bipartite with D=3 if and only if Γ is the incidence graph of a square 2-(v,k,λ) design. In this case, c 2=λ and v=1+k(k−1)/λ. See [2] for more information and background on square 2-designs.

Pick integers d≥1 and t≥2. A square 2-(v,k,λ) design is said to have McFarland type with parameters (d,t) whenever

For the moment assume that t is a prime power. By [2, Corollary II.8.17], a square 2-design of McFarland type with parameters (d,t) exists for every integer d≥1. By [2, p. 982], this design can be realized as a McFarland difference set.

Our graph Γ is said to have McFarland type with parameters (d,t) whenever Γ is the incidence graph of a square 2-design of McFarland type with parameters (d,t).

5 The bipartite case; lowering and raising matrices

We continue to discuss the distance-regular graph Γ from Sect. 2. For the rest of this paper, assume that Γ is bipartite.

Define a partial order ≤ on X such that for all y,z∈X,

For y,z∈X define y<z whenever y≤z and y≠z. We say that z covers y whenever y<z and there does not exist w∈X such that y<w<z. Note that z covers y if and only if y,z are adjacent and ∂(x,y)+1=∂(x,z). For 0≤i≤D each vertex in Γ i (x) covers exactly c i vertices from Γ i−1(x), and is covered by exactly b i vertices in Γ i+1(x). Therefore, the partition \(\{ \varGamma _{i}(x)\}_{i=0}^{D}\) of X is a grading of the poset (X,≤) in the sense of [27, Sect. 1].

Definition 5.1

Define matrices L=L(x) and R=R(x) by

Note that R=L t and L+R=A.

We have three observations.

Lemma 5.2

Let L,R be as in Definition 5.1. Then the following (i), (ii) hold for y∈X.

-

(i)

\(L \hat {y} = \sum \hat {z}\), where the sum is over all z∈X that are covered by y;

-

(ii)

\(R \hat {y} = \sum \hat {z}\), where the sum is over all z∈X that cover y.

Motivated by Lemma 5.2, we call L (resp., R) the lowering matrix (resp., raising matrix) of Γ with respect to x.

Lemma 5.3

Let L,R be as in Definition 5.1. Then the following (i), (ii) hold.

-

(i)

\(R E^{*}_{i} V \subseteq E^{*}_{i+1}V\) for 0≤i≤D−1, and \(R E^{*}_{D} V = 0\);

-

(ii)

\(L E^{*}_{i} V \subseteq E^{*}_{i-1}V\) for 1≤i≤D, and \(L E^{*}_{0} V = 0\).

Lemma 5.4

Let L,R be as in Definition 5.1. Then for 1≤i≤D the following (i)–(iv) hold.

-

(i)

\(E^{*}_{i-1} A E^{*}_{i} = L E^{*}_{i}\);

-

(ii)

\(E^{*}_{i-1} A E^{*}_{i} = E^{*}_{i-1} L\);

-

(iii)

\(E^{*}_{i} A E^{*}_{i-1} = R E^{*}_{i-1}\);

-

(iv)

\(E^{*}_{i} A E^{*}_{i-1} = E^{*}_{i} R\).

Moreover

Lemma 5.5

Let L,R be as in Definition 5.1. Then

for 1≤i≤D.

Proof

Straightforward using A=L+R and Lemma 5.3. □

From now on we use the following notational convention.

Notation 5.6

For the rest of this paper, we assume our distance-regular graph Γ is bipartite with valency k≥3. Let \(\{E_{i}\}_{i=0}^{D}\) denote a Q-polynomial ordering of the primitive idempotents of Γ and let \(\{\theta_{i}\}_{i=0}^{D}\) denote the corresponding eigenvalues. Abbreviate E=E 1. Recall our fixed vertex x∈X from Sect. 3. For 0≤i≤D let \(E^{*}_{i} = E^{*}_{i}(x)\) denote the ith dual idempotent of Γ with respect to x. Let A ∗=A ∗(x) denote the dual adjacency matrix of Γ that corresponds to E and x. Let \(\{\theta^{*}_{i}\}_{i=0}^{D}\) denote the dual eigenvalue sequence for the given Q-polynomial structure. Let the scalars β,γ,γ ∗,ϱ,ϱ ∗ be from Lemma 3.1. Let the matrices L=L(x) and R=R(x) be as in Definition 5.1.

With reference to Notation 5.6, we have γ=0 by [3, Theorem 8.2.1] and since Γ is bipartite. Thus by (11),

6 The R/L dependency structure

In this section, we display certain linear dependencies among RL 2,RLR,L 2 R,L.

Lemma 6.1

With reference to Notation 5.6 the following (i), (ii) hold for 1≤i≤D.

-

(i)

\(E^{*}_{i-1} A^{2} A^{*}A E^{*}_{i} = \theta^{*}_{i-1} R L^{2} E^{*}_{i} + \theta ^{*}_{i-1} L R L E^{*}_{i} + \theta^{*}_{i+1} L^{2} R E^{*}_{i}\);

-

(ii)

\(E^{*}_{i-1} A A^{*}A^{2} E^{*}_{i} = \theta^{*}_{i-2} R L^{2} E^{*}_{i} + \theta ^{*}_{i} L R L E^{*}_{i} + \theta^{*}_{i} L^{2} R E^{*}_{i}\).

Proof

Straightforward using A=L+R along with (6) and Lemma 5.3. □

Proposition 6.2

With reference to Notation 5.6, for 1≤i≤D the equation

holds on \(E^{*}_{i}V\).

Proof

Multiply (15) by \(E^{*}_{i-1}\) on the left and by \(E^{*}_{i}\) on the right. Divide the result by \(\theta^{*}_{i-1}-\theta^{*}_{i}\) and simplify using (6) along with Lemmas 5.4(i), 5.5, 6.1. □

We call the equations (16) the R/L dependency structure that corresponds to the given Q-polynomial structure. We have a comment about the coefficients in line (16).

Lemma 6.3

With reference to Notation 5.6 the following (i), (ii) hold.

-

(i)

For 3≤i≤D,

$$\frac{\theta^*_i - \theta^*_{i-1} + (\beta+1) (\theta^*_{i-2} - \theta^*_{i-1})}{\theta^*_i - \theta^*_{i-1}} = \frac{\theta ^*_{i-3} - \theta^*_{i-1}}{\theta^*_i - \theta^*_{i-1}}. $$ -

(ii)

For 1≤i≤D−2,

$$\frac{\theta^*_i - \theta^*_{i-1} + (\beta+1) (\theta^*_i - \theta ^*_{i+1})}{\theta^*_i - \theta^*_{i-1}} = \frac{\theta^*_i-\theta ^*_{i+2}}{\theta^*_i - \theta^*_{i-1}}. $$

Proof

(i) Evaluate the left-hand side using \(\beta+ 1 = (\theta ^{*}_{i-3} - \theta^{*}_{i})/(\theta^{*}_{i-2} - \theta^{*}_{i-1})\).

(ii) Evaluate the left-hand side using \(\beta+ 1 = (\theta ^{*}_{i-1} - \theta^{*}_{i+2})/(\theta^{*}_{i} - \theta^{*}_{i+1})\). □

7 Uniform structures on a poset

In this section, we discuss the uniform property for a partially ordered set [27]. This property involves the notion of a parameter matrix. With reference to Notation 5.6, by a parameter matrix we mean a tridiagonal matrix U=(e ij )1≤i,j≤D with entries in ℝ such that

-

1.

e ii =1 for 1≤i≤D;

-

2.

e i,i−1≠0 for 2≤i≤D or e i−1,i ≠0 for 2≤i≤D;

-

3.

the principal submatrix (e ij ) r≤i,j≤p is nonsingular for 1≤r≤p≤D.

We abbreviate \(e_{i}^{-} := e_{i,i-1}\) for 2≤i≤D and \(e_{i}^{+} := e_{i,i+1}\) for 1≤i≤D−1. For notational convenience, define \(e_{1}^{-} := 0\) and \(e_{D}^{+} := 0\).

By a uniform structure for Γ we mean a pair (U,f) where U=(e ij )1≤i,j≤D is a parameter matrix and \(f=\{f_{i}\} _{i=1}^{D}\) is a vector in ℝD such that the equation

holds on \(E^{*}_{i}V\) for 1≤i≤D. By a strongly uniform structure for Γ we mean a uniform structure (U,f) for Γ such that e i,i−1≠0 and e i−1,i ≠0 for 2≤i≤D. Note that a strongly uniform structure is uniform.

Lemma 7.1

With reference to Notation 5.6, let (U,f) denote a uniform structure for Γ. Then the equation

holds on \(E^{*}_{i-1}V\) for 1≤i≤D.

Proof

The equation (17) holds on \(E^{*}_{i}V\) so

By Lemma 5.4, we have \(L E^{*}_{j} = E^{*}_{j-1} L\) and \(E^{*}_{j}R = RE^{*}_{j-1}\) for 1≤j≤D. Evaluating (18) using this and (14), we find

In line (19), apply the transpose map to each term and recall R=L t. This yields

and the result follows. □

See [27] for more information on uniform posets.

Recall our Q-polynomial structure from Notation 5.6. Our next goal is to determine in which cases the corresponding R/L dependency structure is uniform or strongly uniform. We first consider the case in which β=−2, where β is from line (8).

8 The case β=−2

Recall our Q-polynomial structure from Notation 5.6. In this section, we determine whether the corresponding R/L dependency structure is uniform or strongly uniform, for the case β=−2. We will be discussing the D-dimensional hypercube H(D,2). By [3, Theorem 9.2.1], H(D,2) is distance-regular with diameter D and intersection numbers

By [3, Theorem 9.2.1], the eigenvalues of H(D,2) are \(\{D - 2i\}_{i=0}^{D}\). By [1, p. 304], the ordering \(\{D - 2i\}_{i=0}^{D}\) is Q-polynomial. For this Q-polynomial structure β=2. If D is odd then this Q-polynomial structure is unique. If D is even then H(D,2) has exactly one more Q-polynomial structure, with eigenvalue ordering \(\{(-1)^{i} (D-2i)\}_{i=0}^{D}\) [1, p. 305]. For this Q-polynomial structure β=−2.

Proposition 8.1

([26, Theorem 2])

With reference to Notation 5.6, assume β=−2. Then D is even and Γ is H(D,2) with the following Q-polynomial ordering of the eigenvalues:

Lemma 8.2

With reference to Notation 5.6, assume Γ is H(D,2) and let \(\{\theta_{i}\}_{i=0}^{D}\) denote the Q-polynomial ordering of the eigenvalues (21). Let \(\{\theta^{*}_{i}\}_{i=0}^{D}\) denote the corresponding dual eigenvalue sequence. Then \(\theta^{*}_{i} = \theta_{i}\) for 0≤i≤D. Also

Proof

We have \(\theta^{*}_{i} = \theta_{i}\) by [4, Theorem 1.1]. The remaining assertions follow from Lemma 3.1. □

Proposition 8.3

With reference to Notation 5.6, assume Γ is H(D,2) and consider the Q-polynomial ordering of the eigenvalues (21). Then the corresponding R/L dependency structure is that the equation

holds on \(E^{*}_{i}V\) for 1≤i≤D.

Proof

Evaluate (16) using Lemma 8.2. □

Proposition 8.4

With reference to Notation 5.6, assume Γ is H(D,2) and consider the Q-polynomial ordering of the eigenvalues (21). Then the corresponding R/L dependency structure is not uniform.

Proof

9 The case β≠−2

Recall our Q-polynomial structure from Notation 5.6. Until further notice assume β≠−2. Under this assumption, we show that the corresponding R/L dependency structure is uniform. Moreover, we show that this structure is strongly uniform except in two special cases. The following definition is for notational convenience.

Definition 9.1

With reference to Notation 5.6, assume β≠−2. Let U=(e ij )1≤i,j≤D denote the tridiagonal matrix with entries

For notational convenience, write \(e_{i}^{-} = e_{i,i-1}\) for 2≤i≤D and \(e_{1}^{-} = 0\), and also \(e_{i}^{+} = e_{i,i+1}\) for 1≤i≤D−1 and \(e_{D}^{+} = 0\). Define a vector \(\{f_{i}\}_{i=1}^{D}\) in ℝD such that f i =ϱ/(β+2) for 1≤i≤D.

Proposition 9.2

With reference to Notation 5.6 and Definition 9.1, the equation

holds on \(E^{*}_{i}V\) for 1≤i≤D.

Proof

Divide (16) by β+2 and use Definition 9.1. □

Our next general goal is to determine whether the equations (23) give a uniform or strongly uniform structure. In order to do this, we introduce some parameters q and s ∗.

10 The parameters q and s ∗

Recall our Q-polynomial structure from Notation 5.6. We would like to write the corresponding data in terms of two parameters q and s ∗. However, it will be convenient to exclude several special cases. The first special case is H(D,2) with eigenvalue ordering \(\{D-2i\}_{i=0}^{D}\). The next special case concerns the antipodal quotient of H(2D,2). We denote this quotient graph by \(\overline{H}(2D,2)\). By [3, p. 264], \(\overline{H}(2D,2)\) is distance-regular with diameter D and intersection numbers

and c D =2D. By [3, p. 264], the eigenvalues of \(\overline {H}(2D,2)\) are

By [1, p. 306], the ordering (24) is the unique Q-polynomial structure for \(\overline{H}(2D,2)\). In order to describe some more special cases, we turn our attention to Notation 5.6 with D=3. By [3, Proposition 4.2.2.(ii)], b 2=1 if and only if Γ is antipodal. In this case, b 1=k−1, c 2=k−1, c 3=k. Moreover, Γ has a unique Q-polynomial structure with eigenvalues θ 0=k, θ 1=1, θ 2=−1, θ 3=−k [3, p. 432]. For b 2>1, Γ has exactly two Q-polynomial structures: θ 0=k, \(\theta_{1}=\sqrt{b_{2}}\), \(\theta_{2}=-\sqrt{b_{2}}\), θ 3=−k and θ 0=k, \(\theta_{1}=-\sqrt{b_{2}}\), \(\theta_{2}=\sqrt{b_{2}}\), θ 3=−k [3, p. 432]. In the following table, we summarize the cases discussed so far.

Lemma 10.1

With reference to Notation 5.6, assume the Q-polynomial structure is listed in Table 1. Then the corresponding dual eigenvalue sequence \(\{\theta^{*}_{i}\}_{i=0}^{D}\) is given in the table below.

Case | Dual eigenvalue sequence |

|---|---|

I | \(\theta^{*}_{i} = D-2i \ (0 \le i \le D)\) |

II | \(\theta^{*}_{i} = 2(D-i)^{2} - D \ (0 \le i \le D)\) |

III | \(\theta^{*}_{0} = k\), \(\theta^{*}_{1} = 1\), \(\theta^{*}_{2} = -1\), \(\theta ^{*}_{3}=-k\) |

IV, V | \(\theta^{*}_{0} = \frac{k(k-1)}{c_{2}}, \theta^{*}_{1} = \frac {\theta_{1}(k-1)}{c_{2}}, \theta^{*}_{2} = -1, \theta^{*}_{3}=-\frac{k}{\theta _{1}}\) |

Proof

The \(\{\theta^{*}_{i}\}_{i=0}^{D}\) are computed using (7) with θ=θ 1, once \(\theta^{*}_{0}\) is known. Recall that \(\theta^{*}_{0}\) is the rank of E 1. In Case I, the rank of E 1 is D by [3, Theorem 9.2.1]. In Case II, the rank of E 1 is 2D 2−D by [3, p. 264]. In Case III, the rank of E 1 is k by [3, p. 432]. In Cases IV and V, the rank of E 1 is k(k−1)/c 2 by [3, p. 432]. The result follows. □

Lemma 10.2

With reference to Notation 5.6, assume the Q-polynomial structure is listed in Table 1. Then β, γ ∗, ϱ, ϱ ∗ are given in the table below.

Case | β | γ ∗ | ϱ | ϱ ∗ |

|---|---|---|---|---|

I | 2 | 0 | 4 | 4 |

II | 2 | 4 | 16 | 4(2D−1) |

III | k−1 | 0 | k+1 | k+1 |

IV, V | \(\frac{k-\theta_{1}}{\theta_{1}}\) | \(\frac{(k-1)\theta _{1}-c_{2}}{c_{2}}\) | θ 1(θ 1+k) | \(\frac{(k-1)(k+\theta _{1})}{c_{2}}\) |

Proof

Use Lemma 3.1 and Lemma 10.1. □

We have now completed our description of the special cases.

Lemma 10.3

([9, Lemma 3.2, Lemma 3.3])

With reference to Notation 5.6, assume the Q-polynomial structure is not listed in Table 1 and β≠−2. Then there exist q,s ∗∈ℝ such that the following (i)–(iii) hold.

-

(i)

|q|>1, s ∗ q i≠1 (2≤i≤2D+1);

-

(ii)

b 0=h(q D−1)=c D ,

-

(iii)

θ i =h(q D−i−q i), \(\theta^{*}_{i}=\theta^{*}_{0} + h^{*}(1-q^{i})(1-s^{*} q^{i+1})q^{-i} \ (0 \le i \le D)\), where

Note 10.4

With reference to Notation 5.6, assume the Q-polynomial structure is not listed in Table 1 and β≠−2. Then by [9, Corollary 6.7] the scalar s ∗ from Lemma 10.3 is zero provided D≥12.

Lemma 10.5

With reference to Notation 5.6, assume the Q-polynomial structure is not listed in Table 1 and β≠−2. Then

where q,s ∗ are from Lemma 10.3.

Proof

Use Lemma 3.1 and Lemma 10.3. □

In Lemma 10.3(i), we cited some inequalities involving q and s ∗. We now prove one more inequality involving q and s ∗.

Lemma 10.6

With reference to Notation 5.6, assume the Q-polynomial structure is not listed in Table 1 and β≠−2. Then the scalars q and s ∗ from Lemma 10.3 satisfy s ∗ q≠1.

Proof

We assume s ∗ q=1 and get a contradiction. Recall q∈ℝ and |q|>1. By [5, Theorem 15.6(ii)], the scalar

is nonnegative. The factors q D−1−1 and q D+1−1 have the same sign, since D−1 and D+1 have the same parity. Therefore, q>0. By these comments, q>1. By [5, Theorem 15.6(iii)], the scalar

is nonnegative. Since q>1 the expression (25) is negative, for a contradiction. □

11 The main result

Recall our Q-polynomial structure from Notation 5.6. We are now ready to determine whether the corresponding R/L dependency structure is uniform or strongly uniform. We begin with some computations involving the matrix U from Definition 9.1.

Proposition 11.1

With reference to Notation 5.6 and Definition 9.1, the scalars \(\{e_{i}^{-}\}_{i=2}^{D}\), \(\{e_{i}^{+}\}_{i=1}^{D-1}\), \(\{f_{i}\}_{i=1}^{D}\) are given in the following table:

Case | \(e_{i}^{-}\) | \(e_{i}^{+}\) | f i |

|---|---|---|---|

I | \(-\frac{1}{2}\) | \(-\frac{1}{2}\) | 1 |

II | \(\frac{i-D-2}{2D-2i+1}\) | \(\frac{i-D+1}{2D-2i+1}\) | 4 |

III | \(e_{2}^{-}=\frac{2-k}{2}, e_{3}^{-}=\frac{1}{1-k}\) | \(e_{1}^{+}=\frac{1}{1-k}, e_{2}^{+}=\frac{2-k}{2}\) | 1 |

IV, V | \(e_{2}^{-}=\frac{\theta_{1}-k+1}{\theta_{1}+1}, e_{3}^{-}=-\frac{\theta_{1}^{2}}{c_{2}}\) | \(e_{1}^{+}=\frac{1}{1-k}, e_{2}^{+}=\frac{\theta_{1}-c_{2}}{\theta_{1}(\theta_{1}+1)}\) | \(\theta_{1}^{2}\) |

other | \(-\frac{q^{2}(1 - s^{*} q^{2i-3})}{(q+1)(1-s^{*} q^{2i})}\) | \(-\frac{1-s^{*} q^{2i+3}}{q(q+1)(1-s^{*} q^{2i})}\) | \(\frac{q^{D-1}(1-s^{*} q^{3})^{2}}{(1 - s^{*} q^{D+2})^{2}}\) |

The scalars q,s ∗ are from Lemma 10.3.

Proof

For Cases I–V use Definition 9.1, Lemma 10.1, and Lemma 10.2. For the remaining case use Definition 9.1, Lemma 10.3, and Lemma 10.5. □

With reference to Notation 5.6, assume for the moment that D=3. For an integer t≥2, the following are equivalent: (i) Γ is of McFarland type with parameters (1,t); (ii) the intersection numbers of Γ satisfy k=t(t+1) and c 2=t. Assume that (i), (ii) hold. Then b 1=t 2+t−1, b 2=t 2, c 3=t(t+1). Moreover, the eigenvalue θ 1 is either t or −t. The case θ 1=t is contained in Case IV. We call this situation Case IV′. Let us examine Case IV′ in more detail.

Lemma 11.2

With reference to Notation 5.6, assume the Q-polynomial structure is in Case IV′. Then the following (i)–(iii) hold.

-

(i)

The eigenvalues \(\{\theta_{i}\}_{i=0}^{3}\) are

$$\theta_0=t(t+1), \qquad\theta_1=t, \qquad \theta_2=-t, \qquad\theta_3=-t(t+1). $$ -

(ii)

The dual eigenvalues \(\{\theta^{*}_{i}\}_{i=0}^{3}\) are

$$\theta^*_0=(t+1) \bigl(t^2+t-1\bigr), \qquad \theta^*_1=t^2+t-1, \qquad\theta^*_2=-1, \qquad \theta^*_3=-t-1. $$ -

(iii)

The parameters β,γ ∗,ϱ,ϱ ∗ from Lemma 3.1 are

$$\beta=t, \qquad\gamma^*=t^2+t-2, \qquad\varrho=t^2(t+2), \qquad\varrho^*=t^3+3t^2+t-2. $$

Proof

(i) Immediate from Table 1.

(ii) Immediate from Lemma 10.1.

(iii) Immediate from Lemma 10.2. □

Lemma 11.3

With reference to Notation 5.6, assume the Q-polynomial structure is in Case IV’. Then the following (i)–(iii) hold.

-

(i)

\(e_{2}^{-} = 1-t\) and \(e_{3}^{-}=-t\);

-

(ii)

\(e_{1}^{+} = -(t^{2}+t-1)^{-1}\) and \(e_{2}^{+}=0\);

-

(iii)

f i =t 2 for 1≤i≤3.

Proof

From Case IV of the table of Proposition 11.1, using k=t(t+1), c 2=t, and θ 1=t. □

Corollary 11.4

With reference to Notation 5.6 and Definition 9.1, the following (i)–(iii) hold.

-

(i)

Assume the Q-polynomial structure is in Case II. Then \(e_{D-1}^{+} =0\).

-

(ii)

Assume the Q-polynomial structure is in Case IV′. Then \(e_{2}^{+}=0\).

-

(iii)

For all other cases \(e_{i}^{-} \ne0\) for 2≤i≤D and \(e_{i}^{+} \ne0\) for 1≤i≤D−1.

Proof

(i) Immediate from Case II of the table in Proposition 11.1.

(ii) Immediate from Lemma 11.3(ii).

(iii) Immediate from Proposition 11.1, using Lemma 10.3(i) and Lemma 10.6. □

We recall a result from linear algebra.

Lemma 11.5

([13, p. 29])

Pick an integer d≥3 and let B=(B ij )1≤i,j≤d denote a tridiagonal matrix. Then

Recall the principal submatrices (e ij ) r≤i,j≤p from the beginning of Sect. 7.

Proposition 11.6

With reference to Notation 5.6 and Definition 9.1, for 1≤r≤p≤D the determinant of (e ij ) r≤i,j≤p is given in the following table:

Case | Determinant of (e ij ) r≤i,j≤p | ||

|---|---|---|---|

I | \(\frac{p-r+2}{2^{p-r+1}}\) | ||

II | \(\frac {(p-r+2)(2D-r-p+1)(D-p+1)_{p-r}}{2^{p-r+2}(D-p+1/2)_{p-r+1}}\) | ||

III | 1 if p=r; \(\frac{k}{2(k-1)}\) if p=r+1; \(\frac{1}{k-1}\) if p=r+2 | ||

IV, V |

| ||

other | \(\frac{(q^{p-r+2}-1) (1-s^{*}q^{p+r})(s^{*} q^{2r+1} ; q^{2})_{p-r}}{(q+1)^{p-r+1}(q-1)(s^{*} q^{2r} ; q^{2})_{p-r+1}}\) |

We are using the notation

Proof

For Cases III–V the result follows from a straightforward computation using Proposition 11.1. For the other cases use Proposition 11.1, Lemma 11.5, and induction on p−r. □

Corollary 11.7

With reference to Notation 5.6 and Definition 9.1, for 1≤r≤p≤D the principle submatrix (e ij ) r≤i,j≤p is nonsingular.

Proof

Immediate from Lemma 10.3(i) and Proposition 11.6. □

Proposition 11.8

With reference to Notation 5.6 assume β≠−2. For Case II and Case IV′ the corresponding R/L-dependency structure is uniform but not strongly uniform. In all other cases, the corresponding R/L-dependency structure is strongly uniform.

Proof

Immediate from Proposition 9.2, Corollary 11.4 and Corollary 11.7. □

Theorem 11.9

Let Γ denote a bipartite distance-regular graph with diameter D≥3 and valency k≥3. Fix a vertex x and let L (resp., R) denote the corresponding lowering (resp., raising) matrix from Definition 5.1. Let \(\{\theta_{i}\}_{i=0}^{D}\) denote a Q-polynomial ordering of the eigenvalues of Γ. Consider the following cases:

-

(i)

Γ is the hypercube H(D,2) with D even and θ i =(−1)i(D−2i) for 0≤i≤D;

-

(ii)

Γ is the antipodal quotient \(\overline{H}(2D,2)\) and θ i =2D−4i for 0≤i≤D;

-

(iii)

D=3 and Γ is of McFarland type with parameters (1,t) for some integer t≥2, and θ 0,θ 1,θ 2,θ 3 are t(t+1),t,−t,−t(t+1) respectively.

In Case (i), the corresponding R/L-dependency structure is not uniform. In Cases (ii) and (iii), this structure is uniform but not strongly uniform. In all other cases this structure is strongly uniform.

Proof

Immediate from Propositions 8.4 and 11.8. □

References

Bannai, E., Ito, T.: Algebraic Combinatorics I: Association Schemes. The Benjamin-Cummings Lecture Notes Ser., vol. 58. Benjamin-Cummings, Menlo Park (1984)

Beth, T., Jungnickel, D., Lenz, H.: Design Theory. Cambridge University Press, Cambridge, (1999)

Brouwer, A.E., Cohen, A.M., Neumaier, A.: Distance-Regular Graphs. Springer, Berlin (1989)

Caughman, J.S.: Spectra of bipartite P- and Q-polynomial association schemes. Graphs Comb. 14, 321–343 (1998)

Caughman, J.S.: The Terwilliger algebras of bipartite P- and Q-polynomial schemes. Discrete Math. 196, 65–95 (1999)

Caughman, J.S.: Bipartite Q-polynomial quotients of antipodal distance-regular graphs. J. Comb. Theory, Ser. A 76, 291–296 (1999)

Caughman, J.S.: The parameters of bipartite Q-polynomial distance-regular graphs. J. Algebr. Comb. 15, 223–229 (2002)

Caughman, J.S.: The last subconstituent of a bipartite P- and Q-polynomial association scheme. Eur. J. Comb. 24, 459–470 (2003)

Caughman, J.S.: Bipartite Q-polynomial distance-regular graphs. Graphs Comb. 20, 47–57 (2004)

Curtin, B.: 2-homogeneous bipartite distance-regular graphs. Discrete Math. 187, 39–70 (1998)

Curtin, B.: The Terwilliger algebra of a 2-homogeneous bipartite distance-regular graph. J. Comb. Theory, Ser. A 81, 125–141 (2001)

Delsarte, P.: An algebraic approach to the association schemes of coding theory. Philips Res. Rep., Suppl. No. 10 (1973)

Horn, R.A., Johnson, C.R.: Matrix Analysis. Cambridge University Press, Cambridge, (2009)

Jurišić, A., Terwilliger, P., Žitnik, A.: The Q-polynomial idempotents of a distance-regular graph. J. Comb. Theory, Ser. A 100, 683–690 (2010)

Lang, M.: Tails of bipartite distance-regular graphs. Eur. J. Comb. 23, 1015–1023 (2002)

Lang, M.: A new inequality for bipartite distance-regular graphs. J. Comb. Theory, Ser. A 90, 55–91 (2004)

Lang, M., Terwilliger, P.: Almost-bipartite distance-regular graphs with the Q-polynomial property. Eur. J. Comb. 28, 258–265 (2007)

Miklavič, Š.: Q-polynomial distance-regular graphs with a 1=0. Eur. J. Comb. 25, 911–920 (2004)

Miklavič, Š.: On bipartite Q-polynomial distance-regular graphs. Eur. J. Comb. 28, 94–110 (2007)

Miklavič, Š.: On bipartite Q-polynomial distance-regular graphs with c 2=1. Discrete Math. 307, 544–553 (2007)

Miklavič, Š.: Q-polynomial distance-regular graphs with a 1=0 and a 2≠0. Eur. J. Comb. 30, 192–207 (2009)

Pascasio, A.: On the multiplicities of the primitive idempotents of a Q-polynomial distance-regular graph. Eur. J. Comb. 23, 1073–1078 (2002)

Pascasio, A.: A characterization of Q-polynomial distance-regular graphs. Discrete Math. 308, 3090–3096 (2008)

Tanaka, H.: A bilinear form relating two Leonard systems. Linear Algebra Appl. 431, 1726–1739 (2009)

Tanaka, H.: Vertex subsets with minimal width and dual width in Q-polynomial distance-regular graphs. arXiv:1011.2000

Terwilliger, P.: P- and Q-polynomial schemes with q=−1. J. Comb. Theory, Ser. A 42, 64–67 (1987)

Terwilliger, P.: The incidence algebra of a uniform poset. Math. Appl. 20, 193–212 (1990)

Terwilliger, P.: The subconstituent algebra of an association scheme I. J. Algebr. Comb. 1, 363–388 (1992)

Terwilliger, P.: The subconstituent algebra of an association scheme III. J. Algebr. Comb. 2, 177–210 (1993)

Terwilliger, P.: A new inequality for distance-regular graphs. Discrete Math. 137, 319–332 (1995)

Terwilliger, P.: Two relations that generalize the q-Serre relations and Dolan–Grady relations. In: Physics and Combinatorics Nagoya, 1999, pp. 377–398. World Scientific, River Edge (2001)

Terwilliger, P.: The displacement and split decompositions for a Q-polynomial distance-regular graph. Graphs Comb. 21, 263–276 (2005)

Weng, C.: Kite-free P- and Q-polynomial schemes. Graphs Comb. 11, 201–207 (1995)

Weng, C.: Classical distance-regular graphs of negative type. J. Comb. Theory, Ser. A 76, 93–116 (1999)

Acknowledgements

Š. Miklavič was supported in part by ARRS—Javna agencija za raziskovalno dejavnost Republike Slovenija, program no. P1-0285, project no. J1-4010-1669 and project no. BI-USA/09-12-009.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Miklavič, Š., Terwilliger, P. Bipartite Q-polynomial distance-regular graphs and uniform posets. J Algebr Comb 38, 225–242 (2013). https://doi.org/10.1007/s10801-012-0401-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10801-012-0401-1