Abstract

This paper presents a novel approach to express and evaluate the complex class of queries in moving object databases called spatiotemporal pattern queries (STP queries). That is, one can specify temporal order constraints on the fulfillment of several predicates. This is in contrast to a standard spatiotemporal query that is composed of a single predicate. We propose a language design for spatiotemporal pattern queries in the context of spatiotemporal DBMSs. The design builds on the well established concept of lifted predicates. Hence, unlike previous approaches, patterns are neither restricted to specific sets of predicates, nor to specific moving object types. The proposed language can express arbitrarily complex patterns that involve various types of spatiotemporal operations such as range, metric, topological, set operations, aggregations, distance, direction, and boolean operations. This work covers the language integration in SQL, the evaluation of the queries, and the integration with the query optimizer. We also propose a simple language for defining the temporal constraints. The approach allows for queries that were never available. We provide a complete implementation in C+ + and Prolog in the context of the Secondo platform. The implementation is made publicly available online as a Secondo Plugin, which also includes automatic scripts for repeating the experiments in this paper.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The area of moving objects databases has been active since the early 2000s, and is recently receiving a lot of interest because of the advances in the positioning and sensor technologies that generates large amounts of moving objects data. These databases deal with the geometries that change over time, also called spatiotemporal data. There are two classes of models for such data. The first deals with the current movement and the predicted near future (e.g. [21]). These models are optimized for cheaper updates. The second class deals with the trajectories or the history of the movement (e.g. [16]), and these models are optimized for cheaper queries. In this paper, we focus on the second class of models, the trajectory databases.

Having the spatiotemporal trajectories of the moving objects stored in a database system allows for issuing spatiotemporal queries. One can query, for example, for animals which crossed a certain lake during a certain time interval or for the total length of a car trajectory inside a certain zone. There has been a lot of work on providing spatiotemporal data management and query operations (e.g. [4]). Recently more focus is given to the nearest neighbor queries (e.g. [11, 15]), and the trajectory similarity queries (e.g. [19]).

However, due to the recent application domains, trajectories are getting longer. Additionally, due to the privacy restrictions, trajectories are getting anonymized. The precise position and/or extent of the moving objects are more and more replaced by the events or the changes that happened during the movement, the so called semantic trajectories [2]. It is difficult to query, for example, sequences of such changes of data using traditional spatiotemporal queries. This difficulty comes from the fact, that they are composed of one predicate. In many cases, one would need to express temporal orders (relative or absolute) of several changes, each of which need to be expressed as a predicate. For example, find all trains that encountered a delay of more than half an hour after passing through a snow storm is a query that expresses two changes/predicates, one happening after the other. It is very difficult if not impossible to express such a query using the traditional spatiotemporal query methods.

Spatiotemporal pattern (STP) queries provide a more complex query framework for moving objects. In particular, they specify temporal order constraints among a set of time-dependent predicates. For example, suppose the predicates P, Q, and R that may hold over one or more time intervals and/or instants. We would like to be able to express conditions like the following:

-

P then (later) Q then R.

-

P ending before 8:30 then Q for no more than 1 h.

-

(Q then R) during P.

The predicates P, Q, and R, etc. might be of the form:

-

Vehicle X on road W.

-

Train X is inside a snow storm Y.

-

The extent of the storm area Y is larger than 4 kms2.

-

The speed of air plane Z is between 400 and 500 km/h.

For such conditions to hold, there must exist a time interval for each of the predicates, during which it is fulfilled, and this set of time intervals must fulfill the temporal order in the condition. The spatiotemporal patterns described by such conditions cannot be expressed by traditional spatiotemporal queries. One would rather need the spatiotemporal pattern queries.

More about the importance of STP queries in many fields of application is illustrated in [9]. So far we are talking about the spatiotemporal patterns that occur within individual trajectories. That is every trajectory in the database can individually answer the pattern without knowledge of other trajectories. The term Spatiotemporal Patterns is also used in the literature to refer to group patterns. This is more related to the spatiotemporal data mining literature. The methods analyze simultaneous movements and the interaction between objects (e.g. patterns like leadership, play, fighting, migration, trend-setting, ... etc). The research in this direction aims at developing a toolbox of data mining algorithms and visual analytic techniques for movement analysis. For example, algorithms for the flock, leadership, convergence and encounter patterns are presented in [12]. In this paper, we are focusing on the individual spatiotemporal pattern queries, simply denoted spatiotemporal pattern queries (STP queries) during the rest of the paper.

Few proposals exist for handling STP queries as will be detailed in the related work section. All of them lack generality in the patterns that can be expressed. They are limited to certain moving objects types (moving points in most of the proposals), and to certain types of spatiotemporal predicates (spatial predicates and nearest neighbor predicates). The approach described in this paper, expresses and evaluates STP patterns that are neither restricted to certain types of moving objects, nor to certain types of predicates. Our contributions are the following:

-

The proposed approach is based on a very general and powerful class of predicates, the so-called lifted predicates [16]. They are very powerful as they are simply the time dependent version of arbitrary static predicates. Instead of returning a bool value (like standard predicates) they return a moving(bool) (time dependent booleans as defined later). Our approach allows one to formulate temporal constraints on the results of arbitrary expressions returning such moving booleans. Formulating STP queries over lifted predicates allows for a wide range of queries that are not addressed before.

-

The proposed approach can be easily extended to support more complex patterns. Section 6 describes one such extension.

-

In contrast to previous work we are able to actually integrate STP queries into the query optimizer. Obviously for an efficient execution of pattern queries on large databases the use of indexes is mandatory. In Section 7 we consider how STP queries can be mapped by the query optimizer to efficient index accesses.

-

We propose a simple language for describing the relationship between two time intervals (e.g. Allen’s operators). The language makes it easier, from the user point of view, to express interval relations without the need to memorize their names.

-

The complete implementation of the work in this paper is done in the context of the Secondo platform [25]. It is publicly available as a Secondo Plugin and can be downloaded from the Plugins web site [22]. Parallel to this paper, we have written a user manual describing how to install and run the Plugin within a Secondo system.

-

There are automatic scripts for repeating the experiments in this paper. They are installed during the installation of the Plugin. Section 11 describes the procedure to repeat the experiments. The scripts, together with the well documented source code provided in the Plugin, allow the readers to explore our approach, further elaborate on it, and compare with other approaches.

The rest of this paper is organized as follows. Section 2 reviews the related work. Section 3 gives a brief background about the moving objects databases and recalls some necessary definitions from previous work. In Section 4, we define the proposed language. Section 5 formalizes the spatiotemporal pattern predicate as a constraint satisfaction problem, and explains the evaluation algorithms. In Section 6, the basic spatiotemporal pattern predicate is extended into a more expressive version. In Section 7 we show how to integrate our approach seamlessely with the query optimizers. Section 8 is dedicated to the technical aspects of the implementation in the Secondo framework. The experimental evaluation is shown in Section 9. In Section 10, we demonstrate two application examples that emphasize the expressive power of our approach. Section 11 and the Appendices at the end of the paper describe the experimental repeatability. Finally we conclude in Section 12.

2 Related work

A theory and a design for spatiotemporal pattern queries, although important, are not yet well established. Only few proposals exist. In [5], a model that relies on a discrete representation of the spatiotemporal space is presented. The 2D space is partitioned in a finite set of user defined partitions, called zones, each of which is assigned a label. The time domain is partitioned into constant-sized intervals. The trajectories are represented as strings of labels. For example, the trajectory part rzzzh represents a moving object that stayed in zone r for one time unit, moved to zone z and stayed there for three time units, then moved to zone h for one time unit. The user query is composed as a formal expression, which is then evaluated using efficient string matching techniques.

This approach is not general in the sense that the space and time have to be partitioned. The partitioning depends on the intended application and has to be done in advance. Moreover, only patterns that describe the changes of the location of moving points can be expressed. The approach leaves behind all other kinds of predicates (e.g. topological, metric comparisons, ...) as well as other types of moving objects (e.g. moving regions).

In [17], an index structure and efficient algorithms to evaluate STP queries that consist of spatial and neighborhood predicates is presented. The work addresses the problem of conjoint neighborhood queries (e.g. find all objects that were as close as possible to A at time T 1 then were as close as possible to B at time T 2). The two NN conditions in this query have to be evaluated conjointly. In other words, an object which minimizes the sum of the two distances at the two time points is the answer of this query.

Again the approach addresses only limited types of predicates, and handles moving points only. It tightly couples the evaluation of the predicates with the evaluation of the STP query itself. On the one hand, this allows for efficient evaluation of the STP query. It also allows for the conjoint neighborhood queries, which are not possible in our appraoch for example. On the other hand, it is very specific to this set of predicates. In order to support other predicates and/or other data types, one has to find a way to extend their evaluation algorithms. In the context of systems, a modular design that decouples the predicate evaluation from the STP query evaluation would be preferred.

The series of publications [7–9], and [20] provide a concrete formalism for spatiotemporal developments. A spatiotemporal development is a composite structure built as an alternating sequence of spatiotemporal and spatial predicates, and they are themselves spatiotemporal predicates. They describe the change, wrt. time, in the spatial relationship between two moving objects. Consider, for example, a moving point MP and a moving region MR. The development MP Crosses MR is defined as:

-

Crosses = Disjoint meet Inside meet Disjoint

where meet is a spatial predicate that yields true when its two arguments touch each other, and Disjoint is a spatiotemporal predicate that yields true when its two arguments are always spatially disjoint. The spatiotemporal predicates, denoted by being capitalized, differ from the spatial predicates in that, the former hold at time intervals while the latter hold at instants. Spatiotemporal developments consider two spatiotemporal objects and precisely describe the change in their topological relationship.

The spatiotemporal developments in their definition are not equivalent to spatiotemporal patterns, as they can only describe the change in the topological relationship between two objects. This is not general enough to describe STPs. A natural way of describing STPs would involve several interactions between one trajectory and many other objects in the spatiotemporal space, as well as the trajectory’s own motion attributes (e.g. speed, direction, ...etc.).

Additionally, all the related works discussed above share two limitations. First, they do not address issues of system integration and query optimization (e.g. SQL style syntax). Second, only sequential patterns are allowed. A pattern is not allowed to include, for example, concurrent predicates. As shown in the rest of this paper, our approach overcomes these limitations. Mainly, it is designed with expressiveness, system integration, and extensibility in mind.

3 Moving objects databases

In previous work [10, 16], and [4], a model for representing and querying moving objects is proposed. The work is based on abstract data types (ADT). The moving type constructor is used to construct the moving counterpart of every static data type. Moving geometries are represented using three abstractions; moving(point), moving(region) and moving(line). Simple data types (e.g. integer, bool, real) are also mapped to moving types. In the abstract model [16], moving objects are modeled as temporal functions that map time to geometry or value. For example, moving points are modeled as curves in the 3D space (i.e. time to the 2D space).

In [10] a discrete data model implementing the abstract model is defined. For all data types in the abstract model, corresponding discrete types whose domains are defined in terms of finite representations are introduced. In the discrete model, moving types are represented by the sliced representation as units.

Definition 1

A data type moving(\(\underline{\alpha}\)) is a set of units. Every unit is a pair (I, Instant →α). The semantic of a unit is that at any time instant during the interval I, the value of the instance can be calculated from the temporal function Instant →α. Unit intervals are not allowed to overlap, yet gaps are possible (i.e. periods during which the value of the object is undefined).

The moving data types are denoted by appending m to the standard type (e.g. mpoint denotes moving(point)). Similarly, the unit types are denoted by appending u. The mpoint, for example, is modeled in the discrete model as a set of upoints, each of which consists of a time interval and a line function. This is illustrated in Fig. 1. The coordinates of the mpoint at any time instant within the interval are obtained by evaluating the line function. The moving type constructor is similarly applied to the scalar data types (e.g. real, string, bool) [16]. A precise definition of the mbool data type is given in Section 4.

The model offers a large number of operations that fall into three classes:

-

1.

Static operations over the non-moving types. Examples are the topological predicates, set operations and aggregations.

-

2.

Spatiotemporal operations offered for the temporal types (e.g. trajectory of an mpoint, area of an mregion).

-

3.

Lifted operations offered for combinations of moving and non-moving types. Basically they are time dependent versions of the static operations.

Lifted operations are obtained by a mechanism called temporal lifting [16]. All the static operations defined for non-moving types are uniformly and consistently made applicable to the corresponding moving types. For example, a static predicate and its corresponding lifted predicate are defined as follows.

Definition 2

A static predicate is a function with the signature

where T i is a type variable that can be instantiated by any static/non-temporal data type (e.g. integer, point, region).

Example

BrandenburgGate inside Berlin.

Definition 3

A lifted predicate is a function with the signature

where ↑ is the moving type constructor. A lifted predicate is, hence, obtained by allowing one or more of the parameters of a static predicate to be of a moving data type. Consequently, the return type is a time dependent boolean mbool.

Example

Train_RE1206 inside Berlin.

Note that inside in this example is a lifted predicate because the Train_RE1206 is a moving object. It is therefore different from the standard inside predicate in the previous example.

4 Spatiotemporal pattern predicates

The Spatiotemporal Pattern Predicate (STP predicate) is the tool that we propose for expressing STP queries. It describes the pattern as a set of time-dependent predicates that are fulfilled in a certain temporal arrangement (e.g. a sequence). To motivate the idea of our design, consider the following example:

Example

A query for possible bank robbers may look for the cars which entered a gas station, kept close to the bank for a while, then drove away fast.

The query describes an STP consisting of three time-dependent predicates: car inside gas station, car close to the bank, and speed of car ≥ 80 km/h. The predicates are required to be fulfilled in a sequential temporal order.

We propose a modular language design of the STP predicate. It consists of two parts. The first defines a special kind of predicates that accept moving object arguments and report the time intervals, during which they are fulfilled. The second part is to define a language for temporal constraints on the predicate fulfillments.

Fortunately, the lifted predicates [16] in Definition 3 do exactly what is needed in the first part. Lifted predicates yield objects of type mbool, which tell about the time intervals of the predicate fulfillment. Moreover, they are not restricted to certain data types of arguments nor to certain types of operations. Formulating the STP predicate on the top of the lifted predicates easily leverages a considerable part of the available infrastructure. The temporal constraints, in the second part, enforce certain temporal arrangements between the mbool results of the lifted predicates.

We start here by a rough illustration. The details follow later in this section. The bank robbers query is written as follows:

-

SELECT c.licencenumber

-

FROM cars c, landmark l

-

WHERE l.type = ”gas station” and

-

pattern([ c.trip inside l.region as gas,

-

distance(c.trip, bank) < 50.0 as bnk,

-

speed(c.trip) > 80000 as leaving],

-

[stconstraint(gas, bnk, vec(aabb)),

-

stconstraint(bnk, leaving, vec(abab, aa.bb, aabb)])

where c.trip is an mpoint that stores the car’s trajectory. The STP predicate, denoted pattern in the SQL-like syntax, includes a set of three lifted predicates:

c.trip inside l.region,

distance(c.trip, bank) < 50.0,

speed(c.trip) > 80000

having the aliases gas, bnk, and leaving. The syntax of the STP predicate assigns aliases for the lifted predicates, so that they can be referred to in the temporal constraints. This is analogous to the aliases given to attributes and tables in the standard SQL. An alias of a lifted predicate can be any valid unique identifier. The STP predicate in this example includes two temporal constraints, denoted stconstraint in the SQL-like syntax. Each constraint is stating a temporal relationship between two of the lifted predicates (i.e. binary temporal constraints). The syntax vec(.) states the temporal order between the fulfillments of the two lifted predicates. Roughly speaking, the first temporal constraint states that the car came close to the bank after it has left the gas station. The second constraint is a bit more tricky. We wish to say that the car left the bank area quickly. This means that the car started fast, or may have started normally and then sped up after a while. The three arguments to the vec(.) operator state these three possibilities, as formalized later in this section.

Now we start the formal definition of the STP predicate. We first recall the definition of the mbool data type from [10]. Let Instant denote the domain of time instants, isomorphic to \( \mathbb{R}\). Let I T be the set of possible time intervals, i.e:

That is, a time interval can be left-closed and/or right-closed as indicated by the values of lc and rc respectively. It is also possible that the interval collapses into a single time instant, see [10]. Let the domain of Boolean Unit ubool be:

and the domain of mbool is:

where i 1 adjacent \(i_2:\Leftrightarrow i_1.t_2 = i_2.t_1 \wedge (i_1.rc \vee i_2.lc) \). This last condition ensures the mbool objects have a unique representation, the one with the minimum number of units.

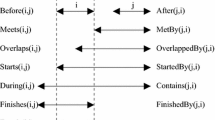

Following we define a language for temporal relationships between pairs of time intervals. It will be the base for the temporal constraints between the lifted predicates inside the STP predicate. In the temporal logic literature some studies define the relationships between pairs of time intervals, and assign them names (e.g. the 13 Allen’s operators [1]). Here we propose a language, instead of names. This is because, in our case 26 such relationships are possible, which makes it difficult for a user to memorize the names. Table 1 shows the 26 terms of this language, and a graphical illustration of each. In the terms, the letters aa denote the begin and end time instants of the first interval. Similarly bb are the begin and end of the second interval. The order of letters describes the temporal relationship, that is, a sequence ab means a < b. The dot symbol denotes the equality constraint, hence, the sequence a.b means a = b, and a.a means that the start and the ends of the first interval are the same (i.e. the interval degenerates into a time instant).

Formally, let \(\mathit{IR}\) be the set of interval relationships of Table 1, that is

Let i 1, i 2 ∈ I T , \(ir = s_1s_2...s_k \in \mathit{IR}\) (note that 4 < = k < = 7, that is, the shortest term includes two a’s and two b’s, and the longest term includes additionally three dots).

Two time intervals i 1, i 2 ∈ I T fulfill a set of interval relationships if they fulfill any of them, that is:

The vec(.) in the SQL-like syntax allows for composing such \(\mathit{SI}\) subsets. For syntactic elegance, one can assign names to them, and use the names in the queries. This is done using the let statement as follows:

-

let then = vec(abab, aa.bb, aabb);

-

let later = vec(aabb);

-

-

SELECT c.licencenumber

-

FROM cars c, landmark l

-

WHERE l.type = ”gas station” and

-

pattern([c.trip inside l.region as gas,

-

distance(c.trip, bank) < 50.0 as bnk,

-

speed(c.trip) > 80000 as leaving],

-

[stconstraint(gas, bnk, later),

-

stconstraint(bnk, leaving, then])

That is, later and then can hence be used inside the stconstraint operator.

For ease of presentation, in the following we define the STP predicate within the relational data model. The definitions can however be adapted easily to fit within other database models (e.g. object oriented), thanks to the ADT modeling of the moving objects which does not depend on a particular database model.

Let tuple denote a tuple type in the sense of the relational data model.Footnote 1 Let D tuple denote the domain of the tuples conforming to this type. Let the domain of the type mbool be:

A time-dependent predicate is a function with signature:

hence it is a function

We denote a predicate with this signature as p tuple , and a set of such predicates as P tuple when the tuple type is relevant.

Note that the definition of a time-dependent predicate is more general than that of a lifted predicate. A lifted predicate also yields an mbool, but it must correspond to some standard static predicate, see Definition 3. Formally, the STP predicate is composed of a set of time-dependent predicates, and a set of temporal constraints, as shown later in this section. Throughout the text, however, we are often using the term lifted predicate instead of the more general term time-dependent predicate because the former seems more relevant from the user point of view. That is, users will be using lifted predicates to compose their STP queries. This will become obvious from the many query examples in the rest of this paper.

Let P tuple = {p 1, ..., p n } be a set of time-dependent predicates. A temporal constraint on P tuple is an element of the set:

Hence it is a binary temporal constraint, that assigns a pair of predicates in P tuple a set of interval relationships. In the SQL-like syntax, the operator stconstraint expresses a temporal constraint. It accepts three arguments: two aliases of time-dependent predicates, and a set of interval relationships composed by the vec(.) operator.

Based on the above definitions, a spatiotemporal pattern predicate is defined as follows:

Definition 4

A spatiotemporal pattern predicate (STP predicate) is a pair (P tuple , C), where \(C \subseteq TC(P_{tuple})\).

In SQL, the operator pattern denotes the spatiotemporal pattern predicate. For an STP predicate to hold, all the temporal constraints in C must be fulfilled. Formally it is as follows:

Let t ∈ D tuple be a tuple and P tuple = {p 1, ..., p n }, we denote by p k (t) the evaluation of p k ∈ P tuple on t. Hence \(p_k(t) \in \mathit{MBool}\). We also define the set of candidate assignments \(\mathit{CA}(P_{tuple}, t)\) as:

where \(p_k^{true} = \{i | (i, true) \in p_k(t) \}\). That is, the \(CA(P_{tuple}, t)\) is simply the Cartesian product of the sets of time intervals during which the time-dependent predicates in P tuple are fulfilled with respect to the tuple t.

Let \(ca = (i_1, ... , i_n) \in CA(P_{tuple}, t)\) and let c = (j, k, SI) ∈ TC(P tuple ) be a temporal constraint

Let \(C \subseteq TC(P_{tuple})\) be a set of temporal constraints, and let t ∈ D tuple be a tuple. The set of supported assignments of C is defined as:

That is, for a candidate assignment to be a supported assignment, it must fulfill all the constraints in C. An STP predicate is fulfilled for a given tuple if and only if such a supported assignment is found.

Definition 5

A spatiotemporal pattern predicate is a function with the signature tuple → bool. Given a tuple t of type tuple, its evaluation is defined as:

5 Evaluating spatiotemporal pattern predicates

The formalization of the STP predicate in the previous section maps pretty well into the well known Constraint Satisfaction Problem (CSP). This section illustrates this mapping and the algorithms used to evaluate the STP predicate.

Definition 6

Formally, a constraint satisfaction problem is defined as a triple \(\langle X,D,C \rangle\), where X is a set of variables, D is a set of initial domains, and C is a set of constraints. Each variable x i ∈ X has a non-empty domain d i ∈ D. CSP algorithms remove values from the domains during evaluation once it is discovered that the values cannot be part of a solution. Each constraint involves a subset of variables and specifies the allowable combinations of values for this subset. An assignment for a subset of variables is supported if it satisfies all constraints. A solution to the CSP is in turn a supported assignment of all variables.

Recalling, from Definition 4, that the STP predicate contains the set P tuple = {p 1, ... , p n } of time-dependent predicates, a straightforward way to construct the sets X, D of the CSP is as follows:

-

1.

For every p i ∈ P tuple , define a variable x i with the same name as the alias of p i in the user query. Set X : = X ∪ x i .

-

2.

Given a tuple t of type tuple, compute for every p i ∈ P tuple its evaluation p i (t).

-

3.

For every \(p_{ij}^{true} \in p_i^{true}\), set \(D_i := D_i \cup p_{ij}^{true}\).

That is, a CSP variable is created for every time-dependent predicate in the STP predicate. The aliases of the lifted predicates, as specified in the user query, are used as the variable names. The initial domain of every CSP variable is the set of time intervals during which the corresponding time-dependent predicate is fulfilled. Finally the set of constraints in the CSP is the same as the set of constraints in the STP predicate. As is shown next, this is not exactly how we map the STP predicate into a CSP. The main difference is that the domains of the variables (i.e. the set D) are evaluated in a lazy fashion. Following, we briefly discuss the known algorithms for solving CSPs. Later in this section, we will be proposing another algorithm for evaluating the CSP that fits more with our approach.

A CSP having only binary constraints is called binary CSP and can be represented graphically in a constraints graph. The nodes of the graph are the variables and the links are the binary constraints. Two nodes are linked if they share a constraint. The neighborhood of a variable in the constraints graph are all variables that are directly linked to it. The spatiotemporal pattern predicate is fulfilled if and only if its corresponding CSP has at least one supported assignment.

CSPs are usually solved using variants of the backtracking algorithm. The algorithm is a depth-first tree search that starts with an empty list of assigned variables and recursively tries to find a solution (i.e. a supported assignments of all variables). In every call, backtracking adds a new variable to its list and tries all the possible assignments. If an assignment is supported, a new recursive call is made. Otherwise the algorithm backtracks to the last assigned variable. The algorithm runs in exponential time and space.

Constraint propagation methods [3] (also called local consistency methods) can reduce the domains before backtracking to improve the performance. Examples are the ARC Consistency and Neighborhood Inverse Consistency (NIC) algorithms. They detect and remove some values from the variable domains that cannot be part of a solution. Local consistency algorithms do not guarantee backtrack-free search. To have the nice property of backtrack-free search one would need to enforce n-consistency (equivalent to global consistency), which is again exponential in time and space.

The solvers for CSPs assume that the domains of the variables are known in advance. This is, however, a precondition that we wish to avoid. In the STP predicate, calculating the domain of a variable is equivalent to evaluating the corresponding lifted predicate. Since this can be expensive, we wish to delay the evaluation of the domains.

The proposed algorithm Solve Pattern below tries to solve the sub-CSP of k − 1 variables (\(CSP_{k-1}\)) first and then to extend it to \(CSP_{k}\). Therefore, an early stop is possible if a solution to the \(CSP_{k-1}\) cannot be found. Which means that, in case no solution is found, the evaluation will be stopped as soon as this is realized, without the uncessary evaluation of the remaining lifted predicates.

The Solve Pattern algorithm uses three data structures: the \(\mathit{SA}\) list (for Supported Assignments), the Agenda and the Constraint Graph. The Agenda keeps a list of variables that are not yet consumed by the algorithm. One variable from the Agenda is consumed in every iteration. Every supported assignment in the \(\mathit{SA}\) list is a solution for the sub-CSP consisting of the variables that have been evaluated so far. In iteration k there are k − 1 previously evaluated variables and one newly evaluated variable (X k with domain D k ). Every entry in \(\mathit{SA}\) at this iteration is a solution for the \(\mathit{CSP_{k-1}}\). To extend the \(\mathit{SA}\), the Cartesian product of \(\mathit{SA}\) and D k is calculated. Then only the entries that constitute a solution for \(\mathit{CSP_{k}}\) are kept in \(\mathit{SA}\). \(\mathit{CSP_{k}}\) is constructed using the consumed variables and their corresponding constraints in the constraint graph.

Algorithm Solve Pattern

input: variables, constraints

output: whether the CSP is consistent or not

1. Clear SA , Agenda and Constraint Graph

2. Add all variables to Agenda

3. Add all constraints to the Constraint Graph

4. WHILE Agenda not empty

-

(a) Pick a variable X i from the Agenda

-

(b) Calculate the variable domain D i (i.e. evaluate the

-

corresponding lifted predicate)

-

(c) Extend SA with D i

-

(d) IF SA is empty return NotConsistent

5. return Consistent

Algorithm Extend

input: i, D i ; the index and the domain of the newly

evaluated variable

1. IF SA is empty

-

(a) FOREACH interval I in D i

-

i. INSERT a new row sa in SA having sa[i]= I and

-

undefined for all other variables

ELSE

(a) set SA = the Cartesian product SA × D i

(b) Construct the subgraph CSP k that involves the

variables in SA from the Constraint Graph.

(c) FOREACH sa in SA

-

i. IF sa does not satisfy the CSP k , remove sa from

-

SA

The methodology for picking the variables from the Agenda has a big effect on the run time. The best method will choose the variables, so that inconsistencies are detected soon. For example, suppose an STP predicate having four predicates with aliases u, v, w, and x. The constraints are:

If the variables are picked in sequential order u, v, w, then x, the space and time costs are the maximum. Since u, v, and w are not connected by any constraints, the \(\mathit{SA}\) is populated by the Cartesian product of their domains in the first three iterations. The actual filter to \(\mathit{SA}\) starts in the fourth iteration after x is picked.

The function that picks the variables from the Agenda chooses the variables according to their connectivity rank in the Constraint Graph. The connectivity rank of a variable is the summation of its individual connectivities in the Constraint Graph. If a given variable is connected to an Agenda variable with a constraint, it gets 0.5 connectivity score for this constraint. This means that evaluating this variable contributes 50% in evaluating the constraint because the other variable is still not evaluated. If the other variable in the constraint is a non-Agenda variable (i.e. a variable that is already evaluated), the connectivity score is 1. Back again to the example, in the first iteration, the variables u, v, and w have connectivity ranks of 0.5, whereas x has 1.5. Therefore, x is picked in the first iteration. In the second iteration u, v, and w have equal connectivity ranks of 1, so the algorithm picks any of them.

This variable picking methodology tries to maximize the number of evaluated constraints in every iteration with the hope that they filter the \(\mathit{SA}\) list and detect inconsistencies as soon as possible.

The time cost of the Solve Pattern algorithm is

where n is the number of variables, d k is the number of values in the domain of the k th variable and e k is the number of constraints in \(CSP_{k}\). The storage cost is

The algorithm runs in O(ed n) and takes O(d n) space.

The exponential time and space costs are not prohibitive in this case. This is because the calculations done within the iterations are simple comparisons of time instants. Moreover, the number of variables in an STP query is expected to be less than 8 in the normal case. The Solve Pattern algorithm is more focused on minimizing the number of evaluated lifted predicates (statement 4.b of the algorithm). The cost of evaluating the lifted predicates varies, but it is expected to be expensive because the evaluation usually requires retrieving and processing the complete trajectory of the moving object. The run time analysis of many lifted predicates is illustrated in [4].

6 Extending the definition of the STP predicates

Back to the example of bank robbers, a sharp eyed reader will notice that the provided SQL statement can retrieve undesired tuples. Suppose that long enough trajectories are kept in the database. A car that entered a gas station in one day, passed close to the bank in the next day, and in a third day sped up will be part of the result. To avoid this, we would like to constrain the period between leaving the gas station till speeding up to be at most 1 h.

Indeed the proposed design is flexible so that such an extension is easy to integrate. The idea is that after the STP predicate is evaluated, the \(\mathit{SA}\) data structure contains all the supported assignments. As illustrated before, a supported assignment assigns an interval to each lifted predicate during which it is satisfied. At the same time the interval values of all variables satisfy all the constraints in the STP predicate. Now that we know the time intervals, we can impose more constraints on them. For example, we state that the period between leaving the gas station (first predicate) till speeding up (third predicate) must be at most 1 h.

The following describes formally an extended version of the STP predicate that allows for such additional constraints. Let P tuple = {p 1, ... , p n } be a set of time-dependent predicates, and let \(C \subseteq TC(P_{tuple})\) be a set of temporal constraints. Let g be a function:

That is, g is a predicate that accepts a set of n time intervals and a tuple, and yields a bool.

An extended STP predicate is defined as follows:

Definition 7

An extended spatiotemporal pattern predicate is a triple (P tuple , C, g). Given a tuple t of type tuple its evaluation is defined as:

That is, the boolean predicate g is applied to the supported assignments in \(\mathit{SA}\) and to the input tuple t. For the extended STP predicate to be fulfilled, g must be fulfilled at least once. The evaluation of the extended STP predicate is, hence, done in two parts, that both must succeed. The first solves the STP predicate (P tuple , C) for the given tuple t, and the second part, which is processed only after the success of the first part, evaluates the boolean predicate g for every supported assignment. Hence, conditions on the list of supported assignments \(\mathit{SA}\) are possible.

Syntactically, the user is provided with two functions start(.) and end(.) that yield the start and end time instants of the intervals in an SA element. The two functions are in the form:

Given a supported assignment \(sa \in \mathit{SA}\) and an index, the two functions yield the start and the end time instants of the time interval at this index in sa.

Formally let \(sa = \{i_1, ..., i_n\} \in \mathit{SA}(P_{tuple}, C, t)\).

where 1 ≤ k ≤ n.

To implement the extension, step 5 in the Solve Pattern algorithm is changed to return \(\mathit{SA}\). The predicate g is then iteratively evaluated for the elements of the \(\mathit{SA}\). The algorithm of evaluating the extended STP predicate is not shown in the paper, because it is a trivial change for the Solve Pattern algorithm.

The extended STP predicate is denoted patternex in the SQL-like syntax. The bank robbers query is rewritten using it as follows:

-

SELECT c.licencenumber

-

FROM cars c, landmark l

-

WHERE l.type = ”gas station” and

-

patternex([c.trip inside l.region as gas,

-

distance(c.trip, bank) < 50.0 as bnk,

-

speed(c.trip) > 100000 as leaving],

-

[stconstraint(gas, bnk, later),

-

stconstraint(bnk, leaving, then],

-

start(leaving) - end(gas) < 1)

where the additional condition start(leaving) - end(gas) < 1 ensures that the time period between the car getting out from the gas station (i.e. end(gas)) till it starts leaving the bank area (i.e. start(leaving)) is less than one hour. Note that in the SQL-like syntax, the start, and end operators get the predicate aliases, rather their indexes as in the definition.

More complex conditions can be expressed. The time intervals can be used, for example, to retrieve parts from the moving object trajectory to express additional spatial conditions. For example, the query for possible bank robbers may more specifically look for the cars which entered a gas station, made a round or more surrounding the bank, then drove away fast. To check that the car made a round surrounding the bank, a possible solution is to check the part of the car trajectory close to the bank for self intersection. The query may be written as follows

-

SELECT c.licencenumber

-

FROM cars c, landmark l

-

WHERE l.type = ”gas station” and

-

patternex([c.trip inside l.region as gas,

-

distance(c.trip, bank) < 50.0 as bnk,

-

speed(c.trip) > 100000 as leaving],

-

[stconstraint(gas, bnk, later),

-

stconstraint(bnk, leaving, then],

-

isSelfIntersecting(

-

trajectoryPart(c.trip, start(bnk), end(bnk))) and

-

(start(leaving) - end(bnk)) < 1)

where trajectoryPart computes the spatial trajectory of the moving object between two time instants and isSelfIntersecting checks a line for self intersection.

7 Optimizing spatiotemporal pattern predicates

In Section 5 we explained the evaluation of the spatiotemporal pattern predicate. The proposed algorithm is efficient because it avoids the unnecessary evaluation of lifted predicates. In the context of large-scale DBMS, this is not enough. Obviously for an efficient execution of pattern queries on large databases the use of indexes is mandatory. It should be triggered by the query optimizer during the creation of the executable plans.

In this section, we demonstrate a generic procedure for integrating the STP predicate with query optimizers. We do not assume a specific optimizer or optimization technique. The optimizer is however required to have some basic features that will probably be available in any query optimizer. In the following subsection, we describe these basic assumptions.

7.1 Query optimization

A typical query optimizer contains two basic modules; the rewriter and the planner [18]. The rewriter uses some heuristics to transform a query into another equivalent query that is, hopefully, more efficient or easier to handle in further optimization phases. The planner creates for the user query (or the rewritten version) the set of possible execution plans (possibly restricted to some classes of plans). Finally it applies a selection methodology (e.g. cost based) to select the best plan.

We assume that the query optimizer contains the rewriter and the planner modules. We also assume that it supports the data types and operations on moving objects, in SQL predicates as described in [16] and [10].

7.2 Query optimization for spatiotemporal pattern predicates

One observation that we like to make clear is that the STP predicate itself does not process database objects directly. Instead, the first operation applied is the evaluation of the lifted predicates that compose the STP predicate. The idea, hence, is to design a general framework for optimizing the lifted predicates within the STP predicate. This framework should trigger the optimizer to use the available indexes for the currently supported lifted predicates as well as for those that might be added in the future. It should utilize the common index structures. Although specialized indexes, as in [17], can achieve higher performance, the overhead of maintaining them within a system is high and they only serve specific purposes, which makes them unfavorable in the context of systems.

The idea is to add each of the lifted predicates, in a modified form, as an extra standard predicate to the query, that is, a predicate returning a boolean value. The standard predicate is chosen according to the lifted predicate, so that the fulfillment of the standard predicate implies that the lifted predicate is fulfilled at least once. This is done during query rewriting. The additional standard predicates in the rewritten query trigger the planner to use the available indexes. To illustrate the idea, the following query shows how the bank robbers query in Section 4 is rewritten.

-

SELECT c.licencenumber

-

FROM cars c, landmark l

-

WHERE l.type = ”gas station” and

-

pattern([c.trip inside l.region as gas,

-

distance(c.trip, bank) < 50.0 as bnk,

-

speed(c.trip) > 100000 as leaving],

-

[stconstraint(gas, bnk, later),

-

stconstraint(bnk, leaving, then])

-

and

-

c.trip passes l.region and

-

sometimes(distance(c.trip, bank) < 50.0) and

-

sometimes(speed(c.trip) > 100000)

The three lifted predicates in the STP predicate x inside y, distance(x, y) < z, and speed(x) < y are mapped to the standard predicates x passes y, sometimes(distance(x, y) < z), and sometimes(speed(x) < y), respectively. Here sometimes(.) is a predicate that accepts an mbool and yields true if the argument ever assumes true during its lifetime, otherwise false. Each of the standard predicates ensures that the corresponding lifted predicate is fulfilled at least once, a necessary but not sufficient condition for the pattern predicate to be fulfilled. Clearly, the rewritten query is equivalent to the original query.

The choice of the standard predicate depends on the type of the lifted predicate and the types of the arguments. For example, the lifted spatial range predicates (i.e. the spatial projection can be described by a box) are mapped into the passes standard predicate. The passes predicate [16], in this example, is fulfilled if the car c.trip ever passed the gas station l.region. If passes fails, then we know that inside is never true and that pattern will also fail. The planner should have for the added passes predicate already some optimization rule available (e.g. use a spatial R-tree index when available). In Section 9.2.2 we show an optimized query written in the Secondo executable language.

To generalize this solution, we define a table of mappings between the lifted predicates (or groups of them) and the standard predicates. Clearly, this mapping is extensible for the lifted predicates that can be introduced in the future. The mapping for the set of lifted predicates proposed in [16] is shown in Table 2.

For the lifted spatial range predicates, they map into passes and the available translation rules for passes do the rest. The distance(x, y) < z is conceptually equivalent to a lifted spatial range predicate, where the spatial range is the minimum bounding box of the static argument extended by z in every side. Other types of lifted predicates are mapped into sometimes. We need to provide translation rules that translate sometimes(.) into index lookups. For every type of lifted predicates, one such translation rule is required. For example, the sometimes(Pred), where Pred is a lifted left range predicate, searches for a B-tree defined on the units of the moving object, and performs a left range search in the B-tree. We show examples for these translation rules within Secondo in Section 8.2.

This two steps optimization helps develop a general framework for optimizing the sometimes(.) predicate, which may also appear directly in the user queries. Note that we can alternatively rewrite all lifted predicates into sometimes(.), and provide translation rules accordingly. It remains an implementation decision, which approach to use.

8 The implementation in Secondo

Secondo [13, 14, 25] is an extensible DBMS platform that does not presume a specific database model. Rather it is open for new database model implementations. For example, it should be possible to implement relational, object-oriented, spatial, temporal, or XML models.

Secondo consists of three loosely coupled modules: the kernel, GUI and query optimizer. The kernel includes the command manager, query processor, algebra manager and storage manager. The kernel may be extended by algebra modules. In an algebra module one can define new data types and/or new operations. The integration of the new types and/or operations in the query language is then achieved by adding syntax rules to the command manager.

The Secondo kernel accepts queries in a special syntax called Secondo executable language. The SQL-like syntax is provided by the optimizer. For more information about Secondo modules see [25] and [24]. For more information about extending Secondo see the documentation on [23].

If it is the case that a new data type needs a special graphical user interface (GUI) for display, the Secondo GUI module is also extensible by adding viewer modules. Several viewers exist that can display different data types. Moving objects, for example, are animated in the Hoese viewer with a time slider to navigate forwards and backwards.

A large part of the moving objects database model presented in [4, 10, 16], that we also assume in the paper, is realized in Secondo. That is, the current Secondo version 2.9.1 includes the algebra modules, the viewer modules, and the optimizer support for moving objects. In the following subsections, we describe the implementation of the STP predicate in Secondo 2.9.1. This implementation is available as a Secondo Plugin as explained in Section 11.

8.1 Extending the kernel

We have implemented the STP predicate in the Secondo kernel in a new algebra module called STPatternAlgebra. The algebra contains:

-

1.

One data type stvector. The class represents a set of interval relationships as defined in Section 4. The Secondo operator vec is used to create an stvector instance. The operator accepts a set of strings from Table 1, and constructs the stvector instance accordingly.

Example: vec(”aabb”, ”a.abb”, ”a.a.bb”).

-

2.

The stconstraint operator. The operator represents a temporal constraint within the STP predicate. The signature of the operator is:

string × string × stvector → bool

The first and second parameters are the aliases for two lifted predicates.

Example: stconstraint(”predicate1”, ”predicate2”, vec(”a.a.bb”)).

-

3.

The stpattern operator. The operator implements the STP predicate. It has the signature:

tuple ×AliasedPredicateList ×ConstraintList → bool

where the AliasedPredicateList is a list of time-dependent predicates, each of which has an alias, and the ConstraintList is a list of temporal constraints (i.e. a list of stconstraint operators).

-

4.

The stpatternex operator. The operator implements the extended STP predicate, Section 6. It has the signature:

tuple ×AliasedPredicateList ×ConstraintList × bool → bool

-

5.

The start(.) and the end(.) operators, described in Section 6. They accept a string representing a predicate alias and return the start/end of the corresponding time interval. The operators have the signature:

string → Instant

Using these operators, the query for bank robbers can be written in Secondo executable language as follows:

-

query cars feed {c}

-

landmark feed {l}

-

filter[.type_l = ”gas station”]

-

product

-

filter[.

-

stpatternex[gas: .trip_c inside .region_l,

-

bnk: distance(.trip_c, bank) < 50.0,

-

leaving: speed(.trip_c) > 100000;

-

stconstraint(”gas”, ”bnk”, vec(”aabb”)),

-

stconstraint(”bnk”, ”leaving”, vec(”abab”,

-

”aa.bb”, ”aabb”));

-

duration2real(start(”leaving”) - end(”gas”)) < (1/24) ]]

-

consume

where feed is a postfix operator that scans a relation sequentially and converts it into a stream of tuples. The query performs a cross product between the tuples of the cars relation and the tuples of landmark relation that has the value “gas station” in their type attribute. The resulting tuple stream after the cross product is filtered using the extended STP predicate stpatternex. Finally, the consume operator converts the resulting tuple stream into a relation, so that it can be displayed.

8.2 Extending the optimizer

The Secondo optimizer is written in Prolog. It implements an SQL-like query language which is translated into an optimized query in Secondo executable language. The Secondo optimizer includes a separate rewriting module that can be switched on and off by setting the optimizer options. The planner implements a novel cost based optimization algorithm which is based on shortest path search in a predicate order graph. The predicate order graph (POG) is a weighted graph whose nodes represent sets of evaluated predicates and whose edges represent predicates, containing all possible orders of predicates. For each predicate edge from node x to node y, so-called plan edges are added that represent possible evaluation methods for this predicate. Every complete path via plan edges in the POG from the bottom-most node (i.e. zero evaluated predicates) till the top-most node (i.e. all predicates evaluated) represents a different execution plan. Different paths/execution plans represent different orderings of the predicates and different evaluation methods. The plan edges of the graph are weighted by their estimated costs, which in turn are based on given selectivities. Selectivities of predicates are either retrieved from prerecorded values, or estimated by sending selection or join queries on small samples of the involved relations to the Secondo kernel and reading the cardinality of the results. The algorithm is described in more detail in [14] as well as in the Secondo programmers guide [23].

Our extension to the optimizer has three major parts: query rewriting, operator description, and translation rules. In the query rewriting, we choose to rewrite all the lifted predicates into sometimes(.). This is because an accurate rewriting based on the mapping in Table 2 requires that we know the data types of the arguments. The Secondo optimizer knows the data types only after query rewriting is done.

Following are the Prolog rules that do the rewriting:

-

inferPatternPredicates([], []).

-

-

inferPatternPredicates([Pred|Preds],

-

[sometimes(Pred)|Preds2] ):-

-

assert(removefilter(sometimes(Pred))),

-

inferPatternPredicates(Preds,Preds2).

where the inferPatternPredicate accepts the list of the lifted predicates within the STP predicate as a first argument, and yields the a list of rewritten predicates in the second argument. The additional sometimes(.) predicates are kept in the table removefilter(.), so that it is possible to exclude them from the executable plan afterwards.

In the operator descriptions, we annotated the lifted predicates by their types (e.g. lifted left range) as in Table 2. Then we provided translation rules for sometimes(.) for every type of lifted predicates. Following is an example for such a rule:

-

indexselectLifted(arg(N), Pred ) =>

-

gettuples(rdup(sort(windowintersectsS(

-

dbobject(IndexName), BBox))), rel(Name, *))

-

:-

-

Pred =..[Op, Arg1, Arg2],

-

((Arg1 = attr(_, _, _), Attr= Arg1) ;

-

(Arg2 = attr(_, _, _), Attr= Arg2)),

-

argument(N, rel(Name, *)),

-

getTypeTree(Arg1, _, [_, _, T1]),

-

getTypeTree(Arg2, _, [_, _, T2]),

-

isLiftedSpatialRangePred(Op, [T1, T2]),

-

(

-

( memberchk(T1, [rect, rect2, region, point, line,

-

points, sline]), BBox= bbox(Arg1)

-

);

-

( memberchk(T2, [rect, rect2, region, point, line,

-

points, sline]), BBox= bbox(Arg2)

-

)

-

),

-

hasIndex(rel(Name, _), Attr, DCindex,

-

spatial(rtree, unit)),

-

dcName2externalName(DCindex, IndexName).

where this rule translates the lifted spatial range predicates into an R-tree window query, as indicated in the rule header. The => operator can be read as translates into. It means that the expression to the right is the translation of the expression to the left, if the conditions in the rule body hold. The body of the rule starts by inferring the types of the arguments of the lifted predicate within the sometimes(.). Then it uses them to make sure that the predicate is of the type lifted spatial range. Finally, it checks whether a spatial R-tree index on the involved relation and attribute is available in the catalog. It tries to find a spatial R-tree built on the units of the moving object. Similar translation rules are provided for other types of indexes. The optimized query in Section 9.2.2 shows the effect of these translation rules.

9 Experimental evaluation

We proceed with an experimental evaluation of the proposed technique. The intention is to give an insight into the performance. It is clear that the runtime of an STP predicate depends on the number and types of the lifted predicates. Therefore, we show three experiments. The first measures only the overhead of evaluating the spatiotemporal pattern predicate. That is, we set the time of evaluating the lifted predicates to negligible values.

In the second experiment, we generate random STP predicates with varying numbers of lifted predicates and constraints and measure the run time of the queries. The experiment also evaluates the optimization of STP predicates. Every query is run twice; once without invoking the optimizer, and another time with the optimizer being invoked.

The third experiment is dedicated to evaluate the scalability of the proposed approach. It mainly evaluates the proposed optimization approach in large databases. A random set of queries is generated and evaluated against relations of cardinalities 50,000, 100,000, 200,0000, and 300,000, where the trajectories are indexed using the traditional R-tree index.

The first two experiments use the berlintest database that is available with the free distribution of Secondo. The last experiment uses the BerlinMOD benchmark [6] to generate the four relations. The benchmark is available for download on [25]. The three experiments are run on a Secondo platform installed on a Linux machine. The machine is a Pentium-4 dual-core 3.0 GHz processor with 2GB main memory.

9.1 The overhead of evaluating STP predicates

To perform the first experiment, we add two operators to Secondo; randommbool and passmbool. The operator randommbool accepts an Instant and creates an mbool object whose definition time starts at the given time instant, and consists of a random number of units. The operator passmbool mimics a lifted predicate. It accepts the name of an mbool database object, loads the object and returns it. More details are given below.

9.1.1 Preparing the data

This section describes how the test data for the first experiment is created. The randommbool operator is used to create a set of 30 random mbool instances and store them as database objects. The operator creates mbool objects with a random number of units varying between 0 and 20. The first unit starts at the time instant provided in the argument. Every unit has a random duration between 2 and 50,000 ms. The value of the first unit is randomly set to true or false. The value of every other unit is the negation of its preceding unit. Hence, the minimal representation requirement [10] of the moving types in Secondo is met. That is, adjacent units can not be further merged because they have different values.

The 30 mbool objects are created by calling randommbool(now()) 30 consecutive times. This increases the probability that the definition times of the objects temporally overlap.

9.1.2 Generating the queries

The queries of the first experiment are selection queries consisting of one filter condition in the form of an STP predicate. The queries are generated with different experimental settings, that is, different numbers of lifted predicates and constraints in the STP predicate. The number of lifted predicates varies between 2 and 8. The number of constraints varies between 1 and 16. The queries are not generated for every combination. For example, it does not make sense to generate STP predicates with 2 lifted predicates and 10 constraints. For N lifted predicates, the number of constraints varies between N − 1 and 2N. The rationale of this is that, if the number of constraints is less than N − 1, then the constraint network can not be complete (i.e. some predicates are not referenced within constraints). On the other hand, having more than 2N constraints increases the probability of contradicting constraints. For every experimental setting, 100 random queries are evaluated and the average run time is recorded.

A query with 3 lifted predicates and 2 constraints, for example, looks like:

-

query thousand feed

-

filter[.

-

stpattern[a: passmbool(mb5),

-

b: passmbool(mb13),

-

c: passmbool(mb3);

-

stconstraint("b", "a", later),

-

stconstraint("b", "c", vec("abab") ]]

-

count

where query thousand feed streams the thousand relation, which contains 1,000 tuples. For every tuple, the STP predicate stpattern is evaluated. Note that the predicate does not depend on the tuples. That is, the same predicate is executed 1,000 times in the query. This is to minimize the effect of the time taken by Secondo to prepare for query execution. The lifted predicates are all in the form of passmbool(X), where X is one of the 30 stored random mbool objects.

The constraints are generated so that the constraint graph is complete. We start by initializing a set called connected having one randomly selected alias. For every constraint, the two aliases are randomly chosen from the set of aliases in the query, so that at least one of them belongs to the set connected. The other alias is added to the set connected if it was not already a member. After the required number of constraints is generated, we check the completeness of the graph. If it is not complete, the process is repeated till we get a connected graph. The temporal connector for every constraint is randomly chosen from a set containing 31 temporal connectors namely, the 26 simple temporal connectors in Table 1 and 5 vector temporal connectors (later, follows, immediately, meanwhile, and then) (shown in Appendix A).

Before running the queries, we query for the 30 mbool objects so that they are loaded into the database buffer. The measured run times should, hence, show the overhead of evaluating the STP predicates in Secondo because other costs are made negligible.

9.1.3 Results

The results are shown in Fig. 2. The number of lifted predicates is denoted as N. Increasing the number of lifted predicates and constraints in the STP predicate does not have a great effect on the run time. This is a direct result of the early pruning strategy in the Solve Pattern algorithm. The results show that the evaluation of STP predicate is efficient in terms of run time.

9.2 STP queries with optimization

The second experiment is intended to evaluate the run time of STP queries. It also evaluates the effect of the proposed optimization. Unlike the first experiment, the STP predicates in this experiment contain lifted predicates. We generate 10 random queries for every experimental setting and record the average run time. Every query is run twice; without being optimized, and after optimization.

9.2.1 Preparing the data

The queries use the Trains20 relation. It is generated by replicating the tuples of the Trains relation in the berlintest database 20 times. The Trains relation was created by simulating the underground trains of the city Berlin. The simulation is based on the real train schedules and the real underground network of Berlin. The simulated period is about 4 h in one day. The schema of Trains20 is similar to Trains with the additional attribute Serial:

Trains20[Serial: int, Id: int, Line: int, Up: bool, Trip: mpoint]

where Trip is an mpoint representing the trajectory of the train. The relation contains 11,240 tuples and has a disk size of 158 MB. To evaluate the optimizer, a spatial R-tree index called Trains20_Trip_sptuni is built on the units of the Trip attribute. A set of 300 points is also created to be used in the queries. The points represent geometries of the top 300 tuples in the Restaurants relation in the berlintest database.

9.2.2 Generating the queries

The queries are generated in the same way as in the first experiment. In this experiment, however, we use actual lifted predicates instead of passmbool. Every lifted predicate in the STP predicate is randomly chosen from

-

1.

distance(trip, randomPoint) < randomDistance.

-

2.

speed(trip) > randomSpeed.

where randomPoint is a point object selected randomly from the 300 restaurant points, randomDistance ranges between 0 and 50, and randomSpeed ranges between 0 and 30. The distance(., .) < . is a sample for the lifted predicates that can be mapped into index access, so that we can evaluate the optimizer. While the queries in the first experiment are created directly in the Secondo executable language, they are created here in Secondo SQL. It is an SQL-like syntax that looks similar to the standard SQL, but obeys Prolog rules. The main differences are that everything is written in lower case, and lists are placed within square brackets.

Here is one query example from the generated queries:

-

SELECT count(*)

-

FROM trains20

-

WHERE pattern([ distance(trip, point170) < 18.0 as a,

-

speed(trip) > 11.0 as b],

-

[stconstraint("a", "b", vec("b.ba.a"))])

where pattern is the SQL operator equivalent to stpattern in the executable language. The rewritten version of the query as generated by the rewriting module of the Secondo optimizer is:

-

SELECT count(*)

-

FROM trains20

-

WHERE [ pattern([ distance(trip, point170) < 18.0 as a,

-

speed(trip) > 11.0 as b],

-

[stconstraint("a", "b", vec("b.ba.a"))]),

-

sometimes(distance(trip, point170) < 18.0),

-

sometimes(speed(trip) > 11.0)]

Finally, the optimal execution plan is:

-

Trains20_Trip_sptuni

-

windowintersectsS[ enlargeRect(bbox(point170), 18.0, 18.0)]

-

sort rdup Trains20 gettuples

-

filter[sometimes((distance(.Trip,point170) < 18.0))]

-

{0.00480288, 1.69712}

-

project[Trip]

-

filter[. stpattern[ a:(distance(.Trip, point170) <

-

18.0),

-

b:(speed(.Trip) > 11.0);

-

stconstraint("a", "b", vec("b.ba.a"))]]

-

{0.00480288, 1.49038}

-

filter[sometimes((speed(.Trip) > 11.0))]

-

{0.883731, 1.48077}

-

count

where the predicates are placed within the filter[] operator, which means that they belong to the where clause in SQL. The rewriter generates for the two lifted predicates in the original query two standard sometimes predicates. The predicate sometimes( distance(., .) < .) is handled by the optimizer as a special kind of range predicate. Since the optimizer can find the spatial R-tree index that we created, it is used. The index access part in the query is:

-

Trains20_Trip_sptuni windowintersectsS[enlargeRect

-

(., ., .)]

This part expands the minimum bounding box of point170 by the distance threshold value 18.0. The enlarged box is intersected with the R-tree to get the candidate tuple id’s. The rest of the query retrieves the data of the candidate tuples and performs the query. The pairs of numbers between the curly brackets do not affect the semantics of the query. They are estimated predicate selectivities and run time statistics used to help estimate the query execution progress.

9.2.3 Results

In Fig. 3, the chart to the left shows the average run times of the non-optimized STP queries. The chart to the right shows the average run times of their optimized counterparts. The N is again the number of lifted predicates. The run times of the optimized STP predicates are very promising.

The high peak in the optimized queries chart at N = 2 and Number of Constraints = 2 is because it happened that five of the ten generated queries have only speed(.) < . predicates. Since the sometimes(speed(.) < .) predicate does not map into index access, the average run time for this experimental setting is close to the non-optimized version.

9.3 Scalability experiment

This experiment evaluates the performance of the proposed approach in large databases. As shown in Section 7.2, the optimization of the STP predicate is carried out without special index structures, which is practically preferred in the context of systems. It remains however questionable, how far are the traditional indexes (e.g. R-trees) effective for such a type of queries. This experiment tries to answer this question.

Obviously if all the lifted predicates within the STP predicate in a given query are not supported by the indexes in the database system, then one is out of luck, and the STP predicate will be evaluated for every tuple. Therefore, in this experiment, we compose the STP predicates by lifted predicates that are supported by index structures available in Secondo.

9.3.1 Generating the data

The data for this experiment is generated using the BerlinMOD benchmark [6]. It simulates an arbitrary number of cars moving in the city Berlin. The scenarios of the trips are quite realistic, simulating the trips to and from the work place, and the leasure time trips. The benchmark is downloadable from the Secondo web site [25]. The trajectory data is generated by running Secondo scripts. It is possible to control the number of cars, and the number of observation days by editing a configuration file.

For this experiment, we have generated the four relations described in Table 3. The table shows for every relation the number of cars/trajectories, the number of simulation days, the number of units of all trajectories, and the storage space of the relation. The number of units is analogous to the total number of observations of all cars, in the discrete sense. Note that in this moving objects model, the trajectories are continuous. That is, the locations of the cars between any two consecutive observations are linearly interpolated. The generation of the four relations using the BerlinMOD benchmark took about five days on the machine described in Section 9.

For each of the four relations, a spatial R-tree index is derived for the trip attribute. The R-tree contains the bounding boxes of the units of the Trip attribute, which are of type upoint.

9.3.2 Generating the queries

The BerlinMOD benchmark generates for every car up to five trips in a working day. Two of them go to and from the work place, and the other three trips are leasure time trips in the afternoon/evening. The leasure time destinations are randomly chosen from the neighborhood of the car’s home location with a probability of 80%, and from the whole map with a probability of 20%. We use this information to design the experiment queries.

For each of the four relations in this experiment, a set of ten queries is randomly generated. Each of the queries randomly picks a car and retrieves its home location and three locations from its neighborhood, call them atmmachine, supermarket, and bakery for example. The query looks for the cars that made a leasure time trip starting from the location home, and passing by the locations atmmachine, supermarket, and bakery in order. Since the locations are chosen from the neighborhood of an existing car, there is some probability that the cars will fulfill the pattern. A sample query for the relation datascar300 looks as follows:

-

SELECT count(*)

-

FROM datascar300 c

-

WHERE [ pattern([ c.trip = home as pred1,

-

c.trip = atmmachine as pred2,

-

c.trip = supermarket as pred3,

-

c.trip = bakery as pred4],

-

[stconstraint("pred1", "pred2", later),

-

stconstraint("pred2", "pred3", later),

-

stconstraint("pred3", "pred4", later)])

-

]

where home is the home location of the car, and the = lifted predicate is fulfilled in the time instants/intervals when its two arguments have the same spatial coordinates. Ten such queries are randomly generated for every relation. The next subsection shows the average runtimes.

9.3.3 Results

In this experiment, we switch on the optimizer. Since the = lifted predicates in the queries belong to the lifted spatial range predicates, as shown in Table 2, the optimizer generates execution plans that use the R-tree indexes, that are generated during the data generation. Figure 4 shows the average runtimes. These results conclude two points:

-

Taking into consideration the large relation sizes as shown in Table 3, and the moderate machine specifications described in Section 9, the average runtimes are cheap regarding such complex query type. To be able to compare, we measured the average runtime of an optimized spatiotemporal range query on the 300,000 relation, and it shows 20 s. This is in comparison to an average of 28.6 s for the STP query. This confirms that the proposed optimization approach works fine without the need for specialized index structures.

-

The runtime seems to scale linearly with the relation size. This is already expected since the STP predicate is applied to every tuple in the input (i.e. the tuples retrieved after the index access). Note that the BerlinMOD benchmark generates all the trips within the limited spatial space of the city Berlin. A larger number of cars in the simulation implies that the window queries on the R-tree index yield more candidates.

To sum things up, the scalability of the STP queries as proposed in this paper is affected by four parameters:

-

1.

The number of lifted predicates in the STP predicate.

-

2.

The number of the temporal constraints in the STP predicate.

-

3.

The number of input tuples/trajectories.

-

4.

The length of the trajectories in terms of number of units.

The scalability in terms of the first three parameters is evaluated already in the three experiments in this paper. The last parameter, the length of trajectories, affects the evaluation time of the STP predicate indirectly as it affects the evaluation time of the lifted predicates. This is because the lifted predicates are evaluated for the complete trajectory. When the trajectories are long (e.g. several weeks of observation time), the cost of evaluating the lifted predicates increases accordingly. The majority of them scale linearly with the number of units in the trajectory. More about the lifted predicate evaluation algorithms can be found in [4].

In the STP predicate, the temporal constraints impose a certain temporal order between the lifted predicates. While evaluating the STP predicate, one gets temporal information from the lifted predicates evaluated so far. A proper analysis of this information can identify parts of the trajectory that can be safely ignored while evaluating other lifted predicates. In future work we plan to study how to utilize this information. Roughly, one would need to redefine the lifted predicates, so that they process the trajectory parts upon request (e.g. in a stream fashion) rather than the whole trajectory.

10 Application examples

To illustrate the expressive power of the proposed approach, we present in the following two subsections more examples for STP queries. Section 10.1 demonstrates a scenario called Finding Ali. It is about a kid called Ali, who moves on the street network of Cairo (the capital of Egypt). He makes several trips riding in several cars. We want to query for these cars using their movement profiles.

In Section 10.2, we demonstrate example queries that the reader can try himself/herself in Secondo. The queries are based on the berlintest database, that is available with the Secondo distribution. Unlike the first application, the queries are not linked to a single scenario. Hence we can demonstrate STP queries that involve moving points, moving regions, and many kinds of lifted operations.

10.1 Finding Ali

We assume that the road network of Cairo is observed for one month and that the complete trajectories of the cars are stored in the database. The queries assume the following schema:

-

Car[PlatesNumber: string, Trip: mpoint] where Trip is the complete trajectory of the car for the whole observation period.

-

Landmark[Name: string, Type: string, Location: point]

-

Heliopolis: A region object marking the boundary of the district Heliopolis where Ali lives.

-

AliHome: A point object marking Ali’s home.

-

FamilyHome: A point object marking the house of the father’s family.

-

SportsClub: A region object marking the boundary of the sports club in which Ali is a member.

10.1.1 The go-to-school trips with the school bus

The bus starts at the school at 6:00–6:30 am, enters the district Heliopolis at 6:45–7:00 am, stops near Ali’s home, picks Ali, exits Heliopolis at 7:45–8:00 am, then goes back to school.