Abstract

Determination of soaked california bearing ratio (CBR) and compaction characteristics of soils in the laboratory require considerable time and effort. To make a preliminary assessment of the suitability of soils required for a project, prediction models for these engineering properties on the basis of laboratory tests—which are quick to perform, less time consuming and cheap—such as the tests for index properties of soils, are preferable. Nevertheless researchers hold divergent views regarding the most influential parameters to be taken into account for prediction of soaked CBR and compaction characteristics of fine-grained soils. This could be due to the complex behaviour of soils—which, by their very nature, exhibit extreme variability. However this disagreement is a matter of concern as it affects the dependability of prediction models. This study therefore analyses the ability of artificial neural networks and multiple regression to handle different influential parameters simultaneously so as to make accurate predictions on soaked CBR and compaction characteristics of fine-grained soils. The results of simple regression analyses included in this study indicate that optimum moisture content (OMC) and maximum dry density (MDD) of fine-grained soils bear better correlation with soaked CBR of fine-grained soils than plastic limit and liquid limit. Simple regression analyses also indicate that plastic limit has stronger correlation with compaction characteristics of fine-grained soils than liquid limit. On the basis of these correlations obtained using simple regression analyses, neural network prediction models and multiple regression prediction models—with varying number of input parameters are developed. The results reveal that neural network models have more ability to utilize relatively less influential parameters than multiple regression models. The study establishes that in the case of neural network models, the relatively less powerful parameters—liquid limit and plastic limit can also be used effectively along with MDD and OMC for better prediction of soaked CBR of fine-grained soils. Also with the inclusion of less significant parameter—liquid limit along with plastic limit the predictions on compaction characteristics of fine-grained soils using neural network analysis improves considerably. Thus in the case of neural network analysis, the use of relatively less influential input parameters along with stronger parameters is definitely beneficial, unlike conventional statistical methods—for which, the consequence of this approach is unpredictable—giving sometimes not so favourable results. Very weak input parameters alone need to be avoided for neural network analysis. Consequently, when there is ambiguity regarding the most influential input parameters, neural network analysis is quite useful as all such influential parameters can be taken to consideration simultaneously, which will only improve the performance of neural network models. As soils by their very nature, exhibit extreme complexity, it is necessary to include maximum number of influential parameters—as can be determined easily using simple laboratory tests—in the prediction models for soil properties, so as to improve the reliability of these models—for which, use of neural networks is more desirable.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Large-scale constructions are taking place all over the world for which huge quantities of filling/stabilization materials are required. For this purpose, soils and industrial by-products such as fly ash, quarry fines etc. are collected from extensive areas. Such soils/materials may have large variations in their engineering properties. Proper estimation of various engineering properties (such as soaked CBR, MDD, OMC etc.) of soils/filling materials used for construction work is essential to ensure satisfactory performance of structures built over them. To obtain the compaction characteristics from laboratory tests, considerable time and effort is required. The soaked CBR test which is an empirical measure for the evaluation of sub grade strength of roads and pavements is not only laborious and time consuming, but also requires costly equipment. Hence while planning various construction projects, due to limited resources and time available, few laboratory tests on soaked CBR and compaction characteristics of soils are conducted. Therefore the soil investigation data obtained are quite insufficient in many cases.

Under these circumstances, if the estimation of the soaked CBR/compaction characteristics of fine-grained soils could be developed on the basis of some laboratory tests—which are simple, speedy and cheap, it shall be useful to engineers. Thus, for a preliminary assessment of the suitability of the soils/filling material required for an earthwork project, determination of compaction characteristics/soaked CBR is important.

Even though development of prediction models for engineering properties of fine-grained soils is beneficial, it is found that researchers differ widely in their opinion regarding the input parameters to be chosen for these prediction models. This could be due to the complex behaviour of soils which, by their very nature, exhibit extreme variability. However this disagreement is a matter of concern as it hampers the use of prediction models. Hence this study focuses on use of various influential input parameters simultaneously, for development of prediction models, using two analytical tools—neural networks and multiple regressions.

2 Literature Review

A detailed literature review was conducted prior to the development of prediction models for engineering properties of fine-grained soils using regression analysis and artificial neural network analysis. The review investigated the current state of knowledge regarding soaked CBR and compaction characteristics of fine-grained soils and examined the various correlations already proposed for these engineering properties of soils. The suitability of regression and neural networks for development of prediction models in geotechnical engineering was analysed. The review also explored the steps to be taken to improve the performance of the prediction models and the various criteria to be adopted for the determination of goodness-of-fit between the predicted and observed data.

Pandian et al. (1997) proposed a method to predict the compaction characteristics of fine-grained soils in terms of the liquid limit. Studies conducted by Sridharan and Nagaraj (2005) to discover which of the index properties of fine-grained soils correlate well with the compaction characteristics proved that that the compaction characteristics do not correlate well with either the liquid limit or the plasticity index of the soils. However, the plastic limit was found to correlate well with the compaction characteristics, namely optimum moisture content and maximum dry unit weight. Roy and Chattopadhyay (2006) derived a correlation for predicting OMC and MDD on the basis of liquid limit of a soil.

Agarwal and Ghanekar (1970) developed a CBR model considering OMC and liquid limit of cohesive soils. Roy et al. (2009) derived correlation for CBR of cohesive soils on the basis of compaction characteristics. Patel and Desai (2010) proposed a correlation between plasticity index, MDD and OMC for soaked CBR of alluvial soils. A study to check the validity of available correlations between CBR and other properties of soils was made by Roy et al. (2007) in which only partial agreement was found between the predicted and tested values. Datta and Chattopadhyay (2011) found that predicted values from correlation given by Patel and Desai (2010) agree with the tested values particularly for CI soils. But for other soils the predicted values are much lower than the tested values.

Studies on applications of artificial neural networks in geotechnical engineering by Shahin et al. (2008) establish that ANNs are well suited to modelling the complex behaviour of most geotechnical engineering materials which, by their very nature, exhibit extreme variability. ANNs learn from data examples presented to them in order to capture the subtle functional relationships among the data even if the underlying relationships are unknown or the physical meaning is difficult to explain. This is in contrast to most traditional empirical and statistical methods which need prior knowledge about the nature of the relationships among the data (Shahin et al. 2008).

Shahin et al. (2008) state that the purpose of ANNs is to non-linearly interpolate (generalize) in high-dimensional space between the data used for calibration. Unlike conventional statistical models, ANN models generally have a large number of model parameters (connection weights) and can therefore over fit the training data, especially if the training data are noisy. In other words, if the number of degrees of freedom of the model is large compared with the number of data points used for calibration, the model might no longer fit the general trend, as desired, but might learn the idiosyncrasies of the particular data points used for calibration leading to ‘memorization’ rather than ‘generalization’. As ANNs have difficulty extrapolating beyond the range of the data used for calibration, in order to develop the best ANN model, given the available data, all of the patterns that are contained in the data need to be included in the calibration set. For example, if the available data contain extreme data points that were excluded from the calibration data set, the model cannot be expected to perform well, as the validation data will test the model’s extrapolation ability, and not its interpolation ability.

In order to ensure that over-fitting does not occur, Smith (1993) and Amari et al. (1997) suggested that the data be divided into three sets; training, testing and validation. The training set is used to adjust the connection weights. The testing set measures the ability of the model to generalize and the performance of the model using this set is checked at many stages of the training process. Training is stopped when the error of the testing set starts to increase. The testing set is also used to determine the optimum number of hidden layer nodes and the optimum values of the internal parameters (learning rate, momentum term and initial weights). The validation set is used to assess model performance once training has been accomplished. According to Taylor et al. (2003) this approach can also be used to ensure that the network converged to the global minimum instead of local minima.

Hecht-Nielsen (1989) provides proof that a single hidden layer of neurons, operating a sigmoidal activation function, is sufficient to model any solution surface of practical interest. To the contrary, Flood (1991) states that there are many solution surfaces that are extremely difficult to model using a sigmoidal network using one hidden layer. In addition, some researchers (Flood and Kartam 1994; Ripley 1996; Sarle 1994) state that the use of more than one hidden layer provides the flexibility needed to model complex functions in many situations. Lapedes and Farber (1988) provide more practical proof that two hidden layers are sufficient, and according to Chester (1990), the first hidden layer is useful to extract the global features of the training patterns. However, Masters (1993) states that using more than one hidden layer often slows the training process dramatically and increases the chance of getting trapped in local minima.

According to Karsoliya (2012), practically, it is very difficult to determine a good network topology just from the number of inputs and outputs. It depends critically on the number of training examples and the complexity of the classification trying to learn. There are problems with one input and one output that require millions of hidden units, and problems with a million inputs and a million outputs that require only one hidden unit, or none at all. However, this is true fact that by taking suitable number of hidden layers and the number of neurons in each hidden layer, better results in less training time can be obtained. Many researchers develop approach to estimate the number of neurons and hidden layers requirement for a neural network but the approximation also get dependable on the type of the database samples for which the network is designed. By increasing the number of hidden layers up to three layer, accuracy can be achieved up to great extent but complexity of the neural network and training time is increased many folds. If accuracy is the main criteria for designing a neural network then hidden layers can be increased. According to Sarle (1995) if early stopping method is adopted, it is essential to use lots of hidden units to avoid bad local optima. As mentioned in this paper, early stopping method is used in this case to develop the neural network models.

The method most commonly used for finding the optimum weight combination of feed-forward MLP neural networks is the back-propagation algorithm (Rumelhart et al. 1986) which is based on first-order gradient descent. The use of global optimization methods, such as simulated annealing and genetic algorithms, have also been proposed (Hassoun 1995). The advantage of these methods is that they have the ability to escape local minima in the error surface and, thus, produce optimal or near optimal solutions. However, they also have a slow convergence rate. Ultimately, the model performance criteria, which are problem specific, will dictate which training algorithm is most appropriate. Breiman (1994) recommended that if training speed is not a major concern, there is no reason why the back-propagation algorithm cannot be used successfully.

Tang et al. (1991) found that when the number of input variables increases, the forecasting ability of the neural network improves. It is likely that more accurate forecasts are produced when more information has been provided by the increased number of input variables. However, there is a trade-off between the accuracy of the model and the model complexity in terms of the number of input variables. To enhance accuracy of the forecast, the size of the training set should be relatively large when the number of input variables increases. Tang et al. also suggested that the amount of data can affect the performance of the forecast technique, and more training data typically means a more accurate forecast. However, even with a small amount of time series data as an input, the neural network can perform reasonably well.

The coefficient of correlation (r), the root mean square error (RMSE) and the mean absolute error (MAE) are the main criteria that are often used to evaluate the prediction performance of ANN models. The coefficient of correlation is a measure that is used to determine the relative correlation and the goodness-of-fit between the predicted and observed data. Smith (1986) suggested the following guidelines for values of |r| between 0.0 and 1.0: |r| ≥ 0.8—strong correlation exists between two sets of variables; 0.2 < |r| < 0.8—correlation exists between the two sets of variables and |r| ≤ 0.2—weak correlation exists between the two sets of variables.

Willmott and Matsuura (2005) examined the relative abilities of root mean square error (RMSE) and mean absolute error (MAE)—to describe average model-performance error. The findings indicated that MAE is a more natural measure of average error and (unlike RMSE) is unambiguous. Dimensioned evaluations and inter-comparisons of average model-performance error, therefore, should be based on MAE.

3 Methodology

The analytical tools used for the study include statistical method such as Regression Analysis and Artificial Intelligence method such as Artificial Neural Network Analysis. EXCEL, the spreadsheet program in Microsoft’s popular Office software package, has been used to perform Regression Analysis. Artificial Neural Network Analysis has been performed using the software package MATLAB. The recommendations of researchers referred in the literature review are also taken to consideration to develop prediction models for CBR and compaction characteristics of fine-grained soils. The fine-grained soil databases used for prediction of soaked CBR, MDD and OMC, collected from various published literatures are given in Table 1 and 2.

The parameters potentially influential to CBR/compaction characteristics of fine grained soils found using simple regression, that can also be determined easily by simple laboratory tests are chosen for prediction. Initially prediction models are developed using only the most effective input parameters—determined by means of simple regression. Later other predication models are developed employing less effective input parameters also-along with more effective parameters. The performance of prediction models are closely observed to derive a conclusion regarding the strength and reliability of the two tools.

3.1 Prediction of Soaked CBR of Fine-Grained Soils Using Regression and Neural Networks

The input parameters OMC, MDD, PL and LL are chosen to develop prediction models for CBR of fine-grained soils using regression analysis and artificial neural network analysis.

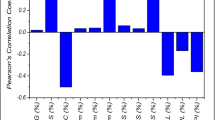

3.1.1 Simple Regression Analysis

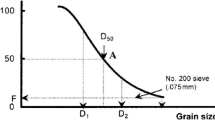

Simple regression analysis was performed prior to multiple regression analysis and neural network analysis in order to identify useful input parameters and to avoid irrelevant/weakly correlated input parameters so as to reduce the chance of neural networks being caught in local minima. To determine the relationship between soaked CBR of fine grained soils and their respective OMC, MDD, plastic limit (PL) and liquid limit (LL) simple regression analysis was used. For soaked CBR, the results of simple regression analyses shown in Fig 1a–d) indicate that in the case of fine-grained soils, MDD and OMC are stronger parameters on soaked CBR than other parameters such as LL and PL. The results of simple regression analyses for compaction characteristics, shown in Fig 2a–d) indicate that in the case of fine-grained soils, the PL bears better correlation with the compaction characteristics than LL. The equations for soaked CBR, MDD and OMC derived using simple regression analyses are given in Table 3.

3.1.2 Multiple Regression Analysis

The simple regression analyses of LL, PL, OMC and MDD with soaked CBR of fine grained soils indicate that, among these four parameters, MDD and OMC are the most effective parameters on soaked CBR of fine grained soils. Based on the results of simple regression analyses, four variables, namely (1/(LL1.34), (1/(PL1.4), (1/(OMC2.74) and (EXP(5.072*MDD)) were used to predict the soaked CBR of fine grained soils (due to the combined effect of various parameters in the multiple regression model, minor modification in the parameter (1/(PL1.81)—obtained using simple regression analysis, was found necessary. When multiple regression analysis was carried out, the performance of the multiple regression model with parameter (1/(PL1.4) was found to be much better than that with parameter (1/(PL1.81) and hence the same was adopted). The summary output is shown in Table 4.

In this case the correlation coefficient (Multiple R) is 0.914, coefficient of determination R2 is 0.835 and adjusted R2 is 0.827. Hence the four independent variables together explain 83.5 % of total variation in dependent variable CBR (df-degree of freedom, SS-sum of squares, ms-mean square error). However when degrees of freedom lost are also taken into consideration, the four independent variables together explain only 82.75 % of total variation in dependent variable. Thus, truly speaking, adjusted R2 indicate the adequacy of the model as it takes into account the deviations as well as degrees of freedom. If adjusted R2 is fairly close to 1, the overall model can be considered adequate to fit the data. But it does not mean that there are no insignificant parameters in it. The residual plots of the four variables shown in Fig. 3 indicate that there is no obvious correlation between the residuals and each independent variable. However, the fact that the residuals look random and that there is no obvious correlation with the variable does not necessarily mean by itself that the model is adequate. More tests are needed.

The overall significance test of the regression model is tested by F-statistic. The column F gives the overall F-test of H0: coefficients = 0 versus Ha: at least one of the coefficients is not equal to zero. Excel computes F as F = [Regression SS/(k−1)]/[Residual SS/(n−k)], where k is the number of regressors including the intercept. The decision rule is when the computed F is greater than critical F, reject the null hypothesis. The computed F (113.7419) in this case is greater than the critical F (3.01—at 0.01 significance level). The closer the significance level is to 0 %, the stronger is the evidence against the null hypothesis and hence the null hypothesis may be rejected. The value of Significance F in ANOVA output is 2.457E × 10−34. Thus the significance F value is much less than 0.01 (hence we reject H0 at significance level 0.01). The corresponding level of confidence is 0.9999. Therefore at least one of coefficients −1.1886, 423.85, −130.72, 6708.641 and 0.00031 is significant for the model. The statistical significance of independent variables and intercept in the multiple regression model is then ascertained by computed t values. This t value of each coefficient is obtained by dividing estimated coefficient by its standard error under the null hypothesis that the coefficient is zero. The computed t static value of all the variables and intercept are greater than critical t value (2), at 0.05 level of significance. This implies that all the variables and intercept in the multiple regression model are statistically significant at 0.05 level of confidence.

The P value approach is also used for evaluating the contribution of individual independent variables. The P value in the regression output indicates the significance of individual coefficient in the regression model. The regression output indicate that Pintercept = 0.0151, which corresponds to fairly high confidence level, 1 − 0.0151 = 0.9849. This suggests that the parameter −1.1886 is significant. The regression output also indicates that confidence levels for other parameters are high, which means that they are also significant. For the regression model, the lower and upper 95 % limits for intercept and variables do not include zero. This agrees with the previous conclusions made about their significance. Hence the multiple regression model obtained, soaked CBR = −1.1886 + 423.85 × (1/LL1.34) − 130.72 × (1/PL1.4) + 6708.641 × (1/OMC2.74) + 0.00031 × (EXP (5.072 × MDD)) is adequate to fit the experimental data. Various prediction models for engineering properties of fine-grained soils thus derived using Multiple Regression Analysis are given in Table 5.

3.1.3 Artificial Neural Network Analysis

The chosen data is divided into three groups, for training, testing and validation, so that the generalization capacity of network could be checked after training phase. The training set is the largest set and is used by neural network to learn patterns present in the data. The testing set is used to evaluate the generalization ability of a supposedly trained network. A final check on the performance of the trained network is made using validation set. Mean Absolute Error (MAE) is used as a measure of error made by the network.

For prediction of soaked CBR using Artificial Neural Network Analysis the four input variables used are the OMC expressed in percentage, MDD expressed in g/cc, PL expressed in percentage and LL expressed in percentage. Hence the input layer has four neurons. The only output is the soaked CBR of soil and therefore the output layer has only one neuron. In this study, a feed forward back propagation neural network with two hidden layers (with hundred neurons each) gave good results. Logistic sigmoid transfer function is used for all the layers and only the output is normalized to get desired results. Since Logistic sigmoid transfer function (often called squashing functions) compress the infinite input range to finite output range normalization of inputs was not found necessary. Learning rate of 0.005 is found to be suitable for good performance. Training goal for the networks is set to 10−4. The networks are trained for fixed number of epochs.

During the training phase, the system adjusts its connection/weight strengths in favour of the inputs that are most effective in determining a specific output. It is possible that repeated training iterations successively improve performance of the network on training data by memorizing training samples. Nevertheless, the resulting network may perform poorly on test data. The use of validation set in the study is an important guard against this overtraining or over fitting network. To avoid overtraining, the error (maximum absolute error and/or mean absolute error) on the test set is monitored. Training is continued as long as the error on the test set decreased. It is terminated when the error on the test set started increasing again. Thus the training is halted when the error on testing dataset is lowest (early stopping method) even though the error on training dataset is found to decrease further as training is continued. As early stopping method is adopted, in order to escape local minima—large numbers of hidden nodes are used. The Mean Absolute Errors of testing and validation data of the model are 0.3634 and 0.5006, respectively. The error plot of testing and validation data is given in Fig. 4. Table 6 indicates different prediction models thus derived for soaked CBR, MDD and OMC using Artificial Neural Network Analysis.

3.2 Comparison of Results of Multiple Regression Analysis and Neural Network Analysis

To study how well the analytical values “fit” the experimental values, the mean absolute errors (MAE) and correlation coefficients (r) of the predicted values are determined. The results of prediction of CBR and compaction characteristics using multiple regression analysis and neural network analysis are shown in Table 7. To evaluate the performances of multiple regression models and ANN models, both MAE and correlation coefficient are taken to consideration. Figure 5a, b illustrate the neural network-multiple regression error plots for prediction of soaked CBR of fine-grained soils using 2 variables (MDD, OMC) and 4 variables (MDD, OMC, PL and LL). Similar error plots for prediction of OMC and MDD of fine-grained soils using 1 variable (PL) and 2 variables (PL and LL) are shown in Fig. 6a–d). The performances of both neural network models and multiple regression models used for prediction of soaked California Bearing Ratio (CBR)/Compaction characteristics of fine-grained soils are summarized in Table 8.

a OMC prediction (1 variable) Multiple regression versus Neural networks—Error plot, b OMC prediction (2 variables) Multiple regression versus Neural networks—Error plot, c MDD prediction (1 variable) Multiple regression versus Neural networks—Error plot, d MDD prediction (2 variables) Multiple regression versus Neural networks—Error plot

The study show that for prediction of soaked CBR of fine-grained soils, using 4 variables—MDD, OMC, PL and LL, though the predicted and the experimental values strongly agree for neural network models as well as multiple regression models the predictions using neural network models are more accurate. Also, fairly good predictions on soaked CBR could be made using the two strong parameters—MDD and OMC alone, in which case also neural network models are more accurate (though the mean absolute errors of ANN models for the prediction of soaked CBR of fine-grained soils using 4 input variables and 2 input variables are lesser than the corresponding multiple regression models, the correlation coefficients of multiple regression models are higher than ANN models -this could at times happen due to smaller sample size and presence of outliers). In the case of prediction of compaction characteristics using two variables PL and LL, neural network models are far more accurate than multiple regression models. The results thus prove that neural network analysis has more potential as a forecasting tool than multiple regression analysis.

The results also indicate that, when the input parameters PL and LL are used along with the two strong parameters OMC and MDD—the accuracy of prediction of soaked CBR increases significantly in the case of neural network analysis while moderate improvement in accuracy is observed for prediction of soaked CBR using multiple regression model. In the case of prediction of OMC, with the use of additional input parameter LL along with PL the accuracy of prediction of neural network model increase considerably while the use of additional input parameter affects the multiple regression model adversely. The additional input parameter—LL also improves the accuracy of prediction of MDD using neural network model notably, while the increase in accuracy of prediction of multiple regression model with the use of additional parameter is negligible. The superior performance in the case of neural network models—with more number of influential parameters—is obvious in individual results of validation data depicted in the error plots.

It can be inferred from the results that in the case of neural network analysis, the use of relatively less influential input parameters along with stronger parameters is definitely beneficial, unlike conventional statistical methods—for which, this approach is risky—with either damaging effect or less benefit. The various input parameters used for prediction of dependent variable need not have strong and equal influence on the dependent variable, for ANN to develop a good model. But in the case of multiple regression models, this approach need not always produce positive results. With the addition of more input parameters, the information available to neural networks increase, which in turn improves the performance of neural networks. ANN models are capable of extracting even the subtle relationship of the dependent variable to the weak independent variables in a better manner than multiple regression models. Only very weak parameters need to be avoided in the case of neural network analysis. However use of less influential parameters alone in ANN models is not likely to give favorable results. The behaviors of soils as such are very complicated and hence to safeguard the dependability of prediction models for soil properties it is always preferable to use more number of influential parameters—which can be done safely only using neural networks. Those parameters which can be easily determined using simple laboratory tests—having moderate correlation with predicted variable can also be included in neural network analysis to improve the prediction results.

On comparing the predictions of engineering properties of fine-grained soils made using regression analysis and neural network analysis it is understood that with a uniformly representative database and sufficient number of input variables, ANN is capable of making more accurate predictions. To get most accurate predictions, the database used for fine-grained soils should be well representative which includes soils belonging to different groups such as CH, CI and CL as well as MH, MI and ML. In this study, the database (inclusive of testing and validation data) used for the predictions of CBR values of fine-grained soils included 112 soil samples while the database used for the predictions of compaction characteristics of fine-grained soils consisted of 145 soil samples. Though these databases cannot be claimed as perfect uniformly representative large databases, representing all possible cases, the results indicate that reasonably accurate predictions can be made even with lesser data. The performances of artificial neural networks will definitely improve with a larger and more representative database and sufficient number of input variables. However, neural network analysis lacks transparency—the output is obtained as numerical values. No information can be gathered about the effect of each input variable on the predicted variable. Neural networks may even be termed as “black boxes” for this inability. For regression analysis, the output obtained in the form of equations/trend lines gives an overall idea about the influence of each input variable on the predicted variable. While the training time/processing time required for obtaining the best neural network model can vary, the regression analysis take definite time.

4 Summary

Predictions of engineering properties (namely soaked CBR, MDD and OMC) of fine-grained soils using neural network models are better than those using regression models. Accurate predictions on CBR of fine-grained soils can be obtained using neural networks with four numbers of influential input parameters namely OMC, MDD, PL and LL. Also precise predictions on compaction characteristics of fine-grained soils can be achieved with two numbers of influential input parameters namely PL and LL. In the case of neural network analysis, the use of relatively less influential input parameters along with stronger parameters is definitely beneficial, unlike conventional statistical methods—for which, this approach need not always return positive/favourable results. The various input parameters used for prediction of dependent variable should not necessarily have strong and equal influence on the dependent variable, for ANN to develop a good model. The behaviours of soils as such are very complicated and hence to safeguard the dependability of prediction models for soil properties it is always preferable to use more number of influential parameters—which can be done securely only using neural networks. However, ANN models give less insight into the underlying physical relationship between each input variable and predicted variable.

5 Conclusions

-

Predictions on soaked CBR and compaction characteristics of fine-grained soils using neural network models are better than those using regression models.

-

Accurate predictions on soaked CBR of fine-grained soils can be obtained using neural networks with four numbers of influential input parameters namely OMC, MDD, PL and LL. Also precise predictions on compaction characteristics of fine-grained soils can be achieved with two numbers of influential input parameters namely PL and LL.

-

The performance of neural networks improves as the number of input parameters increase. Thus for neural network analysis, the use of relatively less influential input parameters along with stronger parameters is definitely beneficial, unlike multiple regression—for which, this approach is sometimes not so advantageous. In other words, in the case of neural network analysis, it is more effective to use all influential parameters—which can be determined easily by simpler laboratory tests—simultaneously, while this approach need not always give better results for conventional statistical methods. With the addition of more input parameters, the information available to neural networks increase, which in turn improves the performance of neural networks. The various input parameters used for prediction of dependent variable need not have strong and equal influence on the dependent variable, for ANN to develop a good model. Hence very weak input parameters alone need to be avoided in neural network analysis.

-

The behaviours of soils as such are very complicated and hence to safeguard the dependability of prediction models for soil properties it is always preferable to use more number of influential parameters in the prediction models—which can be done safely only using neural networks.

References

Agarwal KB, Ghanekar KD (1970) Prediction of CBR from plasticity characteristics of soil. In: Proceedings of the 2nd south-east Asian conference on soil engineering, Singapore, pp 571–576

Amari SI, Murata N, Muller KR, Finke M, Yang HH (1997) Asympotic statistical theory of overtraining and cross-validation. IEEE Trans Neural Netw 8(5):985–996

Breiman L (1994) Comment on neural networks: a review from a statistical by B. Cheng and D. M. Titterington. Stat Sci 9(1):38–42

Chester DL (1990) Why two hidden layers are better than one. Internat Joint Con Neural Netw 1:265–268

Datta T, Chattopadhyay BC (2011) Correlation between CBR and index properties of soil. In: Proceedings of Indian Geotechnical Conference, Kochi

Flood I (1991) A Gaussian-based neural network architecture and complementary training algorithm. In: Proceedings of the International Joint Conference on Neural Networks, New York, pp 171–176

Flood I, Kartam N (1994) Neural networks in civil engineering I: principles and understanding. J Comput Civil Eng 8(2):131–148

Hassoun MH (1995) Fundamentals of artificial neural networks. MIT Press, Cambridge

Hecht-Nielsen R (1989) Theory of the back-propagation neural network. In: Proceedings of the International Joint Conference on Neural Networks, Washington, DC, pp 593–605

Karsoliya S (2012) Approximating number of hidden layer neurons in multiple hidden layer BPNN Architecture. Internat J Eng Trends Technol 3(6):714–717

Lapedes A and Farber R (1988) How neural networks work. Neural information processing systems. American Institute of Physics, College Park, pp 442–456

Masters T (1993) Practical neural network recipes in C++. Academic Press, San Diego

Pandian NS, Nagaraj TS, Manoj M (1997) Re-examination of compaction characteristics of fine-grained soils. Geotechnique 47(2):363–366

Patel RS, Desai MD (2010) CBR Predicted by index properties of soil for alluvial soils of South Gujarat, Indian Geotechnical Conference, Proc. IGC I: 79–82

Ripley BD (1996) Pattern recognition and neural networks. Cambridge University Press, Cambridge

Roy TK, Chattopadhyay BC (2006) Prediction of compaction characteristics of subgrade materials for the road. Proc Indian geotech conf Chennai 2:737–740

Roy TK, Chattopadhyay BC, Roy SK (2007) Prediction of CBR for subgrade of different materials from simple test, Proceedings of the International conference on civil engineering in the new millennium—opportunities and challenges—Kolkata

Roy TK, Chattopadhyay BC, Roy SK (2009) Prediction of CBR from compaction characteristics of cohesive soil. Highw Res J 77–88

Rumelhart DE, Hinton GE, Williams RJ (1986) Learning internal representation by error propagation. Parallel distributed processing. MIT Press, Cambridge

Sarle WS (1994) Neural networks and statistical models. In: Proceedings of the 19th Annual SAS Users Group International Conference, Cary, NC: SAS Institute, pp 1538–1550

Sarle WS (1995) Stopped training and other remedies for overfitting. In: Proceedings of the 27th Symposium on the Interface of Computing Science and Statistics, pp 352–360

Shahin MA, Jaksa MB, Maier HR (2008) State of the art of artificial neural networks in geotechnical Engineering. EJGE paper

Smith GN (1986) Probability and statistics in civil engineering: an introduction. Collins London

Smith M (1993) Neural networks for statistical modeling. Van Nostrand Reinhold, New York

Sridharan A, Nagaraj HB (2005) Plastic limit and compaction characteristics of fine-grained soils. Ground Improvement 9:17–22

Tang Z, Almeida C, Fishwick PA (1991) Time series forecasting using neural networks versus Box-Jenkins methodology. Simulation 57(5):303–310

Taylor B, Darrah M, Moats C (2003) Verification and validation of neural networks: a sampling of research in progress. Proceedings of SPIE 5103:8–16

Willmott CJ, Matsuura K (2005) Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance. Clim Res 30:79–82

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Varghese, V.K., Babu, S.S., Bijukumar, R. et al. Artificial Neural Networks: A Solution to the Ambiguity in Prediction of Engineering Properties of Fine-Grained Soils. Geotech Geol Eng 31, 1187–1205 (2013). https://doi.org/10.1007/s10706-013-9643-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10706-013-9643-5