Abstract

The competitive market of mobile applications (apps) has driven app developers to pay more attention to addressing the issues of mobile apps. Prior studies have shown that addressing the issues that are reported in user-reviews shares a statistically significant relationship with star-ratings. However, despite the prevalence and importance of user-reviews and issue reports prioritization, no prior research has analyzed the relationship between issue reports prioritization and star-ratings. In this paper, we integrate user-reviews into the process of issue reports prioritization. We propose an approach to map issue reports that are recorded in issue tracking systems to user-reviews. Through an empirical study of 326 open-source Android apps, our approach achieves a precision of 79% in matching user-reviews with issue reports. Moreover, we observe that prioritizing the issue reports that are related to user-reviews shares a significant positive relationship with star-ratings. Furthermore, we use the top apps, in terms of star-ratings, to train a model for prioritizing issue reports. It is a good practice to learn from the top apps as there is no well-established approach for prioritizing issue reports. The results show that mobile apps with a similar prioritization approach to our trained model achieve higher star-ratings.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The revenue of Android applications (apps) has increased enormously in the past few years (Statista 2017b; Stats 2016). App markets, such as Google Play Store (Google 2017; Statista 2017a), are very competitive for app developers. Google Play Store provides a scoring system where users can rate apps from one star (the lowest star-rating) to five stars (the highest star-rating) and post their comments (i.e., user-reviews). Star-ratings can affect the number of downloads and the income of app development companies (Bavota et al. 2015; Kim et al. 2011). Associated user-reviews with star-ratings contain valuable information, such as bug reports and feature requests (Iacob and Harrison 2013; Panichella et al. 2015). Such information can be useful for app developers to manage issues and demands of users to achieve higher star-ratings.

Traditionally, issues are managed and prioritized through issue tracking systems. Many mobile apps use GitHub (2018a) as an issue tracking system to manage the issues that are reported by developers. It has been found that addressing the issues that are reported in user-reviews can increase the star-ratings (Palomba et al. 2015). However, there is no precise link between the issue reports in issue tracking systems and the user-reviews on Google Play Store. We propose a solution to establish a connection between issue reports and user-reviews. The benefits of having a connection between user-reviews and issue reports are twofold. First, developers can focus on the issue reports that can increase the star-ratings of their apps. However, it is still hard for app developers to decide which user-related issue report should be addressed first. For instance, an issue that is reported by an expert developer may receive high priority (Xuan et al. 2012), as well as an issue appearing in many user-reviews. A resolution of various aspects is beneficial for prioritizing issue reports. Second, app developers would identify the issues that have already been reported in the issue tracking system. Hence, developers can avoid issue report duplications (Cavalcanti et al. 2013) if they plan to add the issues that are reported in the user-reviews to the issue tracking system.

Prior studies, such as Villarroel et al. (2016) and Chen et al. (2014), only focus on user-reviews, but neglect the information provided in issue tracking systems. The issue reports in issue tracking systems are the outcome of developers’ efforts in identifying potential issues and should not be neglected. Chen et al. (2014) propose an approach to extract informative user-reviews using textual features of user-reviews, and rank them. Villarroel et al. (2016) enhance release planning by classifying user-reviews into meaningful groups of bug reports and feature requests. Our work aims to prioritize issue reports by leveraging both user-reviews and issue reports. Therefore, we can prioritize issue reports by integrating both the users’ feedback reflected in user-reviews and developers’ experience in handling issues.

In this paper, we collect all the Android apps (i.e., 1,310 apps) that are available on FDroid (2017). F-Droid is the largest repository for open-source Android apps. We study 326 of 1,310 apps that have a non-trivial amount of user-reviews and issue reports (Khalid et al. 2016). We address the following research questions:

- ᅟ:

RQ1) How precisely can user-reviews be mapped to issue reports?

A user-review is an unstructured piece of text (Palomba et al. 2015) that is not longer than two lines on average. We cluster user-reviews to enhance the precision of matching user-reviews with issue reports. Each cluster contains the user-reviews that are related to the same issue. To map each cluster to its related issue report, we compute the textual similarity between issue reports and clusters of user-reviews. The results show that our approach achieves a precision of 79%.

- ᅟ:

RQ2) Does prioritizing user-related issue reports have a relationship with

- ᅟ:

star-ratings?

To explain the prioritization order of issue reports, first, we compute 59 issue report metrics and 31 user-review metrics. Then, we use the metrics to model the issue reports prioritization of each app. Our models fits well (i.e., adjusted R2 ≥ 0.5 (Nelder and Baker 1972)) for 37% of the apps but fails to fit for 63% of the apps. We observe that the apps which share a significant relationship between their star-ratings and our metrics tend to receive higher star-ratings. The results imply that prioritizing the issue reports with respect to our suggested metrics is beneficial for achieving higher star-ratings.

- ᅟ:

RQ3) How can app developers prioritize user-related issue reports to achieve

- ᅟ:

higher star-ratings?

It is beneficial to learn from the top-rated apps for prioritizing issue reports. We use the top apps to train a prediction model using the random forest technique (Liaw and Wiener 2002). We apply the trained model to the remaining apps. For each app, we compare the similarity score between the predicted prioritization orders and the actual prioritization orders of issue reports. We obtain two groups of apps: (i) the apps with higher similarity scores of prioritization, and (ii) the apps with lower similarity scores. We observe that the first group of apps receive higher star-ratings than the second group. Hence, our suggested method can be a helpful solution for app developers in order to prioritize the issue reports.

Paper organization

Section 2 explains our experiment setup. Section 3 describes the details of the research questions and findings. Section 4 discusses the potential threats to the validity of our work. Section 5 introduces the related work. Finally, we conclude the paper in Section 6.

2 Experiment Setup

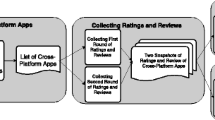

An overview of the experiment setup is depicted in Fig. 1. As shown in Fig. 1, our experiment setup mainly consists of the following steps: (i) preprocessing user-reviews and issue reports, (ii) clustering user-reviews, (iii) computing metrics of both user-reviews and issue reports, and (iv) measuring prioritization orders of issue reports.

We apply the vector space model (Salton et al. 1975) adopting the term frequency-inverse document frequency (TF-IDF) (Salton and Mcgill 1983) to measure the cosine similarity between issue reports and each cluster of user-reviews. We use metrics of clusters of user-reviews and issue reports to model issue reports prioritization. Finally, we build a prediction model based on top apps to predict issue reports prioritization of mobile apps. We observe that the apps that follow similar prioritization orders as our predicted ones, receive better star-ratings.

2.1 Data Sources

We retrieved a set of open-source apps associated with their GitHub repositories from F-Droid app market (FDroid 2017). F-Droid is an app store for open-source Android apps that provides access to both source codes and binary files (FDroid 2017).

2.1.1 Apps

1,310 open-source Android apps were hosted on F-Droid app market (FDroid 2017) as of September 1,2016. Not all the 1,310 apps were associated with GitHub repositories. We obtained 1,120 apps (i.e., 85% of the total apps) that were associated with their GitHub repositories.

We build a distinct model for each individual app (see Section 3.2). The number of Events Per Variable (EPV) is a metric that calculates the ratio of data points to the number of variables (Tantithamthavorn et al. 2017). To avoid the risk of over-fitting and having unstable results, having an EPV ≥ 10 is recommended (Tantithamthavorn et al. 2017). With less than 10 user-reviews or less than 10 issue reports, achieving an EPV ≥ 10 is not possible. Therefore, we excluded the apps that have received less than 10 user-reviews or issue reports from our study. We identified 326 Android apps that meet the aforementioned criteria.

2.1.2 User-Reviews

A user-review that is posted by an individual user contains a text, star-rating, and date on which the review is posted. Figure 2 shows an overview of the process of retrieving user-reviews. We gradually retrieved the user-reviews by building a crawler on top of Selenium automation tool (Selenium 2017). In the following paragraphs, we describe Selenium tool, the crawler, and gradual retrieving method.

Selenium

Selenium provides a set of tools and APIs to automate web browsing. The chief purpose of Selenium is web testing. However, it can be used for other purposes, such as web crawling. The primary parts of Selenium are (i) an IDE, (ii) a client API, and (iii) a web driver. The Selenium IDE is implemented as a Firefox add-on that allows recording, editing, and debugging web tests (Bruns et al. 2009). The client API lets developer communicate with Selenium. Finally, the web driver sends the Selenium commands to the browser (Selenium 2017).

Crawler

To retrieve the user-reviews, we built a crawler using Selenium (2017). The crawler extracts all of the app information, such as app names, and the associated user-reviews.

Gradual retrieving

Google Play Store limits the total number of user-reviews that a user can view to 2,400 user-reviews (Khalid et al. 2014). Therefore, one cannot access all the available user-reviews of an app at once if it comes with more than 2,400 user-reviews (Khalid et al. 2014; Google 2017). Therefore, we run the crawler on a daily basis for five years to get the latest user-reviews of each app. Then, we merge the new user-reviews with the existing user-reviews in our database. Hence, we could capture all the user-reviews for all the subject apps. The process of getting the new user-reviews takes about one hour a day.

2.1.3 Issue Reports

An issue report that is posted by a user on GitHub includes a title, text, and date on which the issue is posted. We retrieved all the available issues of our subject apps using GitHub application programming interface (API) (Developer 2018). As an alternative approach, GHTorrent (Gousios 2013) could also have been used to measure the required metrics. As we only need the issue reports of a limited number of projects (i.e., 1,120 apps), using GHTorrent is not necessary. Figure 3a shows the number of issue reports for each of the 326 subject apps. As shown in Fig. 3a, the number of issue reports varies for each app.

2.2 Preprocessing Data

A user-review is an informal piece of text (Google 2017; Palomba et al. 2015) that can potentially suffer from grammatical issues and typos. For example, a user-review such as “Tha pics couldnt be sentttt” has several typos: “Tha” and “sentttt” need to be changed to “The” and “sent”, respectively. Moreover, user-reviews are usually short with few words. Furthermore, there are no consistent choices of words to describe the same issues. For instance, different users may use either error or problem to report a bug. In addition, user-reviews contain negations that confuse automatic approaches. Without considering negations, a user-review such as “Great app! Runs with no problem!” could have been interpreted as a user-review that reports a problem.

In the following paragraphs, we describe the taken steps for addressing the challenges that are mentioned above. In addition, we asked three non-authors to evaluate the mappings between user-reviews and issue reports. The evaluators are graduate students in computer science and software engineering. We randomly selected 384 user-reviews with the associated issue reports with the confidence level of 95% and the confidence interval of 5%. Each evaluator independently evaluated the mapping between the user-reviews in the sample and the issue reports. We applied the major vote rule to solve the conflicts among the evaluators. In each step, we use the above set of user-reviews as a reference to measure the improvement in the mapping precision.

2.2.1 Filtering Out Uninformative User-Reviews

In total, we collected 170,373 user-reviews. An uninformative user-review, such as “This app is OK”, has no valuable information for app developers. The most recent studies (Chen et al. 2014; Villarroel et al. 2016) propose different approaches to filter out uninformative user-reviews. For example, Chen et al. (2014) employ the expectation maximization for Naïve Bayes classifier (Calders and Verwer 2010) to identify uninformative user-reviews. However, we cluster the related user-reviews together. Therefore, a group of uninformative user-reviews can potentially become informative when they are grouped together. Having groups of related user-reviews (informative or uninformative) allows us to calculate the required metrics more accurately (see Section 2.4). Consequently, we only filter out the user-reviews that only praise or condemn an app. To this end, we use linguistic rules (Iacob and Harrison 2013). The main author defined the linguistic rules by manually investigating 5,000 randomly selected user-reviews. Although more is always better, we chose 5,000 user-reviews because (i) a set of 5,000 user-reviews is a representative sample of user-reviews and (ii) manually analyzing the user-reviews is a time-consuming task. On average, each user-review takes 15 seconds to analyze. Therefore, it takes about 21 hours to analyze all the user-reviews. Among 5,000 user-reviews, we identified 3,789 user-reviews as informative and 1,211 user-reviews as uninformative ones according to the rules that are listed in Table 1. As an example, for the first rule, we match ‘this app works fine’ and ‘is very awful’. For the second rule, we match ‘not a good app’ and ‘terrible’. For the last rule, we match phrases like ‘thank you!!!’.

Moreover, we put aside non-English user-reviews from the collected user-reviews using Language Detector (Optimaize 2017). The Language Detector creates a distinct profile for different languages. Then, it uses each profile to identify the language of a given text (Optimaize 2017). We end up with 130,712 user-reviews. Figure 3b shows the number of user-reviews for each subject app.

2.2.2 Correcting Typos

Typos usually impact the results of text analysis techniques (Nord 2005). We use Jazzy Spell Checker (Jazzy 2017) with a dictionary of 645,289 English words to fix the typos of user-reviews and issue reports. Jazzy provides a set of Java APIs that allows us to detect misspelled words and replace them with the correct ones. Based on manually investigating 384 user-reviews with the confidence level of 95%, Jazzy corrects 68% of the incorrect words. Correcting typos allows us to increase the mapping precision by 4%.

2.2.3 Resolving Synonyms

General-purpose thesaurus, such as WordNet (Miller 1995), are not sufficient to resolve the synonyms of an informal text, such as a user-review (Noei et al. 2018; Villarroel et al. 2016). Therefore, we build our own dictionary of words to resolve the synonyms. To ease the processing of building the dictionary, we applied LDA topic modeling technique (Blei et al. 2003; Noei and Heydarnoori 2016) on our data. We manually investigate each group of words that appear in the same topic and group the words that have similar meaning together accordingly. From each set of similar words, we pick one as the representative word and replace the other words with the representative word of each group. For example, bug, error, and glitch belong to the same group of terms.

We also replaced abbreviations and informal messaging vocabularies with formal words. We find the abbreviations and informal messaging vocabularies from the available online sources (Allacronyms 2017; Netlingo 2017). For example, “luv” should be replaced with “love”. In our experiment, resolving synonyms let us increase the mapping precision by 3%.

2.2.4 Resolving Negations

The negations in the user-reviews can mislead the text processing techniques in getting the real meaning of user-reviews. To avoid this, we use Stanford natural language processing toolkit (Manning et al. 2014) to find and resolve the negated terms (Villarroel et al. 2016). In our experiment, resolving negations increases the precision of our mapping by 3%.

2.2.5 Removing Stop-words

Stop-words are the most common words that exist in a language, such as “is” and “the”. We remove stop-words using Stanford CoreNLP (Manning et al. 2014). Removing stop-words allows text processing techniques to focus on the main words of user-reviews and issue reports (Rajaraman et al. 2012).

2.2.6 Stemming

Reducing inflected words to their word stem is called stemming (Lovins 1968). By stemming user-reviews and issue reports, all forms of a word can be transformed to the same stem. For example, “report” and “reporting” have the same word stem that is “report”. We use the Snowball program (Snowball 2018) to stem the words.

2.2.7 Extracting n-grams

Sometimes, words share a more concrete meaning when they come together. For example, a four-word phrase, such as does_not_send_pictures, shows a problem in sending pictures, while having these four words separated does not reflect its real meaning. A n-gram is a contiguous sequence of n words from a given sentence or sequence of words (Broder et al. 1997). For each user-review and issue report, we extract the n-grams with n varying from 2 to 4. Extracting the n-grams helps us to deal with the negations more effectively. Similar to Villarroel et al. (2016), we extract the n-grams before the preprocessing steps to avoid losing any potential information. In our experiment, extracting 2-grams, 3-grams, and 4-grams, increase the mapping precision by 3%, 1%, and 1% respectively. In total, extracting n-grams (n ∈ {2, 3, 4}) increases the mapping precision by 5%.

2.3 Clustering User-Reviews

We cluster the related user-reviews by customizing the Villarroel et al. approach (2016), such as adding a step for correcting typos. By clustering user-reviews, even short and uninformative user-reviews can become helpful when they are considered together. Furthermore, clustering user-reviews is required in this study for two main reasons: (i) having the related user-reviews clustered together significantly increases the mapping precision by 45%, and (ii) computing the metrics of user-reviews requires a group of related user-reviews, such as quantifying the number of user-reviews that report the same issue.

2.3.1 Approach

We apply DBSCAN (Ester et al. 1996) on user-reviews of each app. DBSCAN is a density-based clustering algorithm that groups the elements of user-reviews (i.e., words and n-grams) together that are closely placed near each other. We compute the distance between two user-reviews by applying the vector space model (Salton et al. 1975) cosine similarity between (i) associated star-ratings (Villarroel et al. 2016), (ii) post-processed user-reviews, and (iii) lists of n-grams. We adopt TF-IDF (Salton and Mcgill 1983) on the vectors of user-reviews. TF-IDF allows us to measure the frequency of each term and estimate how much information each term provides. DBSCAN requires two parameters: (i) the maximum distance between user-reviews, and (ii) the minimum number of user-reviews that can be clustered together. We set the maximum distance between two user-reviews to 0.6 as it gives the best performance of DBSCAN. We set the minimum number of points of DBSCAN to 1 as one user-review may be useful in identifying a potential issue.

2.3.2 Evaluation

We followed the same approach as Villarroel et al. (2016) to evaluate the clustering approach. We randomly selected 384 user-reviews with the confidence level of 95% and the confidence interval of 5%. The external evaluators clustered the related user-reviews. Then, we compared the manually clustered user-reviews with the automatically clustered user-reviews. We achieved an accuracy of 80% in clustering the user-reviews. An example of an issue report that is matched with a cluster of user-reviews is shown at Table 2. As shown in Table 2, the reported issue is about an issue in the auto-correcting module where there exists some user-reviews reporting the same issue.

2.4 Computing Metrics of User-Reviews

We follow the Goal / Question / Metric (GQM) paradigm (Basili 1992; Van Solingen et al. 2002) to capture the metrics of user-reviews. The GQM is a measurement paradigm that is based on three levels: (i) conceptual, (ii) operational, and (iii) quantitative. The conceptual level, i.e., the goal, should be defined with respect to the purpose of a given model. The operational level is a set of questions to describe the goal that is defined at the conceptual level. The quantitative level is a set of metrics that can be measured to address each question of the operational level. Also, the availability of each metric has to be considered. For instance, we cannot capture the level of expertise of a user who posts a user-review, but we can capture the number of users that post similar user-reviews.

We set our goal to quantify the user-reviews. Table 3 shows our GQM model for capturing the user-reviews. As shown in Table 3, we measure 31 metrics of user-reviews, such as the number of similar user-reviews and the proportion of negative and positive user-reviews. For star-ratings, sizes of user-reviews, and sentiment scores, we compute the mean, median, minimum, maximum, 1st quartile, and 3rd quartile of each metric. The median is a metric to measure the central tendency of the data. However, it does not reflect the distribution of data below and above the median. To reduce such a limitation, we measure the 1st and 3rd quartile in addition to the median. For instance, if the 1st quartile is far away from the median but the 3rd quartile is close to the median, we can infer that the data points that are greater than the median are closely placed together in comparison with the data points that are less than the median (Kelley 1947).

2.5 Computing Metrics of Issue Reports

Table 4 shows the GQM model to quantify the issue reports. We compute the mean, median, minimum, maximum, 1st quartile, and 3rd quartile of the following metrics: (i) the sizes of comments, (ii) contribution, (iii) number of following, (iv) number of followers, (v) number of gists of the people who have involved in an issue, and (vi) the time since they have joined GitHub.

Regarding the size of an issue report, it can reflect the amount of information contained in the issue report (Kim et al. 2006). Moreover, Yu et al. (2015) indicate that the size of a given document can be associated with the quality and complexity of a document. For example, consider the example issue reports that are listed in Table 5. The first issue report with a bigger size provides comprehensive details of the reported issue, including the expected behavior, the actual behavior, and the steps to reproduce the issue. However, the second issue with a smaller size report does not provide enough context to understand and resolve the issue.

2.6 Measuring the Prioritization Orders of Issue Reports

Developers react to some issues very fast, while they might postpone responding to some other issues for many weeks. We consider the developers’ reaction attitude as an indicator of the importance of the issues. To estimate developers’ reaction to each issue, we consider the following actions: (i) post comments on an issue report, (ii) submit commits for an issue report, and (iii) adding specific keywords to an issue report, including “Fixed”, “Solved”, “Resolved”, “Closed”, “Feature added”, and “Finished”. To measure the reaction time for each issue, we compute the minimum value of the intervals between each of the aforementioned actions and the time since an issue report has been posted on GitHub. We use the reaction times to measure the prioritization orders of issue reports.

However, some noises may be introduced by considering posting comments as indicators of prioritization orders. For example, a developer may immediately post a comment on an open issue to mention that they will take care of it after dealing with more important issues. We manually investigate the comments of a sample issue reports (384 issue reports) with the confidence level of 95%, confidence interval of 5, and population of 239,736. We observe that only 1.7% of the comments are irrelevant to the associated issue reports which is a tolerable proportion of comments.

3 Research Questions and Results

For each research question, we present our motivation, approach, and findings.

3.1 RQ1) How Precisely can User-reviews be Mapped to Issue Reports?

3.1.1 Motivation

Mobile apps may have hundreds or even thousands of user-reviews. It is not a trivial task for app developers to manually analyze all of the user-reviews. It is beneficial to map user-reviews to issue reports automatically. Therefore, app developers would be able to use the user-reviews to prioritize the issue reports. Moreover, having the knowledge of the issues that are also mentioned in the user-reviews can help app developers to better manage the issues and prevent issue report duplications (Cavalcanti et al. 2013).

3.1.2 Approach

As described in Section 2.3, first, we cluster the related user-reviews. We consider all the user-reviews that belong to the same cluster as a single document that describes the same issue. To determine the similarity between user-reviews and issue reports, we apply the vector space model (Salton et al. 1975). First, we compute TF-IDF (Salton and Mcgill 1983) to obtain the vector of each document, i.e., either a cluster of user-reviews or an issue report. Second, we calculate cosine similarities between issue reports and each cluster of user-reviews. We associate a cluster of user-reviews with an issue report if their similarity is greater than the threshold τ. We evaluate our experiment with different thresholds from 0.05 to 0.95 on five randomly selected apps.

To measure the performance of the mapping approach, we measure the precision of the correctly matched pairs of clusters of user-reviews and issue reports amongst all the matched pairs. We asked three non-authors to manually examine the correctness of each matched pair on a statistically representative sample set of the rest of the apps, i.e., all apps excluding the five apps that we used to determine the best threshold. To obtain such a set, we randomly selected 384 user-reviews with their associated issue reports from 30,520 user-reviews with the confidence level of 95% and the confidence interval of 5%. The three evaluators independently evaluated the sample of user-reviews matched with the issue reports. We applied the major vote rule to resolve the conflicts amongst the evaluators.

3.1.3 Findings

We achieve a precision of 79% in matching user-reviews with issue reports. Figure 4a shows the precision achieved by our approach with various thresholds and Fig. 4b shows the number of matches between user-reviews and issue reports. We set the threshold to 0.85 with a trade-off between the precision of matches and the number of matches. Based on the manual analysis by the three evaluators, our approach achieves a precision of 79% with the threshold τ of 0.85.

We match 27% of the user-reviews with the 33% of the issue reports. The issue tracking systems are normally the working area of app developers (Janák 2009), while user-reviews are from external users. The matches between the issue reports and clusters of user-reviews show the issues that are reflected in both user-reviews and issue reports. In the next research questions, we show that prioritizing only the user-related issue reports shares a significant relationship with star-ratings. Therefore, our approach can help app developers to prioritize user-related issue reports to receive higher star-ratings. Having considered the user-reviews that are grouped together, developers can create new issue reports concerning the user-reviews that are left out. Therefore, with respect to our approach, developers can cover more of the user-reviews when maintaining their apps.

3.2 RQ2) Does Prioritizing User-Related Issue Reports have a Relationship with Star-Ratings?

3.2.1 Motivation

Developers may take different priority orders when addressing the user-related issue reports. Although the lack of issue reports prioritization can negatively impact star-ratings, there is no empirical evidence to show the relation between prioritizing the user-related issue reports and star-ratings. Therefore, we investigate the relationship between star-rating and the metrics of user-reviews and issue reports.

3.2.2 Approach

We model the issue reports prioritization using linear regression models (Faraway 2005). The dependent variable of the regression models is the prioritization orders of the apps. The independent variables are the metrics computed from both user-reviews and issue reports. The goodness of fitness, i.e., adjusted R2 (Nelder and Baker 1972), of the linear regression models shows whether issue reports prioritization has a relationship with the metrics of user-reviews and issue reports. Figure 5 shows an overall overview of our approach.

Before building the regression models, we identify the correlated variables. We apply variable clustering analysis (Hmisc 2017) to build a hierarchical overview of the correlation between the independent metrics (Noei et al. 2017). The metrics within each sub-hierarchy of metrics with Spearman’s |ρ| > 0.7 are considered as correlated variables (Nguyen et al. 2010). We choose one metric that is easier to comprehend for inclusion in our model from each sub-hierarchy of metrics. We build two types of regression models:

- (i)

Generic Model. We build a generic model using all of the subject apps. Building a generic regression model with a high goodness of fitness can show that different apps are following a similar strategy for prioritizing issue reports.

- (ii)

Specific Models. For each app, we build an independent regression model. We get a goodness of fitness for each independent regression model. A higher goodness of fitness can indicate that the issue reports prioritization of an app has a significant relationship with the metrics of user-reviews and issue reports.

The EPV measures the ratio of data points to the number of variables (Tantithamthavorn et al. 2017). An EPV of greater than 10 is recommended to have a low risk of over-fitting and unstable results (Tantithamthavorn et al. 2017). We did not consider 14% of the apps with EPVs less than 10. We divide the apps into two groups; one group with R2 ≥ 0.5 and another one with R2 < 0.5 (Nelder and Baker 1972). We compare the star-ratings of the two groups of apps using Mann–Whitney U test (Mann and Whitney 1947). As a null hypothesis, we assume that the distributions of the star-ratings between the two groups of the apps are the same. The Mann–Whitney U test rejects this hypothesis with a p − value of less than 0.05.

The Mann–Whitney U test may show a significant difference for a sufficiently large sample even if the difference is negligible. Therefore, we also measure the effect size of the differences between star-ratings by applying Cliff’s δ (Cliff 1993). Cliff’s δ is a non-parametric measure without assumptions about the distribution of data (Cliff 1993). Cliff’s δ measures the degree of overlap between the two sets of star-ratings. The output of the Cliff’s δ is a number between − 1 and + 1. If the distribution of star-ratings between the two sets of apps is identical, the Cliff’s δ would be 0 (Cliff 1993). If all the values of the first set are greater than the second set, it would be + 1, and vice versa. We use Cohen’s d (Cohen 2013) to interpret the effect sizes (Zhang et al. 2015). Cliff’s δ could be mapped to Cohen’s standards; the values of 0.147, 0.330, and 0.474 denote small, medium, and large effect size, respectively (Zhang et al. 2015).

3.2.3 Findings

Developers of different apps do not follow the same strategy to prioritize the user-related issue reports. Our generic model using all of the subject apps has a very low goodness of fitness, i.e., R2 < 0.05. Thus, there is no statistically significant universal relationship between the issue reports prioritization and metrics of user-reviews and issue reports for all different apps.

For 37% of the subject apps, issue reports prioritization can be modeled with the metrics of user-reviews and issue reports. The regression models of 37% of the subject apps achieve R2s ≥ 0.5, i.e., the issue reports prioritizations share significant relationships with the metrics of user-reviews and issue reports. Figure 6 shows the obtained R2s. Tables 6 and 7 show two sample models that are built for two distinct apps, i.e., Indic KeyboardFootnote 1 and Vanilla Music,Footnote 2 sorted by p − value. In the last column in both tables, upward arrows indicate when the values of the associated metrics increase, the prioritization rank is more likely to increase, while downward arrows indicate otherwise. For the remaining 63% of the apps, we could not build regression models with a proper goodness of fitness.

Developers of different apps do not consider the same importance level for the metrics of issue reports and user-reviews. Amongst the apps having the user-related issue reports prioritized, the sets of significant metrics are different from each other. For example, some issue reports are prioritized according to the metrics that are defined in the scope of issue tracking systems, such as the contribution of a user who has reported the issue. Some other issue reports are prioritized by considering the user-reviews, such as the number of user-reviews. We count the frequencies of the metrics that share statistically significant relationships with the issue reports prioritization. Table 8 shows the top five metrics of the issue reports and the user-reviews that appear the most. In particular, the size of the title and body, the number of comments, and the contribution of the person who reported the issue are the metrics that appear the most as a significant metric. The star-ratings and the number of user-reviews are the two metrics of user-reviews that have the most relationship with the issue reports prioritization.

The title size is the most popular and the most important metric when it comes to issue reports prioritization. As shown in Table 8, for 59% of the subject apps, the title size appears as a statistically significant metric. The next important metric is the number of comments that are posted for an issue report. The issues that are associated with a higher prioritization order tend to receive more comments. Having considered the title size and the body size as two significant metrics, the contents of issue reports play an important role in issue reports prioritization. Another interesting observation is where the reporter contribution appears as a significant metric for 41% of the apps and the time since the reporter has joined GitHub appears for 40% of the apps. This can show that an issues report that is reported by a developer with a higher reputation tend to be addressed at a faster pace.

Among the user-review metrics, the minimum star-rating, the proportion of neutral star-ratings, and the proportion of low star-ratings appear for 13%, 10%, and 8% of the subject apps as statistically significant metrics, respectively. This may be because developers would like to reduce the number of low star-ratings by addressing the user-reviews that are associated with lower star-ratings (Noei et al. 2017).

Addressing the issues reported in user-reviews has a statistically significant relationship with star-ratings. Figure 7 shows that the apps that we could match their issues reports with user-reviews receive higher star-ratings. The differences between the star-ratings of the apps that we could match their issues reports with the user-reviews (the first and the second boxplot in Fig. 7) and the apps that we could not match their issues reports with the user-reviews (the third boxplot in Fig. 7) are statistically significant with a p − value of 5.37e − 05 and a medium effect size with Cohen′sd of 0.37.

The average of star-ratings of the apps that:

1) We could match their user-reviews with issue reports and issue reports prioritization is statistically significantly related to the metrics.

2) We could match their user-reviews with issue reports but issue reports prioritization does not share a statistically significant relationship with the metrics.

3) We could not match their user-reviews with issue reports.

Prioritizing issue reports with respect to our metrics shares a statistically significant relationship with star-ratings. The first two boxplots in Fig. 7 show the apps that we could match their user-reviews with their issue reports. The differences between the apps that (i) the issue reports prioritization is statistically significantly related to our metrics (the first boxplot in Fig. 7) and (ii) the issue reports prioritization is not statistically significantly related to our metrics (the second boxplot in Fig. 7) are statistically significant with a p − value of 8.82e − 06 and a medium effects size with a Cohen′sd = 0.55. The star-ratings of the first group of apps are higher than the other apps.

3.3 RQ3) How can App Developers Prioritize User-Related Issue Reports to Achieve Higher Star-Ratings?

3.3.1 Motivation

In RQ2, we observe that issue reports prioritization shares a significant relationship with star-ratings. To utilize the important findings of RQ2, we suggest a prioritization method for ranking issue reports in order to achieve higher star-ratings.

3.3.2 Approach

Figure 8 shows an overview of our approach. To better prioritize the issue reports, we define four levels of prioritization. Given the list of issue reports that are ranked based on the prioritization order of issue reports (see Section 2.6), we define the prioritization levels of issue reports as follows:

- (i)

High Priority: The issue reports within the first quartile (0 − 25%) of the prioritization orders are labeled as high priority.

- (ii)

Medium Priority: The issue reports within the second quartile (25 − 50%) of the prioritization orders are labeled as medium priority.

- (iii)

Low Priority: The issue reports within the third quartile (50 − 75%) of the prioritization orders are labeled as low priority.

- (iv)

Trivial Priority: The issue reports within the last quartile (75 − 100%) of the prioritization orders are labeled as trivial priority.

Issue tracking systems usually define a limited number of prioritization orders for the issue reports (Xuan et al. 2012). For example, Bugzilla (2018) defines five orders of prioritization orders from trivial to high priority. We chose four levels of prioritization according to the distribution of the prioritization orders of our subject apps. The high priority issue reports are addressed within an hour. The medium priority issue reports are addressed within a day. The low priority issue reports are addressed within five days. The trivial priority issue reports are addressed after five days.

We build a random forest model (Liaw and Wiener 2002) to predict the prioritization levels of issue reports. Random forest (Ho 1995) is a classification approach that builds a number of decision trees at the training stage. Random forest runs efficiently on large databases and works accurately for predictions (Ho 1995). First, we train a model with the issue reports (that are labeled with four levels of prioritization) of top N apps that hold the highest star-ratings. Second, we use the trained model to predict the prioritization levels of issue reports of the rest of the apps. Third, we measure the accuracy of the predicted levels with the real levels using (1). In (1), for an app a, Ic(i) denotes the number of issue reports with correct predicted levels, and the I(i) shows the total number of issue reports for the app a.

Fourth, we divide the test apps into two groups based on the accuracy of the predicted levels. We put the apps with the pair-wise similarity of more than or equal to the threshold σ into one group. The apps with the pair-wise similarity of less than σ are placed into another group. We compare the average star-ratings of the two groups of apps using the Mann–Whitney U test (Mann and Whitney 1947) to verify whether there is a difference between the star-ratings of the two groups of apps. If the p − value is less than 0.05, it shows that the difference between the star-ratings of two groups of apps is statistically significant. We also calculate the effect size of differences between the star-ratings by measuring Cliff’s δ (Cliff 1993).

To find the best number of top apps (i.e., top N apps), we conduct a sensitivity analysis on the value of N. We did the sensitivity analysis by incrementally adding top apps; starting with the app that has received the highest star-ratings (N = 1), adding the second app with the highest star-ratings (N = 2), and continuing this process until covering all the apps. We train our prioritization model based on top N apps and test the model using the rest of the apps.

We observe that with N = 5, we can build up a prioritization model that can statistically significantly distinguish the star-ratings of the two groups of apps with the lowest p − value (i.e., p − value ≤ 2.7e − 2). Starting from N = 5, as the value of N increases (or decreases) the p − value tends to increase. We decided to consider the top five apps as the difference in the star-ratings of the two groups of apps is larger by having N = 5.

The threshold σ divides the tested apps into two groups of apps that: (i) have similar prioritization orders to our predicted levels, and (ii) have different prioritization orders from our predicted levels. To identify the best value of σ, we conduct a sensitivity analysis. In our sensitivity analysis, we repeat our experiment with different values of 0.01 <= σ <= 0.99 (with the increment value of 0.01). The results of our sensitivity analysis show that any values of σ between 0.43 and 0.55 cause a statistically significant difference between the two groups of the tested apps. 48 apps have a similar prioritization approach to the top five apps.

We calculate the mean decrease in Gini, i.e., Gini importance (Archer and Kimes 2008), to sort the metrics of the ranking model with respect to their importance. The mean decrease in Gini measures the contribution of each metric to the homogeneity of the nodes and the leaves in the model (Biau and Scornet 2016). As all the metrics are numerical, the mean decrease in Gini is a proper approach to identify the important metrics (Strobl et al. 2007; Archer and Kimes 2008).

3.3.3 Findings

Top apps can provide good patterns for other apps in order to prioritize the issue reports as the apps that have similar prioritizations to our predicted prioritizations receive higher star-ratings than other apps. Figure 9 shows the distribution of star-ratings for the apps that: (i) the prioritizations of the issue reports are similar to our predicted prioritizations, and (ii) the prioritizations of the issue reports are different from our predicted prioritizations. As shown in Fig. 9, the star-ratings are higher for the apps that have similar prioritizations to our predicted prioritizations. The difference in star-ratings between the two groups of apps is statistically significant. The p − value is 3.4e − 2 and the effect size is medium with a Cohen′sd = 0.30, indicating that the difference is observable and cannot be neglected.

Table 9 shows the metrics that are used to build the ranking model with respect to the top five apps. As shown in Table 9, the time since a reporter has joined GitHub holds the highest Gini importance. The appearance of the time since a reporter has joined GitHub as a statistically significant metric could be due to the lack of knowledge and experience of the newer members (Steinmacher et al. 2018; Vasilescu et al. 2015). Vasilescu et al. (2015) investigated different aspects of having a diverse (e.g., new and experienced) team members on GitHub. Having diverse developers has some advantages, such as providing a wider range of new ideas (Vasilescu et al. 2015). However, it takes some time for new developers to fit in a project (Zanatta et al. 2017). Improper contributions by newcomers require more efforts for integrations which makes the development process harder (Vasilescu et al. 2015). Also, sometimes new developers are not fully familiar with GitHub (Vasilescu et al. 2015). As a result, new developers face various barriers when contributing to an open source project (Steinmacher et al. 2018; Zanatta et al. 2017), such as lack of experience and communication issues (Steinmacher et al. 2018). Different strategies, such as recruiting mentors, have been suggested in the literature to let new developers better contribute to open source projects (Steinmacher et al. 2012).

The size of an issue is the second metric with the highest Gini importance. Similarly, the size of the user-reviews holds the highest Gini importance among the user-related metrics. This can denote that developers of top five apps normally tend to address the user-reviews that have described an issue more in detail.

For the top apps, we could match a higher proportion of user-reviews to issue reports in comparison with the rest of the apps. As reported in the first research question (see Section 3.1), we could match 27% of the user-reviews with 33% of the issue reports. However, for the top five apps, 52% of the user-reviews are matched with 29% of the issue reports. There is a notable increase in the proportion of the matches in the user-reviews. However, there is a small decrease in the proportion of matches in the issue reports, i.e., 33% to 29%. The top five apps address more issues that are reported in the user-reviews, while developers keep reporting other issues that may not be reflected in the user-reviews.

4 Threats to Validity

In this section, we discuss the threats to the validity of our study (Yin 2013).

4.1 Conclusion Validity

Threats to conclusion validity concern the relationship between the treatment and the outcome. Martin et al. (2015a) report that using an incomplete set of user-reviews can introduce bias to the findings of an empirical study. To eliminate this threat, we take all the user-reviews of our apps into consideration. The choice of modeling technique is another threat to conclusion validity. We use the linear regression model in the second research question. To evaluate the possible threat from the choice of modeling technique, we repeat our experiment using a multinomial regression model and find that our conclusion is not affected (i.e., no generic model can be built and the same trend as in Fig. 7 is obtained).

4.2 Internal Validity

Threats to internal validity concern the analysis methods and selection of subject systems. We select the mobile apps that have more than N matches between the user-reviews and issue reports. The underlying assumption is that the apps with fewer matches do not (or rarely) address the issues described in user-reviews. With our setting (i.e., N = 10) (Khalid et al. 2016), only two of our apps are outliers, which have a small number of issue reports (i.e., 10 and 11) while more than 50% of their issue reports mapped to the user-reviews. Our conclusion remains the same with or without the two outlier apps. The number of comments is one of the metrics that we measured for the issue reports. Only 1.5% of the comments are “+ 1 comments” in our study. We use the reaction time to estimate the prioritization orders. However, such an estimation may introduce some noises. Nonetheless, unfortunately, there is no specific indicator that shows the exact priority of an issue report, i.e., the issue reports are not tagged with specific priority levels. As described in the paper, we carefully measured the reaction times to mitigate this threat. Finally, we did not report the recall of our approach as it requires manually matching the user-reviews with all the issue reports. Instead, we reported the precision.

4.3 External Validity

Threats to external validity concern the possibility to generalize our findings. Although we only study the open-source apps, the subject apps are from diverse categories such as Tools, Video Players & Editors, and Shopping. Therefore, our subject apps can represent a considerable amount of mobile apps. Future work is welcome to examine our findings on proprietary mobile apps.

4.4 Reliability Validity

Threats to reliability validity concern the possibilities of replicating this study. The user-reviews and issue reports of all our subject apps are publicly accessible.

5 Related Work

In this section, we summarize the related work from three aspects: (i) user-reviews, (ii) issue tracking systems and bug reports, and (iii) issue reports prioritization.

5.1 User-reviews

Recent work investigates the user-reviews that are posted on Google Play Store (Google 2017) to ease the process of app development and app maintenance (Galvis Carreño and Winbladh 2013; Guzman and Maalej 2014; Iacob and Harrison 2013).

Chen et al. (2014) proposed a tool to identify the uninformative user-reviews and rank the informative ones. Chen et al. (2014) employed textual analysis to detect the informative user-reviews. Villarroel et al. (2016) classified and ranked the issues that are reported in the user-reviews to help app developers in planning for the next releases of their app. Villarroel et al. (2016) proposed a tool to classify the user-reviews into the groups of bug reports and feature requests. However, neither work considers the issue tracking systems in the ranking process. Some of the issues that are reported in the user-reviews could already have been reported in the issue tracking systems. Therefore, adding such issues to the issue tracking system can introduce issue report duplication (Cavalcanti et al. 2013).

Ciurumelea et al. (2017) proposed an approach to organize user-reviews with respect to different topics, such as performance and memory. Having the user-reviews organized, they recommend source-code using code localization. Di Sorbo et al. (2016) presented an approach to summarize the user-reviews. Iacob and Harrison (2013) employed LDA (Blei et al. 2003) to extract the feature requests from user-reviews. They applied LDA on user-reviews and looked for linguistic rules. Guzman and Maalej (2014) presented an approach to assist developers in analyzing user-reviews. Guzman and Maalej (2014) applied topic modeling techniques on the user-reviews for extracting features.

Moran et al. (2015) introduced a tool, called FUSION, to auto-complete bug reports. Moran et al. (2015) applied statistic and dynamic analysis on the decompiled code of Android apps. FUSION helps developers in reproducing bugs and auto-completing bug reports. Martin et al. (2015b) studied the impact of app release in mobile app stores. Martin et al. (2015b) observed that 40% of app releases impact performance in Google Play Store. Galvis Carreño and Winbladh (2013) applied textual analysis on users’ feedback. They applied topic modeling and sentimental analysis on user-reviews to assist app developer in the revision of requirements for the next releases of their apps.

Earlier studies attempted to extract knowledge from user-reviews and ease the development process. None of the earlier work has integrated the user-reviews into the process of prioritizing the issue reports of the Android apps and has not studied the relationship between prioritizing issue reports and star-ratings.

5.2 Bug Reports and Issue Tracking Systems

The source code and the issue tracking system of the majority of the Android apps are not publicly available. Thus, the number of papers that study bug repositories and issue tracking systems of Android apps is not comparable to the number of papers that study user-reviews.

Some papers are based on empirical studies on characteristics of issue tracking systems. Bhattacharya et al. (2013) conducted an empirical study on 24 open-source Android apps. They defined some metrics of bug report quality and developer involvement. Bhattacharya et al. (2013) observed that bug reports are of high quality, especially the security bug reports have the highest quality among the bug reports. Palomba et al. (2015) studied 100 Android apps. They compared user-reviews with the change log of open-source apps that are available on GitHub. They reported that implementing the users’ feature requests in the next releases can increase the star-ratings.

Some recent researches are based on the source-code of the open-source Android app. McDonnell et al. (2013) studied the API update adoption by Android apps. They noticed that about 28% of references are not up-to-date. They reported that the propagation time of the API references to be updated is around 14 months. Maji et al. (2010) applied a failure characterization study on Android and Symbian apps. They investigate the relationship between bugs, locations of the bugs in the code and code changes. Linares-Vásquez et al. (2015) studied open-source apps. They noticed that app developers rely on manual execution of apps and user-reviews to identify performance bugs.

None of the recent work has studied the relationship between prioritization of issue reports and star-ratings of mobile apps.

5.3 Issue Reports Prioritization

In this section, we introduce the related work that concerns the issue reports prioritization which is mainly done on other eco-systems rather than mobile apps.

Lamkanfi et al. (2010) proposed a severity prediction approach by analyzing textual description of issue reports of three open-source communities, including Mozilla, Eclipse and GNOME. They used Naïve Bayes to label the issue reports as severe or non-severe. Alenezi and Banitaan in Alenezi and Banitaan (2013) employed Naïve Bayes, decision trees, and random forest to predict the priority of issue reports in Bugzilla (2018). They observed that random forest and decision tree outperform Naïve Bayes. Yu et al. (2010) used neural network to prioritize the issue reports. They showed their approach works better than Naïve Bayes. Kanwal and Maqbool (2012) applied Naïve Bayes and SVM to predict the issue reports prioritization. They observed that SVM is better than Naïve Bayes when adopting textual metrics, such as issue report descriptions. However, they observed that when considering the categorical metrics, such as platform, Naïve Bayes performs better than SVM. Menzies and Marcus (2008) ranked the terms that appear in the issue reports by adopting TF-IDF. They used top terms to predict the priority of issue reports. Tian et al. (2012) used the similarity between the current issue reports and the issue reports in the past to estimate the priority of the new issue reports.

None of the above work incorporates user-reviews with issue report for prioritization. We take the metrics of both issue reports and user-reviews to prioritize the issue reports.

6 Conclusion

In this paper, we investigate the prioritizations of user-related issue reports and their relationship with star-ratings. First, we introduce an approach for mapping user-reviews to issue reports. We perform an empirical study of 326 open-source Android apps that have both user-reviews and issue tracking systems publicly available. Our approach achieves a precision of 79%. Second, we observe that prioritizing issue reports is positively related to increases in star-ratings. Finally, we propose a prioritization prediction method using the top apps. The prioritization model can be applied to each app to identify the prioritization orders of issue reports. Our results show that the apps with similar prioritizations to our recommended ones receive higher star-ratings.

In the future, we plan to further investigate the generalizability of our approach. For example, we will apply our approach to user-reviews and issue reports from other app markets and issue tracking systems.

References

Alenezi M, Banitaan S (2013) Bug reports prioritization: which features and classifier to use?. In: 12th international conference on machine learning and applications (ICMLA), IEEE, vol 2, pp 112–116

Allacronyms (2017) Acronyms and abbreviations related to computer science. [Online]. Available: https://www.allacronyms.com/computer-science/abbreviations

Archer KJ, Kimes RV (2008) Empirical characterization of random forest variable importance measures. Comput Stat Data Anal 52(4):2249–2260

Basili VR (1992) Software modeling and measurement: the goal/question/metric paradigm. Tech. rep., Institute for advanced computer studies

Bavota G, Linares-Vasquez M, Bernal-Cardenas CE, Penta MD, Oliveto R, Poshyvanyk D (2015) The impact of api change-and fault-proneness on the user ratings of android apps. IEEE Trans Softw Eng 41(4):384–407

Bertram D, Voida A, Greenberg S, Walker R (2010) Communication, collaboration, and bugs: the social nature of issue tracking in small, collocated teams. In: 2010 ACM conference on computer supported cooperative work, ACM, pp 29–300

Bhattacharya P, Ulanova L, Neamtiu I, Koduru SC (2013) An empirical analysis of bug reports and bug fixing in open source android apps, IEEE, CSMR

Biau G, Scornet E (2016) A random forest guided tour. Test 25(2):197–227

Blei DM, Ng AY, Jordan MI (2003) Latent dirichlet allocation. J Mach Learn Res 3:993–1022

Broder AZ, Glassman SC, Manasse MS, Zweig G (1997) Syntactic clustering of the web. Comput Netw ISDN Syst 29(8-13):1157–1166

Bruns A, Kornstadt A, Wichmann D (2009) Web application tests with selenium. IEEE software 26(5):88–91

Bugzilla (2018) Bugzilla. [Online]. Available: https://www.bugzilla.org/

Calders T, Verwer S (2010) Three naive bayes approaches for discrimination-free classification. Data Min Knowl Disc 21(2):277–292

Cavalcanti YC, Neto PAdMS, Lucrédio D, Vale T, de Almeida ES, de Lemos Meira SR (2013) The bug report duplication problem: an exploratory study. Softw Qual J 21(1):39–66

Chen N, Lin J, Hoi SC, Xiao X, Zhang B (2014) Ar-miner: mining informative reviews for developers from mobile app marketplace. In: 36th international conference on software engineering, ACM, pp 767–778

Ciurumelea A, Schaufelbhl A, Panichella S, Gall H (2017) Analyzing reviews and code of mobile apps for better release planning. In: 24th international conference on software analysis evolution and reengineering, IEEE

Cliff N (1993) Dominance statistics: Ordinal analyses to answer ordinal questions. Psychol Bull 114(3):494

Cohen J (2013) Statistical power analysis for the behavioral sciences. Academic press, Cambridge

De Marneffe MC, MacCartney B, Manning CD, et al (2006) Generating typed dependency parses from phrase structure parses. In: 5th international conference on language resources and evaluation, vol 6, pp 449–454

Developer G (2018) Github developer. [Online]. Available: https://developer.github.com/v3/

Di Sorbo A, Panichella S, Alexandru CV, Shimagaki J, Visaggio CA, Canfora G, Gall HC (2016) What would users change in my app? Summarizing app reviews for recommending software changes. In: 24th ACM SIGSOFT international symposium on foundations of software engineering, ACM, pp 499–510

Ester M, Kriegel H P, Sander J, Xu X, et al (1996) A density-based algorithm for discovering clusters in large spatial databases with noise. In: 2nd international conference on knowledge discovery and data mining, vol 96, pp 226–231

Faraway JJ (2005) Extending the linear model with R: generalized linear, mixed effects and nonparametric regression models. CRC Press, Boca Raton

FDroid (2017) F-droid. [Online]. Available: http://www.f-droid.org/

Galvis Carreño LV, Winbladh K (2013) Analysis of user comments: an approach for software requirements evolution. In: 35th international conference on software engineering, IEEE, pp 582–591

GitHub (2018a) Github. [Online]. Available: http://www.github.com/

GitHub (2018b) Github help. [Online]. Available: https://help.github.com/articles/viewing-contributions-on-your-profile/

Google (2017) Google play store. [Online]. Available: http://play.google.com/

Gousios G (2013) The ghtorrent dataset and tool suite. In: 10th working conference on mining software repositories. IEEE Press, Piscataway, pp 233–236

Guzman E, Maalej W (2014) How do users like this feature? A fine grained sentiment analysis of app reviews. In: 22nd international conference on requirements engineering, IEEE, pp 153–162

Hmisc (2017) Harrell miscellaneous. [Online]. Available: http://cran.r-project.org/web/packages/Hmisc/index.html

Ho TK (1995) Random decision forests. In: 3rd international conference on document analysis and recognition, IEEE, vol 1, pp 278–282

Iacob C, Harrison R (2013) Retrieving and analyzing mobile apps feature requests from online reviews. In: 10th working conference on mining software repositories, IEEE, MSR ’13, pp 41–44

Islam MR, Zibran MF (2017) Leveraging automated sentiment analysis in software engineering. In: 14th International Conference on Mining Software Repositories, IEEE Press, pp 203–214

Janák J (2009) Issue tracking systems. Brno, spring

Jazzy (2017) Jazzy spell checker. [Online]. Available: http://jazzy.sourceforge.net/

Kanwal J, Maqbool O (2012) Bug prioritization to facilitate bug report triage. J Comput Sci Technol 27(2):397–412

Kelley TL (1947) Fundamentals of statistics. Harvard University Press, Harvard

Khalid H, Nagappan M, Shihab E, Hassan AE (2014) Prioritizing the devices to test your app on: a case study of android game apps. In: 22nd international symposium on the foundations of software engineering, pp 370–379

Khalid H, Nagappan M, Hassan AE (2016) Examining the relationship between findbugs warnings and app ratings. IEEE Softw 33(4):34–39

Kim HW, Lee H, Son J (2011) An exploratory study on the determinants of smartphone app purchase. In: 11th international dsi and the 16th APDSI joint meeting

Kim SM, Pantel P, Chklovski T, Pennacchiotti M (2006) Automatically assessing review helpfulness. In: 2006 Conference on empirical methods in natural language processing, Association for Computational Linguistics, pp 423–430

Lamkanfi A, Demeyer S, Giger E, Goethals B (2010) Predicting the severity of a reported bug. In: 7th IEEE working conference on mining software repositories (MSR), IEEE, pp 1–10

Liaw A, Wiener M (2002) Classification and regression by randomforest. R news 2(3):18–22

Linares-Vásquez M, Vendome C, Luo Q, Poshyvanyk D (2015) How developers detect and fix performance bottlenecks in android apps. In: 31st conference on software maintenance and evolution, IEEE, pp 352–361

Lovins JB (1968) Development of a stemming algorithm, MIT Information Processing Group, Electronic Systems Laboratory

Maji AK, Hao K, Sultana S, Bagchi S (2010) Characterizing failures in mobile oses: A case study with android and symbian

Mann HB, Whitney DR (1947) On a test of whether one of two random variables is stochastically larger than the other. Ann Math Stat 18:50–60

Manning CD, Surdeanu M, Bauer J, Finkel J, Bethard SJ, McClosky D (2014) The stanford corenlp natural language processing toolkit. In: 52nd annual meeting of the association for computational linguistics: System demonstrations, pp 55–60

Martin W, Harman M, Jia Y, Sarro F, Zhang Y (2015a) The app sampling problem for app store mining. In: 12th working conference on mining software repositories, IEEE, pp 123–133

Martin W, Sarro F, Harman M (2015b) Causal impact analysis applied to app releases in google play and windows phone store. RN 15:07

McDonnell T, Ray B, Kim M (2013) An empirical study of api stability and adoption in the android ecosystem. In: 29th international conference on software maintenance, IEEE, pp 70–79

Menzies T, Marcus A (2008) Automated severity assessment of software defect reports. In: International conference on software maintenance, IEEE, pp 346–355

Miller GA (1995) Wordnet: a lexical database for english. Commun ACM 38 (11):39–41

Moran K, Linares-Vásquez M, Bernal-Cárdenas C, Poshyvanyk D (2015) Auto-completing bug reports for android applications. In: 10th joint meeting on foundations of software engineering, ACM, pp 673–686

Nelder JA, Baker RJ (1972) Generalized linear models. Encyclopedia of statistical sciences

Netlingo (2017) Top 50 most popular text terms. [Online]. Available: http://www.netlingo.com/top50/popular-text-terms.php

Nguyen TH, Adams B, Hassan AE (2010) Studying the impact of dependency network measures on software quality. In: 26th international conference on software maintenance, IEEE, pp 1–10

Noei E, Heydarnoori A (2016) Exaf: a search engine for sample applications of object-oriented framework-provided concepts. Inf Softw Technol 75:135–147

Noei E, Syer MD, Zou Y, Hassan AE, Keivanloo I (2017) A study of the relation of mobile device attributes with the user-perceived quality of android apps. Empir Softw Eng 22(6):3088–3116

Noei E, Da Costa DA, Zou Y (2018) Winning the app production rally. In: 26th ACM joint meeting on european software engineering conference and symposium on the foundations of software engineering. ESEC/FSE, vol 2018. ACM, New York, pp 283–294

Nord C (2005) Text analysis in translation: Theory, methodology, and didactic application of a model for translation-oriented text analysis. 94, Rodopi

Optimaize (2017) Language detection library for java. [Online]. Available: https://github.com/optimaize/language-detector/

Palomba F, Linares-Vásquez M, Bavota G, Oliveto R, Di Penta M, Poshyvanyk D, De Lucia A (2015) User reviews matter! tracking crowdsourced reviews to support evolution of successful apps. In: 31st international conference on software maintenance and evolution, IEEE, pp 291–300

Panichella S, Di Sorbo A, Guzman E, Visaggio C, Canfora G, Gall H (2015) How can i improve my app? classifying user reviews for software maintenance and evolution. In: 31st international conference on software maintenance and evolution

Rajaraman A, Ullman JD, Ullman JD, Ullman JD (2012) Mining of massive datasets, vol 77. Cambridge University Press, Cambridge

Romero DM, Galuba W, Asur S, Huberman BA (2011) Influence and passivity in social media. In: Joint european conference on machine learning and knowledge discovery in databases, Springer, pp 18–33

Salton G, Mcgill MJ (1983) Introduction to modern information retrieval, 24–51

Salton G, Wong A, Yang CS (1975) A vector space model for automatic indexing. Commun ACM 18(11):613–620

Selenium (2017) Selenium - web browser automation. [Online]. Available: http://seleniumhq.org/

Snowball (2018) Snowball. [Online]. Available: http://snowballstem.org/

Statista (2017a) Number of apps available in leading app stores as of march 2017. [Online]. Available: http://www.statista.com/statistics/276623/number-of-apps-available-in-leading-app-stores

Statista (2017b) Number of smartphone users worldwide from 2014 to 2020 (in billions). [Online]. Available: https://www.statista.com/statistics/330695/number-of-smartphone-users-worldwide

Stats A (2016) Number of android applications. [Online]. Available: http://www.appbrain.com/stats/number-of-android-apps

Steinmacher I, Wiese IS, Gerosa MA (2012) Recommending mentors to software project newcomers. In: 3rd international workshop on recommendation systems for software engineering, IEEE Press, pp 63–67

Steinmacher I, Treude C, Gerosa M (2018) Let me in: Guidelines for the successful onboarding of newcomers to open source projects. IEEE Software

Strobl C, Boulesteix AL, Zeileis A, Hothorn T (2007) Bias in random forest variable importance measures: Illustrations, sources and a solution. BMC Bioinf 8(1):25

Tantithamthavorn C, McIntosh S, Hassan AE, Matsumoto K (2017) An empirical comparison of model validation techniques for defect prediction models. IEEE Trans Softw Eng 43(1):1–18

Tian Y, Lo D, Sun C (2012) Information retrieval based nearest neighbor classification for fine-grained bug severity prediction. In: 2012 19th working conference on reverse engineering (WCRE), IEEE, pp 215–224

Van Solingen R, Basili V, Caldiera G, Rombach HD (2002) Goal question metric (gqm) approach. Encyclopedia of software engineering

Vasilescu B, Filkov V, Serebrenik A (2015) Perceptions of diversity on github: a user survey. In: 8th international workshop on cooperative and human aspects of software engineering, IEEE Press, pp 50–56

Villarroel L, Bavota G, Russo B, Oliveto R, Di Penta M (2016) Release planning of mobile apps based on user reviews. In: 38th international conference on software engineering, ACM, pp 14–24

Xuan J, Jiang H, Ren Z, Zou W (2012) Developer prioritization in bug repositories. In: 2012 34th international conference on software engineering (ICSE), IEEE, pp 25–35

Yin RK (2013) Case study research: Design and methods. Sage publications

Yu L, Tsai WT, Zhao W, Wu F (2010) Predicting defect priority based on neural networks. In: International conference on advanced data mining and applications, Springer, pp 356–367

Yu Y, Wang H, Filkov V, Devanbu P, Vasilescu B (2015) Wait for it: determinants of pull request evaluation latency on github. In: 2015 IEEE/ACM 12th working conference on mining software repositories (MSR), IEEE, pp 367–371

Zanatta AL, Steinmacher I, Machado LS, de Souza CR, Prikladnicki R (2017) Barriers faced by newcomers to software-crowdsourcing projects. IEEE Softw 34(2):37–43

Zhang F, Mockus A, Keivanloo I, Zou Y (2015) Towards building a universal defect prediction model with rank transformed predictors. Empir Softw Eng 21(5):2107–2145

Acknowledgments

We thank the anonymous reviewers who reviewed our paper and the associated editor for their valuable feedback.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by: Miryung Kim

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Noei, E., Zhang, F., Wang, S. et al. Towards prioritizing user-related issue reports of mobile applications. Empir Software Eng 24, 1964–1996 (2019). https://doi.org/10.1007/s10664-019-09684-y

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10664-019-09684-y