Abstract

The extraction of patterns displaying significant association with a class label is a key data mining task with wide application in many domains. We introduce and study a variant of the problem that requires to mine the top-k statistically significant patterns, thus providing tight control on the number of patterns reported in output. We develop TopKWY, the first algorithm to mine the top-k significant patterns while rigorously controlling the family-wise error rate of the output, and provide theoretical evidence of its effectiveness. TopKWY crucially relies on a novel strategy to explore statistically significant patterns and on several key implementation choices, which may be of independent interest. Our extensive experimental evaluation shows that TopKWY enables the extraction of the most significant patterns from large datasets which could not be analyzed by the state-of-the-art. In addition, TopKWY improves over the state-of-the-art even for the extraction of all significant patterns.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

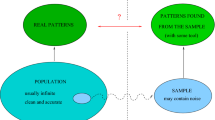

Frequent patterns mining is one of the fundamental primitives in data mining, with applications in a large number of domains, ranging from market basket analysis to biology and medicine (Han et al. 2007). In its original definition (Agrawal et al. 1993) it requires to identify patterns that appear in a fraction at least \(\sigma \) of all the transactions of a transactional dataset. Significant pattern mining (Dong and Bailey 2012; Hämäläinen and Webb 2019; Pellegrina et al. 2019a) is an extension of the problem in which each transaction is assigned a binary class label and the goal is to identify patterns having significant association with one of the class labels. Significance is commonly assessed using a statistical test [e.g., Fisher exact test (Fisher 1922)], that provides a p value quantifying the probability that the association observed in real data arises due to chance alone.

Significant pattern mining is crucial in many applications, providing additional information w.r.t. mining patterns that are frequent in the entire dataset: in market basket analysis it serves to identify itemsets that are purchased more frequently by one group of customers than by another one (e.g., married people vs. singles); in social networks, it finds features characterizing users interested in one specific topic; in biology, it identifies sets of genetic variants appearing more frequently in cancer versus normal tissues or in one cancer type versus another one. In all applications, identifying highly reliable associations is of the utmost importance.

One of the critical issues in significant pattern mining is the multiple hypothesis problem, due to the huge number of patterns appearing in large datasets. When testing only one pattern, if its p value is below a fixed threshold \(\alpha \), one can flag the pattern as significant with the guarantee that the probability of false discovery (e.g., flagging the pattern as significant when it is not) is bounded by \(\alpha \). However, for a fixed significance threshold \(\alpha \), when d patterns are tested we expect \(\alpha d\) of them to have p value below \(\alpha \) even when they are not associated with the class labels. A standard method to correct for multiple hypothesis testing, called Bonferroni method (Bonferroni 1936), is to adjust the significance threshold by dividing \(\alpha \) by the number d of tested patterns. This guarantees that the probability of making one or more false discoveries, called family-wise error rate (FWER), is bounded by \(\alpha \). However, since the number d of patterns can be huge, this approach results in limited statistical power, with very few patterns having p value passing such small threshold (Webb 2006, 2007, 2008). A naïve solution for the problem is to limit the number of elements in the patterns to be tested, hindering the ability to identify large significant patterns.

A breakthrough in significant pattern mining is the work by Terada et al. (2013a), that proposes LAMP, the first method to identify significant patterns without limiting their size. LAMP is based on the work by Tarone (1990), which shows that patterns that cannot reach statistical significance, called untestable, do not need to be taken into account while correcting for multiple hypothesis testing. Subsequent work by Minato et al. (2014) has improved the search strategy employed by LAMP to identify testable patterns. Even so, such methods suffer from limited power, due to the use of Bonferroni correction over the large number of testable patterns.

Recently, methods based on the more powerful Westfall–Young permutation procedure (Westfall and Young 1993) have been proposed, first by Terada et al. (2013b) with FastWY and then by Llinares-López et al. (2015) with Westfall–Young light (WYlight for short). These methods mine several permuted datasets to identify a threshold such that all patterns with p value below the threshold can be flagged as statistically significant while controlling the FWER. Such methods achieve a higher power than methods based on Bonferroni correction, including LAMP, and the state-of-the-art, WYlight, has proved to be more efficient than FastWY also in terms of runtime and memory.

However, the extraction of significant patterns from large datasets is still challenging, with three crucial issues that are not addressed by currently available methods. First, in several cases the dependency among patterns leads to a huge number of statistically significant patterns even after multiple hypothesis correction. A common approach [used, e.g., by Terada et al. (2013b) and Llinares-López et al. (2015)] to partially alleviate this problem is to consider only closed patterns (Han et al. 2007), discarding patterns with redundant information content in terms of appearance in the dataset and of association with the class label. Even with this restriction the number of significant patterns can be extremely large and when this happens one would like to focus on the most significant ones, without resorting to filtering strategies after the expensive extraction of all significant patterns has been performed. A second and related issue is that current methods work by first identifying the exact corrected threshold for statistical significance, and only subsequently mining the real dataset: when the number of significant patterns is huge, one would like to focus on the most significant ones without the burden of computing the exact significance threshold. Third, all methods may need to process several untestable patterns to identify the correct threshold for significance, resulting in a extremely large running time in particular for datasets with many low frequency patterns. These issues make current methods impractical in many cases, as shown by our experimental evaluation.

1.1 Our contribution

In this work we focus on the problem of mining the most statistically significant patterns while rigorously controlling the FWER of the returned set of patterns. In particular, in analogy with frequent pattern mining approaches, we focus on extracting the top-k statistically significant patterns. This problem is more challenging than the extraction of top-k frequent patterns, given that statistical significance does not enjoy the anti-monotonicity property w.r.t. to pattern frequency. In this regards, our contributions are:

we formally define the problem of mining Top-k Statistically Significant Patterns. Our definition allows to properly control the size of the output set while providing guarantees on the FWER of the output.

we design a novel algorithm, called TopKWY, for the problem above, which provides guarantees on the FWER by using the Westfall–Young permutation test procedure. TopKWY adapts to the distribution of significant patterns: it reports all significant patterns when their number is small, while it outputs only the most significant patterns when the number of significant patterns is huge. TopKWY is based on a exploration strategy similar to the one used by TopKMiner (Pietracaprina and Vandin 2007), an efficient algorithm to identify the top-k frequent patterns. We prove that the use of such strategy guarantees that, in contrast to previous approaches, TopKWY will never explore untestable patterns.

we introduce several bounds to prune untestable patterns that improve over the bound introduced by LAMP and used in WYlight as well. We show that such bounds can be effectively used within the exploration strategy employed by TopKWY and that it provides a significant speed-up for real datasets.

we present variants of TopKWY to mine the top-k significant patterns with control of the Generalized Family-Wise Error Rate (g-FWER) or the False Discovery Proportion (FDP), which trade an increase in the size of the output with a (potentially) higher, but still controlled, number of false discoveries. These variants lead to an increase in the statistical power in situations where the number of results reported controlling the FWER is low.

we conduct an extensive experimental evaluation of the use of TopKWY to extract significant itemsets and subgraphs, showing that TopKWY allows the extraction of statistically significant patterns for large datasets while having reasonable memory requirements. Surprisingly, for many datasets TopKWY improves over the state-of-the-art even when it is used to find all statistically significant patterns.

1.2 Additional related work

Since the introduction of the frequent pattern mining problem (Agrawal et al. 1993), a number of methods have been developed to efficiently extract all frequent patterns [see (Han et al. (2007)) for several references]. Given that the number of such patterns can be extremely large and that identifying an appropriate frequency threshold to limit the number of frequent patterns is challenging, methods to identify restricted classes of patterns, e.g., closed patterns (Pasquier et al. 1999) or maximal patterns (Bayardo 1998), have been designed. Methods that directly limit the number of patterns by reporting the k most frequent closed patterns have been designed (Han et al. 2002; Pietracaprina and Vandin 2007) as well.

Many methods have been developed for subgroups discovery [see, e.g., the reviews by Herrera et al. (2011) and by Atzmueller (2015)], that is the task of mining patterns associated with class labels using a quality score to quantify the association between a pattern and class labels. Most of the available methods focus on such scores, without assessing the statistical significance of the association or correcting for multiple hypothesis testing. Contrarily to this general trend, a recent work (Li et al. 2014) uses odds ratio as a measure to quantify statistical significance and to reduce redundancies of subgroups, but still does not correct for multiple hypothesis testing. In (Duivesteijn and Knobbe 2011) multiple hypothesis testing is accounted for using a swap randomization technique (Gionis et al. 2007). The methods we describe in this work can be applied directly to the task of discovering the top-k statistically significant subgroups with proper control of the FWER when the language defining the subgroups is composed by conjunctive formulas, and could possibly be adapted and extended to more general languages.

In addition to the contributions for significant pattern mining mentioned above (Terada et al. 2013a; Minato et al. 2014; Terada et al. 2013b, 2015; Llinares-López et al. 2015) (described more in depth in Sect. 2), recent work has extended the extraction of statistically sound patterns (Hämäläinen and Webb 2019) in directions that are orthogonal to our contributions, for example searching for statistical dependency rules between itemsets and items (Hämäläinen 2012), or using layered critical values (Webb 2008) for correcting for multiple hypothesis testing. Komiyama et al. (2017) have developed efficient techniques for multiple testing correction in the mining of statistical emerging patterns. Terada et al. (2016) and Papaxanthos et al. (2016) have introduced methods to find significant patterns in the presence of covariates.

2 Background and problem definition

2.1 Significant pattern mining

Let the dataset \({\mathcal {D}} = \{t_1, t_2, t_3,\ldots ,t_n\}\) be a set of n transactions, where each transaction is an element from a domain \({\mathcal {F}}\). Each transaction \(t_i\) is associated to a binary label \(c_i \in \{0,1\}\). We denote by \(n_1\) the number of transactions with label 1, and, without loss of generality, we assume that \(n_1\) is the minority class, i.e. \(n_1 \le n/2\). We define a patternS as an element of \({\mathcal {F}}\), potentially with some constraints, and for each transaction t we define the binary variable G(S, t) such that \(G(S,t)=1\) if S is contained in transaction t and \(G(S,t)=0\) otherwise. For example: in the case of itemsets, \({\mathcal {F}}\) is the set of (non-empty) subsets of a universe of binary features \({\mathcal {I}}\), and S is an element of \({\mathcal {F}}\); for subgraphs, \({\mathcal {F}}\) is the set of vertex-labelled graphs, while S is an element of \({\mathcal {F}}\) with the constraint that S is connected. Given a pattern S, we define its support \(x_S\) as the number of transactions containing S, that is \(x_S = \sum _{i=1}^n G(S,t_i)\). We denote by \(a_S\) the number of class 1 transactions containing S. In this work we assume that patterns enjoy the anti-monotonicity property, such that for any super pattern \(S'\) of S (i.e., \(S \subset S'\)) the support \(x_{S'}\) of \(S'\) is \(x_{S'} \le x_{S}\). This holds for itemsets, subgraphs, and many other kind of patterns.

The objective of significant pattern mining is to find patterns with significant statistical association to one of the two classes. In order to quantify the statistical association, a rigorous statistical test is performed. Such test assesses the association between the observations\(G(S,t_1),\ldots ,G(S,t_n)\) of variable G(S, t) (describing the presence of S in the transactions of dataset \({\mathcal {D}}\)) and the observed class labels \(c_i\) of the transactions, representing observations of the variable \(c \in \{0,1\}\) defining the label of a transaction. Since the variables are binary, for a given pattern S the \(2 \times 2\) contingency table represented in Table 1 is considered and the popular Fisher exact test (Fisher 1922) is often employed. Such test considers the marginals\((x_S,n_1,n)\) of the contingency table for pattern S to be fixed; under the null hypothesis of independence between variables G(S, t) and c, the number \(a_S\) of class 1 transactions containing S follows a hypergeometric distribution:

Using such distribution, the probability, called p value, of observing an association that is equally or more extreme than the one observed in the data under the null hypothesis can be computed. Smaller p values indicate more probable associations. The p value \(p_S\) for the pattern S is computed by summing all the probabilities to obtain, under the null hypothesis, contingency tables which are at least as extreme as the one observed in \({\mathcal {D}}\):

2.2 Multiple hypothesis testing

When only one pattern S is tested, it can be flagged as significant when its p value is smaller than a significance threshold\(\alpha \) fixed a priori. This guarantees that the probability of a false discovery (i.e., reporting S as significant when it is not) is bounded by \(\alpha \). However, if such approach is used when testing d hypotheses, the expected number of false positives is \(\alpha d\); when d is high, which is typically the case of significant pattern mining, this results in a large number of false positives. Therefore, an appropriate multiple hypothesis testing correction of the significance threshold needs to be performed in order to obtain rigorous guarantees in terms of the number of false associations reported in output.

One common approach is to perform a correction in order to bound the Family-Wise Error Rate (FWER), which is defined as the probability of reporting at least one false positive. Let FP be the number of false positives, then: \(\hbox {FWER} =\Pr (FP>0)\). For a given value \(\delta \), define \(FWER(\delta )\) as the FWER obtained using \(\delta \) as corrected significance threshold, that is by rejecting (i.e., flagging as significant) all null hypotheses (i.e., patterns) with p value \(\le \delta \). Commonly, it is not possible to evaluate \(FWER(\delta )\) in closed form. One approach to set \(\delta \) is to use the Bonferroni correction, setting \(\delta \) to \(\alpha / d\). Using the union bound, one can easily show that the resulting \(FWER(\delta )\le d\delta =\alpha \). The problem with this approach is that when d is high, \(\delta \) is very close to 0, resulting in low statistical power with many false negatives.

To increase the statistical power, more sophisticated techniques have been devised. In particular, one can look for an optimal corrected significance threshold \(\delta ^*\), which maximizes the statistical power while keeping the FWER bounded by \(\alpha \):

In this regard, a key result from Tarone (1990) is the introduction of minimum attainable p value: if we use a corrected significance threshold \(\delta \), patterns whose p value cannot be \(\le \delta \), called untestable, do not need to be considered in the multiple-hypothesis correction. Therefore, if we define \(k(\delta )\) as the number of patterns having minimum attainable p value \(\le \delta \), then the adjusted Bonferroni correction when threshold \(\delta \) is used can be written as \(\delta =\alpha / k(\delta )\).

LAMP (Terada et al. 2013a) introduced such concepts into the mining of significant patterns. In particular, it exploits the following observation. For a given pattern S, its p value \(p_S\) can be expressed as a function of \(a_S\) only. Since the set of allowable values of \(a_S\) is finite, i.e. \(a_S \in [a_{S,min}, a_{S,max}]\), there exists a minimum attainable p value \(\psi (x_S)\), which depends on \(x_S\), \(n_1\), and n, and which corresponds to the most biased case for Eq. 2:

Given a pattern S, its support \(x_S\), and a corrected significance threshold \(\delta \), if \(\psi (x_S) > \delta \) the pattern S cannot be significant; S is therefore called untestable. To use such observation for significant pattern mining, Terada et al. (2013a) introduced in LAMP a monotonically decreasing lower bound \({\hat{\psi }}(x_S)\) on \(\psi (x_S)\):

Terada et al. (2013a) showed that identifying a suitable significance threshold \(\delta ^*\) translates into finding the maximum support threshold \(\sigma _{\max }\) satisfying:

and

2.3 Westfall–Young permutation testing

LAMP significantly increases the statistical power of the over-conservative standard Bonferroni correction. However, it implicitly assumes that hypotheses are independent, that can result in a loss of power when there is dependence between the hypotheses, as in pattern mining. The Westfall–Young (WY) permutation testing method (Westfall and Young 1993) is a multiple hypothesis testing procedure capable of addressing this issue. This method performs random permutations of the class labels, creating new datasets for which no pattern S is truly associated with the permuted class labels. Since every pattern flagged as significant in the permuted datasets is a false positive, the joint distribution of the null hypotheses can be directly estimated, resulting in improved statistical power with respect to LAMP.

In detail, the WY method starts by creating \(j_p\) permuted datasets, with \(j_p\) sufficiently large (typically in the order of \(10^3\) or \(10^4\)). Then for every permuted dataset j it computes the minimum p value \(p^{(j)}_{\min }\) over all patterns (hypotheses). Then one can estimate \(FWER(\delta )\) as:

where \({\mathbb {1}}(\cdot )\) is the indicator function (equal to 1 if its argument is true and 0 otherwise). Given a user provided threshold \(\alpha \) for the FWER, the best corrected significance threshold \(\delta ^*\) can then be obtained as \(\delta ^* = \max _{\delta } \{FWER(\delta ) \le \alpha \}\) with \(FWER(\delta )\) estimated as above.

The WY method does not provide an efficient way of computing the set \(\{p^{(j)}_{\min }\}_{j=1}^{j_p}\) of minimum p values. Therefore, a naïve implementation requires to exhaustively test all the hypothesis on all the \(j_p\) permuted datasets. For significant pattern mining, this means exploring all the patterns appearing in a dataset; since this operation can require exponential time, it is even more challenging to repeat the entire process \(j_p\) times, once for every permuted dataset. Terada et al. (2013b) proposed the first efficient implementation, FastWY, of the WY procedure for significant pattern mining. The identification of \(\delta ^*\) is based on a decremental search scheme, which starts with support \(\sigma = n\) and iteratively decrements \(\sigma \) until an appropriate condition, guaranteeing that all values \(\{p^{(j)}_{\min }\}_{j=1}^{j_p}\) have been computed, is achieved. A more recent method by Terada et al. (2015), HWY, exploits a more efficient mining strategy and parallel computing to accelerate FastWY.

Llinares-López et al. (2015) proposed WYlight to efficiently compute the optimal value \(\delta ^*\) of the corrected significance threshold. The main improvement of WYlight is to avoid the exact computation of all the elements of the set \(\{p^{(j)}_{\min }\}_{j=1}^{j_p}\) and to only produce its exact lower \(\alpha \)-quantile. This result is obtained by maintaining an estimate of the \(\alpha \)-quantile that is only lowered through the mining process. WYlight performs a depth first exploration of the patterns’ search tree (Han et al. 2000) in which each pattern has support less or equal than its parent, and performs only one pattern mining instance, testing one pattern at a time and computing its p value on all the \(j_p\) permuted datasets at the same time. WYlight maintains a threshold \(\sigma \), initialized at 1, that is raised during the execution of the algorithm pruning patterns whose p values cannot be in the lower \(\alpha \)-quantile of \(\{p^{(j)}_{\min }\}_{j=1}^{j_p}\). This is achieved by using the lower bound \({\hat{\psi }}(\sigma )\) on the minimum obtainable p value for patterns of support \(\le \sigma \), which allows to effectively prune the search tree. However, during the computation of \(\delta ^*\), some patterns with support \(< \psi ^{-1}(\delta ^*)\) may be processed, due to the depth first procedure considered by WYlight. After computing \(\delta ^*\), an additional mining of \({\mathcal {D}}\) is performed (with minimum support threshold \(\psi ^{-1}(\delta ^*)\)) to extract the significant patterns with p value \(\le \delta ^*\). As shown in Llinares-López et al. (2015), WYlight significantly improves over FastWY in particular in terms of memory requirements, allowing the extraction of significant patterns from datasets larger than the ones that can be analyzed by FastWY.

2.4 Problem definition

For a dataset \({\mathcal {D}}\), let \(\delta (\alpha ) = \max _{\delta } \{FWER(\delta ) \le \alpha \}\) the threshold obtained through the WY permutation procedure when the bound on the FWER is set to \(\alpha \). Let \(p^{(k)}\) be the p value of the k-th pattern with patterns sorted by (increasing) p value. Given a dataset \({\mathcal {D}}\) and user-provided values k and \(\alpha \), our goal is to extract the set \(TSP({\mathcal {D}},k,\alpha )\) of top-k statistically significant patterns with FWER \(\le \alpha \), defined as:

Note that when less than k patterns have p value below \(\delta (\alpha )\), \(TSP({\mathcal {D}},k,\alpha )\) contains all such patterns. In addition, according to our definition more than k patterns may be in \(TSP({\mathcal {D}},k,\alpha )\), in case many have the same p value \(p^{(k)}\). In particular, for any two patterns \(S,S'\) with \(S' \subset S\) and \(x_{S'} = x_{S}, a_{S'} = a_{S}\) we have that \(p_S = p_{S'}\). For this reason we restrict our interest only to closed patterns, i.e. patterns whose supersets have support strictly lower than the pattern itself. Since the definition of closed pattern does not depend on the class labels, restricting to closed patterns does not bias any analysis.

The following result establishes the required guarantees on false positives in \(TSP({\mathcal {D}},k,\alpha )\) and it is a direct consequence of the fact that \(TSP({\mathcal {D}},k,\alpha )\) is a subset of all the patterns that would be reported using the WY method.

Lemma 1

The set \(TSP({\mathcal {D}},k,\alpha )\) has FWER \(\le \alpha \).

3 TopKWY Algorithm

In this section we present our algorithm TopKWY for mining the set \(TSP({\mathcal {D}},k,\alpha )\). We first present its main strategy (Sect. 3.1) that can be applied to any pattern mining problem. We then analyze TopKWY showing theoretical evidence of the efficiency of its strategy (Sect. 3.2) and introduce improved bounds on the minimum attainable p value used by TopKWY (Sect. 4). We also present extensions of TopKWY (Sect. 5) to control the generalized FWER, to control the False Discovery Proportion (FDP), and to employ different exploration strategies on the tree of candidate patterns. Finally, we introduce some crucial implementation details (Sect. 6), focusing on the problem of mining significant itemsets and subgraphs.

3.1 Main strategy

TopKWY combines two key ideas. First, it maintains an estimate of \(\delta _{m} =\min \{\delta (\alpha ), p^{(k)}\}\) that is updated during the exploration of the patterns and maintains a corresponding minimum support threshold \(\sigma = \psi ^{-1}(\delta _m)\) that is raised during the exploration of the patterns. Analogously to the strategy employed by WYlight (Llinares-López et al. 2015) the updates of \(\delta _{m}\) and \(\sigma \) depend on \(\alpha \), but in addition TopKWY updates them also depending on the p values of members of \(TSP({\mathcal {D}},k,\alpha )\). Second, the search tree of all possible patterns is explored in order of decreasing support, analogously to the strategy used by TopKMiner (Pietracaprina and Vandin 2007) for mining top-k frequent patterns, which guarantees that only patterns of support greater or equal to the final value of\(\sigma \) (i.e., \(\psi ^{-1}(\delta _m)\)) are explored.Footnote 1

TopKWY is described in Algorithm 1. In line 1, the threshold \(\delta _m\) is initialized to \(\alpha \) (the threshold with no correction for multiple hypothesis) and \(\sigma \) is initialized accordingly to \({\hat{\psi }}^{-1}(\delta _m)\). All the elements of the set of minimum p values \(\{p^{(j)}_{\min }\}_{j=1}^{j_p}\) observed on the permuted datasets are initialized to 1 (their maximum achievable value) in line 2. The labels of the permuted datasets are generated in line 3. The pattern exploration is organized using a priority queue Q where each entry represents a pattern S, with key equal to the support \(x_S\) and value representing all the information needed by the algorithm regarding S (e.g., \(a_S\)) and also with relevant information regarding the parent \(f_S\) of pattern S in the search tree (see Sect. 4). Q is initialized in line 4 and stores the frontier of unexplored patterns, keeping them accessible by non-increasing support. TopKWY stores patterns having p value \(\le \delta _{m}\) in a priority queue P, keeping them accessible by non-decreasing p value. This is the set of candidates for \(TSP({\mathcal {D}},k,\alpha )\), which are collected and produced in output as soon as possible during the exploration. This allows to reduce the memory requirements and to start analyzing the results during the exploration, without the need of waiting for the algorithm’s termination. The first patterns in Q are obtained by the expand(S,Q) operation on line 5 called on the empty pattern \(S=\varnothing \): this procedure generates all patterns children of the pattern S in the search tree (and their corresponding projected datasets), and inserts the ones of support \(\ge \sigma \) in the queue Q. The details of efficient implementations of expand are described in Sect. 6. The while loop (lines 6- 21) implements the main step of the exploration strategy: the most frequent pattern S, its support \(x_S\), its support \(a_S\) in the minority class of \({\mathcal {D}}\), and the relevant information for its parent \(f_S\) are extracted from Q in line 7. If the p value \(p_S\) of S is \(\le \delta _m\), then S is inserted in P in line 10. In line 8, \(\sigma '\) is set to \(x_S\), which is an upper bound to the support of all elements stored in Q. This quantity is used to identify patterns surely in the set \(TSP({\mathcal {D}},k,\alpha )\) without waiting for the final corrected significance threshold \(\delta _m\) to be found, done in lines 11 and 12. k is updated accordingly, reducing it to the number of patterns which still need to be found. In order to compute the corrected significance threshold \(\delta _m\), the algorithm computes the p values of pattern S in the \(j_p\) permuted datasets, updating the values of \(\{p^{(j)}_{\min }\}_{j=1}^{j_p}\) if needed. This operation is done with the test procedure. Similarly to WYlight, our algorithm processes all the \(j_p\) permutations for every pattern S at once, computing only the needed exact lower quantile of the set of minimum p values of the WY permutations, and not the minimum p values of every permuted dataset. Differently from WYlight, we use an improved lower bound \(\psi '(x_S,f_S)\) to the minimum attainable p value of S to decide (in line 13) whether to test S on the permuted datasets or not (see Sect. 4). This allows to skip the expensive computation of the \(j_p\) supports \(\{a_S^{(j)}\}_{j=1}^{j_p}\) of S on the minority class of the permuted datasets for several patterns S.

The significance threshold \(\delta _m\) is decreased during the exploration in two cases: when the estimated \(FWER(\delta _m)\) for the current threshold \(\delta _m\) increases above \(\alpha \) (line 15), or when more than k patterns with p value \(\le \delta _m\) are observed (line 17). The corresponding minimum support threshold \(\sigma \) is then updated accordingly in line 18. The correctness of these steps are proved in Sect. 3.2. After the update of \(\delta _m\) and \(\sigma \), elements which have become untestable are removed from Q in line 19, and elements which are not significant are removed from P in line 20. The current pattern S is expanded in line 21, and all its children having support \(\ge \sigma \) are inserted into Q. The exploration ends when Q gets empty. When this happens, all elements still contained in P with p value at most \(\delta _m\) are reported as significant in line 22.

The strategy employed by TopKWY can be adapted to incrementally update k for the same \(\alpha \), providing an interactive mining process. This can be achieved by providing a maximum value \(k^*\) in input to in Algorithm 1 to definitely prune untestable patterns, but freezing the computation after k patterns with p value below the current value of \({\hat{\psi }}(\sigma )\) have been found. If the user wants to increase k, the exploration can continue without restarting the entire mining instance.

3.2 Analysis

Some important properties of TopKWY algorithm can be formally stated. The first regards the correctness of the algorithm.

Theorem 1

(Correctness of TopKWY) TopKWY outputs the set \(TSP({\mathcal {D}},k,\alpha )\) of top-k significant patterns with \(FWER \le \alpha \).

Proof

The correctness of TopKWY follows from two observations: first, the final threshold \(\delta _m\) obtained by the algorithm is correct; second, only patterns with p value less or equal than the final value of \(\delta _m\) are produced in output. We start by proving the first statement. \(\delta _m\) is initialized to the value \(\alpha \), that is the uncorrected threshold for significance and is always \(\ge \delta ^*\). \(\delta _m\) is decreased (and the corresponding minimum support threshold \(\sigma \) is increased) during the exploration in two cases. The first case (line 15) is when the estimated \(FWER(\delta _m)\) for the current threshold \(\delta _m\) increases above \(\alpha \). This means that more than \(\alpha j_p\)p values of \(\{p^{(j)}_{\min }\}_{j=1}^{j_p}\) are below the current significance threshold \(\delta _m={\hat{\psi }}(\sigma )\), which allows for too many false positives, and the FWER is not correctly controlled to the level \(\alpha \). \(\delta _m\) is then updated to the highest value of \(\delta \) for which \(FWER(\delta ) \le \alpha \). The second case is when more than k patterns with p value \(\le \delta _m\) are observed (line 17). In this case, let \({\tilde{p}}\) be the highest p value of the k most significant patterns observed up to this point. Then all patterns of support \(< {\hat{\psi }}^{-1}({\tilde{p}})\) cannot result in a p value \(< {\tilde{p}}\) and therefore we need to consider (both in \({\mathcal {D}}\) and in the permuted datasets) only patterns of support at least \({\hat{\psi }}^{-1}({\tilde{p}})\). That is, the minimum support threshold \(\sigma \) can be safely increased to \({\hat{\psi }}^{-1}({\tilde{p}})\) with a corresponding significance threshold \({\tilde{p}}\). When \(\delta _m\) is last updated, its value will then be equal to the minimum between \(\delta (\alpha )\) and \(p^{(k)}\).

We now prove the second statement. This is trivially correct for patterns produced in output by line 22. We then consider patterns produced in output in line 11. Note that the current pattern S has support \(\sigma '\) and the search strategy employed by TopKWY guarantees that all patterns with support \(> \sigma '\) have already been explored. Therefore, from this point on the algorithm will never encounter p values \(< {\hat{\psi }}(\sigma ')\) and therefore the corrected significance threshold \(\delta _m\) will be \(\ge {\hat{\psi }}(\sigma ')\). Thus all patterns in P with p value \(< {\hat{\psi }}(\sigma ')\) can be safely produced in output (and removed from P). \(\square \)

The following result provides theoretical guarantees on which patterns will be explored by TopKWY, providing analytical evidence of the efficiency of our strategy.

Theorem 2

(Optimality of TopKWY) TopKWY expands only patterns of support \(\ge {\hat{\psi }}^{-1}(\delta _m) \).

Proof

Similarly to the proof of Theorem 1, when pattern S of support \(\sigma '\) is extracted from Q, we are guaranteed that the algorithm will never encounter p values \(< {\hat{\psi }}(\sigma ')\) again. Therefore the corrected significance threshold \(\delta _m\) will be \(\ge {\hat{\psi }}(\sigma ')\), that is \(\sigma ' \ge {\hat{\psi }}^{-1}(\delta _m)\) (i.e., S is testable). \(\square \)

We now show that in a simplified model for how p values are obtained, there exists a family of datasets for which the expected difference between the number of patterns explored by a DFS strategy and the number of patterns explored by TopKWY is exponential in the size of the dataset. In the simplified model, the p values obtained by random permutations are uniformly distributed in (0, 1]. (Note that we do not assume independence among p values from different itemsets.) Consider now the family of datasets \({\mathcal {D}}_n = \{t_1,\ldots ,t_n\}\) defined on the set of (binary) features \({\mathcal {I}} = \{i_1,\ldots ,i_n\}\) where \(t_j= {\mathcal {I}} \setminus \{i_j\}\). Moreover, half of transactions in \({\mathcal {D}}_n\) have label 0 while the other half have label 1.

Theorem 3

Consider a dataset \({\mathcal {D}}_n\) from the family described above. Let \(\varepsilon \) be a constant such that \(0 < \varepsilon \le \frac{1}{2}\) and \(\varepsilon n \in {\mathbb {N}}\). Assume that the choice of \(\alpha \) and k is such \(\delta ^*={\hat{\psi }}(\varepsilon n) ={\frac{n}{2} \atopwithdelims ()\varepsilon n}/{n \atopwithdelims ()\varepsilon n}\), and that \(j_p\) random permutations are used. Let X be the difference between the number of patterns explored by a DFS strategy and the number of patterns explored by TopKWY in the simplified model above. Then \({\mathbb {E}}[X] = {{\varOmega }}\left( 2^{\varepsilon n}/j_p\right) \).

Proof

Note that in such dataset all patterns are closed. Let W be the set of patterns explored by TopKWY. The DFS strategy has to explore all the patterns in W (since they are testable). We now show that it will also explore, in expectation, \({{\varOmega }}\left( 2^{\varepsilon n}/j_p\right) \) additional patterns, that proves the statement.

It is easy to show that since \(\varepsilon n \in {\mathbb {N}}\) and \(\delta ^* = {\frac{n}{2} \atopwithdelims ()\varepsilon n}/{n \atopwithdelims ()\varepsilon n}\), the set of testable patterns W is given by all patterns of size \(\le \varepsilon n\). For each pattern \(S_i\) and each j with \(1 \le j \le j_p\), let \(X_{ij}\) be the random variable that is 1 if \(S_{i}\) has p value \(\le \delta ^*\) in the j-th permuted dataset, and 0 otherwise. Note that \(S_{ij}\) is a Bernoulli random variable of parameter \(\delta ^*\), for all i and j. Let Y be the number of p values lower than \(\delta ^{*}\), assuming that the DFS has explored \(\varepsilon n + m\) patterns. The expectation of Y is

Note that requiring \({\mathbb {E}} \left[ Y \right] \ge 1\) provides a lower bound to the number of p values needed for the DFS to establish that \(\delta ^* = {\frac{n}{2} \atopwithdelims ()\varepsilon n}/{n \atopwithdelims ()\varepsilon n}\), since \(\alpha j_p\)p values below such threshold must be observed.

Let \(v=\varepsilon n\). The condition \({\mathbb {E}} \left[ Y \right] = (\varepsilon n + m) j_{p} \delta ^{*} \ge 1\) implies

or, equivalently

Note that since the set of testable patterns includes all patterns of size \(\le \varepsilon n\), all the \({{\varOmega }}\left( 2^{\varepsilon n }/j_p\right) \) patterns explored by the DFS after exploring the first \(\varepsilon n \) patterns and before observing the first p value below \(\delta ^*\) have size \(> \varepsilon n\) and, thus, are not in W, which proves the statement. \(\square \)

Compared to a depth first search (DFS) exploration strategy (i.e., the one employed by WYlight), the best first exploration strategy followed by TopKWY has the additional costs required by operations involving data structures P and Q. P can be implemented as a heap of entries \((p,\ell _{p})\), where the key p is a p value and the value \(\ell _{p}\) is a list of patterns which contains all the patterns with the same p value p. With such implementation, operations involving P (lines 10, 11, 16, and 20) can be perfomed with \({O}\left( \log k\right) \) operations, since P will contain at most k entries. Analogously, Q can be implemented as a heap of entries \((x,\ell _x)\), where the key x is a support and the value \(\ell _x\) is a list of patterns with the same value of \({\hat{\psi }}(x)\) (that is, having the same support x). With such implementation, operations involving Q (lines 7, 19, and 21) can be performed with \({O}\left( \log n\right) \) operations (n is the number of transactions in \({\mathcal {D}}\)). Alternatively, P and Q can be implemented so that all operations require time \({O}\left( 1\right) \) by storing references to lists \(\ell _{p}\) and \(\ell _x\) in arrays of size \({O}\left( n^2\right) \) (since the p value of S is a function of the support \(x_S\) of S and the number \(a_S\) of transactions with label 1 and containing S) and \({O}\left( n\right) \), respectively. (Note that this additional space requirement is not impractical, since \({O}\left( n j_{p}\right) \) space is needed to store the random permutations.) We also note that computing the improved lower bound \(\psi '(x_{S},f_{S})\) (line 13), has the same cost as computing the lower bound \({\hat{\psi }}(x_{S})\) to the p value used in WYlight (see Sect. 4). Even with the additional costs required by P and Q, the best first strategy of TopKWY leads to significant improvements in running time, as demonstrate by our experimental evaluation (Sect. 7).

4 Improved bounds on minimum attainable p value

In this section we prove novel and efficiently computable lower bounds on the minimum p value achievable by a pattern S that are tighter than the ones introduced by LAMP (Terada et al. 2013a) and are of particular interest in the context of WY permutation testing. These bounds are based on information computed when processing a parent patternY of S; in the case of itemsets, Y is a parent of S (or, alternatively, S is a child or a super pattern of Y) when \(Y \subset S\). Such bounds can be used to skip the expensive processing of the permutations for S when they ensure that it is not possible to improve the current estimate of the corrected significance threshold. While we present these bounds as a critical component of TopKWY, they may be of independent interest since can be employed in WYlight or similar algorithms to speed-up WY permutation testing.

Let the pattern S be a super pattern of Y, that is \(S \supset Y\). Then \(x_Y \ge a_Y \ge 0\) and \(x_Y \ge x_S\). Since the set of transactions (i.e., the conditional dataset) containing S is a subset of the set of transactions containing Y, we can bound the support \(a_S\) of S in the class \(c_1\) with the following relations:

Considering the \(j_p\) permuted class labels, let \(a_Y^{(j)}\) be the number of transactions containing Y and in the minority class (i.e., \(a_Y^{(j)}\) is the value of \(a_Y\) when the class labels are given by the j-th permutation).An analogous relation holds between \(a_S^{(j)}\) and \(a_Y^{(j)}\), for all j:

An immediate consequence of these bounds on \(a_S^{(j)}\) are lower bounds to \(p_{S}(a_S^{(j)})\).

Lemma 2

Let \({\check{a}}_{S}^{(j)} = \max (a_Y^{(j)}-(x_Y-x_S),0)\) and \({\hat{a}}_{S}^{(j)} = \min (x_S, a_Y^{(j)})\). Then, for all \(j \in [1, j_{p}]\),

This result suggests that if we have already computed \( a_Y^{(j)} , \forall j \in [1, j_{p}]\) while processing the permutations of Y, we could skip the expensive computation of \(a_S^{(j)}\), and, therefore, \(p_{S}(a_S^{(j)}), \forall j \in [1, j_{p}]\), in situations when the lower bounds to \(p_{S}( a_S^{(j)} )\) are greater than the current value of the corrected significance threshold. In the following, we present a bound valid for all \(p_{S}(a_S^{(j)})\) simultaneously, that is a function of only the minimum and maximum elements of \(a_{Y}^{(j)}\), instead of all of them. Let

and

Then, \(\forall j \in [1,j_{p}]\) we bound \(a_S^{(j)}\) as:

This allows to compute a bound \(\psi '(x_S,x_Y,a_{Y_{\min }}, a_{Y_{\max }})\) to the minimum attainable p value of S that is tighter than \(\psi (x_S)\):

The bound in Eq. 4 is evaluated in constant time, assuming \(p_{S}(a)\) is pre-computed for all valid values of a, as done in WYlight and in TopKWY to efficiently implement the test function. The following are simple consequences of Lemma 2 and the fact that \(a_{S_{\min }}\) and \(a_{S_{\max }}\) are always equally or more tight than the naive bounds on \(a_S\) assumed by \(\psi (x_S)\).

Lemma 3

\( \min \left\{ p_S \left( a_{S}^{(j)}\right) : j \in [1,j_{p}] \right\} \ge \psi '(x_S,x_Y,a_{Y_{\min }}, a_{Y_{\max }}) \ge \psi (x_S).\)

If for the current value of the significance threshold \(\delta _m\) it holds that \(\psi '_S>\delta _m\), then we can infer, without computing \(\{a_S^{(j)}\}_{j=1}^{j_p}\), that none of the \(j_p\)p values of S in the permuted datasets will improve the estimate of the current lower-quantile of the set \(\{p^{(j)}_{\min }\}_{j=1}^{j_{p}}\) and therefore cannot contribute to the computation of \(\delta (\alpha )\) or \(\delta _{m}\). That is, all the computation on the permuted datasets can be skipped for the current pattern S. For all children of S, if S is not tested the bounds \(a_{S_{\min }}\) and \(a_{S_{\max }}\) can be propagated to compute bounds also on their class distribution; if S is tested, then we propagate the actual minimum and maximum values of \(\{a_S^{(j)}\}_{j=1}^{j_p}\). In Algorithm 1 we use the bound above with the values propagated by the parent \(f_S\) of S and use \(\psi '(x_S,f_S)\) to highlight this fact. This optimization is particularly effective when patterns have a high degree of correlation, i.e., when patterns share many transactions.

Note that even if S does not need to be tested, descendants of S may need to be tested. However, using the bound \(\psi '(\cdot )\) we can quickly identify cases in which none of the descendants of S need to be explored and therefore the entire subtree can be pruned. In particular, since all the descendants of S will have support \(\le x_S - 1\), considering \(a_S\) (i.e., the number of transactions containing S and in the minority class in the dataset \({\mathcal {D}}\)), the algorithm can find \(\min \{ \psi '(i,x_S,a_{S_{\min }}, a_{S_{\max }}): i \in [\sigma ,x_s-1] \}\), and if such value is \(> \delta _m\) we can prune all the search subtrees rooted in the children of S. This optimization is part of the expand operation in TopKWY. These novel bounds consider the information of one common ancestor pattern to avoid useless computations for many of its children: in practice, the number of tests to perform across the permuted datasets can be significantly smaller than the number of testable patterns, leading to a significant computational speed-up. The approach above can extended to bound \(\min \left\{ p_S \left( a_{S}^{(j)}\right) : j \in [1,j_{p}] \right\} \) by considering the information computed on the intersection of the conditional datasets of any pair of patterns S and Y, even if \(S \not \supset Y\).

Another possible extension of the techniques we derive in this section is to consider not only one pair\(( a_{Y_{\min }} , a_{Y_{\max }})\), but vpairs\((a_{Y_{\min }}^{i} , a_{Y_{\max }}^{i})\), for all \(i \in [1,v]\): for each i, we define the set \(J_{i}\) as a subset of \(\{ 1 , \ldots , j_{p} \}\), with \(\bigcup _{i=1}^{v} J_{i} = \{ 1 , \ldots , j_{p} \} \). For all i, we bound all values \(a_{Y}^{(j)}\) for all \(j \in J_{i}\) with \(a_{Y_{\min }}^{i}\) and \(a_{Y_{\max }}^{i}\). If we define

then we simply obtain

This provides a trade-off between the memory (required to store the 2v bounds on the expanded nodes of the search space) and the time to evaluate the bound (that is linear in the number v of sets instead of constant), and the time the algorithm saves by skipping computations of the permutations, that is a direct consequence of how tight the bounds on the p values are; in fact, the permutations that have to be processed are only the ones belonging to the sets \(J_{i}\) such that \(\min \left\{ p_{S}\left( a_{S_{\min }}^{i} \right) , p_{S}\left( a_{S_{\max }}^{i} \right) \right\} \) is not higher than the current value of the significance threshold.

5 Extensions of TopKWY

5.1 Controlling the generalized FWER

While the main focus of TopKWY is to control the FWER of the output, a simple modification provides an algorithm to control the generalized FWER (g-FWER Lehmann and Romano 2012). The g-FWER is defined as the probability that at least g false positives are reported in output. In several applications one may be willing to tolerate a small amount of false discoveries in order to increase the power of detecting significant patterns, provided the number of false discoveries can be controlled. In such cases methods to discover significant patterns while controlling the g-FWER are preferred to methods controlling FWER.

Let FP be the number of false positives, then: g-FWER\(=\Pr (FP\ge g)\). Let g-\(FWER(\delta )\) be the g-FWER obtained using \(\delta \) as corrected significance threshold, that is by flagging as significant patterns with p value \(\le \delta \). Note that the Westfall–Young procedure can be used to estimate g-\(FWER(\delta )\) as

where \(p^{(j)}_{g}\) is the g-th smallest p value (over all patterns) in the permuted dataset j.

Algorithm 1 can be simply modified to obtain the set of top-k significant patterns with g-FWER \(\le \alpha \). To achieve this, it is sufficient to perform the following changes: replace test(S , \(\{p^{(j)}_{\min }\}_{j=1}^{j_p}\)) (line 14) with test(S , \(\{p^{(j)}_{g}\}_{j=1}^{j_p}\)), where test(S , \(\{p^{(j)}_{g}\}_{j=1}^{j_p}\)) computes the p value of pattern S in the \(j_p\) permuted datasets and updates the values of \(\{p^{(j)}_{g}\}_{j=1}^{j_p}\) if needed; replace \(\max \{\delta : FWER(\delta ) \le \alpha \}\) (line 15) with \(\max \{\delta : g\text {-}FWER(\delta ) \le \alpha \}\). Let TopKWY-g be such modified algorithm. We have the following.

Lemma 4

TopKWY-g outputs the set \(TSP({\mathcal {D}},k,\alpha )\) of top-k significant patterns with g-\(FWER \le \alpha \).

The proof is analogous to the proof of Theorem 1.

In addition to finding the top-k most significant pattern with bounded g-FWER, with g provided in input, TopKWY-g can be adapted to a different scenario. In this case, one may want to retrieve the k most significant patterns, using the random permutations to obtain a rigorous estimate of how many of such results are likely to be false positives. More formally, for k and \(\alpha \) provided by the user, one may be interested in computing the quantity \(g^{\star }\) defined as

that is the mininum value of g such that the g-FWER is controlled when the significance threshold is \(p^{(k)}\), that is the highest p value of the set of top-k results we extracted. \(g^{\star }\) provides useful knowledge on the quality of the set of top-k results provided to the user. In this situation the user is not required to fix a-priori g before examining the data. Further simple modifications to TopKWY-g are sufficient to obtain such variant, that are: remove line 15 and replace line 22 with “produce in output \(\{S' \in P: p_{S'} \le \delta _m \}\) and \(g^{\star } = \min \left\{ g : g\text {-}FWER(p^{k}) \le \alpha \right\} \)”. Let TopKWY\(^{\star }\) be such modified algorithm; we obtain the following guarantees.

Lemma 5

TopKWY\(^{\star }\) outputs the set of patterns \(\left\{ S : p_{S} \le p^{k} \right\} \) of top-k significant patterns and \(g^{\star } = \min \left\{ g : g\text {-}FWER(p^{k}) \le \alpha \right\} \).

5.2 Bounding the proportion of false discoveries

The False Discovery Proportion (FDP) (van der Laan et al. 2004; Lehmann and Romano 2012) of a set of hypotheses \({\mathcal {P}}\) is defined as the ratio \(FD / |{\mathcal {P}}|\), where FD is the (unknown) number of false discoveries \(\in {\mathcal {P}}\); note that when \(|{\mathcal {P}}| = 0\), the FDP is assumed to be 0. Let \(\zeta , \alpha \in (0,1)\) and \(k,g \in [1 , +\infty )\). Define the set \({\mathcal {P}}\) such that all the following hold:

It is possible to prove that a set \({\mathcal {P}}\) satisfying the above guarantees has size at most k and \(FDP \le \zeta \) with probability \(\ge 1 - \alpha \). Simple modifications to TopKWY-g lead to an algorithm that outputs \({\mathcal {P}}\) with the aforementioned guarantees: remove lines 11 and 12, replace line 15 with “\(\delta _{m} \leftarrow \max \{\delta : (\left\lfloor k\zeta \right\rfloor )\text {-}FWER(\delta ) \le \alpha \}\)” and line 22 with “produce in output \({\mathcal {P}}(\delta ^{*}) = \{S' \in P: p_{S'} \le \delta ^{*} \}\) where \(\delta ^{*} = \max \left\{ \delta : ( \left\lfloor \zeta |{\mathcal {P}}(\delta )| \right\rfloor )\text {-}FWER(\delta ) \le \alpha \right\} \)”. Let such algorithm be TopKWY-\(\zeta \). We obtain the following result.

Lemma 6

TopKWY-\(\zeta \) outputs the set of patterns \({\mathcal {P}}\) of size at most k with False Discovery Proportion \(\le \zeta \) with probability \(\ge 1 - \alpha \).

5.3 Alternative exploration strategies

While TopKWY builds on examining the search tree of all possible patterns in order of decreasing support, i.e. with a best first strategy analogous to the one used by TopKMiner (Pietracaprina and Vandin 2007) for mining top-k frequent patterns, it can also be modified to efficiently obtain the set \(TSP({\mathcal {D}},k,\alpha )\) using different exploration strategies, e.g. a level-wise exploration of the search tree [performed, e.g., by the Apriori algorithm (Agrawal and Srikant 1994) for itemsets] or a depth first search on the tree of all possible patterns (Uno et al. 2005; Nijssen and Kok 2004). This can be achieved by setting \(\sigma '\) to \(\max \left\{ x_{S'} : S' \in Q \right\} \) (instead that to \(x_{S}\)) in line 8 of Alg. 1 and by an appropriate choice of the priority for patterns in the priority queue Q, that stores the frontier of unexplored patterns: to obtain a level-wise exploration for itemsets, the priority of pattern S is set to the total number \(|{\mathcal {I}}|\) of items minus |S|; to obtain a depth first search, the priority of patterns S is set to its level in the search tree.

While for strategies other then the best first one the optimality (Theorem 2) is not guaranteed, the possibility to employ other strategies allows to obtain the top-k significant patterns starting from efficient implementations of frequent pattern mining algorithms that build on such strategies for various types of patterns [e.g., subgraphs (Nijssen and Kok 2004)].

6 Implementation details

An efficient implementation of expand and test procedures is critical for the efficiency of TopKWY. This crucially depends on the representation of \({\mathcal {D}}\) and the permuted class labels, and both depend on the type of patterns of interest. In Sects. 6.1 and 6.2 we now describe in more details the implementations for significant itemsets mining and for significant subgraphs mining; in particular, for significant itemsets we discuss the implementation of TopKWY as described in Sect. 3.1 as well as the variant, described in Sect. 5.3 of TopKWY based on the DFS strategy, which we denote by TopKWY-dfs; for significant subgraphs we only consider the implementation of TopKWY-dfs.

6.1 Significant itemset mining

Our implementation of TopKWY is based upon TopKMiner (Pietracaprina and Vandin 2007), which mines top-k frequent closed itemsets. As for TopKMiner, TopKWY uses a PatriciaTrie (Zandolin and Pietracaprina 2003) to store a compact representation of the dataset \({\mathcal {D}}\) in which transactions sharing the same prefix are represented by the same node in the tree. The conditional dataset of (i.e., the set of transactions containing) an itemset Y is stored as a list \(m_Y\) of nodes of the PatriciaTrie. An additional counter is added to every node, representing how many transactions with prefix represented by the node belong to the class 1. The same is done for the \(j_p\) permutations adding \(j_p\) counters to each node in the trie. Since the PatriciaTrie is built adding one transaction at a time, a technique similar to reservoir sampling is used to generate the \(j_p\) permuted labels of every transaction. Let \({\mathbf {r}}\) be a vector of length \(j_p\), with all components \(r^{(j)}, j=1,\ldots ,j_p\) of \({\mathbf {r}}\) initialized to \(n_1\), the number of transactions to assign to the class \(c_1\) for every permutation of index j. For every transaction \(t_{i}\), with \(i \in [1 , n]\), the j-th label of \(t_{i}\) is assigned to \(c_{1}\) with probability \(r^{(j)} / (n-i+1)\), \(c_{0}\) otherwise. If \(c_1\) is chosen, then \(r^{(j)}\) is decreased of one. This method guarantees that the total number of transactions with label \(c_{1}\) will be \(n_1\) for every \(j \in [1,j_p] \) and that the labels of the j-th permuted dataset are obtained by a random permutation of the class lables.

Our implementation of TopKWY-dfs is based upon LCM 3 (Uno et al. 2005), which mines frequent closed itemsets using a depth first strategy. The third version of LCM combines various techniques and data structures to accelerate the generation and the computation of the frequencies of frequent closed itemsets.

6.2 Significant subgraph mining

Our implementation of TopKWY-dfs relies on Gaston (Nijssen and Kok 2004) to mine significant subgraphs. Gaston first considers simple patterns, such as paths and trees, since efficient techniques for isomorphism checking are available for such acyclic structures. Only after this first phase, denoted as “quickstart”, general subgraphs, containing cycles, are evaluated. The search strategy of Gaston relies on a depth first enumeration of subgraphs. We do not provide a subgraph variant of TopKWY because no competitive algorithms based on a best first exploration strategy are currently available (Wörlein et al. 2005; Nijssen and Kok 2006).

7 Experimental evaluation

We implemented and tested TopKWY and TopKWY-dfs for the extraction of significant itemsets and significant subgraphs. Our experimental evaluation has three goals. First, to assess the number of significant patterns found in real datasets. Second, to evaluate the performance of TopKWY: since no other tool for the extraction of top-k significant patterns exists, we compare TopKWY and TopKWY-dfs with the state-of-the-art tool for significant pattern mining, WYlight (Llinares-López et al. 2015). While the techniques introduced in this work can be extended to other multiple hypothesis testing procedures, such as LAMP (Terada et al. 2013a), we do not compare with LAMP or derived strategies (Minato et al. 2014) since (Llinares-López et al. 2015) shows that WY permutation testing results in higher power. Third, to assess the impact of our improved bounds and implementation choices on performances.

In Sect. 7.1 we describe the implementation and computational environment for our experiments. In Sect. 7.2 we describe the datasets we used. In Sect. 7.3 we describe the experiments we have performed and our choice of parameters. Finally, in Sect. 7.4 we report and discuss the results of our experiments.

7.1 Implementation and environment

We implemented TopKWY in C++ as an extension of the TopKMiner algorithm (Pietracaprina and Vandin 2007). For TopKWY-dfs we modified the C implementation of WYlight (based on LCM (Uno et al. 2005) and Gaston (Nijssen and Kok 2004)) made available by the authors at https://github.com/fllinares/wylight. All implementations were compiled with gcc 4.8.4. Our experiments have been performed on a 2.30 GHz Intel Xeon CPU machine with 512 GB of RAM, running on Ubuntu 14.04. Our code and scripts to replicate all experiments described in the paper are available at https://github.com/VandinLab/TopKWY.

7.2 Datasets

For itemsets mining, we performed our experiments using 19 datasets: the 10 largest ones used in Llinares-López et al. (2015) and available at FIMI’04Footnote 2 and UCIFootnote 3, all the datasets used in Komiyama et al. (2017), available from the libSVM repositoryFootnote 4, and 4 additional ones (a9a, bms-web1, accidents, susy) available from libSVM, FIMI’04, and SPMFFootnote 5. The datasets’ statistics are in Table 2. For each dataset, we also note if it already contained class labels (L) or not (U). For unlabeled datasets we simulated a typical analysis requiring to find itemsets correlated with a given item (feature) in a dataset. For every unlabeled dataset we selected the single item whose frequency is closer from below to 0.5, removed the corresponding item from every transaction, and use its appearance to define the target class label. The reported ratio \(n_1/n\) for the minority class of unlabeled datasets refers to the output of this labeling process. For real-valued features we obtained two bins by thresholding at the mean value and using one item for each bin [analogously to Komiyama et al. (2017)].

For subgraphs mining, we considered 11 of the largest datasets with binary target labels available from a repositoryFootnote 6 of benchmark datasets; most of them are also analysed by Llinares-López et al. (2015). The datasets’ statistics are in Table 3. In some cases, we bounded the maximum number of vertexes of the subgraphs that are explored by Gaston, in order to obtain practical running times for the experiments. Such limits are reported in the parenthesis after the dataset’s name in Table 3.

7.3 Parameters and experiments

For TopKWY and TopKWY-dfs we considered \(k=10^{i}\) for \(i \in [1,6]\). For all the datasets we analyzed, we ran TopKWY and TopKWY-dfs, for all such values of k, and WYlight. We fixed the number of permutations \(j_p=10^4\), shown to be a good choice in Llinares-López et al. (2015), and fixed the commonly used value \(\alpha =0.05\) as FWER threshold. For the comparison between TopKWY, TopKWY-dfs, and WYlight, we repeated every experiment 10 times, recording the running time and peak memory provided by the operating system; we report the averages over the 10 runs, standard deviations are negligible and therefore not shown. The measures reported for TopKWY include the time and space to retrieve statistically significant patterns and write them on file, while for TopKWY-dfs and WYlight we only report the time and space needed to find the optimal significance threshold, which corresponds to the first step of the method, therefore reporting a lower bound to their runtimes. We stopped the execution of an algorithm if it did not conclude after (at least) one month of computation; for these cases, the indicated time and peak memory are lower bounds. For experiments testing the impact of parameters or implementation choices on TopKWY we used only one execution.

7.4 Results

7.4.1 Itemsets mining

Table 2 reports the number of significant patterns for \(\alpha =0.05\) in the datasets we considered, obtained by running TopKWY (with \(k=+\infty \)) or WYlight. For some datasets we stopped the computation after 1 month, so only a lower bound is available. In most cases, the number of significant patterns is extremely large: for 11 out of 19 datasets there are \(>5\times 10^5\) significant patterns and in 7 datasets there are \(>10^7\) significant patterns. Therefore a direct way to limit the number of significant patterns in output, as provided by the top-k significant patterns, is required.

Figure 1 compares the running time of TopKWY and WYlight. Note that for the 11 datasets in which the number of significant patterns is \(< 10^6\), TopKWY with \(k=10^6\) identifies all the significant patterns and produces the same patterns found with WYlight. For 15 out of 19 datasets, TopKWY (with \(k=10^{6}\)) is faster than WYlight by a factor at least 2. For 9 datasets TopKWY is faster than WYlight by at least one order of magnitude, and for 6 datasets WYlight requires > 11 days while TopKWY identifies up to the \(10^6\) most significant patterns within one day and the \(10^4\) most significant ones in few hours. Even for the datasets where TopKWY identifies all significant patterns, producing the same patterns as WYlight, TopKWY is always faster than WYlight, with up to one order of magnitude speed-up in some cases. This shows that TopKWY is an effective tool to identify all significant patterns whenever possible and enables the analysis of significant patterns when their number is extremely high. For datasets in which the number of significant patterns is \(>10^6\) we ran TopKWY with \(k=\infty \) to compare its strategy for finding the corrected significance threshold for all significant patterns with the one used by WYlight. The runtime of TopKWY is always lower than the runtime of WYlight by at least \(20\%\), with a significant speed-up in some case (e.g., for chess, TopKWY terminates in 2 days, while WYlight needs more than 10 days). These results show that TopKWY outperform the state-of-the-art even for this task.

Figure 2 compares the peak memory required by TopKWY and WYlight. Given the best first strategy employed by TopKWY, we expected its memory requirement could be higher than WYlight, that follows a depth first strategy. Interestingly, only in three cases TopKWY required 1 order of magnitude more memory than WYlight and in both such cases the requirements are reasonable (\(\le 20\) GBs) for current machines. However, in such cases WYlight required \(> 11\) days to complete, while TopKWY terminated in \(<1\) day, showing that, by using a reasonably larger amount of memory than WYlight, TopKWY renders the identification of significant patterns feasible. In all other cases the memory requirement of TopKWY is either the same or within few GBs of WYlight. For some datasets TopKWY requires significantly less memory than WYlight: surprisingly this happens for datasets (cod-rna, covtypes) on which TopKWY reports the same significant patterns as WYlight (i.e., all significant patterns). In some cases, memory usage decreases slightly when k increases, due to our dynamical allocation of the p values lookup table that may require less space when the minimum support decreases.

We investigated the impact of our implementation choices on the memory requirement of TopKWY (Fig. 3). We compared the space required to store the permuted labels on all the nodes of the PatriciaTrie used by TopKWY (see Sect. 6.1) with the space required by storing the permuted labels for each transaction (as done for example by WYlight). Since TopKWY stores, for each node of the Patricia Trie, a list of \(j_p\) values (i.e., the number of transactions with minority label among the ones sharing the prefix corresponding to the node), one transaction may have more than \(j_p\) values associated to its nodes. In most cases the space required by the two methods is essentially the same, but in three cases the use of the Patricia Trie corresponds to a significant reduction in the memory used. In particular, these three cases are for datasets in which TopKWY identifies all the significant patterns using less memory than WYlight, providing strong evidence of the importance of our encoding of the permuted class labels.

We compared the exploration strategies used by TopKWY and by WYlight by recording the number of patterns they test (Fig. 4), restricting to datasets in which WYlight terminates. In all cases, TopKWY tests a lower number of patterns than WYlight, with differences of almost two orders of magnitude for some datasets. This shows the effectiveness of our exploration strategy and of our novel bounds \(\psi '(\cdot )\) (see Sect. 4) on reducing the number of tests to perform.

We then directly investigated the impact of our novel bounds on the runtime of TopKWY. We compared the running time of WYlight with the running time of two variants of TopKWY: one using our improved bound \(\psi '(\cdot )\) and one using the LAMP bound \({\hat{\psi }}(\cdot )\) (i.e., the same bound used by WYlight). The results for some representative datasets are in Fig. 5a. The results for the other datasets are similar. We observed that, for all datasets other than chess, the exploration strategy employed by TopKWY to extract only the top-k significant patterns already provides a substantial (up to more than one order of magnitude) improvement in the running time of TopKWY with respect to WYlight, even using the same LAMP bounds. When our novel bound \(\psi '(\cdot )\) is used in TopKWY we observe additional speed-ups, for a total up to more than two orders of magnitude. Therefore, the reduction in the number of patterns that need to be tested on the permuted datasets, obtained by the exploration strategy of TopKWY and our improved bound, is a crucial component for the performance of TopKWY.

Finally, we assessed the impact of \(\alpha \) and \(j_p\) on the running time of TopKWY and WYlight on two representative datasets, cod-rna and accidents, which are representative for the two scenarios of a small number of significant patterns (cod-rna) and of a large number of significant patterns (accidents). In these experiments we fixed \(k=10^4\). Figure 5b reports the results for cod-rna. Results for accidents are not reported since the running time of accidents remained essentially the same for all values of \(\alpha \) and \(j_p\). This means that for accidents using the bounds introduced in Sect. 4 the computational effort is dominated by the pattern space exploration (and not the evaluation of the permuted datasets): considering only the top-k significant patterns is therefore crucial to analyze such dataset. For cod-rna, we observe that varying \(\alpha \) has some but small impact on the runtime of both methods while there is a linear dependence of the running time of WYlight on \(j_p\) and a similar but less pronounced dependence of TopKWY. In all cases, TopKWY is faster than WYlight (for accidents WYlight does not terminate within 1 month) showing the efficiency of TopKWY for different ranges of the \(\alpha \) and \(j_p\) parameters.

Comparison between Best First and Depth First strategies. We investigate the impact of the best first strategy adopted by TopKWY on the computational performances of the mining tasks. To do so, we compared TopKWY with TopKWY-dfs, the variant of TopKWY, which explores patterns in depth first order (see Sect. 5.3). We ran TopKWY-dfs on the same set of experiments described in Sect. 7.3. Fig. 6 shows the running times of TopKWY, TopKWY-dfs, and WYlight. We can clearly see that, for 9 datasets out of 19, there is a significant difference in the running times of TopKWY and TopKWY-dfs: this means that, in particular for smaller values of k, the exploration strategy is a critical component of TopKWY. For accidents, one of the most challenging dataset to analyze, both TopKWY-dfs and WYlight can not complete their execution in less than one month, even for \(k=10\). Therefore, for such dataset the best first strategy adopted by TopKWY is crucial.

Results forg-FWER. We investigate the increase in statistical power of TopKWYwhen controlling the generalized-Family-Wise Error Rate (g-FWER) by analyzing datasets described in Sect. 7.3 having less than \(10^6\) results for \(\alpha = 0.05\) when controlling the FWER. As we can see in Fig. 7, for the breast-cancer dataset the number of significant patterns increased by more then two orders of magnitude as the value of g increases (i.e., when more false positives are allowed). Figure 8 show the running time of TopKWY when controlling the g-FWER at different values of g. As expected, the required time slightly increases but it stays practical for all datasets. We do not compare with other methods since TopKWY is the first algorithm to discover significant patterns with a rigorous control on the g-FWER.

In Table 4.a we show the computed values of \(g^{\star }\) by TopKWY\(^{\star }\) (see Sect. 5.1 for its definition) on the breast-cancer dataset for \(k \in \{ 10 , 10^{2} , 10^{3} , 10^{4}\}\). We can see that TopKWY\(^{\star }\) is able to provide informative estimates of the quality of the reported set of k most significant patterns, in terms of the minimum g such that the g-\(FWER(p^{k})\) is \(\le \alpha \), without the need of fixing g a-priori. In Table 4.b we show the number of results found using TopKWY-\(\zeta \) for \(k=10^{4}\) on the breast-cancer dataset, varying \(\zeta \in \{ 0.01 , 0.05 , 0.1 , 0.25 \}\). From these results we can see that TopKWY-\(\zeta \) is a very flexible tool to discover significant patterns with bounds on both the output size and the maximum ratio of false discoveries, providing improved statistical power in situations where the number of significant patterns when controlling the FWER is very low. (We do not show the running times for TopKWY\(^{\star }\) and TopKWY-\(\zeta \) since those are very similar to the ones reported in Fig. 8.)

7.4.2 Subgraphs mining

We ran TopKWY-dfs on the datasets described in Sect. 7.2. Table 3 reports the number of significant patterns for \(\alpha =0.05\) in the datasets we considered, obtained by running TopKWY-dfs (with \(k=+\infty \)) or WYlight. For some datasets we stopped the computation after 1 month, so only a lower bound is available. In most cases, the number of significant patterns is extremely large: for 6 out of 11 datasets there are \(>5\times 10^5\) significant patterns and in 5 datasets there are \(>10^6\) significant patterns. This shows that a direct way to limit the number of significant patterns in output is required for subgraphs mining as well.

We then compared the running time and memory requirement of TopKWY-dfs and of WYlight. Figure 9 compares the running times of TopKWY-dfs and WYlight. As for itemsets mining, when the number of significant patterns is lower than k, TopKWY-dfs finds all of them, obtaining the same output as WYlight. We can see that TopKWY-dfs is, in all cases, faster than WYlight: for 9 datasets out of 11 and for \(k=10^{6}\), TopKWY-dfs improves the running time by a factor at least 2. It is interesting to note that for 5 datasets the number of significant results is \(< 10^{6}\), therefore TopKWY-dfs is faster even if its output is the same of WYlight. For 3 datasets the running time is reduced by more than two orders of magnitude, and WYlight is not able to terminate in less than 1 month. We can observe that these three datasets contains more than \(10^{6}\) significant results; this clearly shows that focusing on the most significant patterns leads to significant computational advantages and enables the analysis of such datasets.

Figure 10 compares the memory usage of TopKWY-dfs and WYlight. Both algorithms are very memory efficient and, while TopKWY-dfs usually requires more memory than WYlight, the difference is small: for 7 of the 8 datasets where WYlight terminates, TopKWY-dfs never requires more than \(8\%\) of the memory of WYlight, and \(23\%\) in the case of MUTAG, where the difference is of few MBs. (For the three datasets were WYlight does not terminate, we only report a lower bound to its memory usage.)

8 Conclusion

In this work we introduce TopKWY, an efficient algorithm to identify the top-k significant patterns with rigorous guarantees on the FWER and provide theoretical evidence of its effectiveness. Our extensive experimental evaluation shows that TopKWY enables the identification of significant patterns on large datasets and that it significantly improves over the state-of-the-art.

Our notion of top-k significant patterns and our algorithm TopKWY could be relevant to other mining problems, for example statistical emerging pattern mining (Komiyama et al. 2017), while providing a bound on the FWER. While we focus on bounding the FWER, a different approach would be to bound the false discovery rate (FDR) (Benjamini and Hochberg 1995), that is the expected ratio of false discoveries among all reported patterns. Bounding the FDR is crucial in cases where the number of significant results obtained bounding the FWER is low. In addition, fully processing extremely large datasets may not be feasible: the combination of the techniques we develop in this work with sampling (e.g., the recently developed techniques by Pellegrina et al. (2019b) to extend TopKWY for the analysis of random samples) is a promising direction that we will investigate in future work.

Notes

References

Agrawal R, Imieliński T, Swami A (1993) Mining association rules between sets of items in large databases. SIGMOD Rec 22:207–216

Agrawal R, Srikant R (1994) Fast algorithms for mining association rules in large databases. In: Proceedings of the 20th international confereence on very large data bases (VLDB ’94), San Francisco, CA, USA. Morgan Kaufmann Publishers Inc, pp 487–499

Atzmueller M (2015) Subgroup discovery. Wiley Interdiscip Rev Data Min Knowl Discov 5(1):35–49

Bayardo RJ Jr (1998) Efficiently mining long patterns from databases. ACM Sigmod Rec 27(2):85–93

Benjamini Y, Hochberg Y (1995) Controlling the false discovery rate: a practical and powerful approach to multiple testing. J Ro Stat Soc Ser B (Methodol) 57:289–300

Bonferroni C (1936) Teoria statistica delle classi e calcolo delle probabilita. Pubblicazioni del R Istituto Superiore di Scienze Economiche e Commericiali di Firenze 8:3–62

Dong G, Bailey J (2012) Contrast data mining: concepts, algorithms, and applications. CRC Press, Boca Raton

Duivesteijn W, Knobbe A (2011) Exploiting false discoveries–statistical validation of patterns and quality measures in subgroup discovery. In: 2011 IEEE 11th international conference on data mining. IEEE, pp 151–160

Fisher RA (1922) On the interpretation of \(\chi \) 2 from contingency tables, and the calculation of p. J R Stat Soc 85(1):87–94

Gionis A, Mannila H, Mielikäinen T, Tsaparas P (2007) Assessing data mining results via swap randomization. ACM Trans Knowl Discov Data (TKDD) 1(3):14

Hämäläinen W (2012) Kingfisher: an efficient algorithm for searching for both positive and negative dependency rules with statistical significance measures. Knowl Inf Syst 32(2):383–414

Hämäläinen W, Webb GI (2019) A tutorial on statistically sound pattern discovery. Data Min Knowl Disc 33(2):325–377

Han J, Pei J, Yin Y (2000) Mining frequent patterns without candidate generation. In: Chen W, Naughton JF, Bernstein PA (eds) SIGMOD conference. ACM, New YorkD, pp 1–12

Han J, Wang J, Lu Y, Tzvetkov P (2002) Mining top-k frequent closed patterns without minimum support. In: Proceedings 2002 IEEE international conference on data mining, 2002. ICDM 2003. IEEE, pp 211–218

Han J, Cheng H, Xin D, Yan X (2007) Frequent pattern mining: current status and future directions. Data Mining Knowl Discov 15:55–86

Herrera F, Carmona CJ, González P, Del Jesus MJ (2011) An overview on subgroup discovery: foundations and applications. Knowl Inf Syst 29(3):495–525

Komiyama J, Ishihata M, Arimura H, Nishibayashi T, Minato S-I (2017) Statistical emerging pattern mining with multiple testing correction. In: Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining. ACM, pp 897–906

Lehmann EL, Romano JP (2012) Generalizations of the familywise error rate. In: Selected works of EL Lehmann. Springer, pp 719–735

Li J, Liu J, Toivonen H, Satou K, Sun Y, Sun B (2014) Discovering statistically non-redundant subgroups. Knowl-Based Syst 67:315–327

Llinares-López F, Sugiyama M, Papaxanthos L, Borgwardt K (2015) Fast and memory-efficient significant pattern mining via permutation testing. In: Proceedings of the 21th ACM SIGKDD international conference on knowledge discovery and data mining. ACM, pp 725–734

Minato S-i, Uno T, Tsuda K, Terada A, Sese J (2014) A fast method of statistical assessment for combinatorial hypotheses based on frequent itemset enumeration. In: Joint European conference on machine learning and knowledge discovery in databases. Springer, pp 422–436