Abstract

We present a mathematical model of the American put option under the fractional Black–Scholes (FBS) model, which leads to a free boundary problem. The unknown free boundary (the optimal exercise boundary) is determined by the quasi-stationary method, which makes the American put option problem solvable. We then use a finite difference method for the derivatives with respect to the stock price and the Grünwald–Letnikov approximation for the derivative with respect to time, and obtain a fractional finite difference problem. We show that the resulting fractional finite difference scheme is stable and convergent. We also show that the numerical results satisfy the physical conditions of American put option pricing under the FBS model.


1 Introduction

The development of modern option pricing began with the publication of the Black–Scholes (BS) option pricing formula in 1973, which was used to compute the value of a European option (Black and Scholes 1973; Merton 1973). The BS formula computes the value of a European option from the price of the underlying asset, the exercise price, the volatility of the asset, and the expiration time of the option (Hull 1997; Wilmott et al. 1993). A European option can be exercised only at the expiration date, whereas an American option carries an early exercise privilege, that is, the holder can exercise the option prior to the expiration date. Since the additional right should not be worthless, we expect an American option to be worth more than its European counterpart. The extra premium is called the early exercise premium (Kwok 1998; Wilmott 1998). Because of the early exercise feature, analytical solutions of BS models for American option pricing problems are seldom available, so a numerical technique should be used. During the last decades, various numerical techniques have been investigated for solving these types of problems. We refer the interested reader to Amin and Khanna (1994), Barraquand and Pudet (1994), Broadie and Detemple (1996), Kalantari et al. (2015), Kwok (2009), Ross (1999), San-Lin (2000), Meng et al. (2014).

A fractional differential equation provides an interesting instrument for describing memory and hereditary properties of various materials and processes. This is the main advantage of fractional derivatives in comparison with classical integer-order models, in which such effects are in fact neglected. The advantages of fractional derivatives have become apparent in physics, chemistry, engineering, finance, and other sciences over the last decades (Podlubny 1999).

In this paper we use a fractional stochastic dynamics of the stock exchange to obtain the FBS model. Since American option pricing under the FBS model involves an unknown free boundary (the optimal exercise boundary), we use the quasi-stationary method to find \(\overline{S}(t)\) and obtain a fractional partial differential equation (FPDE) with a known boundary. Then we introduce a stable and convergent finite difference method for solving the new problem.

The remainder of the paper is organized as follows: In Sect. 2, the FBS model for American put option pricing is introduced. To remove the free boundary, we use the quasi-stationary method in Sect. 3. In Sect. 4, we investigate the stability and convergence of the finite difference method for the FBS model. In Sect. 5, Newton interpolation is used to evaluate the option price at some intermediate points. Finally, a brief conclusion is given in Sect. 6.

2 FBS Model for American Put Option Pricing

The stochastic differential equation of stock exchange dynamics is used in the form

where \(\omega (t)\) is a normalized Gaussian white noise, i.e., with zero mean and unit variance. In addition, we denote by r the interest rate, while P(S, t) is the American put option price. The details of obtaining the FBS model from (1) are explained in Jumarie (2008), so that American put option pricing under the FBS model is presented as

where \(\overline{S}(t)\) denotes the free boundary. The parameters \(\sigma \), r and E denote the volatility of the underlying asset, the interest rate and the exercise price of the option, respectively.

Remark 1

The fractional Black–Scholes model of American option pricing is motivated by real market considerations and has the following advantages:

(1) the fractional derivative is a generalization of the ordinary derivative,

(2) the fractional derivative is a non-local operator while the ordinary derivative is a local operator (Baleanu et al. 2012),

(3) the FBS model based on the fractional Brownian motion is more accurate than the model based on the ordinary Brownian motion, i.e., substituting \(b(t,\alpha )\) for b(t) as in (1), Jumarie (2008).

3 Quasi-Stationary Method for Determining \(\overline{S}(t)\)

Taking \(\frac{\partial ^{\alpha } P}{\partial t^{\alpha }}=0\) in (2) leads to a second-order ordinary differential equation

with general solution

According to (6) we should set \(C_1=0,\) then

and we use (4) to get

which is solved for \( \overline{S}(t)\) as

Substituting from (12) and (13) in (5), the exercise price E takes the form

with

Put

then

and it follows from (5), (11), (13) and (14) that

which can be solved for \(C_2\) by using a suitable iterative method. Thus, the FBS model is formulated as the boundary value problem

4 Stability and Convergence of Finite Difference Method for FBS Model

This section is concerned with the conditions that must be satisfied if the solution of the finite difference equations is to be a reasonably accurate approximation to the corresponding solution of the FBS model of American option pricing. The conditions are associated with two different but interrelated problems. The first concerns the stability of the exact solution of the finite difference equations; the second concerns the convergence of the solution of the finite difference equations to the solution of the FBS model.

Now we use a finite difference scheme for the derivatives on the right-hand side and the Grünwald–Letnikov approximation for the fractional derivative on the left-hand side of (19), and get

where

Let \(t_k=k\Delta t\), \(k=0,1,2,\ldots ,n-1\), and \(S_j=j\Delta S\), \(j=1,2,\ldots ,m-1\), where for \(0\le t\le T\), \(\Delta t=\frac{T}{n}\) and \(\Delta S=\frac{S_{max}}{m}\) are the time and stock price steps, respectively. Therefore, Eq. (22) provides the recursive formula

for \(P^k_j\), \(j=1,2,\ldots ,m-1\).
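The mesh described above can be sketched in a few lines. This is only an illustration under stated assumptions: the helper names `make_grid` and `put_payoff` are hypothetical, the boundary nodes \(j=0,m\) and \(k=n\) are included for convenience, the value \(E=1\) and the choice \(m=10\) are placeholders, and the payoff \(\max (E-S,0)\) is the standard put payoff at expiry rather than a condition copied from the scheme itself.

```python
def make_grid(T, S_max, n, m):
    """Uniform mesh t_k = k*dt (k = 0..n) and S_j = j*dS (j = 0..m)."""
    dt = T / n          # time step, Delta t = T / n
    dS = S_max / m      # stock price step, Delta S = S_max / m
    t = [k * dt for k in range(n + 1)]
    S = [j * dS for j in range(m + 1)]
    return dt, dS, t, S


def put_payoff(S, E):
    """Standard put payoff max(E - S, 0) at each price node (assumed here)."""
    return [max(E - s, 0.0) for s in S]


# Illustrative values only: T and S_max as in the numerical section,
# n = 4 time steps, m = 10 price steps, and a hypothetical strike E = 1.
dt, dS, t, S = make_grid(T=0.5, S_max=2.0, n=4, m=10)
payoff = put_payoff(S, E=1.0)
```

With these inputs the interior price nodes are \(S_1,\ldots ,S_9\), and the payoff at \(S_0=0\) equals E, in line with \(\mathbf {P}_0=E\).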

It also follows from (24) for \(j=0\) and all time values that \(\mathbf {P}_0=E\) (since \(S_0=0\)) and if

then from Eq. (21) we have \(P(S_1,t)=E-S_1\) and so

where \(\mathbf {P}_j=[P^0_j,P^1_j,P^2_j ,\ldots ,P^{n}_j]^T\) and the \((n+1)\times (n+1)\) matrices \(A=(a_{ij})\), \(B=(b_{ij})\) and \(C=(c_{ij})\) are given by

with

Let \(\tilde{P}_{j}^k\) ( \(k=0,1,\ldots ,n \) ; \(j=0,1,\ldots , m\)) be approximations to \(P_{j}^k\), then the errors \(\varepsilon _{j}^k=\tilde{P}_{j}^k-P_{j}^k\) ( \(k=0,1,\ldots ,n \) , \(j=0,1,\ldots ,m\)) satisfy the recurrence relation

or equivalently

for \(\mathbf {E}_j=[\varepsilon _{j}^0,\varepsilon _{j}^1,\ldots ,\varepsilon _{j}^{n}]^T\) and \(\parallel \mathbf {E}_{j+1}\parallel _{\infty }=\displaystyle \max _{1\le k \le n-1} \mid \varepsilon _{j+1}^{k}\mid \).

Definition 1

(see Smith 1985; Yu and Tan 2003) If, for arbitrary initial rounding errors \(\mathbf {E}_0\) and \(\mathbf {E}_1\), there exists a positive constant K, independent of \(\Delta S\) and \(\Delta t\), such that

then the difference approximation (27) is stable.

Lemma 1

(see Zhuang et al. 2009) The coefficients \(g^{\alpha }_i\) for \(i=0,1,2,\ldots \), that were defined in (25) satisfy

(1) \(g^{\alpha }_0=1\), \( g^{\alpha }_1=-\alpha \) and \(g^{\alpha }_i >0\), \(i > 1\).

(2) \(\sum ^{\infty }_{i=0}g^{\alpha }_i=0\) and \(\sum ^{l}_{i=0}g^{\alpha }_i <0\) for \(l=1,2,\ldots \).
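As a rough numerical companion to the lemma, the sketch below generates Grünwald–Letnikov weights and applies them to a sampled function. It assumes the standard definition \(g^{\alpha }_i=(-1)^i\binom{\alpha }{i}\); definition (25) is not reproduced here, so the normalization (and hence the sign pattern of the weights for a given range of \(\alpha \)) may differ from the one used in the scheme. The function names are hypothetical.

```python
def gl_coefficients(alpha, count):
    """First `count` weights g_i = (-1)^i * binom(alpha, i) (assumed definition).

    Uses the recurrence g_0 = 1, g_i = g_{i-1} * (1 - (alpha + 1) / i).
    """
    g = [1.0]
    for i in range(1, count):
        g.append(g[-1] * (1.0 - (alpha + 1.0) / i))
    return g


def gl_derivative(samples, alpha, dt):
    """Grünwald-Letnikov estimate of the order-alpha derivative at the last node.

    `samples` holds f(t_0), ..., f(t_k) on a uniform mesh with step dt.
    """
    k = len(samples) - 1
    g = gl_coefficients(alpha, k + 1)
    total = sum(g[i] * samples[k - i] for i in range(k + 1))
    return total / dt ** alpha


# Example: f(t) = t on [0, 1] with alpha = 1/2 and 1000 steps.
n_steps = 1000
step = 1.0 / n_steps
values = [i * step for i in range(n_steps + 1)]
approx = gl_derivative(values, 0.5, step)
```

For \(f(t)=t\) the exact fractional derivative of order \(\alpha \) at \(t=1\) is \(1/\Gamma (2-\alpha )\), so `approx` can be compared with `1 / math.gamma(1.5)` as a sanity check.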

Remark 2

Theorem 1

(Stability) If

then the finite difference scheme (27) is stable and we have

where K is a positive constant independent of \(\Delta t\) , \(\Delta S\) and j.

Proof

For \(k=0,1,\ldots ,n-1 \) , \(j=1,2,\ldots ,m-1\) by using (29) we achieve

Since \(\sum _{i =0}^{k+1}g_{i}^{\alpha } <0,\) then

If we set

then

where we used the inequality

Thus

By Definition 1, this proves the stability of (27). \(\square \)

To prove convergence of the scheme (27), let \(P(S_j, t_k)\) (\(k=0,1,2,\ldots ,n-1 \); \(j=1,2,\ldots ,m-1\)) be the exact solution of (19)–(21) at the mesh point \((S_j,t_k)\). Define

and

Using \(\mathbf {F_0}=\mathbf {F_1}=0\) and substituting \(P_j^k=P(S_j,t_k)-\epsilon ^k_j \) into (26), we have

where \(k=0,1,2,\ldots ,n-1 \); \(j=1,2,\ldots ,m-1\). Now, we prove the following convergence result.

Theorem 2

(Convergence) Suppose that (22) has a smooth solution \(P(S,t)\in C_{S,t}^{2,\alpha }(\Omega )\). Let \(P^k_j\) be the numerical solution computed by means of (27). Then \(P^k_j\) converges to \(P(S_j,t_k)\), if

Proof

We have from (29)

Since \(\sum _{i =0}^{k+1}g_{i}^{\alpha } <0,\) then

Now, we set

and use (32) to get

Since \(\mathbf {F}_0=\mathbf {F}_1=0\), this proves that \(P^k_j\) converges to \(P(S_j,t_k)\). \(\square \)

5 Solving FBS Model for American Put Option Pricing

Now we solve (22)–(24). Since the American put option price is known only at the end point (exercise time), in order to use a higher-order Grünwald–Letnikov approximation we need the option price at some other points. To get these intermediate values, we use Newton’s interpolation method, that is, for the points \(P_j^0=P(S_j ,0)\) and \(P_j^T=P(S_j,T)\), we get

which yields

and for the points \(P^0_j\), \(P^{\frac{T}{2}}_j\) and \(P^T_j\),

and

Then it follows from (34)

and
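The interpolation step can be illustrated with a generic Newton divided-difference routine. This is a minimal sketch, not the paper's formulas (34)–(37): the function names are hypothetical and the sample values are made up purely to show the mechanics of passing from two nodes \((0, T)\) to the midpoint \(T/2\), and from three nodes to \(T/4\) and \(3T/4\).

```python
def newton_coefficients(xs, ys):
    """Divided-difference coefficients of the Newton interpolating polynomial."""
    coef = list(ys)
    n = len(xs)
    for level in range(1, n):
        # Update in place from the end so lower-order differences stay available.
        for i in range(n - 1, level - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - level])
    return coef


def newton_eval(xs, coef, x):
    """Evaluate the Newton form at x with Horner-like nesting."""
    result = coef[-1]
    for i in range(len(coef) - 2, -1, -1):
        result = result * (x - xs[i]) + coef[i]
    return result


# Hypothetical option values at one price node, for illustration only.
T = 0.5
p_start, p_end = 1.0, 0.6

# Two nodes (t = 0 and t = T) give the value at the midpoint T/2.
c2 = newton_coefficients([0.0, T], [p_start, p_end])
p_mid = newton_eval([0.0, T], c2, T / 2)

# Three nodes (0, T/2, T) give values at T/4 and 3T/4.
nodes = [0.0, T / 2, T]
c3 = newton_coefficients(nodes, [p_start, p_mid, p_end])
p_quarter = newton_eval(nodes, c3, T / 4)
p_three_quarter = newton_eval(nodes, c3, 3 * T / 4)
```

Together with the two end values, this produces the five time levels \(0, T/4, T/2, 3T/4, T\) that a mesh with \(n=4\) requires.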

Proposition 1

For \(n=4\), the discrete form of the FBS model takes the form

where

with

for \(j=1,2,\ldots ,10.\)

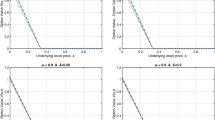

Fig. 1 Numerical results for: (a) \(T=0.5\), \(\sigma =0.5\), \(r=0.05\), \(\alpha =\frac{8}{9}\); (b) \(T=0.5\), \(\sigma =0.4\), \(r=0.05\), \(\alpha =\frac{8}{9}\); (c) \(T=0.25\), \(\sigma =0.4\), \(r=0.05\), \(\alpha =\frac{9}{11}\); (d) \(T=0.75\), \(\sigma =0.32\), \(r=0.07\), \(\alpha =\frac{5}{9}\); (e) \(T=1\), \(\sigma =0.4\), \(r=0.01\), \(\alpha =\frac{8}{9}\); (f) \(T=1\), \(\sigma =0.6\), \(r=0.02\), \(\alpha =\frac{1}{2}\); (g) \(T=0.5\), \(\sigma =0.4\), \(r=0.1\), \(\alpha =\frac{2}{3}\)

Proof

We return to Eq. (22) and obtain for \(n=4\),

or, equivalently

which can be rearranged as (38). \(\square \)

The algorithm of the proposed method can be formalized as follows.

For some special values of the parameters T, \(\sigma \), r, \(\alpha \) and \(S_{max}=2\), the results are plotted in Fig. 1a–g.

Remark 3

For a call (put) option, either European or American, when the current asset price is higher, the option has a strictly higher (lower) chance of being exercised, and when it is exercised it induces a higher (lower) cash inflow. Therefore, the call (put) option price is an increasing (decreasing) function of the asset price, that is,

or

Fig. 1a–g shows that our results at \(t_0=0\) are consistent with Remark 3.
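The monotonicity condition of Remark 3 is easy to test on a computed price vector. The following is a small sketch with a hypothetical helper `is_nonincreasing`; the sample vector is the put payoff \(\max (E-S,0)\) with an illustrative strike, not output of the scheme.

```python
def is_nonincreasing(values, tol=1e-12):
    """True if each entry is no larger than its predecessor (up to tol)."""
    return all(b <= a + tol for a, b in zip(values, values[1:]))


# Illustrative data: put payoff on a uniform price mesh with S_max = 2, E = 1.
prices_S = [j * 0.2 for j in range(11)]
sample_put = [max(1.0 - s, 0.0) for s in prices_S]
put_is_monotone = is_nonincreasing(sample_put)
```

Applied to the column of put prices at a fixed time level, a `False` result would flag a violation of the decreasing behaviour in the asset price.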

6 Conclusion

We introduced the FBS model, which is obtained from a fractional stochastic differential equation. We then investigated a finite difference scheme for this model and showed that it is stable and convergent. We solved the American put option problem with the help of Newton interpolation, and presented numerical results in several figures that satisfy the physical condition of American put option pricing.

References

Amin, K., & Khanna, A. (1994). Convergence of American option values from discrete to continuous-time financial models. Mathematical Finance, 4, 289–304.

Baleanu, D., Diethelm, K., Scalas, E., & Trujillo, J. J. (2012). Fractional calculus: Models and numerical methods (Vol. 3). Singapore: World Scientific.

Barraquand, J., & Pudet, T. (1994). Pricing of American path-dependent contingent claims. Paris: Digital Research Laboratory.

Black, F., & Scholes, M. S. (1973). The pricing of options and corporate liabilities. Journal of Political Economy, 81, 637–654.

Broadie, M., & Detemple, J. (1996). American option valuation: New bounds, approximations, and a comparison of existing methods. Review of Financial Studies, 9(4), 1211–1250.

Hull, J. C. (1997). Options, futures, and other derivatives. Upper Saddle River: Prentice Hall.

Jumarie, G. (2008). Stock exchange fractional dynamics defined as fractional exponential growth driven by (usual) Gaussian white noise. Application to fractional Black–Scholes equations. Insurance: Mathematics and Economics, 42, 271–287.

Kalantari, R., Shahmorad, S., & Ahmadian, D. (2015). The stability analysis of predictor-corrector method in solving American option pricing model. Computational Economics. doi:10.1007/s10614-015-9483-x.

Kwok, Y. K. (1998). Mathematical models of financial derivatives. Heidelberg: Springer.

Kwok, Y. K. (2009). Mathematical models of financial derivatives (Vol. 2). Berlin: Springer.

Meng, Wu, Nanjing, H., & Huiqiang, M. (2014). American option pricing with time-varying parameters. Computer Economics, 241, 439–450.

Merton, R. C. (1973). The theory of rational option pricing. The Bell Journal of Economics and Management Science, 4, 141–183.

Podlubny, I. (1999). Fractional differential equations. Cambridge: Academic Press.

Ross, S. H. (1999). An introduction to mathematical finance. Cambridge: Cambridge University Press.

San-Lin, C. (2000). American option valuation under stochastic interest rates. Computer Economics, 3, 283–307.

Smith, G. D. (1985). Numerical solution of partial differential equations: Finite difference methods. Oxford: Clarendon Press.

Wilmott, P. (1998). The theory and practice of financial engineering. New York: Wiley.

Wilmott, P., Dewynne, J., & Howison, S. (1993). Option pricing, mathematical models and computation. Oxford: Oxford Financial Press.

Yu, D., & Tan, H. (2003). Numerical methods of differential equations. Beijing: Science Publisher.

Zhuang, P., Liu, F., Anh, V., & Turner, I. (2009). Numerical methods for the variable-order fractional advection–diffusion equation with a nonlinear source term. SIAM Journal on Numerical Analysis, 47, 1760–1781.


Cite this article

Kalantari, R., Shahmorad, S. A Stable and Convergent Finite Difference Method for Fractional Black–Scholes Model of American Put Option Pricing. Comput Econ 53, 191–205 (2019). https://doi.org/10.1007/s10614-017-9734-0


DOI: https://doi.org/10.1007/s10614-017-9734-0