Abstract

We present a method for solving linearly constrained convex optimization problems, which is based on the application of known algorithms for finding zeros of the sum of two monotone operators (presented by Eckstein and Svaiter) to the dual problem. We establish convergence rates for the new method, and we present applications to TV denoising and compressed sensing problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A broad class of problems of recent interest in image science and signal processing can be posed in the framework of convex optimization. Examples include the TV denoising model [24] for image processing and basis pursuit, which is well known for playing a central role in the theory of compressed sensing. A general subclass of such programming problems is:

Here \(f:\mathbb {R}^{m_{1}}\rightarrow (-\infty ,\infty ]\) and \(g:\mathbb {R}^{m_{2}}\rightarrow (-\infty ,\infty ]\) are proper closed convex functions, \(M:\mathbb {R}^{m_{1}}\rightarrow \mathbb {R}^n\) and \(C:\mathbb {R}^{m_2}\rightarrow \mathbb {R}^{n}\) are linear operators, and \(d\in \mathbb {R}^n\).

A well-known iterative method for solving optimization problems that have a separable structure as (1) does, is the Alternating Direction Method of Multipliers (ADMM), which goes back to the works of Glowinski and Marrocco [13], and of Gabay and Mercier [12]. ADMM solves the coupled problem (1) performing a sequences of steps that decouple functions f and g, making it possible to exploit the individual structure of these functions. It can be interpreted in terms of alternating minimization, with respect to u and v, of the augmented Lagrangian function associated with problem (1). The classical ADMM (i.e., with dual steplength 1) can also be viewed as an instance of the method called Douglas-Rachford splitting applied to the dual problem of (1), as was shown by Gabay in [11].

Other splitting schemes have been effectively applied to the dual problem of (1), which is a special case of the problem of finding a zero of the sum of two maximal monotone operators. For example, the Proximal Forward Backward splitting method, developed by Lions and Mercier [17], and Passty [21], corresponds to the well-known Tseng’s [25] Alternating Minimization Algorithm (AMA) for solving (1). This method has simpler steps than ADMM, in the former one of the minimizations of the augmented Lagrangian is replaced by the minimization of the Lagrangian itself; however, it requires strong convexity of one of the objective functions.

The goal of our work is to construct an optimization scheme for solving (1) applying a splitting method to its dual problem. Specifically we are interested in the family of splitting-projective methods proposed in [9] by Eckstein and Svaiter to address inclusion problems given by the sum of two maximal monotone operators. We will apply a specific instance of these algorithms to solve a reformulation of the dual problem of (1) as the problem of finding a zero of the sum of two maximal monotone operators, which allows us to obtain a new algorithm for solving this problem. To the best of our knowledge, this is the first time that the methods in [9] are applied to the optimization problem (1). The specific instance we choose to study here is motivated by preliminary numerical results (illustrated in Sect. 2), which demonstrate the performance of the method. This iterative method will be referred to as the Projective Method of Multipliers (PMM). The convergence properties of the PMM will be obtained using the convergence results already established in [9]. In contrast to [9], which only studies the global convergence of the family of splitting-projective methods, we also establish in this work the iteration complexity of the PMM. Using the Karush–Kuhn–Tucker (KKT) conditions for problem (1) we give convergence rate for the PMM measured by the pointwise and ergodic iteration-complexities.

The remainder of this paper is organized as follows. Section 2 reviews some definitions and facts on convex functions that will be used in our subsequent presentation. It also briefly discusses Lagrangian duality theory for convex optimization, for more details in this subject we refer the reader to [22]. Section 3 presents the Projective Method of Multipliers (PMM) for solving the class of linearly constrained optimization problems (1). This section also presents global convergence of the PMM using the convergence analysis presented in [9]. Section 4 derives iteration-complexity results for the PMM. Finally, Sect. 5 presents some applications in image restoration and compressed sensing. This section also exhibits preliminary numerical results describing the performance of the PMM in solving these problems.

1.1 Notation

Throughout this paper, we let \(\mathbb {R}^n\) denote an n-dimensional space with inner product and induced norm denoted by \(\left\langle \cdot ,\cdot \right\rangle \) and \(\left\| \cdot \right\| \), respectively. For a matrix A, \(A^T\) indicates its transpose and \(\left\| A\right\| _F=\sqrt{trace(AA^T)}\) its Frobenius norm. Given a linear operator M, we denote by \(M^*\) its adjoint operator. If C is a convex set we indicate by \(\text {ri}\left( C\right) \) its relative interior.

2 Preliminaries

In this section we describe some basic definitions and facts on convex analysis that will be needed along this work. We also discuss the Lagrangian formulation and dual problem of (1). This approach will play an important role in the design of the PMM for problem (1).

2.1 Generalities on convex functions

Given an extended real valued convex function \(f:\mathbb {R}^n\rightarrow (-\infty ,\infty ]\), the domain of f is the set

Since f is a convex function, it is obvious that \(\text {dom}\,f\) is convex. We say that function f is proper if \(\text {dom}\,f\ne \emptyset \). Furthermore, we say that f is closed if it is a lower semicontinuous function.

Definition 1

Given a convex function \(f:\mathbb {R}^n\rightarrow (-\infty ,\infty ]\) a vector \(v\in \mathbb {R}^n\) is called a subgradient of f at \(x\in \mathbb {R}^n\) if

The set of all subgradients of f at x is denoted by \(\partial f(x)\). The operator \(\partial f\), which maps each x to \(\partial f(x)\), is called the subdifferential map associated with f.

It can be seen immediately from the definition that \(x^*\) is a global minimizer of f in \(\mathbb {R}^n\) if and only if \(0\in \partial f(x^*)\). If f is differentiable at x, then \(\partial f(x)\) is the singleton set \(\{\nabla f(x)\}\).

The subdifferential mapping of a convex function f has the following monotonicity property: for any x, \(x'\), v and \(v'\in \mathbb {R}^n\) such that \(v\in \partial f(x)\) and \(v'\in \partial f(x')\), it follows that

In addition, if f is a proper closed convex function, then \(\partial f\) is a maximal monotone operator [23]. This is to say that if \(x,\,v\in \mathbb {R}^n\) are such that inequality (2) holds for all \(x'\in \mathbb {R}^n\) and \(v'\in \partial f(x')\), then \(x\in \text {dom}\,f\) and \(v\in \partial f(x)\).

Given \(\lambda >0\), the resolvent mapping (or proximal mapping) [20] associated with \(\partial f\) is defined as

The fact that \((I+\lambda \partial f)^{-1}\) is an everywhere well defined function, if f is proper, closed and convex, is a consequence of a fundamental result due to Minty [18]. For example, if \(f(x)=\mu \left\| x\right\| _1=\mu \sum |x_i|\) where \(\mu >0\), then

where

The Fenchel-Legendre conjugate of a convex function f, denoted by \(f^*:\mathbb {R}^n\rightarrow (-\infty ,\infty ]\), is defined as

It is simple to see that \(f^*\) is a convex closed function. Furthermore, if f is proper, closed and convex, then \(f^*\) is a proper function (see for instance [22, Theorem 12.2] and [1, Proposition 1.10]).

Definition 2

Given any convex function \(f:\mathbb {R}^n\rightarrow (-\infty ,\infty ]\) and \(\epsilon \ge 0\), a vector \(v\in \mathbb {R}^n\) is called an \(\epsilon \)-subgradient of f at \(x\in \mathbb {R}^n\) if

The set of all \(\epsilon \)-subgradients of f at x is denoted by \(\partial _\epsilon f(x)\), and \(\partial _\epsilon f\) is called the \(\epsilon \)-subdifferential mapping.

It is trivial to verify that \(\partial _0f(x)=\partial f(x)\), and \(\partial f(x)\subseteq \partial _\epsilon f(x)\) for every \(x\in \mathbb {R}^n\) and \(\epsilon \ge 0\). The proposition below lists some useful properties of the \(\epsilon \)-subdifferential that will be needed in our presentation.

Proposition 2.1

If \(f:\mathbb {R}^n\rightarrow (-\infty ,\infty ]\) is a proper closed convex function, \(g:\mathbb {R}^n\rightarrow \mathbb {R}\) is a convex differentiable function in \(\mathbb {R}^n\), and \(M:\mathbb {R}^m\rightarrow \mathbb {R}^n\) is a linear transformation, then the following statements hold:

-

(a)

\(v\in \partial _\epsilon f(x)\) if and only if \(x\in \partial _\epsilon f^*(v)\) for all \(\epsilon \ge 0\);

-

(b)

\(\partial (f+g)(x)=\partial f(x)+\nabla g(x)\) for all \(x\in \mathbb {R}^n\);

-

(c)

\(\partial (f\circ M)(x) \supseteq M^*\partial f(Mx)\) for all \(x\in \mathbb {R}^m\). In addition, if \(\text {ri}\left( \text {dom}\,f\right) \cap \text {range}\,M\ne \emptyset \), then \(\partial (f\circ M)(x)=M^*\partial f(Mx)\) for every \(x\in \mathbb {R}^m\);

-

(d)

if \(x_{i},v_{i}\in \mathbb {R}^{n}\) and \(\epsilon _{i},\alpha _{i}\in \mathbb {R}_{+}\), for \(i=1,\ldots ,k\), are such that

$$\begin{aligned} v_{i}\in \partial _{\epsilon _i} f(x_{i}),\quad i=1,\ldots ,k, \qquad \qquad \sum _{i=1}^{k}\alpha _{i}=1, \end{aligned}$$and we define

$$\begin{aligned} \overline{x} {:=} \sum _{i=1}^{k}\alpha _{i}x_{i}, \qquad \quad \overline{v}{:=}\sum _{i=1}^{k}\alpha _{i}v_{i}, \qquad \quad \overline{\epsilon }{:=}\sum _{i=1}^{k}\alpha _{i}(\epsilon _{i}+\left\langle x_{i}-\overline{x},v_{i}\right\rangle ); \end{aligned}$$then, we have \(\overline{\epsilon }\ge 0\) and \(\overline{v}\in \partial _{\overline{\epsilon }}f(\overline{x})\).

Proof

Statements (a)–(c) are classical results which can be found, for example, in [16, 22]. For a proof of item (d) see [2] and references therein. \(\square \)

2.2 Lagrangian duality

The Lagrangian function\(L:\mathbb {R}^{m_1}\times \mathbb {R}^{m_2}\times \mathbb {R}^n\rightarrow (-\infty ,\infty ]\) for problem (1) is defined as

The dual function is the concave function \(\varphi :\mathbb {R}^n\rightarrow [-\infty ,\infty )\) defined by

and the dual problem to (1) is

Problem (1) will be called the primal problem. Straightforward calculations show that weak duality holds, i.e. \(\varphi ^*\le p^*\), where \(p^*\) and \(\varphi ^*\) are the optimal values of (1) and (5), respectively.

A vector \((u^*,v^*,z^*)\) such that \(L(u^{*},v^{*},z^{*})\) is finite and it satisfies

is called a saddle point of the Lagrangian function L. If \((u^*,v^*,z^*)\) is a saddle point, then \((u^*,v^*)\) is an optimal primal solution and \(z^*\) is an optimal dual solution [22, Theorem 28.3]. Furthermore, if a saddle point of L exists, then \(p^*=\varphi ^*\), i.e. there is no duality gap [22].

Notice that, if \((u^*,v^*,z^*)\) is a saddle point, from the definition of L in (4) and equalities (6) we deduce that

for all \(u\in \mathbb {R}^{m_1}\), \(v\in \mathbb {R}^{m_2}\), \(z\in \mathbb {R}^n\). From these relations we can directly derive the Karush–Kuhn–Tucker (KKT) conditions

which describe an optimal solution of problem (1). Observe that the equality in (7) implies that the primal variables \((u^*,v^*)\) must be feasible. The inclusions in (7) are known as the dual feasibility conditions. We also have that the KKT conditions hold if and only if \((u^*,v^*,z^*)\) is a saddle point of L.

Observe that the dual function \(\varphi \) can be written in terms of the Fenchel-Legendre conjugates of the functions f and g. Specifically,

Hence, if we define the functions \(h_1(z)=\left( f^*\circ -M^*\right) (z)\) and \(h_2(z)=\left( g^*\circ -C^*\right) (z) + \left\langle d,z\right\rangle \), we have that the dual problem (5) is equivalent to minimizing \(h_1+h_2\) over \(\mathbb {R}^n\). Furthermore, since \(f^*\) and \(g^*\) are convex and closed, and \(M^*\) and \(C^*\) are linear operators, it follows that \(h_1\) and \(h_2\) are convex closed functions [22]. Therefore, \(z^*\) is a solution of (5) if and only if

Throughout this work, we assume that

Since condition A.1 implies that the KKT conditions hold, we have from the first inclusion in (7) and Proposition 2.1(a), (c) that \(z^*\in \text {dom}\,(f^*\circ -M^*)\), which implies that \(h_1\) is a proper function. A similar argument shows that \(h_2\) is also a proper function. Therefore, under hypothesis A.1, we have that the subdifferentials \(\partial h_1\) and \(\partial h_2\) are maximal monotone operators.

3 The projective method of multipliers

Our proposal in this work is to apply the splitting-projective methods developed in [9], by Eckstein and Svaiter, to find a solution of problem

and as a consequence a solution of the dual problem (5), since the following inclusion holds

(see Eq. (8) and the comments above).

The framework presented in [9] reformulates the problem of finding a zero of the sum of two maximal monotone operators in terms of a convex feasibility problem, which is defined by a certain closed convex “extended” solution set. To solve the feasibility problem, the authors introduced successive projection algorithms that use, on each iteration, independent calculations involving each operator.

Specifically, if we consider the subdifferential mappings \(\partial h_1\) and \(\partial h_2\), then the associated extended solution set, defined as in [9], is

Since \(\partial h_1\) and \(\partial h_2\) are maximal monotone operators it can be proven that \(S_e(\partial h_1,\partial h_2)\) is a closed convex set in \(\mathbb {R}^n\times \mathbb {R}^n\), see [9, Lemma 2]. It is also easy to verify that if \((z^*,w^*)\) is a point in \(S_e(\partial h_1,\partial h_2)\) then \(z^*\) satisfies inclusion (8) and consequently it is a solution of the dual problem. Furthermore, the following lemma holds.

Lemma 3.1

If \((u^*,v^*,z^*)\) is a saddle point of L, then

Moreover, if we assume the following conditions

-

(A.2)

\(\text {ri}\left( \text {dom}\,f^*\right) \cap \text {range}\,M^*\ne \emptyset \);

-

(A.3)

\(\text {ri}\left( \text {dom}\,g^*\right) \cap \text {range}\,C^*\ne \emptyset \).

Then, for all \((z^*,w^*)\in S_e(\partial h_1,\partial h_2)\) there exist \(u^*,v^*\in \mathbb {R}^n\) such that \(w^*=d-Cv^*\), \(w^*=Mu^*\) and \((u^*,v^*,z^*)\) is a saddle point of the Lagrangian function L.

Proof

If \((u^*,v^*,z^*)\) is a saddle point of the Lagrangian function, then the KKT optimality conditions hold, and the inclusions in (7), together with Proposition 2.1(a), imply that

Thus, we have

and

where the second inclusions in (10) and (11) follow from Proposition 2.1(c). Adding d to both sides of (11) and using the definition of \(h_2\) and Proposition 2.1(b) we have \(d-Cv^*\in \partial h_2(z^*)\). Now, adding this last inclusion to (10) we conclude that

The first assertion of the lemma follows combining the relation above with the equality in (7) and the definition of \(S_{e}\left( \partial h_1,\partial h_2\right) \).

By (9) we have that if \((z^*,w^*)\in S_e(\partial h_1,\partial h_2)\) then \(w^*\in \partial h_2(z^*)=-C\partial g^*(-C^*z^*) + d\), where the equality follows from condition A.3 and Proposition 2.1(b), (c). Thus, there exists \(v^*\in \partial g^*(-C^*z^*)\) such that \(w^*=-Cv^*+d\), and applying Proposition 2.1(a) we obtain that \(-C^*z^*\in \partial g(v^*)\).

Similarly, using \(-w^*\in \partial h_1(z^*)\), hypothesis A.2 and Proposition 2.1(a), (c), we deduce that there is a \(u^*\) such that \(-w^*=-Mu^*\) and \(-M^*z^*\in \partial f(u^*)\). All these conditions put together imply that \((u^*,v^*,z^*)\) is a saddle point of L. \(\square \)

According to Lemma 3.1, we can attempt to find a saddle point of the Lagrangian function (4), by seeking a point in the extended solution set \(S_e(\partial h_1,\partial h_2)\).

In order to solve the feasibility problem defined by \(S_e(\partial h_1,\partial h_2)\), by successive orthogonal projection methods, the authors of [9] used the resolvent mappings associated with the operators to construct affine separating hyperplanes.

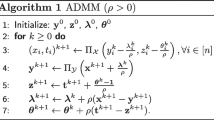

In our setting the family of algorithms in [9, Algorithm 2] follows the set of recursions

where \(\lambda _k\), \(\mu _k>0\) and \(\alpha _k\in \mathbb {R}\) are such that \((\mu _{k}/\lambda _{k}-(\alpha _{k}/2)^{2})>0\), and \(\rho _k\in (0,2)\).

We observe that relations in (12) and the definition of the resolvent mapping yield that \(x_k=(I+\lambda _k\partial h_2)^{-1}(z_{k-1} + \lambda _kw_{k-1})\) and \(b_k=\frac{1}{\lambda _k}(z_{k-1}-x_k)+w_{k-1}\). Similarly, (13) implies that \(y_k=(I+\mu _k\partial h_1)^{-1}((1-\alpha _k)z_{k-1} + \alpha _kx_k - \mu _kw_{k-1}))\) and \(a_k=\frac{1}{\mu _k}((1-\alpha _k)z_{k-1}+\alpha _k x_k - y_k) - w_{k-1}\). Hence, steps (12) and (13) are evaluations of the proximal mappings.

With the view to see that iterations (12)–(16) truly are successive (relaxed) projection methods for the convex feasibility problem of finding a point in \(S_e(\partial h_1,\partial h_2)\), we define, for all integer \(k\ge 1\), the affine function \(\phi _k(z,w):\mathbb {R}^n\times \mathbb {R}^n\rightarrow \mathbb {R}\) as

and its non-positive level set

Thus, by the monotonicity of the subdifferential mappings we have that \(S_e(\partial h_1,\partial h_2) \subseteq H_{\phi _k}\) and we can also verify that the following relations holdFootnote 1

for all integer \(k\ge 1\). Therefore, we conclude that if \(\rho _k=1\) the point \((z_k,w_k)\), calculated by the update rule given by (15)–(16), is the orthogonal projection of \((z_{k-1},w_{k-1})\) onto \(H_{\phi _k}\). Besides, if \(\rho _k\ne 1\) we have that \((z_k,w_k)\) is an under relaxed projection of \((z_{k-1},w_{k-1})\).

As was observed in the paragraph after (16), in order to apply algorithm (12)–(16) it is necessary to calculate the resolvent mappings associated with \(\partial h_1\) and \(\partial h_2\). The next result shows how we can invert operators \(I+\lambda \partial h_1\) and \(I+\lambda \partial h_2\) for any \(\lambda >0\).

Lemma 3.2

Consider \(c\in \mathbb {R}^n\), \(\theta :\mathbb {R}^m\rightarrow (-\infty ,\infty ]\) a proper closed convex function and \(A:\mathbb {R}^m\rightarrow \mathbb {R}^n\) a linear operator such that \(\text {dom}\,\theta ^*\cap \text {range}\,A^*\ne \emptyset \). Let \(z\in \mathbb {R}^n\) and \(\lambda >0\). Then, if \({\tilde{\nu }}\in \mathbb {R}^{m}\) is a solution of problem

it holds that \(c-A{\tilde{\nu }}\in \partial h({\hat{z}})\) where \(h(\cdot )=(\theta ^*\circ -A^*)(\cdot )+\left\langle c,\cdot \right\rangle \) and \({\hat{z}}=z+\lambda (A{\tilde{\nu }}-c)\). Hence, \({\hat{z}}=(I+\lambda \partial h)^{-1}(z)\). Furthermore, the set of optimal solutions of (20) is nonempty.

Proof

If \({\tilde{\nu }}\in \mathbb {R}^{m}\) is a solution of (20), deriving the optimality condition of this minimization problem we have

From the definition of \({\hat{z}}\) and the identity above it follows that

Now, by equation above and Proposition 2.1(a), (c) we have

Since we are assuming that \(\text {dom}\,\theta ^*\cap \text {range}\,A^*\ne \emptyset \), the definition of h and Proposition 2.1(b), (c) yield

Therefore, adding c to both sides of (21) and combining with Eq. (22) we deduce that \(c-A{\tilde{\nu }}\in \partial h({\hat{z}})\). The assertion that \({\hat{z}}=(I+\lambda \partial h)^{-1}(z)\) is a direct consequence of this last inclusion and the definition of \({\hat{z}}\).

Next, we notice that, since \(\partial h\) is maximal monotone, Minty’s theorem [18] asserts that for all \(z\in \mathbb {R}^n\) and \(\lambda >0\) there exist \({\tilde{z}}\), \(w\in \mathbb {R}^n\) such that

Therefore, the inclusion above, together with Eq. (22), implies that there exists \(\overline{\nu }\in \partial \theta ^*(-A^*{\tilde{z}})\) such that \(w=-A\overline{\nu }+c\). This last inclusion yields \(-A^*{\tilde{z}}\in \partial \theta (\overline{\nu })\), from which we deduce that

where the equality above follows from the equality in (23). Finally, replacing w by \(c-A\overline{\nu }\) in the equation above, we obtain

from which follows that \(\overline{\nu }\) is an optimal solution of problem (20). \(\square \)

In what follows we assume that conditions A.2 and A.3 are satisfied. We can now introduce the Projective Method of Multipliers.

Algorithm

(PMM) Let \((z_0,w_0)\in \mathbb {R}^n\times \mathbb {R}^n\), \(\lambda >0\) and \(\overline{\rho }\in [0,1)\) be given. For \(k=1,2,\ldots \).

-

1.

Compute \(v_k\in \mathbb {R}^{m_2}\) as

$$\begin{aligned} v_k\in \arg \min _{v\in \mathbb {R}^{m_2}} g(v)+\left\langle z_{k-1}+\lambda w_{k-1},Cv-d\right\rangle +\frac{\lambda }{2}\left\| Cv-d\right\| ^{2}, \end{aligned}$$(24)and \(u_k\in \mathbb {R}^{m_1}\) as

$$\begin{aligned} u_k\in \arg \min _{u\in \mathbb {R}^{m_1}} f(u)+\left\langle z_{k-1}+\lambda (Cv_k-d),Mu\right\rangle +\frac{\lambda }{2}\left\| Mu\right\| ^{2}. \end{aligned}$$(25) -

2.

If \(\left\| Mu_k+Cv_k-d\right\| +\left\| Mu_k-w_{k-1}\right\| =0\) stop. Otherwise, set

$$\begin{aligned} \gamma _k = \frac{\lambda \left\| Cv_k-d+w_{k-1}\right\| ^2+\lambda \left\langle d-Cv_k-Mu_k,w_{k-1}-Mu_k\right\rangle }{\left\| Mu_k+Cv_k-d\right\| ^2+\lambda ^2\left\| Mu_k-w_{k-1}\right\| ^2}. \end{aligned}$$ -

3.

Choose \(\rho _k\in [1-\overline{\rho },1+\overline{\rho }]\) and set

$$\begin{aligned}&z_{k} = z_{k-1} + \rho _k\gamma _k(Mu_k+Cv_k-d),\\&w_{k} = w_{k-1} - \rho _k\gamma _k\lambda (w_{k-1}-Mu_k). \end{aligned}$$

We observe that condition \(\left\| a_k+b_k\right\| + \left\| x_k-y_k\right\| =0\) in algorithms (12)–(16) implies that \(x_k=y_k\) and \(b_k=-a_k\), and as a consequence we have \((x_k,b_k)\in S_e(\partial h_1,\partial h_2)\). Hence, algorithms (12)–(16) can also be alternatively stated with the stopping criterion \(\left\| a_k+b_k\right\| + \left\| x_k-y_k\right\| =0\), and the following result holds.

Proposition 3.1

The PMM is a special instance of algorithms (12)–(16) (with the stopping criterion \(\left\| a_k+b_k\right\| + \left\| x_k-y_k\right\| =0\)) where

and

for every integer \(k\ge 1\).

Proof

First we notice that (26) implies

for all integer \(k\ge 1\). Next, applying Lemma 3.2 with \(\theta =g\), \(A=C\), \(c=d\), \(z=z_{k-1}+\lambda w_{k-1}\) and \({\tilde{\nu }}=v_k\) we have that \(x_k\) and \(b_k\), defined as in (27), satisfy \(b_k\in \partial h_2(x_k)\) and \(x_k=(I+\lambda \partial h_2)^{-1}(z_{k-1}+\lambda w_{k-1})\). Therefore, the pair \((x_k,b_k)\) satisfies the relations in (12) with \(\lambda _k=\lambda \).

Similarly, applying Lemma 3.2 with \(\theta =f\), \(A=M\), \(c=0\), \(z=x_k-\lambda w_{k-1}\) and \({\tilde{\nu }}=u_k\) we have that the points \(y_k\) and \(a_k\), given in (27), satisfy (13) with \(\mu _k=\lambda \), \(\alpha _k=1\) and \(x_k\) defined in (27).

Moreover, identities in (27) yield

and

Using (29), (30) and the definitions of \(x_k\), \(b_k\), \(y_k\) and \(a_k\) in (27), we can rewrite \(\gamma _k\) in step 2 of the PMM as in (19), which is exactly Eq. (14). Also, the identities in (29) imply that we can restate the stopping criterion in step 2 of the PMM as \(\left\| a_k+b_k\right\| + \left\| x_k-y_k\right\| =0\). Finally, (29) and the update rule in step 3 of the PMM imply that

Thus, the proposition is proven. \(\square \)

From Proposition 3.1 it follows that if for some k the stopping criterion in step 2 of the PMM holds, then

Furthermore, by the definitions of \(x_k\) and \(y_k\) in (27), and the optimality conditions of problems (24) and (25), we have

for all integer \(k\ge 1\). Combining (31) with (32) we may conclude that if the PMM stops in step 2, then \((u_k,v_k,x_k)\) satisfies the KKT conditions, and consequently it is a saddle point of L.

Otherwise, if the PMM generates an infinite sequence, in view of Proposition 3.1, we are able to establish its global convergence using the convergence results presented in [9].

Theorem 3.1

Consider the sequences \(\{(u_k,v_k)\}\), \(\{(z_k,w_k)\}\), \(\{\gamma _k\}\) and \(\{\rho _k\}\) generated by the PMM. Consider also the sequences \(\{x_k\}\), \(\{b_k\}\), \(\{y_k\}\) and \(\{a_k\}\) defined in (27). Then, the following statements hold.

-

(a)

There exist \(z^*\) a solution of the dual problem (5) and \(w^*\in \mathbb {R}^n\) such that \(-w^*\in \partial h_1(z^*)\), \(w^*\in \partial h_2(z^*)\), and \((x_k,b_k)\rightarrow (z^*,w^*)\), \((y_k,-a_k)\rightarrow (z^*,w^*)\) and \((z_k,w_k)\rightarrow (z^*,w^*)\).

-

(b)

\(Mu_k+Cv_k-d\rightarrow 0\) and \(x_k-y_k\rightarrow 0\).

-

(c)

\(\lim \limits _{k\rightarrow \infty }f(u_k)+g(v_k)=p^*\).

Proof

-

(a)

According to Proposition 3.1 the PMM is an instance of [9, Algorithm 2] applied to the subdifferential operators \(\partial h_1\) and \(\partial h_2\), and with generated sequences \(\{(z_k,w_k)\}\), calculated by step 3 of the PMM, and \(\{(x_k,b_k)\}\), \(\{(y_k,a_k)\}\), which are defined in (27). From assumption A.1 and Eq. (28) it follows that the hypotheses of [9, Proposition 3] are satisfied. Thus, invoking this proposition we have that there exists \((z^*,w^*)\in S_e(\partial h_1,\partial h_2)\) such that

$$\begin{aligned} (z_k,w_k)\rightarrow (z^*,w^*),\qquad (x_k,b_k)\rightarrow (z^*,w^*)\qquad \text {and}\qquad (y_k,-a_k)\rightarrow (z^*,w^*). \end{aligned}$$(33)Moreover, since \((z^*,w^*)\in S_e(\partial h_1,\partial h_2)\) we have that \(-w^*\in \partial h_1(z^*)\), \(w^*\in \partial h_2(z^*)\) and \(z^*\) is a solution of the dual problem (5).

-

(b)

By (33) it trivially follows that \(x_k-y_k\rightarrow 0\) and \(a_k+b_k\rightarrow 0\). Hence, using the definition of \(a_k\) and \(b_k\) we deduce that \(Mu_k+Cv_k-d\rightarrow 0\).

-

(c)

Let \((u^*,v^*,z^*)\) be a KKT point of L, which exists from hypothesis A.1, then from the first equality in (6) we have

$$\begin{aligned} L(u^*,v^*,z^*) \le L(u_k,v_k,z^*), \qquad \quad \text {for }\, k=1,2,\ldots . \end{aligned}$$From equation above, the definition of the Lagrangian function in (4) and the KKT conditions (7) it follows that

$$\begin{aligned} f(u^*) + g(v^*) \le f(u_k) + g(v_k) + \left\langle Mu_k+Cv_k-d,z^*\right\rangle . \end{aligned}$$Since \(p^*=f(u^*)+g(v^*)\), combining inequality above with item (b) we deduce that

$$\begin{aligned} p^*\le \liminf _{k\rightarrow \infty } f(u_k) + g(v_k). \end{aligned}$$(34)Now, we observe that the first inclusion in (32), together with Definition 1, implies

$$\begin{aligned} g(v^*) \ge g(v_k) - \left\langle C^*x_k,v^*-v_k\right\rangle . \end{aligned}$$Equivalently, from the second inclusion in (32) and Definition 1 it follows that

$$\begin{aligned} f(u^*) \ge f(u_k) - \left\langle M^*y_k,u^*-u_k\right\rangle . \end{aligned}$$Adding the two equations above we obtain

$$\begin{aligned} \begin{aligned} p^*\ge&\, f(u_k) + g(v_k) - \left\langle C^*x_k,v^*-v_k\right\rangle - \left\langle M^*y_k,u^*-u_ k\right\rangle \\ =&\, f(u_k) + g(v_k) - \left\langle x_k,Cv^*-Cv_k\right\rangle - \left\langle y_k,Mu^*-Mu_k\right\rangle \\ =&\, f(u_k) + g(v_k) - \left\langle x_k-y_k,Cv^*-Cv_k\right\rangle - \left\langle y_k,d-Mu_k-Cv_k\right\rangle , \end{aligned} \end{aligned}$$where the last equality follows from a simple manipulation and the equality in (7). Since \(\{b_k=d-Cv_k\}\) and \(\{y_k\}\) are convergent sequences, therefore bounded sequences, equation above, together with item (b), yields

$$\begin{aligned} p^*\ge \limsup _{k\rightarrow \infty } f(u_k) + g(v_k). \end{aligned}$$Combining inequality above with (34) we conclude the proof.

\(\square \)

4 Complexity results

Our goal in this section is to study the iteration complexity of the PMM for solving problem (1). In order to develop global convergence bounds for the method we will examine how well its iterates satisfy the KKT conditions. Observe that the inclusions in (32) indicate that the quantities \(\left\| Mu_k+Cv_k-d\right\| \) and \(\left\| x_k-y_k\right\| \) can be used to measure the accuracy of an iterate \((u_k,v_k,x_k)\) to a saddle point of the Lagrangian function. More specifically, if we define the primal and dual residuals, associated with \((u_k,v_k,x_k)\), by

then, from the inclusions in (32) and the KKT conditions it follows that when \(\left\| r_k^p\right\| =\left\| r_k^d\right\| =0\), the triplet \((u_k,v_k,x_k)\) is a saddle point of L. Therefore, the size of these residuals indicates how far the iterates are from a saddle point, and it can be viewed as an error measurement of the PMM. It is thus reasonable to seek upper bounds for these quantities for the purpose of investigating the convergence rate of the PMM.

The theorem below estimates the quality of the best iterate among \((u_1,v_1,x_1),\dots ,(u_k,v_k,x_k)\), in terms of the error measurement given by the primal and dual residuals. We refer to these estimates as pointwise complexity bounds for the PMM.

Theorem 4.1

Consider the sequences \(\{(u_k,v_k)\}\), \(\{(z_k,w_k)\}\), \(\{\gamma _k\}\) and \(\{\rho _k\}\) generated by the PMM. Consider also the sequences \(\{x_k\}\), \(\{b_k\}\), \(\{y_k\}\) and \(\{a_k\}\) defined in (27). If \(d_{0}\) is the distance of \((z_0,w_0)\) to the set \(S_{e}\left( \partial h_1,\partial h_2\right) \), then for all \(k=1,2,\dots ,\) we have

and there exists and index \(1\le i\le k\) such that

where \(\tau {:=}\min \left\{ \lambda ,\dfrac{1}{\lambda }\right\} \).

Proof

Inclusions (35) were established in (32). Therefore, what is left is to show the bounds in (36). Since the point \((z_k,w_k)=(z_{k-1},w_{k-1}) + \rho _k((z_{k-1}^*,w_{k-1}^*)-(z_{k-1},w_{k-1}))\), where \((z_{k-1}^*,w_{k-1}^*)\) is the orthogonal projection of \((z_{k-1},w_{k-1})\) onto \(H_{\phi _k}\), and \(S_e(\partial h_1,\partial h_2)\subseteq H_{\phi _k}\), we take an arbitrary \((z^*,w^*)\in S_e(\partial h_1,\partial h_2)\) and observe that for \(k=1,2,\dots \),

where the inequality above follows from a well-known property of the orthogonal projection and the fact that \(\rho _k((z_{k-1}^*,w_{k-1}^*)-(z_{k-1},w_{k-1}))=(z_{k},w_{k})-(z_{k-1},w_{k-1})\). Thus, applying the inequality above recursively, we have

We rearrange terms in the equation above and notice that \(\lambda (w_{j-1}-Mu_j)=x_j-y_j\), which yields

Taking \((z^*,w^*)\) to be the orthogonal projection of \((z_0,w_0)\) onto \(S_e(\partial h_1,\partial h_2)\) in inequality (38), we obtain

Now, for i such that

we use inequality (39) and the fact that \(\rho _j\in [1-\overline{\rho },1+\overline{\rho }]\) to conclude that

Next, we notice that Proposition 3.1, together with the equality in (19), implies

where \(\phi _j\) is the affine function given in (17) associated with \(x_j\), \(y_j\), \(b_j\) and \(a_j\) defined in (27). Moreover, combining Eqs. (17), (27), (29) and (30) we have

Hence, we substitute the relation above into (41) to obtain

Now, we use the following estimate

and the inequality in (42) to deduce that

This last inequality, together with (40), implies

from which the theorem follows. \(\square \)

We now develop alternative complexity bounds for the PMM, which we call ergodic complexity bounds. We define a sequence of ergodic iterates as weighted averages of the iterates and derive a convergence rate for the PMM, which as before, is obtained from estimates of the residuals for the KKT conditions associated with these ergodic sequences.

The idea of considering averages of the iterates in the analysis of the convergence rate for methods for solving problem (1) has been already used in other works. For instance, in [15, 19] it was shown a worst-case \({\mathcal {O}}(1/k)\) convergence rate for the ADMM in the ergodic sense.

The sequences of ergodic means \(\{\overline{u}_k\}\), \(\{\overline{v}_k\}\), \(\{\overline{x}_k\}\) and \(\{\overline{y}_k\}\) associated with \(\{u_k\}\), \(\{v_k\}\), \(\{x_k\}\) and \(\{y_k\}\), respectively, are defined as

Lemma 4.1

For all integer \(k\ge 1\) define

Then, \(\overline{\epsilon }_k^v\ge 0\), \(\overline{\epsilon }_k^u\ge 0\) and

Proof

From inclusions in (35) we have

Thus, the assertion that \(\overline{\epsilon }_k^v\ge 0\) and the first inclusion in (46) are a direct consequence of the first inclusion in the equation above, the definitions of \(\overline{x}_k\), \(\overline{v}_k\) and \(\overline{\epsilon }_k^v\), the fact that \(C^*\) is a linear operator and Proposition 2.1(d).

Similarly, the second inclusion in (46) and the fact that \(\overline{\epsilon }_k^u\ge 0\) follow from the definitions of \(\overline{y}_k\), \(\overline{u}_k\) and \(\overline{\epsilon }_k^u\), linearity of the \(M^*\) operator, the second inclusion in the relation above and Proposition 2.1(d). \(\square \)

According to Lemma 4.1, if \(\left\| \overline{r}^p_k\right\| =\left\| \overline{r}^d_k\right\| =0\) and \(\overline{\epsilon }_k^u=\overline{\epsilon }_k^v=0\), where \(\overline{r}_k^p = M\overline{u}_k + C\overline{v}_k - d\) and \(\overline{r}_k^d = \overline{x}_k - \overline{y}_k\); then it follows that \((\overline{u}_k,\overline{v}_k,\overline{x}_k)\) satisfies the KKT conditions and, consequently, it is a saddle point of the Lagrangian function. Thus, we have computable residuals for the sequence of ergodic means, i.e. the residual vector \((\overline{r}_k^p,\overline{r}^d_k,\overline{\epsilon }_k^u,\overline{\epsilon }_k^v)\), and we can attempt to construct bounds on its size.

For this purpose, we first prove the following technical result. It establishes an estimate for the quantity \(\overline{\epsilon }_k^u+\overline{\epsilon }_k^v\).

Lemma 4.2

Let \(\{u_{k}\}\), \(\{v_k\}\), \(\{z_k\}\), \(\{w_k\}\), \(\{\gamma _k\}\) and \(\{\rho _k\}\) be the sequences generated by the PMM and \(\{x_k\}\), \(\{y_k\}\) be defined in (27). Define also the sequences of ergodic iterates \(\{\overline{u}_k\}\), \(\{\overline{v}_k\}\), \(\{\overline{x}_{k}\}\), \(\{\overline{y}_{k}\}\), \(\{\overline{\epsilon }_{k}^u\}\) and \(\{\overline{\epsilon }_{k}^v\}\) as in (44) and (45). Then, for every integer \(k\ge 1\), we have

Proof

We first show that

By the definitions of \(\phi _j\), \(b_j\) and \(a_j\) we have

We use the definitions of \(\overline{y}_k\), \(\overline{v}_k\), \(\Gamma _k\) and the fact that C is a linear map, to obtain

Now, multiplying (49) by \(\rho _j\gamma _j/\Gamma _k\), adding from \(j=1\) to k and combining with the relation above, we conclude that

Next, we observe that

where the last equality above is a consequence of the definitions of \(\overline{y}_k\) and \(M\overline{u}_k\). We deduce formula (48) combining the equation above with (50).

For an arbitrary \((z,w)\in \mathbb {R}^n\times \mathbb {R}^n\) and all integer \(j\ge 1\) we have

where the second equality follows from the identity \((z_j,w_j)=(z_{j-1},w_{j-1})-\rho _j\gamma _j\nabla \phi _j\), which is a consequence of step 3 in the PMM, (29) and (18). Now, we notice that

where the second and fourth equalities are due to (17), (18) and (27). Substituting the equation above into (51) yields

where formula (19) is used for obtaining the last equality. Rearranging terms in the equation above and adding from \(j=1\) to k, we obtain

Consequently, we have

Now, we use inequality above with \((z,w)=(\overline{y}_k,d-C\overline{v}_k)\), and combine with (48), to obtain

where the second inequality above is due to the definitions of \(\overline{y}_k\), \(\overline{v}_k\), the fact that C is a linear operator and the convexity of \(\left\| \cdot \right\| ^2\). Further, the third inequality in equation above is obtained using the triangle inequality for norms.

Next, we notice that inequality (37) implies

for all integers \(j\ge 0\) and all \((z^*,w^*)\in S_e(\partial h_1,\partial h_2)\). Taking \((z^*,w^*)\) to be the orthogonal projection of \((z_0,w_0)\) onto \(S_e(\partial h_1,\partial h_2)\) in the relation above and using the triangle inequality, we deduce that

Combining (53) with (52) we have

To end the proof we substitute the identity \(y_j-z_{j-1}=\lambda (Mu_j+Cv_j-d)\), which follows from the definition of \(y_j\) in (27), into the above inequality. \(\square \)

The following theorem provides estimates for the quality of the measure of the ergodic means \(\overline{u}_k\), \(\overline{v}_k\), \(\overline{x}_k\) and \(\overline{y}_k\). More specifically, we show that the residuals associated with the ergodic sequences are \({\mathcal {O}}(1/k)\).

Theorem 4.2

Assume the hypotheses of Theorem 4.1. Consider also the sequences \(\{\overline{u}_k\}\), \(\{\overline{v}_k\}\), \(\{\overline{x}_k\}\) and \(\{\overline{y}_k\}\) given in (44), and \(\{\overline{\epsilon }_k^u\}\), \(\{\overline{\epsilon }_k^v\}\) defined in (45). Then, for all integer \(k\ge 1\), we have

and

where \(\vartheta :=\dfrac{1}{\tau ^2(1-\overline{\rho })^2}+1\).

Proof

The inclusions in (54) were proven in Lemma 4.1. To prove the estimates in (55) we first observe that, since

by the update rule in step 3 of the PMM we have

where the last equality above follows from the definitions of \(\Gamma _k\), \(\overline{v}_k\), \(\overline{u}_k\), \(\overline{x}_k\) and \(\overline{y}_k\) in (44), and the fact that M and C are linear operators. Therefore, from (57) we deduce that

and combining the identity above with estimate (53) we obtain

Next, we notice that Eq. (43) and the fact that \(\rho _j\in [1-\overline{\rho },1+\overline{\rho }]\) imply

The inequality above, together with (58), yields

from which the bounds in (55) follow directly.

Now, using the equality in (42) we have

and as a consequence we obtain

Multiplying the inequality above by \(\rho _j\gamma _j2/\tau \), adding from \(j=1\) to k and using (47), we have

Finally, relation above, together with (39) and the fact that \(\rho _j\in [1-\overline{\rho },1+\overline{\rho }]\), yields

The bound in (56) is achieved using this last inequality and (59). \(\square \)

5 Applications

This section describes some simple, preliminary computational testing of the PMM. These experiments are not meant to be exhaustive, but only to provide some preliminary indication of the performance of the PMM.

Specifically, we discuss the specialization of the PMM to two common test problems. First, we consider the total variation model for image denoising (TV denoising). Then, we consider a compressed sensing problem for Magnetic Resonance Imaging, and we exhibit some numerical experiments to illustrate the performance of the PMM when solving these problems.

5.1 TV denoising

Total variation (TV) or ROF model is a common image model developed by Rudin et al. [24] for the problem of removing noise from an image. If \(b\in \mathbb {R}^{m\times n}\) is an observed noisy image, the TV problem for image denoising estimates the unknown original image \(u\in \mathbb {R}^{m\times n}\) by solving the minimization problem

where TV is the total variation norm defined as

Here \(\nabla _1:\mathbb {R}^{m\times n}\rightarrow \mathbb {R}^{m\times n}\) and \(\nabla _2:\mathbb {R}^{m\times n}\rightarrow \mathbb {R}^{m\times n}\) are the discrete forward gradients in the first and second direction, respectively, given by

and we assume standard reflexive boundary conditions

The regularization parameter \(\zeta >0\) controls the tradeoff between fidelity to measurements and the smoothness term given by the total variation.

To solve the TV problem using the PMM we first have to sate it in the form of a linearly constrained minimization problem (1). If we define \(\Omega :=\mathbb {R}^{m\times n}\times \mathbb {R}^{m\times n}\), and the linear map \(\nabla :\mathbb {R}^{m\times n}\rightarrow \Omega \) by

then, taking \(v=\nabla u\in \Omega \), we have that (60) is equivalent to the optimization problem

Now, we solve (62) by applying the PMM with \(f(u)=\dfrac{1}{2}\left\| u-b\right\| _F^2\), \(g(v)=\zeta \left\| v\right\| _1\), \(M=\nabla \), \(C=-I\) and \(d=0\).

Given \({z}_{k-1},\,{w}_{k-1}\in \Omega \), the PMM requires the solution of problems,

and

The optimality condition of problem (63), yields

hence,

Therefore, the solution of problem (63) can be computed explicitly as

where the shrink operator is defined in (3). Deriving the optimality condition for problem (64) we have that

from which it follows that \(u_k\) has to be the solution of the system of linear equations

Thus, the PMM applied to problem (62) produces the iteration:

We used three images to test the PMM in our experiments: the first was “Lena” image of size \(512\times 512\), the second was “Baboon” image of size \(512\times 512\), and the third was “Man” image of size \(768\times 768\), see Fig. 1. All images were contaminated with Gaussian noise using the Matlab function “imnoise” and it was used several values of variance (\(\sigma \)). The PMM was implemented in Matlab code and it was chosen \(\lambda =1\) in all tests, since we have found that choosing this value for \(\lambda \) was effective for all the experiments. The variables \(z_0\) and \(w_0\) were initialized to be zero.

Denoising results. Images a, d and g are contaminated with Gaussian noise, the value of variance (\(\sigma \)) is reported below each image. Images were denoised with \(\zeta =20\) (center) and \(\zeta =50\) (right). The value of \(\rho \) and the number of iterations required to satisfy the stopping criterion, for both the PMM/ADMM, are listed below each image

Residual curves of the PMM and ADMM for the TV denoising problems. (Top) primal error \(\left\| \nabla u_k - v_k\right\| \) versus iteration number k. (Center) dual error \(\left\| x_k-y_k\right\| \) versus iteration number k. (Bottom) error \(\left\| u_k-u^*\right\| \) versus iteration number k (\(u^*\) is the exact solution). (Left) convergence results are for the tested image Lena with \(\sigma =0.06\), \(\zeta =50\) and \(\rho =1.5\). (Right) convergence results are for the tested image Baboon with \(\sigma =0.02\), \(\zeta =20\) and \(\rho =1\)

As a way to provide a reference, we also report the results obtained with ADMM, which is actually equivalent to the Split Bregman (SB) method [10, 14] for TV regularized problems. For a fair comparison, we implemented the generalized ADMM [7] with over and under relaxation factors, see also [6]. For the ADMM, \(\lambda \) was also set to be 1 in all iterations. Moreover, we took as initial guess the contaminated image and the multiplier was initialized to be zero. As in [14] iterations for both method were terminated when condition \(\left\| u_k-u_{k-1}\right\| /\left\| u_k\right\| \le 10^{-3}\) was met; since this stopping criterion is satisfied faster than the stopping condition given by the KKT residuals, while yielding good denoised images.

In Fig. 2 we present some denoising results. It shows the noise contaminated images and the reconstructed images with the PMM. Additionally, in Fig. 3 we report the primal and dual residuals for the KKT optimality conditions for problem (62) for both methods, in some specific tests. The primal and dual residuals for the PMM were defined in Sect. 4. For the ADMM the primal residual is also defined as \(\nabla u_k-v_k\), i.e. it is the residual for the equality constraint at iteration k. The dual residual for the ADMM is defined as the residual for the dual feasibility condition (see Eq. (7) and the comments below). Since the exact solution of the problems are known, we also plotted in Fig. 3 the error \(\left\| u_k-u^*\right\| \) versus iteration, where \(u^*\) is the exact solution. In these experiments both methods were stopped at iteration 50. It can be observed in Fig. 3 that the speed of the PMM and ADMM measured by the residual curves are very similar; however the residuals for the PMM decay faster, and this difference is more evident in the dual residual curve.

In Table 1 we present a more detailed comparison between the methods. It reports the iteration counts and total time, in seconds, required for the PMM and ADMM in specific experiments. In these tests we used \(\rho _k=1\) or \(\rho _k=1.5\) for every integer \(k\ge 1\), in both methods, to observe the acceleration performance. We observe that in the tests the PMM executed fewer iterations than ADMM, and the PMM was generally faster. We also observe that both methods accelerate when \(\rho =1.5\).

However, since it is known that the generalized ADMM performs better in general when \(\rho \) is larger than 1.61 (see for instance [5, 8]), we make a comparison of the two methods taking \(\rho \ge 1.61\). The results of these experiments are reported in Table 2. It can be seen that for these values of \(\rho \), although the performances of both methods are very similar in processing time and number of iterations, the PMM continues to be faster than the ADMM.

The operation of highest computational cost within each iteration of the PMM, and ADMM, for the TV problem, consists in solving problem (66). In our tests we solved this step for both algorithms using the Conjugate Gradient (CG) method with tolerance \(10^{-5}\). This strategy consistently yielded convergence in fewer iterations when using the PMM. Table 3 presents the total number of iteration executed by the CG method in each algorithm for some specific experiments. In the tests presented both methods were stopped at iteration 20.

However, the authors of [14] observed that the ADMM (SB method) attained optimal efficiency by executing, at each iteration of the algorithm, just a single iteration of an iterative method to solve problem (66). Motivated by this, we test the PMM performing one iteration of the CG method at each step of the algorithm. As Fig. 4 below shows, in this case, the PMM also yields good denoised images.

Denoising with one iteration of CG method per iteration. (Left) noisy images, the value of variance is reported below each image. (Center) images denoised with PMM. (Right) images denoised with ADMM. The number of iterations, the total time in seconds (in parenthesis); as well as the used values of \(\zeta \) and \(\rho \) are displayed below each image

5.2 Compressed sensing

In many areas of applied mathematics and computer science it is often desirable to reconstruct a signal from small amount of data. Compressed sensing is a signal processing technique that allow the reconstruction of signals and images from small number of measurements, provided that they have a sparse representation. This technique has gained considerable attention in the signal processing community since the works of Candès et al. [3], and of Donoho [4], and it has had a significant impact in several applications, for example in imaging, video and medical imaging.

For testing the PMM we consider a particular application of compressed sensing in Magnetic Resonance Imaging (MRI), which is an essential medical imaging tool. MRI is based on the reconstruction of an image from a subset of measurements in the Fourier domain. This imaging problem can be modeled by the optimization problem

where TV is the total variation norm (61), F is the Discrete Fourier Transform, R is a diagonal matrix, b is the known Fourier data and u is the unknown image that we wish to reconstruct.

(Left) images used in compressed sensing tests. (Right) the images reconstructed with the PMM. The Shepp–Logan phantom of size \(256\times 256\) (top) was recovered with 25% sampling and \(\rho =1.5\). The CS-Phantom of size \(512\times 512\) (bottom) was recovered with 50% sampling and \(\rho =1.5\)

Residual curves of the PMM and ADMM for the compressed sensing problems. (Top) primal error \(\left\| \nabla u_k - v_k\right\| \) versus iteration number. (Center) dual error \(\left\| x_k - y_k\right\| \) versus iteration number. (Bottom) error \(\left\| u_k-u^*\right\| \) versus iteration number (\(u^*\) is the exact solution). (Left) convergence results are for the Shepp–Logan phantom of size \(256\times 256\) with \(25\%\) sampling and \(\rho =1.5\). (Right) convergence results are for the CS-Phantom of size \(512\times 512\) with \(50\%\) sampling and \(\rho =1.5\)

The matrix R has a 1 along the diagonal at entries corresponding to the Fourier coefficients that were measured, and 0 for the unknown coefficients. The second term in (70) induces the Fourier transform of the reconstructed image to be close to the measured data, while the TV term in the minimization enforces “smoothness” of the image. The parameter \(\zeta >0\) provides a tradeoff between the fidelity term and the smoothness term.

Problem (70) can be posed as a linearly constrained minimization problem (1) in much the same manner as was done for the TV problem in the previous subsection. Therefore, to apply the PMM to (70) we take \(f(u) = \dfrac{\zeta }{2}\left\| RFu-b\right\| ^2_F\), \(g(v)=\left\| v\right\| _1\), \(M = \nabla \), \(C=-I\) and \(d=0\). The resulting minimization problems are

and

Problem (71) can be solved explicitly using the shrink operator (3). Indeed, by the optimality conditions for this problem we have

The optimality condition for the minimization problem (72) is

or equivalently

Thus, we obtain \(u_k\), the solution of the system above, by

We tested the PMM on two synthetic phantom. The first is the digital Shepp–Logan phantom, which was created with the Matlab function “phantom”. The second experiment was done with a CS-Phantom, which was taken from the mathworks web site. Experiments were conducted with different sizes of the phantoms and measuring at random \(25\%\), \(50\%\) or \(75\%\) of the Fourier coefficients. As stopping condition for these problems, it was used the criterion given by the residuals for the KKT conditions. More specifically, the PMM and ADMM were stopped when the primal and dual residuals, associated with each method, were less than \(10^{-6}(m*n)\), where m and n are the dimensions of the image. For all the experiments we used \(\zeta =500\) and \(\lambda =1\), since we found that these choices were effective for both methods.

Figure 5 shows the test images and their reconstructions using the PMM. The performance of the PMM and ADMM can be seen in Fig. 6, which reports the residual curves for both methods, as were the error \(\left\| u_k-u^*\right\| \), where \(u^*\) is the exact solution. Observe that the primal curves for both methods are very similar along all iterations. However, the decay for the dual residual curve for the PMM is much faster than the dual residual for the ADMM.

Table 4 presents some numerical results for the compressed sensing problem. It reports the iteration count and total time required for the PMM and ADMM in the experiments. In these tests, it was used \(\rho \ge 1.61\). We observe that the PMM solves all the problems faster than ADMM.

5.3 The dual residual

It was observed in our numerical experiments that, despite the overall rate of decrease for the PMM and the ADMM are very similar, the dual variable in the PMM sequence is smaller than the ADMM dual variable. This could be an advantage for the PMM, and motivates us to study the performance of the method using a stopping criterion based on the dual residual.

In this subsection we present some preliminary computational results considering a termination condition that only uses information from the dual residual sequences. We use as test problems the TV (60) and CS (70) problems discussed in the previous subsections. The algorithms were run until condition

was satisfied, where \(d_k\) is the corresponding dual residual of the sequence at iteration k, and m and n are the dimensions of the images. In all the experiments we fix \(\lambda =1\) and use \(\rho \ge 1.61\).

Table 5 displays the performance of the PMM and ADMM using criterion (73). The columns mean: Problem, the considered problem (TV or CS with the corresponding image, \(\zeta \), \(\rho \) and noise or sampling); \(\#\) It, iterations needed to satisfy (73); time (s), CPU time (s); and relt, the relative error \(\left\| u_k-u^*\right\| /\left\| u^*\right\| \) at the last iterate, which can be computed since the exact solution \(u^*\) is known.

We observe that the PMM is faster than the ADMM in all the problems, and in 12 instances it is at least 2 times faster than the ADMM. We also observe that, although the high speed exhibited by the PMM, the quality of the solution found by it is very similar to that found by the ADMM, which can be seen by comparing the relt columns of Table 5. Additionally, we notice that in three experiments the ADMM stops because it reaches the maximum number of allowed iterations (1000)Footnote 2, therefore it does not solve the instance by the required accuracy.

Figures 7 and 8 show the image reconstruction results for some tests. It can be observed in Fig. 7 that for the TV problem both methods recover good images using (73). This is not surprising since the stopping criterion used in Sect. 5.1 is more flexible than (73), and the restoration results were satisfactory (see Sect. 5.1). It turns out that for the CS problem, although the termination condition considered in Sect. 5.2 is more restrictive than (73), the PMM and ADMM can also reconstruct images with good quality using this last stopping criterion, as can be seen in Fig. 8.

Notes

Actually, it can be shown that \(\gamma _k\ge c_k\), where \(c_k>0\) (see [9, Proposition 3]).

Marked with an asterisk (*).

References

Brezis, H.: Functional Analysis. Sobolev Spaces and Partial Differential Equations. Universitext. Springer, New York (2011)

Burachik, R.S., Sagastizábal, C.A., Svaiter, B.F., \(\epsilon \)-enlargements of maximal monotone operators: theory and applications. In: Reformulation: nonsmooth, piecewise smooth, semismooth and smoothing methods (Lausanne, 1997), vol. 22 of Appl. Optim, pp. 25–43. Kluwer Academic Publishers, Dordrecht (1999)

Candès, E.J., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52(2), 489–509 (2006)

Donoho, D.L.: Compressed sensing. IEEE Trans. Inf. Theory 52(4), 1289–1306 (2006)

Eckstein, J.: Parallel alternating direction multiplier decomposition of convex programs. J. Optim. Theory Appl. 80(1), 39–62 (1994)

Eckstein, J.: Augmented Lagrangian and alternating direction methods for convex optimization: a tutorial and some illustrative computational results. RUTCOR Research Reports 32 (2012)

Eckstein, J., Bertsekas, D.P.: On the Douglas-Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. Ser. A 55(3), 293–318 (1992)

Eckstein, J., Ferris, M.C.: Operator-splitting methods for monotone affine variational inequalities, with a parallel application to optimal control. INFORMS J. Comput. 10(2), 218–235 (1998)

Eckstein, J., Svaiter, B.F.: A family of projective splitting methods for the sum of two maximal monotone operators. Math. Program. Ser. B 111(1–2), 173–199 (2008)

Esser, E.: Applications of lagrangian-based alternating direction methods and connections to split bregman. CAM Rep. 9, 31 (2009)

Gabay, D.: Chapter ix applications of the method of multipliers to variational inequalities. Studies Math. Appl. 15, 299–331 (1983)

Gabay, D., Mercier, B.: A dual algorithm for the solution of nonlinear variational problems via finite element approximation. Comput. Math. Appl. 2(1), 17–40 (1976)

Glowinski, R., Marrocco, A.: Sur l’approximation, par éléments finis d’ordre un, et la résolution, par pénalisation-dualité, d’une classe de problèmes de Dirichlet non linéaires. Rev. Française Automat. Informat. Recherche Opérationnelle Sér. Rouge Anal. Numér. 9(R–2), 41–76 (1975)

Goldstein, T., Osher, S.: The split Bregman method for \(L1\)-regularized problems. SIAM J. Imaging Sci. 2(2), 323–343 (2009)

He, B., Yuan, X.: On the \(O(1/n)\) convergence rate of the Douglas-Rachford alternating direction method. SIAM J. Numer. Anal. 50(2), 700–709 (2012)

Hiriart–Urruty, J.B., Lemaréchal, C.: Convex analysis and minimization algorithms II: advanced theory and bundle methods. In: Grundlehren der Mathematischen Wissenschaften, vol. 306. Springer, Berlin (1993)

Lions, P.-L., Mercier, B.: Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 16(6), 964–979 (1979)

Minty, G.J.: Monotone (nonlinear) operators in Hilbert space. Duke Math. J. 29, 341–346 (1962)

Monteiro, R.D.C., Svaiter, B.F.: Iteration-complexity of block-decomposition algorithms and the alternating direction method of multipliers. SIAM J. Optim. 23(1), 475–507 (2013)

Moreau, J.-J.: Proximité et dualité dans un espace hilbertien. Bull. Soc. Math. France 93, 273–299 (1965)

Passty, G.B.: Ergodic convergence to a zero of the sum of monotone operators in Hilbert space. J. Math. Anal. Appl. 72(2), 383–390 (1979)

Rockafellar, R.T.: Convex Analysis. Princeton Mathematical Series, No. 28. Princeton University Press, Princeton, NJ (1970)

Rockafellar, R.T.: On the maximal monotonicity of subdifferential mappings. Pac. J. Math. 33, 209–216 (1970)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms (Experimental mathematics: computational issues in nonlinear science (Los Alamos, NM, 1991)). Phys. D 60(1–4), 259–268 (1992)

Tseng, P.: Applications of a splitting algorithm to decomposition in convex programming and variational inequalities. SIAM J. Control Optim. 29(1), 119–138 (1991)

Acknowledgements

This work is part of the author’s Ph.D. thesis, written under the supervision of Benar Fux Svaiter at IMPA, and supported by CAPES and FAPERJ. The author would like to thank Carlos Antonio Galeano Ríos and Mauricio Romero Sicre for the many helpful suggestions on this paper, which have improved the exposition considerably.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Pentón Machado, M. Projective method of multipliers for linearly constrained convex minimization. Comput Optim Appl 73, 237–273 (2019). https://doi.org/10.1007/s10589-019-00065-1

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-019-00065-1