Abstract

The advent of the cloud computing paradigm has allowed organizations to move, compute, and host their applications in the cloud, enabling seamless access to a wide range of services with minimal effort. An efficient and dynamic task scheduler is required to handle concurrent user requests for cloud services across heterogeneous and diverse resources. Improper scheduling can lead to under- or over-utilization of resources, which wastes cloud resources or degrades service performance. Nature-inspired optimization techniques have proven effective at solving scheduling problems. This paper presents a review of nature-inspired optimization techniques for scheduling tasks in cloud computing, together with a novel classification taxonomy and a comparative review of these techniques. The taxonomy categorizes nature-inspired scheduling techniques by scheduling algorithm, nature of the scheduling problem, type of tasks, primary objective of scheduling, task-resource mapping scheme, scheduling constraint, and testing environment. Guidelines for future research are also provided, which should benefit researchers and practitioners and open the door for newcomers to the field of cloud task scheduling.

1 Introduction

The ever-expanding scope of the transnational corporate atmosphere has prompted the establishment of sophisticated technology to handle it [1]. One such innovation that can expedite software deployment processes and save time and effort is cloud computing [2]. Cloud computing is the practice of accessing and conserving information and applications via the internet rather than locally stored systems at the consumer’s disposal [3, 4]. It uses distant servers connected to the internet to store, administer, and provide online access to information. Organizations must establish sophisticated cloud management to manage and govern the utilization of cloud resources while assuring appropriate, reliable, and adaptable hardware and software that optimizes costs. The early phases of preparing should include developing the cloud deployment approach to the management of clouds [5, 6]. Due to the low probability of infrastructure breakdowns in the cloud, servers are consistently and widely accessible. Cloud computing allows numerous individuals to utilize various applications efficiently and at a lower cost due to the collaboration of shared infrastructures [7].

In cloud computing, a scheduler (broker) is designed to identify ways of allocating a collection of scarce available resources to incoming applications so as to optimize scheduling objectives such as makespan, computational cost, monetary cost, reliability, availability, resource utilization, response time, and energy consumption [8, 9]. The scheduler devises strategies for assigning tasks to constrained resources to optimize scheduling outcomes [10]. The scheduling algorithm decides which task will be carried out on which resource [11]. This approach facilitates access to the shared resource pool while providing consumers with a QoS assurance. The primary objective of a novel scheduling approach is to determine the best combination of resources for an incoming task so that a variety of QoS factors (cost, makespan, flexibility, trustworthiness, task rejection ratio, resource efficiency, energy consumption, etc.) are optimized, constraints such as deadline and budget are met, and load imbalance is avoided. User satisfaction hinges on achieving the intended efficiency, which is the primary objective of cloud computing services [12]. Today, a wide range of applications employ the scheduling principle, including power system management, scheduling of multi-modal content on the web, and electronic circuit board production [13].

Task scheduling in cloud computing is a complex problem that requires tackling a number of efficiency, resource utilization, and workload management obstacles while also considering heterogeneous resources, task dependencies, QoS specifications, guarantees, and confidentiality issues. The majority of task scheduling problems are either NP-complete or NP-hard. As a result, finding an optimal solution takes far longer than finding an approximate one [14]. No polynomial-time algorithms are known for these problems. Taillard suggested a scenario in which 0.02 to 1.01 percent of candidate solutions require the total amount of time necessary to reach the best solution [15]. This illustration demonstrates how challenging it can be to identify the optimum solution to a complex problem. Most researchers have therefore been motivated to pursue scheduling algorithms that find a quick yet effective solution. The cloud service provider must offer an effective scheduling procedure that considers a number of factors, including cost, time, and the SLA terms agreed with users.

1.1 Motivation of the research

The two primary scheduling techniques used by modern computer systems are exhaustive algorithms and Deterministic Algorithms (DAs) [16]. In terms of efficiency, DAs are substantially better than both conventional (exhaustive) and heuristic methods for scheduling problems. However, DAs have two key drawbacks: first, they were designed to handle only some data distributions, and second, only some DAs can handle complex scheduling problems. Meta-heuristic algorithms, also known as approximation algorithms, use iterative techniques to obtain near-optimal solutions faster than DAs and exhaustive algorithms [16,17,18,19]. Meta-heuristic algorithms are classified into nature-inspired and non-nature-inspired [20]. Numerous studies show that nature-inspired optimization algorithms produce better scheduling outcomes than conventional and heuristic ones [21, 22]. While numerous nature-inspired optimization scheduling techniques have been successfully used in various computing contexts, including grid and cluster computing, they have yet to be specifically designed for the cloud. As a result, nature-inspired optimization algorithms may initially appear unsuitable for scheduling cloud tasks. Motivated by this misunderstanding, this study not only offers a systematic overview of scheduling methods used in the cloud environment from a nature-inspired perspective but also establishes a connection between conventional/heuristic scheduling methods and nature-inspired meta-heuristic ones, so that cloud researchers who still favor conventional/heuristic scheduling can transition swiftly to scheduling based on nature-inspired optimization. Conventional and heuristic algorithms are discussed before nature-inspired optimization algorithms to make the distinction between them clearer.

We are also inspired by peer surveys in the earlier literature. Task scheduling is a crucial component of cloud computing, which aims to increase VM utilization while lowering data center operating costs, leading to appreciable improvements in QoS metrics and overall performance. With an effective task scheduling technique, a large number of user requests can be processed and assigned to suitable VMs, which helps to meet the needs of cloud users and service providers more effectively. We carefully reviewed numerous nature-inspired scheduling approaches in the literature and found that most existing surveys do not cover all aspects, such as QoS-based comparative analysis, state of the art, taxonomy, graphical representations, open issues, and comparison of simulation tools for task scheduling, as shown in Table 1. A thorough assessment of task scheduling using nature-inspired optimization is therefore needed to keep up with the field's continuing, expanding research.

1.2 Contributions of the present study

Although nature-inspired scheduling methods considerably impact cloud services, essential methodologies and backgrounds of this sector still need to be thoroughly and methodically evaluated. As a result, this study aims to compare the prior strategies and assess and critique current cloud scheduling systems in light of nature-inspired algorithms. A unique classification scheme (taxonomy) and a thorough analysis of contemporary nature-inspired scheduling approaches in cloud computing are provided. The effectiveness of existing techniques is evaluated based on qualitative QoS parameter-based criteria. The comparison of numerous simulation tools frequently used in the cloud is also presented. Through extensive investigation and discussion, results related to the pertinent aspects are validated. Finally, research issues are compiled to create a research roadmap that could include potential future study areas and existing trends.

2 Categorization of cloud task scheduling schemes

Cloud task scheduling schemes are divided into three groups: traditional scheduling, heuristic scheduling, and meta-heuristic scheduling. This section compares the traditional and heuristic strategies and assesses cloud scheduling in light of nature-inspired optimization algorithms.

2.1 Traditional scheduling

Scheduling can be defined as assigning tasks to a set of provided machines under the constraints of objective function optimization. The scheduling challenge is called a single-processor scheduling issue when only one machine exists. The scheduling issue is seen as a multiprocessor scheduling when more than one machine is involved. Based on resource consumption costs, makespan, load balancing, and QoS and its variants, scheduling algorithm performance can be measured. In the context of traditional scheduling, a hierarchy of difficulties was established through numerous investigations [28, 29] concerning the characteristics of tasks (i.e., weight, due date, release date, and processing time), machines (i.e., single or multiple), as well as many other details, such as online vs. offline, batch vs. non-batch, precedence vs. non-precedence, sequence-dependent vs. sequence-independent. Scheduling problems are typically distinguished from one another and described using these limitations. All scheduling issues discussed in [28] have been explained using a three-fold notation. In this approach, a single or parallel machine designates the machine type, sequence-dependent or sequence-independent specifies the processing features and limitations, and makespan indicates the measure's value. According to the length of the scheduling time, the schedule was divided into four categories [29]: short-range, middle-range, long-range, and reactive control/scheduling.
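To make the objective concrete, the following minimal sketch computes per-machine load and makespan for a given task-to-machine assignment. The matrix `etc` (expected time to compute), the function names, and the four-task instance are illustrative assumptions, not taken from the surveyed works.

```python
def machine_loads(etc, assignment, n_machines):
    """Total busy time per machine; etc[t][m] is the run time of task t on machine m."""
    loads = [0.0] * n_machines
    for task, machine in enumerate(assignment):
        loads[machine] += etc[task][machine]
    return loads


def makespan(etc, assignment, n_machines):
    """Makespan is the finishing time of the most heavily loaded machine."""
    return max(machine_loads(etc, assignment, n_machines))


# Hypothetical instance: 4 independent tasks, 2 machines.
etc = [[3.0, 5.0], [2.0, 4.0], [6.0, 3.0], [4.0, 4.0]]
assignment = [0, 0, 1, 1]  # task index -> machine index

print(machine_loads(etc, assignment, 2))  # [5.0, 7.0]
print(makespan(etc, assignment, 2))       # 7.0
```

The gap between the two loads is a simple indicator of load imbalance, one of the other performance measures mentioned above.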

2.2 Heuristics scheduling

Heuristic algorithms vary in performance depending on the problem they are applied to; they work well in some situations but struggle in others. Heuristics typically deliver a good solution in a reasonable amount of time for a particular type of problem, but they fall short on challenging optimization problems. Numerous heuristic techniques, such as Min-Min [30], Max-Min [30], First Come First Serve (FCFS) [31], Shortest Job First (SJF) [32], Round Robin (RR) [33], Heterogeneous Earliest Finish Time (HEFT) [34], Minimum Completion Time (MCT) [35], and Sufferage [36], have been developed in cloud environments to address scheduling issues relating to workflows and independent tasks/applications.

In the Min-Min heuristic, the task with the smallest minimum completion time is chosen from among all unscheduled tasks and mapped to the virtual machine that completes it earliest. The procedure is repeated until all tasks are scheduled. Because large tasks must wait until smaller ones have been placed, their completion times grow and the overall makespan can lengthen. This method handles small tasks efficiently and increases overall system throughput; however, heavy workloads may cause a starvation problem. Chen et al. [37] and Amalarethinam and Kavitha [38] used Min-Min in their proposed work. A set of issues, such as slow task execution, difficulty meeting deadlines, and priority problems, arise during the execution of the Min-Min algorithm. The Max-Min algorithm can cause resource over-utilization and under-utilization [39, 40]. Non-continuous monitoring of nodes, load imbalance, cost and time overheads due to unmanaged communication and storage, and static task scheduling problems arise when implementing Heterogeneous Earliest Finish Time (HEFT) algorithms [41, 42]. SJF cannot solve the problems of starvation and load imbalance [43,44,45]. Round Robin balances load poorly [46, 47]. Sufferage performs well in many situations; however, it has drawbacks if numerous tasks have identical sufferage values [48]. In this scenario, the first arriving task is chosen and run without considering other tasks, which may lead to starvation.
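The Min-Min procedure can be sketched as follows. This is a textbook-style illustration for independent tasks under the assumption of a known `etc` matrix (expected time to compute); the function name and the sample instance are hypothetical and do not reproduce the implementations in [37] or [38].

```python
def min_min(etc):
    """Min-Min for independent tasks; etc[t][m] is the run time of task t on machine m.

    Returns (assignment, makespan), where assignment[t] is the chosen machine.
    """
    n_tasks, n_machines = len(etc), len(etc[0])
    ready = [0.0] * n_machines           # time at which each machine becomes free
    assignment = [None] * n_tasks
    unscheduled = set(range(n_tasks))

    while unscheduled:
        best = None                       # (completion_time, task, machine)
        # The minimum over all (task, machine) pairs equals the smallest of the
        # per-task minimum completion times, which is the Min-Min selection rule.
        for task in sorted(unscheduled):
            for machine in range(n_machines):
                completion = ready[machine] + etc[task][machine]
                if best is None or completion < best[0]:
                    best = (completion, task, machine)
        completion, task, machine = best
        assignment[task] = machine
        ready[machine] = completion
        unscheduled.remove(task)

    return assignment, max(ready)


# Hypothetical instance: 4 tasks, 2 virtual machines.
etc = [[3.0, 5.0], [2.0, 4.0], [6.0, 3.0], [4.0, 4.0]]
print(min_min(etc))  # ([0, 0, 1, 1], 7.0)
```

Max-Min differs only in the outer selection: among the per-task minimum completion times it picks the largest rather than the smallest, so long tasks are placed first.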

2.3 Meta-heuristic scheduling

Due to their efficiency in resolving complex and large-scale computational problems, meta-heuristic algorithms have grown significantly in popularity. Task scheduling is addressed with heuristic and meta-heuristic methods to obtain optimal or near-optimal solutions because traditional methods frequently fail to solve such problems to optimality. Heuristic solutions frequently become trapped in local minima, and meta-heuristic algorithms are the most effective way to escape this condition, as mentioned in [24, 26]. Meta-heuristic algorithms efficiently explore the search space to find a sub-optimal or near-optimal solution to NP-complete problems. They do not depend on the problem being solved, and they typically use approximation rather than determinism. Because of this independence from the problem to be addressed, meta-heuristic algorithms are valuable for tackling problems in various domains with highly acceptable performance. Researchers have systematically evaluated the application of meta-heuristic algorithms to scheduling in cloud and grid settings [49,50,51,52]. Meta-heuristic techniques are frequently employed as very efficient solutions to NP-hard optimization problems. Meta-heuristic algorithms are classified into two types [53]:

• Nature-inspired
• Non-nature-inspired

3 Nature-inspired meta-heuristics scheduling

Nature-inspired algorithms are optimization methods that handle challenging optimization problems by emulating the behavior of natural systems [54]. These algorithms effectively locate optimal solutions for multi-dimensional and multi-modal problems. This paper conducts a systematic review of nature-inspired optimization techniques for task scheduling in cloud computing.

4 Taxonomy of nature-inspired task scheduling optimization algorithm in cloud computing

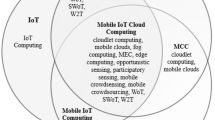

A novel, rigorous taxonomy is presented in Fig. 1 using a number of principal methodologies used in the literature to more thoroughly and clearly comprehend the nature-inspired task scheduling approaches in cloud computing. This taxonomy divides the methods into seven main divisions based on the type of scheduling algorithm (scheduler), nature of the scheduling problem, nature of the task, primary objectives of scheduling, task-resource mapping schemes, scheduling constraints, and testing environment.

4.1 Scheduling algorithm

Nature-inspired scheduling algorithms can be divided into four major categories [55]:

• Evolutionary-based
• Swarm-based
• Physics-based
• Hybrid approaches

This study discusses various algorithms from these four categories of nature-inspired optimization algorithms for task scheduling in cloud computing. Figure 2 summarizes all the nature-inspired optimization algorithms discussed in this section.

4.1.1 Evolutionary-based algorithm

The principles of natural evolution serve as the foundation for evolutionary approaches [56]. The search procedure starts from an initial population that evolves over several generations. A key strength of these approaches is that the best individuals are repeatedly combined to create the next generation, so the population can be improved over several generations. Genetic Algorithm (GA) [57], Memetic Algorithm (MA) [58], Evolution Strategy (ES) [59], Probability-Based Incremental Learning (PBIL) [60], Genetic Programming (GP) [61], Lion Optimization Algorithm (LOA) [62], Imperialist Competitive Algorithm (ICA) [63], Sunflower Optimization Algorithm (SFO) [64], and Biogeography-Based Optimizer (BBO) [65] are some of the well-known algorithms. In this section, some evolutionary nature-inspired algorithms that are used in task scheduling of cloud computing are briefly summarized.

4.1.1.1 Genetic algorithm

The Darwinian notion of “survival of the fittest” served as the foundation for the development of the genetic algorithm (GA), which is bio-inspired in that fitness is increased through the process of evolution through reproduction [66]. A Genetic Algorithm analyzes new regions of the solution space while utilizing the best solutions from completed searches. Chromosomes, composed of a collection of components called genes, can represent any solution to a particular problem. Crossover or mutation operators produce new offspring chromosomes once the population has been initialized with randomly produced solutions [67]. The generation of the children is repeated until enough of the best offspring are produced to find the best outcome. The scheduling problem response can be expressed using a variety of representation strategies. Some researchers [68] have encoded the solutions using fixed-length binary strings. A chromosomal matrix is utilized to depict the mapping of tasks on resources in direct representation [69,70,71,72,73]. Paper [74] employed the min-min heuristic and minimum execution time to create the initial population. When populating the population, the order of the tasks was also considered [74,75,76], and genetic operations were then used to solve the workflow scheduling issue. In [76], Round Robin and best-fit techniques identify potential solutions for allocating jobs to resources. According to [77], crossover and mutation operations are carried out based on the level-wise representation after tasks have been organized according to the order of their workflow level. In reference [78], the authors used crossover and mutation operators. The chromosomes are depicted as 2D strings in [79] based on the timeframe and financial constraints. HEFT is paired with a genetic algorithm [80] to create a scheduling plan. 
The authors of [81] suggested two chromosomes: an ordering chromosome that specifies the execution order following the scientific workflow representation and an allocation chromosome that contains the assignment of tasks to nodes. A task scheduling technique based on the Shadow Price-guided Genetic Algorithm (SGA) was presented in [82]. The DVFS technique is applied to the grid environment to reduce energy consumption and minimize makespan [83]. The authors of [84] describe a multi-agent evolutionary method for distributing load among virtual machines. The method used in [85] provides a VM allocation methodology with appropriate load balancing and system resource utilization. The migration cost is optimized through elitism selection and tree structure encoding.
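As an illustration of the direct representation, the sketch below evolves chromosomes in which gene i holds the VM index assigned to task i. It is a generic GA under stated assumptions (tournament selection of size 3, one-point crossover, per-gene mutation, single-individual elitism, makespan as fitness); all names, parameter values, and the sample instance are hypothetical and do not correspond to any specific cited paper.

```python
import random


def makespan(etc, chrom):
    """Finishing time of the busiest VM; etc[t][v] is the run time of task t on VM v."""
    loads = [0.0] * len(etc[0])
    for task, vm in enumerate(chrom):
        loads[vm] += etc[task][vm]
    return max(loads)


def ga_schedule(etc, pop_size=30, generations=60, p_mut=0.1, seed=0):
    """Returns (best chromosome, best makespan recorded per generation)."""
    rng = random.Random(seed)
    n_tasks, n_vms = len(etc), len(etc[0])
    fitness = lambda c: makespan(etc, c)
    # Random initial population: one VM index per task.
    pop = [[rng.randrange(n_vms) for _ in range(n_tasks)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=fitness)
        history.append(fitness(pop[0]))
        next_pop = [pop[0][:]]                     # elitism: keep the best unchanged
        while len(next_pop) < pop_size:
            p1 = min(rng.sample(pop, 3), key=fitness)   # tournament selection
            p2 = min(rng.sample(pop, 3), key=fitness)
            cut = rng.randrange(1, n_tasks)             # one-point crossover
            child = p1[:cut] + p2[cut:]
            for i in range(n_tasks):                    # per-gene mutation
                if rng.random() < p_mut:
                    child[i] = rng.randrange(n_vms)
            next_pop.append(child)
        pop = next_pop
    best = min(pop, key=fitness)
    history.append(fitness(best))
    return best, history


# Hypothetical instance: 6 tasks, 3 VMs.
etc = [[4.0, 6.0, 9.0], [3.0, 5.0, 2.0], [8.0, 4.0, 6.0],
       [5.0, 7.0, 3.0], [2.0, 2.0, 4.0], [6.0, 3.0, 5.0]]
best, history = ga_schedule(etc)
print(best, history[-1])
```

Because the elite individual is copied unchanged, the recorded best makespan never increases from one generation to the next. Seeding the initial population with heuristic schedules, as described for [74], would replace the purely random initialization used here.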

4.1.1.2 Memetic algorithm

The Memetic Algorithm (MA) [86] is based on Dawkins' notion of the meme. Memes are concepts, like rumors and stories, that spread across the community of meme carriers. MAs use a specific local search procedure to improve individual fitness. Many real-world problems have been addressed using MA. In [87], the authors used a memetic method to solve a hybrid flow shop scheduling problem involving multiprocessor activities. Researchers have employed MA to address the traveling salesperson problem and the quadratic assignment problem [88]. The memetic approach has been used with hill climbing, tabu search, and simulated annealing for task scheduling [89, 90]. MA has been used to find the best schedule for a workflow application running on a multiprocessor system [91]. The authors of [92] used this algorithm to shorten the schedule. A global search optimization based on the Particle Swarm Optimization (PSO) technique produces candidate solutions.
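The local search step that distinguishes a memetic algorithm from a plain evolutionary one can be sketched as a hill climber applied to each candidate schedule. The version below is an assumption-laden illustration: it uses a single-task reassignment neighborhood and makespan as the cost, and the names and sample instance are hypothetical.

```python
def makespan(etc, chrom):
    """Finishing time of the busiest VM; etc[t][v] is the run time of task t on VM v."""
    loads = [0.0] * len(etc[0])
    for task, vm in enumerate(chrom):
        loads[vm] += etc[task][vm]
    return max(loads)


def hill_climb(etc, chrom):
    """Move one task at a time to another VM while the makespan strictly improves."""
    current = list(chrom)                 # work on a copy, leave the input untouched
    current_cost = makespan(etc, current)
    improved = True
    while improved:
        improved = False
        for task in range(len(current)):
            keep_vm = current[task]       # best VM found so far for this task
            for vm in range(len(etc[0])):
                if vm == keep_vm:
                    continue
                current[task] = vm
                cost = makespan(etc, current)
                if cost < current_cost:
                    current_cost, keep_vm, improved = cost, vm, True
            current[task] = keep_vm       # restore or commit the best move
    return current, current_cost


# Hypothetical instance: refine a poor schedule that puts every task on VM 0.
etc = [[3.0, 5.0], [2.0, 4.0], [6.0, 3.0], [4.0, 4.0]]
refined, cost = hill_climb(etc, [0, 0, 0, 0])
print(refined, cost)
```

In a memetic scheduler this refinement would typically be applied to offspring after crossover and mutation; tabu search or simulated annealing, as mentioned for [89, 90], could be substituted for the hill climber.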

4.1.1.3 Imperialist competitive algorithm

The mathematical description of imperialist competition, which improves optimization outcomes, inspired the Imperialist Competitive Algorithm (ICA) [93]. Imperialism refers to using and controlling another nation's economic, political, and human resources by direct legislation. Numerous academics have used ICA-based optimization techniques to address the scheduling problem. An ICA-based technique is used for the flow shop scheduling problem [94]. The same work is extended in [95] to reduce the maximum completion time by reducing waiting time. In [96], a bi-objective parallel machine scheduling problem with an emphasis on minimizing mean task completion time was addressed. A power-aware load balancing technique based on ICA reduces energy consumption in cloud computing data centers [97]. A cost-effective resource provisioning mechanism is provided in [98] to assign virtual machines with reservations on an on-demand basis. Makespan is optimized for independent tasks in grid computing [99] using the imperialist competition method. A hybrid technique is used for job shop scheduling in [100] to reduce the makespan. A Gravitational Attraction Search (GAS) technique was introduced in [101] and was integrated with ICA in a different study to optimize the service composition problem in cloud computing more quickly. The authors expanded their work by combining a PROCLUS classifier with an ICA algorithm to choose the best service provider for particular services [102]. In [103], the authors developed an ICA-based scheduling method that takes execution time and execution cost into account when scheduling dependent tasks. In [104], the authors offered an online scheduler based on the ICA and established a dependability model for cloud systems.

4.1.1.4 Lion optimization algorithm

The Lion Optimization Algorithm (LOA) is a meta-heuristic optimization algorithm inspired by the social behavior of lions. The social structure of lions, in which young are born from resident males and females, is called a pride. The lion's territorial defense and territorial takeover behavior have been used to solve optimization problems [105]. The lion's behaviors of hunting, moving toward a safe area, roaming, and migration were included by the authors in [106]. The proposed work was compared with Invasive Weed Optimization (IWO), Biogeography-based Optimization (BBO), Gravitational Search (GSA), Hunting Search (HuS), Bat Algorithm (BA), and Water Wave Optimization (WWO). The simulation outcomes show how effective the LOA algorithm is in solving many other optimization problems.

4.1.1.5 Sunflower optimization algorithm

The Sunflower Optimization Algorithm (SFO) is a population-based meta-heuristic modeled on the way sunflowers orient themselves toward the sun, while also considering pollination between neighboring sunflowers. The Enhanced Sunflower Optimization (ESFO) algorithm introduced in [107] improves on the performance of existing task scheduling approaches. It discovers the best scheduling strategy in polynomial time. An effective hybrid optimization algorithm named Sunflower Whale Optimization Algorithm (SFWOA) is proposed in [108]. The authors of [109] suggest an Opposition-based Sunflower Optimization (OSFO) algorithm to improve the efficiency of existing task schedulers in terms of cost, energy, and makespan. Paper [110] suggests a sunflower optimization algorithm combined with a sine–cosine algorithm (SFOA-SCA) for enhancing the effectiveness of load balancing in cloud networks.

4.1.2 Swarm-based algorithm

Swarm Intelligence (SI) is a relatively recent method of problem-solving that draws its inspiration from the social behavior of insects and other animals and the collective intelligence of swarms of biological populations [111]. A computational and behavioral paradigm called SI uses the interplay of small information processing units to address a dispersed problem. The most widely used algorithms are Particle Swarm Optimization (PSO) [112], Marriage in Honey Bees Optimization Algorithm (MBO) [113], Whale Optimization [114], Firefly Optimization [115], Artificial Bee Colony (ABC) [116], Ant Colony Optimization (ACO) [117], Artificial Fish-Swarm Algorithm (AFSA) [118], Bat algorithm (BA) [119], Cat Swarm Optimization [120], Termite Algorithm [121], Wasp Swarm Algorithm [122], Monkey Search [123], Wolf Pack Search Algorithm [124], Bee Collecting Pollen Algorithm (BCPA) [125], Symbiotic organism search optimization algorithm [126], Crow Search, Cuckoo Search [127], Dolphin Partner Optimization (DPO) [128], Grey Wolf Search Algorithm [129], Glow worm optimization [130], etc. In this section, some swarm intelligence nature-inspired algorithms that are used in task scheduling of cloud computing are briefly summarized.

4.1.2.1 Ant colony optimization

Ant colony optimization (ACO) was created by Marco Dorigo and was inspired by the foraging behavior of several ant species. The pheromone that the ants deposit on the ground guides the other ants along the path. Pheromone values are used to search the solution space, and the ants record their positions and the quality of their solutions to identify an optimum solution [131]. Paper [132] suggests using the ACO technique to specify the task and resource selection criteria in clusters. The user specifies QoS constraints in [133] to get the appropriate quality for the scheduled workflow. The paper [134] presents an Ant Colony System (ACS)-based workflow scheduling algorithm that has been enhanced with several additional features. The ACO algorithm and the knowledge matrix notion are combined in [135]. The researchers adopted the ACO approach [136] to track the historical desirability of putting them in the same physical machine. The paper [137] developed an ant colony-based energy-efficient scheduling algorithm. Updated pheromone schemes are presented in [138, 139]. The population is created using the concept of biased starting ants from [140], where the expected time to complete a task and the standard deviations of tasks are considered. An infinite number of ants are employed in [141] for the grid or cloud-based scheduling of interdependent jobs or workflows. Researchers in [142] suggest independent task scheduling based on the ACO approach for cloud computing systems. ACO-based workflow scheduling for grid systems is suggested in [143]. The length of the schedule is kept to a minimum, and tests with time-varying workflows are run in a grid setting. When scheduling workflows in hybrid clouds, reference [144] considers deadline and cost.
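The interplay of pheromone and heuristic information can be illustrated with a small ACO sketch for independent tasks. Everything here is an assumption made for illustration: the pheromone matrix `tau[t][v]` is indexed by task and VM, the heuristic desirability is the inverse run time, only the best-so-far ant deposits pheromone, and the parameter values and instance are hypothetical. Run times must be strictly positive.

```python
import random


def makespan(etc, sol):
    """Finishing time of the busiest VM; etc[t][v] is the run time of task t on VM v."""
    loads = [0.0] * len(etc[0])
    for task, vm in enumerate(sol):
        loads[vm] += etc[task][vm]
    return max(loads)


def aco_schedule(etc, n_ants=10, iterations=50, alpha=1.0, beta=2.0, rho=0.1, seed=0):
    """Returns (best schedule, its makespan)."""
    rng = random.Random(seed)
    n_tasks, n_vms = len(etc), len(etc[0])
    tau = [[1.0] * n_vms for _ in range(n_tasks)]      # pheromone per (task, VM)
    best, best_cost = None, float("inf")
    for _iteration in range(iterations):
        for _ant in range(n_ants):
            sol = []
            for t in range(n_tasks):
                # Selection weight: pheromone^alpha * (1 / run time)^beta.
                weights = [tau[t][v] ** alpha * (1.0 / etc[t][v]) ** beta
                           for v in range(n_vms)]
                sol.append(rng.choices(range(n_vms), weights=weights)[0])
            cost = makespan(etc, sol)
            if cost < best_cost:
                best, best_cost = sol, cost
        for t in range(n_tasks):
            for v in range(n_vms):
                tau[t][v] *= (1.0 - rho)               # evaporation
            tau[t][best[t]] += 1.0 / best_cost         # deposit along the best schedule
    return best, best_cost


# Hypothetical instance: 6 tasks, 3 VMs.
etc = [[4.0, 6.0, 9.0], [3.0, 5.0, 2.0], [8.0, 4.0, 6.0],
       [5.0, 7.0, 3.0], [2.0, 2.0, 4.0], [6.0, 3.0, 5.0]]
schedule, cost = aco_schedule(etc)
print(schedule, cost)
```

The modified pheromone schemes mentioned for [138, 139] would change the evaporation and deposit step; the rule used here is only one common choice.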

4.1.2.2 Particle swarm optimization

Particle swarm optimization (PSO) was created by Eberhart and Kennedy [145] and is based on social behavior such as that of flocking birds. In each generation, the particles adjust their trajectories based on their own best position and the best position found by any particle in the swarm. The population of particles is created at random, with the position and velocity of each particle initialized [146,147,148,149,150]. Discrete PSO is paired with the Min-Min approach [151] to decrease the execution time of scheduling activities on computational grids. A combination of PSO and Gravitational Emulation Local Search (GELS) improves the utilization of the search space [152]. Tasks are scheduled using a Hybrid Particle Swarm Optimization (HPSO) to reduce turnaround time and increase resource effectiveness [153]. In paper [154], Tabu Search (TS) and PSO are combined, with TS providing the local search mechanism. PSO reduces the completion time and improves resource utilization when paired with another search approach called Cuckoo Search [155]. Task scheduling in grid contexts has been done via particle swarm optimization [156, 157]. A PSO-based hyper-heuristic for resource scheduling in the grid context was presented by R. Aron et al. [158]. In [159], a load rebalancing algorithm utilizing PSO and the least position value technique is used for task scheduling. A Task-based System Load Balancing method using PSO (TBSLB-PSO) is suggested in [160]. The method reduces transfer and task execution times. Improved makespan and resource utilization are found in [161]. Energy consumption is decreased to 67.5% using the particle swarm optimized Tabu search mechanism (PSOTBM) [162].
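The velocity and position update described above can be sketched for task scheduling as follows. This is a minimal illustration under assumptions: each particle is a continuous vector with one coordinate per task, a coordinate is decoded to a VM index by truncation, and the inertia weight `w`, acceleration coefficients `c1` and `c2`, and the sample instance are hypothetical choices rather than values from the cited works.

```python
import random


def makespan(etc, sol):
    """Finishing time of the busiest VM; etc[t][v] is the run time of task t on VM v."""
    loads = [0.0] * len(etc[0])
    for task, vm in enumerate(sol):
        loads[vm] += etc[task][vm]
    return max(loads)


def decode(position, n_vms):
    """Map continuous coordinates to valid VM indices by truncation and clamping."""
    return [min(n_vms - 1, max(0, int(x))) for x in position]


def pso_schedule(etc, n_particles=20, iterations=80, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Returns (best decoded schedule, its makespan)."""
    rng = random.Random(seed)
    n_tasks, n_vms = len(etc), len(etc[0])

    def cost(position):
        return makespan(etc, decode(position, n_vms))

    xs = [[rng.uniform(0, n_vms) for _ in range(n_tasks)] for _ in range(n_particles)]
    vs = [[0.0] * n_tasks for _ in range(n_particles)]
    pbest = [x[:] for x in xs]                       # personal best positions
    pbest_cost = [cost(x) for x in xs]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]   # global best position

    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(n_tasks):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward personal best + pull toward global best.
                vs[i][d] = (w * vs[i][d]
                            + c1 * r1 * (pbest[i][d] - xs[i][d])
                            + c2 * r2 * (gbest[d] - xs[i][d]))
                xs[i][d] = min(n_vms - 1e-9, max(0.0, xs[i][d] + vs[i][d]))
            c = cost(xs[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = xs[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = xs[i][:], c
    return decode(gbest, n_vms), gbest_cost


# Hypothetical instance: 6 tasks, 3 VMs.
etc = [[4.0, 6.0, 9.0], [3.0, 5.0, 2.0], [8.0, 4.0, 6.0],
       [5.0, 7.0, 3.0], [2.0, 2.0, 4.0], [6.0, 3.0, 5.0]]
schedule, cost = pso_schedule(etc)
print(schedule, cost)
```

Discrete PSO variants such as the one paired with Min-Min in [151] replace the truncation-based decoding with operators defined directly on assignments.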

4.1.2.3 Artificial bee colony optimization

The Artificial Bee Colony (ABC) algorithm is based on how honey bees intelligently forage for food. Dervis Karaboga created this strategy in 2005 to address real-world problems [163]. Every bee in a colony cooperates to find food sources, and this knowledge is used to guide decisions about exploring the search space. Numerous combinatorial problems, including flow shop scheduling [164], on-shop scheduling [165], project scheduling [166], and traveling salesman [167], have been solved using this technique. Examples of bee colony optimization applied to task scheduling in distributed grid systems are given in [168, 169]. For task mapping on the resources, the authors used bees' foraging behavior as a model. The strategy is described in [170], which also distributes the workload of parallel programs across the resources available in grid computing systems. Non-preemptive independent task scheduling with load balancing based on ABC optimization is presented in [171, 172]. Compared to the Min-Min algorithm, resource utilization is enhanced by an average of 5.0383% when bee colony and PSO are combined [173]. ABC is integrated with a Memetic method to reduce the makespan and balance the load [174]. Many authors have used the ABC algorithm to schedule different tasks in cloud computing [175, 176]. Several distinctive characteristics, such as modularity and parallelism, make the ABC algorithm suitable for scheduling dependent tasks in a cloud context [177, 178]. Energy-aware scheduling is suggested by [179] to effectively manage the resources and improve their usage in the cloud.
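For reference, the foraging model is usually expressed with two rules from the standard ABC formulation: a neighbor-generation rule used by employed and onlooker bees, and a fitness-proportional probability with which onlookers choose a food source. The symbols below (food source x_i, randomly chosen partner x_k, number of food sources SN, fitness fit_i) follow that common formulation and are not notation taken from this survey.

```latex
% Candidate food source generated near x_i, using a random partner k \neq i
% and a random dimension j:
v_{ij} = x_{ij} + \phi_{ij}\,\bigl(x_{ij} - x_{kj}\bigr),
\qquad \phi_{ij} \sim U(-1, 1)

% Probability that an onlooker bee selects food source i:
p_i = \frac{\mathrm{fit}_i}{\sum_{n=1}^{SN} \mathrm{fit}_n}
```

In a scheduling setting, a food source would correspond to a candidate task-to-resource mapping, and the fitness would be derived from objectives such as makespan or load balance.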

4.1.2.4 Bat algorithm

The Bat Algorithm (BA) is a swarm-based method that imitates the echolocation of bats [180]. Bats emit a sound pulse, and an echo is created when nearby objects reflect that pulse. Bats use the delay between the signal's emission and return to determine the prey's precise location, distance, and speed. Paper [181] uses the BA algorithm in a cloud context for resource scheduling, which optimizes the makespan more effectively than the GA method. In [182], a hybrid BA-Harmony search technique is suggested for cloud computing task scheduling. The Gravitational Scheduling Algorithm (GSA) [183] is a further extension of BA that considers time restrictions and a trust model. This approach chooses resources for task mapping based on their trust value. To reduce execution costs, the authors of [184] used BA to resolve the workflow scheduling problem in the cloud. The approach achieves lower processing costs than the best resource selection algorithm. PSO and the bat algorithm are combined in the paper [185] for cloud profit maximization.
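The echolocation metaphor is commonly formalized by the following update rules from the standard bat algorithm. The notation (frequency f_i, velocity v_i, position x_i, current global best x_*, loudness A_i, pulse emission rate r_i, constants \alpha and \gamma) follows that standard formulation and is an assumption relative to this survey, which does not state the equations.

```latex
% Frequency, velocity, and position update of bat i at iteration t:
f_i = f_{\min} + (f_{\max} - f_{\min})\,\beta, \qquad \beta \sim U(0, 1)
v_i^{t+1} = v_i^{t} + \bigl(x_i^{t} - x_{*}\bigr)\, f_i
x_i^{t+1} = x_i^{t} + v_i^{t+1}

% Loudness decreases and pulse rate increases as a bat approaches its prey:
A_i^{t+1} = \alpha\, A_i^{t}, \qquad
r_i^{t+1} = r_i^{0}\,\bigl[1 - e^{-\gamma t}\bigr]
```

For scheduling, a position vector would be decoded into a task-to-resource mapping in the same spirit as other continuous meta-heuristics.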

4.1.2.5 Cat swarm optimization

The authors of [186] introduced the Cat Swarm Optimization (CSO) algorithm for continuous optimization problems. It is a heuristic optimization algorithm based on the social behavior of cats, specifically their seeking and tracking modes. CSO is used in the paper [187]. Researchers in [188, 189] provide several modified versions of CSO for resolving discrete optimization problems in various fields. DBCSO [190] is a binary variant of CSO for the zero-one knapsack problem and the traveling salesperson problem. In cloud computing, CSO has been utilized to address the scheduling of workflows while considering single and multiple objectives [191]. Makespan, computation expense, and CPU idle time were considered as optimization criteria for mapping the dependent tasks. The traditional genetic algorithm is supplemented with CSO and DBCSO to initialize the population [192].

4.1.2.6 Whale optimization algorithm

The Whale Optimization Algorithm (WOA) is a comparatively recent method for handling optimization problems. It uses three operators to replicate how humpback whales hunt: searching for prey, encircling prey, and attacking with bubble nets. This foraging technique is known as the bubble-net feeding method [193]. By optimizing the allocation of tasks to resources, WOA can reduce the makespan and improve the overall performance of the cloud computing system. Mangalampalli et al. created a Multi-objective Trust-Aware Scheduler with Whale Optimization (MOTSWO) that prioritizes jobs and virtual machines and schedules them to the best virtual resources while consuming the least amount of time and energy possible [194]. The task scheduling technique presented in [195] allocates tasks to the appropriate VMs based on task and VM priorities; it is modeled using WOA to reduce data center energy use and electricity costs. The W-Scheduler task scheduling algorithm is built on a multi-objective model and WOA [196]. To further expand the capacity of the WOA-based method to find the best solutions, the authors of [197] offer Improved WOA for Cloud Task Scheduling (IWC). According to simulation-based studies and a comprehensive implementation, IWC offers better convergence speed and accuracy in searching for optimal task scheduling plans than existing meta-heuristic algorithms. Another IWC has been presented in [198]. The Vocalization of the Humpback Whale Optimization Algorithm (VWOA) is used to optimize task scheduling in a cloud computing environment [199]. It is evaluated in terms of makespan, cost, degree of imbalance, resource utilization, and energy consumption, and it reduces time, cost, and energy consumption while maximizing resource use.
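
The three operators mentioned above are commonly expressed as follows. This is the standard formulation of WOA rather than the variant used in any particular cited scheduler: X is a whale's position, X^* the best position found so far, X_rand a randomly chosen whale, r a uniform random vector in [0, 1], a a scalar that decreases linearly from 2 to 0 over the iterations, b a constant defining the spiral shape, and l a uniform random number in [-1, 1].

```latex
\begin{align}
\vec{A} &= 2a\,\vec{r} - a, \qquad \vec{C} = 2\,\vec{r} \\
\text{encircling } (|\vec{A}| < 1):\quad
  \vec{X}(t+1) &= \vec{X}^{*}(t) - \vec{A}\cdot\left|\vec{C}\cdot\vec{X}^{*}(t) - \vec{X}(t)\right| \\
\text{bubble-net spiral}:\quad
  \vec{X}(t+1) &= \left|\vec{X}^{*}(t) - \vec{X}(t)\right| e^{bl}\cos(2\pi l) + \vec{X}^{*}(t) \\
\text{search for prey } (|\vec{A}| \ge 1):\quad
  \vec{X}(t+1) &= \vec{X}_{\mathrm{rand}} - \vec{A}\cdot\left|\vec{C}\cdot\vec{X}_{\mathrm{rand}} - \vec{X}(t)\right|
\end{align}
```

In the standard algorithm, each whale chooses between the shrinking-encircling move and the spiral move with equal probability at every iteration.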

4.1.2.7 Firefly algorithm

The flashing behavior of fireflies inspired the nature-inspired optimization method known as the Firefly Algorithm (FA) [200]. It is a population-based algorithm that mimics this flashing behavior to select the best solution. An efficient Trust-Aware Task Scheduling algorithm using Firefly optimization has been presented in [201], which shows a significant improvement over conventional approaches in terms of makespan, availability, success rate, and turnaround efficiency. An acceptable improvement in makespan and resource utilization using the FA has been presented in [202]. The Crow Search algorithm and FA are integrated to enhance global search capability [203]. A hybrid Firefly-Genetic combination is proposed for scheduling tasks in [204]. An intelligent meta-heuristic algorithm based on the combination of ICA and FA has been presented in [205] and is reported to yield large improvements in makespan, CPU time, load balancing, stability, and planning speed. Another hybrid method using Firefly and SA algorithms has been proposed in [206]. Cat Swarm Optimization and the FA were combined into a hybrid multi-objective scheduling algorithm [207]. A modified Firefly Algorithm (mFA) is used to formulate and optimize the operational cost minimization problem for DGDCs [208].
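
The attraction step at the heart of the Firefly Algorithm can be sketched as below. This is a minimal illustration of the standard update rule, not the implementation of any cited paper; the function name `move_firefly` and the default values of `beta0`, `gamma`, and `alpha` are assumptions chosen for the example.

```python
import math
import random

def move_firefly(x_i, x_j, beta0=1.0, gamma=1.0, alpha=0.2, rng=random):
    """Move firefly i toward a brighter firefly j and return the new position."""
    # Squared Euclidean distance between the two fireflies.
    r2 = sum((a - b) ** 2 for a, b in zip(x_i, x_j))
    # Attractiveness decays exponentially with the squared distance.
    beta = beta0 * math.exp(-gamma * r2)
    # Attraction term plus a small uniform perturbation in [-alpha/2, alpha/2].
    return [a + beta * (b - a) + alpha * (rng.random() - 0.5)
            for a, b in zip(x_i, x_j)]
```

A scheduler built on this step would additionally need a brightness (fitness) function, such as makespan, and a rule for decoding continuous positions into task-to-VM assignments.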

4.1.2.8 Crow search algorithm

A novel kind of swarm intelligence optimization algorithm known as the “Crow Search Algorithm” was developed by imitating the intelligent behavior of crows in hiding and finding food [209]. The Crow Search method has been applied to optimization problems. The Crow Search is proposed in [210] for task scheduling in the cloud. Enhanced Crow Search Algorithm (ECSA) is proposed in [211] to improve the random selection of tasks. A technique called the Crow-Penguin Optimizer for Multi-objective Task Scheduling Strategy in Cloud Computing (CPO-MTS) is suggested in [212]. The suggested algorithm quickly determines how to best use the given tasks and cloud resources to complete them. In a multi-objective task scheduling environment, Singh et al. propose the Crow Search-based Load Balancing Algorithm (CSLBA), which focuses on allocating the best resources for the task to be implemented while considering different factors like the Average Makespan Time (AMT), Average Waiting Time (AWT), and Average Data Center Processing Time (ADCPT) [213]. The task resource mapping problem is addressed using a Crow Search-based load balancing method for enhanced optimization [214]. To fix the issue with the resource allocation model, a new optimization technique known as Grey Wolf Optimization and Crow Search Algorithm (GWO-CSA) is created [215]. The Crow Search algorithm and the Sparrow Search Algorithm (SSA) are combined to minimize energy in the cloud computing environment [216]. Mangalampalli et al. proposed a multi-objective task scheduling method using the Crow Search Algorithm to schedule tasks to the appropriate virtual machines while considering the cost per energy unit in data centers [217].

4.1.2.9 Cuckoo search algorithm

Cuckoo Search is one of the more recent algorithms to draw inspiration from nature. It is based on the brood parasitism of some cuckoo species [218] and is further enhanced by so-called Lévy flights. The Cuckoo Search algorithm is proposed in [219, 220] to optimize task scheduling in cloud computing. A combination of two optimization algorithms, Cuckoo Search and PSO (CPSO), has been proposed in [221] to reduce the makespan, cost, and deadline violation rate. The Cuckoo Crow Search Algorithm (CCSA) is an effective hybridized scheduling method developed in [222] to find an appropriate VM for task scheduling. The Cuckoo Search-based task scheduling method suggested in [223] assists in efficiently allocating tasks among the available virtual machines and maintains a low overall response time (QoS). For better resource management in the Smart Grid, a load-balancing strategy based on Cuckoo Search is suggested in [224]. A Standard Deviation-based Modified Cuckoo Optimization Algorithm (SDMCOA) is described to schedule tasks and manage resources efficiently [225]. The Multi-objective Cuckoo Search Optimization (MOCSO) algorithm is suggested to resolve multi-objective resource scheduling issues in an IaaS cloud computing context [226]. Cuckoo Search and oppositional-based learning (OBL) have been combined into a new hybrid algorithm called the Oppositional Cuckoo Search Algorithm (OCSA) [227]. Madni et al. developed the Hybrid Gradient Descent Cuckoo Search (HGDCS) algorithm based on the Gradient Descent (GD) approach and the Cuckoo Search algorithm to optimize and address issues with resource scheduling in IaaS cloud computing [228]. The CHSA algorithm, a mix of the Cuckoo Search and Harmony Search (HS) algorithms, is used to optimize the scheduling process [229]. A group technology-based model and the Cuckoo Search algorithm are proposed for resource allocation [230]. A hybridized optimization algorithm that combines the Shuffled Frog Leaping Algorithm (SFLA) and the Cuckoo Search (CS) algorithm for resource allocation is proposed in [231].
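
The Lévy flights mentioned above give Cuckoo Search its mix of many short moves and occasional long jumps. One widely used way to draw such steps is Mantegna's algorithm, sketched below. The function names and the default exponent of 1.5 are assumptions for illustration, and the cited papers may generate their steps differently.

```python
import math
import random

def mantegna_sigma(beta=1.5):
    """Standard deviation of the numerator Gaussian in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)

def levy_step(beta=1.5, rng=random):
    """Draw one heavy-tailed step length approximating a Levy flight."""
    u = rng.gauss(0.0, mantegna_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    # Dividing by |v|^(1/beta) produces the occasional very long jump.
    return u / abs(v) ** (1 / beta)
```

In a typical Cuckoo Search loop, a new candidate is formed by adding a scaled `levy_step()` to each coordinate of an existing nest.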

4.1.2.10 Grey wolf search optimization algorithm

Grey wolf optimization (GWO) is a meta-heuristic optimization algorithm developed from the study of the social interactions of grey wolves [232]. The GWO algorithm is modeled on how grey wolves hunt, which involves working together as a pack to catch prey. Natesan et al. propose the performance cost grey wolf optimization (PCGWO) algorithm to optimize the allocation of resources and tasks in cloud computing [233]. The publication [234] offers the modified fractional grey wolf optimizer for multi-objective task scheduling (MFGMTS), a multi-objective optimization technique. The grey wolf optimizer is also proposed in [235]. A hybrid Genetic Gray Wolf Optimization (GGWO) algorithm is proposed by combining the grey wolf optimizer (GWO) with a genetic algorithm [236]. In the study [237], a mean GWO algorithm has been developed to enhance the system performance of task scheduling in the heterogeneous cloud environment. A multi-objective GWO technique has been developed in [238] for task scheduling to achieve the best possible use of cloud resources while minimizing the data center's energy consumption and the scheduler's overall makespan for the given list of tasks. Using the hill-climbing approach and chaos theory, Mohammadzadeh et al. [239] devised IGWO, an enhanced version of the GWO algorithm that speeds up convergence and avoids getting trapped in local optima. Particle Swarm Optimization and Grey Wolf Optimization, two well-known meta-heuristic algorithms, have been combined to form the PSO-GWO algorithm proposed in [240]. The experimental findings indicate that, compared to the traditional Particle Swarm Optimization and Grey Wolf Optimization techniques, the PSO-GWO methodology reduces the average total execution cost and time.
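
The pack-hunting idea can be made concrete with the position update of the standard GWO, in which every wolf moves toward the average of three positions suggested by the three best wolves (conventionally called alpha, beta, and delta). The sketch below is illustrative only; the function name `gwo_update` and its arguments are assumptions, and the cited variants modify this step in different ways.

```python
import random

def gwo_update(x, alpha, beta, delta, a, rng=random):
    """Return the new position of wolf x guided by the three best wolves."""
    new_x = []
    for d in range(len(x)):
        candidates = []
        for leader in (alpha, beta, delta):
            A = 2 * a * rng.random() - a      # step coefficient in [-a, a]
            C = 2 * rng.random()              # leader weight in [0, 2]
            dist = abs(C * leader[d] - x[d])  # distance to the weighted leader
            candidates.append(leader[d] - A * dist)
        new_x.append(sum(candidates) / 3.0)   # average of the three guided moves
    return new_x
```

The control parameter `a` is normally decreased linearly from 2 to 0 over the run, which shifts the search from exploration toward exploitation.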

4.1.2.11 Glowworm swarm optimization

The glowworm swarm optimization (GSO) method simulates the movement of the glowworms in a swarm depending on the distance between them and on a luminous substance called luciferin [241]. It is a relatively recent swarm intelligence system. The article [242] proposes a hybrid glowworm swarm optimization (HGSO) based on GSO to achieve more effective scheduling with affordable costs. The suggested HGSO speeds up convergence and makes it easier to escape from local optima by reducing unnecessary computation and dependence on GSO initialization. GSO is used in paper [243] to address the task scheduling issue in cloud computing in order to reduce the overall cost of job execution while maintaining on-time task completion.

4.1.2.12 Symbiotic organism search optimization algorithm

The symbiotic organism search (SOS) algorithm is a nature-inspired optimization technique that can be used for task scheduling in cloud computing. The algorithm is founded on the idea that organisms can coexist and use one another's advantages to survive. The discrete symbiotic organism search (DSOS) technique is presented in [244] for optimal task scheduling on cloud resources. Although DSOS improves scheduling, it can still become trapped in local optima when makespan and response time are the dominant parameters. As a result, an enhanced Discrete Symbiotic Organism Search (eDSOS) with faster convergence is suggested in [245] for cases where diversification makes the search space larger. The chaotic symbiotic organisms search (CMSOS) technique is developed to resolve the multi-objective large-scale task scheduling optimization problem in the IaaS cloud computing environment [246]. The Adaptive Benefit Factors-based Symbiotic Organisms Search (ABFSOS) method is presented to balance local and global search for faster convergence [247]. An energy-aware Discrete Symbiotic Organism Search (E-DSOS) optimization algorithm has been proposed in [248] for task scheduling in a cloud environment. A modified Symbiotic Organisms Search algorithm (G_SOS) is suggested to reduce task execution time (makespan), cost, response time, and degree of imbalance, and to speed up convergence toward an optimal solution in an IaaS cloud [249].

4.1.3 Physics-based algorithm

Methods based on physics mimic the laws of physics that govern the cosmos [250]. The most widely used algorithms are Henry Gas Solubility Optimization [251], Simulated Annealing (SA) [252], Gravitational Local Search (GLSA) [253], Big-Bang Big-Crunch (BBBC) [254], Gravitational Search Algorithm (GSA) [255], Charged System Search (CSS) [256], Central Force Optimization (CFO) [257], Artificial Chemical Reaction Optimization Algorithm (ACROA) [258], Black Hole (BH) [259], Ray Optimization (RO) [260]. In this section, some physics-based nature-inspired algorithms that are used in task scheduling of cloud computing are briefly summarized.

4.1.3.1 Henry gas solubility optimization

Henry's law is a fundamental gas law that describes how much of a given gas dissolves in a specific kind and amount of liquid at a specific temperature. The Henry Gas Solubility Optimization (HGSO) algorithm mimics the huddling behavior of gas to balance exploitation and exploration in the search space and avoid local optima. A modified Henry gas solubility optimization for task scheduling, based on the WOA and Complete Opposition-Based Learning (COBL), is provided in [261]. The proposed technique is named Henry Gas Solubility Whale Cloud (HGSWC). HGSWC is validated on a set of 36 optimization benchmark functions and compared with the conventional HGSO and WOA.

4.1.3.2 Simulated annealing

Simulated Annealing (SA) is a technique for solving both bound-constrained and unconstrained optimization problems. The technique simulates the physical procedure of raising a material's temperature and then gradually lowering it to reduce defects, thereby minimizing the system energy. A combination of Firefly and SA has been presented in [206].
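
The heating-and-cooling analogy translates into a simple acceptance rule: improvements are always accepted, and worse moves are accepted with a probability that shrinks as the temperature falls. The sketch below applies this to a toy independent-task problem. The function names, the geometric cooling schedule, and all parameter values are assumptions for illustration and are not taken from [206].

```python
import math
import random

def makespan(assignment, task_len, vm_speed):
    """Finish time of the busiest VM for a task -> VM assignment."""
    load = [0.0] * len(vm_speed)
    for task, vm in enumerate(assignment):
        load[vm] += task_len[task] / vm_speed[vm]
    return max(load)

def anneal(task_len, vm_speed, t0=10.0, cooling=0.95, steps=2000, seed=0):
    """Search for a low-makespan assignment with simulated annealing."""
    rng = random.Random(seed)
    current = [rng.randrange(len(vm_speed)) for _ in task_len]
    cur_cost = makespan(current, task_len, vm_speed)
    best, best_cost = current[:], cur_cost
    temp = t0
    for _ in range(steps):
        # Neighbour: move one randomly chosen task to a random VM.
        cand = current[:]
        cand[rng.randrange(len(cand))] = rng.randrange(len(vm_speed))
        cand_cost = makespan(cand, task_len, vm_speed)
        delta = cand_cost - cur_cost
        # Metropolis rule: always accept improvements, sometimes accept worse moves.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            current, cur_cost = cand, cand_cost
            if cur_cost < best_cost:
                best, best_cost = current[:], cur_cost
        temp = max(temp * cooling, 1e-9)  # geometric cooling, kept above zero
    return best, best_cost
```

Calling `anneal([4.0, 3.0, 2.0, 1.0], [1.0, 1.0])` returns an assignment of the four tasks to the two VMs together with its makespan.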

4.1.4 Hybrid algorithm

In hybrid scheduling algorithms, two or more scheduling algorithms are merged to address the task scheduling issue in a cloud context. The fundamental concept behind hybrid algorithms is to combine the benefits of various methods into a single algorithm to improve performance in terms of computation time, result quality, or both. The work in [262] discusses combining ACO and PSO techniques to improve resource scheduling. The authors of [263] use a similar hybrid technique, employing ACO and the Intelligent Water Drop algorithm, to optimize the performance and quality of the solution. ACO with Particle Swarm (ACOPS) [264] is presented to schedule VMs more efficiently. In this method, the load of user requests is dynamically forecast and mapped onto the available VMs. In [265], the authors introduced an algorithm that optimizes task scheduling in cloud environments using fundamental notions from ACO and ABC. A hybrid of the Gravitational Emulation Local Search approach and PSO is used in [152] to enhance the results. In [148], the hill climbing local search heuristic is integrated with PSO, and in [266], a combination of fuzzy logic and GSO is described.

4.2 Nature of scheduling problem

Because there is always a trade-off between optimization objectives, it is necessary to develop an optimization model that satisfies the objectives by locating the best available solution. Therefore, two types of scheduling objectives are considered for nature-inspired task scheduling. Table 2 presents the categorization of the reviewed techniques based on the nature of the scheduling problem.

4.2.1 Single objective

In single-objective optimization, the optimality of a given solution can be evaluated directly against any other existing solution. For a predetermined objective, a single best solution is chosen. Regarding task scheduling in cloud computing, most approaches only consider the CPU and memory requirements.

4.2.2 Multi-objective

Task scheduling in a distributed heterogeneous computing system can be characterized as a non-linear, multi-objective, NP-hard optimization problem that aims to maximize cloud resource consumption while meeting QoS standards. In multi-objective optimization, the optimality of one solution cannot be compared directly with that of another. Multi-objective scheduling therefore often uses a Pareto dominance relation to replace the single optimal solution with a set of alternatives that offer different trade-offs between the objectives.
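
The Pareto dominance relation can be stated in a few lines of code. In the sketch below, all objectives are assumed to be minimized, and the `(makespan, cost)` pairs are hypothetical values chosen only to illustrate the relation.

```python
def dominates(a, b):
    """True if objective vector a dominates b (all objectives are minimized)."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))

def pareto_front(solutions):
    """Keep only the solutions that no other solution dominates."""
    return [s for s in solutions
            if not any(dominates(o, s) for o in solutions)]

# Hypothetical (makespan, cost) pairs for four candidate schedules.
schedules = [(10, 5), (8, 7), (12, 4), (11, 6)]
front = pareto_front(schedules)
```

Here `(11, 6)` is dropped because `(10, 5)` is better in both objectives, while the other three schedules remain as mutually incomparable trade-offs.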

4.3 Nature of task

There are two scheduling techniques: independent scheduling and dependent scheduling (workflow scheduling). In dependent scheduling, a workflow connects the tasks to one another. In independent scheduling, the tasks are autonomous because they do not depend on one another. Several authors used both types of scheduling in their proposed systems. Table 3 depicts the categorization of the reviewed techniques based on the nature of the task.
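
A workflow of dependent tasks is commonly modeled as a directed acyclic graph, and any valid schedule must respect a topological order of that graph. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later); the four-task workflow is a hypothetical example.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical workflow: each task maps to the set of tasks it depends on.
workflow = {
    "t1": set(),
    "t2": {"t1"},
    "t3": {"t1"},
    "t4": {"t2", "t3"},
}

# A valid execution order places every task after all of its predecessors.
order = list(TopologicalSorter(workflow).static_order())
```

For independent tasks the dependency sets are all empty, so every ordering is valid and the scheduler only has to decide the task-to-resource mapping.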

4.4 Primary objectives of scheduling

Based on specific scheduling criteria, the scheduling process distributes the tasks inside the workflow onto the appropriate resources. Scheduling criteria such as execution time, cost, reliability, and load balancing influence how well the scheduling problem is solved. Tables 4, 5, 6, and 7 illustrate the categorization of techniques based on the primary objectives of scheduling using evolutionary, swarm intelligence-based, physics-based, and hybrid algorithms, respectively.

4.4.1 Makespan

Makespan is the overall time required to execute the whole workflow, measured from the time the tasks are submitted to the time the last task finishes its execution [290]. Most of the scheduling algorithms in the literature have focused on optimizing makespan [79, 291]. Minimizing the total execution time can also reduce the execution cost when tasks are mapped to resources.
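
Under this definition, makespan is simply the span between the earliest submission and the latest completion. A minimal sketch, with a hypothetical function name and hypothetical timestamps in seconds:

```python
def workflow_makespan(submit_times, finish_times):
    """Elapsed time from the earliest submission to the latest completion."""
    return max(finish_times) - min(submit_times)

# Hypothetical timestamps for three tasks of one workflow.
span = workflow_makespan(submit_times=[0.0, 2.0, 1.0],
                         finish_times=[5.0, 9.0, 7.5])
```

When all tasks are submitted at time zero, the expression reduces to the finish time of the last task.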

4.4.2 Security

Due to the heterogeneous and scattered nature of cloud computing resources, security is a significant concern. Because of virtualization and multi-tenancy capabilities, providing data security and privacy in a cloud environment is more challenging than in traditional systems.

4.4.3 Reliability

Failures during the execution of a workflow can occur for various reasons, including resource unavailability, resource failure, and network infrastructure. As a result, the scheduling mechanism should consider resource failure and ensure reliable executions even when there is concurrency and failure. The likelihood that the tasks will be carried out successfully and the workflow will be completed is known as reliability. For an application to run smoothly, all resources must be reliable. It is possible to calculate the failure rate; thus, the mapping should be carried out to increase reliability and lower failure rates. Task-level, VM-level, and workflow-level failures are the three levels of failure in a workflow application.

4.4.4 Cost

The cost of an application is determined by two factors depending on two fundamental cloud resources: the cost of computing and the cost of data transit and storage. By supplying a lot of resources, the overall execution time can be reduced, but execution costs, scheduling overheads, and resource underutilization may all rise as a result.

4.4.5 Load balancing

In cloud computing settings, virtual machines (VMs) predominate as the processing components. During scheduling, multiple tasks may be allocated to the same VMs for simultaneous execution, and the loads on the VMs become imbalanced as a result. To prevent resources from being overloaded, the scheduler should be able to spread the load across the available VMs. Balancing the load over the resources improves resource utilization and the efficiency of the scheduling process.
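
One metric often used in the scheduling literature to quantify such imbalance is the degree of imbalance, defined over the per-VM completion times. The definition below is a common convention, offered here as an assumption rather than as the formula used by any specific cited paper.

```python
def degree_of_imbalance(vm_finish_times):
    """(T_max - T_min) / T_avg over per-VM completion times; 0 means balanced."""
    t_max, t_min = max(vm_finish_times), min(vm_finish_times)
    t_avg = sum(vm_finish_times) / len(vm_finish_times)
    return (t_max - t_min) / t_avg
```

A value of 0 indicates that every VM finishes at the same time, and larger values indicate a more uneven distribution of work.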

4.4.6 Resource utilization

Higher resource utilization benefits the service provider, who maximizes profit by renting out scarce resources to users and keeping those resources fully utilized.

4.4.7 Rescheduling

Because rescheduling necessitates re-evaluating the plan and the cost of transferring data among dependent activities across multiple machines, it is generally considered an overhead to the scheduling process [292]. A heavy load on the server may require rescheduling. Rescheduling the jobs is also necessary in the event of failures such as VM shutdowns or system faults [293]. Only some tasks are chosen for rescheduling because it lengthens the overall execution time and degrades performance.

4.4.8 Energy efficiency

The amount of CPU and other resources used directly influences how much energy a task consumes. When CPUs are not appropriately utilized, power is wasted while they sit idle, resulting in significant energy consumption. High resource demand can also occasionally consume a great deal of energy and reduce performance [294]. Scheduling decisions are therefore crucial for limiting the energy consumption of the allocated resources, since they help determine the best order in which tasks should be completed. An energy-efficient storage service is one of the strategies for reducing power usage addressed in [295]. This service can build a predictive model to forecast how users will use the files and data stored there. Scheduling algorithms can also be designed specifically to lower energy usage [296].
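
The effect of idle time on energy can be illustrated with a simple two-level power model, in which a VM draws one power level while executing tasks and a lower level while idle until the schedule completes. This model and the wattage values below are assumptions made for illustration; the cited works may use more detailed power models.

```python
def vm_energy(busy_time, makespan, p_busy, p_idle):
    """Energy of one VM: busy power while executing, idle power for the rest."""
    idle_time = makespan - busy_time
    return p_busy * busy_time + p_idle * idle_time

def total_energy(busy_times, p_busy=200.0, p_idle=100.0):
    """Sum the energy of all VMs over a schedule that ends at the makespan."""
    makespan = max(busy_times)
    return sum(vm_energy(b, makespan, p_busy, p_idle) for b in busy_times)
```

With busy times of 10 s and 5 s, the second VM spends 5 s idle, so the total is 200 × 10 + (200 × 5 + 100 × 5) = 3500 J under these assumed power levels.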

4.5 Scheduling constraints

An essential concern in cloud scheduling is that the SLA can be negatively impacted if many applications cannot meet their deadline, priority, budget, and fault tolerance limits. The mechanisms suggested in this respect are detailed in the subsections that follow. Table 8 illustrates the categorization of techniques based on scheduling constraints.

4.5.1 Budget

Budget is a limitation the user imposes on using the cloud service provider's resources. Scheduling decisions are made utilizing the budget constraint to minimize the workflow's overall execution time and guarantee that it is completed within the budget.

4.5.2 Deadline

Applications that depend on timing must finish running in a specific amount of time. These applications are made to give results before the deadline using deadline-constrained scheduling. When scheduling jobs, deadline-constrained scheduling must also consider the associated costs. In time-sensitive applications, robust scheduling with deadlines is essential since it increases the dependability of the program.

4.5.3 Fault tolerant

A cost-effective fault tolerant (CEFT) scheduling strategy that should adhere to a predetermined deadline in cloud systems was discussed in the paper [257]. This method of handling jobs, known as the primary/backup (P/B) strategy, contains two duplicate copies of each task and offers permanent or temporary fault tolerance in the event of hardware failure. The tasks in this strategy are independent and do not follow a hierarchy. The iterative method is used to optimize the suggested resource allocation technique more successfully. The PSO method chooses VM for the next task in each iteration.

4.5.4 Priority

Verma and Kaushal suggested a Bi-Criteria priority-based PSO (BPSO) that lowers the makespan and execution cost while scheduling cloud workflow activities [305]. This PSO takes deadline and budget constraints into consideration. Each workflow task is assigned a priority according to its bottom level, as defined by the HEFT algorithm, so that the PSO can carry out the tasks according to these priorities.
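
In HEFT, the bottom level of a task is usually computed as its upward rank: the task's own average execution time plus the longest communication-and-execution path to the exit task. The sketch below shows that recursion on a hypothetical four-task workflow. The task names and timing values are assumptions, and the code illustrates only the prioritization step, not the BPSO algorithm of [305].

```python
from functools import lru_cache

# Hypothetical workflow: average execution time per task and
# average communication time on each edge (task -> successor).
exec_time = {"t1": 3.0, "t2": 2.0, "t3": 4.0, "t4": 1.0}
comm_time = {("t1", "t2"): 1.0, ("t1", "t3"): 2.0,
             ("t2", "t4"): 1.0, ("t3", "t4"): 1.0}
successors = {"t1": ["t2", "t3"], "t2": ["t4"], "t3": ["t4"], "t4": []}

@lru_cache(maxsize=None)
def upward_rank(task):
    """Longest path length from task to the exit task, including task itself."""
    tail = max((comm_time[(task, s)] + upward_rank(s)
                for s in successors[task]), default=0.0)
    return exec_time[task] + tail

# Tasks with a higher rank are scheduled first.
priority_order = sorted(exec_time, key=upward_rank, reverse=True)
```

Sorting by decreasing rank always places a task before its successors, so the resulting priority list is also a valid topological order of the workflow.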

4.6 Task-resource mapping scheme

To effectively utilize the available resources based on the cloud environment and the submitted workload, static, dynamic, AI-based, and prediction-based mapping of cloud resources to incoming tasks are carried out. Table 9 illustrates the categorization of techniques based on the task resource mapping scheme.

4.6.1 Static

Static scheduling involves prior knowledge of the tasks to decide on a schedule before a task begins to execute. By enhancing load balancing among VMs and balancing the priority of the activities on those VMs, the paper [171] suggested a resource provisioning technique inspired by the ABC algorithm’s behavior to boost resource utilization, increase system throughput, and decrease queuing time.

4.6.2 Dynamic

Dynamic scheduling may occur while a task is being executed and doesn’t need to be aware of every task property. When maximizing resource use is more critical than reducing execution time, this helps manage changing requirements of cloud users [219]. All meta-heuristic scheduling techniques are, in fact, dynamic [220]. However, some research is discussed in this subsection based on the dynamic cloud environment and the dynamic scheduling system specified in the primary keyword of the selected articles. Islam and Habiba have proposed dynamic scheduling methods based on ACO and Variable Neighborhood PSO (VNPSO) [309].

4.6.3 Artificial intelligence (AI)-based

AI-based scheduling is a highly technical and specialized methodology that supports the creation of intelligent methods that work and respond like humans when scheduling and assigning resources. It draws on intelligent and autonomous systems, nature-inspired intelligent systems, operational research systems, agent-based systems, neural networks, machine learning, and expert systems [226]. With AI, resource allocation and scheduling in the cloud framework are reported to achieve greater accuracy and precision, with very low failure and error rates. ML algorithms have been used in cloud computing to forecast the status of resources based on their anticipated future load and security. In [310], incoming requests were mapped to resources using an ANN model to complete tasks more quickly.

4.6.4 Prediction-based

Prediction-based scheduling relates to how various techniques and measurements behave when allocating resources. When it comes to efficient task scheduling and optimum resource allocation in the cloud environment, it can be essential to estimate the critical resource requirements and users’ demand for the future using automatic resource allocation or resource reservation approaches [231, 232]. Paper [311] introduced a Prediction-based ACO classification algorithm (PACO), which operates based on task prioritization and considers various QoS criteria to minimize the workflow’s overall execution time and guarantee that it is completed within the budget.

4.7 Testing tools and their comparison

Testing new strategies in a production setting is almost impossible because some trials could harm the end-user QoS. There are numerous well-known simulation tools for examining novel scheduling algorithms and judging their efficiency in various cloud environments. The most well-known tool for task scheduling is the CloudSim simulation toolkit. Its existing classes can be extended with the algorithm under test to assess a variety of QoS parameters, including makespan, financial cost, computational cost, reliability, availability, scalability, energy consumption, security, and throughput, as well as constraints such as deadline, priority, budget, and fault tolerance. Other well-known simulation tools include iCloud, GridSim, CloudAnalyst, NetworkCloudSim, WorkflowSim, and GreenCloud. Table 10 illustrates the categorization of techniques based on testing tools.

5 Analysis and discussions

This section comprehensively summarizes and examines the nature-inspired meta-heuristics techniques for task scheduling in cloud computing in terms of the scheduling algorithm, type of tasks, the primary objective of scheduling, task-resource mapping scheme, scheduling constraint, and testing environment.

5.1 Scheduling algorithm

Figure 3 shows how the nature-inspired task scheduling methods for clouds can be divided into swarm, evolutionary, physics, and hybrid approaches, along with the proportion of each approach. The majority of the algorithms used in the literature are swarm-based optimization methods. The most widely used among them is PSO, as seen in Fig. 4. Sometimes, those algorithms are combined with additional meta-heuristic or heuristic techniques. Because the search strategy and convergence speed of meta-heuristic techniques vary, a hybrid version can further improve overall performance. Hybrid algorithms therefore rank second in the list. Among evolutionary approaches, most researchers used the GA, as seen in Fig. 5.

5.2 Scheduling objective

More of the reviewed scheduling strategies are multi-objective than single-objective, as shown in Fig. 6. This trend encourages the continued adoption of multi-objective strategies for more dependable task scheduling in the cloud environment.

5.3 Type of task

Independent task scheduling is used more often than workflow scheduling. Some of the reviewed studies also combine independent and workflow scheduling. The usage of each type of task scheduling in nature-inspired algorithms is illustrated in Fig. 7.

5.4 Primary objectives of scheduling

Figure 8 examines the algorithms in light of the different scheduling objectives and shows the QoS parameters that the scheduling algorithms take into consideration. Makespan is the most frequently employed scheduling objective, and cost is the second. Only a few studies, as shown in Fig. 8, have taken reliability into consideration while scheduling tasks. Reliable scheduling can lessen the impact of resource breakdowns, so it is recommended that scheduling decisions at least take the application's failure probability into account. Service providers must also consider resource utilization. Proper resource usage is achievable if the submitted applications are given the resources they need. However, resource usage and energy consumption are linked. The fundamental idea behind improving resource usage is to consolidate the load on the virtual machines (VMs) so that spare VMs can be turned off or repurposed for new application demands. In this situation, predicting future resource demand can help cloud service providers turn a profit, and such estimates can also make it easier to reduce VM migrations.

5.5 Scheduling constraints

Deadline is the constraint most emphasized by nature-inspired techniques. Fault tolerance is also a widely adopted constraint. Most studies also concentrated on budget and priority at the time of scheduling.

5.6 Task resource mapping scheme

Dynamic scheduling is the most widely used mapping scheme in nature-inspired optimization problems. AI-based and prediction-based scheduling are also more widely used than static scheduling, as depicted in Fig. 9.

5.7 Testing environment

CloudSim is the most popular and widely used testing tool for implementing nature-inspired task scheduling algorithms, as depicted in Fig. 10. A few studies also used GridSim, Java, MATLAB, and other tools.

5.8 Discussion

In this study, a novel taxonomy has been used to comprehensively and meticulously categorize different popular nature-inspired scheduling approaches in the cloud in terms of the scheduling algorithm, the nature of the scheduling problem, the type of task, the main scheduling goal, the task-resource mapping scheme, the scheduling constraint, and the testing environment.

Swarm-based optimization techniques are used in most of the scheduling algorithms found in the literature. The most popular swarm-based technique for scheduling problems in clouds is Particle Swarm Optimization. Among evolutionary techniques, the majority of researchers use Genetic Algorithms. These algorithms are occasionally integrated with other heuristic or meta-heuristic methods. Most of the studies address multi-objective problems and independent tasks. In most meta-heuristic algorithms, makespan is employed as the scheduling objective. Cost and energy efficiency are also given preference as scheduling objectives, whereas security and reliability receive less attention. The deadline is the constraint that nature-inspired techniques emphasize most, and fault tolerance is also a widely used constraint. Dynamic scheduling is more popular than static, AI-based, and prediction-based approaches. The most widely used task scheduling tool is the CloudSim simulation toolkit, whose existing classes can be extended in accordance with the algorithm requirements to evaluate a variety of QoS parameters, including makespan, monetary cost, computational cost, reliability, availability, scalability, energy consumption, security, and throughput.

6 Future research issues of nature-optimization task scheduling algorithms

Although nature-inspired algorithms have been applied successfully in many domains, many open problems still leave room for analysis and discussion. Cloud task scheduling presents a variety of difficulties, including heterogeneity, uncertainty, and resource dispersion, that conventional resource management techniques do not adequately address. Therefore, to increase the dependability of cloud applications and services, considerable attention should be given to these cloud characteristics. The workload should occupy the fewest resources needed to achieve the shortest job duration (and thus maximize system throughput) while maintaining the desired QoS level. Developing novel practical solutions in this field still requires additional research effort. Interaction between the computing environment and the cloud consumer should be kept to a minimum while fulfilling the QoS standards specified by users and maintaining the SLA. Performance degradation could therefore be prevented through research into an efficient autonomic, infrastructure-based technique that detects SLA violations in advance.
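As a simple illustration of detecting SLA violations in advance, the sketch below estimates the finish time of each queued task on a single VM and flags those that would miss their deadline before they run. The function name, the tuple layout, and the assumption of sequential, non-preemptive execution at a fixed speed are all hypothetical; an autonomic technique of the kind discussed above would use richer predictions.

```python
def predict_sla_violations(queue, vm_mips, now=0.0):
    """Return ids of queued tasks whose estimated finish time exceeds the deadline.

    queue: list of (task_id, length_in_MI, deadline_in_seconds) in execution order.
    vm_mips: processing speed of the VM (assumed constant).
    """
    finish = now
    late = []
    for task_id, length, deadline in queue:
        finish += length / vm_mips      # tasks run one after another
        if finish > deadline:
            late.append(task_id)
    return late


if __name__ == "__main__":
    # Hypothetical queue: task "b" is estimated to finish at t = 6 s, after its 5 s deadline.
    queue = [("a", 200, 3.0), ("b", 400, 5.0), ("c", 100, 8.0)]
    print(predict_sla_violations(queue, vm_mips=100))
```

A scheduler could react to a non-empty result by migrating or reordering the flagged tasks before the violation actually occurs.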

Increasing energy efficiency is one of the main issues in cloud computing. According to estimates, 53% of all operational expenses go into cooling and powering data centers. As a result, IaaS providers face an urgent responsibility to reduce energy use. Data center design should aim not only to save energy costs but also to comply with environmental regulations and legal requirements. Energy-aware server consolidation and energy-efficient task scheduling can lower power usage by turning off idle systems. Reliability is also one of the most demanding cloud computing concerns, since cloud service providers today seek to offer end customers high performance, high quality, and minimal processing time while maximizing profit. In addition, the scheduling strategy should safeguard the private and sensitive data contained in the submitted applications.
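The effect of turning off idle systems can be illustrated with a simple two-state power model. The sketch below assumes that each host draws a constant busy power while executing tasks and a constant idle power otherwise, and that the schedule horizon equals the longest busy time. The function name and the wattage figures are hypothetical and serve only to show the comparison.

```python
def schedule_energy(busy_time, p_busy, p_idle, power_off_idle=False):
    """Energy in joules consumed over the schedule horizon.

    busy_time[i]: seconds host i spends executing tasks.
    p_busy[i], p_idle[i]: power draw of host i in watts (assumed constant).
    power_off_idle: if True, a host is switched off whenever it is idle.
    """
    horizon = max(busy_time)            # schedule ends when the last host finishes
    total = 0.0
    for busy, pb, pi in zip(busy_time, p_busy, p_idle):
        total += pb * busy
        if not power_off_idle:
            total += pi * (horizon - busy)
    return total


if __name__ == "__main__":
    busy = [10.0, 4.0, 0.0]             # hypothetical busy times (s)
    p_busy = [200.0, 200.0, 200.0]      # hypothetical busy power (W)
    p_idle = [100.0, 100.0, 100.0]      # hypothetical idle power (W)
    print(schedule_energy(busy, p_busy, p_idle))                       # 4400.0
    print(schedule_energy(busy, p_busy, p_idle, power_off_idle=True))  # 2800.0
```

Under these assumptions, consolidating work onto fewer hosts and switching off the rest removes the idle term entirely, which is why consolidation and scheduling are usually considered together.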

As the numerous state-of-the-art scheduling methods chosen for this study show, no single algorithm can solve every problem. For instance, an algorithm that considers factors such as resource utilization, availability, response time, and scalability may entirely neglect the energy, cost, time, and quality of service (QoS) parameters that another algorithm emphasizes. Hybrid algorithms can be adapted more inventively to optimize, individually or collectively, a variety of scheduling goals, such as energy optimization, load balancing, and scalable VM migration.
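One common way to optimize several scheduling goals collectively is a weighted-sum fitness over normalized objectives. The sketch below is one possible formulation, not the approach of any particular surveyed algorithm. The objective names, bounds, and weights are hypothetical, and every objective is assumed to be minimized.

```python
def weighted_fitness(objectives, weights, bounds):
    """Weighted sum of min-max normalized objectives (lower is better).

    objectives: dict name -> raw value for one candidate schedule.
    weights:    dict name -> non-negative weight (assumed to sum to 1).
    bounds:     dict name -> (lo, hi) expected range, with hi > lo.
    """
    score = 0.0
    for name, value in objectives.items():
        lo, hi = bounds[name]
        normalized = (value - lo) / (hi - lo)
        score += weights[name] * normalized
    return score


if __name__ == "__main__":
    bounds = {"makespan": (10.0, 20.0), "cost": (1.0, 5.0)}
    a = {"makespan": 12.0, "cost": 4.0}   # fast but expensive schedule
    b = {"makespan": 16.0, "cost": 2.0}   # slow but cheap schedule

    time_first = {"makespan": 0.8, "cost": 0.2}
    cost_first = {"makespan": 0.2, "cost": 0.8}

    # The preferred schedule changes with the weights.
    print(weighted_fitness(a, time_first, bounds) < weighted_fitness(b, time_first, bounds))
    print(weighted_fitness(b, cost_first, bounds) < weighted_fitness(a, cost_first, bounds))
```

Because the ranking of candidate schedules depends on the chosen weights, a hybrid algorithm can reuse the same search procedure for different goals by changing only the fitness definition.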

7 Conclusion

Many scientific applications can now migrate to the cloud because of the adoption of the new distributed computing paradigm and cloud computing infrastructure. With the advantages of cloud computing, including virtualization and shared resource pools, it is possible to manage and run large-scale workflow applications without maintaining physical computing infrastructure. The use of the numerous resources accessible via the cloud needs to be optimized. Nature-inspired scheduling algorithms give better optimization results than traditional and heuristic approaches. Over the past few years, nature-inspired optimization has developed rapidly, establishing a significant trend in cloud task scheduling. Several existing studies have employed nature-inspired optimization algorithms to solve scheduling problems. However, these studies do not concentrate on all of the parameters required to analyze the importance of nature-inspired optimization algorithms in task scheduling. Most considered only a small number of parameters (such as the state of the art, QoS parameters, etc.) and did not simultaneously assess taxonomy, graphical representation, and research difficulties, which is necessary for a deeper understanding of task scheduling techniques based on nature-inspired optimization. To the best of our knowledge, a thorough, systematic, taxonomic assessment of nature-inspired optimization scheduling approaches in the cloud is still required to keep up with the ever-increasing growth of nature-inspired algorithms. Therefore, a comprehensive study of several nature-inspired meta-heuristic strategies has been conducted in this paper. Conventional and heuristic scheduling approaches were presented to distinguish them from nature-inspired optimization algorithms.
A novel taxonomy has been demonstrated to extensively and methodically classify various popular nature-inspired scheduling approaches in the cloud regarding the scheduling algorithm, the nature of the scheduling problem, the type of task, the primary scheduling goal, the task-resource mapping scheme, the scheduling constraint, and the testing environment. The results of each classification stage of taxonomy are analyzed and comprehensively examined to provide a roadmap for researchers working in the area of scheduling for enhancing cloud service.

In most of the literature, researchers have employed PSO and GA to solve cloud scheduling problems. The most active study areas, on which most studies concentrate, are makespan, cost, and resource utilization. Single-objective scheduling, reliability, rescheduling, budget, priority, and AI-based and prediction-based mapping schemes have attracted less attention from researchers; as a result, they should be urgently included in ongoing research. The solutions produced by many nature-inspired methods already in use can be improved by considering a hybrid approach. The advantages of existing nature-inspired algorithms can be combined in a hybrid manner to address the various issues raised by workflow applications that optimize across several criteria. At the end of the discussion, a few simulation tools used in cloud computing for implementing and testing new algorithms were briefly addressed and contrasted. This study also presented some outstanding research issues and upcoming trends. It primarily aimed to deepen the understanding of the fundamental ideas of nature-inspired task scheduling techniques in cloud computing, which should give investigators and users advice on how to identify the nature of their scheduling problem, pinpoint their primary QoS parameters, identify the best task-resource mapping scheme, and establish the scheduling constraints that are most suitable for the challenge, without violating the SLA.

Data Availability

Enquiries about data availability should be directed to the authors.

References

Kaur, R., Laxmi, V.: Performance evaluation of task scheduling algorithms in virtual cloud environment to minimize makespan. Int. J. Inf. Technol. (2022). https://doi.org/10.1007/s41870-021-00753-4

Gawali, M.B., Shinde, S.K.: Task scheduling and resource allocation in cloud computing using a heuristic approach. J. Cloud Comput. 7(1), 1–16 (2018)

Singh, S., Chana, I.: A survey on resource scheduling in cloud computing: issues and challenges. J. Grid Comput. 14, 217–264 (2016)

Mathew, T., Sekaran, K.C., Jose, J.: Study and analysis of various task scheduling algorithms in the cloud computing environment. In: 2014 International Conference on Advances in Computing, Communications and Informatics (ICACCI), pp. 658–664. IEEE (2014)

Xu, L., Qiao, J., Lin, S., Zhang, W.: Dynamic task scheduling algorithm with deadline constraint in heterogeneous volunteer computing platforms. Future Internet 11(6), 121 (2019)

Damodaran, P., Chang, P.Y.: Heuristics to minimize makespan of parallel batch processing machines. Int. J. Adv. Manuf. Technol. 37, 1005–1013 (2008)

Kim, S.I., Kim, J.K.: A method to construct task scheduling algorithms for heterogeneous multi-core systems. IEEE Access 7, 142640–142651 (2019)

Pinedo, M., Hadavi, K.: Scheduling: theory, algorithms and systems development. In: Operations Research Proceedings 1991: Papers of the 20th Annual Meeting/Vorträge der 20. Jahrestagung, pp. 35–42. Springer, Berlin (1992)

Houssein, E.H., Gad, A.G., Wazery, Y.M., Suganthan, P.N.: Task scheduling in cloud computing based on meta-heuristics: review, taxonomy, open challenges, and future trends. Swarm Evol. Comput. 62, 100841 (2021)

Singh, H., Tyagi, S., Kumar, P.: Scheduling in cloud computing environment using metaheuristic techniques: a survey. In: Shal, V. (ed.) Emerging technology in modelling and graphics: proceedings of IEM graph 2018, pp. 753–763. Springer Singapore, Singapore (2020)

Liu, Y., Zhang, C., Li, B., Niu, J.: DeMS: A hybrid scheme of task scheduling and load balancing in computing clusters. J. Netw. Comput. Appl. 83, 213–220 (2017)

Kumar, D.: Review on task scheduling in ubiquitous clouds. J. ISMAC 1(01), 72–80 (2019)

Allahverdi, A., Ng, C.T., Cheng, T.E., Kovalyov, M.Y.: A survey of scheduling problems with setup times or costs. Eur. J. Oper. Res. 187(3), 985–1032 (2008)

Remesh Babu, K.R., Samuel, P.: Enhanced bee colony algorithm for efficient load balancing and scheduling in cloud. In: Innovations in Bio-Inspired Computing and Applications: Proceedings of the 6th International Conference on Innovations in Bio-Inspired Computing and Applications (IBICA 2015) held in Kochi, India during December 16–18, 2015, pp. 67–78. Springer International Publishing (2016)

Taillard, E.: Some efficient heuristic methods for the flow shop sequencing problem. Eur. J. Oper. Res. 47(1), 65–74 (1990)

Morton, T., Pentico, D.W.: Heuristic scheduling systems: with applications to production systems and project management. John Wiley, Hoboken (1993)

Bissoli, D.C., Altoe, W.A., Mauri, G.R., Amaral, A.R.: A simulated annealing metaheuristic for the bi-objective flexible job shop scheduling problem. In: 2018 International Conference on Research in Intelligent and Computing in Engineering (RICE), pp. 1–6. IEEE (2018)

Gong, G., Chiong, R., Deng, Q., Gong, X.: A hybrid artificial bee colony algorithm for flexible job shop scheduling with worker flexibility. Int. J. Prod. Res. 58(14), 4406–4420 (2020)

Zarrouk, R., Bennour, I.E., Jemai, A.: A two-level particle swarm optimization algorithm for the flexible job shop scheduling problem. Swarm Intell. 13, 145–168 (2019)

Sörensen, K., Glover, F.: Metaheuristics. Encycl. Operations Res. Manag. Sci. 62, 960–970 (2013)

Garg, D., Kumar, P.: A survey on metaheuristic approaches and its evaluation for load balancing in cloud computing. In: Advanced Informatics for Computing Research: Second International Conference, ICAICR 2018, Shimla, India, July 14–15, 2018, Revised Selected Papers, Part I 2, pp. 585–599. Springer Singapore (2019)

Kaur, N., Chhabra, A.: Analytical review of three latest nature inspired algorithms for scheduling in clouds. In: 2016 International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT), pp. 3296–3300. IEEE (2016)

Garg, D., Kumar, P.: A survey on metaheuristic approaches and its evaluation for load balancing in cloud computing. In: Advanced Informatics for Computing Research: Second International Conference, ICAICR 2018, Shimla, India, July 14–15, 2018, Revised Selected Papers, Part I 2, pp. 585–599. Springer Singapore (2019)

Kalra, M., Singh, S.: A review of metaheuristic scheduling techniques in cloud computing. Egypt. Inf. J. 16(3), 275–295 (2015)

Kaur, N., Chhabra, A.: Analytical review of three latest nature inspired algorithms for scheduling in clouds. In: 2016 International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT), pp. 3296–3300. IEEE (2016)

Tsai, C.W., Rodrigues, J.J.: Metaheuristic scheduling for cloud: a survey. IEEE Syst. J. 8(1), 279–291 (2013)

Nandhakumar, C., Ranjithprabhu, K.: Heuristic and meta-heuristic workflow scheduling algorithms in multi-cloud environments: a survey. In: 2015 International Conference on Advanced Computing and Communication Systems, pp. 1–5. IEEE (2015)

Hatchuel, A., Saidi-Kabeche, D., Sardas, J.C.: Towards a new planning and scheduling approach for multistage production systems. Int. J. Prod. Res. 35(3), 867–886 (1997)

Lawler, E.L., Lenstra, J.K., Rinnooy Kan, A.H.G.: Recent developments in deterministic sequencing and scheduling: a survey. In: Deterministic and Stochastic Scheduling: Proceedings of the NATO Advanced Study and Research Institute on Theoretical Approaches to Scheduling Problems held in Durham, England, July 6–17, 1981, pp. 35–73. Springer Netherlands (1982)

Madni, S.H.H., Abd Latiff, M.S., Abdullahi, M., Abdulhamid, S.I.M., Usman, M.J.: Performance comparison of heuristic algorithms for task scheduling in IaaS cloud computing environment. PLoS ONE 12(5), e0176321 (2017)

Mazumder, A.M.R., Uddin, K.A., Arbe, N., Jahan, L., Whaiduzzaman, M.: Dynamic task scheduling algorithms in cloud computing. In: 2019 3rd International conference on Electronics, Communication and Aerospace Technology (ICECA), pp. 1280–1286. IEEE (2019)

Chowdhury, N., M Aslam Uddin, K., Afrin, S., Adhikary, A., Rabbi, F.: Performance evaluation of various scheduling algorithm based on cloud computing system. Asian J. Res. Comput. Sci. 2(1), 1–6 (2018)

Balharith, T., Alhaidari, F.: Round robin scheduling algorithm in CPU and cloud computing: a review. In: 2019 2nd International Conference on Computer Applications & Information Security (ICCAIS), pp. 1–7. IEEE (2019)