Abstract

In reversible watermarking, the robustness of the watermark and the perceptual quality of the recovered host image have a major impact on the usefulness of the watermarking method. The proposed method improves the embedding capacity and the perceptual quality while providing better robustness. Image pre-processing by Gaussian filtering is the first step of the watermark embedding process. The peak points of the histogram are selected for embedding the secret data. High frequency component modification is performed at the pixel positions at which the watermark is embedded. After the side information is added, a secret key is provided for authentication of the watermarked image. In the extraction process, after authentication and extraction of the side information, the Gaussian filter is applied. Using the side information, the watermarked positions are identified, the secret data is extracted and the host image is recovered. Embedding capacity, peak signal to noise ratio (PSNR), structural similarity (SSIM) index, bit rate and bit error rate are used for evaluation. The experimental results show that the proposed method provides better robustness and perceptual quality than the existing method.

1 Introduction

In the modern era, owing to the development of communication techniques, information such as images, audio, video and text is transmitted in digital form. Transmitted data can easily be intercepted by unauthorized users when security is lacking. Therefore, information security has received considerable attention in recent research.

Reversible data hiding hides secret information in a host image such that, at the receiving side, both the host image and the secret data can be completely restored. However, such methods are generally fragile and have little robustness against attacks. Histogram shifting and difference expansion are the most effective methods used in the spatial domain. In histogram shifting, data hiding is performed by modifying the histogram of the host image.

Ni et al. [1] proposed a technique in which the peak point and the zero point of the image histogram are identified. The peak point is identified in order to calculate the maximum hiding capacity of the image. Histogram shifting of selected image blocks was proposed by Fallahpour and Sedaaghi [2]. A histogram is generated for each block, and data hiding is performed by relocating the minimum and maximum gray levels of the image histogram. Lee et al. [3] proposed a technique with high visual quality for image authentication. The Message Digest 5 (MD5) cryptographic hash is used for image integrity. The difference histogram of the image is used to embed the XOR of the binary logo image and the hash code.

Van Leest et al. [4] presented histogram shifting based reversible data hiding with some modifications to previous work. The secret information is embedded in the cover image by adding or subtracting a selected integer. In this method, the vacant spaces in the histogram are identified in order to shift the histogram bins, and the bins are expanded at the vacant locations, based on the selected integer value, to carry the hidden data.

A method based on the histogram of pixel differences was proposed by Tai et al. [5]. The differences between pixels are calculated and their histogram is formed; this histogram has the shape of a Laplacian-like distribution. This method provides better performance than other reversible data hiding techniques. Xuan et al. [6] proposed an adaptive histogram modification method for lossless data hiding based on histogram pairs. The PSNR value is improved by the selection of threshold points, and the adaptive histogram modification process handles underflow/overflow of pixels. Histogram-based lossless data embedding (LDE) has been recognized as a tool for the copyright protection of multimedia. This method uses the arithmetic average difference histogram, which provides a stable lossless data hiding technique but has some practical limitations.

Gao et al. [7] developed a generalized statistical quantity histogram method which overcomes the limitations of the adaptive histogram based method. This method exploits the similarity of statistical quantities, a divide and conquer strategy and scalability, which provide high robustness. Owing to the transmission and safe storage of side information, this method provides high security for copyright protection. Qin et al. [8] proposed a novel prediction-based reversible steganographic method that adaptively chooses the reference pixels based on the pixel distribution. A partial differential equation is applied for image inpainting. The secret bits are embedded by choosing the peak and zero points and then shifting the histogram of the prediction error.

Dragoi et al. [9] proposed a data embedding scheme using prediction errors by selecting multiple histogram bins. The prediction errors of the host image are calculated in four different modes. The best performance of the watermarking scheme is obtained by using the histograms of all four modes. Data extraction and recovery of the original image are performed using the side information and the pre-computed location maps.

Chen et al. [10] proposed histogram shifting of prediction errors for reversible watermarking. Using a multiple error prediction scheme, an asymmetric error histogram is constructed by choosing a suitable error prediction method. The secret data is embedded by combining the maxima and minima errors. The quality of the watermarked image is improved in this scheme owing to the reduced number of shifted pixels in the asymmetric error histogram.

In Zong et al. [12], the watermark embedding and extraction process is based on the shape of the histogram. As the first step of the data embedding phase, the host image pixels are separated into low frequency and high frequency components by a Gaussian low pass filter. A number of gray levels in the low frequency component are selected at random by using a secret key.

The pixel group with the highest number of pixels is selected, and a safe band is built for the selected pixels, by means of a histogram-shape related index. In the selected pixel group, the pixels used for embedding the secret data are moved to a certain gray level of the same group. To overcome the side effects of Gaussian filtering, high frequency component modification is implemented and combined with the watermarked low frequency component.

In this paper, the peak points of the image histogram are considered for the data embedding and extraction process. In the embedding phase, Gaussian filtering is applied to the host image to separate it into low and high frequency components. In the histogram of the low frequency components, the gray level with the peak value is selected to embed the secret data. A secret key is used for image authentication and for the secure transmission of the side information about the embedded pixel positions. The embedding capacity of this method is increased by selecting consecutive peak values of the histogram.

In the existing scheme [12], only bits (0s and 1s) are embedded in the identified positions. In the proposed method, characters are embedded directly in the identified pixel positions by using their ASCII values. This greatly improves the embedding capacity of the images. In the decoding phase, the embedded pixel positions are identified using the secret key. Once the positions are identified, the secret data is extracted and these pixel positions are replaced with the original pixel values to recover the host image.

The remaining sections of this paper are organized as follows. The proposed watermark embedding and extraction methods are illustrated in Sect. 2. The simulation results and discussions are provided in Sect. 3. Finally, Sect. 4 concludes the paper.

2 Proposed watermarking method

The block diagram of the proposed watermark embedding scheme is shown in Fig. 1. The proposed watermarking method has the following steps: filtering, peak point identification, pixel shifting, watermark embedding, high frequency component modification (HFCM), addition of side information and authentication.

2.1 Filtering process

The input gray scale image is separated into low frequency components (\(\hbox {I}_{\mathrm {low}})\) and high frequency components (\(\hbox {I}_{\mathrm {high}})\). This operation is performed by the two dimensional Gaussian low pass filter [12, 13]. When the image is exposed to some noise during the communication, the effect of noise will be higher in the high frequency component when compared to low frequency component. So, to improve the robustness of the proposed system, the low frequency component of the host image is used to embed the secret data.

The two-dimensional Gaussian function [11] is represented by

\[G(x,y)=\frac{1}{2\pi \upsigma ^{2}}\exp \left( -\frac{x^{2}+y^{2}}{2\upsigma ^{2}}\right) \]

where (x, y) is the pixel position of the image and \(\upsigma \) is the standard deviation of the Gaussian distribution.

The standard deviation ‘\(\upsigma \)’ of the Gaussian distribution is usually taken as 1. The low pass output is obtained by convolving the filter function with the host image. In mathematical form it is represented as

\[I_{low}=G(x,y)*I\]

where \(*\) denotes two-dimensional convolution and \(I\) is the host image.

The Gaussian filter removes the high frequency components of the host image. The high frequency components of the image are obtained by

\[I_{high}=I-I_{low}\]
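The filtering step can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation (the simulations in Sect. 3 use MATLAB): the function names `gaussian_kernel` and `split_frequencies`, the 5 × 5 mask size, the normalisation of the mask to unit sum and the replication of border pixels are all assumptions made for the example.

```python
import math


def gaussian_kernel(size=5, sigma=1.0):
    """Return a size x size Gaussian mask, normalised so its entries sum to 1."""
    half = size // 2
    raw = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
            for x in range(-half, half + 1)]
           for y in range(-half, half + 1)]
    total = sum(sum(row) for row in raw)
    return [[v / total for v in row] for row in raw]


def split_frequencies(image, kernel):
    """Split a 2-D list of gray values into (I_low, I_high), with I = I_low + I_high."""
    rows, cols = len(image), len(image[0])
    half = len(kernel) // 2
    low = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for dr in range(-half, half + 1):
                for dc in range(-half, half + 1):
                    # Assumption: pixels outside the image are replaced by the
                    # nearest border pixel (clamped indices).
                    rr = min(max(r + dr, 0), rows - 1)
                    cc = min(max(c + dc, 0), cols - 1)
                    acc += kernel[dr + half][dc + half] * image[rr][cc]
            low[r][c] = acc
    # The high frequency component is whatever the low pass filter removed.
    high = [[image[r][c] - low[r][c] for c in range(cols)] for r in range(rows)]
    return low, high
```

Because the mask is symmetric, convolution and correlation coincide here, so the loop does not flip the kernel. A perfectly flat image passes through unchanged and its high frequency component is zero.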

2.2 Peak point identification and histogram shifting

The image histogram is a graphical representation of an image that plots the number of pixels against the gray level values. The image content is closely related to the shape of the histogram. The histogram of the low frequency components, which reflects the image content, is plotted. In 8-bit gray scale images the pixel values range from 0 to 255, where the value ‘0’ represents black and the value ‘255’ represents white. Figure 2 shows the sample histogram of an image.

The embedding capacity of the image is the number of secret bits that can be embedded into the host image. The highest peak point of the histogram is selected as the watermark embedding point. For example, in Fig. 2 the gray level 104 has the peak value of 1419, so the embedding capacity is 11,352 bits (1419 × 8 bits), since each selected pixel carries one 8-bit character. The Gaussian low pass filter output contains all the low frequency components (I\(_{\mathrm {low}})\) of the host image. In the proposed system, the histogram of I\(_{\mathrm {low}}\) is plotted to identify the embedding capacity of the image. To improve the embedding capacity, multiple peak points in the histogram are selected.
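The capacity calculation can be illustrated with a short Python sketch. The function name `peak_capacity`, the rounding of the low frequency values to integers and the tie-breaking rule are assumptions made for the example, not details taken from the method.

```python
from collections import Counter


def peak_capacity(low_image, num_peaks=1, bits_per_pixel=8):
    """Return ([(gray_level, count), ...], capacity_in_bits) for the highest peaks."""
    # Assumption: I_low values are rounded to integer gray levels before counting.
    hist = Counter(int(round(v)) for row in low_image for v in row)
    # Sort by pixel count (descending); ties are broken by the lower gray level.
    peaks = sorted(hist.items(), key=lambda kv: (-kv[1], kv[0]))[:num_peaks]
    capacity = sum(count for _, count in peaks) * bits_per_pixel
    return peaks, capacity


# Toy data mirroring the example in the text: 1419 pixels at gray level 104.
toy = [[104] * 1419 + [50] * 700 + [200] * 300]
print(peak_capacity(toy))               # ([(104, 1419)], 11352)
print(peak_capacity(toy, num_peaks=2))  # ([(104, 1419), (50, 700)], 16952)
```

Selecting a second peak adds its pixel count to the capacity, which is how choosing multiple peak points raises the number of bits that can be embedded.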

The gray levels at the peak points are selected for watermark embedding. The selected group of pixels is shifted to the nearest neighbouring gray levels to create vacant places in the histogram. These locations are used for embedding the secret data. The number of pixel positions that are shifted depends on the length of the secret information, which improves the quality of the watermarked image.

The histogram shifting is performed based on the following conditions.

where \(H_{S}\) is the histogram shifting, \(i= 1,2,3,4,\ldots \), \(G_{1}\) is the gray level of the first highest peak point, \(G_{2}\) is the gray level of the second highest peak point, and so on.

This method of shifting prevents overflow and underflow of the pixels, i.e., the shifted gray levels remain in the range 0–255.

Figure 3 shows the shifted pixel positions in the histogram. The vacant positions are used to embed the secret data in the image, i.e., all the pixels at gray level 104 are shifted to neighbouring gray levels, so that the number of pixels at gray level 104 is ‘0’.
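The effect described for Fig. 3 can be sketched as below. The exact shifting conditions of the method are not reproduced here; the rule used (shift up by one gray level, or down by one at level 255) and the function name `shift_peak` are assumptions chosen only to show how a histogram bin is emptied without leaving the range 0–255.

```python
def shift_peak(image, peak_level):
    """Move every pixel at peak_level to an adjacent gray level, emptying that bin."""
    # Assumption: shift up by one level, except at 255 where shifting down
    # avoids overflow. The method's own shifting conditions may differ.
    target = peak_level + 1 if peak_level < 255 else peak_level - 1
    # Record where the peak pixels were, so the positions stay identifiable.
    positions = [(r, c) for r, row in enumerate(image)
                 for c, v in enumerate(row) if v == peak_level]
    shifted = [[target if v == peak_level else v for v in row] for row in image]
    return shifted, positions


image = [[104, 105, 104],
         [90, 104, 255]]
shifted, positions = shift_peak(image, 104)
print(shifted)    # [[105, 105, 105], [90, 105, 255]]
print(positions)  # [(0, 0), (0, 2), (1, 1)]
```

After the shift no pixel remains at gray level 104, matching the empty bin in the shifted histogram.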

2.3 Watermark embedding

The watermark embedding is done by the pixel replacement method. In this method, characters are the secret data to be embedded in the host image. The ASCII values of the characters are taken as the values to be hidden in the image. Here, the pixel positions shifted for watermark embedding are replaced with these ASCII values.

The embedding process is done by

where \(I_{low\_wtrmrk}\) is the watermarked low frequency image, \(I_{low}\) is the original low frequency image, \(I_{low\_shifted}\) is the histogram shifted image and \(G_{i}\) is the selected gray level.
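A toy Python sketch of embedding by pixel replacement, together with the matching extraction and recovery, is given below. It is one possible reading of the step, not the authors' implementation: the histogram shifting of Sect. 2.2 is omitted, the embedded positions are kept explicitly in a `side_info` dictionary, and the function names `embed_text` and `extract_text` are hypothetical.

```python
def embed_text(low_image, peak_level, message):
    """Replace the first len(message) pixels at peak_level with ASCII codes."""
    marked = [list(row) for row in low_image]
    positions = [(r, c) for r, row in enumerate(marked)
                 for c, v in enumerate(row) if v == peak_level]
    codes = message.encode("ascii")  # one 8-bit value per character
    if len(codes) > len(positions):
        raise ValueError("message exceeds the capacity of this peak")
    used = positions[:len(codes)]
    for (r, c), code in zip(used, codes):
        marked[r][c] = code
    # Assumption: the positions themselves are stored as side information.
    side_info = {"peak_level": peak_level, "positions": used}
    return marked, side_info


def extract_text(marked, side_info):
    """Read the characters back and restore the original gray level."""
    recovered = [list(row) for row in marked]
    chars = []
    for r, c in side_info["positions"]:
        chars.append(chr(recovered[r][c]))
        recovered[r][c] = side_info["peak_level"]
    return "".join(chars), recovered


host = [[104, 50, 104],
        [104, 104, 60]]
marked, info = embed_text(host, 104, "Hi")
text, restored = extract_text(marked, info)
print(text, restored == host)  # Hi True
```

Each replaced pixel carries a full character, which is why the capacity is counted as 8 bits per peak pixel, and restoring the peak gray level at the recorded positions returns the host values exactly in this toy setting.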

2.4 Quality improvement using HFCM

The perceptual quality of the image is directly affected when pixels are moved from one gray level to another. The number of pixels moved must be kept low to preserve the perceptual quality. In this approach all the selected pixels are shifted to the next or the previous gray level. To reduce the side effect of Gaussian filtering, \(I_{high}\) of the host image must be properly modified.

Let \(I_{R}\) be the received watermarked image, \(I_{wtrmrk}\) be the original watermarked image and \({I}_{R-low\_wtrmrk}\) be the low frequency component of the received image.

If the image is received exactly, the equation can be represented as

For exact extraction of the watermark data, \({I}_{R-low\_wtrmrk}=I_{wtrmrk}\) is required, but the Gaussian filtering may introduce side effects that increase the error in the watermark detection process. If a pixel of \(I_{low}\) is modified for watermarking, the corresponding pixel of \(I_{high}\) must also be modified to improve the quality at the receiving side. The relation between \(I_{low}\) and \(I_{high}\) should be identified before the modification.

For example, consider a Gaussian mask of size 5 \(\times \) 5 as shown in Fig. 4; the filter applied to the image is then as shown in Fig. 5.

The changes in the pixel \({I\, }_{33}\), due to the Gaussian filter centred at \({I\, }_{33}\) will be

where,

The relation between the original and the low frequency component of the image is given by

We know that the input image is expressed as \(I=I_{high}+I_{low}\)

Now, \(I_{high}={I-I}_{low}\)

Let \(\varphi \) be the modified value in the low frequency image due to watermark embedding.

The received high frequency component can be expressed as

The total modification in the high frequency component is given by

The Gaussian mask has its major effect on the centre pixel, and the effect diminishes towards the edges of the mask. As shown in Fig. 6, the direct neighbouring pixels of \(I_{33}\) are \((I_{22}, I_{24}, I_{42}, I_{44})\), group 1, and \((I_{23}, I_{32}, I_{34}, I_{43})\), group 2. The pixels belonging to the same group experience a similar effect owing to the symmetrical and circular property of the Gaussian filter.
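As a worked illustration of this grouping, assume an unnormalised mask with \(\upsigma =1\) (the value assumed in Sect. 2.1) and write \(w(\Delta x,\Delta y)\) for the mask weight at offset \((\Delta x,\Delta y)\) from the centre pixel \(I_{33}\); the symbol \(w\) is introduced here for illustration only. The weight depends only on the squared distance from the centre:

\[w(\Delta x,\Delta y)\propto \exp \left( -\frac{\Delta x^{2}+\Delta y^{2}}{2\upsigma ^{2}}\right) ,\qquad w_{group\,2}\propto e^{-1/2}\approx 0.607,\qquad w_{group\,1}\propto e^{-1}\approx 0.368\]

Group 2 pixels lie at squared distance 1 from the centre and group 1 pixels at squared distance 2, so all four pixels of a group share one weight, relative to a centre weight of 1.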

So, here the effects on \(I_{23}\) and \(I_{43}\) are analysed.

If \(I_{33}\) is altered then \({I\, }_{43-low}\) will be affected.

Now,

where \(\varphi \) is the amount of change made to the pixel value.

Then,

The variation in \(I_{44-low}\) is expressed as

Now, the watermarked low frequency components and the compensated high frequency components are combined to form the watermarked host image. The gray value at which the watermarks are embedded and the number of embedded watermark bits are added as the side information. The side information is embedded directly by pixel replacement in the watermarked host image, by selecting unused pixel positions with a pseudo noise sequence.

In order to improve the security of the image, the watermarked host image is provided with a secret key. This key is securely transmitted to the receiver for the watermark extraction process.

2.5 Watermark extraction

Figure 7 shows the block diagram of the proposed watermark extraction process. The watermark decoding process involves image authentication, extraction of side information, identification of watermarked groups, watermark extraction and image reconstruction.

The received image is first authenticated using the secret key shared by the transmitting side. The side information is then extracted from the received image using the pseudo noise sequence and is used to identify the pixel positions where the secret data is embedded. The Gaussian filter is applied to the received image and the histogram of the low frequency components is constructed. The output of the Gaussian filter for the received image will be

If the image is received exactly, without any error, then the equation is represented as

The watermarked groups are identified from the side information and the watermark is extracted. After the extraction of the watermark, the pixel positions of the extracted watermark are restored to the original pixel values by using the side information.

3 Simulation results

In this section, the perceptual quality and robustness of the images are evaluated by simulation for the proposed method and the Zong method. All the simulations are performed in MATLAB.

For evaluation, eight different gray scale images, namely Rice, Cameraman, Barbara, Boat, House, Lena, Tree and Mandrill, of size 512 \(\times \) 512 are used as host images, as shown in Fig. 8. The watermarked images are shown in Fig. 9. The watermark-extracted images and the reconstructed host images are shown in Figs. 10 and 11, respectively.

The parameters [14] used for evaluation are embedding capacity (EC), peak signal to noise ratio (PSNR), structural similarity (SSIM) index, bit rate (BR) and bit error rate (BER). The SSIM, mean squared error (MSE) and PSNR are indicators of the perceptual quality of the images. The BER is the indicator of the robustness of the image. The higher the PSNR and SSIM, the higher the perceptual quality.

Embedding capacity (EC) is defined as the number of bits that can be embedded in the host image.

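The similarity between two images is measured by the SSIM index, expressed as

\[\hbox {SSIM}(x,y)=\frac{(2\mu _{x}\mu _{y}+C_{1})(2\sigma _{xy}+C_{2})}{(\mu _{x}^{2}+\mu _{y}^{2}+C_{1})(\sigma _{x}^{2}+\sigma _{y}^{2}+C_{2})}\]

where \(\mu _{x}\) and \(\mu _{y}\) are the mean intensities of the two images x and y, \(\sigma _{x}^{2}\) and \(\sigma _{y}^{2}\) are their variances, \(\sigma _{xy}\) is their covariance, and \(C_{1}\) and \(C_{2}\) are small constants that stabilise the division.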

BR is the ratio of the maximum number of bits that can be embedded to the total number of bits.

BER is the ratio of the number of error bits received to the total number of bits transmitted.
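The BER definition translates directly into a few lines of Python. The helper names `text_to_bits` and `bit_error_rate` are hypothetical, and the 8-bit ASCII encoding of the characters is an assumption consistent with the embedding described in Sect. 2.3.

```python
def text_to_bits(text):
    """Expand ASCII text into a list of bits, 8 bits per character, MSB first."""
    return [int(b) for ch in text.encode("ascii") for b in format(ch, "08b")]


def bit_error_rate(sent_bits, received_bits):
    """Fraction of transmitted bits that differ at the receiver."""
    if not sent_bits or len(sent_bits) != len(received_bits):
        raise ValueError("bit sequences must be non-empty and of equal length")
    errors = sum(1 for s, r in zip(sent_bits, received_bits) if s != r)
    return errors / len(sent_bits)


print(text_to_bits("A"))                             # [0, 1, 0, 0, 0, 0, 0, 1]
print(bit_error_rate([1, 0, 1, 1], [1, 1, 1, 0]))    # 0.5
```

A BER of 0 means the watermark was extracted without error; values closer to 0 indicate better robustness under an attack.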

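The PSNR is defined as the ratio of the squared maximum possible pixel value of the image to the MSE, expressed in decibels:

\[\hbox {PSNR}=10\log _{10}\left( \frac{255^{2}}{\hbox {MSE}}\right) \]

where

\[\hbox {MSE}=\frac{1}{MN}\sum _{i=1}^{M}\sum _{j=1}^{N}\left[ W(i,j)-I(i,j)\right] ^{2}\]

W(i, j) is the watermarked image, I(i, j) is the original image and \(M\times N\) is the image size.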
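The MSE and PSNR can be computed as in the following sketch, assuming 8-bit images stored as equally sized 2-D lists and the usual decibel form of the PSNR with a peak value of 255. The function names `mse` and `psnr` are chosen for the example.

```python
import math


def mse(original, watermarked):
    """Mean squared error between two equally sized 2-D lists of gray values."""
    total = 0.0
    count = 0
    for row_i, row_w in zip(original, watermarked):
        for i_val, w_val in zip(row_i, row_w):
            total += (w_val - i_val) ** 2
            count += 1
    return total / count


def psnr(original, watermarked, max_value=255):
    """Peak signal to noise ratio in dB; infinite when the images are identical."""
    err = mse(original, watermarked)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / err)


host = [[100, 120], [140, 160]]
marked = [[101, 121], [141, 161]]   # every pixel changed by one gray level
print(round(psnr(host, marked), 2))  # 48.13
```

A uniform change of one gray level gives an MSE of 1 and therefore a PSNR of about 48.13 dB, which puts the single-shift results reported below in context.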

Higher PSNR and SSIM and lower BER indicate better performance of a watermarking method. All the parameters are tested with different numbers of gray level shifts in the absence of any attack on the transmitted image.

For all the conditions, the proposed method performs better than the Zong method. Table 1 compares the parameters of the proposed method and the Zong method with a single gray level shift. For the single gray level shift, the proposed method has \(\hbox {PSNR}=48.79\) and \(\hbox {SSIM}=0.96\), whereas the Zong method has \(\hbox {PSNR}=44.89\) and \(\hbox {SSIM}=0.846\).

Table 2 compares the parameters of the proposed method and the Zong method with two gray level shifts. For two gray level shifts, the proposed method has \(\hbox {PSNR}=45.21\) and \(\hbox {SSIM}=0.95\), whereas the Zong method has \(\hbox {PSNR}=39.27\) and \(\hbox {SSIM}=0.741\).

Table 3 compares the parameters of the proposed method and the Zong method with three gray level shifts. For three gray level shifts, the proposed method has \(\hbox {PSNR}=39.88\) and \(\hbox {SSIM}=0.924\), whereas the Zong method has \(\hbox {PSNR}=35.49\) and \(\hbox {SSIM}=0.670\).

Table 4 compares the parameters of the proposed method and the Zong method with four gray level shifts. For four gray level shifts, the proposed method has \(\hbox {PSNR}=36.81\) and \(\hbox {SSIM}=0.871\), whereas the Zong method has \(\hbox {PSNR}=33.13\) and \(\hbox {SSIM}=0.625\).

Table 5 compares the parameters of the proposed method and the Zong method with five gray level shifts. For five gray level shifts, the proposed method has \(\hbox {PSNR}=35.32\) and \(\hbox {SSIM}=0.834\), whereas the Zong method has \(\hbox {PSNR}=31.79\) and \(\hbox {SSIM}=0.600\).

The parameter comparison shows that the proposed method provides better perceptual quality. The embedding capacity of the images is also improved owing to the pixel replacement method.

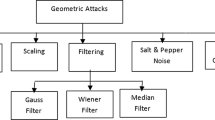

The PSNR, SSIM and BER are also tested under some common image attacks on the transmitted image. Salt and pepper noise, Gaussian noise, median filtering, rotation and scaling are used for the comparison.

Table 6 compares the PSNR of the proposed method and the Zong method under common attacks. The proposed method and the Zong method attain average values of \(\hbox {PSNR}=48.08\) and \(\hbox {PSNR}=47.58\), respectively.

Table 7 compares the SSIM of the proposed method and the Zong method under common attacks. The proposed method and the Zong method attain average values of \(\hbox {SSIM}=0.97\) and \(\hbox {SSIM}=0.967\), respectively.

Table 8 compares the BER of the proposed method and the Zong method under common attacks. The proposed method and the Zong method attain average values of \(\hbox {BER}=0.031\) and \(\hbox {BER}= 0.034\), respectively.

4 Conclusion

The main contributions of this paper are: (1) identification of the watermark embedding points in the low frequency component, (2) watermark embedding and high frequency component modification, (3) image authentication and (4) watermark extraction and host image reconstruction. Watermarks are embedded only in the low frequency component of the images, which is obtained by Gaussian low pass filtering in the pre-processing step. The embedded positions are identified by using the side information extracted from the watermarked image. The HFCM method improves the quality of the watermarked image. The security of the image is improved by providing a secret key for authentication. As demonstrated in the simulation results, the proposed method provides better perceptual quality and robustness.

References

1. Ni, Z., Shi, Y.Q., Ansari, N., Su, W.: Reversible data hiding. IEEE Trans. Circuits Syst. Video Technol. 16(3), 354–362 (2006)

2. Fallahpour, M., Sedaaghi, M.H.: High capacity lossless data hiding based on histogram modification. IEICE Electronics Express 4(7), 205–210 (2007)

3. Lee, S.K., Suh, Y.H., Ho, Y.S.: Reversible image authentication based on watermarking. Proc. IEEE International Conference on Multimedia and Expo, 1321–1324 (2006)

4. Van Leest, A., Van der Veen, M., Bruekers, F.: Reversible image watermarking. Proc. IEEE International Conference on Information Process. 2(1), II-731–II-734 (2003)

5. Tai, W.L., Yeh, C.M., Chang, C.C.: Reversible data hiding based on histogram modification of pixel differences. IEEE Trans. Circuits Syst. Video Technol. 19(6), 906–910 (2009)

6. Xuan, G., Shi, Y.Q., Chai, P., Cui, X., Ni, Z., Tong, X.: Optimum histogram pair based image lossless data embedding. Proceedings of International Workshop on Digital Watermarking, 264–278 (2007)

7. Gao, X., An, L., Yuan, Y., Tao, D., Li, X.: Lossless data embedding using generalized statistical quantity histogram. IEEE Trans. Circuits Syst. Video Technol. 21(8), 1062–1070 (2011)

8. Qin, C., Chang, C.C., Huang, Y.H., Liao, L.T.: An inpainting assisted reversible steganographic scheme using a histogram shifting mechanism. IEEE Trans. Circuits Syst. Video Technol. 23(7), 1109–1118 (2013)

9. Dragoi, I.C., Coltuc, D., Wu, H.-T., Huang, J.: Reversible image watermarking on prediction errors by efficient histogram modification. Signal Processing Letters 92(12), 3000–3009 (2012)

10. Chen, X., Sun, X., Sun, H., Zhou, Z., Zhang, J.: Reversible watermarking method based on asymmetric-histogram shifting of prediction errors. J. Syst. Softw. 86(10), 2620–2626 (2013)

11. Zhang, X.: Reversible data hiding with optimal value transfer. IEEE Trans. Multimedia 15(2), 316–325 (2013)

12. Zong, T., Xiang, Y., Natgunanathan, I., Guo, S., Zhou, W., Beliakov, G.: Robust histogram shape-based method for image watermarking. IEEE Trans. Circuits Syst. Video Technol. 25(5), 717–729 (2015)

13. Ito, K., Xiong, K.: Gaussian filters for nonlinear filtering problems. IEEE Trans. Autom. Control 45(5), 910–927 (2000)

14. Nisha, Kumar, S.: Image quality assessment techniques. International Journal of Advanced Research in Computer Science and Software Engineering 3(7), 636–640 (2010)

Cite this article

Rajkumar, R., Vasuki, A. Reversible and robust image watermarking based on histogram shifting. Cluster Comput 22 (Suppl 5), 12313–12323 (2019). https://doi.org/10.1007/s10586-017-1614-9

DOI: https://doi.org/10.1007/s10586-017-1614-9