Abstract

We use an integrated assessment model of climate change to analyze how alternative decision-making criteria affect preferred investments in greenhouse gas mitigation, the distribution of outcomes, the robustness of the strategies, and the economic value of information. We define robustness as trading a small decrease in a strategy’s expected performance for a significant increase in its performance in the worst cases. Specifically, we modify the Dynamic Integrated model of Climate and the Economy (DICE-07) to include a simple representation of a climate threshold response, parametric uncertainty, structural uncertainty, learning, and different decision-making criteria. Economic analyses of climate change strategies typically adopt the expected utility maximization (EUM) framework. We compare EUM with two decision criteria adopted from the finance literature, namely Limited Degree of Confidence (LDC) and Safety First (SF). Both criteria increase the relative weight of the performance under the worst-case scenarios compared to EUM. We show that the LDC and SF criteria provide a computationally feasible foundation for identifying greenhouse gas mitigation strategies that may prove more robust than those identified by the EUM criterion. More robust strategies show higher near-term investments in emissions abatement. The economic value of information from reducing uncertainty is higher under the LDC and SF decision criteria than under EUM.

1 Introduction

Anthropogenic greenhouse gas emissions are projected to cause climatic changes with non-trivial and likely overall negative impacts on human welfare (Nordhaus 2008; Bernstein et al. 2008). Reducing anthropogenic climate forcing is possible, but requires significant investments. As one key objective, climate change strategies can aim to choose a level of investment that appropriately balances the costs and benefits of reducing emissions. The fact that current estimates of the impacts of climate change and the costs of climate mitigation are deeply uncertain imposes non-trivial methodological challenges (cf. Keller et al. 2008a; Lempert 2002). Deep uncertainty (sometimes also referred to as ambiguity, Knightian uncertainty, or imprecision) refers to a situation where the decision-makers do not know or cannot agree on a single probability density function (PDF) of the outcomes (Lempert et al. 2003; Lempert and Collins 2007). Nonetheless, one prominent analytic approach frames climate change decision-making as an inter-temporal optimization problem where policy makers seek to maximize the discounted expected utility of current and future generations (Nordhaus 2008; Keller et al. 2004). This expected utility maximization (EUM) framing poses at least three potentially important problems: (i) EUM poorly describes the actual decision rules people often use under conditions of deep uncertainty (e.g. Ellsberg 1961, 2001), (ii) implementing EUM under conditions of deep uncertainty raises important methodological challenges, for instance how to aggregate differing expert estimates (e.g. Zickfeld et al. 2007), and (iii) the specification of EUM strategies can be highly sensitive to mis-specification of low-probability high-impact events (e.g. Keller et al. 2004). This paper explores alternative decision criteria that may help overcome these challenges.

Integrated Assessment Models of climate change (IAMs) often adopt the classic decision-making framework of expected utility maximization (Ackerman et al. 2009; Bretteville Froyn 2005) that identifies an optimum strategy contingent on a single best estimate joint probability distribution over the uncertain input parameters of the model. EUM has at least two important advantages. The approach rests on a solid theoretical foundation built on a small number of intuitive axioms. Computationally the approach is also relatively straightforward to implement (Quiggin 2008; McInerney and Keller 2008; Tol 2003). However, as recently emphasized by the U.S. Climate Change Science Program, for both theoretical and practical reasons, there are limits to the applicability and usefulness of classic decision analysis to climate-related problems (Morgan et al. 2009, p.25).

As one challenge, under conditions of deep uncertainty the EUM framework has poor descriptive power (Ellsberg 1961). Empirical studies (Budescu et al. 2002; Du and Budescu 2005) show that most decision makers are sensitive to the (im)precision of the decision parameters. Typically, they are averse to vagueness (ambiguity) and are willing to pay a premium to avoid it, although in some cases they prefer small degrees of imprecision. Decision problems involving long-term climate change strategies are arguably better described as deeply uncertain (as opposed to just uncertain) (Keller et al. 2008a; Lempert 2002; Welsch 1995). For example, risk estimates of potential low-probability high-impact events, such as a shutdown of the North Atlantic thermohaline circulation, hinge critically on highly divergent expert priors (Zickfeld et al. 2007; Keller et al. 2000; Alley et al. 2002; Link and Tol 2004).

Analyzing climate change strategies using an expected utility maximization framework also presents methodological challenges, such as the need to aggregate PDFs from different sources, a step typically requiring strong assumptions (Keith 1996). One common approach to this aggregation step is to neglect deep uncertainty and pick a single example PDF (Nordhaus 2008; Keller et al. 2004). Neglecting the effects of deep uncertainty can lead to inaccurate and overconfident predictions.

Finally, the expected utility maximization framework can suggest strategies that prove vulnerable to mis-estimation of deeply uncertain low-probability high-impact events (Peterson et al. 2003; Keller et al. 2004). This vulnerability can arise, for example, when the EUM strategy is located at a narrow peak of the utility function that is surrounded by a deep valley (a situation sometimes referred to as “dancing on the top of a needle”). In this case, an alternative strategy located at a slightly lower but much broader peak of the utility function has the advantage of trading a small amount of utility in the expected case for a considerable increase in utility if the estimate of the expected case is slightly wrong.

Previous analyses of decision-making under deep uncertainty have typically addressed these problems by employing decision-making criteria other than EUM (cf. Borsuk and Tomassini 2005; Lempert and Collins 2007; Lange 2003; McInerney and Keller 2008), by adopting representations of uncertainty other than a single joint probability distribution (cf. Kriegler and Held 2005; Hall et al. 2007; Lempert and Collins 2007), or both. Such analyses have broken important new ground, but they typically analyze highly stylized problems. For example, Lempert and Collins (2007) use a simple model of a lake that can abruptly turn eutrophic if pollution concentrations exceed some unknown threshold in order to compare the implications of alternative decision-making criteria. The study compares EUM and three types of robustness criteria, representing deep uncertainty with a set of alternative probability distributions. It finds robust strategies preferable to expected utility maximization when deep uncertainty exists about potentially catastrophic impacts and when decision makers have a sufficiently rich menu of decision options to allow them to find one that is robust. Lange (2003) also analyzes the effects of the limited degree of confidence decision criterion, which we employ below, on the preferred climate change strategies, but does not consider the effects of a potential climate threshold response.

Our study expands on previous work in several ways. It explores alternative decision criteria to EUM adapted from the literature, using the particularly stressing case of an IAM with an uncertain, potentially abrupt climate threshold response. It considers both parametric and structural uncertainty, where the former involves uncertainty about input parameters to the model and the latter involves uncertainty about the model itself. The study considers the effects of learning about the threshold response. The study also demonstrates computational methods that can implement these new decision criteria on a climate change IAM with abrupt changes.

With these resources, the study addresses three main questions. First, what are the effects of considering a new set of decision criteria adopted from the finance literature on the preferred climate change strategy? Second, what is the economic value of information under these decision criteria? Third, can relatively simple modifications of the decision criteria be used to identify more robust decisions in a computationally efficient way? While we recognize the aforementioned problems of applying EUM to the analysis of climate change strategies, it is important to note that our study is silent on the prescriptive question of which decision-making criterion is the “best” or should be applied by a decision-maker. We see the role of the analyst not as making these value judgements, but rather as illuminating the sensitivities and trade-offs associated with them.

We analyze these questions in two ways. First, we modify the Dynamic Integrated model of Climate and the Economy (DICE) to include the potential for a climatic threshold response. These impacts, representing those that might occur from the shutdown of the North Atlantic Meridional Overturning Circulation (MOC), are considered to be deeply uncertain (Zickfeld et al. 2007; Keller et al. 2008a). Second, we consider three decision criteria that treat the uncertainty about the potential for large climate impacts in different ways. We analyze expected utility maximization, but given its potential problems, we also analyze two additional decision criteria that provide avenues to (i) represent the effects of the deep uncertainty on the decision-making process and (ii) offer the possibility of improving the coherence between the decision-making criterion adopted by real decision-makers and the decision-making criterion used in Integrated Assessment Models of climate change (Budescu et al. 2002; Du and Budescu 2005).

These two alternative decision criteria, Safety First (SF) and Limited Degree of Confidence (LDC), both balance the goal of maximizing expected utility against that of improving worst-case performance (Aaheim and Bretteville 2001; Bretteville Froyn 2005). The LDC criterion maximizes a weighted average of the expected utility and the utility in the worst-case futures. The SF criterion maximizes the expected utility subject to the constraint that the utility in the worst cases exceeds some prescribed threshold. The properties of the three decision criteria are summarized in Table 1. The weighting in the LDC criterion can be interpreted as a measure of the decision-makers’ confidence in the best-estimate probability distribution used to calculate the expected value. The constraint used by the SF criterion can be interpreted as an ethical requirement that one should maximize utility only after guaranteeing some minimum level of utility (Rawls 1971). SF also has similarities with criteria from the finance literature that combine expected utility with conditional value at risk to help a firm, for example, maximize profits while holding the probability of bankruptcy below some threshold. By placing weight on the performance in the worst-case outcomes, the LDC criterion is similar to decision rules described by Hurwicz (1951) and Ellsberg (1961). The former’s optimism–pessimism criterion prescribes a weighted combination of the best-case and worst-case outcomes. Ellsberg (1961) suggests that when making decisions under what he calls conditions of ambiguity, people use as their decision criterion a weighted combination of the expected utility contingent on the best-estimate distribution and the expected utility of the worst-case distribution, where the weighting is a function of the decision-makers’ confidence in the available data.

These three criteria (EUM, SF and LDC) can generate a wide range of recommendations for emissions reduction policies, depending on the preferences used in the SF and LDC decision criteria. The Safety First and Limited Degree of Confidence criteria can recommend higher investments in emissions abatement than the EUM criterion, depending, in the former, on the prescribed threshold for worst cases and, in the latter, on how much confidence decision makers express in the best-estimate distribution. For the SF and LDC criteria, we map out the tradeoff between maximizing expected utility and minimizing the effect of the worst cases for a range of preferences. We find that the tradeoff curves for the SF and LDC criteria are equivalent for the considered decision problem, within numerical accuracy. Moreover, LDC and SF strategies that lie at the same location on this tradeoff curve show the same abatement strategy (again within numerical accuracy).

We then examine the robustness of various strategies. Specifically, we analyze how well strategies that emphasize expected utility maximization perform in the worst cases, and how strategies that emphasize worst-case performance affect expected performance. We show how applying the two alternative decision-making criteria can help identify strategies that decision makers may find more robust given the range of impacts considered. Finally, we evaluate how the choice of decision criterion affects the choice of climate strategy given the potential for future learning, as well as the expected economic value of climate information. We demonstrate that constraining the worst cases (as implemented in the Safety First decision criterion) can considerably increase the expected economic value of climate information compared with the EUM criterion.

2 Alternative decision criteria

We implement the alternative decision criteria using a recent version of the Dynamic Integrated model of Climate and the Economy (DICE-07) (Nordhaus 2007a). DICE is a simple and transparent integrated assessment model that has been widely used (e.g. Popp 2004; Keller et al. 2004; Nordhaus 2007b; Tol 1994) and is well documented (Nordhaus 1992, 1994, 2008). In DICE, the objective is to determine an optimal strategy that balances the uncertain costs of reducing greenhouse gas emissions against the uncertain damages associated with climate change. This is achieved by maximizing the discounted sum of utility W (Eq. 1 in the Supplementary Material). We modify this model to (i) incorporate a representation of a potential climate threshold response, (ii) allow for parametric uncertainty, (iii) consider the potential to learn, and (iv) represent different decision criteria under uncertainty. We note that while a number of studies have explored the importance of parametric uncertainty in DICE (e.g. Nordhaus 2008; Keller et al. 2004), the publicly released version of DICE-07 uses only single parameter estimates, so the modifications described below were required to explore the effects of parametric uncertainty.

The DICE model is described in Section 1 of the Supplementary Material. The modifications to this model are outlined in the remainder of this section. Technical details on the numerical optimization used to determine optimal strategies, and a list of all symbols used in this study, are provided in Sections 1 and 2 of the Supplementary Material.

2.1 Representation of a potential climate threshold response

Keller et al. (2000) incorporate an MOC threshold into an earlier version of DICE using a simple statistical parameterization of model results reported by Stocker and Schmittner (1997). Specifically, Keller et al. (2000) define a critical equivalent CO2 concentration as a function of climate sensitivity. If this critical concentration is exceeded, an irreversible shutdown of the MOC is triggered. Associated with this shutdown are persistent economic damages, \(\theta_3\), expressed as a proportion of economic output. This threshold representation is transparent and has been widely used and investigated (Keller et al. 2004; Lempert et al. 2006; McInerney and Keller 2008). The limitations of this MOC threshold representation are duly noted (Zickfeld and Bruckner 2008). First, the real-world MOC is likely sensitive to the rate of temperature change as well as to atmospheric CO2 concentrations (Stocker and Schmittner 1997). Second, this analysis neglects uncertainty in the initial strength of the MOC and in the sensitivity of freshwater forcing in the North Atlantic to changes in temperature.

Here we adopt the basic structure of this simple representation but add one additional factor representing the effects of structural model uncertainty. Structural model uncertainty is important in the assessment of a future MOC threshold response (Zickfeld et al. 2007). While the results of Stocker and Schmittner (1997) suggest that the MOC is sensitive to climate change, other models report the presence of stabilizing feedbacks that make the MOC virtually insensitive to global warming (Latif et al. 2000). To account for this structural uncertainty, we introduce a binary variable called the MOC sensitivity (\(p_{\mathrm{MOC}}\), with a value of either zero or one). The MOC shuts down only when the critical threshold is exceeded and the MOC sensitivity is one.
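The threshold logic described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the DICE-07 implementation: the critical equivalent CO2 concentration is passed in directly (in the text it is a function of climate sensitivity following Keller et al. 2000, which is not reproduced here), the function and argument names are hypothetical, and damages are assumed to apply from the period in which the threshold is first exceeded and to persist thereafter, reflecting the irreversibility of the shutdown.

```python
from typing import List


def moc_damage_fractions(co2eq: List[float], critical_co2eq: float,
                         p_moc: int, theta3: float) -> List[float]:
    """Hypothetical sketch: per-period MOC damages as a fraction of output.

    The shutdown is triggered only if the MOC is sensitive (p_moc == 1)
    AND the equivalent CO2 concentration exceeds the critical value.
    Once triggered it is irreversible, so theta3 applies in every later
    period (assumption: damages start in the period of the crossing).
    """
    if p_moc not in (0, 1):
        raise ValueError("p_moc must be 0 or 1")
    shut_down = False
    damages = []
    for concentration in co2eq:
        if p_moc == 1 and concentration > critical_co2eq:
            shut_down = True  # never reset: the shutdown is irreversible
        damages.append(theta3 if shut_down else 0.0)
    return damages


# Illustrative numbers only: the concentration overshoots and then falls back.
path = [400.0, 500.0, 450.0]
print(moc_damage_fractions(path, critical_co2eq=480.0, p_moc=1, theta3=0.015))
# → [0.0, 0.015, 0.015]
print(moc_damage_fractions(path, critical_co2eq=480.0, p_moc=0, theta3=0.015))
# → [0.0, 0.0, 0.0]
```

Because of the binary MOC sensitivity, the same concentration path produces damages in one structural state and none in the other.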

2.2 Three decision criteria

Here we describe the three decision criteria summarized in Table 1.

2.2.1 Expected utility maximization

The EUM criterion, often used in Integrated Assessment Models of climate change, maximizes the sum, over all considered States of the World (SOWs), of the product of the probability \(p_i\) of each SOW and the well-being \(W_i\) in that SOW:

\[ \max \sum_{i=1}^{N_{\mathrm{SOW}}} p_i W_i \]

The well-being for each SOW is calculated using Equation (1) in the Supplementary Material. As described in Section 2.3, it is convenient in this study to consider states of the world defined by parameter intervals of different lengths but equal probability, \(p_i = 1/N_{\mathrm{SOW}}\). Thus the EUM criterion becomes

\[ \max \frac{1}{N_{\mathrm{SOW}}} \sum_{i=1}^{N_{\mathrm{SOW}}} W_i \tag{1} \]

2.2.2 Limited degree of confidence

The limited degree of confidence (LDC) criterion, as discussed by Aaheim and Bretteville (2001), Bretteville Froyn (2005) and Lange (2003), maximizes a weighted average of the worst outcome, i.e., the maximin criterion, and the expected utility. The maximin criterion ranks strategies according to their worst-case outcomes, and the strategy that maximizes the minimum utility is chosen:

\[ \max \left( \min_{i} W_i \right) \tag{2} \]

The LDC criterion is a combination of EUM and maximin according to:

\[ \max \left[ \beta \sum_{i=1}^{N_{\mathrm{SOW}}} p_i W_i + (1-\beta) \min_{i} W_i \right] \]

The decision-maker’s degree of confidence in the underlying probability distributions, β, lies between 0 and 1. For β = 0, this criterion reduces to maximin, while β = 1 recovers EUM. Between these extremes, the criterion balances EUM and maximin according to the decision-maker’s confidence in the underlying probability distribution. The LDC criterion is similar to the first robustness definition used in Lempert and Collins (2007), which considers a robust strategy to be one that may give up a small amount of optimum performance in exchange for less sensitivity to assumptions. However, Lempert and Collins (2007) use regret rather than absolute performance to balance expected and worst-case performance. Both approaches are premised on the notion that the distribution of outcomes may be imprecise.
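To make the interpolation between EUM and maximin concrete, the following Python sketch evaluates the original, minimum-based LDC objective for two hypothetical strategies, A and B, each described by its well-being in four equally likely SOWs. The strategy names and numbers are invented for illustration; in the study, the well-being values would come from model runs.

```python
from typing import Dict, List, Sequence


def expected_utility(w: Sequence[float], p: Sequence[float]) -> float:
    """EUM objective: probability-weighted sum of well-being over the SOWs."""
    return sum(pi * wi for pi, wi in zip(p, w))


def ldc_maximin(w: Sequence[float], p: Sequence[float], beta: float) -> float:
    """Original LDC objective: beta * E[W] + (1 - beta) * min(W)."""
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [0, 1]")
    return beta * expected_utility(w, p) + (1.0 - beta) * min(w)


# Hypothetical well-being of two strategies in four equally likely SOWs.
strategies: Dict[str, List[float]] = {
    "A": [10.0, 10.0, 10.0, 0.0],  # high mean, poor worst case
    "B": [7.0, 7.0, 7.0, 6.0],     # lower mean, better worst case
}
p = [0.25] * 4

choices = {}
for beta in (1.0, 0.5, 0.0):
    choices[beta] = max(strategies, key=lambda name: ldc_maximin(strategies[name], p, beta))
print(choices)  # → {1.0: 'A', 0.5: 'B', 0.0: 'B'}
```

Strategy A wins under pure EUM (β = 1) because of its higher mean, but as β falls the weight on its poor worst case grows and B becomes preferred.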

However, the implementation of the LDC criterion as discussed in Aaheim and Bretteville (2001) and Lange (2003) poses two problems when applied to the situation at hand. First, Lange (2003) analyzes a simplified analytical model with a fixed upper bound for the environmental damages, so the worst case is precisely known. In contrast, the upper bound is unknown for the considered problem of abrupt climate change (cf. Keller et al. 2008a). In addition, for the numerical approach used here, the worst case depends on the number of states of the world considered (i.e., on the sampling resolution) (Tol 2003). As described in Section 2.3, the PDFs of the MOC damages (\(\theta_3\)) and climate sensitivity (\(\lambda^*\)) both contain high values with low probabilities, so that as more samples are taken, the maximum sample from each distribution increases and does not converge to an upper limit. If these samples correspond to the worst-case outcomes, the strategy that maximizes the minimum utility depends heavily on sampling resolution (see Tol 2003, for a similar result). Second, a maximin decision criterion considers only the single worst outcome, i.e., it is blind to other poor outcomes. This may be a problem when the worst outcomes correspond to different parts of the parameter space, where improving the worst case does not necessarily improve the second-worst outcome. By design, the maximin decision criterion does not use probabilistic information.
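The sampling-resolution problem can be illustrated with a short Python sketch. It draws n equally likely samples from a heavy-tailed Weibull distribution by evaluating the inverse CDF at the midpoints of n equal-probability intervals; this is a deterministic stand-in and not necessarily the sampling technique used in the study, and the shape of 0.5 and scale of 1 are hypothetical. Because high damages map to low utility, the largest sample plays the role of the worst case. The sample maximum keeps growing with n, whereas the mean of the largest 1% of samples (the upper-tail analogue of the CVaR-type metric introduced in this subsection) settles down.

```python
import math
from typing import List


def weibull_quantile(u: float, shape: float, scale: float) -> float:
    """Inverse CDF of a two-parameter Weibull distribution."""
    return scale * (-math.log(1.0 - u)) ** (1.0 / shape)


def midpoint_samples(n: int, shape: float = 0.5, scale: float = 1.0) -> List[float]:
    """n equally likely samples at the midpoints of n equal-probability intervals."""
    return [weibull_quantile((j + 0.5) / n, shape, scale) for j in range(n)]


def upper_tail_mean(x: List[float], q: float) -> float:
    """Mean of the largest q-fraction of samples (assumes q * len(x) is whole)."""
    k = round(q * len(x))
    return sum(sorted(x)[-k:]) / k


sizes = [1_000, 10_000, 100_000]
maxima: List[float] = []
tail_means: List[float] = []
for n in sizes:
    x = midpoint_samples(n)
    maxima.append(max(x))
    tail_means.append(upper_tail_mean(x, 0.01))
    print(f"n={n:>7}: max={maxima[-1]:7.1f}  mean of top 1%={tail_means[-1]:5.1f}")
```

With these settings the maximum grows roughly like \((\ln 2n)^2\) without bound, while the top-1% mean changes by well under 1% between the two largest sample sizes.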

Rather than considering the minimum value of well-being (as in Eq. 2), we use an alternative risk metric: the Conditional Value at Risk (CVaR). The CVaR is the expected value of the worst q-th portion of the utility distribution, which we denote \(E[W_q]\), where \(W_q\) is the portion of outcomes below the Value-at-Risk for q. The CVaR is also known as the Mean Excess Loss or Mean Shortfall, and has previously been applied to risk analysis in the energy sector (Fortin et al. 2007), the crop insurance industry (Liu et al. 2008), and financial markets (Andersson et al. 2001; Alexander et al. 2006; Quaranta and Zaffaroni 2008). Using the CVaR metric addresses our concerns about the minimum value because it is less dependent on sampling resolution: as the number of samples increases, \(E[W_q]\) converges. We modify the limited degree of confidence criterion as originally discussed, for example, by Lange (2003), by replacing the minimum value metric with the CVaR metric and implementing a typical value of q = 0.01 (Andersson et al. 2001; Larsen et al. 2002; Krokhmal et al. 2002):

\[ \max \left[ \beta\, E[W] + (1-\beta)\, E[W_q] \right], \qquad q = 0.01 \]

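For the case where each SOW has equal probability, this equates to

\[ \max \left[ \frac{\beta}{N_{\mathrm{SOW}}} \sum_{i=1}^{N_{\mathrm{SOW}}} W_i + \frac{1-\beta}{q\,N_{\mathrm{SOW}}} \sum_{i=1}^{q N_{\mathrm{SOW}}} \hat{W}_i \right] \tag{3} \]

where \(\hat{W}\) contains the same values as \(W\), sorted from lowest to highest.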
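The equal-probability form of \(E[W_q]\) and the modified LDC objective can be sketched in Python as follows. One detail left open in the text is that \(qN_{\mathrm{SOW}}\) need not be a whole number (with \(N_{\mathrm{SOW}} = 2662\) and q = 0.01 it is 26.62); this sketch assumes the common convention of giving the boundary outcome a fractional weight, which may differ from the treatment used in the study. The function names are illustrative.

```python
import math
from typing import Sequence


def lower_cvar(w: Sequence[float], q: float) -> float:
    """E[W_q]: mean of the worst q-fraction of equally likely outcomes.

    If q * N is not a whole number, the boundary outcome enters with a
    fractional weight so that exactly q * N outcomes' worth of probability
    is averaged (an assumed convention).
    """
    n = len(w)
    if n == 0 or not 0.0 < q <= 1.0:
        raise ValueError("need a non-empty sample and 0 < q <= 1")
    w_hat = sorted(w)           # \hat{W}: values in ascending order
    m = q * n                   # size of the lower tail, possibly fractional
    whole = math.floor(m)
    total = sum(w_hat[:whole])
    frac = m - whole
    if frac > 0:
        total += frac * w_hat[whole]  # partial weight on the boundary outcome
    return total / m


def ldc_cvar(w: Sequence[float], beta: float, q: float = 0.01) -> float:
    """Modified LDC objective: beta * E[W] + (1 - beta) * E[W_q]."""
    mean = sum(w) / len(w)
    return beta * mean + (1.0 - beta) * lower_cvar(w, q)


w = [float(v) for v in range(1, 101)]  # 100 hypothetical, equally likely SOWs
print(lower_cvar(w, 0.01), lower_cvar(w, 0.025), ldc_cvar(w, beta=0.5))
```

Setting β = 1 in `ldc_cvar` returns the plain mean (EUM), and β = 0 returns \(E[W_q]\) alone (the “zero-confidence” case).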

2.2.3 Safety first

The safety first (SF) criterion is an extension of the EUM criterion that imposes an additional constraint on the lower tail of the strategy’s performance. The implementation discussed, for example, by Bretteville Froyn (2005) maximizes expected utility subject to the additional constraint that the probability of well-being falling below a critical value \(W^*\) must not exceed a prescribed value q:

\[ \max \sum_{i=1}^{N_{\mathrm{SOW}}} p_i W_i \quad \text{subject to} \quad \Pr\left(W < W^*\right) \le q \]

This criterion retains the best-estimate probability distribution used by EUM but adds a constraint that is sensitive to the lower tail of the distribution. It is similar to the reliability constraint analyzed by McInerney and Keller (2008), where the probability of an undesirable event such as an MOC shutdown is constrained to be less than a threshold value.
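A minimal Python sketch of this original, probability-constrained form of SF follows. Among candidate strategies, it keeps those whose fraction of equally likely SOWs below \(W^*\) does not exceed q, then picks the one with the highest expected well-being. The candidate strategies, their well-being values, and the choice of q (larger than the study’s 0.01 so that a 20-SOW toy example is meaningful) are hypothetical.

```python
from typing import Dict, List, Optional


def prob_below(w: List[float], w_star: float) -> float:
    """Fraction of equally likely SOWs whose well-being is below w_star."""
    return sum(1 for x in w if x < w_star) / len(w)


def safety_first_choice(strategies: Dict[str, List[float]],
                        w_star: float, q: float) -> Optional[str]:
    """Highest-mean strategy satisfying Pr(W < w_star) <= q, or None if none does."""
    feasible = {name: w for name, w in strategies.items()
                if prob_below(w, w_star) <= q}
    if not feasible:
        return None
    return max(feasible, key=lambda name: sum(feasible[name]) / len(feasible[name]))


# Hypothetical well-being in 20 equally likely SOWs.
strategies = {
    "A": [10.0] * 18 + [0.0, 0.0],  # mean 9.0, 10% of SOWs below 5
    "B": [8.0] * 19 + [4.0],        # mean 7.8, 5% of SOWs below 5
    "C": [6.0] * 20,                # mean 6.0, no SOW below 5
}
for q in (0.10, 0.05, 0.0):
    print(q, safety_first_choice(strategies, w_star=5.0, q=q))
```

Tightening q moves the choice from A to B to C, giving up expected well-being in exchange for fewer poor outcomes.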

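For reasons similar to those given above, we rewrite SF using CVaR with q = 0.01:

\[ \max E[W] \quad \text{subject to} \quad E[W_q] \ge W^*, \qquad q = 0.01 \]

which is equivalent to

\[ \max \frac{1}{N_{\mathrm{SOW}}} \sum_{i=1}^{N_{\mathrm{SOW}}} W_i \quad \text{subject to} \quad \frac{1}{q\,N_{\mathrm{SOW}}} \sum_{i=1}^{q N_{\mathrm{SOW}}} \hat{W}_i \ge W^* \tag{4} \]

for the case in this study where all SOWs are equally likely.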
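The difference between the probability constraint and the CVaR constraint can be seen in a small Python sketch with 100 equally likely SOWs, q = 0.01 and \(W^* = 5\) (all values hypothetical). The probability constraint treats a worst case of 4.9 and a worst case of −50 identically, because it only counts how many SOWs fall below \(W^*\). The CVaR constraint also responds to how far below they fall. For whole-number \(qN_{\mathrm{SOW}}\), the CVaR constraint is the stricter of the two: if the mean of the lowest \(qN_{\mathrm{SOW}}\) values is at least \(W^*\), those values cannot all lie below \(W^*\), so fewer than a fraction q of the SOWs do.

```python
from typing import List


def lower_tail_mean(w: List[float], q: float) -> float:
    """E[W_q] for equally likely SOWs, assuming q * len(w) is a whole number."""
    k = round(q * len(w))
    if k < 1:
        raise ValueError("q * len(w) must be at least 1")
    return sum(sorted(w)[:k]) / k


def chance_ok(w: List[float], w_star: float, q: float) -> bool:
    """Original SF constraint: Pr(W < w_star) <= q."""
    return sum(1 for x in w if x < w_star) / len(w) <= q


def cvar_ok(w: List[float], w_star: float, q: float) -> bool:
    """CVaR-based SF constraint: E[W_q] >= w_star."""
    return lower_tail_mean(w, q) >= w_star


outcomes = {
    "mild":   [4.9] + [10.0] * 99,    # one SOW slightly below W*
    "severe": [-50.0] + [10.0] * 99,  # one SOW far below W*
    "safe":   [6.0] + [10.0] * 99,    # no SOW below W*
}
for name, w in outcomes.items():
    print(name, chance_ok(w, 5.0, 0.01), cvar_ok(w, 5.0, 0.01), lower_tail_mean(w, 0.01))
```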

2.3 Implementing the Decision Criteria

To implement the decision criteria represented by Eqs. 1, 3 and 4, we rewrote the publicly available DICE-07 code in FORTRAN-90 in order to solve the (rather demanding) numerical optimization problem. We consider uncertainty in four parameters that previous studies have identified as key drivers of preferred abatement strategies (Nordhaus 1994, 2008; Keller et al. 2004; McInerney and Keller 2008). These four factors are: (i) the climate sensitivity, \(\lambda^*\); (ii) the initial growth rate of the carbon intensity, \(g_\sigma(2005)\); (iii) the specific damages from crossing the MOC threshold, \(\theta_3\); and (iv) the sensitivity of the MOC to increasing atmospheric carbon dioxide concentrations, \(p_{\mathrm{MOC}}\). It is important to stress that these four parameters are only a small subset of the relevant uncertainties (Nordhaus 1994; Keller et al. 2008a).

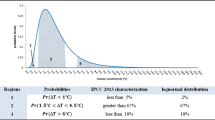

We adopt the empirical distribution of Andronova and Schlesinger (2001) for climate sensitivity, and adopt subjective estimates for the distributions of \(g_\sigma(2005)\), \(\theta_3\), and \(p_{\mathrm{MOC}}\). The mean value of \(g_\sigma(2005)\) follows its original value in DICE-07 (−0.073 per decade). We adopt a uniform PDF for this parameter and assign bounds of 50% and 150% of this mean value, approximately −0.04 and −0.11 (−0.073 ± 0.5 × 0.073). While MOC-specific damages are deeply uncertain (Nordhaus 1994; Keller et al. 2000; Alley et al. 2002; Link and Tol 2004), we follow Keller et al. (2004), McInerney and Keller (2008) and Tol (2003) and assign a mean value of 1.5% of gross world product (GWP) to MOC-specific damages. To allow for low-probability high-impact outcomes, we use a Weibull distribution with a large standard deviation (3.7% of GWP) about this mean value. Finally, we adopt a binary distribution for the MOC sensitivity with equal prior weights, resulting in a mean of 0.5. As a result, the MOC is equally likely to be sensitive or insensitive to anthropogenic climate forcing.
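The text gives the mean (1.5% of GWP) and standard deviation (3.7% of GWP) of the Weibull distribution for \(\theta_3\), but not its parameters. Assuming a standard two-parameter Weibull (an assumption, since the exact parameterization is not given in this section), the shape k and scale s can be recovered by moment matching. The mean is \(s\,\Gamma(1+1/k)\), and the coefficient of variation depends on k alone and decreases as k grows, so bisection on k applies. The Python sketch below does this and also checks the \(g_\sigma(2005)\) bounds quoted above. Function names are illustrative.

```python
import math
from typing import Tuple


def weibull_mean_sd(shape: float, scale: float) -> Tuple[float, float]:
    """Mean and standard deviation of a two-parameter Weibull distribution."""
    g1 = math.gamma(1.0 + 1.0 / shape)
    g2 = math.gamma(1.0 + 2.0 / shape)
    return scale * g1, scale * math.sqrt(g2 - g1 * g1)


def fit_weibull(mean: float, sd: float, lo: float = 0.1, hi: float = 10.0,
                tol: float = 1e-12) -> Tuple[float, float]:
    """Match mean and sd by bisection on the shape (CV falls as shape rises)."""
    target_cv = sd / mean

    def cv(k: float) -> float:
        m, s = weibull_mean_sd(k, 1.0)
        return s / m

    if not cv(hi) < target_cv < cv(lo):
        raise ValueError("target coefficient of variation outside the bracket")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cv(mid) > target_cv:
            lo = mid  # spread still too large: increase the shape
        else:
            hi = mid
    shape = 0.5 * (lo + hi)
    scale = mean / math.gamma(1.0 + 1.0 / shape)
    return shape, scale


shape, scale = fit_weibull(1.5, 3.7)  # theta_3 in percent of GWP
print(f"shape={shape:.3f}, scale={scale:.3f}")

g_mean = -0.073  # per decade, the DICE-07 value quoted in the text
g_bounds = (1.5 * g_mean, 0.5 * g_mean)  # roughly (-0.11, -0.04)
```

A shape parameter below 1 gives the long right tail that produces the low-probability, high-damage samples.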

The properties of these distributions are summarized in Table 2, and the cumulative distribution functions for \(\lambda^*\), \(g_\sigma(2005)\) and \(\theta_3\) are displayed in Fig. 1. We draw eleven equally likely samples from each of these three distributions using the technique described in McInerney and Keller (2008). These samples are marked by the star symbols in Fig. 1. With eleven samples from each of these three distributions, and the MOC either sensitive or insensitive to anthropogenic warming, there are \(N_{\mathrm{SOW}} = 11 \times 11 \times 11 \times 2 = 2662\) equally likely states of the world (SOWs). The number of SOWs considered in this study is much larger than in previous studies that consider EUM only. This is because the LDC and SF decision criteria require the sampling procedure to resolve the lower tail of the utility distribution, while EUM requires only resolution of the expected value.

Fig. 1 Cumulative distribution functions (CDFs) for the considered uncertain parameters: (a) the climate sensitivity (\(\lambda^*\)), (b) the initial growth in carbon intensity (\(g_\sigma(2005)\)), and (c) the damage associated with an MOC shutdown (\(\theta_3\)). The stars on the horizontal axis denote the locations of the sampled parameter values. See text for details on the sampling procedure.

The same set of 2662 parameter combinations (SOWs) is used to calculate optimal solutions for the EUM, LDC and SF criteria; the differences between solutions are driven by the choice of objective function in the optimization problem. Section 2 of the Supplementary Material provides details on the numerical optimization procedures used.
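The construction of the 2662 equally likely SOWs can be sketched in Python. As a stand-in for the technique of McInerney and Keller (2008), which is not described here, the sketch places each of the eleven samples at the midpoint of an equal-probability interval of the uniform \(g_\sigma(2005)\) distribution, and represents the climate-sensitivity and \(\theta_3\) samples only by their indices; mapping those indices to values would require the inverse CDFs of the distributions summarized in Table 2. Variable names are hypothetical.

```python
from itertools import product
from typing import List


def midpoint_probabilities(n: int) -> List[float]:
    """Centres of n equal-probability intervals on (0, 1)."""
    return [(j + 0.5) / n for j in range(n)]


N_SAMPLES = 11
u = midpoint_probabilities(N_SAMPLES)

# Uniform g_sigma(2005) between 150% and 50% of -0.073 per decade.
g_lo, g_hi = 1.5 * -0.073, 0.5 * -0.073
g_sigma = [g_lo + ui * (g_hi - g_lo) for ui in u]

climate_sensitivity_idx = range(N_SAMPLES)  # placeholders for lambda* samples
theta3_idx = range(N_SAMPLES)               # placeholders for theta_3 samples
p_moc = (0, 1)                              # MOC insensitive / sensitive

sows = list(product(climate_sensitivity_idx, g_sigma, theta3_idx, p_moc))
p_sow = 1.0 / len(sows)  # every SOW carries the same probability
print(len(sows))  # → 2662
```

Each tuple in `sows` identifies one state of the world; evaluating a candidate abatement path in all of them yields the well-being vector that the EUM, LDC and SF objectives operate on.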

3 Results and discussion

We first analyze the effects of the climate threshold and uncertainty on optimal abatement with the EUM criterion. We then characterize the effects of using the two alternative decision criteria on (i) preferred abatement, (ii) the distribution of outcomes, (iii) the tradeoffs between the expected utility and the utility in the lower tail, (iv) the robustness of the strategies with respect to uncertainty about key parameters, and (v) the economic value of information.

3.1 Effects of the climate threshold and uncertainty using the expected utility criterion

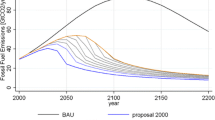

With perfect information (no structural or parametric uncertainty) and no climate threshold, optimal abatement rises from 15% in 2015 to around 60% in 2150 (Fig. 2, circles). With the climate threshold and perfect information, optimal abatement remains the same within the numerical accuracy of our optimization (Fig. 2, crosses). In this case the climate threshold proves inconsequential because it is not crossed during the considered time horizon. This result differs from that of previous studies (e.g., Keller et al. 2004, Fig. 5), where an identical MOC representation increases optimal abatement in an attempt to avoid or delay the threshold crossing. This difference is due to the reduction in baseline CO2 concentrations between DICE-94 and DICE-07, which results from a complex mixture of changes in the utility function, the pure rate of social time preference, and the carbon intensity (Nordhaus 2008).

Adding parametric and structural uncertainties to the case with a climate threshold also has a small effect for the EUM decision criterion (Fig. 2, squares). Optimal abatement increases only by a few percentage points by 2100. With EUM, the potential for an MOC shutdown has little effect on the optimal near-term abatement strategy in DICE-07.

3.2 Effects of the confidence in the PDF estimate on preferred abatement and the distribution of utilities

The potential MOC shutdown has significantly more salience under the LDC and SF decision criteria. The decision-maker’s confidence parameter for the LDC criterion (β in Eq. 3), which sets the weight 1 − β assigned to the worst-case scenarios, has a considerable effect on the near-term preferred abatement (Fig. 3). When the decision-maker has no confidence in the underlying parametric distributions (i.e., β = 0) and thus maximizes the expected utility of the worst 1% of cases (i.e., \(E[W_{0.01}]\)), the preferred abatement increases considerably compared with the EUM case (Fig. 3, squares vs. circles). In this “zero-confidence” case (squares), abatement increases steadily from more than 20% in 2015 to around 80% in 2100, and reaches 100% around 2135. By comparison, EUM abatement rises from around 15% in 2015 to 40% in 2100 (circles).

Figure 4a and b show the improvement obtained by maximizing expected utility instead of following a business-as-usual (BAU) path, in which no GHG abatement is undertaken. The expected value of the distribution of utilities (solid vertical line) improves, and the variance of the distribution is reduced. Importantly, the worst cases are greatly improved; the expected value of the worst 1% of outcomes (\(E[W_{0.01}]\)), marked by the dotted vertical line, increases significantly. (The raw values of the discounted sum of utility are not particularly meaningful; for the BAU strategy they range between \(1.38\times10^{5}\) and \(1.52\times10^{5}\). We have therefore linearly rescaled all utilities so that a rescaled utility of 0 corresponds to the minimum BAU utility and 100 corresponds to the maximum BAU utility.)

Fig. 4 Probability density functions (PDFs) of the discounted sum of utility for (a) business-as-usual, (b) expected utility maximization, (c) intermediate-confidence, and (d) zero-confidence decision criteria in the absence of future learning. The solid vertical lines indicate the expected value of these distributions (\(E[W]\)) and the dotted lines show the expected value of the worst 1% of outcomes (\(E[W_{0.01}]\)). Utilities have been rescaled to 100% of the business-as-usual (BAU) range.

The EUM and zero-confidence criteria differ primarily in how much importance they assign to the expected utility of the worst cases, as seen in Fig. 4b and d. In each case, the distribution is left-skewed due to the low-probability, high-impact events (panels a and c in Fig. 1). The spread of the distribution is smaller for the zero-confidence case, with a noticeable improvement in the worst-case outcomes and hence in the expected value of the worst 1% of outcomes. However, the decision-maker must sacrifice some of the expected utility of the entire distribution for this improvement in the worst cases.
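The linear rescaling of utilities used in Figs. 4 and 5 maps the lowest BAU utility to 0 and the highest to 100. A Python sketch follows. The endpoints are the rounded values quoted in the text (\(1.38\times10^{5}\) and \(1.52\times10^{5}\)), whereas the study would use the exact minimum and maximum of its BAU utility sample. Utilities outside the BAU range map outside [0, 100].

```python
from typing import Callable, Sequence


def make_rescaler(bau_utilities: Sequence[float]) -> Callable[[float], float]:
    """Return a linear map that sends min(BAU) to 0 and max(BAU) to 100."""
    lo, hi = min(bau_utilities), max(bau_utilities)
    if hi == lo:
        raise ValueError("BAU utilities must span a non-zero range")
    return lambda w: 100.0 * (w - lo) / (hi - lo)


rescale = make_rescaler([1.38e5, 1.52e5])  # rounded BAU range from the text
print(rescale(1.45e5))  # → 50.0
```

Because the map is linear, it preserves the ordering of strategies and the relative spacing of E[W] and \(E[W_{0.01}]\).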

3.3 Tradeoff between expected utility and worst-case performance

Implementing the LDC and SF criteria with a range of preferences for improving the worst-case outcomes allows us to examine the tradeoff between expected utility and utility averaged over the worst-case outcomes (Fig. 5). For the LDC criterion, we use values of β in Eq. 3 of 0, 0.1, ..., 1, plus the additional values 0.95, 0.97 and 0.99 to increase the resolution of the tradeoff curve (Fig. 5, crosses). Moving from left to right in this figure corresponds to moving from β = 1 (EUM) to β = 0 (zero confidence). Similarly, the tradeoff curve for SF can be mapped out by considering a range of values for the threshold \(W^*\) in Eq. 4 based on values from the EUM and zero-confidence strategies (Fig. 5, circles). Interestingly, the LDC and SF results appear to lie on the same tradeoff curve, and abatement for SF and LDC strategies that lie in close proximity is almost identical. For example, Fig. 6 (circles and crosses) displays abatement for the right-most SF point (with \(W^* = 63\)) and the closest LDC point (with β = 0.8). Note that the analysis of Krokhmal et al. (2002) [Theorem 3] implies that if \(E[W]\) and \(E[W_{0.01}]\) are convex functions, the tradeoff curves for SF and LDC will in fact be equivalent. Despite the potential for non-convexity in \(E[W]\) and \(E[W_{0.01}]\) due to the climate threshold response, for practical purposes these criteria produce equivalent strategies. This allows us to combine the two sets of points and trace out the curve at higher resolution (solid line in Fig. 5).

Fig. 5 The tradeoff between the expected utility (E[W]) and the expected value of the lowest 1% of utility (E[W_0.01]). Preferred strategies determined using the limited degree of confidence criterion are marked by crosses, while those obtained using the safety first criterion are marked by circles. This result considers both the climate threshold and uncertainty, but neglects future learning. Utilities have been rescaled to 100% of the business-as-usual (BAU) range.

Fig. 6 Preferred abatement levels obtained using the safety first criterion with W* = 62 (circles) and the limited degree of confidence criterion with β = 0.8 (crosses). See the main text and Eqs. 3 and 4 for a description of these variables. This result considers both the climate threshold and uncertainty, but neglects future learning.

For the LDC criterion, the points on the tradeoff curve can be interpreted as follows. The preferred solution for β = 1 (i.e., expected utility maximization) is the point on the curve with the maximum value in the y-direction, i.e., in the direction [0, 1] in Cartesian coordinates. For β = 0 (i.e., zero confidence), the preferred solution is the point with the maximum value in the x-direction, i.e., in the direction [1, 0]. Likewise, for values of β between 0 and 1, the preferred solution is the point on the curve with the maximum value in the direction [1 − β, β]. For example, when β = 0.5, the preferred solution is the point that lies farthest in the [0.5, 0.5] direction, i.e., along the axis at 45° to the positive x-axis. For the SF criterion, the interpretation is more intuitive: the parameter W* in Eq. 4 equals the value of E[W_0.01] on the curve.
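The geometric reading above can be written compactly, assuming that Eq. 3 is the linear combination β E[W] + (1 − β) E[W_0.01] (an assumption consistent with the direction [1 − β, β] quoted in the text, with x = E[W_0.01], the expected value of the worst 1% of outcomes, and y = E[W]). For a smooth, concave tradeoff curve y(x), the first-order condition then fixes the slope of the curve at the preferred point:

```latex
\max_{s}\ \beta\,E[W](s) + (1-\beta)\,E[W_{0.01}](s)
  \;=\; \max_{s}\ \begin{bmatrix} 1-\beta & \beta \end{bmatrix}
        \begin{bmatrix} E[W_{0.01}](s) \\ E[W](s) \end{bmatrix},
\qquad
\left.\frac{dy}{dx}\right|_{\text{preferred}} = -\frac{1-\beta}{\beta}.
```

For β = 0.5, the preferred point is where the tangent to the curve has slope −1, i.e., where the direction [0.5, 0.5] is normal to the curve.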

The abatement strategy corresponding to the SF point in the middle of the tradeoff curve, found by setting W* = 55, is displayed in Fig. 3. Its abatement lies between that of expected utility maximization and zero confidence, and we refer to it as our “intermediate-confidence” strategy. This strategy greatly improves the worst-case outcomes compared with EUM while maintaining a high expected utility (Fig. 4c).

The tradeoff curve is also useful for identifying “sweet spots” in the tradeoff between expected utility and worst-case performance. At the right-hand end of the curve, expected utility drops off sharply. In this region, a large sacrifice in expected utility buys only a small improvement in the worst cases. A decision maker might therefore prefer abatement strategies at the top of this “cliff” to those at its bottom.
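One simple way to locate the top of such a cliff is to compute, between neighboring points on the curve, how much expected utility E[W] is given up per unit gain in the expected value of the worst 1% of outcomes E[W_0.01], and to stop where this marginal cost first exceeds a tolerance the decision maker is willing to accept. The sketch below uses hypothetical (E[W], E[W_0.01]) pairs; the function names and the tolerance `max_cost` are illustrative assumptions, not quantities used in the analysis.

```python
import numpy as np

def marginal_costs(ew, ew_tail):
    """Sort points by E[W_0.01] and return (order, E[W] lost per unit gain in E[W_0.01]).

    Assumes the E[W_0.01] values are distinct, so no segment has zero width.
    """
    order = np.argsort(ew_tail)
    x = np.asarray(ew_tail, dtype=float)[order]
    y = np.asarray(ew, dtype=float)[order]
    return order, -np.diff(y) / np.diff(x)

def top_of_cliff(ew, ew_tail, max_cost):
    """Original index of the last point before the marginal cost first exceeds max_cost."""
    order, cost = marginal_costs(ew, ew_tail)
    steep = np.nonzero(cost > max_cost)[0]
    if steep.size == 0:
        return int(order[-1])  # no cliff: the right-most point is still acceptable
    return int(order[steep[0]])

# Hypothetical tradeoff points (E[W], E[W_0.01]), listed left to right.
ew      = [100.0, 99.5, 98.5, 97.0, 90.0]
ew_tail = [  0.0, 30.0, 55.0, 63.0, 66.0]
best = top_of_cliff(ew, ew_tail, max_cost=1.0)
```

Here the last segment costs about 2.3 units of E[W] per unit of E[W_0.01], while the earlier segments cost less than 0.2, so the point just before that segment is returned as the top of the cliff.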

3.4 Robustness of the preferred strategies

The LDC and SF criteria can be used to derive strategies that may prove more robust with respect to deeply uncertain parameters than the strategy derived from EUM (Fig. 7). Here we define an increase in robustness with respect to a deeply uncertain parameter as a decrease in the slope of the strategy’s expected performance over the considered parameter range. Figure 7 demonstrates this effect for (a) climate sensitivity, λ*, and (b) threshold-specific damages, θ_3. Each panel shows a sensitivity study of expected utility as a function of the considered parameter, with all other parameters sampled over their distributions. Both panels are plotted such that an increase in the parameter implies an increase in climate change damages. The high climate sensitivity values coincide with the lowest values of the utility function for each strategy (cf. Fig. 4). This shows that the fat upper tail of current climate sensitivity estimates (Knutti and Hegerl 2008; Urban and Keller 2009, 2010) is the dominant factor explaining the worst-case scenarios in our analysis. Over the considered parameter ranges, the EUM strategy has the steepest overall slope, followed by the intermediate- and zero-confidence strategies (the same strategies as analyzed in Figs. 3 and 4). To some extent, this ranking of slopes is expected given the tradeoff between the expected utility and the expected utility for the worst-case scenarios (which are located at the far right of both panels). What is perhaps surprising is that the curves cross. As a result, the ranking of the strategies by expected utility is reversed between the best-case and the worst-case scenarios. For example, the expected utility maximizing strategy has the highest utility for the best-case scenarios (left-hand side of panel a) but the lowest expected utility for the worst-case scenarios (right-hand side of panel a). This result also shows that the SF criterion can identify strategies in a high-dimensional decision space that may increase robustness relative to EUM.
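The slope-based definition of robustness and the crossing of the curves can both be checked numerically once expected utility has been evaluated on a grid of parameter values. The sketch below fits a straight line to each strategy's curve and reports where two curves cross. The grid, the utility values, and the function names are invented for illustration, and a least-squares line is only one of several reasonable ways to summarize an "overall slope".

```python
import numpy as np

def robustness_slope(param_values, expected_utility):
    """Least-squares slope of expected utility against one uncertain parameter.

    Under the definition above, a smaller magnitude means a more robust strategy.
    """
    slope, _intercept = np.polyfit(param_values, expected_utility, 1)
    return float(slope)

def crossover_indices(u_a, u_b):
    """Grid indices i at which the ranking of strategies a and b flips between i and i + 1."""
    d = np.sign(np.asarray(u_a, dtype=float) - np.asarray(u_b, dtype=float))
    return np.nonzero(d[:-1] * d[1:] < 0)[0]

# Hypothetical expected utilities on a grid of climate sensitivity values (K per CO2 doubling).
sensitivity   = [1.5, 2.5, 3.5, 4.5, 6.0, 8.0]
eum_strategy  = [100.0, 98.0, 95.0, 90.0, 80.0, 60.0]
zero_strategy = [ 96.0, 95.0, 94.0, 92.0, 88.0, 80.0]

slopes = {name: robustness_slope(sensitivity, u)
          for name, u in [("EUM", eum_strategy), ("zero confidence", zero_strategy)]}
crossings = crossover_indices(eum_strategy, zero_strategy)
```

In this toy example the EUM curve is steeper and lies above the zero-confidence curve at low sensitivity but below it at high sensitivity, the same qualitative pattern as in Fig. 7a.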

3.5 Effects of learning

Learning has a significant impact on the abatement paths for the SF and LDC criteria at intermediate levels of confidence, but not for the EUM criterion. The results thus far have neglected learning. However, uncertainties in the natural and human systems will often decrease over time as observations are made (cf. Keller and McInerney (2008) and Ricciuto et al. (2008), but see also Oppenheimer et al. (2008) for counterexamples). To explore the importance of learning, we consider a scenario in which decision makers instantaneously learn about the MOC sensitivity in the year 2075. Before this time, a single abatement path is followed. After 2075, decision makers follow one abatement path if the MOC proves sensitive to climate change and a different path if they learn otherwise. This representation is clearly unrealistic; the learning process will likely happen gradually as observations are made (Keller and McInerney 2008; Keller et al. 2007a). However, it suffices to yield interesting results.
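The one-time learning scenario can be represented as a two-branch decision tree: a single abatement segment before the learning year, followed by one segment per possible state of the MOC. The requirement that decisions made before 2075 cannot depend on information revealed in 2075 is usually called non-anticipativity. The sketch below encodes this structure; the class name and abatement values are hypothetical, and the 10-year time step starting in 2005 is an assumption made for illustration.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class LearningStrategy:
    """Abatement decisions with one-time, perfect learning about MOC sensitivity."""
    years: np.ndarray           # decision periods
    learn_year: int             # year in which MOC sensitivity is revealed
    shared: np.ndarray          # abatement before learn_year (same in every state)
    if_sensitive: np.ndarray    # abatement from learn_year on if the MOC is sensitive
    if_insensitive: np.ndarray  # abatement from learn_year on if the MOC is insensitive

    def __post_init__(self):
        n_before = int(np.sum(self.years < self.learn_year))
        n_after = self.years.size - n_before
        if self.shared.size != n_before:
            raise ValueError("shared segment must cover every period before learn_year")
        if self.if_sensitive.size != n_after or self.if_insensitive.size != n_after:
            raise ValueError("each branch must cover every period from learn_year on")

    def path(self, moc_sensitive: bool) -> np.ndarray:
        """Full abatement path followed in the given state of the world."""
        branch = self.if_sensitive if moc_sensitive else self.if_insensitive
        return np.concatenate([self.shared, branch])

# Illustrative values only: the abatement fractions are made up.
years = np.arange(2005, 2206, 10)  # 21 periods, 2005-2205
strategy = LearningStrategy(
    years=years,
    learn_year=2075,
    shared=np.linspace(0.10, 0.30, 7),  # 2005-2065
    if_sensitive=np.full(14, 0.60),     # 2075-2205, MOC sensitive
    if_insensitive=np.full(14, 0.30),   # 2075-2205, MOC insensitive
)
```

An optimizer would choose `shared` once and each branch separately, weighting the branch outcomes by the probability of each MOC state; forcing the two branches to be equal recovers the no-learning case.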

Figure 8 shows preferred abatement for three decision criteria with learning: (a) EUM, (b) intermediate confidence, and (c) zero confidence. As before, the intermediate-confidence strategy is determined using the safety first criterion with E[W_0.01] = 55. Learning about the MOC in 2075 has only a small effect on the EUM and zero-confidence strategies (panels a and c). The expected utility maximizer is not particularly sensitive to the MOC threshold (cf. Fig. 2), and so does not significantly alter the abatement path at the time of learning. The decision maker with zero confidence in the underlying parameter distributions, on the other hand, is trying to avoid the worst-case outcomes at all costs. Whether or not the MOC proves sensitive to warming, the worst-case outcomes still occur when the climate sensitivity is largest. Since high abatement levels help improve these outcomes, the zero-confidence decision maker pursues a high abatement path regardless of what they learn about the MOC.

In contrast, the abatement path for the decision maker with intermediate confidence (Fig. 8b) changes noticeably after the time of learning. If learning reveals that the MOC is sensitive to climate change, the intermediate-confidence decision maker increases abatement to avoid or delay an MOC shutdown. If learning reveals that the MOC is insensitive, abatement levels decrease shortly after the time of learning, since the economic damages from an MOC shutdown are no longer a concern.

Figure 9 maps the tradeoff between E[W] and E[W_0.01] for two cases: no learning about the MOC within the considered time horizon, and learning in 2075. The end points of these curves, corresponding to EUM (left end) and zero confidence (right end), are similar, as expected since the corresponding abatement strategies are almost the same. However, the intermediate-confidence strategy, with E[W_0.01] = 55, shows a marked improvement in expected utility due to learning. This improvement is even greater for values of E[W_0.01] between 55 and 62.
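The vertical distance between the two curves at a fixed expected value of the worst 1% of outcomes, E[W_0.01], is the quantity that is converted into a value of information below. Because the preferred strategies in the two cases need not fall at exactly the same E[W_0.01] values, one simple approach is to interpolate each curve linearly; the sketch below does this with `numpy.interp`. The curve points and the function name are hypothetical.

```python
import numpy as np

def expected_utility_gap(tail_values, no_learning, learning):
    """Gain in E[W] from learning at fixed E[W_0.01], by linear interpolation.

    Each curve is a sequence of (E[W_0.01], E[W]) points sorted by increasing
    E[W_0.01], as numpy.interp requires.
    """
    x0, y0 = np.asarray(no_learning, dtype=float).T
    x1, y1 = np.asarray(learning, dtype=float).T
    return np.interp(tail_values, x1, y1) - np.interp(tail_values, x0, y0)

# Hypothetical curves with matching end points, as described in the text.
no_learning = [(0.0, 100.0), (30.0, 99.5), (55.0, 98.5), (63.0, 97.0), (66.0, 90.0)]
learning    = [(0.0, 100.0), (30.0, 99.7), (55.0, 99.3), (63.0, 98.5), (66.0, 90.0)]
gap = expected_utility_gap([0.0, 55.0, 66.0], no_learning, learning)
```

With these toy curves the gap vanishes at both end points and is positive in between, the pattern described for Fig. 9.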

Fig. 9 The tradeoff between expected utility (E[W]) and the expected value of the lowest 1% of utility (E[W_0.01]) for the case with no learning within the considered time horizon about whether the MOC is sensitive to warming (solid), and for the case with learning about this sensitivity in the year 2075 (dashed). Preferred strategies for each case are determined using both the limited degree of confidence and safety first criteria, with the results for each case collated onto a single curve. Both the climate threshold and uncertainty are considered, and utilities have been rescaled to 100% of the business-as-usual (BAU) range.

The difference in expected utility between the two tradeoff curves can be translated into an economic value of information (VOI). We approximate the VOI in the optimal growth model by the change in 2005 consumption that would equalize the objective functions for the cases with and without the additional information. Figure 10 expresses the VOI for various values of E[W_0.01] as a percentage of 2005 GWP. For reference, 1% of 2005 GWP in DICE-07 is approximately 600 billion US dollars. For the intermediate-confidence strategy, this value is around 20% of GWP, and it is larger for values of E[W_0.01] between 55 and 62. If learning occurs earlier, this value is likely to be greater (Keller et al. 2007b).
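A minimal sketch of this conversion follows. It assumes a DICE-style objective in which first-period welfare is population times a constant-relative-risk-aversion (CRRA) utility of per-capita consumption, and it changes only 2005 consumption while holding all later periods fixed. Because 2005 consumption is the same in every sampled state of the world, such a change shifts E[W] and E[W_0.01] by the same amount, so the same conversion also applies to an LDC objective whose weights sum to one. The function names, units, and parameter values are illustrative assumptions, not the model's code.

```python
import math

def crra(c, eta):
    """CRRA utility of per-capita consumption c; log utility when eta == 1."""
    if eta == 1.0:
        return math.log(c)
    return (c ** (1.0 - eta) - 1.0) / (1.0 - eta)

def consumption_equivalent(delta_j, c0, pop0, eta, tol=1e-10):
    """First-period consumption increase dc that raises welfare by delta_j >= 0.

    Solves pop0 * [u((c0 + dc) / pop0) - u(c0 / pop0)] = delta_j by bisection.
    """
    if delta_j < 0:
        raise ValueError("delta_j must be non-negative")
    base = crra(c0 / pop0, eta)

    def excess(dc):
        return pop0 * (crra((c0 + dc) / pop0, eta) - base) - delta_j

    lo, hi = 0.0, c0
    for _ in range(200):  # widen the bracket until it contains the root
        if excess(hi) >= 0.0:
            break
        hi *= 2.0
    else:
        raise ValueError("delta_j exceeds the welfare attainable in the first period")
    for _ in range(200):  # bisection; stops once the bracket is narrow enough
        mid = 0.5 * (lo + hi)
        if excess(mid) < 0.0:
            lo = mid
        else:
            hi = mid
        if hi - lo <= tol * c0:
            break
    return 0.5 * (lo + hi)

def voi_percent_of_gwp(delta_j, c0, pop0, eta, gwp0):
    """Value of information expressed as a percentage of first-period gross world product."""
    return 100.0 * consumption_equivalent(delta_j, c0, pop0, eta) / gwp0
```

Bisection is used because the welfare gain is monotonic in the added consumption, which makes the root unique and the search robust; for log utility the answer has the closed form c0 (exp(delta_j / pop0) − 1), which is a convenient check.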

4 Caveats

Our results derive from the analysis of a simple integrated assessment model of climate change. The model’s simplicity enables a transparent analysis, but at the cost of neglecting many potentially important issues. Integrated assessment models of climate change have many limitations (e.g. Ackerman et al. 2009; Weyant 2009; Nordhaus 2008; Goes et al. 2011; Schienke et al. 2011). We focus here on key issues that are specific to (i) the ethical framework underlying the DICE model, (ii) the partial representation of climate threshold responses, and (iii) the limited set of options and uncertainties considered in this analysis.

First, the DICE model employs a discounted utilitarian and consequentialist framework (i.e., future utilities are discounted at a social rate of time preference). Choosing a value for the social rate of time preference poses nontrivial ethical and analytical challenges. Ramsey (1928) called the approach to “discount later enjoyments in comparison with earlier ones [...] a practice which is ethically indefensible and arises merely from the weakness of the imagination”. It is important to note, however, that the adopted representation of discounting may be a reasonable description of observed behavior, that discounting is a dominant and codified approach to assessing climate change strategies, and that several arguments support positive social rates of time preference (cf. EPA 2010; Nordhaus 1994; OMB 2003; Stern 2008). Of course, a descriptive approach to choosing a value for the social rate of time preference is not equivalent to a prescriptive statement (cf. Bradford 1999; Schienke et al. 2011). Furthermore, this brief discussion is not meant to distract from the fact that different and arguably more refined approaches to discounting exist, and that our results are likely sensitive to how discounting is implemented (cf. Arrow 2009; Weitzman 2001).
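As a rough illustration of why this choice matters, a discounted utilitarian objective weights utility t years ahead by 1/(1 + ρ)^t, where ρ is the annual rate of time preference. The sketch below evaluates this weight for a century ahead at a few illustrative values of ρ; the function name and the rates are assumptions chosen for the comparison, not parameters taken from this analysis.

```python
def discount_factor(rho, t):
    """Weight on utility t years ahead at an annual rate of time preference rho."""
    return (1.0 + rho) ** (-t)

# Illustrative annual rates of time preference.
rates = [0.001, 0.015, 0.03]
weights_100y = {rho: discount_factor(rho, 100) for rho in rates}
```

Utility a century ahead then receives weights of roughly 0.90, 0.23, and 0.05 relative to today, so the relative importance of long-run climate damages changes by more than an order of magnitude across this range of ρ.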

Second, our study analyzes only one of many potential climate threshold responses. Our conclusions may well change for other climate thresholds. For instance, a disintegration of the Greenland Ice Sheet might be triggered much earlier than the considered example of a shutdown of the North Atlantic Meridional Overturning Circulation (Keller et al. 2008b; Schneider et al. 2007).

Third, our analysis neglects backstop technologies and induced technological change (Keller et al. 2008a), and it samples only a small subset of the parametric and structural uncertainties. For example, we hypothesize that considering CO2 sequestration as a backstop technology would increase both E[W] and E[W_0.01]; however, the cost and global acceptance of this technology are deeply uncertain.

5 Conclusion

We modify a relatively parsimonious integrated assessment model of climate change to include simple representations of a potential climate threshold response, structural uncertainty, and learning. We use this model to analyze the effects of alternative decision criteria on preferred strategies, the distribution of outcomes, the robustness of the strategies, and the economic value of information. The simplicity of the model enables an arguably transparent analysis, but it also imposes considerable caveats. Subject to these caveats, we draw four main conclusions.

First, decision criteria that put greater weight on performance under the worst-case scenarios than expected utility maximization does lead to higher preferred investments in abating anthropogenic climate forcing. This increase in near-term preferred abatement occurs for scenarios with and without learning. Second, increasing the relative importance of the worst-case scenarios is a promising conceptual and computational technique for identifying strategies that may prove more robust, in the sense that they trade a small decrease in expected performance for a sizable increase in performance under the worst-case scenarios. In particular, we show that such strategies can be identified in a numerically efficient way for relatively high-dimensional, dynamic, and non-convex decision problems such as those posed by anthropogenic climate change. Third, decision criteria that place higher importance on a strategy’s performance under worst-case scenarios than expected utility maximization does can considerably increase the economic value of learning about the sensitivity of the MOC to climate change. Finally, a key policy-relevant conclusion that follows from our analysis is that increasing near-term investment in reducing anthropogenic climate forcing may be a promising avenue for increasing the robustness of climate strategies.

References

Aaheim H, Bretteville C (2001) Decision-making frameworks for climate policy under uncertainty. Working paper 2001:02, CICERO, Oslo, Norway

Ackerman F, DeCanio SJ, Howarth RB, Sheeran K (2009) Limitations of integrated assessment models of climate change. Clim Change 95(3–4):297–315. doi:10.1007/s10584-009-9570-x

Alexander S, Coleman T, Li Y (2006) Minimizing CVaR and VaR for a portfolio of derivatives. J Bank Financ 30:583–605. doi:10.1016/j.jbankfin.2005.04.012

Alley RB, Marotzke J, Nordhaus W, Overpeck J, Pielke R, Pierrehumbert R, Rhines P, Stocker T, Talley L, Wallace JM (2002) Abrupt climate change: inevitable surprises. National Academy Press, Washington, DC

Andersson F, Mausser H, Rosen D, Uryasev S (2001) Credit risk optimization with conditional value-at-risk criterion. Math Program 89:273–291. doi:10.1007/PL00011399

Andronova NG, Schlesinger ME (2001) Objective estimate of the probability density function for climate sensitivity. J Geophys Res 106:22605–22612. doi:10.1029/2000JD000259

Arrow KJ (2009) A note on uncertainty and discounting in models of economic growth. J Risk Uncertain 38(2):87–94

Bernstein L, Bosch P, Canziani O, Chen Z, Christ R, Davidson O, Hare W, Huq S, Karoly D, Kattsov V, Kundzewicz Z, Liu J, Lohmann U, Manning M, Matsuno T, Menne B, Metz B, Mirza M, Nicholls N, Nurse L, Pachauri R, Palutikof J, Parry M, Qin D, Ravindranath N, Reisinger A, Ren J, Riahi K, Rosenzweig C, Rusticucci M, Schneider S, Sokona Y, Solomon S, Stott P, Stouffer R, Sugiyama T, Swart R, Tirpak D, Vogel C, Yohe G (eds) (2008) IPCC, 2007: climate change 2007: synthesis report. Contribution of Working Groups I, II and III to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. IPCC, Geneva, Switzerland

Borsuk ME, Tomassini L (2005) Uncertainty, imprecision and the precautionary principle in climate change assessment. Water Sci Technol 52(6):213–225

Bradford DF (1999) On the uses of benefit-cost reasoning in choosing policy toward global climate change. In: Portney PR, Weyant JP (eds) Discounting and intergenerational equity. RFF Press, Washington, DC, pp 37–44

Bretteville Froyn C (2005) Decision criteria, scientific uncertainty, and the global warming controversy. Mitig Adapt Strategies Glob Chang 10:183–211. doi:10.1007/s11027-005-3782-9

Budescu DV, Kuhn KM, Johnson T (2002) Modeling certainty equivalents for imprecise gambles. Org Behav Human Decis Process 88:748–768. doi:10.1016/S0749-5978(02)00014-6

Du N, Budescu DV (2005) The effects of imprecise probabilities and outcomes in evaluating investment options. Manage Sci 51:1791–1803. doi:10.1287/mnsc.1050.0428

Ellsberg D (1961) Risk, ambiguity, and the Savage axioms. Q J Econ 75(4):643–669

Ellsberg D (2001) Risk, ambiguity and decision. Garland, New York

EPA (2010) Technical support document: social cost of carbon for regulatory impact analysis under executive order 12866. Interagency Working Group on Social Cost of Carbon, United States Government. http://www.epa.gov/oms/climate/regulations/scc-tsd.pdf. Accessed 17 June 2011

Fortin I, Fuss S, Hlouskova J, Khabarov N, Obersteiner M, Szolgayova J (2007) An integrated CVaR and real options approach to investments in the energy sector. Economic Series 209, Institute for Advanced Studies, Vienna

Goes M, Keller K, Tuana N (2011) The economics (or lack thereof) of aerosol geoengineering. Clim Change. doi:10.1007/s10584-010-9961-z

Hall J, Fu G, Lawry J (2007) Imprecise probabilities of climate change: aggregation of fuzzy scenarios and model uncertainties. Clim Change 81(3–4):265–281. doi:10.1007/s10584-006-9175-6

Hurwicz L (1951) Optimality criteria for decision-making under ignorance. Cowles Commission Discussion Paper, Statistics, No. 370

Keith DW (1996) When is it appropriate to combine expert judgments? Clim Change 33(2):139–143. doi:10.1007/BF00140244

Keller K, McInerney D (2008) The dynamics of learning about a climate threshold. Clim Dyn 30(2–3):321–332

Keller K, Tan K, Morel F, Bradford D (2000) Preserving the ocean circulation: implications for climate. Clim Change 47:17–43. doi:10.1023/A:1005624909182

Keller K, Bolker BM, Bradford DF (2004) Uncertain climate thresholds and optimal economic growth. J Environ Econ Manage 48:723–741. doi:10.1016/j.jeem.2003.10.003

Keller K, Deutsch C, Hall MG, Bradford DF (2007a) Early detection of changes in the North Atlantic meridional overturning circulation: implications for the design of ocean observation systems. J Climate 20:145–157. doi:10.1175/JCLI3993.1

Keller K, Kim SR, Baehr J, Bradford D, Oppenheimer M (2007b) What is the economic value of information about climate thresholds? In: Schlesinger ME (ed) Human-induced climate change. Cambridge University Press, Cambridge, UK, pp 343–354

Keller K, McInerney D, Bradford DF (2008a) Carbon dioxide sequestration: how much and when? Clim Change 88:267–291. doi:10.1007/s10584-008-941

Keller K, Schlesinger M, Yohe G (2008b) Managing the risks of climate thresholds: uncertainties and information needs. Clim Change 91:5–10

Knutti R, Hegerl G (2008) The equilibrium sensitivity of the earth’s temperature to radiation changes. Nat Geosci 1:735–743. doi:10.1038/ngeo337

Kriegler E, Held H (2005) Utilizing belief functions for the estimation of future climate change. Int J Approx Reason 39(2–3):185–209. doi:10.1016/j.ijar.2004.10.005

Krokhmal P, Palmquist J, Uryasev S (2002) Portfolio optimization with conditional value-at-risk objective and constraints. J Risk 4(2):11–27

Lange A (2003) Climate change and the irreversibility effect combining expected utility and maximin. Environ Resour Econ 25(4):417–434. doi:10.1023/A:1025054716419

Larsen N, Mausser H, Uryasev S (2002) Algorithms for optimization of value-at-risk. In: Pardalos P, Tsitsiringos V (eds) Financial engineering, E-commerce, and supply chain. Kluwer Academic Publisher, Boston, pp 129–157

Latif M, Roeckner E, Mikolajewicz U, Voss R (2000) Tropical stabilization of the thermohaline circulation in a greenhouse warming simulation. J Clim 13:1809–1813. doi:10.1175/1520-0442(2000)013<1809:L>2.0.CO;2

Lempert R, Sanstad A, Schlesinger M (2006) Multiple equilibria in a stochastic implementation of DICE with abrupt climate change. Energy Econ 28:677–689. doi:10.1016/j.eneco.2006.05.013

Lempert RJ (2002) A new decision sciences for complex systems. Proc Natl Acad Sci USA 99:7309–7313

Lempert RJ, Collins MT (2007) Managing the risk of uncertain threshold responses: comparison of robust, optimum, and precautionary approaches. Risk Anal 27(4):1009–1026. doi:10.1111/j.1539-6924.2007.00940.x

Lempert RJ, Popper SW, Bankes SC (2003) Shaping the next one hundred years: new methods for quantitative, long-term policy analysis. Tech. rep., RAND MR-1626-RPC

Link PM, Tol RSJ (2004) Possible economic impacts of a shutdown of the thermohaline circulation: an application of FUND. Port Econ J 3:99–114. doi:10.1007/s10258-004-0033-z

Liu J, Men C, Cabrera V, Uryasev S, Fraisse C (2008) Optimizing crop insurance under climate variability. J Appl Meteorol Clim 47:2572–2580. doi:10.1175/2007JAMC1490.1

McInerney D, Keller K (2008) Economically optimal risk reduction strategies in the face of uncertain climate thresholds. Clim Change 91:29–41. doi:10.1007/s10584-006-9137-z

Morgan G, Dowlatabadi H, Henrion M, Keith D, Lempert R, McBride S, Small M, Wilbanks T (2009) U.S. CCSP Synthesis and Assessment Product 5.2, Best practice approaches for characterizing, communicating, and incorporating scientific uncertainty in decisionmaking. Tech. rep., National Oceanic and Atmospheric Administration

Nordhaus W (1992) An optimal transition path for controlling greenhouse gases. Science 258:1315–1319

Nordhaus W (2007a) The challenge of global warming: Economic models and environmental policy. http://www.econ.yale.edu/~nordhaus/DICEGAMS/DICE2007.htm. Accessed 2 May 2007, model version: DICE-2007.delta.v7

Nordhaus W (2007b) A review of the Stern review on the economics of climate change. J Econ Lit 45(3):686–702. doi:10.1257/jel.45.3.686

Nordhaus WD (1994) Managing the global commons. MIT Press, Cambridge, Massachusetts

Nordhaus WD (2008) A question of balance: economic modeling of global warming. Yale University Press

OMB (2003) Circular A-4: regulatory analysis. Office of Management and Budget, http://www.ombwatch.org/files/regs/OIRA/a-4-2003.pdf. Accessed 17 June 2011

Oppenheimer M, O’Neill BC, Webster M (2008) Negative learning. Clim Change 89(1–2):155–172. doi:10.1007/s10584-008-9405-1

Peterson GD, Carpenter SR, Brock WA (2003) Uncertainty and the management of multistate ecosystems: an apparently rational route to collapse. Ecology 84(6):1403–1411

Popp D (2004) ENTICE: endogenous technological change in the DICE model of global warming. J Environ Econ Manage 48:742–768. doi:10.1016/j.jeem.2003.09.002

Quaranta A, Zaffaroni A (2008) Robust optimization of conditional value at risk and portfolio selection. J Bank Financ 32:2046–2056. doi:10.1016/j.jbankfin.2007.12.025

Quiggin J (2008) Economists and uncertainty. In: Bammer G, Smithson M (eds) Uncertainty and risk: multidisciplinary perspectives, earthscan risk in society series, London, pp 195–204

Ramsey F (1928) A mathematical theory of saving. Econ J 38(152):543–559

Rawls J (1971) A theory of justice. Harvard University Press

Ricciuto DM, Davis KJ, Keller K (2008) A Bayesian synthesis inversion of carbon cycle observations: how do observations reduce uncertainties about future sinks? Glob Biogeochem Cycles 22(GB2030). doi:10.1029/2006GB002908

Schienke E, Baum S, Tuana N, Davis K, Keller K (2011) Intrinsic ethics regarding integrated assessment models for climate management. Sci Eng Ethics 17(3):503–523. doi:10.1007/s11948-010-9209-3

Schneider SH, Semenov S, Patwardhan A, Burton I, Magadza C, Oppenheimer M, Pittock A, Rahman A, Smith J, Suarez A, Yamin F, Corfee-Morlot J, Finkel A, Füssel HM, Keller K, MacMynowski D, Mastrandrea MD, Todorov A, Sukumar R, Ypersele JPv, Zillman J (2007) Assessing key vulnerabilities and the risk from climate change. Cambridge University Press, Cambridge, UK, pp 779–810

Stern N (2008) The economics of climate change. Am Econ Rev 98(2):1–37. doi:10.1257/aer.98.2.1

Stocker T, Schmittner A (1997) Influence of CO2 emission rates on the stability of the thermohaline circulation. Nature 388:862–865

Tol R (1994) The damage costs of climate change: a note on tangibles and intangibles, applied to DICE. Energy Policy 22(5):436–438

Tol R (2003) Is the uncertainty about climate change too large for expected cost-benefit analysis? Clim Change 56(3):265–289. doi:10.1023/A:1021753906949

Urban NM, Keller K (2009) Complementary observational constraints on climate sensitivity. Geophys Res Lett 36(L04708). doi:10.1029/2008GL036457

Urban NM, Keller K (2010) Probabilistic hindcasts and projections of the coupled climate, carbon cycle, and Atlantic meridional overturning circulation systems: a Bayesian fusion of century-scale observations with a simple model. Tellus A 62(5):737–750. doi:10.1111/j.1600-0870.2010.00471.x

Weitzman ML (2001) Gamma discounting. Am Econ Rev 91(1):260–271

Welsch H (1995) Greenhouse-gas abatement under ambiguity. Energy Econ. 17(2):91–100. doi:10.1016/0140-9883(95)00010-R

Weyant JP (2009) A perspective on integrated assessment. Clim Change 95(3-4):317–323. doi:10.1007/s10584-009-9612-4

Zickfeld K, Bruckner T (2008) Reducing the risk of Atlantic thermohaline circulation collapse: sensitivity analysis of emissions corridors. Clim Change 91(3–4):291–315. doi:10.1007/s10584-008-9467-0

Zickfeld K, Levermann A, Morgan M, Kuhlbrodt T, Rahmstorf S, Keith D (2007) Expert judgements on the response of the Atlantic meridional overturning circulation to climate change. Clim Change 82:235–265. doi:10.1007/s10584-007-9246-3

Acknowledgements

We thank David Budescu, Nathan Urban, Marlos Goes, and Brian Tuttle for invaluable discussions. Partial funding from the U.S. National Science Foundation (Grant SES-0345925), and the Penn State Center for Climate Risk Management, is gratefully acknowledged. Comments from the editors and three anonymous reviewers helped to improve the exposition of the manuscript considerably. Of course, all errors and opinions are ours.

Cite this article

McInerney, D., Lempert, R. & Keller, K. What are robust strategies in the face of uncertain climate threshold responses?. Climatic Change 112, 547–568 (2012). https://doi.org/10.1007/s10584-011-0377-1

DOI: https://doi.org/10.1007/s10584-011-0377-1