Abstract

The assessments of the Intergovernmental Panel on Climate Change (IPCC), since the First Assessment Report, have involved calibrated uncertainty language and other methods aimed towards clear communication of the degree of certainty in findings of the assessment process. There has been a continuing tradition of iterative improvement of the treatment of uncertainties in these assessments. Here we consider the motivations for the most recent revision of the uncertainties guidance provided to author teams of the Fifth Assessment Report (AR5). We first review the history of usage of calibrated language in IPCC Assessment Reports, along with the frameworks for treatment of uncertainties that have been provided to IPCC author teams. Our primary focus is the interpretation and application of the guidance provided to author teams in the Fourth Assessment Report, with analysis of the successes and challenges in the application of this guidance and approaches taken in usage of its calibrated uncertainty language. We discuss the ways in which the AR5 Guidance Note attempts to refine the calibrated uncertainty metrics and formalize their interrelationships to improve the consistency of treatment of uncertainties across the Working Group contributions.

1 Introduction

Intergovernmental Panel on Climate Change (IPCC) reports present comprehensive, up-to-date scientific, technical, and socioeconomic assessments of topics related to climate change (IPCC 2008). Author teams synthesize and evaluate available scientific information and then communicate the state of knowledge in an informative and policy-relevant manner (Moss and Schneider 2000). To be useful to decision-makers and other users of the reports, the findings of this assessment process must reflect the strength of and uncertainties in the associated knowledge base, which vary across findings. An important innovation in the history of IPCC reports has been extensive use of calibrated language to communicate the degree of certainty in findings in a consistent manner that enables clear interpretation by users of the reports. This language, with its corresponding approaches for evaluating uncertainties, helps distinguish findings along the spectrum of speculative to well understood.

Treatment of uncertainties has evolved over the course of IPCC assessments (Table 1). In its Policymakers Summary, the Working Group I contribution to the First Assessment Report (FAR) sorted findings under headings related qualitatively to their degree of certainty (IPCC 1990). Additionally, a few individual chapters in the Working Group I contribution used qualitatively calibrated levels of confidence to characterize findings presented in their Executive Summaries (Folland et al. 1990; Mitchell et al. 1990). The Second Assessment Report (SAR) discussed the importance of a consistent framework for evaluating uncertainties (McBean et al. 1996) but did not yet employ such a framework across the Working Groups. Nonetheless, most chapters in the Working Group II contribution to the SAR used qualitative levels of confidence to characterize findings presented in their Executive Summaries (IPCC 1996).

For subsequent IPCC assessment cycles, uncertainties guidance papers have aimed to enable systematic treatment of uncertainties across the Working Groups. A first guidance paper was developed as part of the Third Assessment Report (TAR) cycle, providing a common process and calibrated language for evaluating and communicating the degree of certainty in findings (Moss and Schneider 2000). This guidance paper stated that “guidelines such as these will never truly be completed,” encouraging author teams to debate and think critically about the guidance and to revise it following the TAR. An iterative process of learning and improvement has thus been central to the IPCC uncertainties guidance from the start. For the Fourth Assessment Report (AR4), the IPCC Workshop on Describing Uncertainties in Climate Change to Support Analysis of Risk and of Options (May 2004) and the resulting concept paper (Manning et al. 2004) culminated in the Guidance Notes for Lead Authors of the AR4 on Addressing Uncertainties (IPCC 2005). Now, at the start of the Fifth Assessment Report (AR5), the guidance on treatment of uncertainties has again been revisited. A Cross-Working Group Meeting on Consistent Treatment of Uncertainties (July 2010) led to development of the Guidance Note for Lead Authors of the IPCC Fifth Assessment Report on Consistent Treatment of Uncertainties (Mastrandrea et al. 2010).

Each guidance paper has provided author teams with related but distinct approaches for evaluating and communicating the degree of certainty in findings of the assessment process. The guidance paper for the TAR (Moss and Schneider 2000) provided insight and suggestions for estimating parameters, processes, and outcomes and for formulating clear, precise, and meaningful findings. It also presented calibrated language, both a quantitative “confidence” scale and qualitative descriptors of evidence and agreement, for characterizing the state of knowledge underlying a finding. The confidence scale included terms such as very low confidence (5% or less), medium confidence (33% to 67%), and very high confidence (95% or greater), and the qualitative descriptors of evidence and agreement ranged from speculative to well established. Additionally, the guidance paper instructed author teams to prepare traceable accounts of their judgments about scientific information underlying findings, including descriptions of the type and consistency of lines of evidence and of probabilistic or other information on associated uncertainties.

In the TAR itself, however, usage of calibrated language diverged (Manning 2006). The Working Group II contribution to the TAR closely adopted the approach of the guidance paper, as well as sometimes using the qualitative descriptors of evidence and agreement (IPCC 2001b). On the other hand, the Working Group I contribution to the TAR used a distinct “likelihood” scale to communicate uncertainties evaluated more through statistical and probabilistic information than through author teams’ judgments of the state of knowledge (IPCC 2001a). The Working Group I contribution did use a qualitative “level of scientific understanding” index (ranging from very low to high) to characterize the understanding underlying the contribution of different factors to radiative forcing of the climate system (IPCC 2001a; Ramaswamy et al. 2001). The Working Group III contribution to the TAR did not use calibrated language to communicate the degree of certainty in findings (IPCC 2001c). Correspondingly, the TAR Synthesis Report communicated the degree of certainty in findings in varying ways, depending on the Working Group contribution from which findings originated (IPCC 2001d). Responding to this divergence in usage across the Working Groups, the Guidance Notes for the AR4 attempted to formally define confidence and likelihood as two distinct scales of quantitatively calibrated language (IPCC 2005).

In this paper, we will focus on the transition from the AR4 Guidance Notes to the current uncertainties guidance for the AR5, examining the motivation for the most recent revisions. We will first provide an overview of the AR4 guidance for evaluating and communicating the degree of certainty in key findings of the assessment process. We will then consider how the Guidance Notes were applied in practice across the Working Groups in the AR4, identifying differing interpretations of the calibrated language and inconsistencies and ambiguities that sometimes resulted. We will discuss how these differences stimulated revisions of the uncertainties guidance provided to author teams of the AR5.

2 Overview of the AR4 uncertainties guidance

The AR4 guidance paper on treatment of uncertainties presented three calibrated scales for communicating the degree of certainty in findings (IPCC 2005). This calibrated language revised the scales presented in the TAR uncertainties guidance (Moss and Schneider 2000), also building upon the likelihood scale used by Working Group I in the TAR (IPCC 2001a; Manning 2006).

2.1 Qualitative levels of understanding

As in the TAR guidance paper, the AR4 Guidance Notes provided qualitative language for characterizing the amount of evidence supporting findings and the degree of consensus or agreement among experts regarding the interpretation of this evidence (IPCC 2005). Whereas the TAR uncertainties guidance provided four qualitative terms for together characterizing evidence and agreement (Moss and Schneider 2000), the AR4 Guidance Notes proposed that evidence and agreement be described separately, with evidence ranging in amount from limited to much and agreement ranging from low to high. For findings associated with high agreement and much evidence or when otherwise appropriate, author teams were instructed to use the calibrated confidence or likelihood scales.

2.2 Levels of confidence

As in the TAR guidance paper, the AR4 Guidance Notes again provided quantitatively calibrated levels of confidence (IPCC 2005). The Guidance Notes specified that levels of confidence characterized an author team’s judgment of the correctness of a model, analysis, or statement. They defined five levels of confidence ranging from very low confidence (less than a 1 out of 10 chance of being correct), to medium confidence (about a 5 out of 10 chance of being correct), to very high confidence (at least a 9 out of 10 chance of being correct). Use of low and very low confidence was suggested only for areas of major concern due to risk or opportunity. In contrast to the TAR guidance paper’s presentation, these levels of confidence were not defined as probability ranges.
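The contrast between the two confidence scales can be laid out side by side. The following is a minimal illustrative sketch, not part of either guidance document. The dictionary and function names are our own, and the entries for high and low confidence are filled in from the TAR guidance paper and AR4 Guidance Notes tables as we understand them, since only the very low, medium, and very high levels are quoted in the text.

```python
# Illustrative only: names are hypothetical, and the "high" and "low" rows are
# assumptions taken from the guidance tables rather than from the text above.

# TAR guidance paper: confidence defined as probability ranges (lower, upper).
TAR_CONFIDENCE = {
    "very high confidence": (0.95, 1.00),
    "high confidence": (0.67, 0.95),
    "medium confidence": (0.33, 0.67),
    "low confidence": (0.05, 0.33),
    "very low confidence": (0.00, 0.05),
}

# AR4 Guidance Notes: confidence defined as a chance of being correct,
# stated as single anchors rather than ranges.
AR4_CONFIDENCE = {
    "very high confidence": "at least 9 out of 10",
    "high confidence": "about 8 out of 10",
    "medium confidence": "about 5 out of 10",
    "low confidence": "about 2 out of 10",
    "very low confidence": "less than 1 out of 10",
}


def compare_confidence(level: str) -> str:
    """Return a one-line comparison of the TAR and AR4 definitions of a level."""
    low, high = TAR_CONFIDENCE[level]
    return (
        f"{level}: TAR {low:.0%} to {high:.0%}; "
        f"AR4 {AR4_CONFIDENCE[level]} chance of being correct"
    )


if __name__ == "__main__":
    for name in TAR_CONFIDENCE:
        print(compare_confidence(name))
```

The level names carry over from the TAR to the AR4, but the AR4 definitions are anchors rather than contiguous ranges, so a value such as a 65% chance has no unambiguous AR4 label in this sketch.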

2.3 Likelihood

The AR4 Guidance Notes also provided a likelihood scale (IPCC 2005). This calibrated language characterized probabilistic evaluation of outcomes having occurred or occurring in the future, as determined through quantitative analysis or elicitation of expert views. The Guidance Notes listed seven terms in the likelihood scale, ranging from exceptionally unlikely (<1% probability of occurrence) to virtually certain (>99% probability of occurrence). These likelihood terms revised the scale used in the Working Group I contribution to the TAR (IPCC 2001a).
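As a rough illustration of how such a scale maps a probability estimate onto calibrated language, the sketch below implements a simple lookup. It is an assumption-laden example rather than an IPCC procedure: the function name is hypothetical, the five intermediate thresholds are taken from the AR4 Guidance Notes table as we understand it (only the two endpoints are quoted above), and the treatment of values falling exactly on a boundary is our own choice.

```python
def ar4_likelihood_term(p: float) -> str:
    """Map a probability in [0, 1] to the narrowest AR4 likelihood term.

    Hypothetical helper. Thresholds other than <1% and >99% are assumed
    from the AR4 Guidance Notes table; boundary handling is arbitrary.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must lie between 0 and 1")
    if p > 0.99:
        return "virtually certain"
    if p > 0.90:
        return "very likely"
    if p > 0.66:
        return "likely"
    if p >= 0.33:
        return "about as likely as not"
    if p >= 0.10:
        return "unlikely"
    if p >= 0.01:
        return "very unlikely"
    return "exceptionally unlikely"


if __name__ == "__main__":
    for prob in (0.995, 0.95, 0.70, 0.50, 0.20, 0.05, 0.005):
        print(f"{prob:.3f} -> {ar4_likelihood_term(prob)}")
```

In the guidance itself the terms are nested (an outcome that is very likely is also likely), so returning only the narrowest applicable term is a simplification made here for clarity.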

2.4 Other aspects of the AR4 uncertainties guidance

As in the TAR guidance paper, the AR4 Guidance Notes provided more generalized advice for reviewing sources of uncertainties and considering group dynamics in the assessment process (IPCC 2005). Further, the Guidance Notes instructed author teams to explain their judgments by providing traceable accounts of the steps used to estimate the degree of certainty for key findings from available lines of evidence. The Guidance Notes also presented a hierarchy with instructions for using the calibrated uncertainty language for common categories of quantitative findings.

3 Use of the AR4 uncertainties guidance

In the AR4, trends in usage of calibrated uncertainty language emerged across the Working Groups. For assessment findings characterized by calibrated uncertainty language, the Working Group I contribution predominantly presented likelihood terms, with less explicit use of confidence (IPCC 2007a). The following statement from the Working Group I Summary for Policymakers (SPM; IPCC 2007b) typifies a finding for which likelihood was assigned:

“It is very likely that hot extremes, heat waves and heavy precipitation events will continue to become more frequent.”

Further details about evaluations of uncertainties, along with variations on the methods of evaluation, were provided in some individual Working Group I chapters (e.g., Le Treut et al. 2007; Forster et al. 2007; Hegerl et al. 2007; Meehl et al. 2007).

The Working Group II contribution to the AR4 presented confidence and likelihood language for assessment findings, relying most heavily on confidence assignments (IPCC 2007c). This example from the Working Group II SPM (IPCC 2007d) represents usage of the calibrated confidence metric:

“Adaptation for coasts will be more challenging in developing countries than in developed countries, due to constraints on adaptive capacity (high confidence).”

Several chapters also described implications of uncertainties in risk-management and decision-making frameworks (e.g., Carter et al. 2007; Kundzewicz et al. 2007; Schneider et al. 2007).

The Working Group III contribution to the AR4 (IPCC 2007e) solely used the qualitative summary terms for evidence and agreement, as exemplified by this finding in the Working Group III SPM (IPCC 2007f):

“With current climate change mitigation policies and related sustainable development practices, global GHG emissions will continue to grow over the next few decades (high agreement, much evidence).”

The Working Group III SPM stated that its assessed scientific disciplines prevented use of the quantitative confidence and likelihood scales.

The AR4 SYR necessarily included a variety of approaches for characterizing the degree of certainty in findings (IPCC 2007g), depending on the Working Group contribution from which findings originated. In some cases, synthesis of findings across Working Groups involved removing uncertainty language assigned through one Working Group’s approach.

4 Confidence and likelihood in the AR4

In the AR4 Guidance Notes, confidence and likelihood were presented as conceptually distinct quantitative metrics (IPCC 2005). Their conceptual distinction, however, was not always maintained in practice in the AR4 (IPCC 2007a, c), in large part because these metrics were intrinsically linked. Here we discuss the linkage between the confidence and likelihood scales, differing interpretations of this linked relationship, and the varying usages of the metrics that resulted.

The AR4 Guidance Notes (IPCC 2005) specified that confidence and likelihood characterized different aspects of uncertainties. Whereas levels of confidence quantified the expert judgment of an author team regarding the correctness of a model, analysis, or statement, likelihood reflected probabilistic evaluation of the occurrence of specific outcomes, based on quantitative analysis (e.g., statistical analysis of observations or model results, or formal elicitation of expert judgment).

All likelihood statements under this framework were based on information, models, and/or analyses for which confidence implicitly or explicitly had been evaluated. For example, a likelihood assignment would not be based on information, models, or analyses that the author team judged incorrect; therefore, the author team must have had some confidence in the underlying evidence (e.g., see paragraph 8D of IPCC (2005)). In some cases, author teams may have more explicitly considered their level of confidence in the information, models, and/or analyses underpinning likelihood assignments. Although the AR4 Guidance Notes implied a linked relationship between confidence and likelihood, they did not provide explicit guidance on how to account for and convey this linkage.

In the absence of such guidance, author teams across Working Groups I and II in the AR4 adopted differing interpretations of the relationship between the metrics. For instance, the Working Group I Technical Summary (TS; Solomon et al. 2007) stated that value uncertainties in processes, system interactions, and data measurements are generally expressed probabilistically (e.g., using likelihood), while structural uncertainties are generally expressed by an author team’s confidence in the results. Thus, in this interpretation, likelihood terms reflected uncertainties explicitly incorporated in available quantitative analyses, while levels of confidence characterized the degree to which models or analyses were judged to be correct. This interpretation suggested that likelihood and confidence assignments separately communicated two important types of uncertainties.

A second interpretation of the relationship between confidence and likelihood can be understood from discussions in Manning (2006) and Kandlikar et al. (2005). These authors argue that it is irrational to assign an outcome high or low likelihood if confidence in the underlying science is low and that instead it is only appropriate to assign moderate likelihood in such situations. This second interpretation is consistent with a conception of likelihood as the “real world” probability of an outcome occurring, a probability reflecting all uncertainties, including value and structural uncertainties. In implicitly blending evaluation of confidence into the assignment of likelihood, this interpretation can lead to a different usage of confidence and likelihood. Consider a hypothetical situation in which model projections suggest a high-likelihood outcome, but in which there is only moderate confidence that the models incorporate all relevant processes. Adopting this second interpretation of likelihood as reflecting all uncertainties, an author team could “downgrade” the likelihood suggested by the model projections (e.g., employing likely instead of very likely) to implicitly account for uncertainties due to missing processes. Alternatively, following the first interpretation of likelihood and confidence as separately characterizing value and structural uncertainties, an author team could present the likelihood level suggested by the model projections and separately present the level of confidence in the underlying models and their projections.
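The difference between the two interpretations in this hypothetical situation can be made concrete with a short sketch. Everything in it is illustrative: the function names are our own, the seven-term scale follows the AR4 likelihood terms, and the rule of moving one step toward the middle of the scale for medium confidence and two steps for low confidence is an arbitrary assumption, since no AR4 document specified how far a likelihood should be downgraded.

```python
# Illustrative only: the downgrade rule below is a made-up convention,
# not a procedure from the AR4 Guidance Notes or any author team.
LIKELIHOOD_SCALE = [
    "exceptionally unlikely",
    "very unlikely",
    "unlikely",
    "about as likely as not",
    "likely",
    "very likely",
    "virtually certain",
]
MIDDLE = LIKELIHOOD_SCALE.index("about as likely as not")

# Assumed number of steps to move toward the middle for each confidence level.
DOWNGRADE_STEPS = {"high": 0, "medium": 1, "low": 2}


def report_separately(model_likelihood: str, confidence: str) -> str:
    """First interpretation: state likelihood and confidence side by side."""
    return f"{model_likelihood} ({confidence} confidence)"


def report_blended(model_likelihood: str, confidence: str) -> str:
    """Second interpretation: fold confidence into a single likelihood term."""
    index = LIKELIHOOD_SCALE.index(model_likelihood)
    steps = DOWNGRADE_STEPS[confidence]
    if index > MIDDLE:
        index = max(MIDDLE, index - steps)  # pull high likelihoods down
    elif index < MIDDLE:
        index = min(MIDDLE, index + steps)  # pull low likelihoods up
    return LIKELIHOOD_SCALE[index]


if __name__ == "__main__":
    print(report_separately("very likely", "medium"))
    print(report_blended("very likely", "medium"))
```

Under these assumptions, the same model result is communicated either as "very likely (medium confidence)" or simply as "likely". A reader of the second form cannot tell whether the projections themselves suggested likely or whether a stronger result was downgraded.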

In practice in the AR4, the intrinsic linkage between confidence and likelihood and the lack of guidance for conveying the linkage, as well as the fact that both metrics were defined probabilistically, resulted in somewhat different usage of the metrics. The contributions of Working Groups I and II in the AR4 exhibited at least four separate uses of confidence and likelihood.

- Approach (1): Use of either confidence or likelihood terms to characterize different aspects of uncertainties, with confidence assignments characterizing the author team’s judgment about the correctness of a model, analysis, or statement and with likelihood assignments characterizing the probabilistic evaluation of the occurrence of specific outcomes. For example, the Working Group II AR4 SPM (IPCC 2007d) stated, “[b]ased on satellite observations since the early 1980s, there is high confidence that there has been a trend in many regions towards earlier ‘greening’ of vegetation in the spring linked to longer thermal growing seasons due to recent warming.”

- Approach (2): Use of likelihood with inclusion of a confidence evaluation in the likelihood assignment, consistent with the interpretation of the likelihood metric as encompassing all relevant uncertainties. For example, the Working Group I AR4 SPM (IPCC 2007b) stated, “[i]t is likely that there has been significant anthropogenic warming over the past 50 years averaged over each continent except Antarctica.” Section 4.1 discusses the ways in which likelihood statements of this type incorporated elements of confidence.

- Approach (3): Use of confidence and likelihood terms together in individual statements, sometimes following the interpretation of likelihood and confidence given in approach (1). For example, the Working Group II AR4 SPM stated, “[o]ver the course of this century, net carbon uptake by terrestrial ecosystems is likely to peak before mid-century and then weaken or even reverse, thus amplifying climate change (high confidence).”

- Approach (4): Use of the likelihood or confidence scales “interchangeably,” without clear conceptual distinction. For example, Chapter 11 of the Working Group I AR4 contribution (Christensen et al. 2007), in a table summarizing projected changes related to climate extremes, used a “confidence” scale that combined confidence and likelihood terms.

In the subsequent sections, for findings related to observed and projected changes in climate and impacts, we illustrate these different approaches.

4.1 Observed climate changes and impacts

Many findings in the Working Group I and II contributions to the AR4 (IPCC 2007a, c) pertained to observed changes in climate or in physical, biological, and/or social systems in response to climate change. For such findings, the Working Group I contribution often used likelihood language, while the Working Group II contribution generally used levels of confidence. For the most part, this usage of confidence and likelihood followed approach (1), but in at least some places, elements of the other three approaches appeared. To exemplify these trends, we focus here on an important subcategory of observed findings, related to detection and attribution.

Detection and attribution considers the causes of past changes in physical, biological, and social systems, with particular focus on the role of anthropogenic factors. This statement from the Working Group I SPM (IPCC 2007b) is a prominent example:

“Most of the observed increase in global average temperatures since the mid-20th century is very likely due to the observed increase in anthropogenic greenhouse gas concentrations.”

Even though Working Groups I and II used compatible definitions for detection and attribution in the AR4 (Hegerl et al. 2007; Rosenzweig et al. 2007), they adopted somewhat different approaches in characterizing the degree of certainty in detection and attribution findings, which were based on statistical as well as non-probabilistic information.

Chapter 9 of the Working Group I contribution (Hegerl et al. 2007) developed detection and attribution findings from assessment of studies that used a variety of observational data sets, models, forcings, and analysis techniques (see Mastrandrea et al. in this issue). This assessment required consideration of statistical analyses and other factors, including the number of studies, the consistency of detection and attribution results across studies, and the extent to which known uncertainties were incorporated in these studies. For the resulting findings, Hegerl et al. (2007) assigned likelihood and then further “downweighted” these likelihood assignments to account for unincorporated uncertainties, such as structural uncertainties or limited exploration of uncertain historical forcing trajectories, although the extent of downweighting was not specified. This author team thus adopted approach (2) above for the linkage between confidence and likelihood.

Chapter 1 of the Working Group II contribution (Rosenzweig et al. 2007) assessed observed changes in physical and biological systems, using levels of confidence to characterize findings about these changes and their relationship to climate change. Rosenzweig et al. (2007) then employed likelihood to characterize the findings of their detection and attribution assessment, which was based on their assessment of observed changes and more formal attribution analyses. Downweighting as introduced by Hegerl et al. (2007) was not explicitly mentioned; it therefore appears that the interpretation of the linkage between confidence and likelihood by Rosenzweig et al. (2007) was most consistent with approach (1) above.

In the Working Group II SPM (IPCC 2007d), unlike in the underlying chapter text, attribution findings were characterized by both confidence and likelihood terms, as demonstrated by the following two statements:

“A global assessment of data since 1970 has shown it is likely that anthropogenic warming has had a discernible influence on many physical and biological systems. … [T]he consistency between observed and modelled changes in several studies and the spatial agreement between significant regional warming and consistent impacts at the global scale is sufficient to conclude with high confidence that anthropogenic warming over the last three decades has had a discernible influence on many physical and biological systems.” (italics added)

Likelihood language is used to characterize the degree of certainty in the first finding, in the same way that it was used in the underlying chapter text (Rosenzweig et al. 2007). In the second statement, confidence language is used instead. This confidence assignment may have reflected usage consistent with approach (1), with confidence expressing the authors’ judgment about the correctness of the statement. Alternatively, if there was not a clear rationale for this differential use of calibrated language, the confidence and likelihood metrics may have been treated interchangeably, consistent with approach (4) above.

In sum, the Working Group I and II contributions to the AR4 adopted different interpretations of the relationship between confidence and likelihood for characterizing the degree of certainty in detection and attribution findings. The AR5 Guidance Note (Mastrandrea et al. 2010) attempts to clarify the linkage between the confidence and likelihood scales and provide a framework for more consistent usage. An IPCC Working Group I and II Expert Meeting on Detection and Attribution related to Anthropogenic Climate Change in 2009 also led to a Good Practice Guidance Paper on Detection and Attribution (Hegerl et al. 2010) for detection and attribution assessments in IPCC reports.

4.2 Projected climate changes and impacts, and relevant system properties

Many other findings in the Working Group I and II contributions to the AR4 (IPCC 2007a, c) pertained to projected changes in climate or in physical, biological, and/or social systems in response to climate change. For such findings, the Working Group I contribution generally used likelihood language, while the Working Group II contribution most often used confidence language.

The metric favored by each Working Group appropriately reflected differences in the evidence underlying findings about projected changes. In the Working Group I contribution, such findings were most often based on quantitative model projections, usually drawn from model ensemble results, which facilitated probabilistic interpretations expressible using likelihood language. In the Working Group II contribution, some findings were similarly based on quantitative model projections, but others were based partially or completely on other types of evidence less amenable to probabilistic interpretations.

Usage of the favored metrics in both contributions most closely followed approach (1) above, but differing interpretations of the linkage between confidence and likelihood again led to some differences in their use. In the Working Group I contribution, although likelihood was the metric of choice, uncertainties were treated in a variety of ways for some projections where confidence was judged to be low or to vary across different components. As one example, the Working Group I contribution used language outside the calibrated metrics, the phrase “cannot be excluded,” to convey uncertainties related to climate sensitivity, a key property of the climate system relevant to climate change projections. Based on analysis of climate models and constraints from past climate changes, the Working Group I SPM stated:

“The equilibrium climate sensitivity … is likely to be in the range 2°C to 4.5°C with a best estimate of about 3°C, and is very unlikely to be less than 1.5°C. Values substantially higher than 4.5°C cannot be excluded, but agreement of models with observations is not as good for those values.”

Meehl et al. (2007) documented the rationale for these statements, explaining that “the levels of scientific understanding and confidence in quantitative estimates of equilibrium climate sensitivity have increased substantially” since the TAR, allowing the likelihood assignments in the statement above. This discussion stopped short of an explicit assignment of confidence, although presumably the authors had a high level of confidence in the likelihood statements presented here. Meehl et al. (2007) further stated that values substantially higher than 4.5°C “cannot be excluded” due to “fundamental physical reasons as well as data limitations.” This description suggests a low level of confidence regarding any assessment of the likelihood of such values, although confidence terms were not employed and alternative language was used instead. In other cases, the Working Group I contribution used terms resembling the confidence scale to characterize judgments of the correctness of analyses, such as the “level of scientific understanding” index in the context of radiative forcing estimates (e.g., Forster et al. 2007; Denman et al. 2007; IPCC 2007b).

For findings related to projections in both the Working Group I and II contributions to the AR4 (IPCC 2007a, c), there were instances where the confidence and likelihood scales were used without clear conceptual distinction, seemingly following approach (4) above. In some of these cases, the scales were also used together to characterize individual findings, additionally following approach (3). For example, in the Working Group II contribution, some findings related to sectoral and regional projections were characterized by both confidence and likelihood. The following finding appeared in the Working Group II SPM (IPCC 2007d):

“Coasts are projected to be exposed to increasing risks, including coastal erosion, due to climate change and sea-level rise. The effect will be exacerbated by increasing human-induced pressures on coastal areas. (very high confidence)”

In the corresponding chapter Executive Summary, the two underlying findings were also characterized with very high confidence (Nicholls et al. 2007). In the TS (Parry et al. 2007), however, likelihood language was additionally introduced:

“Coasts are very likely to be exposed to increasing risks due to climate change and sea-level rise and the effect will be exacerbated by increasing human-induced pressures on coastal areas. (very high confidence)”

In this example, confidence and likelihood language were used together to characterize the degree of certainty in a finding, following approach (3). Given the statement’s form elsewhere in the Working Group II contribution, it seems that the confidence and likelihood terms here may not have characterized different aspects of uncertainty, suggesting approach (4) as well.

5 Evidence and agreement in the AR4

The Working Group III contribution to the AR4 characterized the degree of certainty in findings through the qualitative summary terms for evidence and agreement (IPCC 2007e). The Working Group III SPM indicated that the underlying scientific disciplines, which consider human choices, were viewed as incompatible with quantitative characterization of uncertainties (IPCC 2007f).

Nonetheless, the Working Group III contribution to the AR4 interpreted evaluations of evidence and agreement as conceptually linked to the quantitative metrics presented in the AR4 Guidance Notes. The Working Group III TS described “level of agreement” as “express[ing] the subjective probability of the results being in a certain realm” (Barker et al. 2007). The Working Group III SPM also described “level of agreement” as “the expert judgment of the authors of WGIII on the level of concurrence in the literature” (IPCC 2007f). These descriptions of “agreement” overlapped with confidence and likelihood as presented in the AR4 Guidance Notes (IPCC 2005).

The qualitative evidence and agreement descriptors and the quantitative confidence scale were also linked in the frameworks of both the AR4 Guidance Notes and the TAR guidance paper. For example, in the AR4 Guidance Notes, the phrase “level of confidence” was used to refer to the calibrated language for describing evidence and agreement (see paragraphs 8 and 12 of IPCC (2005)). In the TAR guidance paper (Moss and Schneider 2000), the qualitative terms for evidence and agreement were described as a “supplement,” not an “alternative,” to the quantitative confidence scale, which would allow author teams to explain their confidence assignments.

The AR4 Guidance Notes instructed use of confidence or likelihood for findings assigned high agreement, much evidence, but also stated that the qualitative evidence and agreement summary terms could be used “to express uncertainty in a finding where there is no basis for making more quantitative statements” (IPCC 2005). In evaluating the level of agreement and amount of evidence underlying findings, the Working Group III author teams were developing qualitative judgments of their level of confidence for these findings, but they did not integrate these evaluations into quantitatively calibrated confidence assignments. It also appears that the Working Group III contribution did not employ likelihood terms because the probabilistic evaluations of uncertainties that such terms require were deemed lacking. The degree of certainty in findings of the Working Group III contribution was thus characterized differently from the degree of certainty in findings in the Working Group I and II contributions.

6 The AR5 uncertainties guidance

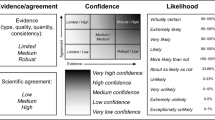

The AR5 Guidance Note (Mastrandrea et al. 2010) builds on the innovations of the previous guidance papers on uncertainties (Moss and Schneider 2000; IPCC 2005) and the successes and challenges of using calibrated uncertainty language in previous Assessment Reports. It provides a more unified and broadly applicable framework for the evidence and agreement, confidence, and likelihood scales. In addition to providing generalized advice on treatment of uncertainties, the AR5 Guidance Note more explicitly presents the relationship among evaluations of evidence and agreement, confidence, and likelihood: evidence and agreement evaluations provide the foundation for confidence assignments, and confidence evaluations underpin likelihood assignments.

The AR5 Guidance Note indicates that the first step in evaluating the validity of an assessment finding is to consider the type, amount, quality, and consistency of evidence and the degree of agreement, and then to select appropriate summary terms for evidence and agreement. Compared to previous guidance papers on uncertainties, the AR5 Guidance Note includes more explicit mention of the multiple aspects of evidence relevant to an IPCC assessment. The Guidance Note also indicates more directly when author teams should evaluate confidence or should also consider presenting probabilistic information, including likelihood, for findings (e.g., see paragraphs 8 and 11 of Mastrandrea et al. (2010)).

The AR5 Guidance Note also more clearly presents the confidence and likelihood metrics as fundamentally linked but not overlapping. Confidence is described as a qualitative metric synthesizing “author teams’ judgments about the validity of findings as determined through evaluation of evidence and agreement,” without probabilities associated with the five levels of confidence. Likelihood is presented as a subsequent option for characterizing quantitative information on uncertainties, following evaluation of confidence. In addition, author teams are encouraged to present, when available, full probability distributions or more specific probability ranges instead of likelihood terms.

In the AR5 Guidance Note, the more distinct definitions of confidence and likelihood, with the metrics characterizing different aspects of uncertainties (consistent with approach (1) above), can facilitate even more consistent presentation of findings across the Working Group contributions. For assessment of adaptation and mitigation in the Working Group II and III contributions to the AR5, the qualitatively expressed confidence scale should now be more broadly applicable for findings based on social science or other disciplines involving human choices, especially if used in conjunction with future scenarios. It should also be possible to use this qualitative confidence scale as an alternative to the qualitative level of scientific understanding index used in the Working Group I contribution to the AR4 (IPCC 2007a).

The AR5 Guidance Note continues the iterative tradition of treatment of uncertainties in Assessment Reports, with the goal of enabling author teams of all Working Groups to consistently characterize the degree of certainty in findings of the assessment process. Although the writing of the AR5 Guidance Note has been completed, application of the framework it describes is just beginning. We expect that the experiences of author teams during the preparation of the AR5 will also lead to further lessons and innovations in the treatment of uncertainties.

References

Barker T, Bashmakov I, Bernstein L, Bogner JE, Bosch PR, Dave R, Davidson OR, Fisher BS, Gupta S, Halsnæs K, Heij GJ, Kahn Ribeiro S, Kobayashi S, Levine MD, Martino DL, Masera O, Metz B, Meyer LA, Nabuurs G-J, Najam A, Nakicenovic N, Rogner H-H, Roy J, Sathaye J, Schock R, Shukla P, Sims REH, Smith P, Tirpak DA, Urge-Vorsatz D, Zhou D (2007) Technical summary. In: Metz B, Davidson OR, Bosch PR, Dave R, Meyer LA (eds) Climate change 2007: Mitigation. Contribution of Working Group III to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 25–93

Carter TR, Jones RN, Lu X, Bhadwal S, Conde C, Mearns LO, O’Neill BC, Rounsevell MDA, Zurek MB (2007) New assessment methods and the characterisation of future conditions. In: Parry ML, Canziani OF, Palutikof JP, van der Linden PJ, Hanson CE (eds) Climate change 2007: Impacts, adaptation and vulnerability. Contribution of Working Group II to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 133–171

Christensen JH, Hewitson B, Busuioc A, Chen A, Gao X, Held I, Jones R, Kolli RK, Kwon W-T, Laprise R, Magaña Rueda V, Mearns L, Menéndez CG, Räisänen J, Rinke A, Sarr A, Whetton P (2007) Regional climate projections. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: The physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 847–940

Denman KL, Brasseur G, Chidthaisong A, Ciais P, Cox PM, Dickinson RE, Hauglustaine D, Heinze C, Holland E, Jacob D, Lohmann U, Ramachandran S, da Silva Dias PL, Wofsy SC, Zhang X (2007) Couplings between changes in the climate system and biogeochemistry. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: The physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 499–587

Folland CK, Karl TR, Vinnikov KYA (1990) Observed climate variations and change. In: Houghton JT, Jenkins GJ, Ephraums JJ (eds) Climate change: The IPCC scientific assessment. Report prepared for IPCC by Working Group I. Cambridge University Press, Cambridge, pp 195–238

Forster P, Ramaswamy V, Artaxo P, Berntsen T, Betts R, Fahey DW, Haywood J, Lean J, Lowe DC, Myhre G, Nganga J, Prinn R, Raga G, Schulz M, Van Dorland R (2007) Changes in atmospheric constituents and in radiative forcing. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: The physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 129–234

Hegerl GC, Zwiers FW, Braconnot P, Gillett NP, Luo Y, Marengo Orsini JA, Nicholls N, Penner JE, Stott PA (2007) Understanding and attributing climate change. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: The physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 663–745

Hegerl GC, Hoegh-Guldberg O, Casassa G, Hoerling MP, Kovats RS, Parmesan C, Pierce DW, Stott PA (2010) Good practice guidance paper on detection and attribution related to anthropogenic climate change. In: Stocker TF, Field CB, Qin D, Barros V, Plattner G-K, Tignor M, Midgley PM, Ebi KL (eds) Meeting report of the Intergovernmental Panel on Climate Change Expert Meeting on detection and attribution related to anthropogenic climate change. IPCC Working Group I Technical Support Unit, University of Bern, Bern, pp 1–8

IPCC (1990) In: Houghton JT, Jenkins GJ, Ephraums JJ (eds) Climate change: The IPCC scientific assessment. Report prepared for IPCC by Working Group I. Cambridge University Press, Cambridge

IPCC (1996) In: Watson RT, Zinyowera MC, Moss RH (eds) Climate change 1995: Impacts, adaptations and mitigation of climate change: scientific-technical analyses. Contribution of Working Group II to the Second Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge

IPCC (2001a) In: Houghton JT, Ding Y, Griggs DJ, Noguer M, van der Linden PJ, Dai X, Maskell K, Johnson CA (eds) Climate change 2001: The scientific basis. Contribution of Working Group I to the Third Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge

IPCC (2001b) In: McCarthy JJ, Canziani OF, Leary NA, Dokken DJ, White KS (eds) Climate change 2001: Impacts, adaptation, and vulnerability. Contribution of Working Group II to the Third Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge

IPCC (2001c) In: Metz B, Davidson O, Swart R, Pan J (eds) Climate change 2001: Mitigation. Contribution of Working Group III to the Third Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge

IPCC (2001d) In: Watson R, Core Writing Team (eds) Climate change 2001: Synthesis report. A contribution of Working Groups I, II, and III to the Third Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge

IPCC (2005) Guidance notes for lead authors of the IPCC Fourth Assessment Report on addressing uncertainties. Intergovernmental Panel on Climate Change (IPCC), Geneva, Switzerland. http://www.ipcc.ch/pdf/supporting-material/uncertainty-guidance-note.pdf. Accessed 20 February 2011

IPCC (2007a) In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: The physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge

IPCC (2007b) Summary for policymakers. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: The physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 1–18

IPCC (2007c) In: Parry ML, Canziani OF, Palutikof JP, van der Linden PJ, Hanson CE (eds) Climate change 2007: Impacts, adaptation and vulnerability. Contribution of Working Group II to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge

IPCC (2007d) Summary for policymakers. In: Parry ML, Canziani OF, Palutikof JP, van der Linden PJ, Hanson CE (eds) Climate change 2007: Impacts, adaptation and vulnerability. Contribution of Working Group II to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 7–22

IPCC (2007e) In: Metz B, Davidson OR, Bosch PR, Dave R, Meyer LA (eds) Climate change 2007: Mitigation. Contribution of Working Group III to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge

IPCC (2007f) Summary for policymakers. In: Metz B, Davidson OR, Bosch PR, Dave R, Meyer LA (eds) Climate change 2007: Mitigation. Contribution of Working Group III to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 1–23

IPCC (2007g) In: Core Writing Team, Pachauri RK, Reisinger A (eds) Climate change 2007: Synthesis report. Contribution of Working Groups I, II and III to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Intergovernmental Panel on Climate Change (IPCC), Geneva

IPCC (2008) Appendix A to the principles governing IPCC work. Procedures for the preparation, review, acceptance, adoption, approval and publication of IPCC reports. Intergovernmental Panel on Climate Change (IPCC). http://www.ipcc.ch/organization/organization_procedures.shtml. Accessed 20 February 2011

Kandlikar M, Risbey J, Dessai S (2005) Representing and communicating deep uncertainty in climate-change assessments. C R Geoscience 337:443–455

Kundzewicz ZW, Mata LJ, Arnell NW, Döll P, Kabat P, Jiménez B, Miller KA, Oki T, Şen Z, Shiklomanov IA (2007) Freshwater resources and their management. In: Parry ML, Canziani OF, Palutikof JP, van der Linden PJ, Hanson CE (eds) Climate change 2007: Impacts, adaptation and vulnerability. Contribution of Working Group II to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 173–210

Le Treut H, Somerville R, Cubasch U, Ding Y, Mauritzen C, Mokssit A, Peterson T, Prather M (2007) Historical overview of climate change. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: The physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 93–127

Manning MR (2006) The treatment of uncertainties in the fourth IPCC assessment report. Adv Clim Change Res 2:13–21

Manning M, Petit M, Easterling D, Murphy J, Patwardhan A, Rogner H-H, Swart R, Yohe G (eds) (2004) IPCC workshop on describing scientific uncertainties in climate change to support analysis of risk and of options: Workshop report. Intergovernmental Panel on Climate Change (IPCC), Geneva, Switzerland. http://www.ipcc.ch/pdf/supporting-material/ipcc-workshop-2004-may.pdf. Accessed 20 February 2011

Mastrandrea MD, Field CB, Stocker TF, Edenhofer O, Ebi KL, Frame DJ, Held H, Kriegler E, Mach KJ, Matschoss PR, Plattner G-K, Yohe GW, Zwiers FW (2010) Guidance note for lead authors of the IPCC Fifth Assessment Report on consistent treatment of uncertainties. Intergovernmental Panel on Climate Change (IPCC). http://www.ipcc-wg2.gov/meetings/CGCs/index.html#UR. Accessed 20 February 2011

McBean GA, Liss PS, Schneider SH (1996) Advancing our understanding. In: Houghton JT, Meira Filho LG, Callander BA, Harris N, Kattenberg A, Maskell K (eds) Climate change 1995: The science of climate change. Contribution of WGI to the Second Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 517–531

Meehl GA, Stocker TF, Collins WD, Friedlingstein P, Gaye AT, Gregory JM, Kitoh A, Knutti R, Murphy JM, Noda A, Raper SCB, Watterson IG, Weaver AJ, Zhao Z-C (2007) Global climate projections. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: The physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 747–845

Mitchell JFB, Manabe S, Meleshko V, Tokioka T (1990) Equilibrium climate change—and its implications for the future. In: Houghton JT, Jenkins GJ, Ephraums JJ (eds) Climate change: The IPCC scientific assessment. Report prepared for IPCC by Working Group I. Cambridge University Press, Cambridge, pp 131–172

Moss RH, Schneider SH (2000) Uncertainties in the IPCC TAR: Recommendations to lead authors for more consistent assessment and reporting. In: Pachauri R, Taniguchi T, Tanaka K (eds) Guidance papers on the cross cutting issues of the Third Assessment Report of the IPCC. World Meteorological Organization, Geneva, pp 33–51, http://www.ipcc.ch/pdf/supporting-material/guidance-papers-3rd-assessment.pdf. Accessed 20 February 2011

Nicholls RJ, Wong PP, Burkett VR, Codignotto JO, Hay JE, McLean RF, Ragoonaden S, Woodroffe CD (2007) Coastal systems and low-lying areas. In: Parry ML, Canziani OF, Palutikof JP, van der Linden PJ, Hanson CE (eds) Climate change 2007: Impacts, adaptation and vulnerability. Contribution of Working Group II to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 315–356

Parry ML, Canziani OF, Palutikof JP, Co-authors (2007) Technical summary. In: Parry ML, Canziani OF, Palutikof JP, van der Linden PJ, Hanson CE (eds) Climate change 2007: Impacts, adaptation and vulnerability. Contribution of Working Group II to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 23–78

Ramaswamy V, Boucher O, Haigh J, Hauglustaine D, Haywood J, Myhre G, Nakajima T, Shi GY, Solomon S (2001) Radiative forcing of climate change. In: Houghton JT, Ding Y, Griggs DJ, Noguer M, van der Linden PJ, Dai X, Maskell K, Johnson CA (eds) Climate change 2001: The scientific basis. Contribution of Working Group I to the Third Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 349–416

Rosenzweig C, Casassa G, Karoly DJ, Imeson A, Liu C, Menzel A, Rawlins S, Root TL, Seguin B, Tryjanowski P (2007) Assessment of observed changes and responses in natural and managed systems. In: Parry ML, Canziani OF, Palutikof JP, van der Linden PJ, Hanson CE (eds) Climate change 2007: Impacts, adaptation and vulnerability. Contribution of Working Group II to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 79–131

Schneider SH, Semenov S, Patwardhan A, Burton I, Magadza CHD, Oppenheimer M, Pittock AB, Rahman A, Smith JB, Suarez A, Yamin F (2007) Assessing key vulnerabilities and the risk from climate change. In: Parry ML, Canziani OF, Palutikof JP, van der Linden PJ, Hanson CE (eds) Climate change 2007: Impacts, adaptation and vulnerability. Contribution of Working Group II to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 779–810

Solomon S, Qin D, Manning M, Alley RB, Berntsen T, Bindoff NL, Chen Z, Chidthaisong A, Gregory JM, Hegerl GC, Heimann M, Hewitson B, Hoskins BJ, Joos F, Jouzel J, Kattsov V, Lohmann U, Matsuno T, Molina M, Nicholls N, Overpeck J, Raga G, Ramaswamy V, Ren J, Rusticucci M, Somerville R, Stocker TF, Whetton P, Wood RA, Wratt D (2007) Technical summary. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: The physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 19–91

Cite this article

Mastrandrea, M.D., Mach, K.J. Treatment of uncertainties in IPCC Assessment Reports: past approaches and considerations for the Fifth Assessment Report. Climatic Change 108, 659 (2011). https://doi.org/10.1007/s10584-011-0177-7

DOI: https://doi.org/10.1007/s10584-011-0177-7