Abstract

The uncertainty language framework used by the Intergovernmental Panel on Climate Change (IPCC) is designed to encourage the consistent characterization and communication of uncertainty between chapters, working groups, and reports. However, the framework has not been updated since 2010, despite criticism that it was applied inconsistently in the Fifth Assessment Report (AR5) and that the distinctions between the framework’s three language scales remain unclear. This article presents a mixed-methods analysis of the application and underlying interpretation of the uncertainty language framework by IPCC authors in the three special reports published since AR5. First, I present an analysis of uncertainty language term usage in three recent special reports: Global Warming of 1.5°C (SR15), Climate Change and Land (SRCCL), and The Ocean and Cryosphere in a Changing Climate (SROCC). The language usage analysis highlights how many of the trends identified in previous reports—like the significant increase in the use of confidence terms—have carried forward into the special reports. These observed trends, along with ongoing debates in the literature on how to interpret the framework’s three language scales, inform an analysis of IPCC author experiences interpreting and implementing the framework. This discussion is informed by interviews with IPCC authors. Lastly, I propose several recommendations for clarifying the IPCC uncertainty language framework to address persistent sources of confusion highlighted by the authors.

1 Introduction

The Intergovernmental Panel on Climate Change (IPCC) is tasked with providing comprehensive assessments of the state of knowledge on the causes and impacts of—and responses to—climate change. In order to effectively communicate expert judgements on thousands of policy-relevant knowledge claims made in each report, the IPCC has implemented a system of calibrated uncertainty language that is designed to encourage the consistent characterization and communication of uncertainty between chapters, working groups, and reports. The IPCC uncertainty language framework has been revised or clarified before each of the last three major assessment reports. However, the decision was made to not update the framework and implementation guidelines prior to the commencement of the Sixth Assessment Report (AR6) cycle, which concludes in 2021/2022.

Despite the absence of a formal update to the framework, scholarship published in the wake of the last major assessment report (AR5) highlights how the framework was applied unevenly between working groups and chapter teams (Adler and Hirsch Hadorn 2014; Mach et al. 2017). Commentaries have also criticized the framework for lacking clarity about how authors are supposed to interpret the relationships between the framework’s three language scales: evidence/agreement, confidence, and likelihood (Aven and Renn 2015; Wüthrich 2017; Helgeson et al. 2018; Winsberg 2018; Aven 2019). However, the literature on the common challenges authors experience interpreting and applying the framework has, thus far, focused exclusively on the major assessment reports, with no consideration of the three special reports (SRs) published since AR5: Global Warming of 1.5°C (SR15) (IPCC 2018), Climate Change and Land (SRCCL) (IPCC 2019a), and The Ocean and Cryosphere in a Changing Climate (SROCC) (IPCC 2019b). These SRs constitute the latest application of the uncertainty language framework and perhaps provide the clearest indication of whether the issues raised since AR5 will be addressed in AR6.

While a significant amount of the literature on the IPCC uncertainty language framework has been written by scholars that have participated in the IPCC assessment process themselves (Adler and Hirsch Hadorn 2014), many of the criticisms are based on authors’ personal experiences or anecdotal examples of uncertainty language drawn from reports. One exception is a systematic uncertainty term usage analysis of AR4 and AR5 conducted by Mach et al. (2017), which identifies trends in the application of the framework to empirically ground a series of recommendations for improving the framework. These trends include an increase in the number of uncertainty terms per report, the growing preference for confidence terms, and the decrease in “low certainty” statements in the Summary for Policymakers (SPM).

This article extends this term usage analysis to the three most recent SRs, confirming that many of these trends identified in AR4 and AR5 continue in the SRs. While the Mach et al. analysis focuses exclusively on SPMs and chapter executive summaries, the term usage analysis presented in this article also examines the application of the uncertainty language framework in the chapter bodies, revealing key differences in language choices between report chapters and the much more widely read SPMs. This finding suggests that the uncertainty language framework is applied differently in different parts of the reports by the same authors.

While the term usage analysis provides a picture of how the uncertainty language framework is being applied, I then present findings from interviews with IPCC coordinating lead authors (CLAs) and lead authors (LAs) on their interpretation of the framework and other decisions that underpin these trends. Author experiences confirm the claim made in the literature that inconsistencies in the usage of the confidence and likelihood scales stem from two fundamentally different interpretations of the framework (Wüthrich 2017). They also highlight how many of the idiosyncratic applications of the framework and inconsistencies between chapters and reports reflect factors such as time pressure, the absence of effective oversight, and the lack of emphasis placed on uncertainty language relative to other tasks.

Recent scholarship in the risk science literature advocates a more fundamental overhaul of the framework, criticizing the IPCC uncertainty language framework for possessing a somewhat convoluted interpretation of probability (Aven and Renn 2015; Aven 2019). While acknowledging the importance of a consistent and scientifically rigorous understanding of probability, here I attempt to sidestep these epistemological debates and analyze the application of the IPCC uncertainty language framework on its own terms. My primary aim is to provide pragmatic recommendations to clarify and improve the application of the existing framework, while acknowledging the importance of reenergizing the conversation about amending the framework going forward.

This article is organized as follows. Section 2 summarizes the literature on the IPCC uncertainty language framework to date, focusing on recent scholarship addressing the implementation of the system during the production of AR5. Section 3 presents the results from the uncertainty term usage analysis for SR15, SRCCL, and SROCC, reinforcing a number of key questions about how the framework is interpreted and applied by IPCC authors. Section 4 seeks to answer these questions by analyzing the recent experiences of IPCC authors implementing the framework. Section 5 concludes by proposing several recommendations for clarifying and amending the framework to address persistent sources of confusion highlighted by the authors.

2 The IPCC uncertainty language framework

2.1 Evolution of the framework

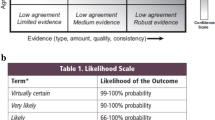

Several articles have chronicled the history of the IPCC uncertainty language framework in detail (e.g., Swart et al. 2009; Mastrandrea and Mach 2011), including the initial development of the framework leading up to the third assessment report (TAR) (Moss and Schneider 2000), as well as subsequent refinements and clarifications for AR4 (IPCC 2005) and AR5 (Mastrandrea et al. 2010). The framework emerged from observations that chapter teams adopted a plurality of approaches to characterize and communicate uncertainty in the first and second assessment reports, leading to calls for a more systematic approach that could be applied across chapters and working groups. The most recent iteration of the framework provides three distinct but related scales that authors can use to qualify uncertain knowledge claims (Fig. 1).

The evidence/agreement scale allows authors to separately assess the type, amount, and quality of the evidence base supporting a claim and the level of scientific agreement in the literature (both on three-point scales). The framework then specifies that evidence and agreement statements be presented together (e.g., “limited evidence, medium agreement”). The guidance note provides some suggestions for how to evaluate evidence and agreement—however, these assessments are highly dependent on the specific context of the topic area and research landscape—and are thus left largely to the expert judgement of the authors.

The five-point confidence scale is closely tied to the evidence/agreement scale. According to the guidance note: “Confidence in the validity of a finding [is] based on the type, amount, quality, and consistency of evidence and the degree of agreement” (Mastrandrea et al. 2010). According to this definition, confidence statements are derived from integrating the evidence and agreement assessments. For example, a “limited evidence, low agreement” assessment might be translated as “low confidence.” However, as illustrated in the three-by-three matrix in Fig. 1, there are a number of evidence/agreement combinations (like “medium evidence, high agreement”) where it is not entirely clear how to translate between the scales.

Lastly, the likelihood scale is used to communicate quantified, probabilistic assessments of uncertainty produced by statistical or modeling analyses, or formal expert elicitation methods. The scale provides 10 likelihood terms that are each attached to a specific probability interval (Fig. 1). In the philosophy of science and risk science literature, the term “likelihood” tends to be used broadly to describe any situation characterized by uncertainty—whether uncertainty can be quantified or not. The IPCC’s framework adopts an unusually narrow interpretation of the term, using likelihood as a strictly quantitative concept that is measured and communicated using (largely frequentist) probabilities.
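To make the structure of the likelihood scale concrete, the ten terms can be written as a simple lookup table. The following Python sketch is illustrative only and is not part of the IPCC guidance: the probability intervals are transcribed from the likelihood table in the guidance note (Mastrandrea et al. 2010), while the names `LIKELIHOOD_INTERVALS`, `interval_width`, and `terms_consistent_with` are hypothetical helpers introduced here.

```python
# Probability intervals (in percent) for the ten calibrated likelihood terms,
# transcribed from the AR5 guidance note (Mastrandrea et al. 2010).
LIKELIHOOD_INTERVALS = {
    "virtually certain": (99, 100),
    "extremely likely": (95, 100),
    "very likely": (90, 100),
    "likely": (66, 100),
    "more likely than not": (50, 100),  # lower bound is strict (>50%)
    "about as likely as not": (33, 66),
    "unlikely": (0, 33),
    "very unlikely": (0, 10),
    "extremely unlikely": (0, 5),
    "exceptionally unlikely": (0, 1),
}

# Terms whose lower bound excludes the boundary value itself.
STRICT_LOWER_BOUND = {"more likely than not"}


def interval_width(term):
    """Width of a term's probability interval; smaller means more precise."""
    low, high = LIKELIHOOD_INTERVALS[term]
    return high - low


def terms_consistent_with(probability_pct):
    """Return every term whose interval contains the probability, narrowest first."""
    matches = []
    for term, (low, high) in LIKELIHOOD_INTERVALS.items():
        above_low = low < probability_pct if term in STRICT_LOWER_BOUND else low <= probability_pct
        if above_low and probability_pct <= high:
            matches.append(term)
    return sorted(matches, key=interval_width)


print(terms_consistent_with(92))
```

Because the intervals are nested rather than mutually exclusive, a single probability is usually consistent with several terms. This is why the choice among them is itself a judgement about how precise a claim the author is willing to make.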

However, recent scholarship argues that the IPCC framework is built upon a somewhat convoluted interpretation of probability (Aven and Renn 2015; Aven 2019).Footnote 1 The guidance note specifies that “confidence should not be interpreted probabilistically” (Mastrandrea et al. 2010, pp. 3). However, according to the subjective (i.e., Bayesian) interpretation of probability, which has emerged as the dominant paradigm in most scientific fields today (Morgan 2014), a decision maker’s subjective assessment of their confidence (or degree of belief) in the validity or “truthfulness” of a knowledge claim is, in fact, the very definition of probability. From this perspective, it is not clear why confidence statements cannot also be quantified and expressed probabilistically (Aven and Renn 2015). More fundamentally, some argue that the frequentist and subjectivist interpretations of probability are based on incompatible theories of knowledge and any attempt to reconcile them is bound to create confusion (Nearing et al. 2016). However, here I sidestep the ongoing epistemological debate and attempt to analyze the IPCC uncertainty language framework on its own terms, with the goal of providing pragmatic recommendations that may be applied in the short term, while acknowledging that more significant amendments may still be necessary in the future.

From its inception, the uncertainty language framework was viewed as an iterative process subject to ongoing improvements, with the first guidance note stating: “guidelines such as these will never truly be completed” (Moss and Schneider 2000, pp. 34). The decision to not amend the framework between AR5 and AR6 was intentional, reflecting a desire to maintain consistency between the two reports.Footnote 2 While there have been no recent updates to the framework, commentary on the application of the framework in AR5 echoes many of the same critiques leveled at the application of the framework in AR4—namely that the framework was applied inconsistently across chapter teams and working groups (Adler and Hirsch Hadorn 2014; Mach et al. 2017) and confusion persists around the three-scale framework itself (Aven and Renn 2015; Wüthrich 2017; Helgeson et al. 2018; Borges de Amorim and Chaffe 2019). Other prominent critiques like the framework’s poor treatment of the concepts of risk and surprise (Aven and Renn 2015; Aven 2019) and concern that the framework no longer reflects the evolving purpose of the IPCC assessment process (Oppenheimer et al. 2007; Kowarsch and Jabbour 2017; Beck and Mahony 2018) are beyond the scope of this paper.

2.2 The relationship between the evidence/agreement, confidence, and likelihood scales

According to the uncertainty framework guidance, confidence assessments are made by integrating the quantity and quality of the underlying evidence base with the level of agreement in the literature using a three-by-three matrix (Fig. 1). However, the framework is far less clear about when and when not to translate evidence/agreement statements into confidence statements (Wüthrich 2017). Also, if specific combinations of evidence/agreement terms directly translate into confidence terms, it is not apparent why there need to be two separate scales at all.

In a follow-up article to the most recent uncertainty language guidance note, two of the framework’s authors offer a further explanation of how to deploy the two scales together, which seems to justify the existence of two separate scales that communicate similar information. They suggest that confidence language should be used “[f]or findings associated with high agreement and much evidence or when otherwise appropriate” (Mastrandrea and Mach 2011, pp. 663). The guidance note itself specifies that “the presentation of findings with ‘low’ and ‘very low’ confidence should be reserved for areas of major concern” (Mastrandrea et al. 2010, pp. 3). Combining these two guidelines, it appears that stronger evidence/agreement terms should always be translated into confidence language, while weaker evidence/agreement terms should only be translated when the claim is particularly salient to policy makers. However, the terms “robust evidence, high agreement” and “low confidence” frequently appear in the chapters of IPCC reports, a practice that is shaped by “somewhat arbitrary working-group and disciplinary preferences” (Mach et al. 2017, pp. 9).

Meanwhile, the relationship between the confidence and likelihood scales has received considerably more attention in the literature (Committee to Review the IPCC 2010; Curry 2011; Jonassen and Pielke 2011; Jones 2011; Mastrandrea and Mach 2011; Mach et al. 2017; Helgeson et al. 2018). The nature of the confusion around how to distinguish between the confidence and likelihood scales and how they fit together has been most effectively described by Wüthrich (2017) who identifies two dominant interpretations of the relationship in IPCC assessment reports, which he labels the substitutional and non-substitutional interpretations.

The substitutional interpretation sees both the confidence and likelihood scales as providing the same basic information—that is, a measurement of the validity or “truthfulness” of the knowledge claim or finding. Translated into Bayesian terms, both scales communicate the assessor’s degree of belief that the claim accurately reflects the “real world.” From this perspective, an author uses likelihood language when uncertainty has been formally quantified by a statistical analysis, model, or expert elicitation—and qualitative confidence language when there is a lack of quantitative evidence. But the two scales are essentially substitutable, despite the fact that the likelihood scale is generally used to describe frequentist probabilities and the confidence scale is used to describe subjective probabilities. A “very high confidence” statement can be considered to be equivalent to a likelihood statement of “extremely likely” or “very likely,” with the only difference between the two scales being the nature of the evidence informing the assessment. This perspective reflects the version of the uncertainty language framework used in AR4, which attached quantitative indicators to the qualitative confidence labels like “about 8 out of 10 chance” (IPCC 2005). From this perspective, an author might address the issue of model unreliability or structural uncertainty by “downgrading” a likelihood term with a more precise probability interval (e.g., “very likely”) to a term with a less precise interval (e.g., “likely”).

The non-substitutional interpretation, which Wüthrich suggests is used in the majority of cases, only sees confidence as an assessment of the validity or truthfulness of a finding. From this perspective, likelihood language should only be used to refer to an outcome from a specific statistical study or model (or ensemble of models) that produces probabilities. For example, the statement “Between 1979 and 2018, Arctic sea ice extent has very likely decreased for all months of the year” (IPCC 2019b, pp. 4) simply means that a specific study showed that Arctic sea ice has decreased in 90–100% of observations or model runs. The finding from the study is but one piece of evidence which may (or may not) influence an author’s assessment of the validity of the claim (i.e., their confidence assessment). It is conceivable that the model was poorly constructed or there is high structural uncertainty and as a result, an assessor will have low confidence in the finding despite the fact that the study assigned a high probability value to the outcome. Therefore, according to the non-substitutional interpretation, a likelihood assessment is not an assessment at all but the presentation of a specific probabilistic finding. In Wüthrich’s language, confidence statements are “meta-judgements” while likelihood statements are “intra-finding judgements.”

Winsberg (2018) proposes a similar interpretation of the confidence/likelihood distinction, suggesting that likelihood terms be understood as “first-order probabilistic claims” (based on statistical and modeling analyses), while confidence terms are “second-order probabilistic claims” that describe the “resilience” of the likelihood assessment (i.e., the quality of the statistical and modeling analyses)—despite the fact that the IPCC uncertainty language guidance specifically cautions authors to not interpret confidence statements probabilistically.

According to implementation guidance written by several authors of the framework (Mastrandrea et al. 2011), likelihood statements should be used when confidence is high or very high, which some commentators have interpreted to mean that lone likelihood statements contain implicit (high) confidence assessments in the quality of the model (Mastrandrea and Mach 2011; Helgeson et al. 2018). The implication is that authors are discouraged from communicating findings from modeling studies when they have medium or low confidence in the quality of the model. If this interpretation is correct, then we can assume that when confidence and likelihood statements are paired together, the confidence statement refers to the knowledge claim, and the author’s high confidence in the quality of the model is implicit.

Consider, again, the statement about the observed decrease in Arctic sea ice. Adopting the substitutional interpretation, the assessment of the validity of the finding is straightforward: the term “very likely” is equivalent to having “very high confidence” that Arctic sea ice has decreased in all months of the year. Therefore, the term “very likely” is both the output of a model and the author’s assessment of the validity of the finding.

But from the non-substitutional interpretation, “very likely” simply means that one model generated the probability interval 90–100%. And therefore, the author is still responsible for assessing the validity of that claim using confidence language. One might argue that if an author is highlighting a particular study or model that makes such a bold claim, then clearly they should have high confidence in not only the quality of the model but in the finding itself. The implication is that all likelihood statements carry not one but two implicit (high) confidence assessments. However, this relationship is far less clear with the likelihood statement “more likely than not” that describes a much wider probability interval (50–100%). It seems far less obvious that an author would have high confidence in a finding supported by such an imprecise probability interval—even if they had high confidence in the quality of the model.

The main takeaway from these critiques is that there continues to be confusion over the relationships between the three scales. So far, the literature highlighting these issues has generally appealed to anecdotal examples of how the scales have been used in IPCC reports. However, a more systematic, empirical analysis of uncertainty language usage—like the study conducted by Mach et al. (2017)—provides a much firmer basis for recommendations for improving the framework. The following section presents an analysis of uncertainty term usage in the three most recent SRs, highlighting the key trends in language usage since AR4.

3 Uncertainty term usage in IPCC special reports

This section analyzes the use of the designated uncertainty terms in the SR15, SRCCL, and SROCC,Footnote 3 building off a study conducted by Mach et al. (2017) comparing uncertainty language usage in AR4 and AR5. The language usage trends discussed in this section are investigated further in Section 4, which presents findings from interviews with IPCC authors on their experiences interpreting and applying the framework in recent SRs.

3.1 Methods of analysis

Major assessment reports like AR4 and AR5 are composed of three sub-reports produced by the IPCC’s three working groups tasked with addressing different components of the climate change issue: the physical science basis (WGI); impacts, adaptation, and vulnerability (WGII); and mitigation (WGIII). Working group sub-reports are made up of approximately 15 to 30 chapters produced by separate author teams, as well as an SPM that presents the key findings from all chapters. By comparison, the SRs contain no sub-reports and include a mixture of new authors and authors that previously contributed to WGI, WGII, and WGIII. The SRs are significantly shorter than the major assessments, ranging from just five to seven chapters in length (plus an SPM).

In addition to comparing uncertainty language usage in the SPMs and chapter executive summaries, I also analyzed the uncertainty statements made in chapter bodies. Incorporating uncertainty statements from the chapter bodies significantly increases the total number of uncertainty statements in the analysis and provides insight into the decision-making of author teams as they determine which knowledge claims (and associated uncertainty statements) to “elevate” to the executive summaries and SPM, which are read by a much larger audience.

For each chapter body, chapter executive summary, and SPM, I tabulated the number of unique instances of evidence/agreement, confidence, and likelihood terms. I analyzed specific terms according to the rate at which they were used (i.e., number of unique instances per page) and according to their proportion of the total number of uncertainty terms used in each chapter and report.
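The tabulation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure actually used for this analysis: it assumes plain-text input, counts a paired "X evidence, Y agreement" statement as a single instance, and uses hypothetical names (`CONFIDENCE`, `LIKELIHOOD`, `EVIDENCE_AGREEMENT`, `tabulate`). It also cannot distinguish calibrated (italicized) terms from everyday uses of words such as "likely," which a careful count would need to check by hand.

```python
import re
from collections import Counter

CONFIDENCE = ["very low confidence", "low confidence", "medium confidence",
              "high confidence", "very high confidence"]
LIKELIHOOD = ["virtually certain", "extremely likely", "very likely", "likely",
              "more likely than not", "about as likely as not", "unlikely",
              "very unlikely", "extremely unlikely", "exceptionally unlikely"]
# Assumption: an evidence term, optionally followed by an agreement term,
# counts as one evidence/agreement statement.
EVIDENCE_AGREEMENT = (r"(?:limited|medium|robust) evidence"
                      r"(?:,? (?:and )?(?:low|medium|high) agreement)?")


def _alternation(terms):
    # Longest phrases first, so "very likely" is never counted as "likely".
    return "|".join(re.escape(t) for t in sorted(terms, key=len, reverse=True))


PATTERN = re.compile(
    r"\b(?:(?P<confidence>" + _alternation(CONFIDENCE) + r")"
    r"|(?P<likelihood>" + _alternation(LIKELIHOOD) + r")"
    r"|(?P<evidence_agreement>" + EVIDENCE_AGREEMENT + r"))\b",
    re.IGNORECASE,
)


def tabulate(text, pages):
    """Count terms per scale, then derive per-page rates and proportional shares."""
    counts = Counter(m.lastgroup for m in PATTERN.finditer(text))
    total = sum(counts.values())
    return {
        scale: {
            "count": counts[scale],
            "per_page": counts[scale] / pages,
            "share": counts[scale] / total if total else 0.0,
        }
        for scale in ("evidence_agreement", "confidence", "likelihood")
    }


sample = ("Sea ice has very likely decreased (high confidence). Impacts are "
          "unlikely to reverse (limited evidence, medium agreement; very high confidence).")
result = tabulate(sample, pages=2)
print(result)
```

The per-page rate and the proportional share answer different questions: the first measures how densely a text is qualified, the second how authors divide their qualifications among the three scales.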

I also analyzed the frequency with which authors used confidence and evidence/agreement terms of various “certainty levels.” Following the criteria outlined by Mach et al. (2017), terms were grouped into three categories: low certainty, medium certainty, and high certainty. Lastly, I analyzed working group participation of SR CLAs and LAs in major assessment reports using the IPCC author database (IPCC 2019c), which includes author information for AR4, AR5, and AR6.

3.2 Results and discussion of uncertainty language trends in IPCC special reports

The overall number of uncertainty terms appearing in SPMs has steadily increased from AR4 (an average of 4.3 terms per page) to the two most recent SRs published in 2019 (11.0 and 10.8 terms per page in the SRCCL and SROCC). Figure 2a shows the proportional usage of the evidence/agreement, confidence, and likelihood scales for the SPMs of the three SRs and compares them to the SPM of each working group for AR4 and AR5 based on data from Mach et al. (2017). Much of the analysis conducted by Mach et al. compares the language use of the three working groups. However, SR15, SRCCL, and SROCC all have a mixture of CLAs and LAs with previous WGI, WGII, and WGIII experience.Footnote 4 While a significant proportion of the CLAs and LAs from each SR did not participate in AR4, AR5, or AR6 (SR15, 23%; SRCCL, 51%; SROCC, 61%), WGII is the most represented working group for all three SRs, followed by WGIII, and then WGI (Fig. 2c).

Fig. 2 a Comparison of proportional usage of uncertainty language scales in SPMs (data for AR4 and AR5 from Mach et al. 2017). b Comparison of proportional usage of uncertainty language scales in chapter bodies, executive summaries, and SPMs. c AR4, AR5, and AR6 working group participation by lead authors of special reports. d Proportion of low, medium, and high certainty terms in the SROCC

The combination of working groups in the SRs makes it difficult to track some of the observations made by Mach et al., such as WGI’s higher use of likelihood terms than WGII and WGIII in both AR4 and AR5. One might expect that SR15, which has the most WGI authors of the three SRs, would also contain a significant number of likelihood statements. However, likelihood statements make up a mere 6.4% of all uncertainty statements in the SR15 SPM. Upon closer inspection of the underlying chapter bodies, 71% of all likelihood language in SR15 is found in chapter 3, which has nearly half (42%) of all the WGI authors in the entire report. This concentration is consistent with the observed higher rate of usage of likelihood language by WGI authors in AR4 and AR5.

The proportional usage of the three uncertainty language scales in the SPMs of SR15, SRCCL, and SROCC (Fig. 2a) is consistent with the observed increase in the proportional use of confidence language across all working groups from AR4 to AR5. The proportional term usage profiles for the SPMs of the three SRs are also quite similar to one another, with confidence language accounting for 79.4%, 93.6%, and 98.5% of uncertainty language in the SR15, SRCCL, and SROCC SPMs respectively. The proportional term usage in the SRs also closely resembles the term usage in the WGII SPMs for both AR4 and AR5, which should not be surprising considering the large WGII representation in all three SRs (Fig. 2c).

While the use of evidence/agreement statements in AR4 and AR5 SPMs fluctuates considerably between reports and working groups, it has almost disappeared in the SR SPMs. Not a single evidence/agreement statement appears in the SR15 SPM, while evidence/agreement terms make up just 1.5% and 0.5% of all uncertainty terms in the SRCCL and SROCC SPMs respectively. However, the use of the evidence/agreement scale is still prevalent in the underlying chapter bodies of the SRs. In fact, use of the evidence/agreement scale declines progressively from the chapter bodies to the executive summaries to the SPM (Fig. 2b). The same pattern can be observed with “low certainty” evidence/agreement and confidence terms, which are fairly prevalent in chapter bodies but nearly disappear in executive summaries and SPMs. This trend is illustrated for the SROCC in Fig. 2d.

The term usage analysis also reveals variation in the application of the uncertainty language framework between chapters within the same report. Figure 3a, b, and c show the proportional usage of the evidence/agreement, confidence, and likelihood scales for each chapter of the SR15, SRCCL, and SROCC, as well as the per page usage of uncertainty terms in the chapter bodies. One chapter in SR15 (ch. 5) and one chapter in SROCC (ch. 5) contain approximately twice as many uncertainty terms per page as the next most language-dense chapter. The common thread between these two chapters is the extensive use of figures and tables to communicate large numbers of evidence/agreement and confidence statements that are not replicated in the chapter text. The use of colors, shadings, and symbols in figures and tables to convey uncertainty statements appears to be a relatively recent innovation in IPCC assessment reports.

Fig. 3 a–c Proportional usage of evidence/agreement, confidence, and likelihood scales in SR15, SRCCL, and SROCC chapter bodies. The total number of uncertainty terms is represented by the unbracketed number above each column. The number of uncertainty terms per page is represented by the bracketed number above each column

Looking beyond the chapter executive summaries to their underlying chapter bodies reveals many unusual applications of the uncertainty language framework by specific chapter teams. One example of idiosyncratic language use is the unintentional use of unbracketed and unitalicized likelihood language. For example, chapter 5 of the SRCCL states: “… large shifts in land-use patterns and crop choice will likely be necessary to sustain production growth and keep pace with current trajectories of demand” (IPCC 2019a, pp. 5–28). Here, the term “likely” does not seem to be attached to a particular probability interval or finding from a modeling study, which could confuse readers who are instructed to interpret likelihood terms as assessments of quantitative evidence.

Lastly, there are also many examples where uncertainty statements are combined or integrated when moving from the chapter body to the executive summary. In these situations, two distinct but related knowledge claims presented in a chapter body—each with their own uncertainty term—appear to be integrated into a single knowledge claim with a single uncertainty term in the executive summary. The integration of two specific claims into a broader knowledge claim can be considered a “meta-assessment,” whereby the assessor assigns an uncertainty term (typically a confidence term) that applies to the aggregated evidence and agreement of the two sub-claims. This practice is common across many SR chapters and reveals an unexplored dimension of the IPCC assessment process.

3.3 Conclusions

The term usage analysis of SR15, SRCCL, and SROCC reinforces a number of trends seen in AR4 and AR5, including the overall increase in uncertainty terms per report, working group-specific preferences for particular uncertainty language scales, and the emergence of confidence language as the dominant scale for communicating uncertainty in IPCC assessment reports. Additionally, it reveals a number of other interesting findings that could indicate how the framework is being applied by IPCC authors in the preparation of AR6. These findings include the following: the disappearance of evidence/agreement statements in SPMs (despite their continued use in chapter bodies and executive summaries); the use of color, shading, and symbols in figures and tables by particular chapter teams to communicate large amounts of uncertainty statements; and the meta-assessment of multiple uncertainty statements as findings are passed up from chapter bodies to executive summaries and the SPM.

While the term usage analysis points to several interesting trends, it does not provide much insight into the decisions, factors, and interpretations underlying them. The following section builds off this analysis by describing the recent experiences of IPCC authors interpreting and applying the uncertainty language framework.

4 Author experiences using the uncertainty language framework

This section explores the experiences of IPCC authors applying the uncertainty language framework during the production of the two most recent SRs: the SRCCL and SROCC. The discussion is informed by a series of semi-structured interviews conducted with CLAs and LAs from chapters 4 and 6 of the SRCCL and chapters 2 and 5 of the SROCC, as well as members of the IPCC Bureau responsible for training and supporting authors on the implementation of the framework (N = 14; see note 5). Interviewees were asked to reflect on the language usage trends identified in Section 3, why the framework is applied inconsistently within and across chapter teams, and how they interpret the relationships between the three language scales.

The interviews were conducted following the approval of each report’s SPM but before trickleback revisions (see note 6) and final copy-edits were applied to individual chapters. Chapter 6 of the SRCCL and chapter 5 of the SROCC were both selected because of their extensive use of color-coded tables to communicate large amounts of calibrated uncertainty language. The other two chapters were selected because they reflect the “typical” or “average” chapter in each report in terms of their use of uncertainty language.

4.1 Decisions and dynamics underpinning language usage trends

Interviewees were unsurprised by the observed trend towards greater use of the confidence scale in recent IPCC assessment reports. They attributed the decrease in likelihood language in the SRCCL and SROCC to the nature of the relevant evidence base for most of the topics covered by the two reports and the relative lack of WGI authors. For instance, one author stated:

WGI tends to use a lot of likelihood statements because they lean on models producing numerical outputs. But I work in the mitigation space where a lot of what we’re looking at is not numerical – we’re looking at things like sustainability, social impacts, and gender influences where it is more appropriate to use levels of evidence and agreement, or to boil those down into confidence statements.

However, authors tended to attribute the decline in evidence/agreement language (and the related increase in confidence language) in the SPM to the tendency of chapter teams to elevate a greater proportion of higher certainty claims into the executive summary and SPM. This explanation would suggest that authors are following the recommendation in the guidance to translate claims with strong evidence and agreement into confidence language. The significantly higher proportion of medium and high certainty terms in the SPM and chapter executive summaries compared with the underlying chapter bodies (Fig. 2d) supports this explanation as well. While authors were not expressly discouraged from using evidence/agreement or “low confidence” qualifiers in the SPM, all interviewees supported the notion that SPMs should largely highlight areas where scientific knowledge is most robust, rather than highlighting knowledge gaps.

Commenting on how SPMs rarely highlight low certainty claims, one interviewee described their thought process for determining which findings should be proposed for the SPM, saying: “We often perform a reality check and say: ‘if this is really a low confidence issue, why are we having it in the SPM?’ – because space is really at such a premium.” Another author described how demand-side pressure for higher certainty claims from policy makers also plays a role in the disproportionately high number of high certainty (and confidence) statements in the SPM:

We only want the most robust messages in the SPM because we have to go through government approval – and if we have a whole bunch of low confidence statements in there, the governments can rightly ask, “if we have low confidence in this, why are you telling us about it? Tell us the things you know, not the things you don’t know.”

When asked whether it is important for scientific assessments to also present important knowledge gaps, authors tended to point towards the IPCC’s stated purpose of supporting policy makers, suggesting that pressing policy decisions are better supported with high certainty claims than with identified knowledge gaps. However, there was also recognition that in certain situations, low certainty statements may be highlighted in the SPM. These situations tend to involve claims that are of particular interest to policy makers, and their inclusion must be vigorously defended by the author.

The observation in the language usage analysis that author teams appear to be conducting meta-assessments, where multiple claims from the chapter body are combined into a broader claim with a single uncertainty statement in the executive summary or SPM, was confirmed by interviewees as a “common practice.” One author noted that these meta-assessments were particularly important when combining knowledge claims from multiple chapters in the SPM and that their team was very careful to maintain a transparent “line of sight” between the meta-assessment and the underlying evidence in the chapter body.

Large color- and symbol-coded tables and figures are used in both chapter 6 of the SRCCL and chapter 5 of the SROCC to communicate a large number of knowledge claims and their associated uncertainty assessments. Tables illustrating the interactions between various systems and variables use colors, shadings, and symbols to communicate the strength or direction of those interactions, as well as assessments of the uncertainty associated with each relationship. Authors of these chapters explained that the primary purpose of using tables (as opposed to merely describing each relationship in the text) is to communicate the comparative nature of the analysis. However, authors contributing to these chapters also expressed a desire for more guidance on visual communication. While an external design firm is brought in to improve and harmonize figures, diagrams, and tables in the SPM, an interviewee commented that the authors are mostly “winging it” in the chapter bodies.

The unintentional or colloquial use of likelihood terms was also identified as a common problem that is surprisingly difficult to recognize and avoid:

There were several times in text drafting where my natural preference was to summarize the balance of evidence as either “unlikely” or “likely.” However, it would have been inappropriate for me to do so, since these terms need to be used in a precise, quantitative way. Thus, rewording had to be along the lines of “evidence is unconvincing that…” or “it is expected that…”

Other examples of idiosyncratic language use such as lone evidence or agreement terms were dismissed by interviewees as reflecting either “sloppiness” stemming from time constraints or “a lack of proper training.” Interviewees appealed to the significant time constraints built into the IPCC assessment process more than any other factor for explaining the causes of inconsistent language usage. The vast majority of authors conduct IPCC-related work above and beyond their normal professional duties as academics, researchers, or government scientists and spend hundreds of hours writing, reviewing comments, and approving text on evenings and weekends. These time constraints are magnified for SRs, which are prepared on much shorter timelines than the major ARs, with one interviewee suggesting that the most recent SRs “do not necessarily reflect the latest implementation of the IPCC uncertainty guidelines but the implementation of the guidelines under pressure.”

A number of interviewees also pointed to the training process as an area that might be improved going forward. Several authors that had participated in previous IPCC major ARs commented that the presentations delivered at each of the first three lead author meetings for the SRCCL and SROCC were a significant improvement over previous assessment cycles. However, one suggestion echoed by multiple interviewees was to conduct a series of chapter-specific practice examples with each author. Other suggestions focused on the support and oversight that authors receive while developing the chapter text. One author suggested that it would be helpful to have better editing or “policing” in the final stages of revision from an individual outside of the chapter team—but acknowledged the already immense workload of co-Chairs. Another interviewee suggested that perhaps the chapter’s review editors—whose main responsibility is ensuring that authors address all submitted comments—might also play a bigger role in monitoring the implementation of the uncertainty framework.

A final factor that was pointed to as an underlying cause of the inconsistent or “sloppy” application of the framework was the lack of emphasis that uncertainty language received relative to more pressing tasks like meeting deadlines and ensuring that chapter sections comprehensively address the literature. This sentiment was particularly evident in comments about how uncertainty qualifications were sometimes left to the very end of the writing process, as well as comments about how deliberations at SPM approval meetings rarely focus on specific uncertainty terms.

4.2 Translating between the three scales

4.2.1 When to use uncertainty language

Nearly all interviewees expressed support for the uncertainty language framework as a reasonably effective system for characterizing and communicating uncertainty (see note 7). However, several authors commented that while the framework is strong “on paper,” a number of issues arise during its implementation. One example is the issue of determining when a knowledge claim requires an uncertainty statement versus merely providing citations. Nearly every sentence in an IPCC assessment report relates to the behavior of physical and social systems. Therefore, authors must decide if each sentence is merely a statement of widely agreed-upon fact (requiring no supporting evidence or uncertainty statement), a policy-relevant knowledge claim (requiring an uncertainty statement), or a sub-claim made in the literature that supports the broader policy-relevant claim (requiring the citation of supporting evidence).

Interviewees describe these decisions as highly subjective. For example, one author asked: “Where is the boundary between ‘established fact’ and ‘very high confidence’?” A few authors alluded to a hierarchy of claims, where more specific claims made in the literature, such as particular numbers, were given citations, and more general claims that were supported by such numbers were qualified with uncertainty language. One interviewee explained how decisions about when to apply uncertainty language may depend on the geographical scale of the claim:

For a global phenomenon like global sea level rise, it is extremely important that you have uncertainty language so you can document exactly how and why you arrived at that conclusion. But as you make it a finer and finer resolution issue, it becomes a fractal problem – the question about uncertainty for specific ecosystems should often be answered with “it depends.”

Others appealed to “rules of thumb” to help guide their decision-making about which sentences to assign uncertainty qualifiers. One author said they had received strong encouragement from the chapter’s CLA to conclude each section with a sentence that has uncertainty language in it. Others described how their chapter teams decided to omit uncertainty statements at first and then retrofit their sections once they had a better idea of which findings would be pushed up into the chapter’s executive summary, applying uncertainty language only to these higher visibility claims.

4.2.2 Evidence/agreement vs. confidence

Author testimony confirms the claim made throughout the literature that the distinctions between the three language scales are interpreted differently by different chapter teams, and even by different authors within the same chapter team. As discussed in Section 2.2, the framework is somewhat vague about when to use evidence/agreement statements and when to translate them into confidence statements. Author responses to questions about when and why they deployed the evidence/agreement scale versus the confidence scale reveal two main interpretations of the relationship between the two scales.

The first interpretation, which was shared by the majority of interviewees, sees the relationship as more or less formulaic in nature. According to the formulaic interpretation, every evidence/agreement statement has a “proper” corresponding confidence statement as defined by the three-by-three matrix in the guidance note (Fig. 1). For uncertainty statements with matching levels of evidence and agreement like “medium evidence, medium agreement,” the conversion is straightforward (“medium confidence”), while a statement like “limited evidence, high agreement,” can be converted using the matrix to “medium confidence.” However, some judgement must be exercised for ambiguous terms like “robust evidence, medium agreement.”
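The formulaic interpretation can be illustrated with a small lookup sketch. The Python below encodes only the conversions mentioned in the paragraph above. The names `CONVERSIONS`, `AMBIGUOUS`, and `to_confidence`, and the choice to return `None` for any pair that is ambiguous or not listed, are illustrative assumptions rather than part of the IPCC guidance note, and the full three-by-three matrix in Fig. 1 is not reproduced here.

```python
from typing import Optional

# Conversions named in the text above. Other cells of the matrix are
# deliberately left out because this sketch does not reproduce Fig. 1.
CONVERSIONS = {
    ("medium", "medium"): "medium confidence",
    ("limited", "high"): "medium confidence",
}

# Combinations the text describes as ambiguous, requiring author judgement.
AMBIGUOUS = {("robust", "medium")}


def to_confidence(evidence: str, agreement: str) -> Optional[str]:
    """Return the confidence term for an evidence/agreement pair.

    Returns None when the pair is ambiguous or not covered by this sketch,
    signalling that the author must exercise judgement.
    """
    key = (evidence.lower(), agreement.lower())
    if key in AMBIGUOUS:
        return None
    return CONVERSIONS.get(key)
```

Under the non-formulaic interpretation discussed below, a lookup of this kind would serve only as a starting point, since the translation is not treated as purely algorithmic.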

Authors expressing the formulaic interpretation employed one of two strategies to determine which scale to use while writing the report. First, a number of authors chose to translate all non-ambiguous evidence/agreement statements into confidence statements and only use the evidence/agreement scale for more ambiguous combinations like “robust evidence, medium agreement.” The second strategy is to use evidence/agreement statements in the chapter body and only translate them into confidence statements in the executive summary and SPM. For example, this was the predominant strategy used by authors of chapter 4 of the SRCCL because it provided “a more explicit and differentiated assessment.”

Meanwhile, the second interpretation of the relationship between the evidence/agreement and confidence scales sees a subtle qualitative distinction between the scales. While the two interviewees who shared this interpretation acknowledged that confidence assessments are based primarily on the strength of evidence and agreement, they also appealed to an additional dimension that is present in confidence assessments but not evidence/agreement assessments:

The evidence/agreement scale is a comment about the literature, where you say: “don’t come to me if it turns out wrong – but I’m just saying there’s a lot of literature and it looks pretty good to me.” Whereas confidence language is upping the ante – it’s “owning” the assessment. There is an extra layer there. It’s not just an algorithmic translation.

According to this explanation, the non-formulaic interpretation sees the confidence scale as expressing a degree of personal ownership over the assessment, while evidence/agreement statements allow authors to maintain a level of detachment from the assessment. From this perspective, the translation matrix is merely a guide. The non-formulaic interpretation seems to support the suggestion that evidence/agreement language should be used for less robust claims and confidence language for claims with strong evidence and agreement, while the formulaic interpretation sees these scales as essentially substitutable at the authors’ discretion.

4.2.3 Confidence vs. likelihood

Interviewees were asked to explain the distinction between the confidence and likelihood scales and describe the circumstances when it is most appropriate to use each scale. Responses largely conformed to the non-substitutional interpretation described by Wüthrich (2017). However, one author’s response perfectly articulated the substitutional interpretation that sees confidence and likelihood terms as interchangeable: “The confidence assessment is qualitative and the likelihood assessment is quantitative. But in practical terms, the descriptors ‘very confident’ and ‘very likely’ represent similar levels of trust that the findings provide an accurate reflection of the real world.”

Meanwhile, interviewees that reflected the non-substitutional interpretation acknowledged the fallibility of models producing the probabilistic outputs on which likelihood statements are based—and the necessity of attaching an implicit or explicit confidence assessment to model outputs. For example:

Model-derived, mathematically exact values are not quite what they seem, since they necessarily depend on the many assumptions and simplifications made in constructing the model. Thus, a “spurious precision” is involved, with a hidden, secondary level of confidence associated with the validity of those assumptions.

When asked whether likelihood statements should also be accompanied by a confidence statement about the quality of the underlying model or the validity of the finding, most authors agreed that the confidence/likelihood distinction was confusing but disagreed on how best to communicate probabilistic findings. Some interviewees supported the use of both scales to qualify individual statements, while others hinted that likelihood statements already contain an implicit “high confidence” assessment. One author felt strongly that attaching both a confidence and likelihood statement to the same claim—while possibly improving transparency and rigor—would ultimately be counter-productive: “Combining the two scales is going to be mostly confusing – both for the people having to come up with the statement and the people that have to interpret it.” Meanwhile, an author contributing to chapter 2 of the SROCC described how their team navigated this problem by avoiding the use of likelihood language when confidence about model quality was not high, electing to use the confidence scale instead:

For the glacier projections, we used a homogeneous data set you can analyze quantitatively. But we downgraded it to “medium confidence” because the models used for the 100-year projections are relatively simple. But we have not done that consistently and it wasn’t clear whether we should do that all the time.

While the IPCC uncertainty guidance stresses both consistency and flexibility in how the framework is applied across chapters and reports, the clear message from authors is that they want more direction on how to interpret the framework.

4.3 Limitations of the study

While the CLAs and LAs interviewed in this study were drawn from multiple chapter teams from two different special reports with the goal of representing potentially diverse experiences and interpretations of the framework, the sample noticeably lacks WGI representation. Of the 14 interviews conducted, only two interviewees had previous experience contributing to a WGI report, which reflects the low participation of WGI authors in the SRCCL and SROCC overall. Both the language usage analysis and reflections of interviewees suggest that WGI authors tend to assess more quantitative studies and use likelihood terms more frequently than the other two working groups. Therefore, WGI authors may, for example, interpret the distinction between the confidence and likelihood scales differently from the authors interviewed here. However, AR6 will present an opportunity to conduct a more precise comparison of how the uncertainty language framework is interpreted and applied by the three working groups.

5 Improving the characterization and communication of uncertainty in IPCC assessment reports

The experiences of IPCC authors preparing the SRCCL and SROCC illuminate how author interpretations and decisions contribute to the observed trends in uncertainty language usage. Authors see the primary purpose of IPCC assessments as the communication of robust, high certainty findings, which has led to a growing preference for confidence language in chapter executive summaries and the SPM, and a corresponding decrease in evidence/agreement terms.

Meanwhile, author testimony also shows how decisions about when to employ uncertainty language are unavoidably subjective. While there is no perfect set of rules for deciding when to qualify a knowledge claim with an uncertainty statement as opposed to a citation, the practice of adding uncertainty terms after most of a section’s text has already been written should be discouraged since this practice is inconsistent with the spirit of scientific assessment. As many authors noted, an assessment is not a literature review. The evaluation of the validity of knowledge claims is the core output of IPCC assessment reports, and therefore, authors should start making such judgements from the very beginning of the process—even if uncertainty terms must be continuously revised as more evidence is assessed.

Authors continue to be confused about how the three uncertainty language scales fit together. The formulaic interpretation of the relationship between the evidence/agreement and confidence scales, which was shared by the majority of interviewees, sees the three-by-three matrix in the guidance note as the authoritative tool for translating between the two scales. The less popular non-formulaic interpretation asserts that confidence statements add an extra ingredient of “ownership” to the assessment above and beyond simply aggregating evidence/agreement statements. However, this perspective overlooks the fact that evidence/agreement assessments also involve subjective judgement. When making evidence/agreement assessments, authors must determine what constitutes “enough” research, “high quality” research, and “well agreed-upon” research—all of which implicate the author in the resulting statement of evidence and agreement. Therefore, ownership over the judgement is, in fact, present in both scales.

While most authors see the relationship between the confidence and likelihood scales as non-substitutional, where likelihood statements describe (largely frequentist) probabilistic findings from specific models or statistical analyses, there continues to be disagreement about whether a sole likelihood assessment conveys sufficient implicit information about the author’s judgement or if an accompanying confidence statement is necessary.

A number of proposals have been made since AR5 to amend the IPCC uncertainty language framework to address this confusion. For example, Mach et al. (2017) suggest eliminating the confidence scale entirely and simplifying the evidence/agreement scale into five levels of “scientific understanding.” Meanwhile, Helgeson et al. (2018) propose to eliminate confusion and logical inconsistencies between the confidence and likelihood scales by attaching confidence assessments specifically to the probability intervals (where less precise probability intervals are given higher confidence statements and more precise intervals are given lower confidence statements).

Both of these recommendations, however, involve significant changes to the current system that would need to be applied after the conclusion of the AR6 cycle. Here, I focus on pragmatic recommendations for clarifying the current framework with the hope that they could be applied in the final stages of the AR6 cycle, as well as in subsequent special reports that may be initiated before a new framework can be introduced. I nonetheless support a reenergized conversation about more significant changes to the framework that can balance author familiarity and comfort with a more consistent and scientifically rigorous interpretation of probability. I also propose a series of recommendations that address structural and institutional obstacles impeding the application of the framework.

5.1 Clarifying the uncertainty language guidance

The non-substitutional interpretation is the most common interpretation of the distinction between the confidence and likelihood scales among IPCC lead authors (Wüthrich 2017). Therefore, in the short term, the uncertainty language guidance provided to IPCC authors should explicitly endorse this interpretation by providing the following three clarifications:

1) Clarify the relationship between likelihood statements and the specific statistical/modeling analyses from which they are derived. If likelihood statements describe findings produced by particular models or statistical analyses and do not constitute assessments of those findings, then the uncertainty language guidance should encourage authors to clearly connect probability intervals to the models that produced them. For instance, the statement: “According to the single longest and most extensive dataset, the LSAT increase between the preindustrial period and present day was 1.52°C (the very likely range of 1.39°C to 1.66°C)” (SROCC, ch. 2, p. 3) clearly links the probability interval “very likely” (90–100%) to a specific statistical study.

2) Clarify the relationship between likelihood statements and the underlying assessment of the quality of the specific statistical/modeling analyses. It should be made explicit in both the uncertainty language guidance given to authors as well as the SPM that likelihood statements always contain an implicit assessment of “high confidence” in the quality of the statistical or modeling analysis. Findings stemming from quantitative analyses where the author has medium or low confidence in the model should be qualified using the confidence or evidence/agreement scales only.

3) Clarify the relationship between likelihood statements and the overarching assessment of the validity of the finding. It should not be assumed that lone likelihood statements convey an implicit assessment of the validity of the finding. Even claims supported with an “extremely likely” statement should not be assumed to reflect a “high” or “very high confidence” assessment in the finding. The probabilistic output is simply one piece of evidence that may influence an author’s assessment of the claim. Therefore, findings supported with likelihood statements should be paired with confidence statements assessing the validity of the finding. If there is concern that sentences combining both language scales may confuse readers, confidence statements can be used to support more general claims, while likelihood statements can be positioned as evidence supporting those claims.
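As a rough illustration, the three clarifications above could be expressed as simple consistency checks on a drafted finding. The Python sketch below is hypothetical: the `Finding` record, its field names, and the `check` function are assumptions introduced here, not an IPCC tool. Only the “very likely” range (90–100%) is quoted in this article; the other probability ranges are taken from the AR5 guidance note (Mastrandrea et al. 2010).

```python
from dataclasses import dataclass
from typing import List, Optional

# Probability ranges in percent for the calibrated likelihood terms.
# Only "very likely" is quoted in this article; the rest follow the
# AR5 guidance note.
LIKELIHOOD_RANGES = {
    "virtually certain": (99, 100),
    "extremely likely": (95, 100),
    "very likely": (90, 100),
    "likely": (66, 100),
    "about as likely as not": (33, 66),
    "unlikely": (0, 33),
    "very unlikely": (0, 10),
    "exceptionally unlikely": (0, 1),
}


@dataclass
class Finding:
    """Hypothetical record of one drafted knowledge claim."""
    claim: str
    source: str                    # model or dataset behind the interval
    model_confidence: str          # author's confidence in that analysis
    likelihood: Optional[str] = None
    finding_confidence: Optional[str] = None  # confidence in the finding


def check(finding: Finding) -> List[str]:
    """Return a list of problems under the three proposed clarifications."""
    problems: List[str] = []
    if finding.likelihood is None:
        return problems  # the clarifications concern likelihood terms only
    if finding.likelihood not in LIKELIHOOD_RANGES:
        problems.append("unknown likelihood term")
    if not finding.source:
        # Clarification 1: link the interval to the analysis that produced it.
        problems.append("likelihood not linked to a model or dataset")
    if finding.model_confidence in {"very low", "low", "medium"}:
        # Clarification 2: a likelihood term implies high model confidence.
        problems.append("likelihood used without high model confidence")
    if finding.finding_confidence is None:
        # Clarification 3: pair the likelihood with a confidence statement.
        problems.append("likelihood not paired with a confidence statement")
    return problems
```

A draft that assigns a likelihood term to projections from a model in which the author has only medium confidence would be flagged under the second check, mirroring the glacier projection example described by the SROCC chapter 2 author in Section 4.2.3.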

Additionally, the guidance should explicitly endorse the more common formulaic interpretation of the relationship between the evidence/agreement and confidence scales and provide greater clarity on when and how evidence/agreement terms are translated into confidence terms:

Mandate that each chapter team establish its own rules for determining when and how to translate evidence/agreement statements into confidence statements at the beginning of the assessment process. These rules must be followed consistently within the chapter and should be articulated somewhere in the chapter body.

5.2 Training, oversight, and resources

Augment training with author-relevant examples

While time constraints may make it difficult to expand the training offered to IPCC authors on the uncertainty language framework, assigning members of the IPCC Bureau or other “uncertainty framework experts” to run through exercises with authors could significantly improve familiarity with the three scales and help authors generate questions about the framework that would typically emerge much later in the assessment process. Exercises can be enhanced by using examples that involve the specific topics and types of evidence that each author is most familiar with.

Increase IPCC Bureau and home institution support of IPCC authors

The rigorous and consistent application of the uncertainty language framework depends on there being sufficient resources, oversight, and time for IPCC authors. The IPCC Bureau can better support IPCC authors by providing more uncertainty expertise than what is typically offered by the overburdened co-Chairs. One possible solution is appointing an additional review editor to each chapter (or to multiple chapters) who is tasked with monitoring the use of uncertainty language and offering support to authors. The most important factor for whether an author has sufficient time to produce the best assessment possible is the level of support they receive from their home institution. Institutions that offer authors meaningful support (e.g., reduced teaching responsibilities) during the final months of the assessment cycle, as well as the months immediately following report approval, are significantly better equipped to uphold the high standards of the IPCC assessment process and are more likely to be able to participate in future assessments.

5.3 Conclusion

While AR6 marks the first major assessment report that has not been preceded by an update to the IPCC uncertainty language framework in over 20 years, the implementation of the framework in the three recent SRs reveals that familiar inconsistencies and sources of confusion persist. The AR6 provides an opportunity to address many of these issues. I am sensitive to the argument that author familiarity with the existing framework is an important concern—which was the main justification for why the framework was not updated after AR5. However, going forward, I strongly support a reenergized conversation about more significant changes to the framework that can balance author familiarity with a more consistent and scientifically rigorous interpretation of probability—along with better support, oversight, and resources.

Notes

1. For a more detailed discussion of Aven’s (2019) interpretation of the confidence and likelihood scales, see the Supplementary Material.

2. Source: interviews with members of the IPCC Bureau.

3. The analysis preceded the publication of the final drafts of the SRCCL and SROCC and is based on the “approved drafts,” which were subject to final edits and tricklebacks. Trickleback documents for the SRCCL and SROCC contain only 25–30 suggested revisions associated with uncertainty language each. Therefore, the term usage in the final drafts may vary slightly from the data used in this analysis but will not significantly affect the findings presented here.

4. The IPCC author database does not provide information on author participation in the FAR, SAR, or TAR.

5. Interviews by chapter/group: SRCCL Ch. 4 (4); SRCCL Ch. 6 (2); SROCC Ch. 2 (3); SROCC Ch. 5 (3); IPCC Bureau (2). Interview requests were sent to all CLAs and LAs for each chapter and were conducted with all individuals that responded.

6. A series of final revisions of report chapters following the SPM approval meetings.

7. A sentiment that is not echoed by many risk/uncertainty scientists (Aven 2019).

References

Adler CE, Hirsch Hadorn G (2014) The IPCC and treatment of uncertainties: topics and sources of dissensus. Wiley Interdiscip Rev Clim Chang 5:663–676

Aven T (2019) Climate change risk–what is it and how should it be expressed? J Risk Res:1–18. https://doi.org/10.1080/13669877.2019.1687578

Aven T, Renn O (2015) An evaluation of the treatment of risk and uncertainties in the IPCC reports on climate change. Risk Anal 35:701–712

Beck S, Mahony M (2018) The IPCC and the new map of science and politics. Wiley Interdiscip Rev Clim Chang 9:e547

Borges de Amorim P, Chaffe PB (2019) Towards a comprehensive characterization of evidence in synthesis assessments: the climate change impacts on the Brazilian water resources. Clim Chang 155:37–57

Committee to Review the Intergovernmental Panel on Climate Change (2010) Climate change assessments: review of the processes and procedures of the IPCC

Curry J (2011) Reasoning about climate uncertainty. Clim Chang 108:723–732

Helgeson C, Bradley R, Hill B (2018) Combining probability with qualitative degree-of-certainty metrics in assessment. Clim Chang 149:517–525

IPCC (2005) Guidance notes for lead authors of the IPCC Fourth Assessment Report on addressing uncertainties. Geneva, Switzerland

IPCC (2018) Summary for Policymakers. In: Masson-Delmotte V, Zhai P, Pörtner HO et al (eds) Global warming of 1.5°C. An IPCC Special Report on the impacts of global warming of 1.5°C above pre-industrial levels and related global greenhouse gas emission pathways, in the context of strengthening the global response to the threat of climate change. World Meteorological Organization, Geneva

IPCC (2019a) Summary for policymakers (approved draft). In: IPCC Special Report on climate change, desertification, land degradation, sustainable land management, food security, and greenhouse gas fluxes in terrestrial ecosystems. In press

IPCC (2019b) Summary for policymakers. In: Pörtner HO, Roberts DC, Masson-Delmotte V et al (eds) IPCC Special Report on the ocean and cryosphere in a changing climate. In press

IPCC (2019c) IPCC authors (beta). https://archive.ipcc.ch/report/authors/. Accessed 1 Nov 2019

Jonassen R, Pielke R (2011) Improving conveyance of uncertainties in the findings of the IPCC. Clim Chang 108:745–753

Jones RN (2011) The latest iteration of IPCC uncertainty guidance: an author perspective. Clim Chang 108:733–743

Kowarsch M, Jabbour J (2017) Solution-oriented global environmental assessments: opportunities and challenges. Environ Sci Pol 77:187–192

Mach KJ, Mastrandrea MD, Freeman PT, Field CB (2017) Unleashing expert judgment in assessment. Glob Environ Chang 44:1–14

Mastrandrea MD, Mach KJ (2011) Treatment of uncertainties in IPCC Assessment Reports: past approaches and considerations for the Fifth Assessment Report. Clim Chang 108:659–673

Mastrandrea MD, Field CB, Stocker TF, et al (2010) Guidance note for lead authors of the IPCC fifth assessment report on consistent treatment of uncertainties

Mastrandrea MD, Mach KJ, Plattner GK et al (2011) The IPCC AR5 guidance note on consistent treatment of uncertainties: a common approach across the working groups. Clim Chang 108:675–691

Morgan MG (2014) Use (and abuse) of expert elicitation in support of decision making for public policy. PNAS 111:7176–7184

Moss R, Schneider S (2000) Uncertainties in the IPCC TAR: recommendations to lead authors for more consistent assessment and reporting. In: Pachauri R, Taniguchi T, Tanaka K (eds) Guidance papers on the cross cutting issues of the Third Assessment Report of the IPCC. World Meteorological Organization, Geneva, pp 33–51

Nearing GS, Tian Y, Gupta HV et al (2016) A philosophical basis for hydrological uncertainty. Hydrol Sci J 61:1666–1678

Oppenheimer M, O'Neill BC, Webster M, Agrawala S (2007) The limits of consensus. Science 317:1505–1507

Swart R, Bernstein L, Ha-Duong M, Petersen A (2009) Agreeing to disagree: uncertainty management in assessing climate change, impacts and responses by the IPCC. Clim Chang 92:1–29

Winsberg E (2018) Philosophy and climate science. Cambridge University Press, Cambridge

Wüthrich N (2017) Conceptualizing uncertainty: an assessment of the uncertainty framework of the Intergovernmental Panel on Climate Change. In: Massimi M, Romeijn J-W, Schurz G (eds) EPSA15 Selected papers: the 5th conference of the European Philosophy of Science Association in Düsseldorf. Springer, pp 95–107


Electronic supplementary material

ESM 1

(DOCX 22 kb)

Cite this article

Janzwood, S. Confident, likely, or both? The implementation of the uncertainty language framework in IPCC special reports. Climatic Change 162, 1655–1675 (2020). https://doi.org/10.1007/s10584-020-02746-x
