Abstract

In this paper, explicit peer methods are studied in which some of the stage values are copies of stage values from previous steps. This allows to reduce the number of function calls per step and can be interpreted as being a generalization of the first-same-as-last principle known from Runge–Kutta methods. The variable step size implementation is more complex as the nodes depend on the history of previous step size changes. Optimally zero stable explicit peer methods up to order \(p=8\) are constructed using constraint numerical optimization. In addition the constructed methods are superconvergent of order \(s+1\) for constant step sizes. The new methods show their efficiency in comparison with the Matlab codes ode23, ode45 and ode113 in numerical experiments.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Explicit peer methods for the solution of non-stiff initial value problems

have been introduced in [11]. In numerical tests [13] they have been shown to be very efficient and reliable methods. These methods have been applied successfully also in global error control [8]. Methods of higher order for constant step sizes have been constructed in [3]. Explicit peer methods with s stages compute in each step s numerical solutions which are all of the same order, i.e. the stage order is equal to consistency order. Like in Runge–Kutta methods, usually s function calls are necessary for the computation of the s stage values. One possibility to reduce the number of function calls are methods with the FSAL-property (first same as last) [10]. Here the first stage value of the new step is equal to the last of the preceding step. This reduces the number of function calls to \(s-1\). For constant step sizes the number of function evaluations can be reduced further, cf. [7], where the strong stability preservation (SSP properties) of explicit peer methods was studied. In these methods the FSAL-property is generalized by copying more than one stage value from the last step. This reduces the number of function calls by the number of copied stages.

For variable step sizes this principle of copying old stages by retaining the order of the method requires variable nodes in the peer method. A first attempt to construct corresponding methods was reported in [12]. Methods up to order six were derived under additional conditions related to the SSP property. In this paper we will focus on optimally zero stable methods, which gave excellent results in [11] and [13] (with constant nodes and s function calls per step). Here, we derive explicit peer methods with s stages of order \(p=s\) which require only \(s_e <s\) evaluations of the right-hand side in each step. The outline of the paper is as follows:

In Sect. 2 we recall the definition of explicit peer methods and generalize the copying of stage values from the preceding step to save function calls to the case of variable step sizes. We derive order conditions and prove that under some natural conditions numerical approximations can be computed for all step size sequences.

In Sect. 3 we construct specific optimally zero stable methods up to order \(p=8\) by numerically optimizing stability and accuracy properties. The optimization is done with respect to stability and superconvergence. Numerical tests in Matlab on standard non-stiff problems and comparison with ode23, ode45 and ode113 of the methods obtained are given in Sect. 4. These tests clearly demonstrate the capability of the new methods. Finally, we draw some conclusions.

2 Explicit peer methods with variable nodes

Explicit peer methods for problem (1.1) as introduced in [11] read

Here \(b_{ij}\), \(a_{ij}\), \(c_i\) and \(r_{ij}\), \(i,j=1,\ldots ,s\) are the parameters of the method, where the nodes \(c_i\) are assumed to be pairwise distinct with \(c_s=1\). At each step s stage values \(Y_{m,i}\), \(i=1,\ldots ,s\) are computed approximating the exact solution \(y(t_{m,i})\) where \(t_{m,i}=t_m+c_ih_m\). The coefficients of the method (2.1) depend, in general, on the step size ratio \(\sigma _m=h_m/h_{m-1}\). Defining matrices \(B_m=(b_{ij})_{i,j=1}^s\), \(A_m=(a_{ij})\), \(R_m=(r_{ij})\) and vectors \(Y_m=(Y_{m,i})_{i=1}^s\in \mathbb {R}^{sn}\) and \(F_m=(f(t_{m,i},Y_{m,i}))_{i=1}^s\) leads to the compact form

where \(R_m\) is strictly lower triangular. Like multistep methods peer methods also need s starting values \(Y_{0,i}\).

Except [12] so far peer methods have been considered with constant nodes \(c_i\), i.e. the nodes are independent of \(\sigma _m\). We now drop this restriction of constant step sizes in order to save function calls also for variable step sizes. We consider nodes depending on the time step, denoted by \(c_{m,i}\) and with \(t_{m,i}=t_m + c_{m,i} h_m\). We also assume \(c_{m,s}=1\) for all m.

Conditions for the order of consistency of explicit peer methods can be derived by considering the residuals \(\Delta _{m,i}\) obtained when the exact solution is put into the method

Definition 1

The peer method (2.1) is consistent of order p if

In contrast to explicit Runge–Kutta methods, all stage values \(Y_{m,i}\) of peer methods are approximations of order p to the solution \(y(t_m+c_{m,i} h_m)\), i.e., the stage order is equal to the order. This makes these methods advantageous for instance for problems which are semi-discretized by the method of lines, when space and time step sizes are reduced simultaneously, cf. [7].

By making a Taylor expansion of the exact solution and putting these expansions into the residuals \(\Delta _{m,i}\) we obtain

With Definition 1, the following theorem is valid for variable nodes.

Theorem 1

A peer method (2.1) is consistent of order p iff

The condition (2.3) for \(l=0\) is referred to as preconsistency. It takes the form

Definition 2

A peer method (2.1) is zero stable if there is a constant \(K>0\), so that for all \(m,k \ge 0\) holds

For a zero stable peer method of consistency order p convergence of order p follows by standard arguments, e.g. [11].

The peer methods considered in [11, 13] require s function calls per step. In the following, by special choices for the coefficients of the method, we will save function calls as done for constant step sizes in [7].

Definition 3

A peer method (2.1) is said to have \(n_s\) shifted stages and \(s_e=s-n_s\) effective stages if

and

From property (2.6) it follows immediately \(Y_{m,i}=Y_{m-1,i+1}\), \(i=1,\ldots ,n_s\). With constant nodes for the effective stages, i.e. \(c_{m,i}=c_{m-1,i}=c_i,\ i=n_s+1,\ldots ,s\), the number of function evaluations per step is reduced to \(s_e\).

Because of the structure property (2.6) there are no free parameters of the coefficients of the peer method for the shifted stages. But one easily proves that the order conditions are satisfied for the shifted values.

Lemma 1

Let a peer method (2.1) satisfy (2.6) and (2.7). Then condition (2.3) holds for all shifted stages \(i=1,\ldots ,n_s\) and for all \(l=0,1,\ldots \). \(\quad \square \)

The consistency order of the method can be ensured by the choice of the last \(s_e\) rows of \(A_m\). Because of \(t_{m,i}=t_{m-1,i+1}\) and \(f_{m,i}=f_{m-1,i+1}\) for \(i=1,\ldots ,n_s\) we can choose

We use the notation

and denote the \((s_e,s)\)-matrices consisting of the last \(s_e\) rows of \(B_m\) and \(A_m\) by \(\widehat{B}_m\) and \(\widehat{A}_m\). Then the conditions (2.3) for \(l=1,\ldots ,s\) for the order of consistency \(p=s\) lead to

We thus have

Theorem 2

Let a preconsistent peer method (2.1) have coefficient matrices \(B_m\) and \(R_m\) satisfying (2.6) and nodes satisfying (2.7), (2.8). Then with a matrix \(A_m\) having \(n_s\) zero rows and the last \(s_e\) rows computed by (2.10) the peer method has order of consistency \(p=s\). \(\square \)

If the matrix \(V_1\) is nonsingular Theorem 2 and Lemma 1 show that the construction of peer methods with s stages of order \(p=s\) is possible. By the special structure of the method the number of function calls in each step is reduced to \(s_e\) for all step size sequences. For constant, pairwise distinct nodes, \(V_1\) is always regular but, for variable nodes, this must be checked. The following theorem gives a sufficient condition for the regularity of \(V_1\) so that the coefficient matrix \(A_m\) is uniquely defined for all step size ratios.

Theorem 3

Let the peer method (2.1) with s stages and \(n_s\) shifted stages be given. Let \(c_s=1\) and let \(c_i,\ i=n_s+1,\ldots ,s\) be pairwise distinct and constant for all m and satisfy

Assume that the node conditions (2.7), (2.8) hold. Then the nodes \(c_{m,i}\) are pairwise distinct.

Proof

We prove by induction over m that the shifted nodes are nonpositive and pairwise distinct. By assumption (2.11) using (2.7), (2.8) it follows immediately that

and that they are pairwise distinct, the statement holds for \(m=1\). Suppose the result is true until \(m-1\), i.e.

and the shifted nodes are pairwise distinct. Then from (2.7) it follows

and \(c_{m,i}\le 0\) for \(i=1,\ldots ,n_s\). Because the constant nodes \(c_{n_s+1},\ldots ,c_s\) are assumed to be positive the statement follows. \(\square \)

Remark 1

Equation (2.3) for order p is equivalent to the condition that

holds for all polynomials P(t) of degree p. Choosing integrals of Lagrange basis polynomials, \(P_k(t) = \int _0^t L_k(s)\, ds\), \(k=1,\ldots , s\), where \(L_k(t) = \prod _{\begin{array}{c} l=1,\\ l\ne k \end{array}}^s (t-c_l+1)(c_k-c_l)^{-1}\), yields an explicit representation for the coefficients \(a_{ik}\), namely

Equation (2.13) can also conveniently be written in the form

with the exponentials evaluated componentwise. \(\quad \square \)

3 Construction of special methods

In numerical tests [13] especially explicit peer methods with the stronger property of optimal zero stability and with constant matrices \(B_m=B\) and \(R_m=R\) have given excellent results.

Definition 4

A peer method (2.1) is optimal zero stable if the eigenvalues of B satisfy

Optimal zero stability ensures a strong damping of the parasitic numerical solution components.

In this section we describe the construction of optimally zero stable peer methods of order \(p=s\) with the special structure defined by Definition 3. Furthermore we require superconvergence of order \(p=s+1\) for constant step sizes. For given s and \(n_s\) we use the following strategy:

-

B is constant and has the form

$$\begin{aligned} B=e_se_s^\top + \text { a strictly upper triangular matrix}. \end{aligned}$$Together with preconsistency (\(B\mathbbm {1}=\mathbbm {1}\)) and (2.6) for the first \(n_s\) rows this leaves \((s_e-1)(s_e-2)/2\) parameters in B. This structure also ensures optimal zero stability.

-

R is also constant. With (2.6) and (2.9) we have \(\frac{1}{2}s_e(s_e-1)\) parameters in R.

-

With \(c_s=1\) and the node condition (2.7) for \(\sigma =1\) for \(c_1,\ldots ,c_{n_s}\) we have \(s_e-1\) parameters \(c_{n_s+1},\ldots ,c_{s-1}\).

The free parameters in B, R and \(c_{n_s+1},\ldots ,c_{s-1}\) were determined with the interior point algorithm of fmincon from the optimization toolbox in Matlab. In the optimization process we have considered \(\sigma =1\), i.e. \(A_m\) is computed by (2.6) and (2.10) for \(\sigma =1\), and used the left boundary of the stability interval on the real axis r as objective function. The constraints in the optimization are

-

pairwise distinct nodes \(0<c_{n_s+1},\ldots ,c_{s-1}<1\) (cf. Theorem 3).

-

Superconvergence for constant step sizes ([13]), we require

$$\begin{aligned} |e_s^\top AB(s+1)|\le 1.e{-}14. \end{aligned}$$

The coefficients were computed with fmincon using random starting values. Among the obtained methods we have chosen methods which are also stable in a sufficiently large complex neighbourhood of the real stability interval [r, 0]. Furthermore our aim was to find methods with large \(n_s\) to save function evaluations. It turned out that for \(s>4\) methods with \(s_e=3\) gave the best combination of function saving and good stability. The following methods with \(s=4,\ldots ,8\) stages were obtained in our numerical search, they also were used in the numerical tests in Sect. 4. The notation is Peer\(sn_s\) and \(c_i=c_{0,i}\), \(i=1,\ldots ,s\). The first \(n_s\) rows of B and \(A_m\) are given by (2.6) and to keep the notation simple these coefficients and also all zero elements of R are dropped in the following tables. The values \(a_{ij}\) are for \(\sigma =1\).

Table 1 summarizes the properties of the peer methods which we implemented in Matlab and used in the numerical tests in Sect. 4. For completeness we also added the corresponding values for the Runge–Kutta methods used in the tests, i.e. ode23, the method of Bogacki–Shampine [1], and ode45, the method of Dormand and Prince [5].

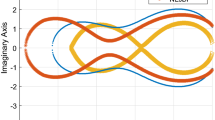

Figure 1 shows the stability regions of the peer methods. For comparison we also included the stability domains of ode45 and of the 6-step Adams–Moulton method of order 7.

4 Numerical tests

We have implemented the methods from Sect. 3 in Matlab. They are of consistency order \(p=s\) and for constant step sizes they are convergent of order \(s+1\). The starting values were computed with ode45, for step size control we estimated the \((p+1)\)-st derivative. We exploit the special structure (2.6) and (2.7) so that only \(s_e\) function evaluations per step are computed.

First, we want to illustrate the superconvergence of the constructed peer methods for constant step sizes. We consider the orbit problem [2, 4] (denoted by KEPL)

with initial values from the exact solution \(y(t)=\left( \cos t,\sin t,-\sin t,\cos t\right) ^{\top }\) for \(t \in \left[ 0,1\right] \). Figure 2 shows the considered step sizes h and the logarithm of the obtained error

at the endpoint \(t_e=1\), where \(Y_{m,s}\) is the numerical solution and \(y_\text {ref}\) the exact solution. For better illustration we added lines with slopes corresponding to orders \(p=5,6,7,8,9\). For the constructed peer methods with \(s=4,\ldots ,8\) the expected orders of superconvergence \(p=s+1\) for constant step sizes can clearly be observed.

In the tests with step size control we compare our methods with ode23, ode45 and ode113 from the Matlab ODE-suite [9]. ode23 is of order 3 with an embedded method of order 2, ode45 is of order 5 with 6 function evaluations and ode113 is based on the Adams methods of orders from 1 to 13.

As test problems we used the following standard test examples for nonstiff initial value problems: KEPL with \(y_0=\left( 1-\varepsilon ,0,0,\sqrt{\frac{1+\varepsilon }{1-\varepsilon }}\right) ^\top \), \(\varepsilon =0.9\) and \(t_e=20\). The reference solution is described in [4]. The other test problems are taken from [6] with same parameters and denoted by the same names as in [6]: AREN, BRUS, LRNZ and PLEI. Reference solutions were computed with ode45 and high accuracy. We have solved these problems with \(rtol = atol\) for \(atol=10^{-i},\ i=1,\ldots ,12\). In the Figures 3, 4, 5, 6, and 7 we present the number of function evaluations and the logarithm of the obtained errors at the endpoint.

The results show that the new methods due to their higher order are clearly superior to ode23 and that the higher order methods are also more efficient than ode45. One also observes that the 7- and 8-stage methods in general are more accurate and need less function calls than the Adams method ode113, especially for medium tolerances.

The step size control in the peer methods works well. As predicted, the shift strategy worked also for variable step sizes for all occurring values of \(\sigma _m\). For the Brusselator problem for crude tolerances the drawback of the small stability regions of peer42 can be observed.

5 Conclusions

We have presented a special class of explicit peer methods with shifted stages. This allows to save function evaluations by retaining order \(p=s\). With a special search strategy using fmincon we constructed various optimally zero stable methods. The proposed methods work also with variable step sizes allowing an usual step size control. The numerical results are promising, the number of function evaluations is substantially reduced. The methods are superior to ode23 and ode45 and at least competitive to ode113. The achieved accuracy is for most problems in much better accordance with the prescribed tolerance than for ode113. The application of the prescribed technique to implicit peer methods will be the topic of future research.

References

Bogacki, P., Shampine, L.F.: A 3(2) pair of Runge–Kutta formulas. Appl. Math. Lett. 2(4), 321–325 (1989)

Butcher, J.C.: Numerical Methods for Ordinary Differential Equations. Wiley, New York (2008)

Calvo, M., Montijano, J.I., Rández, L., Van Daele, M.: On the derivation of explicit two-step peer methods. Appl. Numer. Math. 61(4), 395–409 (2011)

Dormand, J.R.: Numerical Methods for Differential Equations. CRC Press, Boca Raton (1996)

Dormand, J.R., Prince, P.J.: A family of embedded Runge–Kutta formulae. J. Comput. Appl. Math. 6, 19–26 (1980)

Hairer, E., Nørsett, S.P., Wanner, G.: Solving Ordinary Differential Equations I: Nonstiff Problems. Springer, Berlin (1993)

Horváth, Z., Podhaisky, H., Weiner, R.: Strong stability preserving explicit peer methods. J. Comput. Appl. Math. 296, 776–788 (2016)

Kulikov, GYu., Weiner, R.: Variable-stepsize interpolating explicit parallel peer methods with inherent global error control. SIAM J. Sci. Comput. 32, 1695–1723 (2010)

Shampine, L.F., Reichelt, M.W.: The MATLAB ODE suite. SIAM J. Sci. Comput. 18(1), 1–22 (1997)

Schmitt, B.A., Weiner, R., Beck, S.: Two-step peer methods with continuous output. BIT Numer. Math. 53, 717–739 (2013)

Weiner, R., Biermann, K., Schmitt, B.A., Podhaisky, H.: Explicit two-step peer methods. Comput. Math. Appl. 55, 609–619 (2008)

Weiner, R., Podhaisky, H., Klinge, M.: Explicit peer methods with variable nodes. Report 03, Institute of Mathematics, Martin Luther University Halle-Wittenberg (2016)

Weiner, R., Schmitt, B.A., Podhaisky, H., Jebens, S.: Superconvergent explicit two-step peer methods. J. Comput. Appl. Math. 223, 753–764 (2009)

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Mechthild Thalhammer.

Rights and permissions

About this article

Cite this article

Klinge, M., Weiner, R. & Podhaisky, H. Optimally zero stable explicit peer methods with variable nodes. Bit Numer Math 58, 331–345 (2018). https://doi.org/10.1007/s10543-017-0691-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10543-017-0691-8