Abstract

The Filon–Clenshaw–Curtis method (FCC) for the computation of highly oscillatory integrals is known to attain surprisingly high precision. Yet, for large values of frequency \(\omega \) it is not competitive with other versions of the Filon method, which use high derivatives at critical points and exhibit high asymptotic order. In this paper we propose to extend FCC to a new method, FCC\(+\), which can attain an arbitrarily high asymptotic order while preserving the advantages of FCC. Numerical experiments are provided to illustrate that FCC\(+\) shares the advantages of both familiar Filon methods and FCC, while avoiding their disadvantages.

1 Introduction

The highly oscillatory integral

$$\begin{aligned} I_\omega [f]=\int _{-1}^1 f(x)\mathrm {e}^{\mathrm {i}\omega g(x)}\mathrm {d}x, \qquad (1.1) \end{aligned}$$

where \(f, g \in \mathbf {C}^\infty [-1,1]\), occurs in a wide range of applications, e.g. the numerical solution of oscillatory differential and integral equations, acoustic and electromagnetic scattering and fluid mechanics. It is a difficult problem when approached by classical quadrature methods. However, once the mathematics of high oscillation is properly understood, the quadrature of (1.1) becomes fairly simple and affordable. Indeed, high oscillation is actually helpful in the design of a computational method, rather than a stumbling block. A number of innovative methods have been developed in the last two decades: asymptotic expansions and Filon-type methods [9,10,11], Levin’s method [12, 13] and numerical steepest descent [8]. These methods behave very well for \(\omega \gg 1\).

The emphasis in the design of the above methods has been on large \(\omega \), yet there is significant merit in methods which are uniformly good for all \(\omega \ge 0\), and much recent research reflects this. Complex-valued Gaussian quadrature [1, 2] is constructed for (and is equally efficient at) all \(\omega \ge 0\). The FCC method can be traced to [15,16,17] and is surveyed in [14]. Recently, Domínguez, Graham and Smyshlyaev studied the implementation and error estimates of FCC for highly oscillatory integrals in [3,4,5]. Their method does not require derivatives at the endpoints. FCC is widely used because of its simple implementation, high precision and easy extension to different integrands f. However, as \(\omega \rightarrow \infty \) FCC has very low asymptotic order: its error for \(\omega \gg 1\) (in the absence of stationary points) decays like \(O(\omega ^{-2})\), while other quadrature methods for oscillatory integrals (like the asymptotic algorithm, the plain Filon method, ...) can attain any \(O(\omega ^{-s})\) for \(s\ge 2\).

The idea underlying all Filon-type methods is to replace the non-oscillatory function f in (1.1) by a polynomial p, subject to the assumption that the moments \(\int _{-1}^1 x^k \mathrm {e}^{\mathrm {i}\omega g(x)}\hbox {d} x\) can be computed for all \(k\ge 0\). Suppose for the time being that there are no stationary points, i.e. that \(g' \ne 0\) in \([-1,1]\), and recall the asymptotic expansion

where

[11]. The functions \(\sigma _{k,j}\) are independent of f, depending just on \(g'\) and its derivatives. Note that the error of Filon, \(I_{\omega }[f-p]\), is still a highly oscillatory integral. Replacing f by \(f-p\) in (1.2), we obtain the error of a Filon-type method. To attain the asymptotic order \(O(\omega ^{-s-1})\), we let

$$\begin{aligned} p^{(j)}(\pm 1)=f^{(j)}(\pm 1),\qquad j=0,\ldots ,s-1, \end{aligned}$$

which determines a Filon method with a Hermite interpolation polynomial p of degree \(2s-1\), referred to here as the sth plain Filon method

$$\begin{aligned} \mathcal {Q}_{\omega }^{\mathbf {F},s}[f]=\int _{-1}^1 p(x)\mathrm {e}^{\mathrm {i}\omega g(x)}\mathrm {d}x. \end{aligned}$$
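As a concrete illustration of the plain Filon idea, the following minimal sketch treats the simplest case \(s=1\) with \(g(x)=x\): f is replaced by the straight line through its endpoint values and the two moments of \(\mathrm {e}^{\mathrm {i}\omega x}\) are integrated in closed form. The function names and the test integrand \(f(x)=\mathrm {e}^x\) are illustrative assumptions, not taken from the paper.

```python
import cmath
import math


def filon_plain_s1(f, omega):
    """Plain Filon rule with s = 1 for the integral of f(x) exp(i*omega*x) on [-1, 1].

    f is replaced by the line p(x) = a + b*x through (-1, f(-1)) and (1, f(1));
    the moments of exp(i*omega*x) against 1 and x are known in closed form.
    Assumes omega > 0.
    """
    a = 0.5 * (f(1.0) + f(-1.0))
    b = 0.5 * (f(1.0) - f(-1.0))
    mu0 = 2.0 * math.sin(omega) / omega
    mu1 = 2j * (math.sin(omega) - omega * math.cos(omega)) / omega**2
    return a * mu0 + b * mu1


def exact_exp(omega):
    """Closed form of the integral of exp(x) exp(i*omega*x) on [-1, 1]."""
    z = 1.0 + 1j * omega
    return (cmath.exp(z) - cmath.exp(-z)) / z


for omega in (50.0, 100.0, 200.0):
    err = abs(filon_plain_s1(math.exp, omega) - exact_exp(omega))
    # The scaled error stays bounded, consistent with an O(omega^-2) error.
    print(f"omega={omega:6.1f}  error={err:.3e}  error*omega^2={err * omega**2:.3f}")
```

The scaled column remaining bounded as \(\omega \) grows is what asymptotic order \(O(\omega ^{-2})\) means in practice for \(s=1\).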

The error of a plain Filon method can be reduced (without changing its asymptotic order) by adding N extra interpolation points in the interval \((-1,1)\) and this leads to an extended Filon method [6]. The errors of the extended Filon method are analysed in [6] with general interpolation points for large \(\omega \), and with Clenshaw–Curtis points and zeros of Jacobi polynomials for small \(\omega \). Note that the Filon–Clenshaw–Curtis (FCC) procedure of [4, 5] is a particular case of extended Filon that does not require derivatives at the endpoints, where the interpolation points are chosen as \(\cos (k\pi /N)\), \(k=0,\ldots ,N\), and it enjoys a number of important advantages. Firstly, everything is explicit,

and

where \(\mathbf {T}_n(x)\) is the Chebyshev polynomial of the first kind. Note that for large values of N we can compute the \(p_n\)s with Discrete Cosine Transform I (DCT-I) in \(O(N\log N)\), rather than \(O(N^2)\) operations.
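The FCC recipe can be sketched in a few lines for \(g(x)=x\). This is a hedged illustration rather than the implementation of [4, 5]: the Chebyshev coefficients come from a naive \(O(N^2)\) DCT-I (an FFT-based DCT-I would return the same numbers in \(O(N\log N)\)), the convention of halving the first and last terms is an assumption, and the moments \(\int _{-1}^1 \mathbf {T}_n(x)\mathrm {e}^{\mathrm {i}\omega x}\mathrm {d}x\) are obtained by brute-force Simpson quadrature, which is adequate only for moderate \(\omega \).

```python
import cmath
import math


def chebyshev_coefficients(f, N):
    """Coefficients a_n such that p(x) = sum'' a_n T_n(x) interpolates f at cos(k*pi/N).

    The double prime means that the n = 0 and n = N terms are halved.
    Naive O(N^2) DCT-I, written out for clarity.
    """
    fx = [f(math.cos(k * math.pi / N)) for k in range(N + 1)]
    coeffs = []
    for n in range(N + 1):
        total = 0.0
        for k in range(N + 1):
            w = 0.5 if k in (0, N) else 1.0
            total += w * fx[k] * math.cos(k * n * math.pi / N)
        coeffs.append(2.0 * total / N)
    return coeffs


def moment(n, omega, panels=4000):
    """Composite Simpson approximation of the integral of T_n(x) exp(i*omega*x).

    A stand-in for the fast and stable moment computations in the FCC literature.
    """
    h = 2.0 / panels
    total = 0.0 + 0.0j
    for j in range(panels + 1):
        x = max(-1.0, min(1.0, -1.0 + j * h))  # clamp against rounding
        w = 1.0 if j in (0, panels) else (4.0 if j % 2 else 2.0)
        total += w * math.cos(n * math.acos(x)) * cmath.exp(1j * omega * x)
    return total * h / 3.0


def fcc(f, omega, N):
    """Filon-Clenshaw-Curtis approximation for g(x) = x."""
    a = chebyshev_coefficients(f, N)
    total = 0.0 + 0.0j
    for n in range(N + 1):
        w = 0.5 if n in (0, N) else 1.0
        total += w * a[n] * moment(n, omega)
    return total


def exact_exp(omega):
    """Closed form of the integral of exp(x) exp(i*omega*x) on [-1, 1]."""
    z = 1.0 + 1j * omega
    return (cmath.exp(z) - cmath.exp(-z)) / z


omega, N = 10.0, 8
print(abs(fcc(math.exp, omega, N) - exact_exp(omega)))
```

The moments depend on \(\omega \) and g but not on f, so in a real code they would be computed once per \(\omega \) and reused across integrands.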

The error of FCC (in absence of stationary points) can be computed, in a large measure because of the explicit form of the coefficients, and it is

where r is the regularity of f, which is consistent with asymptotic order 2. (Recall that \(\pm 1\) are interpolation points and this is fully compliant with the reasoning underlying extended Filon methods.) The low asymptotic order notwithstanding, the error of FCC is determined by \(\omega \) and N.

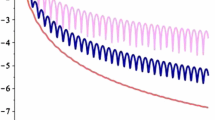

As an example, we display in Fig. 1 the errors (in logarithmic scale) committed by plain Filon with \(s=1,2,3,4\) (from the top to bottom, in the right figure) and by FCC with \(N=8\) for

Note that while \(\mathcal {Q}_{\omega }^{\mathbf {FCC},N,1}[f]\) has asymptotic error \(O(\omega ^{-2})\), a plain Filon method \(\mathcal {Q}_{\omega }^{\mathbf {F},s}[f]\) carries an asymptotic error of \(O(\omega ^{-s-1})\). For \(s=1\) the two asymptotic errors are the same and it can be seen from the figure on the left that FCC emerges as a decisive winner when measuring the maximal error for \(\omega \ge 0\). However, the figure on the right confirms the clear fact that higher asymptotic order always wins for sufficiently large \(\omega \). Recalling that \(\mathcal {Q}_{\omega }^{\mathbf {FCC},N,1}[f]\) requires \(N+1\) function evaluations, while \(\mathcal {Q}_{\omega }^{\mathbf {F},s}[f]\) ‘costs’ 2s function and derivative evaluations, it transpires that the better performance of Filon with \(s\ge 2\) for \(\omega \gg 1\) need not be accompanied by greater computational cost.

Fig. 1 Logarithmic errors. On the left \(\mathcal {Q}_{\omega }^{\mathbf {FCC},8,1}[f]\) (solid, blue) and \(\mathcal {Q}_{\omega }^{\mathbf {F},1}[f]\) (dotted, orange), while on the right \(\mathcal {Q}_{\omega }^{\mathbf {F},s}[f]\), \(s=1, 2, 3, 4\) (dotted orange, dashed purple, dash-dotted red and space-dotted green, from top to bottom) (colour figure online)

Figure 2 redraws Fig. 1 (left) in a different form, scaling the absolute value of the error by \(\omega ^2\). Since asymptotically the error behaves like \(O(\omega ^{-2})\), we expect both Filon (the top, dotted, orange) and FCC (the bottom, solid, blue) to tend to a straight line (or at least to remain bounded away from zero and infinity) for \(\omega \gg 1\), and this is confirmed in the figure. Clearly, FCC is much more accurate!

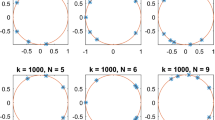

Another way of looking at our methods is by examining the interpolation error. In Fig. 3, we sketch the error function \(p-f\) for FCC with \(N = 8\) (the left figure) and a plain Filon method for \(s=1, 2, 3, 4\) (dotted (orange), dashed (purple), dash dotted (red), space dotted (green), from the bottom to top in the right figure). As we might have expected, FCC, based on interpolation at Chebyshev points of the second kind, gives hugely better minimax approximation, while for plain Filon \(p-f\) is larger in magnitude, but flat near the endpoints. It is precisely this flatness that explains superior performance for \(\omega \gg 1\).

Let us set up the competing advantages of the two methods:

1. Plain Filon demonstrates much better accuracy for \(\omega \gg 1\) which, after all, is the entire point of highly oscillatory quadrature.

2. FCC behaves much better for small \(\omega \) and has smaller uniform error for \(\omega \ge 0\). ‘Better’, rather than ‘best’: even better behaviour can be obtained replacing Chebyshev by Jacobi points, at the price of a minor deterioration in asymptotic behaviour [6].

3. FCC does not require derivatives at the nodes, the quid pro quo being worse asymptotic error. The method might be more suitable when the derivatives of f are not easily available but note that in principle a Filon-type method can be implemented while replacing derivatives with suitable finite-difference approximations [10].

4. An issue often disregarded in papers on highly oscillatory quadrature is that FCC has a considerably simpler form: this is important once we wish to compute (1.1) for a large number of different values of \(\omega \) or, for that matter, for different functions f. Specifically, we can represent any extended Filon method in the form

$$\begin{aligned} \sum _{\ell =0}^{N} \sum _{k=0}^{m_\ell -1} b_{\ell ,k}(\omega ) f^{(k)}(c_\ell ), \end{aligned}$$

where \(c_0=-1<c_1<\cdots<c_{N-1}<c_N=1\) are the interpolation points with multiplicities \(m_\ell \): \(N=1\), \(m_0=m_1=s\) for plain Filon and \(c_\ell =\cos (\pi \ell /N)\), \(m_\ell \equiv 1\), \(\ell = 1, 2, \ldots , N-1\), for FCC. Given an interpolating polynomial \(p(x)=\sum _{\ell =0}^{N} \sum _{k=0}^{m_\ell -1} p_{\ell ,k}(x) f^{(k)}(c_\ell )\), we have \(b_{\ell ,k}(\omega )=\int _{-1}^1 p_{\ell ,k}(x)\mathrm {e}^{\mathrm {i}\omega g(x)} \mathrm {d}x\). For plain Filon the generalised weights \(b_{\ell ,k}\) are fairly complicated, e.g. for \(s=2\) and \(g(x)=x\) we have

$$\begin{aligned} b_{0,0}(\omega )= & {} \frac{\mathrm {e}^{-\mathrm {i}\omega }}{-\mathrm {i}\omega }-\frac{3\cos \omega }{(-\mathrm {i}\omega )^3}-\frac{3\mathrm {i}\sin \omega }{(-\mathrm {i}\omega )^4},\\ b_{0,1}(\omega )= & {} \frac{\mathrm {e}^{-\mathrm {i}\omega }}{(-\mathrm {i}\omega )^2}-\frac{\mathrm {e}^{\mathrm {i}\omega }+2\mathrm {e}^{-\mathrm {i}\omega }}{(-\mathrm {i}\omega )^3}-\frac{3\mathrm {i}\sin \omega }{(-\mathrm {i}\omega )^4},\\ b_{1,0}(\omega )= & {} -\frac{\mathrm {e}^{\mathrm {i}\omega }}{-\mathrm {i}\omega }+\frac{3\cos \omega }{(-\mathrm {i}\omega )^3}+\frac{3\mathrm {i}\sin \omega }{(-\mathrm {i}\omega )^4},\\ b_{1,1}(\omega )= & {} -\frac{\mathrm {e}^{\mathrm {i}\omega }}{(-\mathrm {i}\omega )^2}-\frac{2\mathrm {e}^{\mathrm {i}\omega }+\mathrm {e}^{-\mathrm {i}\omega }}{(-\mathrm {i}\omega )^3}-\frac{3\mathrm {i}\sin \omega }{(-\mathrm {i}\omega )^4}. \end{aligned}$$

Complexity grows rapidly for larger s. An alternative is to compute \(\int _{-1}^1 p(x)\mathrm {e}^{\mathrm {i}\omega g(x)}\mathrm {d}x\) but this must be done (having formed p, e.g. by solving a linear system) separately for every \(\omega \). The formation of FCC, however, is considerably simpler! We first compute the fast cosine transform \(\{\hat{p}_\ell \}_{\ell =0}^N\) of the sequence \(f(c_\ell )\), \(\ell =0, \ldots ,N\) using \(O(N\log N)\) operations; the interpolating polynomial is then

$$\begin{aligned} p(x)=\sum _{\ell =0}^N \hat{p}_\ell \mathbf {T}_\ell (x)\quad \text{ hence }\quad \mathcal {Q}_\omega ^{\mathbf {FCC},N,1}[f]=\sum _{\ell =0}^N \hat{p}_\ell \hat{b}_\ell (\omega ), \end{aligned}$$

where \(\hat{b}_\ell (\omega )=\int _{-1}^1 \mathbf {T}_\ell (x)\mathrm {e}^{\mathrm {i}\omega g(x)}\mathrm {d}x\) can be formed rapidly [4] and their form is typically much simpler.

It is obvious how to reconcile points 1 and 2: choose a polynomial p of degree \(N+2s-2\), where \(N,s\ge 1\), which interpolates f at \(\cos (k\pi /N)\), \(k=1,\ldots ,N-1\), and \(f^{(i)}\), \(i=0,\ldots ,s-1\), at \(\pm 1\), and compute \(\int _{-1}^1 p(x)\mathrm {e}^{\mathrm {i}\omega g(x)}\mathrm {d}x\). This is precisely the method we term “FCC\(+\)” and denote by \(\mathcal {Q}_\omega ^{\mathbf {FCC},N,s}[f]\). While FCC\(+\) has already been stated in [6] and its error, in tandem with that of the Filon–Jacobi method, analysed by the tools introduced there, nothing has been said in that paper about the construction of such a method. This is the main challenge in the present paper, where we demonstrate that the unique selling point of FCC, namely its computation with the fast cosine transform (FCT), can be extended to FCC\(+\). More specifically, the method also ticks point 4: it can be reduced to standard FCC plus the solution of two systems of linear equations of dimension \(s-1\), and requires just \(O(N\log N)+O(s^3)\) operations.

In Sect. 2 we introduce FCC\(+\) in an orderly manner and describe its basic properties. In Sect. 3 we present a construction of FCC\(+\) which is consistent with the above point 4 and present some numerical experiments. We conclude with a brief Sect. 4, reviewing the results of this paper.

2 Basic properties of FCC\(+\)

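Letting \(s,N\ge 1\), we seek a polynomial p of degree \(N+2s-2\) such that

$$\begin{aligned} p^{(i)}(\pm 1)&=f^{(i)}(\pm 1),\qquad i=0,\ldots ,s-1,\\ p\left( \cos \frac{j\pi }{N}\right)&=f\left( \cos \frac{j\pi }{N}\right) ,\qquad j=0,\ldots ,N. \qquad (2.1) \end{aligned}$$

(Note that the case \(i=0\) is automatically covered by \(j=0\) and \(j=N\).) We then let

$$\begin{aligned} \mathcal {Q}_\omega ^{\mathbf {FCC},N,s}[f]=\int _{-1}^1 p(x)\mathrm {e}^{\mathrm {i}\omega g(x)}\mathrm {d}x. \end{aligned}$$

This is our FCC\(+\) method. In fact, FCC\(+\) is a special case of the extended Filon method of [6] whose interior interpolation nodes are chosen as \(\cos \left( \frac{j \pi }{N}\right) \), \(j = 1, 2, \ldots , N-1\). The error estimate in terms of the infinity norm of f has been introduced in [6] as follows: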

for \(\omega \gg 1\). This is true since \(\prod _{j=1}^{N-1} \left( 1-\cos \left( \frac{j \pi }{N}\right) \right) =\prod _{j=1}^{N-1} \left( 1+\cos \left( \frac{j \pi }{N}\right) \right) =N/2^{N-1}\).
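The product identity quoted above is easy to confirm numerically. The short check below is only a sanity test (the helper name is an illustrative choice); it evaluates both products in floating point and compares them with \(N/2^{N-1}\).

```python
import math


def endpoint_products(N):
    """Return prod_{j=1}^{N-1} (1 - cos(j*pi/N)) and prod_{j=1}^{N-1} (1 + cos(j*pi/N))."""
    minus = math.prod(1.0 - math.cos(j * math.pi / N) for j in range(1, N))
    plus = math.prod(1.0 + math.cos(j * math.pi / N) for j in range(1, N))
    return minus, plus


for N in (2, 4, 8, 16, 32):
    minus, plus = endpoint_products(N)
    print(N, minus, plus, N / 2 ** (N - 1))
```

The rapid decay of \(N/2^{N-1}\) reflects how small the nodal polynomial of the interior Clenshaw–Curtis points is at the endpoints.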

We do not require \(g'\ne 0\) in \([-1,1]\), yet note that, once this condition is satisfied, \(\mathcal {Q}_\omega ^{\mathbf {FCC},N,s}[f]-I_\omega [f]\sim O\left( \omega ^{-s-1}\right) \) from (2.2), while for \(\omega \rightarrow 0\) we have Birkhoff–Hermite quadrature with Clenshaw–Curtis nodes. It is convenient to represent

The next conceptual step is to calculate the coefficients \(p_m\) quickly using DCT-I.

We need first to compute \(\mathbf {T}_m^{(k)}(\pm 1)\) for relevant values of k and m. To this end we recall from DLMF (http://dlmf.nist.gov) that

Iterating the last expression, we have

in particular

Consequently,

We deduce that

where

Therefore

Over to (2.1). Incorporating (2.3) and the definition of Chebyshev polynomials, we obtain the linear system

Let

The bottom line in (2.4) is equivalent to

This is DCT-I, \(\mathcal {C}_N\hat{\mathbf {p}}=\mathbf {h}\), and its inverse is \(\mathcal {C}_N^{-1}=(2/N)\mathcal {C}_N\). We deduce that

Consequently, once the coefficients \(p_m\), \(m=N+1,\ldots ,N+2s-2\), are known, the coefficients \(p_m\), \(m=0,\ldots ,N\), can be calculated in \(O(N\log N)\) operations with DCT-I. Specifically, we first need to solve a linear system for the \(2s-2\) unknowns \(p_{N+1},\ldots ,p_{N+2s-2}\), and subsequently recover \(p_0,\ldots ,p_N\) as above.
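The statement \(\mathcal {C}_N^{-1}=(2/N)\mathcal {C}_N\) can be checked directly. The sketch below assumes one common convention for the DCT-I matrix, with entries \(\cos (jm\pi /N)\) and the first and last columns halved; the precise normalisation used in this section may differ, so this is a plausibility check under that stated assumption.

```python
import math


def dct1_matrix(N):
    """(N+1) x (N+1) matrix with entries w_m * cos(j*m*pi/N), where w_0 = w_N = 1/2.

    Assumed convention: the halved end columns encode a double-primed sum.
    """
    return [[(0.5 if m in (0, N) else 1.0) * math.cos(j * m * math.pi / N)
             for m in range(N + 1)]
            for j in range(N + 1)]


def matmul(A, B):
    """Plain dense product of two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def involution_defect(N):
    """Largest entry of (2/N) * C_N * C_N - I in absolute value."""
    C = dct1_matrix(N)
    C2 = matmul(C, C)
    return max(abs((2.0 / N) * C2[i][j] - (1.0 if i == j else 0.0))
               for i in range(N + 1) for j in range(N + 1))


for N in (1, 2, 8, 16):
    print(N, involution_defect(N))
```

Because the transform is its own inverse up to the factor \(2/N\), the same fast routine serves both to form nodal values from coefficients and to recover coefficients from nodal values.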

3 The construction of FCC\(+\)

In this section, we complete the construction of FCC\(+\) for a general \(s\ge 2\) by identifying explicitly the linear system for \(p_{N+1},\ldots ,p_{N+2s-2}\). We commence with the simplest case, \(s=2\), subsequently generalising to all \(s>2\).
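The entries of such a linear system involve the endpoint derivatives \(\mathbf {T}_m^{(k)}(\pm 1)\). A standard closed form, stated here as a known identity rather than as the exact expression derived in Sect. 2, is \(\mathbf {T}_m^{(k)}(1)=\prod _{j=0}^{k-1}(m^2-j^2)/(2j+1)\) together with \(\mathbf {T}_m^{(k)}(-1)=(-1)^{m+k}\mathbf {T}_m^{(k)}(1)\). The sketch below evaluates it in exact rational arithmetic and compares it with direct differentiation of the monomial form; all function names are illustrative.

```python
import math
from fractions import Fraction


def cheb_deriv_at_one(m, k):
    """k-th derivative of T_m at x = 1: prod_{j<k} (m^2 - j^2) / (2j + 1)."""
    value = Fraction(1)
    for j in range(k):
        value *= Fraction(m * m - j * j, 2 * j + 1)
    return value


def cheb_deriv_at_minus_one(m, k):
    """k-th derivative of T_m at x = -1, using the parity of T_m."""
    return (-1) ** (m + k) * cheb_deriv_at_one(m, k)


def cheb_power_coeffs(m):
    """Monomial coefficients of T_m from T_{n+1} = 2x T_n - T_{n-1}."""
    prev, cur = [1], [0, 1]
    if m == 0:
        return prev
    for _ in range(m - 1):
        nxt = [0] + [2 * c for c in cur]
        for i, c in enumerate(prev):
            nxt[i] -= c
        prev, cur = cur, nxt
    return cur


def deriv_by_monomials(m, k, x):
    """Differentiate the monomial form of T_m k times and evaluate at integer x."""
    return sum(c * math.perm(n, k) * x ** (n - k)
               for n, c in enumerate(cheb_power_coeffs(m)) if n >= k)


print([int(cheb_deriv_at_one(3, k)) for k in range(5)])  # [1, 9, 24, 24, 0]
```

The values grow like \(m^{2k}\), which is one reason the conditioning of the endpoint systems deserves attention as s and N increase.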

3.1 \(s = 2\)

We have

and it follows from (2.6) that

Since

for \(M=0,1,\ldots ,N-2\) and \(M=N\) derived from the formula (1.3.43-1) of page 37 in [7], we have

while for \(m=N-1\) the sum is N / 2.

In exactly the same fashion

and we deduce that the sum equals zero for \(m\ne N-2\) and N / 2 for \(m=N-2\).

Let

be the coefficients that feature in the original FCC. We thus deduce that

except for \(m=N-2\) and \(m=N-1\), exactly like in standard FCC!
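One way to see why only the indices \(N-1\) and \(N-2\) are affected when \(s=2\) is aliasing: at the Clenshaw–Curtis points \(c_j=\cos (j\pi /N)\) we have \(\mathbf {T}_{N+k}(c_j)=\mathbf {T}_{N-k}(c_j)\), so the two extra terms \(p_{N+1}\mathbf {T}_{N+1}\) and \(p_{N+2}\mathbf {T}_{N+2}\) are indistinguishable on the nodes from multiples of \(\mathbf {T}_{N-1}\) and \(\mathbf {T}_{N-2}\). The check below is an illustrative sketch of this identity, with hypothetical helper names.

```python
import math


def cheb(m, x):
    """T_m(x) = cos(m * arccos x) for x in [-1, 1]."""
    return math.cos(m * math.acos(x))


def alias_gap(N, k):
    """Largest |T_{N+k}(c_j) - T_{N-k}(c_j)| over the nodes c_j = cos(j*pi/N)."""
    nodes = [math.cos(j * math.pi / N) for j in range(N + 1)]
    return max(abs(cheb(N + k, c) - cheb(N - k, c)) for c in nodes)


N = 8
for k in (1, 2):
    print(f"k={k}: max difference on the nodes = {alias_gap(N, k):.2e}")

# Away from the nodes the two polynomials are genuinely different.
print(abs(cheb(N + 1, 0.3) - cheb(N - 1, 0.3)))
```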

We have the two remaining equations from (2.4), namely

Therefore

All this results in two linear equations, whose solution is

for the unknowns \(p_{N+1}\) and \(p_{N+2}\).
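The \(s=2\) construction can be sketched end to end. The code below is an independent derivation, not a transcription of the formulas of this section: it assumes p is written as a plain Chebyshev sum, uses \(\mathbf {T}_m'(1)=m^2\) and \(\mathbf {T}_m'(-1)=(-1)^{m+1}m^2\), and relies on the fact that \(\mathbf {T}_{N+k}-\mathbf {T}_{N-k}\) vanishes at every Clenshaw–Curtis point. The names `fcc_plus_s2`, `a` and `b` are illustrative, and \(N\ge 2\) is assumed.

```python
import math


def cheb(m, x):
    """T_m(x) = cos(m * arccos x), with x clamped to [-1, 1]."""
    return math.cos(m * math.acos(max(-1.0, min(1.0, x))))


def fcc_plus_s2(f, df, N):
    """Coefficients c_0..c_{N+2} of p = sum_m c_m T_m (plain sum) such that
    p = f at cos(j*pi/N), j = 0..N, and p' = f' at x = -1 and x = 1. Needs N >= 2."""
    fx = [f(math.cos(j * math.pi / N)) for j in range(N + 1)]
    # Step 1: standard FCC coefficients via a naive DCT-I (end terms halved).
    c = []
    for m in range(N + 1):
        s = sum((0.5 if j in (0, N) else 1.0) * fx[j] * math.cos(j * m * math.pi / N)
                for j in range(N + 1))
        c.append((2.0 / N) * s * (0.5 if m in (0, N) else 1.0))
    # Residual endpoint slopes of the FCC interpolant.
    r_plus = df(1.0) - sum(cm * m * m for m, cm in enumerate(c))
    r_minus = (-1) ** N * (
        df(-1.0) - sum(cm * (-1) ** (m + 1) * m * m for m, cm in enumerate(c)))
    # Step 2: the 2x2 system 4N a + 8N b = r_plus, 4N a - 8N b = r_minus,
    # decoupled by adding and subtracting the two equations.
    a = (r_plus + r_minus) / (8.0 * N)
    b = (r_plus - r_minus) / (16.0 * N)
    # Step 3: add a (T_{N+1} - T_{N-1}) + b (T_{N+2} - T_{N-2}); both differences
    # vanish at the nodes, so the interpolation conditions are untouched.
    c[N - 1] -= a
    c[N - 2] -= b
    return c + [a, b]


def evaluate(c, x):
    """Evaluate sum_m c_m T_m(x)."""
    return sum(cm * cheb(m, x) for m, cm in enumerate(c))


coeffs = fcc_plus_s2(math.exp, math.exp, 8)
print(len(coeffs), coeffs[-2], coeffs[-1])
```

Only two coefficients of the standard FCC expansion change, which is why the cost beyond the cosine transform is negligible for \(s=2\).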

3.2 A general \(s\ge 3\)

Given a general \(s \ge 3\), we have

Subject to the definition (3.1), for \(m=0,\ldots ,N\), the formula (2.5) can be written as

Since

we obtain

Over to the remaining conditions in (2.4). Substituting (3.2), we have

for \(i=1,\ldots ,s-1\), which can be written into matrix form

where

for \(i = 1, 2, \ldots , s-1\). This also can be separated into two smaller systems adding and subtracting the equations,

and

Solving the equations directly, we can compute for \(s=3\)

For \(s\ge 4\) it probably makes more sense to solve the linear systems numerically than to write down a closed-form solution as above.

3.3 The general algorithm for FCC\(+\)

To construct the general algorithm for the computation of FCC\(+\) coefficients,

where

a concrete process is presented as follows:

Step 1 Calculate the coefficients \(\check{p}_m\), \(m = 0, 1, \ldots , N\), defined in (3.1), which can be represented in matrix form

where

We observe that the computation of (3.7) is nothing else but the discrete cosine transform appearing in the FCC whose cost is \(O\left( N \log (N)\right) \) in [5].

Step 2 Compute the coefficients \(p_m\), for \(m = N+1, N+2, \ldots , N+2s-2\), by solving the system comprising (3.3) and (3.4). The computational cost for a general system of linear equations is at most \(O\left( s^3\right) \).

Step 3 Based on the coefficients \(\check{p}_j\), \(j = 0, 1, \ldots , N\), from Step 1 and \(p_m\), \(m = N+1, N+2, \ldots , N+2s-2\), from Step 2, derive the coefficients \(\hat{\mathbf {p}} = (\hat{p}_0, \hat{p}_1, \ldots , \hat{p}_{N})^\top \) using the relations (3.2). Only \(2s-2\) subtractions occur, and their cost is negligible. The matrix form for the transformation is

Step 4 Now, using \(\hat{\mathbf {p}} = (\hat{p}_0, \hat{p}_1, \ldots , \hat{p}_{N})^\top \) from Step 3, the coefficients \(p_{m}\), \(m = 0, 1, \ldots , N\), can be represented by (2.5). This completes our derivation of the coefficients \(p_m\), \(m = 0, 1, \ldots , N+2s-2\). The corresponding matrix form for (2.5) is

Step 5 Calculate the coefficients \(\mu _m\) from (3.6), which have been studied in Section 3 of [5], inclusive of its stability. Finally, to calculate the approximate value of (3.5), \(O\left( N+2s-1\right) \) multiplications are required to form the sum.

Altogether, the computational cost is \(O\left( N \log (N)\right) + O\left( s^3\right) \).

Insofar as stability of this algorithm is concerned, the stability of Step 1 has been analysed in [5]. In the sequel we are concerned with the conditioning of the system of linear equations in Step 2. There are two linear systems of dimension \(s-1\) to be solved. The condition numbers of the two coefficient matrices determine the stability of the numerical solution of (3.3) and (3.4); they are displayed in Tables 1 and 2, respectively, for \(s \ge 3\) and \(N = 2^j\), \(j = 2, 3, \ldots , 6\). It transpires from the two tables that the condition number grows with increasing s and N. Therefore, for large N, raising the asymptotic order by increasing s might lead to an unacceptable accumulation of error in the numerical solution of the system. Thus, a suitable algorithm, e.g. singular value decomposition, should be applied to approximate \(p_{N+m}\), \(m = 1, 2, \ldots , 2s-2\).

3.4 A numerical example

We have demonstrated that FCC\(+\) can attain any asymptotic order \(s \ge 1\) at a cost similar to standard FCC: a single DCT-I computation and the solution of a \((2s-2)\times (2s-2)\) linear system. (Since s is likely to be small, the extra expense of solving a linear system is marginal.) To illustrate the gain in accuracy, we revisit the problem (1.3). Figure 4 displays the magnitude of the error, \(\log _{10}\left| \mathcal {Q}_{\omega }^{\mathbf {FCC},8, s}[f] - I_\omega [f]\right| \), for \(N=8\) and \(s=1\) (i.e., plain FCC, solid line), 2 (dotted line), 3 (dashed line) and 4 (dash-dotted line), from top to bottom. We observe that for \(\omega \gg 1\) the curves have the same slope as plain Filon methods in Fig. 1 (right), but they lie much lower: the error is considerably smaller! Moreover, for small \(\omega \ge 0\) the performance is starkly better than that of plain Filon and improves marginally with greater \(s\ge 1\).

Fig. 4 The error (in logarithmic scale) of \(\mathcal {Q}_{\omega }^{\mathbf {FCC},8, 1}[f]\) (solid line, blue), \(\mathcal {Q}_{\omega }^{\mathbf {FCC},8, 2}[f]\) (dotted line, orange), \(\mathcal {Q}_{\omega }^{\mathbf {FCC},8, 3}[f]\) (dashed line, brown) and \(\mathcal {Q}_{\omega }^{\mathbf {FCC},8, 4}[f]\) (dash-dotted line, purple), in this order from top to bottom (colour figure online)

4 Conclusions

Several effective algorithms to compute highly oscillatory integrals have emerged in the last two decades. Among these methods, an extended Filon method enjoys the advantage of simplicity and flexibility: once we can compute the moments \(\int _{-1}^1 x^k \mathrm {e}^{\mathrm {i}\omega g(x)}\mathrm {d}x\), \(k\in \mathbb {Z}_+\), we can construct an extended Filon method with great ease.

Choosing an extended Filon method, we need to make three choices: how many derivatives to compute at critical points, how many extra interpolation points to add and how to choose these interpolation points. We are guided by three goals: good performance for large \(\omega \), good performance for small \(\omega \ge 0\) (and hence good uniform performance) and simplicity of the underlying expressions and ease of their computation. The plain Filon method exhibits excellent behaviour for \(\omega \gg 1\), while FCC is superior for small \(\omega \ge 0\), can be derived cheaply and has a pleasingly simple form. In this paper we have introduced an approach that shares the advantages of both.

We have focused on the case \(g'(x)\ne 0\), \(x\in [-1,1]\), but, like FCC in [4], our approach can be easily generalised to the presence of stationary points, where \(g'\) vanishes.

References

1. Asheim, A., Huybrechs, D.: Complex Gaussian quadrature for oscillatory integral transforms. IMA J. Numer. Anal. 33(4), 1322–1341 (2013)

2. Deaño, A., Huybrechs, D., Iserles, A.: The kissing polynomials and their Hankel determinants. Tech. rep., DAMTP, University of Cambridge (2015)

3. Domínguez, V.: Filon–Clenshaw–Curtis rules for a class of highly-oscillatory integrals with logarithmic singularities. J. Comput. Appl. Math. 261, 299–319 (2014)

4. Domínguez, V., Graham, I.G., Kim, T.: Filon–Clenshaw–Curtis rules for highly oscillatory integrals with algebraic singularities and stationary points. SIAM J. Numer. Anal. 51(3), 1542–1566 (2013)

5. Domínguez, V., Graham, I.G., Smyshlyaev, V.P.: Stability and error estimates for Filon–Clenshaw–Curtis rules for highly oscillatory integrals. IMA J. Numer. Anal. 31(4), 1253–1280 (2011)

6. Gao, J., Iserles, A.: Error analysis of the extended Filon-type method for highly oscillatory integrals. To appear in Research in the Mathematical Sciences (2017)

7. Gradshteyn, I.S., Ryzhik, I.M.: Table of Integrals, Series, and Products, 7th edn. Elsevier, Amsterdam (2007). Translated from the Russian; translation edited and with a preface by Alan Jeffrey and Daniel Zwillinger

8. Huybrechs, D., Vandewalle, S.: On the evaluation of highly oscillatory integrals by analytic continuation. SIAM J. Numer. Anal. 44(3), 1026–1048 (2006)

9. Iserles, A.: On the numerical quadrature of highly-oscillating integrals. I. Fourier transforms. IMA J. Numer. Anal. 24(3), 365–391 (2004)

10. Iserles, A., Nørsett, S.P.: On quadrature methods for highly oscillatory integrals and their implementation. BIT 44(4), 755–772 (2004)

11. Iserles, A., Nørsett, S.P.: Efficient quadrature of highly oscillatory integrals using derivatives. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 461(2057), 1383–1399 (2005)

12. Levin, D.: Fast integration of rapidly oscillatory functions. J. Comput. Appl. Math. 67(1), 95–101 (1996)

13. Olver, S.: Moment-free numerical integration of highly oscillatory functions. IMA J. Numer. Anal. 26(2), 213–227 (2006)

14. Piessens, R.: Computing integral transforms and solving integral equations using Chebyshev polynomial approximations. J. Comput. Appl. Math. 121(1-2), 113–124 (2000). Numerical analysis in the 20th century, Vol. I, Approximation theory

15. Piessens, R., de Doncker-Kapenga, E., Überhuber, C.W., Kahaner, D.K.: QUADPACK, Springer Series in Computational Mathematics, vol. 1. Springer, Berlin (1983). A subroutine package for automatic integration

16. Piessens, R., Poleunis, F.: A numerical method for the integration of oscillatory functions. BIT 11(3), 317–327 (1971)

17. Sloan, I.H., Smith, W.E.: Product integration with the Clenshaw–Curtis points: implementation and error estimates. Numer. Math. 34(4), 387–401 (1980)

Additional information

Communicated by Tom Lyche.

The work is supported by the Projects of International Cooperation and Exchanges NSFC-RS (Grant No. 11511130052), the Key Science and Technology Program of Shaanxi Province of China (Grant No. 2016GY-080) and the Fundamental Research Funds for the Central Universities.

Cite this article

Gao, J., Iserles, A. A generalization of Filon–Clenshaw–Curtis quadrature for highly oscillatory integrals. Bit Numer Math 57, 943–961 (2017). https://doi.org/10.1007/s10543-017-0682-9

DOI: https://doi.org/10.1007/s10543-017-0682-9

Keywords

- Filon–Clenshaw–Curtis quadrature (FCC)

- Highly oscillatory integrals

- Asymptotic order

- Clenshaw–Curtis points

- Discrete cosine transformation (DCT)