Abstract

We introduce two drift-diagonally-implicit and derivative-free integrators for stiff systems of Itô stochastic differential equations with general non-commutative noise which have weak order 2 and deterministic order 2, 3, respectively. The methods are shown to be mean-square A-stable for the usual complex scalar linear test problem with multiplicative noise and improve significantly the stability properties of the drift-diagonally-implicit methods previously introduced (Debrabant and Rößler, Appl. Numer. Math. 59(3–4):595–607, 2009).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Consider the system of Itô stochastic differential equations

where X(t) is a random variable with values in \(\mathbb{R}^{d}\), \(f:\mathbb{R}^{d} \rightarrow \mathbb{R}^{d}\) is the drift term, \(g^{r}: \mathbb{R}^{d}\rightarrow \mathbb{R}^{d},~r=1,\ldots,m\) are the diffusion terms, and W r (t), r=1,…,m are independent one-dimensional Wiener processes. The drift and diffusion functions are assumed smooth enough, Lipschitz continuous and to satisfy a growth bound in order to ensure a unique (mean-square bounded) solution of (1) [6]. Except for some very special cases, (1) cannot be solved analytically and numerical methods are needed. The accuracy of a numerical solution is usually measured in terms of strong error (the rate at which the mean of the error norm decays) and weak error (the rate at which the difference between the mean of a smooth functional of the exact and the numerical solutions decays) [14]. Besides the strong and weak error, the stability of a numerical integrator is an essential issue for many problems. In the case of numerical methods for ordinary differential equations (ODEs) this is a very well studied problem and one desirable property is the so-called A-stability, especially when dealing with stiff problems [11]. More precisely, considering the linear test problem \(dX(t)=\lambda X(t) dt,~\lambda\in\mathbb{C}\), whose solution is stable if and only if \(\lim_{t \rightarrow+\infty} X(t)=0 \iff\lambda\in S_{\text{ODE}}:= \{ \lambda\in\mathbb{C}; \Re(\lambda) <0 \}\) and applying a Runge-Kutta method to it leads to the one step difference equation X n+1=R(p)X n , where the stability function R(p) is a rational function of p=λh. The numerical method is then stable for this problem if and only if \(\lim_{n \rightarrow\infty} X_{n}=0 \iff p \in S_{num}:= \{p \in\mathbb {C}; |R(p)| <1 \}\), and the method is called A-stable if and only if \(S_{\text{ODE}} \subseteq \mathcal{S}_{num}\).

For SDEs, different measures of stability are of interest and in this paper we focus on mean-square stability [20] and mean-square A-stability [13] (the generalization of the A-stability for ODEs to SDEs). One considers the following test problem [7, 13, 20, 22]

in dimensions d=m=1, with fixed complex scalar parameters λ,μ. The exact solution of (2), given by \(X(t)=\exp((\lambda-\frac{1}{2}\mu^{2}) t+\mu W(t))\), is mean-square stable if and only if \(\lim_{t\rightarrow\infty }{\mathbb{E}} (|X(t)|^{2} )=0\) and mean-square stability can be characterized as the set of \((\lambda,\mu)\in\mathbb{C}^{2}\) such that \(\Re(\lambda)+\frac{1}{2}|\mu|^{2}<0\), that will be called \(S^{MS}_{\text{SDE}}\) [13, 20]. Another measure of stability that will be briefly mentioned in this paper is that of asymptotic stability. In particular, the solution of (2) is said to be stochastically asymptotically stable if and only if lim t→∞|X(t)|=0, with probability 1. Asymptotic stability can be characterized as the set of \((\lambda,\mu)\in\mathbb{C}^{2}\) such that \(\Re (\lambda-\frac{1}{2}\mu^{2} )<0\).

Applying a numerical method to the test SDE (2) usually yields the following one step difference equation [13]

where \(p=\lambda h, q =\mu\sqrt{h}\), and ξ n is a random variable. We can then characterize the mean-square stability domain of the method as

A characterization of the numerical asymptotic stability domain can also be derived, assuming R(p,q,ξ)≠0 with probabilityFootnote 1 1 and \({\mathbb{E}((\log|R(p,q,\xi)|)^{2}) < \infty}\), as [13, Lemma 5.1] the set of \((p,q)\in\mathbb{C}^{2}\) such that \(\mathbb{E}(\log|R(p,q,\xi)|)<0\). Finally, a numerical integrator is called

-

mean-square A-stable if \(S^{MS}_{\text{SDE}} \subseteq \mathcal{S}_{num}^{MS}\);

-

mean-square L-stable, if it is mean-square A-stable and if the limit \(\mathbb{E}(|R(p_{k},q_{k},\xi)|^{2}) \rightarrow0\) holds for all sequences \((p_{k},q_{k})\in S^{MS}_{\text{SDE}}\) with ℜ(p k )→−∞.

If we restrict \((p,q) \in \mathbb{R}^{2}\) then the domains of mean-square or asymptotic stability are called regions of stability.

Mean-square A-stable numerical methods are necessarily drift-implicit and it is shown in [13] that the stochastic θ-methods, which have strong order 1/2 and weak order 1 for general SDEs of the type (1) are mean square A-stable for θ≥1/2. In [12] it is shown that A-stable methods which have strong and weak order 1 can be built using the θ-method, with mean-square A-stability achieved for θ≥3/2 (notice that such methods might have large error constants and are usually not used in the deterministic case). We mention that a class of strong order one implicit schemes for stiff SDEs, based on the so-called Balanced method, were recently proposed in [5] with the aim of achieving large asymptotic stability regions. High order strong methods for SDEs are usually difficult to implement due to the need of computing numerically involved stochastic integrals. In contrast higher order weak methods are easier to simulate as the stochastic integrals can in this case be replaced by discrete random variables. However, constructing mean-square A-stable higher order integrators is a non trivial task. In [16] a method of ROW type [10] of weak second order is proposed for Itô SDEs that is mean-square stable under the assumption of real diffusion coefficients. Recently, a class of singly diagonally drift-implicit Runge-Kutta methods of weak second order was proposed in [8]. These methods, called SDIRK in the numerical ODE literature [11, Chap. IV.6] are of interest because they are cheaper to implement than fully drift-implicit methods (see also the discussion in Sect. 2). However, none of the weak second order diagonally implicit Runge-Kutta methods proposed in [8] are mean square A-stable. Moreover except for the variation of the θ-Milstein method for Itô SDEs that was proposed in [2], we are not aware of any other weak second order mean-square A-stable integrator.

In this paper we derive a class of singly diagonally drift-implicit integrators of weak second order (indexed by parameter γ), that we call S-SDIRK methods, for multidimensional SDEs with non-commutative noise. These methods have the same computational cost as the methods derived in [8], but much better stability properties. More precisely, for a particular choice of γ, the mean-square A-stability for general parameters \((p,q)\in\mathbb{C}^{2}\) can be proved. For another choice of γ that leads to a third order method for deterministic problems, for which the mean-square A-stability can be checked numerically. Comparison with the methods derived in [8] is discussed and numerical experiments on a nonlinear test problem with non-commutative noise corroborate the weak second order of convergence predicted by our analysis. Finally, a new stabilization procedure introduced in this paper also allows to improve the stability of the strong and weak order 1 methods introduced in [12] based on the θ-method. In particular, mean-square A-stable methods for any value θ≥1/2 are constructed.

2 Mean-square A-stable diagonally drift-implicit integrators

Instead of considering the general framework of stochastic Runge-Kutta methods [15, 19] we derive our S-SDIRK methods by stabilizing the simplest Taylor based method of weak second order, namely the Milstein-Talay method [21] following the methodology developed in [4] for explicit stabilized stochastic methods. Consider

where

and χ l ,ξ l , l=1…m are independent discrete random variables satisfying respectively

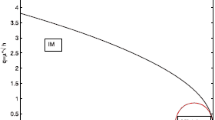

The method (5) is obtained from a second weak order Taylor method [21] replacing stochastic integrals with discrete random increments and derivatives by finite differences. Notice that each step of the above derivative-free scheme only involves five evaluations of the functions g r, r=1,…,m, independently of the dimension m of the Wiener processes, thanks to suitable finite differences involving also noise terms, as first proposed in [19]. A direct proof of the weak second order of the method (5) can easily be established and we refer to [4, Lemma 3.1] for details. However, as it can be seen in Fig. 1, this method (5) has a very restricted mean-square stability domain, which results in a stepsize restriction in the case of stiff problems.

In order to relax this restriction, one needs to stabilize the method (5). There are two alternatives ways for doing this: use stabilized explicit integrators [1, 3, 4], or use implicit integrators, and in particular diagonally drift-implicit ones, which, as mentioned above, is the focus of this paper.

Diagonally implicit Runge-Kutta methods

In the case of numerical methods for ODEs, diagonally implicit Runge-Kutta methods are integrators of the form

where s is the number of internal stages, and a ij ,b i are the coefficients of the method. The advantage of diagonally implicit Runge-Kutta methods over fully implicit Runge-Kutta methods is that one can treat one internal stage after the other (nonlinear systems of size d×d) instead of solving the full nonlinear system of size (d⋅s)×(d⋅s). An additional advantage is that the values for the internal stages already computed can be used to find a good starting value for the next implicit stage that needs to be computed [11]. Moreover, choosing identical diagonal coefficients a ii =γ permits to use at each step a single LU-factorization for all quasi-Newton iterations in all internal stages of the method. In this case the corresponding methods are called singly diagonally implicit Runge-Kutta methods (SDIRK).

New weak order two diagonally implicit A-stable integrators

We introduce the following stabilized integrator of weak order two for the integration of (1).

where \(\beta_{1}=\frac{2-5\gamma}{1-2\gamma}\), \(\beta_{2}=\frac{\gamma}{1-2\gamma}\), \(\beta_{3}=\frac{1}{2} -2\gamma\), and ξ r ,χ r ,J q,r satisfy (7) and (6) respectively. For γ=0, we have \(K_{1}^{*}=K_{2}^{*}=X_{0}\) and \(K_{3}^{*} =X_{0}+(h/2) f(X_{0})\) and we recover the explicit Milstein-Talay method (5). For the stage \({K}_{2}^{*}\), we use D −1 to stabilize \(K_{1}^{*}-X_{0}\), where D=I−γhf′(X 0). This stabilization procedure (used in numerical ODEs to stabilize the error estimator of an integrator) is well-known in ODEs and has been introduced by Shampine [11, Sect. IV.8], its use for SDEs is motivated in Remark 3 below. We emphasize that it does not represent a computational overhead as the LU-factorization of D needed to compute \(D^{-1}(K_{1}^{*}-X_{0})\) is already available from the solution of the nonlinear system for the stages (K 1,K 2) (see Remark 1).

We shall consider two choices for γ that yield mean-square A-stable integrators:

-

the S-SDIRK(2,2) method for the value \(\gamma=1-\frac{\sqrt{2}}{2}\) which gives a weak order 2 A-stable method with deterministic order 2;

-

the S-SDIRK(2,3) method for the value \(\gamma=\frac{1}{2} + \frac{\sqrt{3}}{6}\) which gives a weak order 2 A-stable method with deterministic order 3.

We notice that the value of γ for the S-DIRK(2,3) yields in the deterministic case a method of order 3 which is strongly A-stable, i.e. |R(∞)|<1, while the value of γ for the S-DIRK(2,2) yields a method of order 2 which is L-stable, i.e. it is A-stable and R(∞)=0. L-stability is desirable in the case of very stiff deterministic problems as it permits to damp the very high frequencies.

Complexity

In addition to the solution of the deterministic two stage SDIRK method (which yields the stages (K 1,K 2)) one step of the scheme (9) costs one evaluation of the drift function f, and 5 evaluations of each diffusion functions g r, and the generation of 2m random variables. The cost is similar to the diagonally implicit methods proposed in [8] (in particular the number of evaluation of the diffusion functions g r, r=1,…,m is independent of the number of Wiener processes m).

Remark 1

We emphasize that the computation of \(D^{-1}(K_{1}^{*}-X_{0}) \) in the scheme (9) does not represent any computational overhead. Indeed, as for any deterministic or stochastic diagonally implicit method [8, 11], the usual procedure for evaluating K 1,K 2 is to compute the LU-factorization of D=I−γhf′(X 0) (f′(X 0) is usually further approximated by finite differences) and make the quasi-Newton iterationsFootnote 2

where δ 2i is the Kronecker delta function. The same LU-factorization is then used to compute \(D^{-1}(K_{1}^{*}-X_{0}) \) by solving

whose cost in negligible: the cost of evaluating \(K_{2}^{*}\) together with \(K_{3}^{*}\) is the same as one iteration of (10).

Remark 2

The stabilization procedure used in the method (9) can also be used to improve the stability of the strong order one methods studied in [13] by considering the following variant of the stochastic θ-method

where \(\xi_{r} \sim\mathcal{N}(0,1)\) are independent random variables, and I q,r are the multiple stochastic integrals \(I_{q,r} = \int_{0}^{h} (\int_{0}^{s} dW_{q}(t) ) dW_{r}(s)\). Here, D=I−θhf′(X 0). In the case of commutative noise, these multiple stochastic integrals do not need to be simulated and can be simply replaced by h(ξ q ξ r −δ qr )/2, where δ qr is the Kronecker delta function. The advantage of the integrator (11) is that it can be shown to be mean-square A-stable for all θ≥1/2 (see Remark 6). In contrast, the strong order one θ-Milstein method, whose stability has been analyzed in [13], is mean-square A-stable only for θ≥3/2 and these values of θ yield large error constants.

We next show that the integrator (9) has weak second order.

Theorem 1

Consider the SDE (1) with \(f,g^{r} \in C_{P}^{6}(\mathbb{R}^{d},\mathbb{R}^{d})\), Lipschitz continuous. Then, for all fixed γ≠1/2, the integrator (9) satisfies

for all \(\phi \in C_{P}^{6}(\mathbb{R}^{d},\mathbb{R})\), where C is independent of n,h.

Proof

We base our proof on a well-known theorem by Milstein [17], which states that under our smoothness assumptions a local weak error estimate of order r+1 guarantees a weak order of convergence r. Since the derivative free Milstein-Talay method (5) is already of weak second order (see [4, Lemma 3.1] for a short direct proof), it is sufficient to show that

where \({X}_{1},\bar{X}_{1}\) are the one step numerical approximations given by (9) and (5), respectively. A Taylor expansion argument shows

and

from which we deduce

where \(\mathbb{E}(R_{1})=0\). Furthermore, we notice that the last two lines of (5) and (9) are identical, with the exception that X 0 is replaced by \(K_{2}^{*}\) and \(\bar{K}_{3}\) is replaced by \(K_{3}^{*}\). This induces a perturbation of the form \(h^{2} R_{2} + h^{5/2} R_{3} + \mathcal {O}(h^{3})\) where \(\mathbb{E}(R_{2})=\mathbb{E}(R_{3})=0\) and \(\mathbb{E}(R_{2} \xi_{r})=0\) for all r (a consequence of \(\mathbb{E}(J_{q,r}\xi_{j})=0\) for all indices q,r,j). We deduce

Using \(\bar{X}_{1}=X_{0} + \sqrt{h} \sum_{r=1}^{m} g^{r}(X_{0}) \xi_{r} + \mathcal {O}(h)\), we obtain

We deduce that the local error bound (12) holds. To conclude the proof of the global error, it remains to check that for all \(r\in\mathbb{N}\) all moments \(\mathbb{E}(|X_{n}|^{2r})\) are bounded uniformly for all 0≤nh≤T, with h small enough. These estimates follow from standard arguments [18, Lemma 2.2, p. 102] using the linear growth of f,g r, assumed globally Lipschitz. □

We now illustrate Theorem 1 numerically. In particular, we consider the following non stiff nonlinear test SDE from [8] with a non-commutative noise with 10 independent driving Wiener processes,

where the values of the constants a j ,j=1,…,10 are respectively 10, 15, 20, 25, 40, 25, 20, 15, 20, 25, and the values of b j ,j=1,…,10 are respectively 2, 4, 5, 10, 20, 2, 4, 5, 10, 20. For this problem, by applying Itô’s formula to ϕ(x)=x 2, taking expectations and using the fact that \(\mathbb{E}(X(t))=e^{t}\), one calculates \(\mathbb{E}(X^{2}(t))=({-68013-458120 e^{t}+14926133 e^{2t}})/{14400000}\). We apply the S-SDIRK methods to the problem (14) and approximate \(\mathbb{E}(X^{2}(T))\) up to the final time T=1 for different step sizes h. In Fig. 2, we plot the relative errors using 109 realisations for the new integrators S-SDIRK(2,2) (solid line) and S-SDIRK(2,3) (dashed line). For comparison, we also include the results of the derivative-free Milstein-Talay method (5) (dashed-dotted line) and the DDIRDI5 method (dashed-dotted-dotted line) from [8] (with \(c_{1}=c_{2}=1/2+\sqrt{3}/6\), c 3=c 4=1 in [8]). We note here that the same set of random numbers is used for all four integrators. We observe the expected line of slope 2 for S-SDIRK(2,2) (compare with the reference slope in dotted lines) which confirms the weak order two of the methods predicted by Theorem 1. In addition, we also observe that the methods S-SDIRK(2,3) and DDIRDI5, which have deterministic order 3, are about one magnitude more accurate than the Milstein-Talay method for steps of size h≃10−1. As the noise is small in this example, the third order deterministic accuracy can improve the convergence of S-SDIRK(2,3) or DDIRDI5. We emphasize that this behavior does not hold in general when the diffusion function is not small compared to the drift function as can be seen in Fig. 5 and its related problem.

Weak convergence plots for the nonlinear problem (14) for the Milstein-Talay method (5) (dashed-dotted line), S-SDIRK(2,2) (solid line), S-SDIRK(2,3) (dashed line), DDIRDI5 [8] (dashed-dotted-dotted line). Second moment error at final time T=1 versus the stepsize h, where 1/h=1,2,3,4,6,8,11,16,23,32. Averages over 109 samples

3 Mean-square A-stability

In this section, we study the mean-square stability of the integrators S-SDIRK(2,2) and S-SDIRK(2,3). The stability functions (3) have the form

where \(\mathbb{P}(\xi=\pm\sqrt{3})=1/6,\mathbb{P}(\xi=0)=2/3\), and

We deduce for all \(p,q\in \mathbb{C}\),

Remark 3

We observe that by removing the term involving D −1 in the S-SDIRK methods (9), the denominators of B(p) and C(p) would scale at best as (1−γp)2. The resulting methods would no longer be mean-square A-stable.

In Fig. 3 we visualize the mean-square and asymptotic stability regions for S-SDIRK(2,2) and compare them with the ones of the diagonally-implicit method DDIRDI5 introduced in [8] (\(c_{1}=c_{2}=\frac{1}{2}+\frac{\sqrt{3}}{6}\)). The dashed lines {|p|=q 2/2} indicate for p<0 and p>0 respectively the boundaries of the mean-square and asymptotic stability regions for the exact solution. As we can observe, the mean-square stability regions (dark gray) of S-SDIRK(2,2) are much bigger than the ones of DDIRDI5, and include the ones of the exact solution. This relates with the fact that DDIRDI5 and the class of weak second order methods introduced in [8] are not mean-square A-stable as shown in [4], whereas S-SDIRK(2,2) is a mean-square A-stable integrator, as proved in the next theorem. Moreover it is mean-square L-stable as shown in Remark 4.

Mean-square stability region (dark gray) and asymptotic stability region (dark and light grays) for DDIRDI5 [8] (left pictures) and S-SDIRK(2,2) (right pictures)

Theorem 2

The integrator S-SDIRK(2,2) is mean-square A-stable.

Proof

Since S(p,q) given by (16) is an increasing function of |q|2, we set for \(z\in\mathbb{C}\), \(s(z):=S(z,\sqrt{-2\Re z})\) and the method is mean-square A-stable if and only if

Using \(\gamma=1-\sqrt{2}/2\) and putting z=x+iy, a calculation yields

where

It can be checked that a i (x)<0 for all x<0 and all i=1,2,3. For a given x<0, we consider (18) as a function of y, say g(y) and we observe that g(0)=0 and g(y)>0, for all y<0, g(y)<0, for all y>0. Thus, since s(x+iy) is a smooth function of y that tends to zero for y→∞, we deduce

Finally, an elementary study of the quantity s(x) as a function of the real parameter x<0 yields s(x)≤1. This implies the bound (17) and concludes the proof. □

Remark 4

We observe that in the proof of Theorem 2 we also have lim x→−∞ s(x)=0. Hence the S-SDIRK(2,2) method is also mean-square L-stable.

Remark 5

Using (17), it can be checked numerically that the integrator S-SDIRK(2,3) is mean-square A-stable (see an illustration for real (p,q) in Fig. 4). A rigorous proof is however more tedious to derive because (19) does not hold for this integrator (notice that the scheme is not L-stable for deterministic problems).

Remark 6

For the strong order one θ-methods (11) a simple calculation gives for the stability function (16),

Similarly as in Theorem 2 the mean-square A-stability of (11) can be proved for θ≥1/2.

We now exhibit the advantage of our method over the Milstein-Talay method (5) and the weak second order drift-implicit methods considered in [8]. In particular, we consider the linear test problem (2) and compare the behaviour of the three different methods for a range of parameters λ,μ for which the solution of (2) is mean-square stable. As we can see in Table 1, even for a moderate stiff problem (−λ=μ 2=5) in contrast to the S-SDIRK methods introduced here, there is quite a big stepsize restriction in order for the numerical solution to be mean-square stable for the Milstein-Talay and the DDIRDI5 methods. Furthermore, as expected we observe that the stepsize restriction for the other two methods becomes more severe as we increase the stiffness of the problem.

We finally compare the performance of the introduced stochastic integrators on a nonlinear stiff system of SDEs with a one-dimensional noise (d=2,m=1),

which is inspired from a one-dimensional population dynamics model [9, Chap. 6.2]. Notice that if we linearise (20) around the stationary solution (X,Y)=(1,1), we recover for α=0 the linear test problem (2). We take the initial conditions X(0)=Y(0)=0.95 close to this steady state and use the parameters λ 2=−4, μ 2=1, α=1.

We take for the deterministic part of the problem the stiff parameter λ 1=−500 and we shall consider for the noise parameter μ 1 either the stiff value \(\mu_{1}=\sqrt{500}\) or the nonstiff value μ 1=1. We plot in Fig. 5 the errors for \(\mathbb {E}(X(T)^{2})\) at the final time T=1 versus stepsizes h for the integrators DDIRDI5, S-SDIRK(2,2), S-SDIRK(2,3) taking the averages over 108 samples. Reference solutions where computed using the Milstein-Talay method (5) with stepsize h=10−4. We consider the two cases of a non-stiff noise (μ 1=1) and a stiff noise (\(\mu_{1}=\sqrt{500}\)). In the non-stiff noise case (left picture), the results of S-SDIRK(2,3) are nearly identical to those of DDIRDI5 with hardly distinguishable curves, while in the stiff noise case (right picture), the results for DDIRDI5 are not included because this method is unstable for the considered stepsizes, as predicted by the linear stability analysis (see the stepsize restrictions in Table 1). It is remarkable in both cases that S-SDIRK(2,2) is more than four magnitudes more accurate than S-SDIRK(2,3) for steps with size ∼10−1, a regime for which curves with slope two can be observed. We believe that the mean-square L-stability of the S-SDIRK(2,2) method is responsible for this behavior.

Notes

Notice that if R(p,q,ξ)=0 with a non-zero probability, then (3) is clearly numerically asymptotically stable.

In the implementation, we use the initializations \(K_{1}^{0}=X_{0}\) and \(K_{2}^{0}=X_{0}+(1-\gamma) h f(K_{1})\) and we consider the stopping criteria (\(\|K_{i}^{k+1}-K_{i}^{k}\|=0\) or \(\|K_{i}^{k+1}-K_{i}^{k}\|\geq\| K_{i}^{k}-K_{i}^{k-1}\|\)) which guaranties a convergence up to machine precision for the iterations (10). Other stopping criteria, such as \(\|K_{i}^{k+1}-K_{i}^{k}\|<\mathit{Tol}\) where Tol is a prescribed tolerance could also be considered.

References

Abdulle, A., Cirilli, S.: S-ROCK: Chebyshev methods for stiff stochastic differential equations. SIAM J. Sci. Comput. 30(2), 997–1014 (2008)

Abdulle, A., Cohen, D., Vilmart, G., Zygalakis, K.C.: High order weak methods for stochastic differential equations based on modified equations. SIAM J. Sci. Comput. 34(3), 1800–1823 (2012)

Abdulle, A., Li, T.: S-ROCK methods for stiff Ito SDEs. Commun. Math. Sci. 6(4), 845–868 (2008)

Abdulle, A., Vilmart, G., Zygalakis, K.: Weak second order explicit stabilized methods for stiff stochastic differential equations. SIAM J. Sci. Comput. (2013, to appear)

Alcock, J., Burrage, K.: Stable strong order 1.0 schemes for solving stochastic ordinary differential equations. BIT Numer. Math. 52(3), 539–557 (2012)

Arnold, L.: Stochastic Differential Equations: Theory and Applications. Wiley, New York (1974)

Burrage, K., Burrage, P., Tian, T.: Numerical methods for strong solutions of stochastic differential equations: an overview. Proc. R. Soc. Lond., Ser. A, Math. Phys. Eng. Sci. 460(2041), 373–402 (2004)

Debrabant, K., Rößler, A.: Diagonally drift-implicit Runge–Kutta methods of weak order one and two for Itô SDE: s and stability analysis. Appl. Numer. Math. 59(3–4), 595–607 (2009)

Gard, T.: Introduction to Stochastic Differential Equations. Marcel Dekker, New York (1988)

Hairer, E., Nørsett, S., Wanner, G.: Solving Ordinary Differential Equations I. Nonstiff Problems. Springer Verlag Series in Comput. Math., vol. 8. Springer, Berlin (1993)

Hairer, E., Wanner, G.: Solving Ordinary Differential Equations II. Stiff and Differential-Algebraic Problems. Springer, Berlin (1996)

Higham, D.: A-stability and stochastic mean-square stability. BIT Numer. Math. 40, 404–409 (2000)

Higham, D.: Mean-square and asymptotic stability of the stochastic theta method. SIAM J. Numer. Anal. 38(3), 753–769 (2000)

Kloeden, P., Platen, E.: Numerical Solution of Stochastic Differential Equations. Springer, Berlin (1992)

Komori, Y.: Weak second-order stochastic Runge-Kutta methods for non-commutative stochastic differential equations. J. Comput. Appl. Math. 206(1), 158–173 (2007)

Komori, Y., Mitsui, T.: Stable ROW-type weak scheme for stochastic differential equations. Monte Carlo Methods Appl. 1(4), 279–300 (1995)

Milstein, G.N.: Weak approximation of solutions of systems of stochastic differential equations. Theory Probab. Appl. 30(4), 750–766 (1986)

Milstein, G.N., Tretyakov, M.V.: Stochastic Numerics for Mathematical Physics. Scientific Computing. Springer, Berlin (2004)

Rößler, A.: Second order Runge-Kutta methods for Itô stochastic differential equations. SIAM J. Numer. Anal. 47(3), 1713–1738 (2009)

Saito, Y., Mitsui, T.: Stability analysis of numerical schemes for stochastic differential equations. SIAM J. Numer. Anal. 33, 2254–2267 (1996)

Talay, D.: Efficient numerical schemes for the approximation of expectations of functionals of the solution of a SDE and applications. In: Lecture Notes in Control and Inform. Sci., vol. 61, pp. 294–313. Springer, Berlin (1984)

Tocino, A.: Mean-square stability of second-order Runge-Kutta methods for stochastic differential equations. J. Comput. Appl. Math. 175(2), 355–367 (2005)

Acknowledgement

The research of A.A. is partially supported under Swiss National Foundation Grant 200021_140692.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Anne Kværnø.

Rights and permissions

About this article

Cite this article

Abdulle, A., Vilmart, G. & Zygalakis, K.C. Mean-square A-stable diagonally drift-implicit integrators of weak second order for stiff Itô stochastic differential equations. Bit Numer Math 53, 827–840 (2013). https://doi.org/10.1007/s10543-013-0430-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10543-013-0430-8