Abstract

Feature selection plays a key role in data mining and machine learning algorithms to reduce the processing time and increase the accuracy of classification of high dimensional datasets. One of the most common feature selection methods is the wrapper method that works on the feature set to reduce the number of features while improving the accuracy of the classification. In this paper, two different wrapper feature selection approaches are proposed based on Farmland Fertility Algorithm (FFA). Two binary versions of the FFA algorithm are proposed, denoted as BFFAS and BFFAG. The first version is based on the sigmoid function. In the second version, new operators called Binary Global Memory Update (BGMU) and Binary Local Memory Update (BLMU) and a dynamic mutation (DM) operator are used for binarization. Furthermore, the new approach (BFFAG) reduces the three parameters of the base algorithm (FFA) that are dynamically adjusted to maintain exploration and efficiency. Two proposed approaches have been compared with the basic meta-heuristic algorithms used in feature selection on 18 standard datasets. The results show better performance of the proposed approaches compared with the competing methods in terms of objective function value, the average number of selected features, and the classification accuracy. Also, the experiments on the emotion analysis dataset demonstrate the satisfactory results.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Nowadays, due to the large increase in the amount of information, feature selection becomes an essential stage when using machine learning and data mining. Feature selection is an effective and fundamental step applied as the prerequisite of classification methods [1, 2]. Classification is the process of classifying data using class labels. The high-dimensional datasets require high processing time for learning and testing the model [3]. Applying feature selection to the dataset before the learning process improves the performance of the classification task [3]. Also, feature selection reduces the dimension of the dataset and maintains more appropriate features so that the speed of operation on the data set is high and the data set is understandable [4]. The various benefits of feature selection are summarized as follows:

-

Feature selection reduces the training time and improves the performance of the classification methods.

-

It provides a better visualization and understanding of the data in the dataset and also enhances users’ ability to use the data.

-

Feature selection reduces computational complexity effectively. On the other hand, it makes it easier to work on larger datasets.

Feature selection algorithms are generally divided into two main categories: wrapper and filter approaches [5,6,7]. The filtering approach is based on the inherent features of the data, not on a specific grouping. The nature of filters is to search for dependent features and remove non-dependent ones. The wrapper methods use a learning algorithm to analyze different feature subsets. Appropriateness of the selected subset is determined by a classification algorithm in these methods. According to the wrapper-based methods, the objective of feature selection is to minimize the number of selected features while maintaining the maximum classification accuracy, which can be considered as an optimization problem. This is where choosing an appropriate optimization algorithm to solve the feature selection problem becomes a challenge. On the other hand, due to the high dimensionality of the search space, selecting feature subsets using traditional optimization methods is not efficient [8]. Unlike traditional methods, meta-heuristic algorithms have shown superior performance in solving various optimization problems and can be useful for the feature selection problem [2, 9,10,11,12].

Because of the stochastic nature as well as the balance between the two important principles of exploration and efficiency, meta-heuristic algorithms are one of the important methods in solving optimization problems including feature selection. Until now, various meta-heuristic algorithms have been proposed which most of them have been inspired by natural phenomena and behavior of organisms [13,14,15]. Although meta-heuristic algorithms provide acceptable solutions in a reasonable time, they do not guarantee optimal solutions [16]. A new meta-heuristic algorithm called Farmland Fertility Algorithm (FFA) has been proposed in 2018 [15]. The algorithm is inspired by the phenomenon of farmland divisions and how each part of the farmland is improved. The simulation and different testing results of this algorithm show that it outperforms other meta-heuristic algorithms in solving optimization problems. Given the novelty and superior results of this algorithm in solving optimization problems, we are encouraged to present two different binary versions of this algorithm in this paper. Then, the proposed algorithms are used for feature selection. As an empirical example, we use the proposed algorithms in analyzing text psychology.

There are two approaches for emotion analysis, namely Lexicon-based and machine learning approaches [17]. In the Lexicon-based approach, points are given according to a dictionary that contains all the positive and negative words. This method is very simple in that it considers words such as “good”, “excellent”, “bad”, “ugly”, etc. that have a positive or negative connotation in the text and collects their points. The final result of the sentence score is obtained. If the sum of positive points is more, the sentence is considered positive and if the sum of negative points is more, the sentence is considered negative. Despite its simplicity, this method is rarely used due to the complexity of the linguistic structure. Because a sentence can have a lot of negative words provide a positive meaning.

Machine learning approaches are divided into two main categories: Unsupervised and supervised learning [7]. Unsupervised methods (e.g. clustering) are used to infer patterns from a dataset in which only the value of inputs is known and no information about the correct output is available. In contrast, supervised methods (e.g. classification) are the techniques that learn a model from a dataset consisting of input data and the correct output. Supervised learning includes various algorithms such as neural networks, decision trees, vector machines, etc. The supervised algorithms consist of two stages, namely the training stage and the evaluation stage. In the training phase, the model is constructed using the training dataset. The shape of the built model depends on the type of learning algorithm. In the evaluation phase, the experimental data set is used to validate and calculate the accuracy of the constructed model. The test dataset is not used by the algorithm in the model training phase.

The rest of the paper is organized as follows: Section (2) surveys related works and binary versions of various meta-initiative algorithms. In Section (3), the theory and formulation of the FFA are described. In Section (4), the details of two proposed approaches are presented. In Section (5), the performances of the proposed approaches are assessed and the results are presented in graphs and tables. The final section of the paper includes the conclusion and future works.

2 Related works

In this section, we will review state-of-the-art methods and binary versions of a variety of meta-heuristic algorithms in solving the feature selection problem. The details of the review are given in Table 1. In this table, the binary version of the meta-heuristic algorithms and the results of them are described and compared with the other algorithms. Also a column of the table is considered for the comparative algorithms.

As can be seen from Table 1, some researchers have used the original version of each meta-heuristic algorithm to provide the binary versions [1, 2, 5, 21, 24, 25, 29,30,31, 36, 40]. In these versions, it is attempted to modify the processes of each meta-heuristic algorithm to generate only 0 and 1 for feature selection. In some approaches, researchers have tried to first improve the meta-heuristic algorithms and then provide the binary version of it for feature selection [11, 18,19,20, 22, 26, 38, 39]. These versions try to use processes such as Ashup to improve and deliver the binary version of the meta-heuristic algorithms. In some binary versions, a combination of two meta-heuristic algorithms has been used to provide the binary versions [27, 32, 37, 38, 41]. The purpose of combining the two meta-heuristic algorithms is to be able to use the processes of both algorithms to solve the feature selection problem. Finally, some researchers have used two functions namely S-shaped and V-shaped, to provide the binary versions of the meta-heuristic algorithm [1, 5, 6, 9, 19, 21, 24, 25, 30, 35, 39]. Both the S-shaped and the V-shaped functions have been designed so that they can convert continuous solutions of the meta-heuristic algorithms into the binary solutions for feature selection.

Researchers have investigated some feature selection methods. The disadvantages of the methods are uninformative features, high-dimensional space, term weighting scheme, trapped in the local search, premature convergence, a balance between local and global search, and more [7].

Considerable effort is needed to improve the complexity of optimization algorithms for the feature selection problem. Choosing an optimization algorithm for feature selection is a time-consuming process. Most researchers have focused on supervised methods to eliminate uninformative features. Other researchers have used metaheuristic algorithms and unsupervised feature selection techniques to reduce the dimension. Smaller dimensions lead to better performance of the method. Researchers do not use all of the features, but rather weigh in on the feature selection application and use the high-weight features. Researchers use algorithms with these properties to speed up convergence, eliminate premature convergence, avoid local collapse, and balance local and global search [7]. But most of them could not solve all the problems. The FFA has been investigated in terms of different application areas and solving optimization problems. In this paper, we improve it and use it for feature selection.

3 Farmland fertility algorithm

The FFA is a new meta-heuristic algorithm inspired by farmland fertility in nature and was introduced in 2018 [15]. This algorithm attempts to optimize the solutions in each segment by dividing farmland into the segments and using both local and global memory. This algorithm assumes that an environment of farmland is divided into different segments based on soil quality. Each segment of farmland has some soil with a certain quality and the soil quality of each segment is different. An example of segmented farmland, local and global memories are shown in Fig. 1.

A segmented example of a farmland and local and global memories[15]

In the FFA, the worst-performing segment of the farmland is combined with existing solutions in the global memory. Also the solutions found in other segments of the farmland are combined with all the available solutions in the search space. After each segment of the farmland changed its solution with the global memory and random solutions in the search space, farmers decide to combine each soil in each segment of the farmland based on the best available solution in their local memory. Of course, the condition for combining with the best solution in the local memory is that not all the solutions are combined with their local memories, and at this stage, some solutions are combined with the best global solution to improve the quality of the existing solutions. Following the general theory of the FFA, Fig. 2 illustrates the flowchart of this algorithm and then the formulation and steps of this algorithm are shown according to [15].

FFA [15]

3.1 Initial population production and parameter adjustments

At this stage, the initial population is randomly generated and the initial parameters are also adjusted. Of course, unlike other algorithms, this algorithm divides solutions. The initial population number is determined by Eq. (1):

In Eq. (1), N denotes the total number of solutions available in the search space, k shows the number of segments of the land or space, and n indicates the number of solutions available in each segment of the farmland. The standard number of segments of the farmland can be determined based on the optimization problem. As a result, the entire search space is divided into k segments, each segment having a certain number of solutions. Also, n is an integer variable. At this stage, the solutions available throughout the search space are also evaluated according to the objective function. In [15], a separate segment is specify to determine the value of k, which determines the optimum value of k as 2 ≤ k ≤ 8. The value expressed for k can be changed according to the optimization problem.

3.2 The quality determination of each segment

In this algorithm, the quality of each segment of farmland is obtained by averaging the solutions available in each segment of farmland. Initially, the solutions are assigned to different segments by Eq. (2).

Equation (2) simply separates the solutions of each segment to calculate the average of each. In this equation, x shows all the solutions in the search space, s = {1, 2, …. k} represents the segment number, and j = {1, 2, …. D} shows the dimension of the variable x. According to the value of s, the index of each solution is stored as a member of the variable aj. Then, the quality of each segment is determined by Eq. (3).

In Eq. (3), Fit _ Sections determines the quality of the solutions in each segment of the farmland, which in the search space is the average fitness or competence of all the solutions available in each segment. Therefore, for each segment of farmland, the average of the total solutions within that segment is obtained and stored in Fit _ Sections. Here, Fit represents the objective function or level of competence, and xji represents all solutions available in Sections. Also, i = {1.2. …. n} shows the dimension of the variable x.

3.3 Updating the local and global memories

At this point, the local and global memories of each segment will be updated. The local memory stores some of the best solutions of each segment and the global memory stores the best solutions of all segments, which are determined by the number of the best solutions in local and global memories via Eqs. (4) and (5).

In Eqs. (4) and (5), MGlobal and Mlocal show the number of solutions in the global and local memories, respectively. The solutions fall into these memories based on their fitness and competence, and at this point, both memories are updated. Also, t is a vector with random values of [0.1, 1]. For example, if the value of t is 0.1, the number of solutions will be one-tenth of the total population. N represents the total number of solutions available in the search space and n represents the number of solutions available in each segment of farmland. The round function is used to round a number of its Count has a decimal point.

3.4 Changing the soil quality in each segment

At this point, the worst-performing segment is most likely to change. The matter about the segment with the worst quality in the farmland is that all the solutions in the worst segment of the farmland are combined with one of the solutions in global memory according to Eqs. (6) and (7).

In Eq. (6), α is a number between −1 and 1 that is initialized at the beginning of the algorithm, and rand[−1, 1] is assumed to generate random numbers between −1 and 1. In Eq. (7), XMGlobal is a random solution of the global memory solutions, Xij is the solution selected from the worst segment of the farmland for the modifications, and h is a decimal number that can be calculated by Eq. (6). The solutions in the other segments are changed according to Eqs. (8) and (9).

In Eq. (8), β is a number between −1 and 1 that is initialized at the beginning of the algorithm, and rand[0, 1] is assumed to generate random numbers between 0 and 1. In Eq. (9), Xuj is a random solution from the solutions available in the whole search space (a random solution is chosen from all the solutions found in the segments), Xij is the selected solution from the segments (except the worst segment) for the modifications, and h is a decimal number that can be calculated via Eq. (9).

3.5 Updating solutions based on local and global memories

In this step, the farmers decide to combine each soil within the farmland segments based on the best available cases in their local memory (BestLocal) at the last step. Of course, the condition for combining with the best in the local memory is that not all solutions in all segments are combined with their local memories, and at this point, some of the solutions available in all the segments will be combined with the best solution found so far (BestGlobal) to improve the quality of the available solutions. The combination of the desired solution with BestLocal or BestGlobal is determined by Eq. (10).

In Eq. (10), the new solution might be created in two ways. In this equation, Q is a parameter between 0 and 1 that must be specified at the beginning of the algorithm. This parameter determines the extent of the solution’s combination with BestGlobal. The rand function generates a random number between 0 and 1, and b represents an integer to select one of the solutions available in global or local memory. Also, ω1 is an integer that must be specified at the beginning of the algorithm and then its value will be gradually reduced by the algorithm (Eq. 11).

In Eq. (11), Rv is a random number between zero and one.

4 The proposed approach

The FFA in continuous form was fully described in Section (3). In this section, we will present two different versions of the algorithm to solve the feature selection problem according to the basic FFA. In Section (4.1), we present a simple binary version of FFA based on the sigmoid function. The purpose of this approach is to provide a simple and more understandable binary version with the least changes. In Section (4.2), a binary version based on the crossover and mutation operators of the genetic algorithm (GA) will be presented. The purpose of this approach is to produce an advanced version of FFA by changing its processes with processes similar to the GA. It will help us to provide a more comprehensive version of FFA for feature selection. Finally, in Section (4.3), a multi-objective function is introduced to reduce the features and increase the classification accuracy.

4.1 BFFA based on the sigmoid function (BFFAS: S-shaped)

In this subsection, we introduce a new binary version of the FFA based on the sigmoid function. As stated in Section (3), the process of FFA moves in a continuous space and therefore all solutions in the population of this algorithm are continuous numbers. Given that selecting a feature or not is a matter, the new binary solution must contain the numbers 0 and 1, where 1 represents the selection of a feature for the new dataset, and 0 indicates not choosing a feature. To this end, we used the sigmoid or S-shaped function [34, 35] to move the processes of the FFA in a binary space. Therefore, in this proposed model, the sigmoid function is used to change the continuous positioning of the solutions into a binary-state in the FFA using Eq. (12):

In Eq. (12), \( {FFA}_i^d(t) \)shows the continuous value of the ith solution in the population of the FFA in the dth dimension of the iteration t. The sigmoid function is presented in Fig. 3. Here in the following, we will analyze the output of this function and how to incorporate it into the FFA.

According to Fig. 3, the output of the sigmoid transfer function is still in a continuous state between 0 and 1, and therefore, to convert it into a binary value, a threshold has to be set. A random threshold is presented in Eq. (13) to convert the solution into the binary value for feature selection using the sigmoid function:

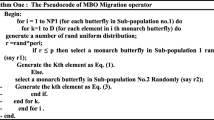

In Eq. (13), \( {FFA}_i^d \) shows the position of the ith solution in the population of the FFA in the dth dimension of the iteration t. Also, rand is a function that produces numbers between 0 and 1 in a uniform distribution. Thus the solutions available in the population of the FFA are forced to move in a binary search space using Eqs. (12) and (13). Then, these relationships are embedded more precisely into the FFA. To better understand, the proposed method is described as pseudocode, illustrated in Algorithm 1.

This algorithm starts by adjusting the parameters (line 01) and then determining the farmland segments (line 02). In line (03), unlike the continuous FFA, the population is randomly created from 0 and 1. Lines 04–09 show the main stages of the continuous FFA including segmentation, quality determination of each segment, updating local and global memories, and soil quality changes in each segment. Lines 09–12 include the new step to convert the solutions produced in the previous steps to binary by the sigmoid transfer function based on Eqs. (12) and (13). At this point, all the continuous solutions are converted into binary solutions. Lines 13–20 perform the stage of evaluating new solutions and updating them based on local and global memories to generate new solutions. Lines 21–23 show the new step to convert the new solutions produced in the previous steps to binary by the sigmoid transfer function based on the Eqs. (12) and (13). At this point, all the continuous solutions are converted into binary solutions. Finally, lines 24–26 evaluate the new binary solutions. If the algorithm has reached its end condition, it will show the best solution. Thus, in this approach, we developed a new binary method using the sigmoid transfer function by placing lines 13–20 and 21–23 in two separate parts of the FFA. The reason of using the sigmoid transfer function in two parts is that the FFA evaluates and modifies the solutions in two stages.

4.2 BFFA based on GA (BFFAG: GA-Base)

In this subsection, BFFA based on the GA process called BFFAG is presented. This algorithm uses new operators called BGMU and BLMU and also a dynamic mutation operator. The BGMU operator is utilized to update the binary solutions based on the global memory and the entire search space of the FFA algorithm and the BLMU operator is used to update the binary solutions based on the local and global memories of the FFA algorithm. These operators increase the efficiency and exploration of the FFA algorithm. Furthermore, in the final part of the FFA algorithm, the dynamic mutation operator is used to increase the exploration of this algorithm. The BFFAG approach also defines genetic and novel operators to maintain a balance between exploration and efficiency. The genetic algorithm involves mutation and crossover operators that are designed and implemented in BGMU and BLMU operators to generate binary solutions. As shown in Section (3), the steps of the FFA are done in continuous form. But to use this algorithm in feature selection, one must use operators proportional to the binary space. In the following, the BFFAG approach is described step by step.

4.2.1 Initial population production and parameters adjustment

At this stage, the initial population is randomly generated, and the initial parameters are adjusted. The initial population is determined according to Eq. (1). Unlike the continuous version of the FFA, at this stage, binary solutions are generated according to Eq. (14).

In Eq. (14), N represents the total number of solutions available in the search space, D shows the number of dimensions of each problem, and Xij represents a random binary solution. Therefore, according to the FFA algorithm based on k and n, where the initial population is represented by N, a random initial population can be generated according to the pseudo-code in Algorithm 2.

4.2.2 Quality determination of each segment and updating the local and the global memories

At this stage, such as continuous FFA, segmentation and quality determination of each segment is performed. It is not necessary to apply any changes to this stage, as only the computation, segmentation, and quality determination are performed for each segment. According to the FFA algorithm, some of the best solutions of each segment are stored in local memory, and the best solutions of all segments are stored in global memory, which are determined by the number of the best local memory and the number of the best global memory based on Eqs.(4) and (5). This stage does not need to be changed.

4.3 Changing the soil quality in each segment

In the BFFAG approach, soil quality changes in each segment will be done based on the new BGMU operator. In this operator, the solutions will be based on the genetic crossover operator. Also, some modifications are considered based on the basic concepts of the FFA algorithm. Fig. 4 shows the new BGMU operator performance. Furthermore, the pseudocode of this operator is illustrated in Algorithm 3. One of the advantages of this operator is the reduction of α and β parameters. This operator selects a random solution from the best solutions in global memory and a random solution from the entire search space. Finally, the new solution is generated from the current solution (Xi) and the combination of two solutions Xk and Xm.

By the BGMU, the changes in soil quality in each section are converted into a binary format, which would increase the efficiency of the binary FFA. This operator has two parameters. The first parameter (Xi) represents the same current solution. The second parameter (w) is a random number between zero and one. This parameter (w) will have a great impact on the efficiency of the algorithm because its large value causes the use of the solutions available in the global memory. Therefore, according to the law of equilibrium of the meta-heuristic algorithms, the efficiency of the algorithm should be initially low and then can be improved by increasing the number of iterations. In BGMU operator, this parameter is dynamically set through the Eq. (15):

In Eq. (15), It denotes the current iteration, MaxIt denotes the maximum iteration, and rand generates a random number between 0 and \( \frac{It}{MaxIt} \). Thus, according to the pseudo-code in Algorithm 3, the parameter w makes use of the current solution (Xi), the best global memory solutions (Xk), and the solutions in the search space (Xm). Fig. 5 shows how to use these solutions and increase the efficiency using the BGMU operator.

Figure 5a shows that increasing the number of iterations, the parameter w gets closer to 1 and we will see an increase in efficiency. The higher the value of the parameter w, the greater the probability of using global memory. Fig. 5b shows that the population using global memory is initially small and increases with increasing the number of iterations. Fig. 5c shows that the BGMU operator initially seeks to explore and makes the most of the entire search space, but when the number of iterations increases, the exploration reaches its minimum. Finally, Fig. 5d shows that it almost uses the current solution correctly to generate a new solution during the exploration.

4.4 Updating the solutions based on the local and global memories

In the continuous FFA, the solutions in each segment are combined based on the best available solution in their local memory (BestLocal). At this point, some of the solutions available everywhere are combined with the best solution ever found (BestGlobal) to improve the quality of the available solutions. The binary version also uses a process to combine the desired solution (BestLocal) or (BestGlobal). In this section, the operation is defined by the new BLMU operator whose pseudo-code is shown in Algorithm 4.

According to Algorithm 4, the BLMU operator is combines multiple points and the probability of combining each point is considered to be 50%. This value is considered to use local and global memories equally. The combination of the solution with the solutions in local and global memories is determined by the value of parameter Q defined as Eq. (16):

In Eq. (16), the combination of a new solution in the binary version is based on the Q parameter, but we used the genetic crossover process instead of the continuous combination. It should be noted that the parameter ω1 is considered as changes in the continuous version. This parameter is removed in the binary version and the changes are controlled by the dynamic mutation operator. So, in the binary version, a mutation operator is required to apply changes. The mutation operator enhances exploration in meta-heuristic algorithms. In the proposed approach, this operator is dynamically controlled, so the parameter ω1 is eliminated. The pseudo-code of the dynamic mutation operator is shown in Algorithm 5.

As seen in Algorithm 5, the dynamic mutation operator is intended to maintain the exploration by the number of current generations and the maximum generation. Thus, according to the meta-heuristic algorithms, the number of mutations should be large at first and gradually decrease. In this section, to test the dynamic mutation operator, population size is set to 50, the number of iterations is set to 100, and number of dimensions is set to 10. The results of 3 independents trials are shown in Fig. 6.

Figure 6 shows that the exploration reduction using the dynamic mutation operator is exactly as expected. The number of dimensions covered by the mutation operator is initially large and decreases with gradually increasing the number of iterations, so that it reaches the lowest possible value in the last iterations. Thus, we presented a binary FFA based on the genetic algorithm using the BGMU, the BLMU, and the dynamic mutation operators. The pseudo-code of the proposed approach is illustrated in Algorithm 6.

4.5 The objective function

In this sub-section, we describe the objective function for feature selection in the proposed approach. Feature selection can be considered as a multi-objective optimization problem in which two conflicting goals including the minimum number of the selected features and higher classification accuracy are achieved. Therefore, we need a classification algorithm to define the objective function of the feature selection problem. In this paper, we use the simplest classification method, namely the K-Nearest Neighbors (KNN) classifier [42, 43] used by most researchers [1, 7, 8, 16, 28, 44, 45]. The KNN classifier is used to more accurately evaluate the features selected by the proposed approach and other algorithms. Each solution is evaluated based on the proposed multi-objective function which depends on the KNN classifier. In the proposed multi-objective function, to balance the number of features selected in each solution (minimum) and the classification accuracy (maximum), the objective function in Eq. (17) is used to evaluate a solution in each meta-heuristic algorithm.

In Eq. (17), αγR(D) represents the error rate of the classification method, | R | shows the multilinearity of the selected subset, and | N | shows the total number of features available in the dataset. Also, α represents the importance of classification quality and β shows the length of the subset. The values of these two parameters are taken as α ∈ [0, 1] and β = (1-α) from [5]. In this paper, the initial value of α is assumed to be 0.99 and thus the value of β will be equal to 0.01.

Here, the important point is the percentage of testing and training, which is typically considered to be 30 to 70 or 20 to 80. But since the goal of feature selection is to find effective features, the training percentage should be higher. In this case, the algorithm can better find the effective features. That’s why 80% of the data is for training and 20% for testing.

5 Results and discussions

In this section, the evaluation and results of the proposed approaches will be discussed. All experiments are performed using MATLAB software on a Core i5 processor with 8 GB of RAM. Also for the KNN algorithm, k is set to be 5, which is the most appropriate value according to references [1, 14, 35]. To evaluate the performance of the two proposed approaches and other competing algorithms, various experiments have been conducted on 18 feature selection datasets from the UCI data repository [46] . Furthermore, we apply our proposed approaches to the emotion analysis dataset. The experimental results for the UCI datasets are presented in Section 5.1 and the results for the emotion analysis datasets are reported in Section 5.2. The results show that the proposed approaches perform better than the other algorithms in terms of the average number of the selected features, classification accuracy, and objective function value on the UCI datasets, as well as emotion recognition accuracy on the emotion analysis datasets.

5.1 Experiments on the UCI dataset

In this sub-section, the performance of the two proposed approaches compared to other meta-heuristic algorithms in the feature selection problem is evaluated using 18 datasets taken from the UCI learning database site. Each dataset has a specified number of features so that by running the two proposed approaches on each dataset, their performance can be investigated on the number of selected features. Table 2 lists the number of features, the number of samples and the number of classes in each dataset.

We will use the 18 datasets listed in Table 2 with various feature numbers (between 8 and 56) to test the algorithms in this section. We first evaluate the two proposed approaches and compare them with the original FFA. Then we select one of the proposed approaches with the best results as the main method and compare it with other important meta-heuristic algorithms including Genetic Algorithm (GA) [47], Binary Bats Algorithm (BBA) [48], V-shaped Binary PSO (BPSO) [49], Binary Flower Pollination Algorithm (BFPA) [10], Binary Gray Wolf Optimizer (BGWO) [21] and Binary Dragonfly Algorithm (BDA) [14] and Binary Chaotic Crow Search Algorithm (BCCSA) [11]. Table 3 shows the parameters of the proposed approaches and the other comparative algorithms.

5.1.1 Performance evaluation of proposed approaches (BFFAG, BFFAS) and comparison with the original FFA

The purpose of this section is to evaluate the performance of proposed BFFAG and BFFAS approaches in terms of different criteria including the average number of features, the classification accuracy and the convergence of objective function, and compare the results with the original FFA. For all experiments in this section, we set the parameters according to Table 3. Table 4 shows the average number of features obtained by the proposed BFFAG and BFFAS approaches and the basic FFA basic.

From Table 4, it can be seen that the BFFAG approach obtains the best performance in terms of feature selection. The BFFAG approach is about 89% more successful than the BFFAS and FFA on 18 datasets. In addition, the classification accuracy results obtained by the proposed BFFAG and BFFAS approaches and the original FFA are shown in Table 5.

The results in Table 5 show that the BFFAG approach obtains the best performance. This approach is about 67% more successful than the BFFAS and FFA approaches on 18 datasets. The results are shown in Figs. 7, 8 and 9.

From Figs. 7, 8 and 9, it is seen that the BFFAG approach achieves better convergence rate of objective function compared to the other methods. All the experiments in this section demonstrate the superiority of the proposed BFFAG approach over the BFFAS and the original FFA in various criteria including the average number of features, the classification accuracy, and the convergence rate of objective function. Therefore, this approach is selected as the main method and is compared with the existing meta-heuristic algorithms, in the Section 5.1.2. To further analysis, more experiments are performed to prove the superiority of the proposed BFFAG method, in the following section.

5.1.2 Further discussion

As shown in Section (5.1.1), the BFFAG approach is able to provide acceptable results in terms of convergence of the objective function, classification accuracy and average number of features compared to the BFFAS and FFA methods. In terms of feature selection, this algorithm also performs better in the high-dimensional dataset than the other algorithms. For example, in the D10 suite of 56 attributes, it is able to select an average of 22 attributes, and it also performs more successfully than other methods in the high-dimensional in accuracy criteria[21, 33, 34, 56]. In this sub-section, the stability of the proposed BFFAG and BFFAS approaches and original FFA is evaluated in different implementations. To this end, algorithms are implemented 5 times, and then the best objective function of each algorithm is obtained. The implementations are performed according to the parameters in Table 3. The results are shown in Figs. 10, 11 and 12.

The results of different implementations of the FFA in Fig. 10 show that the FFA achieves better stability in some datasets. On the other hand, some datasets, such as D14 and D10, show different results in each implementation. This experiment proves that the FFA has relatively moderate stability in five different implementations.

The results of the different implementation of the BFFAS algorithm in Fig. 11 show that the BFFAS algorithm achieves better stability in some datasets. On the other hand, some datasets, such as D14 and D18, show different results. Of course, the BFFAS algorithm performs much better than the original FFA. This experiment demonstrates that the BFFAS algorithm performs relatively well in five different implementations.

The results of different implementation of the BFFAG algorithm in Fig. 12 show that the BFFAG algorithm achieves better stability in most datasets. Only in the second run, the BFFAG has a slightly different performance. The results of this experiment show the remarkable superiority of the BFFAG approach over the BFFAS and FFA approaches. The BFFAG approach also proved to be very stable. In the following, two proposed BFFAG and BFFAS approaches, and original FFA are statistically investigated (best, worst, average, and standard deviation). The results of this investigation are shown in Table 6.

From Table 6, it is seen that the BFFAG approach performs better than the BFFAS and FFA. The experiment proved that the proposed BFFAG approach is the best method in terms of statistical criteria.

5.1.3 Comparison with other approaches

In this sub-section, the proposed BFFAG approach is compared with the other binary meta-heuristic algorithms including Genetic Algorithm (GA) [47], Binary Bats Algorithm (BBA) [48], V-shaped Binary PSO (BPSO) [49], Binary Flower Pollination Algorithm (BFPA) [10], Binary Gray Wolf Optimizer (BGWO) [21], Binary Dragonfly Algorithm (BDA) [14] and Binary Chaotic Crow Search Algorithm (BCCSA) on 18 datasets. All experiments are performed according to the parameters in Table 3. Therefore, the number of iterations is set to 50 for all algorithms. The convergence results of the proposed BFFAG and other comparative algorithms on 18 datasets are shown in Figs. 13, 14 and 15.

From Figs. 13, 14 and 15, it can be seen that the proposed BFFAG approach is able to perform better overall. The BFFAG approach outperforms all algorithms in 15 datasets. The comparison among the algorithm show that the proposed BFFAG approach is 83% more convergent than other algorithms. However, to further evaluate the results of the proposed BFFAG and other comparative algorithms on 18 datasets, the statistical criteria are given in Table 7.

Table 7 shows that the “Best” criterion of the proposed BFFAG method performs 83% better than other algorithms. However, the proposed BFFAG approach is failed to minimize the parameters such as standard deviation, due to in-process exploration. In some datasets such as D14, the proposed BFFAG approach with a “Best” value of 0.0099 works much better, and certainly, this algorithm cannot reduce other parameters simultaneously. Table 8 presents the results of the proposed approach and other algorithms in terms of the average number of features.

Table 8 shows that the BFFAG approach performs very well, and proves its superiority on 9 datasets. It should be noted that both the number of features and the classification accuracy are taken into account in the objective function. Therefore the minimum number of features cannot be obtained in all datasets. The classification accuracy of BFFAG and other comparative algorithms are given in Table 9.

From Table 9, it is seen that the BFFAG approach shows high efficiency in terms of the classification accuracy. Our approach performed better in terms of classification accuracy on 9 datasets and is close to the best results in the other datasets. This is because that both the feature selection and the classification accuracy are considered in the objective function. Therefore, we cannot content with classification accuracy. Experiments in this section prove that the proposed approach has good binary space exploration and efficiency. Thus, the superiority of the BFFAG in feature selection and classification accuracy over other methods is obvious.

5.1.4 Further discussion

In this section, the results of the proposed BFFAG and other algorithms are discussed with further experiments. To this end, the proposed BFFAG and other comparative algorithms are implemented 5 times and then the best objective function of each algorithm is obtained. The implementations are performed according to the parameters in Table 3. The purpose of this experiment is to evaluate the robustness of the proposed BFFAG and other comparative algorithms in different implementations. The results of the different implementations of the GA are shown in Fig. 16.

From Fig. 16, it can be seen that the GA achieves better stability in the datasets of {D1: D9, D12, D15, D16, D17}. But it is less stable in the data sets of {D10, D11, D13, D14, and D18}. These results prove that the GA has moderate stability and the algorithm is more influenced by two parameters of mutation and compound. Fig. 17 shows the results of different implementations of the BBA.

Fig. 17 shows that the BBA achieves better stability in the datasets of {D1: D9, D15, D16}, but in datasets of {D10, D11, D12, D13, D14, D18} exhibits less stability. These results prove that the BBA approach has relatively moderate stability, and its results are less reliable. Fig. 18 shows the results of the different implementations of the BPSO algorithm.

From Fig. 18, it can be seen that the BPSO achieves better stability in the datasets of {D1: D9, D12, D15, D17}. But it is less stable in the datasets of {D1, D10, D11, D13, and D14}. However, in the D14 dataset, this algorithm performs the worst possible. These results prove that the BPSO has moderate stability and results in some datasets are less reliable. Fig. 19 shows the results of different implementations of the BFPA.

From Fig. 19, it can be seen that the BFPA achieves better stability in the datasets of {D1: D9, D11, D12, D14, D16}. But it is less stable in the datasets of {D10, D13, D17, and D18}. However, in the D10 dataset, this algorithm performs the worst possible. These results prove that the BFPA has better stability than BBA and BPSO. Fig. 20 shows the results of different implementations of the BGWO.

From Fig. 20, it can be seen that the BGWO achieves better stability in the datasets of {D1: D6, D8, D9, D12, D15, D18}. But it is less stable in the datasets of {D10, D11, D13, and D14}. These results prove that the BGWO has better stability than GA, BPSO, and BBA and its results are more acceptable. Fig. 21 shows the results of different implementations of the BDA.

From Fig. 21, it can be seen that the BDA achieves better stability in the datasets of {D1: D6, D8, D9, D12, D15, D17}. But it is less stable in the datasets of {D10, D11, D13, D14 and D18}. These results prove that the BDA has better stability than GA, BPSO, and BBA and its results are more acceptable. Fig. 22 shows the results of different implementations of the BCCSA.

From Fig. 22, it can be seen that the BCCSA achieves better stability in the datasets of {D1: D6, D8:D12, D15, D16, and D17}. But it is less stable in the datasets of {D7, D13, D14 and D18}. These results prove that the BCCSA has relatively good stability compared to other algorithms. Fig. 23 shows the results of different implementations of the BFFAG. From Fig. 23, it can be seen that the BFFAG achieves better stability in the datasets of {D1: D6, D8, D9, D11, and D18}. But it is less stable in the datasets of {D7, D10}. These results prove that the BFFAG has relatively stronger stability than other algorithms. Fig. 24 shows the results of the average of the five performances.

From Fig. 24, it can be seen that the proposed BFFAG approach is a reliable and high-performance method in feature selection compared to other methods.This algorithm has a significant advantage over other methods.

5.2 T-test

The t-test as a type of inferential statistics is used to determine the significant difference between the mean of a group with a default value or the means of two groups. There are different types of t-tests, the three most common of which are:

1. One-Sample t-test (This test is used to examine the average of a community.)

2. Paired-samples t-test (This test is used to examine two means of a community.)

3. Independent-Samples t-test (This test is used to examine the mean of two independent communities.)

In this article, we utilize the Paired-samples t-test in SPSS software. Our purpose is to show the effect of the proposed algorithm on improving the evaluation parameters. To this end, two related groups are considered, one is the proposed method and the other is one of the comparable algorithms. The optimal mean value of the proposed methods (BFFAG and BFFAS) and the other competing methods are extracted based on the Tables 6 and 7, and then their mean difference are obtained in pairs. The results of the t-test are presented in Table 10.

Moreover, the average number of selected features of the proposed method (BFFAG) and several other algorithms are extracted from Table 8 and then their mean difference are obtained in pairs. The results of the t-test related to the evaluation of the average number of selected features are shown in Table 11.

From Tables 10 and 11, we can find that the significance level (Sig.) is less than the test level of 0.05 in most cases, and so the null hypothesis is rejected. In other words, the results show that there is a significant difference in the mean value of the objective function (Table 10) as well as the average number of selected features (Table 11) of the proposed method in different datasets compared to the other methods. According to the number of means after improving the method, the mean of the objective function (Mean) of the proposed method is negative, i.e. it decreases compared to most of the competing methods. Therefore, it can be concluded that the new algorithm reduces the optimal mean.

5.3 Experiments on emotion analysis dataset

In this sub-section, the performance of the proposed approaches is evaluated on a large dataset with more features. To this end, the emotion analysis dataset as one of the largest datasets is used. Here, we have used the popular emotion analysis data in IMDB Large Movie Review Dataset, where the opinions of 50,000 IMDB users are stored. This dataset contains two classes as negative and positive (i.e. positive and negative opinions). Because it takes a long time to analyze this dataset, researchers select a percentage of it and perform the necessary tests. In this paper, 2000 training data and 200 test data were used. The purpose of using this data is to construct a model that can correctly classify the given test texts into one of two classes of positive or negative. On the other hand, we need a dataset with features. Here, we use the common natural language processing libraries such as nltk, scikit-learn, and regular-expression (re).

In this experiment, the textual data is first read in Python programming language, and each of the opinions is classified into positive (1) and negative (0) groups. With the re and nltk libraries, punctuation marks are removed from the texts. At this point, the texts are converted to a list of separate words using the word_tokenize module. To extract the root of the words and remove the words’ extras, LancasterStemmer is used as a word rooter module. This process is applied to the training and test data. We then use the TfidfVectorizer module in the nltk library to convert the resulting words into the input features for the classification algorithms. Each text in the algorithm is mapped to a vector according to the following points:

-

The number of words used in the text.

-

The repetition rate of each word in the text.

-

The location of a particular word in the text relative to the other words and the adjacent words.

-

Total number of unique words in all text collections.

After a complete preprocessing, 3675 features are built using the TfidfVectorizer algorithm, and also a feature is selected to determine the positive and negative classes. Here we use basic algorithms including GA, BBA, BPSO, BFPA, BGWO, BDA and BCCSA to compare with the proposed BFFAG approach. The Application architecture of the proposed approach is shown in Fig. 25.

Figure 26 shows convergence rate of the objective function of two proposed approaches and other comparative algorithms on the preprocessing with 2200 samples and 3675 features with different iterations.

From Figs. 26 and 27, it is seen that the BFFAG approach exhibits better convergence in 7 of 10 iterations, indicating that this approach performs better by increasing the number of iterations. This approach, however, performs poorly in two iterations of 20 and 80. Table 12 shows the results of the proposed and other algorithms in terms of the average number of selected features.

This table shows that the BFFAG approach performs very well. This approach has proven to work well in most iterations. The BFFAS approach also shows acceptable results compared to the FFA. Fig. 28 compares classification accuracy of two proposed approaches with that of other comparative algorithms in different number of iterations on the emotion analysis dataset.

As can be seen from Fig. 28, the classification accuracy of the BFFAG approach is higher than that of the other methods, in addition to the better feature selection shown in Table 12. This is because that both feature selection and classification accuracy are considered in the objective function. According to Figs. 26, 27 and Table 12, it can be seen that the BFFAG approach outperforms other methods in terms of convergence, classification accuracy and the objective function on dataset emotional analysis.

6 Conclusions and future work

The FFA is a new meta-heuristic algorithm that is powerful in solving optimization problems due to its local and global memories and division of solutions into good and bad. In this paper, two new binary versions of FFA, namely BFFAS and BFFAG, was proposed for feature selection problem. In the proposed BFFAS and BFFAG approaches, respectively, the sigmoid transfer function and new genetic operators were introduced to convert the continuous FFA into binary versions. The proposed genetic operators, namely BGMU and BLMU, were used to update binary solutions based on global memory and total search space, and local and global memory, respectively. Also, the DM operator was utilized to maintain exploration in the FFA, rather than the parameter w. These three operators increase the performance of the original FFA. At the same time, the number of parameters of the FFA are reduced.

The experiments were implemented on 18 datasets from UCI datasets. Experimental results showed that our BFFAG approach outperforms the BFFAS and FFA in most criteria. Furthermore, the BFFAG approach achieves better results in the objective function value, average number of features and classification accuracy compared to the state-of-the-art algorithms, namely GA, BBA, BPSO, BFPA, BGWO, BDA, and BCCS, on most of the datasets. We used t-test to better compare and analyze outputs of the methods. The t-test results showed that there is a significant difference in the mean value of the objective function as well as the average number of selected features of the proposed method in different datasets compared to the other methods.

Moreover, the proposed BFFAG approach was implemented on the popular IMDB Large Movie Review Dataset. In this case, 3675 attributes were extracted from this database to compare algorithms in high dimensions. The results of this experiment demonstrated the superiority of the BFFAG approach compared the other algorithms in terms of the convergence, average number of features and accuracy.

For future work, the proposed approaches can be applied to real world problems such as 0–1 knapsack problem, scheduling problem and etc. Also, the proposed approaches can be applied on datasets with dimensions larger than 500. In addition, other classifiers such as decision tree, support vector machine and naive Bayes can be used to evaluate the performance of the proposed approaches. Finally, investigating the impact of local search processes by proposed approaches on a large and small scales will be an interesting task for the future.

References

Mafarja M, Mirjalili S (2018) Whale optimization approaches for wrapper feature selection. Appl Soft Comput 62:441–453

Sayed SA-F, Nabil E, Badr A (2016) A binary clonal flower pollination algorithm for feature selection. Pattern Recogn Lett 77:21–27

Zorarpacı E, Özel SA (2016) A hybrid approach of differential evolution and artificial bee colony for feature selection. Expert Syst Appl 62:91–103

Dong H et al (2018) A novel hybrid genetic algorithm with granular information for feature selection and optimization. Appl Soft Comput 65:33–46

Emary E, Zawbaa HM, Hassanien AE (2016) Binary ant lion approaches for feature selection. Neurocomputing 213:54–65

Mafarja M, Aljarah I, Faris H, Hammouri AI, al-Zoubi A’M, Mirjalili S (2019) Binary grasshopper optimization algorithm approaches for feature selection problems. Expert Syst Appl 117:267–286

Abualigah LMQ (2019) Feature selection, and enhanced krill herd algorithm for text document clustering: Springer

Rajamohana S, Umamaheswari K (2018) Hybrid approach of improved binary particle swarm optimization and shuffled frog leaping for feature selection. Comput Electr Eng 67:497–508

Faris H, Mafarja MM, Heidari AA, Aljarah I, al-Zoubi A’M, Mirjalili S, Fujita H (2018) An efficient binary salp swarm algorithm with crossover scheme for feature selection problems. Knowl-Based Syst 154:43–67

Rodrigues D, et al (2015) Binary flower pollination algorithm and its application to feature selection, In Recent advances in swarm intelligence and evolutionary computation, Springer. p. 85–100

Sayed GI, Hassanien AE, Azar AT (2017) Feature selection via a novel chaotic crow search algorithm. Neural Comput and Applic 31:171–188

Gharehchopogh FS, Gholizadeh H (2019) A comprehensive survey: whale optimization algorithm and its applications. Swarm Evol Comput 48:1–24

Gharehchopogh FS, Shayanfar H, Gholizadeh H (2019) A comprehensive survey on symbiotic organisms search algorithms. Artif Intell Rev: p. 1–48

Mirjalili S, Lewis A (2016) The whale optimization algorithm. Adv Eng Softw 95:51–67

Shayanfar H, Gharehchopogh FS (2018) Farmland fertility: a new metaheuristic algorithm for solving continuous optimization problems. Appl Soft Comput 71:728–746

Mafarja MM, Mirjalili S (2017) Hybrid whale optimization algorithm with simulated annealing for feature selection. Neurocomputing 260:302–312

Shehu HA, Tokat S (2020) A Hybrid Approach for the Sentiment Analysis of Turkish Twitter Data. In the international conference on artificial intelligence and applied mathematics in engineering. Part of the lecture notes on data engineering and communications technologies book series (LNDECT, volume 43) pp 182–190

Zhang Y, Wang S, Phillips P, Ji G (2014) Binary PSO with mutation operator for feature selection using decision tree applied to spam detection. Knowl-Based Syst 64:22–31

Xiang J, Han XH, Duan F, Qiang Y, Xiong XY, Lan Y, Chai H (2015) A novel hybrid system for feature selection based on an improved gravitational search algorithm and k-NN method. Appl Soft Comput 31:293–307

Hancer E, Xue B, Karaboga D, Zhang M (2015) A binary ABC algorithm based on advanced similarity scheme for feature selection. Appl Soft Comput 36:334–348

Emary E, Zawbaa HM, Hassanien AE (2016) Binary grey wolf optimization approaches for feature selection. Neurocomputing 172:371–381

Wan Y, Wang M, Ye Z, Lai X (2016) A feature selection method based on a modified binary-coded ant colony optimization algorithm. Appl Soft Comput 49:248–258

Abualigah LM, Khader AT, al-Betar MA, Alomari OA (2017) Text feature selection with a robust weight scheme and dynamic dimension reduction to text document clustering. Expert Syst Appl 84:24–36

Pashaei E, Aydin N (2017) Binary black hole algorithm for feature selection and classification on biological data. Appl Soft Comput 56:94–106

Mafarja MM, Eleyan D, Jaber I, Hammouri A, Mirjalili S (2017) Binary dragonfly algorithm for feature selection. In 2017 International Conference on New Trends in Computing Sciences (ICTCS) pp 12–17

Chen Y-P, Li Y, Wang G, Zheng YF, Xu Q, Fan JH, Cui XT (2017) A novel bacterial foraging optimization algorithm for feature selection. Expert Syst Appl 83:1–17

Abualigah LM, Khader AT, Hanandeh ES (2018) Hybrid clustering analysis using improved krill herd algorithm. Appl Intell 48(11):4047–4071

Abualigah LM, Khader AT, Hanandeh ES (2018) A combination of objective functions and hybrid krill herd algorithm for text document clustering analysis. Eng Appl Artif Intell 73:111–125

Allam M, Nandhini M (2018) Optimal feature selection using binary teaching learning based optimization algorithm. J King Saud Univ Comput Inf Sci 1–13

Mafarja M, Aljarah I, Heidari AA, Faris H, Fournier-Viger P, Li X, Mirjalili S (2018) Binary dragonfly optimization for feature selection using time-varying transfer functions. Knowl-Based Syst 161:185–204

Papa JP, Rosa GH, de Souza AN, Afonso LCS (2018) Feature selection through binary brain storm optimization. Comput Electr Eng 72:468–481

Mafarja MM, Mirjalili S (2018) Hybrid binary ant lion optimizer with rough set and approximate entropy reducts for feature selection. Soft Comput:1–17

De Souza, RCT, Dos Santos Coelho L, De Macedo CA, Perezan, J (2018) A V-Shaped Binary Crow Search Algorithm for Feature Selection. In 2018 IEEE Congress on Evolutionary Computation (CEC) pp 1–8

Arora S, Anand P (2019) Binary butterfly optimization approaches for feature selection. Expert Syst Appl 116:147–160

Hussien AG, Hassanien AE, Houssein EH, Bhattacharyya S, Amin M (2019) S-shaped binary whale optimization algorithm for feature selection, In Recent trends in signal and image processing. Part of the Advances in Intelligent Systems and Computing book series (AISC, volume 727) pp 79–87

Santana CJ Jr, Macedo M, Siqueira H, Gokhale A, Bastos-Filho CJA (2019) A novel binary artificial bee colony algorithm. Futur Gener Comput Syst 98:180–196

Yan C, Ma J, Luo H, Patel A (2019) Hybrid binary coral reefs optimization algorithm with simulated annealing for feature selection in high-dimensional biomedical datasets. Chemom Intell Lab Syst 184:102–111

Zhang Y, Gong DW, Gao XZ, Tian T, Sun XY (2020) Binary differential evolution with self-learning for multi-objective feature selection. Inf Sci 507:67–85

Abdel-Basset M, el-Shahat D, el-henawy I, de Albuquerque VHC, Mirjalili S (2020) A new fusion of grey wolf optimizer algorithm with a two-phase mutation for feature selection. Expert Syst Appl 139:112824

Unler A, Murat A (2010) A discrete particle swarm optimization method for feature selection in binary classification problems. Eur J Oper Res 206(3):528–539

Kabir MM, Shahjahan M, Murase K (2012) A new hybrid ant colony optimization algorithm for feature selection. Expert Syst Appl 39(3):3747–3763

Barani F, Mirhosseini M, Nezamabadi-pour H (2017) Application of binary quantum-inspired gravitational search algorithm in feature subset selection. Appl Intell 49(180):304–318

Al-Tashi Q, Rais HM, Abdulkadir SJ, Mirjalili S, Alhussian H (2020) A Review of grey wolf optimizer-based feature selection methods for Classification. Evolutionary machine learning techniques. Part of the Algorithms for Intelligent Systems book series (AIS) pp 273–286

Liao TW, Kuo RJ (2017) Five discrete symbiotic organisms search algorithms for simultaneous optimization of feature subset and neighborhood size of KNN classification models. Appl Soft Comput 64:581–595

Mafarja M, Aljarah I, Heidari AA, Hammouri AI, Faris H, Ala’M AZ, Mirjalili S (2017) Evolutionary population dynamics and grasshopper optimization approaches for feature selection problems. Knowl-Based Syst 145:25–45

Asuncion A, Newman DJ (2007) UCI machine learning repository. Irvine, CA: University of california, School of Information and Computer Science. http://www.ics.uci.edu/~mlearn/MLRepository.html. Accessed 2019.8.18

Sivanandam S, Deepa S (2008) Genetic algorithm optimization problems. In Introduction to genetic algorithms

Mirjalili S, Mirjalili SM, Yang X-S (2014) Binary bat algorithm. Neural Comput Applic 25(3–4):663–681

Mirjalili S, Lewis A (2013) S-shaped versus V-shaped transfer functions for binary particle swarm optimization. Swarm Evol Comput 9:1–14

Sayed GI, Hassanien AE, Azar AT (2019) Feature selection via a novel chaotic crow search algorithm. Neural Comput Applic 31(1):171–188

Acknowledgments

The authors would like to thank the editor in chief, the associate editor, and reviewers for their constructive feedback during the review process. They genuinely appreciate the reviewers’ comments for making this paper more complete.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Hosseinalipour, A., Gharehchopogh, F.S., Masdari, M. et al. A novel binary farmland fertility algorithm for feature selection in analysis of the text psychology. Appl Intell 51, 4824–4859 (2021). https://doi.org/10.1007/s10489-020-02038-y

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-020-02038-y