Abstract

For years, Value-at-Risk and Expected Shortfall have been well established measures of market risk and the Basel Committee on Banking Supervision recommends their use when controlling risk. But their computations might be intractable if we do not rely on simplifying assumptions, in particular on distributions of returns. One of the difficulties is linked to the need for Integral Constrained Optimizations. In this article, two new stochastic optimization-based Simulated Annealing algorithms are proposed for addressing problems associated with the use of statistical methods that rely on extremizing a non-necessarily differentiable criterion function, therefore facing the problem of the computation of a non-analytically reducible integral constraint. We first provide an illustrative example when maximizing an integral constrained likelihood for the stress-strength reliability that confirms the effectiveness of the algorithms. Our results indicate no clear difference in convergence, but we favor the use of the problem approximation strategy styled algorithm as it is less expensive in terms of computing time. Second, we run a classical financial problem such as portfolio optimization, showing the potential of our proposed methods in financial applications.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Risk management and asset allocation are the bulk of portfolio management, and are of great interest for investors, risk managers and regulators. In most related cases, however, no closed-form solutions are available for many risk and asset allocation problems, unless we make further stringent hypotheses. More generally, several statistical problems, such as inferential statistics (Isaacson and Madsen (1976), Dong and Wets (2000), Shapiro et al. (2014)), statistical process control (Feng et al. (2018), Glizer and Turetsky (2019)), and estimation of density function (Cramer (1989)) among others, rely indeed on constrained optimizations. In such cases, classical methods for solving constrained optimization problems often employ the Lagrangian method, which requires the selection of the Lagrange multiplier so that the constraint is satisfied (e.g., Bertsekas (2014) for an illustration).

However, on the one hand, it is reported in the literature that standard optimization methods often break down in many situations: when problems involve non-differentiable functions, large and multi-modal search spaces, non-convex or non-linear programs, multiple objectives, high-dimensional problems, or integral constraints. Charalambous (1978), for example, identifies a number of situations where classical numerical optimization techniques fail and where standard methods produce undesirable results.

On the other hand, Simulated Annealing (SA) algorithms are classically used to solve optimization problems. From a strictly practical point of view, the phenomenon of cooling applied to metals to obtain an optimal temperature share similarities with many financial application optimization problems when solved using SA, as shown by, e.g., Cramer (1989), Woodside-Oriakhi et al. (2011) and Castellano et al. (2021). As stated by Robert and Casella (2013), it appears that the SA algorithm borrowed its name from metallurgy and from physics. Especially in computational physics, the relationship with portfolio optimization is clearer, since the problem solved by SA is an optimization problem, where the function to be minimized is called “energy” and the variance factor which controls the convergence is called “temperature”. In more technical terms, the underlying problems are basically the same, and SA algorithms are classically used to solve optimization problems in different areas of operation research such as, for instance: commodity queuing inventories (Shajin et al. (2021)), planning and release (Etgar et al. (1997), Etgar et al. (2017)), payment scheduling (He et al. (2009)), graph partitioning (D’Amico et al. (2002)), energy district design (Bergey et al. (2003)), political district design (Ricca and Simeone (2008)), and more recently for forecasting the relationship between oil and gas (Ftiti et al. (2020)). An area where SA reveals itself to be particularly useful is also risk management, with applications in many fields, e.g., tunnel construction (Ryu et al. (2015)), disaster risk management (Edrisi et al. (2020)) and facility layouts with uncertainty (Tayal and Singh (2018)). In our context of financial applications, it happens that a large number of optimization problems in finance can be solved by applying optimization heuristics, such as SA or Genetic Algorithms (GA), as for instance in Gilli and Schumann (2012). These methods can handle non-convex optimization problems overcoming many difficulties such as multiple local optima and discontinuities in the objective function. For example, Cramer (1989) applies SA to Markowitz (1952) portfolio selection problems with realistic constraints, whilst Woodside-Oriakhi et al. (2011) use SA for imposing a cardinality constraint onto the classical portfolio optimization program. Bick et al. (2013) also employ SA for solving constrained consumption-investment optimization problems, whilst Porth et al. (2016) propose to improve risk diversification combining portfolios of crop insurance policies and an SA approach to find the optimal allocation. More recently, Castellano et al. (2021) provide an approach to systemic risk minimization based on SA.

In this article, attention will be devoted to optimization problems for which: 1) the target function is not necessarily differentiable and 2) the constraints are characterized by non-analytically reducible integrals. It happens that analytical intractability of the integral constraint is a major cause of limits in the application of classical optimization techniques. We address this issue by exploring two alternative numerical optimization strategies. A first strategy consists of solving an auxiliary (approximated) problem, while the other entails the construction of an approximation algorithm. This model approximation approach decomposes further into two single sequential strategies, depending on the type of integral approximation technique employed, i.e. either simulation-based or deterministic-rule. In the latter case, the deterministic approximation approach generally involves the replacement of the non-analytically reducible integral with a numerical quadrature (see Shapiro et al. (2014) and Smith et al. (2015)). In the case of the simulation-based approach, the integral constraint is reformulated as a stochastic constraint and then a Monte Carlo simulation combined with another technique, such as the Sample Average Approximation (SAA), is implemented to approximate the problem. On the basis of these auxiliary problems, simulation-based optimization procedures can then be applied. However, the need to concurrently draw samples from both the decision and auxiliary variable spaces can make the algorithms computationally expensive. To alleviate this problem, methods such as the Group Independent Metropolis Hastings (GIMH) of Beaumont (2003) and the Inhomogeneous Markov Chain (IMC) simulation of de Mello and Bayraksan (2014) may be mobilized. As for the approximation algorithm approach, these entail, however, the replacement of the intractable target function by a (consistent or unbiased) estimator within the Markov Chain Monte Carlo (MCMC) algorithm. This procedure is referred to as the Monte Carlo Within Metropolis (MCWM) - Cf. Andrieu and Roberts (2009).

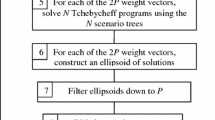

Before presenting our empirical results obtained in some settings of classical financial applications, the primary goal of this article is to design efficient and reliable simulation-based algorithms for solving integral constrained optimization problems by finding Extended Saddle Points (ESP) of a relevant penalty function reformulation of the integral constrained problem. Two SA algorithms are proposed, both relying on importance sampling strategies. Given a penalty function reformulation of the integral constrained problem, the first algorithm, called the Group Independence Metropolis Hastings Simulated Annealing (GIMHSA), requires the construction of an auxiliary problem upon which the GIMH method is implemented. The second method denominated as the Monte Carlo Within Metropolis Simulated Annealing (MCWMSA), differently, focuses on an approximation of the target (penalty) function used for the MCMC updating step of the MCWM method. We compare standard SA combined with quadrature and the two new algorithms first on a low dimension optimization problem given by the maximization of an integral constrained likelihood for a stress-strength reliability problem. Our empirical trials confirm the effectiveness of the two new algorithms and convergence properties similar to the standard SA. Nevertheless, in a higher dimension, standard SA becomes less effective while our proposed algorithm does not suffer from convergence issues. Our results indicate no clear differences in the convergence of the GIMHSA and MCWMSA (see Fig. 1) but we favor the use of the MCWMSA, since the coding difficulty is lower and it is rather quite less expensive in terms of computing time when the optimization problems are complex.

The SA algorithm we propose extends the Constrained SA (CSA) strategy based on the Extended Saddle Point (ESP) theory developed by Smith and Wong (2017) to an integral constrained setup. In contrast with the exogenously given penalty factor in the SA strategy proposed by Wah et al. (2006) and adopted in Smith et al. (2015), we endogenously obtain the penalty factors by augmenting the decision variables. More precisely, we optimize the penalty function by undertaking a minimization exercize over the decision variable space and maximization over the penalty factor space. This procedure therefore provides, in addition to the probabilistic descents in the problem-decision variable space as in conventional SA (as in Juditsky and Nemirovski (2020)), a probabilistic accent in the penalty factor subspace using a unified method based on a piecewise version of the Metropolis acceptance probability to control descents and ascents; this technique offers the benefit of reducing the risk for the chain to produce poor results. Embedded within the proposed SA-type algorithm is the simultaneous evaluation of the integral in the constraint which is done using a Monte Carlo integration procedure. The effectiveness of the algorithms is first assessed herein by applying them to an inferential problem on reliability in stress-strength with independent exponentiated exponential distributions. Our results in the simulation study here show that our algorithms are robust procedures for solving integral constrained statistical optimization problems.

The article is organized as follows. Section 2 introduces the integral constrained optimization problem and discusses the adopted method of the penalty function. In Sect. 3 we describe the constrained simulated annealing and discuss its implementation. Section 4 is dedicated to the empirical studies of the proposed algorithms, providing 3 applications using 6 sets of datasets used in previous publications. Section 5 concludes, whilst proofs and some robustness tests are left in the Appendix.

2 On integral constrained optimizations

Let \(\mathcal {X}=\{x: x\in \mathbb {R}^{n}\}\) be the solution space (i.e. the set of all feasible solutions) where x are bounded continuous variables. Let \(f: \mathcal {X}\rightarrow \mathbb {R}\) be a lower bounded objective function defined on the solution space, \(\mathcal {X}\). Furthermore, let \(g(x) = (g_{1}(x),\dots ,g_{I}(x))^{T}\) represent a vector of I equality constraint functions. Functions \(f(\cdot )\) and \(g(\cdot )\) are general functions that can be discontinuous and not in closed-form. In our case, one of the equality constraints, say \(g_{1}\), is assumed to be characterized by an integral function whose solution is not available in closed-form. The goal is to find a global minimum, \(x^{*}\), subject to the equality constraintsFootnote 1\(g(x)=0\) (i.e., \(x^{*}\in \mathcal {X}\) such that \(f(x)\ge f(x^{*})\), \(g(x)=0\) for all \(x\in \mathcal {X}\)). We have the following mathematical representation of the problem:

where \(\displaystyle {g_{1}(x)=\int _{\mathcal {Y}} h(x,y)dy - \psi _{0}}\) with \(\psi _{0}\) a real constant, \(\mathcal {Y}\subset \mathbb {R}^{m}\) and \(h: \mathcal {X}\times \mathcal {Y}\rightarrow \mathbb {R}\) a real-valued measurable function.

Several constraint handling methods have been developed in the literature for dealing with constrained optimization problems (Zhang et al. (2015)). In this article, we adopt the penalty function method because of its relative ease of use and amenability to complex optimization problems. More precisely, we reformulated the integral constrained optimization problem in Eq. 1 into an unconstrained one using the non-differentiable penalty described in Charalambous (1978). Furthermore, by relying on Extended Saddle Points (ESP) theory (Wah et al. (2006)), the ESP solution of the following minmax problem:

corresponds to a solution of the problem in Eq. 1, where \(p\ge 1\), and \(\lambda _{i}> 0\), \(i=1,2,\dots ,I\), are penalty factors. Accordingly, stochastic optimization algorithms, such as the CSA proposed by Wah et al. (2006) may be applied. Unfortunately, the penalty function, \(L(x,\lambda )\), in Eq. 2 cannot be evaluated analytically due to the integral constraint. In practice, researchers approximate the constraint, \(g_{1}(x)\) by a numerical approximant, \(g_{1}^{M}(x)\), so that \(g_{1}^{M}(x){\longrightarrow } g_{1}(x)~ \forall x\) as M goes to infinity. We propose here to apply the Monte Carlo method for numerical integration with a focus on importance sampling. Following this argument, the integral can be written in the form:

where q(y; x) is a probability density function on \(\mathcal {Y}\), \(Y{\sim } q(\cdot ;x)\) and \(E_{q}[\cdot ]\) denotes the expectation with respect to the importance density q. The expectation can be approximated by the importance sampling estimator which reads:

where \(Y_{i}{\mathop {\sim }\limits ^{iid}} q,\) \(i=1,\dots ,M\), is a set of auxiliary variables. Since \(g_{1}^{M}(x)\) converges to \(g_{1}(x)\) almost surely uniformly in x provided that the \(E_{q^{M}}[|g_{1}^{M}(x)|]<\infty \) (see Homem-de-Mello (2008), Feng et al. (2018) and Shapiro et al. (2014)), the approximation can always be made more precise by increasing M.

In light of the above, two alternative implementations of the importance sampling estimator (4) are explored in the construction of stochastic algorithms for estimating the ESP value and solution of the minmax problem in Eq. 2. The first consists of approximating the analytically intractable penalty function required for the computation of the acceptance probability of a simulated annealing type algorithm update, while the second entails optimizing an approximation of the analytically intractable penalty function in minmax problems of Eq. 2.

3 Simulated annealing for integral constrained optimization

In this section, we propose two SA type algorithms, based on the CSA developed by Wah et al. (2006), for solving the integral constrained problem in Eq. 1 by finding an ESP solution to the minmax problem in Eq. 2. We proceed with the construction of these algorithms in the following section.

3.1 Two simulated annealing type algorithms

Let us present the first algorithm proposal, i.e. the MCWMSA.

3.1.1 Monte carlo within metropolis simulated annealing (MCWMSA)

The MCWMSA algorithm is based on the combination of the Monte Carlo Within Metropolis (MCWM) of Andrieu and Roberts (2009) and the CSA of Wah et al. (2006). The MCWM is an iterative sampling algorithm where the MCMC uses an approximation of the target function in its execution. The MCWMSA consists of replacing the analytically intractable penalty function, \(L(x,\lambda )\), required for the computation of the acceptance probability in the CSA by \(L^{M}(x,\lambda )\) such that:

In the next few lines, we shall present the details of the first Algorithm.

3.1.2 Algorithm 1

Let \((x,\lambda )\) be a point in the \(\mathcal {X}\times \mathbb {R}_{+}^{r}\) space and let \(G(x,\lambda ;x',\lambda ')\) be an arbitrary proposal transition function satisfying the condition \(G(x,\lambda ;x',\lambda ')>0\) if and only if \(G(x',\lambda ';x,\lambda )>0\). Denote the target function corresponding to the minmax problem 2 by:

where \(T_{k}=T_{0}a^{k}\) (as in Wah et al. (2006)) is the temperature at the k-th iterate of the algorithm, \(T_{0}\) the initial temperature, and \(a\in (0,1)\) the cooling rate. After setting the length of the Markov Chain N and the initial state \((x,\lambda )\) with \(\lambda =0\), at the kth iteration the MCWMSA consists of the following steps:

-

1.

Let \((x_{k,0},\lambda _{k,0})\) be the current state and \(T_{k}\) the current temperature, then use the MCWM algorithm to update the Markov Chain \((x_{k,\ell },\lambda _{k,\ell })\), \(\ell = 1,\ldots , N\), as follows:

-

1.a

Draw \(u_{k,\ell }\sim \mathcal {B}er(q)\) where \(\mathcal {B}er(\cdot )\) denotes a Bernoulli distribution with q the probability to make a proposal in the solution space and \(1-q\) the probability to make a proposal in the penalty space.

-

1.b

Draw a trial solution \((x_{k,\ell }^{*},\lambda _{k,\ell }^{*})\) from the proposal distribution \(G(x_{k,\ell -1}\), \(\lambda _{k,\ell -1};\) \(\cdot \), \(\cdot )\) given by:

$$\begin{aligned} G(x_{k,\ell -1},\lambda _{k,\ell -1};x_{k,\ell }^{*},\lambda _{k,\ell }^{*}) = {\left\{ \begin{array}{ll} H(x_{k,\ell -1},x_{k,\ell }^{*})\delta (\lambda _{k,\ell -1} - \lambda _{k,\ell }^{*}) &{}{\text {if }u_{k,\ell }=1}\\ \tilde{H}(\lambda _{k,\ell -1},\lambda _{k,\ell }^{*})\delta (x_{k,\ell -1}-x_{k,\ell }^{*}) &{} {\text {if }u_{k,\ell }=0 }, \end{array}\right. } \end{aligned}$$(7)where \(\delta (x-y)\) denotes a Dirac function which takes the value 1 if \(x=y\) and 0 otherwise. \(H(x,\cdot )\) and \(\tilde{H}(\lambda ,\cdot )\) are, respectively, the proposal distributions for generating samples for the decision variables and penalty variables. A detailed description of the proposal distributions \(H(\cdot ,\cdot )\) and \(\tilde{H}(\cdot ,\cdot )\) is presented in the next Subsection 3.1.3.

-

1.c

Draw importance samples \(y_{k,\ell ,1},\dots ,y_{k,\ell ,M}\) from \(q(\cdot \,;x_{k,\ell -1})\) and \(y_{k,\ell ,1}^{*}\), \(\dots \), \(y_{k,\ell ,M}^{*}\) from \(q(\cdot \,;x_{k,\ell }^{*})\).

Then compute:

$$\begin{aligned} w^{M}(x_{k,\ell }^{*},\lambda _{k,\ell }^{*};x_{k,\ell -1},\lambda _{k,\ell -1}) = {\left\{ \begin{array}{ll} \pi ^{M}(x_{k,\ell }^{*},\lambda _{k,\ell }^{*}) G_{l,l-1}&{}{\text {if }u_{k,\ell }=1}\\ \pi ^{M}(x_{k,\ell }^{*},\lambda _{k,\ell }^{*})^{-1} G_{l,l-1}&{}{\text {if }u_{k,\ell }=0},\\ \end{array}\right. } \end{aligned}$$(8)where: \(G_{l,l-1} = G(x_{k,\ell }^{*},\lambda _{k,\ell }^{*};x_{k,\ell -1},\lambda _{k,\ell -1})\), and:

$$\begin{aligned} w^{M}(x_{k,\ell -1},\lambda _{k,\ell -1};x_{k,\ell }^{*},\lambda _{k,\ell }^{*}) = {\left\{ \begin{array}{ll} \pi ^{M}(x_{k,\ell -1},\lambda _{k,\ell -1}) G_{l-1,l} &{}{\text { if }u_{k,\ell }=1}\\ \pi ^{M}(x_{k,\ell -1},\lambda _{k,\ell -1})^{-1}G_{l-1,l}&{}{\text { if }u_{k,\ell }=0},\\ \end{array}\right. } \end{aligned}$$(9)where: \( G_{l-1,l} = G(x_{k,\ell -1},\lambda _{k,\ell -1};,x_{k,\ell }^{*},\lambda _{k,\ell }^{*}). \)

Then compute:

$$\begin{aligned} \pi ^{M}(x_{k,\ell -1},\lambda _{k,\ell -1}) = \exp (-L^{M}(x_{k,\ell -1},\lambda _{k,\ell -1})/T_{k}), \end{aligned}$$and:

$$\begin{aligned} \pi ^{M}(x_{k,\ell }^{*},\lambda _{k,\ell }^{*}) = \exp (-L^{M}(x_{k,\ell }^{*},\lambda _{k,\ell }^{*})/T_{k}), \end{aligned}$$where:

$$\begin{aligned} g_{1}^{M}(x) = \dfrac{1}{M}\sum _{i=1}^{M}\dfrac{h(x_{k,\ell -1},y_{k,\ell ,i})}{q(y_{k,\ell ,i};x_{k,\ell -1})}\,\, \hbox {and}\,\, g_{1}^{M}(x_{k,\ell }^{*}) = \dfrac{1}{M}\sum _{i=1}^{M}\dfrac{h(x_{k,\ell }^{*},y_{k,\ell ,i}^{*})}{q(y_{k,\ell ,i}^{*};x_{k,\ell }^{*} )}. \end{aligned}$$Accept the trial solution \((x_{k,\ell }^{*},\lambda _{k,\ell }^{*})\) with probability:

$$\begin{aligned} A^{M}_{T_{k}}(x_{k,\ell -1},\lambda _{k,\ell -1};x_{k,\ell }^{*},\lambda _{k,\ell }^{*}) = \min \left\{ \dfrac{w^{M}(x_{k,\ell }^{*},\lambda _{k,\ell }^{*};x_{k,\ell -1},\lambda _{k,\ell -1}) }{w^{M}(x_{k,\ell -1},\lambda _{k,\ell -1};x_{k,\ell }^{*},\lambda _{k,\ell }^{*})},1\right\} , \end{aligned}$$(10)or reject it with probability \((1-A_{T_{k}}^{M}(x_{k,\ell -1},\lambda _{k,\ell -1};x_{k,\ell }^{*},\lambda _{k,\ell }^{*}))\).

-

1.a

-

2.

Stop the MCWMSA and keep both the last solution \((x_{k,N},\lambda _{k,N})\) and the corresponding estimated value of the minmax problem in Eq. 2 if the current temperature \(T_{k}\) is below a threshold value \(T^{*}\); otherwise, update k to \(k+1\), and proceed to step (1) with \((x_{k+1,0},\lambda _{k+1,0}) = (x_{k,N},\lambda _{k,N})\).

Algorithm#1 in Appendix C provides a pseudo-code for the MCWMSA.

3.1.3 Group independence metropolis hastings simulated annealing (GIMHSA)

Let us now turn to the presentation of the second proposed algorithm, i.e. the GIMHSA.

The GIMHSA is similar in spirit to the MCWMSA but differs in that the algorithm operates directly on an approximation of the minmax problem in Eq. 2 given by the following:

Since the minmax problem in Eq. 11 depends on the set of random vectors \(Y_{1:M}=\{Y_{1},\dots ,Y_{M}\}\), its ESP value, \(L^{*M}\), is an estimator of the ESP value, \(L^{*}\), of the problem in Eq. 2, and an ESP solution pair \((x^{*M},\lambda ^{*M})\) from the set of ESP solutions to problem 11 is an estimator of a ESP solution pair, \((x^{*},\lambda ^{*})\), of the problem 2.

In the next few lines, we now present in details the second algorithm proposal.

3.1.4 Algorithm 2

Let \((x,\lambda )\) be a point in the \(\mathcal {X}\times \mathbb {R}_{+}^{r}\) space and let \(y_{1:M}=\{y_{1},\dots ,y_{M}\}\) be a random sample of size M with \(y_{i}\in \mathcal {Y}\) and \(Y_{i}\sim q_{x},~\forall i\). Also, let \(G(x,\lambda ;x',\lambda ')\) be an arbitrary proposal transition function satisfying the condition \(G(x,\lambda ;x',\lambda ')>0\) if and only if \(G(x',\lambda ';x,\lambda )>0\). Denote the target function corresponding to the minmax problem 11 by:

where \(T_{k}=T_{0}a^{k}\) is the temperature at the k-th iteration of the algorithm, \(T_{0}\) the initial temperature, and \(a\in (0,1)\) the cooling rate. After setting the length of the Markov Chain N and the initial state \((x,\lambda )\) with \(\lambda =0\), the GIMHSA iterates the following steps:

-

1.

Let \((x,\lambda )\) be the current state, \(y_{1:M}=\{y_{1},\dots .y_{M}\}\) the current collection of importance samples drawn independently from \(q_{x}\), and \(T_{k}\) the current temperature. Compute:

$$\begin{aligned} \pi ^{M}(x,\lambda ) \propto \exp (-L^{M}(x,\lambda )/T_{k}),~~{\text {with}}~~ g_{1}^{M}(x) = \dfrac{1}{M}\sum _{i=1}^{M}\dfrac{h(x,y_{i})}{q_{x}(y_{i})}, \end{aligned}$$then use the Metropolis Hastings algorithm to update the Markov Chain N times as follows:

-

1.a

Draw \(u\sim \mathcal {B}er(q)\) where q is the probability to make a proposal in the solution space and \(1-q\) the probability to make a proposal in the penalty space.

-

1.b

Draw a trial solution \((x^{*},\lambda ^{*})\) from the proposal distribution \(G(x,\lambda ;\cdot ,\cdot )\) given by:

$$\begin{aligned} G(x,\lambda ,x^{*},\lambda ^{*}) = {\left\{ \begin{array}{ll} H(x,x^{*})\delta _{\lambda }(\lambda ^{*}) &{}{\text {if }u=1}\\ \tilde{H}(\lambda ,\lambda ^{*})\delta _{x}(x^{*}) &{} {\text {if }u=0 }, \end{array}\right. } \end{aligned}$$(13)where \(H(x,\cdot )\) and \(\tilde{H}(\lambda ,\cdot )\) are respectively the proposal distributions for generating samples for the decision variables and penalty variables. A detailed description of the proposal distributions \(H(\cdot ,\cdot )\) and \(\tilde{H}(\cdot ,\cdot )\) is presented in Sect. 3.1.3.

-

1.c

Draw importance samples \(y_{1}^{*},\dots ,y_{M}^{*}\) from \(q_{x^{*}}\). Then compute:

$$\begin{aligned} w^{M}(x^{*},\lambda ^{*};x,\lambda ) = {\left\{ \begin{array}{ll} \pi ^{M}(x^{*},\lambda ^{*}) G(x^{*},\lambda ^{*};x,\lambda ) &{}{\text { if }u=1}\\ \pi ^{M}(x^{*},\lambda ^{*})^{-1} G(x^{*},\lambda ^{*};x,\lambda ) &{}{\text { if }u=0}, \end{array}\right. } \end{aligned}$$(14)where:

$$\begin{aligned} \pi ^{M}(x^{*},\lambda ^{*}) \propto \exp (-L^{M}(x^{*},\lambda ^{*})/T_{k}),~~{\text {with}}~~ g_{1}^{M}(x^{*}) = \dfrac{1}{M}\sum _{i=1}^{M}\dfrac{h(x,y_{i}^{*})}{q_{x^{*}}(y_{i}^{*})}. \end{aligned}$$Accept the trial solution \((x^{*},\lambda ^{*})\) and \(y_{1:M}^{*}\) with probability:

$$\begin{aligned} A_{T_{k}}^{M}(x,\lambda ;x^{*},\lambda ^{*}) = \min \left\{ \dfrac{w^{M}(x^{*},\lambda ^{*};x,\lambda ) }{w^{M}(x,\lambda ;x^{*},\lambda ^{*})},1\right\} , \end{aligned}$$(15)or reject it with probability \((1-A_{T_{k}}^{M}(x,\lambda ;x^{*},\lambda ^{*}))\).

-

1.a

-

2.

Stop the GIMHSA and keep both the last solution \((x,\lambda )\), \(y_{1:M}\) and the corresponding estimated value of the minmax problem in Eq. 11 if the current temperature \(T_{k}\) is less than \(10^{-6}\); otherwise, update k to \(k+1\), and proceed to step (1).

It is worth noting that the GIMHSA procedure may also be viewed as an approximation of a Metropolis Hastings algorithm with target density \(\pi (x,\lambda )\), making it a special case of the MCWMSA algorithm. This dual interpretation of the GIMHSA presents an opportunity for designing algorithms that inherit the potential efficiency of a Markov Chain with a one-step transition kernel (denoted later on \(P_{GIMHSA}\), see sub-Section 3.2 below) while still being able to produce samples from the target function, and not an approximation. A pseudo-code for the GIMHSA is provided in Algorithm#1 in Appendix C.

3.1.5 Proposal distributions \(H(x,x')\) and \(\tilde{H}(\lambda ,\lambda ')\)

In generating random points, \((x',\lambda ')\), from the neighbourhood, \(\mathcal {N}(x,\lambda )\), of the current point \((x,\lambda )\) using the proposal distributions \(G(x,\lambda ;x',\lambda ')\), we start off, at each iteration step, by making a decision either to update the decision variables, x, while the penalty factors are kept fixed or as an alternative to update the penalty factor, \(\lambda \), maintaining the decision variables constant. In our Metropolis Hastings SA version, decision variables, x, need to be updated more frequently than the penalty factors, \(\lambda \), to enforce the convergence of the algorithm. Thus, we choose large values of the generation ratio, q, for example \(q=20n/(20n+r)\) with n and r being, respectively, the number of decision variables and the number of constraints. Upon doing this, we then draw trial solutions for the decision variables from \(H(x,\cdot )\) and the penalty factors from \(\tilde{H}(\lambda ,\cdot )\). Each of these proposal distributions are described below.

As regards to the proposal distribution \(H(x,\cdot )\) for the decision variables, given the challenging task of finding one good proposal distribution in n dimensions for generating \(x'\) from the neighbourhood of x, we propose n good proposal distributions for updating each component of x. However, to improve the efficiency of the algorithm, we only make \(x'\) to differ from x in the j-th component at each iteration step. Let j be a uniform number in \(\{1,2,\dots ,n\}\) and \(\{\sigma _{j}: 1\le j\le n\}\), a set of parameters where \(\sigma _{j}\) represents the scale parameter for the proposal distribution of the j-th decision variable. We generate a trial solution for the decision variables as follows:

where \(\mathbf{{e}}_{j}^{(1)}=(e_{j,1}^{(1)},\dots ,e_{j,n}^{(1)})\) is the j-th column of an \(n\times n\) identity matrix and \(\theta _{j}\) is Cauchy distributed as such:

In addition, we dynamically update the scale parameter of the Cauchy proposal corresponding to the \(j-\)th component of the decision variable, \(\sigma _{j}\) according to the following rule (see Andrieu and Thoms (2008)):

where \({\overline{A}}_{j,T_{k}}\) denotes the simple average of the acceptance probabilities associated with the \(j-\)th decision variable at the k-th temperature step, and A is the target acceptance rate set to 0.44 as in Andrieu and Thoms (2008).

In generating the trial point \(\lambda '\) from \(\tilde{H}(\lambda ,\cdot )\), we follow a similar strategy to that of the decision variables. More precisely, we generate \(\lambda '\) so that it differs from \(\lambda \) only in the j-th component where j is a uniform random number on the set \(\{1,2,\dots ,I\}\). A trial point for \(\lambda _{j}'\) is drawn from a uniform distribution over the interval \((a_{j}^{(\lambda )}, b_{j}^{(\lambda )})\) with \(a_{j}^{(\lambda )}= \max \{\lambda _{j}-w_{j}^{(\lambda )}h_{j}(z), 0\}\), \(b_{j}^{(\lambda )}= \min \{\lambda _{j}+w_{j}^{(\lambda )}h_{j}(z), 10,000\}\) and \(w_{j}^{(\lambda )}\) represent a scale parameter that controls the width of the search space. Note that the neighbourhood of \(\lambda _{j}\) from which \(\lambda _{j}'\) is generated is adjusted according to the degree of constraint violation. Thus, whenever \(g_{j}(x)=0\) is satisfied, \(\lambda _{j}\) does not need to be updated. Furthermore, we adjust the scale parameter, \(w_{j}^{(\lambda )}\), according to the following rule:

where we set, experimentally, \(\eta _{0}=1.25\), \(\eta _{1}=0.95\), \(\tau _{0}=1.0\) and \(\tau _{1}=0.01\) so that the MH chain is not moving too fast in the penalty subspace. That is, when \(g_{j}(x)\) is reduced too quickly (i.e. \(g_{j}(x)< \tau _{1} T \) is satisfied), \(g_{j}(x)\) is over-weighted, leading to a possibly poor objective value or to some difficulties in satisfying other under-weighted constraints. Hence, we reduce \(\lambda _{j}\)’s neighborhood. In contrast, if \(g_{j}(x)\) is reduced too slowly (i.e. \(g_{j}(x)> \tau _{0} T \) is satisfied), we enlarge \(\lambda _{j}\)’s neighborhood in order to improve its chance of satisfaction. Note that \(w_{j}^{\lambda }\) is adjusted using T as a reference because constraint violations are expected to decrease when T decreases.

3.2 Asymptotic convergence of MCWMSA and GIMSA

In this section, we show the asymptotic convergence of both the MCWMSA and GIMSA to a constrained global minimum in a discrete constrained optimization problem.

3.2.1 Asymptotic convergence of MCWMSA

The MCWMSA algorithm (Algorithm 1) describes a Markov Chain \((x_{i},\lambda _{i})\), \(i=1,2,\ldots \), in the state vector \((x,\lambda )\) since the auxiliary variables y are drawn independently at each iteration. Modeling this chain as an Inhomogeneous Markov Chain that consists of a sequence of Homogeneous Markov Chains of a finite length, each at a specific temperature in a cooling schedule. The one-step transition kernel, \(P_{MCWMSA}(x,\lambda ;x',\lambda ')\), of the MCWMSA algorithm from \((x,\lambda )\) to \((x',\lambda ')\) is:

where:

and \(\mathcal {N}(x,\lambda )\) denotes the neighborhood of \((x,\lambda )\), \(q_{x}^{M}\) the joint probability distribution of the importance densities and \(G(x,\lambda ;x',\lambda ')\), the proposal distribution of \((x',\lambda ')\in \mathcal {N}(x,\lambda )\) conditional on \((x,\lambda )\) which satisfies:

with:

Since the acceptance probability in the MCWMSA depends upon an approximation of the target function, the invariant distribution of \(P_{MCWMSA}\) is not \(\pi {(x,\lambda )}\) and therefore MCWMSA will not produce samples from \(\pi {(x,\lambda )}\) even in a steady state. However, by relying on the ergodicity of a one-step transition kernel, \(P_{MCWM}\), of an MCWM sampling procedure, Andrieu and Roberts (2009) show that these samples are distributed asymptotically according to an approximation of \(\pi {(x,\lambda )}\), which should be all more precise, as the sample size grows large. In view of this, it suffices to show that the Markov Chain is ergodic.

Theorem 1

Let \((x,\lambda )\) denote the state of the inhomogeneous Algorithm 1. The couple \((x,\lambda )\) is strongly ergodic if the annealing schedule \(\{T_{k}: k=0,1,\dots \}\) satisfies:

where:

Proof

See Appendix A. \(\square \)

3.2.2 Asymptotic convergence of GIMHSA

In this case, the sequence \((x_{i},\lambda _{i})\), \(i=1,2,\ldots \), is no longer a Markov Chain, but rather the triplet \((x_{i},\lambda _{i},y_{i})\), \(i=1,2,\ldots \), is. The GIMHSA defines a Markov Chain with a one-step transition kernel, \(P_{GIMHSA}(x,\lambda ,y;x',\lambda ',y')\), given by:

where:

Since there exists an integer M so that \(P_{GIMHSA}^{M}(x,\lambda ,y;x'\),\(\lambda ',y')>0\), for all \((x,\lambda ,y),(x',\lambda ',y')\) the chain is irreducible. The chain is also aperiodic, thus the samples \((x_{i},\lambda _{i})\) produced are distributed in the limit as \(i\rightarrow \infty \) according to the target function \(\pi ^{M}(x,\lambda )\). The GIMH can be viewed as a special case of the MCWM sampling strategy, thus the irreducible and aperiodic property of the GIMHSA sampling scheme can easily be deduced from the weak ergodicity property of the MCWMSA algorithm.

4 Numerical illustrations

In this section, we illustrate the efficiency of our algorithms by first conducting an inferential exercize on reliability in stress-strength problems and, second, optimization exercizes for financial portfolio allocation problems.

To begin with, we make explicit all the necessary parameters required in the successful implementation of the SA algorithms. These include the parameters constituting the cooling schedule, i.e. the initial temperature (\(T_{0}\)), the cooling function, the Metropolis loop length (\(N_{T}\)), and the stopping condition. The logarithmic cooling schedule is a well known schedule that guarantees convergence. Nonetheless, this requires infinitely slow cooling. In lieu, most users prefer the geometric cooling proposed by Kirkpatrick et al. (1983), as such:

where \(T_{0}\) and a, respectively, denote the initial temperature and the cooling rate. Consistent with the literature, the cooling rate, \(0.8< a < 0.99\), which has been shown to be quite effective across many applications (e.g., Stander and Silverman (1994), Wah et al. (2006)) is set to 0.95 in this article. Results of robustness checks are made available in Appendix B. The closer the value of the cooling factor a is to 1, the slower the rate of decay. As for the initial temperature, \(T_{0}\), we choose a value to ensure that around 60% of initial uphill moves are accepted (as in Rayward-Smith et al. (1996)), or similarly to achieve an acceptance probability of about 0.90 irregardless of the value of the cost function (e.g., Rutenbar (1989), Bohachevsky et al. (1986)). To ensure that the simulated annealing spends sufficient time to thoroughly explore local optimal at each temperature, we fixed a priori the number of iterations, \(N_T\), to 1, 000 (as in Fisk et al. (2022)). The process is terminated at a temperature, \(T^{*}\), low enough that useful improvements can be expected anymore. Arguably, higher values of \(T^{*}\) would downgrade the precision of the output; on the contrary, smaller values would increase the accuracy of the numerical solution, but at the cost of a larger computing time. On this basis, we fixed the terminating temperature to \(10^{-6}\) (see van Laarhoven et al. (1992) and Fisk et al. (2022)).

4.1 First application: reproduction of inference and reliability in a stress-strength problem

For the sake of a better demonstration of the two new algorithms, we first employ a low dimension optimization problem given by the maximization of an integral constrained likelihood for a stress-strength reliability problem. We consider the inferential exercize on reliability in stress-strength problems with independent Exponentiated Exponential (EE) distributions (as in Gupta and Kundu (2001)) studied by Smith et al. (2015). This problem fits the form of Eq. 1 and reads:

where \(l(\cdot )\) is the Log-likelihood, \(\phi \) the parameter vector, x and y are, respectively, outcomes of random variables X and Y, and \(\psi _0\) the target reliability value.

Given two random samples \(x=\{x_{1},\dots ,x_{n}\}\) and \(y=\{y_{1},\dots , y_{m}\}\) drawn, respectively, from two independent EE distributionsFootnote 2, \(F_{X}(\cdot )\) and \(F_{Y}(\cdot )\), \(l({\varvec{\phi }};x,y)\) in Eq. 23 is given by:

and is the Log-likelihood function of the parameter vector (decision variables) \({\varvec{\phi }}=(\alpha _{1},\beta _{1},\alpha _{2},\beta _{2})\) of two independent exponentiated exponential (EE) distributions.

It is important to note that even though this problem is only four-dimensional, it is still a challenging problem. The Log-likelihood, \(l(\cdot )\), fails a number of commonly assumed properties of Log-likelihood functions which provide support and guarantee the parameter estimation results obtained when classical optimization techniques are applied. For instance, the Log-likelihood of the exponentiated exponential distribution is neither a concave, nor even a quasi- or pseudo-concave function; there exists a small region where the gradient of \(l(\cdot )\) vanishes with a negative definite Hessian matrix; elsewhere, a frequent change in the sign of the determinant of the Hessian matrix is observed (see Smith et al. (2015) for a detailed discussion). These observations therefore suggest that extreme care has to be taken should a derivative-based technique be opted for.

In problem 23, \(\mathcal {R}\) denotes the reliability between two EE distributions. Within the reliability framework, the stress-strength model describes the life of a component which has a random strength X that is subjected to a random stress Y. The component is adjudged to fail immediately the stress surpasses the strength, otherwise the component is considered to function properly (i.e. \(X>Y\)). Therefore, \(\mathcal {R}= Pr(Y <X)\) is a suitable measure for quantifying reliability. Applications of this concept can be found in many areas, especially in engineering concepts such as structures, deterioration of rocket motors, static fatigue of ceramic components, fatigue failure of aircraft structures, and the aging of concrete pressure vessels. In our case, given that both stress, Y, and strength, X, are described by independent EE distributions, then the reliability \(\mathcal {R}\) of the random variables can be written as (see Kundu and Gupta (2005)):

Except in a few exceptional cases, the integral in Eq. 25 is known not to be analytically reducible (see Nadarajah (2011)). In addition, \(\mathcal {R}\) has been shown to be homogeneous of degree 0 in \((\beta _{1},\beta _{2})\), upon the introduction of a change of variable.Footnote 3 This observation suggests that the contours of \(\mathcal {R}\) are constant along the line in the parameter space satisfying \(\beta _{2}=c\beta _{1}\), with c being a real constant.

While noting that the probability density function, \(f_{X}(\cdot )\), of the EE distribution, \(EE(\alpha _{1},\beta _{1})\), can be expressed as:

then the integral in Eq. 25, \(\mathcal {R}\), can be written as:

We sample directly from the distribution:

with samples (\(M=10,000\) in Eq. 4) drawn by using:

during the execution of our algorithms.

Smith and Wong (2017) present the difficulties (i.e. numerical accuracy issues, poor performances when the value of \(\psi _{0}\) is close to the boundary) involved in using the Lagrange multiplier approach to solve the integral constrained problem 1. They also describe an application of simulated algorithms to integral constrained problems. Ours defer from theirs in a number of ways. First, while Smith and Wong (2017) optimized sequentially the penalty function, by gradually increasing the penalty value until convergence is reached, the decision variables as well as the penalty values are simultaneously optimized within our Monte Carlo styled strategy. Our strategy when compared to the sequential optimization procedure adopted by Smith and Wong (2017), has the potential of considerably reducing the computational time required for the optimization exercize. Second, the Smith and Wong (2017) approach is based on optimizing an approximation of the penalty function while we offer two ways of solving the problem, i.e. either by an approximated problem or by an approximation algorithm. We shall see in the following, that even with a small sample, our simulated annealing algorithms perform efficiently and effectively in estimating the parameters of the integral constrained likelihood problem.

Finally, we illustrate the application of our algorithms by using the simulated data generated in Smith et al. (2015) reported in Table 1 below.

The data is generated by drawing random samples of size 10 each from the two EE distributions, EE(2, 3) and EE(5, 1.6015), with \(\psi _{0}=0.1\) as the corresponding value on reliabilityFootnote 4, \(\mathcal {R}\).

Given the above set-up, we executed our stochastic optimization algorithms 10 times each on a penalty function representation of the integral constrained problem 23. Figure 1 displays the convergence towards the optimal Log-likelihood obtained by Smith et al. (2015) of a run, each, of our two algorithms. From this figure there seems to be no difference in the rate of convergence of the algorithms. In contrast with the GIMHSA algorithm, the MCWMSA algorithm requires more time in its execution largely because of the need to compute the penalty function twice at each iteration step (see Eq. 5). In Table 2, we report the parameter estimates obtained by Smith et al. (2015) alongside the statistical results (i.e. mean and standard deviation) obtained from 10 independent runs of our algorithms.

The results in Table 2 indicate that on average the GIMHSA algorithm outperforms other methods as it is the algorithm with the lowest constraint violation (i.e. \(\mathcal {R}-0.1\)) estimate.Footnote 5

4.2 Second application: portfolio optimizations in a simple gaussian world

As a second illustration, we implement our algorithms to a set of portfolio optimization problems with positivity constraint on the weights, namely the Markowitz (1952) mean-variance portfolio optimization with positive constraint on the weights.

The Markowitz mean-variance problem with short-selling constraints is taken up in the section to demonstrate the effectiveness of our algorithms. Using this problem as a benchmark, we are able to assess the quality of the solutions of our algorithms, since an optimal portfolio to the Markowitz mean-variance problem can easily be obtained using, for example, Quadratic Programming (QP).

Suppose we have N assets to choose from, let \(\mathbf{W}^{T}=(w_{1},\dots ,w_{N})\) be the transposed vector of weights with \(w_{i}\in \mathbb {R}\) being the weight of the i-th asset in the portfolio \(\mathbf{X}^{T}=(x_{1},\dots ,x_{N})\). Also, let \(\mathbf{r}^{T}=(r_{1},\dots ,r_{N})\) be a transposed real random vector with \(r_{i}\), \(i=1,\dots , N\), the return on asset i. Furthermore, we define \(\mathbf{r}\) by the following affine transformation:

where \({\varvec{\varSigma }}\in \mathrm {R}^{N\times N}\) is a non-singular, positive definite matrix (called precision matrix), \(\varvec{\mu }\in \mathrm {R}^{N}\) a real vector and \(\varvec{\epsilon }\) a real random vector. Following these assumptions, the random variable \(R\in \mathbb {R}\) representing the return on the whole portfolio can be expressed as:

with the corresponding expected value (E[R]) and variance (var(R)) given as:

and:

where \(f(\cdot )\) denotes the probability density function of \(\varvec{\epsilon }\). Under certain distributional assumptions on \(\varvec{\epsilon }\), solutions to the integrals in Eq. (29) and (30) can be obtained in closed-forms.

Subject to the above assumptions and given a desired level of expected portfolio returns, \(R_{exp}\), the Makowitz mean-variance portfolio selection problem can be summarized as follows:

where \(\mathbf{W}^{T} = (w_{1},\dots , w_{N})\).

Given the multivariate normal assumption on \(\varvec{\epsilon }\) in the simple Gaussian world of this first application, we numerically evaluate the portfolio expected return and variance following a Monte Carlo procedure. Let \(\varvec{\epsilon }_{i}\), \(i=1,2,\dots , M\) be a sample of size M drawn from the multivariate normal distribution, \(f(\cdot )\), then the integrals in the definition of portfolio expected returns and variance in Eqs. (29) and (30) are evaluated using the empirical counterparts as follows:

and:

The consistency of the Monte Carlo approximation, for \(M\longrightarrow \infty \), is guaranteed by the strong law of large numbers (see Robert and Casella (2013), Sect. 3.3, for further details).

We explore some of the properties of the portfolio optimization problem and propose a slight variation of the sampling procedure described in Section 3.1.3 by limiting our algorithms to the consideration of solutions that strictly satisfy the return and the budget constraints. More precisely, we follow the proposal by Crama and Schyns (2003) who state that in problem 31 given a portfolio \(\mathbf{W}\), the neighborhood of the current \(\mathbf{W}\) contains all solutions \(\mathbf{W}^{*}\) with the following property: there exist three assets, labeled \(i_{1}\), \(i_{2}\) and \(i_{3}\), without loss of generalityFootnote 6, so that:

where S is a Cauchy distributed random variable and \(\alpha \) a tuning parameter set equal to:

where \(r_{i_{1}}\), \(r_{i_{2}}\) and \(r_{i_{3}}\) corresponds to the returns on the three randomly selected assets whose weights are to be modified.

Furthermore, in the presence of a minimum (\({\underline{w}}_{i}\)) and a maximum (\({\overline{w}}_{i}\)) restriction on the proportion of total investment that can be held in asset i (\(i=1,2,\dots ,N\)), then a feasible interval of variation for S to ensure that a move from the current solution W to \(W^{*}\) satisfies the floor and ceiling restrictions can be constructed. Suppose the three assets (say, \(i_{1}\), \(i_{2}\) and \(i_{3}\)) to be modified for a move from W to \(W^{*}\) are known, then we arrive at the following interval of variation for S:

by combining the ceiling and floor restrictions with Eq. (32).

To empirically assess our algorithms on the mean-variance optimization problem, we next consider three databases (US, Chinese, and French stock markets) covering different economic sectors and used in Costola et al. (2022). The daily prices on US, French, and Chinese Stock Markets covers, respectively, the period 19/3/2008 to 31/12/2019, 6/4/2006 to 4/10/2019 and 18/8/2008 to 31/12/2019. Our algorithm finds the exact optimal risk for all values of the targeted expected returns. The algorithm requires between 58 and 70 seconds per portfolio of between 29 and 40 securities within a standard setting (see Table 4). The efficient frontier for these instances are plotted in Fig. (2).

The steps for generating the efficient frontiers in Fig. 2 are briefly presented in Algorithm#1 in Appendix C, following Calvo et al. (2012).

Efficient Frontiers in the US, Chinese and French Equity Markets. Note. Are plotted here the Markowitz Mean-variance Efficient frontiers obtained using: Quadratic programming (Quadratic Prog.), Monte Carlo Within Metropolis Simulated Algorithm (MCWMSA), and Group Independent Metropolis Hastings Simulated Annealing (GIMHSA). See Table 4 for the performances of the various algorithms with some precise examples

The solutions obtained with the two algorithms are very close to the ones resulting from QP for the three stock markets considered, as simple eyeball analyses of Figs. 2 and 3 reveal. The difference in the optimal portfolio variance obtained with QP and SA for a given targeted expected return is of the order of \(10^{-4}\) (see Fig. 3), which is negligible for the applications of SA to asset allocation problem.

4.3 Third application: mean-expected shortfall problems in a non-gaussian world

As a third illustration, we implement our algorithms in the case of the Mean-Expected Shortfall problem (as in Casarin and Billio (2007)), when the data are Non-Gaussian and the constraint a true integral, for which few approaches have been developed in the literature to the best of our knowledge. As previously, the return vector \(\mathbf{r}^{T}=(r_{1},\dots ,r_{N})\) with \(r_{i}\), \(i=1,\dots , N\), being the return on asset i, is defined by the affine transformation in Eq. 27 and the corresponding portfolio return, \(R\in \mathbb {R}\), is given in Eq. 28.

Considering the Expected Shortfall of the portfolio, \(ES_{\alpha }(R)\), as the measure of risk, the goal is to maximize the E[R] subject to \(ES_{\alpha }(R)\) among several other constraints. This problem fits the form of Eq. 1, so that, for \(\alpha \in [0,1]\):

where:

and \(\underline{R}\) a return threshold, and with:

where \(F_{L}(x) = Pr(L\le x)\).

Except for some ad hoc densities, the exact formula for the ES is not available, which often renders the portfolio selection problem a challenging exercize to undertake.

In our case, suppose the vector of returns on the assets satisfies the affine transformation defined by Eq. 27, then the Expected Shortfall on the portfolio return leads to:

For illustrative purposes, we now assume that the vector of asset returns is characterized by a class of Multivariate Skew Student-t distributions which accounts for fat tails and skewness, in the more realistic Non-Gaussian world of this second application. More precisely, we assume that the random vector \({\varvec{\epsilon }}^{T}=(\epsilon _{1},\dots ,\epsilon _{N})\) in Eq. 27 follows a Multivariate Skew Student-t distribution, \(Sk\mathcal {T}_{N}(\mathbf{\varvec{\epsilon }};0,I_{N},\nu ,\gamma )\) with \(\nu =\{\nu _{1},\dots ,\nu _{N}\}\) the vector of degrees of freedom and \(\gamma = \{\gamma _{1},\dots , \gamma _{N}\}\) the vector of parameters that control the skewness of the distribution. The components \(\epsilon _{i}\) of \({\varvec{\epsilon }}\), \(i=1,2.\dots ,N\), are independent and Skew Student-t distributed with density:

and:

the density of a univariate Symmetric Student-t distribution. The mean and variance of \(\epsilon _{i}\) are respectively given as follows:

and:

Subject to the above assumptions, it can easily be shown that \(\mathbf{r}\) follows a Multivariate Skew Student-t distribution, \( Sk\mathcal {T}_{N}(\mathbf{r};\varvec{\mu },\varvec{\varSigma },\nu ,\gamma ) \), with a Variance-CoVariance of returns matrix given by:

where \(\varvec{\varXi }(\nu ,\gamma )\) is a diagonal matrix parametrized in \(\nu \) and \(\gamma \), with elements \(\xi _{i}=V(\epsilon _{i})\) on the main diagonal.

To simulate asset returns from a Skew Student-t distribution with \(\nu \) degrees of freedom and skewness \(\gamma \), we use the following stochastic representation. Let Z have a Bernoulli distribution with parameter \(1/(\nu ^{2} + 1)\) and X a Student-t distribution with \(\nu \) degrees of freedom, then we have:

To facilitate the implementation of our optimization strategy, we embed the following Monte Carlo evaluation of ES within our optimization scheme as briefly presented here below.

-

1.

Draw \(\varvec{\epsilon }_{j}^{T}= (\epsilon _{j1},\epsilon _{j2},\dots ,\epsilon _{jN})\sim Sk\mathcal {T}_{N}(\varvec{\epsilon }_{j};0,\mathrm {I}_{N},\nu ,\gamma )\), for \(j=1,\dots ,M\).

-

2.

Compute \({-\mathbf{W}^{T}{\varvec{\varSigma }}^{1/2}{\varvec{\epsilon }}_{j}}\) for \(j=1,2,\dots ,M\).

-

3.

Denote \(L_{j} = -\mathbf{W}^{T}{\varvec{\varSigma }}^{1/2}{\varvec{\epsilon }}_{j}\). If we order the sample \((L_{1},\dots , L_{M})\) so that \(L_{1,M}\ge L_{2,M}\ge \dots \ge L_{M,M}\) and:

$$\begin{aligned} \begin{aligned} P(L\le x)&= \int \mathrm {I}_{\{L\le x\}}f_{L}(l)dl = E[\mathrm {I}_{\{L\le x\}}]\\&\approxeq \dfrac{1}{M} \sum _{j=1}^{M}\mathrm {I}_{\{L_{j}\le x\}}, \end{aligned} \end{aligned}$$that is:

$$\begin{aligned} {\hat{P}}(L\le x) = \dfrac{1}{M}\sum _{i=1}^{M}\mathrm {I}_{\{L_{i,M}\le x\}}. \end{aligned}$$ -

4.

Evaluate:

$$\begin{aligned} ES_{\alpha }(R)= & {} - \mathbf {W}^{T}\varvec{\mu } + \dfrac{1}{\alpha }\int _{0}^{\alpha }\sum _{i=1}^{M-1} \left( L_{i,M} \mathrm {I}_{(\frac{i-1}{M}, \frac{i}{M}]}(u) + L_{M,M} \mathrm {I}_{(\frac{M-1}{M}, 1]}(u)\right) du\nonumber \\= & {} - \mathbf {W}^{T}\varvec{\mu } + \dfrac{1}{M\alpha }\left( \sum _{i=1}^{{\left\lfloor M\alpha \right\rfloor }}L_{i,M} + \left( M\alpha -{\left\lfloor M\alpha \right\rfloor } \right) L_{{\left\lfloor M\alpha \right\rfloor }+1 ,M} \right) , \end{aligned}$$(41)where \({\left\lfloor x \right\rfloor }\) and \(\mathrm {I}_{(a,b)}\) denote the greatest integer function and the indicator function on the interval (a, b), respectively.

As a first step in solving the portfolio optimization in Eq. 34, we consider a revised problem by exploring the convexity property of Expected Shortfall, i.e.:

and solve the following problem:

A pseudo-code for the Expected Shortfall efficient frontiers in Fig. 4 is given in Algorithm#1 in Appendix C.

In-view of the above set-up we next illustrate further the effectiveness of our SA type algorithms in two settings. The first exercize studies the effect of heavy tails and skewness of the asset distribution on the ES efficient frontier within a strategic allocation problem. The second one shows the effect of model specification errors on the ES efficient frontier.

The first exercize considers a strategic asset allocation problem similar to the one in Casarin and Billio (2007). Strategic allocation decisions refer to long-term investments and usually involve a small number of assets, often represented as the benchmark of different asset classes. Long-term investors can mitigate the adverse effect arising from their exposure to the increasing volatility of the financial markets by imposing a constraint on their portfolio’s downside risk.

Table 5 presents the characteristics of the three asset classes used in Casarin and Billio (2007). Figure 4 displays the Mean-Expected Shortfall Efficient frontiers under different parameter settings of the Skew Student-t distribution.

The mean and scale parameters are the same across frontiers while the skewness and kurtosis vary across the frontiers. Our numerical results show that, for a given expected return level, the portfolio risk of normally distributed asset returns is lower than those of heavy-tailed asset returns (see Symmetric Normal and Symmetric Student-t frontiers in Fig. 4). For both normal and heavy tail distributions, the presence of (left) asymmetry in the distribution implies a larger risk when compared to the case of symmetric distribution (see Skew Normal and Skew Student-t frontiers in Fig. 4).

In the second exercize dedicated to Mean-Expected Shortfall optimization, we consider the asset classes used in the long-term global asset allocation with model risk studied in Boucher et al. (2013). The asset classes are represented by cash, stock and bond markets.Footnote 7 The main characteristics in terms of means and variance-covariances are presented in Table 6.

We first assume the investor is using a non-correctly specified model (a Normal distribution) for the returns, plugged into the ES optimization problem. Then, we evaluate the actual (realized) expected return and risk using the best flexible model (that is a Skew Student-t distribution) fitted on the real market data on the entire sample. A pseudo-code for the Expected Shortfall efficient frontiers with estimation errors (Fig. 5) is provided in Algorithm#1 in Appendix C.

Finally, we compare the two ex post biased frontiers with the optimal one obtained from the problem 42 with the best flexible model. In Fig. 5, the Expected Return and ES in the Normal case differ from the Skew Student-t since the Normal distribution assumption for the entire sample period is too restrictive and suffers from an estimation bias. As expected the Symmetric Normal frontier is above the two Skew Student-t frontiers (because of the better fitting of asymmetry and tails provided by the Skew Student-t). We shall also note that asymmetry and tail estimates are crucial for a correct evaluation of the frontier since the differences of the two Skew Student-t frontiers are small, while the differences between the Symmetric Normal and the Skew Student-t ones are large.

In this case (Dataset#6 and Application#3), as in all our previous exercizes (Dataset#1-#5 and Application#1-#3), the proposed MCWSA and GIMHSA algorithms reach convergence and are able to efficiently provide optimal solutions within a reasonable and realistic computational time for a practitioner.

Mean-Expected Shortfall Efficient Frontiers according to various Estimation Error Assumptions for the Returns for Dataset#6 and Application#3. Note. Are plotted here three Mean-ES efficient frontiers. The two first ones are obtained by using the incorrect return distribution (Symmetric Normal mean and ES in dashed black: “Sym. Normal Biased Efficient Frontier”), and the correctly estimated distribution (Skew Student-t mean and ES in dotted-dashed blue: “Skew Student-t Unbiased Efficient Frontier”). The third one is obtained using the optimal weights corresponding to the incorrect allocations (Symmetric Normal) all together with the mean and ES under the Skew Student-t assumption (solid red line: “Skew Student-t Biased Efficient Frontier”), where degrees of freedom and skewness parameters are estimated on the data

5 Conclusion

The problem of designing efficient algorithms for optimizing a non-differentiable function subject to an integral constraint whose solution is not available in a closed-form is an important one, in particular in Operational Research and more specifically in Finance. In this article, we propose two new stochastic optimization algorithms referred to as Monte Carlo within Metropolis Simulated Annealing (MCWMSA) and Group Independent Metropolis Hastings Simulated Annealing (GIMHSA), which both rely on the use of importance sampling. The latter employed Monte Carlo simulations in approximating the analytically intractable integral constrained problem, before using a Simulated Annealing-type algorithm, while the former used the Monte Carlo strategy in constructing an approximate algorithm. We were first able to prove some convergence properties of the two algorithms. We show that the sequence of samples generated by MCWMSA and GIMHSA is an ergodic Markov Chain process and the limiting distribution is the true target function.

We study first the performance of our algorithms by applying them to the problem of maximizing the integral constrained likelihood for the stress-strength reliability with independent exponentiated exponential distributions, as discussed in Smith et al. (2015). Our results confirm the effectiveness of our algorithms in solving the integral constrained problem. It also happens that GIMHSA gives the best results in the sense that it produces the lowest constraint violations.

As a complement, we secondly provide examples of potential uses in finance. A first simple benchmark show that they provide accurate approximations of the true solutions in the case of the classical portfolio optimization problem of Markowitz (1952), in which the constrained integral simply concerns the constrained expected return. We further confirm this basic result by numerically solving the positively constrained Expected Return / Expected Shortfall strategic asset allocation problems (as in Casarin and Billio (2007)). In the this case, efficient results are obtained. We also complement this later result using the dataset used in Boucher et al. (2013) for the sake of robustness and show the impact of some potential estimation errors on the estimated frontiers. Our results finally show that the two proposed algorithms quickly converge to practical optimal solutions, within a decent and operational computational time for risk and asset managers.

We can imagine several extensions of this work. On the algorithmic added-value of the article, we may think about parallel GIMSA with and without interactions. Also, application of the proposed algorithms to mixed-integer programming problems, such as the portfolio selection problem with or without a cardinality constraint (see Woodside-Oriakhi et al. (2011)) will be consider in further studies.

Regarding financial applications, competing with existing numerical approximations for VaR and ES when Cumulative Density Function analytical expressions are unknown, will be a decent challenge (see, for instance, Azzalini and Valle (1996) Skew Normal distribution). To complement this, the recently proposed joint approach for estimating VaR and ES (see Wang et al. (2018); Meng and Taylor (2020)) might be a target of interest for testing further our proposed improved approaches. Finally, tackling the full constrained problem of portfolio optimization in a dynamic setting would be beneficial for investors (see Costola et al. (2022)).

Notes

Without loss of generality, we only consider a minimization problem with equality constraints, since a maximization problem can be transformed into a minimization problem by negating the objective function while inequality constraints, say, \(g_{i}(x)\le 0\) can likewise be transformed into an equivalent equality constraint of the form \((g_{i}(x))^{+}=\max \{0,g_{i}(x)\}=0\).

We denote the EE distribution with \(EE(\alpha ,\beta )\) where \(\alpha \) and \(\beta \) are, respectively, the shape and scale parameters. The respective distribution function, \(F_{X}(\cdot )\), may be written following the definition by Gupta and Kundu (2001) as:

$$\begin{aligned} \begin{aligned} F_{X}(x;\alpha ,\beta )&= \left( 1-\exp (-\beta x) \right) ^{\alpha },~~\alpha>0, \beta>0, x>0. \end{aligned} \end{aligned}$$Smith et al. (2015) consider the change of variable \(z=\beta _{1}x\) to obtain:

$$\begin{aligned} \mathcal {R} = \alpha _{1}\int _{0}^{\infty } \exp (-\beta _{1}z) \left( 1- \exp {(-\beta _{1}z)} \right) ^{\alpha _{1}-1}\left( 1- \exp {(-(\beta _{2}/\beta _{1})z)} \right) ^{\alpha _{2}}dz, \end{aligned}$$while Nadarajah (2011) poses the transformation \(z=\exp (-\beta _{1} x)\) and obtains:

$$\begin{aligned} \mathcal {R} = \alpha _{1}\int _{0}^{1} \left( 1- z \right) ^{\alpha _{1}-1}\left( 1- z^{\beta _{2}/\beta _{1}} \right) ^{\alpha _{2}}dz. \end{aligned}$$All results are obtained using MatLab (version R2019a) on a laptop with a Intel(R) Core(TM) i7-5500U CPU @ 2.40GHz and 8GB RAM. The codes used in this article are available upon request from the corresponding author.

In our robustness checks we vary the value of main parameters of the algorithms, namely: a, N and \(T^{*}\), obtaining similar results in terms of efficiency and computational time (Cf. Appendix B).

We thank the first referee for noticing that the choice of the randomly chosen assets has no importance (since it can be generalized for all triplets of assets, and, more generally, to all sets of assets).

We follow Boucher et al. (2013) to build up our database: the “Equity” asset class is represented by a composite index of 95% of the MSCI Europe and 5% of the MSCI World; the “Bonds” asset class comes from the x-trackers II iBoxx Sovereigns Eurozone AAA UCITS 1C index (denoted as XBAT index); the “Money Market” is represented by the Euro Overnight Index Average (EONIA).

References

Andrieu, C., & Roberts, G. O. (2009). The pseudo-marginal approach for efficient Monte Carlo computations. The Annals of Statistics, 37(2), 697–725. https://doi.org/10.1214/07-aos574

Andrieu, C., & Thoms, J. (2008). A tutorial on adaptive MCMC. Statistics and Computing, 18(4), 343–373.

Anily, S., & Federgruen, A. (1987). Ergodicity in parametric nonstationary Markov chains: An application to simulated annealing methods. Operations Research, 35(6), 867–874. https://doi.org/10.1287/opre.35.6.867

Azzalini, A., & Valle, A.D. (1996). The multivariate skew-normal distribution. Biometrika 83(4):715–726, http://www.jstor.org/stable/2337278.

Beaumont, M. A. (2003). Estimation of population growth or decline in genetically monitored populations. Genetics, 164(3), 1139–1160. https://doi.org/10.1093/genetics/164.3.1139

Bergey, P. K., Ragsdale, C. T., & Hoskote, M. (2003). A simulated annealing genetic algorithm for the electrical power districting problem. Annals of Operations Research, 121(1), 33–55. https://doi.org/10.1023/A:1023347000978

Bertsekas, D. P. (2014). Constrained optimization and Lagrange multiplier methods. Cambridge: Academic press.

Bick, B., Kraft, H., & Munk, C. (2013). Solving constrained consumption-investment problems by simulation of artificial market strategies. Management Science, 59(2), 485–503. https://doi.org/10.1287/mnsc.1120.1623

Bohachevsky, I. O., Johnson, M. E., & Stein, M. L. (1986). Generalized simulated annealing for function optimization. Technometrics, 28(3), 209–217. https://doi.org/10.1080/00401706.1986.10488128

Boucher, C., Jannin, G., Kouontchou, P., & Maillet, B. (2013). An economic evaluation of model risk in long-term asset allocations. Review of International Economics, 21(3), 475–491. https://doi.org/10.1111/roie.12049

Calvo, C., Ivorra, C., & Liern, V. (2012). On the computation of the efficient frontier of the portfolio selection problem. Journal of Applied Mathematics, 2012, 1–25. https://doi.org/10.1155/2012/105616

Casarin, R., & Billio, M. (2007). Stochastic optimization for allocation problems with shortfall risk constraints. Applied Stochastic Models in Business and Industry, 23(3), 247–271. https://doi.org/10.1002/asmb.671

Castellano, R., Cerqueti, R., Clemente, G. P., & Grassi, R. (2021). An optimization model for minimizing systemic risk. Mathematics and Financial Economics, 15(1), 103–129. https://doi.org/10.1007/s11579-020-00279-6

Charalambous, C. (1978). A lower bound for the controlling parameters of the exact penalty functions. Mathematical programming, 15(1), 278–290. https://doi.org/10.1007/BF01609033

Costola, M., Maillet, B., & Yuan, Z. (2022). Mean–variance efficient large portfolios: A simple machine learning heuristic technique based on the two-fund separation theorem. Annals of Operations Research. https://doi.org/10.1007/s10479-022-04881-3

Crama, Y., & Schyns, M. (2003). Simulated annealing for complex portfolio selection problems. European Journal of Operational Research, 150(3), 546–571. https://doi.org/10.1016/S0377-2217(02)00784-1

Cramer, J.S. (1989). Econometric applications of maximum likelihood methods. CUP Archive.

D’Amico, S. J., Wang, S. J., Batta, R., & Rump, C. M. (2002). A simulated annealing approach to police district design. Computers and Operations Research, 29(6), 667–684. https://doi.org/10.1016/S0305-0548(01)00056-9

Dong, M. X., & Wets, R. J. B. (2000). Estimating density functions: A constrained maximum likelihood approach. Journal of Nonparametric Statistics, 12(4), 549–595. https://doi.org/10.1080/10485250008832822

Edrisi, A., Nadi, A., & Askari, M. (2020). Optimization-based planning to assess the level of disaster in the city. International Journal of Human Capital in Urban Management, 5(3), 199–206. https://doi.org/10.22034/IJHCUM.2020.03.02

Etgar, R., Shtub, A., & LeBlanc, L. J. (1997). Scheduling projects to maximize net present value - the case of time-dependent, contingent cash flows. European Journal of Operational Research, 96(1), 90–96. https://doi.org/10.1016/0377-2217(95)00382-7

Etgar, R., Gelbard, R., & Cohen, Y. (2017). Optimizing version release dates of research and development long-term processes. European Journal of Operational Research, 259(2), 642–653. https://doi.org/10.1016/j.ejor.2016.10.029

Feng, M. B., Maggiar, A., Staum, J., & Wachter, A. (2018). Uniform convergence of sample average approximation with adaptive importance sampling. In 2018 Winter Simulation Conference (WSC). IEEE. https://doi.org/10.1109/wsc.2018.8632370

Fisk, C. L., Harring, J. R., Shen, Z., Leite, W., Suen, K. Y., & Marcoulides, K. M. (2022). Using simulated annealing to investigate sensitivity of SEM to external model misspecification. Educational and Psychological Measurement. https://doi.org/10.1177/00131644211073121

Ftiti, Z., Tissaoui, K., & Boubaker, S. (2020). On the relationship between oil and gas markets: A new forecasting framework based on a machine learning approach. Annals of Operations Research, 313(2), 915–943. https://doi.org/10.1007/s10479-020-03652-2

Gilli, M., & Schumann, E. (2012). Heuristic optimisation in financial modelling. Annals of Operations Research, 193, 129–158. https://doi.org/10.1007/s10479-011-0862-y

Glizer, V.Y., & Turetsky, V. (2019). Robust optimization of statistical process control. In: 2019 27th Mediterranean Conference on Control and Automation (MED), IEEE, https://doi.org/10.1109/med.2019.8798539.

Gupta, R. D., & Kundu, D. (2001). Exponentiated exponential family: An alternative to gamma and Weibull distributions. Biometrical Journal: Journal of Mathematical Methods in Biosciences, 43(1), 117–130. https://doi.org/10.1002/1521-4036(200102)43:1<117::AID-BIMJ117>3.0.CO;2-R

He, Z., Wang, N., Jia, T., & Xu, Y. (2009). Simulated annealing and tabu search for multi-mode project payment scheduling. European Journal of Operational Research, 198(3), 688–696.

Homem-de-Mello, T. (2008). On rates of convergence for stochastic optimization problems under non-independent and identically distributed sampling. SIAM Journal on Optimization, 19(2), 524–551. https://doi.org/10.1137/060657418

Isaacson, D. L., & Madsen, R. W. (1976). Markov chains Theory and Applications. New York: Wiley.

Juditsky, A., & Nemirovski, A. (2020). Statistical Inference via Convex Optimization. Princeton University Press. https://doi.org/10.2307/j.ctvqsdxqd

Kirkpatrick, S., Gelatt, C. D., & Vecchi, M. P. (1983). Optimization by simulated annealing. Science, 220(4598), 671–680. https://doi.org/10.1126/science.220.4598.671

Kundu, D., & Gupta, R. D. (2005). Estimation of \(P[Y < X]\) for generalized exponential distribution. Metrika, 61(3), 291–308. https://doi.org/10.1007/s001840400345

van Laarhoven, P. J. M., Aarts, E. H. L., & Lenstra, J. K. (1992). Job shop scheduling by simulated annealing. Operations Research, 40(1), 113–125. https://doi.org/10.1287/opre.40.1.113

Markowitz, H. (1952). Portfolio selection. The Journal of Finance, 7(1), 77–91. https://doi.org/10.1111/j.1540-6261.1952.tb01525.x

de Mello, T. H., & Bayraksan, G. (2014). Monte Carlo sampling-based methods for stochastic optimization. Surveys in Operations Research and Management Science, 19(1), 56–85. https://doi.org/10.1016/j.sorms.2014.05.001

Meng, X., & Taylor, J. W. (2020). Estimating value-at-risk and expected shortfall using the intraday low and range data. European Journal of Operational Research, 280(1), 191–202. https://doi.org/10.1016/j.ejor.2019.07.011

Nadarajah, S. (2011). The exponentiated exponential distribution: A survey. AStA Advances in Statistical Analysis, 95(3), 219–251. https://doi.org/10.1007/s10182-011-0154-5

Porth, L., Boyd, M., & Pai, J. (2016). Reducing risk through pooling and selective reinsurance using simulated annealing: An example from crop insurance. The Geneva Risk and Insurance Review, 41(2), 163–191. https://doi.org/10.1057/s10713-016-0013-0

Rayward-Smith, V., Osman, I., Reeves, C., & Simth, G. (1996). Modern Heuristic Search Methods. New York: Wiley.

Ricca, F., & Simeone, B. (2008). Local search algorithms for political districting. European Journal of Operational Research, 189(3), 1409–1426. https://doi.org/10.1016/j.ejor.2006.08.065

Robert, C., & Casella, G. (2013). Monte Carlo statistical methods. Berlin: Springer.

Rutenbar, R. (1989). Simulated annealing algorithms: An overview. IEEE Circuits and Devices Magazine, 5(1), 19–26. https://doi.org/10.1109/101.17235

Ryu, D. W., Kim, J. I., Suh, S., & Suh, W. (2015). Evaluating risks using simulated annealing and building information modeling. Applied Mathematical Modelling, 39(19), 5925–5935. https://doi.org/10.1016/j.apm.2015.04.024

Seneta, E. (1981). Non-negative Matrices and Markov Chains. New York: Springer. https://doi.org/10.1007/0-387-32792-4

Shajin, D., Jacob, J., & Krishnamoorthy, A. (2021). On a queueing inventory problem with necessary and optional inventories. Annals of Operations Research. https://doi.org/10.1007/s10479-021-03975-8

Shapiro, A., Dentcheva, D., & Ruszczyński, A. (2014). Lectures on stochastic programming: Modeling and theory. US: SIAM.

Smith, B., & Wong, A. (2017). Simulated annealing of constrained statistical functions. In: Computational Optimization in Engineering - Paradigms and Applications, InTech, https://doi.org/10.5772/66069.

Smith, B., Wang, S., Wong, A., & Zhou, X. (2015). A penalized likelihood approach to parameter estimation with integral reliability constraints. Entropy, 17(6), 4040–4063. https://doi.org/10.3390/e17064040

Stander, J., & Silverman, B. W. (1994). Temperature schedules for simulated annealing. Statistics and Computing, 4(1), 21–32. https://doi.org/10.1007/bf00143921

Tayal, A., & Singh, S. (2018). Integrating big data analytic and hybrid firefly-chaotic simulated annealing approach for facility layout problem. Annals of Operations Research, 270(3), 489–514. https://doi.org/10.1007/s10479-016-2237-x

Wah, B. W., Chen, Y., & Wang, T. (2006). Simulated annealing with asymptotic convergence for nonlinear constrained optimization. Journal of Global Optimization, 39(1), 1–37. https://doi.org/10.1007/s10898-006-9107-z

Wang, H., Hu, Z., Sun, Y., Su, Q., & Xia, X. (2018). Modified backtracking search optimization algorithm inspired by simulated annealing for constrained engineering optimization problems. Computational Intelligence and Neuroscience, 2018, 1–27. https://doi.org/10.1155/2018/9167414

Woodside-Oriakhi, M., Lucas, C., & Beasley, J. (2011). Heuristic algorithms for the cardinality constrained efficient frontier. European Journal of Operational Research, 213(3), 538–550. https://doi.org/10.1016/j.ejor.2011.03.030

Zhang, C., Lin, Q., Gao, L., & Li, X. (2015). Backtracking search algorithm with three constraint handling methods for constrained optimization problems. Expert Systems with Applications, 42(21), 7831–7845. https://doi.org/10.1016/j.eswa.2015.05.050

Acknowledgements

We thank Jean-Luc Prigent for positive comments and encouragement on the preliminary draft of this article, as well as participants to the FEM2021 conference (Paris, on-line, June 2021), as well as the Editor in charge and two anonymous referees who significantly contributed to the improvement of the article. Roberto Casarin and Anthony Osuntuyi gratefully acknowledge the funding received from the EURO Horizon 2020 project on Energy Efficient Mortgages Action Plan (EeMAP), grant agreement No 746205 and the VERA project on economic and financial cycles under the grant number No. 479/2018 Prot. 33287 -VII / 16 of 08.06.2018. The usual disclaimers apply.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A proof to theorem 1: ergodicity of MCWMSA

The proof for Theorem 1 follows standard arguments for the convergence of Inhomogeneous Markov Chains (e.g., Isaacson and Madsen (1976) and Seneta (1981) for related results). More precisely, we first form a lower bound on the probability of reaching any solution from any local minimum, and then show that this bound does not approach zero too quickly. Let G be the generation probability that satisfies 19.

-

a.

Let:

$$\begin{aligned} \displaystyle \varDelta _{G} = \min _{\begin{array}{c} (x',\lambda ')\in \mathcal {N}({x,\lambda }),\\ {x,\lambda }\in \mathcal {S} \end{array}} G(x,\lambda ;x',\lambda ') \end{aligned}$$and:

$$\begin{aligned} \displaystyle \varDelta _{L^{M}} = 2\max _{\begin{array}{c} (x',\lambda ')\in \mathcal {N}(x,\lambda ),\\ (x'\lambda ')\in \mathcal {S},y,y'\in \mathcal {Y}^M \end{array}}\{|L^{M}(x',\lambda ') -L^{M}(x,\lambda )|\}, \end{aligned}$$be, respectively, the value of the smallest one-step solution generation probabilities and the size of the largest potential jump in the value of the auxiliary penalty function between any pair of trial solutions. \(\mathcal {S}=\mathcal {X}\times \mathbb {R}_{+}^{r}\) denotes the search space while \(\mathcal {N}(x,\lambda )\) represents the neighborhood of \((x,\lambda )\). If \((x,\lambda )\ne (x',\lambda ')\), then for all \((x,\lambda )\in \mathcal {S}\) and \((x',\lambda ')\in \mathcal {N}(x,\lambda )\), we have:

$$\begin{aligned} \begin{aligned}&P_{MCWMSA}(x,\lambda ;x',\lambda ') = \int _{\mathcal {Y}^{M}}\int _{\mathcal {Y}^{M}} G(x,\lambda ;x',\lambda ')A^{M}_{T_{k}}(x,\lambda ;x',\lambda ')q^{M}_{x}(y)q^{M}_{x'}(y')dydy' \\&\quad = G(x,\lambda ;x',\lambda ')\int _{\mathcal {Y}^{M}}\int _{\mathcal {Y}^{M}} A^{M}_{T_{k}}(x,\lambda ;x',\lambda ')q^{M}_{x}(y)q^{M}_{x'}(y')dydy' \\&\quad = {\left\{ \begin{array}{ll} G(x,\lambda ;x',\lambda ')\int _{\mathcal {Y}^{M}}\int _{\mathcal {Y}^{M}} \min \left\{ \exp \left( -\frac{(L^{M}(x',\lambda ')-L^{M}(x,\lambda ))}{T}\right) ,1\right\} q^{M}_{x}(y)q^{M}_{x'}(y')dydy', &{} {\text {if }u=1 }\\ G(x,\lambda ;x',\lambda ')\int _{\mathcal {Y}^{M}}\int _{\mathcal {Y}^{M}} \min \left\{ \exp \left( -\frac{(L^{M}(x,\lambda )-L^{M}(x',\lambda '))}{T}\right) ,1\right\} q^{M}_{x}(y)q^{M}_{x'}(y')dydy' , &{} {\text {if }u=0 } \end{array}\right. }\\&\quad \ge G(x,\lambda ;x',\lambda ')\exp \left( -\frac{\varDelta _{L^{M}}}{T_{k}}\right) \int _{\mathcal {Y}^{M}}\int _{\mathcal {Y}^{M}} q^{M}_{x}(y)q^{M}_{x'}(y')dydy'\\ \\&\quad \ge \varDelta _{G}\exp \left( -\frac{\varDelta _{L^{M}}}{T_{k}}\right) . \end{aligned} \end{aligned}$$(A.1) -

b.

For any \(\lambda \), let: