Abstract

The bounded degree sum-of-squares (BSOS) hierarchy of Lasserre et al. (EURO J Comput Optim 1–31, 2015) constructs lower bounds for a general polynomial optimization problem with compact feasible set, by solving a sequence of semi-definite programming (SDP) problems. Lasserre, Toh, and Yang prove that these lower bounds converge to the optimal value of the original problem, under some assumptions. In this paper, we analyze the BSOS hierarchy and study its numerical performance on a specific class of bilinear programming problems, called pooling problems, that arise in the refinery and chemical process industries.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Polynomial programming is the class of nonlinear optimization problems involving polynomials only:

where f and all \(g_j\) are n-variate polynomials. We will assume throughout that

-

the feasible set

is compact;

is compact; -

for all \(x \in F\) one has

.

.

The second condition is theoretically without loss of generality (by scaling the \(g_j\)).

In general, these problems are \(\mathcal {NP}\)-hard, since they contain problems like the maximum cut problem as special cases; see e.g. Laurent (2009). In 2015, Lasserre et al. introduced the so-called bounded degree sum-of-squares (BSOS) hierarchy to obtain a nondecreasing sequence of lower bounds on the optimal value of problem (1) when the feasible set is compact. Each lower bound in the sequence is the optimal value of a semidefinite programming (SDP) problem. Moreover, the authors of Lasserre et al. (2015) showed that, under some assumptions, this sequence converges to the optimal value of problem (1). From their numerical experiments, they concluded that the BSOS hierarchy was efficient for quadratic problems.

In this paper, we analyze the BSOS hierarchy in more detail. We also study variants of the BSOS hierarchy where the number of variables is reduced.

The numerical results in this paper are on pooling problems, that belong to the class of problems with bilinear functions. The pooling problem is well-studied in the chemical process and petroleum industries. It has also been generalised for application to wastewater networks; see e.g. Karuppiah and Grossmann (2006). It is a generalization of a minimum cost network flow problem where products possess different specifications. There are many equivalent mathematical models for a pooling problem and all of them include bilinear functions in their constraints. Haverly (1978) described the so-called P-formulation, and afterwards many researchers used this model (e.g. Adhya et al. 1999; Ben-Tal et al. 1994; Foulds et al. 1992). Also, there are Q-, PQ-, and TP-formulations; in this paper, we use the P- and PQ-formulations and point the reader to the survey by Gupte et al. (2017) where all the formulations are described, as well as the PhD thesis by Alfaki (2012).

One way of getting a lower bound for a pooling problem is using convex relaxation, as done e.g. by Foulds et al. (1992). Similarly, Adhya et al. (1999) introduced a Lagrangian approach to get tighter lower bounds for pooling problems. Also, there are many other papers studying duality (Ben-Tal et al. 1994), piecewise linear approximation (Misener et al. 2011), heuristics for finding a good feasible solution (Alfaki and Haugland 2014), etc. A relatively recent survey on solution techniques is Misener and Floudas (2009).

In a seminal paper in 2000, Lasserre (2001) first introduced a hierarchy of lower bounds for polynomial optimization using SDP relaxations. Frimannslund et al. (2010) tried to solve pooling problems with the LMI relaxations obtained by this hierarchy. They found that, due to the growth of the SDP problem sizes in the hierarchy, this method is not effective for the pooling problems. In this paper, we therefore consider the BSOS hierarchy as an alternative, since it is not so computationally intensive.

The structure of this paper is as follows: We describe the BSOS hierarchy in Sect. 2. In Sect. 3 the pooling problem is defined, and we review three mathematical models for it, namely the P-, Q- and PQ-formulations. Also, we solve some pooling problems by the BSOS hierarchy in this section. Section 4 contains the numerical results after a reduction in the number of linear variables and constraints in each iteration of the BSOS hierarchy.

2 The bounded degree SOS (BSOS) hierarchy for polynomial optimization

In this section, we briefly review the background of the BSOS hierarchy from Lasserre et al. (2015). For easy reference, we will use the same notation as in Lasserre et al. (2015).

In what follows \({\mathbb {N}}^{k}\) will denote all k-tuples of nonnegative integers, and we define

The space of \(n\times n\) symmetric matrices will be denoted by \({\mathcal {S}}^n\), and its subset of positive semidefinite matrices by \({\mathcal {S}}_+^n\).

Consider the general nonlinear optimization problem (1). For fixed \(d\ge 1\), the following problem is clearly equivalent to (1):

The underlying idea of the BSOS hierarchy is to rewrite problem (1) as

The next step is to use the following positivstellensatz by Krivine (1964) to remove the quantifier ‘\(\forall x \in F\)’.

Theorem 1

([14], see also §3.6.4 in Laurent 2009 ) Assume that \(g_j(x)\le 1\) for all \(x\in F\) and \(j=1,\ldots ,m\), and \(\{1,g_1,\ldots ,g_m\}\) generates the ring of polynomials. If a polynomial g is positive on F then

for finitely many \(\lambda _{\alpha \beta } > 0\).

Defining

we arrive at the following sequence of lower bounds (indexed by d) for problem (1):

For a given integer \(d > 0\) the right-hand-side is a linear programming (LP) problem, and the lower bounds converge to \(f^*\) in the limit as \(d \rightarrow \infty \), by Krivine’s positivstellensatz. This hierarchy of LP bounds was introduced by Lasserre (2005).

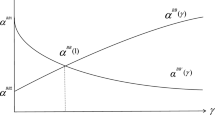

A subsequent idea, from Lasserre et al. (2015) was to strengthen the LP bounds by enlarging its feasible set as follow: If we fix \(\kappa \in {\mathbb {N}}\), and denote by \(\sum [x]_\kappa \) the space of sums of squares polynomials of degree at most \(2\kappa \), then we may define the bounds

The resulting problem is a semidefinite programming (SDP) problem, and the size of the positive semidefinite matrix variable is determined by the parameter \(\kappa \), hence the name bounded-degree sum-of-squares (BSOS) hierarchy. By fixing \(\kappa \) to a small value, the resulting SDP problem is not much harder to solve than the preceding LP problem, but potentially yields a better bound for given d.

For fixed \(\kappa \) and for each d, one has

where \(s(\kappa )={n+\kappa \atopwithdelims ()\kappa }\), and \(v_\kappa (x)\) is a vector with a basis for the n-variate polynomials up to degree \(\kappa \).

Letting \(\tau =\max \{deg(f),2\kappa ,d\max _j{deg(g_j)}\}\), we may eliminate the variables x in two ways to get an SDP problem:

-

Equate the coefficients of the polynomials on both sides of the equality in (4), i.e. use the fact that two polynomials are identical if they have the same coefficients in some basis.

-

Use the fact that two n-variate polynomials of degree \(\tau \) are identical if their function values coincide on a finite set of \(s(\tau ) = {n+\tau \atopwithdelims ()\tau }\) points in general position.

The second way of obtaining an SDP problem is called the ‘sampling formulation’, and was first studied in Lofberg and Parrilo (2004). It was also used for the numerical BSOS hierarchy calculations in Lasserre et al. (2015), with a set of \(s(\tau )\) randomly generated points in \({\mathbb {R}}^n\).

We will instead use the points

Thus we obtain the following SDP reformulation of (4):

The following theorem, proved in Lasserre et al. (2015), gives some information on feasibility and duality issues for the BSOS relaxation.

Theorem 2

(Lasserre et al. 2015) If problem (1) is Slater feasible, then so is the dual SDP problem of (5). Thus (by the conic duality theorem), if the SDP problem (5) has a feasible solution, it has an optimal solution as well.

Note that problem (5) may be infeasible for given d and \(\kappa \). One only knows that it will be feasible, and therefore \(q^\kappa _d\) will be defined, for sufficiently large d.

Remark 1

Assume that at the d-th level of the hierarchy we have \(q_d^\kappa =f^*\), i.e. finite convergence of the BSOS hierarchy, then

Let \(x^* \in F\) be an optimal solution (\(f(x^*) = f^*\)), then it is clear from (6) that

and due to the fact that Q is positive semidefinite, then

Hence, for an \((\alpha , \beta )\in {\mathbb {N}}^{2m} _d\), if \(h_{\alpha \beta }(x)\) is not binding at an optimal solution, then \(\lambda _{\alpha \beta }=0\). We will use this observation to reduce the number of variables later on. \(\square \)

3 The P-, Q- and PQ-formulations of the pooling problem

In this section, we describe the P-, Q- and PQ-formulations of the pooling problem. The notation we are using is the same as in Gupte et al. (2017). To define the pooling problem, consider an acyclic directed graph \(G=({\mathcal {N}},{\mathcal {A}})\) where \({\mathcal {N}}\) is the set of nodes and \({\mathcal {A}}\) is the set of arcs. This graph defines a pooling problem if:

-

(i)

the set \({\mathcal {N}}\) can be partitioned into three subsets \({\mathcal {I}},{\mathcal {L}}\) and \({\mathcal {J}}\), where \({\mathcal {I}}\) is the set of inputs with I members, \({\mathcal {L}}\) is the set of pools with L members and \({\mathcal {J}}\) is the set of outputs with J members.

-

(ii)

\({\mathcal {A}}\subseteq ({\mathcal {I}}\times {\mathcal {L}}) \cup ({\mathcal {I}}\times {\mathcal {J}}) \cup ({\mathcal {L}}\times {\mathcal {L}}) \cup ({\mathcal {L}} \times {\mathcal {J}}) \); see Fig. 1.

In this paper, we consider cases where \({\mathcal {A}}\cap {\mathcal {L}}\times {\mathcal {L}}=\emptyset \), which is called standard pooling problem because there is no arc between the pools.

For each arc \((i,j)\in {\mathcal {A}}\), let \(c_{ij}\) be the cost of sending a unit flow on this arc. For each node, there is possibly a capacity restriction, which is a limit for sum of the incoming (outgoing) flows to a node. The capacity restriction is denoted by \(C_i\) for each \(i\in N\). Also, there are some specifications for the inputs, e.g. the sulfur concentrations in them, which are indexed by k in a set of specifications \({\mathcal {K}}\) with K members. By letting \(y_{ij}\) be the flow from node i to node \(j, u_{ij} \) the restriction on \(y_{ij}\) that can be carried from i to j, and \(p_{lk}\) the concentration value of kth specification in the pool l, the pooling problem can be written as the following optimization model:

where \(\mu ^{max}_{jk}\) and \(\mu ^{min}_{jk}\) are the upper and lower bound of the kth specification in output \(j\in {\mathcal {J}}\), and \(\lambda _{ik}\) is the concentration of kth specification in the input i. Here is a short interpretation of the constraints:

-

(9): volume balance between the incoming and outgoing flows in each pool.

-

(10): capacity restriction for each input.

-

(11): capacity restriction for each pool.

-

(12): capacity restriction for each output.

-

(13): limitation on each flow.

-

(14): specification balance between the incoming and outgoing flows in each pool.

-

(15): upper bound of the output specification.

-

(16): lower bound of the output specification.

For a general pooling problem, the aforementioned model is called the P-formulation. Consider a pool \(l\in {\mathcal {L}}\) and the arc incident to it from input \(i\in {\mathcal {I}}\). Let us denote by \(q_{il}\) the ratio between the flow in this arc and the total incoming flow to this pool. So, \(y_{il}=q_{il}\sum _{j\in {\mathcal {J}}}y_{lj}\), and \(p_{lk}=\sum _{i\in {\mathcal {I}}}\lambda _{ik}q_{il}\) for any \(k\in {\mathcal {K}}\). Applying these to the P-formulation yields the following problem called the Q-formulation:

Adding two sets of redundant constraints

gives an equivalent problem, called the PQ-formulation. It is clear that all formulations are nonconvex quadratic optimization problems which are not easy to solve (Haugland 2016).

3.1 McCormick relaxation and the pooling problem

Assume that x and y are variables with given lower and upper bounds

Then, the following inequalities are implied when \(\chi =xy\):

It is known that the convex hull of

which is called the McCormick relaxation (Gupte et al. 2017), is exactly the set of \((x,y,\chi )\) that satisfies the inequalities (19).

In the pooling problem, the following lower and upper bounds on the variables are implied:

So, one can get a lower bound by using the McCormick relaxation of each bilinear term in the P- or PQ-formulations.

The redundant constraints (18) guarantee that the relaxation obtained by using the McCormick relaxation for the PQ-formulation is stronger than that for the P-formulation (Gupte et al. 2017).

In this paper, we are going to use the BSOS hierarchy to find a sequence of lower bounds that converges to the optimal value of the pooling problem. First we analyze the P-formulation and in Sect. 4.3 we compare the results by using the PQ-formulation.

3.2 Solving pooling problems with the BSOS hierarchy

The BSOS hierarchy is only defined for problems without equality constraints and the P-formulation has \((K+1)L\) equality constraints. The simplest way of dealing with equality constraints, is to replace each equality constraint by two inequalities; however, this process increases the number of constraints which is not favorable for the BSOS hierarchy. Another way of doing so is eliminating the equality constraints (9) and (14), if possible.

3.2.1 Eliminating equality constraints

Let \(l\in {\mathcal {L}}\). We assume without loss of generality that the first t inputs feed the pool l. Therefore, equality constraints (9) and (14) can be written as follows:

Let \(rank(A)=r\). Applying a singular value decomposition (see, e.g. Boyd and Vandenberghe 2004, Appendix A.5.4), there are matrices \(U=[U_1,U_2]\in {\mathbb {R}}^{(K+1)\times (K+1)},V=[V_1,V_2]\in {\mathbb {R}}^{t\times t},\varSigma \in {\mathbb {R}}^{r \times r}\) such that

where \(V_1\in {\mathbb {R}}^{t \times r}, V_2\in {\mathbb {R}}^{t\times (t-r)}, U_1\in {\mathbb {R}}^{(K+1) \times r}, U_2\in {\mathbb {R}}^{(K+1)\times (K+1-r)}, 0_1\in {\mathbb {R}}^{r \times (t-r)},\;0_2\in {\mathbb {R}}^{(K+1-r)\times r}, 0_3\in {\mathbb {R}}^{(K+1-r) \times (t-r)}\). Therefore, (20) can be written as

The fact that \(V^TV=I\), implies that all columns in V, and hence in \(V_1\) are linearly independent. Therefore, taking the QR decomposition of \(V_1^T\), i.e., \(V_1^T=Q[R_1,R_2]\), where \(R_1\in {\mathbb {R}}^{r \times r}\) is upper triangular and invertible, \(R_2\in {\mathbb {R}}^{r\times (t-r)}\), and \(Q\in {\mathbb {R}}^{r \times r}\) is orthonormal (\(Q^TQ=QQ^T=I\)), (21) is equivalent to

Note that one may use Eq. (23) to eliminate the variables \(y_{1l},y_{2l}, \ldots , y_{rl}\).

Concerning (22), if, for a feasible solution of (8)–(16), \(\sum _{j\in J}y_{lj}=0 \), then any other choice of the values \(p_{lk}, k=1,\ldots ,K,\) gives another feasible solution. So in this case one may choose values that satisfy

which is a system of K variables and \(K-r+1\) linearly independent equalities with \(r\ge 1\). Conversely, a feasible solution with the property \(\sum _{j\in J}y_{lj}\ne 0 \) definitely satisfies (24). So, instead of (22), we may solve (24), which may be done using the QR decomposition. Thus we may write \(p_{lr},\ldots , p_{lK}\) as a linear function of \(p_{l1},\ldots ,p_{l(r-1)}\), and subsequently eliminate \(p_{lr},\ldots , p_{lK}\) as well.

Remark 2

We emphasize that after these substitutions, the equivalent mathematical model to the pooling problem is still nonconvex quadratic optimization problem.

Remark 3

The interpretation of eliminating equality constraints is as follows when the matrix A is full rank (\(rank(A)=\min \{K+1,t\}\)): For pools with exactly \(K + 1\) entering arcs, the entering flow values are given by the total leaving flow and the concentrations in the pool. With more than \(K + 1\) arcs, say \(t, t - K - 1\) flow values can be chosen freely and the remaining \(K + 1\) determined by total leaving flow and concentrations. When \(t < K + 1\), a basis of t concentration values define the \(K + 1 - t\) remaining ones.

3.2.2 First numerical results

In this section, we study convergence of the BSOS hierarchy of lower bounds \(q^1_d\) (\(d=1,2,\ldots \)) for pooling problems (\(\kappa =1\)). First, it is worth pointing out the number of variables and constraints needed to compute \(q^1_d\). The number of constraints, as it is mentioned in the previous section, is \({n+2d \atopwithdelims ()2d}\). Also, the number of linear variables is one more than the size of \({\mathbb {N}}^{2m}_d\), namely \({2m+d\atopwithdelims ()d}+1\).

Table 1 gives some information of the standard pooling problem instances we use in this paper. The GAMS files of the pooling problem instances that we use in this paper, except DeyGupte4, can be found on the website http://www.ii.uib.no/~mohammeda/spooling/. DeyGupte4 is constructed in this paper (Appendix) by using the results of Dey and Gupte (2015). In Table 1, we recall in column “PQ-linear relaxation value” the lower bound proposed in Alfaki and Haugland (2013) of each instance. This lower bound is the optimal value of the PQ-formulation after applying McCormick relaxation for each bilinear term. It is proved in Dey and Gupte (2015) that any optimal value of a piecewise linear approximation of the PQ-formulation (for a precise definition see Appendix) for DeyGupte4, has optimal value in \([-4,-3]\). Also, columns “# var.” and “# const.” contain the number of variables and constraints in the P-formulation after eliminating equality constraints, respectively.

Example 1

By way of example, we give the details for the first instance in Table 1, called Haverly1. Its optimal solution is shown in Fig. 2, and the optimal value is \(-400\) (Adhya et al. 1999). The optimal flow from node i to node j is denoted by \(y^*_{ij}\) in Fig. 2.

This instance has three inputs (denoted by \(1,\ 2,\ 3\)), one pool (denoted by 4), two outputs (denoted by \(5,\ 6\)), and one specification. The mathematical model for this instance is as follows:

So, we can use the elimination method described in the previous section, which implies that \(y_{14}=\frac{1}{2}(y_{45}+y_{46})(p_1-1), y_{24}=\frac{1}{2}(y_{45}+y_{46})(3-p_1)\). Therefore, the reformulated model of this instance using scaling \(x_1:=\frac{p_1}{3}, \ x_2:=\frac{y_{45}}{200}, \ x_3:=\frac{y_{46}}{200}, \ x_4:=\frac{y_{35}}{200}, \ x_5:=\frac{y_{36}}{200}\), is

where the leftmost inequalities are redundant, (26a) and (26b) are from the sign constraints after the elimination, (26c), (26d), (26e), and (26f) are from (25a), (25b), (25c) and (25d), respectively.

The last step is to multiply the constraint functions by a factor 0.9 (any value in (0, 1) will do, but we used 0.9 for our computations), to ensure that the ‘\(\le 1\)’ conditions hold with strict inequality on the feasible set. Thus, we define \(g_1(x) = -0.9\cdot \frac{3}{4}(x_1-1)(x_2+x_3)\), etc.

We will use the BSOS hierarchy to find the optimal value of this example (Table 2). \(\square \)

The results for Haverly1 and the other pooling problem instances are listed in Table 2. All computations in this paper were carried out with MOSEK 8 on an Intel i7-4790 3.60 GHz Windows computer with 16 GB of RAM. “Numerical Prob.” and “\(\approx \)” in the tables mean the solver reported a numerical problem, and only obtained an approximate optimal value, respectively. In all the tables from now on, columns “# lin. var.”, “size of SDP var.” and “# const.” present the number of linear variables, the size of the semidefinite matrix variable and the number of constraints in the hierarchy.

As it is clear, in order to compute \(q^\kappa _d\) we can have a large number of linear variables and constraints (depending of d), which affects the speed and the time we need to solve (5). In the coming section, we describe how one can reduce the number of linear variables and constraints at each level of the BSOS hierarchy significantly.

4 Reduction in the number of linear variables and constraints

In this section, we propose a method to reduce the number of linear variables and an upper bound for the number of linearly independent constraints in each iteration.

4.1 Reduction in the number of variables

As it is mentioned in Remark 1, if we can identify constraints that are not binding at optimality, then we can reduce the number of variables.

In particular, by construction the constraints \(g_j(x) \le 1\) will never be binding at optimality. Recalling that the variable \(\lambda _{\alpha \beta }\) corresponds to

we know from Remark 1 that, in case of finite convergence, we will have \(\lambda _{\alpha \beta } = 0\) whenever \(\alpha = 0\).

Hence, instead of solving (5) to compute \(q_d^\kappa \), we may compute the following (weaker) bound more efficiently:

The advantage of (27) is that it has \({ m+d \atopwithdelims ()d }\) fewer nonnegative variables than (5). We emphasize that problem (27) is not equivalent to (5), i.e. the lower bounds \(q_d^\kappa \) and \({{\hat{q}}}_d^\kappa \) are not equal in general — the bound \({{\hat{q}}}_d^\kappa \) is weaker, and may be strictly weaker.

The precise relation of the bounds \(q_d^\kappa \) and \({{\hat{q}}}_d^\kappa \) is spelled out in the next theorem, which follows from the argument in Remark 1.

Theorem 3

If, for given d and \(\kappa , q_d^\kappa \) and \({{\hat{q}}}_d^\kappa \) are both finite, then \({{\hat{q}}}_d^\kappa \le q_d^\kappa \). Moreover, if the sequence \(q_d^\kappa \) (\(d= 1,2,\ldots \)) from (5) converges finitely to \(f^*\), then so does \({{\hat{q}}}_d^\kappa \) (\(d= 1,2,\ldots \)) from (27). More precisely, if \(q_{d^*}^\kappa = f^*\) for some \(d^* \in {\mathbb {N}}\), then \({{\hat{q}}}_{d^*}^\kappa = f^*\).

It is important to note that finite convergence of the sequence \(q_d^\kappa \) (\(d= 1,2,\ldots \)) is not guaranteed in general. Sufficient conditions for finite convergence are described in Lasserre et al. (2015).

The numerical results for using (27) for the pooling problem instances is demonstrated in Table 3. The “rel. time” column from this table onward gives the solution time for each level of the hierarchy as a ratio of that in Table 2, which shows that there is a significant reduction in computational times when compared to Table 2.

4.2 Reduction in the number of constraints

From now on we fix \(\kappa =1\) and \(v_1(x)=\left( 1,x_1,\ldots ,x_n\right) \). As it was mentioned, the number of constraints in each level of the BSOS hierarchy is \({n+2d \atopwithdelims ()2d}\), where n is the number of variables in the original problem (1) and d is the level of the BSOS hierarchy. So, the number of constraints increases quickly with d. In this subsection, we discuss the redundancy of constraints and how we can eliminate linearly dependent constraints.

Let \(\text{ svec }\) denote the map from the \((n+1)\times (n+1)\) symmetric matrix space \(S^{n+1}\) to \({\mathbb {R}}^{1 \times {n+2 \atopwithdelims ()2}}\) given by

It will also be convenient to number the elements of \(\varDelta (n,\tau )\) as \(x^1,\ldots ,x^L\) where \(L = s(\tau )\). Finally, we will use the notation \(|\beta | = \sum _i \beta _i\).

So, for \(d\ge 1\) and \(\kappa =1\) we may write the linear equality constraints in (5) as \(H_dy_d=b_d\), where

and \(L={n+2d \atopwithdelims ()2d}\). It is clear that \(H_d \in {\mathbb {R}}^{L \times \left[ {2m+d \atopwithdelims ()d}+L+1\right] }\).

In the following theorem we prove that all the constraints are linearly independent when \(d=1\).

Theorem 4

For the general problem (1) with quadratic functions f(x) and \(g_j(x), j=1,\ldots ,m\), all of the constraints in the first iteration of the BSOS hierarchy are linearly independent, i.e. if \(d=1\), all of the constraints of (5) are linearly independent.

Proof

Fix \(d=1\), which implies \(\tau =2\) and \(L={n+2 \atopwithdelims ()2}\) in (5). Then,

and,

for \( x^1,\ldots ,x^L \in \varDelta (n,2)\), defined in (5). To show that all of the rows in \(H_1\) are linearly independent, we prove that the submatrix

is a full rank matrix by induction over n, the dimension of x. Assume that \(n=1\). Because \(\varDelta (1,2)= \{0,\frac{1}{2},1\}\), it is clear that the rank of the matrix \(V^1_1=\left[ \begin{matrix} 1 &{}0 &{}0\\ 1&{} \frac{\sqrt{2}}{2}&{}\frac{1}{4}\\ 1&{} \sqrt{2}&{} 1 \end{matrix}\right] ,\) is 3, which means that \(V^1_1\) is a full rank matrix.

Now, suppose that \(V^1_n\) is a full rank matrix, and let us show it is full rank for \(n+1\). When \(x\in {\mathbb {R}}^{n+1}\), we can partition the points in \(\varDelta (n+1,2)\) into three cases:

-

(I)

points with \(x_{n+1}=0\). These points can be generated by considering all of the points in \(\varDelta (n,2)\), and adding a 0 as their last component.

-

(II)

points with \(x_{n+1}=\frac{1}{2}\). The points in this class can be sub-partitioned into two groups:

-

(i)

points with one nonzero component.

-

(ii)

points with two nonzero components.

-

(i)

-

(III)

points with \(x_{n+1}=1\). Clearly, there is only one point in this class.

According to the definition of \(\text{ svec }\left( v_1(x)v_1(x)^T\right) \), each of \(V^1_{n+1}\)’s column is related to \(x^\gamma \), where \(\gamma \in {\mathbb {N}}_2^{n+1}\). Let us order the columns of \(V^1_{n+1}\) as follows: first we put all of the columns related to \(x^{\alpha }\), where \((\alpha ,0)\in {\mathbb {N}}^{n+1}_2\), after that the columns related to \(x_{n+1}, x_{n+1}^2, x_{n+1}x_i, i=1,\ldots ,n\). So, because each row of \(V^1_{n+1}\) is related to a point in \(\varDelta (n+1,2)\), after ordering its rows, the matrix looks like this:

for some \(a_1\in {\mathbb {R}}^{n\times L}, \text{ and } a_2, a_3 \in {\mathbb {R}}^{1 \times L}\). Due to the induction assumption, \(V^1_n\) is a full rank matrix, which implies that \(V^1_{n+1}\) is a full rank matrix. Therefore, the constraints in the first iteration of the BSOS hierarchy are linearly independent.\(\square \)

In Theorem 4, we prove that if \(d=1\), then all of the constraints in (5) are linearly independent. In the next theorem, we prove that for \(d\ge 2\), if we rewrite \(H_d\) as \([{\bar{H}}_d,V_n^d]\), where

then \(Rank(H_d)=Rank({\bar{H}}_d)\).

Theorem 5

Suppose that f is quadratic, \(d\ge 2\), and \(\varTheta \subseteq \varDelta (n,2d)\). The equality constraints in (5) corresponding to the points in \(\varTheta \) applied to the general problem (1) with sign constraints over all of the variables, are linearly independent if and only if rows in \({\bar{H}}_d\) corresponding to the points in \(\varTheta \) are linearly independent.

Proof

The ‘if’ part is trivial.

To prove the ‘only if’ part, without loss of generality we assume that \(x^p, p=1,\ldots ,t\) generate linearly independent constraints, which means that the first t rows of \(H_d\) are linearly independent. Since the objective function f is quadratic, \(b_d\) is a linear combination of the columns of \(V^d_n\). Because of the sign constraints for all of the variables, each column of \(V_n^d\) is also a column in \({\bar{H}}_d\), for \(d\ge 2\). This means that \(V_n^d\) is a submatrix of \({\bar{H}}_d\), which implies that the first t rows in \({\bar{H}}_d\) are linearly independent. \(\square \)

After elimination of the equality constraints in pooling problem (8), we rewrite the model with sign constraints over all of the remaining variables. So, when using Theorem 5 to find the linearly independent constraints, we only need to check \({\bar{H}}_d\).

Theorem 6

Fix \(d\ge 2\). Consider \({\hat{H}}_d\), which is a matrix with all of the columns of \({\bar{H}}_d\) related to \(( \alpha , \beta )\) with \(\beta = 0\). Then \(\text{ Range }({\bar{H}}_d)=\text{ Range }({\hat{H}}_d)\).

Proof

Since we consider \(\beta =0\), we can write \({\hat{H}}_d\) as follows:

where \(L={n+2d \atopwithdelims ()2d}, g(x)=\left( g_1(x),\ldots ,g_m(x)\right) \), for each \(\alpha \in {\mathbb {N}}^m_d, g(x^p)^{\alpha }=\prod _{j=1}^{m}g_j(x)^{\alpha _j}\), and \( \left( g(x^p)^{\alpha }\right) _{\alpha \in {\mathbb {N}}^m_d} \in {\mathbb {R}}^{1 \times {m+d \atopwithdelims ()d}}, p=1,\ldots ,L\).

Because the columns of \({\hat{H}}_d\) are a subset of the columns of \({\bar{H}}_d\), so \(\text{ Range }({\hat{H}}_d)\subseteq \text{ Range }({\bar{H}}_d)\). To prove the other containment, we show that all columns of \({\bar{H}}_d\) are linear combinations of \({\hat{H}}_d\)’s columns. Each column of \({\bar{H}}_d\) is related to a function \(h_{\alpha \beta }(x)\) for some \((\alpha ,\beta )\in {\mathbb {N}}_d^{2m}\). If \(\beta =0\) for a column of \({\bar{H}}_d\), then it is a column of \({\hat{H}}_d\). Now consider a column with \(\beta \ne 0\). Therefore, \(h_{\alpha \beta }(x)\) related to this column is equal to

for some \(\gamma _i \in {\mathbb {N}}^m_{|\beta |}, a_i\in {\mathbb {R}}, i=1,\ldots ,w\), and \(w\ge 0\). Hence, \( h_{\alpha \beta }(x)=\sum _{i=1}^{w}a_ig(x)^{\gamma _i+\alpha } \). Because \(\gamma _i+\alpha \in {\mathbb {N}}^m_{d}, g(x)^{\gamma _i+\alpha }\) is related to a column of \({\hat{H}}_d\), for each \(i=1,\ldots ,w\). This means that any column of \({\bar{H}}_d\) is a linear combination of the columns in \({\hat{H}}_d\). \(\square \)

By Theorem 6, to find the number of linearly independent constraints in (5), we only need to check the columns related to \(h_{\alpha \beta }(x)\) with \(\beta =0\).

It is clear that the results in this paper, except Theorem 4, can be modified for the LP bounds (3). In fact, Theorems 5 and 6 are true in each level, even \(d=1\). In Table 4, the results of solving the pooling problem instances in Table 1 are shown after eliminating the linearly dependent constraints using (3) and \({{\hat{q}}}_d^1\) in (27). Note that the computational times at the \(d=2\) and \(d=3\) levels are greatly reduced when compared to the times in Table 3. For some instances because of the large number of constraints in the last level of the hierarchy, we could not find the number of linearly independent constraints and we put “-” as in Table 4. Also in this table we show how much stronger the BSOS hierarchy is compared to the LP bounds (3) after reducing the number of variables and deleting the linear dependent constraints. As one can see, the main difference between the BSOS hierarchy and (3) is in the first level, in which the number of independent constraints in (3) is much smaller than the BSOS hierarchy. If there is a difference between two hierarchies, it is presented in Table 4 with “()”, in which the value corresponds to the LP bounds (3). It can be seen that there is a pay-off between using (3) and the BSOS hierarchy. By using the LP bounds you may solve each level faster (4 cases) but the lower bound can be strictly weaker than the one from the BSOS hierarchy (2 cases).

4.3 Lower bounds using PQ-formulation

Up to now, we evaluated the BSOS hierarchy on the P-formulation. Since the McCormick relaxation (Sect. 3.1) of the PQ-formulation is stronger than that of the P-formulation Gupte et al. (2017), it is worthwhile to evaluate the BSOS hierarchy using the PQ-formulation. In Table 5 we present these results for the PQ-formulation. To eliminate the equality constraints, we replace them by two inequalities.

4.4 Upper bound for the number of linearly independent constraints

According to Theorem 6, to find the number of linearly independent columns of \(H_d\), for \(d\ge 2\) we only need to find the rank of the linear space, say \(N_d\), spanned by \(\{g(x)^{\alpha }\}_{\alpha \in {\mathbb {N}}^m_{d}}\). Hence, the dimension of \(N_d\) is an upper bound on the number of linearly independent constraints. In this part we give an upper bound on the dimension of \(N_d\), which is an upper bound on the number of linearly independent constraints in (5).

It is clear that \(N_d\) is a subspace of the linear space \(M_d\) spanned by \(\left\{ w(x)^{\alpha }\right\} _{\alpha \in {\mathbb {N}}^{\omega }_{d}}\), where w(x) is a vector containing all of the monomial existing in (1), and \(\omega \) in the size of w(x). Therefore, \(rank(M_d)\) is an upper bound on \(rank(N_d)\), and hence an upper bound of the number of linearly independent constraints in each iteration of the BSOS hierarchy.

In the rest of this part, we try to find \(rank(M_d)\) for the pooling problems, and assume that the number of outgoing flows from each pool is equal to J. After elimination of equality constraints in the pooling problem (8), the functions defining the inequality constraints can be partitioned into three classes:

-

(I)

bilinear functions,

-

(II)

\(x_i, i=1,\ldots ,n\),

-

(III)

some other affine functions.

The bilinear functions are those related to constraints (15) and (16), or those related to the constraints (13) after elimination of equality constraints. Hence, the only bilinear terms in the reformulated problem are \(p_{lk}y_{lj}\), for each pool l and specification k, where there is an outgoing flow from pool l to output j. Therefore,

where \(\bar{{\mathcal {I}}}, \bar{{\mathcal {L}}}\) and \(\bar{{\mathcal {J}}}\) are respectively including (i, l), (l, k) and (l, k, j) that \(y_{il}, p_{lk}\) and \(p_{lk}y_{lj}\) appear in (8) after elimination of the equality constraints, and

Clearly the number of variables in the pooling problem (8) after elimination of equality constraints is \(I\times L+L\times J+|\bar{{\mathcal {I}}}|+|\bar{{\mathcal {L}}}|\). For \(d=1\), we prove in Theorem 4 that all of the constraints in (5) are linearly independent, with the number of \({n+2 \atopwithdelims ()2}\). For \(d\ge 2\), we are seeking for the monomials up to degree 2d that appear in \(M_d\). If \(d=2\), the number of monomials with degree at most 2 is \({n+2 \atopwithdelims ()2}\). The number of monomials with degree 3 that appear in \(M_d\) is at most

because for each \(k \in {\mathcal {K}}\) and \(l\in {\mathcal {L}}\), in this case the only way of having a monomial with degree 3 is by multiplying a monomial of degree 2 with a variable, which makes \({n+1 \atopwithdelims ()2}-{n-J+1 \atopwithdelims ()2}\) monomials of degree 3. And finally, the number of monomials of degree 4 that appear in \(M_d\) is \(\left[ {K\times L\times J \atopwithdelims ()2}+K\times L\times J\right] \), because the only ways to make such monomials are by taking the square of a monomial with degree 2, or multiplying two degree 2 monomials. Therefore, the number of linearly independent constraints for \(d=2\) is at most

With the same line of reasoning as above, the number of monomials with degree at most 6 for \(d=3\) is less than or equal to

Example 2

Consider the example (1). The only bilinear terms in (1) are \(y_1y_2\) and \(y_1y_3\). So,

Therefore, the number of linearly independent constraints is at most

if \(d=2\), and

if \(d=3\). \(\square \)

4.5 Improving lower bounds by adding valid inequalities

Adding redundant constraints to the original problem (1) increases the number of linear variables in (4); this introduces some flexibility in each level of the hierarchy because of the new linear variables and may provide a stronger lower bound. As it was mentioned in Sect. 3.1, for each bilinear term in the P- or PQ-formulations there are four valid inequalities given by (19). So, in Table 6 we present the result of adding these valid inequalities to the P-formulation and using \({{\hat{q}}}_d^1\) in (27) to solve the problem. In each level of the hierarchy in this table, we use the upper bounds for the number of constraints proposed in Sect. 4.4. As Table 6 shows, this improvement helps to obtain the optimal values of Haverly1, Harverly2, Ben-Tal4, and DeyGupte4, and to get a good approximation of the optimal value of Foulds2 in the second level of the hierarchy. Also, for Adhya1,2,4 we obtained better lower bounds than the PQ-linear relaxation values in Table 1.

5 Conclusion

In this paper we analysed and evaluated the bounded degree sum-of-squares (BSOS) hierarchy of Lasserre et al. (2015) for a class of bilinear optimization problems, namely pooling problems. We showed that this approach is successful in obtaining the global optimal values for smaller instances, but scalability remains a problem for larger instances. In particular, the number of nonnegative variables and linear constraints grows quickly with the level of the hierarchy. We have showed that it is possible to eliminate some variables and redundant linear constraints in the hierarchy in a systematic way, and this goes some way in improving scalability. More ideas are needed, though, if this approach is to become competitive for medium to larger scale pooling problems.

References

Adhya, N., Tawarmalani, M., & Sahinidis, N. V. (1999). A Lagrangian approach to the pooling problem. Industrial and Engineering Chemistry Research, 38(5), 1956–1972.

Alfaki, M. (2012). Models and solution methods for the pooling problem. Ph.D. thesis, University of Bergen

Alfaki, M., & Haugland, D. (2013). Strong formulations for the pooling problem. Journal of Global Optimization, 56(3), 897–916.

Alfaki, M., & Haugland, D. (2014). A cost minimization heuristic for the pooling problem. Annals of Operations Research, 222(1), 73–87.

Ben-Tal, A., Eiger, G., & Gershovitz, V. (1994). Global minimization by reducing the duality gap. Mathematical Programming, 63(1–3), 193–212.

Boyd, S., & Vandenberghe, L. (2004). Convex optimization. Cambridge: Cambridge University Press.

Dey, S. S., & Gupte, A. (2015). Analysis of MILP techniques for the pooling problem. Operations Research, 63(2), 412–427.

Foulds, L. R., Haugland, D., & Jörnsten, K. (1992). A bilinear approach to the pooling problem. Optimization, 24(1–2), 165–180.

Frimannslund, L., El Ghami, M., Alfaki, M., & Haugland, D. (2010). Solving the pooling problem with LMI relaxations. In: S. Cafieri, B.G.-Tóth, E. Hendrix, L. Liberti & F. Messine (Eds.), Proceedings of the Toulous Global Optimization Workshop (TOGO), pp. 51–54.

Gupte, A., Ahmed, S., Dey, S. S., & Cheon, M. S. (2017). Relaxations and discretizations for the pooling problem. Journal of Global Optimization, 67(3), 631–669. doi:10.1007/s10898-016-0434-4.

Haugland, D. (2016). The computational complexity of the pooling problem. Journal of Global Optimization, 64(2), 199–215.

Haverly, C. A. (1978). Studies of the behavior of recursion for the pooling problem. ACM SIGMAP Bulletin, 25, 19–28.

Karuppiah, R., & Grossmann, I. E. (2006). Global optimization for the synthesis of integrated water systems in chemical processes. Computers and Chemical Engineering, 30(4), 650–673.

Krivine, J. L. (1964). Anneaux préordonnés. Journal d’Analyse Mathématique, 12(1), 307–326.

Lasserre, J. B. (2001). Global optimization with polynomials and the problem of moments. SIAM Journal on Optimization, 11(3), 796–817.

Lasserre, J. B. (2005). Polynomial programming: LP-relaxations also converge. SIAM Journal on Optimization, 15(2), 383–393.

Lasserre, J. B., Toh, K., & Yang, S. (2015). A bounded degree SOS hierarchy for polynomial optimization. EURO Journal on Computational Optimization, 1–31. doi:10.1007/s13675-015-0050-y.

Laurent, M. (2009). Sums of squares, moment matrices and optimization over polynomials. In Emerging applications of algebraic geometry (pp. 157–270). Berlin: Springer.

Lofberg, J., & Parrilo, P. A. (2004). From coefficients to samples: A new approach to SOS optimization. In 2004. CDC. 43rd IEEE Conference on Decision and Control, vol. 3, pp 3154–3159. IEEE.

Misener, R., & Floudas, Ch. A. (2009). Advances for the pooling problem: Modeling, global optimization, and computational studies. Applied Computational Mathematics, 8(1), 3–22.

Misener, R., Thompson, J. P., & Floudas, Ch. A. (2011). APOGEE: Global optimization of standard, generalized, and extended pooling problems via linear and logarithmic partitioning schemes. Computers and Chemical Engineering, 35(5), 876–892.

Acknowledgements

The authors would like to thank Claudia D’Ambrosio and Ruth Misener for useful discussions and providing us some references. Also, we are grateful to the two anonymous reviewers for their helpful and constructive comments, in particular Remark 3 and Sect. 3.2.1, that greatly contributed to improving the paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Ahmadreza Marandi: The research of this author was supported by the EU Marie Curie Initial Training Network number 316647 (“Mixed Integer Nonlinear Optimization (MINO)”).

Appendix: A new pooling problem instance

Appendix: A new pooling problem instance

In this section we use a result in Dey and Gupte (2015) to construct a pooling problem instance (DeyGupte4) for which piecewise linear approximations of the PQ-formulation fail.

Consider a standard pooling problem with \(I=2\) inputs, \(L=2\) pools and \(J=4\) outputs. Assume that both inputs are connected to the pools and both pools are connected to the outputs (Fig. 3). Let \(K=2\) and the concentration of specifications be (1, 0) and (0, 1) for the first and second input, respectively. We number the inputs by 1, 2, pools by 3, 4, and outputs by 5, 6, 7, 8. Let \(\mu ^{max}_{jk}=\mu ^{min}_{jk}\) (given in Fig. 3), \(u_{il}=4\) and \(u_{lj}=1\), for \(i=1,2, l=3,4, j=5,6,7,8\), and \(k=1,2\). Set the capacity of inputs, pools and outputs \(C_1=C_2=8, C_3=C_4=4\), and \(C_5=C_6=C_7=C_8=1\). Let

\(c_{il}=0\), for \(i=1,2\) and \(l=3,4\). Set \(c_{3j}=-1, c_{4j}=\frac{2}{\delta }\), for all \(j=5,6,7,8\), and the rest of the costs as 0.

The optimal value of this problem is \(-1\) with the optimal solution constructed by sending flows from inputs to the first pool, and from it to one of the outputs such that the restriction in the specification in it is satisfied (Dey and Gupte 2015).

Let \(g,h:[0,1]\times [0,1]\rightarrow {\mathbb {R}}\) be piecewise linear functions such that

Assume that

is the piecewise linear approximation of

such that for all \(\alpha , \beta \in [0,1]\) and \(\vert e\vert \le 0.05, (\alpha , \beta , \alpha \beta +e)\in {\mathcal {S}}\). As it was mentioned in Sect. 3.1, in the pooling problem:

So, for any bilinear term \(v_{ljk}=\frac{p_{lk}-m_\lambda }{M_\lambda -m_\lambda } \frac{y_{lj}}{{\min \{C_j,u_{lj}\}}}, l\in {\mathcal {L}},\ j\in {\mathcal {J}},\ k\in {\mathcal {K}},\) one can use \({\mathcal {S}}\) to find a lower bound for the optimal value of the pooling problem. It is proved in Dey and Gupte (2015) that applying this approximation to the PQ-formulation gives an objective value in \([-4,-3]\).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Marandi, A., Dahl, J. & de Klerk, E. A numerical evaluation of the bounded degree sum-of-squares hierarchy of Lasserre, Toh, and Yang on the pooling problem. Ann Oper Res 265, 67–92 (2018). https://doi.org/10.1007/s10479-017-2407-5

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10479-017-2407-5

is compact;

is compact; .

.