Abstract

The max-k-cut problem is to partition the vertices of an edge-weighted graph \(G = (V,E)\) into \(k\ge 2\) disjoint subsets such that the weight sum of the edges crossing the different subsets is maximized. The problem is referred as the max-cut problem when \(k=2\). In this work, we present a multiple operator heuristic (MOH) for the general max-k-cut problem. MOH employs five distinct search operators organized into three search phases to effectively explore the search space. Experiments on two sets of 91 well-known benchmark instances show that the proposed algorithm is highly effective on the max-k-cut problem and improves the current best known results (lower bounds) of most of the tested instances for \(k\in [3,5]\). For the popular special case \(k=2\) (i.e., the max-cut problem), MOH also performs remarkably well by discovering 4 improved best known results. We provide additional studies to shed light on the key ingredients of the algorithm.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let \(G=(V,E)\) be an undirected graph with vertex set \(V = \{1,\ldots ,n\}\) and edge set \(E \subset V \times V\), each edge \((i,j)\in E\) being associated a weight \(w_{ij}\in Z\). Given \(k\in [2,n]\), the max-k-cut problem is to partition the vertex set V into k (k is given) disjoint subsets \(\{S_{1},S_{2},\ldots ,S_{k}\}\), (i.e., \(\displaystyle \mathop {\cup }_{i=1}^k S_i = V, S_{i} \ne \emptyset , S_{i} \cap S_{j} = \emptyset , \forall i \ne j\)), such that the sum of weights of the edges from E whose endpoints belong to different subsets is maximized, i.e.,

Particularly, when the number of partitions equals 2 (i.e., \(k=2\)), the problem is referred as the max-cut problem. Max-k-cut is equivalent to the minimum k-partition (MkP) problem which aims to partition the vertex set of a graph into k disjoint subsets so as to minimize the total weight of the edges joining vertices in the same partition (Ghaddar et al. 2011).

The max-k-cut problem is a classical NP-hard problem in combinatorial optimization and can not be solved exactly in polynomial time (Boros and Hammer 1991; Kann et al. 1997). Moreover, when \(k=2\), the max-cut problem is one of the Karp’s 21 NP-complete problems (Karp 1972) which has been subject of many studies in the literature.

In recent decades, the max-k-cut problem has attracted increasing attention for its applicability to numerous important applications in the area of data mining (Ding et al. 2001), VLSI layout design (Barahona et al. 1988; Chang and Du 1987; Chen et al. 1983; Pinter 1984; Cho et al. 1998), frequency planning (Eisenblätter 2002), sports team scheduling (Mitchell 2003), and statistical physics (Liers et al. 2004) among others.

Given its theoretical significance and large application potential, a number of solution procedures for solving the max-k-cut problem (or its equivalent MkP) have been reported in the literature. In Ghaddar et al. (2011), the authors provide a review of several exact algorithms which are based on branch-and-cut and semidefinite programming approaches. But due to the high computational complexity of the problem, only instances of reduced size (i.e., \(|V| < 100\)) can be solved by these exact methods in a reasonable computing time.

For large instances, heuristic and metaheuristic methods are commonly used to find “good-enough” sub-optimal solutions. In particular, for the very popular max-cut problem, many heuristic algorithms have been proposed, including simulated annealing and tabu search (Arráiz and Olivo 2009), breakout local search (Benlic and Hao 2013), projected gradient approach (Burer and Monteiro 2001), discrete dynamic convexized method (Lin and Zhu 2012), rank-2 relaxation heuristic (Burer et al. 2002), variable neighborhood search (Festa et al. 2002), greedy heuristics (Kahruman et al. 2007), scatter search (Martí et al. 2009), global equilibrium search (Shylo et al. 2012) and its parallel version (Shylo et al. 2015), memetic search (Lin and Zhu 2014; Wu and Hao 2012; Wu et al. 2015), and unconstrained binary quadratic optimization (Wang et al. 2013). Compared with max-cut, there are much fewer heuristics for the general max-k-cut problem or its equivalent MkP. Among the rare existing studies, we mention the very recent discrete dynamic convexized (DC) method of Zhu et al. (2013), which formulates the max-k-cut problem as an explicit mathematical model and uses an auxiliary function based local search to find satisfactory results.

In this paper, we partially fill the gap by presenting a new and effective heuristic algorithm for the general max-k-cut problem. We identify the contributions of the work as follows.

-

In terms of algorithmic design, the main originality of the proposed algorithm is its multi-phased multi-strategy approach which relies on five distinct local search operators for solution transformations. The five employed search operators (\(O_1{-}O_5\)) are organized into three different search phases to ensure an effective examination of the search space. The descent-based improvement phase uses the intensification operators \(O_1\)–\(O_2\) to find a (good) local optimum from a starting solution. Then by applying two additional operators (\(O_3\)–\(O_4\)), the diversified improvement phase aims to discover promising areas around the obtained local optimum which are then further explored by the descent-based improvement phase. Finally, since the search can get trapped in local optima, the perturbation phase applies a random search operator (\(O_5\)) to definitively lead the search to a distant region from which a new round of the search procedure starts. This process is repeated until a stopping condition is met. To ensure a high computational efficiency of the algorithm, we employ bucket-sorting based techniques to streamline the calculations of the different search operators.

-

In terms of computational results, we assess the performance of the proposed algorithm on two sets of well-known benchmarks with a total of 91 instances which are commonly used to test max-k-cut and max-cut algorithms in the literature. Computational results show that the proposed algorithm competes very favorably with respect to the existing max-k-cut heuristics, by improving the current best known results on most instances for \(k\in [3,5]\). Moreover, for the very popular max-cut problem (\(k=2\)), the results yielded by our algorithm remain highly competitive compared with the most effective and dedicated max-cut algorithms. In particular, our algorithm manages to improve the current best known solutions for 4 (large) instances, which were previously reported by specific max-cut algorithms of the literature.

The rest of the paper is organized as follows. In Sect. 2, the proposed algorithm is presented. Section 3 provides computational results and comparisons with state-of-the-art algorithms in the literature. Section 4 is dedicated to an analysis of several essential parts of the proposed algorithm. Concluding remarks are given in Sect. 5.

2 Multiple search operator heuristic for max-k-cut

2.1 General working scheme

The proposed multiple operator heuristic algorithm (MOH) for the general max-k-cut problem is described in Algorithm 1 whose components are explained in the following subsections. The algorithm explores the search space (Sect. 2.2) by alternately applying five distinct search operators (\(O_1\) to \(O_5\)) to make transitions from the current solution to a neighbor solution (Sect. 2.4). Basically, from an initial solution, the descent-based improvement phase aims, with two operators (\(O_1\) and \(O_2\)), to reach a local optimum I (Algorithm 1, lines 10–19, descent-based improvement phase, Sect. 2.6). Then the algorithm continues to the diversified improvement phase (Algorithm 1, lines 28–38, Sect. 2.7) which applies two other operators (\(O_3\) and \(O_4\)) to locate new promising regions around the local optimum I. This second phase ends once a better solution than the current local optimum I is discovered or when a maximum number of diversified moves \(\omega \) is reached. In both cases, the search returns to the descent-based improvement phase with the best solution found as its new starting point. If no improvement can be obtained after \(\xi \) descent-based improvement and diversified improvement phases, the search is judged to be trapped in a deep local optimum. To escape the trap and jump to an unexplored region, the search turns into a perturbation-based diversification phase (Algorithm 1, lines 40–43), which uses a random operator (\(O_5\)) to strongly transform the current solution (Sect. 2.8). The perturbed solution serves then as the new starting solution of the next round of the descent-based improvement phase. This process is iterated until the stopping criterion (typically a cutoff time limit) is met.

2.2 Search space and evaluation solution

Recall that the goal of max-k-cut is to partition the vertex set V into k subsets such that the sum of weights of the edges between the different subsets is maximized. As such, we define the search space \(\Omega \) explored by our algorithm as the set of all possible partitions of V into k disjoint subsets, \(\Omega = \{\{S_{1},S_{2},\ldots ,S_{k}\}: \displaystyle \mathop {\cup }_{i=1}^k S_i = V, S_{i} \cap S_{j} = \emptyset , S_{i}\subset V, \forall i \ne j\)}, where each candidate solution is called a k-cut.

For a given partition or k-cut \(I=\{S_{1},S_{2},\ldots ,S_{k}\} \in \Omega \), its objective value f(I) is the sum of weights of the edges connecting two different subsets:

Then, for two candidate solutions \(I' \in \Omega \) and \(I'' \in \Omega \), \(I'\) is better than \(I''\) if and only if \(f(I')>f(I'')\). The goal of our algorithm is to find a solution \(I_{best} \in \Omega \) with \(f(I_{best})\) as large as possible.

2.3 Initial solution

The MOH algorithm needs an initial solution to start its search. Generally, the initial solution can be provided by any eligible means. In our case, we adopt a randomized two step procedure. First, from k empty subsets \(S_i = \emptyset , \forall i\in \{1,\dots ,k\}\), we assign each vertex \(v \in V\) to a random subset \(S_i \in \{S_{1},S_{2},\ldots ,S_{k}\}\). Then if some subsets are still empty, we repetitively move a vertex from its current subset to an empty subset until no empty subset exists.

2.4 Move operations and search operators

Our MOH algorithm iteratively transforms the incumbent solution to a neighbor solution by applying some move operations. Typically, a move operation (or simply a move) changes slightly the solution, e.g., by transferring a vertex to a new subset. Formally, let I be the incumbent solution and let mv be a move, we use \(I' \leftarrow I \oplus mv\) to denote the neighbor solution \(I'\) obtained by applying mv to I.

Associated to a move operation mv, we define the notion of move gain \(\varDelta _{mv}\), which indicates the objective change between the incumbent solution I and the neighbor solution \(I'\) obtained after applying the move, i.e.,

where f is the optimization objective [see Formula (2)].

In order to efficiently evaluate the move gain of a move, we develop dedicated techniques which are described in Sect. 2.5. In this work, we employ two basic move operations: the ‘single-transfer move’ and the ‘double-transfer move’. These two move operations form the basis of our five search operators.

-

Single-transfer move (st): Given a k-cut \(I = \{S_1 , S_2, \ldots , S_k \}\), a vertex \(v \in S_p\) and a target subset \(S_q\) with \(p,q \in \{1,\ldots ,k\}, p\ne q\), the ‘single-transfer move’ displaces vertex \(v \in S_p\) from its current subset \(S_p\) to the target subset \(S_q \ne S_p\). We denote this move by \(st(v,S_p,S_q)\) or \(v\rightarrow S_q\).

-

Double-transfer move (dt): Given a k-cut \(I = \{S_1 , S_2, \ldots , S_k \}\), the ‘double-transfer move’ displaces vertex u from its subset \(S_{cu}\) to a target subset \(S_{tu} \ne S_{cu}\), and displaces vertex v from its current subset \(S_{cv}\) to a target subset \(S_{tv} \ne S_{cv}\). We denote this move by \(dt(u,S_{cu},S_{tu};v,S_{cv},S_{tv})\) or dt(u, v), or still dt.

From these two basic move operations, we define five distinct search operators \(O_1 - O_5\) which indicate precisely how these two basic move operations are applied to transform an incumbent solution to a new solution. After an application of any of these search operators, the move gains of the impacted moves are updated according to the dedicated techniques explained in Sect. 2.5.

-

The \(\mathbf {O_1}\) search operator applies the single-transfer move operation. Precisely, \(O_1\) selects among the \((k-1)n\) single-transfer moves a best move \(v\rightarrow S_q\) such that the induced move gain \(\varDelta _{(v\rightarrow S_q)}\) is maximum. If there are more than one such moves, one of them is selected at random. Since there are \((k-1)n\) candidate single-transfer moves from a given solution, the time complexity of \(O_1\) is bounded by O(kn). The proposed MOH algorithm employs this search operator as its main intensification operator which is complemented by the \(O_2\) search operator to locate good local optima (see Algorithm 1, lines 10–19 and Sect. 2.6).

-

The \(\mathbf {O_2}\) search operator is based on the double-transfer move operation and selects a best dt move with the largest move gain \(\varDelta _{dt}\). If there are more than one such moves, one of them is selected at random.

Let \(dt(u,S_{cu},S_{tu};v,S_{cv},S_{tv})\) (\(S_{cu}\ne S_{tu}\), \(S_{cv}\ne S_{tv}\)) be a double-transfer move, then the move gain \(\varDelta _{dt}\) of this double transfer move can be calculated by a combination of the move gains of its two underlying single-transfer moves (\(\varDelta _{u\rightarrow S_{tu}}\) and \(\varDelta _{v\rightarrow S_{tv}}\)) as follows:

where \(\omega _{uv}\) is the weight of edge \(e(u,v) \in E\) and \(\psi \) is a coefficient which is determined as follows:

The operator \(O_2\) is used when \(O_1\) exhausts its improving moves and provides a first means to help the descent-based improvement phase to escape the current local optimum and discover solutions of increasing quality. Given an incumbent solution, there are a total number of \((k-1)^2n^2\) candidate double-transfer moves denoted as set DT. Seeking directly the best move with the maximum \(\varDelta _{dt}\) among all these possible moves would just be too computationally expensive. In order to mitigate this problem, we devise a strategy to accelerate the move evaluation process.

From Formula (4), one observes that among all the vertices in V, only the vertices verifying the condition \(\omega _{uv} \ne 0\) and \(\varDelta _{dt(u,v)} > 0\) are of interest for the double-transfer moves. Note that without the condition \(\omega _{uv} \ne 0\), performing a double-transfer move would actually equal to two consecutive single-transfer moves, which on the one hand makes the operator \(O_2\) meaningless and on the other hand fails to get an increased objective gain. Thus, by examining only the endpoint vertices of edges in E, we shrink the move combinations by building a reduced subset: \(DT^R = \{dt(u,v) : dt(u,v) \in \ DT, \omega _{uv} \ne 0, \varDelta _{dt(u,v)} > 0\}\). Based on \(DT^R\), the complexity of examining all possible double-transfer moves drops to O(|E|), which is not related to k. In practice, one can examine \(\phi |E|\) endpoint vertices in case |E| is too large. We empirically set \(\phi = 0.1/d\), where d is the highest degree of the graph.

To summarize, the \(O_2\) search operator selects two st moves \(u\rightarrow S_{tu}\) and \(v\rightarrow S_{tv}\) from the reduced set \(DT^R\), such that the combined move gain \(\varDelta _{dt(u,v)}\) according to Formula (4) is maximum.

-

The \(\mathbf {O_3}\) search operator, like \(O_1\), selects a best single-transfer move (i.e., with the largest move gain) while considering a tabu list H (Glover and Laguna 1999). The tabu list is a memory which is used to keep track of the performed st moves to avoid revisiting previously encountered solutions. As such, each time a best st move is performed to displace a vertex v from its original subset to a target subset, v becomes tabu and is forbidden to move back to its original subset for the next \(\lambda \) iterations (called tabu tenure). In our case, the tabu tenure is dynamically determined as follows.

$$\begin{aligned} \lambda = rand(3,n/10 ) \end{aligned}$$(6)where rand(3, n / 10) denotes a random integer between 3 and n / 10. Based on the tabu list, \(O_3\) considers all possible single-transfer moves except those forbidden by the tabu list H and selects the best st move with the largest move gain \(\varDelta _{st}\). Note that a forbidden move is always selected if the move leads to a solution better than the best solution found so far. This is called aspiration in tabu search terminology (Glover and Laguna 1999). Although both \(O_3\) and \(O_1\) use the single-transfer move, they are two different search operators and play different roles within the MOH algorithm. On the one hand, as a pure descent operator, \(O_1\) is a faster operator compared to \(O_3\) and is designed to be an intensification operator. Since \(O_1\) alone has no any diversification capacity and always ends with the local optimum encountered, it is jointly used with \(O_2\) to visit different local optima. On the other hand, due to the use of the tabu list, \(O_3\) can accept moves with a negative move gain (leading to a worsening solution). As such, unlike \(O_1\), \(O_3\) has some diversification capacity, and when jointly used with \(O_4\), helps the search to examine nearby regions around the input local optimum to find better solutions (see Algorithm 1, lines 28–38 and Sect. 2.7).

-

The \(\mathbf {O_4}\) search operator, like \(O_2\), is based on the double-transfer operation. However, \(O_4\) strongly constraints the considered candidate dt moves with respect to two target subsets which are randomly selected. Specifically, \(O_4\) operates as follows. Select two target subsets \(S_p\) and \(S_q\) at random, and then select two single-transfer moves \(u \rightarrow S_p\) and \(v\rightarrow S_q\) such that the combined move gain \(\varDelta _{dt(u,v)}\) according to Formula (4) is maximum. Operator \(O_4\) is jointly used with operator \(O_3\) to ensure the diversified improvement search phase.

-

The \(\mathbf {O_5}\) search operator is based on a randomized single-transfer move operation. \(O_5\) first selects a random vertex \(v \in V\) and a random target subset \(S_p\), where \(v \not \in S_p\) and then moves v from its current subset to \(S_p\). This operator is used to change randomly the incumbent solution for the purpose of (strong) diversification when the search is considered to be trapped in a deep local optimum (see Sect. 2.8).

Among the five search operators, four of them (\(O_1\)–\(O_4\)) need to find a single-transfer move with the maximum move gain. To ensure a high computational efficiency of these operators, we develop below a streamlining technique for fast move gain evaluation and move gain updates.

2.5 Bucket sorting for fast move gain evaluation and updating

The algorithm needs to rapidly evaluate a number of candidate moves at each iteration. Since all the search operators basically rely on the single-transfer move operation, we developed a fast incremental evaluation technique based on a bucket data structure to keep and update the move gains after each move application (Cormen et al. 2001). Our streamlining technique can be described as follows: let \(v\rightarrow S_x\) be the move of transferring vertex v from its current subset \(S_{cv}\) to any other subset \(S_x\), \(x \in \{1,\dots ,k\}, x \ne cv\). Then initially, each move gain is determined as follows:

where \(\omega _{vi}\) and \(\omega _{vj}\) are respectively the weights of edges e(v, i) and e(v, j).

Suppose the move \(v\rightarrow S_{tv}\), i.e., displacing v from \(S_{cv}\) to \(S_{tv}\), is performed, the move gains can be updated by performing the following calculations:

-

1.

for each \(S_x\ne S_{cv}\), \(S_x\ne S_{tv}\), \(\varDelta _{v\rightarrow S_x} = \varDelta _{v\rightarrow S_x} - \varDelta _{v\rightarrow S_{tv}}\)

-

2.

\(\varDelta _{v\rightarrow S_{cv}} = - \varDelta _{v\rightarrow S_{tv}}\)

-

3.

\(\varDelta _{v\rightarrow S_{tv}} = 0\)

-

4.

for each \(u\in V-\{v\}\), moving \(u\in S_{cu}\) to each other subset \(S_y \in S-\{S_{cu}\}\),

For low-density graphs, \(\omega _{uv} = 0\) stands for most cases. Hence, we only update the move gains of vertices affected by this move (i.e., the displaced vertex and its adjacent vertices), which reduces the computation time significantly.

The move gains can be stored in an vector, with which the time for finding the best move grows linearly with the number of vertices and partitions (O(kn)). For large problem instances, the required time to search the best move can still be quite high, which is particular true when k is large. To further reduce the computing time, we adapted the bucket sorting technique of Fiduccia and Mattheyses (1982) initially proposed for the two-way network partitioning problem to the max-k-cut problem. The idea is to keep the vertices ordered by the move gains in decreasing order in k arrays of buckets, one for each subset \(S_{i}\in \{S_{1},S_{2},\ldots ,S_{k}\}\). In each bucket array i, the \(\mathrm{j{th}}\) entry stores in a doubly linked list the vertices with the move gain \(\varDelta _{v\rightarrow S_i}\) currently equaling j. To ensure a direct access to each vertex in the doubly linked lists, as suggested in Fiduccia and Mattheyses (1982), we maintain another array for all vertices, where each element points to its corresponding vertex in the doubly linked lists.

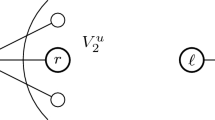

Figure 1 shows an example of the bucket structure for \(k=3\) and \(n=8\). The 8 vertices of the graph (Fig. 1, left) are divided to 3 subsets \(S_1, S_2\) and \(S_3\). The associated bucket structure (Fig. 1, right) shows that the move gains of moving vertices e, g, h to subset \(S_1\) equal \(-1\), then they are stored in the entry of \(B_1\) with index of \(-1\) and are managed as a doubly linked list. The array AI shown at the bottom of Fig. 1 manages position indexes of all vertices.

For each array of buckets, finding the best vertex with maximum move gain is equivalent to finding the first non-empty bucket from top of the array and then selecting a vertex in its doubly linked list. If there are more than one vertices in the doubly linked list, a random vertex in this list is selected. To further reduce the searching time, the algorithm memorizes the position of the first non-empty bucket (e.g., \(gmax_1, gmax_2, gmax_3\) in Fig. 1). After each move, the bucket structure is updated by recomputing the move gains (see Formula (8)) of the affected vertices which include the moved vertex and its adjacent vertices, and shifting them to appropriate buckets. For instance, the steps of performing an \(O_1\) move based on Fig. 1 are shown as follows: First, obtain the index of maximum move gain in the bucket arrays by calculating \(max (gmax_1, gmax_2, gmax_3)\), which equals \(gmax_3\) in this case. Second, select randomly a vertex indexed by \(gmax_3\), vertex b in this case. At last, update the positions of the affected vertices a, b, d.

The complexity of each move consists in (1) searching for the vertex with maximum move gain in O(l) (l being the current length of the doubly link list with the maximum gain, typically much smaller than n), (2) recomputing the move gains for the affected vertices in \(O(kd_{max})\) (\(d_{max}\) being the maximum degree of the graph), and (3) updating the bucket structure in \(O(kd_{max})\).

Bucket data structures have been previously applied to the specific max-cut and max-bisection problems (Benlic and Hao 2013; Lin and Zhu 2014; Zhu et al. 2015). This work presents the first adaptation of the bucket sorting technique to the general max-k-cut problem.

2.6 Descent-based improvement phase for intensified search

The descent-based local search is used to obtain a local optimum from a given starting solution. As described in Algorithm 1 (lines 10–19), we alternatively uses two search operators \(O_1\) and \(O_2\) defined in Sect. 2.4 to improve a solution until reaching a local optimum. Starting from the given initial solution, the procedure first applies \(O_1\) to improve the incumbent solution. According to the definition of \(O_1\) in Sect. 2.4, at each step, the procedure examines all possible single-transfer moves and selects a move \(v\rightarrow S_q\) with the largest move gain \(\varDelta _{v\rightarrow S_q}\) subject to \(\varDelta _{v\rightarrow S_q}>0\), and then performs that move. After the move, the algorithm updates the bucket structure of move gains according to the technique described in Sect. 2.5.

When the incumbent solution can not be improved by \(O_1\) (i.e., \(\forall v\in V, \forall S_q, \varDelta _{v\rightarrow S_q} \le 0\)), the procedure turns to \(O_2\) which makes one best double-transfer move. If an improved solution is discovered with respect to the local optimum reached by \(O_1\), we are in a new promising area. We switch back to operator \(O_1\) to resume an intensified search to attain a new local optimum. The descent-based improvement phase stops when no better solution can be found with \(O_1\) and \(O_2\). The last solution is a local optimum \(I_{lo}\) with respect to the single-transfer and double-transfer moves and serves as the input solution of the second search phase which is explained in the next section.

2.7 Diversified improvement phase for discovering promising region

The descent-based local phase described in Sect. 2.6 alone can not go beyond the best local optimum \(I_{lo}\) it encounters. The diversified improvement search phase is used 1) to jump out of this local optimum and 2) to intensify the search around this local optimum with the hope of discovering other improved solutions better than the input local optimum \(I_{lo}\). The diversified improvement search procedure alternatively uses two search operators \(O_3\) and \(O_4\) defined in Sect. 2.4 to perform moves until a prescribed condition is met (see below and Algorithm 1, line 38). The application of \(O_3\) or \(O_4\) is determined probabilistically: with probability \(\rho \), \(O_3\) is applied; with \(1-\rho \), \(O_4\) is applied.

When \(O_3\) is selected, the algorithm searches for a best single transfer move \(v\rightarrow S_q\) with maximum move gain \(\varDelta _{v\rightarrow S_q}\) which is not forbidden by the tabu list or verifies the aspiration criterion. Each performed move is then recorded in the tabu list H and is classified tabu for the next \(\lambda \) (calculated by Formula (6)) iterations. The bucket structure is updated to actualize the impacted move gains accordingly. Note that the algorithm only keeps and updates the tabu list during the diversified improvement search phase. Once this second search phase terminates, the tabu list is cleared up.

Similarly, when \(O_4\) is selected, two subsets are selected at random and a best double-transfer dt move with maximum move gain \(\varDelta _{dt}\) is determined from the bucket structure (break ties at random). After the move, the bucket structure is updated to actualize the impacted move gains.

The diversified improvement search procedure terminates once a solution better than the input local optimum \(I_{lo}\) is found, or a maximum number \(\omega \) of diversified moves (\(O_3\) or \(O_4\)) is reached. Then the algorithm returns to the descent-based search procedure and use the current solution I as a new starting point for the descent-based search. If the best solution founded so far (\(f_{best}\)) can not be improved over a maximum allowed number \(\xi \) of consecutive rounds of the descent-based improvement and diversified improvement phases, the search is probably trapped in a deep local optima. Consequently, the algorithm switches to the perturbation phase (Sect. 2.8) to displace the search to a distant region.

2.8 Perturbation phase for strong diversification

The diversified improvement phase makes it possible for the search to escape some local optima. However, the algorithm may still get deeply stuck in a non-promising regional search area. This is the case when the best-found solution \(f_{best}\) can not be improved after \(\xi \) consecutive rounds of descent and diversified improvement phases. Thus the random perturbation is applied to strongly change the incumbent solution.

The basic idea of the perturbation consists in applying the \(O_5\) operator \(\gamma \) times. In other words, this perturbation phase moves \(\gamma \) randomly selected vertices from their original subset to a new and randomly selected subset. Here, \(\gamma \) is used to control the perturbation strength; a large (resp. small) \(\gamma \) value changes strongly (resp. weakly) the incumbent solution. In our case, we adopt \(\gamma =0.1|V|\), i.e., as a percent of the number of vertices. After the perturbation phase, the search returns to the descent-based improvement phase with the perturbed solution as its new starting solution.

3 Experimental results and comparisons

3.1 Benchmark instances

To evaluate the performance of the proposed MOH approach, we carried out computational experiments on two sets of well-known benchmarks with a total of 91 large instances of the literature.Footnote 1 The first set (G-set) is composed of 71 graphs with 800–20,000 vertices and an edge density from 0.02 to 6 %. These instances were previously generated by a machine-independent graph generator including toroidal, planar and random weighted graphs. These instances are available from: http://www.stanford.edu/yyye/yyye/Gset. The second set comes form (Burer et al. 2002), arising from 30 cubic lattices with randomly generated interaction magnitudes. Since the 10 small instances (with less than 1000 vertices) of the second set are very easy for our algorithm, only the results of the 20 larger instances with 1000 to 2744 vertices are reported. These well-known benchmarks were frequently used to evaluate the performance of max-bisection, max-cut and max-k-cut algorithms (Benlic and Hao 2013; Festa et al. 2002; Shylo et al. 2012, 2015; Wang et al. 2013; Wu and Hao 2012, 2013; Wu et al. 2015; Zhu et al. 2013).

3.2 Experimental protocol

The proposed MOH algorithm was programmed in C++ and compiled with GNU g++ (optimization flag “−O2”). Our computer is equipped with a Xeon E5440/2.83GHz CPU with 2GB RAM. When testing the DIMACS machine benchmarkFootnote 2, our machine requires 0.43, 2.62 and 9.85 CPU time in seconds respectively for graphs r300.5, r400.5, and r500.5 compiled with g++ −O2.

3.3 Parameters

The MOH algorithm requires five parameters: tabu tenure \(\lambda \), maximum number \(\omega \) of diversified moves, maximum number \(\xi \) of consecutive non-improving rounds of the descent and diversified improvement phases before the perturbation phase, probability \(\rho \) for applying the operator \(O_3\), and perturbation strength \(\gamma \). For the tabu tenure \(\lambda \), we adopted the recommended setting of the Breakout Local Search (Benlic and Hao 2013), which performs quite well for the benchmark graphs. For each of the other parameters, we first identified a collection of varying values and then determined the best setting by testing the candidate values of the parameter while fixing the other parameters to their default values. This parameter study was based on a selection of 10 representative and challenging G-set instances (G22, G23, G25, G29, G33, G35, G36, G37, G38 and G40). For each parameter setting, 10 independent runs of the algorithm were conducted for each instance and the average objective values over the 10 runs were recorded. If a large parameter value presents a better result, we gradually increase its value; otherwise, we gradually decrease its value. By repeating the above procedure, we determined the following parameter settings: \(\lambda =rand(3,|V|/10 ), \omega =500, \xi =1000 , \rho =0.5\), and \(\gamma =0.1|V|\), which were used in our experiments to report computational results.

Considering the stochastic nature of our MOH algorithm, each instance was independently solved 20 times. For the purpose of fair comparisons reported in Sects. 3.4 and 3.5, we followed most reference algorithms and used a timeout limit as the stopping criterion of the MOH algorithm. The timeout limit was set to be 30 minutes for graphs with \(|V| < 5000\), 120 minutes for graphs with \(10{,}000\ge |V| \ge 5000\), 240 minutes for graphs with \(|V| \ge 10{,}000\).

To fully assess the performance of the MOH algorithm, we performed two comparisons with the state-of-the-art algorithms. First, we focused on the max-k-cut problem (\(k=2,3,4,5\)), where we thoroughly compared our algorithm with the recent discrete dynamic convexized algorithm (Zhu et al. 2013) which provides the most competitive results for the general max-k-cut problem in the literature. Secondly, for the special max-cut case (\(k=2\)), we compared our algorithm with seven most recent max-cut algorithms (Benlic and Hao 2013; Kochenberger et al. 2013; Shylo et al. 2012; Wang et al. 2013; Wu and Hao 2012, 2013). It should be noted that those state-of-the-art max-cut algorithms were specifically designed for the particular max-cut problem while our algorithm was developed for the general max-k-cut problem. Naturally, the dedicated algorithms are advantaged since they can better explore the particular features of the max-cut problem.

3.4 Comparison with state-of-the-art max-k-cut algorithms

In this section, we present the results attained by the MOH algorithm for the max-k-cut problem. As mentioned above, we compare the proposed algorithm with the discrete dynamic convexized algorithm (DC) (Zhu et al. 2013), which was published very recently. DC was tested on a computer with a 2.11 GHz AMD processor and 1 GB of RAM. According to the Standard Performance Evaluation Cooperation (SPEC) (www.spec.org), this computer is 1.4 times slower than the computer we used for our experiments. Note that DC is the only heuristic algorithm available in the literature, which published computational results for the general max-k-cut problem.

Tables 1, 2, 3, and 4, respectively show the computational results of the MOH algorithm (\(k=2,3,4,5\)) on the 2 sets of benchmarks in comparison with those of the DC algorithm. The first two columns of the tables indicate the name and the number of vertices of the graphs. Columns 3 to 6 present the results attained by our algorithm, where \(f_{best}\) and \(f_{avg}\) show the best objective value and the average objective value over 20 runs, std gives the standard deviation and time(s) indicates the average CPU time in seconds required by our algorithm to reach the best objective value \(f_{best}\). Columns 7 to 10 present the statistics of the DC algorithm, including the best objective value \(f_{best}\), average objective value \(f_{avg}\), the time required to terminate the run tt(s) and the time bt(s) to reach the \(f_{best}\) value. Considering the difference between our computer and the computer used by DC, we normalize the time of DC by dividing them by 1.4 according to the SPEC mentioned above. The entries marked as “-” in the tables indicate that the corresponding results are not available. The entries in bold indicate that those results are better than the results provided by the reference DC algorithm. The last column (gap) indicates the gap of the best objective value for each instance between our algorithm and DC. A positive gap implies an improved result.

From Table 1 on max-2-cut, one observes that our algorithm achieves a better \(f_{best}\) (best objective value) for 50 out of 74 instances reported by DC, while a better \(f_{avg}\) (average objective value) for 71 out of 74 instances. Our algorithm matches the results on other instances and there is no result worse than that obtained by DC. The average standard deviation for all 91 instances is only 2.82, which shows our algorithm is stable and robust.

From Tables 2, 3, and 4, which respectively show the comparative results on max-3-cut, max-4-cut and max-5-cut. One observes that our algorithm achieves much higher solution quality on more than 90 % of 44 instances reported by DC while getting 0 worse result. Moreover, even our average results (\(f_{avg}\)) are better than the best results reported by DC.

Note that the DC algorithm used a stopping condition of 500 generations (instead of a cutoff time limit) to report its computational results. Among the two timing statistics (tt(s) and bt(s)), bt(s) roughly corresponds to column time of the MOH algorithm. Still given that the two algorithms attain solutions of quite different quality, it is meaningless to directly compare the corresponding time values listed in Tables 1, 2, 3, and 4. To fairly compare the computational efficiency of MOH and DC, we reran the MOH algorithm with the best objective value of the DC algorithm as our stopping condition and reported our timing statistics in Table 5. One observes that our algorithm needs at most 16 seconds (less than 1 second for most cases) to attain the best objective value reported by the DC algorithm, while the DC algorithm requires at least 44 seconds and up to more than 2000 seconds for several instances. More generally, as shown in Tables 1, 2, 3, and 4, except the last 17 instances of the very competitive max-2-cut problem for which the results of DC are not available, the MOH algorithm requires rarely more than 1000 seconds to attain solutions of much better quality.

We conclude that the proposed algorithm for the general max-k-cut problem dominates the state-of-the-art reference DC algorithm both in terms of solution quality and computing time.

3.5 Comparison with state-of-the-art max-cut algorithms

Our algorithm was designed for the general max-k-cut problem for \(k\ge 2\). The assessment of the last section focused on the general case. In this section, we further evaluate the performance of the proposed algorithm for the special max-cut problem (\(k=2\)).

Recall that max-cut has been largely studied in the literature for a long time and there are many powerful heuristics which are specifically designed for the problem. These state-of-the-art max-cut algorithms constitute thus relevant references for our comparative study. In particular, we adopt the following 7 best performing sequential algorithms published since 2012.

-

1.

Global equilibrium search (GES) (2012) (Shylo et al. 2012)—an algorithm sharing ideas similar to simulated annealing and utilizing accumulated information of search space to generate new solutions for the subsequent stages. The reported results of GES were obtained on a PC with a 2.83GHz Intel Core QUAD Q9550 CPU and 8.0GB RAM.

-

2.

Breakout local search (BLS) (2013) (Benlic and Hao 2013)—a heuristic algorithm integrating a local search and adaptive perturbation strategies. The reported results of BLS were obtained on a PC with 2.83GHz Intel Xeon E5440 CPU and 2GB RAM.

-

3.

Two memetic algorithms respective for the max-cut problem (MACUT) (2012) (Wu and Hao 2012) and the max-bisection problem (MAMBP) (2013) (Wu and Hao 2013)—integrating a grouping crossover operator and a tabu search procedure. The results reported in the two papers were obtained on a PC with a 2.83GHz Intel Xeon E5440 CPU and 2GB RAM.

-

4.

GRASP-Tabu search algorithm (2013) (Wang et al. 2013)—a method converting the max-cut problem to the UBQP problem and solving it by integrating GRASP and tabu search. The reported results were obtained on a PC with a 2.83GHz Intel Xeon E5440 CPU and 2GB RAM.

-

5.

Tabu search (TS-UBQP) (2013) (Kochenberger et al. 2013)—a tabu search algorithm designed for UBQP. The evaluation of TS-UBQP were performed on a PC with a 2.83GHz Intel Xeon E5440 CPU and 2GB RAM.

-

6.

Tabu search based hybrid evolutionary algorithm (TSHEA) (2016) (Wu et al. 2015)—a very recent hybrid algorithm integrating a distance-and-quality guided solution combination operator and a tabu search procedure based on neighborhood combination of one-flip and constrained exchange moves. The results were obtained on a PC with 2.83GHz Intel Xeon E5440 CPU and 8GB RAM.

One notices that except GES, the other five reference algorithms were run on the same computing platform. Nevertheless, it is still difficult to make a fully fair comparison of the computing time, due to the differences on programming language, compiling options, and termination conditions, etc. Our comparison thus focuses on the best solution achieved by each algorithm. Recall that for our algorithm, the timeout limit was set to be 30 minutes for graphs with \(|V| < 5000\), 120 minutes for graphs with \(1000\ge |V| \ge 5000\), 240 minutes for graphs with \(|V| \ge 10{,}000\). Our algorithm employed thus the same timeout limits as (Wu and Hao 2012) on the graphs \(|V|< 10{,}000 \), but for the graphs \( |V| \ge 10{,}000\), we used 240 minutes to compare with BLS Benlic and Hao (2013).

Table 6 gives the comparative results on the 91 instances of the two benchmarks. Columns 1 and 2 respectively indicate the instance name and the number of vertices of the graphs. Columns 3 shows the current best known objective value \(f_{pre}\) reported by any existing max-cut algorithm in the literature including the latest parallel GES algorithm (Shylo et al. 2015). Columns 4 to 10 give the best objective value obtained by the reference algorithms: GES (Shylo et al. 2012), BLS (Benlic and Hao 2013), MACUT (Wu and Hao 2012), TS-UBQP (Kochenberger et al. 2013), GRASP-TS/PM (Wang et al. 2013), MAMBP (Wu and Hao 2013) and TSHEA (Wu et al. 2015). Note that MAMBP is designed for the max-bisection problem (i.e., balanced max-cut), however it achieves some previous best known max-cut results. The last column ‘MOH’ recalls the best results of our algorithm from Table 1. The rows denoted by ‘Better’, ‘Equal’ and ‘Worse’ respectively indicate the number of instances for which our algorithm obtains a result of better, equal and worse quality relative to each reference algorithm. The entries are reported in the form of x/y/z, where z denotes the total number of the instances tested by our algorithm, y is the number of the instances tested by a reference algorithm and x indicates the number of instances where our algorithm achieved ‘Better’, ‘Equal’ or ‘Worse’ results. The results in bold mean that our algorithm has improved the best known results. The entries marked as “–” in the table indicate that the results are not available.

From Table 6, one observes that the MOH algorithm is able to improve the current best known results in the literature for 4 instances, and match the best known results for 74 instances. For 13 cases (in italic), even if our results are worse than the current best known results achieved by the latest parallel GES algorithm (Shylo et al. 2015), they are still better than the results of other existing algorithms, except for 4 instances if we refer to the most recent TSHEA algorithm (Wu et al. 2015). Note that the results of the parallel GES algorithm were achieved on a more powerful computing platform (Intel CoreTM i7-3770 CPU @3.40GHz and 8GB RAM) and with longer time limits (4 parallel processes at the same time and 1 hour for each process).

Such a performance is remarkable given that we are comparing our more general algorithm designed for max-k-cut with the best performing specific max-cut algorithms. The experimental evaluations presented in this section and last section demonstrate that our algorithm not only performs well on the general max-k-cut problem, but also remains highly competitive for the special case of the popular max-cut problem.

4 Discussion

In this section, we investigate the role of several important ingredients of the proposed algorithm, including the bucket sorting data structure, the descent improvement search operators \(O_1\) and \(O_2\) and the diversified improvement search operators \(O_3\) and \(O_4\).

4.1 Impact of the bucket sorting technique

As described in Sect. 2.5, the bucket sorting technique is utilized in the MOH algorithm for the purpose of quickly identifying a suitable move with the best objective gain. To verify its effectiveness, we implemented another MOH version where we replaced the bucket sorting data structure with a simple vector and conducted an experimental comparison on the max-3-cut problem. For this experiment, we used 20 representative Gxx instances and ran 20 times both MOH versions to solve each chosen instance with a time limit of 300 seconds.

Table 7 reports the average of the best objective values and the total number of iterations of each MOH version for each instance. From Table 7, we observe that the MOH algorithm using the bucket sorting structure conducted 3.3 times more iterations on average than using the vector structure within the given time span. Moreover, the former is able to find better results for 16 instances and only one worse result. In conclusion, this experiment confirms that using the devised bucket sorting technique is able to considerably improve the computational efficiency and search capacity of the MOH algorithm.

4.2 Impact of the descent improvement search operators

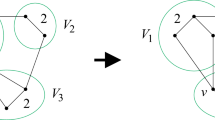

As described in Sect. 2.6, the proposed algorithm employs operators \(O_1\) and \(O_2\) for its descent improvement phase to obtain local optima. To analyze the impact of these two operators, we implement three variants of our algorithm, the first one using the operator \(O_1\) alone, the second one using the union \(O_1\cup O_2\) such that the descent search procedure always chooses the best move among the \(O_1\) and \(O_2\) moves (Lü et al. 2011), the third one using operator \(rand(O_1,O_2)\) where the descent procedure applies randomly and with equal probability \(O_1\) or \(O_2\), while keeping all the other ingredients and parameters fixed as described in Sect. 3.3. The strategy used by our original algorithm, detailed in Sect. 2.6, is denoted as \(O_1+O_2\).

This study was based on the max-cut problem and the same 10 challenging instances used for parameter tuning of Sect. 3.3. Each selected instance was solved 10 times by each of these variants and our original algorithm. The stopping criterion was a timeout limit of 30 minutes. The obtained results are presented in Table 8, including the best objective value \(f_{best}\), the average objective value \(f_{avg}\) over the 10 independent runs, as well as the CPU times in seconds to reach \(f_{best}\). To evaluate the performance, we display in Fig. 2a the gaps between the best objective values obtained by different strategies and the best objective values by our original algorithm. We also show in Fig. 2b the box and whisker plots which indicate, for different \(O_1\) and \(O_2\) combination strategies, the distribution and the ranges of the obtained results for the 10 tested instances. The results are expressed as the additive inverse of percent deviation of the averages results from the best known objective values obtained by our original algorithm.

From Fig. 2a, one observes that for the tested instances, other combination strategies obtain fewer best known results compared to the strategy \(O_1 + O_2\), and produce large gaps to the best known results on some instances. From Fig. 2b, we observe a clear difference in the distribution of the results with different strategies. For the results with the strategies of \(O_1 + O_2\), the plot indicates a smaller mean value and significantly smaller variation compared to the results obtained by other strategies. We thus conclude that the strategy used by our algorithm (\(O_1 + O_2\)) performs better than other strategies.

4.3 Impact of the diversified improvement search operators

As described in Sect. 2.7, the proposed algorithm employs two diversified operator \(O_3\) and \(O_4\) to enhance the search power of the algorithm and make it possible for the search to visit new promising regions. The diversified improvement procedure uses probability \(\rho \) to select \(O_3\) or \(O_4\). To analyze the impact of operators \(O_3\) and \(O_4\), we tested our algorithm with \(\rho =1\) (using the operator \(O_3\) alone), \(\rho = 0.5\) (equal application of \(O_3\) and \(O_4\) used in our original MOH algorithm), \(\rho =0\) (using the operator \(O_4\) alone), while keeping all the other ingredients and parameters fixed as described before. The stopping criterion was a timeout limit of 30 minutes. We then independently solved each selected instance 10 times with those different values of \(\rho \). The obtained results on the max-cut problem for the 10 challenging instances used for parameter tuning of Sect. 3.3 are presented in Table 9, including the best objective value \(f_{best}\), the average objective value \(f_{avg}\) over the 10 independent runs, as well as the CPU times in seconds to reach \(f_{best}\). To evaluate the performance, we again calculate the gaps between different best objective values shown in Fig. 3a and average objective values shown in Fig. 3b, where the set of values \(f_{best}\), \(f_{avg}\), when \(\rho = 0.5\), are set as the reference values.

As in Sect. 4.2, to evaluate the performance, we show in Fig. 3a the gaps between the best objective values obtained with different values of \(\rho \) and the best objective values by our original MOH algorithm (\(\rho =0.5\)). We also show in Fig. 3b the box and whisker plots which indicates, for different values of \(\rho \), the distribution and the ranges of the obtained results for the 10 tested instances. The results are expressed as the additive inverse of percent deviation of the averages results from the best known objective values obtained by our original algorithm.

Figure 3a discloses that using \(O_3\) or \(O_4\) alone obtains fewer best known results than using them jointly and achieves significantly worse results on some particular instances. From Fig. 3b, we observe a visible difference in the distribution of the results with different strategies. For the results with the parameter \(\rho = 0.5\), the plot indicates a smaller mean value and significantly smaller variation compared to the results obtained by other strategies. We thus conclude that jointly using \(O_3\) and \(O_4\) with \(\rho = 0.5\) is the best choice since it produces better results in terms of both best and average results.

5 Conclusion

Our multiple search operator algorithm (MOH) for the general max-k-cut problem achieves a high level performance by including five distinct search operators which are applied in three search phases. The descent-based improvement phase aims to discover local optima of increasing quality with two intensification-oriented operators. The diversified improvement phase combines two other operators to escape local optima and discover promising new search regions. The perturbation phase is applied as a means of strong diversification to get out of deep local optimum traps. To obtain an efficient implementation of the proposed algorithm, we developed streamlining techniques based on bucket sorting.

We demonstrated the effectiveness of the MOH algorithm both in terms of solution quality and computation efficiency by a computational study on the two sets of well-known benchmarks composed of 91 instances. For the general max-k-cut problem, the proposed algorithm is able to improve 90 percent of the current best known results available in the literature. Moreover, for the very popular special case with \(k=2\), i.e., the max-cut problem, MOH also performs extremely well by discovering 4 improved best results which were never reported by any max-cut algorithm of the literature. We also investigated the importance of the bucket sorting technique as well as alternative strategies for combing search operators and justified the combinations adopted in the proposed MOH algorithm.

Given that most ideas of the proposed algorithm are general enough, it is expected that they can be useful to design effective heuristics for other graph partitioning problems.

Notes

Our best results are available at: http://www.info.univ-angers.fr/pub/hao/maxkcut/MOHResults.zip.

References

Arráiz, E., & Olivo, O. (2009). Competitive simulated annealing and tabu search algorithms for the max-cut problem. In: Proceedings of the 11th annual conference on genetic and evolutionary computation, pp. 1797–1798. ACM.

Barahona, F., Grötschel, M., Jünger, M., & Reinelt, G. (1988). An application of combinatorial optimization to statistical physics and circuit layout design. Operations Research, 36(3), 493–513.

Benlic, U., & Hao, J. K. (2013). Breakout local search for the max-cut problem. Engineering Applications of Artificial Intelligence, 26(3), 1162–1173.

Boros, E., & Hammer, P. (1991). The max-cut problem and quadratic 0–1 optimization: Polyhedral aspects, relaxations and bounds. Annals of Operations Research, 33, 151–180.

Burer, S., & Monteiro, R. D. (2001). A projected gradient algorithm for solving the maxcut SDP relaxation. Optimization Methods and Software, 15(3–4), 175–200.

Burer, S., Monteiro, R. D., & Zhang, Y. (2002). Rank-two relaxation heuristics for max-cut and other binary quadratic programs. SIAM Journal on Optimization, 12(2), 503–521.

Chang, K. C., & Du, D. H. C. (1987). Efficient algorithms for layer assignment problem. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 6(1), 67–78.

Chen, R. W., Kajitani, Y., & Chan, S. P. (1983). A graph-theoretic via minimization algorithm for two-layer printed circuit boards. IEEE Transactions on Circuits and Systems, 30(5), 284–299.

Cho, J. D., Raje, S., & Sarrafzadeh, M. (1998). Fast approximation algorithms on maxcut, k-coloring, and k-color ordering for VLSI applications. IEEE Transactions on Computers, 47(11), 1253–1266.

Cormen, T. H., Leiserson, C. E., Rivest, R. L., & Stein, C. (2001). Introduction to algorithms (Vol. 6). Cambridge: MIT Press.

Ding, C. H., He, X., Zha, H., Gu, M., & Simon, H. D. (2001). A min–max cut algorithm for graph partitioning and data clustering. In: Proceedings IEEE international conference on data mining, 2001. ICDM 2001, pp. 107–114. IEEE.

Eisenblätter, A. (2002). The semidefinite relaxation of the k-partition polytope is strong. In: Cook, W. J., & Schulz, A. S. (Eds.), Integer Programming and Combinatorial Optimization (pp. 273–290). Berlin: Springer.

Festa, P., Pardalos, P. M., Resende, M. G., & Ribeiro, C. C. (2002). Randomized heuristics for the max-cut problem. Optimization Methods and Software, 17(6), 1033–1058.

Fiduccia, C. M., & Mattheyses, R. M. (1982). A linear-time heuristic for improving network partitions. In: 19th Conference on design automation, 1982, pp. 175–181. IEEE.

Ghaddar, B., Anjos, M. F., & Liers, F. (2011). A branch-and-cut algorithm based on semidefinite programming for the minimum k-partition problem. Annals of Operations Research, 188(1), 155–174.

Glover, F., & Laguna, M. (1999). Tabu search. Berlin: Springer.

Kahruman, S., Kolotoglu, E., Butenko, S., & Hicks, I. V. (2007). On greedy construction heuristics for the max-cut problem. International Journal of Computational Science and Engineering, 3(3), 211–218.

Kann, V., Khanna, S., Lagergren, J., & Panconesi, A. (1997). On the hardness of approximating max k-cut and its dual. Chicago Journal of Theoretical Computer Science, 2.

Karp, R. M. (1972). Reducibility among combinatorial problems. Berlin: Springer.

Kochenberger, G. A., Hao, J. K., Lü, Z., Wang, H., & Glover, F. (2013). Solving large scale max cut problems via tabu search. Journal of Heuristics, 19(4), 565–571.

Liers, F., Jünger, M., Reinelt, G., & Rinaldi, G. (2004). Computing exact ground states of hard ising spin glass problems by branch-and-cut. In: Hartmann, A., & Rieger, H. (Eds.), New Optimization Algorithms in Physics (pp. 47–68). London: Wiley.

Lin, G., & Zhu, W. (2012). A discrete dynamic convexized method for the max-cut problem. Annals of Operations Research, 196(1), 371–390.

Lin, G., & Zhu, W. (2014). An efficient memetic algorithm for the max-bisection problem. IEEE Transactions on Computers, 63(6), 1365–1376.

Lü, Z., Glover, F., Hao, & J. K. (2011). Neighborhood combination for unconstrained binary quadratic problems. In MIC post-conference book, pp. 49–61.

Martí, R., Duarte, A., & Laguna, M. (2009). Advanced scatter search for the max-cut problem. INFORMS Journal on Computing, 21(1), 26–38.

Mitchell, J. E. (2003). Realignment in the national football league: Did they do it right? Naval Research Logistics (NRL), 50(7), 683–701.

Pinter, R. Y. (1984). Optimal layer assignment for interconnect. Journal of VLSI and Computer Systems, 1(2), 123–137.

Shylo, V., Glover, F., & Sergienko, I. (2015). Teams of global equilibrium search algorithms for solving the weighted maximum cut problem in parallel. Cybernetics and Systems Analysis, 51(1), 16–24.

Shylo, V., Shylo, O., & Roschyn, V. (2012). Solving weighted max-cut problem by global equilibrium search. Cybernetics and Systems Analysis, 48(4), 563–567.

Wang, Y., Lü, Z., Glover, F., & Hao, J. K. (2013). Probabilistic grasp-tabu search algorithms for the UBQP problem. Computers & Operations Research, 40(12), 3100–3107.

Wu, Q., & Hao, J. K. (2012). A memetic approach for the max-cut problem. In: Coello Coello, C., Cutello, V., Deb, K., Forrest, S., Nicosia, G., & Pavone, M. (Eds.), Parallel Problem Solving from Nature - PPSN XII, Lecture Notes in Computer Science (vol. 7492, pp. 297–306). Berlin: Springer.

Wu, Q., & Hao, J. K. (2013). Memetic search for the max-bisection problem. Computers & Operations Research, 40(1), 166–179.

Wu, Q., Wang, Y., & Lü, Z. (2015). A tabu search based hybrid evolutionary algorithm for the max-cut problem. Applied Soft Computing, 34(1), 827–837.

Zhu, W., Lin, G., & Ali, M. M. (2013). Max-k-cut by the discrete dynamic convexized method. INFORMS Journal on Computing, 25(1), 27–40.

Zhu, W., Liu, Y., & Lin, G. (2015). Speeding up a memetic algorithm for the max-bisection problem. Numerical Algebra, 5(2), 151–168.

Acknowledgments

We are grateful to the reviewers of the paper which helped us to improve the work. The work was supported by the PGMO (2014-0024H) project from the Jacques Hadamard Mathematical Foundation, National Natural Science Foundation of China (Grant No. 71501157) and China Postdoctoral Science Foundation (Grant No. 2015M580873). Support for Fuda Ma from the China Scholarship Council is also acknowledged.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Ma, F., Hao, JK. A multiple search operator heuristic for the max-k-cut problem. Ann Oper Res 248, 365–403 (2017). https://doi.org/10.1007/s10479-016-2234-0

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10479-016-2234-0