Abstract

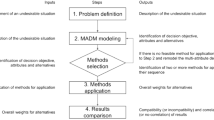

In multiple attribute decision analysis, many methods have been proposed to determine attribute weights. However, solution reliability is rarely considered in those methods. This paper develops an objective method in the context of the evidential reasoning approach to determine attribute weights that achieve high solution reliability. Firstly, the minimal satisfaction indicator of each alternative on each attribute is constructed using the performance data of each alternative. Secondly, the concept of superior intensity of an alternative is introduced and constructed using the minimal satisfaction of each alternative. Thirdly, the concept of solution reliability on each attribute is defined as the ordered weighted averaging (OWA) of the superior intensity of each alternative. Fourthly, to calculate the solution reliability on each attribute, methods for determining the weights of the OWA operator are developed based on the minimax disparity method. Then, each attribute weight is calculated by letting it be proportional to the solution reliability on that attribute. A problem of selecting leading industries is investigated to demonstrate the applicability and validity of the proposed method. Finally, the proposed method is compared with four other methods on the same problem, which demonstrates the high solution reliability of the proposed method.


1 Introduction

Decision quality is a general concept that is usually characterized by the professionalization, correctness, reliability, robustness, and adaptability of decisions. There are two general ways to assess the quality of decisions: (1) judging decisions based on outcomes; and (2) judging decisions according to the process for making them (Davern et al. 2008). On the one hand, the quality of judgments from a decision maker influences the quality of outcomes. The confidence or satisfaction of the decision maker also contributes to the quality of outcomes (Williams et al. 2007). On the other hand, providing diagnostic information and feedback to decision makers can improve the quality of the decision process (Davern et al. 2008).

In the literature, research has examined various factors influencing decision quality, including information quality (Raghunathan 1999; Gao et al. 2012), information quantity (Gao et al. 2012), thought mode (Gao et al. 2012), the quality of a decision maker (Raghunathan 1999; Malhotra et al. 2007), and time pressure (Kocher and Sutter 2006). In view of the impact of information technology on firm performance, Raghunathan (1999) used theoretical and simulation models to show that simultaneously improving the quality of information and the quality of a decision maker improves decision quality. Using online shopping as background, Gao et al. (2012) investigated the combined effect of information quantity, information quality, and thought modes on decision quality by integrating unconscious thought theory and information processing theory. Malhotra et al. (2007) investigated the influence of domain experts on the quality of decisions and constructed rules to identify experts with a high level of expertise who could make high-quality decisions in the oil and gas industry. With a view to solving decision problems in economics and finance, Kocher and Sutter (2006) experimentally examined the influence of time pressure and time-dependent incentive schemes on decision quality. The above research is clearly domain-specific and focuses on different perspectives of decision quality.

This paper focuses on a general aspect of decision quality, the reliability of decisions in multiple attribute decision analysis (MADA). From the perspective of outcomes, i.e., solutions to MADA problems, large differences among the performances of alternatives on some attributes mean high reliability of solutions or the ranking order of alternatives on the attributes. If such attributes are given higher weights during attribute aggregation processes, the aggregated ranking order will be more reliable. Therefore, attribute weights influence solution reliability in MADA (Aouni et al. 2013; Miranda and Mota 2012; Socorro García-Cascales et al. 2012; Wang 2012). If there is flexibility in attribute weight assignment, the weights should be assigned with a view to achieving high reliability of solutions.

There have been many attempts to determine attribute weights using subjective judgments of a decision maker, called subjective methods. The weights are elicited based on the experience, knowledge, and perception of the decision maker about the decision problem via different elicitation methods. Representative subjective methods comprise direct rating (Bottomley and Doyle 2001), eigenvector method (Saaty 1977; Takeda et al. 1987), linear programming of preference comparisons (Horsky and Rao 1984), linear programming model (Horowitz and Zappe 1995), and goal programming model based on pairwise comparison ratings (Shirland et al. 2003). However, different attribute weights may be elicited from the same decision maker using different subjective methods. There is no single method that can guarantee a more accurate set of attribute weights than others (Deng et al. 2000; Diakoulaki et al. 1995). Furthermore, solution reliability, as defined in Sect. 3.2, is rarely considered in these subjective methods.

Different from the subjective methods, other methods have been proposed to determine attribute weights using the performance information of alternatives assessed on each attribute rather than subjective judgments of a decision maker. These methods are called objective methods, in which attribute weights reflect the amount of information or discriminating power contained in attributes. Representative objective methods include entropy method (Deng et al. 2000; Xu 2004; Chen and Li 2010, 2011), standard deviation (SD) method (Deng et al. 2000), correlation coefficient and standard deviation integrated (CCSD) method (Wang and Luo 2010), criteria importance through intercriteria correlation (CRITIC) method (Diakoulaki et al. 1995), and deviation maximization method (Wang 1998). These objective methods are particularly useful when reliable subjective judgments about attribute weights cannot be obtained from a decision maker due to various reasons such as lack of experience and partial knowledge about the decision problem under consideration. However, none of those objective methods consider solution reliability as defined in Sect. 3.2.

In this paper, we investigate the determination of attribute weights to guarantee high reliability of solutions to MADA problems with imprecise information on alternative ratings (Wang 2012) due to lack of data and partial knowledge about the problems under consideration. In traditional hard computing, such MADA problems cannot be dealt with. In contrast, the problems can be handled in soft computing, which is a collection of methodologies that can tolerate imprecision, uncertainty, and approximate reasoning (Zadeh 1994a, b). Representative methodologies of soft computing include fuzzy logic, neurocomputing, and probabilistic reasoning (Zadeh 1994a). As a soft computing methodology, the evidential reasoning (ER) approach was proposed based on Dempster–Shafer theory (Dempster 1967; Shafer 1976) and decision theory to model probability uncertainty and uncertainties caused by partial or missing information (Yang 2001; Xu 2012) as described in Sect. 2.1. Other types of uncertainties such as fuzziness, interval performance data, and interval belief degrees can also be handled in the extensions of the ER approach (Wang et al. 2006b; Yang et al. 2006; Guo et al. 2007). The details about the development of the ER approach can be found in Xu (2012). On the basis of the ER approach, an objective method is proposed to determine attribute weights using the solution reliability on each attribute.

It is assumed that data quality is high, time is sufficient, the decision maker has a high level of expertise, and information load is appropriate, which indicates that the decision maker can give reasonable assessments of each alternative on each attribute. On this assumption, the decision process and subjective judgments are considered appropriate, and the reliability of a solution or a ranking order of alternatives depends more on data aggregation methods. To guarantee high reliability of solutions to MADA problems, solution reliability on each attribute is defined, constructed, and then used to determine attribute weights in the proposed method. The constructed solution reliability on one attribute is expressed as an ordered weighted averaging (OWA) of the superior intensity of each alternative on the attribute (a concept defined in Sect. 3.2). The characteristics of solution reliability are analyzed to theoretically determine OWA operator weights based on the minimax disparity method (Wang and Parkan 2005). In order to achieve high reliability of the solution to a MADA problem, an attribute with higher solution reliability is assigned a larger weight than the others. If subjective judgments on attribute weights are available, an optimization model is constructed by incorporating the judgments as its constraints and minimizing the differences between the weights that satisfy the constraints and those that achieve high solution reliability.

The rest of the paper is organized as follows. Section 2 presents the preliminaries related to the proposed method of determining attribute weights. Section 3 introduces the proposed method. In Sect. 4, a problem of selecting leading industries is investigated to demonstrate the applicability and validity of the proposed method. Section 5 compares the proposed method with other objective methods of determining attribute weights. Finally, this paper is concluded in Sect. 6.

2 Preliminaries

2.1 ER distributed modeling framework for MADA problems

This section describes the ER distributed modeling framework, which is the foundation of the proposed method, and defines the uncertainties handled in the framework, illustrating them with examples.

Suppose that a MADA problem has \(M\) alternatives \(a_{l}\, (l = 1, {\ldots }, M)\) and \(L\) attributes \(e_{i}\, (i = 1,{\ldots },L)\). The relative weights of the \(L\) basic attributes are denoted by \(w = (w_{1}, w_{2}, {\ldots }, w_{L})^{T}\) such that \(0 \le w_{i} \le 1\) and \(\sum _{i=1}^L w_i = 1\), where the notation ‘\(T\)’ denotes ‘transpose’. Assume that \(\varOmega = \{H_{1}, H_{2}, {\ldots }, H_{N}\}\) denotes a set of grades which is increasingly ordered from worst to best. The \(M\) alternatives are assessed on the \(L\) attributes using \(H_{n}\, (n = 1, {\ldots }, N)\). Let \(B(e_{i}(a_{l})) = (\beta _{1,i}(a_{l}), {\ldots }, \beta _{N,i}(a_{l}))\) denote a distributed assessment vector representing that the performance of alternative \(a_{l}\) on attribute \(e_{i}\) is assessed to grade \(H_{n}\) with a belief degree of \(\beta _{n,i}(a_{l})\), where \(0 \le \beta _{n,i}(a_{l}) \le 1\), \(\sum _{n=1}^N \beta _{n,i} (a_l ) \le 1\), and \(\sum _{n=1}^N \beta _{n,i} (a_l ) + \beta _{\varOmega ,i}(a_{l}) = 1\). Here, \(\beta _{\varOmega ,i}(a_{l})\) denotes the degree of global ignorance of \(B(e_{i}(a_{l}))\). If \(\beta _{\varOmega ,i}(a_{l}) = 0\), the assessment is said to be complete; otherwise, it is incomplete. In the distributed framework, the uncertainties handled in this paper are described as follows.

Definition 1

Uncertainties in the distributed framework (Yang 2001; Xu 2012) mainly include probability uncertainty, the uncertainty caused by absence of data, and the uncertainty caused by partial or incomplete data.

Let us use examples to illustrate the uncertainties defined in Definition 1. Due to different road and traffic conditions and changes of weather, the fuel consumption of a car in miles per gallon cannot be described by a precise number but by an objective probability distribution, which is a distributed assessment in nature. Subjective judgments of an expert can also be expressed as probability uncertainty. Suppose that the quietness of an engine is assessed using \(\varOmega =\{H_{n}, n = 1, {\ldots }, 6\}=\{Worst, Poor, Average, Good, Excellent, Top\}\). When an expert states that he is 50 % sure the engine is good and 30 % sure it is excellent, his assessment can be expressed as {(\(H_{4}\), 0.5), (\(H_{5}\), 0.3)}, which describes subjective probability uncertainty. The assessment is incomplete, and the remaining belief 0.2 means that the expert is 20 % uncertain about the engine; that is, the expert is not sure to which grade (or grades) the belief degree 0.2 should be assigned, which describes the uncertainty caused by partial knowledge or partially available data. Because the belief degree 0.2 can be assigned not only to one grade but also to multiple grades, this type of uncertainty can be seen as an extension of traditional probability uncertainty. In the extreme case, when there is no knowledge or data available for the expert to give his assessment, he may only be able to give {(\(\varOmega \), 1)}; that is, he is 100 % unsure about the engine. Such a case represents the uncertainty caused by absence of data. More examples of uncertainties modeled by the ER approach can be found in Yang (2001) and Xu (2012).
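The engine example can be written down directly as data. The following is a minimal sketch, assuming a dictionary from grade name to belief degree as the representation of a distributed assessment; the names `GRADES`, `global_ignorance`, and `is_complete` are hypothetical and are not part of the ER approach itself.

```python
from typing import Dict

# Grade set from the engine-quietness example, ordered from worst to best.
GRADES = ["Worst", "Poor", "Average", "Good", "Excellent", "Top"]


def global_ignorance(beliefs: Dict[str, float]) -> float:
    """Return the unassigned belief beta_Omega = 1 - sum of grade beliefs."""
    if any(g not in GRADES for g in beliefs):
        raise ValueError("unknown grade in assessment")
    if any(b < 0.0 or b > 1.0 for b in beliefs.values()):
        raise ValueError("belief degrees must lie in [0, 1]")
    total = sum(beliefs.values())
    if total > 1.0 + 1e-12:
        raise ValueError("belief degrees must not sum to more than 1")
    return max(0.0, 1.0 - total)


def is_complete(beliefs: Dict[str, float], tol: float = 1e-12) -> bool:
    """An assessment is complete when no belief is left unassigned."""
    return global_ignorance(beliefs) <= tol


expert = {"Good": 0.5, "Excellent": 0.3}   # {(H4, 0.5), (H5, 0.3)}
no_data = {}                               # {(Omega, 1)}: absence of data

print(global_ignorance(expert), is_complete(expert))
print(global_ignorance(no_data), is_complete(no_data))
```

The same function covers all three kinds of uncertainty in Definition 1: a complete distribution has zero ignorance, a partial one leaves some belief unassigned, and an empty one leaves all of it unassigned.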

2.2 OWA operators

The OWA operator was proposed by Yager (1988) to linearly aggregate a set of ordered values. It will be used to create solution reliability on an attribute by aggregating the superior intensity of each alternative on the attribute, as presented in Sect. 3.2, and is therefore briefly introduced as follows.

Definition 2

An OWA operator of dimension \(p\) is defined as a mapping \(F\): \(\mathfrak {R}^{p}\rightarrow \mathfrak {R}\). This mapping is associated with a weight vector \(\theta = (\theta _{1}, {\ldots }, \theta _{p})\) such that \(0 \le \theta _{j} \le 1\, (j = 1, {\ldots }, p)\), \(\sum _{j=1}^p \theta _j = 1\), and \(F(x_{1}, {\ldots }, x_{p})=\sum _{j=1}^p \theta _j y_j \), where \(y_{j}\) is the \(j\)th largest element of \(x_{1}, {\ldots }, x_{p}\).
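Definition 2 translates almost line by line into code. The sketch below is illustrative only; the function name `owa` and the sample values are assumptions made for the example.

```python
from typing import Sequence


def owa(values: Sequence[float], weights: Sequence[float]) -> float:
    """Ordered weighted average: weights apply to values sorted in descending order."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    if any(w < 0.0 or w > 1.0 for w in weights):
        raise ValueError("each weight must lie in [0, 1]")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    ordered = sorted(values, reverse=True)  # y_1 >= y_2 >= ... >= y_p
    return sum(w * y for w, y in zip(weights, ordered))


x = [0.2, 0.9, 0.5]
print(owa(x, [1.0, 0.0, 0.0]))        # weight only on the largest value
print(owa(x, [0.0, 0.0, 1.0]))        # weight only on the smallest value
print(owa(x, [1 / 3, 1 / 3, 1 / 3]))  # plain arithmetic mean
```

Note that the weights are attached to positions in the sorted list, not to particular arguments, which is what distinguishes an OWA operator from an ordinary weighted average.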

OWA operators provide a framework to uniformly consider different decision criteria under uncertainty such as maximax (optimistic), maximin (pessimistic), equally likely (Laplace), and Hurwicz criteria (Ahn and Choi 2012; Wang and Chin 2011). Note that the uncertainty in OWA operators concerns the weights of the values to be aggregated rather than the values themselves; that is, it differs from the uncertainties described by the distributed assessments in Sect. 2.1. In the framework, the choice of \(\theta \) depends on the orness degree (Yager 1988), also called the attitudinal character, i.e.,

\[\text {orness}(\theta ) = \frac{1}{p-1}\sum _{j=1}^p (p-j)\theta _j .\]

The orness degree is limited to [0, 1] and used to measure the optimism level of a decision maker. The conditions of orness\((\theta )>0.5\), orness\((\theta )<0.5\), and orness\((\theta )=0.5\) mean that the decision maker is optimistic, pessimistic, and neutral, respectively, which reflects the uncertainty in decision making. Determination of \(\theta \) is the prerequisite of applying OWA operators in decision making. In the following, we present two representative models for determining \(\theta \) given the orness degree.
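As a quick numerical check of the three attitudes, the sketch below evaluates Yager's (1988) orness measure, \(\text {orness}(\theta ) = \frac{1}{p-1}\sum _{j=1}^p (p-j)\theta _j\), for a few weight vectors. The function name and the sample vectors are illustrative assumptions.

```python
from typing import Sequence


def orness(weights: Sequence[float]) -> float:
    """Yager's orness degree of an OWA weight vector (needs p >= 2)."""
    p = len(weights)
    if p < 2:
        raise ValueError("orness is defined for at least two weights")
    # Position j (1-based) contributes (p - j) * theta_j.
    return sum((p - j) * w for j, w in enumerate(weights, start=1)) / (p - 1)


print(orness([1.0, 0.0, 0.0]))        # purely optimistic
print(orness([0.0, 0.0, 1.0]))        # purely pessimistic
print(orness([1 / 3, 1 / 3, 1 / 3]))  # neutral
print(orness([0.5, 0.3, 0.2]))        # mildly optimistic
```

Weight vectors that put more mass on the top-ranked positions yield an orness degree above 0.5, which is the regime used later for solution reliability.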

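Model 1:

\[\begin{aligned} \text {Maximize}\quad & -\sum _{j=1}^p \theta _j \ln \theta _j \\ \text {subject to}\quad & \frac{1}{p-1}\sum _{j=1}^p (p-j)\theta _j = \alpha ,\quad 0 \le \alpha \le 1, \\ & \sum _{j=1}^p \theta _j = 1,\quad 0 \le \theta _j \le 1\ (j = 1, {\ldots }, p). \end{aligned}\]

Model 2:

\[\begin{aligned} \text {Minimize}\quad & \max _{j \in \{1, {\ldots }, p-1\}} \left| \theta _j - \theta _{j+1} \right| \\ \text {subject to}\quad & \frac{1}{p-1}\sum _{j=1}^p (p-j)\theta _j = \alpha ,\quad 0 \le \alpha \le 1, \\ & \sum _{j=1}^p \theta _j = 1,\quad 0 \le \theta _j \le 1\ (j = 1, {\ldots }, p). \end{aligned}\]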

Model 1, referred to as the maximum entropy method, was suggested by O’Hagan (1988) to maximize the entropy of the weight distribution, while Model 2, called the minimax disparity method, was proposed by Wang and Parkan (2005) to minimize the maximum disparity between any two adjacent weights. The OWA operator weights resulting from the two models have the following characteristics (Wang and Chin 2011):

(a) The weights are in descending or ascending order. That is, \(\theta _{1} \ge \theta _{2} \ge {\cdots } \ge \theta _{p} \ge 0\) if orness degree \(\alpha > 0.5\) and \(0 \le \theta _{1} \le \theta _{2} \le {\cdots } \le \theta _{p}\) if \(\alpha \le 0.5\).

(b) The weights depend on the rank-order of \(y_{1}, {\ldots }, y_{p}\) and the optimism level of the decision maker (orness degree).

(c) The weights satisfy \(\theta _{1} = 1\) and \(\theta _{j}\, (j \ne 1) = 0\) if \(\alpha = 1\), which means that the decision maker is purely optimistic and considers only the largest value \(y_{1}\) in decision analysis.

(d) The weights satisfy \(\theta _{p} = 1\) and \(\theta _{j}\, (j \ne p) = 0\) if \(\alpha = 0\), which means that the decision maker is purely pessimistic and considers only the smallest value \(y_{p}\) in decision analysis.

(e) The weights satisfy \(\theta _{1} = \theta _{2} = {\cdots } = \theta _{p} = 1 / p\) if \(\alpha = 0.5\), which means that the decision maker is neutral and treats \(y_{1}, {\ldots }, y_{p}\) equally in decision analysis.

(f) The weights resulting from Model 1 form a geometric progression, namely, \(\theta _{j+1} /\theta _j \equiv q\) for \(j = 1, {\ldots }, p-1\), where \(q > 0\). However, the weights resulting from Model 2 form an arithmetic progression, namely, \(\theta _{j+1} - \theta _{j}=d\) or \(\theta _{j} - \theta _{j+1}=d\) for \(j = 1, {\ldots }, p-1\), where \(d > 0\).

3 Determination of attribute weights by solution reliability

In this section, a new method of determining attribute weights to improve solution reliability is proposed based on OWA operators.

3.1 Construction of minimal satisfaction of alternatives

The minimal satisfaction of alternative \(a_{l}\, (l = 1,{\ldots },M)\) is constructed as follows.

Suppose that the assessments \(B(e_{i}(a_{l}))\, (i = 1, {\ldots }, L, l = 1, {\ldots }, M)\) weighted by \(w\) are combined using the analytical ER algorithm (Wang et al. 2006a) to generate the aggregated assessment \(B(a_{l}) = (\beta _{1}(a_{l}), {\ldots }, \beta _{N}(a_{l}))\) (\(l = 1, {\ldots }, M\)) such that \(\sum _{n=1}^N \beta _n (a_l ) +\beta _{\varOmega }(a_{l})=1\). Here, a belief degree of \(\beta _{n}(a_{l})\) is assigned to grade \(H_{n}\) and the uncertainty of \(B(a_{l})\) is denoted by \(\beta _{\varOmega }(a_{l})\). This combination process relies on the assumption of attribute independence, similar to the combination of utility functions on attributes under the assumption of utility independence in multi-attribute utility theory (MAUT) (Dyer and Jia 1998), which provides a popular framework for analyzing real MADA problems (Kainuma and Tawara 2006; Ananda and Herath 2005; Butler et al. 2001; Brito and Almeida 2012). \(B(a_{l})\) is then combined with the utilities of assessment grades \(u(H_{n})\, (n = 1, {\ldots }, N)\) such that \(0 = u(H_{1}) < u(H_{2}) < {\cdots } < u(H_{N}) = 1\) to form the minimum and maximum expected utilities of alternative \(a_{l}\, (l = 1, {\ldots }, M)\), i.e.,

\[u_{\min }(a_{l}) = \sum _{n=2}^N \beta _n (a_l ) u(H_n ) + \left( \beta _1 (a_l ) + \beta _{\varOmega }(a_{l})\right) u(H_1 )\]

and

\[u_{\max }(a_{l}) = \sum _{n=1}^{N-1} \beta _n (a_l ) u(H_n ) + \left( \beta _N (a_l ) + \beta _{\varOmega }(a_{l})\right) u(H_N ).\]

The indifference-based and choice-based methods (Daniels and Keller 1992), and the maximum entropy method based on an analogy between probability and utility (Abbas 2006) can be used to estimate utilities of assessment grades. In the indifference-based method, certainty and probability equivalents are usually employed to determine utilities of assessment grades (Hershey and Schoemaker 1985). The determination of utilities of assessment grades is similar to the determination of utility function on an attribute in MAUT (Keeney and Raiffa 1993).
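To make the expected-utility bounds concrete, the sketch below assumes the usual ER convention that the unassigned belief \(\beta _{\varOmega }(a_{l})\) is allotted entirely to the worst grade \(H_{1}\) for the minimum and entirely to the best grade \(H_{N}\) for the maximum. The function name and the equally spaced grade utilities are hypothetical choices for illustration; in practice the utilities would be elicited with one of the methods cited above.

```python
from typing import Sequence, Tuple


def expected_utility_bounds(
    beliefs: Sequence[float], utilities: Sequence[float]
) -> Tuple[float, float]:
    """Return (u_min, u_max) for belief degrees beta_1..beta_N on grades H_1..H_N."""
    if len(beliefs) != len(utilities):
        raise ValueError("one belief degree per grade is required")
    if any(a >= b for a, b in zip(utilities, utilities[1:])):
        raise ValueError("grade utilities must be strictly increasing")
    ignorance = 1.0 - sum(beliefs)          # beta_Omega
    if ignorance < -1e-12:
        raise ValueError("belief degrees must not sum to more than 1")
    assigned = sum(b * u for b, u in zip(beliefs, utilities))
    u_min = assigned + ignorance * utilities[0]    # ignorance goes to H_1
    u_max = assigned + ignorance * utilities[-1]   # ignorance goes to H_N
    return u_min, u_max


# Assumed equally spaced utilities for six grades, u(H_1) = 0 and u(H_6) = 1.
u = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
# Belief 0.5 on the fourth grade, 0.3 on the fifth, 0.2 unassigned.
beta = [0.0, 0.0, 0.0, 0.5, 0.3, 0.0]
print(expected_utility_bounds(beta, u))
```

For a complete assessment the two bounds coincide, so the width of the interval is exactly the unassigned belief scaled by \(u(H_{N}) - u(H_{1})\).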

To facilitate the comparison of alternatives, we define the minimal satisfaction of alternative \(a_{l}\) as

which is limited to [\(-\)1, 1] as \(0 \le u_{\min }(a_{l}) \le 1\) and \(0 \le u_{\max }(a_{l}) \le 1\, (l = 1, {\ldots }, M)\). Minimal satisfaction measures the gain from selecting alternative \(a_{l}\) in the worst-case scenario, in which the performances of the alternatives are partly unknown. Alternatives with larger minimal satisfaction are preferred. In other words, if \(V(a_{l}) > V(a_{m})\), alternative \(a_{l}\) is better than alternative \(a_{m}\). \(V(a_{l})\) is derived from the minimum and maximum expected utilities of alternative \(a_{l}\), while MAUT is consistent with expected utility theory on the assumption of utility independence (Keeney and Raiffa 1993). Thus, there is an inner similarity between \(V(a_{l})\) and MAUT.

Definition 3

The minimal satisfaction of alternative \(a_{l}\, (l = 1, {\ldots }, M)\) on attribute \(e_{i}\, (i = 1, {\ldots }, L)\) is similarly defined as

where

Clearly, \(V(e_{i}(a_{l}))\) is also limited to [\(-\)1, 1]. More importantly, \(V(e_{i}(a_{l}))\) is defined using the minimum and maximum expected utilities of alternative \(a_{l}\) on attribute \(e_{i}\) and thus there is also an inner similarity between \(V(e_{i}(a_{l}))\) and MAUT. On the other hand, if \(B(e_{i}(a_{l})) = (\beta _{1,i}(a_{l}), {\ldots }, \beta _{N,i}(a_{l}))\) (\(i = 1, {\ldots }, L\)) and \(B(a_{l}) = (\beta _{1}(a_{l}), {\ldots }, \beta _{N}(a_{l}))\) are considered the coefficients of \(u(H_{n})\) (\(n = 1, {\ldots }, N\)) in \(V(e_{i}(a_{l}))\) (\(i = 1, {\ldots }, L\)) and \(V(a_{l})\), respectively, the analytical ER algorithm is then used to combine \(V(e_{i}(a_{l}))\) (\(i = 1, {\ldots }, L\)) to form \(V(a_{l})\). From this perspective, the formation principle of \(V(a_{l})\) is also very similar to that of multi-attribute utility function in MAUT.

3.2 Measurement of solution reliability

To achieve high reliability of the solution to a MADA problem, the attributes on which alternatives perform significantly differently should be assigned larger weights. In other words, assessments on those attributes should contribute more to the aggregated assessment than assessments on other attributes. To formalize the idea, the following concepts are first defined.

Definition 4

Suppose that \(V(e_{i}(b_{l}))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M\)) represents the ordered minimal satisfaction of alternatives on attribute \(e_{i}\) such that \(V(e_{i}(b_{1})) \ge {\cdots } \ge V(e_{i}(b_{M}))\). That is, \(V(e_{i}(b_{l}))\) is the \(l\)th largest of \(V(e_{i}(a_{1}))\), ..., \(V(e_{i}(a_{M}))\). Then, superior intensity of alternative \(b_{l}\) (\(l = 1, {\ldots }, M-1)\) on attribute \(e_{i}\) and solution reliability on attribute \(e_{i}\) (\(i = 1, {\ldots }, L\)) are defined respectively as

and

\[Q(e_{i}) = \sum _{l=1}^{M-1} \theta _l \, \Delta V(e_i (b_l )), \tag{19}\]

where \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) represents the weight of \(\Delta V(e_i (b_l ))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M-1)\) for \(Q(e_{i})\) such that \(0 \le \theta _{l} \le 1\) (\(l = 1, {\ldots }, M-1)\) and \(\sum _{l=1}^{M-1} {\theta _l } =1\).

As \(V(e_{i}(b_{M}))\) is the smallest, i.e., it has no superiority over others, its superior intensity \(\Delta V(e_i (b_M ))\) is assumed to be 0 and not included in Eq. (19). Because there is an inner similarity between \(V(e_{i}(b_{l}))\) and MAUT, \(Q(e_{i})\) is closely related to MAUT.

To calculate \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)), \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) in Eq. (19) is assumed to be independent of attribute \(e_{i}\). That is, the same set of \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) is applied to the calculation of every \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)). Otherwise, the attribute weights calculated in Eq. (27) may be affected unfairly. On the assumption that data quality is high, time is sufficient, the decision maker has a high level of expertise, and information load is appropriate, solution reliability on attribute \( e_{i}\) (\(i = 1, {\ldots }, L\)) in Definition 4 is defined from the perspective of outcomes rather than the decision process. Because \(\Delta V(e_i (b_l ))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M-1)\) contributes to \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)), its property is described as follows.

Property 1

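Given \(\Delta V(e_i (b_l ))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M-1)\) in Eq. (18), it is satisfied that

\[\Delta V(e_i (b_1 )) \ge {\cdots } \ge \Delta V(e_i (b_{M-1} ))\quad (i = 1, {\ldots }, L). \tag{20}\]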

Proof of Property 1 is presented in Appendix.

A large \(Q(e_{i})\) means high reliability of the solution on attribute \(e_{i}\). In contrast, a small \(Q(e_{i})\) means that the minimal satisfactions of the alternatives on attribute \(e_{i}\) are close to one another, so solution reliability on attribute \(e_{i}\) is low. The property \(\Delta V(e_i (b_1 )) \ge {\cdots } \ge \Delta V(e_i (b_{M-1} ))\) (\(i = 1, {\ldots }, L\)) in Eq. (20) means that \(\Delta V(e_i (b_l ))\) (\(l = 1, {\ldots }, M-1)\) can be combined using an OWA operator to form \(Q(e_{i})\). Thus, the determination of \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) is transformed into the determination of OWA operator weights for calculating \(Q(e_{i})\).
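The following sketch shows how \(Q(e_{i})\) and attribute weights proportional to it might be computed once the superior intensities are known. It takes the already ordered superior intensities as input rather than deriving them, and the normalization \(w_{i} = Q(e_{i}) / \sum _{k} Q(e_{k})\) is one assumed way of making the weights proportional to solution reliability, as the abstract describes. All function names and numbers are hypothetical.

```python
from typing import List, Sequence


def solution_reliability(delta_v: Sequence[float], theta: Sequence[float]) -> float:
    """Q(e_i): weighted sum of ordered superior intensities on one attribute."""
    if len(delta_v) != len(theta):
        raise ValueError("need one weight per superior intensity")
    if any(a < b for a, b in zip(delta_v, delta_v[1:])):
        raise ValueError("superior intensities must be in descending order")
    if abs(sum(theta) - 1.0) > 1e-9:
        raise ValueError("theta must sum to 1")
    return sum(t * dv for t, dv in zip(theta, delta_v))


def attribute_weights(
    delta_v_by_attribute: Sequence[Sequence[float]], theta: Sequence[float]
) -> List[float]:
    """Weights proportional to Q(e_i); the same theta is used for every attribute."""
    q = [solution_reliability(dv, theta) for dv in delta_v_by_attribute]
    total = sum(q)
    if total <= 0.0:
        raise ValueError("at least one attribute must discriminate the alternatives")
    return [x / total for x in q]


# Made-up superior intensities for M = 4 alternatives on L = 2 attributes.
delta_v = [[0.6, 0.3, 0.1],    # attribute e_1 separates the alternatives well
           [0.2, 0.1, 0.05]]   # attribute e_2 separates them poorly
theta = [0.5, 0.3, 0.2]        # decreasing OWA weights, shared by both attributes
print(attribute_weights(delta_v, theta))
```

In this example the attribute with the more widely spread alternatives receives roughly three quarters of the total weight.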

3.3 Determination of \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) for calculating solution reliability on attributes

The definition of the OWA operator in Sect. 2.2 shows that \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)) can be seen as an OWA operator that combines \(\Delta V(e_i (b_l ))\) (\(l = 1, {\ldots }, M-1)\), because \(\Delta V(e_i (b_1 )) \ge {\cdots } \ge \Delta V(e_i (b_{M-1} ))\) (\(i = 1, {\ldots }, L\)) holds by Property 1. Both the maximum entropy method (O’Hagan 1988) and the minimax disparity method (Wang and Parkan 2005) can be used to generate \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\). As demonstrated in Wang and Chin (2011), OWA operator weights resulting from the minimax disparity method form an arithmetic progression and are easier to compute than those resulting from the maximum entropy method, which form a geometric progression. Therefore, the minimax disparity method is used in this paper to determine \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) for calculating \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)).

Before determining \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\), we analyze the characteristics of \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)). Suppose that alternatives \(b_{l}\) (\(l = 1, {\ldots }, M\)) are ordered, i.e., \(V(e_{i}(b_{1})) \ge {\cdots } \ge V(e_{i}(b_{M}))\). When the ordered alternatives \(b_{l}\) (\(l = 1, {\ldots }, M\)) are considered on attribute \(e_{i}\) (\(i = 1, {\ldots }, L\)), \(b_{1}\) is the best choice for the MADA problem. Alternative \(b_{2}\) becomes the best choice when alternative \(b_{1}\) is unavailable, and so on. Therefore, \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) in Eq. (19) should be decreasing to reflect the decreasing contribution of \(\Delta V(e_i (b_l ))\) (\(l = 1, {\ldots }, M-1)\) to \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)), i.e., \(\theta _{1} > {\cdots } > \theta _{M-1}\). Further, to make sure that each \(\Delta V(e_i (b_l ))\) (\(l = 1, {\ldots }, M-1)\) contributes to \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)), no \(\theta _{l }\) should be zero, i.e., \(\theta _{1} > {\cdots } > \theta _{M-1} > 0\). This requirement corresponds to the situation of orness degree \(\alpha > 0.5\) with respect to the OWA operator. In the minimax disparity method, the difference between \(\theta _{l}\) and \(\theta _{l+1}\) (\(l = 1, {\ldots }, M-2)\) is assumed to be the same under the constraint \(\theta _{1} > {\cdots } > \theta _{M-1} > 0\) (Wang and Chin 2011). This approach does not emphasize the different contributions of \(\Delta V(e_i (b_l ))\) (\(l = 1, {\ldots }, M-1)\) to \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)). If more weight is given to \(\Delta V(e_i (b_l ))\) than to \(\Delta V(e_i (b_{l+1} ))\) (\(l = 1, {\ldots }, M-2)\), the solution reliability will be increased. Therefore, we propose that \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) should satisfy the following assumption.

Assumption 1

Suppose that \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) represents the weight of \(\Delta V(e_i (b_l ))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M-1)\) for \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)) in Eq. (19). To emphasize the decreasing contribution of \(\Delta V(e_i (b_l ))\) (\(l = 1, {\ldots }, M-1)\) to \(Q(e_{i})\), it is then needed that

Based on Assumption 1, two situations will be discussed to determine \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\): (1) the largest weight \(\theta _{1}\) is given; and (2) orness degree \(\alpha \) is provided. The first situation is handled in the following theorem.

Theorem 1

It is assumed that \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) represents the weight of \(\Delta V(e_i (b_l ))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M-1)\) for \(Q(e_{i})\) in Eq. (19) and \(\Delta d\) is defined in Eq. (25). Given the largest weight \(\theta _{1}\), \(\theta _{l}\) (\(l = 2, {\ldots }, M-1)\) is determined by \(\theta _1 -(l-1)d_1 +\frac{(l-2)\cdot (l-1)}{2}\Delta d\) using the minimax disparity method based on Assumption 1, i.e., minimizing the maximum disparity between \(\theta _{l}\) and \(\theta _{l+1}\) (\(l = 1, {\ldots }, M-2\)), where \(d_{1}=\frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}+\varepsilon \), \(\Delta d=\frac{6(M-1)\theta _1 -12}{(M-3)(M-2)(M-1)}+\frac{3\varepsilon }{M-3}\), and \(\varepsilon \) is a small positive number close to zero.

Proof of Theorem 1 is presented in Appendix.
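The closed form in Theorem 1 can be evaluated directly. The sketch below is an illustration rather than the authors' implementation: the function name and the sample inputs (\(M = 5\), \(\theta _{1} = 3/5\), \(\varepsilon = 1/1000\)) are assumptions, and exact rational arithmetic is used only so that the sum of the weights can be checked without rounding error. The closed form implies that the gap \(\theta _{l} - \theta _{l+1}\) equals \(d_{1} - (l-1)\Delta d\), so the gaps shrink by \(\Delta d\) at each step.

```python
from fractions import Fraction


def weights_from_theta1(M, theta1, eps):
    """Weights theta_1..theta_{M-1} from the closed form stated in Theorem 1."""
    if M <= 3:
        raise ValueError("the closed form needs M > 3")
    theta1, eps = Fraction(theta1), Fraction(eps)
    d1 = (4 * (M - 1) * theta1 - 6) / ((M - 2) * (M - 1)) + eps
    delta_d = ((6 * (M - 1) * theta1 - 12) / ((M - 3) * (M - 2) * (M - 1))
               + 3 * eps / (M - 3))
    # theta_l = theta_1 - (l-1) d_1 + (l-2)(l-1)/2 * delta_d, for l = 1..M-1
    return [theta1 - (l - 1) * d1 + Fraction((l - 2) * (l - 1), 2) * delta_d
            for l in range(1, M)]


theta = weights_from_theta1(5, Fraction(3, 5), Fraction(1, 1000))
gaps = [a - b for a, b in zip(theta, theta[1:])]

print([float(t) for t in theta])   # the weights
print([float(g) for g in gaps])    # successive gaps, shrinking by delta_d
print(sum(theta))                  # exact sum of the weights
```

For these inputs the weights are strictly decreasing and positive, and the smallest weight is of the order of \(\varepsilon \), which is why \(\varepsilon \) controls how much the last superior intensity contributes.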

The parameter \(\varepsilon \) in Theorem 1 is used to minimize the maximum disparity between \(\theta _{l}\) and \(\theta _{l+1}\) (\(l = 1, {\ldots }, M-2\)), that is, to minimize \(d_{1}\). From the proof of Theorem 1, it can be inferred that \(d_{1} > \frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}\). Thus, \(\varepsilon > 0\) is needed to minimize \(d_{1}\). In general, \(\varepsilon \) should be significantly smaller than \(\frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}\), for example \(\varepsilon < \frac{4(M-1)\theta _1 -6}{10(M-2)(M-1)}\) or \(\varepsilon < \frac{4(M-1)\theta _1 -6}{100(M-2)(M-1)}\). The proof of Theorem 1 shows that \(\theta _{M-1}=\frac{6-4(M-1)\theta _1 +(M-2)(M-1)d_1 }{2(M-1)}\). Substituting \(d_{1}=\frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}+\varepsilon \) gives \(\theta _{M-1}=\frac{(M-2)\varepsilon }{2}\), so \(\theta _{M-1}\) increases with \(d_{1}\) and hence with \(\varepsilon \). Compared with the situation of \(\varepsilon = \frac{4(M-1)\theta _1 -6}{110(M-2)(M-1)}\), the situation of \(\varepsilon = \frac{4(M-1)\theta _1 -6}{15(M-2)(M-1)}\) means that the superior intensity of \(b_{M-1}\), \(\Delta V(e_i (b_{M-1} ))\), contributes more to \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)) (see Eqs. (18) and (19)). To guarantee that Theorem 1 is meaningful, the allowable range of \(\theta _{1}\) is determined by the following theorem.

Theorem 2

On the assumption that \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) represents the weight of \(\Delta V(e_i (b_l ))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M-1)\) for \(Q(e_{i})\) in Eq. (19), the determination of \(\theta _{l}\) (\(l = 2, {\ldots }, M-1)\) in Theorem 1 based on Assumption 1 requires that \(\frac{4-(M-2)(M-1)\varepsilon }{2(M-1)} < \theta _{1} < \frac{3-(M-2)(M-1)\varepsilon }{M-1}\) where \(\varepsilon \) is a small positive number close to zero.

Proof of Theorem 2 is presented in Appendix.
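The bounds in Theorem 2 are easy to tabulate. The sketch below, with a hypothetical function name and sample values, returns the open interval for \(\theta _{1}\) and shows how the interval behaves as \(\varepsilon \) grows. From the two bounds, the interval has width \(\frac{2-(M-2)(M-1)\varepsilon }{2(M-1)}\), so it is empty once \(\varepsilon \ge \frac{2}{(M-2)(M-1)}\).

```python
from fractions import Fraction


def theta1_range(M, eps):
    """Open interval (lower, upper) allowed for theta_1 by Theorem 2."""
    if M <= 3:
        raise ValueError("Theorem 2 is stated for M > 3")
    eps = Fraction(eps)
    k = (M - 2) * (M - 1) * eps
    lower = (4 - k) / (2 * (M - 1))
    upper = (3 - k) / (M - 1)
    return lower, upper


for eps in (Fraction(1, 1000), Fraction(1, 10), Fraction(1, 4)):
    lower, upper = theta1_range(5, eps)
    status = "non-empty" if lower < upper else "empty"
    print(float(eps), float(lower), float(upper), status)
```

With five alternatives and a small \(\varepsilon \), the largest weight can be chosen roughly between one half and three quarters; a large \(\varepsilon \) leaves no admissible value at all.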

When a value close to \(\frac{3-(M-2)(M-1)\varepsilon }{M-1}\) is given to \(\theta _{1}\), \(\Delta V(e_i (b_1 ))\) contributes more to \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)). In contrast, if \(\theta _{1}\) is close to \(\frac{4-(M-2)(M-1)\varepsilon }{2(M-1)}\), \(\Delta V(e_i (b_1 ))\) contributes less to \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)). It can be deduced from Theorem 2 that a large \(\varepsilon \) may lead to a very narrow or even empty allowable range of \(\theta _{1}\). This further explains why \(\varepsilon \) should be significantly smaller than \(\frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}\). When orness degree \(\alpha \) is provided, \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) is determined by the following theorem.

Theorem 3

Let \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) denote the weight of \(\Delta V(e_i (b_l ))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M-1)\) for \(Q(e_{i})\) in Eq. (19). Given orness degree \(\alpha \) such that \(0.5 < \alpha < 1\), \(\theta _{1}\) and \(\theta _{l}\) (\(l = 2, {\ldots }, M-1)\) can be determined by \(\frac{(24\alpha -12)(M-2)+(M-2)(M-1)M\varepsilon }{2(M-1)M}\) and \(\theta _1 -(l-1)d_1 +\frac{(l-2)\cdot (l-1)}{2}\Delta d\) (\(l = 2, {\ldots }, M-1)\), respectively, where \(d_{1}=\frac{2(24\alpha -12)(M-2)-6M}{(M-2)(M-1)M}+3\varepsilon \), \(\Delta d=\frac{3(24\alpha -12)(M-2)-12M}{(M-3)(M-2)(M-1)M}+\frac{6\varepsilon }{M-3}\), and \(\varepsilon \) is a small positive number close to zero.

Proof of Theorem 3 is presented in Appendix.
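The closed forms stated in Theorem 3 can be evaluated directly. The following Python sketch is illustrative only: the function names `owa_weights_from_orness` and `orness` are hypothetical, and the orness check assumes that Eq. (1) is the usual orness measure of an OWA operator, applied here to the \(M-1\) weights.

```python
def owa_weights_from_orness(alpha, M, eps=1e-4):
    """Return [theta_1, ..., theta_{M-1}] from the closed forms in Theorem 3."""
    if M <= 3:
        raise ValueError("Theorem 3 is stated for M > 3")
    k = (24 * alpha - 12) * (M - 2)
    theta1 = (k + (M - 2) * (M - 1) * M * eps) / (2 * (M - 1) * M)
    d1 = (2 * k - 6 * M) / ((M - 2) * (M - 1) * M) + 3 * eps
    delta_d = (3 * k - 12 * M) / ((M - 3) * (M - 2) * (M - 1) * M) + 6 * eps / (M - 3)
    # theta_l = theta_1 - (l - 1) d_1 + (l - 2)(l - 1)/2 * Delta d; l = 1 gives theta_1
    return [theta1 - (l - 1) * d1 + (l - 2) * (l - 1) / 2 * delta_d
            for l in range(1, M)]


def orness(theta):
    """Assumed form of Eq. (1): sum_l (n - l) * theta_l / (n - 1), n = len(theta)."""
    n = len(theta)
    return sum((n - l) * t for l, t in enumerate(theta, start=1)) / (n - 1)


theta = owa_weights_from_orness(alpha=0.75, M=12, eps=1e-4)
print([round(t, 4) for t in theta])
print(round(sum(theta), 6), round(orness(theta), 6))
```

For \(M = 12\), \(\alpha = 0.75\), and \(\varepsilon = 0.0001\), the rounded weights agree with those reported for Situation (2) in Sect. 4.2; they sum to one and their orness equals \(\alpha \).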

The selection of \(\varepsilon \) in Theorem 3 is the same as that in Theorem 1. To guarantee that Theorem 3 is meaningful, the allowable ranges of the orness degree \(\alpha \) are determined by the following theorem.

Theorem 4

On the assumption that \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) represents the weight of \(\Delta V(e_i (b_l ))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M-1)\) for \(Q(e_{i})\) in Eq. (19), the determination of \(\theta _{l}\) (\(l = 2, {\ldots }, M-1)\) in Theorem 3 based on Assumption 1 requires that \(\frac{8M-12-(M-2)(M-1)M\varepsilon }{12M-24} <\) \(\alpha \) \(< \frac{6M-8-3(M-2)(M-1)M\varepsilon }{8M-16}\) where \(\alpha \) is the orness degree for \(Q(e_{i})\) and \(\varepsilon \) is a small positive number close to zero.

Proof of Theorem 4 is presented in Appendix.

Theorem 3 shows that \(\theta _{1}\) increases along with the increase of orness degree \(\alpha \). Thus, when a value close to \(\frac{6M-8-3(M-2)(M-1)M\varepsilon }{8M-16}\) is given to orness degree \(\alpha \), \(\Delta V(e_i (b_1 ))\) contributes more to \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)). In contrast, if orness degree \(\alpha \) is close to \(\frac{8M-12-(M-2)(M-1)M\varepsilon }{12M-24}\), \(\Delta V(e_i (b_1 ))\) contributes less to \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)). Similar to Theorem 2, Theorem 4 further explains why \(\varepsilon \) should be significantly smaller than the value of \(\frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}\).
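The effect of \(\varepsilon \) on the allowable ranges in Theorems 2 and 4 can be checked numerically. The sketch below only evaluates the bounds as stated in the two theorems; the function names `theta1_range` and `orness_range` are hypothetical.

```python
def theta1_range(M, eps):
    """Open interval (lower, upper) for theta_1 as stated in Theorem 2."""
    s = (M - 2) * (M - 1) * eps
    return (4 - s) / (2 * (M - 1)), (3 - s) / (M - 1)


def orness_range(M, eps):
    """Open interval (lower, upper) for alpha as stated in Theorem 4."""
    s = (M - 2) * (M - 1) * M * eps
    return (8 * M - 12 - s) / (12 * M - 24), (6 * M - 8 - 3 * s) / (8 * M - 16)


M = 12
for eps in (0.0, 1e-4, 1e-3, 1e-2):
    t_lo, t_hi = theta1_range(M, eps)
    a_lo, a_hi = orness_range(M, eps)
    # A non-positive width means that no admissible value exists for this eps.
    print(f"eps={eps:g}: theta_1 width={t_hi - t_lo:.4f}, alpha width={a_hi - a_lo:.4f}")
```

Both widths shrink linearly as \(\varepsilon \) grows, which is why \(\varepsilon \) has to be kept close to zero.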

Conclusions in Theorems 1–4 are clearly related to the number of alternatives, i.e., \(M\). When \(M > 3\), the conclusions are meaningful. In the following, we analyze the situation where \(1 \le M \le 3\) with respect to Theorems 1–4:

(1) when \(M = 1\), \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)) of Eq. (19) is unnecessary.

(2) when \(M = 2\), \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)) is equal to \(\theta _1 \cdot \Delta V(e_i (b_1 ))=\theta _1 \cdot (V(e_i (b_1 ))-V(e_i (b_2 )))\) such that \(\theta _{1} = 1\). In this situation, Theorems 1–4 are unnecessary.

(3) when \(M = 3\), \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)) is equal to \(\sum _{l=1}^2 \theta _l \cdot \sum _{m=l+1}^3 (V(e_i (b_l ))-V(e_i (b_m )))/2 \) such that \(\theta _{1} + \theta _{2} = 1\). If \(\theta _{1}\) is given, \(\theta _{2}\) is clearly known. When \(\alpha \) is provided, \(\theta _{1} = \alpha \) is obtained according to Eq. (1), which generates \(\theta _{2} = 1 - \alpha \). Theorems 1–4 are similarly unnecessary.

3.4 Determination of attribute weights

On condition that Assumption 1 is satisfied, \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)) can be determined using Theorems 1–4 when a decision maker provides the largest weight \(\theta _{1}\) or the orness degree \(\alpha \). Following the principle of high reliability of the solution to a MADA problem, we use \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)) to determine attribute weights.

On attribute \(e_{i}\) (\(i = 1, {\ldots }, L\)), as explained in Sect. 3.2, large \(Q(e_{i})\) means high reliability of solution. In other words, attribute \(e_i^*\) with larger \(Q(e_i^*)\) contributes more to solution reliability for the MADA problem than others from Eq. (19), and vice versa. In particular, if the performances of alternatives on attribute \(e_i^o \) are the same, \(Q(e_i^o )\) is equal to 0 and the decision maker can make a decision to the MADA problem without considering the attribute, which is similar to the conclusion drawn by Deng et al. (2000). Thus, attribute weights can be determined by letting each weight be proportional to the solution reliability on the corresponding attribute, i.e., \(w_i =Q(e_i )/\sum _{j=1}^L {Q(e_j )} \) (\(i = 1, {\ldots }, L\)). (27)

The determination of attribute weights in Eq. (27) is closely related to MAUT because of the tight relationship between \(Q(e_{i})\) and MAUT, as presented in Sects. 3.1 and 3.2. Overall, the proposed method is intrinsically related to MAUT.

When a decision maker can provide subjective preferences about attribute weights, we assume that the preferences are expressed as linear inequality constraints, following Wang (2012). Representative constraints include bounded constraints of weights (e.g., \(LB_{i} \le w_{i} \le UB_{i}\) (\(i \in \{1, {\ldots }, L\}\))), bounded preference ratio of weights (e.g., \(LB_{i} \le w_{i}/w_{j} \le UB_{i}\) (\(i, j \in \{1, {\ldots }, L\}\))), and bounded preference difference of weights (e.g., \(LB_{i} \le w_{i} - w_{j} \le UB_{i}\) (\(i, j \in \{1, {\ldots }, L\}\))). Under such conditions, Eq. (27) can be extended to the following optimization model to determine attribute weights:

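Here, \(C(w) = \{w {\vert } A\cdot w \le c\}\) denotes the subjective preferences of the decision maker about \(w\), i.e., the set of all feasible weight vectors, where \(A\) is an \(R \times L\) matrix of coefficients, \(c\) is a column vector with \(R\) elements, and \(R\) is the number of constraints. Let us use an example to illustrate \(C(w)\). Suppose that for five attributes the decision maker gives \(C(w) = \{0.2 \le w_{1} \le 0.3, 0.4 \le w_{2}/w_{3} \le 0.6, 0.2 \le w_{4}-w_{5} \le 0.4\}\), i.e., \(-w_{1} \le -0.2\), \(w_{1} \le 0.3\), \(0.4w_{3}-w_{2} \le 0\), \(w_{2}-0.6w_{3} \le 0\), \(w_{5}-w_{4} \le -0.2\), and \(w_{4}-w_{5} \le 0.4\), where the ratio constraint is linearized under \(w_{3} > 0\). Each of these six inequalities gives one row of \(A\) and one element of \(c\).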
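As an illustration of the form \(A\cdot w \le c\), the five-attribute example above can be encoded row by row. This is a sketch under the assumption that \(w_{3} > 0\), so that the ratio bounds can be multiplied through by \(w_{3}\); the helper `in_C` and the two test vectors are hypothetical.

```python
# Each two-sided bound in C(w) contributes two rows of the form a . w <= c.
A = [
    [-1, 0, 0, 0, 0],      # -w1 <= -0.2          (0.2 <= w1)
    [1, 0, 0, 0, 0],       #  w1 <= 0.3
    [0, -1, 0.4, 0, 0],    #  0.4*w3 - w2 <= 0    (0.4 <= w2/w3, w3 > 0)
    [0, 1, -0.6, 0, 0],    #  w2 - 0.6*w3 <= 0    (w2/w3 <= 0.6, w3 > 0)
    [0, 0, 0, -1, 1],      #  w5 - w4 <= -0.2     (0.2 <= w4 - w5)
    [0, 0, 0, 1, -1],      #  w4 - w5 <= 0.4
]
c = [-0.2, 0.3, 0.0, 0.0, -0.2, 0.4]


def in_C(w, tol=1e-12):
    """True if the weight vector w satisfies every row of A . w <= c."""
    return all(sum(a * x for a, x in zip(row, w)) <= ci + tol
               for row, ci in zip(A, c))


w_ok = [0.25, 0.10, 0.20, 0.35, 0.10]    # w2/w3 = 0.5, w4 - w5 = 0.25
w_bad = [0.25, 0.20, 0.20, 0.25, 0.10]   # w2/w3 = 1.0 violates the ratio bound
print(in_C(w_ok), in_C(w_bad))
```

Here \(R = 6\) and \(L = 5\); the normalization of \(w\) is not part of \(C(w)\) in this sketch.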

Equation (27) can be seen as a special case of the model in Eqs. (28)–(31) with the optimal objective value 0. The model can be solved by using Matlab. Resulting attribute weights are then used to calculate \(V(a_{l})\) (\(l = 1, {\ldots }, M\)) and generate a rank-order of alternatives as a solution with high reliability to the MADA problem. In brief, the whole procedure of determining attribute weights by solution reliability on each attribute with the possible subjective preferences of the decision maker considered and generating a solution with high reliability to a MADA problem is presented as follows:

Step 1. Calculate \(V(e_{i}(a_{l}))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M\)) using \(B(e_{i}(a_{l}))\) and \(u(H_{n})\) (\(n = 1, {\ldots }, N\)) as detailed in Eqs. (15)–(17).

Step 2. Determine \(\theta _{l}\) (\(l = 2, {\ldots }, M-1)\) in Definition 4 using Theorem 1 if the largest weight \(\theta _{1}\) satisfying Theorem 2 is given; or else, determine \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) in Definition 4 using Theorem 3 when orness degree \(\alpha \) satisfying Theorem 4 is provided. In particular, when \(M=2\) or \(M=3\), \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) can be directly determined without using Theorems 1 and 3 no matter whether the largest weight \(\theta _{1}\) or the orness degree \(\alpha \) is provided, as discussed in Sect. 3.3.

Step 3. Calculate \(Q(e_{i})\) (\(i = 1, {\ldots }, L\)) using \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) and Eqs. (18) and (19).

Step 4. Determine \(w_{i}\) (\(i = 1, {\ldots }, L\)) using \(Q(e_{i})\) and Eq. (27) if no subjective preference about \(w\) is provided; otherwise, incorporate the subjective preference using Eq. (30) and solve the model in Eqs. (28)–(31) to obtain \(w_{i}\) (\(i = 1, {\ldots }, L\)).

Step 5. Calculate \(V(a_{l})\) (\(l = 1, {\ldots }, M\)) using \(w_{i}\) (\(i = 1, {\ldots }, L\)), \(B(e_{i}(a_{l}))\) (\(i = 1, {\ldots }, L\), \(l = 1, {\ldots }, M\)), the analytical ER algorithm (Wang et al. 2006a), and Eq. (14) to generate a rank-order of alternatives as the solution with high reliability to the MADA problem.
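Steps 3 and 4 of the procedure above can be sketched for the case without subjective preferences. The sketch rests on two assumptions: that Eqs. (18) and (19) have the form shown for \(M = 3\) in Sect. 3.3, i.e., \(Q(e_{i}) = \sum _{l} \theta _l \cdot \sum _{m>l} (V(e_i (b_l ))-V(e_i (b_m )))/2\), and that Eq. (27) normalizes \(Q(e_{i})\) so that the weights sum to one. The function name and the toy data are hypothetical.

```python
def attribute_weights(V, theta):
    """V[i][l]: minimal satisfaction of alternative l on attribute i, in [-1, 1].
    theta: M - 1 OWA weights. Returns weights proportional to Q(e_i)."""
    Q = []
    for row in V:
        b = sorted(row, reverse=True)   # V(e_i(b_1)) >= ... >= V(e_i(b_M))
        q = 0.0
        for l, t in enumerate(theta):
            # Superior intensity of the l-th best alternative over those below it
            q += t * sum((b[l] - b[m]) / 2 for m in range(l + 1, len(b)))
        Q.append(q)
    total = sum(Q)
    if total == 0:
        raise ValueError("all alternatives perform identically on every attribute")
    return [q / total for q in Q]


# Toy data with M = 3, so (theta_1, theta_2) = (alpha, 1 - alpha) with alpha = 0.7
V = [
    [0.5, 0.0, -0.5],   # widely spread performances
    [0.2, 0.1, 0.0],    # mildly spread performances
    [0.3, 0.3, 0.3],    # identical performances, so Q = 0
]
print(attribute_weights(V, theta=[0.7, 0.3]))
```

The third attribute receives a zero weight because all alternatives perform identically on it, in line with the remark on \(Q(e_i^o ) = 0\) above.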

4 Illustrative example

In this section, a problem of selecting leading industries in Anhui province of China is analyzed to demonstrate the applicability and validity of the proposed method.

4.1 Description of the problem of selecting leading industries

The choice of leading industries to preferentially develop significantly influences the economic structure and the development of a region. Scientific selection of such industries can facilitate sound and rapid development of the economy in the region. Leading industries are generally characterized by their high contributions to society, strong correlation between their development and regional economic development, and large market potential.

In this paper, we investigate the selection of leading industries in the industry-cluster region in the north of the Yangtze River. This region is located in the megalopolis along the Yangtze River in Anhui province, close to Wuhu, a city in the same province. Thus, the region is managed by the government of Wuhu. By considering major national strategic needs, domestic and international industrial development trends, the basic orientation of the national industrial layout, and the industrial foundation and advantages of the region, the development and reform commission of Wuhu initially identified twelve industries as candidates. These industries comprise modern logistics, electronic information, agricultural products processing, new material, medical equipment, biomedicine, organic chemical, naval architecture and ocean engineering equipment, car manufacturing, numerical control machine, mechanical component, and engineering machinery industries. An official from the development and reform commission of Wuhu acted as a decision maker to choose five excellent industries as leading industries with the help of ten experts from the development and reform commission of Wuhu, relevant departments of Wuhu government, relevant industries, and a collaborating university. Seven attributes were used to evaluate the twelve industries, comprising expandability, pioneer, adaptability, competitiveness, environmental protection, difficulty, and risk. The twelve industries, denoted by \(I_{l}\) (\(l = 1, {\ldots }, 12\)), are assessed on seven attributes using the following set of assessment grades, as presented in Table 1:

The decision maker gives \(u(H_{n})\) (\(n = 1, {\ldots }, 5\)) = (0, 0.25, 0.5, 0.75, 1) using a probability assignment approach (Farquhar 1984; Winston 2011). In the problem, the data are from the Wuhu government and their quality is considered high; the decision maker has sufficient time to make the decision; the decision maker is highly specialized and supported by ten experts; and the information load is appropriate for the decision maker and the ten experts. Therefore, the assessment process is considered appropriate and reliable. Due to the nature of the problem of selecting leading industries, there is no information available for assigning attribute weights using subjective methods. With a view to increasing solution reliability, the proposed method is employed to determine attribute weights and generate the corresponding solution.

4.2 Solution to the problem of selecting leading industries

Following the five steps outlined in Sect. 3.4, the minimal satisfaction of each industry on each attribute is first calculated using the assessment data in Table 1, as presented in Table 2.

In the following, we consider two situations for calculating \(Q(e_{i})\) (\(i = 1, {\ldots }, 7\)) and generating two solutions. One is when the largest weight \(\theta _{1}\) is provided and the other when orness degree \(\alpha \) is provided.

(1) \(\theta _{1}\) is provided

Suppose that \(\varepsilon \) approaches 0; it then follows from Theorem 2 that \(2/(12-1) < \theta _{1} < 3/(12-1)\). The decision maker expects more contribution of \(\Delta V(e_i (b_1 ))\) to \(Q(e_{i})\) (\(i = 1, {\ldots }, 7\)), so he specifies \(\theta _{1} = 2.8/(12-1) = 0.2545\). Then, \(\frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}=\frac{4\times (12-1)\times 0.2545-6}{(12-2)\times (12-1)} = 0.0473\) is obtained. Because the decision maker expects less contribution of \(\Delta V(e_i (b_{M-1} ))\) to \(Q(e_{i})\) (\(i = 1, {\ldots }, 7\)), \(\varepsilon = 0.0001 < 0.0473/100\) is given.

Using Theorem 1, we obtain \(d_{1}=\frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}+\varepsilon = 0.0474\), \(\Delta d=\frac{6(M-1)\theta _1 -M}{(M-3)(M-2)(M-1)}+\frac{3\varepsilon }{M-3} = 0.0049\), and (\(\theta _{2}, {\ldots }, \theta _{11})\) = (0.2072, 0.1647, 0.1271, 0.0943, 0.0665, 0.0435, 0.0255, 0.0123, 0.0039, 0.0005). After obtaining \(\theta _{l}\) (\(l = 1, {\ldots }, 11\)) and the minimal satisfaction of each industry on each attribute as shown in Table 2, solution reliability on each attribute is then calculated using Eqs. (18) and (19):

Thus, \(w_{i}\) (\(i = 1, {\ldots }, 7\)) = (0.0294, 0.0993, 0.1151, 0.2354, 0.0797, 0.2291, 0.212) is obtained by using Eq. (27). The minimal satisfaction of each industry is correspondingly calculated using Eq. (14) as \(V(I_{l})\) (\(l = 1, {\ldots }, 12\)) = (\(-\)0.4401, \(-\)0.2413, \(-\)0.254, \(-\)0.3316, \(-\)0.0157, \(-\)0.2262, \(-\)0.3791, \(-\)0.384, 0.0157, \(-\)0.3382, \(-\)0.3535, \(-\)0.4134), which generates the rank-order of industries, i.e., \(I_{9} \succ I_{5} \succ I_{6} \succ I_{2} \succ I_{3} \succ I_{4} \succ I_{10} \succ I_{11} \succ I_{7} \succ I_{8} \succ I_{12} \succ I_{1}\). The five excellent industries selected as leading industries are car manufacturing, medical equipment, biomedicine, electronic information, and agricultural products processing in descending order.
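The OWA weights used in Situation (1) can be re-derived from the expressions for \(d_{1}\) and \(\Delta d\) quoted above. The sketch assumes that Theorem 1 combines them in the same way as Theorem 3, i.e., \(\theta _l =\theta _1 -(l-1)d_1 +\frac{(l-2)(l-1)}{2}\Delta d\); the function name is hypothetical.

```python
def owa_weights_from_theta1(theta1, M, eps):
    """Return [theta_1, ..., theta_{M-1}] given the largest weight theta_1."""
    d1 = (4 * (M - 1) * theta1 - 6) / ((M - 2) * (M - 1)) + eps
    delta_d = ((6 * (M - 1) * theta1 - M) / ((M - 3) * (M - 2) * (M - 1))
               + 3 * eps / (M - 3))
    # Assumed combination rule, taken from the statement of Theorem 3
    return [theta1 - (l - 1) * d1 + (l - 2) * (l - 1) / 2 * delta_d
            for l in range(1, M)]


theta = owa_weights_from_theta1(theta1=2.8 / 11, M=12, eps=1e-4)
print([round(t, 4) for t in theta])
print(round(sum(theta), 6))
```

Under this assumption the rounded output coincides with the reported \(\theta _{l}\) (\(l = 1, {\ldots }, 11\)), and the weights sum to one.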

(2) Orness degree \(\alpha \) is provided

On condition that \(\varepsilon = 0.0001\), orness degree \(\alpha \) is limited to (\(\frac{8M-12-(M-2)(M-1)M\varepsilon }{12M-24}\), \(\frac{6M-8-3(M-2)(M-1)M\varepsilon }{8M-16}) = (0.6989, 0.7951)\) using Theorem 4.

Similar to Situation (1), the decision maker expects more contribution of \(\Delta V(e_i (b_1 ))\) to \(Q(e_{i})\) (\(i = 1, {\ldots }, 7\)), so he specifies \(\alpha = 0.75\) for calculating \(\theta _{l}\) (\(l = 1, {\ldots }, 11\)). Using Theorem 3, we obtain \(d_{1}=\frac{2(24\alpha -12)(M-2)-6M}{(M-2)(M-1)M}+3\varepsilon = 0.0367\), \(\Delta d=\frac{3(24\alpha -12)(M-2)-12M}{(M-3)(M-2)(M-1)M}+\frac{6\varepsilon }{M-3} = 0.0031\), and (\(\theta _{1}, {\ldots }, \theta _{11})\) = (0.2278, 0.1911, 0.1575, 0.1271, 0.0997, 0.0754, 0.0542, 0.0362, 0.0212, 0.0093, 0.0005). Based on the resulting \(\theta _{l}\) (\(l = 1, {\ldots }, 11\)) and the minimal satisfaction of each industry on each attribute given in Table 2, solution reliability on each attribute can be calculated by using Eqs. (18) and (19) as

Thus, \(w_{i}\) (\(i = 1, {\ldots }, 7\)) = (0.03, 0.1001, 0.1155, 0.234, 0.0813, 0.2261, 0.2129) is obtained by using Eq. (27). The minimal satisfaction of each industry is correspondingly calculated using Eq. (14) as \(V(I_{l})\) (\(l = 1, {\ldots }, 12\)) = (\(-\)0.4362, \(-\)0.239, \(-\)0.2534, \(-\)0.3277, \(-\)0.0154, \(-\)0.2234, \(-\)0.3762, \(-\)0.3812, 0.0154, \(-\)0.3363, \(-\)0.351, \(-\)0.4106), which generates the following rank-order of industries: \(I_{9} \succ I_{5} \succ I_{6} \succ I_{2} \succ I_{3} \succ I_{4} \succ I_{10} \succ I_{11} \succ I_{7} \succ I_{8} \succ I_{12} \succ I_{1}\).

The top five industries are still car manufacturing, medical equipment, biomedicine, electronic information, and agricultural products processing in descending order.

It can be observed that the same rank-order of industries is obtained in both situations. The resulting attribute weights \(w_{i}\) (\(i = 1,{\ldots },7\)) in both situations are very similar. This is because the largest weight \(\theta _{1}\) and the orness degree \(\alpha \) are related, and one can be calculated from the other through Eq. (1) and Theorems 1 and 3.

When the subjective preference of the decision maker about \(w\) is available, we may obtain a different \(w\) and a different rank-order of industries. Suppose that the decision maker provides subjective constraints on \(w\), i.e., \(C(w) = \{0.1 \le w_{2} \le 0.2, w_{7} \ge w_{6}, 2.5 \le w_{4}/w_{3} \le 3, 0.2 \le w_{7}-w_{2} \le 0.3, w_{4}+w_{5} \ge 3w_{3}\}\). Solving the model in Eqs. (28)–(31) then generates \(w\) = (0.0128, 0.1, 0.0891, 0.2227, 0.0631, 0.2123, 0.3) and \(V(I_{l})\) (\(l = 1, {\ldots }, 12\)) = (\(-\)0.504, \(-\)0.245, \(-\)0.3267, \(-\)0.3383, \(-\)0.0221, \(-\)0.2356, \(-\)0.3924, \(-\)0.4277, 0.0221, \(-\)0.401, \(-\)0.4246, \(-\)0.455). Thus, the rank-order of industries is \(I_{9} \succ I_{5} \succ I_{6} \succ I_{2} \succ I_{3} \succ I_{4} \succ I_{7} \succ I_{10} \succ I_{11} \succ I_{8} \succ I_{12} \succ I_{1}\). The resulting \(w\) and \(V(I_{l})\) (\(l = 1, {\ldots }, 12\)) are significantly different from those in the above two situations where the largest weight \(\theta _{1}\) and orness degree \(\alpha \) are respectively provided. With respect to the rank-order of industries, although the resulting top five industries and their rank-order are the same as those in the above two situations, the rank-order of \(I_{7}\), \(I_{10}\), and \(I_{11}\) has changed.
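The three rank-orders discussed in this section follow directly from the reported \(V(I_{l})\) values by sorting. A short sketch (the function name `rank_order` and the variable names are hypothetical; the data are the rounded values quoted in the text):

```python
def rank_order(V):
    """1-based indices of industries sorted by decreasing minimal satisfaction."""
    return sorted(range(1, len(V) + 1), key=lambda l: V[l - 1], reverse=True)


V_theta1 = [-0.4401, -0.2413, -0.254, -0.3316, -0.0157, -0.2262,
            -0.3791, -0.384, 0.0157, -0.3382, -0.3535, -0.4134]
V_alpha = [-0.4362, -0.239, -0.2534, -0.3277, -0.0154, -0.2234,
           -0.3762, -0.3812, 0.0154, -0.3363, -0.351, -0.4106]
V_pref = [-0.504, -0.245, -0.3267, -0.3383, -0.0221, -0.2356,
          -0.3924, -0.4277, 0.0221, -0.401, -0.4246, -0.455]

r_theta1, r_alpha, r_pref = map(rank_order, (V_theta1, V_alpha, V_pref))
print(r_theta1 == r_alpha)           # same rank-order in Situations (1) and (2)
print(r_pref[:5] == r_theta1[:5])    # same top five under subjective constraints
# Industries whose position changes once the subjective constraints are imposed
print([i for a, i in zip(r_theta1, r_pref) if a != i])
```

Only the positions of \(I_{7}\), \(I_{10}\), and \(I_{11}\) differ between the constrained and unconstrained solutions.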

5 Discussions

In this section, we compare the proposed method with other objective methods from the perspective of solution reliability. The methods to be compared comprise the entropy method (Deng et al. 2000), the SD method (Diakoulaki et al. 1995), the CRITIC method (Diakoulaki et al. 1995), and the CCSD method (Wang and Luo 2010). To facilitate the comparison, the CRITIC and CCSD methods are extended to handle distributed assessments in the ER context.

(1) Entropy method

The minimal satisfaction of each alternative is used to generate a rank-order of alternatives in the ER approach, so the entropy of minimal satisfaction of each alternative on one attribute can be used to measure the contrast intensity of the attribute. Because an attribute with higher contrast intensity can contribute more to solution stability than others, the attribute should be assigned a larger weight. In other words, attribute weights can be determined by the entropy of minimal satisfaction of each alternative on attributes.

After \(V(e_{i}(a_{l}))\) (\(i = 1, {\ldots }, L\), \(l = 1, {\ldots }, M\)) is calculated, as presented in Sect. 3.1, the normalized entropy of \(V(e_{i}(a_{l}))\) on attribute \(e_{i}\) (\(i = 1, {\ldots }, L\)) is measured by

where \(\tilde{V}(e_i (a_l ))=\frac{\bar{{V}}(e_i (a_l ))}{\sum _{m=1}^M {\bar{{V}}(e_i (a_m ))} }\) and \(\bar{{V}}(e_i (a_l )) = (V(e_{i}(a_{l}))-(-1))/2\). Here, \(\tilde{V}(e_i (a_l ))\) and \(\bar{{V}}(e_i (a_l ))\) are normalized due to the fact that \(V(e_{i}(a_{l}))\in [-1, 1]\). The denominator \(\ln M\) is used to limit \(NE_{i}\) to [0, 1]. The contrast intensity of attribute \(e_{i}\) (\(i = 1, {\ldots }, L\)) in the entropy method is then calculated as

Thus, the weight of attribute \(e_{i}\) (\(i = 1, {\ldots }, L\)) is determined by
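A minimal sketch of the entropy-based weighting described in this subsection follows. It assumes the usual form of the entropy method: \(NE_{i}\) is the Shannon entropy of \(\tilde{V}(e_i (a_l ))\) divided by \(\ln M\), the contrast intensity is \(1 - NE_{i}\), and weights are the contrast intensities normalized to sum to one. The function name and the toy data are hypothetical.

```python
import math


def entropy_weights(V):
    """V[i][l]: minimal satisfaction of alternative l on attribute i, in [-1, 1]."""
    M = len(V[0])
    contrast = []
    for row in V:
        v_bar = [(v + 1) / 2 for v in row]     # map [-1, 1] to [0, 1]
        total = sum(v_bar)
        v_tilde = [x / total for x in v_bar]   # shares that sum to one
        # Normalized entropy; terms with zero share contribute nothing
        ne = -sum(p * math.log(p) for p in v_tilde if p > 0) / math.log(M)
        contrast.append(1 - ne)                # assumed contrast intensity
    s = sum(contrast)
    return [c / s for c in contrast]


V = [
    [0.5, 0.0, -0.5],   # widely spread performances
    [0.1, 0.0, -0.1],   # nearly uniform performances
]
print(entropy_weights(V))
```

The attribute with the wider spread of performances has the lower normalized entropy and therefore receives the larger weight.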

(2) SD method

Similar to the entropy method, the standard deviation of minimal satisfaction of alternatives on attributes can be used to measure the contrast intensity of attributes, and thus to determine attribute weights.

Again using \(V(e_{i}(a_{l}))\) (\(i = 1, {\ldots }, L\), \(l = 1, {\ldots }, M\)) discussed in Definition 3, the SD of \(V(e_{i}(a_{l}))\) (\(l = 1, {\ldots }, M\)) on attribute \(e_{i}\) (\(i = 1, {\ldots }, L\)) can be calculated by

where \(\bar{{V}}(e_i (a_l )) = (V(e_{i}(a_{l}))-(-1))/2\). Here, \(\bar{{V}}(e_i (a_l ))\) is a normalization of \(V(e_{i}(a_{l}))\) due to the fact that \(V(e_{i}(a_{l}))\in [-1, 1]\). Thus, the weight of attribute \(e_{i}\) (\(i = 1, {\ldots }, L\)) is determined by
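The SD-based weighting can be sketched in a few lines, assuming that each weight is the standard deviation of \(\bar{{V}}(e_i (a_l ))\) (\(l = 1, {\ldots }, M\)) divided by the sum of the standard deviations over all attributes. The function name and the toy data are hypothetical.

```python
import statistics


def sd_weights(V):
    """V[i][l]: minimal satisfaction of alternative l on attribute i, in [-1, 1]."""
    # Population SD of the normalized values; the sample SD would differ only
    # by the common factor sqrt(M / (M - 1)), which cancels in the ratio below.
    sigma = [statistics.pstdev([(v + 1) / 2 for v in row]) for row in V]
    total = sum(sigma)
    return [s / total for s in sigma]


V = [
    [0.5, 0.0, -0.5],
    [0.25, 0.0, -0.25],   # half the spread of the first attribute
]
print(sd_weights(V))
```

Because only ratios of standard deviations matter, the choice between dividing by \(M\) or \(M-1\) in the SD does not affect the resulting weights.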

(3) CRITIC method

In the CRITIC method, the covariance between the performance distributions of alternatives on two different attributes is used to calculate the correlation coefficient between the two attributes. The coefficient is then combined with the SD of the performance distributions of alternatives on attributes to determine attribute weights.

To determine attribute weights using the CRITIC method, a correlation coefficient matrix among attributes is constructed based on the correlation coefficient of interval-valued intuitionistic fuzzy sets (Park et al. 2009) as

where

and \(B(e_i (\cdot ))\) denotes the set of \(B(e_{i}(a_{l}))\) (\(l = 1, {\ldots }, M\)).

By the Cauchy–Schwarz inequality, we have \(\sum _{l=1}^M \sum _{n=1}^N \beta _{n,i} (a_l )\cdot \beta _{n,j} (a_l ) \le \sum _{l=1}^M \sqrt{\left( \sum _{n=1}^N \beta _{n,i} (a_l )^{2}\right) \cdot \left( \sum _{n=1}^N \beta _{n,j} (a_l )^{2}\right) } \le \sqrt{\left( \sum _{l=1}^M \sum _{n=1}^N \beta _{n,i} (a_l )^{2}\right) \cdot \left( \sum _{l=1}^M \sum _{n=1}^N \beta _{n,j} (a_l )^{2}\right) }\), \(\sum _{l=1}^M \beta _{\Omega ,i} (a_l )\cdot \beta _{\Omega ,j} (a_l ) \le \sqrt{\left( \sum _{l=1}^M \beta _{\Omega ,i} (a_l )^{2}\right) \cdot \left( \sum _{l=1}^M \beta _{\Omega ,j} (a_l )^{2}\right) }\), and further \(\sqrt{\left( \sum _{l=1}^M \sum _{n=1}^N \beta _{n,i} (a_l )^{2}\right) \cdot \left( \sum _{l=1}^M \sum _{n=1}^N \beta _{n,j} (a_l )^{2}\right) }+\sqrt{\left( \sum _{l=1}^M \beta _{\Omega ,i} (a_l )^{2}\right) \cdot \left( \sum _{l=1}^M \beta _{\Omega ,j} (a_l )^{2}\right) }\le \sqrt{\left( \sum _{l=1}^M \left( \sum _{n=1}^N \beta _{n,i} (a_l )^{2} +\beta _{\Omega ,i} (a_l )^{2}\right) \right) \cdot \left( \sum _{l=1}^M \left( \sum _{n=1}^N \beta _{n,j} (a_l )^{2} +\beta _{\Omega ,j} (a_l )^{2}\right) \right) }\), which implies that \(0 \le r_{ij} \le 1\). Using \(r_{ij}\) of Eq. (37) and \(\sigma _{i}\) of Eq. (35) (\(i, j = 1, {\ldots }, L\)), the weight of attribute \(e_{i}\) (\(i = 1, {\ldots }, L\)) is determined by
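The following sketch illustrates how such a CRITIC-type weighting could be computed. Two assumptions are made and should be checked against Eqs. (36)–(38): the correlation coefficient \(r_{ij}\) is taken as the ratio suggested by the inequalities above (cross products of belief degrees over the square root of the product of the two sums of squares), and the weight follows the standard CRITIC rule, proportional to \(\sigma _i \sum _j (1-r_{ij})\). All names, the toy belief distributions, and the toy \(\sigma _{i}\) values are hypothetical.

```python
import math


def correlation(Bi, Bj):
    """Bi[l]: belief degrees (beta_1, ..., beta_N, beta_Omega) of alternative l
    on attribute i. Returns the assumed correlation coefficient r_ij."""
    num = sum(x * y for bi, bj in zip(Bi, Bj) for x, y in zip(bi, bj))
    den = math.sqrt(sum(x * x for b in Bi for x in b) *
                    sum(y * y for b in Bj for y in b))
    return num / den


def critic_weights(B, sigma):
    """B[i]: belief distributions on attribute i; sigma[i]: SD on attribute i."""
    L = len(B)
    info = [sigma[i] * sum(1 - correlation(B[i], B[j]) for j in range(L))
            for i in range(L)]
    total = sum(info)
    return [c / total for c in info]


# Toy data: three attributes, two alternatives, two grades plus ignorance (Omega)
B = [
    [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],
    [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]],
    [[0.5, 0.5, 0.0], [0.5, 0.3, 0.2]],
]
sigma = [0.25, 0.25, 0.05]   # hypothetical standard deviations
print(critic_weights(B, sigma))
```

With non-negative belief degrees the assumed \(r_{ij}\) lies in [0, 1] and equals 1 when \(i = j\), consistent with the bound derived above.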

(4) CCSD method

In the CCSD method, the aggregated assessment of alternative \(a_{l}\) (\(l = 1, {\ldots }, M\)) on all attributes except the \(i\)th one, \(e_{i}\) (\(i \in \{1, {\ldots }, L\}\)), is first calculated as

using the analytical ER algorithm (Wang et al. 2006a), \(B(e_{j}(a_{l}))\) (\(j = 1, {\ldots }, L\), \(j \ne i\)), and weight vector \(w_j^{i-} =\frac{w_j }{\sum _{k=1,k\ne i}^L {w_k } }\) (\(j = 1, {\ldots }, L\), \(j \ne i\)). The constraint \(\sum _{j=1,j\ne i}^L {w_j^{i-} } \)=1 is clearly satisfied. The uncertainty of \(B^{i-}(a_l )\) is denoted by \(\beta _\Omega ^{i-} (a_l )\) to satisfy \(\sum _{n=1}^N {\beta _n^{i-} (a_l )} +\beta _\Omega ^{i-} (a_l ) = 1\).

By referring to the correlation coefficient of interval-valued intuitionistic fuzzy sets (Park et al. 2009), the correlation coefficient between \(B(e_i (\cdot ))\) and \(B^{i-}(\cdot )\), where \(B^{i-}(\cdot )\) denotes the set of \(B^{i-}(a_l )\) (\(l = 1, {\ldots }, M\)), is calculated as

where

Similar to 0 \(\le r_{ij} \le \) 1 in the CRITIC method, \(R_{i}\) is also limited to [0, 1]. Using \(R_{i}\) of Eq. (40) and \(\sigma _{i}\) of Eq. (35) (\(i = 1, {\ldots }, L\)), the weight of attribute \(e_{i}\) (\(i = 1, {\ldots }, L\)) is determined by solving the following optimization model:

To compare the above four methods with the proposed method from the perspective of solution reliability, similar to the definition of solution reliability on an attribute, the reliability of the aggregated solution is defined as \(Q=\sum _{l=1}^{M-1} {\theta _l \cdot \Delta V(b_l )} \), where \(\Delta V(b_l )=\sum _{m=l+1}^M {(V(b_l )-V(b_m ))/2} \) (\(l = 1, {\ldots }, M-1)\) such that \(V(b_{1}) \ge \) ... \(\ge V(b_{M})\).

For the sake of simplicity, we set (\(\theta _{1}\), ..., \(\theta _{11})\) = (0.2545, 0.2072, 0.1647, 0.1271, 0.0943, 0.0665, 0.0435, 0.0255, 0.0123, 0.0039, 0.0005), the same as the first situation in Sect. 4.2 where the largest weight \(\theta _{1}\) is provided.
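The reliability of the aggregated solution defined above is straightforward to compute once \(V(a_{l})\) and \(\theta _{l}\) are known. The sketch below applies the definition of \(Q\) to the rounded \(V(I_{l})\) values reported for the first situation in Sect. 4.2; because of rounding, the printed value is only approximate and is not claimed to match Table 3 exactly. The function name is hypothetical.

```python
def solution_reliability(values, theta):
    """Q = sum_l theta_l * DeltaV(b_l), where
    DeltaV(b_l) = sum_{m > l} (V(b_l) - V(b_m)) / 2 and V(b_1) >= ... >= V(b_M)."""
    b = sorted(values, reverse=True)
    M = len(b)
    if len(theta) != M - 1:
        raise ValueError("theta must contain M - 1 weights")
    delta = [sum((b[l] - b[m]) / 2 for m in range(l + 1, M))
             for l in range(M - 1)]
    return sum(t * d for t, d in zip(theta, delta))


theta = [0.2545, 0.2072, 0.1647, 0.1271, 0.0943, 0.0665,
         0.0435, 0.0255, 0.0123, 0.0039, 0.0005]
V_proposed = [-0.4401, -0.2413, -0.254, -0.3316, -0.0157, -0.2262,
              -0.3791, -0.384, 0.0157, -0.3382, -0.3535, -0.4134]
print(solution_reliability(V_proposed, theta))
```

The same function can be applied to the \(V(a_{l})\) produced by each of the other four methods to reproduce the comparison.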

In the following, the above four methods are used to determine attribute weights and generate four solutions to the problem of selecting leading industries. The resulting attribute weights and the reliability of the aggregated solutions are compared with those in the first situation using the proposed method, as presented in Table 3. The solutions generated by the five methods are compared in Table 4.

Table 3 shows that the weights \(w_{i}\) (\(i = 1, {\ldots }, 7\)) generated by the entropy method are clearly different from those generated by the other four methods. In particular, compared with the weights generated by the other four methods, the weights \(w_{1}\), \(w_{2}\), and \(w_{5}\) generated by the entropy method are highly underestimated, which results in the overestimation of the weights \(w_{4}\) and \(w_{6}\) generated by the method and the highest reliability of the aggregated solution among the five methods. Although high reliability of the aggregated solution is preferred, well-balanced attribute weights should also be considered. Among the four methods, the proposed method generates the lowest \(w_{3}\), which is the main contributor to the highest reliability of the aggregated solution, but the differences among the weights \(w_{3}\) generated by all the four methods are not significant. In fact, there are no significant differences among all weights \(w_{i}\) (\(i = 1, {\ldots }, 7\)) generated by the SD, CRITIC, CCSD, and proposed methods. This means that the high reliability of the aggregated solution from the proposed method is achieved through assigning more balanced weights than those assigned by the entropy method. Thus, among the five methods compared, only the proposed method has achieved high solution reliability with a set of well-balanced attribute weights.

Table 4 shows that the minimal satisfaction of the twelve industries differs across the five methods, because the different attribute weights produced by the five methods lead to different minimal satisfaction of the industries. The rank-order of the industries generated by the five methods is correspondingly different, although the top five industries obtained by the five methods are the same. In particular, in terms of the minimal satisfaction produced, the second and the third industries are very close under the SD, CRITIC, CCSD, and proposed methods, whereas under the entropy method it is the second and the sixth that are very close. This results in a clear difference between the rank-order of the second, the third, and the sixth industries generated by the entropy method and those generated by the other four methods. The difference reflects the imbalanced attribute weights generated by the entropy method, which are different from those generated by the other four methods, as presented in Table 3. The rank-order of the other seven industries is not discussed as it is of no interest to the decision maker.

In brief, the proposed method can generate a reasonable set of attribute weights by using solution reliability on each attribute and uses the attribute weights to guarantee high reliability of the aggregated solution. In particular, the proposed method can also consider the subjective preference of a decision maker about attribute weights by extending Eq. (27) to the optimization model in Eqs. (28)–(31). Among the four methods considered above, only the CCSD method shares this feature.

6 Conclusions

Focusing on high solution reliability, we propose in this paper a method of determining attribute weights by using the definition of solution reliability on an attribute in the context of the ER approach. This is an objective method in its original form, but can be extended to handle the subjective preference of a decision maker about attribute weights. It uses the assessments of alternatives on each attribute to construct solution reliability on each attribute with the help of the OWA operator and then determines attribute weights by using the constructed solution reliability.

The characteristic of solution reliability on an attribute is analyzed and used to theoretically determine OWA operator weights depending on whether the largest weight or the orness degree of OWA operator is given. A problem of selecting leading industries for preferential development is investigated to demonstrate the validity and applicability of the proposed method. To compare the proposed method with existing objective methods, four methods including the entropy method (Deng et al. 2000), the SD method (Diakoulaki et al. 1995), the CRITIC method (Diakoulaki et al. 1995), and the CCSD method (Wang and Luo 2010), are extended to the context of the ER approach, and then used to determine attribute weights and generate four solutions to the problem. The comparison reveals that the attribute weights and the rank-order of industries generated by the proposed method can guarantee the highest reliability of solution while maintaining a reasonable balance among attribute weights.

References

Abbas, A. E. (2006). Maximum entropy utility. Operations Research, 54(2), 277–290.

Ahn, B. S., & Choi, S. H. (2012). Aggregation of ordinal data using ordered weighted averaging operator weights. Annals of Operations Research, 201, 1–16.

Ananda, J., & Herath, G. (2005). Evaluating public risk preferences in forest land-use choices using multi-attribute utility theory. Ecological Economics, 55, 408–419.

Aouni, B., Colapinto, C., & Torre, D. L. (2013). A cardinality constrained stochastic goal programming model with satisfaction functions for venture capital investment decision making. Annals of Operations Research, 205, 77–88.

Bottomley, P. A., & Doyle, J. R. (2001). A comparison of three weight elicitation methods: Good, better, and best. Omega, 29, 553–560.

Brito, A. J., & de Almeida, A. T. (2012). Modeling a multi-attribute utility newsvendor with partial backlogging. European Journal of Operational Research, 220, 820–830.

Butler, J., Morrice, D. J., & Mullarkey, P. W. (2001). A multiple attribute utility theory approach to ranking and selection. Management Science, 47(6), 800–816.

Chen, T. Y., & Li, C. H. (2010). Determining objective weights with intuitionistic fuzzy entropy measures: A comparative analysis. Information Sciences, 180, 4207–4222.

Chen, T. Y., & Li, C. H. (2011). Objective weights with intuitionistic fuzzy entropy measures and computational experiment analysis. Applied Soft Computing, 11, 5411–5423.

Daniels, R. L., & Keller, L. R. (1992). Choice-based assessment of utility functions. Organizational Behavior and Human Decision Processes, 52, 524–543.

Davern, M. J., Mantena, R., & Stohr, E. A. (2008). Diagnosing decision quality. Decision Support Systems, 45, 123–139.

Dempster, A. P. (1967). Upper and lower probabilities induced by a multivalued mapping. Annals of Mathematical Statistics, 38, 325–339.

Deng, H., Yeh, C. H., & Willis, R. J. (2000). Inter-company comparison using modified TOPSIS with objective weights. Computers and Operations Research, 27, 963–973.

Diakoulaki, D., Mavrotas, G., & Papayannakis, L. (1995). Determining objective weights in multiple criteria problems: The critic method. Computers and Operations Research, 22, 763–770.

Dyer, J. S., & Jia, J. M. (1998). Preference conditions for utility models: A risk-value perspective. Annals of Operations Research, 80, 167–182.

Farquhar, P. H. (1984). Utility assessment methods. Management Science, 30(11), 1283–1300.

Gao, J., Zhang, C., Wang, K., & Ba, S. (2012). Understanding online purchase decision making: The effects of unconscious thought, information quality, and information quantity. Decision Support Systems, 53, 772–781.

Guo, M., Yang, J. B., Chin, K. S., & Wang, H. W. (2007). Evidential reasoning based preference programming for multiple attribute decision analysis under uncertainty. European Journal of Operational Research, 182, 1294–1312.

Hershey, J. C., & Schoemaker, P. (1985). Probability versus certainty equivalence methods in utility measurement: Are they equivalent? Management Science, 31, 1213–1231.

Horowitz, I., & Zappe, C. (1995). The linear programming alternative to policy capturing for eliciting criteria weights in the performance appraisal process. Omega, 23(6), 667–676.

Horsky, D., & Rao, M. R. (1984). Estimation of attribute weights from preference comparisons. Management Science, 30, 801–822.

Kainuma, Y., & Tawara, N. (2006). A multiple attribute utility theory approach to lean and green supply chain management. International Journal of Production Economics, 101, 99–108.

Keeney, R. L., & Raiffa, H. (1993). Decisions with multiple objectives: Preferences and value tradeoffs. Cambridge: Cambridge University Press.

Kocher, M. G., & Sutter, M. (2006). Time is money-Time pressure, incentives, and the quality of decision-making. Journal of Economic Behavior and Organization, 61, 375–392.

Malhotra, V., Lee, M. D., & Khurana, A. (2007). Domain experts influence decision quality: Towards a robust method for their identification. Journal of Petroleum Science and Engineering, 57, 181–194.

Maria de Miranda Mota, C., & Teixeira de Almeida, A. (2012). A multicriteria decision model for assigning priority classes to activities in project management. Annals of Operations Research, 199, 361–372.

O’Hagan, M. (1988). Aggregating template or rule antecedents in real-time expert systems with fuzzy set logic. In Proceedings of the 22nd annual IEEE Asilomar conference on signals, systems and computers (pp. 681–689). Pacific Grove, California.

Park, D. G., Kwun, Y. C., Park, J. H., & Park, I. Y. (2009). Correlation coefficient of interval-valued intuitionistic fuzzy sets and its application to multiple attribute group decision making problems. Mathematical and Computer Modelling, 50(9–10), 1279–1293.

Raghunathan, S. (1999). Impact of information quality and decision-maker quality on decision quality: A theoretical model and simulation analysis. Decision Support Systems, 26, 275–286.

Saaty, T. L. (1977). A scaling method for priorities in hierarchical structures. Journal of Mathematical Psychology, 15, 234–281.

Shafer, G. (1976). A mathematical theory of evidence. Princeton: Princeton University Press.

Shirland, L. E., Jesse, R. R., Thompson, R. L., & Iacovou, C. L. (2003). Determining attribute weights using mathematical programming. Omega, 31, 423–437.

Socorro García-Cascales, M., Teresa Lamata, M., & Miguel Sánchez-Lozano, J. (2012). Evaluation of photovoltaic cells in a multi-criteria decision making process. Annals of Operations Research, 199, 373–391.

Takeda, E., Cogger, K. O., & Yu, P. L. (1987). Estimating criterion weights using eigenvectors: A comparative study. European Journal of Operational Research, 29, 360–369.

Wang, J. M. (2012). Robust optimization analysis for multiple attribute decision making problems with imprecise information. Annals of Operations Research, 197, 109–122.

Wang, Y. M. (1998). Using the method of maximizing deviations to make decision for multi-indices. System Engineering and Electronics, 7, 24–26, 31.

Wang, Y. M., & Chin, K. S. (2011). The use of OWA operator weights for cross-efficiency aggregation. Omega, 39, 493–503.

Wang, Y. M., & Luo, Y. (2010). Integration of correlations with standard deviations for determining attribute weights in multiple attribute decision making. Mathematical and Computer Modelling, 51, 1–12.

Wang, Y. M., & Parkan, C. (2005). A minimax disparity approach for obtaining OWA operator weights. Information Sciences, 175, 20–29.

Wang, Y. M., Yang, J. B., & Xu, D. L. (2006a). Environmental impact assessment using the evidential reasoning approach. European Journal of Operational Research, 174(3), 1885–1913.

Wang, Y. M., Yang, J. B., Xu, D. L., & Chin, K. S. (2006b). The evidential reasoning approach for multiple attribute decision analysis using interval belief degrees. European Journal of Operational Research, 175(1), 35–66.

Williams, M. L., Dennis, A. R., Stam, A., & Aronson, J. E. (2007). The impact of DSS use and information load on errors and decision quality. European Journal of Operational Research, 176, 468–481.

Winston, W. (2011). Operations research: Applications and algorithms. Beijing: Tsinghua University Press.

Xu, D. L. (2012). An introduction and survey of the evidential reasoning approach for multiple criteria decision analysis. Annals of Operations Research, 195, 163–187.

Xu, X. Z. (2004). A note on the subjective and objective integrated approach to determine attribute weights. European Journal of Operational Research, 156, 530–532.

Yager, R. R. (1988). On ordered weighted averaging aggregation operators in multi-criteria decision making. IEEE Transactions on Systems, Man, and Cybernetics, 18, 183–190.

Yang, J. B. (2001). Rule and utility based evidential reasoning approach for multiattribute decision analysis under uncertainties. European Journal of Operational Research, 131, 31–61.

Yang, J. B., Wang, Y. M., Xu, D. L., & Chin, K. S. (2006). The evidential reasoning approach for MADA under both probabilistic and fuzzy uncertainties. European Journal of Operational Research, 171, 309–343.

Zadeh, L. A. (1994a). Soft computing and fuzzy logic. IEEE Software, 11(6), 48–56.

Zadeh, L. A. (1994b). Fuzzy logic, neural networks, and soft computing. Communications of the ACM, 37(3), 77–84.

Acknowledgments

This research was supported by the State Key Program of National Natural Science Foundation of China (No. 71131002), the National Key Basic Research Program of China (No. 2013CB329603), the National Natural Science Foundation of China (No. 71201043), the Humanities and Social Science Foundation of Ministry of Education in China (No. 12YJC630046), and the Natural Science Foundation of Anhui Province of China (No. 1208085QG130).

Appendix: Proof of Property 1 and Theorems 1–4

1.1 Proof of Property 1

Property 1

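Given \(\Delta V(e_i (b_l ))\) (\(i = 1, \ldots, L\), \(l = 1, \ldots, M-1\)) in Eq. (18), the following relations are satisfied:

\[\Delta V(e_i (b_1 )) \ge \cdots \ge \Delta V(e_i (b_{M-1} )), \tag{20}\]

\[\Delta V(e_i (b_x )) = \cdots = \Delta V(e_i (b_y )) \quad \text{if } V(e_i (b_x )) = \cdots = V(e_i (b_y )),\ x < y,\ x, y \in \{1, \ldots, M-1\}, \tag{21}\]

\[0 \le \Delta V(e_i (b_l )) \le M-l, \quad l = 1, \ldots, M-1, \tag{22}\]

\[0 \le \sum _{l=1}^{M-1} \theta _l \cdot \Delta V(e_i (b_l )) \le M-1. \tag{23}\]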

Proof

From the condition \(V(e_{i}(b_{1})) \ge \cdots \ge V(e_{i}(b_{M}))\) specified in Definition 4, we can deduce that \((V(e_i (b_l ))-V(e_i (b_m )))/2 \ge 0\) for \(m = l+1, \ldots, M\). Using Eq. (18), we obtain \(\Delta V(e_i (b_1 ))=(V(e_i (b_1 ))-V(e_i (b_2 )))/2+\sum _{m=3}^M {(V(e_i (b_1 ))-V(e_i (b_m )))/2} \ge (V(e_i (b_1 ))-V(e_i (b_2 )))/2+\Delta V(e_i (b_2 )) \ge \Delta V(e_i (b_2 ))\). The first inequality holds because \(V(e_i (b_1 )) \ge V(e_i (b_2 ))\) implies \(\sum _{m=3}^M {(V(e_i (b_1 ))-V(e_i (b_m )))/2} \ge \sum _{m=3}^M {(V(e_i (b_2 ))-V(e_i (b_m )))/2} = \Delta V(e_i (b_2 ))\). Similarly, \(\Delta V(e_i (b_2 )) \ge \cdots \ge \Delta V(e_i (b_{M-1} ))\) can be inferred. Therefore, Eq. (20) holds.

Given \(x\) and \(y\) such that \(x < y\) and \(x, y \in \{1, \ldots, M-1\}\), suppose that \(V(e_{i}(b_{x})) = V(e_{i}(b_{x+1}))\). Then we can deduce from Eq. (18) that \(\Delta V(e_i (b_x ))=(V(e_i (b_x ))-V(e_i (b_{x+1} )))/2+\sum _{m=x+2}^M {(V(e_i (b_x ))-V(e_i (b_m )))/2} =\sum _{m=x+2}^M {(V(e_i (b_{x+1} ))-V(e_i (b_m )))/2} =\Delta V(e_i (b_{x+1} ))\). Applying this step repeatedly, we can infer from \(V(e_{i}(b_{x})) = \cdots = V(e_{i}(b_{y}))\) that \(\Delta V(e_i (b_x )) = \cdots = \Delta V(e_i (b_y ))\), which verifies Eq. (21).

Because \(V(e_{i}(b_{l})) \in [-1, 1]\) (\(i = 1, \ldots, L\), \(l = 1, \ldots, M\)), as presented in Sect. 3.1, and the values are ordered non-increasingly, \((V(e_i (b_l ))-V(e_i (b_m )))/2\) for \(m=l + 1, \ldots, M\) is limited to [0, 1]. Since Eq. (18) sums \(M-l\) such terms, \(\Delta V(e_i (b_l ))\) is limited to \([0, M-l]\) (\(l = 1, \ldots, M-1\)). As a result, Eq. (22) holds.

Equations (20) and (22) show that \(M-1 \ge \Delta V(e_i (b_1 )) \ge \) ... \(\ge \Delta V(e_i (b_{M-1} )) \ge \) 0. In this situation, it can be derived that \(\sum _{l=1}^{M-1} {\theta _l \cdot \Delta V(e_i (b_l ))} \ge \) 0 and \(\sum _{l=1}^{M-1} {\theta _l \cdot \Delta V(e_i (b_l ))} \le \sum _{l=1}^{M-1} {\theta _l \cdot \Delta V(e_i (b_1 ))} =\Delta V(e_i (b_1 )) \le M-1\) on the condition that 0 \(\le \) \(\theta _{l} \le \) 1 (\(l = 1, {\ldots }, M-1)\) and \(\sum _{l=1}^{M-1} {\theta _l } \)=1, as specified in Definition 4. Therefore, Eq. (23) holds. \(\square \)
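The four relations of Property 1 can be checked numerically. The following is a minimal sketch, assuming Eq. (18) has the form that the proof expands, \(\Delta V(e_i (b_l ))=\sum _{m=l+1}^M {(V(e_i (b_l ))-V(e_i (b_m )))/2}\). The function name `superior_intensities`, the five input values, and the weights `theta` are hypothetical and chosen only for illustration.

```python
def superior_intensities(v):
    """Return [dV_1, ..., dV_{M-1}] for values v sorted in non-increasing order.

    Assumes Eq. (18) is dV_l = sum_{m=l+1}^{M} (v_l - v_m) / 2, the form
    that the proof of Property 1 expands.
    """
    if any(a < b for a, b in zip(v, v[1:])):
        raise ValueError("v must be sorted in non-increasing order")
    count = len(v)
    return [sum((v[l] - v[m]) / 2 for m in range(l + 1, count))
            for l in range(count - 1)]


# Hypothetical values V(e_i(b_1)), ..., V(e_i(b_5)) in [-1, 1]; the tie
# between the second and third entries exercises Eq. (21).
v = [0.75, 0.5, 0.5, -0.25, -1.0]
dv = superior_intensities(v)
M = len(v)

# Eq. (20): non-increasing; Eq. (21): equal values give equal intensities.
assert all(a >= b for a, b in zip(dv, dv[1:]))
assert dv[1] == dv[2]
# Eq. (22): 0 <= dV_l <= M - l (l is 1-based in the paper, 0-based here).
assert all(0 <= d <= M - (l + 1) for l, d in enumerate(dv))

# Eq. (23) with illustrative weights that are non-negative and sum to one.
theta = [0.4, 0.3, 0.2, 0.1]
q = sum(t * d for t, d in zip(theta, dv))
assert 0 <= q <= dv[0] <= M - 1
print(dv, q)
```

The check is only a spot test on one input, not a substitute for the proof.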

1.2 Proof of Theorem 1

Theorem 1

It is assumed that \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) represents the weight of \(\Delta V(e_i (b_l ))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M-1)\) for calculating \(Q(e_{i})\) in Eq. (19) and \(\Delta d\) is defined in Eq. (25). Given the largest weight \(\theta _{1}\), \(\theta _{l}\) (\(l = 2, {\ldots }, M-1)\) is determined by \(\theta _1 -(l-1)d_1 +\frac{(l-2)\cdot (l-1)}{2}\Delta d\) using the minimax disparity method based on Assumption 1, i.e., minimizing the maximum disparity between \(\theta _{l}\) and \(\theta _{l+1}\) (\(l = 1, {\ldots }, M-2\)), where \(d_{1}=\frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}+\varepsilon \), \(\Delta d=\frac{6(M-1)\theta _1 -12}{(M-3)(M-2)(M-1)}+\frac{3\varepsilon }{M-3}\), and \(\varepsilon \) is a small positive number close to zero.

Proof

The requirement \((\theta _l -\theta _{l+1} )-(\theta _{l+1} -\theta _{l+2} )=d_l -d_{l+1} =\Delta d > 0\) (\(l = 1, \ldots, M-3\)) in Assumption 1 implies that \(\theta _{l}=\theta _1 -(l-1)d_1 +(1+\cdots+(l-2))\Delta d=\theta _1 -(l-1)d_1 +\frac{(l-2)\cdot (l-1)}{2}\Delta d\) (\(l = 2, \ldots, M-1\)). Then, because the weights sum to one, we have

\[\sum _{l=1}^{M-1} {\theta _l } =(M-1)\theta _1 -\frac{(M-2)(M-1)}{2}d_1 +\frac{(M-3)(M-2)(M-1)}{6}\Delta d=1,\]

from which it follows that \(\Delta d=\frac{6-6(M-1)\theta _1 +3(M-2)(M-1)d_1 }{(M-3)(M-2)(M-1)}\).

The constraint \(\theta _{1} > \cdots > \theta _{M-1} > 0\) in Assumption 1 requires that \(\theta _{M-1}=\theta _1 -(M-2)d_1 +\frac{(M-3)\cdot (M-2)}{2}\Delta d > 0\). Combining this with \(\Delta d=\frac{6-6(M-1)\theta _1 +3(M-2)(M-1)d_1 }{(M-3)(M-2)(M-1)}\) gives \(6-6(M-1)\theta _{1}+3(M-2)(M-1)d_{1} > 2(M-2)(M-1)d_{1}-2(M-1)\theta _{1}\), i.e., \(d_{1} > \frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}\). Following the principle of the minimax disparity method, \(d_{l}\) (\(l = 1, \ldots, M-2\)) should be minimized. Due to the requirement \(d_l -d_{l+1} =\Delta d > 0\) (\(l = 1, \ldots, M-3\)) in Assumption 1, the minimum \(d_{1}\) results in the minimum \(d_{l}\) (\(l = 2, \ldots, M-2\)). Therefore, we obtain the minimal \(d_{1}=\frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}+\varepsilon \) with the help of a small positive number \(\varepsilon \) close to zero. Substituting this \(d_{1}\) into \(\Delta d=\frac{6-6(M-1)\theta _1 +3(M-2)(M-1)d_1 }{(M-3)(M-2)(M-1)}\) yields \(\Delta d=\frac{6(M-1)\theta _1 -12}{(M-3)(M-2)(M-1)}+\frac{3\varepsilon }{M-3}\). \(\square \)
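The closed forms of Theorem 1 translate directly into a short routine. The sketch below is illustrative only: the function name `weights_from_theta1`, the choice \(M = 6\), \(\theta _1 = 0.5\), and \(\varepsilon = 10^{-6}\) are assumptions made for the example and do not come from the paper.

```python
def weights_from_theta1(m, theta1, eps=1e-6):
    """OWA weights theta_1..theta_{M-1} from the closed forms of Theorem 1."""
    if m < 4:
        raise ValueError("the closed forms divide by M - 3, so M >= 4 is required")
    a = m - 1
    # d_1 and Delta d exactly as stated in Theorem 1.
    d1 = (4 * a * theta1 - 6) / ((m - 2) * a) + eps
    delta_d = (6 * a * theta1 - 12) / ((m - 3) * (m - 2) * a) + 3 * eps / (m - 3)
    # theta_l = theta_1 - (l-1) d_1 + (l-2)(l-1)/2 * Delta d, l = 1..M-1.
    return [theta1 - (l - 1) * d1 + (l - 2) * (l - 1) / 2 * delta_d
            for l in range(1, m)]


eps = 1e-6
theta = weights_from_theta1(m=6, theta1=0.5, eps=eps)
disparities = [a - b for a, b in zip(theta, theta[1:])]

# The weights sum to one and decrease strictly, as Assumption 1 requires.
assert abs(sum(theta) - 1.0) < 1e-12
assert all(d > 0 for d in disparities)
# With the minimal d_1, the smallest weight works out to (M - 2) * eps / 2.
assert abs(theta[-1] - (6 - 2) * eps / 2) < 1e-12
print(theta)
```

The last assertion reflects a consequence of the formulas rather than a statement in the source: substituting the minimal \(d_1\) and the resulting \(\Delta d\) into \(\theta _{M-1}\) leaves only the term \((M-2)\varepsilon /2\).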

1.3 Proof of Theorem 2

Theorem 2

On the assumption that \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) represents the weight of \(\Delta V(e_i (b_l ))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M-1)\) for calculating \(Q(e_{i})\) in Eq. (19), the determination of \(\theta _{l}\) (\(l = 2, {\ldots }, M-1)\) in Theorem 1 based on Assumption 1 requires that \(\frac{4-(M-2)(M-1)\varepsilon }{2(M-1)} <\) \(\theta _{1} < \frac{3-(M-2)(M-1)\varepsilon }{M-1}\) where \(\varepsilon \) is a small positive number close to zero.

Proof

The determination of \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) by Theorem 1 depends on Assumption 1, so the constraints in Eqs. (24)–(26) are satisfied when \(\theta _{1}\) is given.

By Theorem 1, \(d_{1}=\frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}+\varepsilon \) and \(\Delta d=\frac{6(M-1)\theta _1 -12}{(M-3)(M-2)(M-1)}+\frac{3\varepsilon }{M-3}\), where \(\varepsilon \) is a small positive number close to zero. The requirements \(d_{1} > 0\) and \(\Delta d > 0\) in Eqs. (24)–(26) imply that \(4(M-1)\theta _{1}-6+(M-2)(M-1)\varepsilon > 0\) and \(6(M-1)\theta _{1}-12+3(M-2)(M-1)\varepsilon > 0\), i.e., \(\theta _{1} > \frac{6-(M-2)(M-1)\varepsilon }{4(M-1)}\) and \(\theta _{1} > \frac{4-(M-2)(M-1)\varepsilon }{2(M-1)}\). As \(\frac{6-(M-2)(M-1)\varepsilon }{4(M-1)}<\frac{4-(M-2)(M-1)\varepsilon }{2(M-1)}\) when \(\varepsilon \) is sufficiently close to zero, we obtain \(\theta _{1} > \frac{4-(M-2)(M-1)\varepsilon }{2(M-1)}\). On the other hand, it follows from \(d_l -d_{l+1} =\Delta d > 0\) (\(l = 1, \ldots, M-3\)) in Eq. (25) that \(d_{l}=d_1 -(l-1)\Delta d\), which must satisfy \(d_{l} > 0\) (\(l = 2, \ldots, M-2\)), i.e., \(d_{1} > (l-1)\Delta d\) (\(l = 2, \ldots, M-2\)). When \(d_{M-2}=d_1 -(M-3)\Delta d > 0\), \(d_{1} > (l-1)\Delta d\) (\(l = 2, \ldots, M-2\)) is clearly satisfied. From \(d_{M-2} > 0\), we obtain \(d_{1}=\frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}+\varepsilon > (M-3)\Delta d=\frac{6(M-1)\theta _1 -12+3(M-2)(M-1)\varepsilon }{(M-2)(M-1)}\), i.e., \(\theta _{1} < \frac{3-(M-2)(M-1)\varepsilon }{M-1}\). Therefore, \(\theta _{1}\) is limited to the open interval \(\left( \frac{4-(M-2)(M-1)\varepsilon }{2(M-1)}, \frac{3-(M-2)(M-1)\varepsilon }{M-1}\right) \). \(\square \)
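To make the interval of Theorem 2 concrete, the sketch below computes the two bounds and checks, for a few sample values of \(\theta _1\), whether the weights of Theorem 1 meet the conditions that the proofs attribute to Assumption 1 (positive weights, positive disparities \(d_l\), and \(d_l - d_{l+1} > 0\)). The helper names, \(M = 6\), and the sample values 0.35, 0.5, and 0.65 are assumptions for illustration; the exact wording of Assumption 1 and Eqs. (24)–(26) is taken from how the proofs use them.

```python
def weights_from_theta1(m, theta1, eps):
    """OWA weights theta_1..theta_{M-1} from the closed forms of Theorem 1."""
    a = m - 1
    d1 = (4 * a * theta1 - 6) / ((m - 2) * a) + eps
    delta_d = (6 * a * theta1 - 12) / ((m - 3) * (m - 2) * a) + 3 * eps / (m - 3)
    return [theta1 - (l - 1) * d1 + (l - 2) * (l - 1) / 2 * delta_d
            for l in range(1, m)]


def theta1_bounds(m, eps):
    """Lower and upper end of the open interval for theta_1 in Theorem 2."""
    a = m - 1
    lower = (4 - (m - 2) * a * eps) / (2 * a)
    upper = (3 - (m - 2) * a * eps) / a
    return lower, upper


def satisfies_assumption_1(theta):
    """Positive weights, positive disparities, and decreasing disparities."""
    d = [a - b for a, b in zip(theta, theta[1:])]
    dd = [a - b for a, b in zip(d, d[1:])]
    return (all(t > 0 for t in theta)
            and all(x > 0 for x in d)
            and all(x > 0 for x in dd))


m, eps = 6, 1e-6
lower, upper = theta1_bounds(m, eps)
for theta1 in (0.35, 0.5, 0.65):
    inside = lower < theta1 < upper
    valid = satisfies_assumption_1(weights_from_theta1(m, theta1, eps))
    # For these samples, membership in the interval matches validity.
    assert inside == valid
    print(theta1, inside, valid)
```

For \(M = 6\) the interval is roughly (0.4, 0.6): the sample below it gives \(\Delta d < 0\), and the sample above it gives a negative weight.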

1.4 Proof of Theorem 3

Theorem 3

Let \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) denote the weight of \(\Delta V(e_i (b_l ))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M-1)\) for \(Q(e_{i})\) in Eq. (19). Given orness degree \(\alpha \) such that 0.5 \(<\) \(\alpha \) \(<\) 1, \(\theta _{1}\) and \(\theta _{l}\) (\(l = 2, {\ldots }, M-1)\) can be determined by \(\frac{(24\alpha -12)(M-2)+(M-2)(M-1)M\varepsilon }{2(M-1)M}\) and \(\theta _1 -(l-1)d_1 +\frac{(l-2)\cdot (l-1)}{2}\Delta d\) (\(l = 2, {\ldots }, M-1)\), where \(d_{1}=\frac{2(24\alpha -12)(M-2)-6M}{(M-2)(M-1)M}+3\varepsilon \), \(\Delta d=\frac{3(24\alpha -12)(M-2)-12M}{(M-3)(M-2)(M-1)M}+\frac{6\varepsilon }{M-3}\), and \(\varepsilon \) is a small positive number close to zero.

Proof

According to Eq. (1), the orness degree for \(Q(e_{i})\) in Eq. (19) is calculated as

\[\alpha =\frac{1}{M-2}\sum _{l=1}^{M-1} {(M-1-l)\,\theta _l }.\]

Theorem 1 indicates that \(\theta _{l}=\theta _1 -(l-1)d_1 +\frac{(l-2)\cdot (l-1)}{2}\Delta d\) (\(l = 2, {\ldots }, M-1)\), with which we can obtain that

Suppose that \(\alpha _{1} = (\frac{1}{6}(M-1)M(2M-1)-5-M(\frac{(M-1)M}{2}-3)+(M-3)(M-1))d_{1}\) and \(\alpha _{2}=\sum _{l=3}^{M-1} {\frac{1}{2}(M-1-l)(l-2)(l-1)\Delta d} \), then it is derived that

and

Thus, we have \(\alpha = f(\alpha _{1}, \alpha _{2})=\frac{1}{M-2}((M-2)\theta _1 +(M-3)(\theta _1 -d_1 )+(M-3)(M-1)\theta _1 -(\frac{(M-1)M}{2}-3)\theta _1 + \alpha _{1} + \alpha _{2})=\frac{1}{M-2}(\frac{1}{2}(M-2)(M-1)\theta _1 -\frac{1}{6}(M-3)(M-2)(M-1)d_1 +\frac{1}{24}(M-4)(M-3)(M-2)(M-1)\Delta d)\), which can deduce that

From Theorem 1, we know that \(d_{1}=\frac{4(M-1)\theta _1 -6}{(M-2)(M-1)}+\varepsilon \) and \(\Delta d=\frac{6(M-1)\theta _1 -12}{(M-3)(M-2)(M-1)}+\frac{3\varepsilon }{M-3}\). Substituting these into the expression for \(\alpha \) and solving for \(\theta _{1}\) gives \(\theta _{1}=\frac{(24\alpha -12)(M-2)+(M-2)(M-1)M\varepsilon }{2(M-1)M}\), which is then used to determine \(d_{1}\) and \(\Delta d\) as \(d_{1}=\frac{2(24\alpha -12)(M-2)-6M}{(M-2)(M-1)M}+3\varepsilon \) and \(\Delta d=\frac{3(24\alpha -12)(M-2)-12M}{(M-3)(M-2)(M-1)M}+\frac{6\varepsilon }{M-3}\). \(\square \)
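The formulas of Theorem 3 can be verified by a round trip: generate the weights from a chosen orness degree, then recompute the orness from those weights. The sketch below assumes that Eq. (1) is the standard orness measure of an OWA operator with \(n = M-1\) weights, \(\frac{1}{n-1}\sum _{l=1}^{n} {(n-l)\theta _l }\). The function names and the values \(M = 6\), \(\alpha = 0.8\), and \(\varepsilon = 10^{-6}\) are hypothetical.

```python
def orness(weights):
    """Orness of an OWA weight vector: sum_l (n - l) w_l / (n - 1), l 1-based."""
    n = len(weights)
    return sum((n - l) * w for l, w in enumerate(weights, start=1)) / (n - 1)


def weights_from_orness(m, alpha, eps=1e-6):
    """OWA weights theta_1..theta_{M-1} from the closed forms of Theorem 3."""
    if m < 4:
        raise ValueError("the closed forms divide by M - 3, so M >= 4 is required")
    a = m - 1
    k = (24 * alpha - 12) * (m - 2)  # term shared by the three closed forms
    theta1 = (k + (m - 2) * a * m * eps) / (2 * a * m)
    d1 = (2 * k - 6 * m) / ((m - 2) * a * m) + 3 * eps
    delta_d = (3 * k - 12 * m) / ((m - 3) * (m - 2) * a * m) + 6 * eps / (m - 3)
    return [theta1 - (l - 1) * d1 + (l - 2) * (l - 1) / 2 * delta_d
            for l in range(1, m)]


alpha, eps = 0.8, 1e-6
theta = weights_from_orness(m=6, alpha=alpha, eps=eps)

# Round trip: the generated weights sum to one and reproduce alpha.
assert abs(sum(theta) - 1.0) < 1e-9
assert abs(orness(theta) - alpha) < 1e-9
print(theta, orness(theta))
```

For \(M = 6\), the value \(\alpha = 0.8\) lies inside the range that Theorem 4 states, which is approximately (0.75, 0.875) when \(\varepsilon \) is negligible, so the resulting weights are positive and strictly decreasing.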

1.5 Proof of Theorem 4

Theorem 4

On the assumption that \(\theta _{l}\) (\(l = 1, {\ldots }, M-1)\) represents the weight of \(\Delta V(e_i (b_l ))\) (\(i = 1, {\ldots }, L, l = 1, {\ldots }, M-1)\) for calculating \(Q(e_{i})\) in Eq. (19), the determination of \(\theta _{l}\) (\(l = 2, {\ldots }, M-1)\) in Theorem 3 based on Assumption 1 requires that \(\frac{8M-12-(M-2)(M-1)M\varepsilon }{12M-24} <\) \(\alpha \) \(< \frac{6M-8-3(M-2)(M-1)M\varepsilon }{8M-16}\) where \(\alpha \) is the orness degree for \(Q(e_{i})\) and \(\varepsilon \) is a small positive number close to zero.

Proof