Abstract

This paper presents the multiple instance classification problem that can be used for drug and molecular activity prediction, text categorization, image annotation, and object recognition. In order to model a more robust representation of outliers, hard margin loss formulations that minimize the number of misclassified instances are proposed. Although the problem is \(\mathcal{NP}\)-hard, computational studies show that medium sized problems can be solved to optimality in reasonable time using integer programming and constraint programming formulations. A three-phase heuristic algorithm is proposed for larger problems. Furthermore, different loss functions such as hinge loss, ramp loss, and hard margin loss are empirically compared in the context of multiple instance classification. The proposed heuristic and robust support vector machines with hard margin loss demonstrate superior generalization performance compared to other approaches for multiple instance learning.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

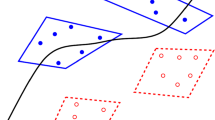

Multiple Instance Learning (MIL) is a supervised machine learning problem, where class labels are defined on the sets, referred to as bags, instead of individual data instances. Each instance in a negative bag is negative, whereas positive bags may contain false positives. This notion of bags makes multiple instance learning particularly useful for numerous interesting applications. For instance, in drug activity prediction, unless there is at least one effective ingredient (actual positive instance), a drug (bag) is ineffective (negative labeled). Similarly, in molecular activity prediction, in order to observe a particular activity (positive labeled) for a molecule (bag), there has to be at least one conformation (instance) that exhibits the desired behavior (actual positive). Text categorization deals with matching a document (bag) with a topic of interest (positive label) based on a set of keywords that have been frequently used in the same concept (actual positive instances). In image annotation, pictures with an object of interest (positive labeled bags) are not expected to include that object in all segments, but only in subsets (actual positive instances).

A number of different approaches have been proposed to perform classification for MIL data. Employed methods include diverse density, decision trees, nearest neighbor algorithm, and support vector machines. In this paper, we propose a robust approach for MIL based on hard margin Support Vector Machine (SVM) formulations. Cross validation results show that our approach provides more accurate predictions than a traditional SVM approach to MIL. In general, the term robustness implies a non-drastic change in performance under different settings such as noisy environment or worst case scenario depending on the context. In the context of classification, we use robustness to indicate minimal influence of outliers on the classifier, thus better generalization performance.

The remainder of this paper is organized as follows: In Sect. 2, we provide basics of SVM with different loss functions, MIL, and a brief literature survey. Section 3 defines the problem and presents exact integer programing and constraint programming formulations. In Sect. 4, we propose a three-phase heuristic to be used for larger problems for both linear and nonlinear classification. Section 5 presents the optimality performance of our heuristic and cross validation results for the proposed approach on linear and nonlinear classification of publicly available data sets. In order to show the hard margin loss is of the essence for robustness, we also demonstrate cross validation results for linear classification using hinge loss, ramp loss, and hard margin loss on randomly generated data sets. We provide brief concluding remarks and directions for future research in Sect. 6.

2 Background

2.1 Support vector machines

SVMs are supervised machine learning methods that are originally used to classify pattern vectors which belong to two linearly separable sets from two different classes (Vapnik 1995). The classification is achieved by a hyperplane that maximizes the distance between the convex hulls of both classes. Although extensions are proposed for regression and multi-class classification, SVMs are particularly useful for binary (2-class) classification due to strong fundamentals from the statistical learning theory, implementation advantages (e.g., sparsity), and generalization performance. When misclassified instances are penalized in the linear form, SVM classifiers are proven to be universally consistent (Steinwart 2002). A classifier is consistent if the probability of misclassification (in expectation) converges to a Bayes’ optimal rule when the number of data instances increase. A classifier is universally consistent if it is consistent for all distributions of data. SVMs can also perform nonlinear classification utilizing separating curves by implicitly embedding original data in a nonlinear space using kernel functions. SVMs have a wide range of applications including pattern recognition (Byun and Lee 2002), text categorization (Joachims 1998), biomedicine (Brown et al. 2000; Lal et al. 2004; Noble 2004), brain-computer interface (Molina et al. 2003; Lal et al. 2004), and financial applications (Trafalis and Ince 2000; Huang et al. 2004).

In a typical binary classification problem, class S + and S − are composed of pattern vectors x i ∈ℝd, i=1,…,n. If x i ∈S +, it is given the label y i =1; if x i ∈S −, then it is given the label y i =−1. The ultimate goal is to determine which class a new pattern vector x i ∉{S +∪S −} belongs to. SVM classifiers solve this problem by finding a hyperplane (w,b) that separates instances in classes S + and S − with the maximum interclass margin. The original hinge loss 2-class SVM problem is as follows:

In this formulation, w is the normal vector and b is the offset parameter for the separating hyperplane. ξ i are slack variables for misclassified pattern vectors. The goal is to maximize the interclass marginFootnote 1 and minimize misclassification. The role of scalar C in the objective function is to control the trade-off between margin violation and regularization. It should be noted that parameter C might differ for positive and negative class (e.g., C 1 and C 2) to cover unbalanced classification problems.

Lagrangian dual formulation for (1a)–(1c) leads to an optimization problem where input vectors only appear in the form of dot products and a suitable kernel function can be introduced for nonlinear classification (Cristianini and Shawe-Taylor 2000). This dual problem is a concave maximization problem, which can be solved efficiently. The dual for hinge loss formulation in (1a)–(1c) is given as

Using a hinge loss function for ξ i as in (1a) or a quadratic loss function results in an increased sensitivity to outliers due to penalization of continuous measure of misclassification (Brooks 2011; Trafalis and Gilbert 2006; Xu et al. 2006). Different loss functions are proposed in the literature to model a better representation of the outliers that leads to more robust classifiers. These functions ensure that the distance from the hyperplane has a limited (if any) effect on the quality of the solution for misclassified instances. For instance, hard margin loss considers the number of misclassifications instead of their distances to the hyperplane (Brooks 2011). Minimizing the number of misclassified points is proven to be \(\mathcal{NP}\)-hard (Chen and Mangasarian 1996). Orsenigo and Vercellis (2003) use a similar approach called discrete SVM (DSVM), and propose a heuristic algorithm to generate local optimum decision trees. Recently, Brooks (2011) formulate the hard margin loss formulation using a set of binary variables v i , which are equal to one if the instance is misclassified.

Constraints (3b) can be linearized using a sufficiently large constant M as follows:

In SVM classifiers, functional distance (i.e., 〈w,x i 〉+b) is expected to be equal to 1 (−1) for correctly classified positive (negative) labeled instances that provide support. Therefore, a positive and a negative labeled instance can be on the desired sides of the hyperplane yet incur misclassification penalties when functional distances are in (0,1) and (−1,0), respectively. In order to smooth out this effect, an approach is to penalize misclassified instances with a functional distance in (−1,1) based on their distance and incur a fixed penalty for those out of (−1,1) range (Brooks 2011; Mason et al. 2000). This approach is called ramp loss or robust hinge loss, which can be formulated as

where the conditional constraint (5b) can be linearized using M as follows:

Shen et al. (2003) use optimization with ramp loss but the solution method does not guarantee global optimality. Xu et al. (2006) solve the non-convex optimization problem using semi-definite programming techniques but state that the procedure works inefficiently. Wang et al. (2008) propose a concave-convex procedure (CCCP) to transform the associated non-convex optimization problem into a convex problem and use Newton optimization technique in the primal space.

Next, we focus on MIL and present methods that are employed highlighting a set of SVM studies.

2.2 Multiple instance learning

The MIL setting is introduced by Dietterich et al. (1997) for the task of drug activity prediction and design. Same setting has also been studied for applications such as identification of proteins (Tao et al. 2004), content based image retrieval (Zhang et al. 2002), object detection (Viola et al. 2006), prediction of failures in hard drives (Murray et al. 2006) and text categorization (Andrews et al. 2003). In contrast to a typical classification setting where instance labels are known with certainty, MIL deals with uncertainty in labels. In multiple instance binary classification, a positive bag label shows that there is at least one actual positive instance in the bag which is a witness for the label. On the other hand, all instances in a negative bag must belong to the negative class so there is no uncertainty on negative labeled bags.

Several methods have been applied to solve MIL problems, from expectation maximization methods with diverse density (EM-DD) (Chen and Wang 2004; Zhang and Goldman 2002), to deterministic annealing (Gehler and Chapelle 2007), to extensions of k−NN, citation k−NN, and diverse density methods (Dooly et al. 2003), to kernel based SVM methods (Andrews et al. 2003).

SVM methods have first been employed by Andrews et al. (2003) for MIL. In this study, integer variables are used to indicate witness status of points in positive bags. Witness point has to be placed on the positive side of the decision boundary, otherwise a penalty is incurred. Selecting each of these representations leads to a heuristic for solving the resulting mixed-integer program approximately. In contrast, Mangasarian and Wild (2008) introduce continuous variables to represent the convex combination of each positive bag, which must be placed on the positive side of the separating plane. This representation leads to an optimization problem that contains both linear and bilinear constraints, which is solved to a local optimum solution through a linear programming algorithm. An integer programming formulation that penalizes negative labeled instances without a bag notion is proposed in Kundakcioglu et al. (2010). The setting leads to a maximum margin hyperplane between a selection of instances from positive bags and all instances from negative bags. This problem is proven to be \(\mathcal{NP}\)-hard and a branch and bound algorithm is proposed.

Next, we introduce our robust classification approach through different hard margin loss formulations for MIL.

3 Mathematical modeling

Despite the large number of approaches for MIL, to the best of our knowledge, our study is the first one that utilizes a robust SVM classifier for MIL. Instead of a continuous measure for misclassification, we use a hard margin loss formulation and minimize the number of misclassified instances to overcome the aforementioned outlier sensitivity issue.

The data consists of pattern vectors (instances) x i ∈ℝd, i=1,…,n and bags j=1,…,m. Each data instance belongs to one bag. Bags are labeled positive or negative and sets of positive and negative bags are represented as J +={j:y j =1} and J −={j:y j =−1}, respectively. Note that, labels y j are associated with bags, rather than instances. Next, we introduce instances in positive and negative bags as I +={i:i∈I j ∧j∈J +}, I −={i:i∈I j ∧j∈J −}, respectively. The goal in our robust SVM model is to maximize the interclass margin where a fixed penalty (independent from the distance) is incurred for a bag if

-

the bag is positive labeled and all instances in the bag are misclassified (on the negative side),

-

the bag is negative labeled and at least one instance in the bag is misclassified (on the positive side).

Here we present three integer programming and two constraint programming formulations for the described model.

3.1 Integer programming formulations

In order to use hard margin loss for multiple instance data, we define a set of variables η i to indicate actual positive instances from each positive bag. η i is one when we select positive instance i (as witness) and zero otherwise. We consider one selected instance from each positive bag as the witness of all instances in that bag. In order to incorporate the effect of misclassifying a bag in the objective function, we introduce two sets of variables \(v^{+}_{j},v^{-}_{j}\) that indicate misclassification of positive and negative bags, respectively. A positive bag is misclassified (\(v^{+}_{j}=1\)) if all the instances in that positive bag is misclassified (v i =1 ∀i∈I j ,j∈J +). A negative bag is misclassified (\(v^{-}_{j}=1\)) if at least one instance in that bag is misclassified (∃i∈I j , j∈J −|v i =1). Therefore, the multiple instance hard margin SVM (MIHMSVM) can be formulated as follows:

In this formulation, (7c) is always satisfied for all positive instances that are not witnesses (i.e., η i =0), which sets v i =0 due to nature of the objective function. Therefore, only the witness of a positive bag with η i =1 might deteriorate the objective function. This ensures that a positive bag does not incur any penalty if at least one instance is correctly classified. On the other hand, v i values for negative instances are calculated as in a typical classification problem. However, (7e) ensures that the maximum of these values are penalized in the objective function and a negative bag does not incur a penalty if all instances are correctly classified. It should be noted that MIHMSVM is \(\mathcal{NP}\)-hard since a special case with a single instance in each bag is proven to be \(\mathcal{NP}\)-hard (Mangasarian and Wild 2008).

This formulation utilizes 2|I +|+|I −| binary variables and |J −| continuous variables. Instead of using constraints (7e), we can use the binaries inside separation constraints directly. This will not only reduce the number of binary variables, but eliminate the need for continuous variables as well. We obtain a simpler formulation with 2|I +|+|J −| binary variables as follows:

Next formulation, influenced by Mangasarian and Wild (2008), considers the fact that it is enough to select the instances with minimum misclassification from positive bags. Therefore, we utilize variables \(v^{+}_{j}\), for positive bags that shows the minimum misclassification associated with that bag. By penalizing this variable in the objective function, we obtain

In order to linearize (9d), we introduce new variables \(\hat{z}_{i}\) that should be equal to η i v i . We relax the integrality of η i and \(v^{+}_{i}\) and come up with the following formulation with |I +|+|J −| binary variables:

It should be noted that constraints (10f) and (10g) are redundant since the summation of \(\hat{z}_{i}\) is to be minimized.

Next, we obtain a novel formulation using the number of instances in positive bags to identify positive bag witnesses. Our experience with the following formulation is that it is far superior compared to IP1 and IP2. We use the fact that, a positive bag is misclassified if all instances in that bag are misclassified, i.e., \(\sum_{i\in I_{j}}v_{i}=|I_{j}|\). We also relax the integrality of \(v^{+}_{i}\) and obtain a formulation with |I +|+|J −| binary variables:

Suppose j′ is a positive bag with |I j′| instances. When all of the instances in the bag are misclassified (i.e., v i =1,∀i∈I j′) then \(\sum_{i\in I_{j'}} v_{i} = |I_{j'}|\) and \(v^{+}_{i}=1\) is forced. Otherwise, \(\sum_{i\in I_{j'}} v_{i} \leq|I_{j'}|+1\) and \(v^{+}_{i}\) will be free and set to 0 due to the objective function.

Next, we present two constraint programming formulations for benchmarking purposes. In contrast to integer programming approaches, constraint programming prioritize exploiting special functions and finding a feasible solution during the computational procedure.

3.2 Constraint programming formulations

In order to evaluate the performance of IP formulations and take advantage of the special structure of the problem, we introduce two constraint programming formulations. IBM ILOG CPLEX CP Optimizer (2011) is employed that utilize robust constraint propagation and search algorithms.

Our first constraint programming formulation is as follows:

In CP1, (12b) is defined for all negative labeled instances and ensures that each negative labeled instance is correctly classified OR its corresponding bag is misclassified (i.e., \(v^{-}_{j}=\nobreak1\)). On the other hand, (12c) is defined for all positive bags and forces either one of the instances in the bag to be correctly classified OR the bag is misclassified (i.e., \(v^{+}_{j}=\nobreak 1\)).

Next, we propose a hybrid approach using constraint programming with the constraint set from a fast IP implementation, IP3. The formulation is as follows:

In CP2, constraints on bag misclassification are partially adapted from CP1 and IP3. Next, we present the nonlinear hard margin loss formulation for MIL.

3.3 Nonlinear classification

By making the substitution \(\mathbf{w}=\sum_{i=1}^{n} y_{i} x_{i} \alpha _{i}\) with nonnegative α i variables for i=1,…,n in (7a)–(7h), we obtain the following nonlinear classification formulation for multiple instance hard margin SVM:

The use of (14a)–(14j) is that the original data can be embedded in a nonlinear space by replacing the dot products with a suitable kernel function K in (14a), (14b), and (14c). It should be noted that (14e) ensures instances that are not selected do not play a role on the hyperplane. Therefore, for a given set of η values, the formulation reduces to the hard margin loss formulation in Brooks (2011).

Note that, both linear and nonlinear formulations presented in this section can utilize different penalty terms to solve unbalanced classification problems. Next, we present formulations for different loss functions for the multiple instance classification problem.

3.4 Multiple instance classification with hinge and ramp loss

In this section, we develop formulations for multiple instance hinge loss support vector machines and multiple instance ramp loss support vector machines for benchmarking purposes.

In order to incorporate bags in the objective function of hinge loss SVM, i.e., formulation (1a)–(1c), two sets of new variables \(\xi^{+}_{j}\), \(\xi^{-}_{j}\) are introduced that incorporate the positive and negative bag misclassification, respectively. \(\xi^{+}_{j}\) should be equal to minimum ξ i in each positive bag to select the actual positive of that bag. For negative bags, \(\xi^{-}_{j}\) should be greater than or equal to each instance’s ξ i in that bag. Therefore, the problem can be formulated as

which can be linearized as

Next, we formulate ramp loss for MIL. Similar to the previous formulations, variables \(\xi^{+}_{j} \), \(\xi^{-}_{j}\), \(v^{+}_{j}\), \(v^{-}_{j}\) are defined to incorporate the misclassification of positive and negative bags with the ramp loss definition discussed in Sect. 2. The resulting formulation for ramp loss SVM for MIL data is

which can be linearized using two sets of variables,

as follows:

Next section presents a heuristic algorithm for larger problems to be solved using hard margin loss formulation, which is \(\mathcal {NP}\)-hard and exact methods may be computationally intractable.

4 Three-phase heuristic algorithm

In this section, we develop a three-phase heuristic for the proposed MIHMSVM model. First, we explore the details of the algorithm for linear classification and present the pseudocode. Next, we highlight the modifications needed to perform nonlinear classification.

4.1 Linear classification

The idea of our algorithm is to start with a feasible hyperplane and fine tune the orientation considering MIL restrictions. Instead of starting with a random hyperplane, we take advantage of the efficiency of SVM on a typical classification problem. Therefore, the first phase of the algorithm consists of applying hinge loss SVM classifier on all instances considering their labels regardless of their bags. We use LIBSVM (Chang and Lin 2011) since a fast classification of the data set is needed. The optimal separating hyperplane in this step (w 1,b 1) gives a rough idea on positioning of bags. Next, we select a representative for each bag. Bag representatives may be interpreted as witnesses for positive bags. Although MIL setting does not entail negative bag witnesses, the reason we select representatives for negative bags is to keep the number of positive and negative labeled instances balanced and avoid biased classifications for the next step. The choice of bag representatives is based on the maximum functional distance from the hyperplane, which is in line with margin maximization objective considering MIL setting. This approach provides furthest correctly classified (or least misclassified) instances in positive bags and closest correctly classified (or most misclassified) instances in negative bags as representatives. Next, we use hinge loss SVM classifier for selected instances from all bags. The optimal separating hyperplane of this step is (w 2,b 2) that supposedly gives a better representation of data. This classifier will be used to find the correctly classified negative bags (where all instances are on negative side) and positive bags (where at least one instance is on positive side) as an initial solution at the end of the first phase.

In the second phase, a hard separation problem is solved. The instance with maximum functional distance from (w 2,b 2) in each correctly classified positive bag constitute the positive labeled training set. On the other hand, all instances in correctly classified negative bags are included in the negative labeled training set. Note that, a hard separation problem (i.e., formulation (3a)–(3c) where v i =0, ∀i) is polynomially solvable, and the resulting solution from phase one assures there will be no misclassification at this step. Since there are no misclassification terms for instances, an imbalance (possibly large number of negative labeled instances) does not imply a biased classifier. Let (w 3,b 3) be the optimal separating hyperplane at the end of this step. Next, we search for fast inclusion of misclassified bags while maintaining feasibility of the hard separation problem by fixing (w 3,b 3). Finally, we compute current objective function value of MIHMSVM using ∥w 3∥2 and number of misclassified bags. This hyperplane also becomes the current best solution.

In the third (improvement) phase, we employ a more rigorous inclusion process. Misclassified bags are sorted in ascending order of their distance from their corresponding support hyperplane and considered as candidates to be correctly classified one by one. Distance between a positive bag and the support hyperplane is defined as the distance between closest instance and the positive support hyperplane (i.e., 〈w,x i 〉+b=1). On the other hand, distance between a negative bag and the support hyperplane is defined as the distance between furthest instance and the negative support hyperplane (i.e., 〈w,x i 〉+b=−1). This approach is in line with our model assumptions in Sect. 3. If a positive bag is considered, instance with the smallest distance will be temporarily added to the training set. If a negative bag is selected, all instances in the bag will be temporarily added to the training set. Next, training set is examined for feasibility and if the problem is feasible, hyperplane (w 4,b 4) is obtained. If hard margin loss objective function is less than the current best objective, candidate bag will be added to the solution and best hyperplane is updated. The objective functions are compared based on the fact that by adding a bag, we decrease the misclassification by one in trade of a change in the norm of the hyperplane. Thus, in an iteration, if (∥w 4∥2−∥w best ∥2)/2 is less than C, then we conclude the overall objective is reduced. The search will continue until no improvement is possible and the final best solution is the heuristic solution for the problem. The pseudocode is presented in Algorithm 1.

4.2 Nonlinear classification

Nonlinear extension of Algorithm 1 utilizes a number of modifications. In the first phase, regular hinge loss SVM is substituted with nonlinear SVM with a kernel function to obtain (α 1,b 1). Next, in the construction of P and N, 〈w 1,x i 〉 are substituted with \(\sum_{j=1}^{n} y_{j} \mathbf{K}(\mathbf{x}_{j}, \mathbf{x}_{i}) {\alpha_{1}}_{j}\) to calculate the distances. At the last step of the first phase, nonlinear SVM with kernel is employed again to obtain (α 2,b 2). Likewise, in the second phase, 〈w 2,x i 〉 are substituted with \(\sum_{j=1}^{n} y_{j} \mathbf{K}(\mathbf{x}_{j}, \mathbf{x}_{i}) {\alpha_{2}}_{j}\).

In order to obtain a nonlinear hard separation in Phase II, we used the following formulation based on Brooks (2011):

Optimal solution to (19a)–(19d) provides (α 3,b 3) that is used for fast inclusion. For distance calculation and in order to ensure hard separability, 〈w 3,x i 〉 are substituted with \(\sum_{j=1}^{n} y_{j} \mathbf{K}(\mathbf{x}_{j}, \mathbf{x}_{i}) {\alpha_{3}}_{j}\). At the last step of Phase II, ∥w 3∥2 is substituted with the optimal objective function value of (19a)–(19d), i.e., 1/2∑ i∈P∪N ∑ j∈P∪N y i y j K(x i ,x j )α 3 i α 3 j .

As expected, in the third phase, instead of working with w, we keep considering α vectors. Decision of candidate instance for inclusion is performed by substituting dot products 〈w best ,x i 〉 with \(\sum_{j=1}^{n} y_{j} \mathbf{K}(\mathbf{x}_{j}, \mathbf{x}_{i}) {\alpha_{\mathit{best}}}_{j}\). Hard separation with (w 4,b 4) is also substituted with (α 4,b 4) which gets the optimal solution for formulation (19a)–(19d).

Next, we report computational performance for the proposed algorithm. We also show hard margin loss is virtually more robust and better in terms of generalization performance compared to other loss functions.

5 Computational results

In this section, we first present the superior performance of hard margin loss in practice compared to ramp and hinge loss functions using randomly generated data sets. Next, we evaluate the performance of our heuristic in terms of time and proximity to the optimal solution. Finally, we show the cross validation performance of the proposed heuristic on the publicly available data sets. All computations are performed on a 2.93 GHz Intel Core 2 Duo computer with 4.0 GB RAM. The algorithms are implemented in C++ and used in conjunction with MATLAB 7.11.0 R2010b (2011) environment in which the data resides.

We use MUSK1 and MUSK2 data set from UCI Machine Learning Repository (Frank and Asuncion 2012). MUSK1 data set consists of descriptions of 92 molecules (bags) with different shapes or conformations. Among them 47 of molecules judged by human experts are labeled as musks (positive bags) and remaining 45 molecules are labeled as non-musks (negative bags). The total number of conformations (instances) are 476 that gives an average of 5.2 conformations for each molecule (bag). MUSK2 data set consists of descriptions of 102 molecules in which 39 of molecules are labeled as musks and remaining 63 molecules are labeled as non-musks. Total number of conformations is 6,598 which gives an average of 64.7 conformations for each molecule. Each conformation in data sets is represented with a vector of 166 features extracted from surface properties.

Leave one bag out cross validation:

Traditional cross validation methods (e.g., leave one out, n-fold) cannot reflect a fair assessment of multiple instance approaches due to ambiguity with actual instance labels. Therefore, we employ an extension that we refer to as leave one bag out cross validation (LOBOCV), which uses one bag from the original data set for validation (test data) and remaining instances as training data. After the separating hyperplane is obtained, label of the test bag is predicted and compared with its actual label. This routine is repeated until each bag in the sample is validated once and the percentage of correctly classified bags is reported.

5.1 Robustness of MIHMSVM

The robustness of the objectives will be discussed based on randomly generated data and the results obtained using IBM ILOG CPLEX Optimization Studio 12.2 (2011). Table 1 shows the cross validation results for three loss functions presented, namely hard margin loss (MIHMSVM) in (7a)–(7h), ramp loss (MIRLSVM) in (18a)–(18m), and hinge loss (MIHLSVM) in (16a)–(16l). In our computational studies, we consider a number of different C values. Small values result in a larger number of misclassified bags, which is not desired. On the other hand, values greater than 1 do not lead to a drastic decrease in the number of misclassifications (see Brooks 2011). Therefore, we set C=1 for our experiments in this section. This penalty parameter also provides the best generalization performance for larger data sets, as shown in Sect. 5.3. Problem instances are generated using predetermined number of bags and features and the following pattern vector distributions:

-

TB1

Normal distribution: Features for instances in negative bags are normally distributed with mean 0, standard deviation 1. The mean of features for a positive bag are normally distributed with mean 1, standard deviation 5, and instances within each positive bag are offset using a normal distribution with mean 0, standard deviation 1. There are 4 instances in each positive and negative bag.

-

TB2

Uniform distribution: Features for instances in negative bags are uniformly distributed between −1 and 2. The mean of features for a positive bag are uniformly distributed between −2 and 4, and instances within each positive bag are offset uniformly between −1 and 1. There are 4 instances in each positive and negative bag.

-

TB3

Randomly selected features and bags from MUSK1 data set.

The results shows hard margin loss is usually superior in practice compared to other loss functions. Loss functions would have minimal effect on classifiers for easy problems where a clean separation is possible. This can be observed in Table 1 when the ratio of number of instances to number of features is relatively low. In fact, for all cases created using TB1 and TB2, we observe the same accuracy for all three loss functions, which are not presented due to space considerations. This behavior changes for (i) odd distributions with outliers, (ii) when there are bags with small number of instances, and (iii) when the ratio of number of instances to number of features is higher. This directly points to MUSK1 data set with larger number of instances as can be seen in the last few rows of Table 1. In order to show this effect on relatively smaller instances, we generate the following instances by injecting outliers:

-

TB1o

Normal distribution: Features for instances in negative bags are normally distributed with mean 0, standard deviation 4. The mean of features for a positive bag are normally distributed with mean 5, standard deviation 4. There are 4 instances in each positive and negative bag. One out of five negative bags are injected one noisy instance that is normally distributed with mean ±90 and standard deviation 2.

-

TB2o

Uniform distribution: Features for instances in negative bags are uniformly distributed between −10 and 10. The mean of features for a positive bag are uniformly distributed between −5 and 15. There are 4 instances in each positive and negative bag. One out of five negative bags are injected one noisy instance that is uniformly distributed between ±(80,100).

Table 2 highlights accuracy differences for the three loss functions. Although separating hyperplanes are different, accuracies are the same in cases with 20 bags and 10 features. When the number of bags increase or the number of features decrease, accuracies tend to change, hard margin usually performing the best among the three. This is more apparent for larger and fuzzier data sets that are presented in Sect. 5.3. It should be noted ramp loss formulation takes significantly more time that hinge and hard margin loss in all test cases, thus it is omitted from further benchmark problems. The complexity of ramp loss SVM for conventional data is an open problem but we conjecture that multiple instance learning with ramp loss is \(\mathcal{NP}\)-hard.

5.2 Heuristic performance: optimal solution and time

In order to assess the capabilities of different formulations, we employ principal component analysis (PCA) on the MUSK1 data set so variability of data can be controlled by choosing a subset of features. When controlling the size of the problems, features with larger (smaller) weights in the first few principal components can be selected to create data sets with more (less) variability. This is a naive process that sheds a light on the analysis since data with less variability is typically harder to separate with a separating hyperplane. We use IBM ILOG CPLEX Optimization Studio 12.2 (2011) for all exact formulations and set the time limit to 30 minutes. As values greater than 1 do not lead to a significant decrease in the number of misclassifications but an artificial increase in the optimality gap for our heuristic, we set C=1 for our experiments in this section as well.

Tables 3, 4, and 5 show that formulations IP3 and CP2 perform the best. In fact, IP3 is superior to other formulations in a majority of test instances but CP2 is particularly successful when number of features increase, which makes separation relatively easier. Our results show that, although we consider a harder generalization of an \(\mathcal{NP}\)-hard problem in MIL context, medium sized problems can be solved in reasonable time using effective formulations.

Our heuristic also performs well compared to the optimal solution in terms of objective function value. It can be observed that the largest difference in objective function value between the heuristic and optimal solution in harder data sets is close to 9, when the total number of instances are 320 and the number of features was 10, which is a difficult separation problem. Although the optimality gap seems to be large, it should be noted that 8 or less additional bags are misclassified (among more than 60 bags) compared to the optimal solution with significant time savings. Furthermore, we expect proximity of heuristic hyperplane to the optimal hyperplane, thus a subtle difference in cross validation results.

5.3 Robust classification performance for larger data sets: cross validation results

5.3.1 Linear classification

In this section, we present leave one bag out cross validation results for linear classification using the three-phase heuristic. All instances and features of MUSK1 data are used in computing these results. We also use a set of C values to observe the effect on the performance of our algorithm. As Table 6 shows, highest cross validation accuracy of 79.35 % is achieved for C=1.

Table 6 also shows the performance of our algorithm against hinge loss formulation (i.e., MIHLSVM) that is solved using CPLEX. Accuracy of our heuristic algorithm for MIHMSVM is consistently higher than MIHLSVM. It should be noted that the time reported in the table is for validation of 92 bags. For a given C value, it usually takes more than 20 days to perform cross validation using hinge loss formulation on CPLEX, whereas our heuristic takes less than 6 minutes.

5.3.2 Nonlinear classification

In order to assess the performance of our heuristic for nonlinear classification, MUSK2 data is considered with a Gaussian radial basis function. Formally, the Gaussian kernel is represented as

Different C and σ values are compared and the results are presented in Table 7. The default selection in Chang and Lin (2011) is also considered that sets 2σ 2 equal to the number of features. The best accuracy achieved is 84.31 % for C=1 and σ=5. It should be noted that C=0.1 is not presented in Table 7 because the regularization term outweighs the misclassification term in the objective function and the same cross validation accuracy of 38.24 % is obtained for all values of σ. Our results show that the accuracy tends to decrease when σ increases as this converges to a linear separation. The total time spent for cross validation of 102 bags for our heuristic rarely exceeds an hour for nonextreme values of parameters. It is also noteworthy to mention that the time spent usually reduces with increased C since the misclassification penalty outweighs the quadratic regularization term in the objective function, providing a relatively more tractable problem.

6 Concluding remarks

In this paper, we propose a robust support vector machine classifier for multiple instance learning. We show that hard margin loss classifiers provide remarkably better generalization performance for multiple instance data in practice, which is in line with theory. We develop three integer programs and two constraint programs and compare their time performance in achieving optimal solutions. Furthermore, we develop a heuristic that can handle even large problem instances within seconds. Our heuristic provides higher cross validation accuracy for MIL data compared to conventional hinge loss based SVMs in significantly less time.

In this study, we observe that ramp loss classifiers are slow in practice. Alternative formulations can be developed and problem complexity can be studied for ramp loss SVM for conventional and multiple instance data. Another important future study is a comparison of approaches highlighted in Sect. 2.2 using a fair cross validation scheme (e.g., leave one bag out), instead of random validation schemes that generate varying results in different runs.

References

Andrews, S., Tsochantaridis, I., & Hofmann, T. (2003). Support vector machines for multiple-instance learning. In Advances in neural information processing systems (pp. 577–584).

Brooks, J. P. (2011). Support vector machines with the ramp loss and the hard margin loss. Operations Research, 59(2), 467–479.

Brown, M. P. S., Grundy, W. N., Lin, D., Cristianini, N., Sugnet, C. W., Furey, T. S., Ares, M., & Haussler, D. (2000). Knowledge-based analysis of microarray gene expression data by using support vector machines. Proceedings of the National Academy of Sciences of the United States of America, 97, 262–267.

Byun, H., & Lee, S. W. (2002). Applications of support vector machines for pattern recognition: a survey. In Pattern recognition with support vector machines (pp. 571–591).

Chang, C.-C., & Lin, C.-J. (2011). LIBSVM: A library for support vector machines. ACM Transactions on Intelligent Systems and Technology, 2, 1–27.

Chen, C., & Mangasarian, O. L. (1996). Hybrid misclassification minimization. Advances in Computational Mathematics, 5, 127–136.

Chen, Y., & Wang, J. Z. (2004). Image categorization by learning and reasoning with regions. Journal of Machine Learning Research, 5, 913–939.

Cristianini, N., & Shawe-Taylor, J. (2000). An introduction to support vector machines and other kernel-based learning methods. Cambridge: Cambridge University Press.

Dietterich, T. G., Lathrop, R. H., & Lozano-Pérez, T. (1997). Solving the multiple instance problem with axis-parallel rectangles. Artificial Intelligence, 89(1–2), 31–71.

Dooly, D. R., Zhang, Q., Goldman, S. A., & Amar, R. A. (2003). Multiple instance learning of real valued data. Journal of Machine Learning Research, 3, 651–678.

Frank, A., & Asuncion, A. (2012). UCI machine learning repository. University of California, Irvine, School of Information and Computer Sciences. URL:http://archive.ics.uci.edu/ml.

Gehler, P. V., & Chapelle, O. (2007). Deterministic annealing for multiple-instance learning. In International conference on artificial intelligence and statistics.

Huang, Z., Chen, H., Hsu, C. J., Chen, W. H., & Wu, S. (2004). Credit rating analysis with support vector machines and neural networks: a market comparative study. Decision Support Systems, 37(4), 543–558.

IBM ILOG CPLEX Optimization Studio 12.2 (2011). URL:www.ibm.com/software/integration/optimization/cplex-optimizer.

Joachims, T. (1998). Text categorization with support vector machines: learning with many relevant features. In Machine learning: ECML-98 (pp. 137–142).

Kundakcioglu, O. E., Seref, O., & Pardalos, P. M. (2010). Multiple instance learning via margin maximization. Applied Numerical Mathematics, 60(4), 358–369.

Lal, T. N., Schroder, M., Hinterberger, T., Weston, J., Bogdan, M., Birbaumer, N., & Scholkopf, B. (2004). Support vector channel selection in BCI. IEEE Transactions on Biomedical Engineering, 51(6), 1003–1010.

Mangasarian, O. L., & Wild, E. W. (2008). Multiple instance classification via successive linear programming. Journal of Optimization Theory and Applications, 137(3), 555–568.

Mason, L., Bartlett, P., & Baxter, J. (2000). Improved generalization through explicit optimization of margins. Machine Learning, 38(3), 243–255.

MATLAB—The Language of Technical Computing (2011). URL:www.mathworks.com/products/matlab.

Molina, G. N. G., Ebrahimi, T., & Vesin, J. M. (2003). Joint time-frequency-space classification of EEG in a brain-computer interface application. EURASIP Journal on Applied Signal Processing, 2003, 713–729.

Murray, J. F., Hughes, G. F., & Kreutz-Delgado, K. (2006). Machine learning methods for predicting failures in hard drives: a multiple-instance application. Journal of Machine Learning Research, 6(1), 783–816.

Noble, W. S. (2004). Support vector machine applications in computational biology. In Kernel methods in computational biology (pp. 71–92). Cambridge: MIT Press.

Orsenigo, C., & Vercellis, C. (2003). Multivariate classification trees based on minimum features discrete support vector machines. IMA Journal of Management Mathematics, 14, 221–234.

Shen, X., Tseng, G. C., Zhang, X., & Wong, W. H. (2003). On ψ-learning. Journal of the American Statistical Association, 98(463), 724–734.

Steinwart, I. (2002). Support vector machines are universally consistent. Journal of Complexity, 18, 768–791.

Tao, Q., Scott, S., Vinodchandran, N. V., & Osugi, T. T. (2004). SVM-based generalized multiple-instance learning via approximate box counting. In Proceedings of the twenty-first international conference on machine learning (p. 101). New York: ACM.

Trafalis, T. B., & Gilbert, R. C. (2006). Robust classification and regression using support vector machines. European Journal of Operational Research, 173(3), 893–909.

Trafalis, T. B., & Ince, H. (2000). Support vector machine for regression and applications to financial forecasting. In Proceedings of the IEEE-INNS-ENNS international joint conference on neural networks (Vol. 6, pp. 348–353). Los Alamos: IEEE Comput. Soc.

Vapnik, V. N. (1995). The nature of statistical learning theory. New York: Springer.

Viola, P., Platt, J., & Zhang, C. (2006). Multiple instance boosting for object detection. Advances in Neural Information Processing Systems, 18.

Wang, L., Ji, H., & Li, J. (2008). Training robust support vector machine with smooth ramp loss in the primal space. Journal of the American Statistical Association, 71, 3020–3025.

Xu, L., Crammer, K., & Schurmans, D. (2006). Robust support vector machine training via convex outlier ablation. In Proceedings of the 21st national conference on artificial intelligence.

Zhang, Q., & Goldman, S. A. (2002). EM-DD: an improved multiple-instance learning technique. Advances in neural information processing systems (Vol. 2, pp. 1073–1080).

Zhang, Q., Goldman, S. A., Yu, W., & Fritts, J. E. (2002). Content-based image retrieval using multiple-instance learning. In Proceedings of the 19th international conference on machine learning (pp. 682–689), Citeseer.

Acknowledgements

The authors would like to thank Shishir Shah and two anonymous referees for their valuable comments and suggestions that improved the paper in several aspects. This work is supported by University of Houston New Faculty Research Grant.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Poursaeidi, M.H., Kundakcioglu, O.E. Robust support vector machines for multiple instance learning. Ann Oper Res 216, 205–227 (2014). https://doi.org/10.1007/s10479-012-1241-z

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10479-012-1241-z