Abstract

The best linear unbiased predictor (BLUP) is called a kriging predictor and has been widely used to interpolate a spatially correlated random process in scientific areas such as geostatistics. However, if an underlying random field is not Gaussian, the optimality of the BLUP in the mean squared error (MSE) sense is unclear because it is not always identical to the conditional expectation. Moreover, data sets in spatial problems are often so large that a kriging predictor is impractically time-consuming. To reduce the computational complexity, covariance tapering has been developed for large spatial data sets. In this paper, we consider covariance tapering in a class of transformed Gaussian models for random fields and show that the BLUP using covariance tapering, the BLUP and the optimal predictor are asymptotically equivalent in the MSE sense if the underlying Gaussian random field has the Matérn covariance function.


1 Introduction

Interpolation of a spatially correlated random process is widely used in mining, hydrology, forestry and other fields. This method is often called kriging in the geostatistical literature. It requires the solution of a linear system whose coefficient matrix is the covariance matrix of the observations at different spatial points, so its dimension equals the number of observations. The operation count for solving it directly is of order \(n^3\) for sample size \(n\). Hence, as the sample size grows, the computation becomes formidable in practice. To deal with this problem, Furrer et al. (2006) proposed covariance tapering. The basic idea of covariance tapering is to reduce a spatial covariance function to zero beyond some range by multiplying the true spatial covariance function by a positive definite but compactly supported function. The resulting covariance matrix is then so sparse that the solution is much easier and faster to obtain. Furrer et al. (2006) proved the asymptotic efficiency of the BLUP using covariance tapering, which we call the tapered BLUP, with respect to the original BLUP. Furthermore, Zhu and Wu (2010) investigated properties of covariance tapering for convolution-based nonstationary models and proved that the tapered BLUP is asymptotically efficient under specific assumptions. An alternative approach to reducing the computational time is to calculate a spatial prediction based on a small and manageable number of observations at points close to the prediction point. This approach often shows good performance. However, it is not clear how to choose the samples in a neighborhood of the prediction point, and its theoretical properties have not been derived completely. In covariance tapering, by contrast, it has been shown that the ratio of the MSE of the tapered BLUP to that of the true BLUP converges to 1 as the sample size goes to infinity regardless of the selection of the taper range (Furrer et al. 2006).

Covariance tapering is also used for the estimation of the parameters of a covariance function. The log-likelihood function of a Gaussian random field includes the determinant and the inverse of the covariance matrix of the observations at different spatial points, which are difficult to calculate for large data sets. Kaufman et al. (2008) applied covariance tapering to the log-likelihood function and showed that the estimators maximizing the tapered approximation of the log-likelihood are strongly consistent. Du et al. (2009) proved that this tapered maximum likelihood estimator is asymptotically normal in the one-dimensional case. Recently, Wang and Loh (2011) showed the asymptotic normality of the tapered maximum likelihood estimator in the multidimensional case by letting the taper range converge to 0 as the sample size goes to infinity.

The BLUP is identical to the conditional expectation if the underlying random field is Gaussian and is consequently the optimal predictor in the MSE sense. However, if the original data take nonnegative values or have a skewed distribution, a nonlinear transformation is frequently applied to obtain data that are closer to Gaussian. Typical examples are a chi-squared process and a lognormal process (Cressie 1993). For example, precipitation data are approximately regarded as a chi-squared process because the standardized square root values, known as anomalies, are closer to a Gaussian distribution (Johns et al. 2003; Furrer et al. 2006). On the other hand, variables such as topsoil concentrations of cobalt and copper take positive values and have a right-skewed sampling distribution. This kind of spatial data is often obtained in large numbers and modeled by the lognormal distribution (Moyeed and Papritz 2002; De Oliveira 2006). However, the optimality of the BLUP and the tapered BLUP for the original data is not clear because the data are non-Gaussian. In this paper, we show that the tapered BLUP and the BLUP in a class of transformed Gaussian models for random fields are asymptotically equivalent to the conditional expectation, which is the optimal predictor in the MSE sense. This is an extension of Furrer et al. (2006).

Granger and Newbold (1976) considered a class of transformed models in a time series context and calculated the mean, the covariance function and the mean squared error of predictors. Our work can also be regarded as an extension of their results to spatial processes. Moreover, since the conditional mean and the BLUP include the inverse of the covariance matrix, covariance tapering is useful for reducing the computational difficulty.

The subsequent sections are organized as follows. We introduce a kriging predictor and its asymptotic properties in Sect. 2. In Sect. 3, we introduce a class of transformed Gaussian models for random fields and covariance tapering, and then prove that the tapered BLUP of transformed random fields is asymptotically efficient with respect to the optimal predictor. In Sect. 4, computer experiments are conducted. A conclusion and future studies are given in Sect. 5. Supplementary materials are given in Appendix A. We outline the technical proofs in Appendices B–D.

2 Spatial prediction and its asymptotic properties

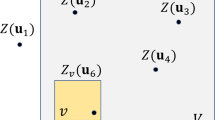

Let \(\{ Z({{\varvec{s}}}), {{\varvec{s}}} \in \mathbb R ^d \}\) be a random field with known constant mean and covariance function. Suppose we have observations from a single realization of the random field \(Z({{\varvec{s}}})\), denoted by \({{\varvec{Z}}}=(Z({{\varvec{s}}}_1),\ldots ,Z({{\varvec{s}}}_n))^{\prime }\), measured at sampling locations \({{\varvec{s}}}_1,\ldots ,{{\varvec{s}}}_n \in \mathbb R ^d\). The goal is to predict \(Z({{\varvec{s}}}_0)\) at an unobserved location \({{\varvec{s}}}_0 \in \mathbb R ^d\) based on \({{\varvec{Z}}}\). Then the best linear unbiased predictor (BLUP) and its mean squared error (MSE) are

\[
\hat{Z}^\mathrm{BLUP}({{\varvec{s}}}_0)=\mu _Z + {{\varvec{c}}}_{Z}^{\prime } \Sigma _{Z}^{-1}({{\varvec{Z}}}-\mu _Z \cdot \mathbf{1}), \tag{1}
\]

\[
E[\hat{Z}^\mathrm{BLUP}({{\varvec{s}}}_0)-Z({{\varvec{s}}}_0)]^2=\sigma _Z^2 - {{\varvec{c}}}_{Z}^{\prime } \Sigma _{Z}^{-1}{{\varvec{c}}}_{Z}, \tag{2}
\]

where \(\mu _Z=E[Z({{\varvec{s}}})],\,\sigma _Z^2=\text{ var }(Z({{\varvec{s}}}_0)),\,({{\varvec{c}}}_{Z})_i=\text{ cov }(Z ({{\varvec{s}}}_0),Z ({{\varvec{s}}}_i)),\,(\Sigma _Z)_{ij}=\text{ cov }(Z ({{\varvec{s}}}_i),\,Z ({{\varvec{s}}}_j))\,(i,j=1,\ldots ,n)\) and \(\mathbf{1}=(1,\ldots ,1)^{\prime }\). \(\hat{Z}^\mathrm{BLUP}({{\varvec{s}}}_0)\) and \(E[\hat{Z}^\mathrm{BLUP}({{\varvec{s}}}_0)-Z({{\varvec{s}}}_0)]^2\) are called the simple kriging predictor and the simple kriging variance of \(Z({{\varvec{s}}}_0)\), respectively (see, e.g., Cressie 1993; Stein 1999).
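As an illustration, the simple kriging predictor \(\mu _Z + {{\varvec{c}}}_{Z}^{\prime } \Sigma _{Z}^{-1}({{\varvec{Z}}}-\mu _Z \cdot \mathbf{1})\) and its variance \(\sigma _Z^2 - {{\varvec{c}}}_{Z}^{\prime } \Sigma _{Z}^{-1}{{\varvec{c}}}_{Z}\) can be sketched in a few lines of Python. This is a minimal sketch rather than the code used in Sect. 4: the exponential covariance `exp_cov`, its range parameter, the four sites and the data values are hypothetical choices, and the dense Cholesky solve is written with the standard library only, for readability rather than speed.

```python
import math


def cholesky_solve(a, b):
    """Solve a x = b for a symmetric positive definite matrix a (list of lists)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    y = [0.0] * n
    for i in range(n):  # forward substitution: low y = b
        y[i] = (b[i] - sum(low[i][k] * y[k] for k in range(i))) / low[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution: low^T x = y
        x[i] = (y[i] - sum(low[k][i] * x[k] for k in range(i + 1, n))) / low[i][i]
    return x


def simple_kriging(sites, values, s0, cov, mean):
    """Return (predictor, MSE) at s0 for a known mean and covariance function."""
    sigma = [[cov(math.dist(si, sj)) for sj in sites] for si in sites]
    c = [cov(math.dist(si, s0)) for si in sites]
    weights = cholesky_solve(sigma, c)  # Sigma^{-1} c
    pred = mean + sum(w * (z - mean) for w, z in zip(weights, values))
    mse = cov(0.0) - sum(w * ci for w, ci in zip(weights, c))
    return pred, mse


def exp_cov(h, alpha=3.0):
    # Hypothetical exponential covariance with unit variance.
    return math.exp(-alpha * h)


# Hypothetical data on the corners of the unit square, predicted at its center.
sites = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
values = [1.2, 0.7, 1.9, 1.1]
pred, mse = simple_kriging(sites, values, (0.5, 0.5), exp_cov, mean=1.0)
print(pred, mse)
```

Calling `simple_kriging` at one of the observed sites returns the observed value with zero variance, which is a quick sanity check of the two formulas.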

Hereafter, we assume that \(\{Z({{\varvec{s}}})\}\) is a stationary random field and the covariance function \(C({{\varvec{h}}})\) is defined by

\[
C({{\varvec{h}}})=\text{ cov }(Z({{\varvec{s}}}),Z({{\varvec{s}}}+{{\varvec{h}}})).
\]

In what follows, we write \({{\varvec{h}}}_i= {{\varvec{s}}}_i-{{\varvec{s}}}_0, {{\varvec{h}}}_{ij}= {{\varvec{s}}}_i-{{\varvec{s}}}_j \; (i,j=1,\ldots ,n)\).

Now we will review some asymptotic properties of the kriging predictor using an incorrect covariance function, which are used in the proof of our main theorem. Hereafter, we assume that sampling locations \({{\varvec{s}}}_1,\ldots ,{{\varvec{s}}}_n\) are in \(D \subset \mathbb R ^d\) where \(D\) is a bounded subset and a prediction point \({{\varvec{s}}}_0 \in D\) is an accumulation point of \(\{{{\varvec{s}}}_i,i=1,2,\ldots \}\), that is, \({{\varvec{s}}}_0\in \overline{\{{{\varvec{s}}}_i,i=1,2,\ldots \}}\) where \(\overline{\{{{\varvec{s}}}_i,i=1,2,\ldots \}}\) is the closure of \(\{{{\varvec{s}}}_i,i=1,2,\ldots \}\). This assumption is called infill asymptotics and is often used as an asymptotic framework in spatial statistics (Cressie 1993).

\(C_0({{\varvec{h}}})\) and \(C_1({{\varvec{h}}})\) denote the covariance functions of the true and the assumed models, respectively. Then from (1), the BLUP under \(C_i({{\varvec{h}}})\; (i=0,1)\) is \(\hat{Z}^\mathrm{BLUP}({{\varvec{s}}}_0,C_i)=\mu _Z + {{\varvec{c}}}_{i}^{\prime } \Sigma _{i}^{-1}({{\varvec{Z}}}-\mu _Z \cdot {\mathbf{1}})\) where \(({{\varvec{c}}}_{i})_k=C_i({{\varvec{h}}}_k)\) and \((\Sigma _{i})_{kl}=C_i({{\varvec{h}}}_{kl})\,\; (k,l=1,\ldots ,n)\). Let \(Z^{*}({{\varvec{s}}}_0)\) be a predictor of \(Z({{\varvec{s}}}_0)\) and let \(E_{C_i}[Z^{*}({{\varvec{s}}}_0)-Z({{\varvec{s}}}_0)]^2\) be the MSE of \(Z^{*}({{\varvec{s}}}_0)\) under \(C_i\) (\(i=0,1\)). This MSE may depend not only on \(C_i\) but also on other distributional properties if \(Z^{*}({{\varvec{s}}}_0)\) is a nonlinear predictor. Then \(Z^{*}({{\varvec{s}}}_0)\) is called a consistent predictor if

Furthermore, a consistent predictor \(Z^{*}({{\varvec{s}}}_0)\) is called asymptotically efficient with respect to the BLUP \(\hat{Z}^\mathrm{BLUP}({{\varvec{s}}}_0,C_0)\) if

Next let \(\hat{Z}({{\varvec{s}}}_0)\) be the optimal predictor of \(Z({{\varvec{s}}}_0)\) which minimizes the MSE under the true model. Then if

\(Z^{*}({{\varvec{s}}}_0)\) is called asymptotically efficient with respect to the optimal predictor \(\hat{Z}({{\varvec{s}}}_0)\). If \(\{Z({{\varvec{s}}})\}\) is Gaussian, the two definitions of the asymptotic efficiency are equivalent because \(\hat{Z}({{\varvec{s}}}_0) = \hat{Z}^\mathrm{BLUP}({{\varvec{s}}}_0,C_0)\).

Stein (1993) gave the following sufficient conditions for the asymptotic efficiency of the incorrect kriging predictor with respect to the BLUP. Hereafter, \(\parallel \cdot \parallel \) means the Euclidean norm and a function is called bandlimited if it is the Fourier transform of a function with bounded support.

Theorem 1

(Stein 1993) Let \(f_i(\varvec{\lambda }), \varvec{\lambda } \in \mathbb R ^d\) be the spectral density function of \(C_i({{\varvec{h}}})\,(i=0,1)\). Suppose that

where \(\phi (\varvec{\lambda })\) is bandlimited and \(\lim _{ \Vert \varvec{\lambda } \Vert \rightarrow \infty } f_1(\varvec{\lambda })/f_0(\varvec{\lambda }) =c \; (>0)\). Then as \(n \rightarrow \infty \)

and

It is known that if

for some \(r>d\), then (3) is satisfied (see Stein 1993, p. 402). Theorem 1 states that the low frequency behavior of the spectral density function has little impact on the kriging prediction.

3 Covariance tapering in transformed random fields

This section introduces a class of transformed Gaussian models for random fields and covariance tapering and derives the asymptotic optimality of the tapered BLUP.

3.1 Transformed random fields

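Assume that \(\{ Y({{\varvec{s}}}), {{\varvec{s}}} \in D \subset \mathbb R ^d \}\) is an isotropic Gaussian random field with mean \(\mu _Y = E[Y({{\varvec{s}}})]\) and Matérn covariance function defined by

\[
C_Y(\Vert {{\varvec{h}}} \Vert )=\sigma _Y^2\,\frac{(\alpha \Vert {{\varvec{h}}} \Vert )^{\nu }}{2^{\nu -1}\Gamma (\nu )}\,K_{\nu }(\alpha \Vert {{\varvec{h}}} \Vert ), \quad \alpha >0,\ \nu >0,
\]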

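where \(K_{\nu }(\cdot )\) is the modified Bessel function of the second kind of order \(\nu \) (see, e.g., Cressie 1993; Stein 1999). The spectral density function of this covariance function is

\[
f_Y(\Vert \varvec{\lambda }\Vert )=\frac{\sigma _Y^2 A}{(\alpha ^2+\Vert \varvec{\lambda }\Vert ^2)^{\nu +d/2}},
\]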

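where \(A=(\Gamma (\nu +d/2)\alpha ^{2\nu })/(\pi ^{d/2}\Gamma (\nu ))\). For example, if \(\nu =0.5\), the Matérn covariance function is

\[
C_Y(\Vert {{\varvec{h}}} \Vert )=\sigma _Y^2 \exp (-\alpha \Vert {{\varvec{h}}} \Vert ).
\]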

It is called the exponential covariance function and widely used in many applications. For simplicity, we set \(\mu _Y = 0\) and \(\sigma _Y^2 = 1\).
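The following Python sketch evaluates the Matérn model for a few smoothness values. It assumes the standard parametrization \(C_Y(h)=\sigma _Y^2(\alpha h)^{\nu }K_{\nu }(\alpha h)/(2^{\nu -1}\Gamma (\nu ))\), which is consistent with the constant \(A\) above, and uses the well-known closed forms for \(\nu =0.5, 1.5, 2.5\) so that no Bessel function routine is needed. The function names are hypothetical, and other values of \(\nu \) (such as \(\nu =1\)) are deliberately not handled.

```python
import math


def matern_half_integer(h, alpha, nu, sigma2=1.0):
    """Matern covariance for nu in {0.5, 1.5, 2.5} via closed forms."""
    x = alpha * h
    if nu == 0.5:
        poly = 1.0                      # exponential covariance
    elif nu == 1.5:
        poly = 1.0 + x
    elif nu == 2.5:
        poly = 1.0 + x + x * x / 3.0
    else:
        raise ValueError("only nu = 0.5, 1.5, 2.5 are handled in this sketch")
    return sigma2 * poly * math.exp(-x)


def matern_constant(alpha, nu, d):
    """A = Gamma(nu + d/2) alpha^(2 nu) / (pi^(d/2) Gamma(nu))."""
    return math.gamma(nu + d / 2) * alpha ** (2 * nu) / (math.pi ** (d / 2) * math.gamma(nu))


def matern_spectral_density(lam, alpha, nu, d, sigma2=1.0):
    """f_Y at frequency norm lam: sigma2 * A / (alpha^2 + lam^2)^(nu + d/2)."""
    return sigma2 * matern_constant(alpha, nu, d) / (alpha ** 2 + lam ** 2) ** (nu + d / 2)


# The high-frequency tail decays like A * lam^-(2 nu + d).
alpha, nu, d = 2.0, 1.5, 2
lam = 1.0e3
tail_ratio = matern_spectral_density(lam, alpha, nu, d) * lam ** (2 * nu + d) / matern_constant(alpha, nu, d)
print(tail_ratio)
```

The printed ratio approaches one as the frequency grows, so \(\nu \) alone fixes the rate of decay of the spectral density at high frequencies.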

Now let \(Z({{\varvec{s}}})=T(Y({{\varvec{s}}}))\) be an observable random field with the underlying field \(\{ Y({{\varvec{s}}}) \}\) where \(T(x)\) is an unknown real function, which satisfies \(\int ^{\infty }_{-\infty }T(x)^2 \phi (x)\mathrm{d}x< \infty \) and \(\phi (x)=e^{-x^2/2}/\sqrt{2 \pi }\) is the density function of \(N(0,1)\). Then \(Z({{\varvec{s}}})\) can be expressed by an infinite sum of Hermite polynomials

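where \(H_j(x) \; (j=0,1,2,\ldots )\) is the \(j\)th order Hermite polynomial (see Appendix A for properties of Hermite polynomials). Note that \(\alpha _j\)’s depend on \(T\). By (9) and (11) of Appendix A,

\[
\mu _Z=E[Z({{\varvec{s}}})]=\alpha _0, \qquad C_Z(\Vert {{\varvec{h}}} \Vert )=\text{ cov }(Z({{\varvec{s}}}),Z({{\varvec{s}}}+{{\varvec{h}}}))=\sum _{j=1}^{\infty } \alpha _j^2\, j!\, (C_Y(\Vert {{\varvec{h}}} \Vert ))^j. \tag{5}
\]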

Next we will derive the optimal predictor in transformed random fields by a similar discussion of Granger and Newbold (1976).

Define

where \({{\varvec{Y}}}=(Y({{\varvec{s}}}_1),\ldots ,Y({{\varvec{s}}}_n))^{\prime },\,({{\varvec{c}}}_{Y})_i=C_Y(\Vert {{\varvec{h}}}_i \Vert )\) and \((\Sigma _Y)_{ij}=C_Y(\Vert {{\varvec{h}}}_{ij} \Vert )\,(i,j=1,\ldots ,n)\). Then the conditional distribution of \(W({{\varvec{s}}}_0)\) given \({{\varvec{Y}}}\) is \(N(0,1)\) and \(Y({{\varvec{s}}}_0)\) is expressed by

Then from (2.7.6) of Brockwell and Davis (1991, p. 63) and (10) and (13) of Appendix A, the optimal predictor \(\hat{Z}({{\varvec{s}}}_0)\) of \(Z({{\varvec{s}}}_0)\), that is, the conditional mean given \({{\varvec{Y}}}\), is

and from (9) of Appendix A, its mean squared error is

\(\hat{Z}({{\varvec{s}}}_0)\) is infeasible because \(T(\cdot )\) is unknown. From (1) and (2), the BLUP of \(Z({{\varvec{s}}}_0)\) is

and its mean squared error is

where \({{\varvec{Z}}}=(Z({{\varvec{s}}}_1),\ldots ,Z({{\varvec{s}}}_n))^{\prime }=(T(Y({{\varvec{s}}}_1)),\ldots ,T(Y({{\varvec{s}}}_n)))^{\prime },\,({{\varvec{c}}}_{Z})_i=C_Z(\Vert {{\varvec{h}}}_i \Vert )\) and \((\Sigma _Z)_{ij}=C_Z(\Vert {{\varvec{h}}}_{ij} \Vert )\,(i,j=1,\ldots ,n)\). Hereafter we assume that \(\mu _Z = \alpha _0\) and \(C_Z(\Vert {{\varvec{h}}} \Vert )\) are known. If they are unknown, the BLUP and the tapered BLUP introduced in the next section are also infeasible, as is the optimal predictor. However, estimators of these predictors are easily constructed from the sample mean and covariance of the observable \(\{ Z({{\varvec{s}}}) \}\), whereas an estimator of the optimal predictor depends on the unobservable \(\{ Y({{\varvec{s}}}) \}\) and requires a rather difficult procedure. A more rigorous discussion of the BLUP and the tapered BLUP with estimated coefficients is left for a future study. Some comments are given in Sect. 5.

3.2 Covariance tapering

The computational complexity of \(\hat{Z}^\mathrm{BLUP}({{\varvec{s}}}_0)\) is challenging for large spatial data sets because it includes the inverse of the covariance matrix with the same size as the number of observations. To reduce the computational burden, covariance tapering has been developed by Furrer et al. (2006). They show the asymptotic optimality of covariance tapering in the Gaussian random field with Matérn covariance function, which corresponds to the case of \(T(x) = x\) in the framework of the transformed random field.

We will review the result of Furrer et al. (2006). Let \(T(x) = x\) and hence \(Z({{\varvec{s}}}) = Y({{\varvec{s}}})\) in this subsection. From (1) and (2), the BLUP of \(Y({{\varvec{s}}}_0)\) and its MSE are defined by

Let \(C_{\theta }(x)\) be a compactly supported correlation function with \(C_{\theta }(0)=1\) and \(C_{\theta }(x) = 0\) for \(x \ge \theta \). \(C_{\theta }(x)\) is called the taper function with the taper range \(\theta \). Then consider the product of the original covariance function and the taper function, that is

\[
C_\mathrm{tap}^Y(\Vert {{\varvec{h}}} \Vert )=C_Y(\Vert {{\varvec{h}}} \Vert )\,C_{\theta }(\Vert {{\varvec{h}}} \Vert ).
\]

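Now we replace \(C_Y(\Vert {{\varvec{h}}} \Vert )\) in \(\hat{Y}^\mathrm{BLUP}({{\varvec{s}}}_0)\) with \(C_\mathrm{tap}^Y(\Vert {{\varvec{h}}} \Vert )\) and obtain the tapered BLUP

\[
\hat{Y}^\mathrm{tapBLUP}({{\varvec{s}}}_0)={{{\varvec{c}}}_{Y}^\mathrm{tap}}^{\prime }{\Sigma _Y^\mathrm{tap}}^{-1}{{\varvec{Y}}},
\]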

where \(({{\varvec{c}}}_{Y}^\mathrm{tap})_i=C^Y_\mathrm{tap}(\Vert {{\varvec{h}}}_i \Vert )\) and \((\Sigma _Y^\mathrm{tap})_{ij} = C^Y_\mathrm{tap}(\Vert {{\varvec{h}}}_{ij} \Vert )\,(i,j=1,\ldots ,n)\). The resulting covariance matrix \(\Sigma _Y^\mathrm{tap}\) has many zero elements and is called a sparse matrix, so that it is much faster to calculate \({\Sigma _Y^\mathrm{tap}}^{-1}{{\varvec{Y}}}\) than \(\Sigma _Y^{-1}{{\varvec{Y}}}\). Next let \(f_{\theta }(\Vert \varvec{\lambda }\Vert )\) be the spectral density function of \(C_{\theta }(\Vert {{\varvec{h}}} \Vert )\). Then Furrer et al. (2006) imposed the following condition.

Taper condition: for some \(\epsilon > 0\) and \(M_{\theta } < \infty \)

Although \(\hat{Y}^\mathrm{tapBLUP}({{\varvec{s}}}_0)\) is not the BLUP under the true covariance function \(C_Y(\cdot )\), Furrer et al. (2006) showed that this predictor is asymptotically efficient with respect to the BLUP.

Theorem 2

(Furrer et al. 2006) If \(f_{\theta }\) satisfies the taper condition, Theorem 1 holds with \(C_0=C_Y\) and \(C_1=C_YC_{\theta }\), that is

Theorem 2 also means the asymptotic efficiency of the tapered BLUP with respect to the optimal predictor because \(\hat{Y}^\mathrm{BLUP}\) is the optimal predictor in the Gaussian random field \(\{ Y({{\varvec{s}}})\}\).

In this paper, we shall show that for any transformation \(T(x)\) the tapered BLUP of \(Z({{\varvec{s}}}_0)\) is asymptotically efficient not only with respect to \(\hat{Z}^\mathrm{BLUP}({{\varvec{s}}}_0)\) but also with respect to \(\hat{Z}({{\varvec{s}}}_0)\).
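To make the comparison between the BLUP and the tapered BLUP concrete, the sketch below computes both sets of kriging weights for a zero-mean field with exponential covariance and evaluates the MSE of each linear predictor under the true covariance. It is only an illustration under stated assumptions: the Wendland\(_2\) taper is written in the form tabulated by Furrer et al. (2006), the values of `alpha`, `n` and the random seed are hypothetical, and the dense pure-Python solver ignores the sparsity that makes tapering fast in practice.

```python
import math
import random


def wendland2(x, theta):
    """Wendland_2 taper (form assumed from Furrer et al. 2006); zero for x >= theta."""
    r = x / theta
    if r >= 1.0:
        return 0.0
    return (1.0 - r) ** 6 * (1.0 + 6.0 * r + 35.0 * r * r / 3.0)


def solve_spd(a, b):
    """Solve a x = b by Cholesky factorization; a must be symmetric positive definite."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    y = [0.0] * n
    for i in range(n):  # forward substitution
        y[i] = (b[i] - sum(low[i][k] * y[k] for k in range(i))) / low[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        x[i] = (y[i] - sum(low[k][i] * x[k] for k in range(i + 1, n))) / low[i][i]
    return x


def kriging_weights(sites, s0, cov):
    """Weights Sigma^{-1} c of the zero-mean simple kriging predictor under cov."""
    sigma = [[cov(math.dist(si, sj)) for sj in sites] for si in sites]
    c = [cov(math.dist(si, s0)) for si in sites]
    return solve_spd(sigma, c)


def mse_under(weights, sites, s0, cov):
    """MSE of the predictor sum_i w_i Y(s_i) when cov is the true covariance."""
    c = [cov(math.dist(si, s0)) for si in sites]
    quad = sum(wi * wj * cov(math.dist(si, sj))
               for wi, si in zip(weights, sites)
               for wj, sj in zip(weights, sites))
    return cov(0.0) - 2.0 * sum(w * ci for w, ci in zip(weights, c)) + quad


random.seed(1)
alpha, theta, n = 3.0, 0.8, 60  # alpha, n and the seed are illustrative choices
sites = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(n)]
s0 = (0.0, 0.0)


def true_cov(h):
    return math.exp(-alpha * h)  # exponential covariance (Matern with nu = 0.5)


def tapered_cov(h):
    return true_cov(h) * wendland2(h, theta)


mse_blup = mse_under(kriging_weights(sites, s0, true_cov), sites, s0, true_cov)
mse_tap = mse_under(kriging_weights(sites, s0, tapered_cov), sites, s0, true_cov)
ratio = mse_tap / mse_blup
print(f"MSE(BLUP) = {mse_blup:.4f}, MSE(tapered BLUP) = {mse_tap:.4f}, ratio = {ratio:.4f}")
```

Because the BLUP minimizes the MSE among linear predictors, the ratio cannot fall below one. The asymptotic results discussed here concern how close it gets to one as the sampling locations become dense around \({{\varvec{s}}}_0\).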

3.3 Main result

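As in Furrer et al. (2006), the tapered BLUP of \(Z({{\varvec{s}}}_0)\) is defined by

\[
\hat{Z}^\mathrm{tapBLUP}({{\varvec{s}}}_0)=\mu _Z+{{{\varvec{c}}}_{Z}^\mathrm{tap}}^{\prime }{\Sigma _Z^\mathrm{tap}}^{-1}({{\varvec{Z}}}-\mu _Z \cdot \mathbf{1}),
\]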

where \(({{\varvec{c}}}_{Z}^\mathrm{tap})_i=C^Z_\mathrm{tap}(\Vert {{\varvec{h}}}_i \Vert ),\,(\Sigma _Z^\mathrm{tap})_{ij} = C^Z_\mathrm{tap}(\Vert {{\varvec{h}}}_{ij} \Vert )\) and \(C^Z_\mathrm{tap}(\Vert {{\varvec{h}}} \Vert )=C_Z (\Vert {{\varvec{h}}}\Vert )C_{\theta } (\Vert {{\varvec{h}}}\Vert )\,(i,j=1,\ldots ,n)\). Hereafter it is assumed that \(0 < \sum _{j=1}^{\infty } \alpha _j^2 j! j\); otherwise \(\alpha _j=0\) for any \(j \ge 1\) and \(Z({{\varvec{s}}})=T(Y({{\varvec{s}}}))=c\), where \(c\) is a constant. \(E[\hat{Z}({{\varvec{s}}}_0)-Z({{\varvec{s}}}_0)]^2\) of (7) and \(E[\hat{Z}^\mathrm{tapBLUP}({{\varvec{s}}}_0)-Z({{\varvec{s}}}_0)]^2\) are denoted by \(E_{C_Z}[\hat{Z}({{\varvec{s}}}_0)-Z({{\varvec{s}}}_0)]^2\) and \(E_{C_Z}[\hat{Z}^\mathrm{tapBLUP}({{\varvec{s}}}_0)-Z({{\varvec{s}}}_0)]^2\) to highlight that the expectation is taken under the true model for the observable process \(\{ Z({{\varvec{s}}}) \}\), although \(Z({{\varvec{s}}})\) and \(C_Z\) are functions of the underlying \(Y({{\varvec{s}}})\) and \(C_Y\), respectively.

The following theorem is our main result.

Theorem 3

Suppose that \(\sum _{j=1}^{\infty } \alpha _j^2 j! j^{2\nu +d+\max \{1,2\epsilon \}} < \infty \) where \(\epsilon \) is given in the taper condition. If \(f_{\theta }\) satisfies the taper condition, the tapered BLUP, the BLUP and the optimal predictor are asymptotically equivalent in the MSE sense, that is

and

as \(n \rightarrow \infty \).

Theorem 3 extends Theorem 2 of Furrer et al. (2006): the tapered BLUP is asymptotically efficient not only with respect to the BLUP but also with respect to the optimal nonlinear predictor. Moreover, it also shows that the BLUP is asymptotically efficient with respect to the optimal predictor. One may evaluate the presumed mean squared error \(E_{C^Z_\mathrm{tap}}[\hat{Z}^\mathrm{tapBLUP}({{\varvec{s}}}_0)-Z({{\varvec{s}}}_0)]^2 = C^Z_\mathrm{tap}(0)-{{{\varvec{c}}}_{Z}^\mathrm{tap}}^{\prime }{\Sigma _Z^\mathrm{tap}}^{-1}{{\varvec{c}}}_{Z}^\mathrm{tap}\) to assess prediction uncertainty. The following corollary shows that, in the transformed model, it is asymptotically equivalent to the MSE of the optimal predictor.

Corollary 1

Under the conditions of Theorem 3,

The tapered BLUP can be calculated using only the observable data \(\{ Z({{\varvec{s}}}) \}\) when the transformation \(T(\cdot )\) is unknown. However, to justify the practical use of the tapered BLUP, the properties of the plug-in predictor must be investigated. This is left for future work.

We give two examples that are often used in empirical analysis.

Example 1

(The squared transformation). If \(Z({{\varvec{s}}})=(\sigma Y({{\varvec{s}}})+\mu )^2,\,\sigma > 0\) and \(Y({{\varvec{s}}}) \sim N(0,1),\,Z({{\varvec{s}}})\) has the following expression using Hermite polynomials

Since \(\alpha _0 = \mu ^2+\sigma ^2,\,\alpha _1 = 2\mu \sigma ,\,\alpha _2 = \sigma ^2\) and \(\alpha _j = 0,\,j \ge 3\), clearly the assumption of Theorem 3 is satisfied. Then from (4)–(7),

and

In general, for any finite order polynomial \(Z({{\varvec{s}}})=\sum _{j=0}^{m} a_j(\sigma Y({{\varvec{s}}}) + \mu )^j\) with \(a_j \in \mathbb R \) and \(m < \infty \), the corresponding coefficients \(\alpha _j\) of the Hermite polynomials satisfy the assumption of Theorem 3 because \(\alpha _j=0\) (\(j>m\)).
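The Hermite coefficients of a polynomial transformation can be checked numerically. The sketch below assumes the usual orthogonality relation \(E[H_j(X)H_k(X)]=j!\,\delta _{jk}\) for \(X \sim N(0,1)\), so that \(\alpha _j=E[T(X)H_j(X)]/j!\), and approximates the expectation with composite Simpson quadrature. The function names, the quadrature settings and the values \(\mu =1,\,\sigma =0.5\) are hypothetical choices for illustration.

```python
import math


def hermite(n, x):
    """Probabilists' Hermite polynomial H_n(x) via H_{k+1} = x H_k - k H_{k-1}."""
    h_prev, h = 1.0, x
    if n == 0:
        return h_prev
    for k in range(1, n):
        h_prev, h = h, x * h - k * h_prev
    return h


def hermite_coefficient(transform, j, half_width=12.0, steps=4000):
    """alpha_j = E[T(X) H_j(X)] / j! for X ~ N(0, 1), by composite Simpson's rule."""
    step = 2.0 * half_width / steps
    total = 0.0
    for i in range(steps + 1):
        x = -half_width + i * step
        weight = 1 if i in (0, steps) else (4 if i % 2 else 2)
        phi = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
        total += weight * transform(x) * hermite(j, x) * phi
    return total * step / 3.0 / math.factorial(j)


def cov_z(rho, alphas):
    """C_Z as sum_{j>=1} alpha_j^2 j! rho^j, where rho is the correlation of Y."""
    return sum(a * a * math.factorial(j) * rho ** j
               for j, a in enumerate(alphas) if j >= 1)


mu, sigma = 1.0, 0.5  # illustrative values for the squared transformation


def squared(x):
    return (sigma * x + mu) ** 2


alphas = [hermite_coefficient(squared, j) for j in range(4)]
print(alphas)               # approximately mu^2 + sigma^2, 2 mu sigma, sigma^2, 0
print(cov_z(1.0, alphas))   # approximately 4 mu^2 sigma^2 + 2 sigma^4
```

The same routine applies to any finite order polynomial, for which only finitely many coefficients are nonzero.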

Example 2

(The exponential transformation). Consider the following transformation

where \(Y({{\varvec{s}}}) \sim N(0,1)\). This kind of random field \(Z({{\varvec{s}}})\) is called a log-Gaussian random field. Then by (12) of Appendix A,

Since \(\alpha _j=\exp (\mu +\sigma ^2/2)\sigma ^j/j!\), the assumption of Theorem 3 is satisfied. It follows from (4)–(7) and (12) of Appendix A that

and

The approach of calculating this nonlinear optimal predictor (8) is known as lognormal simple kriging (Wackernagel 2003). It is known that the lognormal simple kriging predictor is superior to the BLUP in a log-Gaussian random field in the MSE sense (e.g., Cressie 1993; Wackernagel 2003). However, they are asymptotically equivalent under infill asymptotics when the underlying Gaussian random field has the Matérn covariance function.
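For the exponential transformation, the coefficients \(\alpha _j=\exp (\mu +\sigma ^2/2)\sigma ^j/j!\) can be combined with the series \(C_Z=\sum _{j \ge 1}\alpha _j^2 j!\,C_Y^j\) to obtain the familiar closed form \(\exp (2\mu +\sigma ^2)(\exp (\sigma ^2 C_Y)-1)\). The short sketch below checks this numerically and also shows that the partial sums of \(\sum _j \alpha _j^2 j!\,j^p\) stabilize quickly. The values of \(\mu \), \(\sigma \), the correlation and the exponent \(p=4\) are hypothetical choices, not settings taken from the experiments.

```python
import math


def lognormal_alpha(j, mu, sigma):
    """Hermite coefficient alpha_j = exp(mu + sigma^2 / 2) * sigma^j / j!."""
    return math.exp(mu + 0.5 * sigma ** 2) * sigma ** j / math.factorial(j)


def cov_z_series(rho, mu, sigma, terms=60):
    """C_Z as the truncated series sum_{j>=1} alpha_j^2 j! rho^j."""
    return sum(lognormal_alpha(j, mu, sigma) ** 2 * math.factorial(j) * rho ** j
               for j in range(1, terms + 1))


def cov_z_closed(rho, mu, sigma):
    """Closed form exp(2 mu + sigma^2) * (exp(sigma^2 rho) - 1)."""
    return math.exp(2.0 * mu + sigma ** 2) * math.expm1(sigma ** 2 * rho)


def moment_sum(mu, sigma, power, terms):
    """Partial sum of sum_{j>=1} alpha_j^2 j! j^power."""
    return sum(lognormal_alpha(j, mu, sigma) ** 2 * math.factorial(j) * j ** power
               for j in range(1, terms + 1))


mu, sigma, rho = 0.0, 1.0, 0.5  # illustrative values
print(cov_z_series(rho, mu, sigma), cov_z_closed(rho, mu, sigma))
print(moment_sum(mu, sigma, 4, 30), moment_sum(mu, sigma, 4, 60))
```

The factorial in the denominator of \(\alpha _j^2 j!\) dominates any fixed power of \(j\), which is why the summability assumption holds for this transformation.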

Remark 1

The assumption \(\sum _{j=1}^{\infty } \alpha _j^2 j! j^{2\nu +d+\max \{1,2\epsilon \}} < \infty \) can be relaxed for some \(\nu \). If \(\nu =0.5\), \(f_Z(\Vert \varvec{\lambda }\Vert )\) has the analytical form

Consequently, the condition \(\sum _{j=1}^{\infty }\alpha _j^2 j! j \!<\! \infty \), which is weaker than \(\sum _{j=1}^{\infty } \alpha _j^2 j! j^{2\nu +d+\max \{1,2\epsilon \}} \!<\! \infty \), is sufficient for Theorem 3.

Remark 2

The tapered BLUP is based on the observed data \(\{ Z({{\varvec{s}}}) \}\). If \(T(\cdot )\) is known, we can apply a tapering technique to the underlying Gaussian random field and have

where \(\{ Y({{\varvec{s}}}) \}\) is a Gaussian random field with zero-mean and unit variance. If \(\hat{Z}^\mathrm{tap}({{\varvec{s}}}_0)\) converges in mean square, by (9) and (11) of Appendix A, \(\hat{Z}^\mathrm{tap}({{\varvec{s}}}_0)\) is an unbiased predictor and

\(\hat{Z}^\mathrm{tap}({{\varvec{s}}}_0)\) is well defined and consistent in the previous two examples.

However, the MSE of \(\hat{Z}^\mathrm{tap}({{\varvec{s}}}_0)\) seems to be more erratic than that of \(\hat{Z}^\mathrm{tapBLUP}({{\varvec{s}}}_0)\) in some simulations. Whether \(\hat{Z}^\mathrm{tap}({{\varvec{s}}}_0)\) is asymptotically efficient with respect to the optimal predictor is left for future study.

4 Computational experiments

We conduct Monte Carlo simulations using MATLAB. First, we examine the convergence and the finite sample accuracy of Theorem 3 for different sample sizes, taper ranges and smoothness of the covariance function. Since

we see the ratios of the MSE of the tapered BLUP and that of the optimal predictor. Next, the time required for one calculation of predictors is measured. Finally, we compare the tapered BLUP with the BLUP obtained by an iterative method, which is more time saving than the direct method. The examples of Sect. 3 are used for the transformation \(T(\cdot )\).

Let \(D=[-1,1]^2\) be the sampling domain. The data locations \(\{{{\varvec{s}}}_i \}_{i \ge 1}\) are sampled from a uniform distribution over \(D\). The spatial prediction is for the center location \({{\varvec{s}}}_0=(0,0)\). This sampling scheme satisfies infill asymptotics (see Lemma 7 in Appendix D).

For a fixed configuration of sampling locations \(\{ {{\varvec{s}}}_i, i=1,2,\ldots ,n \}\), each expectation in the ratio of the MSE of the tapered BLUP to that of the optimal predictor is approximated by the sample mean of 1,000 simulations. We iterate this procedure 100 times and calculate the mean, standard deviation and 95 % interval of the approximated ratios to see the variability of the MSE ratio. \(\sigma ^2\) and \(\alpha \) are determined so that \(C_Z(0) = 1\) and \(C_Z\) decreases to 0.05 over the distance 0.8, in order to examine the influence of different transformations, taper ranges and the smoothness parameter \(\nu \) of the covariance function. Therefore, \(\sigma ^2\) and \(\alpha \) depend on each transformation and \(\nu \). We use the Wendland\(_2\) taper function

\[
C_{\theta }(x)=\left( 1-\frac{x}{\theta }\right) _{+}^{6}\left( 1+\frac{6x}{\theta }+\frac{35x^2}{3\theta ^2}\right) , \quad x \ge 0,
\]

which was introduced by Wendland (1995) and satisfies the taper condition if \(d=2\) and \(\nu < 2.5\) (Furrer et al. 2006).

The first experiment examines the convergence and the accuracy of the ratio between the MSE of the tapered BLUP and that of the optimal predictor for different \(\nu \). The smoothness parameter is \(\nu =0.5, 1.0\) and \(1.5\) and the taper range is \(\theta =0.8\). The sample size is 100, 300 and 500. Table 1 shows that as \(\nu \) increases, the MSE ratio and its fluctuations increase because the discrepancy between the true covariance function and the tapered one is larger at small distances in this simulation.

The second experiment examines the convergence and the accuracy of the MSE ratio for different taper ranges. The sample size is 100, 300 and 500 for the wider ranges \(\theta = 0.6\) and \(0.75\), and 300, 1,000, 2,000 and 3,000 for the narrower ranges \(\theta = 0.15\) and \(0.3\). The smoothness parameter is \(\nu =0.5\) and \(1.5\).

Tables 2 and 3 summarize the results. The number in parentheses is the average number of locations falling within the taper range over 100 sets of data locations sampled from a uniform distribution over \(D\). As \(\theta \) decreases, the MSE ratio increases because the discrepancy between the true covariance function and the tapered one is larger and the sample size within the taper range is smaller when \(\theta \) is smaller. If we choose the taper range such that the number of samples within it is greater than 70 in the case of \(\nu =0.5\), the percentage increase of the MSE of the tapered BLUP over that of the optimal predictor seems to be within about 7 %. In the case of \(\nu = 1.5\), a taper range such that the number of samples within it is greater than 140 seems to be a safe choice. Except for small \(n\), the exponential transformation has slightly larger variability than the squared one, although the mean of the exponential one is closer to one than that of the squared one. This suggests that the convergence rate in Theorem 3 may depend on the transformation. Since the MSE ratio converges to one as \(n\) increases regardless of the taper range, Tables 2 and 3 support the result of Theorem 3.

Next we consider the computational times of the true optimal predictor, the BLUP and the tapered BLUP for one fixed realization. The computational time of the optimal predictor is measured as the benchmark, though it is infeasible in practice. We put \(\nu =0.5\) and \(\theta =0.15, 0.3\) and \(0.6\) for the squared transformation. All computations are carried out using the MATLAB function sparse on a Linux machine with a 3.33 GHz Xeon processor and 8 GB of RAM. For sparse matrix calculations, the R package spam (Furrer and Sain 2010) is also available. The sample size \(n\) ranges from 1,000 to 9,000 in increments of 1,000. Figure 1 shows that covariance tapering reduces the computational time substantially for \(\theta = 0.15\) and 0.3. However, it does not work well for \(\theta = 0.6\). It seems that as \(\theta \) increases, the algorithm used to solve the sparse linear system takes much more time, which offsets the computational efficiency. For \(n = 6,000\), the percentages of non-zero entries in the tapered covariance matrix, which measure the sparsity of the matrices, are 1.6, 6.3 and 21.6 % for \(\theta = 0.15,\,0.3\) and 0.6, respectively.
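The reported percentages of non-zero entries can be cross-checked without building any matrix. For two independent uniform points in a square of side \(a\), the probability that their distance is at most \(\theta \le a\) is \(\pi t^2-8t^3/3+t^4/2\) with \(t=\theta /a\), a standard result on distances between random points in a square. The sketch below evaluates this expression for \(D=[-1,1]^2\) and compares it with a small simulation. The function names, the simulation size and the seed are hypothetical, and the diagonal entries of the matrix are ignored.

```python
import math
import random


def pair_fraction_closed(theta, side=2.0):
    """P(distance <= theta) for two independent uniform points in a square.

    Uses the distribution of the distance between two random points in a
    square, valid for 0 <= theta <= side.
    """
    t = theta / side
    if not 0.0 <= t <= 1.0:
        raise ValueError("this closed form needs 0 <= theta <= side")
    return math.pi * t ** 2 - 8.0 * t ** 3 / 3.0 + 0.5 * t ** 4


def pair_fraction_simulated(theta, n, side=2.0, seed=0):
    """Fraction of the n(n-1)/2 site pairs that lie within distance theta."""
    rng = random.Random(seed)
    pts = [(rng.uniform(0.0, side), rng.uniform(0.0, side)) for _ in range(n)]
    close = sum(1 for i in range(n) for j in range(i)
                if math.dist(pts[i], pts[j]) <= theta)
    return close / (n * (n - 1) / 2)


for theta in (0.15, 0.3, 0.6):
    closed = pair_fraction_closed(theta)
    simulated = pair_fraction_simulated(theta, n=400)
    print(f"theta = {theta}: closed form {100 * closed:.2f} %, simulated {100 * simulated:.2f} %")
```

The closed form gives roughly 1.7, 6.2 and 21.5 % for the three taper ranges, close to the percentages reported above for \(n = 6,000\). The expected fraction of non-zero entries therefore depends on \(\theta \) but hardly on \(n\), while the number of non-zero entries still grows like \(n^2\) for a fixed taper range.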

Finally, we consider an iterative method for solving the linear system of the BLUP. It is known that iterative methods have a lower operation count than direct methods. Here, we focus on the conjugate gradient method without preconditioning (Golub and Van Loan 1996) and apply it to \(\hat{Z}^\mathrm{BLUP}\). We put \(n = 1000, 3000,\,\nu =0.5\) and \(\theta =0.15,0.3\). The number of iterations is \(40\) and \(80\). The exponential transformation is used and the number of replications is 1,000. From Tables 4 and 5, the tapered BLUP computed by the direct method is much more time saving than the BLUP computed by the iterative method. Furthermore, its MSE ratio to the optimal predictor is almost equal to that of the BLUP for sufficiently large \(n\). Finding an efficient preconditioner and comparing \(\hat{Z}^\mathrm{BLUP}\) with \(\hat{Z}^\mathrm{tapBLUP}\) using the conjugate gradient method is left for future work.
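For reference, an unpreconditioned conjugate gradient iteration with a fixed iteration budget can be sketched as follows. This is a generic textbook form of the method, not the MATLAB implementation used for Tables 4 and 5. The dictionary-of-rows sparse storage, the function names and the tiny test system are hypothetical choices made for the illustration.

```python
def conjugate_gradient(matvec, b, iterations, tol=1e-12):
    """Unpreconditioned conjugate gradient for a symmetric positive definite system.

    matvec(v) must return the product A v; tol bounds the squared residual norm.
    """
    x = [0.0] * len(b)
    r = list(b)  # residual b - A x for the zero starting vector
    p = list(r)
    rs_old = sum(ri * ri for ri in r)
    for _ in range(iterations):
        if rs_old <= tol:
            break
        ap = matvec(p)
        step = rs_old / sum(pi * api for pi, api in zip(p, ap))
        x = [xi + step * pi for xi, pi in zip(x, p)]
        r = [ri - step * api for ri, api in zip(r, ap)]
        rs_new = sum(ri * ri for ri in r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


def sparse_matvec(rows):
    """rows[i] is a dict {column: value} holding only the non-zero entries of row i."""
    def apply(v):
        return [sum(val * v[j] for j, val in row.items()) for row in rows]
    return apply


# Small symmetric positive definite example with exact solution (1/11, 7/11).
rows = [{0: 4.0, 1: 1.0}, {0: 1.0, 1: 3.0}]
solution = conjugate_gradient(sparse_matvec(rows), [1.0, 2.0], iterations=40)
print(solution)
```

Each iteration costs one matrix-vector product, so the work per iteration is proportional to the number of stored non-zero entries. This is why the method combines naturally with a tapered (sparse) covariance matrix, and why the iteration count matters for a dense one.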

5 Conclusion and future studies

This paper studies covariance tapering for prediction of large spatial data sets in transformed random fields and shows the asymptotic efficiency of the tapered BLUP not only with respect to the BLUP but also with respect to the optimal predictor. Monte Carlo simulations support the theoretical results. This result contributes to the analysis of large non-Gaussian spatial data sets and to nonlinear prediction, especially when the transformation is unknown and the optimal predictor is infeasible.

However, the case of unknown parameters must be considered in the future. First, for \(\{Z({{\varvec{s}}})\}\), \(\mu _Z\) and \(\nu \) are the crucial parameters for the following reason. Assume that \(\mu _Z\) and \(\nu \) are correctly specified and consider the spectral density function

for any \(\tilde{\alpha }>0\) and \(\tilde{\sigma }^2 >0\). Then similar to Theorem 3, the tapered BLUP based on \(\mu _Z\) and the covariance function of \(f_M(\Vert \varvec{\lambda }\Vert )\) is also asymptotically efficient with respect to the optimal predictor. The mean \(\mu _Z\) may be estimated by the sample mean of the observations \({{\varvec{Z}}}=(Z({{\varvec{s}}}_1), \ldots ,Z({{\varvec{s}}}_n))^{\prime }\) or a more efficient estimator. Since \(\nu \) determines the high frequency behavior of \(f_M(\Vert \varvec{\lambda }\Vert )\), it may be estimated nonparametrically at high frequency bands by using Fourier transforms of \({{\varvec{Z}}}=(Z({{\varvec{s}}}_1),\ldots ,Z({{\varvec{s}}}_n))^{\prime }\) (see e.g., Fuentes and Reich 2010).

Next, the estimation of \(T(x)\) should be considered. If \(T(x)\) is correctly specified, it can be helpful to check the condition in Theorem 3 and determine an appropriate range parameter \(\theta \). One candidate for \(T^{-1}(x)\) (not \(T(x)\)) may be the Box–Cox transformation (Box and Cox 1964). Then we have to take account of the instability of its identification which can have a serious effect on the estimation of the parameters (Bickel and Doksum 1981).

Finally, how well does the tapered BLUP with estimated parameters work? Putter and Young (2001) considered a related topic. However, our setting seems to need separate consideration, as Stein (2010) points out.

6 Appendix A: Properties of Hermite polynomials

In this appendix, we state some relevant results on properties of Hermite polynomials (Granger and Newbold 1976; Gradshteyn and Ryzhik 2007; Olver et al. 2010). The system of Hermite polynomials \(H_n(x)\) is defined by \(H_n(x)=\exp (x^2/2)(-d/dx)^n\,\exp (-x^2/2)\). For example, \(H_0(x)=1,\,H_1(x)=x,\,H_2(x)=x^2-1,\,H_3(x)=x^3-3x,\,H_4(x)=x^4-6x^2+3,\,H_5(x)=x^5-10x^3+15x\) and so on. The Hermite polynomials are a complete orthogonal system with respect to the standard normal probability density function. Therefore, if \(X \sim N(0,1)\),

and since \(H_0(x)=1\),

If \(X\) and \(Y\) are jointly distributed as a bivariate normal random vector with zero means, unit variances and correlation coefficient \(\rho \) (\(-1< \rho <1\)),

Finally we have

and
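The listed polynomials \(H_0,\ldots ,H_5\) and the orthogonality with respect to the standard normal density can be checked exactly with integer arithmetic. The sketch below relies on two standard facts that are not stated in the text and are used here as assumptions: the three-term recurrence \(H_{k+1}(x)=xH_k(x)-kH_{k-1}(x)\) and the normal moments \(E[X^{2m}]=(2m-1)!!\). The function names are illustrative.

```python
from math import factorial

def hermite_coeffs(n):
    """Coefficients (lowest degree first) of the probabilists' Hermite polynomial H_n.

    Uses the three-term recurrence H_{k+1}(x) = x * H_k(x) - k * H_{k-1}(x).
    """
    prev, curr = [0], [1]  # placeholder for H_{-1}, then H_0 = 1
    for k in range(n):
        shifted = [0] + curr  # coefficients of x * H_k
        scaled = [k * c for c in prev] + [0] * (len(shifted) - len(prev))
        prev, curr = curr, [a - b for a, b in zip(shifted, scaled)]
    return curr

def normal_moment(k):
    """E[X^k] for X ~ N(0, 1): 0 for odd k, (k - 1)!! for even k."""
    if k % 2 == 1:
        return 0
    result = 1
    for m in range(k - 1, 0, -2):
        result *= m
    return result

def inner_product(n, m):
    """Exact E[H_n(X) H_m(X)] computed from the polynomial coefficients."""
    a, b = hermite_coeffs(n), hermite_coeffs(m)
    return sum(ai * bj * normal_moment(i + j)
               for i, ai in enumerate(a) for j, bj in enumerate(b))

for n in range(6):
    print(n, hermite_coeffs(n), [inner_product(n, m) for m in range(6)])
```

Each printed row of inner products should be zero except on the diagonal, where the value is \(n!\).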

7 Appendix B: Properties of \(f_Y\) and \(f_Z\)

To prove the asymptotic optimality of \(\hat{Z}^\mathrm{tapBLUP}({{\varvec{s}}}_0)\), we shall derive some lemmas. Let \(f_Z\) denote the spectral density function of \(C_Z\), given by (5).

Lemma 1

where

and \(f_Y^{(j-1)}(\Vert \varvec{\lambda }\Vert )\;(j \ge 2)\) is the \((j-1)\) times convolution of \(f_Y(\Vert \varvec{\lambda }\Vert )\), that is

Proof

Note that \(C_Z(\Vert {{\varvec{h}}} \Vert )=\sum _{j \ge 1} \alpha _j^2 j! (C_Y(\Vert {{\varvec{h}}} \Vert ))^j \le \sum _{j \ge 1} \alpha _j^2 j! C_Y(\Vert {{\varvec{h}}} \Vert )\) because \(0 \le C_Y(\Vert {{\varvec{h}}} \Vert ) \le 1\). Moreover, from 16 of Gradshteyn and Ryzhik (2007; p. 676),

Therefore, by using polar coordinates,

where \(\partial B_d\) is the surface of the unit sphere in \(\mathbb R ^d\) and \(U\) is the uniform probability measure on \(\partial B_d\). We have

because \(0 \le C_Y(\Vert {{\varvec{h}}} \Vert )\). Then it follows from Bochner’s theorem, the dominated convergence theorem and the inversion formula that

where the third equality follows from the property that the product of the Fourier transforms of functions is the Fourier transform of the convolution of these functions. \(\square \)

Lemma 2

Suppose that \(\sum _{j=1}^{\infty } \alpha _j^2 j! (j-1)^{2\nu +d+1} < \infty \). Then

Proof

From Lemma 1,

Then, it suffices to consider the second term \(II\). First consider the lower bound of \(f_Z(\Vert \varvec{\lambda }\Vert )\Vert \varvec{\lambda }\Vert ^{d+2\nu }\). We have

Since

and

by the dominated convergence theorem,

Next we consider the upper bound of \(f_Z(\Vert \varvec{\lambda }\Vert )\Vert \varvec{\lambda }\Vert ^{d+2\nu }\). We set \(\varvec{\lambda }= \rho {{\varvec{v}}},\,\varvec{\omega }_1= r_1 {{\varvec{u}}}_1,\ldots ,\varvec{\omega }_{j-1}= r_{j-1} {{\varvec{u}}}_{j-1}\) with \(\Vert {{\varvec{v}}}\Vert =\Vert {{\varvec{u}}}_1\Vert = \cdots =\Vert {{\varvec{u}}}_{j-1}\Vert =1\). Then for \(j \ge 2\), the integral part of (14) multiplied by \( \Vert \varvec{\lambda }\Vert ^{d+2 \nu }\) reduces to

Since in \(\{\Vert r_1 {{\varvec{u}}}_1+ \cdots + r_{j-1}{{\varvec{u}}}_{j-1} \Vert \le \rho /2 \}\),

and for \(1 \le k \le j-1\),

On the other hand, \(\{ \Vert r_1 {{\varvec{u}}}_1+ \cdots + r_{j-1}{{\varvec{u}}}_{j-1} \Vert > \rho /2 \}\) implies that \(r_i > \rho /(2(j-1))\) for some \(i\) (\(1 \le i \le j-1\)). Therefore

because

Finally we have

\(\square \)

Lemma 3

and if \(f_{\theta }(\Vert \varvec{\lambda } \Vert )\) satisfies the taper condition,

Proof

First consider (16). We shall show the assertion by mathematical induction. For \(j=0\), the result holds clearly. Assume that it holds for \(j=K\). Consider \(j=K+1\). We shall show the assertion in the same way as Proposition 1 of Furrer et al. (2006). Note that \(f_Y^{(K+1)}=f_Y *f_Y^{(K)}\). Then

We divide the numerator into the following five parts

where \(\varvec{\lambda }=\rho {{\varvec{v}}},\,\Vert {{\varvec{v}}} \Vert =1,\,\Delta , \Delta ^{^{\prime }} \rightarrow \infty ,\,\Delta /\rho \rightarrow 0\) and \(\Delta ^{^{\prime }}/\rho \rightarrow 0\) as \(\rho \rightarrow \infty \). Define \(\Delta =O(\rho ^{\delta })\) for some \((2\nu +d)/(2\nu +d+2\epsilon )<\delta <1\) as in Proposition 1 of Furrer et al. (2006).

Case 1: \(0 \le \Vert {{\varvec{x}}} \Vert < \Delta ^{^{\prime }}\)

Note that \(\Vert \varvec{\lambda }-{{\varvec{x}}} \Vert \ge \rho - \Delta ^{^{\prime }}\). Since the result holds for \(j=K\),

as \(\rho \rightarrow \infty \). It follows that

Case 2: \(\Delta ^{^{\prime }} \le \Vert {{\varvec{x}}} \Vert < \rho /2\)

as \(\rho \rightarrow \infty \).

Case 3: \(\rho /2 \le \Vert {{\varvec{x}}} \Vert < \rho -\Delta \)

as \(\rho \rightarrow \infty \).

Case 4: \(\rho -\Delta \le \Vert {{\varvec{x}}} \Vert < \rho +\Delta \)

as \(\rho \rightarrow \infty \).

Case 5: \(\rho +\Delta \le \Vert {{\varvec{x}}} \Vert \)

as \(\rho \rightarrow \infty \). The result (16) holds for \(j= K+1\). See Hirano and Yajima (2012) for details of these Proofs.

Next we consider (17). If \(j=0\), the result holds from Proposition 1 of Furrer et al. (2006). Then for any \(j > 0\), the assertion is shown in the same way as (16). \(\square \)

8 Appendix C: Proofs of Theorem 3 and Corollary 1

We first prepare three lemmas.

Lemma 4

Suppose that \(\sum _{j=1}^{\infty } \alpha _j^2 j! j < \infty \). Then

Proof

A stationary random field is mean square continuous if and only if the covariance function is continuous at the origin (see Stein 1999; p. 20). Hence from Yakowitz and Szidarovszky (1985), \(E_{C_Y}[\hat{Y}^\mathrm{BLUP}({{\varvec{s}}}_0)-Y({{\varvec{s}}}_0)]^2=1-{{\varvec{c}}}_{Y}^{\prime }\Sigma _{Y}^{-1}{{\varvec{c}}}_{Y} \ge 0\) and \({{\varvec{c}}}_{Y}^{\prime }\Sigma _{Y}^{-1}{{\varvec{c}}}_{Y} \rightarrow 1\) as \(n \rightarrow \infty \). Put \(a_n={{\varvec{c}}}_{Y}^{\prime }\Sigma _{Y}^{-1}{{\varvec{c}}}_{Y}\;(\le 1)\). From (2) and (7),

Since \( \alpha _j^2 j! \left( \sum _{i=0}^{j-1}a_n^i \right) \le \alpha _j^2 j! j\), by the dominated convergence theorem, the assertion is obtained. \(\square \)
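The dominated convergence step can be illustrated numerically: each term \(\alpha _j^2 j! \sum _{i=0}^{j-1}a^i\) is bounded by \(\alpha _j^2 j!\, j\) for \(0 \le a \le 1\) and tends to it as \(a \rightarrow 1\). The sketch below uses, purely as an assumption for illustration, the Hermite coefficients of \(\exp (\sigma y)\), namely \(\alpha _j=e^{\sigma ^2/2}\sigma ^j/j!\), truncated at a finite order; this choice and the function names are not taken from the paper.

```python
import math

def hermite_weights(sigma, max_order):
    """Illustrative weights w_j = alpha_j^2 * j! for j = 1..max_order.

    Assumption: alpha_j = exp(sigma^2 / 2) * sigma^j / j!, the Hermite
    coefficients of exp(sigma * y); this choice is not taken from the paper.
    """
    weights = []
    for j in range(1, max_order + 1):
        alpha_j = math.exp(sigma ** 2 / 2.0) * sigma ** j / math.factorial(j)
        weights.append(alpha_j ** 2 * math.factorial(j))
    return weights

def weighted_geometric_sum(a, weights):
    """sum_j w_j * sum_{i<j} a^i, which equals sum_j w_j * (1 - a^j) / (1 - a) for a != 1."""
    total = 0.0
    for j, w in enumerate(weights, start=1):
        geometric = sum(a ** i for i in range(j))
        total += w * geometric
    return total

def limit_value(weights):
    """Value at a = 1: sum_j w_j * j."""
    return sum(w * j for j, w in enumerate(weights, start=1))

weights = hermite_weights(sigma=0.8, max_order=30)
for a in (0.5, 0.9, 0.99, 0.999999):
    print(a, weighted_geometric_sum(a, weights), limit_value(weights))
```

The printed sums increase toward the limit value as `a` approaches 1, in line with the bound used in the proof.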

Lemma 5

Suppose that \(\sum _{j=1}^{\infty } \alpha _j^2 j! j^{2\nu +d+1} < \infty \). Then

Proof

As in the Proof of Lemma 2 for any \(j \ge 1\),

Then by the dominated convergence theorem, Lemmas 1 and 3,

By applying Theorem 1 with \(C_0 = C_Y\) and \(C_1 = C_Z\), we have as \(n \rightarrow \infty \)

and

Therefore as \(n \rightarrow \infty \)

\(\square \)

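Let \(f_\mathrm{tap}\) denote the spectral density function of the tapered covariance function \(C^Z_\mathrm{tap}(\Vert \mathbf{h}\Vert )=C_Z(\Vert \mathbf{h}\Vert )C_{\theta }(\Vert \mathbf{h}\Vert )\). Therefore \(f_\mathrm{tap}\) is the convolution of \(f_Z\) and \(f_{\theta }\), that is, \(f_\mathrm{tap}(\Vert \varvec{\lambda }\Vert )=\int _\mathbb{R ^d} f_Z(\Vert {{\varvec{x}}}\Vert )f_{\theta }(\Vert \varvec{\lambda }-{{\varvec{x}}}\Vert )\,d{{\varvec{x}}}\).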

Lemma 6

Suppose that \(\sum _{j=1}^{\infty } \alpha _j^2 j! j^{2\nu +d+\max \{1,2\epsilon \}} < \infty \) where \(\epsilon \) is given in the taper condition. If \(f_{\theta }\) satisfies the taper condition,

Proof

From Lemma 1, we have

As in the Proof of Lemma 2, we have

where \(A_1\) is a constant being independent of \(\Vert \varvec{\lambda } \Vert \). See Hirano and Yajima (2012) for details. Then, by (17), (18) and the dominated convergence theorem, we have the assertion.\(\square \)
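The tapered covariance \(C^Z_\mathrm{tap}=C_Z C_{\theta }\) introduced before Lemma 6 can be illustrated with a small numerical sketch. Two stand-ins are assumed here and are not taken from the paper: an exponential covariance in place of \(C_Z\), and the Wendland-type taper \((1-h/\theta )_+^4(1+4h/\theta )\) as \(C_{\theta }\), evaluated at equally spaced sites on a line. The function names and parameter values are hypothetical.

```python
import math

def exponential_cov(h, range_param=0.3):
    """Stand-in for the untapered covariance (Matern with nu = 1/2)."""
    return math.exp(-h / range_param)

def wendland1_taper(h, theta):
    """Compactly supported taper: (1 - h/theta)_+^4 * (1 + 4 h/theta)."""
    x = h / theta
    if x >= 1.0:
        return 0.0
    return (1.0 - x) ** 4 * (1.0 + 4.0 * x)

def tapered_cov_matrix(sites, theta):
    """Entrywise product of covariance and taper for 1-D sites."""
    n = len(sites)
    return [[exponential_cov(abs(sites[i] - sites[j]))
             * wendland1_taper(abs(sites[i] - sites[j]), theta)
             for j in range(n)] for i in range(n)]

def cholesky(matrix):
    """Plain Cholesky factorization; raises ValueError if not positive definite."""
    n = len(matrix)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = matrix[i][j] - sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                lower[i][j] = math.sqrt(s)
            else:
                lower[i][j] = s / lower[j][j]
    return lower

sites = [k / 49.0 for k in range(50)]  # 50 equally spaced sites on [0, 1]
sigma_tap = tapered_cov_matrix(sites, theta=0.1)
nonzero = sum(1 for row in sigma_tap for v in row if v != 0.0)
print("fraction of nonzero entries:", nonzero / 50 ** 2)
factor = cholesky(sigma_tap)  # raises ValueError if the matrix is not positive definite
```

The dense list-of-lists storage is used only to keep the sketch self-contained; in practice the zero entries would be exploited with a sparse matrix library, which is where the computational saving of tapering comes from.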

Noting that Lemmas 2 and 6 are the verifications of the conditions of Theorem 1, we have the following proposition.

Proposition 1

Under the conditions of Theorem 3,

and

as \(n \rightarrow \infty \).

Proof of Theorem 3

We decompose the ratio of the MSE into the three terms

Then by Lemmas 4 and 5, the first term and the second term converge to \(1 / \sum _{j \ge 1} \alpha _j^2 j! j\) and \(\sum _{j \ge 1} \alpha _j^2 j! j\), respectively as \(n \rightarrow \infty \). From Proposition 1, the third term converges to 1 as \(n \rightarrow \infty \). The Proof is completed.\(\square \)

Proof of Corollary 1

We decompose the ratio of the MSE into the two terms

Then by the Proof of Theorem 3, the first term converges to 1 as \(n \rightarrow \infty \). Finally by Proposition 1, the second term converges to 1 as \(n \rightarrow \infty \). The Proof is completed.\(\square \)

9 Appendix D: Property of the sampling scheme in Sect. 4

Lemma 7

Let \(\{{{\varvec{S}}}_i \}_{i \ge 1}\) be an i.i.d. sequence in \(D\). Suppose also that \(P(\{ \omega | \Vert {{\varvec{S}}}_i-{{\varvec{s}}}_0 \Vert \le \epsilon \})>0\) for any \(\epsilon > 0,\,P({{\varvec{S}}}_i={{\varvec{s}}}_0)=0\) and \({{\varvec{s}}}_0 \in D\). Then

Proof

Define \(A_{ij}=\{ \omega | \Vert {{\varvec{S}}}_i - {{\varvec{s}}}_0 \Vert \le 1/j \}\) for \(i,j \in \mathbb N \). By the assumption, \(P(A_{ij})>0\). Then

because \(P(A^c_{ij})=1-P(A_{ij})<1\). Thus, \(P \left( \bigcup _{i=1}^{\infty } A_{ij} \right) =1\). Define \(B_j = \bigcup _{i=1}^{\infty } A_{ij}\). Then

Therefore

\(\square \)
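The argument in the proof shows that, with probability one, some sampled site falls within distance \(1/j\) of \({{\varvec{s}}}_0\) for every \(j\). A small simulation can illustrate this behavior. The sketch below assumes, for illustration only, that \(D\) is the unit square, that the sites are uniformly distributed on it, and that \({{\varvec{s}}}_0\) is its center; the function name and the seed are hypothetical.

```python
import math
import random

def running_min_distance(s0, n, seed=0):
    """Running minimum of ||S_i - s0|| for S_i i.i.d. uniform on the unit square."""
    rng = random.Random(seed)
    best = math.inf
    history = []
    for _ in range(n):
        x, y = rng.random(), rng.random()
        best = min(best, math.hypot(x - s0[0], y - s0[1]))
        history.append(best)
    return history

history = running_min_distance(s0=(0.5, 0.5), n=20000)
for n in (10, 100, 1000, 20000):
    print(n, history[n - 1])
```

The uniform distribution satisfies both assumptions of Lemma 7, since every neighborhood of \({{\varvec{s}}}_0\) has positive probability while the single point \({{\varvec{s}}}_0\) has probability zero.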

References

Bickel, P.J., Doksum, K.A. (1981). An analysis of transformations revisited. Journal of the American Statistical Association, 76, 296–311.

Box, G.E.P., Cox, D.R. (1964). An analysis of transformations. Journal of the Royal Statistical Society Series B, 26, 211–252.

Brockwell, P.J., Davis, R.A. (1991). Time series: theory and methods (2nd ed.). New York: Springer.

Cressie, N. (1993). Statistics for spatial data, revised edition. New York: Wiley.

De Oliveira, V. (2006). On optimal point and block prediction in log-Gaussian random fields. Scandinavian Journal of Statistics, 33, 523–540.

Du, J., Zhang, H., Mandrekar, V.S. (2009). Fixed-domain asymptotic properties of tapered maximum likelihood estimators. Annals of Statistics, 37, 3330–3361.

Fuentes, M., Reich, B. (2010). Spectral domain. In A. E. Gelfand, P. J. Diggle, M. Fuentes, & P. Guttorp (Eds.), Handbook of spatial statistics. Boca Raton: Chapman and Hall/CRC.

Furrer, R., Genton, M.G., Nychka, D. (2006). Covariance tapering for interpolation of large spatial datasets. Journal of Computational and Graphical Statistics, 15, 502–523. (Erratum and Addendum: Journal of Computational and Graphical Statistics, 21, 823–824).

Furrer, R., Sain, S.R. (2010). spam: a sparse matrix R package with emphasis on MCMC methods for Gaussian Markov random fields. Journal of Statistical Software, 36(10), 1–25.

Golub, G.H., Van Loan, C.F. (1996). Matrix computations. Baltimore: Johns Hopkins University Press.

Gradshteyn, I.S., Ryzhik, I.M. (2007). Table of integrals, series, and products (7th ed.). Amsterdam: Academic Press.

Granger, C.W.J., Newbold, P. (1976). Forecasting transformed series. Journal of the Royal Statistical Society: Series B, 38, 189–203.

Hirano, T., Yajima, Y. (2012). Covariance tapering for prediction of large spatial data sets in transformed random fields. Discussion paper CIRJE-F-823, Graduate School of Economics, University of Tokyo, Tokyo (http://www.cirje.e.u-tokyo.ac.jp/research/dp/2011/list2011.html).

Johns, C., Nychka, D., Kittel, T., Daly, C. (2003). Infilling sparse records of spatial fields. Journal of the American Statistical Association, 98, 796–806.

Kaufman, C., Schervish, M., Nychka, D. (2008). Covariance tapering for likelihood-based estimation in large spatial data sets. Journal of the American Statistical Association, 103, 1545–1555.

Moyeed, R.A., Papritz, A. (2002). An empirical comparison of kriging methods for nonlinear spatial point prediction. Mathematical Geology, 34, 365–386.

Olver, F.W.J., Lozier, D.W., Boisvert, R.F., Clark, C.W. (2010). NIST handbook of mathematical functions. New York: Cambridge University Press.

Putter, H., Young, G.A. (2001). On the effect of covariance function estimation on the accuracy of kriging predictors. Bernoulli, 7, 421–438.

Stein, M.L. (1993). A simple condition for asymptotic optimality of linear predictions of random fields. Statistics and Probability Letters, 17, 399–404.

Stein, M.L. (1999). Interpolation of spatial data. New York: Springer.

Stein, M.L. (2010). Asymptotics for spatial processes. In A. E. Gelfand, P. J. Diggle, M. Fuentes, & P. Guttorp (Eds.), Handbook of spatial statistics. Boca Raton: Chapman and Hall/CRC.

Wackernagel, H. (2003). Multivariate geostatistics: an introduction with applications. Berlin: Springer.

Wang, D., Loh, W.-L. (2011). On fixed-domain asymptotics and covariance tapering in Gaussian random field models. Electronic Journal of Statistics, 5, 238–269.

Wendland, H. (1995). Piecewise polynomial, positive definite and compactly supported radial functions of minimal degree. Advances in Computational Mathematics, 4, 389–396.

Yakowitz, S.J., Szidarovszky, F. (1985). A comparison of kriging with nonparametric regression methods. Journal of Multivariate Analysis, 16, 21–53.

Zhu, Z., Wu, Y. (2010). Estimation and prediction of a class of convolution-based spatial nonstationary models for large spatial data. Journal of Computational and Graphical Statistics, 19, 74–95.

Acknowledgments

The authors are grateful to Professor Akimichi Takemura and Professor Mark G. Genton for helpful comments and discussions. We also acknowledge the suggestions from the associate editor and three anonymous referees that refined and improved the manuscript. This work is supported by the Research Fellowship (DC1) from the Japan Society for the Promotion of Science and by the Grants-in-Aid for Scientific Research (A) 23243039 from the Japanese Ministry of Education, Science, Sports, Culture and Technology.

Cite this article

Hirano, T., Yajima, Y. Covariance tapering for prediction of large spatial data sets in transformed random fields. Ann Inst Stat Math 65, 913–939 (2013). https://doi.org/10.1007/s10463-013-0399-8
