Abstract

The exponential increase in the number of diabetics around the world has led to an equally large increase in the number of cases of diabetic retinopathy (DR), one of the major complications caused by diabetes. Left unattended, DR worsens vision and can lead to partial or complete blindness. As the number of diabetics continues to increase in the coming years, the number of qualified ophthalmologists needs to increase in tandem to meet the demand for screening the growing number of diabetic patients. This makes it pertinent to develop ways to automate the detection of DR. A computer-aided diagnosis system has the potential to significantly reduce the burden currently placed on ophthalmologists. Hence, this review paper aims to summarize, classify, and analyze the recent developments in automated DR detection using fundus images from 2015 to date. Such work offers an unprecedentedly thorough review of the recent works on DR, which can improve the understanding of recent studies on automated DR detection, particularly those that deploy machine learning algorithms. First, a comprehensive state-of-the-art review of the methods introduced for the detection of DR is presented, with a focus on machine learning models such as convolutional neural networks (CNN), artificial neural networks (ANN) and various hybrid models. Each AI is then classified according to its type (e.g. CNN, ANN, SVM) and its specific task(s) in performing DR detection. In particular, the models that deploy CNN are further analyzed and classified according to important properties of their respective CNN architectures. A total of 150 research articles related to the aforementioned areas, published in the past 5 years, have been used in this review to provide a comprehensive overview of the latest developments in the detection of DR.

1 Introduction

Diabetes mellitus is becoming increasingly common, with Type II diabetes reaching epidemic levels and Type I diabetes on a steady increase all around the world. With diabetes come various health complications and life-limiting diseases, including but not limited to macrovascular diseases such as ischemic heart disease, peripheral artery disease and stroke. It may also result in various microvascular diseases such as diabetic neuropathy, retinopathy and nephropathy. Diabetic retinopathy (DR) is a complication that occurs as a result of prolonged diabetes with poor blood sugar management. As the name suggests, DR affects the eyes and can ultimately result in vision loss. Diabetes affects insulin production and sensitivity, and therefore the ability of the body to absorb glucose, leading to high blood sugar levels. Left unmanaged, high blood sugar levels may damage the retinal vasculature of the eye. Blockage of the capillaries responsible for transporting blood to and from the eye results in decreased blood flow to the retina. The continuous need for oxygen naturally stimulates angiogenesis, generating new blood vessels to carry out the blood transport that the damaged vessels can no longer perform. In theory, this should resolve the oxygen deprivation of the eye, but for reasons not yet fully understood, retinal neovascularization occurs instead of reparative angiogenesis. This complicates matters, as the abnormal vessel growth compromises vision and might lead to blindness in diabetic patients.

DR affects up to 80% of people who have had diabetes for longer than 20 years and is responsible for 12% of all new cases of blindness. Globally, DR has been the leading cause of vision loss and blindness among those aged between 20 and 74 years (Vujosevic et al. 2020), and it affects an estimated 285 million people worldwide. As the number of diabetic patients continues to increase all around the world, DR arising from diabetes may prove to be a growing burden on ophthalmologists in terms of the time and effort needed to treat these growing numbers. Automating the most tedious processes may help alleviate some of the burden this disease places on the economy and healthcare system.

Much of our current understanding of the causes of DR ties it to systemic risk factors. Studies have shown that good glycemic management substantially hinders the development of DR. On the other hand, the accumulation of advanced glycation end products (AGEs), a by-product of poor glycemic management, is known to correlate with retinopathy risk (Genuth et al. 2015). Dyslipidemia (abnormal levels of lipids in the blood) and hypertension (high blood pressure) may also influence DR. However, studies suggest that intensive blood pressure control has little effect in hindering the progression of DR severity (The ACCORD Study Group and ACCORD Eye Study Group 2010). Collectively, the systemic risk factors account for less than 20% of all DR risk. This suggests that additional factors playing vital roles in DR initiation and progression remain undiscovered. A recent discovery shows that diabetes also has an impact on the retinal neurovascular unit and its interdependent neuronal, vascular, glial and immune cells (Duh et al. 2017). This opens up many new possibilities to explore in the pursuit of identifying DR initiation factors. While medical experts continue to seek ways to detect DR through factors that previously went unnoticed, convolutional neural networks are showing promise in the early detection of DR.

DR is generally classified into two stages: non-proliferative DR (NPDR) and proliferative DR (PDR). A major criterion in DR evaluation and analysis is the evident development of microvascular lesions such as microaneurysms, intraretinal hemorrhages, exudates, capillary nonperfusion, intraretinal microvascular abnormalities and venous caliber abnormalities, accompanied by neuronal infarcts and retinal neovascularization.

Other hallmark features foreboding the development of early DR usually accompanying and/or preceding lesion development are the thickening of the vascular basement membrane, premature death of pericytes and eventual endothelial cell death (Duh et al. 2017; Antonetti et al. 2016). Several retinal vascular pathological processes in DR have a direct impact on vision.

One such example is the loss of vessel integrity, which implies gradual nonperfusion of the retinal vasculature. This progresses into the eventual occlusion or degeneration of capillaries (Stitt et al. 2016), which in turn results in regions of ischemia and impaired oxygenation of retinal neurons. If the condition persists and capillary nonperfusion becomes progressive, hypoxia and proangiogenic growth factors stimulate abnormal formation of blood vessels in the retina. This is an indication that NPDR has worsened into PDR, which is characterized by pathologic preretinal neovascularization. Vision loss is highly likely at this point. Otherwise, in the NPDR stage, complications can also arise if retinal neovascularization occurs. Retinal neovascularization leads to vitreous hemorrhage or tractional retinal detachment (Stitt et al. 2016), both of which carry high risks of visual impairment.

Although there are only two stages of DR, it is worth mentioning diabetic macular edema (DME) at this point. This is an important category, since DME can occur at either of the two stages of DR and is also the most common cause of vision loss in DR patients. Simply put, edema occurs when the blood-retinal barrier breaks down and causes vascular leakage of circulating fluids and proteins into the neural retina, yet another cause of vision loss.

As of current knowledge, DR is not a curable disease. One characteristic of DR that continues to baffle researchers is how reparative angiogenesis almost never occurs despite the strong hypoxic stimulus and enhanced proangiogenic growth factor production. A recent study suggests that semaphorins, a protein class that controls axonal growth cone guidance, may be the root cause of this phenomenon. Specifically, semaphorins 3A, 3F and 6A (Wei et al. 2015) are implicated in suppressing the revascularization response of the ischemic retina, steering the neo-vessels to the vitreous instead. Since proangiogenic growth factor production almost always results in pathologic preretinal neovascularization rather than beneficial, reparative angiogenesis, extensive research has been done over the decades to identify growth factors such as VEGF (Aiello et al. 1994). Along the lines of this understanding, anti-VEGF treatment has been quite effective for this condition (Rami et al. 2017; Gross et al. 2015). We will say more on existing treatment methods later; for now, it suffices to note that for patients with no DR or mild NPDR, simple screening and glycemic control are often enough, while patients with moderate or more severe DR have various treatment options depending on the level of severity, ranging from laser scatter treatment to vitrectomy.

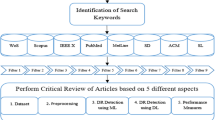

The automated detection of DR has been gathering more and more attention worldwide since 2015. Across all search engines, the number of articles produced has been increasing rapidly since 2015. To examine the current research trend in the automated detection of DR, the following query was entered into a research database, such as the Web of Science database:

("Diabetic Retinopathy" OR ("Fundus" AND ("exudate" OR "microaneurysm" OR "hemorrhages" OR "red lessions" OR "dark lessions" OR "cotton wool spots" OR "macular edema" OR "vessel network" OR "blood vessel" OR "optic disk"))) AND ("Convolutional" OR "Automated" OR "machine learning" OR "artificial intelligence" OR "neural network" OR "deep learning" OR "fuzzy logic" OR "random forest" OR "support vector machine")

The search was restricted to 2015 or later and to published journal articles only. The search results from https://app.dimensions.ai/ were subsequently exported to a bibliometric program, yielding the author co-citation network shown in Fig. 1.

Among the 1575 publications in the list obtained, Wong T.Y. was co-cited 1262 times, the highest number of co-citations among the thousands of authors, and is therefore displayed by the bibliometric program as the largest circle. It is worth noting that Wong T.Y. is also a co-author of seven of the journal articles reviewed in this paper, namely (Ting et al. 2017, 2018; Bellemo et al. 2019; Xie et al. 2020; Wong and Bressler 2016; Sabanayagam et al. 2018; Yip et al. 2020).

1.1 The structure of this review article

This review article consists of 5 sections. The tree diagram in Fig. 2 provides an overview of the main contents of this article.

In Sect. 1, a comprehensive overview of DR, which includes its complications, detection and treatment methods, is presented.

Section 2 presents the main content of this article, which is a review of the automated detection of DR using fundus images across recent years.

In Sect. 2.1, thorough general descriptions and analyses are given of the models upon which an AI is built for the automated detection of DR using fundus images. Substantial graphical presentation is provided, showing how the choice of model used to build the AI has changed across recent years.

Sections 2.2 and 2.3 follow immediately after Sect. 2.1 and provide more detailed discussions of the models used for DR detection, which form the most crucial part of this article.

In Sect. 2.2, an even more in-depth study is conducted specifically for CNN-based models, which were found to be the main choice among all the models used to build an algorithm. There are two major groups: those that deploy a readily available general-purpose CNN structure, and those that design their own CNN structure dedicated to the automated detection of DR.

A thorough discussion of the other models used in the detection of DR using fundus photography is presented in Sect. 2.3. First, all the AI that deploy standalone models other than CNN are reviewed. Attention is then given to all the AI that deploy hybrid models, for which the types of the hybrid model's constituents, as well as the trends in their choice, are reviewed and presented.

Then, in Sect. 2.4, thorough general descriptions and analyses are given of the different tasks an AI is built to perform, such as the detection of various diseased tissues resulting from complications of DR. Substantial graphical presentation is likewise provided. As the majority of the AI are designed for the general grading of DR according to its level of severity, Sect. 2.4.1 provides a more in-depth analysis of the articles that performed general DR grading.

The major fundus photography datasets used in the studies reviewed in this paper are presented in Sect. 2.5. This is followed by a review of the comparative studies performed by the authors, as well as our own commentary on which models seem to perform best for the detection of DR based on our observations.

In Sect. 3, other recent developments in the field of automated fundus-based DR detection are presented. First, in Sect. 3.1, a brief review is given of the recently trademarked applications for automated DR detection using fundus images whose authors have also published articles. Then, in Sect. 3.2, some newly developed performance-enhancing add-ons are presented. Such add-ons were developed by dedicated research groups to provide a performance boost when used alongside existing ML algorithms for automated DR detection using fundus images.

In Sect. 4, a review of the recent development of DR detection algorithms using OCTA (Optical Coherence Tomography Angiography) images is presented. OCTA is an emerging retinal imaging modality that provides unprecedented image detail compared with the conventional fundus imaging reviewed in Sects. 2 and 3.

Concluding remarks are presented in Sect. 5. Moreover, potential future directions of research will also be highlighted in this section, such as a shift of attention from using the conventional fundus images to using the recently developed OCTA image for automated DR detection. This is then followed by acknowledgements and the list of references.

2 Recent development on the automated detection of DR using fundus images

Altogether, more than 150 articles published since 2015 were collected from reputable journals across the World Wide Web, out of which 81 of those research articles [2015: (Ţălu et al. 2015; Mahendran and Dhanasekaran 2015a, b; Ibrahim et al. 2015), 2016: (Banerjee and Kayal 2016; Partovi et al. 2016; Wardoyo et al. 2016; Abràmoff et al. 2016; Prentašić and Lončarić 2016; Gulshan et al. 2016; Akyol et al. 2016; Gharaibeh 2016; Gharaibeh and Alshorman 2016), 2017: (Das and Puhan 2017; Dutta et al. 2015; Tan et al. 2017a, b; Xu et al. 2017; Quellec et al. 2017; Raju et al. 2017; Ting et al. 2017; Al-Jarrah and Shatnawi 2017; Abbas et al. 2017; Javidi et al. 2017; Zhang et al. 2017; Barkana et al. 2017), 2018: (Kaur and Mittal 2018; Mansour 2018; Brown et al. 2018; Randive et al. 2018; Ramachandran et al. 2018; Hemanth et al. 2018; Saleh et al. 2018; Seth and Agarwal 2018; Al-Hazaimeh et al. 2018), 2019: (Gao et al. 2018; Gonzalez-Gonzalo et al. 2020; Sahlsten et al. 2019; Liu et al. 2019; Hemanth et al. 2019; Sun 2019; Zhang et al. 2019; Li et al. 2019a, b; Eftekhari et al. 2019; Bellemo et al. 2019; Pires et al. 2019; Qummar et al. 2019; Zeng et al. 2019; Son et al. 2019; Gulshan et al. 2019; Nazir et al. 2019; Nair and Muthuvel 2019; Chowdhury et al. 2019; Pratheeba and Singh 2019; Memari et al. 2019; Jebaseeli et al. 2019a, b), 2020: (Colomer et al. 2020; Mateen et al. 2020; Wu et al. 2020; Shaban et al. 2020; Pao et al. 2020; Torre et al. 2020; Shah et al. 2020; Zago et al. 2020; Qiao et al. 2020; Srivastava and Purwar 2020; Shankar et al. 2020a, b; Samanta et al. 2020; Xie et al. 2020; Ayhan et al. 2020; Ali et al. 2020; Derwin et al. 2020a, b; Jadhav et al. 2020; Usman and Almejalli 2020; Luo et al. 2020; Gayathri et al. 2020; Bhardwaj et al. 2021)] are observed to be research articles in which new AI algorithms for DR detection using fundus images were innovated. 
In fact, across the literature published in this area from January 2015 to September 2020, a total of 84 newly developed algorithms were identified in those 81 research articles.

In most of the articles encountered, the norm has been to develop a single algorithm using a single type of model. There were nonetheless 3 notable exceptions during 2015–2020, each of which produced 2 new algorithms built on different types of model.

(i) In Mahendran and Dhanasekaran (2015a): one using a probabilistic neural network, thus classified as Other-NN in Sect. 2.1.2; the other using a support vector machine, thus classified as SVM in Sect. 2.1.2.

(ii) In Mahendran and Dhanasekaran (2015b): one using k-means clustering, thus classified as Other ML in Sect. 2.1.2; the other using fuzzy c-means, thus classified as Fuzzy-ML in Sect. 2.1.2.

(iii) In Saleh et al. (2018): one using random forest, thus classified as RF in Sect. 2.1.2; the other using a dominance-based rough set approach, thus classified as Other ML in Sect. 2.1.2.

Moreover, a few articles each produced multiple algorithms that remained very close in structure to one another and were all created using the same type of model, such as CNN. For clarity, all such algorithms from the same article using the same type of model are counted as one in our review.

Hence, there are altogether 84 distinct entries of newly developed AI algorithms for DR detection based on fundus images in the literature published in this area from January 2015 to September 2020. Those 84 distinct entries shall be collectively referred to as the New Fundus Algorithms throughout the remaining sections of this review article.
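
The counting rule described above can be sketched in a few lines of Python. This is only an illustration of the rule, not the authors' bookkeeping: the function name `count_entries` and the article "Example et al. 2019" are hypothetical, while the totals (81 articles, 3 of which contribute two entries each) are taken from the text.

```python
def count_entries(algorithms):
    """Count distinct entries from (article, model_type) pairs, one pair per algorithm.

    Algorithms from the same article that share a model type collapse into one entry.
    """
    return len(set(algorithms))


# Illustrative records; "Example et al. 2019" is a hypothetical article
# with three structurally similar CNN variants.
algorithms = [
    ("Mahendran and Dhanasekaran 2015a", "Other-NN"),
    ("Mahendran and Dhanasekaran 2015a", "SVM"),
    ("Example et al. 2019", "CNN"),
    ("Example et al. 2019", "CNN"),
    ("Example et al. 2019", "CNN"),
]
sample_entries = count_entries(algorithms)  # two entries plus one collapsed entry

# Totals reported in the text: 81 articles, 3 of which contribute 2 entries each.
articles, two_entry_articles = 81, 3
total_entries = (articles - two_entry_articles) + 2 * two_entry_articles
print(sample_entries, total_entries)
```

With 78 single-entry articles and 3 two-entry articles, the total is 78 + 6 = 84, which agrees with the figure stated above.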

On the other hand, we have also gone through many previously published review articles (Amin et al. 2016; Lu et al. 2018; Ting et al. 2018; Sorour et al. 2018; Raman et al. 2019; Grzybowski et al. 2019; Nagendran et al. 2020; Yip et al. 2020; Chaki et al. 2020; Alyoubi et al. 2020; Stolte and Fang 2020; Kandel and Castelli 2020; Ishtiaq et al. 2020; Islam et al. 2020). We found that none of these review articles provides a comprehensive comparison between the methods used by each of the authors for the detection of DR. This gap is especially relevant today, when most recent studies deploy convolutional neural networks (CNN) that differ in their internal architecture. Moreover, although many of the review articles did provide a table of the choices of datasets across the years, none of them was found to give a reliable overview of the research trend, because they reviewed only very few articles (often fewer than 20 articles even for a period of 10 years or more).

In three of the succeeding Sects. 2.1, 2.4 and 2.5, all the New Fundus Algorithms will be studied by examining their classifications under three different approaches:

(i) According to the choices of their constituent models, which can be one (as in Pure CNN), many (as in a hybrid model), or none (as in a conventional morphological method), in Sect. 2.1.

(ii) According to the specific tasks they are designed to perform, in Sect. 2.4.

(iii) According to the datasets they have used to train their ML-based algorithms, in Sect. 2.5.

2.1 The study of the new fundus algorithms by their overall model groups, choices of constituent models and methods

2.1.1 Observation on the constituent models among all entries of the New Fundus Algorithms

Among the 84 entries of the New Fundus Algorithms, most of them were observed to be built upon one or several of the following 6 constituent models of AI which are all ML based:

(i) CNN: Convolutional neural network.

(ii) ANN: Artificial neural network.

(iii) Other-NN: Derivatives of ANN other than CNN, such as recurrent neural network (RNN) and long short-term memory (LSTM).

(iv) Fuzzy-ML: Fuzzy logic-based methods with ML implemented, such as fuzzy inference systems and fuzzy time series, among others.

(v) SVM: Support vector machine.

(vi) RF: Random forest.

It is worth noting that all neural network-based models (ANN, CNN, Other-NN), as well as SVM and RF, inherently incorporate ML in their own structures.

As the entire structure of an ANN is itself a component of a CNN structure (the classification section, see Fig. 7), any algorithm said to combine CNN structures with ANN structures will be regarded as a Pure CNN model instead of a hybrid model. On the other hand, the structures of RNN and LSTM are entirely different from that of a conventional ANN, so an algorithm that incorporates features of both ANN and Other-NN will be considered a hybrid of ANN with Other-NN.

With regard to the repeated iterations of the ML procedure, it was further observed that some entries deployed a genetic algorithm to facilitate their machine learning procedures.

2.1.2 Classification of the New Fundus Algorithms into 10 mutually exclusive model groups

Each of the 84 entries of the New Fundus Algorithms was found to be classifiable under exactly one of the 10 mutually exclusive model groups described below:

(i) Conventional: Entries that are built solely upon conventional methods that do not incorporate any recent AI models, with or without ML. Most of these methods are morphology-based.

(ii) Pure CNN: Entries that are built solely using CNN, without deploying a genetic algorithm.

(iii) Pure ANN: Entries that are built solely using ANN, without deploying a genetic algorithm. In this review, if an entry is known to be NN-based but its article does not mention which particular NN model it belongs to, that entry is classified under this model group.

(iv) Pure Other-NN: Entries that are built solely using Other-NN, without deploying a genetic algorithm.

(v) Pure Fuzzy-ML: Entries that are built solely using Fuzzy-ML, without deploying a genetic algorithm.

(vi) Pure SVM: Entries that are built solely using SVM, without deploying a genetic algorithm.

(vii) Pure RF: Entries that are built solely using RF, without deploying a genetic algorithm.

(viii) Other ML: Entries that are built using ML method(s) other than CNN, ANN, Other-NN, Fuzzy-ML, SVM and RF, without deploying a genetic algorithm.

(ix) Only Genetic: Entries that deployed a genetic algorithm onto an unknown host model, i.e. the host model was not mentioned anywhere in the article.

(x) Hybrid ML: Entries that satisfy at least one of the following conditions:

(a) Entries that incorporate 2 or more constituent models among {CNN, ANN, Other-NN, Fuzzy-ML, SVM, RF}.

(b) Entries that incorporate 1 constituent model among {CNN, ANN, Other-NN, Fuzzy-ML, SVM, RF} together with some other ML methods.

(c) Entries that incorporate 1 constituent model among {CNN, ANN, Other-NN, Fuzzy-ML, SVM, RF} together with a genetic algorithm.
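
The ten-group rule above, together with the CNN-plus-ANN convention from Sect. 2.1.1, can be expressed as a small decision function. The sketch below is an illustration under stated assumptions rather than the procedure actually used in this review: the function name `model_group` and its three arguments are hypothetical, an entry with no ML at all is assumed to be Conventional, and the combination of a genetic algorithm with other ML methods but no constituent model is not covered by the definitions above, so the sketch rejects it.

```python
CONSTITUENTS = {"CNN", "ANN", "Other-NN", "Fuzzy-ML", "SVM", "RF"}


def model_group(constituents=(), other_ml=False, genetic=False):
    """Return the model group of one entry (hypothetical helper).

    constituents: names of the constituent models the entry uses
    other_ml:     True if the entry also uses ML methods outside the six models
    genetic:      True if the entry deploys a genetic algorithm
    """
    models = set(constituents)
    unknown = models - CONSTITUENTS
    if unknown:
        raise ValueError(f"unknown constituent model(s): {sorted(unknown)}")

    # An ANN is already the classification section of a CNN,
    # so CNN combined with ANN is treated as CNN alone.
    if "CNN" in models:
        models.discard("ANN")

    if not models:
        if genetic and other_ml:
            # Assumption: this combination is not defined in the text.
            raise ValueError("combination not covered by the ten groups")
        if genetic:
            return "Only Genetic"
        if other_ml:
            return "Other ML"
        return "Conventional"  # assumption: no ML component at all

    # Conditions (a), (b) and (c) of the Hybrid ML group.
    if len(models) >= 2 or other_ml or genetic:
        return "Hybrid ML"

    return "Pure " + next(iter(models))


print(model_group(["CNN", "ANN"]))        # Pure CNN
print(model_group(["ANN", "Other-NN"]))   # Hybrid ML
print(model_group(["SVM"], genetic=True)) # Hybrid ML
```

An NN-based entry whose exact model is not stated would be passed in as `["ANN"]`, following rule (iii).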

Due diligence was exercised in studying all the articles containing entries of the New Fundus Algorithms. The proportion of the 84 entries of the New Fundus Algorithms that belong to each of the 10 model groups is shown in Fig. 3.

Figure 4 shows the 10 model groups containing these 84 entries of the New Fundus Algorithms, arranged by year of publication. Figure 5 then gives the converse perspective: the years of publication of these 84 entries, arranged by the model groups to which they belong.

Thus, from Figs. 4 and 5, it is clearly visible that much of the research work on the New Fundus Algorithms has converged on the sole use of convolutional neural networks (Pure CNN) since 2016 (Abràmoff et al. 2016; Prentašić and Lončarić 2016; Gulshan et al. 2016; Tan et al. 2017a, b; Xu et al. 2017; Quellec et al. 2017; Raju et al. 2017; Ting et al. 2017; Mansour 2018; Brown et al. 2018; Gao et al. 2018; Gonzalez-Gonzalo et al. 2020; Sahlsten et al. 2019; Liu et al. 2019; Hemanth et al. 2019; Sun 2019; Zhang et al. 2019; Li et al. 2019a; Eftekhari et al. 2019; Bellemo et al. 2019; Pires et al. 2019; Qummar et al. 2019; Zeng et al. 2019; Mateen et al. 2020; Wu et al. 2020; Shaban et al. 2020; Pao et al. 2020; Torre et al. 2020; Shah et al. 2020; Zago et al. 2020; Qiao et al. 2020; Srivastava and Purwar 2020; Shankar et al. 2020a; Samanta et al. 2020; Xie et al. 2020; Ayhan et al. 2020). Particularly since 2019, a tremendous increase was observed in the annual number of entries among the New Fundus Algorithms that adopted CNN as their main model of development (Gao et al. 2018; Gonzalez-Gonzalo et al. 2020; Sahlsten et al. 2019; Liu et al. 2019; Hemanth et al. 2019; Sun 2019; Zhang et al. 2019; Li et al. 2019a; Eftekhari et al. 2019; Bellemo et al. 2019; Pires et al. 2019; Qummar et al. 2019; Zeng et al. 2019; Mateen et al. 2020; Wu et al. 2020; Shaban et al. 2020; Pao et al. 2020; Torre et al. 2020; Shah et al. 2020; Zago et al. 2020; Qiao et al. 2020; Srivastava and Purwar 2020; Shankar et al. 2020a; Samanta et al. 2020; Xie et al. 2020; Ayhan et al. 2020). Meanwhile, the computational power of consumer PCs has increased tremendously since 2016 for both CPU and GPU. Most of the software needed to run such programs is now free (gratis) and provides plenty of freedom of use, even for commercial use. This grants researchers unprecedented access to the parallel GPU computing necessary to carry out the resource-intensive ML procedures that train an AI built using pure CNN. In the near future, it is therefore very likely that an even higher number of new pure CNN-based algorithms will appear every year.

2.1.3 An even more detailed classification of the entries in accordance with their constituent models and methods

To provide more detailed insight into the model groups used among the New Fundus Algorithms, Table 1 and Fig. 6 show the general distribution of the 84 entries according to the constituent models and methods on which they are based. In particular, the six constituent models are as previously mentioned in Sect. 2.1.1, and they are reviewed together with another 2 methods, namely Other ML (i.e. entries that deployed other ML models as their constituents, without using a genetic algorithm) and Genetic (i.e. entries that deployed a genetic algorithm).

The areas of all regions in Fig. 6 are directly proportional to the number of entries that fall within each combination. Moreover, in Fig. 6, all the cardinalities representing entries under Hybrid ML are displayed in green. The remaining 9 numbers, shown in black, correspond to the number of entries under model groups 1 to 9 as defined in Sect. 2.1.2.

It is therefore clearly observable in Fig. 6 that 37 out of the 84 entries belong to the Pure CNN model group. Section 2.2 is therefore dedicated to an in-depth study of the pure CNN-based algorithms grouped among these 37 entries (see the beginning of Sect. 2 for more details), revealing the intricate details and minute differences among the entries belonging to Pure CNN. Entries in other model groups are discussed in Sect. 2.3.

2.2 Pure CNN: the main model group for the new fundus algorithms: an even more in-depth review

With as many as 37 entries of the New Fundus Algorithms built purely upon CNN, this section is dedicated to an in-depth study of the CNN-based algorithms grouped among these 37 entries. First, in Sect. 2.2.1, the fundamental design of the CNN architecture is reviewed. This is followed in Sect. 2.2.2 by a thorough study of the recently introduced general-purpose CNN blueprints used for the detection of DR. Then, in Sect. 2.2.3, several self-customized CNN architectures dedicated to DR detection are discussed.

2.2.1 The fundamental CNN design and the needs of preprocessing

Figure 7 below shows the essential structure of a typical CNN architecture for general DR grading.

One of the most obvious ways to further improve the outcome of a CNN-based algorithm is to increase the “clarity” of the image right before it is fed into the system. This was usually accomplished by raising the contrast and sharpness of the image with photo editing software or similar programs, to accentuate the relevant features of the fundus image (Tan et al. 2017a, b; Eftekhari et al. 2019; Zago et al. 2020; Samanta et al. 2020) and to suppress unwanted artifacts and noise (Prentašić and Lončarić 2016), before feeding the data into the CNN. This also ensures that all the images fed into the CNN possess uniform overall brightness and color (Tan et al. 2017a, b; Eftekhari et al. 2019; Zago et al. 2020; Samanta et al. 2020). Moreover, in a dataset of fundus images whose resolutions vary, resizing was usually done to standardize the image size before the images were fed into the CNN (Sahlsten et al. 2019; Qummar et al. 2019). Such procedures are collectively referred to as preprocessing (Fig. 8).
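
To make the two most common steps concrete, the following is a minimal pure-Python sketch of resizing followed by contrast stretching on a grayscale image stored as a list of rows. It is not taken from any of the reviewed articles: the function names, the nearest-neighbour resizing and the min-max stretch are illustrative assumptions, and a real pipeline would normally rely on an image processing library and on color images.

```python
def resize_nearest(image, out_h, out_w):
    """Resize a 2D image (list of rows) by nearest-neighbour sampling."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def stretch_contrast(image, lo=0.0, hi=1.0):
    """Linearly rescale pixel values so the darkest maps to lo and the brightest to hi."""
    flat = [p for row in image for p in row]
    p_min, p_max = min(flat), max(flat)
    if p_max == p_min:
        # A constant image carries no contrast to stretch.
        return [[lo for _ in row] for row in image]
    span = p_max - p_min
    return [[lo + (p - p_min) / span * (hi - lo) for p in row] for row in image]


def preprocess(image, size):
    """Standardize the image size first, then normalize its brightness range."""
    return stretch_contrast(resize_nearest(image, size, size))


# A tiny low-contrast 2x2 "image" with values between 50 and 90.
raw = [[50, 60], [70, 90]]
ready = preprocess(raw, size=4)
for row in ready:
    print(row)
```

After this step every image has the same size and spans the same value range, which is the uniformity that the preprocessing described above aims for.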

Among all entries of the New Fundus Algorithms built using pure CNN, we tabulated in Fig. 9 the number of algorithms for which significant preprocessing was observed before the input was fed into the CNN [2016: (Prentašić and Lončarić 2016; Gulshan et al. 2016), 2017: (Tan et al. 2017a, 2017b; Xu et al. 2017; Raju et al. 2017), 2018: (Mansour 2018), 2019: (Gao et al. 2018; Gonzalez-Gonzalo et al. 2020; Sahlsten et al. 2019; Liu et al. 2019; Hemanth et al. 2019; Zhang et al. 2019; Li et al. 2019a; Eftekhari et al. 2019; Pires et al. 2019; Qummar et al. 2019; Zeng et al. 2019), 2020: (Mateen et al. 2020; Wu et al. 2020; Pao et al. 2020; Zago et al. 2020; Srivastava and Purwar 2020; Samanta et al. 2020)]. The articles behind these entries also elaborated on their method of preprocessing. These are compared against the algorithms that were not found to perform preprocessing [2016: (Abràmoff et al. 2016), 2017: (Quellec et al. 2017; Ting et al. 2017), 2018: (Brown et al. 2018), 2019: (Sun 2019; Bellemo et al. 2019), 2020: (Shaban et al. 2020; Torre et al. 2020; Shah et al. 2020; Qiao et al. 2020; Shankar et al. 2020a; Xie et al. 2020; Ayhan et al. 2020)], either because the articles state so themselves or because nothing in the article suggests that any preprocessing was done.

It can be observed that there is a significant drop in the number of entries (recall that each entry can be either a single algorithm or several algorithms that are structurally very close to one another, as mentioned at the beginning of Sect. 2) that were observed to perform standalone preprocessing in 2020 (Mateen et al. 2020; Wu et al. 2020; Pao et al. 2020; Zago et al. 2020; Srivastava and Purwar 2020; Samanta et al. 2020), compared with 2019 (Gao et al. 2018; Gonzalez-Gonzalo et al. 2020; Sahlsten et al. 2019; Liu et al. 2019; Hemanth et al. 2019; Zhang et al. 2019; Li et al. 2019a; Eftekhari et al. 2019; Pires et al. 2019; Qummar et al. 2019; Zeng et al. 2019). From the articles of 2020 (Mateen et al. 2020; Wu et al. 2020; Shaban et al. 2020; Pao et al. 2020; Torre et al. 2020; Shah et al. 2020; Zago et al. 2020; Qiao et al. 2020; Srivastava and Purwar 2020; Shankar et al. 2020a; Samanta et al. 2020; Xie et al. 2020; Ayhan et al. 2020), it is concluded that preprocessing is no longer a necessity, because the newly developed CNN architectures (from 2020 onward) are by themselves capable of tracing even the tiniest differences among colors or textures, without needing to be provided with a manually enhanced image.
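
The size of this drop can be checked with a short calculation. The counts below were tallied by hand from the citation lists given above for Fig. 9, under the assumption that each citation corresponds to exactly one entry; the figure itself remains the authoritative source, and the variable and function names are illustrative.

```python
# Entries per year with and without observed preprocessing,
# tallied from the citation lists above (assumption: one citation = one entry).
with_prep = {2016: 2, 2017: 4, 2018: 1, 2019: 11, 2020: 6}
without_prep = {2016: 1, 2017: 2, 2018: 1, 2019: 2, 2020: 7}


def preprocessing_share(with_counts, without_counts):
    """Fraction of pure CNN entries per year that performed preprocessing."""
    shares = {}
    for year in sorted(with_counts):
        total = with_counts[year] + without_counts[year]
        shares[year] = with_counts[year] / total
    return shares


shares = preprocessing_share(with_prep, without_prep)
total_entries = sum(with_prep.values()) + sum(without_prep.values())

for year, share in shares.items():
    print(year, f"{share:.0%}")
print("total pure CNN entries:", total_entries)
```

Under this tally the share of pure CNN entries with preprocessing falls from about 85% in 2019 (11 of 13) to about 46% in 2020 (6 of 13), and the two lists together account for all 37 pure CNN entries.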

Another obvious way to further improve the outcome of a CNN based algorithm is to increase the complexity of the CNN itself. It is worth noting that the oldest CNN architecture, LeNet (Lecun et al. 1998), remained the blueprint used by most authors for developing new CNN based algorithms for automated DR detection until 2017. Many groups of authors thus extended LeNet by introducing additional layers in the feature extraction segment, the classification segment, or both, accompanied by more neurons and more feature maps in each layer. One notable example is Tan et al. (2017a), who developed an AI algorithm that detects EX, MA and HM using a CNN enlarged from LeNet.

3.4.2 The recent general-purpose CNN (RGP-CNN): new blueprints for the mainstream CNN developers for DR detection

It was not until the year 2018 that a large number of more recent, profoundly complex architectures of CNN began to get attention among AI developers for DR detection, such as AlexNet (Krizhevsky et al. 2012), VGGNet (Simonyan and Zisserman 2015), GoogleNet/Inception (Szegedy et al. 2015), ResNet (He et al. 2015), DenseNet (Huang et al. 2017) and SeNet (Hu et al. 2018). Such newly developed architectures were however general-purpose in nature and thus their structures were not specifically tuned for any practical purpose, let alone for DR detection. For the remaining part of this article, those architectures shall be collectively referred to as Recent General-Purpose CNN (abbr. RGP-CNN). It is worth mentioning that an RGP-CNN tends to be extremely complex in structure, except some very early versions like AlexNet. For example, InceptionV3 consists of more than 100 crisscrossing layers and 7 different operations including convolution itself (https://software.intel.com/content/www/us/en/develop/articles/inception-v3-deep-convolutional-architecture-for-classifying-acute-myeloidlymphoblastic.html). Hence, throughout this review, any RGP-CNN deployed in a given AI algorithm will be represented by a single icon as shown in Fig. 10.

It is worth reminding that an RGP-CNN is not tuned for any dedicated practical application, and thus it can still be outperformed by a smaller but dedicated CNN architecture customized specially for DR detection.

Following the commonly accepted notation in the literature, if a number is mentioned after the name of an RGP-CNN, that number specifies the number of layers present in that particular subclass of RGP-CNN. Thus, VGGNet19 denotes the particular subclass of VGGNet that is 19 layers deep. Some author groups, however, did not mention the number of layers of the particular subclass of RGP-CNN used in their works. In such cases, only the name of that RGP-CNN will be presented in this review.

In 2018, Mansour (2018) deployed AlexNet, which is just newer than LeNet, for General DR Grading. In the same year, Wan et al. (2018) were observed to be among the pioneers in performing a comparative study on the performance of six RGP-CNN when adopted for DR detection. The architectures studied by Wan et al. (2018) were: AlexNet, 3 derivatives of VGGNet (VGGNet-s, VGGNet16, VGGNet19), GoogleNet (a.k.a. Inception), and ResNet. Following in the footsteps of such comparative studies, most algorithms from the year 2018 onwards discarded the conventional LeNet and began to adopt one or several RGP-CNN as the blueprints for their own CNN based algorithms for DR detection. Among the RGP-CNN commonly studied by those authors were ResNet (Liu et al. 2019; Zhang et al. 2019; Mateen et al. 2020; Shankar et al. 2020a; Wan et al. 2018), GoogleNet (Mateen et al. 2020; Shankar et al. 2020a; Wan et al. 2018), and VGGNet (Mateen et al. 2020; Shankar et al. 2020a; Wan et al. 2018).

With such RGP-CNN as the new blueprints, some CNN based algorithms innovated since 2019 (Gao et al. 2018; Gonzalez-Gonzalo et al. 2020; Zhang et al. 2019; Bellemo et al. 2019; Qummar et al. 2019; Zeng et al. 2019; Mateen et al. 2020; Shankar et al. 2020a; Xie et al. 2020) contained multiple RGP-CNN architectures (not to be confused with a hybrid AI model, because it is still a CNN based model).

Among those algorithms with multiple RGP-CNN architectures, some deployed mutually distinct RGP-CNN architectures (Zhang et al. 2019; Bellemo et al. 2019; Qummar et al. 2019; Mateen et al. 2020; Xie et al. 2020), all working concurrently on identically preprocessed data of a fundus image. The final output was then computed from the outcomes yielded by all the constituent RGP-CNN, using a certain formula or even another ANN-like network. There were six such algorithms observed among the literature published since 2019, all of which are presented in Table 2.
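
As an illustration of how the outputs of several concurrent networks might be fused, the following minimal sketch averages the class probabilities of the constituent networks and selects the most probable class. Plain averaging is only one possible choice of "formula" and is an assumption made here; it is not taken from any of the cited works, and the function name `average_ensemble` and the sample probabilities are hypothetical.

```python
def average_ensemble(prob_vectors):
    """Average per-class probabilities from several models and pick the top class."""
    n_models = len(prob_vectors)
    n_classes = len(prob_vectors[0])
    mean_probs = [
        sum(p[c] for p in prob_vectors) / n_models for c in range(n_classes)
    ]
    predicted = max(range(n_classes), key=lambda c: mean_probs[c])
    return mean_probs, predicted


# Hypothetical softmax outputs of three distinct networks for one fundus image
outputs = [
    [0.70, 0.20, 0.10],
    [0.40, 0.50, 0.10],
    [0.60, 0.30, 0.10],
]
mean_probs, predicted = average_ensemble(outputs)
```

A learned fusion stage (the "ANN-like network" mentioned above) would replace the plain average with trainable weights.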

On the other hand, the remaining algorithms with multiple RGP-CNN architectures deployed mutually identical RGP-CNN (Gao et al. 2018; Gonzalez-Gonzalo et al. 2020; Zeng et al. 2019; Shankar et al. 2020a), all working concurrently on distinctively preprocessed data of a fundus image. Likewise, the final output was then computed from the outcomes yielded by all the constituent RGP-CNN, using a certain formula or even another ANN-like network. It is worth noting that two mutually identical RGP-CNN used in a given algorithm will still end up with different network weights, because each of them is trained for a different purpose, say examining the left eye and the right eye as encountered in Zeng et al. (2019).

The algorithm developed by Zeng et al. (2019) for General DR Detection takes both eyes as inputs, and analyses and even compares them simultaneously, using InceptionV3 for both eyes. Hence the name “Binocular Siamese-like” given by the authors themselves (Fig. 11).

The algorithm developed by Zeng et al. (2019) for General DR Detection using two mutually identical CNN architecture

Later in 2020, Shankar et al. (2020a) proposed another algorithm for General DR Grading which deploys two concurrent ResNet50, said to be connected consecutively to an ANN (“synergic”) (Fig. 12).

The CNN architecture developed by Shankar et al. (2020a) for general DR grading

Besides, two other algorithms for DR are rather more complex than the rest of the aforementioned architectures with multiple CNN. Firstly, Gonzalez-Gonzalo et al., in their recent article published in JAMA in 2019 (Gonzalez-Gonzalo et al. 2020), incorporated six DenseNet joined concurrently in their algorithm for General DR Grading. Each DenseNet took on one of the three RGB channels of one of two distinctively preprocessed images, which were obtained from the raw fundus image using different methods. The final result is simply the mean (“average DR score”) of the six outputs of the six DenseNet architectures. Secondly, Gao et al. (2018) proposed an algorithm for General DR Detection which consists of four concurrent InceptionV3, each of which works on one quarter of the image and produces a tiny monochrome image instead of a mere numerical output such as a grade from 0 to 4. The final answer was concatenated from the 4 monochrome images produced by the four InceptionV3. This yielded a final monochrome image which, in addition to General DR Grading, is also capable of giving the approximate location of the diseased tissues (approximate because the final image is still very small).
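
The quadrant-wise scheme described for Gao et al. (2018) can be pictured with the following minimal sketch. It only illustrates the split-and-concatenate idea: `toy_lesion_map` is a hypothetical stand-in for one network branch (a simple brightness threshold, not InceptionV3), and, unlike the reviewed algorithm, the toy output maps have the same size as the input quadrants.

```python
def split_quadrants(image):
    """Split a 2-D list (even height and width) into TL, TR, BL, BR quadrants."""
    h, w = len(image), len(image[0])
    top, bottom = image[: h // 2], image[h // 2:]
    return (
        [row[: w // 2] for row in top],
        [row[w // 2:] for row in top],
        [row[: w // 2] for row in bottom],
        [row[w // 2:] for row in bottom],
    )


def join_quadrants(tl, tr, bl, br):
    """Concatenate four equally sized maps back into one map."""
    upper = [a + b for a, b in zip(tl, tr)]
    lower = [a + b for a, b in zip(bl, br)]
    return upper + lower


def toy_lesion_map(quadrant):
    """Hypothetical branch: marks pixels brighter than 200 as suspicious."""
    return [[1 if px > 200 else 0 for px in row] for row in quadrant]


image = [
    [10, 20, 30, 40],
    [10, 250, 30, 40],
    [10, 20, 30, 40],
    [10, 20, 30, 255],
]
maps = [toy_lesion_map(q) for q in split_quadrants(image)]
lesion_map = join_quadrants(*maps)
```

The nonzero entries of `lesion_map` indicate where in the full image the suspicious tissue lies, which is the localization benefit noted above.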

3.4.3 Self-customized CNN architecture dedicated for DR detection

Nevertheless, some authors chose to develop their own customized CNN architecture dedicated to DR detection, without relying on any RGP-CNN in the literature as their blueprint. These self-customized CNN architectures tend to be just as complex as the RGP-CNN developed in the same period. Moreover, there is a much greater degree of diversity among the algorithms that use customized CNN architectures than among those that simply deploy RGP-CNN. This is because each of the internal constituents can potentially be fully customized by the authors, unlike a packaged RGP-CNN whose internal components can hardly be changed (or cannot be changed at all, as in the case of cloud computing). As a result, the internal constituents of each of these individually customized CNN architectures (Sun 2019; Li et al. 2019a; Eftekhari et al. 2019; Pires et al. 2019; Wu et al. 2020; Pao et al. 2020) will likewise not be described in this review.

Among all of the groups of authors observed since 2018, Liu et al. (2019) were among the few who showed that an algorithm built using a self-customized CNN dedicated to a certain task of DR screening can potentially outperform one that is built using an RGP-CNN of much greater complexity. Liu et al. (2019) compared their algorithm against 5 RGP-CNN (ResNet50, ResNet101, DenseNet121, SeNet50, SeNet101); using only 32 layers, it outperformed all of them, achieving an accuracy of 93.80%. The algorithm built by Liu et al. (2019) was for General DR Detection.

Eftekhari et al. (2019) constructed two mutually distinct customized CNN architectures joined in series for EX detection. In contrast, Wu et al. (2020) constructed two mutually distinct customized CNN architectures joined concurrently to perform General DR Grading, involving two distinctively preprocessed versions of a fundus image. One of the CNN performs General DR Detection (Normal/DR), while the other classifies the severity of DR into 4 stages (Mild NPDR/Moderate NPDR/Severe NPDR/PDR). On the other hand, Pao et al. (2020) constructed two mutually identical customized CNN architectures joined concurrently to perform General DR Grading, also involving distinctively preprocessed data of a fundus image. Both of the CNN are, however, capable of performing General DR Grading on their own. One of the CNN worked on the preprocessed red channel of the fundus image, and the other worked on the preprocessed green channel. The blue channel of the fundus image was not used in their CNN.
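
To make the division of labour between a detection network and a severity network concrete, the sketch below combines the two outputs into a single five-level grade. How Wu et al. (2020) actually merge their two networks is not described in this review; the decision rule, the threshold of 0.5 and the function name `cascade_grade` are assumptions introduced purely for illustration.

```python
GRADES = ["Normal", "Mild NPDR", "Moderate NPDR", "Severe NPDR", "PDR"]


def cascade_grade(p_dr, severity_probs, threshold=0.5):
    """Combine a Normal/DR probability with a 4-stage severity distribution.

    p_dr           -- probability of DR from the (hypothetical) detection network
    severity_probs -- four probabilities from the (hypothetical) severity network
    threshold      -- assumed cut-off below which the image is graded Normal
    """
    if p_dr < threshold:
        return GRADES[0]
    stage = max(range(len(severity_probs)), key=lambda i: severity_probs[i])
    return GRADES[1 + stage]


healthy = cascade_grade(0.10, [0.25, 0.25, 0.25, 0.25])
diseased = cascade_grade(0.90, [0.10, 0.60, 0.20, 0.10])
```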

Pires et al. (2019) constructed three customized CNN architectures which are all joined concurrently in their model, each of them in charge of a preprocessed input image of a different resolution. The customized CNN architecture constructed by Sun (2019), also for General DR Detection, has all the data normalized between each layer. Moreover, the algorithm developed by Li et al. (2019a) uses a U-Net structure (Cao et al. 2018) for the feature extraction segment.

3.5 A review on the other minority models groups used by the entries of the new fundus algorithms

In this section, some of the other machine learning models that have been used for the detection of DR using conventional fundus photography are reviewed.

3.5.1 ANN: a tabular representation

There was also a slight but observable increase in the annual number of articles which developed an ANN based model. Nevertheless, the number of new ANN algorithms has always been much smaller than that of CNN. This is because CNN, by its fundamental structure and purpose, was inherently created for analyzing graphical images (Lecun et al. 1998), and is thus much better suited to analyzing fundus images than ANN, which was built to analyze verbal or numerical data.

Altogether, 12 recent articles utilizing ANN models were observed during the period of 2016–2020. Their particulars are summarized in Table 3 below.

It is worth noting that most algorithms utilizing ANN are designed to perform general classification of the presence or severity of DR. This is mainly because the general classification of DR does not require a large number of pixels from the raw data to be used as inputs. In such cases, the severity will mainly depend on how many inputs have colors that differ unusually from those of a healthy retina.

3.5.2 Other standalone algorithms: a tabular representation

In this section we discuss the author groups from the year 2015 onwards who were found to use models other than CNN or ANN to develop their algorithms for DR detection. Table 4 shows the list of authors who used a standalone ML algorithm which was neither ANN nor CNN.

As shown in Table 4, FCM, RF and SVM were among the ML models right after CNN and ANN in terms of the frequency of appearance in literature.

On the other hand, in 2020, Usman and Almejalli (2020) were the first authors observed to develop an algorithm using a standalone genetic algorithm (GA). In that article, they proposed using GA to perform General DR Grading.

There were 8 entries from the New Fundus Algorithms, from seven articles (Ţălu et al. 2015; Banerjee and Kayal 2016; Partovi et al. 2016; Wardoyo et al. 2016; Das and Puhan 2017; Dutta et al. 2015; Kaur and Mittal 2018), that used conventional methods up to 2019. From 2015 to 2019, the percentage of algorithms deploying conventional methods was evidently in decline, reaching a count of 0 by the year 2019. However, in the year 2020, Colomer et al. (2020) were the only observed group of authors who revived the conventional morphological method, this time by devising a morphological algorithm that can be combined with a variety of other non-NN ML algorithms, such as SVM or genetic algorithm. This was said to produce hybrids that surpass each of the standalone constituents.

3.5.3 Hybrid models with ML

In every year from 2015 to 2020, there were around 2 full-length journal articles per year that were found deploying hybrid models to develop their algorithm. In total, 13 articles were found altogether (Ibrahim et al. 2015; Gharaibeh 2016; Gharaibeh and Alshorman 2016; Zhang et al. 2017; Barkana et al. 2017; Seth and Agarwal 2018; Al-Hazaimeh et al. 2018; Li et al. 2019b; Jebaseeli et al. 2019a, b; Luo et al. 2020; Gayathri et al. 2020; Bhardwaj et al. 2021) during this period. The constituents of their algorithms are as shown in Table 5.

During 2015–2019, as shown in Table 5, 8 of the entries (Ibrahim et al. 2015; Gharaibeh 2016; Gharaibeh and Alshorman 2016; Barkana et al. 2017; Seth and Agarwal 2018; Li et al. 2019b; Jebaseeli et al. 2019a, b) used one NN-based model as one of the constituents, and some non-NN-based models as the other constituents. This was to compensate the various shortcomings of NN-based models, such as the sheer amount of time and computation needed on the ML process. There was indeed a pattern observed, among the 8 entries, on the choices of both their NN-based constituent and their non-NN-based constituent as time goes by, as shown in Table 5, where authors had begun to use CNN in place of ANN as the NN-based constituent (Seth and Agarwal 2018; Li et al. 2019b), and to use SVM in place of Fuzzy-ML as the non-NN-based constituent (Seth and Agarwal 2018; Li et al. 2019b; Jebaseeli et al. 2019a, b).

On the other hand, all three author groups in the year 2020 used combinations that were never deployed during 2015–2019.

3.6 The study of the New Fundus Algorithms by their designed tasks

Through due diligence of studying all the articles containing the entries of the New Fundus Algorithms, it was observed that most of these entries were built for one or several specifically designed tasks, classifiable into three main task groups as shown in Table 6.

In analogy with Table 1 and Fig. 6 under Sect. 2.1.3, we now present Table 7 and Fig. 13 showing the general distribution of all the 84 entries of the New Fundus Algorithm according to their designed tasks, shown in abbreviations referable to Table 6.

In Fig. 13, the areas of all regions are likewise shown directly proportional to the number of entries, similar to Fig. 6 from Sect. 2.1.3.

As the majority of the models are observed to be dedicated to general DR grading, Sect. 2.4.1 is presented to provide an in-depth review of those works.

3.6.1 An in-depth review of works that performed general DR grading

In 2015, Mahendran and Dhanasekaran (2015a) accomplished DR grading through various parameters, each summarized from the data contained in an image, such as the number of pixels as well as the grey levels of the pixels. Besides, Ibrahim et al. (2015) also considered the Fourier spectrum of the textures, in addition to some parameters similar to those used by Mahendran and Dhanasekaran (2015a). On the other hand, Ţălu et al. (2015) looked solely at the fractal patterns obtained from a fundus image through certain algorithms, which may or may not have resulted from blood vessels. In such a case, the grading will depend on how closely the fractal patterns truly resemble blood vessels rather than other diseased tissues.

In 2016, Gharaibeh and Alshorman (2016) separated the optical disc and blood vessels in their process of general DR grading.

In 2017, Xu et al. (2017) deployed a CNN which works on 3 input images of 224 × 224; these 3 input images were obtained from the raw image using different preprocessing techniques. Quellec et al. (2017) and Raju et al. (2017) each deployed a CNN which works on 1 input image of 448 × 448. The three CNN networks deployed in Xu et al. (2017), Quellec et al. (2017) and Raju et al. (2017) were given very detailed descriptions. As a comparison, the CNN deployed in Xu et al. (2017) contains 8 convolution layers, the CNN deployed in Raju et al. (2017) contains 11 convolution layers, whereas the CNN deployed in Quellec et al. (2017) contains 13 convolution layers. Moreover, the CNN deployed in Xu et al. (2017) uses 2 × 2 max pooling, whereas the CNN deployed in Quellec et al. (2017) and Raju et al. (2017) use 3 × 3 max pooling.

Meanwhile in 2017, Al-Jarrah and Shatnawi (2017) deployed a conventional ANN which takes various parameters, such as the area of exudates and hemorrhages, as inputs. Abbas et al. (2017) also deployed a conventional ANN; however, the structure of their inputs was not clearly described. Based on the common practice for conventional ANN, it can be expected that Abbas et al. (2017) deployed methods similar to those of Al-Jarrah and Shatnawi (2017) or Mahendran and Dhanasekaran (2015a) to obtain the appropriate inputs.

In 2018, Mansour (2018) fed 512 × 512 images as inputs into his CNN. As a trade-off for the larger image size, his CNN consists of 5 convolution layers, fewer than those of Xu et al. (2017), Quellec et al. (2017) and Raju et al. (2017). Seth and Agarwal (2018) deployed a hybrid of CNN and SVM, which also worked on 512 × 512 images as the input. In particular, Seth and Agarwal (2018) trained their model on various orientations of a given image, such as mirror images and rotations.
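
Training on mirrored and rotated copies of an image, as described for Seth and Agarwal (2018), is a form of data augmentation. The sketch below generates such views for an image stored as a list of rows. The choice of exactly eight views (four right-angle rotations of the image and of its mirror) is an assumption made here for illustration; the set of orientations actually used by the authors is not stated in this review.

```python
def mirror(image):
    """Flip the image left to right."""
    return [row[::-1] for row in image]


def rotate90(image):
    """Rotate the image clockwise by 90 degrees."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the four right-angle rotations of the image and of its mirror."""
    views = []
    for base in (image, mirror(image)):
        current = base
        for _ in range(4):
            views.append(current)
            current = rotate90(current)
    return views


image = [[1, 2], [3, 4]]
views = augment(image)
```

Each view carries the same label as the original image, so the training set grows without any additional grading effort.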

Meanwhile in 2018, Hemanth et al. (2018) used 6 types of textural features (mean, variance, standard deviation, contrast, entropy and energy) as inputs of their neural network-based algorithm. This methodology is deemed similar to that deployed by Mahendran and Dhanasekaran (2015a) in 2015.
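
The six textural features named above can be computed from a grey-level patch as in the following sketch. The exact definitions used by Hemanth et al. (2018) are not given in this review, so the formulas here are common textbook choices and should be read as assumptions: entropy and energy are taken from the grey-level histogram, and contrast is taken as the mean squared difference between horizontally adjacent pixels.

```python
import math
from collections import Counter


def texture_features(image):
    """Compute six simple texture descriptors of a 2-D grey-level patch."""
    pixels = [px for row in image for px in row]
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((px - mean) ** 2 for px in pixels) / n
    # Histogram-based probabilities of each grey level
    probs = [count / n for count in Counter(pixels).values()]
    entropy = -sum(p * math.log2(p) for p in probs)
    energy = sum(p * p for p in probs)
    # Assumed contrast: mean squared difference of horizontal neighbours
    pairs = [(row[i], row[i + 1]) for row in image for i in range(len(row) - 1)]
    contrast = sum((a - b) ** 2 for a, b in pairs) / len(pairs)
    return {
        "mean": mean,
        "variance": variance,
        "std": math.sqrt(variance),
        "contrast": contrast,
        "entropy": entropy,
        "energy": energy,
    }


patch = [[0, 0, 2, 2], [0, 0, 2, 2]]
features = texture_features(patch)
```

The resulting six numbers, rather than the pixels themselves, would then form the input vector of the neural network.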

In 2019, RGP-CNN had become the norm for general DR grading. Such ML processes are usually run on an external server platform, such as Google Colab, and the networks are likewise trained from scratch without human intervention. Among the wide variety of RGP-CNN used are InceptionV3 (Gao et al. 2018; Sahlsten et al. 2019; Zeng et al. 2019) and VGGNet (Bellemo et al. 2019). On the other hand, Saleh et al. (2018) used a unique ML algorithm, whereas Randive et al. (2018) deployed proprietary software (MATLAB), and hence the details of their structure remain unknown.

In 2020, the choice of model once again shifted from RGP-CNN to self-customized CNN structures. For instance, Wu et al. (2020) deployed two customized CNN networks: one worked on a 256 × 256 preprocessed image as its input, and the other worked on a 512 × 512 preprocessed image as its input. On the other hand, Gayathri et al. (2020) deployed a customized CNN network which worked on a 227 × 227 preprocessed image.

Moreover, Shaban et al. (2020) deployed a customized CNN in which the number of filters increases as the computation progresses. Pao et al. (2020) deployed a customized CNN with 2 channels: one accepted the red channel of the input image, and the other accepted the green channel of the input image. Shankar et al. (2020a) also deployed a customized CNN in which all 3 channels (Red, Green, Blue) were used. Torre et al. (2020) deployed a dedicated CNN which accepts 3 × 640 × 640 input images. Shah et al. (2020) deployed a simplistic CNN with only two convolutional layers. Zago et al. (2020) deployed a CNN with 5 convolution layers which accepts 3 × 65 × 65 images.

Moreover, Jadhav et al. (2020) devised their own unique method of general DR grading. In this method, the blood vessels are first separated by a grey level threshold, leaving the other features to have their entropy (e.g. variance) measured. Luo et al. (2020) deployed a unique Self-supervised Fuzzy Clustering Network. Ali et al. (2020) customized a totally unique grading method, for which they provided a detailed flowchart. In addition, Bhardwaj et al. (2021).

There remain some authors in 2020 who still used RGP-CNN. For example, Shankar et al. (2020a) deployed InceptionV4 in their model for general DR grading. Samanta et al. (2020) deployed Densenet121. Xie et al. (2020) deployed VGGNet, ResNet and Densenet in their model.

In most articles observed which deployed preprocessing, such preprocessing was often done by nullifying the local color and luminosity, thus exposing all the textures at the same grey tone. However, the details of such formulations were not mentioned.
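
Since the exact formulations were not given, the following sketch shows only one plausible way of nullifying local luminosity: subtracting the mean of a small neighbourhood from every pixel and re-centring the result on a mid-grey level. The window radius, the grey level of 128 and the function name `subtract_local_mean` are assumptions made for illustration and are not taken from any of the reviewed articles.

```python
def subtract_local_mean(image, radius=1, grey=128):
    """Remove local brightness from a 2-D grey image and re-centre on `grey`."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Neighbourhood clipped at the image border
            window = [
                image[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            local_mean = sum(window) / len(window)
            value = image[y][x] - local_mean + grey
            row.append(min(255, max(0, round(value))))
        out.append(row)
    return out


dark_region = [[50] * 3 for _ in range(3)]
bright_region = [[200] * 3 for _ in range(3)]
flat_dark = subtract_local_mean(dark_region)
flat_bright = subtract_local_mean(bright_region)
```

In this toy case, a uniformly dark and a uniformly bright region both map to the same grey tone, so only local texture would remain visible after the operation.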

3.7 Study of the New Fundus Algorithms by their datasets used in the ML process

It is worth noting that the development of an ML algorithm necessitates training and testing with real life datasets. Hence, during the period of 2015–2018, most entries among the New Fundus Algorithms were observed to use one particular publicly available dataset, though there is nevertheless a drastic increase in the average size of the dataset used, as more and more of the authors chose to use Kaggle-EyePACS dataset (https://www.kaggle.com/c/diabetic-retinopathy-detection/dat), the largest dataset available publicly even as of 2020.

In 2019, an increase was observed in the number of entries that rely solely on actual clinical data, rather than on publicly available datasets. In 2020, the Covid-19 pandemic was deemed to have severely hindered the procedure of obtaining clinical data through various medical institutions. Nevertheless, despite the Covid-19 pandemic, 13 entries among the New Fundus Algorithms were published during the first 8 months of 2020 alone. Of those 13 entries, 5 even used multiple collections of datasets (instead of relying on a single dataset) obtained through various independent websites, to make the best of what was available during the Covid-19 pandemic.

Just like the Euler diagrams in Figs. 6 and 13, we would like to provide similar illustration on how the authors picked their datasets. Due to the immense complexity involved, even an Euler diagram proves impractical for this case.

Thus, we will solely present Table 8, which shows the list of the datasets most used by the New Fundus Algorithms. Some of the most used datasets include Kaggle-EyePACS and Messidor. In Table 8, we also included references (Huang et al. 2017; Cao et al. 2018) in which the authors had done comparative studies on several existing RGP-CNN architectures. It is worth noting that a single algorithm may have been tested on multiple datasets. In addition, there are two articles (Abbas et al. 2017; Chowdhury et al. 2019) which only mentioned that “various publicly available datasets” were used, without pinpointing which datasets they used, whether partly or entirely. There are also another three articles (Ţălu et al. 2015; Wardoyo et al. 2016; Wu et al. 2020) in which the authors gave no description of their choice of datasets.

It is worth reminding that the three articles (Mahendran and Dhanasekaran 2015a, b; Seth and Agarwal 2018) each contributed to two entries among the New Fundus Algorithms, whereas in Huang et al. (2017) and Cao et al. (2018) the authors merely did comparative studies on the existing algorithms instead of innovating new one(s) of their own.

Thus, it is observed that more and more research teams resorted to use larger datasets, such as Kaggle-EyePACS with 88,704 samples, as computing speed progressed. The deployments of clinical datasets were also on the rise till 2019, for which 2020 marks a very difficult year to obtain such non-public datasets due to the Covid-19 pandemic.

3.8 A presentation of all the observed comparative studies performed by the authors who developed entries of the New Fundus Algorithms

As the entries of the New Fundus Algorithms were constructed, numerous comparative studies were done by the authors against some previous models, which are presented in Table 9. It is worth noting that for all such cases presented in Table 9, each source article yields exactly one New Fundus Algorithm.

For each New Fundus Algorithm, their claimed performances are as presented in Table 10. Due to the limitation of scope, the performances among the competitors of a given New Fundus Algorithm are presented as a range. Unless mentioned otherwise in Table 10, the percentage represents accuracies, calculated by the formula:
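
Assuming the standard definition of classification accuracy, and introducing here the usual symbols TP, TN, FP and FN for the numbers of true positives, true negatives, false positives and false negatives (these symbols are not taken from Table 10), the accuracy can be written as:

```latex
\[
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \times 100\%
\]
```

For example, under this definition an algorithm that correctly classifies 90 of 100 test images has an accuracy of 90%.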

Hence, based on the comparative studies done across all the relevant articles, CNN was deemed to be the best overall performing model for DR detection through fundus images. This is consistent with the very nature of CNN, which deals directly with the pixels of an image rather than relying on numerical data which are themselves only representations of the raw data.

From a quantitative perspective, however, it is worth noting that quantitative comparisons of accuracy are only applicable among the models involved within one single article. For example, the New Fundus Algorithm proposed in Al-Hazaimeh et al. (2018), which yielded an accuracy of 98.4%, is deemed better than all the competitors tested by the same authors within Al-Hazaimeh et al. (2018) itself, which yielded accuracies ranging from 89.6% to 97.6%. Still, it cannot be concluded that the New Fundus Algorithm proposed in Al-Hazaimeh et al. (2018), which yielded an accuracy of 98.4%, is better than the New Fundus Algorithm proposed in Liu et al. (2019), which yielded an accuracy of 90.84% by a different team of authors. This is because there remain many degrees of freedom involved, such as the duration of the machine learning process, the size of the dataset used, and the amount of computational resource available (e.g., computer hardware). Such parameters may differ significantly among different authors. Moreover, in all articles observed, there remains a great deal of computational detail which is not given by the authors, such as the exact preprocessing formula.

4 A brief review on some other developmental progress related to automated fundus based DR detection

4.1 Some recently commercialized applications for fundus-based automated DR detections

In addition to the 81 articles which produced 84 distinctive New Fundus Algorithms, another 5 articles (Rajalakshmi et al. 2018; Natarajan et al. 2019; He et al. 2019; Rogers et al. 2021; Wang et al. 2020) elaborate on recently commercialized applications; the authors of those articles are in turn prominent staff of their respective companies. An overview is provided in Table 11 below.

In particular, the authors of Rajalakshmi et al. (2018) had actually compared the results given by their algorithms with that of actual medical experts. Subsequently, their automatic DR performance is concluded to be at least on par with the manual grading by those medical experts.

In Natarajan et al. (2019), the performance of an AI was examined in an actual clinical context. The AI algorithm was designed to run on a mobile device (with the application installed) attached to a fundus camera. It is also worth mentioning that most countries in the world do not approve apps run on mobile devices for medical use.

The authors of He et al. (2019) only mentioned that they used a “deep learning algorithm”, however the internal mechanism of the algorithm was not discussed. It is however deemed likely to be CNN by this review article. Based on their testing, the authors in He et al. (2019) had thus concluded that it is now feasible to deploy AI for DR in China.

4.2 Some recently developed standalone add-ons to improve existing ML-based algorithms for fundus-based automated DR detections

There are altogether 4 articles (Gupta et al. 2017; Nilashi et al. 2019; Gadekallu et al. 2020a, b) found to have worked on developing standalone add-ons to further enhance pre-existing ML based algorithms.

In Gupta et al. (2017), an extension is recommended for several existing MF-based algorithms. This extension works by dividing the fundus image into several segments. Thus, when coupled with an algorithm that can only detect the presence or absence of diseased tissue but cannot pinpoint its location (e.g. G, G2, G3, G4, G5), such patching can help to some extent in pointing out the location of the diseased tissue.
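
The idea of obtaining an approximate location from a presence-only detector can be sketched as follows: the image is cut into tiles, the detector is run on each tile, and the positions of the positive tiles are reported. This is a generic illustration rather than the method of Gupta et al. (2017); `toy_detector` is a hypothetical stand-in (a brightness threshold) for whichever presence/absence algorithm is being extended.

```python
def locate_by_patches(image, patch, has_lesion):
    """Run a presence-only detector on each tile; return top-left corners of hits."""
    hits = []
    for top in range(0, len(image), patch):
        for left in range(0, len(image[0]), patch):
            tile = [row[left:left + patch] for row in image[top:top + patch]]
            if has_lesion(tile):
                hits.append((top, left))
    return hits


def toy_detector(tile):
    """Hypothetical detector: reports a lesion if any pixel is brighter than 200."""
    return any(px > 200 for row in tile for px in row)


image = [
    [10, 10, 10, 10],
    [10, 10, 10, 250],
    [10, 10, 10, 10],
    [10, 10, 10, 10],
]
hits = locate_by_patches(image, 2, toy_detector)
```

The localization is only as fine as the tile size, which is why such patching points out the location of the diseased tissue only approximately.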

In Nilashi et al. (2019) and Gadekallu et al. (2020a), the datasets consist of lists of 20 attributes derived from Messidor; thus no images were involved. Instead, the add-on works by improving the efficiency of the internal computations undertaken by some ML-based algorithms.

In Gadekallu et al. (2020b), another computational add-on is introduced which, when combined with some existing NN based algorithms (ANN, CNN, Other-NN), is claimed to be able to increase their accuracies even further.

5 Recent development on the automated detection of DR using OCTA (optical coherence tomography angiography) images

OCTA (Optical Coherence Tomography Angiography) is a newly developed retinal scanning technology that can extract much more information about the retina than conventional fundus imaging. In particular, OCTA is capable of resolving even the tiniest retinal blood vessels, so that all MA show up with much more clarity than in a conventional fundus image. However, up until the end of 2020, almost all the research done on automated DR detection has remained focused on utilizing conventional fundus images, and all DR detection algorithms created for OCTA were only at very primitive stages of development. As technology advances, it is expected that OCTA will soon replace conventional fundus imaging. Therefore, it is crucial to develop new algorithms that are able to utilize this frontier technology to enable early detection of DR, especially at the very early stage where often only MA is present.

There are altogether 13 articles (Srinivasan et al. 2014; Carlo et al. 2015; Sandhu et al. 2018a, b, 2020; Guo et al. 2019; Lee et al. 2019; Cano et al. 2020; Heisler et al. 2020; Zahran et al. 2020; Abdelsalam 2020; Le et al. 2020; Wang et al. 2017), each of which was found to have proposed a new algorithm that works on interpreting OCTA images to a certain extent. Thus, across the 13 aforementioned articles, there are altogether 13 algorithms. These 13 algorithms shall therefore be collectively referred to as the new OCTA algorithms throughout the remainder of this article.

Both the constituent model groups and the designed tasks, among all entries of the new OCTA algorithms, are thus summarized in Tables 12 and 13. In particular, most of the entries designed for G (from Sandhu et al. 2018a, b, 2020; Guo et al. 2019; Cano et al. 2020; Heisler et al. 2020; Zahran et al. 2020; Abdelsalam 2020; Le et al. 2020) are claimed to be capable of distinguishing the following 3 different categories of DR severity, namely:

(i) Normal.

(ii) Diabetic without DR (a feature that is detectable only by OCTA imaging).

(iii) DR.

Moreover, all the datasets used to train and test the new OCTA algorithms were obtained clinically and are therefore not publicly available.

Table 14 shows the sizes of the clinical datasets obtained for training and testing all the new OCTA algorithms. Although the number of samples may seem small, the OCTA image of a single eye already carries a tremendous amount of information, showing every intricate detail of the blood vessels and other types of tissue.

6 Conclusion

Due to the exponential growth in the predicted number of future diabetes mellitus patients, and consequently in the number of people affected by DR, the number of ophthalmologists worldwide may not be able to meet the demand for regular screening. A safe, clinically available automated detection algorithm has therefore been a strongly sought-after option for tackling the problem.

It was found that most entries from the New Fundus Algorithms, including those deploying advanced ML models, still work on the principle of maximum likelihood. Therefore, they can only detect the disease when it is at an advanced stage. Those entries from the New Fundus Algorithms that use ML algorithms (including deep learning algorithms) only look at the degree of brightness to detect the optic disc and exudates in the retina, based on the common assumption that the brightest area in the retina must be the optic disc. As a result, most systems are not even able to detect the optic disc correctly. This hampers the ability of the system to perform early detection, which is essential for preventing vision loss.
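The brightness assumption described above can be illustrated with a minimal sketch. It is not taken from any of the reviewed algorithms: the function names `brightest_window` and `make_fundus`, the synthetic image, and all intensity values are hypothetical and chosen only for illustration. The sketch shows one way in which a locator that simply picks the brightest region may be misled once a bright lesion, such as an exudate cluster, is present.

```python
# Hypothetical illustration: locate the "optic disc" as the brightest window.
# All names, sizes, and intensity values here are assumptions for this sketch.

def brightest_window(image, size):
    """Return (row, col) of the top-left corner of the size x size window
    with the highest total intensity. `image` is a list of equal-length rows."""
    rows, cols = len(image), len(image[0])
    best_sum, best_pos = -1, None
    for r in range(rows - size + 1):
        for c in range(cols - size + 1):
            total = sum(image[r + dr][c + dc]
                        for dr in range(size) for dc in range(size))
            if total > best_sum:
                best_sum, best_pos = total, (r, c)
    return best_pos


def make_fundus(rows, cols, background, blobs):
    """Build a synthetic grayscale image: uniform background plus square blobs.
    Each blob is a tuple (top, left, size, intensity)."""
    image = [[background] * cols for _ in range(rows)]
    for top, left, size, intensity in blobs:
        for r in range(top, top + size):
            for c in range(left, left + size):
                image[r][c] = intensity
    return image


DISC = (2, 2, 3, 200)     # synthetic optic disc with top-left corner (2, 2)
EXUDATE = (8, 9, 3, 240)  # synthetic exudate cluster, brighter than the disc

healthy = make_fundus(12, 14, 40, [DISC])
diseased = make_fundus(12, 14, 40, [DISC, EXUDATE])

healthy_guess = brightest_window(healthy, 3)    # falls on the disc
diseased_guess = brightest_window(diseased, 3)  # falls on the exudate instead
print(healthy_guess, diseased_guess)
```

In this toy setting the locator finds the disc in the lesion-free image but returns the exudate position in the second image, which is the kind of failure the brightness assumption would be expected to produce.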

It was also found that most entries from the New Fundus Algorithms, even those designed to pinpoint diseased or healthy tissues (e.g., EX and HM), can only work with images of fixed and very low pixel dimensions. For example, even algorithms designed to “pinpoint” exudates are usually designed to work on manually preprocessed images of 160 by 240 pixels. This common practice of shrinking images down to predetermined pixel sizes has rendered early detection almost impossible, because in the early stages of DR the diseased tissues (e.g., microaneurysms) are usually too small to be visible in such a low-resolution image.
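The effect of shrinking an image can be made concrete with a short arithmetic sketch. Only the 160 by 240 target size comes from the text above, and it is assumed here to mean 240 pixels wide by 160 pixels high. The original camera resolution and the lesion diameter are hypothetical values chosen for illustration, as is the helper name `lesion_size_after_resize`.

```python
# Hypothetical arithmetic: how many pixels a small lesion spans after resizing.

def lesion_size_after_resize(lesion_px, original_size, target_size):
    """Return the lesion's (width, height) in pixels after the image is
    resized from original_size to target_size, both given as (width, height)."""
    orig_w, orig_h = original_size
    target_w, target_h = target_size
    return lesion_px * target_w / orig_w, lesion_px * target_h / orig_h


ORIGINAL = (3000, 2000)  # assumed camera resolution (width, height)
TARGET = (240, 160)      # "160 by 240" from the text, read as width 240
LESION_PX = 10           # assumed microaneurysm diameter in the original image

w, h = lesion_size_after_resize(LESION_PX, ORIGINAL, TARGET)
print(f"lesion spans about {w:.2f} x {h:.2f} pixels after resizing")
```

Under these assumptions the lesion shrinks to less than one pixel in each direction, so it can no longer be represented as a distinct structure in the resized image.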

Among the entries from the New Fundus Algorithms that use Pure CNN, many have resorted to using RGP-CNN (e.g., ResNet50), as there are many ready-made programming packages for these RGP-CNN. Because they merely adapt an existing, general-purpose structure, the ML models deployed by most of these entries are not optimized specifically for the task of automated DR detection.

As OCTA imaging is a very recent and very resource-demanding technology, research on automated DR detection using OCTA imaging is still at a very early stage of development.

There are still several challenges in the detection of DR. One of them is to develop image processing techniques that can handle low-resolution and blurred retinal images. Another is to develop accurate methods for detecting borderline cases. This can be done using a combination of advanced mathematical tools, such as complex fuzzy logic, and machine learning algorithms. Finally, acquiring as many clinical datasets as possible will support the development of a novel and fully functional DR detection algorithm for OCTA images. A dedicated ML algorithm that is not based on any pre-existing model can then be used for the purpose of automated DR detection. As every part of the programming code would be designed specifically for the task of DR detection, the performance of such a dedicated ML algorithm would be optimized, and the algorithm could even be run on a wide range of electronic devices.

Moreover, it has also come to our attention that Mongan et al. (2020) outlined a checklist for artificial intelligence in medical imaging (abbr. CLAIM). Although the article was published in the field of radiology, it serves as a new benchmark that all upcoming AI in medical imaging should comply with or even surpass. This very recent and comprehensive checklist should prove very useful to our future work on developing a fully functional DR detection algorithm, because full compliance with the checklist offers a major advantage over the previous New Fundus Algorithms, which were mostly developed before 2020 and therefore fall severely short of the checklist suggested by Mongan et al. (2020).

References

Abbas Q, Fondon I, Sarmiento A, Jiménez S, Alemany P (2017) Automatic recognition of severity level for diagnosis of diabetic retinopathy using deep visual features. Med Biol Eng Comput 55:1959–1974

Abdelsalam MM (2020) Effective blood vessels reconstruction methodology for early detection and classification of diabetic retinopathy using OCTA images by artificial neural network. Inf Med Unlocked 20:100390

Abràmoff MD, Lou Y, Erginay A, Clarida W, Amelon R, Folk JC, Niemeijer M (2016) Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. Invest Ophthalmol Vis Sci 57(13):5200–5206

Aiello LP, Avery RL, Arrigg PG, Keyt BA, Jampel HD, Shah ST, Pasquale LR, Thieme H, Iwamoto MA, Park JE, Nguyen HV, Aiello LM, Ferrara N, King GL (1994) Vascular endothelial growth factor in ocular fluid of patients with diabetic retinopathy and other retinal disorders. N Engl J Med 331(22):1480–1487

Akyol K, Bayir Ş, Şen B (2016) Detection of hard exudates in diabetic retinopathy retinal images by utilizing visual dictionary and classifier approaches. Mugla J Sci Technol 2(1):1–6

Al-Hazaimeh OM, Nahar KMO, Al-Naami B, Gharaibeh N (2018) An effective image processing method for detection of diabetic retinopathy diseases from retinal fundus images. Int J Signal Imaging Syst Eng 11(4):206–216

Ali A, Qadri S, Mashwani WK, Kumam W, Kumam P, Naeem S, Goktas A, Jamal F, Chesneau C, Anam S, Sulaiman M (2020) Machine learning based automated segmentation and hybrid feature analysis for diabetic retinopathy classification using fundus image. Entropy 22(5):567

Al-Jarrah MA, Shatnawi H (2017) Non-proliferative diabetic retinopathy symptoms detection and classification using neural network. J Med Eng Technol 41(6):498–505

Alyoubi WL, Shalash WM, Abulkhair MF (2020) Diabetic retinopathy detection through deep learning techniques: a review. Inf Med Unlocked 20:100377

Amin J, Sharif M, Yasmin M (2016) A review on recent developments for detection of diabetic retinopathy. Scientifica 2016:6838976

Antonetti DA, Barber AJ, Bronson SK, Freeman WM, Gardner TW, Jefferson LS, Kester M, Kimball SR, Krady JK, LaNoue KF, Norbury CC, Quinn PG, Sandirasegarane L, Simpson IA, JDRF Diabetic Retinopathy Center Group (2016) Diabetic retinopathy: seeing beyond glucose-induced microvascular disease. Diabetes 55(9):2401–2411

Ayhan MS, Kühlewein L, Aliyeva G, Inhoffen W, Ziemssen F, Berens P (2020) Expert-validated estimation of diagnostic uncertainty for deep neural networks in diabetic retinopathy detection. Med Image Anal 64:101724

Banerjee S, Kayal D (2016) Detection of hard exudates using mean shift and normalized cut method. Biocybern Biomed Eng 36(4):679–685

Barkana BD, Saricicek I, Yildirim B (2017) Performance analysis of descriptive statistical features in retinal vessel segmentation via fuzzy logic, ANN, SVM, and classifier fusion. Knowl Based Syst 118:165–176

Bellemo V, Lim ZW, Lim G, Nguyen GD, Xie Y, Yip MYT, Hamzah H, Ho J, Lee XQ, Hsu W, Lee ML, Musonda L, Chandran M, Chipalo-Mutati G, Muma M, Tan GSW, Sivaprasad S, Menon G, Wong TY, Ting DSW (2019) Artificial intelligence using deep learning to screen for referable and vision-threatening diabetic retinopathy in Africa: a clinical validation study. Lancet Digital Health 1(1):e35–e44

Bhardwaj C, Jain S, Sood M (2021) Hierarchical severity grade classification of non-proliferative diabetic retinopathy. J Ambient Intell Humaniz Comput 12:2649–2670

Brown JM, Campbell JP, Beers A, Chang K, Ostmo S, Chan RVP, Dy J, Erdogmus D, Ioannidis S, Kalpathy-Cramer J, Chiang MF (2018) Automated diagnosis of plus disease in retinopathy of prematurity using deep convolutional neural networks. JAMA Ophthalmol 136(7):803–810

Cano J, O’Neill WD, Penn RD, Blair NP, Kashani AH, Ameri H, Kaloostian CL, Shahidi M (2020) Classification of advanced and early stages of diabetic retinopathy from non-diabetic subjects by an ordinary least squares modeling method applied to OCTA images. Biomed Opt Express 11(8):4666–4678

Cao W, Czarnek N, Shan J, Li L (2018) Microaneurysm detection using principal component analysis and machine learning methods. IEEE Trans Nanobiosci 17(3):191–198

Carlo TE, Chin AT, Filho MAB, Adhi M, Branchini L, Salz DA, Baumal CR, Crawford C, Reichel E, Witkin AJ, Duker JS, Waheed NK (2015) Detection of microvascular changes in eyes of patients with diabetes but not clinical diabetic retinopathy using optical coherence tomography angiography. Retina 35(11):2364–2370

Chaki J, Ganesh ST, Cidham SK, Theertan SA (2020) Machine learning and artificial intelligence based diabetes mellitus detection and self-management: a systematic review. J King Saud Univ Comput Inf Sci. https://doi.org/10.1016/j.jksuci.2020.06.013

Chowdhury AR, Chatterjee T, Banerjee S (2019) A random forest classifier-based approach in the detection of abnormalities in the retina. Med Biol Eng Comput 57:193–203

Colomer A, Igual J, Naranjo V (2020) Detection of early signs of diabetic retinopathy based on textural and morphological information in fundus images. Sensors 20(4):1005

Das V, Puhan NB (2017) Tsallis entropy and sparse reconstructive dictionary learning for exudate detection in diabetic retinopathy. J Med Imaging 4(2):024002

Derwin DJ, Selvi ST, Singh OJ, Shan BP (2020a) A novel automated system of discriminating microaneurysms in fundus images. Biomed Signal Process Control 58:101839

Derwin DJ, Selvi ST, Singh OJ (2020b) Secondary observer system for detection of microaneurysms in fundus images using texture descriptors. J Digit Imaging 33:159–167

Duh EJ, Sun JK, Stitt AW (2017) Diabetic retinopathy: current understanding, mechanisms, and treatment strategies. JCI Insight 2(14):e93751

Dutta MK, Parthasarathi M, Ganguly S, Ganguly S, Srivastava K (2015) An efficient image processing based technique for comprehensive detection and grading of nonproliferative diabetic retinopathy from fundus images. Comput Methods Biomech Biomed Eng 5(3):195–207

Eftekhari N, Pourreza HR, Masoudi M, Ghiasi-Shirazi K, Sae E (2019) Microaneurysm detection in fundus images using a two-step convolutional neural network. Biomed Eng Online 18:67

Gadekallu TR, Khare N, Bhattacharya S, Singh S, Maddikunta PKR, Srivastava G (2020a) Deep neural networks to predict diabetic retinopathy. J Ambient Intell Humaniz Comput. https://doi.org/10.1007/s12652-020-01963-7

Gadekallu TR, Khare N, Bhattacharya S, Singh S, Maddikunta PKR, Ra IH, Alazab M (2020b) Early detection of diabetic retinopathy using PCA-Firefly based deep learning model. Electronics 9(2):274

Gao Z, Li J, Guo J, Chen Y, Yi Z, Zhong J (2018) Diagnosis of diabetic retinopathy using deep neural networks. IEEE Access 7:3360–3370

Gayathri S, Varun PG, Palanisamy P (2020) A lightweight CNN for diabetic retinopathy classification from fundus images. Biomed Signal Process Control 62:102115

Genuth S, Sun W, Cleary P, Gao X, Sell DR, Lachin J, Monnier VM (2015) Skin advanced glycation end products glucosepane and methylglyoxal hydroimidazolone are independently associated with long-term microvascular complication progression of type 1 diabetes. Diabetes 64(1):266–278

Gharaibeh NY (2016) A novel approach for detection of microaneurysms in diabetic retinopathy disease from retinal fundus images. Comput Inf Sci 10(1):1–15

Gharaibeh NY, Alshorman AA (2016) An effective diagnosis of diabetic retinopathy with aid of soft computing approaches. J Power Energy Eng 10(8):474–485

Gonzalez-Gonzalo C, Sanchez-Gutierrez V, Hernandez-Martinez P, Contreras I, Lechanteur YT, Domanian A, Ginneken BV, Sanchez CC (2020) Evaluation of a deep learning system for the joint automated detection of diabetic retinopathy and age-related macular degeneration. Acta Ophthalmol 98(4):368–377

Gross JG, Glassman AR, Jampol LM, Inusah S, Aiello LP, Antoszyk AN, Baker CW, Berger BB, Bressler NM, Browning D, Elman MJ, Ferris FL 3rd, Friedman SM, Marcus DM, Melia M, Stockdale CR, Sun JK, Beck RW (2015) Panretinal photocoagulation vs intravitreous ranibizumab for proliferative diabetic retinopathy: a randomized clinical trial. JAMA 314(20):2137–2146

Grzybowski A, Brona P, Lim G, Ruamviboonsuk P, Tan GSW, Abramoff M, Ting DSW (2019) Artificial intelligence for diabetic retinopathy screening: a review. Eye 34(3):451–460

Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, Venugopalan S, Widner K, Madams T, Cuadros J, Kim R, Raman R, Nelson PC, Mega JL, Webster DR (2016) Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 316(22):2402–2410

Gulshan V, Rajan RP, Widner K, Wu D, Wubbels P, Rhodes T, Whitehouse K, Coram M, Corrado G, Ramasamy K, Raman R, Peng L, Webster DR (2019) Performance of a deep-learning algorithm vs manual grading for detecting diabetic retinopathy in India. JAMA Ophthalmol 137(9):987–993

Guo YK, Hormel TT, Xiong HL, Wang BJ, Camino A, Wang J, Huang D, Hwang TS, Jia YL (2019) Development and validation of a deep learning algorithm for distinguishing the nonperfusion area from signal reduction artifacts on OCT angiography. Biomed Opt Express 10(7):3257–3268