Abstract

The detection of diabetic retinopathy remains a sensitive research topic because it bears directly on individual health care. A number of detection methods already exist in the literature, yet problems with detection accuracy remain. This issue motivates the development of a new diabetic retinopathy detection model that also reports the severity of retinopathy from a given fundus image. The proposed model comprises preprocessing, segmentation, feature extraction and classification stages. Segmentation is performed with a Triplet Half-band Filter Bank (THFB), local vector pattern (LVP) is used to extract the features, principal component analysis (PCA) reduces the dimensions of the feature vector, and a neural network (NN) performs the classification. The performance of the proposed model is compared with that of conventional classifiers, namely support vector machine (SVM), k-nearest neighbor (k-NN) and Naive Bayes (NB), in terms of positive and negative measures. The positive measures are accuracy, specificity, sensitivity, precision, negative predictive value (NPV), F1-score and Matthews correlation coefficient (MCC). The negative measures are the false positive rate (FPR), false negative rate (FNR) and false discovery rate (FDR). The comparison demonstrates the efficiency of the proposed model.

1 Introduction

Diabetic retinopathy (DR) [1,2,3,4,5] is one of the chief public health problems today, as it leads to blindness in patients with diabetes [6]. DR is a complication of diabetes that impairs vision and can progress to blindness. It is the most common cause of blindness among people of working age [7]. Hence, there has been collective interest in building health care systems that can screen large numbers of people and diagnose DR as early as possible for optimum treatment [8]. DR occurs when retinal blood vessels swell and leak fluid, or when anomalous new blood vessels grow. The initial sign of DR is the microaneurysm [9,10,11], which normally appears as small dark red spots or minuscule hemorrhages forming roughly circular clusters. Its size ranges from 10 to 100 µm, which approximates 1/12th of the diameter of the average optic disc [12].

In DR, because of the high blood sugar level, the small blood vessels that circulate blood across the retina become clogged. The damaged vessels [41] cause a lack of blood supply to the retinal area, which leads to permanent vision loss [13]. Appropriate screening aids early detection, and timely treatment decreases the chance of complete blindness. Computer-aided diagnosis (CAD) [14, 38] systems are available to help detect DR automatically. To detect retinopathy, a CAD system uses fundus images [15, 16], which are essential for diagnosing various eye diseases. CAD systems can distinguish healthy from abnormal retinal images [17, 18], which is expected to decrease the workload of ophthalmologists in examining those images [13].

Automatic [19] assessment of retinal disease requires good segmentation, so that lesion extraction and processing are easy to perform. Several methods, such as receiver operating characteristic (ROC) analysis and optimization methods [39, 42, 43, 45,46,47], can detect early symptoms of retinopathy and provide an objective diagnosis based on criteria determined by ophthalmologists. Developing such automated systems requires components that recognize the anatomical retinal features (optic disc [20, 21], fovea and blood vessels) and pathologies such as exudates [22], hemorrhages and microaneurysms [8]. However, applying image processing approaches [44] to retinal images is hampered by uneven illumination. Moreover, manual detection of retinal exudates in a large number of images is expensive in professional time and open to human error.

1.1 Motivations

Diabetic retinopathy is a retinopathy caused by complications of diabetes that can ultimately lead to blindness. If DR is detected in time, the progression to vision impairment can be slowed or prevented. This is difficult, however, because the disease often shows few symptoms until it is too late for effective treatment. At present, DR detection is a manual procedure performed by a trained clinician who examines digital fundus images. This often leads to miscommunication and delayed results, which in turn delay treatment. In this paper, LVP extraction and triplet-based segmentation are exploited for DR recognition. The advantage of triplet-based segmentation for feature extraction is its low computational complexity, which makes it feasible for many applications. It satisfies perfect reconstruction and offers linear phase, regularity, better frequency selectivity, near-orthogonality and good time–frequency localization. In addition, the local vector pattern (LVP) in high-order derivative space is used for feature extraction. The vector representation of a referenced pixel is produced from the values calculated between the referenced pixel and its adjacent pixels at diverse distances and in different directions. The LVP reduces the feature length through the comparative space transform, which encodes the various spatial relationships between the referenced pixel and its neighborhood pixels. Additionally, the concatenation of LVPs is compacted to create more distinctive features. The LVP is a more resilient structure of micropatterns than standard local pattern descriptors.

This paper develops a new DR detection model with four phases: preprocessing, segmentation, feature extraction and classification. THFB-based segmentation is performed, LVP features are extracted in the feature extraction phase, and the extracted features are reduced by a PCA procedure. An NN classifier is then used to detect the presence of disease. The rest of the paper is organized as follows: Sect. 2 reviews the literature. Section 3 details the functioning of the proposed model. Section 4 explains the dimensionality reduction and the NN classifier used to detect the presence of DR in the image. Section 5 discusses the results, and Sect. 6 concludes the paper.

2 Literature review

2.1 Related works

In 2016, Seoud et al. [7] developed and evaluated a new model for detecting both microaneurysms and hemorrhages in color fundus images. The major contribution was a novel set of shape features, called dynamic shape features, which do not require precise segmentation of the regions to be classified. These features represent the evolution of the shape during image flooding, which permits discrimination between lesions and vessel segments. The method was evaluated on six databases, and its robustness to variability in image resolution and quality was demonstrated.

In 2014, Zhang et al. [23] proposed a noninvasive approach for detecting diabetes mellitus (DM) and nonproliferative diabetic retinopathy (NPDR), the initial stage of DR, using features extracted from tongue images. The features comprise color, geometry and texture. A dedicated capture device acquires the tongue images. A tongue color gamut with 12 colors was used to represent the color features of the tongue. They applied a combination of 34 features, and the results showed that the proposed model could distinguish Healthy and NPDR (DM samples without NPDR) tongues.

In 2013, Ranamuka and Meegama [12] proposed a detection model based on morphological processing and fuzzy logic to identify hard exudates in input images. First, the exudates were located using mathematical morphology, which included removal of the optic disc. The hard exudates were then extracted using an adaptive fuzzy logic algorithm that used the RGB color-space values of the input image to form the fuzzy sets and membership functions. Comparison with conventional methods demonstrated the effectiveness of the proposed model, with high sensitivity and specificity in detecting hard exudates.

In 2011, Ram et al. [24] presented a new model for automatic detection of microaneurysms (MA) in digital color fundus images. They formulated MA detection as a target detection problem and proposed a successive rejection-based approach to progressively reduce the number of clutter responses. The processing stages were designed to reject specific classes of clutter using a set of dedicated features. An extensive evaluation of the model on three different retinal image datasets was reported and highlighted the superiority of the developed strategy.

In 2010, Agurto et al. [4] investigated the utility of multiscale amplitude-modulation–frequency-modulation (AM–FM) approaches for discriminating between normal and pathological retinal images. The approach was tested on standard images. They used 120 regions (40 × 40 pixels) containing four types of lesions commonly associated with DR. The region types included microaneurysms, exudates, neovascularization, and so on. They also used distance measures between the extracted feature vectors to measure interstructure similarity. The overall results demonstrated the suitability of the proposed methodology for automatic DR screening.

In 2017, Amin et al. [13] proposed a system that classifies exudate and non-exudate regions in retinal images. The approach is based on pre-processing to extract candidate lesions, followed by feature extraction and classification. A Gabor filter was applied in the pre-processing stage. The approach was assessed on publicly accessible datasets using parameters such as true positives, false positives and other statistical measures, and the results showed a substantial improvement for the proposed work.

In 2017, Akram and Khan [25] proposed a CAD system for detecting the early stage of DR. Algorithms were proposed for retinal image preprocessing, blood vessel enhancement and segmentation, and optic disc localization and detection, leading to the identification of different DR lesions by a hybrid fuzzy classifier. The approaches were tested on four different databases and compared with other methods. The outcomes showed the dominant performance of the proposed work.

In 2009, Osareh et al. [8] developed a model for automatic identification of exudate pathologies in input images based on computational intelligence techniques. Segmentation was done via fuzzy c-means clustering. A genetic-based algorithm was used to rank the features, and an NN classifier classified the selected feature vectors. The algorithm was evaluated on a large image dataset comprising 300 manually labeled retinal images. The outcomes showed the superiority of the proposed model over other methods in terms of accurate identification of retinal exudates.

2.1.1 Nature inspired algorithms for diabetic retinopathy detection

Sopharak et al. [48] proposed a simple, real-time hybrid method based on mathematical morphology and the naive Bayes classifier to detect MA in non-dilated retinal images. It comprises coarse segmentation using mathematical morphology and fine segmentation using the naive Bayes classifier. Mookiah et al. [49] worked on advanced image processing and machine learning algorithms to pre-process digital retinal images, extract features and classify the images. Welikala et al. [50] presented an automated method for detecting new vessels in retinal images. The method is based on a dual classification approach: two vessel segmentation methods are applied to create two separate binary vessel maps, each of which holds important information. Quellec et al. [5] presented a novel multiple-instance learning framework for automated image classification and applied it to diabetic retinopathy screening in 2-D retinal image datasets. Multiple-instance learning is a type of supervised learning that deals with uncertainty in instance labels.

Osareh et al. [8] developed an approach for automated identification of exudate pathologies in retinopathy images based on computational intelligence methods. The color retinal images are segmented using fuzzy c-means clustering after some preprocessing steps, i.e., color normalization and contrast enhancement.

2.1.2 Wavelet based segmentation for diabetic retinopathy detection

Quellec et al. [51] presented a novel approach for detecting microaneurysms in the retina based on template matching in the wavelet domain. The wavelet basis is adapted using the lifting scheme framework, and a new criterion was proposed to evaluate a lesion detector. Niemeijer et al. [52] presented an automatic system to locate the major anatomical structures in color fundus photographs: the macula, the optic disc and the vascular arch. These structures are found by fitting to the image a single point distribution model that comprises points on each structure. The technique can handle optic disc and macula centered images of both the left and the right eye. Salazar-Gonzalez et al. [53] presented a novel method for blood vessel and optic disc segmentation in retinal images that integrates MRF image reconstruction, the mechanism of flux and a compensation factor into the graph cut method. The procedure also includes contrast enhancement, adaptive histogram equalization, binary opening and distance transform for preprocessing. Kaur and Mittal [54] proposed a novel, generalized exudate segmentation method for the computer-aided diagnosis of diabetic retinopathy. The method reliably segments exudates present in retinal fundus images.

2.1.3 Texture patterns for diabetic retinopathy detection

Morales et al. [55] examined the discrimination capabilities of the texture of fundus images to distinguish between pathological and healthy images. For this purpose, local binary patterns (LBP) were used as a texture descriptor for retinal images and compared with other descriptors such as LBP filtering (LBPF) and local phase quantization (LPQ). Galshetwar et al. [56] proposed a novel approach for content-based image retrieval (CBIR) in DR that uses salient point selection and an inter-plane relationship technique. Abdillah et al. [57] used texture features because of their feasibility; the local binary pattern (LBP) texture feature was chosen because it represents the data better than other algorithms. Sarwinda et al. [58] studied the capability of texture features from fundus images to differentiate among DR, age-related macular degeneration (AMD) and normal images for screening. Their approach enhances local LBP by estimating the original LBP and the magnitude value of fundus images.

2.2 Review

The conventional methods in the literature (tabulated in Table 1) are reviewed as follows. The random forest (RF) classifier [7] identifies blot haemorrhages (HE) and can discriminate between lesions and vessel segments; however, it does not address bright lesions. SVM classification [23] scales well to high-dimensional data, but it is difficult to interpret, and choosing a good kernel function is complex. Fuzzy logic [12] is very useful for rapid prototyping; however, proving the characteristics of fuzzy systems is impossible in most cases. The Gaussian filter [24] detects lesions accurately and is more flexible; nevertheless, practical implementation is difficult, and it is not very robust. The AM–FM method [4] can differentiate retinal features, and global classification is possible; however, its formulation is difficult, and it is less accurate. The EX detection model [13] achieves greater precision in detecting DR and is less time-consuming; however, it cannot give the severity of the disease, and automatic detection is quite difficult. The fuzzy classifier [25] separates noisy areas and detects the optic disc, but early detection of DR is somewhat difficult with this method. Thus, to overcome the issues mentioned above, it is important to develop a new DR detection model.

3 Functioning approaches to proposed detection process

The proposed detection model is shown in Fig. 1. It has four phases: preprocessing, segmentation, feature extraction and classification. The fundus image \(I\) is the input image, from which the abnormalities [haemorrhage, microaneurysm, exudates and intraretinal microvascular abnormalities (IRMA)] are segmented via THFB-based [26] segmentation. The segmented image \(S\) is passed on for feature extraction. The features are extracted using LVP [27], which yields the LVP features \(F\). These are given to PCA-based [28] dimensionality reduction, which yields the reduced features \({F^{red}}\). The \({F^{red}}\) features are then passed to the classifier. In this paper, an NN [29, 40] classifier is used to detect the presence of retinopathy. Moreover, the classifier classifies the severity (stage) of retinopathy: normal, early, moderate or severe.

In conventional approaches, abnormal particles such as haemorrhages, microaneurysms, exudates and IRMA are counted manually to confirm the existence of diabetic retinopathy. However, this is not feasible for all images because it is time-consuming. This paper therefore adopts a new concept: the abnormal components are obtained, intensity-based features are extracted from them, and these features are given to the NN classifier to detect retinopathy.

3.1 Phase I: Preprocessing

The pre-processing phase is the initial phase of this work, and its steps are given below.

Contrast enhancement: The contrast of \(I\) is improved first. This step adjusts the intensity of the image [30], so the visibility of the image improves considerably by adjusting the relative darkness and brightness of \(I\), as defined in Eq. (1), where co specifies the contrast enhancement of \(I\). In this way, \(I\) is converted into a new grey-level image. The contrast-enhanced image is denoted \(C\).

Morphological operations: Pre-processing also includes morphological operations on \(I\), which make certain changes to the image. These operations normally include dilation, erosion, or a combination of both. They are useful for representing and describing region shapes such as boundaries, skeletons and convex hulls, and they result in the pre-processed image \(P\).
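The two pre-processing steps above can be illustrated with a minimal NumPy sketch. It is not the authors' MATLAB implementation: the linear min-max stretch stands in for Eq. (1), which is not reproduced here, and the function names, the square structuring element and the choice of an opening (erosion followed by dilation) are all assumptions made for illustration.

```python
import numpy as np

def stretch_contrast(image):
    """Linearly rescale intensities to the full [0, 255] range (assumed form of contrast enhancement)."""
    image = image.astype(np.float64)
    lo, hi = image.min(), image.max()
    if hi == lo:  # flat image: nothing to stretch
        return np.zeros(image.shape, dtype=np.uint8)
    return np.round((image - lo) / (hi - lo) * 255.0).astype(np.uint8)

def dilate(mask, size=3):
    """Binary dilation with a size x size square structuring element (size must be odd)."""
    pad = size // 2
    padded = np.pad(mask.astype(bool), pad, mode="constant", constant_values=False)
    out = np.zeros(mask.shape, dtype=bool)
    for dy in range(size):
        for dx in range(size):
            out |= padded[dy:dy + mask.shape[0], dx:dx + mask.shape[1]]
    return out

def erode(mask, size=3):
    """Binary erosion; pixels outside the image are treated as foreground."""
    pad = size // 2
    padded = np.pad(mask.astype(bool), pad, mode="constant", constant_values=True)
    out = np.ones(mask.shape, dtype=bool)
    for dy in range(size):
        for dx in range(size):
            out &= padded[dy:dy + mask.shape[0], dx:dx + mask.shape[1]]
    return out

def opening(mask, size=3):
    """Erosion followed by dilation: removes specks smaller than the structuring element."""
    return dilate(erode(mask, size), size)
```

In this sketch, `stretch_contrast` plays the role of producing \(C\) from \(I\), and a combination such as `opening` is one example of the dilation and erosion combinations mentioned in the text.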

3.2 Phase II: Triplet half band filter bank based segmentation

The analysis and synthesis low-pass (LP) filters of the class of triplet half-band filters are given in Eqs. (2) and (3) [26].

where \({Q_0}(a),\,{Q_1}(a)\) and \({Q_2}(a)\) are the half-band filters that approximate 1 in the passband and 0 in the stopband. The analysis and synthesis high-pass filters (HPF) are obtained using Eq. (4). Here, the parameter \(q\) provides flexibility in selecting the magnitude at \(\omega =0.5\pi\).

The segmented abnormalities in the fundus image, \(S\), are given to the next phase for feature extraction, which is explained below.

3.3 Phase III: Feature extraction

In this phase, features are extracted from \(S\). In this paper, LVP features are extracted, and the detailed process is as follows. LVP is an improvement on the Local Tetra Pattern (LTrP) that identifies the relationship of the reference pixel with its eight neighbors at distance \(d\) and encodes it via the Comparative Space Transformation (CST). Moreover, LVP is well known for its robustness to pose, lighting and illumination variations. The fundamental contribution of LVP is the CST, which encodes the pairwise derivatives in four different directions. The \(\left( {\vec {m} - 1} \right){\text{th}}\)-order derivative in four different directions at the reference pixel \(r{p_0}\) at distance \(d\) is computed as in Eqs. (5–8).

Further, the \(\vec {m}{\text{th}}\)-order LVP is computed from the \((\vec {m} - 1){\text{th}}\)-order derivative using \({E_2}\left( {.,.} \right)\) in the derivative direction \(\theta\), as given in Eq. (9).

where \(\theta =0^\circ ,45^\circ ,90^\circ \,{\text{and}}\,\,135^\circ\). \(E\) is the encoding function, and it is defined in Eq. (10).

where \(\theta =0^\circ ,45^\circ ,90^\circ ,{\text{and }}135^\circ\), \(b=1,2,...8\).
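As a rough illustration of the first step of this procedure, the sketch below computes a first-order directional derivative in the four directions at distance \(d\). Because Eqs. (5–8) are not reproduced here, the form used (neighbor intensity minus reference-pixel intensity) is an assumption based on the usual LVP formulation, as are the function name, the offset convention and the edge-replication border handling. The sketch stops short of the CST encoding and of higher-order derivatives.

```python
import numpy as np

# (row, col) offsets per unit distance for each direction (assumed convention).
# Rows grow downward, so 45, 90 and 135 degrees point toward smaller row indices.
DIRECTIONS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}

def directional_derivatives(image, d=1):
    """Return {theta: array} holding I(neighbor at theta, distance d) - I(reference pixel).

    Border pixels use edge replication, which is an illustrative choice.
    """
    img = np.asarray(image, dtype=np.float64)
    padded = np.pad(img, d, mode="edge")
    h, w = img.shape
    out = {}
    for theta, (dy, dx) in DIRECTIONS.items():
        r0 = d + dy * d  # top-left corner of the shifted view in the padded image
        c0 = d + dx * d
        out[theta] = padded[r0:r0 + h, c0:c0 + w] - img
    return out
```

Each returned array has the same shape as the input, so the four derivative maps can be compared pixel by pixel when an encoding step is applied afterwards.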

The accuracy of LVP recognition, particularly in unconstrained environments, is poor because it encodes the relationship among pixels in the local neighborhood of \(rp\) at many distances in the higher-order derivative space while ignoring the discriminative data that exist in the locality of \(rp\) in the given image. Moreover, the features of each abnormality may differ in size, length and so on, so the cumulative feature vector may become too large to process precisely.

4 Dimensionality reduction and classification

4.1 PCA dimensionality reduction

This paper therefore uses the PCA procedure to reduce the dimensions of those features. The procedure is as follows. The extracted \(F\) is given as the input. As the initial step, a group of \(\overline {{DA}}\) data vectors \(v{e_1}, \ldots v{e_{\overline {{DA}} }}\) is chosen from the input, where each \(v{e^0}\) represents a single grouped observation of the \(v\) variables. The empirical mean is computed along every column \(cl\): \(cl=1, \ldots v\). The resulting mean values are placed in \({p_{(v)}}\) of dimensions \(v \times 1\), as given in Eq. (11). The deviation from the mean is computed as in Eq. (12), where \(K\) denotes the \(\overline {{DA}} \times v\) matrix and \(u\) refers to the \(\overline {{DA}} \times 1\) column vector of all 1s: \(u[ve]=1\).

Next, the covariance matrix \(c{v^{(X)}}\) is calculated as given in Eq. (13). The eigenvectors and eigenvalues are obtained by computing the matrix \(X\) of eigenvectors that diagonalizes \(c{v^{(X)}}\), as given in Eq. (14).

where \(d{i^{(X)}}\) denotes the diagonal matrix of eigenvalues. The columns of the eigenvector matrix \(Y\) and the eigenvalue matrix \(d{i^{(X)}}\) are sorted in order of decreasing eigenvalue. The cumulative energy content \(cm\), given in Eq. (15), holds the sum of the energy content of the eigenvalues from 1 through \(cl\).

A subset of the eigenvectors is selected by saving the first \(cn\) columns of \(X\) as the matrix \(Z\), where the value of \(cn\) is chosen using the vector \(e^{\prime}\). The columns of the matrix \(\bar {X}\), \(\bar {X}=E \cdot Z=KLT\left\{ {LO} \right\}\), are the vectors that define the Kosambi–Karhunen–Loève transform (KLT) of the row matrix \(LO\). The matrix \(E\) is defined in Eq. (16), where \(l^{\prime}=l^{\prime}\left( {cl} \right)=\left\{ {\sqrt {c{v^{\left( X \right)}}|cl,cl|} } \right\}\): \(cl=1, \ldots v\), and the process yields the dimensionally reduced feature \({F^{red}}\).

The dimensionally reduced feature \({F^{red}}\) is given as the input to the NN.
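The covariance-based PCA procedure described above can be sketched in NumPy as follows. This is an illustrative sketch rather than the authors' code: the function name `pca_reduce`, the 95% cumulative-energy threshold and the use of `numpy.linalg.eigh` are assumptions, and the optional scaling by the square root of the covariance diagonal (the \(l^{\prime}\) term) is omitted for brevity.

```python
import numpy as np

def pca_reduce(features, energy=0.95):
    """Project features (n_samples x n_variables) onto the leading principal components.

    Expects at least two samples with non-zero total variance.
    Returns the reduced features, the retained eigenvector basis and the column means.
    """
    X = np.asarray(features, dtype=np.float64)
    mean = X.mean(axis=0)                      # empirical mean of every column
    centered = X - mean                        # deviations from the mean
    cov = centered.T @ centered / (X.shape[0] - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)     # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]          # re-sort by decreasing eigenvalue
    eigvals = np.clip(eigvals[order], 0.0, None)  # guard against tiny negative round-off
    eigvecs = eigvecs[:, order]
    cumulative = np.cumsum(eigvals) / eigvals.sum()  # cumulative energy content
    n_keep = min(int(np.searchsorted(cumulative, energy)) + 1, len(eigvals))
    basis = eigvecs[:, :n_keep]                # first n_keep eigenvectors
    return centered @ basis, basis, mean
```

Here the first returned array corresponds to \({F^{red}}\); keeping the basis and the mean allows unseen feature vectors to be projected in the same way at test time.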

4.2 Phase IV: NN classification

In this paper, an NN classifier is used to detect DR along with its stage of severity. The network model is defined in Eqs. (17), (18) and (19), where \(l\) specifies the hidden neuron, \(\bar {w}_{{\left( {\bar {B}l} \right)}}^{{\left( {HI} \right)}}\) denotes the bias weight to the \(l{\text{th}}\) hidden neuron, \({N_l}\) indicates the number of input neurons, \({N_h}\) specifies the number of hidden neurons, \(\bar {w}_{{\left( {\bar {B}j} \right)}}^{{\left( o \right)}}\) is the output bias weight to the \(j{\text{th}}\) layer, \(\bar {w}_{{\left( {lj} \right)}}^{{\left( o \right)}}\) denotes the output weight from the \(l{\text{th}}\) hidden neuron to the \(j{\text{th}}\) layer, \(\bar {w}_{{\left( {kl} \right)}}^{{\left( {HI} \right)}}\) denotes the weight from the \(k{\text{th}}\) input to the \(l{\text{th}}\) hidden neuron and \(NF\) is the activation function. The network output \({\hat {H}_j}\) is given in Eq. (18), where \({H_j}\) is the actual output and \(\left| {{H_j} - {{\hat {H}}_j}} \right|\) denotes the error between the actual and predicted outputs.

The classifier outputs whether the given image is affected by DR and, if so, the stage of severity.
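The network described above can be sketched as a single-hidden-layer forward pass. Since Eqs. (17–19) are not reproduced here, the exact form is an assumption inferred from the symbol definitions: each hidden neuron applies the activation \(NF\) to its bias plus the weighted inputs, each output does the same with the hidden activations, and the error is the absolute difference between actual and predicted outputs. The sigmoid activation, the function names and the four-way output layout are illustrative choices.

```python
import numpy as np

def sigmoid(z):
    """Logistic activation, used here as a stand-in for NF."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, w_hidden, b_hidden, w_out, b_out, activation=sigmoid):
    """Forward pass of a one-hidden-layer network.

    x:        (n_inputs,) feature vector, e.g. the PCA-reduced features
    w_hidden: (n_inputs, n_hidden) input-to-hidden weights
    b_hidden: (n_hidden,) hidden bias weights
    w_out:    (n_hidden, n_outputs) hidden-to-output weights
    b_out:    (n_outputs,) output bias weights
    """
    hidden = activation(b_hidden + x @ w_hidden)
    output = activation(b_out + hidden @ w_out)
    return hidden, output

def absolute_error(actual, predicted):
    """Sum over outputs of |H_j - H_hat_j|."""
    return float(np.abs(np.asarray(actual) - np.asarray(predicted)).sum())
```

With four output neurons, one hypothetical encoding is a one-hot target over the stages normal, early, moderate and severe, with the predicted stage taken as the index of the largest output.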

5 Results and discussion

5.1 Simulation setup

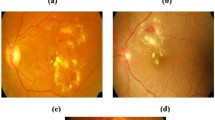

The proposed DR detection model was implemented in MATLAB 2014a. The DIARETDB1 database, which has 88 fundus images, was used for experimentation. Sample images with the results of the proposed work and of other wavelets are shown in Fig. 2. The proposed model was compared with conventional classifiers such as SVM [31], NB [32] and k-NN [33] in terms of accuracy, specificity, sensitivity, precision, NPV, F1-score, MCC, FPR, FNR and FDR to demonstrate its efficiency in detecting DR.
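For reference, the ten measures listed above can all be derived from the four counts of a binary confusion matrix. The sketch below uses the standard textbook definitions; the function name and the choice to return NaN when a denominator is zero are assumptions made for illustration, and the counts in any usage are hypothetical rather than taken from the experiments.

```python
import math

def _ratio(num, den):
    """Safe division: returns NaN when the denominator is zero."""
    return num / den if den else float("nan")

def binary_metrics(tp, tn, fp, fn):
    """Positive and negative measures from confusion-matrix counts."""
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        # positive measures (higher is better)
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": _ratio(tp, tp + fn),
        "specificity": _ratio(tn, tn + fp),
        "precision": _ratio(tp, tp + fp),
        "npv": _ratio(tn, tn + fn),
        "f1": _ratio(2 * tp, 2 * tp + fp + fn),
        "mcc": _ratio(tp * tn - fp * fn, mcc_den),
        # negative measures (lower is better)
        "fpr": _ratio(fp, fp + tn),
        "fnr": _ratio(fn, fn + tp),
        "fdr": _ratio(fp, fp + tp),
    }
```

Note that FPR, FNR and FDR are the complements of specificity, sensitivity and precision, respectively, so the negative measures carry the same information viewed from the error side.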

5.2 Analytical result of proposed model over conventional classifiers

The graphical results in Fig. 3 illustrate the performance of the proposed THFB-based NN classifier against other classifiers, namely k-NN, NB and SVM, combined with wavelets such as THFB, Symlets, Biorthogonal, Coiflet and Daubechies (combinations with k-NN: T-kNN, B-kNN, C-kNN, D-kNN and S-kNN; combinations with NB: T-NB, B-NB, C-NB, D-NB and S-NB; combinations with SVM: T-SVM, B-SVM, C-SVM, D-SVM and S-SVM). Both positive and negative measures are examined. As shown in Fig. 3a, the accuracy of the proposed model is very high: it is 6.41, 16.90, 18.57, 15.27, and 40.67% better than the conventional T-kNN, B-kNN, C-kNN, D-kNN and S-kNN, respectively. The sensitivity of the proposed model is 3.03, 79.41, 80.88, 86.76, and 88.88% better than that of T-kNN, B-kNN, C-kNN, D-kNN, and S-kNN, respectively. The precision of the proposed model is 37.73, 60.27 and 61.64% superior to T-kNN, B-kNN, and C-kNN, respectively, and 63.01% better than both D-kNN and S-kNN.

The performance of the proposed model compared with the NB classifier is shown in Fig. 3c. The accuracy of the proposed model is 6.41, 13.69, 10.66, 12.16, and 40.67% better than that of T-NB, B-NB, C-NB, D-NB, and S-NB, respectively. The proposed model has high sensitivity, which is 13.55, 86.56, 88.05, 89.55, and 50.74% better than that of T-NB, B-NB, C-NB, D-NB, and S-NB, respectively. The precision of the proposed model is 41.17, 61.11, 62.5, 63.88 and 65.27% superior to T-NB, B-NB, C-NB, D-NB, and S-NB, respectively. The MCC of the proposed model is 51.28% better than that of the T-NB model.

Figure 3e illustrates the performance of the proposed model compared with the conventional SVM classifier. The accuracy of the proposed model is 7.59, 19.71, 18.05, 16.43, and 44.06% better than that of T-SVM, B-SVM, C-SVM, D-SVM, and S-SVM, respectively. The sensitivity of the proposed model is 1.49, 80.88, 82.35, 86.76 and 51.47% better than that of T-SVM, B-SVM, C-SVM, D-SVM, and S-SVM, respectively. The precision of the proposed model is 22.03, 59.72, 61.11, 62.5 and 63.8% better than that of T-SVM, B-SVM, C-SVM, D-SVM and S-SVM, respectively. The MCC of the proposed model is 11.76% better than that of the T-SVM model.

The negative measures are analyzed as follows. Fig. 3b gives the results of the proposed model compared with the existing k-NN in terms of negative measures. The FPR of the proposed model is much lower than that of the other methods: it is 42.10%, 8.33%, 15.38% and 66.66% better than T-kNN, B-kNN, C-kNN, and S-kNN, respectively. The FNR of the proposed model is 5.71, 64.51, 64.89, 65.62 and 52.17% superior to T-kNN, B-kNN, C-kNN, D-kNN and S-kNN, respectively. The FDR of the proposed model is 32.65, 54.79, 55.40, 54.16 and 54.79% better than that of T-kNN, B-kNN, C-kNN, D-kNN and S-kNN, respectively.

Figure 3d illustrates the performance of the proposed model compared with NB. The FNR of the proposed model is 12.82, 63.04, 63.82, 64.21 and 51.42% better than that of T-NB, B-NB, C-NB, D-NB, and S-NB, respectively. The FDR of the proposed model is 36.53, 54.79, 54.16, 53.52 and 54.79% superior to T-NB, B-NB, C-NB, D-NB, and S-NB, respectively. Likewise, Fig. 3f shows that the FNR of the proposed model is 2.85, 62.63, 63.04, 63.82 and 50.72% better than that of T-SVM, B-SVM, C-SVM, D-SVM, and S-SVM, respectively. The FDR of the proposed model is 28.57, 58.33, 58.90, 58.33, and 57.74% better than that of T-SVM, B-SVM, C-SVM, D-SVM and S-SVM, respectively. These results favor the proposed model and indicate that it detects DR more accurately than the other combinations of conventional classifiers. Figure 4 shows the LVP patterns of abnormal and normal images.

5.3 Classification performance

The performance of the NN classifier using the triplet filter bank is tabulated in Table 2, which shows that the proposed model outperforms the conventional methods on both positive and negative measures. The accuracy of NN is 6.52, 8.68, and 11.78% better than that of SVM, k-NN and NB, respectively. The sensitivity of the proposed model is 1.78 and 7.54% better than that of k-NN and NB, respectively. The specificity of the NN classifier is 8.21, 9.72, and 12.85% better than that of SVM, k-NN and NB, respectively. The precision of NN is 21.42, 26.02, and 36.99% better than that of SVM, k-NN and NB, respectively. The FPR of the NN classifier is very low: it is 40, 43.74, and 49.99% better than that of SVM, k-NN and NB, respectively. The FNR of NN is 3.12 and 11.42% better than that of k-NN and NB, respectively. The NPV of NN is very high: it is 8.21, 9.72, and 12.85% better than that of SVM, k-NN and NB, respectively. The FDR of NN is 27.14, 30.35, and 36.30% better than that of SVM, k-NN and NB, respectively. The F1-score of NN is high: it is 10.46, 13.62, and 21.92% better than that of SVM, k-NN and NB, respectively. The MCC of NN is 21.63, 28.44 and 47.99% superior to SVM, k-NN, and NB, respectively. These results show that NN performs better on all positive and negative measures and that it recognizes retinopathy more accurately than the other conventional methods.
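The text does not state how the "% better" figures are computed. As a hedged illustration only, the sketch below shows two common conventions for a relative gain, one normalized by the proposed model's score and one by the baseline's score. The function name, its parameters and the example values are hypothetical and are not taken from Table 2.

```python
def percent_gain(proposed, baseline, reference, higher_is_better=True):
    """Relative gain of `proposed` over `baseline`, in percent of `reference`.

    `reference` is the value used for normalization (for example the proposed
    score or the baseline score); which one applies is an assumption the caller
    must make explicit. For error-type measures such as FPR, FNR and FDR,
    pass higher_is_better=False so that a lower value counts as a gain.
    """
    if reference == 0:
        raise ZeroDivisionError("reference value must be non-zero")
    gain = proposed - baseline if higher_is_better else baseline - proposed
    return 100.0 * gain / reference

# Hypothetical accuracies: 0.90 for the proposed model, 0.60 for a baseline.
gain_vs_proposed = percent_gain(0.90, 0.60, reference=0.90)  # about 33.3
gain_vs_baseline = percent_gain(0.90, 0.60, reference=0.60)  # about 50.0
```

The two conventions can differ substantially when the gap between models is large, so stating the normalization explicitly makes percentage comparisons easier to reproduce.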

5.4 Performance of THFB based NN

The overall performance of the proposed THFB-based NN classifier compared with NN classifiers based on Symlets, Biorthogonal, Coiflet, and Daubechies wavelets (S-NN, B-NN, C-NN and D-NN) is summarized in Table 3. The accuracy of the proposed model in detecting DR is high: it is 16.66, 17.13, 15.29 and 28.94% better than that of B-NN, C-NN, D-NN, and S-NN, respectively. The sensitivity of the proposed model is 84.21% superior to both B-NN and C-NN, 87.71% better than that of D-NN and 57.89% better than that of S-NN. The specificity of the proposed model is 16.17% better than that of the S-NN model. The precision of the proposed model is 55.78, 57.21, 55.14 and 57.89% better than that of B-NN, C-NN, D-NN, and S-NN, respectively. The FPR of the proposed model is low: it is 54.99% better than that of the S-NN model. The FNR of the proposed model is 60.75% better than that of both B-NN and C-NN, and 61.72 and 51.56% better than that of D-NN and S-NN, respectively. The F1-score of the proposed model is 76.98, 77.17, 80.97 and 57.89% better than that of B-NN, C-NN, D-NN, and S-NN, respectively. The MCC of the proposed model is 93.63, 94.77, 94.01 and 91.66% superior to B-NN, C-NN, D-NN, and S-NN, respectively. Thus, the proposed model is shown to detect DR accurately, whereas the conventional methods perform poorly. Table 4 summarizes the relative performance of the proposed method compared with the conventional methods.

6 Conclusion

This paper has proposed a new detection model for DR that also reports the severity of retinopathy from the given fundus image. The proposed model comprises four phases: preprocessing, segmentation, feature extraction and classification. THFB segmentation was performed, LVP was used to extract the features, PCA was used to reduce the dimensions of the feature vector, and NN was used for classification. After implementation, the performance of the proposed model was compared with that of other classifiers, namely SVM, k-NN, and NB. The experimental results show that the accuracy of NN is 6.52, 8.68, and 11.78% better than SVM, k-NN and NB, respectively. The sensitivity of the proposed model is 1.78 and 7.54% better than k-NN and NB, respectively. The specificity of the NN classifier is 8.21, 9.72, and 12.85% better than SVM, k-NN and NB, respectively. The precision of NN is 21.42, 26.02, and 36.99% better than SVM, k-NN and NB, respectively. Thus, NN performs better on all positive and negative measures, which shows that the proposed model recognizes DR more accurately. In future, Whale with particle swarm optimization (WP) can be exploited to determine the severity of retinopathy from the fundus image.

References

Cheung N, Mitchell P, Wong TY (2010) Diabetic retinopathy. Lancet 376(9735):124–36

Yau JWY et al (2012) Global prevalence and major risk factors of diabetic retinopathy. Diabetes Care 35(3):556–564

Niemeijer M, Abramoff MD, van Ginneken B (2009) Information fusion for diabetic retinopathy CAD in digital color fundus photographs. IEEE Trans Med Imaging 28(5):775–785

Agurto C et al (2010) Multiscale AM–FM methods for diabetic retinopathy lesion detection. IEEE Trans Med Imaging 29(2):502–512

Quellec G et al (2012) A multiple-instance learning framework for diabetic retinopathy screening. Med Image Anal 16(6):1228–1240

Molven A, Ringdal M, Nordbø AM, Raeder H, Støy J, Lipkind GM, Steiner DF, Philipson LH, Bergmann I, Aarskog D, Undlien DE, Joner G, Søvik O; Norwegian Childhood Diabetes Study Group, Bell GI, Njølstad PR (2008) Mutations in the insulin gene can cause MODY and autoantibody-negative type 1 diabetes. Diabetes 57(4):1131–1135

Seoud L, Hurtut T, Chelbi J, Cheriet F, Langlois JMP (2016) Red lesion detection using dynamic shape features for diabetic retinopathy screening. IEEE Trans Med Imaging 35(4):1116–1126

Osareh A, Shadgar B, Markham R (2009) A computational-intelligence-based approach for detection of exudates in diabetic retinopathy images. IEEE Trans Inf Technol Biomed 13(4):535–545

Zhang B, Wu X, You J, Li Q, Karray F (2010) Detection of microaneurysms using multi-scale correlation coefficients. Pattern Recognit 43(6):2237–2248

Lazar I, Hajdu A (2013) Retinal microaneurysm detection through local rotating cross-section profile analysis. IEEE Trans Med Imaging 32(2):400–407

Antal B, Hajdu A (2012) Improving microaneurysm detection using an optimally selected subset of candidate extractors and preprocessing methods. Pattern Recognit 45(1):264–270

Ranamuka NG, Meegama RGN (2013) Detection of hard exudates from diabetic retinopathy images using fuzzy logic. IET Image Proc 7(2):121–130

Amin J, Sharif M, Yasmin M, Ali H, Fernandes SL (2017) A method for the detection and classification of diabetic retinopathy using structural predictors of bright lesions. J Comput Sci 19:153–164

Sanchez CI, Niemeijer M, Dumitrescu AV, Suttorp-Schulten MSA, Abràmoff MD, van Ginneken B (2011) Evaluation of a computer-aided diagnosis system for diabetic retinopathy screening on public data. Investig Ophthalmol Vis Sci 52(7):4866–4871

Odstrcilik J et al (2013) Retinal vessel segmentation by improved matched filtering: evaluation on a new high-resolution fundus image database. IET Image Proc 7(4):373–383

Trucco E et al (2013) Validating retinal fundus image analysis algorithms: issues and a proposal. Investig Ophthalmol Vis Sci 54(5):3546–3559

Zhang X, Thibault G, Decencière E, Marcotegui B, Laÿ B, Danno R, Cazuguel G, Quellec G, Lamard M, Massin P, Chabouis A, Victor Z, Erginay A (2014) Exudate detection in color retinal images for mass screening of diabetic retinopathy. Med Image Anal 18(7):1026–1043

Decencière E et al (2014) Feedback on a publicly distributed image database: the Messidor database. Image Anal Stereol 33:231–234

Niemeijer M et al (2010) Retinopathy online challenge: automatic detection of microaneurysms in digital color fundus photographs. IEEE Trans Med Imaging 29(1):185–195

Mendonça AM, Sousa A, Mendonça L, Campilho A (2013) Automatic localization of the optic disc by combining vascular and intensity information. Comput Med Imaging Graph 37(5–6):409–417

Akram MU, Khan A, Iqbal K, Butt WH (2010) Retinal image: optic disk localization and detection. In: Campilho A, Kamel M (eds) Image analysis and recognition, vol 6112. Springer, Berlin, Heidelberg, pp 40–49

Walter T, Klein JC, Massin P, Erginay A (2002) A contribution of image processing to the diagnosis of diabetic retinopathy-detection of exudates in color fundus images of the human retina. IEEE Trans Med Imaging 21(10):1236–1243

Zhang B, Vijaya Kumar BVK, Zhang D (2014) Detecting diabetes mellitus and nonproliferative diabetic retinopathy using tongue color, texture, and geometry features. IEEE Trans Biomed Eng 61(2):491–501

Ram K, Joshi GD, Sivaswamy J (2011) A successive clutter-rejection-based approach for early detection of diabetic retinopathy. IEEE Trans Biomed Eng 58(3):664–673

Akram MU, Khan SA (2017) Automated detection of dark and bright lesions in retinal images for early detection of diabetic retinopathy. J Med Syst 36(5):3151–3162

Rahulkar AD, Holambe RS (2012) Half-Iris feature extraction and recognition using a new class of biorthogonal triplet half-band filter bank and flexible k-out-of-n:A postclassifier. IEEE Trans Inf Forensics Secur 7(1):230–240

Hung TY, Fan KC (2014) Local vector pattern in high-order derivative space for face recognition. In: 2014 IEEE international conference on image processing (ICIP), Paris, pp 239–243

Han Y, Feng X-C, Baciu G (2013) Variational and PCA based natural image segmentation. Pattern Recognit 46(7):1971–1984

Mohan Y, Chee SS, Xin DKP, Foong LP (2016) Artificial neural network for classification of depressive and normal in EEG. In: 2016 IEEE EMBS conference on biomedical engineering and sciences (IECBES)

Kaur R, Kaur S (2016) Comparison of contrast enhancement techniques for medical image. In: 2016 conference on emerging devices and systems (ICEDSS), Namakkal, pp 155–159

Meyer D, Leisch F, Hornik K (2003) The support vector machine under test. Neurocomputing 55(1–2):169–186

Sugumaran V, Muralidharan V (2012) A comparative study of Naïve Bayes classifier and Bayes net classifier for fault diagnosis of monoblock centrifugal pump using wavelet analysis. Appl Soft Comput 12(8):2023–2029

Wu Y, Ianakiev K, Govindaraju V (2002) Improved k-nearest neighbor classification. Pattern Recognit 35(10):2311–2318

Naït-Ali A, Adam O, Motsch JF (2000) Modelling and recognition of brainstem auditory evoked potentials using Symlet wavelet. ITBM-RBM 21(3):150–157

Lina J-M, Mayrand M (1995) Complex Daubechies wavelets. Appl Comput Harmonic Anal 2(3):219–229

Winger LL, Venetsanopoulos AN (2001) Biorthogonal nearly coiflet wavelets for image compression. Sig Process Image Commun 16(9):859–869

Prasad PMK, Prasad DYV, Rao GS (2016) Performance analysis of orthogonal and biorthogonal wavelets for edge detection of X-ray images. Proc Comput Sci 87:116–121

Kumar BSS, Manjunath AS, Christopher S (2018) Improved entropy encoding for high efficient video coding standard. Alex Eng J 57(1):1–9

Kota PN, Gaikwad AN (2017) Optimized scrambling sequence to reduce PAPR in space frequency block codes based MIMO-OFDM system. J Adv Res Dyn Control Syst 502–525

Bhatnagar K, Gupta SC (2017) Extending the neural model to study the impact of effective area of optical fiber on laser intensity. Int J Intell Eng Syst 10(4):274–283

Balaji GN, Subashini TS, Chidambaram N (2015) Detection of heart muscle damage from automated analysis of echocardiogram video. IETE J Res 61(3):236–243

Bramhe SS, Dalal A, Tajne D, Marotkar D (2015) Glass shaped antenna with defected ground structure for cognitive radio application. In: International conference on computing communication control and automation, Pune, pp 330–333

Yarrapragada KSSR, Krishna BB (2017) Impact of tamanu oil-diesel blend on combustion, performance and emissions of diesel engine and its prediction methodology. J Braz Soc Mech Sci Eng 39:1797–1811

Sreedharan NPN, Ganesan B, Raveendran R, Sarala P, Dennis B, Rajakumar BR (2018) Grey Wolf optimisation-based feature selection and classification for facial emotion recognition. IET Biom. https://doi.org/10.1049/iet-bmt.2017.0160

Sarkar A, Murugan TS (2017) Cluster head selection for energy efficient and delay-less routing in wireless sensor network. Wirel Netw. https://doi.org/10.1007/s11276-017-1558-2

Wagh AM, Todmal SR (2015) Eyelids, eyelashes detection algorithm and hough transform method for noise removal in iris recognition. Int J Comput Appl 112(3):28–31

Iyapparaja M, Tiwari M (2017) Security policy speculation of user uploaded images on content sharing sites. IOP Conf Ser Mater Sci Eng 263(4):042019

Sopharak A, Uyyanonvara B, Barman S (2013) Simple hybrid method for fine microaneurysm detection from not-dilated diabetic retinopathy retinal images. Comput Med Imaging Graph 37(5–6):394–402

Mookiah MRK, Rajendra Acharya U, Martis RJ, Chua CK, Lim CM, Ng EYK, Laude A (2013) Evolutionary algorithm based classifier parameter tuning for automatic diabetic retinopathy grading: a hybrid feature extraction approach. Knowl Based Syst 39:9–22

Welikala RA, Fraz MM, Dehmeshki J, Hoppe A, Tah V, Mann S, Williamson TH, Barman SA (2015) Genetic algorithm based feature selection combined with dual classification for the automated detection of proliferative diabetic retinopathy. Comput Med Imaging Graph 43:64–77

Quellec G, Lamard M, Josselin PM, Cazuguel G, Cochener B, Roux C (2008) Optimal wavelet transform for the detection of microaneurysms in retina photographs. IEEE Trans Med Imaging 27(9):1230–1241

Niemeijer M, Abramoff MD, van Ginneken B (2007) Segmentation of the optic disc, macula and vascular arch in fundus photographs. IEEE Trans Med Imaging 26(1):116–127

Salazar-Gonzalez A, Kaba D, Li Y, Liu X (2014) Segmentation of the blood vessels and optic disk in retinal images. IEEE J Biomed Health Inform 18(6):1874–1886

Kaur J, Mittal D (2018) A generalized method for the segmentation of exudates from pathological retinal fundus images. Biocybern Biomed Eng 38(1):27–53

Morales S, Engan K, Naranjo V, Colomer A (2017) Retinal disease screening through local binary patterns. IEEE J Biomed Health Inform 21(1):184–192

Galshetwar GM, Waghmare LM, Gonde AB, Murala S (2017) Edgy salient local binary patterns in inter-plane relationship for image retrieval in diabetic retinopathy. Proc Comput Sci 115:440–447

Abdillah B, Bustamam A, Sarwinda D (2017) Classification of diabetic retinopathy through texture features analysis. In: 2017 International conference on advanced computer science and information systems

Sarwinda D, Bustamam A, Arymurthy AM (2017) Fundus image texture features analysis in diabetic retinopathy diagnosis. In: 2017 eleventh international conference on sensing technology (ICST), Sydney, NSW, pp 1–5

Acknowledgement

We express our sincere thanks to Dr. Amol D Rahulkar, National Institute of Technology, Goa, and to Pimpri Chinchwad Education Trust’s Pimpri Chinchwad College of Engineering & Research, Ravet, Pune, for their encouragement and valuable support during this research work.

Cite this article

Randive, S.N., Rahulkar, A.D. & Senapati, R.K. LVP extraction and triplet-based segmentation for diabetic retinopathy recognition. Evol. Intel. 11, 117–129 (2018). https://doi.org/10.1007/s12065-018-0158-0

DOI: https://doi.org/10.1007/s12065-018-0158-0