Abstract

Feature Selection (FS) methods alleviate key problems in classification procedures as they are used to improve classification accuracy, reduce data dimensionality, and remove irrelevant data. FS methods have received a great deal of attention from the text classification community. However, only a few literature surveys include them focusing on text classification, and the ones available are either a superficial analysis or present a very small set of work in the subject. For this reason, we conducted a Systematic Literature Review (SLR) that asses 1376 unique papers from journals and conferences published in the past eight years (2013–2020). After abstract screening and full-text eligibility analysis, 175 studies were included in our SLR. Our contribution is twofold. We have considered several aspects of each proposed method and mapped them into a new categorization schema. Additionally, we mapped the main characteristics of the experiments, identifying which datasets, languages, machine learning algorithms, and validation methods have been used to evaluate new and existing techniques. By following the SLR protocol, we allow the replication of our revision process and minimize the chances of bias while classifying the included studies. By mapping issues and experiment settings, our SLR helps researchers to develop and position new studies with respect to the existing literature.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Automated text classifiers can be used to handle several real-world problems, such as spam filtering, sentiment analysis, and news classification. Texts are usually represented by a high-dimensional and sparse document-term matrix in a space having the dimensionality of the size of the vocabulary containing word frequency counts. The high dimensionality can cause some problems, such as the curse of dimensionality and model overfitting. Feature Selection (FS) can be used to reduce dimensionality, remove irrelevant data, and increase the learning accuracy. FS is the process of automatically or manually select the features which contribute most to the classification of a given text. In text classification problems, the feature is usually some representation of a subset of words. A significant subset of features extracted from text corpora may not be relevant for the text classification task. These non-relevant features can either deteriorate the efficiency and accuracy of the classification models (Kumbhar and Mali 2013). For this reason, FS for text classification became a popular research topic in artificial intelligence and data mining conferences and journals.

Some general reviews about FS are available. Chandrashekar and Sahin (2014) and Kumar (2014) provide a general introduction to FS methods and classify them into the filter, wrapper, and embedded categories. Pereira et al. (2018) give a comprehensive survey and novel categorization of the FS techniques focusing on multi-label classification. However, these surveys did not consider in their analyses the different methods to handle the high dimensionality of the feature space, the different text representation formats such as bag of words and word embedding, and the power of the features’ semantics for choosing the most efficient set of features.

FS methods have received a great deal of attention from the text classification community due to their strength in improving retrieval recall and computational efficiency (Kumbhar and Mali 2013). However important, there are only a few literature surveys (Kumbhar and Mali 2013; Shah and Patel 2016; Deng et al. 2019) that include them focusing on text classification. The ones available are either a superficial analysis or present a very small set of work in the subject. Kumbhar and Mali (2013) and Shah and Patel (2016) are more introductory studies, and both surveys don’t focus only on FS methods. Besides to FS, Kumbhar and Mali (2013) address feature extraction methods and Shah and Patel (2016) address algorithms for text classification. For the best of our knowledge, there is only one review work focused exclusively on FS for text classification (Deng et al. 2019). Although Deng et al. (2019) provide a good overview of the subject, a limited proportion of published papers about FS for text classification have been included (28 studies). Among these, only fourteen were published in the last ten years, and six were published in the last five years. Besides, no clear criteria for inclusion or exclusion of the selected articles were defined. The study selection was made from other FS reviews that are not specific to text classification.

Our literature review expands existing surveys on FS methods, including up-to-date researches and providing a thorough analysis of FS methods considering the text classification task. The contribution of our literature survey lays on:

-

Including a more significant number of papers covered (175 studies) resulting from a more comprehensive review in the theme;

-

Bringing more up-to-date researches, including studies from 2013–2020;

-

Proving a reproducible review according to an established literature review protocol;

-

Providing a new research categorization for understanding the FS methods area;

-

Providing a description of the experimental settings carried by the 175 reviewed studies; and

-

Last but not least, we classified all 175 papers retrieved in our study according to our categorization scheme.

This paper is organized as follows: Section 2 provides background information about the main elements for text classification, including FS. The protocol of our SLR, which includes the research questions and inclusion/exclusion criteria for selecting the studies from the literature, is detailed in Sect. 3. Section 4 summarizes the issues addressed in the included studies. In Sect. 5, we cover all of the included studies by organizing them into a new categorization scheme specific to FS methods for text classification. The categorization schema proposed in this paper provides a simplified way to organize the actual methods as well as positioning new studies about FS for text categorization. The mapping of the included studies into this categorization schema allows us to identify which are the issues/topics that already have a significant amount of studies and which ones have been less explored (possibly research gaps). In Sect. 6, we survey the experiment settings used to evaluate the proposed methods. We believe that the mapping of existing studies and their experiment settings would help researchers to position and develop new studies about FS for text classification.

2 Background

Text classification is the problem to determine which class(es) a given document belongs to (Manning et al. 2008). The classification problem can be divided into three main sub-types: binary, multiclass and multilabel. If only two classes are predefined, the problem is called as a binary classification problem. If three or more classes are defined, and each document can only be associated with one of these classes, it is known as a multiclass classification problem. Finally, if each document can be simultaneously associated with two or more classes (or labels), it is defined as a multilabel classification problem.

Currently, developing models for text classification is a sophisticated process involving not only the training of models, but also numerous additional procedures, e.g., data pre-processing, transformation, and dimensionality reduction (Mirończuk and Protasiewicz 2018). This background section presents the main concepts directly related to this review’s theme. Section 2.1 discusses distinct text representation models punctuating its advantages and disadvantages. Section 2.3 introduces the main concepts on FS specifically for text classification. Finally, Sect. 2.2 presents learning algorithms/architectures for text classification.

2.1 Representation models for textual data

Once you have labeled documents, the first step to construct a classification model is to extract features from text corpus. Different models of feature representation and weighting can be used for text classification and each representation model has advantages and disadvantages that must be considered. Below, we present two groups of representation models that are widely used in text classification architectures: N-gram based Models and Word Embedding Models.

N-gram is a set of N words which occurs “in that order” in a text set (Kowsari et al. 2019). The simplest and most widely used N-gram model is the BoW in which the \(N = 1\) (called 1-gram or uni-gram model). In this model, each feature corresponds a unique word in the text. However, the N-gram model can also be applied with N values greater than 1. For example, in the 2-gram model each feature corresponds to two consecutive words. N-gram models with \(N > 1\) could detect more information in comparison to 1-gram (Kowsari et al. 2019) because with \(N = 1\) the word order information is disregarded while in 2-gram or higher models part of the word order information is captured.

In the N-gram model, each feature (a word or set of words) receives a value/weight for each document in the corpus. This value is usually calculated based on the frequency of that word (or set of words) in each document. The simplest is precisely the frequency of the word (or set of words) in the document, known as Term Frequency (TF). However, other weighting methods may be used. The most well-known and widely used method is the Term Frequency-Inverse Document Frequency (TF-IDF). In this method, the Inverse Document Frequency (IDF) is used in conjunction with TF in order to reduce the effect of implicitly common words in the corpus (Kowsari et al. 2019).

The N-gram model is usually chosen to represent text in machine learning activities due to its simplicity, robustness and the observation that simple models trained on huge amounts of data outperform complex systems trained on less data (Mikolov et al. 2013a). However, recall that N-gram models don’t measure the semantic similarity of the words becoming a limiting factor for some types of machine learning tasks (Mikolov et al. 2013a). Thus, many researchers have been looking for representation models that capture the syntactic or semantic similarity of words (Mikolov et al. 2013a, b; Kowsari et al. 2019).

Unlike N-gram models that represent each word (or set of words) by a single value/weight per document, word embedding models represent each word (or set of words) by a N-dimension vector of real numbers (Kowsari et al. 2019). The idea behind word embedding models is that similar words have vectors with close values. In this way, the level of syntactic or semantic similarity between words can be measured based on the distance of their vectors. Different techniques for estimating word vectors have been proposed, as Word2Vec (Mikolov et al. 2013a), Glove (Pennington et al. 2014) and FastText (Bojanowski et al. 2017).

2.2 Text classification architectures

Over the years, different types of algorithms have been developed for the task of text classification (Kowsari et al. 2019). These algorithms can be divided into two main groups: traditional machine learning and deep learning. Some traditional algorithms, like Support Vector Machines (SVM), Naive Bayes (NB) and k-Nearest Neighbors (KNN), are widely studied for the text classification problem and are still commonly used by the scientific community (Kowsari et al. 2019). However, architectures based on deep learning like Convolutional Neural Network (CNN), Deep Belief Network (DBN), and Hierarchical Attention Network (HAN) are increasingly being researched for text classification (Kowsari et al. 2019). Despite having the potential to achieve excellent results in some situations, deep learning architectures have some limitations and disadvantages. Table 1 compares deep learning and traditional architecture for text classification.

Table 1 shows that each text classification architecture has advantages and disadvantages. Thus, each specific situation must be analyzed before choosing between using deep learning or traditional architecture for text classification. Two central points in this choice are data volume and the need to have model interpretability. Deep learning usually requires much more data than traditional machine learning algorithms and not facilitate a comprehensive theoretical understanding of learning (Kowsari et al. 2019). Therefore, if the volume of data available is small or there is a need for the interpretability of the model, the traditional architecture will probably be more suitable.

2.3 Feature selection for text classification

As shown in Sect. 2.1, the main representation models used for text classification result in high-dimensional vectors. High dimensionality can cause some problems, such as the curse of dimensionality and model overfitting. For this reason, many researchers use dimensionality reduction techniques to produce smaller feature spaces (Kowsari et al. 2019). According to Mirończuk and Protasiewicz (2018), dimensionality reduction techniques can be organized into three groups: FS, feature projection, and instance selection. While the first two types of methods aim to reduce the dimensionality of the feature space, the third aims to reduce the number of instances used for training. In this section, we focus on FS and feature projection methods.

In FS methods, the resulting feature set is a subset of the initial feature set. On the other hand, the feature projection results in a new group of features mapped from the original features. Both methods can be used in isolation or combined to reduce dimensionality. This systematic review focuses specifically on FS methods, so methods that perform feature projection are not in this study’s scope.

FS methods are usually classified into three categories: filter, wrapper, and embedded (Kumar 2014). This categorization is based on the FS strategy regarding how the FS integrates into the learning activity. Filter methods are executed as a previous step and are independent of the learning activity. Wrapper methods, on the other hand, encapsulate the predictor (i.e., the classifier) and utilize the performance of the predictor to assess the relevance of features or search for the most relevant subset of features. Finally, embedded methods include FS as part of the training process.

A relevant advantage of selecting features over projecting features is because the resulting feature set is a subset of the original features. In this way, each resulting feature preserves the same meaning as the original features. This is an important point for text classification, as each feature usually represents a word or set of words. According to the survey work carried out by Mirończuk and Protasiewicz (2018), FS is the most researched dimensionality reduction technique for text classification. In our SLR, we focus specifically on FS studies for text classification.

The FS activity, which is the focus of this review, can be useful in traditional architecture and deep learning for text classification. As traditional architecture is more dependent on feature engineering activities, the selection of features has an important role in improving the classification model’s accuracy. As the deep learning architecture has less dependence on feature engineering, FS tends to have less impact on the accuracy of the model. However, deep learning architectures are usually quite expensive to train. For this reason, FS may have an important utility for the deep learning architecture to reduce the computational cost.

3 Systematic literature review

The purpose of our review is to collect, organize in categories, and provide a comprehensive and recent review of FS methods for text classification. We decided to conduct a SLR to use a reproducible methodology and define explicit eligibility criteria. We aim to minimize the review bias and attempt to identify all studies that are related to our research questions.

There are several guidelines available to conduct SLRs, being Cochrane reviews protocol one of the most common in the health domain (Higgins and Green 2008). Based on Cochrane reviews protocol and other methods available in the literature, Kitchenham (2004) proposed a protocol focused on software engineering. The SLR reported in this paper follows the Kitchenham’s procedures for SLR.

We have performed a SLR in three databases: (1) IEEE Xplore Digital Library, (2) ACM Digital Library, and (3) Science Direct. Our SLR protocol includes the following steps: (i) the elaboration of research questions; (ii) the definition of search strategy; (iii) high-level paper selection and classification; and (iv) detailed review of selected papers. The searches were conducted using both title and abstract. It returned a total of 1376 unique papers from journals and conference considering the past eight years (2013–2020). After abstract screening and full-text eligibility analysis, 175 studies were included in our SLR.

3.1 Research questions and search strategy

The purpose of this SLR is to find primary studies using an unbiased search strategy to answer the following research questions:

-

1.

What are the main issues/problems that are being addressed by FS studies in text categorization task?

-

2.

What are the different categories of methods that have been proposed?

-

3.

What are the settings used to analyze and compare FS methods in experiments from the text categorization domain? For example: Text representation, Datasets, classifier algorithms and validation settings.

Preliminary searches were performed to assess the volume of potentially relevant studies. We identified that the query returned a small number of studies when applied only to the studies’ title. Searches using full text returned an impractical volume of non-relevant studies (dozens of thousands) because the searched terms are widespread in artificial intelligence literature. Therefore we decide to perform the search using title and abstract. Additionally, in our preliminary searches, we identified the words’ main variants on the concepts we are looking for. Based on that, we construct our query string:

(Feature OR Features OR Variable OR Variables OR Attribute OR Attributes) AND (Selection OR Select OR Selecting OR selected) AND (Text OR Texts OR Document OR Documents) AND (Categorization OR Classification OR Categorize OR Classify OR Categorizing OR Classifying OR Classifier)

3.2 Conducting the review

Study selection refers to the assessment of retrieved papers. For this, we defined inclusion and exclusion criteria. The first exclusion criteria specified was based on practical issues (i.e., language and date of publication). This SLR considered papers published in English and between the years 2013 and 2020. The year restriction was established considering a large number of included studies in this period. The study selection activity was executed in two steps: (1) title and abstract screening; and (2) full text screening. We performed both steps manually.

In the first screening phase, papers were included only if they contain, either in the title or in the abstract, descriptions related to Feature Selection and Classification Tasks topics in Text Domain.

After the first screening step, full texts were retrieved and analyzed individually. At this point, the aim was to ensure that only those studies that are related to the subject considered in this review and that are related to our research questions would be selected. The following are the main reasons for studies exclusion after the full-text analysis: (1) The study does not focus exclusively on FS (70 studies). (2) The study does not evaluate the FS method using text datasets (33 studies). (3) The study does not evaluate the classification task’s method (6 studies).

The SLR reported in this paper was conducted in October 2020. Included papers reached the amount of 175 studies. Among these studies, 71 (40.57% of total) were retrieved from ACM Digital Library, 71 (40.57% of total) cames from IEEE Xplore Digital Library and 33 (18.86% of total) of them were retrieved from Science Direct. This list of papers includes journal articles and conference proceedings.

The research group that executed this SLR is composed of one D.Sc. candidate and two professors, all addressing Artificial Intelligence and Data Mining topics. Fig. 1 shows the PRISMA flow diagram for this SLR. This diagram presents a systematic review’s main activities, indicating the number of studies evaluated at each stage. The PRISMA flow diagram was proposed by Moher et al. (2009) within a work that raised the preferred reporting items for systematic reviews and meta-analyses, the PRISMA statement.

4 Feature selection issues for text classification

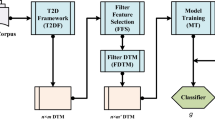

We read and analyzed all included studies to identify the main issues that are being addressed by them (Research Question 1). After analyzing each study, we identified the main groups of problems/issues and mapped the included studies to these groups. We found that these groups of problems represent sub-tasks of the FS process (Fig. 2). They are related to each other and can be organized as:

-

1.

Measure feature relevance – Measure the relevance of each feature is an essential task in FS activity. There are different ways to estimate the relevance of features, such as measuring the correlation with the target, the variable entropy, or calculating the redundancy of features (Kumar 2014). However, the basic idea is that the higher the relevance of a feature, the greater must be the power to increase the accuracy of the model (in our case, a text classifier). These studies compare existing metrics or define new metrics for calculating the predictive potential of each feature. The large part of the studies included in this review deal with issues related to the task of measure feature relevance.

-

2.

Subset search – The subset search task aims to find the best subgroup of features to be used in the classification. We found two main ways to perform this search: (a) evaluating several different subsets directly in the classification activity (wrapper method), and (b) using some heuristics to assess the relevance of each subset without evaluating in a specific classifier (filter method). In both approaches, optimization methods (such as genetic algorithms or Particle Swarm Optimization (PSO)) can be used to help the search. Subset search methods commonly use as its basis some of the existing feature relevance metrics (such as Chi-square (CHI), Information Gain (IG), or Mutual Information (MI)).

-

3.

Globalization – Relevance metrics and subset search methods commonly can be applied specifically for one class or label of the dataset. Therefore, a method that globalizes the results of each class/label is required to construct a final set of features that represents all classes or labels. One alternative to globalization is to use specific sets of features for each class/label. However, the classifier must be designed to work this way. We mapped studies about class/label specific features in the globalization category in this review.

-

4.

Ensemble – Each FS method has specific advantages and disadvantages, so combining two or more methods can lead to better results than using them separately. Ensemble studies propose or evaluate approaches to combining FS methods and/or metrics.

Sections 4.1–4.4 discuss the most relevant issues and studies for each task and present an overview of the main approaches we found to deal with each presented issue. The methods proposed in the included studies will be described in Sect. 5.

Fig. 3 presents the evolution of the number of studies by the issue group. This chart shows an increasing number of papers that address the subset search problem while a decreasing number of studies that focus on how to measure the relevance of features. Since the studies search for this review happened in October 2020, the numbers of papers for the 2020 are still partial because the source databases were still incomplete for those dates. Therefore, we mark this part of the graph in gray to show that it includes preliminary data.

4.1 Issues about measure feature relevance

After analyzing included papers that deal with the task of measure feature relevance, we mapped the most frequent issues related to this task:

-

How to deal with the unbalanced or skewed datasets?

-

How to avoid that rare terms receive high scores?

-

How to identify and measure the redundancy of terms?

-

How to consider the sparsity of the matrix?

-

How to consider the position of terms?

Each one of these issues is explained next.

Unbalanced or Skewed DatasetsFor text classification, data are characterized by a large number of highly sparse terms and highly skewed categories (Rehman et al. 2018). A skewed dataset has an unbalanced class distribution, which means the number of instances in one class (majority class(es)) may be many times bigger than the number of other class instances (minority class(es)) (Japkowicz 2000). If the unbalance of classes is not treated during or before the FS, the positive features of the minority classes may receive minor relevance scores because they are present in a smaller number of documents. Consequently, the minority classes may have no features on the selected feature set. If some class/label does not have any of its features included in the final set, the classifier may not be able to classify the documents of this class/label.

Rare Terms/FeaturesSeveral relevance metrics are based on the correlation between features and the target. In these metrics, features with high correlation receive higher scores. Because rare terms tend to be present in a few or only one class, they tend to have a higher correlation with the target. For this reason, many of the relevance metrics assign higher scores for rare features. As an example, we can cite Balanced Accuracy Measure (ACC2) and IG (Rehman et al. 2017; Wang et al. 2014a). However, rare features usually are not relevant to classification tasks, because they will have a very low likelihood of to be present in new documents.

We found two main strategies to deal with rare terms in included studies: (1) eliminating rare terms before applying the FS method; and (2) adapt the relevance metric formula to assign lower scores to rare terms.

Redundancy ExclusionFeature redundancy is usually defined as a high correlation among features (Wang et al. 2013). Redundant features are likely to appear simultaneously in the same documents. Considering that two features are redundant and one of them is already selected, select the second feature will contribute very little or nothing to the total relevance of the selected set. For this reason, the redundancy between features is an important factor to measure the relevance of each feature. The goal of FS is to select a highly-relevant subset with a minimum redundancy (Labani et al. 2018).

Like the case of dealing with rare features, we found two main approaches that deal with redundant features: (1) eliminating redundant features during the pre-processing phase before applying the FS method; and (2) adapt the relevance metric formula to assign lower scores to redundant features.

Data SparsityFor text classification, features usually consider in its recipe the frequency of words/phrases in each document. The number of possible words/phrases in all dataset training documents can be huge, and this number defines the length of the initial feature vector (Ong et al. 2015). Since each document usually includes a low percentage of the total set of words/phrases, most of the features of each document will be zero, i.e., zero frequency for words/phrases that are not present in that document.The result is a sparse composite matrix.

The matrix sparsity degrades the performance of the text classification (Ong et al. 2015). For this reason, properly handling the sparsity issue is recommended for FS methods. An alternative to address this issue is to use another representation format which does not result in a sparse matrix.

Position/Location Inside the TextThe term location inside a text can be related to the importance of a term and, consequently, for measuring the relevance of a feature. Song et al. (2016) defines that the feature words have different capabilities to express the text in different positions of the text. Especially for news articles, information that is in the title, subtitle, or first paragraph tends to be more relevant than information on the other paragraphs. Therefore, the location of terms within the text can be useful for FS methods.

4.2 Issues about subset search

The subset search task aims to find the best subgroup of features to be used in the classification. This search can be performed using an optimization method (such as genetic algorithms or PSO) and evaluating several different subsets directly in the classification activity (wrapper method) or using some heuristics to evaluate the relevance of each subset without evaluating in a specific classifier (filter method). Most subset search studies are focused on evaluating metaheuristics methods to improve search efficiency. Other studies focus on reducing redundancy and hyperparameter optimization.

Search Strategy/Search Efficiency and EffectivenessThe simplest way to perform the subset search is exhaustively to evaluate all candidate subsets according to some evaluation function. However, for a dataset with N features, there exists \(2^{N}\) candidate subsets. Due to a large number of features in text domain datasets, the exhaustive search usually is too costly and virtually prohibitive (Tang et al. 2014). For this reason, the main issue of the subset search for text classification is the search efficiency. Several studies included in this review proposes different metaheuristics or methods to perform the search efficiently.

Most of the included studies about subset searches are based on swarm optimization methods. In those methods, subsets of candidate features are mapped as particles, and the Swarm Optimization method tries to find the best solution (best feature subset) by exploring the search space moving the particles to find the global optimum configuration (Chopard and Tomassini 2018).

RedundancyFeature redundancy is usually defined in terms of some correlations within the features (Wang et al. 2013). The goal of FS is to select a highly-relevant subset with a minimum redundancy (Labani et al. 2018). Most subset search methods avoid select redundant features naturally by evaluating several combinations of features. As explained in Sect. 4.1, methods that measure the relevance of each feature in isolation need address feature redundancy explicitly.

Feature Selection and Hyperparameter OptimizationClassification algorithms usually have several parameters whose values heavily influence its performance. Thus, determining appropriate values of parameters of a classifier is a critical issue (Ekbal and Saha 2015). Like FS, finding the best combination of parameters can be addressed as an optimization problem, called Hyperparameter Optimization. Grid search and manual search are the most widely used strategies for hyperparameter optimization (Bergstra and Bengio 2013).

Subset search methods usually are wrapper methods. That is, they use the accuracy of the classifier to evaluate each candidate subset. But notice that the predictor parameters influence the performance of the subset search. Similarly, the selected features influence hyperparameter optimization. For this reason, performing the two searches in an integrated manner may be a good approach to find the best combination of selected features and predictor parameters.

4.3 Issues about globalization

Relevance metrics and subset search methods commonly can be explicitly applied for one class or label of the dataset. Therefore, a method that globalizes the results of each class/label is required in order to construct a final set of features that represents all classes or labels. One alternative to globalization is to use specific sets of features for each class/label. Studies about class/label specific features are mapped in the globalization category in this review.

Analyzing included studies, we found three ways to implement FS globalization within a text classification architecture:

-

1.

Implement a local FS method for each class/label and perform the globalization subsequently.

-

2.

Implement a global FS method designed to deal with globalization problems.

-

3.

Adapt/Use a class/label specific classification scheme (selecting specific features for each class/label).

For the first three approaches, the main issue to be addressed is the representativeness of each class and label in the selected final set of features. For the fourth approach, the main question is how to transform the classifier or transform the problem to be able to use specific subsets of features per class/label. These globalization issues will be detailed in the following two paragraphs.

Classes/Labels Representativeness on Final Feature SetThe basic scheme of filter-based FS assigns a score to each feature based on its discriminating power. It selects the top-k features from the feature set, where k is an empirically determined number (Agnihotri et al. 2017b). If the classification problem is multiclass or multi-label, some classes/labels may have few or no selected features in the final feature set. Therefore, a central issue for globalization is ensuring adequate representativity for all classes/labels in the final dataset.

Class/Label Specific FeaturesInstead of performing the globalization and obtaining a single subset of features to be used in the classifier, it is possible to use subsets of specific features for each class or label. Some studies show this approach can improve the classification performance (Tang et al. 2016c, a). However, the classical theory as it stands requires operating in a common feature space and fails to provide any guidance for a suitable class-specific architecture (Baggenstoss 2003). Therefore, when using class/label specific features, the central issue is how to adapt the problem or the classifier to work with these class/label specific features.

In addition to the globalization approaches we identified in our systematic review, other approaches that address the globalization issue:

-

1.

FS based on sparse learning, which focuses on the relationship between features and classes or labels (Braytee et al. 2017).

-

2.

FS based on manifold learning (Xu et al. 2010).

4.4 Issues about ensemble

Each FS method has specific advantages and disadvantages, so combining two or more methods can lead to better results than using them separately. Ensemble studies propose or evaluate approaches by combining FS methods or metrics. We found that only seven of the included studies address the issue of ensembling FS methods. Another included studies deal with the FS methods specifically for ensembling learning approaches (for example, Boosting-based algorithms (Al-Salemi et al. 2018)), but they are not focused on ensembling FS methods. Virtually all of the included studies about the ensemble issue address the same central problem of how to combine and aggregate the results of different FS methods. We found three main approaches to ensemble FS methods:

-

1.

Combining selected subsets – Execute/Performs two or more FS methods isolated and then create a final set of features by combining the subsets selected by the different methods.

-

2.

Chaining FS methods – Execute two or more FS methods in sequence, where the subset selected by a method becomes the input for the next method.

-

3.

Ensembling rankings – Construct two or more relevance rankings (using different relevance metrics), combining the resulting rankings into a unique ranking and finally select the features using a predetermined threshold.

For each approach, different ways of combining or rankings the sets are available. The main issue of the included studies is precisely to define/find the best way to perform this combination to obtain the most relevant subset of features.

5 Feature selection methods for text classification

As explained in Sect. 4, FS for text classification should address different issues. Our SLR found that several types of methods are being proposed and evaluated to address such issues. We analyzed all the included studies of our SLR and mapped the main characteristics of each method. Based on this mapping, we designed a new categorization scheme that allows to group the methods from four different perspectives (Research Question 2): strategy, approach, target, and labeled data dependence (Fig. 4). The proposed categorization scheme helps to organize in groups and compare current methods. Additionally, it will help the positioning of future studies about FS for text classification.

The first perspective addresses the different strategies as the selection of features can be performed. It is detailed in Sect. 5.1. The different approaches (statistical, machine learning, or semantical) are mapped on the second perspective and is detailed in Sect. 5.2. The third perspective maps the type of target that the method was built to handle (binary, multiclass, multi-label, hierarchical, or ordinal). It is explained in Sect. 5.3. The fourth perspective maps the level of dependence on labeled data, being detailed in Sect. 5.4.

Each perspective is composed of a set of categories, as shown in Fig. 4. We mapped each of the studies included in this review according to each of these four perspectives of the classification schema applied on the methods described by them.Table 2 maps the issues groups described in Sect. 4 into each perspective of our categorization schema presented in this section. This table can be used to identify which strategies and approaches are being used to address each issue group. The most relevant studies on each category will be indicated during the explanation of the perspectives and respective categories in the following sections.

5.1 Categorization by strategy

As presented in Sect. 2.3, FS methods are usually classified into three categories: filter, wrapper, and embedded. The first three flows in Fig. 5 represents each one of these strategies. In this SLR, we have included two more categories to the three classical ones: Two-stages Pure and Two-stages Hybrid. Note that both strategies have the same flow in Fig. 5. The difference is in the choice to combine the same or different strategies. Two-stages Hybrid strategy methods combine FS methods are based on different strategies of selection. For example, the first stage may apply the filter strategy, while the second stage may use the wrapper strategy. On the other hand, some studies combine two different methods but using the same strategy. For these cases, we classify the studies in a separate category (Two-stages Pure strategy). Each one of the five strategies considered in this survey will be presented next. Fig. 6 summarizes the amount of included studies by strategy over the years.

Flow diagram for each Feature Selection (FS) Strategy presenting the interaction between the FS activity and the model training activity. Each of these strategies is detailed in Sect. 5.1

Filter StrategyThe main characteristic of the methods that are based on the filter strategy is to be independent of the classifier. In other words, the filter strategy does not use the performance of the classifier to assess the relevance of features or subsets of features. Lazar et al. (2012) subdivided these filter methods into two classes: ranking-based and space search. Ranking-based methods use some relevance metric to assess the predictive power of each feature, construct a ranking based on this relevance score, and apply a threshold to select the most relevant features (Chandrashekar and Sahin 2014). Space search methods aim to find the best subset of features by evaluating different combinations of features.

Table 3 summarizes studies that apply a ranking-based approach to implement the filter strategy. The studies in this table are grouped according to the base method employed to handle the problem of measuring the relevance. We found that these studies usually propose an improved version of some existing relevance metrics or propose new relevance metrics. As detailed in Sect. 3, our systematic review focused on mapping studies published between 2013 and 2020. However, some studies published before this time window have proven themselves in the literature. For example, the Distinguishing Feature Selector (\(\hbox {DFS}^{*}\)) method proposed by Uysal and Gunal (2012).

However, filter methods are not restricted to handle the problem of measure relevance (ranking methods). The filter strategy can also be used to handle the subset search, globalization, or ensemble problems. Table 4 presents the filter methods that address each of these issues. In Table 4, the studies are also grouped according to the base method utilized.

As described in Sect. 4.1, one of the most relevant issues for text classification is data sparsity. The FS methods generally help to reduce data sparsity by removing less relevant features. However, we found one study based on the filter strategy that mainly focuses on dealing with this issue. Ong et al. (2015) propose an improved FS metric known as Sparsity Adjusted Information Gain (SAIG), which modifies the conventional IG metric and aims to adjust the feature ranking scores according to the matrix sparsity.

Additionally, some two-stage FS methods perform the filter strategy in both stages (Two-stages Pure Strategy). Despite having two stages, these methods cannot be classified as having a hybrid strategy because they only use one strategy (the filter strategy). As an example, we can cite the study of Karabulut (2013) that presents a novel two-stage filter method based on IG theory and Geometric Particle Swarm Optimization (GPSO).

Wrapper StrategyDifferent from the filter strategy, the methods that use the wrapper strategy are dependent on the predictor because they use the performance of the predictor to evaluate the relevance of features or search for the best subset of features. For this reason, wrapper methods tend to be more computationally costly than filter methods. Most wrapper methods are based on search techniques. As explained in Sect. 4.2, the exhaustive search usually is too costly and most times prohibitive (Tang et al. 2014). For this reason, the main issue related to wrapper methods is the search efficiency (explained in Sect. 4.2). Several studies included in this review propose different methods to improve search efficiency using metaheuristic search methods. Therefore, we identified that the wrapper strategy is commonly implemented with a metaheuristic approach. Section 5.2 details the metaheuristic approach and lists the included studies based on this approach. Wrapper methods usually are subset search methods. That is, they use the accuracy of the classifier to evaluate each candidate subset. Therefore, the predictor parameters influence the subset search performance. Similarly, the features selected influence hyperparameter optimization. For this reason, wrapper methods perform the two searches (subset search and hyperparameter search) in an integrated manner can be the best approach to find the best combination of selected features and predictor parameters. Despite the relevance of this issue, the only study included addressing it was developed by Ekbal and Saha (2015).

Embedded StrategyThe main characteristic of embedded methods is the incorporation of the FS as part of the training process. Embedded methods aim to reduce the computation time taken up for reclassifying different subsets, which is done in wrapper methods (Chandrashekar and Sahin 2014). The embedded methods include studies that are evaluated in specific/atypical learning situations:

-

Aspect-based Sentiment Analysis—Zainuddin et al. (2018).

-

Class-specific Features—Tang et al. (2016c).

-

Ensemble of Multi-label Classifiers—Guo et al. (2017).

-

Multi-objective Genetic-Programming—Nag and Pal (2016).

-

Positive and Unlabeled Learning—Zhang et al. (2014b).

Our review found that most embedded strategy studies (5 of 7) focus on the subset search issue described in Sect. 4. As embedded FS methods are part of the training algorithm, this strategy can deal efficiently with the subset search issue (Nag and Pal 2016). Additionally, we identified one embedded strategy study focused on the measure relevance issue (Naik and Rangwala 2016) and another one focused on the globalization issue by implementing class-specific features (Tang et al. 2016c). None of the embedded strategy studies in this review focus on FS ensemble issue.

Two-stages Hybrid Strategy Each of the strategies presented until now (filter, wrapper, and embedded) has specific advantages and disadvantages. For this reason, many studies explore hybrid methods that combine two different strategies into a single method. In this way, it is possible to combine their advantages and mitigate specific problems/risks. Several studies perform a filter stage before conduct the subset search to reduce the search space. For example:

-

Filter Stage + Genetic Algorithm Based Search—Ghareb et al. (2016).

-

Filter Stage (IG or CHI) + Rough Set—Kun and Lei (2014).

-

Filter Stage + Markov Blanket Filter (MBF) Subset Search—Javed et al. (2015).

-

Filter Stage + Support Vector Machine-Recursive Feature Elimination (SVM-RFE)—Zhang et al. (2014a).

-

Filter Stage (CHI) + Support Vector Machine-Recursive Feature Elimination (SVMRFE)—Chen et al. (2019).

-

Filter Stage (CHI) + Particle Swarm Optimization (PSO)—Somantri et al. (2019).

-

Filter Stage (MI) + Recursive Feature Elimination (RFE)—Jie and Keping (2019).

-

Filter Stage (IG) + Particle Swarm Optimization (PSO)—Bai et al. (2018).

-

Filter Stage (IG) + Binary Gravitational Search Algorithm (BGSA)—Kermani et al. (2019).

-

Filter Stage (IG) + Improved Sine Cosine Algorithm (ISCA)—Belazzoug et al. (2020).

-

Filter Stage (Ontology Filter) + Particle Swarm Optimization (PSO)—Abdollahi et al. (2019).

Two-stages Pure Strategy Some studies combine two different methods but using the same strategy. For this reason, they cannot be classified as hybrid strategy methods. Therefore, we classify them into a different strategy (Two-stages Pure). The following studies combine two stages based on filter strategy or based on wrapper strategy:

-

Filter (IG or CHI or MI) + Filter (Clustering)—Ghareb et al. (2016).

-

Li et al. (2013b) propose a two-step FS method. At the first step, redundancy analysis among original features based on a categorical fuzzy correlation degree is applied to filter the redundant features with a similar categorical term frequency distribution. In the second step, a conventional IG feature relevance metric is adopted to select the final feature set.

-

Wrapper (Forward Feature Construction) + Wrapper (Genetic Algorithm)—Rasool et al. (2020).

5.2 Categorization by approach

During the review, we identified that FS works could be grouped according to the approach used. In this paper, the approach is related to the computational, statistical, or semantic technique used to select features. While the strategy (Sect. 5.1) defines how the method will fit into the training process and how it relates to the classifier, the approach (presented in this section) concerns the technique employed to perform the selection of features.We decided to map each method based on their primary approach since we identified that most methods do some combination of approaches, mainly with the statistic-based approach. For this reason, we did not map them into a separate category (hybrid approach).

Most published studies (109 of 175, 62.29%) use statistical metrics to measure the relevance of features and select them. These methods will be classified as statistic-based approaches. However, other studies use different approaches to select features. The main groups of approaches we have found were machine-learning-based techniques (such as clustering), semantic-based techniques, and rule-based techniques (such as Apriori). Each of these approaches will be detailed below.

Statistic-Based Approaches Gunduz and Cataltepe (2015) propose a feature relevance metric called Balanced Mutual Information (BMI) that is able to deal with the class imbalance problem through oversampling of the minority classes. They use the Synthetic Minority Oversampling Technique (SMOTE) for oversampling, which creates new minority class instances by searching for nearest neighbors of a randomly selected minority class instance. The new minority class instance value is generated by interpolation of randomly selected instances and selected neighbors of this instance. Rehman et al. (2018) propose a new feature relevance metric called Max-Min Ratio (MMR). It is a product of max-min ratios of true positives and false positives and their difference, which allows MMR to select smaller subsets of more relevant terms even in the presence of highly skewed classes.

As discussed in Sect. 4.1, there are two main strategies to deals with rare terms: (1) eliminate rare terms during the pre-processing phase before applying the FS method, and (2) adapt the relevance metric formula to assign lower scores to rare terms. Rehman et al. (2015) adopt the first strategy explicitly by removing rare features before evaluating the proposed relevance metric called Relative Discrimination Criterion (RDC). Rehman et al. (2017) adopt the second strategy in a recent study. They propose the Normalized Difference Measure (NDM) that is an improved version of the ACC2 (Forman 2004) modified specially to assign lower relevance scores to rare features.

Labani et al. (2018) demonstrated that RDC is an effective method for identifying relevant features. A drawback is that the correlation between features is ignored, and thus RDC cannot identify redundant features. In order to mitigate this problem, Labani et al. (2018) propose the Multivariate Relative Discrimination Criterion (MRDC) that is an evolution of the RDC. Labani et al. (2018) modified the original formula to identify and measure the redundancy of features based on the correlation between then. As a result, MRDC assigns a higher relevance score to features with high discriminative power and low redundancy.

Document Frequency (DF) of a feature refers to the number of documents that include that feature. The term frequency refers to the occurrence number of a certain feature in a certain document. Most popular FS metrics for text classification such as IG, CHI, and Odds Ratio (OR), are based on DF and don’t use the term frequency (Baccianella et al. 2013). However, the term frequency is a piece of important information for FS because it represents the importance of feature to each document (Wu and Xu 2016). High-Frequency terms (except stop words) that occurred in few documents are often regards as discriminators in the real-life corpus (Wang et al. 2014a).

To overcome this drawback, Baccianella et al. (2013) propose to logically break down each training document of length k into k training “micro-documents”, each consisting of a single word occurrence and endowed with the same class information of the original training document. This transformation has the double effect of (a) allowing all the original FS methods based on binary information to be still straightforwardly applicable, and (b) making them sensitive to term frequency information. Wang et al. (2014a) propose a new FS metric based on term frequency and Student’s t-Test. The T-TEST function is used to measure the diversity of the distributions of a term frequency between the specific category and the entire corpus. Wu and Xu (2016) propose a new FS metric that combines DF and term frequency called Limiting DF’s Word Frequency. Its primary principle is summarized as follows: pre-set the threshold value of minimum DF \(\alpha\) and the threshold value of maximum DF \(\beta\), if the DF of feature word is between \(\alpha\) and \(\beta\) then calculate the word frequency of this feature word or delete it otherwise.

Metaheuristic Approaches As explained in Sect. 5.1, metaheuristic search methods can be implemented to address the subset search issue and usually is combined with wrapper strategy. Metaheuristic algorithms use problem-specific heuristic information and efficiently manage the search process without exploring the whole search space (Gökalp et al. 2020). Therefore, they are ideal candidates to overcome the drawbacks of wrapper-based methods (Gökalp et al. 2020). Common meta-heuristic algorithms include the genetic algorithm and PSO (Lin et al. 2016). The included studies that implement the metaheuristic approach is listed below:

-

Binary Black Hole Algorithm (BBHA)—Pashaei and Aydin (2017).

-

Binary Particle Swarm Optimization (BPSO)—Shang et al. (2016).

-

Cat Swarm Optimization (CSO)—Lin et al. (2016).

-

Genetic Algorithm and Wrapper Approaches (GAWA)—Rasool et al. (2020).

-

Improved Particle Swarm Optimization (IPSO)—Lu et al. (2015).

-

Multi-Objective Automated Negotiation based Online Feature Selection (MOANOFS)—BenSaid and Alimi (2021).

-

Multi-Objective Relative Discriminative Criterion (MORDC)—Labani et al. (2020).

-

Memetic Feature Selection based on Label Frequency Difference (MFSLFD)—Lee et al. (2019).

-

Optimized Swarm Search-based Feature Selection (OS-FS)—Fong et al. (2016).

-

Small World Algorithm (SWA)—Lu and Chen (2017).

-

Wrapper Feature Selection Algorithm based on Iterated Greedy (WFSAIG)—Kyaw and Limsiroratana (2019).

-

Wolf Intelligence Based Optimization of Multi-Dimensional Feature Selection Approach (WI-OMFS)—Gökalp et al. (2020).

Machine Learning-Based ApproachesAmong the included studies, 17 studies use some machine learning methods directly in the FS process. These studies mainly used the following techniques:

-

Clustering—Song et al. (2013), Yang et al. (2014), Zhou et al. (2014), Sheydaei et al. (2015), Nam and Quoc (2016), Roul et al. (2016b), Malji and Sakhare (2017), Chormunge and Jena (2018), Kumar and Harish (2018), Guru et al. (2020).

-

SVM—Rzeniewicz and Szymanski (2013), Zhang et al. (2014a), Tripathy et al. (2017).

-

Word Embedding—Yang and Zheng (2016), Lampos et al. (2017), Tian et al. (2018), Lan et al. (2020).

Semantic-Based ApproachesEvaluate the meaning of words can be useful for FS methods because it helps to identify the relevance of words inside a text and identify the similarity between words. Among the studies included, only 11 studies use a semantic approach. Below are the semantic technologies used by each study:

-

Context-capturing Features—Hagenau et al. (2013),

-

Crowd-based Feature Selection (CrowdFS)—Pintas et al. (2017).

-

Discriminative Personal Purity (DPP)—Ortega-Mendoza et al. (2018).

-

Latent Selection Augmented Naive Bayes (LSAN)—Feng et al. (2015a).

-

Semantic Measures—Ouhbi et al. (2016).

-

Semantic Similarity—Zong et al. (2015).

-

Topic Guessing—Méndez et al. (2019).

We categorize two studies (Su et al. 2014, Zhu et al. 2017) that use Word Embeddings as a semantic approach because it was used to map the meaning of the words. Both studies used Word Embedding to map the similarity of the words and perform the similarity expansion in FS. The aim of similarity expansion is to expand the set of selected features based on similarity of words.

Rule-Based Approaches Among the studies included, only four use rule-based approach. Agnihotri et al. (2016) propose a novel hybrid FS called Correlative Association Score (CAS) of terms. The CAS utilizes the concept of the Apriori algorithm to select the most informative terms. Sheydaei et al. (2015) proposed the Bit-priori Association Classification Algorithm (BACA), which combines the rule approach with a semantic approach. More recently, Wang and Hong (2019) proposes the Hebb Rule Based Feature Selection (HRFS) that assumes that terms and classes are neurons and select terms under the assumption that a term is discriminative if it keeps “exciting” the corresponding classes. Finally, Sundararajan et al. (2020) proposes the multi-rule based ensemble FS model for sarcasm classification.

Linguistics Approaches In our review, we found three FS studies based mainly on the linguists’ approach. The proposed methods use lexical or grammar information to measure the relevance of the features (Mladenović et al. 2016, Qin et al. 2016, Jiang and Yu 2015).

5.3 Categorization by target

Document classifiers may have different types of targets. Binary classifiers estimate one class for each new document within two possible categories (usually positive and negative categories). Multiclass classifiers assign each new document to one class from a list including three or more possible classes. In multi-label classification, a classifier attempts to assign multiple labels to each document, whereas a hierarchical classifier maps text onto a defined hierarchy of output categories (Mirończuk and Protasiewicz 2018). Hierarchical and ordinal classifiers can be viewed as specific types of multiclass classifiers in which classes have a relationship with each other. In the hierarchical classification, the classes are organized into hierarchical levels, whereas in ordinal classification, the classes are organized in order or sequence.

During our review, we identified that each FS method is specifically designed to work with a specific target type. The following paragraphs present the main proposed methods of each target type.

Binary Text Classification Among the 175 included studies, 43 studies (24.57%) focus on FS for binary text classification. We found that 20 studies (46.51% of 43) are related to the sentiment analysis and 6 studies (13.95% of 43) are related to spam detection. Both sentiment analysis and spam detection are usually handled as a binary classification problem. Table 5 presents the FS studies that were evaluated with binary datasets grouped by problem domain.

Multiclass Text Classification Most of studies about FS for text classification (118 of 175 included studies, 67.43%) focus on multiclass. Among these, 82 studies (69.49% of 118 studies) evaluate the method proposed using the main news classification benchmarks (datasets Reuters-21578, 20Newsgroup, Fudan, Sogou News). FS methods for multiclass or multi-label text classification need to address the globalization issue described in Sect. 4.3. In our review, we found studies for each implementation options described in Sect. 4.3:

-

1.

Implement a local FS method for each class/label and subsequently perform globalization (Shang et al. 2013; Xu and Jiang 2015).

-

2.

Implement a global FS method designed to deal with globalization problems (Lee and Kim 2013; Agnihotri et al. 2017b).

-

3.

Adapt/Use a class/label specific classification scheme (Tang et al. 2016a, c).

Multi-Label Text ClassificationAmong the included studies, only nine studies focused on FS for multi-label text classification:

-

Based on supervised topic modeling for Boosting-based multi-label text categorization—Al-Salemi et al. (2017).

-

Using Diversified Greedy Backward-Forward Search (DGBFS)—Ruta (2014).

-

Using Ensemble Embedded Feature Selection (EEFS)—Guo et al. (2017) and Guo et al. (2019).

-

Using label Pairwise Comparison Transformation (PCT) method, which converts each original multi-label sample into multiple samples with same feature vectors and different label vectors —Xu and Xu (2017).

-

Using Multivariate Mutual Information (MMI)—Lee and Kim (2013).

-

Using two-stage term reduction strategy based on IG theory and GPSO search—Karabulut (2013).

-

Using Fuzzy Rough Feature Selection (FRFS)—Zuo et al. (2018).

-

Using Memetic Feature Selection based on Label Frequency Difference (MFSLFD)—Lee et al. (2019).

As detailed in Sect. 3, our SLR protocol focused studies that explicitly state the application of text classification in the title or abstract. However, some works outside this scope are also interesting and were experimentally tested on text data. A relevant example is the mutual Information-based multi-label FS method using interaction information (Lee and Kim 2015).

Hierarchical Text ClassificationAmong the included studies, only three of them focused on FS for hierarchical text classification. Naik and Rangwala (2016) investigate various filter-based FS methods for dimensionality reduction to solve the large-scale hierarchical classification problem. Lifang et al. (2017) propose a hierarchical FS method using Kullback-Leibler divergence to measure the correlation between the class and subclasses, and using MI to calculate the correlation between each feature and subclass. Song et al. (2016) propose a FS method based on category distinction and feature position information for Chinese text classification. This is the only included study in our review that deals with the issue of considering the position of words during the FS process.

Ordinal Text Classification Among the included studies, only two focused on FS for ordinal text classification. Baccianella et al. (2013) evaluate the use of micro-documents in ordinal classification. They logically break down each training document of length k into k training “micro-documents”. The purpose of the use of micro-documents was explained earlier in this section. Baccianella et al. (2014) propose four novel FS metrics that have been specifically devised for ordinal classification and test them on two datasets of product review data.

5.4 Categorization by labeled data dependence

According to the Encyclopedia of Machine Learning (Sammut and Webb 2010), supervised learning refers to any machine learning process that learns a function from an input type to an output type using data comprising examples that have both input and output values. The same Encyclopedia, define unsupervised learning to any machine learning process that seeks to learn structure in the absence of either an identified output and semi-supervised learning to any machine learning process that uses both labeled and unlabeled data to perform an otherwise supervised learning or unsupervised learning task. Labeled data are data for which each object has an identified target value, the label (Sammut and Webb 2010).

Like learning methods (such as classification and regression), FS methods can also be classified into supervised, unsupervised, and semi-supervised according to their dependence on labeled data. FS methods that need labeled data can be classified as supervised method. On the other hand, FS methods that don’t need labeled data can be classified as an unsupervised FS methods. Finally, FS methods that work with both labeled and unlabeled data are classified as semi-supervised.

Supervised Methods Most FS studies for text classification propose supervised methods. Considering the 175 studies included in this review, 166 (94.86% of total) are based on supervised methods. Supervised methods are mostly methods that measure the relevance of features alone or in subsets of features based on a labeled training set. Table 6 present all included studies grouped by labeled data dependence and year of publication.

Unsupervised Methods Considering the 175 studies included in this review, only four (2.29% of total) are based on unsupervised methods. In these works, three different unsupervised techniques were used:

-

Term Frequency-Inverse Document Frequency (TF-IDF) and Glasgow expressions—Manochandar and Punniyamoorthy (2018) propose two modifications to the traditional TFIDF and Glasgow expressions using graphical representations to reduce the size of the feature set.

-

Word Co-occurrence Matrix—Wang et al. (2016) propose an unsupervised FS algorithm through Random Projection and Gram-Schmidt Orthogonalization (RP-GSO) from the word co-occurrence matrix.

-

Word Embedding—Rui et al. (2016) propose an unsupervised FS method that utilizes Word Embedding to find groups of words with similar semantic meaning. Word Embedding maps the words into vectors and remains the semantic relationships between words. After mapping the similar semantic groups, the method maintains the most representative word on behalf of the words with similar semantic meaning. Lampos et al. (2017) propose an unsupervised FS method that uses Neural Word Embeddings, trained on social media content from Twitter, to determine how strongly textual features are semantically linked to an underlying health concept.

Semi-Supervised MethodsSemi-supervised learning uses both labeled and unlabeled data to perform an otherwise supervised learning or unsupervised learning task (Sammut and Webb 2010). Considering the 175 studies included in this review, only five (2.86% of total) studies are based on semi-supervised methods:

6 Experiment settings analysis

The studies included in this review use different combinations of experiment settings, such as different datasets, classification algorithms, and performance metrics. Due to a large number of studies and the consequently large amount of experiment’s settings used, define the ideal setting for a new experiment can be very challenging.

The aim of this section is mapping and summarizing the settings of the experiments that are being used to analyze and compare FS methods for text categorization (Research Question 3). We focus on analyzing the following settings:

-

What text representation are being used? (Sect. 6.1)

-

What public datasets, language of text corpora in datasets, and dataset domains are being used to evaluate the methods? (Sect. 6.2)

-

What classifier algorithms are being used to evaluate the effectiveness of FS methods? (Section 6.3)

-

Which validation settings are the most used? (Sect. 6.4)

We aim to help the design of new researches by providing a summary of which experiment settings are being used. Additionally, we have identified which settings are desirable and are underutilized.

6.1 Text representation used in experiments

Textual data can be represented in different formats for text classification. In Sect. 2, we present the widely used N-gram and Word Embedding representation models. Considering the works included in this review, Table 7 shows that 88.57% of the methods were evaluated using exclusively Bag of Words (BoW) (uni-gram). Among the remaining works, no other mode of representation was found to be prevalent. It is interesting to note that eight studies used the combination of different representations, two of which combining BoW and Word Embedding.

6.2 Datasets used in experiments

The primary way to evaluate the effectiveness/efficiency of a FS method is training and measuring the performance of a classifier using the FS method. In this section, we indicate which public datasets are most commonly used in FS studies for text classification. We also map the most frequently used languages and domains.

Public Datasets Most of the papers included in this review used public datasets to evaluate the proposed methods. Few studies have used private or specifically collected datasets. The use of public datasets is recommended because it facilitates the comparison of methods. Table 8 presents the most used public datasets. As our review mapped a considerable number of studies and each one can use several different datasets, a list of all datasets would be very long and would mostly include datasets that were used by a single study. For this reason, we focus on mapping and presenting in Table 8 only the datasets that are public and that were used by at least two studies mapped in our review.

Language of Text Corpora in Datasets The majority of the papers included in this review (72.57%) used only English text corpora to evaluated their FS methods. The second most used language is Chinese (26 studies). The third language is Arabic (seven studies). Only four studies perform experiments using two different languages (English and Chinese). Wang and Hong (2019) were the only ones who used three different datasets languages (English, Turkish and Kurdish Sorani) in the same study. Table 9 presents the languages with at least two studies utilizing that language in their datasets.

The following languages were considered by only one study: Serbian (Mladenović et al. 2016), Hinglish (Ravi and Ravi 2016), Indian (Trivedi and Tripathi 2017), Tibetan (Jiang and Yu 2015), Vietnamese (Hai et al. 2015), Japanese (Fukumoto and Suzuki 2015), Russian (Vychegzhanin et al. 2019), Idonesian (Somantri et al. 2019), Italian (Ferilli et al. 2015), and Malay (Alshalabi et al. 2013).

6.3 Classification algorithms used in experiments

The studies included in this review propose new or improved FS methods for text classification. To evaluate the performance of the proposed method, the authors perform the classification task using one or more classification algorithms. The choice of the classification algorithms for the experiment directly impacts the classification result and, therefore, the evaluation of the proposed method. Table 10 and Fig. 7 present the most used classification algorithms in studies experiments.

The most used algorithms are NB and SVM because they are recognized as having good results in the task of classifying texts (Agnihotri et al. 2017b). Table 11 presents the distribution of studies by the number of algorithms used.

6.4 Validation settings used in experiments

When designing a new experiment, the scientists must clearly define the validation method and whether any statistical tests will be performed to refute or not their hypotheses. This section presents the main evaluation settings used in studies included in this review.

Validation Method To evaluate the proposed method, classification algorithms need to be trained and tested using different datasets. This is usually done by:

-

Performing k-fold cross-validation. In cross-validation, the data is partitioned into k subsets, called folds. The learning algorithm is then applied k times, each time one different fold is selected as the test set, and the remaining are used as the training set (Sammut and Webb 2010).

-

Splitting the dataset into two different sets (training and test sets). Some studies use the standard split between training and testing available in some public datasets. Other studies define their criteria for this division. The most common is the division based on predefined percentages. However, some studies perform division based on other criteria, such as time division or varying the size of each set within a predefined range.

Approximately half (44.00%) of the studies covered in this review were cross-validated and the other half (43.43%) used different sets of training and testing. Table 12 and Fig. 8 present the validation methods used.

Statistical Significance Test The machine learning community has become increasingly aware of the need for statistical validation of the published results (Demšar 2006). Studies covered in this survey usually evaluate the efficacy of the proposed methods by comparing the proposed solution to other FS methods. The purpose of the comparison is to verify whether the use of the proposed method increases the accuracy/precision/coverage of the classification activity in contrast to the other FS methods. Although practically all studies performed comparisons to demonstrate an improvement in classification performance, we identified that only 29.71% of them used some statistical method to confirm the statistical significance of the results. Table 13 shows which statistical methods have been used for this purpose.

7 Research trends and discussion

Based on the analysis of the problems, methods, and experiment settings raised in this review, we found relevant research trends and discussion points. In this section, we detail these research trends presenting our view on each of them. Sections 7.1– 7.4 present research trends and discussions based on each perspective of categorization model that we propose in Sect. 5. Sections 7.5 to 7.7 present research trends and discussions about experiment settings mapped in Sect. 6.

7.1 Filter has been the feature selection dominant strategy for text classification, but a change is coming

In Sect. 5.1, we identified that most studies about FS for text classification implement the filter strategy. We found three main reasons for this preference for filter strategy (Kumar 2014; Tang et al. 2014):

-

Simplicity—Filter-based methods usually have simpler design and development than wrapper and embedded methods.

-

Classifier independence—In filter strategy, the result of FS is not biased by choice of classifiers.

-

Computationally efficient—Filter-based methods are efficient and fast to compute. This advantage becomes even more important for text classification and other problems with high-dimensional data.

Despite filtering be the widely used strategy, we found that the percentage of studies based on filter strategy is decreasing, and the rate of studies based on wrapper strategy is increasing as shown in Table 14 and Fig. 9. The columns about Filter Strategy encompass the Two-Step (Filter+Filter) studies and the columns about Wrapper Strategy cover Hybrid (Filter+Wrapper) studies. We believe that the percentage of studies using other strategies (wrapper, embedded, and hybrid) will continues to increase. We see the following reasons for this increase:

-

Large volume of published studies using the filter strategy – Since the volume of work using the filter strategy is large, we believe that the margin for improvement of results using this strategy is reduced. Therefore, we see that researchers tend to explore other strategies to pursue better results.

-

Evolution of computing power and cost – The increase in processing power and computational cost reduction facilitates research techniques that are more computationally intensive, such as wrappers methods. In other words, the computational efficiency of the filter strategy tends to become a less important factor, as the available computing power increases.

7.2 Metaheuristic approach is the trend

In Sect. 5.2, we identified that most studies about FS for text classification are mainly based on the statistic-based approach. However, we have analyzed the evolution of approaches used by grouping them by publication year (Fig. 10), and we noticed that the number of studies based on statistical approaches has been decreasing since 2016. On the same graph, we can see a gradual increase in the number of studies based on metaheuristics from 2015 to 2019. In 2016, 4 times more studies were published based on statistical approaches compared to the number of studies based on metaheuristics in the same year. On the other hand, almost the same number of studies were published in each approach during 2019. If this trend continues, in the coming years, the predominant approach will be metaheuristic.

We believe that the increasing use of the metaheuristic approach is motivated by the same factors then the use of the wrapper strategy (discussed in Sect. 7.1). Similarly, a considerable volume of studies is already available based on purely statistical approaches. In this way, researchers tend to have a smaller margin to achieve better results using the same approach. For this reason, they tend to explore more sophisticated approaches, such as metaheuristic techniques. The increasing use of the metaheuristic approach can also be directly related to the wrapper strategy’s greater use. Wrapper strategy is frequently employed to search for the best subset of features (as explained in Sect. 4). Since this subset search is a hard problem (as explained in Sect. 4.2), metaheuristic search techniques are usually the solution adopted.

In addition to the two predominant approaches (statistical and metaheuristic), semantic-based and machine learning-based approaches have also been used in a relevant number of studies. However, as it is possible to observe in the graph by year (Fig. 10), neither approach had a significant increase or decrease in the volume of studies per year. Approaches that have had few scattered studies over the years were not included in this chart. For example, we only found one study mainly based on the grammatical approach published in 2015 and three rule-based studies published in 2016, 2018, and 2019.

Considering the observations made in the previous paragraphs, we conclude that the metaheuristic approach tends to become prevalent in the coming years and the number of studies based mainly on a statistical approach tends to continue decreasing. We also believe that researchers will tend to combine two or more approaches in the same study to seek better results. This review focused on mapping the principal approach used in each study. A future work could be examining all secondary approaches used in each study and how they are being combined.

7.3 Multiclass classifiers are still dominant