Abstract

This paper presents a comprehensive survey on vision-based robotic grasping. We conclude three key tasks during vision-based robotic grasping, which are object localization, object pose estimation and grasp estimation. In detail, the object localization task contains object localization without classification, object detection and object instance segmentation. This task provides the regions of the target object in the input data. The object pose estimation task mainly refers to estimating the 6D object pose and includes correspondence-based methods, template-based methods and voting-based methods, which affords the generation of grasp poses for known objects. The grasp estimation task includes 2D planar grasp methods and 6DoF grasp methods, where the former is constrained to grasp from one direction. These three tasks could accomplish the robotic grasping with different combinations. Lots of object pose estimation methods need not object localization, and they conduct object localization and object pose estimation jointly. Lots of grasp estimation methods need not object localization and object pose estimation, and they conduct grasp estimation in an end-to-end manner. Both traditional methods and latest deep learning-based methods based on the RGB-D image inputs are reviewed elaborately in this survey. Related datasets and comparisons between state-of-the-art methods are summarized as well. In addition, challenges about vision-based robotic grasping and future directions in addressing these challenges are also pointed out.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

An intelligent robot is expected to perceive the environment and interact with it. Among the essential abilities, the ability to grasp is fundamental and significant in that it will bring enormous power to the society Sanchez et al. (2018). For example, industrial robots can accomplish the pick-and-place task which is laborious for human labors, and domestic robots are able to provide assistance to disabled or elder people in their daily grasping tasks. Endowing robots with the ability to perceive has been a long-standing goal in computer vision and robotics discipline.

As much as being highly significant, robotic grasping has long been researched. The robotic grasping system Kumra and Kanan (2017) is considered as being composed of the following sub-systems: the grasp detection system, the grasp planning system and the control system. Among them, the grasp detection system is the key entry point, as illustrated in Fig. 1. The grasp planning system and the control system are more relevant to the motion and automation discipline, and in this survey, we only concentrate on the grasp detection system.

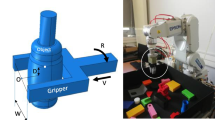

The robotic arm and the end effectors are essential components of the grasp detection system. Various 5-7 DoF robotic arms are produced to ensure enough flexibilities and they are equipped on the base or a human-like robot. Different kinds of end effectors, such as grippers and suction disks, can achieve the object picking task, as shown in Fig. 2. The majority of methods paid attentions on parallel grippers (Mahler et al. 2017), which is a relatively simple situation. With the struggle of academia, dexterous grippers (Liu et al. 2019; Fan and Tomizuka 2019; Akkaya et al. 2019) are researched to accomplish complex grasp tasks. In this paper, we only talk about grippers, since suction-based end effectors are relatively simple and limited in grasping complex objects. In addition, we concentrate on methods using parallel grippers, since this is the most widely researched.

The essential information to grasp the target object is the 6D gripper pose in the camera coordinate, which contains the 3D gripper position and the 3D gripper orientation to execute the grasp. The estimation of 6D gripper poses varies aiming at different grasp manners, which can be divided into the 2D planar grasp and the 6DoF grasp.

2D planar grasp means that the target object lies on a planar workspace and the grasp is constrained from one direction. In this case, the height of the gripper is fixed and the gripper direction is perpendicular to one plane. Therefore, the essential information is simplified from 6D to 3D, which are the 2D in-plane position and 1D rotation angle. In earlier years when the depth information is not easily captured, the 2D planar grasp is mostly researched. The mostly used scenario is to grasp machine components in the factory. The grasping contact points are evaluated whether they can afford the force closure Chen and Burdick (1993). With the development of deep learning, large number of methods treated oriented rectangles as the grasp configuration, which could be beneficial from the mature 2D detection frameworks. Since then, the capabilities of 2D planar grasp are enlarged extremely and the target objects to be grasped are extended from known objects to novel objects. Large amounts of methods by evaluating the oriented rectangles (Jiang et al. 2011; Lenz et al. 2015; Pinto and Gupta 2016; Mahler et al. 2017; Park and Chun 2018; Redmon and Angelova 2015; Zhang et al. 2017; Kumra and Kanan 2017; Chu et al. 2018; Park et al. 2018; Zhou et al. 2018) are proposed. Besides, some deep learning-based methods of evaluating grasp contact points (Zeng et al. 2018; Cai et al. 2019; Morrison et al. 2018) are also proposed in recent years.

6DoF grasp means that the gripper can grasp the object from various angles in the 3D space, and the essential 6D gripper pose could not be simplified. In early years, analytical methods were utilized to analyze the geometric structure of the 3D data, and the points suitable to grasp were found according to force closure. Sahbani et al. (2012) presented an overview of 3D object grasping algorithms, where most of them deal with complete shapes. With the development of sensor devices, such as Microsoft Kinect, Intel RealSense, etc, researchers can obtain the depth information of the target objects easily and modern grasp systems are equipped with RGB-D sensors, as shown in Fig. 3. The depth image can be easily lifted into 3D point cloud with the camera intrinsic parameters and the depth image-based 6DoF grasp becomes the hot research areas. Among 6DoF grasp methods, most of them aim at known objects where the grasps could be precomputed, and the problem is thus transformed into a 6D object pose estimation problem (Wang et al. 2019; Zhu et al. 2020; Yu et al. 2020; He et al. 2020). With the development of deep learning, lots of methods (ten Pas et al. 2017; Liang et al. 2019; Mousavian et al. 2019; Qin et al. 2020; Zhao and Nanning 2020) illustrated powerful capabilities in dealing with novel objects.

Both 2D planar grasp and 6DoF grasp contain common tasks which are object localization, object pose estimation and grasp estimation.

In order to compute the 6D gripper pose, the first thing to do is to locate the target object. Aiming at object localization, there exist three different situations, which are object localization without classification, object detection and object instance segmentation. Object localization without classification means obtaining the regions of the target object without classifying its category. There exist cases that the target object could be grasped without knowing its category. Object detection means detecting the regions of the target object and classifying its category. This affords the grasping of specific objects among multiple candidate objects. Object instance segmentation refers to detecting the pixel-level or point-level instance objects of a certain class. This provides delicate information for pose estimation and grasp estimation. Early methods assume that the object to grasp is placed in a clean environment with simple background and thus simplifies the object localization task, while in relatively complex environments their capabilities are quite limited. Traditional object detection methods utilized machine learning methods to train classifiers based on hand-crafted 2D descriptors. However, these classifiers show limited performance since the limitations of hand-crafted descriptors. With the deep learning, the 2D detection and 2D instance segmentation capabilities improves a lot, which affords object detection in more complex environments.

Most of the current robotic grasping methods aim at known objects, and estimating the object pose is the most accurate and simplest way to a successful grasp. There exist various methods in computing the 6D object poses, which varies from 2D inputs to 3D inputs, from traditional methods to deep learning methods, from textured objects to textureless or occluded objects. In this paper, we categorize these methods into correspondence-based methods, template-based methods and voting-based methods, where only feature points, the whole input and each meta unit are involved in computing the 6D object pose. Early methods tackled this problem in 3D domain by conducting partial registration. With the development of deep learning, methods using RGB image only can provide relatively high accurate 6D object poses, which highly improves the grasp capabilities.

Grasp estimation is conducted when we have the localized target object. Aiming at 2D planar grasp, the methods are divided into methods of evaluating the grasp contact points and methods of evaluating the oriented rectangles. Aiming at 6DoF grasp, the methods are categorized into methods based on the partial point cloud and methods based on the complete shape. Methods based on the partial point cloud mean that we do not have the identical 3D model of the target object. In this case, two kinds of methods exist which are methods of estimating grasp qualities of candidate grasps and methods of transferring grasps from existing ones. Methods based on complete shape means that the grasp estimation is conducted on a complete shape. When the target object is known, the 6D object pose could be computed. When the target shape is unknown, it can be reconstructed from single-view point clouds, and grasp estimation could be conducted on the reconstructed complete 3D shape. With the joint development of the above aspects, the kinds of objects that could be grasped, the robustness of the grasp and the affordable complexity of the grasp scenario all have improved a lot, which affords many more applications in industrial as well as domestic applications.

Aiming at these tasks mentioned above, there have been some works (Sahbani et al. 2012; Bohg et al. 2014; Caldera et al. 2018) concentrating on one or a few tasks, while there is still lack of a comprehensive introduction on these tasks. These tasks are reviewed elaborately in this paper, and a taxonomy of these tasks is shown in Fig. 4. To the best of our knowledge, this is the first review that broadly summarizes the progress and promises new directions in vision-based robotic grasping. We believe that this contribution will serve as an insightful reference to the robotic community.

The remainder of the paper is arranged as follows. Section 2 reviews the methods for object localization. Section 3 reviews the methods for 6D object pose estimation. Section 4 reviews the methods for grasp estimation. The related datasets, evaluation metrics and comparisons are also reviewed in each section. Finally, challenges and future directions are summarized in Sect. 5.

2 Object localization

Most of the robotic grasping approaches require the target object’s location in the input data first. This involves three different situations: object localization without classification, object detection and object instance segmentation. Object localization without classification only outputs the potential regions of the target objects without knowing their categories. Object detection provides bounding boxes of the target objects as well as their categories. Object instance segmentation further provides the pixel-level or point-level regions of the target objects along with their categories.

2.1 Object localization without classification

In this situation, the task is to find potential locations of the target object without knowing the category of the target object. There exist two cases: if you known the concrete shapes of the target object, you can fit primitives to obtain the locations. If you can not ensure the shapes of the target object, salient object detection(SOD) could be conducted to find the salient regions of the target object. Based on 2D or 3D inputs, the methods are summarized in Table 1.

2.1.1 2D localization without classification

This kind of methods deal with 2D image inputs, which are usually RGB images. According to whether the object’s contour shape is known or not, methods can be divided into methods of fitting shape primitives and methods of salient object detection. Typical functional flow-chart of 2D object localization without classification is illustrated in Fig. 5.

Fitting 2D shape primitives The shape of the target object could be an eclipse, a polygon or a rectangle, and these shapes could be regarded as shape primitives. Through fitting methods, the target object could be located. General procedures of this kind of methods usually contain enclosed contour extraction and primitive fitting. There exist many algorithms integrated in OpenCV Bradski and Kaehler (2008) for primitives fitting, such as fitting ellipse Fitzgibbon and Fisher (1996) and fitting polygons Douglas and Peucker (1973). This kind of methods are usually used in 2D planar robotic grasping tasks, where the object are viewed from a fixed angle, and the target object are constrained with some known shapes.

2D salient object detection Compared with shape primitives, salient object regions could be represented in arbitrary shapes. 2D salient object detection(SOD) aims to locate and segment the most visually distinctive object regions in a given image, which is more like a segmentation task without object classification. Non-deep learning SOD methods exploit low-level feature representations (Jiang et al. 2013; Zhu et al. 2014; Peng et al. 2016) or rely on certain heuristics such as color contrast Cheng et al. (2014), background prior Wei et al. (2012). Some other methods conduct an over-segmentation process that generates regions Shi et al. (2015), super-pixels (Yang et al. 2013; Wang et al. 2016), or object proposals Guo et al. (2017) to assist the above methods.

Deep learning-based SOD methods have shown superior performance over traditional solutions since 2015. Generally, they can be divided into three main categories, which are Multi-Layer Perceptron (MLP)-based methods, Fully Convolutional Network (FCN)-based methods and Capsule-based methods. MLP-based methods typically extract deep features for each processing unit of an image to train an MLP-classifier for saliency score prediction. Zhao et al. (2015) proposed a unified multi-context deep learning framework which involves global context and local context, which are fed into an MLP for foreground/background classification to model saliency of objects in images. Zhang et al. (2016) proposed a salient object detection system which outputs compact detection windows for unconstrained images, and a maximum a posteriori (MAP)-based subset optimization formulation for filtering bounding box proposals. The MLP-based SOD methods cannot capture well critical spatial information and are time-consuming. Inspired by Fully Convolutional Network (FCN) Long et al. (2015), lots of methods directly output whole saliency maps. Liu and Han (2016) proposed an end-to-end saliency detection model called DHSNet, which can simultaneously refine the coarse saliency map. Hou et al. (2017) introduced short connections to the skip-layer structures, which provides rich multi-scale feature maps at each layer. Liu et al. (2018) proposed a pixel-wise contextual attention network called PiCANet, which generates an attention map for each pixel and each attention weight corresponds to the contextual relevance at each context location. With the raise of Capsule Network (Hinton et al. 2011; Sabour et al. 2017, 2018), some capsule-based methods are proposed. Liu et al. (2019) incorporated the part-object relationships in salient object detection, which is implemented by the Capsule Network. Qi et al. (2019) proposed CapSalNet, which includes a multi-scale capsule attention module and multi-crossed layer connections for salient object detection. Readers could refer to some surveys (Borji et al. 2019; Wang et al. 2019) for comprehensive understandings of 2D salient object detection.

Discussions The 2D object localization without classification are widely used in robotic grasping tasks but in a junior level. During industrial scenarios, the mechanical components are usually with fixed shapes, and many of them could be localized through fitting shape primitives. In some other grasping scenarios, the background priors or color contract is utilized to obtain the salient object for grasping. In Dexnet 2.0 Mahler et al. (2017), the target objects are laid on a workspace with green color, and they are easily segmented using color background subtraction.

2.1.2 3D localization without classification

This kind of methods deal with 3D point cloud inputs, which are usually partial point clouds reconstructed from single-view depth images in robotic grasping tasks. According to whether the object’s 3D shape is known or not, methods can also be divided into methods of fitting 3D shape primitives and methods of salient 3D object detection. Typical functional flow-chart of 3D object localization without classification is illustrated in Fig. 6.

Fitting 3D shape primitives The shape of the target object could be a sphere, a cylinder or a box, and these shapes could be regarded as 3D shape primitives. There exist lots of methods aiming at fitting 3D shape primitives, such as RANdom SAmple Consensus (RANSAC) Fischler and Bolles (1981)-based methods, Hough-like voting methods Rabbani and Van Den Heuvel (2005) and other clustering techniques (Rusu et al. 2009; Goron et al. 2012). These methods deal with different kinds of inputs and have been applied in areas like modeling, rendering and animation. Aiming at object localization and robotic grasping tasks, the input data is a partial point cloud, where the object is incomplete, and the ambition is to find the points that can constitute one of the 3D shape primitives. Some methods (Jiang and Xiao 2013; Khan et al. 2015) detect planes at object boundaries and assemble them. Jiang and Xiao (2013) and Khan et al. (2015) explored the 3D structures in an indoor scene and estimated their geometry using cuboids. Rabbani and Van Den Heuvel (2005) presented an efficient Hough transform for automatic detection of cylinders in point clouds. Some methods (Rusu et al. 2009; Goron et al. 2012) conduct primitive fitting after segmenting the scene. Rusu et al. (2009) used a combination of robust shape primitive models with triangular meshes to create a hybrid shape-surface representation optimal for robotic grasping. Goron et al. (2012) presented a method to locate the best parameters for cylindrical and box-like objects in a cluttered scene. They increased the robustness of RANSAC fits when dealing with clutter through employing a set of inlier filters and the use of Hough voting. They provided robust results and models that are relevant for grasp estimation. Readers could refer to the survey Kaiser et al. (2019) for more details.

3D salient object detection Compared with 2D salient object detection, 3D salient object detection consumes many kinds of 3D data, such as depth image and point cloud. Although above 2D salient object detection methods have achieved superior performance, they still remain challenging in some complex scenarios, where depth information could provide much assistance. RGB-D saliency detection methods usually utilize hand-crafted or deep learning-based features from RGB-D images and fuse them in different ways. Peng et al. (2014) proposed a simple fusion strategy which extends RGB-based saliency models by incorporating depth-induced saliency. Ren et al. (2015) exploited the normalized depth prior and the global-context surface orientation prior for salient object detection. Qu et al. (2017) trained a CNN-based model which fuses different low level saliency cues into hierarchical features for detecting salient objects in RGB-D images. Chen et al. (Chen et al. 2019; Chen and Li 2019) utilized two-stream CNNs-based models with different fusion structures. Chen and Li (2018) further proposed a progressively complementarity-aware fusion network for RGB-D salient object detection, which is more effective than early-fusion methods Hou et al. (2017) and late-fusion methods Han et al. (2018). Piao et al. (2019) proposed a depth-induced multi-scale recurrent attention network (DMRANet) for saliency detection, which achieves dramatic performance especially in complex scenarios. Pang et al. (2020) proposed a hierarchical dynamic filtering network (HDFNet) and a hybrid enhanced loss. Li et al. (2020) proposed a Cross-Modal Weighting (CMW) strategy to encourage comprehensive interactions between RGB and depth channels. These methods demonstrate remarkable performance of RGB-D SOD.

Aiming at 3D point cloud input, lots of methods are proposed to detect saliency maps of a complete object model Zheng et al. (2019), whereas, our ambitious is to locate the salient object from the 3D scene inputs. Kim et al. (2008) described a segmentation method for extracting salient regions in outdoor scenes using both 3D point clouds and RGB image. Bhatia and Chalup (2013) proposed a top-down approach for extracting salient objects/regions in 3d point clouds of indoor scenes.They first segregates significant planar regions, and extracts isolated objects present in the residual point cloud. Each object is then ranked for saliency based on higher curvature complexity of the silhouette.

Discussions 3D object localization is widely used in robotic grasping tasks but also in a junior level. In Rusu et al. (2009) and Goron et al. (2012), fitting 3D shape primitives has been successfully applied into robotic grasping tasks. In Zapata-Impata et al. (2019), the background is first filtered out using the height constraint, and the table is filtered out by fitting a plane using RANSAC Fischler and Bolles (1981). The remained point cloud is clustered and K object’s clouds are achieved finally. There also exist some other ways to remove the background points through fitting background points using existing full 3D point cloud. These methods are successfully applied into robotic grasping tasks.

2.2 Object detection

The task of object detection is to detect instances of objects of a certain class, which can be treated as a localization task plus a classification task. Usually, the shapes of the target objects are unknown, and accurate salient regions are hardly achieved. Therefore, the regularly bounding boxes are used for general object localization and classification tasks, and the outputs of object detection are bounding boxes with class labels. Based on whether using region proposals or not, the methods can be divided into two-stage methods and one-stage methods. These methods are summarized respectively in Table 2 aiming at 2D or 3D inputs.

2.2.1 2D object detection

2D object detection means detecting the target objects in 2D images by computing their 2D bounding boxes and categories. The most popular way of 2D detection is to generate object proposals and conduct classification, which is the two-stage methods. With the development of deep learning networks, especially Convolutional Neural Network (CNN), two-stage methods are improved extremely. In addition, large number of one-stage methods are proposed which achieved high accuracies with high speed. Typical functional flow-chart of 2D object detection is illustrated in Fig. 7.

Two-stage methods The two-stage methods can be referred as region proposal-based methods. Most of the traditional methods utilize the sliding window strategy to obtain the bounding boxes first, and then utilize feature descriptions of the bounding boxes for classification. Large number of hand-crafted global descriptors and local descriptors are proposed, such as SIFT Lowe (1999), FAST Rosten and Drummond (2005), SURF Bay et al. (2006), ORB Rublee et al. (2011), and so on. Based on these descriptors, researchers trained classifiers, such as neural networks, Support Vector Machine (SVM) or Adaboost, to conduct 2D detection. There exist some disadvantages of traditional detection methods. For example, the sliding windows should be predefined for specific objects, and the hand-crafted features are not representative enough for a strong classifier.

With the development of deep learning, region proposals could be computed with a deep neural network. OverFeat Sermanet et al. (2013) trained a fully connected layer to predict the box coordinates for the localization task that assumes a single object. Erhan et al. (2014) and Szegedy et al. (2014) generated region proposals from a network whose last fully connected layer simultaneously predicts multiple boxes. Besides, deep neural networks extract more representative features than hand-crafted features, and training classifiers using CNN Krizhevsky et al. (2012) features highly improved the performance. R-CNN Girshick et al. (2014) uses Selective Search (SS) Uijlings et al. (2013) methods to generate region proposals, uses CNN to extract features and trains classifiers using SVM. This traditional classifier is replaced by directly regressing the bounding boxes using the Region of Interest (ROI) feature vector in Fast R-CNN Girshick (2015). Faster R-CNN Ren et al. (2015) is further proposed by replacing SS with the Region Proposal Network (RPN), which is a kind of fully convolutional network (FCN) Long et al. (2015) and can be trained end-to-end specifically for the task of generating detection proposals. This design is also adopted in other two-stage methods, such as R-FCN Dai et al. (2016), FPN Lin et al. (2017). Generally, two-stage methods achieve a higher accuracy, whereas need more computing resources or computing time.

One-stage methods The one-stage methods can also be referred as regression-based methods. Compared to two-stage approaches, the single-stage pipeline skips separate object proposal generation and predicts bounding boxes and class scores in one evaluation. YOLO Redmon et al. (2016) conducts joint grid regression, which simultaneously predicts multiple bounding boxes and class probabilities for those boxes. YOLO is not suitable for small objects, since it only regress two bounding boxes for each grid. SSD Liu et al. (2016) predicts category scores and box offsets for a fixed set of anchor boxes produced by the sliding window. Compared with YOLO, SSD is faster and much more accurate. YOLOv2 Redmon and Farhadi (2017) also adopts sliding window anchors for classification and spatial location prediction so as to achieve a higher recall than YOLO. RetinaNet Lin et al. (2017) proposed the focal loss function by reshaping the standard cross entropy loss so that detector will put more focus on hard, misclassified examples during training. RetinaNet achieved comparable accuracy of two-stage detectors with high detection speed. Compare with YOLOv2, YOLOv3 Redmon and Farhadi (2018) and YOLOv4 Bochkovskiy et al. (2020) are further improved with a bunch of improvements, which shows large performance improvements without sacrificing the speed, and is more robust in dealing with small objects. There also exist some anchor-free methods, which doesn’t utilize the anchor bounding boxes, such as FCOS Tian et al. (2019), CornerNet Law and Deng (2018), ExtremeNet Zhou et al. (2019), CenterNet Zhou et al. (2019); Duan et al. (2019) and CentripetalNet Dong et al. (2020). Further reviews of these works can refer to recent surveys (Zou et al. 2019; Zhao et al. 2019; Liu et al. 2020; Sultana et al. 2020).

Discussions The 2D object detection methods are widely used in 2D planar robotic grasping tasks. This part can refer to Sect. 4.1.2.

2.2.2 3D object detection

3D object detection aims at finding the amodel 3D bounding box of the target object, which means finding the 3D bounding box that a complete target object occupies. 3D object detection is deeply explored in outdoor scenes and indoor scenes. Aiming at robotic grasping tasks, we can obtain the 2D and 3D information of the scene through RGB-D data, and general 3D object detection methods could be used. Similar with 2D object detection tasks, two-stage methods and one-stage methods both exist. The two-stage methods refer to region proposal-based methods and one-stage methods refer to regression-based methods. Typical functional flow-chart of 3D object detection is illustrated in Fig. 8.

Two-stage methods Traditional 3D detection methods usually aim at objects with known shapes. The 3D object detection problem is transformed into a detection and 6D object pose estimation problem. Many hand-crafted 3D shape descriptors, such as Spin Images Johnson (1997), 3D Shape Context Frome et al. (2004), FPFH Rusu et al. (2009), CVFH Aldoma et al. (2011), SHOT Salti et al. (2014), are proposed, which can locate the object proposals. In addition, the accurate 6D pose of the target object could be achieved through local registration. This part is introduced in Sect. 3.1.2. However, these methods face difficulties in general 3D object detection tasks. Aiming at general 3D object detection tasks, the 3D region proposals are widely used. Traditional methods train classifiers, such as SVM, based on the 3D shape descriptors. Sliding Shapes Song and Xiao (2014) is proposed which slides a 3D detection window in 3D space and extract features from the 3D point cloud to train an Exemplar-SVM classifier Malisiewicz et al. (2011). With the development of deep learning, the 3D region proposals could be generated efficiently, and the 3D bounding boxes could be regressed using features from deep neural networks rather than training traditional classifiers. There exist various methods of generating 3D object proposals, which can be roughly divided into three kinds, which are frustum-based methods (Qi et al. 2018; Xu et al. 2018; Wang and Jia 2019), global regression-based methods (Song and Xiao 2016; Chen et al. 2017; Liang et al. 2019) and local regression-based methods.

Frustum-based methods generate object proposals using mature 2D object detectors, which is a straightforward way. Frustum PointNets Qi et al. (2018) leverages a 2D CNN object detector to obtain 2D regions, and the lifted frustum-like 3D point clouds become 3D region proposals. The amodel 3D bounding boxes are regressed from features of the segmented points within the proposals based on PointNet Qi et al. (2017). PointFusion Xu et al. (2018) utilized Faster R-CNN Ren et al. (2015) to obtain the image crop first, and deep features from the corresponding image and the raw point cloud are densely fused to regress the 3D bounding boxes. FrustumConvNet Wang and Jia (2019) also utilizes the 3D region proposals lifted from the 2D region proposal and generates a sequence of frustums for each region proposal.

Global regression-based methods generate 3D region proposals from feature representations extracted from single or multiple inputs. Deep Sliding Shapes Song and Xiao (2016) proposed the first 3D Region Proposal Network (RPN) using 3D convolutional neural networks (ConvNets) and the first joint Object Recognition Network (ORN) to extract geometric features in 3D and color features in 2D to regress 3D bounding boxes. MV3D Chen et al. (2017) represents the point cloud using the bird’s-eye view and employs 2D convolutions to generate 3D proposals. The region-wise features obtained via ROI pooling for multi-view data are fused to jointly predict the 3D bounding boxes. MMF Liang et al. (2019) proposed a multi-task multi-sensor fusion model for 2D and 3D object detection, which generates a small number of high-quality 3D detections using multi-sensor fused features, and applies ROI feature fusion to regress more accurate 2D and 3D boxes. Part-\(\hbox {A}^{2}\) Shi et al. (2020) predicts intra-object part locations and generates 3D proposals by feeding the point cloud to an encoder-decoder network. A RoI-aware point cloud pooling is proposed to aggregate the part information from each 3D proposal, and a part-aggregation network is proposed to refine the results. PV-RCNN Shi et al. (2020) utilized voxel CNN with 3D sparse convolution (Graham and van der Maaten 2017; Graham et al. 2018) for feature encoding and proposals generation, and proposed a voxel-to-keypoint scene encoding via voxel set abstraction and a keypoint-to-grid RoI feature abstraction for proposal refinement. PV-RCNN achieved remarkable 3D detection performance on outdoor scene datasets.

Local regression-based methods mean generating point-wise 3D region proposals. PointRCNN Shi et al. (2019) extracts point-wise feature vectors from the input point cloud and generates 3D proposal from each foreground point computed through segmentation. Point cloud region pooling and canonical 3D bounding box refinement are then conducted. STD Yang et al. (2019) designs spherical anchors and a strategy in assigning labels to anchors to generate accurate point-based proposals, and a PointsPool layer is proposed to generate dense proposal features for the final box prediction. VoteNet Qi et al. (2019) proposed a deep hough voting strategy to generate 3D vote points from sampled 3D seeds points. The 3D vote points are clustered to obtain object proposals which will be further refined. MLCVNet Xie et al. (2020) proposed Multi-level Context VoteNet which considers the contextual information between the objects. H3DNet Zhang et al. (2020) predicts a hybrid set of geometric primitives such as centers, face centers and edge centers of the 3d bounding boxes, and formulates 3D object detection as regressing and aggregating these geometric primitives. A matching and refinement module is then utilized to classify object proposals and fine-tune the results. Compared with point cloud input-only VoteNet Qi et al. (2019), ImVoteNet Qi et al. (2020) additionally extracts geometric and semantic features from the 2D images, and fuses the 2D features into the 3D detection pipeline, which achieved remarkable 3D detection performance on indoor scene datasets.

One-stage methods One-stage methods directly predict class probabilities and regress the 3D amodal bounding boxes of the objects using a single-stage network. These methods do not need region proposal generation or post-processing. VoxelNet Zhou and Tuzel (2018) divides a point cloud into equally spaced 3D voxels and transforms a group of points within each voxel into a unified feature representation. Through convolutional middle layers and the region proposal network, the final results are obtained. Compared with VoxelNet, SECOND Yan et al. (2018) applies sparse convolution layers Graham et al. (2018) for parsing the compact voxel features. PointPillars Lang et al. (2019) converts a point cloud to a sparse pseudo-image, which is processed into a high-level representation through a 2D convolutional backbone. The features from the backbone are used by the detection head to predict 3D bounding boxes for objects. TANet Liu et al. (2020) proposed a Triple Attention (TA) module and a Coarse-to-Fine Regression (CFR) module, which focuses on the 3D detection of hard objects and the robustness to noisy points. HVNet Ye et al. (2020) proposed a hybrid voxel network which fuses voxel feature encoder (VFE) of different scales at point-wise level and projects into multiple pseudo-image feature maps. Above methods are mainly voxel-based 3D single stage detectors, and Yang et al. (2020) proposed a point-based 3D single stage object detector called 3DSSD, which contain a fusion sampling strategy in the downsampling process, a candidate generation layer, and an anchor-free regression head with a 3D center-ness assignment strategy. They achieved a good balance between accuracy and efficiency. Point-GNN Shi and Rajkumar (2020) utilized graph neural network on the point cloud and designed a graph neural network with an auto-registration mechanism which detects multiple objects in a single shot. DOPS Najibi et al. (2020) proposed an object detection pipeline which utilizes a 3D sparse U-Net Graham and van der Maaten (2017) and a graph convolution module. Their method can jointly predict the 3D shapes of the objects. Associate-3Ddet Du et al. (2020) learns to associate feature extracted from the real scene with more discriminative feature from class-wise conceptual models. Comprehensive review about 3D object detection could refer to the survey Guo et al. (2019).

Discussions 3D object detection only presents the general shape of the target object, which is not sufficient to conduct a robotic grasp, and it is mostly used in autonomous driving areas. However, the estimated 3D bounding boxes could provide approximate grasp positions and provide valuable information for the collision detection.

2.3 Object instance segmentation

Object instance segmentation refers to detecting the pixel-level or point-level instance objects of a certain class, which is closely related to object detection and semantic segmentation tasks. Two kinds of methods also exist, which are two-stage methods and one-stage methods. The two-stage methods refer to region proposal-based methods and one-stage methods refer to regression-based methods. The representative works of the two methods are shown in Table 3 aiming at 2D inputs and 3D inputs.

2.3.1 2D object instance segmentation

2D object instance segmentation means detecting the pixel-level instance objects of a certain class from an input image, which is usually represented as masks. Two-stage methods follow the mature object detection frameworks, while one-stage methods conduct regression from the whole input image directly. Typical functional flow-chart of 2D object instance segmentation is illustrated in Fig. 9.

Two-stage methods This kind of methods could also be referred as region proposal-based methods. The mature 2D object detectors are used to generate bounding boxes or region proposals, and the object masks are then predicted within the bounding boxes. Lots of methods are based on convolutional neural networks (CNN). SDS Hariharan et al. (2014) uses CNN to classify category-independent region proposals. MNC Dai et al. (2016) conducts instance segmentation via three networks, respectively differentiating instances, estimating masks, and categorizing objects. Path Aggregation Network (PANet) Liu et al. (2018) was proposed which boosts the information flow in the proposal-based instance segmentation framework. Mask R-CNN He et al. (2017) extends Faster R-CNN Ren et al. (2015) by adding a branch for predicting an object mask in parallel with the existing branch for bounding box recognition, which achieved promising results. MaskLab Chen et al. (2018) also builds on top of Faster R-CNN Ren et al. (2015) and additionally produces semantic and instance center direction outputs. Chen et al. (2019) proposed a framework called Hybrid Task Cascade (HTC), which performs cascaded refinement on object detection and segmentation jointly and adopts a fully convolutional branch to provide spatial context. PointRend Kirillov et al. (2020) performs point-based segmentation predictions at adaptively selected locations based on an iterative subdivision algorithm. PointRend can be flexibly applied to instance segmentation tasks by building on top of them, and yields significantly more detailed results. FGN Fan et al. (2020) proposed a Fully Guided Network (FGN) for few-shot instance segmentation, which introduces different guidance mechanisms into the various key components in Mask R-CNN He et al. (2017).

Single-stage methods This kind of methods could also be referred as regression-based methods, where the segmentation masks are predicted as well the objectness score. DeepMask Pinheiro et al. (2015), SharpMask Pinheiro et al. (2016) and InstanceFCN Dai et al. (2016) predict segmentation masks for the the object located at the center. FCIS Li et al. (2017) was proposed as the fully convolutional instance-aware semantic segmentation method, where position-sensitive inside/outside score maps are used to perform object segmentation and detection. TensorMask Chen et al. (2019) uses structured 4D tensors to represent masks over a spatial domain and presents a framework to predict dense masks. YOLACT Bolya et al. (2019) breaks instance segmentation into two parallel subtasks, which are generating a set of prototype masks and predicting per-instance mask coefficients. YOLACT is the first real-time one-stage instance segmentation method and is improved by YOLACT++ Bolya et al. (2019). PolarMask Xie et al. (2020) formulates the instance segmentation problem as predicting contour of instance through instance center classification and dense distance regression in a polar coordinate. SOLO Wang et al. (2019) introduces the notion of instance categories, which assigns categories to each pixel within an instance according to the instance’s location and size, and converts instance mask segmentation into a classification-solvable problem. CenterMask Lee and Park (2020) adds a novel spatial attention-guided mask (SAG-Mask) branch to anchor-free one stage object detector (FCOS Tian et al. (2019)) in the same vein with Mask R-CNN He et al. (2017). BlendMask Chen et al. (2020) also builds upon the FCOS Tian et al. (2019) object detector, which uses a blender module to effectively predict dense per-pixel position-sensitive instance features and learn attention maps for each instance. Detailed reviews refer to the survey (Minaee et al. 2020; Sultana et al. 2020; Hafiz and Bhat 2020).

Discussions 2D object instance segmentation is widely used in robotic grasping tasks. For example, SegICP Wong et al. (2017) utilize RGB-based object segmentation to obtain the points belong to the target objects. Xie et al. (2020) separately leverage RGB and Depth for unseen object instance segmentation. Danielczuk et al. (2019) segments unknown 3d objects from real depth images using Mask R-CNN He et al. (2017) trained on synthetic data.

2.3.2 3D object instance segmentation

3D object instance segmentation means detecting the point-level instance objects of a certain class from an input 3D point cloud. Similar to 2D object instance segmentation, two-stage methods need region proposals, while one-stage methods are proposal-free. Typical functional flow-chart of 3D object instance segmentation is illustrated in Fig. 10.

Two-stage methods This kind of methods could also be referred as proposal-based methods. General methods utilize the 2D or 3D detection results and conduct foreground or background segmentation in the corresponding frustum or bounding boxes. GSPN Yi et al. (2019) proposed the Generative Shape Proposal Network (GSPN) to generates 3D object proposals and the Region-PointNet framework to conduct 3D object instance segmentation. 3D-SIS Hou et al. (2019) leverages joint 2D and 3D end-to-end feature learning on both geometry and RGB input for 3D object bounding box detection and semantic instance segmentation. 3D-MPA Engelmann et al. (2020) predicts dense object centers based on learned semantic features from a sparse volumetric backbone, employes a graph convolutional network to explicitly model higher-order interactions between neighboring proposal features, and utilizes a multi proposal aggregation strategy other than NMS to obtain the final results.

Single-stage methods This kind of methods could also be referred as regression-based methods. Lots of methods learn to group per-point features to segment 3D instances. SGPN Wang et al. (2018) proposed the Similarity Group Proposal Network (SGPN) to predict point grouping proposals and a corresponding semantic class for each proposal, from which we can directly extract instance segmentation results. MASC Liu and Furukawa (2019) utilizes the sub-manifold sparse convolutions (Graham and van der Maaten 2017; Graham et al. 2018) to predict semantic scores for each point as well as the affinity between neighboring voxels at different scales. The points are then grouped into instances based on the predicted affinity and the mesh topology. ASIS Wang et al. (2019) learns semantic-aware point-level instance embedding and semantic features of the points belonging to the same instance are fused together to make per-point semantic predictions. JSIS3D Pham et al. (2019) proposed a multi-task point-wise network (MT-PNet) that simultaneously predicts the object categories of 3D points and embeds these 3D points into high dimensional feature vectors that allow clustering the points into object instances. JSNet Zhao and Tao (2020) also proposed a joint instance and semantic segmentation (JISS) module and designed an efficient point cloud feature fusion (PCFF) module to generate more discriminative features. 3D-BoNet Yang et al. (2019) was proposed to directly regress 3D bounding boxes for all instances in a point cloud, while simultaneously predicting a point-level mask for each instance. LiDARSeg Zhang et al. (2020) proposed a dense feature encoding technique, a solution for single-shot instance prediction and effective strategies for handling severe class imbalances. OccuSeg Han et al. (2020) proposed an occupancy-aware 3D instance segmentation scheme, which predicts the number of occupied voxels for each instance. The occupancy signal guides the clustering stage of 3D instance segmentation and OccuSeg achieves remarkable performance.

Discussions 3D object instance segmentation is quite important in robotic grasping tasks. However, current methods mainly leverage 2D instance segmentation methods to obtain the 3D point cloud of the target object, which utilizes the advantages of RGB-D images. Nowadays 3D object instance segmentation is still a fast developing area, and it will be widely used in the future if its performance and speed improve a lot.

3 Object pose estimation

In some 2D planar grasps, the target objects are constrained in the 2D workspace and are not piled up, the 6D object pose can be represented as the 2D position and the in-plane rotation angle. This case is relatively simple and is addressed quite well based on matching 2D feature points or 2D contour curves. In other 2D planar grasp and 6DoF grasp scenarios, the 6D object pose is mostly needed, which helps a robot to get aware of the 3D position and 3D orientation of the target object. The 6D object pose transforms the object from the object coordinate into the camera coordinate. We mainly focus on 6D object pose estimation in this section and divide 6D object pose estimation into three kinds, which are correspondence-based, template-based and voting-based methods. During the review of each kind of methods, both traditional methods and deep learning-based methods are reviewed.

3.1 Correspondence-based methods

Correspondence-based 6D object pose estimation involves methods of finding correspondences between the observed input data and the existing complete 3D object model. When we want to solve this problem based on the 2D RGB image, we need to find correspondences between 2D pixels and 3D points of the existing 3D model. The 6D object pose can thus be recovered through Perspective-n-Point (PnP) algorithms Lepetit et al. (2009). When we want to solve this problem based on the 3D point cloud lifted from the depth image, we need to find correspondences of 3D points between the observed partial-view point cloud and the complete 3D model. The 6D object pose can thus recovered through least square methods. The methods of correspondence-based methods are summarized in Table 4.

3.1.1 2D image-based methods

When using the 2D RGB image, correspondence-based methods mainly target on the objects with rich texture through the matching of 2D feature points, as shown in Fig. 11. Multiple images are first rendered by projecting the existing 3D models from various angles and each object pixel in the rendered images corresponds to a 3D point. Through matching 2D feature points on the observed image and the rendered images (Vacchetti et al. 2004; Lepetit et al. 2005), the 2D–3D correspondences are established. Other than rendered images, the keyframes in keyframe-based SLAM approaches Mur-Artal et al. (2015) could also provide 2D–3D correspondences for 2D keypoints. The common 2D descriptors such as SIFT Lowe (1999), FAST Rosten and Drummond (2005), SURF Bay et al. (2006), ORB Rublee et al. (2011), etc., are usually utilized for the 2D feature matching. Based on the 2D–3D correspondences, the 6D object pose can be calculated with Perspective-n-Point (PnP) algorithms Lepetit et al. (2009). However, these 2D feature-based methods fail when the objects do not have rich texture.

Typical functional flow-chart of 2D correspondence-based 6D object pose estimation methods. Data from the lineMod dataset Hinterstoisser et al. (2012)

With the development of deep neural networks such as CNN, representative features could be extracted from the image. A straightforward way is to extract discriminative feature points (Yi et al. 2016; Truong et al. 2019) and match them using the representative CNN features. Yi et al. (2016) presented a SIFT-like feature descriptor. Truong et al. (2019) presented a method to greedily learn accurate match points. Superpoint DeTone et al. (2018) proposed a self-supervised framework for training interest point detectors and descriptors, which shows advantages over a few traditional feature detectors and descriptors. LCD Pham et al. (2020) particularly learns a local cross-domain descriptor for 2D image and 3D point cloud matching, which contains a dual auto-encoder neural network that maps 2D and 3D inputs into a shared latent space representation.

There exists another kind of methods (Rad and Lepetit 2017; Tekin et al. 2018; Crivellaro et al. 2017; Hu et al. 2019), which uses the representative CNN features to predict the 2D locations of 3D points, as shown in Fig. 11. Since it’s difficult to selected the 3D points to be projected, many methods utilize the eight vertices of the object’s 3D bounding box. Rad and Lepetit (2017) predicts 2D projections of the corners of their 3D bounding boxes and obtains the 2D–3D correspondences. Different with them, Tekin et al. (2018) proposed a single-shot deep CNN architecture that directly detects the 2D projections of the 3D bounding box vertices without posteriori refinements. Some other methods utilize feature points of the 3D object. Crivellaro et al. (2017) predicts the pose of each part of the object in the form of the 2D projections of a few control points with the assistance of a Convolutional Neural Network (CNN). KeyPose Liu et al. (2020) predicts object poses using 3D keypoints from stereo input, and is suitable for transparent objects. Hu et al. (2020) further predicts the 6D object pose from a group of candidate 2D–3D correspondences using deep learning networks in a single-stage manner, instead of RANSAC-based Perspective-n-Point (PnP) algorithms. HybridPose Song et al. (2020) predicts a hybrid intermediate representation to express different geometric information in the input image, including keypoints, edge vectors, and symmetry correspondences. Some other methods predict 3D positions for all the pixels of the object. Hu et al. (2019) proposed a segmentation-driven 6D pose estimation framework where each visible part of the object contributes to a local pose prediction in the form of 2D keypoint locations. The pose candidates are them combined into a robust set of 2D–3D correspondences from which the reliable pose estimation result is computed. DPOD Zakharov et al. (2019) estimates dense multi-class 2D–3D correspondence maps between an input image and available 3D models. Pix2pose Park et al. (2019) regresses pixel-wise 3D coordinates of objects from RGB images using 3D models without textures. EPOS Hodan et al. (2020) represents objects by surface fragments which allows to handle symmetries, predicts a data-dependent number of precise 3D locations at each pixel, which establishes many-to-many 2D–3D correspondences, and utilizes an estimator for recovering poses of multiple object instances.

3.1.2 3D point cloud-based methods

Typical functional flow-chart of 3D correspondence-based 6D object pose estimation methods is illustrated in Fig. 12. When using the 3D point cloud lifted from the depth image, 3D geometric descriptors could be utilized for matching, which eliminates the influence of the texture. The 6D object pose could then be achieved by computing the transformations based on 3D-3D correspondences directly. The widely used 3D local shape descriptors, such as Spin Images Johnson (1997), 3D Shape Context Frome et al. (2004), FPFH Rusu et al. (2009), CVFH Aldoma et al. (2011), SHOT Salti et al. (2014), can be utilized to find correspondences between the object’s partial 3D point cloud and full point cloud to obtain the 6D object pose. Some other 3D local descriptors could refer to the survey Guo et al. (2016). However, this kind of methods require that the target objects have rich geometric features.

There also exist deep learning-based 3D descriptors (Zeng et al. 2017a; Yew and Lee 2018) aiming at matching 3D points, which are representative and discriminative. 3DMatch Zeng et al. (2017a) is proposed to match 3D feature points using 3D voxel-based deep learning networks. 3DFeat-Net Yew and Lee (2018) proposed a weakly supervised network that holistically learns a 3D feature detector and descriptor using only GPS/INS tagged 3D point clouds. Gojcic et al. (2019) proposed 3DSmoothNet, which matches 3D point clouds with a siamese deep learning architecture and fully convolutional layers using a voxelized smoothed density value (SDV) representation. Yuan et al. (2020) proposed a self-supervised learning method for descriptors in point clouds, which requires no manual annotation and achieves competitive performance. StickyPillars Simon et al. (2020) proposed an end-to-end trained 3D feature matching approach based on a graph neural network, and they perform context aggregation with the aid of transformer based multi-head self and cross attention.

3.2 Template-based methods

Template-based 6D object pose estimation involves methods of finding the most similar template from the templates that are labeled with Ground Truth 6D object poses. In 2D case, the templates could be projected 2D images from known 3D models, and the objects within the templates have corresponding 6D object poses in the camera coordinate. The 6D object pose estimation problem is thus transformed into an image retrieval problem. In 3D case, the template could be the full point cloud of the target object. We need to find the best 6D pose that aligns the partial point cloud to the template and thus the 6D object pose estimation becomes a part-to-whole coarse registration problem. The methods of template-based methods are summarized in Table 5.

3.2.1 2D image-based methods

Traditional 2D feature-based methods could be used to find the most similar template image and 2D correspondence-based methods could be utilized if discriminative feature points exist. Therefore, this kind of methods mainly aim at texture-less or non-texture objects that correspondence-based methods can hardly deal with. In these methods, the gradient information is usually utilized. Typical functional flow-chart of 2D template-based 6D object pose estimation methods is illustrated in Fig. 13. Multiple images which are generated by projecting the existing complete 3D model from various angles are regarded as the templates. Hinterstoisser et al. (2012) proposed a novel image representation by spreading image gradient orientations for template matching and represented a 3D object with a limited set of templates. The accuracy of the estimated pose was improved by taking into account the 3D surface normal orientations which are computed from the dense point cloud obtained from a dense depth sensor. Hodaň et al. (2015) proposed a method for the detection and accurate 3D localization of multiple texture-less and rigid objects depicted in RGB-D images. The candidate object instances are verified by matching feature points in different modalities and the approximate object pose associated with each detected template is used as the initial value for further optimization. There exist deep learning-based image retrieval methods Gordo et al. (2016), which could assist the template matching process. However, seldom of them were used in template-based methods and perhaps the number of templates is too small for deep learning methods to learn representative and discriminative features.

Typical functional flow-chart of 2D template-based 6D object pose estimation methods. Data from the lineMod dataset Hinterstoisser et al. (2012)

Above methods find the most similar template explicitly, and there also exist some implicitly ways. Sundermeyer et al. (2018) proposed Augmented Autoencoders (AAE), which learns the 3D orientation implicitly. Thousands of template images are rendered from a full 3D model and these template images are encoded into a codebook. The input image will be encoded into a new code and matched with the codebook to find the most similar template image, and the 6D object pose is thus obtained.

There also exist methods (Xiang et al. 2018; Do et al. 2018; Liu et al. 2019) that directly estimate the 6D pose of the target object from the input image, which can be regarded as finding the most similar image from the pre-trained and labeled images implicitly. Different from correspondence-based methods, this kind of method learns the immediate mapping from an input image to a parametric representation of the pose, and the 6D object pose can thus be estimated combined with object detection Patil and Rabha (2018). Xiang et al. (2018) proposed PoseCNN for direct 6D object pose estimation. The 3D translation of an object is estimated by localizing the center in the image and predicting the distance from the camera, and the 3D rotation is computed by regressing a quaternion representation. Kehl et al. (2017) presented a similar method by making use of the SSD network. Do et al. (2018) proposed an end-to-end deep learning framework named Deep-6DPose, which jointly detects, segments, and recovers 6D poses of object instances form a single RGB image. They extended the instance segmentation network Mask R-CNN He et al. (2017) with a pose estimation branch to directly regress 6D object poses without any post-refinements. Liu et al. (2019) proposed a two-stage CNN architecture which directly outputs the 6D pose without requiring multiple stages or additional post-processing like PnP. They transformed the pose estimation problem into a classification and regression task. CDPN Li et al. (2019) proposed the Coordinates-based Disentangled Pose Network (CDPN), which disentangles the pose to predict rotation and translation separately. Tian et al. (2020) also proposed a discrete-continuous formulation for rotation regression to resolve this local-optimum problem. They uniformly sample rotation anchors in SO(3), and predict a constrained deviation from each anchor to the target.

There also exist methods that build a latent representation for category-level objects. This kind of methods can also be treated as the template-based methods, and the template could be implicitly built from multiple images. NOCS Wang et al. (2019), LatentFusion Park et al. (2020) and Chen et al. (2020) are the representative methods.

3.2.2 3D point cloud-based methods

Typical functional flow-chart of 3D template-based 6D object pose estimation methods is illustrated in Fig. 14. Traditional partial registration methods aim at finding the 6D transformation that best aligns the partial point cloud to the full point cloud. Various global registration methods (Mellado et al. 2014; Yang et al. 2015; Zhou et al. 2016) exist which afford large variations of initial poses and are robust with large noise. However, this kind of method is time-consuming. Most of these methods utilize local registration methods such as the iterative closest points(ICP) algorithm Besl and McKay (1992) to refine the results. This part can refer to some review papers (Tam et al. 2013; Bellekens et al. 2014).

Some deep learning-based methods also exist, which can accomplish the partial registration task in an efficient way. These methods consume a pair of point clouds, extract representative and discriminative features from 3D deep learning networks, and regress the relative 6D transformations between the pair of point clouds. PCRNet Sarode et al. (2019), DCP Wang and Solomon (2019), PointNetLK Aoki et al. (2019), PRNet Wang and Solomon (2019), DeepICP Lu et al. (2019), Sarode et al. (2019), TEASER Yang et al. (2020) and DGR Choy et al. (2020) are the representative methods and readers could refer to the recent survey Villena-Martinez et al. (2020). There also exist methods (Chen et al. 2020; Gao et al. 2020) that directly regress the 6D object pose from the partial point cloud. G2L-Net Chen et al. (2020) extracts the coarse object point cloud from the RGB-D image by 2D detection, and then conducts translation localization and rotation localization. Gao et al. (2020) proposed a method which conduct 6D object pose regression via supervised learning on point clouds.

3.3 Voting-based methods

Voting-based methods mean that each pixel or 3D point contributes to the 6D object pose estimation by providing one or more votes. We roughly divide voting methods into two kinds, which are indirectly voting methods and directly voting methods. Indirectly voting methods mean that each pixel or 3D point vote for some feature points, which affords 2D–3D correspondences or 3D-3D correspondences. Directly voting methods mean that each pixel or 3D point vote for a certain 6D object coordinate or pose. These methods are summarized in Table 6.

3.3.1 Indirect voting methods

This kind of methods can be regarded as voting for correspondence-based methods. In 2D case, 2D feature points are voted and 2D–3D correspondences could be achieved. In 3D case, 3D feature points are voted and 3D-3D correspondences between the observed partial point cloud and the canonical full point cloud could be achieved. Most of this kind of methods utilize deep learning methods for their strong feature representation capabilities in order to predict better votes. Typical functional flow-chart of indirect voting-based 6D object pose estimation methods is illustrated in Fig. 15.

In 2D case, PVNet Peng et al. (2019) votes projected 2D feature points and then finds the corresponding 2D–3D correspondences to compute the 6D object pose. Yu et al. (2020) proposed a method which votes 2D positions of the object keypoints from vector-fields. They develop a differentiable proxy voting loss (DPVL) which mimics the hypothesis selection in the voting procedure. In 3D case, PVN3D He et al. (2020) votes 3D keypoints, and can be regarded as a variation of PVNet Peng et al. (2019) in 3D domain. YOLOff Gonzalez et al. (2020) utilizes a classification CNN to estimate the object’s 2D location in the image from local patches, followed by a regression CNN trained to predict the 3D location of a set of keypoints in the camera coordinate system. The 6D object pose is then achieved by minimizing a registration error. 6-PACK Wang et al. (2019) predicts a handful of ordered 3D keypoints for an object based on the observation that inter-frame motion of an object instance can be estimated through keypoint matching. This method achieves category-level 6D object pose tracking on RGB-D data.

3.3.2 Direct voting methods

This kind of methods can be regarded as voting for template-based methods if we treat the voted object pose or object coordinate as the most similar template. Typical functional flow-chart of direct voting-based 6D object pose estimation methods is illustrated in Fig. 16.

In 2D case, this kind of methods are mainly used for computing the poses of objects with occlusions. For these objects, the local evidence in the image restricts the possible outcome of the desired output, and every image patch is thus usually used to cast a vote about the 6D object pose. Brachmann et al. (2014) proposed a learned, intermediate representation in the form of a dense 3D object coordinate labelling paired with a dense class labelling. Each object coordinate prediction defines a 3D-3D correspondence between the image and the 3D object model, and the pose hypotheses are generated and refined to obtain the final hypothesis. Tejani et al. (2014) trained a Hough forest for 6D pose estimation from an RGB-D image. Each tree in the forest maps an image patch to a leaf which stores a set of 6D pose votes.

In 3D case, Drost et al. (2010) proposed the Point Pair Features (PPF) to recover the 6D pose of objects from a depth image. A point pair feature contains information about the distance and normals of two arbitrary 3D points. PPF has been one of the most successful 6D pose estimation method as an efficient and integrated alternative to the traditional local and global pipelines. Hodan et al. (2018) proposed a benchmark for 6D pose estimation of a rigid object from a single RGB-D input image, and a variation of PPF Vidal et al. (2018) won the 2018 SIXD challenge.

Deep learning-based methods also assist the directly voting methods. DenseFusion Wang et al. (2019) utilizes a heterogeneous architecture that processes the RGB and depth data independently and extracts pixel-wise dense feature embeddings. Each feature embedding votes a 6D object pose and the best prediction is adopted. They further proposed an iterative pose refinement procedure to refine the predicted 6D object pose. MoreFusion Wada et al. (2020) conducts an object-level volumetric fusion and performs point-wise volumetric pose prediction that exploits volumetric reconstruction and CNN feature extraction from the image observation. The object poses are then jointly refined based on geometric consistency among objects and impenetrable space.

3.4 Comparisons and discussions

In this section, we mainly review the methods based on the RGB-D image, since 3D point cloud-based 6D object pose estimation could be regarded as a registration or alignment problem where some surveys (Tam et al. 2013; Bellekens et al. 2014) exist. The related datasets, evaluation metrics and comparisons are presented.

3.4.1 Datasets and evaluation metrics

There exist various benchmarks Hodaň et al. (2018) for 6D pose estimation, such as LineMod Hinterstoisser et al. (2012), IC-MI/IC-BIN dataset Tejani et al. (2014), T-LESS dataset Hodaň et al. (2017), RU-APC dataset Rennie et al. (2016), and YCB-Video Xiang et al. (2018), etc. Here we only reviewed the most widely used LineMod Hinterstoisser et al. (2012) dataset and YCB-Video Xiang et al. (2018) dataset. LineMod Hinterstoisser et al. (2012) provides manual annotations for around 1,000 images for each of the 15 objects in the dataset. Occlusion Linemod Brachmann et al. (2014) contains more examples where the objects are under occlusion. YCB-Video Xiang et al. (2018) contains a subset of 21 objects and comprises 133,827 images. These datasets are widely evaluated aiming at various kinds of methods.

The 6D object pose can be represented by a \(4 \times 4\) matrix \(\mathrm{P} = [R,t;0,1]\), where R is a \(3 \times 3\) rotation matrix and t is a \(3 \times 1\) translation vector. The rotation matrix could also be represented as quaternions or angle-axis representation. Direct comparison of the variances between the values can not provide intuitive visual understandings. The commonly used metrics are the Average Distance of Model Points (ADD) Hinterstoisser et al. (2012) for non-symmetric objects and the average closest point distances (ADD-S) Xiang et al. (2018) for symmetric objects.

Given a 3D model M, the ground truth rotation R and translation T, and the estimated rotation \(\hat{R}\) and translation \(\hat{T}\), ADD means the average distance of all model points x from their transformed versions. The 6D object pose is considered to be correct if the average distance is smaller than a predefined threshold.

ADD-S Xiang et al. (2018) is an ambiguity-invariant pose error metric which takes both symmetric and non-symmetric objects into an overall evaluation. Given the estimated pose \({{[{\hat {\rm{R}}}|{\hat {\rm{T}}}]}}\) and the ground truth pose \(\mathrm{{[R|T]}}\), ADD-S calculates the mean distance from each 3D model point transformed by \({{[{\hat {\rm{R}}}|{\hat {\rm{T}}}]}}\) to its closest point on the target model transformed by \(\mathrm{{[R|T]}}\).

Aim at the LineMOD dataset, ADD is used for asymmetric objects and ADD-S is used for symmetric objects. The threshold is usually set as \(10\%\) of the model diameter. Aiming at the YCB-Video dataset, the commonly used evaluation metric is the ADD-S metric. The percentage of ADD-S smaller than 2cm (\(<\hbox {2cm}\)) is often used, which measures the predictions under the minimum tolerance for robotic manipulation. In addition, the area under the ADD-S curve (AUC) following PoseCNN Xiang et al. (2018) is also reported and the maximum threshold of AUC is set to be 10cm.

3.4.2 Comparisons and discussions

6D object pose estimation plays a pivotal role in robotic and augment reality areas. Various methods exist with different inputs, precision, speed, advantages and disadvantages. Aiming at robotic grasping tasks, the practical environment, the available input data, the available hardware setup, the target objects to be grasped, the task requirements should be analyzed first to decide which kinds of methods to use. The above mentioned three kinds of methods deal with different kinds of objects. Generally, when the target object has rich texture or geometric details, the correspondence-based method is a good choice. When the target object has weak texture or geometric detail, the template-based method is a good choice. When the object is occluded and only partial surface is visible, or the addressed object ranges from specific objects to category-level objects, the voting-based method is a good choice. Besides, the three kinds of methods all have 2D inputs, 3D inputs or mixed inputs. The results of methods with RGB-D images as inputs are summarized in Table 7 on the YCB-Video dataset, and Table 8 on the LineMOD and Occlusion LineMOD datasets. All recent methods on LineMOD achieve high accuracy since there’s little occlusion. When there exist occlusions, correspondence-based and voting-based methods perform better than template-based methods. The template-based methods are more like a direct regression problem, which highly depend on the global feature extracted. Whereas, correspondence-based and voting-based methods utilize the local parts information and constitute local feature representations.

There exist some challenges for nowadays 6D object pose estimation methods. The first challenge lies in that current methods show obvious limitations in cluttered scenes in which occlusions usually occur. Although the state-of-the-art methods achieve high accuracies on the Occlusion LineMOD dataset, they still could not afford severe occluded cases since this situation may cause ambiguities even for human beings. The second one is the lack of sufficient training data, as the sizes of the datasets presented above are relatively small. Nowadays deep learning methods show poor performance on objects which do not exist in the training datasets and perhaps the simulated datasets could be one solution. Although some category-level 6D object pose methods (Wang et al. 2019; Park et al. 2020; Chen et al. 2020) emerged recently, they still can not handle large number of categories.

4 Grasp estimation

Grasp estimation means estimating the 6D gripper pose in the camera coordinate. As mentioned before, the grasp can be categorized into 2D planar grasp and 6DoF grasp. For 2D planar grasp, where the grasp is constrained from one direction, the 6D gripper pose could be simplified into a 3D representation, which includes the 2D in-plane position and 1D rotation angle, since the height and the rotations along other axes are fixed. For 6DoF grasp, the gripper can grasp the object from various angles and the 6D gripper pose is essential to conduct the grasp. In this section, methods of 2D planar grasp and 6DoF grasp are presented in detail.

4.1 2D planar grasp

Methods of 2D planar grasp can be divided into methods of evaluating grasp contact points and methods of evaluating oriented rectangles. In 2D planar grasp, the grasp contact points can uniquely define the gripper’s grasp pose, which is not the situation in 6DoF grasp. The 2D oriented rectangles can also uniquely define the gripper’s grasp pose. These methods are summarized in Table 9 and typical functional flow-chart is illustrated in Fig. 17.

Typical functional flow-chart of 2D planar grasp methods. Data from the JACQUARD dataset Depierre et al. (2018)

4.1.1 Methods of evaluating grasp contact points

This kind of methods first sample candidate grasp contact points, and use analytical methods or deep learning-based methods to evaluate the possibility of a successful grasp, which are classification-based methods. Empirical methods of robotic grasping are performed based on the premise that certain prior knowledge, such as object geometry, physics models, or force analytic, are known. The grasp database usually covers a limited amount of objects, and empirical methods will face difficulties in dealing with unknown objects. Domae et al. (2014) presented a method that estimates graspability measures on a single depth map for grasping objects randomly placed in a bin. Candidate grasp regions are first extracted and the graspability is computed by convolving one contact region mask image and one collision region mask image. Deep learning-based methods could assists in evaluating the grasp qualities of candidate grasp contact points. Mahler et al. (2017) proposed DexNet 2.0, which plans robust grasps with synthetic point clouds and analytic grasping metrics. They first segment the current points of interests from the depth image, and multiple candidate grasps are generated. The grasp qualities are then measured using the Grasp Quality-CNN network, and the one with the highest quality will be selected as the final grasp. Their database have more than 50k grasps, and the grasp quality measurement network achieved relatively satisfactory performance.

Deep learning-based methods could also assist in estimating the most probable grasp contact points through estimating pixel-wise grasp affordances. Robotic affordances (Do et al. 2018; Ardón et al. 2019; Chu et al. 2019) usually aim to predict affordances of the object parts for robot manipulation, which are more like a segmentation problem. However, there exist some methods (Zeng et al. 2018; Cai et al. 2019) that predict pixel-wise affordances with respect to the grasping primitive actions. These methods generate grasp qualities for each pixel, and the pair of points with the highest affordance value is executed. Zeng et al. (2018) proposed a method which infers dense pixel-wise probability maps of the affordances for four different grasping primitive actions through utilizing fully convolutional networks. Cai et al. (2019) presented a pixel-level affordance interpreter network, which learns antipodal grasp patterns based on a fully convolutional residual network similar with Zeng et al. (2018). Both of these two methods do not segment the target object and predict pixel-wise affordance maps for each pixels. This is a way which directly estimate grasp qualities without sampling grasp candidates. Morrison et al. (2018) proposed the Generative Grasping Convolutional Neural Network (GG-CNN), which predicts the quality and pose of grasps at every pixel. Further, Morrison et al. (2019) proposed a Multi-View Picking (MVP) controller, which uses an active perception approach to choose informative viewpoints based on a distribution of grasp pose estimates. They utilized the real-time GG-CNN Morrison et al. (2018) for visual grasp detection. Wang et al. (2019) proposed a fully convolution neural network which encodes the origin input images to features and decodes these features to generate robotic grasp properties for each pixel. Unlike classification-based methods for generating multiple grasp candidates through neural network, their pixel-wise implementation directly predicts multiple grasp candidates through one forward propagation.

4.1.2 Methods of evaluating oriented rectangles

Jiang et al. (2011) first proposed to use an oriented rectangle to represent the gripper configuration and they utilized a two-step procedure, which first prunes the search space using certain features that are fast to compute and then uses advanced features to accurately select a good grasp. Vohra et al. (2019) proposed a grasp estimation strategy which estimates the object contour in the point cloud and predicts the grasp pose along with the object skeleton in the image plane. Grasp rectangles at each skeleton point are estimated, and point cloud data corresponding to the grasp rectangle part and the centroid of the object is used to decide the final grasp rectangle. Their method is simple and needs no grasp configuration sampling steps.

Aiming at the oriented rectangle-based grasp configuration, deep learning methods are gradually applied in three different ways, which are classification-based methods, regression-based methods and detection-based methods. Most of these methods utilize a five dimensional representation Lenz et al. (2015) for robotic grasps, which are rectangles with a position, orientation and size: (x,y,\(\theta\),h,w).