Abstract

Face recognition is being widely accepted as a biometric technique because of its non-intrusive nature. Despite extensive research on 2-D face recognition, it suffers from poor recognition rate due to pose, illumination, expression, ageing, makeup variations and occlusions. In recent years, the research focus has shifted toward face recognition using 3-D facial surface and shape which represent more discriminating features by the virtue of increased dimensionality. This paper presents an extensive survey of recent 3-D face recognition techniques in terms of feature detection, classifiers as well as published algorithms that address expression and occlusion variation challenges followed by our critical comments on the published work. It also summarizes remarkable 3-D face databases and their features used for performance evaluation. Finally we suggest vital steps of a robust 3-D face recognition system based on the surveyed work and identify a few possible directions for research in this area.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Face recognition is becoming a commonly used biometric technique with widespread applications in public records, authentication, intelligence, safety, security, and many other vigilance systems. Unlike other biometric traits such as iris, fingerprint, palm-print, signature, vein-pattern etc., which require a user to be attentive and intentional while capturing input, face recognition requires least user attention. An ideal face recognition system is expected to detect and recognize a human face when the person is unaware of being biometrically examined. This reduces the harm of altering fiducial identity features by vicious persons. Also, tampering of basic facial features is difficult and can be easily noticed. This may make it the most reliable and preferred candidate amongst the other biometric techniques in the days to come (Li and Jain 2011).

Face recognition is defined as either online or offline recognition of one or more people using available face database (Zhao et al. 2003). Face recognition is classified into two domains namely, identification and verification. For an identification/recognition system, input face is matched against all faces in an available database, which in turn provides the determined identity (Zhao et al. 2003). A verification/authentication system compares an input face with a similar claimed face from a database. It either validates or rejects the claim based on the matching score. The input to a face recognition system is usually referred to as a ‘query’ or ‘probe’, whereas collection of faces in dataset is denoted as a ‘gallery’ or a ‘database’ (Bowyer et al. 2006).

After conduction of Face Recognition Vendor Tests FRVT-2000 (Blackburn et al. 2001), FRVT-2002 (Phillips et al. 2003), researchers realized the importance of 3-D human facial scans. A 3-D facial scan conveys much more discriminating information, as it provides the exact shape information including the depth profile associated with a human face. After the availability of 3-D scanners and subsequent 3-D face databases, many researchers focused their energies toward 3-D face recognition, expecting to utilize the more precise information associated with a 3-D facial shape (Bowyer et al. 2006; Xi et al. 2011; Ballihi et al. 2012; Mohammadzade and Hatzinakos 2013). The acquired 3-D facial data is invariant to illumination (Al-Osaimi et al. 2012; Smeets et al. 2010) and pose changes (Ocegueda et al. 2013). In fact all other poses and occlusion invariant features can be computationally estimated using a complete 3-D frontal scan, even in presence of occlusions, improper illumination or expression variations. Research efforts on 3-D face analysis are focused on improvement of recognition accuracy and to address many challenges faced by these systems such as expression variations and occlusions. The 3-D face recognition systems are more immune to spoofing and deception (Määttä et al. 2012). Various agencies organized the Face Recognition Grand Challenge (FRGC) (Phillips et al. 2005) and FRVT-2006 (Phillips et al. 2010) evaluation tests which involved assessment of 3-D face recognition algorithms on databases with large gallery size. This indicates the growing interest of the research community in 3-D face recognition. Bowyer et al. (2006) have summarized the research trends in 3-D face recognition up to 2006. Abate et al. (2007) have reviewed the associated literature up to year 2007. Smeets et al. (2012) have recently studied various algorithms for expression invariant 3-D face recognition and evaluated the complexity of existing 3-D face databases. Some of the earlier developments in face recognition are surveyed by Chellappa et al. (1995), Jaiswal et al. (2011), Zhao et al. (2003) and Tan et al. (2006).

This paper surveys published work on 3-D face recognition from a general viewpoint, and also reviews it with special focus on some challenges such as expression and occlusion variations. An extensive review of prominent 3-D face databases that will help forthcoming researchers to choose a database for their research problem is also presented. There are some multimodal approaches based on both shape (3-D) and texture (2-D) data. The joint use of these shape and textural features accommodates more information and facilitates extraction of more discriminant features. We have reviewed the literature associated only with 3-D shape, and also approaches that address 3-D face recognition and related challenges. The survey of pure 2-D and multimodal (2-D and 3-D) face recognition techniques is outside the scope of this paper.

The rest of this paper is organized as follows: in Sect. 2 a brief overview of 3-D face recognition systems, various 3-D data acquisition and representation techniques is presented along with their advantages and limitations. It also includes a summary of 3-D landmark detection, face segmentation, face registration, feature extraction and matching techniques. Section 3 summarizes prominent 3-D face databases. In this section, complexity of each database is examined for challenges such as pose, expression and occlusion variations. The surveyed state-of-the-art methods for expression invariant 3-D face recognition are discussed in Sect. 4 along with their advantages and limitations. Section 5 addresses the review of occlusion invariant 3-D face recognition approaches. Section 6 presents a discussion on various challenges faced by the published research so far and also summarizes various algorithmic steps in 3-D face recognition. Section 7 presents the conclusion and future scope of research in 3-D face recognition approaches.

2 3-D face recognition system

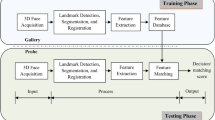

3-D face recognition is defined as a biometric technique which uses individual 3-D facial shape to recognize human faces using 3-D models of both probe and gallery faces. A general 3-D face recognition system is depicted in Fig. 1.

A 3-D human face captured during face acquisition may contain unwanted body parts or areas like hair, ears, neck, shoulders and accessories like glasses and ornaments that need to be effectively eliminated. Major landmarks facilitate the segmentation process which extracts face shape from the entire scan. Facial shape needs to be aligned before actual matching. 3-D registration techniques are used for face shape alignment. The discriminant features are extracted and stored using the surveyed region-based and holistic approaches for all faces in the gallery. Feature vector for a probe (query) scan is extracted and matched against the gallery feature vectors one by one. The gallery scan that has the closest matching distance with the probe scan below a predefined distance threshold is considered as the recognized gallery scan, and the class of the corresponding feature vector is declared as the result.

2.1 3-D facial data acquisition techniques

The methods to acquire 3-D information about a facial surface adopt optical triangulation principle for depth estimation (Amor et al. 2013) and are broadly classified into stereo acquisition, laser beam scanning and fringe pattern based techniques.

Triangulation principle

Triangulation principle (Haasbroek 1968) states that the depth (L) and coordinates of a point (O) on an object in 3-D space can be estimated if length (M) of one side of a triangle OXY and angles P and Q between line XY and other sides of the triangle i.e. lines OX and OY at points X and Y could be measured depicted in Fig. 2. The points X and Y represent an illumination source and a sensor or both can be sensors like cameras.

Thus, depth L of each point O on a 3-D shape can be estimated using (1).

2.1.1 Stereo acquisition

Stereo acquisition based systems capture the 3-D facial data with two calibrated 2-D cameras. The concurrently captured 2-D images are registered and integrated to form a 3-D model using visual features and landmarks in both (Winkler and Min 2013). This is a passive method of 3-D face acquisition (Uchida et al. 2005) and involves complex computations since a 3-D face model is reconstructed from multiple 2-D images. The accuracy of 3-D face model heavily depends upon the surrounding illumination conditions. Illumination intensity should be consistent during the acquisition of 2-D calibrated images (Gökberk et al. 2009) to avoid erratic registration. Figure 3 presents a schematic for stereo 3-D image acquisition.

Locations of calibrated cameras and an object form a triangle and hence the depth can be estimated with the triangulation principle. The manifold images captured using a set of cameras share some common points in 3-D space. Such shifted common points are known as disparity (Koschan et al. 2007). If the value of disparity (d) is small, the estimated depth is large and vice-versa. Figure 3 shows stereo depth acquisition of point C using camera A and camera B. The distances \(\hbox {D}_\mathrm{A}\) and \(\hbox {D}_\mathrm{B}\) are measured from the centre of the camera lens. Subsequently, the disparity is computed using (2) (Koschan et al. 2007).

It can be interpreted that if the point D is relatively farther from the camera planes, its disparity will be lesser (Koschan et al. 2007). In this way, the depth of various points in 3-D space is computed using stereo acquisition.

This method depends upon point-pair correspondence and feature extraction in addition to the conventional triangulation (Sansoni et al. 2009). The stereo acquisition technique is advantageous due to its high sensing speed, ease of availability of acquisition devices, no specific wavelength illumination source requirement and low cost. This technique suffers from errors in exact localization of point-pairs in distinct images which affect the overall reconstruction process. The process of conversion of the captured 2-D images into a 3-D model is computationally heavy. The resultant reconstruction accuracy is low, restricted to well illuminated scenes and depends upon resolution quality of captured 2-D images (Sansoni et al. 2009).

2.1.2 Laser beam scanning

Laser beam based 3-D scanner projects a laser beam on the subject to be scanned, i.e. a human face. The beam gradually scans the whole object just like a 2-D scan. A charge-coupled device camera is used to acquire the reflected light from the subject along with a 2-D intensity image. The 3-D data is generated using triangulation process, which yields the depth information. Laser beam based scanners offer the highest capturing range (\(\sim \)8 feet) as compared to other acquisition techniques (\(\sim \)1.6 to 5 feet) (Gökberk et al. 2009). Figure 4 presents the basic principle of a laser beam scanning system for 3-D objects.

The depth estimation is contingent upon bending of the emitted laser light. The laser source emits single wavelength laser light to point A in 3-D space at an angle \(\beta \) with the baseline. The intersection of laser source and baseline is P units away from the origin. The focal distance of CCD camera is denoted by D (Koch et al. 2012; Koschan et al. 2007). The 3-D position i.e. (X, Y, Z) coordinates of point A are given by (3).

This is an active triangulation technique which involves laser source as an illumination device and CCD camera as a sensor. The CCD camera is tuned to the wavelength of the transmitted laser light. The advantages of laser beam scanning method are high accuracy due to tight focus of laser source, illumination robustness due to utilization of a single standard wavelength and less power requirements (Scopigno et al. 2002). This method is slow in terms of acquisition speed due to line-wise scanning (Daoudi et al. 2013) of the complete 3-D surface. These devices are costlier than other 3-D acquisition devices.

2.1.3 Fringe pattern acquisition using structured light

This technique employs special system cameras to capture reflections from facial surfaces illuminated with fringe patterns. This is an active triangulation technique where the source projects encoded structured light patterns on the object to be scanned (Sansoni et al. 2009). The fringe patterns are predefined for specific angles and coded using structured light. Generally halogen lights project the structured light pattern on a facial surface (Malassiotis and Strintzis 2005; Breitenstein et al. 2008; Gökberk et al. 2009; Je et al. 2013). These systems are expensive compared to the stereo acquisition devices and offer more legitimate 3-D facial surface representation. They are less expensive and also less accurate compared to laser beam scanner devices. Analogous to stereo acquisition methods, the captured data also depends upon illumination conditions (Gökberk et al. 2009). Basic principle of this method is depicted in Fig. 5.

Various types of structured light pattern consist of manifold slits, multi-color (RGB), pixel-grid and dotted patterns (Sansoni et al. 2009). This technique is advantageous due to its wide Field-Of-View (FOV), high speed of acquisition, low cost, less computations for conversion from 2-D to 3-D, computerized control and implementation simplicity. This technique suffers from haziness due to use of single multi-stripe encoded pattern (Scopigno et al. 2002; Zhang and Lu 2013).

2.2 3-D face representations

2.2.1 Point cloud representation

Each point on the 3-D point cloud is denoted by \(\{x, y,z\}\) coordinates. Most of the scanners use this representation (Beumier and Acheroy 2000; Chenghua et al. 2004) in order to store the captured 3-D facial information. Sometimes texture attributes are also concatenated to the shape information. In this case, the representation simply becomes \(\{x, y,z, p,q\}\) (Amor et al. 2013), where p and q are spatial coordinates. The disadvantage of this representation is that the neighborhood information is not available as each point is simply expressed as three/five attribute feature vector (Gokberk et al. 2008). Figure 6a indicates point cloud representation for a sample face from the GavabDB 3-D face database (Moreno and Sanchez 2004). Point cloud is a collection of unstructured coordinates in the 3-D space \(\mathfrak {R}^{3}\) (Koch et al. 2012). Often the point cloud data is fitted to a smooth surface to avoid drastic variations due to noise.

2.2.2 3-D Mesh representation

The 3-D Mesh representation uses pre-computed and indexed local information about the 3-D surface (Amor et al. 2013). It requires more memory and storage space than point cloud representation, but it is more preferred as it is flexible and more suitable for 3-D geometric transformations such as translation, rotations and scaling. Each 3-D polygonal mesh is expressed as a collection of mesh elements: vertices (points), edges (connectors between vertices) and polygons (shapes formed by edges and vertices) (Amor et al. 2013). 3-D mesh representation for an exemplary face belonging to the GavabDB database (Moreno and Sanchez 2004) is shown in Fig. 6b. Most of the 3-D mesh data is represented in terms of triangular mesh elements derived from the 2-D representation in \(\mathfrak {R}^{3}\) (Koch et al. 2012). The 2-D texture coordinates are also embedded in the vertex information which is helpful for construction of accurate 3-D models.

2.2.3 Depth image representation

Depth images are also called 2.5D or range image representations (Bowyer et al. 2006). The Z-axis intensities of facial information are mapped onto a 2-D plane and the model appears like a smooth 3-D surface represented in terms of intensity. It gives realization of continuous depth information corresponding to various points on the facial shape. Since it is a 2-D representation, many existing 2-D image processing approaches can readily be applied to this representation. Figure 6c indicates a representative 3-D depth image from the Texas 3-D face database (Gupta et al. 2010a). Range image is an orthogonal projection of Z-axis coordinates on the 2-D image surface where uniform sampling is achieved (Koch et al. 2012). Range representation needs fewer memory resources as variable sampling is transformed into uniform sampling. Range images can be readily visualized with gray scale image viewers. Range images can be converted to 3-D mesh format as per the application requirements using the triangulation principle. Figure 6c depicts range image representation of a facial surface where the nose is closer to the camera. Thus, the nose has highest intensity and the intensity diminishes with increase in distance from the camera.

As discussed above, the 3-D facial information is usually represented in the three forms namely; point cloud data, mesh data and depth image representation as shown in Fig. 6a–c. The difference between the isolated points in point cloud, continuous edges and vertices in mesh and smooth surface representation in range data followed by their respective computer screen display shots can be clearly observed.

2.3 Landmark detection and face segmentation

Most of the published 3-D face recognition approaches assume manually localized and registered faces in a common coordinate system (Colombo et al. 2006). However, while designing a complete face recognition system, automated detection and registration of human faces is essential. Facial landmarks detection plays a vital role in automatic face localization and registration process. Facial landmarks consist of a set of fiducial points defined by the anthropometric studies (Farkas 1994), which can be used for face detection, face recognition, facial expression recognition and pose estimation applications (Xi et al. 2011). Many approaches utilize landmarks (such as nose tip and eye corners) to segment a 3-D facial surface from background and other body parts. The nose tip is invariant to most expression changes and it is noticeable for a broad range of non-frontal poses (Malassiotis and Strintzis 2005). Various methods for landmark(s) detection on 3-D facial surfaces are surveyed for landmarks namely the nose-tip (NT), inner eye-corners (IE) and outer eye-corners (OE) which are also known as PRN (pronasal), EN (endocanthion) and EX (exocanthion) points by anthropometric experts (Vezzetti and Marcolin 2012). In Table 1, we enlist the prominent landmark localization approaches along with the detected landmarks and pose flexibility. This subsection is further dedicated to the discussions and our comments on the work summarized in Table 1. A frontal 3-D face registration can be accomplished with only one landmark by means of the iterative closest point (ICP) algorithm, whereas, for non-frontal faces, location information of minimum three landmarks is essential (Colbry et al. 2005; Vezzetti and Marcolin 2012).

The 3-D surface derivatives play a vital role in computation of the mean (H) and Gaussian (K) curvature maps. A facial surface is partitioned into concave, convex and saddle regions by employing signs of the HK maps for segmentation. The nose tip and eye corners are searched within the segmented regions by applying various thresholding algorithms. Colombo et al. (2006) detected the eyes in the concave segmented HK maps \((H_{ min }\) and \(K_{ min }\)) and a nose tip around peak values of the mean curvature map \((H_{ max })\). However, the experimentation is performed on a small dataset. Detection rate of the reported approach is affected by self-occlusions and camouflage. Another method to detect inner eye corners and nose tip was proposed by Conde et al. (2006a). The regions with high values of mean curvature (H) were segmented into three different sets with clustering. Each cluster contained a feature point corresponding to nose tip and inner eye corners. This approach further employed SVM classifier for feature point localization. An advantage of this approach is that it can be directly applied on a 3-D mesh, acquired using laser beam scanning. To locate the nose tip and inner eye corners in a range image (Segundo et al. 2007) employed HK classification and median filtering. Principal component analysis (PCA) was utilized to compute surface normal vectors. Eye-corners are searched in concave regions and nose tip is searched in convex surface. Performance of this method is adversely affected when input range image has pose variations more than \({\pm }15^{\circ }\). In Szeptycki et al. (2009), a coarse-to-fine rotation invariant method was proposed to detect nose tip and inner eye corners. A generic facial statistical model was built and fitted to locate outer eye corners and other fiducial landmarks. Though these landmark detection methods use computationally heavy techniques, in effect they are computationally moderate due to their selective or guided search techniques.

Principal curvatures based nose tip detection method was proposed in Malassiotis and Strintzis (2005). An elliptical region of interest was marked on the range image to locate a nose tip. Nose tip value was detected by considering the regions with large values of the principal curvatures (\(k_1\) and \(k_2\)). Principal curvatures \(k_1\) and \(k_2\) are the solutions of the quadratic equation \(\lambda ^{2}+2H\lambda +K=0\) and built upon from the mean (H) and Gaussian (K) maps of the facial surface. This method is robust for large variations in pose and expressions. In Dibeklioglu et al. (2008), the surface curvature maps were computed as a part of pre-processing of a range image. Then, a difference map between Gaussian and mean curvatures was transformed to logarithmic scale for nose tip localization. The eye corners clustering based approach was proposed in Yi and Lijun (2008) for localization of nose tip and inner eye corners. The possible inner eye candidate’s vertices were segmented from the rest of the 3-D mesh using concave regions of principal curvature based maps. The authors have employed the 2-means clustering method for separation of left and right eye corners. A flat surface was fitted to the candidate clusters using least summation distance method. A point on the positive side of the flat surface with maximum distance from clusters was marked as nose tip. Performance of the surface curvature based approaches is sensitive to the noise present in facial range images. Statistical shape based point distribution model (PDM) was presented for the extraction of nose tip and eye corners in Nair and Cavallaro (2009). Principal curvature based shape index and curvedness index maps were computed and candidate landmarks were located using regional segmentation. Furthermore, they fitted the PDM model for accurate registration of 3-D meshes. Performance of this method suffers when the mesh contains self-occlusions. Due to curvature based approaches, local segmentation and the simple 2-means classification technique, these approaches are computationally efficient. However, the performance is sensitive to noise and mesh occlusions.

In Koudelka et al. (2005), initially, the positively and negatively affected pixels are located using gradient operator on pixels of a range image. Subsequently, a radial symmetry plane is computed to obtain the radial symmetric features. A nose tip was declared within large positive values and eye corners were localized around dark intensities of the radial symmetry map. Effective energy based hierarchical filtering method was proposed in Xu et al. (2006b) to locate a nose tip. The values of neighboring effective energy, mean and variance of neighboring pixels were fed to the support vector machine (SVM) classifier. This method is suitable for frontal, viewpoint invariant and rotated facial range images. It requires SVM based classification which makes it computationally heavy (Chew et al. 2009). To address this issue, Chew et al. (2009) proposed a direct approach based on the effective energy and avoided the use of SVM classifier. The effective energy for a pixel P with its neighborhood pixels \(P_n \) for an angle between normal vector for P and \((P_n -P)\) was computed using \(E=\left\| {P_n -P} \right\| \cdot \cos (\theta )\). The surface pixels P with negative effective energy (E) were marked as potential candidates for nose tip location. This approach does not require initial training unlike (Xu et al. 2006b) and is computationally efficient.

In D’Hose et al. (2007), the authors pre-processed range images to cluster characteristic features (including the nose tip and eyes) using the Gabor wavelets. The segmented areas were refined using iterative closest point (ICP) algorithm for fine alignment and detection. The pre-processing stage is computationally efficient since only horizontal and vertical directions for Gabor filters were employed. Though this algorithm employs the computationally heavy ICP algorithm, it is applied only on small tentative feature regions in wavelet domain resulting in minimum computational requirement.

A 3-D shape signature based approach was proposed in Breitenstein et al. (2008). The 3-D location of a pixel along its orientation was examined and segmented to check if it has the maximum value compared to its neighbors. These local directional maximas were combined to form a sparse aggregated signature map. The cell located at the center of the signature map was denoted as nose tip. This approach is robust to partial occlusion, expression variations and vast range of poses such as \({\pm }90^{\circ }\) yaw, \({\pm }30^{\circ }\) roll and \(\pm 45^{\circ }\) pitch, though it is computationally heavy due to localization of a pixel in 3-D space in presence of the said pose variations.

In Romero and Pears (2009), the authors proposed a graph matching based method to detect eminent landmarks. The regional distribution of neighborhood pixels was conceptualized as a pattern of sample points around concentric circles. These patterns were encoded as cylindrically sampled radial basis function (CSR) histograms. The graph edges were effectively encrypted into these descriptors and utilized for detection of eye corners and nose tip. Like most graph-based techniques, this approach is computationally light.

A face profile based approach for detection of nose tip in range images or uniformly sampled point clouds was proposed in Peng et al. (2011). Initially, 61 left-most (min group, \([-90^{\circ },-3^{\circ }])\) and right-most (max group, \([0^{\circ },90^{\circ }])\) 2-D profiles were computed from the input facial scan. The possible nose tip candidates were located using the proposed perimeter difference parameter. This method does not involve any computationally complex operations and is independent of any statistical model or training and hence demands fewer computations.

In Mohammadzade and Hatzinakos (2013), the authors constructed an Eigen-nose space using a training set of nose regions. The pixel in the input facial scan is marked as a nose tip if the mean square error between a candidate feature vector and an Eigen nose-space projection is less than a predefined threshold. Additionally, to reduce computational complexity, the authors have proposed a low-resolution wide-nose Eigen space, which speeds up the nose tip detection process. This reduces the otherwise heavy computational requirement of the original algorithm.

2.4 Registration techniques

Face registration means transforming a probe (query) face such that it is aligned with other faces in the gallery (database). Face registration is an essential step prior to feature extraction and the matching of two facial surfaces. Various algorithms to perform registration between two facial representations are briefed in the following subsections.

2.4.1 Iterative closest point (ICP) algorithm

Most of the 3-D face recognition systems introduced so far use the iterative closest point (ICP) algorithm (Besl and McKay 1992) for registration and matching of 3-D facial surfaces. The ICP algorithm transforms a 3-D point cloud shape ‘M’ for alignment with another 3-D point cloud shape ‘N’. A series of incremental transformations are performed repeatedly. During each iteration step, the mean square error (MSE) between both point clouds is computed. The algorithm converges when the value of the minimum MSE is achieved based on the registered 3-D shape information. The ICP algorithm does not depend on extraction of any local features. Thus, ICP essentially is a semi-blind 3-D shape matching algorithm. ICP based registration is performed either between a probe and set of gallery faces or between a probe and average face model. A few methods that use ICP algorithm or it’s variants for face registration are presented in Yue et al. (2010), Cai and Da (2012), Störmer et al. (2008), Alyuz et al. (2010, 2008, 2012) Al-Osaimi et al. (2009), Ben Amor et al. (2008), Cook et al. (2004), Xueqiao et al. (2010a, b), Abate et al. (2006), Colombo et al. (2008), Kakadiaris et al. (2007), Amberg et al. (2008), Xiaoguang et al. (2006), Queirolo et al. (2010), Faltemier et al. (2008), Li and Da (2012), Ballihi et al. (2012) and Russ et al. (2005). A disadvantage of ICP-based registration is that it is prone to converge at a local minimum point and requires sufficient initialization for facial surface alignment (Yi and Lijun 2008). Thus, predefined localized landmarks enhance the accuracy of initial correspondence between the two point clouds (D’Hose et al. 2007; Nair and Cavallaro 2009). ICP based templates require large amount of storage space and it is comparatively slow in terms of registration time (Spreeuwers 2011). Nonetheless, it has been used by many researchers due to its robust registration performance.

2.4.2 Spin images

Spin images-based global registration method maps every point on 3-D facial surface to an oriented point p with surface normal n forming a 2-D basis (p, n) (Johnson and Hebert 1998, 1999). Point p acts as origin resulting in a dimensionality reduction \((x,y,z)\rightarrow (\alpha ,\beta )\) using a 3-D to 2-D transformation (Johnson and Hebert 1999). The cylindrical coordinates \((\alpha ,\beta )\) stand for the perpendicular span through a surface normal and elevation respectively (Johnson and Hebert 1999). A spin image is analogous to a distance histogram and represents neighborhood around a specific oriented point with associated directions. Spin image representation is used for face registration in Conde et al. (2006a), Kakadiaris et al. (2007) and Conde and Serrano (2005). The spin map \(S_0 (x)\) is calculated using (4). The spin image based registration is robust to partially occluded or noisy 3-D surfaces (Johnson and Hebert 1999). Compared to conventional ICP, this approach is computationally lighter due to dimensionality reduction in the form of the said transformation from 3-D to 2-D space.

2.4.3 Simulated annealing

Simulated annealing is an iterative stochastic approach which looks for better neighbor solution while matching two range images. Parameters for translation and rotation are treated as a transformation vector. Initially, centers of mass for two facial images are aligned. The M-estimator sample consensus (MSAC) measure (Torr and Zisserman 2000) and MSE are minimized for coarse alignment. The fine alignment is attained based on surface interpenetration measure (SIM) distance (Queirolo et al. 2010). This approach is employed for face registration in Kakadiaris et al. (2007), Queirolo et al. (2008) and Bellon et al. (2006). The SIM is preferred over MSE for quality evaluation of two registered range images (Queirolo et al. 2010). Due to the statistically heavy stochastic search algorithms, this technique is computationally inefficient. However, it has minimum tendency to get trapped in local maxima or minima. This ensures a correct and robust estimation of facial features.

2.4.4 Intrinsic coordinate system

All 3-D point cloud surfaces are transformed to a common reference coordinate system using a set of localized landmark structures. This system is referred to as the ‘Intrinsic Coordinate System’ (Spreeuwers 2011). The (x, y, z) coordinates are mapped to intrinsic coordinates (u, v, w) using vertical symmetry plane, nose tip, and slope of the nose bridge. This approach is effective in terms of time and computational complexity as all faces in the gallery and the probe image share a common coordinate system instead of extrinsic alignment with another 3-D facial shape or an average 3-D facial model. The efficiency of registration depends upon accurate localization of 3-D facial landmark structures.

2.5 Feature extraction and matching

After facial shape registration, most approaches extract significant features and match them using metrics such as the Euclidean distance (Heseltine et al. 2004; Mpiperis et al. 2008; Berretti et al. 2010; Gordon 1992; Gupta et al. 2010b), Hausdorff distance (Koudelka et al. 2005; Russ et al. 2005; Yeung-hak and Jae-chang 2004; Achermann and Bunke 2000; Gang et al. 2003), and angular Mahalanobis distance (Amberg et al. 2008) against the stored set of feature vectors for gallery faces. Major feature extraction algorithms, dimensionality reduction techniques and classifiers are discussed below.

2.5.1 Gabor wavelet filters

The Gabor kernel functions \(\psi _j (\vec {x})\) are formulated as the Gaussian modulated complex exponentials. Convolution of a range image \(I(\vec {x})\) with the family of Gabor functions results in directional Gabor wavelet subbands (Moorthy et al. 2010). The family of linear bandpass Gabor functions is obtained using variations in parameters of mother Gabor kernel \(\psi _j (\vec {x})\). The human brain’s primary visual cortex is responsible for extraction and computation of perceptual data (Tai Sing 1996). It is advantageous to employ the Gabor wavelet filters since they model the receptive fields of visual cortex (Tai Sing 1996; Moorthy et al. 2010; Daugman 1985). The Gabor wavelet functions typically have oriented localization properties which are essential for robust feature extraction. The resultant Gabor coefficients obtained after filtering a range image with oriented Gabor wavelets are known as Gabor features. The Gabor wavelet filters based feature extraction techniques on range images are used for precise 3-D feature extraction (Moorthy et al. 2010; Jahanbin et al. 2008; Xueqiao et al. 2010b). Gabor wavelets yield precise features in transform domain. The computational complexity depends upon the level of Gabor wavelet transformations used in the specific application.

2.5.2 Local binary pattern (LBP)

Local binary pattern (LBP) approach is introduced by Ojala et al. (2002) for feature extraction from spatial 2-D texture images. A set of binary values is obtained upon comparison of each pixel value with intensities of its \(3\times 3\) neighboring pixels. These binary values are subsequently encoded to a decimal number which represents the LBP value for a particular pixel. A histogram of such LBP values acts as a discriminant feature vector and is widely used by face recognition researchers. A histogram of Multi Scale Local Binary Pattern (MS-LBP) based on LBP is introduced by Di et al. (2010) where, feature information is extracted using the MS-LBP histogram and employed for 3-D face recognition. Huang et al. (2006) realized that range images of two different persons may have same depth variations and the 2D-LBP is not suitable to distinguish them. Based on the inference that the exact values of differences may be different, they have proposed the extended 3D-LBP (Di et al. 2012) for feature detection in 3-D scans. The LBP representation is in integer numbers and hence LBP is a computationally effective technique compared to other feature detection techniques demanding heavy floating point computations.

2.5.3 Markov random field model

Ocegueda et al. (2011) proposed the Markov random field (MRF) model-based approach for extraction of discriminant features from 3-D faces. The MRF model is based on combination of probability and graph theory and is widely used for solving image processing and computer vision related complex problems such as face recognition, image denoising, image segmentation, image reconstruction and 3-D vision. The MRF model considers mutual dependencies amongst label field of range image pixels. The MRF model is based on the concept of near-neighbor dependence which is commonly observed in most of the range images. The discriminant features are selected based on Posterior marginal probabilities of the MRF model. This approach is computationally efficient due to involvement of graph theory and computation of Markov random probabilities based on a small number of prior event probabilities.

2.5.4 Scale invariant feature transform

Scale invariant feature transform (SIFT) detects landmarks using local extrema in the scale-space difference of Gaussian (DOG) function (Lowe 2004). SIFT features are invariant to orientation and scale. Di et al. (2010) applied the SIFT transform on shape index (SI) maps and multi-scale local binary pattern (MS-LBP) depth maps. It is also utilized by Berretti et al. (2013) for feature extraction on multi-scale extended local binary pattern (MS-eLBP) maps. SIFT is extended for feature extraction on 3-D mesh representation using MeshSIFT algorithm (Maes et al. 2010) and employed in Berretti et al. (2013), Smeets et al. (2011, 2013). Inherently, SIFT offers scale and rotation invariant features at moderate computational costs. However, application of SIFT on 3-D data demands considerable computational resources in terms of memory and computational speed.

2.5.5 Local shape patterns

The local shape pattern (LSP) computes differential structure factor (similar to LBP) as well as orientation factor (similar to SIFT) on a \(3\times 3\) neighborhood for a range image (Huang et al. 2011). The LBP is an established technique for efficient feature vector generation from facial images. The top-left first four pixel values are subtracted from their opposite pixels on the same diameter. The binary values are obtained in a way similar to the LBP. The 4-bit code is then converted to a decimal code to form an orientation pattern. The LSP combines the LBP with orientation information, which results in obtaining a more discriminant feature vector for 3-D face recognition. This approach is computationally heavy compared to LBP but it is lighter compared to transformation techniques like Gabor wavelets or Markov random fields.

2.5.6 Haar-like features

Viola and Jones (2001) developed this Haar-like feature detection algorithm. This technique deals with neighboring regions, adds up intensities in each region and computes difference between sums from each region. For a range image, Haar-like features calculate discontinuities in depth values. Mian et al. (2011) computed Haar-like features on range images and its gradients. The Haar-like features are employed for description of characteristics of the signed shape difference maps (SSDM) (Yue et al. 2010). Due to the use of computationally simple Haar-like features, this algorithm is computationally very fast. This is also one of the most popular online available algorithms for real time face detection and tracking.

Major dimensionality reduction and feature classification techniques used by 3-D face recognition approaches are enlisted in Table 2.

Principal component analysis (PCA) (Turk and Pentland 1991) is a popular technique for dimensionality reduction of feature vectors. The procedure finds a set of orthonormal basis vectors which optimally represents the feature vector data. It transforms the original feature data vectors into a lower dimensional subspace. The new coordinate system forms the axes in such a way that they are a linear combination of input data vectors. The first component with maximum variance is denoted as the principal component. The second component is perpendicular to primary axis of principal component and indicates the direction of second highest statistical variance from the original data and so forth. PCA is computationally complex due to computation of multiple orthogonal Eigen-space vectors. Linear discriminant analysis (LDA) (Belhumeur et al. 1997) projects high dimensional feature vectors into a low dimensional subspace and computes an optimal linear combination of components that maximize intra-class distance and minimize inter-class distance simultaneously. The true reconstruction error rate of LDA is a parametric function of Gaussian distribution of classes. LDA is based on PCA and hence requires more computations than PCA but yields a smaller but more robust feature vector compared to PCA. Support vector machine (SVM) classifier (Cortes and Vapnik 1995) is a supervised machine learning method which is popular for addressing binary classification problems. SVM attempts to place a decision boundary which highly separates the feature vectors of genuine class from imposter class. The performance of SVM classifier is notably robust to sparse and noisy input feature vectors. During classification phase, they isolate a training data using a hyper-plane which is maximally separated from binary labeled classes. If the linear separation between the classes is not feasible, SVMs utilize kernel based methods to isolate the classes with non-linear mapping in the feature vector space. The SVM is extended to multi-class domain for face recognition, object classification and fingerprint recognition related problems. The SVM classifier for multiclass domain is computationally very heavy. The nearest neighbor (NN) classifiers are subdivided into 1-NN and k-NN classifiers (Cover and Hart 2006). The 1-NN classifiers allocate the class label of its nearest neighbor to a queried test feature point. The k-NN classifiers denote a class label to queried test feature point based on majority voting between the classes of k-nearest neighbors. The nearest neighbor classifiers are computationally very light. However, their performance depends upon the used distance measure.

2.6 Performance measures

The performance of a 3-D face verification/identification system is examined in terms of the percentage of false decisions it gives during verification/identification process. While comparing a probe face (query) with a claimed or possible identity (a face from gallery data), a system is observed for two types of errors. They are known as false reject errors and false accept errors. False reject error appears when a genuine face is compared with a possible or claimed identity and similarity score (Z) is lower than predefined threshold \((\lambda )\), and the genuine person is not recognized by the system. False accept error arises when fake/imposter probe is compared with a possible or claimed gallery face and similarity score (Z) resulting from the match is higher than predefined threshold \((\lambda )\) and the wrong person is authorized as established identity (Phillips et al. 2010). Figure 7 portrays the errors that occur during face verification process.

False accept rate (FAR) is the percentage of fake (imposter) probes which have similarity score greater than predefined threshold. False reject rate (FRR) is the percentage of genuine probes which have similarity scores less than predefined threshold. When FRR versus FAR is plotted on logarithmic scale or its variants, emanating graph is called receiver operating characteristic (ROC) curve. A point on ROC graph, where FRR and FAR are equal, provides the equal error rate (EER) point which is a single-valued error metric (Jain et al. 2008) for the rejection rate. For an accurate face recognition algorithm, the FRR, FAR and EER should be as minimum as possible. The performance of identification system is evaluated through the cumulative match characteristics (CMC) curve (Jain et al. 2008). The CMC curve is a plot between ranks and recognition rates which contains Rank-1 recognition rate \((\hbox {R}_{1}\)-RR) that implies the first best matching rate when a probe is compared with all gallery faces (Smeets et al. 2012). These performance measures offer robustness to the performance benchmarking of the face recognition algorithms.

3 3-D face databases

For the development of a high quality robust face recognition algorithm, its testing on multiple large datasets which have inherent complexities similar to real life situations is a must. After the availability of 3-D face scanners and powerful digital computers capable of processing huge data at a time, many 3-D face databases have become available for research. Various publicly available 3-D face databases are enlisted in Table 3.

The 3DRMA database is captured at Royal Military Academy, Belgium using a structured light based 3-D face scanner. Initially, the camera and structured light projectors are aligned and calibrated in order to reduce the relative errors. They have acquired facial information of 120 different subjects. The 3-D facial surface is extracted through labeled stripe detection method (Beumier and Acheroy 2000). The FSU 3-D database is developed at Florida State University, FL and contains facial images of 37 distinct persons in a mesh format. The database is acquired using the Minolta Vivid 700 laser scanner (Hesher et al. 2003). The GavabDB database consists of mesh surfaces of 61 individuals. The age variation of the subjects is in between 18 and 40. They have acquired both intrinsic (pose, expression, occlusion) as well as extrinsic variations (varying background, scale, lighting effects) (Moreno and Sanchez 2004). The Face Recognition Grand Challenge V 2.0 (FRGC V 2.0) database is a part of a protocol specially designed to access the increase in recognition performance by different state-of-the-art algorithms (Phillips et al. 2005). The 3-D data is captured under controlled illumination conditions. The 3-D shape and the corresponding texture image of a specific person were acquired with Minolta Vivid 900/910 scanner. The training partition of the dataset consists of 943 scans whereas the validation partition contains 4007 3-D scans for 466 individuals. Experiment 3 of FRGC challenge focuses on 3-D face recognition where, both training and query faces are 3-D facial surfaces. The BU3D-FE (Binghamton University 3D Facial Expression) database specifically incorporates the expression variation challenges for 3-D face recognition (Lijun et al. 2006). The various expressive faces of 100 subjects were acquired with the 3DMD digitizer which stores a range image as well as mesh structure of the person in front of a projection beam. CASIA 3-D face database is collected at the Chinese Academy of Sciences Institute of Automation (Xu et al. 2006a). It contains facial images of 123 subjects, captured with laser based Minolta Vivid 910 scanner. The advantage of this database is that it not only contains expression and pose variations but also combinations of poses under different expressions. It also involves scans with illumination variations. Face Recognition and Artificial Vision group acquired the FRAV3D database which consists of facial scans of 105 subjects (Conde et al. 2006b). Minolta Vivid-700 laser scanner was used for capturing 3-D mesh and texture information. ND 2006 database is a superset of FRGC v 2.0 database (Phillips et al. 2005), acquired at the University of Notre Dame (Faltemier et al. 2007). The facial scans of 888 individuals were captured using Minolta Vivid 910 scanner (Konica 2013). The output of this laser based scanner consists of color texture along with 3-D facial range images. Michigan state university (MSU) has developed a database which contains simultaneous expression and large pose variations. They have acquired the range scans of 90 subjects. Since expression and pose variations are imposed simultaneously, face recognition using this database is challenging and complex (Xiaoguang and Jain 2006). The ZJU-3DFED database consists of 3-D facial scans of 40 individuals captured using structured light based Inspeck 3-D Mega capturor sensor at the Zhejiang University (Wang et al. 2006). Lack of pose variations is the main limitation of this database. The ‘Identification par l’Iris et le Visage via la Video’ \((IV^{2})\) multimodal database (Colineau et al. 2008) involves 3-D faces as well as 2-D videos, audios and iris data of 365 subjects. 3-D facial surfaces are captured using Konica Minolta Vivid 7000 sensor. Bosphorus 3-D face database is acquired using Inspeck Mega Capturor-II scanner at the Bogazici University (Savran et al. 2008). The shape and texture data were captured within less than a second per subject. The raw point cloud data of 105 subjects was processed for noise removal using median and Gaussian filters. It also offers manual marking of 24 facial landmarks like chin middle, eye corners for each facial scan. Researchers from the University of York (Heseltine et al. 2008) acquired 3-D facial data with help of two cameras using stereo based modeling technique. In addition, another camera mapped the captured 2-D texture information onto the 3-D mesh model. Beijing University of Technology gathered BJUT-3D database which contains 3-D mesh and color texture data of 500 subjects. The database is acquired with the Cyberware 3030 RGB/PS 3-D scanner, which stores texture along with shape data for each facial scan. In order to avoid occlusion due to hair, all subjects were asked to wear a swimming cap. Smoothing has been performed for the sake of removing noise from the captured data. Hole filling filters were operated on the facial scan data in order to deal with missing parts. Finally, after preprocessing, the facial region from the redundant parts has been separated and stored as a part of BJUT-3D database (Bao-Cai et al. 2009). The range images of 118 subjects along with corresponding 2-D color textures were acquired at the University of Texas at Austin using stereo based scanning system (Gupta et al. 2010a). Locations of 25 anthropometric fiducial landmarks are included along with the database (Gupta et al. 2010b) which is a unique feature of this database. UMB-DB 3-D face database consists of frontal facial scans of 143 subjects acquired using Minolta Vivid 900 laser scanner at university of Milano Bicocca (Colombo et al. 2011). This database is useful for the evaluation of algorithm performance under unconstrained scenarios since they have captured naturally occurring occlusions. The 3D-TEC dataset is a subset of the twins’ days dataset captured with Minolta Vivid 910 scanner during the twin’s days festival in Twinsburg, Ohio. It contains 3-D facial scans of 107 twins acquired with neutral and smiling expressions (Vijayan et al. 2011). The RGB color and depth data of 52 subjects is acquired using Microsoft’s Kinect camera (Shotton et al. 2013) at the EURECOM institute. The data was captured during two sessions separated with half a month’s difference. The manual annotation information is also available for six fiducial landmarks. The 3D expression variations are captured by various databases enlisted in Table 4. These databases can also be used for 3D expression recognition.

From Table 4, it is evident that the FSU, FRGC v 2.0, BU3D-FE, ND 2006 and Bosphorus are the databases which contain large variations amongst facial expressions and can be utilized for benchmarking of identification/verification performance of a novel algorithm.

Table 6 enlists various occlusions available in publicly accessible 3-D face databases. The most common intrinsic occlusions that affect the performance of face recognition systems are due to hair, randomly posed hands that cover facial region and eyeglasses. The occlusion invariant face recognition system should address the extrinsic occlusions like missing facial data due to hat, scarf, paper and eyeglasses. It should be noted that the Bosphorus dataset appears with all the three constraints. Thus it should be imperative for the validation and benchmarking of novel 3-D face recognition algorithms.

As indicated in Table 5, the CASIA and Bosphorus database captured stepwise pose variations. These databases are most challenging for the automatic landmark localization and normalization algorithms. The FRAV3D database contains severe Z-axis pose variations which also severely affect the performance of real time 3-D face recognition systems.

4 Expression invariant 3-D face recognition

3-D face recognition is comparatively robust to illumination and pose variations than 2-D image-based face recognition. However, owing to its non-rigid nature, the captured 3-D faces deform with expression variations which is a prominent challenge for recognition from the 3-D shape (Samir et al. 2006; Kin-Chung et al. 2007). An obvious approach to take on this challenge is to identify expression invariant 3-D human face features and use them for matching. Expression invariant face recognition approaches are broadly classified as holistic and region based methods. Figure 8 presents the classification of different approaches for expression invariant 3-D face recognition.

4.1 Local processing based expression invariant approaches

In recent years, there has been a growing interest in using local processing based approaches for addressing the expression variation problem in 3-D face recognition. The facial surface is divided into different local sub-regions. Instead of matching entire facial surfaces, face recognition algorithms are applied on smaller facial sub-regions. Generally, those sub-regions which have lesser effect of expression variations are selected for matching process. Figure 9 indicates the general steps used in local processing based approaches which address expression variation problem in 3-D face recognition.

After acquisition of a 3-D probe face, it is segmented to avoid outliers and registered with the gallery faces. Usually, local patches invariant to expression variations such as the area surrounding nose, forehead and eyes are extracted and matched with the corresponding local patches from gallery faces to find the closest match. Individual matching results are typically fused to achieve a better rank-1 recognition rate. For performance improvement, various fusion schemes are proposed in the literature. Major fusion schemes are briefly discussed below. More details on fusion schemes can be found in Spreeuwers (2011).

4.1.1 Fusion schemes

Sensor level fusion

Sensor level fusion combines the raw sensor data during initial stages of 3-D face recognition system, before feature extraction and matching. The raw sensor data includes the biometric information of the same person acquired with different sensors. Sensor level fusion is widely referred in multi-biometrics literature as the image or pixel level fusion. This fusion scheme belongs to the ‘fusion before matching’ class (Ross et al. 2006; Li and Jain 2011). For example, multiple calibrated 2-D cameras enable pose invariant face recognition by forming a 3-D model that can be rotated and translated. The calibration and compatibility of the 2-D cameras is a must for reconstruction of a reliable 3-D model. As this scheme achieves fusion at sensor level, it requires minimum computational overhead.

Feature level fusion Feature level fusion involves combination of features extracted from different facial representations of a single subject. Using the results of multiple feature extractors, the ultimate feature vector is derived. If the feature extraction algorithms have compatible outputs, template improvement or template update schemes are employed to obtain the resultant feature vector (Ross et al. 2006; Li and Jain 2011). Template improvement attains the removal of duplicate data in feature sets. On the other hand, template update modifies the resultant feature vector based on certain statistical operations such as mean, variance or standard deviation. This fusion scheme is among the ‘fusion before matching’ class. If the feature vectors participating in fusion have incompatible ranges and distributions, feature normalization is employed to modify the scale and location of feature vectors. Transformation techniques such as min-max normalization and median normalization are popularly used in the reported literature for feature normalization (Ross et al. 2006). This requires considerable computational overhead as registration and feature detection is to be done individually for all images.

Rank level fusion During identification experiment, ranks are assigned to gallery images according to decreasing sequence of confidence. The rank outputs of several subsystems are fused to construct a consensus rank for each individual probe image. The consensus of ranks provides eminent information to the decision-making module. The highest-rank, Borda-count and logistic regression are popular statistical methods to attain the rank level fusion. Rank level fusion is independent of normalization techniques (Ross et al. 2006; Li and Jain 2011) and belongs to the ‘fusion after matching’ class. This is computationally complex as rank of each matching gallery image is to be computed.

Decision level fusion The decisions of individual classifiers are combined in decision level fusion. Exemplary techniques are behavior knowledge space, AND and OR rules, Bayesian decision fusion, majority voting, Dempster–Shafer evidence-based method and weighted majority voting schemes (Ross et al. 2006; Li and Jain 2011). The behavior knowledge space-based technique computes a storage map during training that links the decision of individual classifiers to a single decision. The AND rule declares a match only when all classifiers agree with the specified template, whereas, OR rule affirms a match when at least one classifier agrees with the particular template. The Bayesian decision fusion selects a label with highest probabilistic discriminant function value. Majority voting based technique performs decision level fusion based on majority of classifiers agreeing with the specific template. If the face recognition system contains say, M classifiers, then at least \(g(g=(M/2)+1,(M{\hbox { mod }}2)=0\) and \(g=(M+1)/2,(M{\hbox { mod }}2)=1)\) classifiers must agree with the specified template to declare it as a match. Dempster–Shafer evidence based method adopts belief theory to obtain more flexible and robust results than the probability based Bayesian decision level fusion. This method is preferred when the complete knowledge base related to the problem is not available. Weighted majority voting technique is utilized when classifier efficiency in terms of accuracy is not similar with other classifiers. The classifier ‘C’ may give more accurate matching rates and hence assigned more weight in the majority voting scheme. This scheme belongs to ‘fusion after matching’ class.

Score level fusion It is the combination of the match scores of individual classifiers based on weighing schemes. Sum, product, max, min and median rules are utilized for performing score level fusion. The combination of matching scores from various classifiers provides a new feature vector. The efficiency of score level fusion depends upon similarity metrics, probability distributions and numerical ranges of individual classifiers. It is the most popular fusion technique for face recognition due to readily available match scores and reduced processing overheads. Score level fusion scheme belongs to the ‘fusion after matching’ class (Ross et al. 2006; Li and Jain 2011). Score level fusion is computationally heaviest as matching scores given by multiple classifiers are to be computed for all the gallery images and fused for the final decision.

4.1.2 Approaches

The first systematic study of 3-D face recognition based on local processing was carried out by Chang et al. (2006). After segmentation and pose normalization, the circular regions in the vicinity of the nose tip are selected and registered with a generic 3-D face model. Three regions namely: (i) entire nose region, (ii) interior nose region and (iii) circular region around the nose tip are extracted as small probe surfaces and matched with each region in gallery using the ICP algorithm. Score level fusion is performed based on sum, product and minimum rules. The neutral probe to neutral gallery identification rate is 97.1 % and expressive probe to neutral gallery identification rate is 87.1 %. Although they have achieved 97.1 % identification rate, the probe images in the real life scenario may comprise of expressive faces. It is stated that the performance may increase with extra local patches in the fusion process at the cost of increased computations.

Kin-Chung et al. (2007) proposed linear discriminant analysis (LDA) and linear support vector machine (LSVM) fusion based 3-D face recognition system by capturing local characteristics from multiple regions as summation invariants. From a single frontal facial image, ten sub-regions and subsequent feature vectors are extracted. Further, summation invariants are computed with the moving frames (Fels and Olver 1998) technique. LDA and LSVM based linearly optimum fusion rules provide improved performance. The performance of reported approach decreases with increasing severity of expressions.

Faltemier et al. (2008) used 28 best-performing sub-regions from a facial surface for 3-D face recognition. For a probe image, total 38 sub-regions are extracted; a few of them overlap with each other. Each sub-region from a probe image is matched with a gallery image region by employing the ICP algorithm. Highest rank-one recognition rate achieved by individual region matching is 90.2 % which promoted the use of the fusion strategy. The modified Borda-count fusion method attains overall 97.2 % rank-one recognition rate. This algorithm performed well despite incomplete facial information in some of the range images from the FRGC v 2.0 database.

Boehnen et al. (2009) proposed an alternative method to the ICP based matching. Instead of comparing whole 3-D point clouds with each other which is time consuming, each face is represented as a 3-D signature template. The comparison time for such templates is much less than ICP based approaches. Eight reference regions are described for signature matching. A novel match sum threshold fusion scheme is proposed which performs better than the modified Borda-count technique. The authors have mentioned that the performance can be improved upon addition of regions with variability of shapes and density. However, identification and separation of such patches, their processing and fusion for final decision requires considerable computational power.

Queirolo et al. (2010) developed a new technique for range image registration based on Simulated Annealing. Subsequently, the elliptical and circular area in the vicinity of the nose, upper head and the entire facial region are extracted from the facial surface. To match the range images, surface interpenetration measure (SIM) is utilized as a similarity metric with sum rule for fusion. If two range images present good interpenetration, it means that, they are registered correctly and have high SIM values. This approach is quite faster than the techniques which utilize the ICP based registration with MSE as a similarity metric.

Spreeuwers (2011) proposed an intrinsic coordinate system defined by the nose tip, perpendicular symmetry plane passing through the nose and nose bridge slope for face registration. Thirty overlapping sub-regions are defined for 3-D face recognition. After matching probe and gallery images, the majority voting scheme is opted for performing decision level fusion. This approach performs fastest computation of similarity matrix on FRGC v 2.0 database using ‘all versus all’ protocol for the verification experiments. Each local classifier has different parameters in order to further improve the performance of the proposed system. In addition, the fusion is performed using techniques based on majority voting approach. More advanced fusion and matching techniques may yield enhanced performance.

Li and Da (2012) used local processing based approach for matching 3-D faces. Initially, the facial surface is split into six regions which are forehead, left mouth, right mouth, nose, left cheek and right cheek. Deformation mapping based adaptive region selection scheme is proposed for automatic region selection. The partial central profile is extracted and compared against partial central profiles of the other gallery faces to form an initial rejection classifier. Sub-regions are matched using the ICP algorithm. Subsequently, weighted sum fusion rule method is employed for overall recognition performance improvement. The approach can fail when the nose tip localization is incorrect which causes improper normalization leading to inaccurate matching.

Lei et al. (2013) divided 3-D facial surface into nose, eyes-forehead and mouth regions. By assuming the nose region as rigid, eyes-forehead region as semi-rigid and mouth as non-rigid regions, only rigid along with semi-rigid regions are considered to eradicate the effect of facial expressions. Four fundamental geometric features namely: A: Angle between the two predefined random points and the nose tip, C: Radius of a circle between the nose tip and two predefined random points, N: Angle of a line joining predefined points and Z-axis, D: Length of two predefined points on a facial surface are extracted and subsequently transformed into a region based histogram descriptor. A machine learning based SVM technique is employed for classification. The results indicate that feature level fusion performs better than score level fusion for this experimental scenario. Authors also recommend the fusion of low level features of the rigid and semi-rigid facial parts. The local region segmentation is performed with a fixed size binary mask which may not be the best fit for all facial scans. This may affect the final matching scores and recognition rates.

Tang et al. (2013) proposed a local binary pattern (LBP) based 3-D face division scheme. The facial surface is divided into 29 blocks which is denoted as a sparse division and another 59 blocks are denoted by a dense division. They have utilized the nearest neighbor based classifier for 3-D face recognition.

Li et al. (2014) computed the normal vectors to a range image using local plane fitting technique. Subsequently, the normal component images were extracted for each x, y and z axis. Each normal component image was subdivided into local patches of fixed size. The local patches were subjected to multi-scale uniform LBP to obtain multi-scale multi component local normal pattern (MSMC-LNP) histograms. Specific weights have been assigned to different patches depending upon their position in a face to obtain an expression robust feature vector. The method was tested using three scales and respective normal components. Weighted sparse representation (W-SRC) classifier has been employed for final classification. The range image registration is performed with the ICP algorithm which is computationally heavy. The overall algorithm is computationally heavy.

Regional Bounding SpheRe descriptors (RBSR) were presented for efficient feature extraction on 3-D facial surfaces (Ming 2015). Initially, the facial surface is divided into local patches by using shape band algorithm. The local patches involve the forehead, mouth, left cheek, right cheek, nose, left eye and right eye regions. The RBSR descriptors extracted from the local patches are transformed with prior weightage into the spherical domain. The regional and global regression mapping (RGRM) captured the manifold configuration of pre-weighted RBSR feature vectors. This method is heavily dependent upon robust, consistent and accurate detection of the local patches.

Different region based approaches reported so far are summarized in Table 7.

As indicated in Table 7, Spreeuwers (2011) has achieved the highest rank-one recognition rate on FRGC v 2.0 database. This approach requires significantly less time than the other state-of-the-art methods for registration and matching.

4.2 Holistic approaches

Unlike local processing based techniques, the whole facial surface is considered simultaneously for face recognition in holistic approaches. The various methods using holistic approaches are broadly classified as (i) curvature based, (ii) morphable model based and (iii) frequency domain approaches.

4.2.1 Curvature based approaches

A few holistic approaches extract curvature information associated with the facial shape. General steps involved in curvature based approach are presented in Fig. 10.

The curvature information is represented as level or radial curves. Level curves are extracted at distance ‘X’ along the depth direction from some reference point. In most of the approaches, nose tip is the reference point. Figure 11a depicts level curves marked on a range image. Profile information is recorded by rotating a vertical plane which makes angle ‘Y’ with the reference plane to derive radial curves. The reference plane passes through a landmark such as nose-tip. Figure 11b illustrates radial curves marked on 3-D facial surface. The extracted curves represent the facial surface profile and information in a compact form and are further converted into a feature template for matching templates of gallery faces. Since the facial surface is represented by a very compact representation, these approaches are much appreciated due to less computational requirements during feature computation and matching.

Samir et al. (2006) represented the facial surface as a height map and extracted the level curves. Geodesic lengths of facial curves are used as a similarity metric for matching amongst different curves using the nearest neighbor classifier. This approach is invariant to range image acquisition noise.

Haar and Veltkamp (2009) used profile and contour (level) curves for face matching. Profile curves are collected starting from the nose tip. To compare two profile curves, sample values were examined along the curves. The contour curves are obtained by combining samples in all profile curves with the same depth value. The number of profiles and contours-based distance between the corresponding samples for two faces are used as dissimilarity measure. This technique requires exact nose-tip location for initial pose normalization. Good recognition rate is achieved subject to the accurate localization of the nose-tip.

Ballihi et al. (2012) used the level sets/circular curves and streamlines/radial curves as Euclidean distance functions of the 3-D face. The feature extraction is performed using the machine learning based Adaboost method (Freund et al. 1999). Pair-wise comparison of facial curves is accomplished using differential geometry tools. Each face is characterized using a highly compact 3-D face signature which is effective in terms of storage and retrieval cost. This yields good recognition rates at reasonably less computational cost.

Drira et al. (2013) proposed a 3-D face recognition method based on curvature analysis of a 3-D facial surface. The Riemannian framework is used for analyzing 3-D facial surfaces. The radial curves are extracted with nose tip as the center. They have compared radial curves from a probe with gallery faces with the help of elastic shape analysis framework for curves (Srivastava et al. 2011) of 3-D facial surfaces. The authors have manually located the nose tip for non-frontal facial surfaces in the Bosphorus and GavabDB datasets. This technique also achieves good recognition rates subject to accurate localization of nose-tip by a human operator.

Lei et al. (2014) extracted a set of radial curves from upper part of a face due to its semi-rigid nature. A novel feature extraction method called angular radial signatures (ARS) is proposed which represent a 3-D face into a set of mapped 1-D feature vectors. The 1-D feature vectors are found to be very close to each other in the feature vector space. Thus, Kernal principal component analysis (KPCA) is employed to address the linear inseparability issue. The KPCA transforms 1-D ARS features to a high-dimensional feature space. Subsequently, a SVM classifier is trained with a set of KPCA feature vectors. For a probe face, its corresponding KPCA feature vector is compared against the learned SVM model to find the closest match. This technique is computationally complex due to the use of SVM and required training.

Table 8 presents the major curvature based approaches published and discussed so far along with their benchmarking.

As indicated in Table 8, the Riemannian framework based approach (Drira et al. 2013) is found to be a robust curve based 3-D face recognition technique as it achieves consistent performance for identification as well as verification experiments on FRGC v 2.0 dataset, but at a considerable computational cost.

4.2.2 Morphable model-based approaches

The morphable models vary their shape in accordance with an unknown facial shape. They are also known as deformable models. A general methodology applicable to the morphable model-based approaches is depicted in Fig. 12.

In order to compare an input probe face with gallery faces, initially a probe is registered with the morphable model to ensure the unified coordinate system. After this alignment, point-to-point feature correspondence is established between a probe and the morphable model. The 3-D morphable model is fitted to the probe. The deformation parameters/coefficients obtained after the completion of fitting procedure are encoded into a feature vector or represented in some suitable form to achieve compact template representation. A morphable model is built by training samples from a train dataset. Once the morphable model is constructed, it can be used for matching probes having any alignment, pose, scale or even occlusion. Major approaches that use the morphable models are enlisted below in Table 9 with their performance on various databases.

Kakadiaris et al. (2007) proposed a fully automatic expression invariant 3-D face recognition system based on the morphable models. An annotated face model (AFM) is built using the average 3-D facial mesh model. After initial alignment, the AFM fitting gives geometric characteristics of probe face based on elastically deformable model scheme by Metaxas and Kakadiaris (2002). A difference between probe scan and the AFM is transformed into a 2-D-geometry image which constitutes encoded information on a 2-D grid sampled from the deformed AFM. Consequently, the geometry image is transformed to a normal map which distributes the information evenly amongst three components. The Haar wavelet transform is applied on a normal map and geometry images. The pyramid transform is employed for the geometry images. The \(L_{1}\) distance and structural similarity index measure (SSIM) are used as performance metrics. The transformation to a spin image is computationally heavy but it facilitates fast matching in terms of system-time (Spreeuwers 2011).

Xiaoguang and Jain (2008) proposed an algorithm to match range images having expression variations using 3-D face models with neutral expression. A collection of 94 landmarks is utilized to study the 3-D surface deformation due to specific expressions. The mapping is then established between the collection of landmarks having neutral expression and a 3-D neutral model. A comparable deformation is incorporated onto a 3-D neutral model by applying thin plate spline (TPS)-based mapping. Every probe scan associated with a deformable model is matched against gallery faces based on the cost function minimization algorithm. The formation of the user specific deformable model for each person in the gallery is computationally complex.

Mpiperis et al. (2008) established the combination of elastically deformable model framework and Bilinear models for jointly addressing the problems of identity invariant 3-D face expression recognition and expression invariant 3-D face recognition. Based on the elastically deformable models, bilinear model coefficients are computed. During a test phase, elastically deformable model is fitted to both probe and gallery face sets to associate point to point correspondences. Euclidean distance is employed as a metric for comparison of probe and gallery surfaces. In order to build a bilinear model, a large annotated training dataset is needed. The recognition performance using bilinear models hampers with improper localization of facial landmarks.

Amberg et al. (2008) introduced a method for expression invariant face recognition by fitting identity and expression separated 3-D morphable models to a 3-D facial probe shape. A statistical PCA based 3-D morphable model is trained with a combination of 270 individual neutral and 135 expressive face components. A variant of the non-rigid ICP is employed as a fitting algorithm. A 3-D morphable model is fit to the point correspondences using systematic Jacobian and Gauss–Newton Hessian approximations. Angular Mahalanobis distance is used as dissimilarity metric. This approach suffers from a large processing time (40 s per probe).

Al-Osaimi et al. (2009) employed the PCA to learn and model expression deformations. The PCA eigenspace technique is employed because it is computationally effective than other closed form solutions. The generic PCA deformation model is built using non-neutral faces of distinct persons. The expression deformation templates are used to eliminate (morph out) the expressions from non-neutral face scan. The ICP based matching increased the computational time complexity.