Abstract

The aim of this paper is to study self-propulsion at low Reynolds number using tools from control theory. More precisely, we first address the controllability problem: “Given two arbitrary positions, do there exist ‘controls’ such that the body can swim from one position to the other, with zero initial and final deformations?” We consider a spherical object surrounded by a viscous incompressible fluid filling the rest of three-dimensional space. The object undergoes radial and axi-symmetric deformations in order to propel itself through the fluid. Since we assume that the motion takes place at low Reynolds number, the fluid is governed by the Stokes equations. In this case, the governing equations can be reduced to a finite-dimensional control system. By combining perturbation arguments and Lie bracket computations, we establish the controllability property. Finally, we study the time optimal control problem for a simplified system. We derive the necessary optimality conditions using the Pontryagin maximum principle. In several particular cases we are able to compute the time optimal control explicitly and to investigate how the optimal solutions vary with the number of inputs.


1 Introduction

Understanding the motion of solids (such as aquatic microorganisms and micro- or nano-swimming robots) is a challenging issue, since the propulsion mechanism must be adapted to very low Reynolds numbers, i.e., it must rely essentially on friction forces, with no role for inertia (which is essential for the swimming mechanism of macroscopic objects, as in fish-like swimming). Among the early contributions to the modeling and analysis of these phenomena, we mention the seminal works by Taylor [21], Lighthill [14, 15], and Childress [6]. In several more recent papers (see, for instance, Shapere and Wilczek [20], San Martin, Takahashi and Tucsnak [19], Alouges, DeSimone and Lefebvre [2], Alouges, DeSimone and Heltai [3], and Lauga and Michelin [16]), self-propulsion at low Reynolds number has been investigated using tools from optimization and control theory.

The aim of this work is to give a rigorous mathematical approach to the analysis and control of a system modeling the low Reynolds number swimming of a spherical object. To propel itself through the fluid, the swimmer is assumed to perform radial deformations. Roughly speaking, the main problems addressed in this work can be stated as follows:

1. Controllability: given an initial and a final position, prove the existence of a sequence of deformations steering the mass center of the swimmer from the first position to the second, such that at the beginning and at the end of the process the body is a unit ball.

2. Time optimal control: among the sequences of deformations of given amplitude steering the mass center from one position to another, determine a sequence accomplishing this task in minimal time.

As far as we know, the controllability-based approach to swimming at low Reynolds numbers has been used in [19] and [2]. In [19] the swimmer is a ball and the control is a tangential velocity field on the boundary (so that the body does not deform), whereas in [2] the authors consider a three-sphere swimmer. The main novelty of our work in this direction is that we consider a one-piece (connected) body which advances by undergoing appropriate deformations. As far as we know, the associated time optimal control problems have not been addressed in the literature, although related optimal control problems, with efficiency as the cost function, have been investigated (see, for instance, [3, 16, 20]).

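We introduce below some notation which will be used throughout this work. First, we denote by \(S_0\) the unit ball in \(\mathbb{R}^3\) and by \(S(t)\) the domain occupied by the swimmer at instant \(t\). The corresponding density field is denoted by \(\rho\), so that, for every positive \(t\), \(\rho(t,\cdot)\) maps \(S(t)\) into \((0,\infty)\). The fluid domain is denoted by \(\varOmega (t)=\mathbb{R}^{3}\backslash\overline{S(t)}\), see Fig. 1. We assume that the motion takes place at zero Reynolds number so that, at each time \(t\ge0\), the velocity field \(\boldsymbol{u}(t,\cdot):\varOmega(t)\to\mathbb{R}^3\) and the corresponding pressure field \(p(t,\cdot):\varOmega(t)\to\mathbb{R}\) of the fluid satisfy the Stokes equations:

$$ -\mu\Delta\boldsymbol{u}+\nabla p=0,\qquad \operatorname{div}\boldsymbol{u}=0 \qquad \text{in } \varOmega(t), \tag{1.1} $$

where \(\mu\in\mathbb{R}^{*}_{+}\) is the viscosity. We assume that the fluid is at rest at infinity and that it adheres to the swimmer, so that we impose the conditions

$$ \lim_{|\mathbf{x}|\to\infty}\boldsymbol{u}(t,\mathbf{x})=0, \tag{1.2} $$

$$ \boldsymbol{u}(t,\mathbf{x})=\boldsymbol{v}_S(t,\mathbf{x}) \qquad \bigl(\mathbf{x}\in\partial S(t)\bigr), \tag{1.3} $$

where \(\boldsymbol{v}_S\) is the velocity of the swimmer. The well-posedness results needed for the system (1.1)–(1.3) are recalled in Sect. 2.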

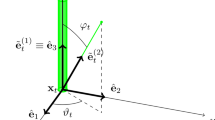

We assume that the motion of the swimmer can be decomposed into a rigid part, which is unknown, and a motion that affects neither its mass center nor its global orientation, which will be the input of our problem. The rigid part of the motion is characterized, at each instant \(t\), by the position \(h(t)\) of the mass center and a rotation matrix \(R(t)\). More precisely, the motion of the swimmer is described by a map \(X:[0,\infty)\times S_0\to\mathbb{R}^3\) which can be written

where \(X^\star:[0,\infty)\times S_0\to\mathbb{R}^3\) is the input function (see Fig. 2). The domain \(S(t)\) currently occupied by the swimmer is thus given by

Since swimming is generally a periodic action, we constrain the input \(X^\star\) to satisfy

for some \(T>0\). The corresponding Eulerian velocity field \(\boldsymbol{v}_S\) of the swimmer can thus be written

where \(R^{\top}(t)\) stands for the transpose of \(R(t)\) and \(\boldsymbol{\omega}\) is the angular velocity vector, defined by \(\dot{R}=R^{\top}A(\boldsymbol{\omega})\), with

Using (1.5), condition (1.3) becomes

To be consistent with the assumption of low Reynolds number flow, we assume that at each instant \(t\) the swimmer is in equilibrium under the action of the forces exerted by the fluid. This means that we have

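where

$$ \sigma(\boldsymbol{u},p)=\mu\bigl(\nabla\boldsymbol{u}+\nabla\boldsymbol{u}^{\top}\bigr)-p\,I_3 \tag{1.8} $$

is the classical Cauchy stress tensor in a viscous incompressible fluid.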

The full system under investigation, with unknowns \(\boldsymbol{u}\), \(p\), \(\sigma\), \(h\) and \(R\) and given input \(X^\star\), is formed by equations (1.1), (1.2), (1.6), (1.7) and (1.8). Using (1.8), the unknown \(\sigma\) can be substituted into (1.7), so that it suffices to consider the unknowns \(\boldsymbol{u}\), \(p\), \(h\) and \(R\). Moreover, by solving the stationary Stokes equations (1.1) for each positive \(t\), the full system reduces to a system of ordinary differential equations for \(h\) and \(R\) (see Sect. 3).

The main theoretical results concern controllability. They imply that for any \(h_0, h_1\in\mathbb{R}^3\) there exist \(T>0\) and an input \(X^\star\) satisfying (1.4) such that the mass center of the swimmer is steered from \(h_0\) to \(h_1\) in time \(T\), that is, we have

Special attention is devoted to the case in which \(X^\star\) is axi-symmetric, so that the trajectory of the mass center is a straight line. In this situation we provide a simplified model and apply Pontryagin’s principle to obtain an explicit solution of the associated time optimal control problem.

2 Some Background on the Exterior Stokes Problem

In this section we first recall some known results on the exterior (i.e., posed in \(\mathbb{R}^3\setminus S\), where \(S\) is a bounded obstacle) Dirichlet boundary value problem for the stationary Stokes system. We next introduce the force operator, which associates to a given Dirichlet condition on the boundary of \(S\) the total force exerted by the fluid on that obstacle. The main results of this section concern the smoothness of this operator with respect to the shape of the obstacle. In order to apply standard control theoretic results, we need this dependence to be quite regular, namely \(C^\infty\). Note that the same problem, with more general deformations and less regular dependence on the shape, has been considered in Dal Maso, DeSimone and Morandotti [8]. Since the shape-smoothness results needed in this work do not seem to follow directly from [8], we give below a quick derivation of them, using a classical approach introduced in Murat and Simon [17] (see also [4]).

Throughout this section, \(S\) denotes a bounded domain of \(\mathbb{R}^3\) with locally Lipschitz boundary, and we set \(\varOmega =\mathbb{R}^{3}\setminus\overline{S}\). To state some well-posedness results for the exterior Stokes problem, we recall the definitions of some function spaces, borrowed from [9].

Definition 2.1

The homogeneous Sobolev space \(D_{0}^{1,2}(\varOmega )\) is the closure of \(C_{0}^{\infty}(\varOmega )\) with respect to the norm \(|\cdot|_{1,2}\) defined by

The homogeneous Sobolev space \(D^{1,2}(\varOmega)\) is defined by

We note that \(D_{0}^{1,2}(\varOmega )\subset D^{1,2}(\varOmega )\) and that \(|\cdot|_{1,2}\) is a seminorm on \(D^{1,2}(\varOmega)\). Moreover, we denote by \(D_{0}^{-1,2}(\varOmega )\) the completion of \(C_{0}^{\infty}(\varOmega )\) with respect to the norm

The far-field condition \(\lim_{|\mathbf{x}|\to\infty}\boldsymbol{u}(\mathbf{x})=0\) should be understood in the sense that

where \((r,\theta,\phi)\in\mathbb{R}_+\times[0,\pi]\times[0,2\pi)\) denotes the spherical coordinates (see Fig. 3).

At this stage we need a well-posedness result for the exterior Stokes system. This result is a direct consequence of Galdi [9, Chap. 5, Theorem 2.1, p. 251] and of a lifting result for the divergence operator, sometimes called Bogovski’s lemma, see, for instance, [9, Chap. 3, Theorem 3.4, p. 142].

Theorem 2.2

Let \(\boldsymbol{f}\in D_{0}^{-1,2}(\varOmega )\), \(g\in L^2(\varOmega)\) and \(\boldsymbol{v}_{0}\in H^{\frac{1}{2}}(\partial \varOmega )\). Then there exists a unique weak solution \((\boldsymbol{v},p)\in D^{1,2}(\varOmega)\times L^2(\varOmega)\) of:

such that:

We next define an operator playing a central role in the rest of this work. More precisely, we introduce the force operator, associating to each Dirichlet boundary condition \(\boldsymbol{v}_0\) in (2.2) the resulting force exerted by the fluid on \(S\).

Let \(\mathbf{e}_z\) be the unit vector in the direction \(Oz\) and let \((\boldsymbol{u}_z,q_z)\in D^{1,2}(\varOmega)\times L^2(\varOmega)\) be the weak solution of:

An important role in this work is played by the bounded linear operator \(\mathbb{F}(S):H^{\frac{1}{2}}(\partial S)\to\mathbb{R}\) defined by

where \((\boldsymbol{v},p)\) is the solution of (2.2) with \(\boldsymbol{f}=0\), \(g=0\) and

is the Cauchy stress tensor in the fluid. Note that, for \((\boldsymbol{v},p)\) smooth enough, we have

where \(\mathbf{n}\) is the unit normal vector field to \(\partial S\), oriented towards the exterior of \(S\). In other words, for \((\boldsymbol{v},p)\) smooth enough, \(\mathbb{F}(S)(\boldsymbol{v}_{0})\) is the component along \(Oz\) of the force exerted by the fluid on the solid \(S\) due to the velocity field \(\boldsymbol{v}_0\) at the interface.

Remark 2.3

It can be easily checked that \(\mathbb{F}\) is essentially invariant under translations of \(S\). More precisely, for every \(\boldsymbol{h}\in\mathbb{R}^3\) and every \(\boldsymbol{v}_{0}\in H^{\frac{1}{2}}(\partial(S+\boldsymbol{h}))\), we have:

with \(\tilde{\boldsymbol{v}_{0}}\in H^{\frac{1}{2}}(\partial S)\) defined, for every \(\mathbf{x}\in\partial S\), by \(\tilde{\boldsymbol{v}_{0}}(\mathbf{x})=\boldsymbol{v}_{0}(\mathbf {x}+\boldsymbol{h})\).

In the rest of this section we study the regularity of \(\mathbb{F}\) with respect to the shape of \(S\).

We first recall a classical result on the robustness of the locally Lipschitz property of the boundary \(\partial S\) under small geometric perturbations (see, for instance, Bello, Fernandez-Cara, Lemoine and Simon [4]).

Lemma 2.4

There exists a positive constant \(c(S)\) such that for every \(\theta\in W^{1,\infty}(\mathbb{R}^3,\mathbb{R}^3)\) with \(\|\theta\|_{W^{1,\infty}(\mathbb{R}^{3},\mathbb{R}^{3})}<c(S)\), the set \((I+\theta)(S)\) is an open bounded domain with locally Lipschitz boundary in \(\mathbb{R}^3\).

We then define the set of deformations of \(S\):

Then, for every \(\theta\in\Theta(S)\), the mapping \(I+\theta\) is a diffeomorphism of \(\mathbb{R}^3\), \((I+\theta)(S)\) is an open bounded domain of \(\mathbb{R}^3\) with locally Lipschitz boundary and \((I+\theta)(\varOmega )=\mathbb{R}^{3}\setminus\overline{(I+\theta)(S)}\). We next give a differentiability result with respect to the shape. We skip its proof since it can be obtained by a slight variation of the proof of Theorem 6 in [4], combined with Theorem 2.2.

Theorem 2.5

Let \(\boldsymbol{v}_{0}\in H^{\frac{1}{2}}(\partial \varOmega )\). The map associating to every \(\tau\in\Theta(S)\) the pair

where \((\boldsymbol{v}(\tau),p(\tau))\) is the solution of

is infinitely differentiable in a neighborhood of 0.

We are now in a position to prove the smoothness of the real-valued function \(\mathbb{F}\), defined in (2.4), with respect to the shape of the solid.

Theorem 2.6

For every \(\boldsymbol{v}_{0}\in H^{\frac{1}{2}}(\partial \varOmega )\), the real-valued function defined on \(\Theta(S)\) by

is of class \(C^\infty\) in a neighborhood of 0.

Proof

For \(\tau\in\Theta(S)\), let \((\boldsymbol{v}(\tau),p(\tau))\) be the solution of (2.7) and let \((\boldsymbol{u}_z(\tau),q_z(\tau))\in D^{1,2}((I+\tau)(\varOmega))\times L^2((I+\tau)(\varOmega))\) satisfy:

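We define \((\boldsymbol{U}_z(\tau),Q_z(\tau))=(\boldsymbol{u}_z(\tau),q_z(\tau))\circ(I+\tau)\) and \((\boldsymbol{V}(\tau),P(\tau))=(\boldsymbol{v}(\tau),p(\tau))\circ(I+\tau)\). Then, using (2.4) and the change of variables \(\mathbf{x}=(I+\tau)\mathbf{y}\), it follows that: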

where \(f\) is given by

Using Theorem 2.5, one can easily check that the mapping \(\tau\mapsto f(\tau)\circ(I+\tau)\) is differentiable at 0, with values in \(L^1(\varOmega)\). Hence, according to Lemma 12 in [4] (see also [11, Corollaire 5.2.5]), the mapping \(\tau\mapsto \mathbb{F}((I+\tau)(S))(\boldsymbol{v}_{0}\circ (I+\tau)^{-1}) = \int_{\varOmega }f(\tau)\circ(I+\tau)\, \mathrm{ Jac}(I+\tau)\, \mathrm{d}\mathbf{y}\) is also differentiable at 0. In addition, Theorem 2.5 ensures that the mappings \(\tau\mapsto(\boldsymbol{U}_z(\tau),Q_z(\tau))\) and \(\tau\mapsto(\boldsymbol{V}(\tau),P(\tau))\) are \(C^\infty\) in a neighborhood of 0. It follows that \(\tau\mapsto \mathbb{F}((I+\tau)(S))(\boldsymbol{v}_{0}\circ (I+\tau)^{-1})\) is also \(C^\infty\) in a neighborhood of 0. □

3 The Case of Radial Deformations

In this section and in the rest of this work, we assume that at rest the swimmer’s body occupies the unit ball \(S_0\) of \(\mathbb{R}^3\) and that its mass density is constant (\(\rho_0=1\)).

Moreover, we consider only radial axi-symmetric deformations \(X^\star(t,\cdot)\) of the unit ball \(S_0\). Using appropriate spherical coordinates \((r,\theta,\phi)\in\mathbb{R}_+\times[0,\pi]\times[0,2\pi)\) (see Fig. 3), this means that \(X^\star\) can be written in the form

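where \(r^\star\in C^\infty([0,T]\times[-1,1],\mathbb{R})\) and \(\theta\) is the function mapping \(\mathbf{x}\in\mathbb{R}^3\) to the angle \(\theta(\mathbf{x})\in[0,\pi]\) (see Fig. 3). We denote by \(\mathrm{Jac}\,X^\star\) the Jacobian of the mapping \(X^\star\) and by \(\rho^\star\) the mass density field on \(S^\star(t)=X^\star(t,\cdot)(S_0)\), which is defined by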

Let \((P_i)_{i\ge0}\) be the Legendre polynomials, which are known to form an orthogonal basis of \(L^2([-1,1])\). The result below shows that, under mild assumptions on \(r^\star\), the deformations defined by (3.1) satisfy the so-called self-propelling conditions. We state this result without proof.
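The Legendre facts used in this section and in the proof of Theorem 4.1 (orthogonality, with \(\int_{-1}^{1}P_i^2=2/(2i+1)\), and the bound \(|P_i|\le1\) on \([-1,1]\)) are easy to check numerically. The sketch below also shows one way to make a profile \(r^\star(t,\cdot)\) orthogonal to \(\{P_0,P_1\}\), as required in Proposition 3.1, by subtracting its \(L^2\) projection. It works only with values at quadrature nodes, does not check condition (3.3), and the helper names are ours (hypothetical).

```python
import numpy as np
from numpy.polynomial import legendre as leg


def legendre_values(n_max, x):
    """Array V with V[i] = P_i(x) for i = 0, ..., n_max (P_i: Legendre polynomials)."""
    return np.array([leg.legval(x, [0.0] * i + [1.0]) for i in range(n_max + 1)])


# Gauss-Legendre rule with 20 nodes: exact for polynomials of degree <= 39 on [-1, 1].
nodes, weights = leg.leggauss(20)

# Gram matrix gram[i, j] = int_{-1}^{1} P_i P_j; expected: diag(2 / (2 i + 1)).
V = legendre_values(6, nodes)
gram = (V * weights) @ V.T


def remove_p0_p1(r_at_nodes):
    """Subtract from r its L^2([-1, 1]) projection onto span{P_0, P_1} (values at the nodes)."""
    P = legendre_values(1, nodes)
    coeffs = ((P * weights) @ r_at_nodes) * np.array([0.5, 1.5])  # (2i+1)/2 * <r, P_i>
    return r_at_nodes - coeffs @ P


# Example profile r(zeta) = 0.1 + 0.3 zeta + 0.2 zeta^2, made orthogonal to P_0 and P_1.
r_perp = remove_p0_p1(0.1 + 0.3 * nodes + 0.2 * nodes**2)

# Bound |P_i| <= 1 on [-1, 1], checked on a fine grid.
zeta = np.linspace(-1.0, 1.0, 2001)
max_abs = np.abs(legendre_values(6, zeta)).max()
```

Here the quadratic part of the example profile survives as a multiple of \(P_2\), while its \(P_0\) and \(P_1\) components are removed.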

Proposition 3.1

Assume that, for every \(t\in[0,T]\), \(r^\star(t,\cdot)\) is orthogonal to \(\{P_0,P_1\}\) (in the \(L^2\) sense) and that

Then, the following properties hold.

- For every \(t\in[0,T]\), the mapping \(X^\star(t,\cdot)\) is a diffeomorphism from \(S_0\) onto \(X^\star(t,\cdot)(S_0)\) and

$$ 0 < \mathrm{Jac}\, X^\star(t,\mathbf{y})\quad \bigl(t\in[0,T],\ \mathbf {y}\in S_0 \bigr). \tag{3.4} $$

Moreover, \(X^\star(t,\cdot)(S_0)\) is a bounded domain with Lipschitz boundary for every \(t\in[0,T]\).

- The mass center is fixed, i.e.,

$$ 0 = \int_{S^\star(t)}\rho^\star\bigl(t, \mathbf{x}^\star\bigr)\mathbf{x}^\star\, \mathrm{d} \mathbf{x}^\star\quad \bigl(t\in[0,T] \bigr). \tag{3.5} $$

- The angular momentum is constant, i.e.,

$$ 0 = \int_{S^\star(t)}\rho^\star\bigl(t, \mathbf{x}^\star\bigr) \dot{X}^\star\bigl(t,{X^\star(t, \cdot)}^{-1}\bigl(\mathbf{x}^\star\bigr)\bigr)\times \mathbf{x}^\star\, \mathrm{d}\mathbf{x}^\star\quad \bigl(t \in[0,T] \bigr). \tag{3.6} $$

Formulas (3.5) and (3.6) are the self-propelling conditions, which are natural requirements when modeling swimming mathematically (at least for large Reynolds numbers). In the precise control problem considered in this work (i.e., at low Reynolds numbers and with \(X^\star\) time periodic), the set of positions reachable with general axi-symmetric deformations \(X^\star\) coincides with the set reachable with the smaller input space corresponding to the assumptions of Proposition 3.1. This means that, in the particular case considered in this work, the self-propelling conditions play no role, as shown in Proposition 3.4 below.

We next show that, under the assumptions of Proposition 3.1, the considered system reduces to a quite simple system of ordinary differential equations. Indeed, using the notation introduced in (2.4) and assuming that the deformation \(X^\star\) is defined by (3.1), we can rewrite problem (1.1)–(1.3), (1.7) in the form:

Using the fact that, for every \(t\in[0,T]\), \(\mathbb{F} (X^{\star}(t,\cdot)(S_{0})+h(t)\mathbf{e}_{z} )\) is a linear map from \(H^{\frac{1}{2}} (\partial (X^{\star}(t,\cdot)(S_0)+h(t)\mathbf{e}_{z}) )\) to \(\mathbb{R}\), together with Remark 2.3, (3.7) becomes:

We also need a result, often used in the literature, asserting that the drag acting on a solid moving at constant velocity in a Stokes fluid does not vanish. For the sake of completeness, we provide a short proof.

Lemma 3.2

For every bounded open domain \(S\subset\mathbb{R}^3\) with Lipschitz boundary we have:

Proof

Let \((\boldsymbol{u}_z,q_z)\) be the solution of the exterior homogeneous Stokes problem with boundary condition \(\mathbf{e}_z\) (see (2.3)). Using Green’s formula, we have:

We argue by contradiction. Assuming that \(\mathbb{F}(S)(\mathbf{e}_{z})=0\), the above identity shows that \(\nabla \boldsymbol {u}_{z} +\nabla \boldsymbol {u}_{z}^{T}\), i.e., twice the symmetric strain rate tensor, vanishes. It is then well known that \(\boldsymbol{u}_z(\mathbf{x})=\boldsymbol{a}+\boldsymbol{b}\times\mathbf{x}\), with \(\boldsymbol{a},\boldsymbol{b}\in\mathbb{R}^3\). Since \(\nabla\boldsymbol{u}_{z}\in L^{2}(\mathbb{R}^{3}\setminus\overline{S})\), we obtain \(\boldsymbol{b}=0\). Since \(\boldsymbol{u}_z\) decays to zero far from \(S\) (in the sense of (2.1)), we deduce that \(\boldsymbol{a}=0\). This contradicts the boundary condition \(\boldsymbol{u}_z=\mathbf{e}_z\) on \(\partial S\). Consequently \(\mathbb{F}(S)(\mathbf{e}_{z})\neq0\). □
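For the unit ball, Lemma 3.2 can be made quantitative with the classical Stokes drag formula: a rigid sphere of radius \(a\) translating with speed \(U\) through an unbounded Stokes fluid at rest at infinity experiences a drag of magnitude \(6\pi\mu aU\). Hence \(|\mathbb{F}(S_0)(\mathbf{e}_z)|=6\pi\mu\), the sign depending on the orientation convention used in (2.4). The helper below is ours (hypothetical name) and merely evaluates this formula.

```python
import math


def stokes_drag_magnitude(mu: float, radius: float, speed: float) -> float:
    """Magnitude 6*pi*mu*a*|U| of the drag on a rigid sphere of radius a translating
    with constant speed U through an unbounded Stokes fluid at rest at infinity."""
    if mu <= 0 or radius <= 0:
        raise ValueError("viscosity and radius must be positive")
    return 6.0 * math.pi * mu * radius * abs(speed)


# Unit ball S_0 translating with unit velocity e_z in a fluid of unit viscosity.
drag = stokes_drag_magnitude(mu=1.0, radius=1.0, speed=1.0)
```

By linearity of \(\mathbb{F}(S_0)\), the force produced by the velocity \(U\mathbf{e}_z\) is \(U\,\mathbb{F}(S_0)(\mathbf{e}_z)\), which is what the factor \(|U|\) reflects.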

The rest of this work is devoted to the study of system (3.7)–(3.8). We first note the following simple result.

Proposition 3.3

Assume that the smooth function \(r^\star\) satisfies (3.3). Then there exists a unique solution \(h\in C^\infty([0,T],\mathbb{R})\) of (3.7)–(3.8).

Proof

Using Proposition 3.1, Lemma 3.2 and Theorem 2.6, we obtain that the map

is \(C^\infty\) on \([0,T]\).

Hence, the unique solution of (3.7)–(3.8) is the function \(h\in C^\infty([0,T],\mathbb{R})\) defined by:

□
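The passage from (3.7) to an explicit formula for \(h\) can be spelled out as follows. This is a sketch which assumes, as the text indicates, that the boundary datum in (3.7) is the sum of the rigid translation \(\dot h\,\mathbf{e}_z\) and the Eulerian deformation velocity, denoted here by \(\boldsymbol{v}^\star(t)\) (our notation), with \(\tilde{\boldsymbol{v}}^\star(t)\) its translate as in Remark 2.3 and \(S^\star(t)=X^\star(t,\cdot)(S_0)\):

```latex
\[
\begin{aligned}
0 &= \mathbb{F}\bigl(S^\star(t)+h(t)\mathbf{e}_z\bigr)\bigl(\dot h(t)\,\mathbf{e}_z+\boldsymbol{v}^\star(t)\bigr)
   && \text{(equilibrium)}\\
  &= \dot h(t)\,\mathbb{F}\bigl(S^\star(t)\bigr)(\mathbf{e}_z)
   +\mathbb{F}\bigl(S^\star(t)\bigr)\bigl(\tilde{\boldsymbol{v}}^\star(t)\bigr)
   && \text{(linearity, Remark 2.3)},
\end{aligned}
\]
\[
h(t)=h(0)-\int_0^t
\frac{\mathbb{F}\bigl(S^\star(s)\bigr)\bigl(\tilde{\boldsymbol{v}}^\star(s)\bigr)}
     {\mathbb{F}\bigl(S^\star(s)\bigr)(\mathbf{e}_z)}\,\mathrm{d}s
\qquad (t\in[0,T]).
\]
```

Division by \(\mathbb{F}(S^\star(s))(\mathbf{e}_z)\) is legitimate by Lemma 3.2, and the smoothness of the integrand in \(s\) follows from Theorem 2.6.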

The proposition below shows that, if \(r^\star\) is time-periodic, the orthogonality to \(P_0\) and \(P_1\) assumed in Proposition 3.1 (and, consequently, the self-propelling conditions) does not affect the set of reachable final positions \(h(T)\) of the solid.

Proposition 3.4

Let \(X^{\star}_{1}\) and \(X^{\star}_{2}\) be two axi-symmetric deformations defined for \(t\in[0,T]\), satisfying \(X^{\star}_{i}(0,\cdot)=X^{\star}_{i}(T,\cdot)=I,\ i\in\{1,2\}\), and such that, for every \(t\in[0,T]\),

for some \(h_c\in C^1([0,T],\mathbb{R})\). Let \(h_1\) (resp. \(h_2\)) be defined by (3.10) with \(X^{\star}=X^{\star}_{1}\) (resp. \(X^{\star}=X^{\star}_{2}\)). Then \(h_1(T)=h_2(T)\).

Proof

From (3.9) it follows that

Using Remark 2.3 it follows that

and

Hence

Finally, since \(X^{\star}_{i}(0,\cdot)=X^{\star}_{i}(T,\cdot)=I,\ i\in\{1,2\}\) (and consequently \(h_c(0)=h_c(T)=0\)), we obtain \(h_2(T)=h_1(T)\). □

For the rest of this paper, we set

where \((P_i)_{i\in\mathbb{N}}\) are the Legendre polynomials and \((r(\mathbf{y}),\theta(\mathbf{y}),\phi(\mathbf{y}))\in\mathbb{R}_+\times[0,\pi]\times[0,2\pi)\) are the spherical coordinates of \(\mathbf{y}\) (see Fig. 3).

The rest of this section deals with the first derivative, with respect to the shape, of a certain ratio of hydrodynamic forces. We use, in particular, asymptotic formulas derived by Lighthill (see [15]). The precise form in which we use these results is borrowed from Shapere and Wilczek [20, Eq. (2.12)].

Proposition 3.5

Let \(\mathbb{F}\) be the force operator introduced in Sect. 2, let \(L\ge1\) and let \(M^{SW}=(M^{SW}_{i,j})_{i,j\in\{1,\dots,L\}}\in\mathcal{M}_L(\mathbb{R})\) be defined by:

for every \(i,j\in\{1,\dots,L\}\). Then

for every \(\boldsymbol{\alpha}=(\alpha_1,\dots,\alpha_L)^T\in C^\infty(\mathbb{R}_+,\mathbb{R}^L)\), where \((D_i)_{i\in\{1,\dots,L\}}\) are given by (3.11).

As a consequence of the above proposition, we have the following result.

Proposition 3.6

Let \(L\ge1\) and let \((D_i)_{i\ge1}\) be the deformations defined by (3.11). Then, for every \(i,j\in\{1,\dots,L\}\), we have:

where \(M^{SW}\in\mathcal{M}_{L}(\mathbb{R})\) is the matrix defined in Proposition 3.5 by (3.12) and, for every \(\tau\in\Theta(S)\) and every \(\boldsymbol{v}_{0}\in H^{\frac{1}{2}}(\partial \varOmega )\), \(\mathbb{F}'(\tau)(\boldsymbol{v}_{0})\) stands for the differential of \(\theta\mapsto\mathbb{F}((I+\theta)(S))(\boldsymbol{v}_{0}\circ (I+\theta)^{-1})\) at 0 in the direction \(\tau\) (which exists by Theorem 2.6).

Proof

For every \(\boldsymbol{\alpha}\in C^\infty(\mathbb{R}_+,\mathbb{R}^L)\), we deduce from Theorem 2.6 that the map

is of class \(C^\infty\) in a neighborhood of the origin. Comparing the second-order Taylor expansion of this function around the origin with formula (3.13), we obtain the required result. □

4 Controllability Results

Recall from the introduction that our aim is to steer the mass center of the swimmer to a prescribed final position, with zero initial and final deformations. Since we also want to control the final deformation, it is convenient to extend the state space by including the variables describing the deformation (i.e., the function \(r^\star\)) and to use the time derivative of \(r^\star\) as a new input function.

Based on the above remark and under the assumptions of Proposition 3.3, the system (3.7)–(3.8) can be written:

with the initial conditions

where the deformation velocity \(w^\star\) is given by

and \(Q^\star\) is the new input function.

With the above notation, the main result of this section reads as follows:

Theorem 4.1

For every \(h_1\in\mathbb{R}\), there exist \(T>0\) and \(Q^\star\in C^\infty([0,T]\times[-1,1],\mathbb{R})\) such that the solution \((h,r^\star)\) of (4.1)–(4.3) satisfies:

1. \(h(T)=h_1\) and \(r^\star(T,\cdot)=0\),

2. for every \(t\in[0,T]\), \(\|Q^{\star}(t,\cdot)\|_{L^{\infty}(-1,1)}\le 1\),

3. for every \(t\in[0,T]\), \(r^\star(t,\cdot)\in\{P_0,P_1\}^\perp\) and \(\inf_{(t,x)\in[0,T]\times[-1,1]} r^\star(t,x)>-1\).

To prove the above result, we use Chow’s theorem (see, for instance, [22, Chap. 5, Proposition 5.14, p. 89] or [13]):

Theorem 4.2

(Chow)

Let \(m,n\in\mathbb{N}\) and let \((\boldsymbol{f}_i)_{i=1,\dots,m}\) be \(C^\infty\) vector fields on \(\mathbb{R}^n\). Consider the control system, with state trajectory \(\boldsymbol{q}\),

with input function \(\boldsymbol{u}=(u_{i})_{i=1,\dots,m}\in C^{\infty}([0,+\infty) , B_{\mathbb{R}^{m}}(0,r) )\) for some \(r>0\).

Let \(\mathcal{O}\) be an open and connected subset of \(\mathbb{R}^n\) and assume that

Then system (4.4) is controllable, i.e., for every \(\boldsymbol{q}_0\), \(\boldsymbol{q}_{1}\in\mathcal{O}\) there exist \(T>0\) and \(\boldsymbol{u}\in C^{\infty}([0,T],B_{\mathbb{R}^{m}}(0,r))\) such that \(\boldsymbol{q}(0)=\boldsymbol{q}_0\), \(\boldsymbol{q}(T)=\boldsymbol{q}_1\) and \(\boldsymbol{q}(t)\in\mathcal{O}\) for every \(t\in[0,T]\).

In the previous theorem, the Lie bracket of two vector fields \(\boldsymbol{g}_1\), \(\boldsymbol{g}_2\) is the vector field defined by

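We recall that a Lie algebra is a vector space closed under the Lie bracket \([\cdot,\cdot]\) and that \((\mathrm{Lie}\{\boldsymbol{f}_1,\dots,\boldsymbol{f}_m\},[\cdot,\cdot])\) is the smallest Lie algebra containing \(\{\boldsymbol{f}_1,\dots,\boldsymbol{f}_m\}\). Moreover, \(\mathrm{Lie}_{\boldsymbol{q}}\{\boldsymbol{f}_1,\dots,\boldsymbol{f}_m\}\subset\mathbb{R}^n\) is the subspace of \(\mathbb{R}^n\) spanned by the values at \(\boldsymbol{q}\) of all the vector fields in \(\mathrm{Lie}\{\boldsymbol{f}_1,\dots,\boldsymbol{f}_m\}\).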
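The rank condition in Chow’s theorem can be checked numerically once the vector fields can be evaluated. The sketch below assumes the convention \([\boldsymbol{g}_1,\boldsymbol{g}_2](\boldsymbol{q})=D\boldsymbol{g}_2(\boldsymbol{q})\boldsymbol{g}_1(\boldsymbol{q})-D\boldsymbol{g}_1(\boldsymbol{q})\boldsymbol{g}_2(\boldsymbol{q})\); the opposite sign convention is also common and does not change the rank. It uses central finite differences for the Jacobians, and the test fields are those of the nonholonomic integrator, a hypothetical example rather than the swimmer’s fields.

```python
import numpy as np


def jacobian(f, q, eps=1e-6):
    """Central finite-difference Jacobian of the vector field f at the point q."""
    q = np.asarray(q, dtype=float)
    cols = []
    for k in range(q.size):
        dq = np.zeros_like(q)
        dq[k] = eps
        cols.append((f(q + dq) - f(q - dq)) / (2.0 * eps))
    return np.column_stack(cols)


def lie_bracket(g1, g2, q):
    """[g1, g2](q) = Dg2(q) g1(q) - Dg1(q) g2(q) (one common sign convention)."""
    return jacobian(g2, q) @ g1(q) - jacobian(g1, q) @ g2(q)


# Nonholonomic integrator on R^3: q' = u1 g1(q) + u2 g2(q), i.e.
# x' = u1, y' = u2, z' = x u2 - y u1.
def g1(q):
    return np.array([1.0, 0.0, -q[1]])


def g2(q):
    return np.array([0.0, 1.0, q[0]])


q = np.array([0.3, -0.2, 1.0])
b = lie_bracket(g1, g2, q)
# Chow's condition at q: g1(q), g2(q) and [g1, g2](q) span R^3.
rank = np.linalg.matrix_rank(np.column_stack([g1(q), g2(q), b]))
```

Here the bracket is the constant field \((0,0,2)^\top\), so two inputs generate the missing third direction at every point, which is the mechanism exploited in the proof of Theorem 4.1.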

We are now in a position to prove our main controllability result.

Proof of Theorem 4.1

Consider the two-dimensional space of deformations \(X^\star\) defined by

with \(\alpha_{i}\in C^{\infty}([0,T],(-\frac{1}{2},\frac{1}{2}) )\) and \((D_i)_{i\in\{1,2\}}\) introduced in (3.11).

Since \(|P_i(\zeta)|\le1\) for every \(\zeta\in[-1,1]\) and \(i\in\mathbb{N}\), the deformations \(X^\star\) defined above satisfy the assumptions of Proposition 3.1. Moreover, if we assume that \(\alpha_i(0)=\alpha_i(T)=0\), then \(X^\star(0,\cdot)=X^\star(T,\cdot)=I\). With the above notation, it is easily seen that (3.9) reads:

The above system can be written in the condensed form:

where \(\boldsymbol{q}=(h,\alpha_{1},\alpha_{2})^{T}\in\mathbb{R}\times(-\frac {1}{2},\frac{1}{2})^{2}\) and \((\tilde{\boldsymbol{f}}_{i})_{i\in\{1,2\}}\) are the \(C^\infty\) vector fields on \(\mathbb{R}\times(-\frac{1}{2},\frac{1}{2})^{2}\) defined by:

for every \((h,\alpha_{1},\alpha_{2})^{\top}\in\mathbb{R}\times(-\frac {1}{2},\frac{1}{2})^{2}\). According to Proposition 3.6,

where the matrix \(\displaystyle M^{SW}\in\mathcal{M}_2(\mathbb{R})\) is given by:

Hence \([\tilde{\boldsymbol{f}}_{1},\tilde{\boldsymbol{f}}_{2}]_{\boldsymbol {q}=0}=(\langle M^{SW}\mathbf{e}_{2},\mathbf{e}_{1}\rangle-\langle M^{SW}\mathbf{e}_{1},\mathbf{e}_{2}\rangle,0,0)^{\top}=\frac {3}{35}(1,0,0)^{\top}\), so that

Since \(\tilde{\boldsymbol{f}}_{1}\), \(\tilde{\boldsymbol{f}}_{2}\) and \(\boldsymbol{q}\mapsto[\tilde{\boldsymbol{f}}_{1},\tilde{\boldsymbol {f}}_{2}]_{\boldsymbol{q}}\) are \(C^\infty\) functions (see Theorem 2.6), there exists \(\varepsilon>0\) such that for every \(\boldsymbol{q}\in\{0\}\times(-\varepsilon,\varepsilon)^2\), \(\mathrm{dim}\, \mathrm{Lie}_{\boldsymbol{q}}\{\tilde{\boldsymbol{f}}_{1},\tilde {\boldsymbol{f}}_{2}\}=3\). Since \(\tilde{\boldsymbol{f}}_{1}\), \(\tilde{\boldsymbol{f}}_{2}\) and \([\tilde{\boldsymbol{f}}_{1},\tilde{\boldsymbol{f}}_{2}]\) do not depend on \(h\) (i.e., on the first component of \(\boldsymbol{q}\)), it follows that

We conclude by applying Theorem 4.2 with \(\mathcal{O}\) a neighborhood of \(\mathbb{R}\times\{(0,0)^\top\}\). □

At the end of this section we study the time optimal control problem associated with the controllability problem of Theorem 4.1, restricting the inputs to a vector space of dimension \(L\ge2\).

Let us first introduce some notation. Given \(\boldsymbol{\alpha}\in\mathbb{R}^L\), we denote by \(S^\star(\boldsymbol{\alpha})\) the deformed sphere,

where the \(D_i\) are defined in (3.11) and \(c>0\) is small enough to ensure that \(\mathrm{Jac}(I+\sum_{i=1}^{L}\alpha_{i}D_{i})>0\) on \(S_0\). We also introduce, for every \(\boldsymbol{\alpha}\in\mathbb{R}^L\) small enough, the vector \(\boldsymbol{f}(\boldsymbol{\alpha})=(f_i(\boldsymbol{\alpha}))_{i\in\{1,\dots,L\}}\in\mathbb{R}^L\) with

With the above notation and assumptions, the control system (4.1)–(4.3) reads

Looking at the proof of Theorem 4.1, we easily see that the following corollary holds:

Corollary 4.3

For every \(L\ge2\) and \(h_1\in\mathbb{R}\), there exist \(T>0\) and \(\boldsymbol{\beta}\in C^\infty([0,T],\mathbb{R}^L)\) such that the solution \((h,\boldsymbol{\alpha})\) of (4.8)–(4.10) satisfies:

with the constraint on the control variable:

and the state constraint

Remark 4.4

According to Corollary 4.3, two scalar inputs are sufficient to steer the sphere to any final position on the axis directed by \(\mathbf{e}_z\). Note that, by the scallop theorem (see Purcell [18]), at least two controls are necessary. It follows that with six scalar inputs the sphere can deform itself periodically in time so as to reach any final position \(h_1\in\mathbb{R}^3\).
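The role of the number of inputs can be illustrated on the quadratic approximation of (4.8) given at the end of this section, assuming it reads \(\dot h=\langle M\boldsymbol{\alpha},\dot{\boldsymbol{\alpha}}\rangle\). With one input the integrand is an exact derivative, \(m\alpha\dot\alpha=\frac{m}{2}\frac{\mathrm{d}}{\mathrm{d}t}\alpha^2\), so every stroke with \(\alpha(0)=\alpha(T)\) yields zero net displacement; with two inputs, a stroke that merely retraces its path also yields zero, whereas a genuine loop does not. The matrix `M` and the strokes below are hypothetical examples.

```python
import numpy as np


def displacement(M, alpha):
    """Net change of h for dh/dt = <M alpha, dalpha/dt> along a sampled path.

    alpha has shape (N + 1, L); the time integral is approximated by the midpoint rule.
    """
    mid = 0.5 * (alpha[1:] + alpha[:-1])
    dalpha = np.diff(alpha, axis=0)
    return float(np.einsum("ki,ij,kj->", dalpha, M, mid))


t = np.linspace(0.0, 1.0, 1001)
s = 0.2 * np.sin(2.0 * np.pi * t)           # one shape parameter, s(0) = s(1) = 0
M = np.array([[0.3, 0.05], [0.2, -0.1]])    # hypothetical non-symmetric 2x2 matrix

# L = 1: a single input, any periodic stroke.
h_one_input = displacement(np.array([[0.7]]), s[:, None])
# L = 2, reciprocal stroke: the path (s, s^2) is traced forward and then backward.
h_reciprocal = displacement(M, np.column_stack([s, s**2]))
# L = 2, closed loop: a counterclockwise circle of radius 0.2 through the origin.
h_loop = displacement(M, np.column_stack([s, 0.2 * (1.0 - np.cos(2.0 * np.pi * t))]))
```

The first two displacements vanish up to rounding, while `h_loop` is close to \((M_{21}-M_{12})\,\pi\,(0.2)^2\), i.e., proportional to the area enclosed by the stroke.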

Proposition 4.5

For any \(L\ge2\) and \(h_1\in\mathbb{R}\), there exists a minimal time \(T^*\ge0\) such that the control problem (4.8)–(4.11) with constraints (4.12) and (4.13) admits a solution for controls \(\boldsymbol{\beta}\) chosen in \(L^\infty((0,T),\mathbb{R}^L)\).

Proof

For \(L\ge2\), the existence of a solution \((\overline{T},\overline {h},\overline{\boldsymbol {\alpha }},\overline{\boldsymbol {\beta }})\) satisfying (4.8)–(4.13) is ensured by the controllability result of Corollary 4.3. To prove the existence of a minimal time, we use the classical Filippov theorem (see, for instance, [5, 10] or [1]). First, since the problem is linear with respect to the control variables \(\boldsymbol{\beta}\), the set

is convex for any state \(\boldsymbol{\alpha}\) with \(|\boldsymbol{\alpha}(t)|_2\le c\) and for all \(t\in[0,\overline{T}]\).

Moreover, using Theorem 2.6 we deduce that the functions \(f_i\) are continuous with respect to \(\boldsymbol{\alpha}\). Therefore, there exists a constant \(C>0\) such that, for all \(t\in [0,\overline{T}]\),

for any \(|\boldsymbol{\alpha}(t)|_2\le c\) and \(|\boldsymbol{\beta}(t)|_2\le1\). The uniform bound on \(\boldsymbol{f}\) provides a bound on \(h\). Indeed, for all \(t\in[0,\overline{T}]\), we have

Thus, all the state and control variables are uniformly bounded, provided that the control and the state satisfy the given constraints. Therefore, we can apply the Filippov-Cesari theorem (see [10, Theorem 3.1] or [5, Theorem 9.3]) to conclude that an optimal solution \((T^*,h^*,\boldsymbol{\alpha}^*,\boldsymbol{\beta}^*)\) exists. □

In the next two sections, we consider a simplified model for which we explicitly determine the optimal solutions \((T^*,h^*,\boldsymbol{\alpha}^*,\boldsymbol{\beta}^*)\) of problem (4.8)–(4.13). We neglect the state constraint (4.13) and obtain explicit optimal solutions by directly applying Pontryagin’s maximum principle.

To obtain the simplified model, we note that if \(\boldsymbol{\alpha}\) is small enough, then, according to Proposition 3.5, (4.8) can be approximated by

The above equation is of independent interest. In particular, it can be seen as a generalization of the system describing the nonholonomic integrator, see, for instance, Coron [7]. In the rest of this work we study a slight generalization of the above control system, obtained by replacing the matrix \(M^{SW}\) by an arbitrary non-symmetric matrix \(M\in\mathcal{M}_{L}(\mathbb{R})\).
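To make the link with the nonholonomic integrator explicit, assume (consistently with the Hamiltonian used in Sect. 5) that the approximate system reads \(\dot{\boldsymbol{\alpha}}=\boldsymbol{\beta}\), \(\dot h=\langle M\boldsymbol{\alpha},\boldsymbol{\beta}\rangle\). For \(L=2\) and a closed stroke enclosing the signed area \(\mathcal{A}\) (positive when traversed counterclockwise), the diagonal terms integrate to zero and the net displacement is proportional to \(\mathcal{A}\); the particular antisymmetric choice of \(M\) below gives the nonholonomic integrator itself:

```latex
\[
h(T)-h(0)=\oint\langle M\boldsymbol{\alpha},\mathrm{d}\boldsymbol{\alpha}\rangle
 =M_{12}\oint\alpha_2\,\mathrm{d}\alpha_1+M_{21}\oint\alpha_1\,\mathrm{d}\alpha_2
 =(M_{21}-M_{12})\,\mathcal{A},
\]
\[
M=\begin{pmatrix}0&-1\\ 1&0\end{pmatrix}:\qquad
\dot\alpha_1=\beta_1,\quad \dot\alpha_2=\beta_2,\quad
\dot h=\langle M\boldsymbol{\alpha},\boldsymbol{\beta}\rangle=\alpha_1\beta_2-\alpha_2\beta_1 .
\]
```

In particular, only the antisymmetric part of \(M\) enters the displacement produced by a closed stroke.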

5 A Simplified Optimal Control Problem Without State Constraints

In this section we consider the simplified control system described at the end of the previous section. More precisely, given an integer \(L\ge2\), a matrix \(M\in\mathcal{M}_{L}(\mathbb{R})\) with \(M^{\top}\neq M\) and \(h_1\in\mathbb{R}^*\), our aim is to determine the minimal time \(T^*\) for which there exists \(\boldsymbol{\beta}\in L^\infty((0,T^*),\mathbb{R}^L)\) such that

and the solution \((\boldsymbol{\alpha},h)\in W^{1,\infty}((0,T^*),\mathbb{R}^L)\times W^{1,\infty}((0,T^*),\mathbb{R})\) of

satisfies

We assume that \(h_1\neq0\), since otherwise the time optimal control problem is trivial.

Before applying the Pontryagin maximum principle, we give some elementary facts about this control problem.

Proposition 5.1

The time \(T^*>0\) and the control \(\boldsymbol{\beta}^*\in L^\infty((0,T^*),\mathbb{R}^L)\) are solutions of the time optimal control problem (5.1)–(5.5) if and only if \(T^*\) and \(\boldsymbol{\beta}^*\) are solutions of the time optimal control problem obtained from (5.1)–(5.5) by replacing (5.2) with the equation

In other words, the optimal times and controls of system (5.1), (5.2)–(5.5) coincide with those of system (5.1), (5.6), (5.3)–(5.5).

Proof of Proposition 5.1

Note that (5.2) can be written in the form

Since \(M+M^{\top}\) is symmetric, we have,

Integrating \(\langle(M+M^{\top})\boldsymbol {\alpha },\,\dot{\boldsymbol {\alpha }}\rangle\) over \([0,T^*]\) yields \(\int_{0}^{T^{*}}\langle(M+M^{\top})\boldsymbol {\alpha },\,\dot{\boldsymbol {\alpha }}\rangle\, \mathrm{d}t=0\), due to the initial and final conditions \(\boldsymbol{\alpha}(0)=\boldsymbol{\alpha}(T^*)=0\). In other words, the symmetric part of \(M\) plays no role in the control system. This clearly implies that the optimal times and the time optimal controls of the two systems coincide. □
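The cancellation in the proof of Proposition 5.1 can be checked numerically. The sketch assumes (consistently with the Hamiltonian used in Lemma 5.2) that (5.2) reads \(\dot h=\langle M\boldsymbol{\alpha},\boldsymbol{\beta}\rangle\) with \(\dot{\boldsymbol{\alpha}}=\boldsymbol{\beta}\). With the midpoint rule, the contribution of a symmetric matrix telescopes exactly, so the displacement computed with \(M\) and with its antisymmetric part agree up to rounding. The random matrix and the stroke are hypothetical examples.

```python
import numpy as np


def displacement(M, alpha):
    """h(T) - h(0) for dh/dt = <M alpha, dalpha/dt>, midpoint rule on a sampled path (N+1, L)."""
    mid = 0.5 * (alpha[1:] + alpha[:-1])
    dalpha = np.diff(alpha, axis=0)
    return float(np.einsum("ki,ij,kj->", dalpha, M, mid))


rng = np.random.default_rng(0)
L = 3
M = rng.normal(size=(L, L))      # arbitrary non-symmetric matrix
A = 0.5 * (M - M.T)              # antisymmetric part of M

t = np.linspace(0.0, 1.0, 4001)
# Closed stroke with alpha(0) = alpha(1) = 0.
alpha = 0.1 * np.column_stack([
    np.sin(2.0 * np.pi * t),
    1.0 - np.cos(2.0 * np.pi * t),
    np.sin(4.0 * np.pi * t),
])

h_M = displacement(M, alpha)     # uses the full matrix
h_A = displacement(A, alpha)     # uses only the antisymmetric part
```

For this stroke only the projection on the \((\alpha_1,\alpha_2)\) plane encloses area (a circle of radius 0.1), so both values are also close to \((M_{21}-M_{12})\,\pi\,(0.1)^2\).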

The rest of this section is devoted to the application of Pontryagin’s maximum principle, which yields, at least for the simplified model without state constraints, an explicit solution of the optimal control problem. Following [22], we first recall some basic facts on Pontryagin’s maximum principle and adapt the general procedure to our case. To this end, we introduce the Hamiltonian \(\mathcal{H}\) for the minimal time problem of the control system (5.1)–(5.5). We first gather the state variables in a single vector by setting

and we denote

Then the differential system (5.2)–(5.5) reads

where \(\mathbf{0}_L\) denotes the zero vector in \(\mathbb{R}^L\). The Hamiltonian of system (5.7), (5.8) for the optimal time problem is the function \(\mathcal{H}: \mathbb{R}^{L+1}\times\mathbb{R}^{L}\times\mathbb {R}^{L+1}\times(-\infty,0]\to\mathbb{R}\) defined by

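with \(\boldsymbol{q},\boldsymbol{r}\in\mathbb{R}^{L+1}\), \(\boldsymbol{\beta}\in\mathbb{R}^L\) and \(s_0\le0\). Recall that \(\boldsymbol{r}\) is usually called the adjoint state. In the present case it is convenient to write \(\boldsymbol{r}\) in the form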

with \(p_0\in\mathbb{R}\) and \(\boldsymbol{p}\in\mathbb{R}^L\). With the above notation, the Hamiltonian can also be written:

with \(\boldsymbol{p}\in\mathbb{R}^L\), \(p_0\in\mathbb{R}\) and \(s_0\in\mathbb{R}\), \(s_0\le0\).

The Pontryagin maximum principle ensures that if \((T,\boldsymbol{q},\boldsymbol{\beta})\) is an optimal solution of (5.7), (5.8), then there exist a function \(\boldsymbol{r}:[0,T]\to\mathbb{R}^{L+1}\) and a scalar \(s_0\le0\) such that the pair \((\boldsymbol{r},s_0)\) is nontrivial and such that

Moreover, since system (5.7), (5.8) is autonomous, if \((T,h,\boldsymbol{\alpha},\boldsymbol{\beta},p_0,\boldsymbol{p})\) is an optimal solution of (5.15)–(5.20), then

This property, combined with expression (5.9) of the Hamiltonian, leads to

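Going back to the variables \(h\) and \(\boldsymbol{\alpha}\), this implies that (5.10)–(5.12) reduce to determining \(T\in\mathbb{R}_+\), \(h\in W^{1,\infty}([0,T],\mathbb{R})\), \(\boldsymbol{\alpha}\in W^{1,\infty}([0,T],\mathbb{R}^L)\), \(\boldsymbol{\beta}\in L^\infty((0,T),\mathbb{R}^L)\), \(p_0\in W^{1,\infty}([0,T],\mathbb{R})\) and \(\boldsymbol{p}\in W^{1,\infty}([0,T],\mathbb{R}^L)\) such that \(((p_{0},\boldsymbol {p})^{\top},s_{0} )\not\equiv0\), relation (5.14) holds and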

We are now in a position to show that the cost multiplier \(s_0\) is negative and to express the optimal control \(\boldsymbol{\beta}\) in terms of the state and the adjoint state.

Lemma 5.2

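Let \((T,h,\boldsymbol{\alpha},\boldsymbol{\beta},p_0,\boldsymbol{p},s_0)\) be a solution of (5.13)–(5.20). Then \(s_0<0\), \(\boldsymbol{p}(0)\neq0\) and \(p_0\neq0\). In addition, for every \(t\in[0,T]\) we have \(p_0M\boldsymbol{\alpha}(t)+\boldsymbol{p}(t)\neq0\), and the optimal control variables are given by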

Proof

We first remark that, since \(h_1\neq0\), we necessarily have \(T>0\). We start by proving that \(s_0\), \(\boldsymbol{p}(0)\) and \(p_0\) do not vanish. We argue by contradiction.

• Suppose that \(s_{0}=0\). From (5.9) and (5.14), we deduce that

On the other hand, (5.17) implies that \(p_{0}\) is constant, so that (5.22) and (5.15) give

Furthermore, using (5.15), (5.16) and (5.18), we obtain

Combining (5.24) and (5.23), we deduce that

Integrating the above formula on [0,T] we get

On the other hand, since \((p_{0},\boldsymbol{p})^{\top}\) and \(s_{0}\) form a nontrivial pair and \(s_{0}=0\), we necessarily have \((p_{0},\boldsymbol {p})\not\equiv(0,0)\). If \(p_{0}=0\), then \(\boldsymbol{p}\neq0\) and the Hamiltonian reduces to \(\mathcal {H}(\boldsymbol {\alpha },\boldsymbol {\beta },p_{0},\boldsymbol {p},s_{0})= \langle \boldsymbol {p}, \boldsymbol {\beta }\rangle\). From the maximum principle (5.13), we obtain \(\boldsymbol {\beta }=\frac{\boldsymbol {p}}{{|\boldsymbol {p}|}_{2}}\) and \(\mathcal{H}(\boldsymbol {\alpha },\boldsymbol {\beta },p_{0},\boldsymbol {p},s_{0})={|\boldsymbol {p}|}_{2}\ne0\), which contradicts (5.22). Hence \(p_{0}\neq0\), and we deduce from (5.25) that \(h_{1}=0\), which is a contradiction. We have thus shown that \(s_{0}\neq0\).

• Assume that \(\boldsymbol{p}(0)=0\). Using relation (5.14) at \(t=0\), we obtain \(s_{0}=0\), which is a contradiction. Thus \(\boldsymbol{p}(0)\neq0\).

• Finally, suppose that \(p_{0}=0\). Then, according to (5.18), we have \(\boldsymbol{p}(t)=\boldsymbol{p}(0)\) for all \(t\in[0,T]\). The maximum principle (5.13) provides the optimal control \(\boldsymbol {\beta }(t)=\frac{\boldsymbol {p}(0)}{|\boldsymbol {p}(0)|_{2}}=\boldsymbol {\beta }(0)\) for all \(t\in[0,T]\). Since \(\boldsymbol{\beta}\) is constant, \(\boldsymbol{\alpha}(0)=\boldsymbol{\alpha}(T)=0\) and \(T>0\), integrating (5.16) gives \(\boldsymbol{\beta}(0)=0\), so that \(\boldsymbol{\beta}(t)=0\) for all \(t\in[0,T]\). We deduce from (5.14) that \(s_{0}=0\), which is a contradiction. Thus \(p_{0}\neq0\).

We are now in a position to determine the optimal control \(\boldsymbol{\beta}\). Since \(s_{0}<0\), it follows from (5.14) that \(p_{0}M\boldsymbol{\alpha}(t)+\boldsymbol{p}(t)\neq0\) for every \(t\in[0,T]\). Using the expression (5.9) of the Hamiltonian together with (5.13), we deduce that the optimal control \(\boldsymbol{\beta}\) is indeed given by (5.21). □
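The maximization step (5.13) used in this proof amounts to maximizing a linear function over the Euclidean unit ball. The sketch below assumes, as our reading of (5.9) consistent with Lemma 5.2, that \(\mathcal{H}=\langle p_{0}M\boldsymbol{\alpha}+\boldsymbol{p},\boldsymbol{\beta}\rangle+s_{0}\) with admissible controls \(|\boldsymbol{\beta}|_{2}\le1\). The helper names and the matrix data are hypothetical.

```python
import math
import random


def mat_vec(M, x):
    """Matrix-vector product M x for a matrix stored as a list of rows."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]


def switching_vector(M, alpha, p0, p):
    """v = p0 * M alpha + p, the vector paired with beta in the assumed Hamiltonian."""
    return [p0 * ma + pk for ma, pk in zip(mat_vec(M, alpha), p)]


def optimal_control(M, alpha, p0, p):
    """Maximize <v, beta> over the unit ball |beta|_2 <= 1.

    By Cauchy-Schwarz the maximizer is v / |v|_2 and the maximum is |v|_2,
    provided v != 0 (otherwise every admissible beta is a maximizer).
    """
    v = switching_vector(M, alpha, p0, p)
    norm_v = math.sqrt(sum(c * c for c in v))
    if norm_v == 0.0:
        raise ValueError("p0 * M alpha + p vanishes: the maximizer is not unique")
    return [c / norm_v for c in v], norm_v


# Hypothetical data (L = 3), for illustration only.
M = [[0.0, 1.0, 0.0], [0.0, 0.0, 2.0], [1.0, 0.0, 0.0]]
alpha = [0.5, -0.2, 0.1]
p0, p = 1.5, [0.3, 0.4, -0.2]

beta, h_max = optimal_control(M, alpha, p0, p)
v = switching_vector(M, alpha, p0, p)

# Sanity check: no random unit vector gives a larger value of <v, beta>.
random.seed(0)
for _ in range(1000):
    w = [random.gauss(0.0, 1.0) for _ in range(3)]
    n_w = math.sqrt(sum(c * c for c in w))
    assert sum(a * b / n_w for a, b in zip(v, w)) <= h_max + 1e-12
```

Under the same assumption, \(\mathcal{H}\equiv0\) along the optimal trajectory means that the returned maximum \(|v|_{2}\) equals \(-s_{0}\). This is why \(s_{0}<0\) forces \(p_{0}M\boldsymbol{\alpha}+\boldsymbol{p}\neq0\).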

The result below shows that, for the simplified control problem (5.1)–(5.5) without state constraints, an optimal solution can be determined explicitly. For the remainder of the paper, \(\sigma(A)\) denotes the set of complex eigenvalues of a matrix \(A\).

Proposition 5.3

The minimal time \(T^{*}\) for which (5.1)–(5.5) admits a solution is given by

\[T^{*}=\sqrt{\frac{2\pi|h_{1}|}{\lambda^{*}}}, \tag{5.26}\]

where \(\lambda^{*}=\max \{|\lambda|,\ \lambda\in\sigma (\frac {1}{2}(M-M^{\top}) ) \}>0\). Moreover, a time optimal control is

where the initial control \(\boldsymbol{\beta}_{0}\in\mathbb{R}^{L}\) is chosen such that \(|\boldsymbol{\beta}_{0}|_{2}=1\) and \(\boldsymbol {\beta }_{0}\in\mathrm{Ker} ( (\frac {M-M^{\top}}{2} )^{2}+{\lambda^{*}}^{2} )\).
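The choice of \(\boldsymbol{\beta}_{0}\) can be checked by hand in the smallest nontrivial case. The following sketch assumes \(L=3\) and replaces \(\frac{1}{2}(M-M^{\top})\) by a hypothetical skew-symmetric matrix \(M_{A}\) acting as the cross product with a vector \(w\). Then \(\sigma(M_{A})=\{0,\pm i|w|_{2}\}\), so \(\lambda^{*}=|w|_{2}\), and since \(M_{A}^{2}=ww^{\top}-|w|_{2}^{2}I\), the kernel of \(M_{A}^{2}+{\lambda^{*}}^{2}\) is the plane orthogonal to \(w\).

```python
import math


def skew_from_vector(w):
    """Real 3x3 skew-symmetric matrix with M x = w x x (cross product with w)."""
    a, b, c = w
    return [[0.0, -c, b], [c, 0.0, -a], [-b, a, 0.0]]


def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def det3(A):
    """Determinant of a 3x3 (real or complex) matrix by cofactor expansion."""
    return (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
            - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
            + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))


w = (0.2, -0.5, 0.4)                   # hypothetical vector defining M_A
M_A = skew_from_vector(w)
lam_star = math.sqrt(sum(x * x for x in w))

# sigma(M_A) = {0, i*lam_star, -i*lam_star}: each shift makes M_A - mu*I singular.
residuals = [abs(det3([[M_A[i][j] - (mu if i == j else 0.0) for j in range(3)]
                       for i in range(3)]))
             for mu in (0j, 1j * lam_star, -1j * lam_star)]

# Ker(M_A^2 + lam_star^2 I) is the plane orthogonal to w, since M_A^2 = w w^T - |w|^2 I.
K = mat_mul(M_A, M_A)
for i in range(3):
    K[i][i] += lam_star ** 2
beta0 = (0.0, 0.4, 0.5)                # orthogonal to w
n0 = math.sqrt(sum(x * x for x in beta0))
beta0 = tuple(x / n0 for x in beta0)   # |beta0|_2 = 1
kernel_residual = max(abs(sum(K[i][j] * beta0[j] for j in range(3))) for i in range(3))
```

Here the zero eigenvalue corresponds to the direction of \(w\), so \(R=1\) and \(2R<L\) in the notation of the proof.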

Proof

Let us denote by \(M_{A}\) the skew-symmetric part of the non-symmetric matrix \(M\), i.e. \(M_{A}=\frac{1}{2}(M-M^{\top})\neq0\). According to Proposition 5.1, it is enough to consider the control system (5.1)–(5.5) with \(M\) replaced by \(M_{A}\). From Lemma 5.2 and relation (5.14), we deduce that the optimal control \(\boldsymbol{\beta}\) is given by

Hence, differentiating the above relation with respect to \(t\) and using (5.16) and (5.18), we obtain

with \(\delta=-\frac{s_{0}}{2p_{0}}\). Integrating the above ordinary differential equation in \(\boldsymbol{\beta}\) yields, for all \(t\in[0,T]\),

Now we turn to the computation of \(\boldsymbol{\alpha}\) and \(h\). Using (5.16), we obtain

and then we get

Substituting (5.29) into (5.15), we obtain

Integrating the above relation over \([0,T]\), we obtain

On the other hand, (5.21) and (5.28) imply that

This fact, combined with (5.30) and the final condition \(h(T)=h_{1}\), leads to

We obtain from (5.28) that

We now turn to the final condition \(\boldsymbol{\alpha}(T)=0\). Since \(M_{A}\) is real and skew-symmetric, it is a normal matrix. Thus there exist a unitary matrix \(U\in\mathcal{M}_{L}(\mathbb{C})\) with \(UU^{*}=U^{*}U=I_{L}\) and a diagonal matrix \(\Lambda=i\,\mathrm{diag}(\lambda_{1},\ldots,\lambda_{L})\), where \((i\lambda_{j})_{j}\) are the eigenvalues of \(M_{A}\), such that \(M_{A}=U\Lambda U^{*}\). Note that all eigenvalues of \(M_{A}\) are purely imaginary and, since \(M_{A}\) is real, the non-zero ones occur in complex conjugate pairs: if \(i\lambda\) is an eigenvalue of \(M_{A}\), then so is \(-i\lambda\). The columns of \(U\) are eigenvectors of \(M_{A}\). Rearranging \(U\) and \(\Lambda\) if necessary, we may assume that there exists \(R\in\mathbb{N}^{*}\) (recall that \(M_{A}\neq0\)) with \(2R\le L\) such that \(\lambda_{j}\neq0\) for every \(j\le2R\) and \(\lambda_{j}=0\) for every \(j>2R\). Using the above decomposition of \(M_{A}\) and relation (5.31), we obtain

Integrating the above formula over \([0,t]\), we obtain, for every \(t\in[0,T]\),

The condition \(\boldsymbol{\alpha}(T)=0\) with \(T>0\) leads to

We deduce from condition (5.34) that

Moreover, for every \(k\in\{1,\ldots,2R\}\), we have either \([U^{*}\boldsymbol{\beta}(0)]_{k}=0\) or \({\frac{|\lambda_{k}|}{|h_{1}|}T^{2}\in2\pi\mathbb{N}^{*}}\).

Hence, the set of times \(T>0\) for which condition (5.34) holds with \(\boldsymbol{\beta}(0)\in\mathbb{R}^{L}\setminus\{0\}\) is given by

The minimum element of the set \(\mathcal{K}\) is

\[T^{*}=\sqrt{\frac{2\pi|h_{1}|}{\lambda^{*}}},\]

where \(\lambda^{*}=\max\{|\lambda_{1}|,\ldots,|\lambda_{2R}|\}>0\).
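Since the display defining \(\mathcal{K}\) is not reproduced here, the sketch below takes, as our reading of the alternative stated above, \(\mathcal{K}=\{\sqrt{2\pi n|h_{1}|/|\lambda_{k}|}:\ n\in\mathbb{N}^{*},\ \lambda_{k}\neq0\}\). It checks on hypothetical eigenvalues that the smallest element is obtained for \(n=1\) and \(|\lambda_{k}|=\lambda^{*}\).

```python
import math


def candidate_times(h1, lambdas, n_max=5):
    """Elements T = sqrt(2*pi*n*|h1|/|lambda_k|) of K, for 1 <= n <= n_max and lambda_k != 0."""
    return [math.sqrt(2.0 * math.pi * n * abs(h1) / abs(lam))
            for lam in lambdas if lam != 0.0
            for n in range(1, n_max + 1)]


def minimal_time(h1, lambdas):
    """T* = sqrt(2*pi*|h1| / lambda*), with lambda* = max_k |lambda_k|."""
    lam_star = max(abs(lam) for lam in lambdas)
    return math.sqrt(2.0 * math.pi * abs(h1) / lam_star)


h1 = 1.0
# Hypothetical lambda_j: two +/- pairs and one zero (L = 5, R = 2).
lambdas = [0.25, -0.25, 0.1, -0.1, 0.0]
T_star = minimal_time(h1, lambdas)
```

Truncating at a finite `n_max` is harmless here: larger \(n\) and smaller \(|\lambda_{k}|\) both increase \(T\).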

Finally, we choose \(\boldsymbol{\beta}(0)=\boldsymbol{\beta}_{0}\in\mathbb{R}^{L}\) with \(|\boldsymbol{\beta}_{0}|_{2}=1\) and \(\boldsymbol {\beta }_{0}\in\mathrm{Ker} (M_{A}^{2}+{\lambda^{*}}^{2} )\). It is easily checked that condition (5.34) is then satisfied with \(T=T^{*}\), i.e. \(\boldsymbol{\alpha}(T^{*})=0\). This completes the proof of Proposition 5.3. □
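The claim that (5.34) holds at \(T=T^{*}\) can be checked numerically in an \(L=3\) example where \(M_{A}\) is the (hypothetical) cross product with a vector \(w\). The sketch assumes, as our reading of the integrated formula for \(\boldsymbol{\beta}\), that \(\boldsymbol{\beta}(t)=\exp(tM_{A}/\delta)\boldsymbol{\beta}(0)\), evaluated here with Rodrigues’ rotation formula. Because the relation between \(\delta\), \(h_{1}\) and \(T\) is not reproduced in this section, \(\delta\) is a hypothetical parameter and \(T\) is taken as the time of one full turn, \(T=2\pi|\delta|/\lambda^{*}\). The sketch computes \(\boldsymbol{\alpha}(T)=\int_{0}^{T}\boldsymbol{\beta}\) with Simpson’s rule and shows that \(\boldsymbol{\alpha}(T)=0\) for \(\boldsymbol{\beta}_{0}\) in the kernel. It also shows that this fails after half a turn, and when \(\boldsymbol{\beta}_{0}\) has a component on the zero eigenvalue.

```python
import math


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])


def unit(u):
    n = math.sqrt(dot(u, u))
    return tuple(x / n for x in u)


def beta(t, w, delta, beta0):
    """exp(t M_A / delta) beta0 for M_A x = w x x, via Rodrigues' rotation formula."""
    nw = math.sqrt(dot(w, w))
    k = tuple(x / nw for x in w)                 # unit rotation axis
    theta = t * nw / delta
    c, s = math.cos(theta), math.sin(theta)
    kxb, kdb = cross(k, beta0), dot(k, beta0)
    return tuple(c * b + s * kb + (1.0 - c) * kdb * kk for b, kb, kk in zip(beta0, kxb, k))


def alpha_at(T, w, delta, beta0, n=2000):
    """alpha(T) = integral of beta over [0, T], composite Simpson rule (n even)."""
    dt = T / n
    total = [0.0, 0.0, 0.0]
    for j in range(n + 1):
        weight = 1 if j in (0, n) else (4 if j % 2 else 2)
        b = beta(j * dt, w, delta, beta0)
        for i in range(3):
            total[i] += weight * b[i]
    return tuple(x * dt / 3.0 for x in total)


w = (0.2, -0.5, 0.4)        # hypothetical: sigma(M_A) = {0, +/- i|w|}, lambda* = |w|
delta = 0.8                 # hypothetical value of delta
lam_star = math.sqrt(dot(w, w))
T_turn = 2.0 * math.pi * abs(delta) / lam_star   # beta makes exactly one full turn

beta_ok = unit(cross(w, (1.0, 0.0, 0.0)))        # in Ker(M_A^2 + lam*^2), orthogonal to w
beta_bad = unit(tuple(a + b for a, b in zip(beta_ok, unit(w))))  # has a component along w

alpha_ok = alpha_at(T_turn, w, delta, beta_ok)          # vanishes up to rounding
alpha_half = alpha_at(0.5 * T_turn, w, delta, beta_ok)  # half a turn: does not vanish
alpha_bad = alpha_at(T_turn, w, delta, beta_bad)        # drifts along w: does not vanish
```

The rotation keeps \(|\boldsymbol{\beta}(t)|_{2}=1\), consistent with the control constraint being active along the optimal trajectory.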

In the case where the matrix \(M\) is equal to the matrix \(M^{SW}\) given by (3.12), the minimal time obtained in Proposition 5.3 tends to a limit as the number \(L\) of deformation modes goes to \(+\infty\). This limit is a lower bound for the minimal time obtained with a finite dimensional input space, and it can be seen as the minimal time obtained by allowing an infinite dimensional input space.

Corollary 5.4

Let us consider the optimal control problem (5.1)–(5.5) with the \((L\times L)\)-matrix \(M=M^{SW}\) defined by (3.12). We denote by \(T^{*}_{L}\) the corresponding minimal time given by (5.26). Then the map \(L\mapsto T^{*}_{L}\) is nonincreasing and

\[\lim_{L\to+\infty}T^{*}_{L}=\sqrt{4\pi|h_{1}|}.\]

Proof

Using the expression (3.12) of the matrix \(M^{SW}\), we obtain that its skew-symmetric part \(M_{A}=\frac{1}{2} (M^{SW}-{M^{SW}}^{\top})\) is the \((L\times L)\)-matrix whose only nonzero entries lie on the first super- and subdiagonals, given by

with

According to Proposition 5.3, the minimal time is given by \(T^{*}_{L}=\sqrt{\frac{2\pi|h_{1}|}{\lambda^{*}_{L}}}\), where \(\lambda^{*}_{L}>0\) is given by \(\lambda^{*}_{L}=\max\{|\lambda|,\ \lambda\in\sigma(M_{A})\}\). We first prove that \(L\mapsto T^{*}_{L}\) is nonincreasing. To this end, it suffices to check that \(\lambda^{*}_{L}\) is a nondecreasing function of \(L\). In order to check this assertion, we denote, for every integer \(k\) with \(2\le k\le L\), by \(A_{k}\) the complex \((k\times k)\)-matrix

Note that \(A_{k}\) is a Hermitian matrix, so all its eigenvalues \(\mu_{i}=\mu_{i}(A_{k})\), \(i=1,\ldots,k\), are real. We may assume that the eigenvalues \(\mu_{i}\) are arranged in increasing order, so that \(\mu_{k}(A_{k})=\max_{1\le i\le k}\mu_{i}(A_{k})\). Moreover, one easily checks that if \(\mu\) is an eigenvalue of \(A_{k}\), then so is \(-\mu\). This implies that \(\mu_{k}(A_{k})\ge0\) for every \(2\le k\le L\), so that \(\mu_{L}(A_{L})= \lambda^{*}_{L}\). The inclusion principle (see [12, Theorem 4.3.15]) ensures that

The inclusion property (5.38) leads to \(\lambda^{*}_{L-1}\le \lambda^{*}_{L}\). Thus the largest eigenvalue modulus \(\lambda^{*}_{L}\) is a nondecreasing function of \(L\).
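The monotonicity argument can be illustrated numerically. The sketch below assumes (our reading of the elided displays) that \(M_{A}\) has nonzero entries only on its first super- and subdiagonals and that \(A_{k}\) is \(i\) times the leading \(k\times k\) block of \(M_{A}\). Conjugating by \(D=\mathrm{diag}(i,i^{2},\ldots,i^{k})\) turns \(A_{k}\) into a real symmetric tridiagonal matrix with zero diagonal and the same off-diagonal entries, so \(\lambda^{*}_{k}\) is the largest eigenvalue of that real matrix. We compute it by Sturm-sequence bisection, a technique swapped in for illustration (Fig. 4 was produced with ARPACK). The entries used are hypothetical, not those of (3.12).

```python
def count_below(x, off):
    """Number of eigenvalues < x of the real symmetric tridiagonal matrix with zero
    diagonal and off-diagonal entries `off` (negative pivots of the LDL^T factorization)."""
    count, q = 0, -x
    if q < 0.0:
        count += 1
    for e in off:
        if q == 0.0:
            q = 1e-300          # standard perturbation of a zero pivot
        q = -x - e * e / q
        if q < 0.0:
            count += 1
    return count


def lambda_star(off):
    """Largest eigenvalue (= spectral radius, the spectrum being symmetric) by bisection."""
    n = len(off) + 1
    # Gershgorin bound, slightly enlarged, so that count_below(hi) == n initially.
    hi = max((abs(off[i - 1]) if i > 0 else 0.0) + (abs(off[i]) if i < n - 1 else 0.0)
             for i in range(n)) + 1e-12
    lo = 0.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if count_below(mid, off) == n:
            hi = mid
        else:
            lo = mid
    return hi


# Hypothetical superdiagonal entries of M_A (the actual ones come from (3.12)).
b = [-0.1, -0.3, -0.2, -0.4, -0.35, -0.45]
lams = [lambda_star(b[:k - 1]) for k in range(2, len(b) + 2)]   # lambda*_k, k = 2..7
assert all(x <= y + 1e-12 for x, y in zip(lams, lams[1:]))      # nondecreasing in k
```

For \(k=2\) and \(k=3\) the values can be checked by hand: the eigenvalues are \(\pm|b_{1}|\) and \(\{0,\pm\sqrt{b_{1}^{2}+b_{2}^{2}}\}\), respectively.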

Next, we prove that \(\lambda^{*}_{L}\) tends to \(\frac{1}{2}\) as L goes to +∞. To this end, we first show that:

• The upper bound is obtained by applying the Gershgorin theorem (see [12, Theorem 6.1.1]) to localize the eigenvalues of \(M_{A}\). Indeed, every eigenvalue \(\lambda\) of \(M_{A}\) satisfies

In particular, we have

• The lower bound is obtained by using the Rayleigh-Ritz theorem for the Hermitian matrix \(A_{L}\) (see [12, Theorem 4.2.2]). The largest eigenvalue modulus \(\lambda^{*}_{L}\) is characterized by

Let us choose \(u=(u_{k})_{1\le k\le L}\in\mathbb{C}^{L}\) such that

Then, a straightforward calculation leads to

and \(u^{*}u=L\). According to (5.41) and the fact that \(a_{k}\le0\) for all \(2\le k\le L\), we obtain

Thus, estimate (5.39) is proved. Finally, we prove that \(\lim_{L\to+\infty} \frac{2}{L}\sum_{k=2}^{L} |a_{k}| =1\). Let us denote

We have that

and then

One can easily check that the right-hand side of estimate (5.42) tends to 0 as \(L\) goes to \(+\infty\). Hence \(\lim_{L\to+\infty} \mathcal{S}_{L} =0\), and therefore \(\lim_{L\to+\infty} \frac{2}{L}\sum_{k=2}^{L} |a_{k}| =1\). We conclude from (5.39) that \(\lim_{L\to+\infty} \lambda^{*}_{L}=\frac{1}{2}\), which completes the proof of Corollary 5.4. □
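The two bounds used in this proof can be evaluated on a small example. The sketch below assumes that \(M_{A}\) has entries \(a_{k}\) at position \((k-1,k)\) and \(-a_{k}\) at \((k,k-1)\), and that \(A_{L}=iM_{A}\). It uses the hypothetical test vector \(u_{k}=i^{k}\), since the choice made in the proof is not reproduced here. With \(a_{k}\le0\) this gives \(u^{*}A_{L}u/u^{*}u=\frac{2}{L}\sum_{k=2}^{L}|a_{k}|\), a lower bound for \(\lambda^{*}_{L}\). The largest Gershgorin radius \(\max_{k}(|a_{k}|+|a_{k+1}|)\) gives an upper bound. The values of \(a_{k}\) are hypothetical.

```python
def hermitian_from_offdiag(a):
    """A = i*M_A, where M_A is skew-symmetric with M_A[k-1][k] = a[k-1] and
    M_A[k][k-1] = -a[k-1] (0-based indices, k = 1..L-1), other entries zero."""
    L = len(a) + 1
    A = [[0j] * L for _ in range(L)]
    for k in range(1, L):
        A[k - 1][k] = 1j * a[k - 1]
        A[k][k - 1] = -1j * a[k - 1]
    return A


def gershgorin_bound(A):
    """Largest Gershgorin radius; with a zero diagonal it bounds every |eigenvalue|."""
    return max(sum(abs(x) for j, x in enumerate(row) if j != k) for k, row in enumerate(A))


def rayleigh_quotient(A, u):
    """u* A u / u* u, which is real when A is Hermitian."""
    Au = [sum(x * y for x, y in zip(row, u)) for row in A]
    num = sum(ui.conjugate() * v for ui, v in zip(u, Au))
    den = sum(abs(ui) ** 2 for ui in u)
    return (num / den).real


a = [-0.1, -0.2, -0.25]                       # hypothetical a_k <= 0, here L = 4
A = hermitian_from_offdiag(a)
u = [1j ** k for k in range(len(a) + 1)]      # hypothetical test vector u_k = i^k
lower = rayleigh_quotient(A, u)               # equals (2/L) * sum |a_k|
upper = gershgorin_bound(A)
```

Any lower bound obtained this way can only improve as \(L\) grows if the \(|a_{k}|\) approach \(\frac{1}{2}\), which is what the Cesàro-type argument above establishes.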

The fact, proved rigorously above, that the minimal time \(T^{*}_{L}\) is a nonincreasing function of the number \(L\) of deformation modes is illustrated numerically in Fig. 4, where the eigenvalues of \(\frac{1}{2} (M^{SW}-(M^{SW})^{\top})\) are computed with the ARPACK library in MATLAB.

To conclude, we give a numerical computation of an optimal solution for the simplified control problem (5.1)–(5.5) in the case where \(M\) is the Shapere-Wilczek matrix \(M=M^{SW}\) defined by (3.12). The optimal solution corresponding to the final position \(h_{1}=1\) is computed from the formulae given in Proposition 5.3. We consider radial and axi-symmetric deformations with \(L=10\) deformation modes. The position and the shape of the swimmer at several instants \(t\) are depicted in Fig. 5. The corresponding minimal time is \(T^{*}\simeq4.483\).
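The matrix \(M^{SW}\) for \(L=10\) is not reproduced in this section, so the following sketch only illustrates the procedure on a hypothetical \(L=2\) example with \(M_{A}=\begin{pmatrix}0&m\\-m&0\end{pmatrix}\). For this matrix \(\lambda^{*}=|m|\), and every unit vector lies in \(\mathrm{Ker}(M_{A}^{2}+m^{2})\). The sketch assumes that the simplified system reads \(\dot h=\langle M_{A}\boldsymbol{\alpha},\boldsymbol{\beta}\rangle\), \(\dot{\boldsymbol{\alpha}}=\boldsymbol{\beta}\) with \(h(0)=0\) and \(\boldsymbol{\alpha}(0)=0\) (our reading, consistent with the Hamiltonian in Lemma 5.2). It takes \(\boldsymbol{\beta}(t)=\exp(tM_{A}/\delta)\boldsymbol{\beta}_{0}\), with \(\delta\) chosen so that \(\boldsymbol{\beta}\) makes one full turn in time \(T^{*}\). It then integrates with the classical Runge-Kutta scheme and checks that \(h(T^{*})=h_{1}\) and \(\boldsymbol{\alpha}(T^{*})=0\).

```python
import math


def simulate(m, h1, beta0, n_steps=2000):
    """RK4 integration of the assumed state equations
        h' = <M_A alpha, beta>,  alpha' = beta,  h(0) = 0,  alpha(0) = 0,
    for M_A = [[0, m], [-m, 0]] and beta(t) = exp(t M_A / delta) beta0, |beta0|_2 = 1."""
    T = math.sqrt(2.0 * math.pi * abs(h1) / abs(m))    # T* with lambda* = |m|
    # omega = m / delta: one full turn on [0, T], sign chosen so that h(T) = h1.
    omega = math.copysign(2.0 * math.pi / T, m * h1)

    def beta(t):
        c, s = math.cos(omega * t), math.sin(omega * t)
        # exp(theta J) = [[c, s], [-s, c]] for J = [[0, 1], [-1, 0]], and M_A = m J
        return (c * beta0[0] + s * beta0[1], -s * beta0[0] + c * beta0[1])

    def rhs(t, y):
        _, a1, a2 = y
        b1, b2 = beta(t)
        return ((m * a2) * b1 + (-m * a1) * b2, b1, b2)   # (<M_A alpha, beta>, beta)

    y, t, dt = (0.0, 0.0, 0.0), 0.0, T / n_steps
    for _ in range(n_steps):
        k1 = rhs(t, y)
        k2 = rhs(t + dt / 2, tuple(yi + dt / 2 * ki for yi, ki in zip(y, k1)))
        k3 = rhs(t + dt / 2, tuple(yi + dt / 2 * ki for yi, ki in zip(y, k2)))
        k4 = rhs(t + dt, tuple(yi + dt * ki for yi, ki in zip(y, k3)))
        y = tuple(yi + dt / 6 * (q1 + 2 * q2 + 2 * q3 + q4)
                  for yi, q1, q2, q3, q4 in zip(y, k1, k2, k3, k4))
        t += dt
    return T, y


T, (h_T, a1_T, a2_T) = simulate(m=0.3, h1=1.0, beta0=(1.0, 0.0))
```

With the opposite convention \(\dot h=\langle\boldsymbol{\alpha},M_{A}\boldsymbol{\beta}\rangle\), only the sign of `omega` changes; the minimal time \(T^{*}\) is the same.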

References

Agrachev, A.A., Sachkov, Y.L.: Control Theory from the Geometric Viewpoint. Encyclopaedia of Mathematical Sciences, vol. 87. Springer, Berlin (2004). Control Theory and Optimization, II

Alouges, F., DeSimone, A., Heltai, L.: Numerical strategies for stroke optimization of axisymmetric microswimmers. Math. Models Methods Appl. Sci. 21, 361–387 (2011)

Alouges, F., DeSimone, A., Lefebvre, A.: Optimal strokes for low Reynolds number swimmers: an example. J. Nonlinear Sci. 18, 277–302 (2008)

Bello, J., Fernandez-Cara, E., Lemoine, J., Simon, J.: The differentiability of the drag with respect to the variations of a Lipschitz domain in a Navier-Stokes flow. SIAM J. Control Optim. 35, 626–640 (1997)

Cesari, L.: Optimization—Theory and Applications. Applications of Mathematics, vol. 17. Springer, New York (1983). Problems with ordinary differential equations

Childress, S.: Mechanics of Swimming and Flying. Cambridge Studies in Mathematical Biology, vol. 2. Cambridge University Press, Cambridge (1981)

Coron, J.-M.: Control and Nonlinearity. Mathematical Surveys and Monographs, vol. 136. Am. Math. Soc., Providence (2007)

Dal Maso, G., DeSimone, A., Morandotti, M.: An existence and uniqueness result for the motion of self-propelled microswimmers. SIAM J. Math. Anal. 43, 1345–1368 (2011)

Galdi, G.P.: An Introduction to the Mathematical Theory of the Navier-Stokes Equations. Vol. I. Springer Tracts in Natural Philosophy, vol. 38. Springer, New York (1994). Linearized steady problems

Hartl, R.F., Sethi, S.P., Vickson, R.G.: A survey of the maximum principles for optimal control problems with state constraints. SIAM Rev. 37, 181–218 (1995)

Henrot, A., Pierre, M.: Variation et Optimisation de Formes. Mathématiques & Applications (Berlin) [Mathematics & Applications], vol. 48. Springer, Berlin (2005). Une analyse géométrique [A geometric analysis]

Horn, R.A., Johnson, C.R.: Matrix Analysis. Cambridge University Press, Cambridge (1985)

Jurdjevic, V.: Geometric Control Theory. Cambridge Studies in Advanced Mathematics, vol. 52. Cambridge University Press, Cambridge (1997)

Lighthill, J.: Mathematical Biofluiddynamics. Regional Conference Series in Applied Mathematics, vol. 17. Society for Industrial and Applied Mathematics, Philadelphia (1975). Based on the lecture course delivered to the Mathematical Biofluiddynamics Research Conference of the National Science Foundation held from July 16–20, 1973 at Rensselaer Polytechnic Institute, Troy, New York

Lighthill, M.J.: On the squirming motion of nearly spherical deformable bodies through liquids at very small Reynolds numbers. Commun. Pure Appl. Math. 5, 109–118 (1952)

Michelin, S., Lauga, E.: Efficiency optimization and symmetry-breaking in a model of ciliary locomotion. Phys. Fluids 22, 111901 (2010)

Murat, F., Simon, J.: Sur le contrôle par un domaine géométrique. Report of L.A. 189 76015, Université Paris VI (1976)

Purcell, E.M.: Life at low Reynolds number. Am. J. Phys. 45, 3–11 (1977)

San Martín, J., Takahashi, T., Tucsnak, M.: A control theoretic approach to the swimming of microscopic organisms. Q. Appl. Math. 65, 405–424 (2007)

Shapere, A., Wilczek, F.: Efficiencies of self-propulsion at low Reynolds number. J. Fluid Mech. 198, 587–599 (1989)

Taylor, G.: Analysis of the swimming of microscopic organisms. Proc. R. Soc. Lond. Ser. A 209, 447–461 (1951)

Trélat, E.: Contrôle Optimal. Mathématiques Concrètes [Concrete Mathematics]. Vuibert, Paris (2005). Théorie & applications [Theory and applications]


Cite this article

Lohéac, J., Scheid, JF. & Tucsnak, M. Controllability and Time Optimal Control for Low Reynolds Numbers Swimmers. Acta Appl Math 123, 175–200 (2013). https://doi.org/10.1007/s10440-012-9760-9


Keywords

- Stokes equations

- Fluid-structure interaction

- Controllability

- Time optimal control

- Low Reynolds number swimming