Abstract

Since the adoption of digital video cameras and cross-correlation methods for particle image velocimetry (PIV), the use of color images has largely been abandoned. Recently, however, with the re-emergence of color-based stereo and volumetric techniques and the extensive use of color microscopy, color imaging for PIV has again become relevant. In this work, we explore the potential advantages of color PIV processing by developing new methods for handling multi-color images. The first method cross-correlates every color channel independently to build a color vector cross-correlation plane. This plane can then be searched for one or more peaks, corresponding either to the average displacement of several flow components (using a color ensemble operation) or to the individual motion of colored particles, each with a different behavior. In the latter case, linear unmixing is applied to the correlation plane to separate each known particle type as captured by the different color channels. The second method uses quaternions to encode the color data, so that the cross-correlation is carried out on all colors simultaneously. The resulting correlation plane can be searched either for a single peak, corresponding to the mean flow, or for multiple peaks, with velocity phase separation used to determine which velocity corresponds to which particle type. Each method was tested using synthetic images simulating the color recording of noisy particle fields, both with and without a Bayer filter and demosaicing operation. For single-phase flow, both color methods decreased random errors by approximately a factor of two, because the noise is uncorrelated between color channels, while maintaining bias errors similar to those of traditional monochrome PIV processing.
In multi-component flows, the color vector correlation technique successfully resolved the displacements of two distinct yet coupled flow components, with errors similar to those of traditional grayscale PIV processing of a single phase; traditional PIV processing would fail entirely under such conditions. In contrast, the quaternion methods frequently failed to identify the correct velocity and phase and showed significant cross talk between particle types. Finally, the color vector method was applied to experimental color images of a microchannel designed for contactless dielectrophoresis particle separation, and good results were obtained for both instantaneous and ensemble PIV processing. However, in both the synthetic images generated with a Bayer filter and the experimental data, a significant peak-locking effect with a period of two pixels was observed. This effect is attributed to the inherent architecture of the Bayer filter. To mitigate this artifact, we suggest either developing improved interpolation (demosaicing) algorithms tuned for PIV and applying them to the color images before processing, or using color cameras that do not rely on a Bayer filter and therefore require no demosaicing.



1 Introduction

Particle image velocimetry (PIV) typically uses monochrome images of a displacing particle image pattern to evaluate a velocity field. As a result, the full-color information from a scene is compressed into a single intensity channel, and the original wavelength of the incident light can be resolved only by using color filters. Typically this is not a problem, because current PIV algorithms work with only a single channel of intensity data at a time. This is often sufficient, since particles are typically illuminated with a single-wavelength laser beam and either scatter or fluoresce within a narrow band of wavelengths. If particles of multiple colors are used, separate cameras with tuned wavelength filters are needed to image each type. Color images must first be converted into a single scalar value, or each color channel must be isolated for processing.

Early PIV systems, before the advent of digital video recording and cross-correlation (Willert and Gharib 1991), used photographic film to capture particle images. In these systems, recordings were typically made with multiple exposures on a single frame, and the particle images were autocorrelated to yield the displacement information (Keane and Adrian 1990, 1991; Adrian and Westerweel 2011), leaving the user with a directional ambiguity. One solution was to exploit the color sampling properties of photographic film and record two light pulses of different colors (Adrian 1986; Goss et al. 1991). The color recordings were then digitized by scanning the developed photographic negative through matching optical filters. This produced a pair of images, each containing only a single exposure of each particle, which could then be matched using particle tracking or image correlation. Color information thus enabled successful PIV measurements, but in the final analysis, each image was still handled by the algorithms as a monochromatic intensity field. As digital video cameras and cross-correlation PIV matured, such approaches were abandoned in favor of all-digital systems.

There is also a long history of using spatial color variation to determine the out-of-plane velocity component. Early approaches used a pair of overlapping, differently colored laser beams (Cenedese and Paglialunga 1989; Brücker 1996) to recover the out-of-plane component. More recently, these techniques have been extended to multiple light sheets, in which in-plane velocities are tracked by separating the color images into multiple channels and the out-of-plane component is recovered with standard two-camera stereo methods (Pick and Lehmann 2009). An alternative approach uses color-coded light sheets, either overlapping (McGregor et al. 2007) or continuous across the volume (Watamura et al. 2013), so that velocity can be estimated from the color, and thus the depth, of each particle.

Overall, most existing work has treated color simply as a way to sort particle images into different categories, ignoring its potential to increase the information content, and thus the signal-to-noise ratio, of PIV recordings. In this work, we preserve the information from all color components throughout the cross-correlation analysis rather than reducing it to a composite intensity channel or examining only a single correlation between two predefined channels. Additionally, this work proposes two methods, not previously applied to PIV processing of color images, that simultaneously sort and measure the velocities of two or more types of particles in a flow. Although color images have previously been used for measurements in multiphase flows, these methods typically rely on multiple filters, light sources, or cameras, as well as complex data reduction schemes (Towers et al. 1999). In contrast, the approach presented here is more straightforward and requires only the introduction of a color camera into a standard digital PIV setup.

These techniques are based on two distinct approaches to handling the color information. The first correlates all available color channels as separate planes and assembles those correlations into a color vector cross-correlation plane. This plane can then be searched for the peak value in an average sense, if only a single velocity component is desired, or for peaks matching the individual colors of the imaged particles. Unlike in previous work, particles need not be carefully chosen so that their colors fall almost entirely into a single color channel of the camera sensor (typically a particular wavelength of red, green, or blue).

Signals of a particular color are identified within a mix of multispectral sources using linear unmixing (Zimmermann 2005), which originated in hyperspectral data analysis in the satellite imaging community (Landgrebe 2002) and has recently been adopted by the biological imaging community for identifying cells stained with, or expressing, various fluorescent pigments (Tsurui et al. 2000; Lansford et al. 2001). It is designed to separate the features most closely matching a set of reference colors from data containing a mixture of recorded wavelengths. Importantly, in this work unmixing is applied to the color vector correlation planes rather than to the recorded images.

The second approach encodes the three-component color information into a type of hypercomplex number known as a quaternion and then processes the images across the entire recorded color space in a single operation. Quaternions, first proposed by Hamilton in 1843 (Hamilton 1866), encode four dimensions of real scalar data as a real part (w) and three imaginary parts (a, b, and c), e.g., q = w + ai + bj + ck, where i, j, and k are orthogonal imaginary units such that i² = j² = k² = −1 and ij = k, jk = i, ki = j. For color image data, it is common to decompose the perceived color at each location in an image into three components, e.g., the RGB (red, green, blue) color space. Thus, for RGB images, it is natural to encode the color data as the i, j, k components of a 2D matrix of pure quaternions:

\[ q(x, y) = I_{R}(x, y)\, i + I_{G}(x, y)\, j + I_{B}(x, y)\, k \qquad (1) \]

where \(I_{R}\), \(I_{G}\), and \(I_{B}\) are the red, green, and blue intensities at pixel (x, y).
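The quaternion rules above can be checked with a few lines of code. This is a minimal sketch, assuming quaternions are stored as plain (w, a, b, c) tuples; `qmul`, `rgb_to_pure_quaternion`, and `encode_image` are hypothetical helpers, not the qtfm toolbox the authors used.

```python
# Quaternions stored as (w, a, b, c) tuples: q = w + a*i + b*j + c*k.
# Hypothetical helpers for illustration only (not the qtfm toolbox API).

def qmul(p, q):
    """Hamilton product p * q (non-commutative)."""
    w1, a1, b1, c1 = p
    w2, a2, b2, c2 = q
    return (
        w1 * w2 - a1 * a2 - b1 * b2 - c1 * c2,
        w1 * a2 + a1 * w2 + b1 * c2 - c1 * b2,
        w1 * b2 - a1 * c2 + b1 * w2 + c1 * a2,
        w1 * c2 + a1 * b2 - b1 * a2 + c1 * w2,
    )

def rgb_to_pure_quaternion(r, g, b):
    """Encode one RGB triple as the pure quaternion r*i + g*j + b*k (zero real part)."""
    return (0, r, g, b)

def encode_image(rgb_rows):
    """Encode a 2D image, given as rows of (r, g, b) tuples, into a 2D quaternion matrix."""
    return [[rgb_to_pure_quaternion(*px) for px in row] for row in rgb_rows]

ONE, I, J, K = (1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)
NEG_ONE = (-1, 0, 0, 0)

# Hamilton's rules: i^2 = -1, ij = k, jk = i, ki = j, and ji = -k.
print(qmul(I, I), qmul(I, J), qmul(J, K), qmul(K, I), qmul(J, I))
# -> (-1, 0, 0, 0) (0, 0, 0, 1) (0, 1, 0, 0) (0, 0, 1, 0) (0, 0, 0, -1)
```

A red pixel and a green pixel, for example, become `(0, 255, 0, 0)` and `(0, 0, 255, 0)`.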

Once color data have been encoded into a matrix of quaternion values, standard Fourier transforms (FTs), in both discrete and continuous forms, can be extended to quaternion fields. Sangwine et al. (2001) and Ell and Sangwine (2007) have shown that the single forward and inverse FT for real or complex numbers gives rise to families of related quaternion transforms. Using these transforms, a quaternion cross-correlation, C, between two signals f and g can be implemented efficiently; see the works cited above for details.

For pure translations of patterns whose color does not change, the correlation peak is purely scalar, with no hypercomplex part. However, if the image color changes between frames, a match still occurs, and the rotation in color space can be recovered from the magnitude and direction of the quaternion peak (Moxey et al. 2003). This is not explored here, but it should allow matching of patterns that change color over time.
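A small direct-sum example makes the scalar-peak property concrete. It is a sketch under stated assumptions: a 1D circular correlation defined as C(s) = Σ conj(f(x)) g(x + s), computed by brute force rather than with the quaternion FFTs cited above, on a made-up row of pixels. When frame B is a shifted copy of frame A, every term at the matching shift is conj(q) q = |q|², so the peak is purely real; rotating the colors between frames leaves a nonzero vector part.

```python
def qmul(p, q):
    """Hamilton product of (w, a, b, c) tuples."""
    w1, a1, b1, c1 = p
    w2, a2, b2, c2 = q
    return (w1 * w2 - a1 * a2 - b1 * b2 - c1 * c2,
            w1 * a2 + a1 * w2 + b1 * c2 - c1 * b2,
            w1 * b2 - a1 * c2 + b1 * w2 + c1 * a2,
            w1 * c2 + a1 * b2 - b1 * a2 + c1 * w2)

def qconj(q):
    w, a, b, c = q
    return (w, -a, -b, -c)

def qcorr1d(f, g):
    """Brute-force circular quaternion correlation C(s) = sum_x conj(f[x]) * g[x + s]."""
    n = len(f)
    out = []
    for s in range(n):
        acc = (0, 0, 0, 0)
        for x in range(n):
            term = qmul(qconj(f[x]), g[(x + s) % n])
            acc = tuple(u + v for u, v in zip(acc, term))
        out.append(acc)
    return out

# Frame A: a made-up, non-periodic row of RGB pixels encoded as pure quaternions.
colors = [(1, 0, 0), (0, 2, 0), (3, 1, 0), (0, 0, 1), (2, 2, 1), (0, 1, 3), (1, 0, 2), (0, 0, 0)]
f = [(0, r, g, b) for r, g, b in colors]
n, d = len(f), 3

# Frame B, case 1: same colors, circularly shifted by d pixels.
g_same = [f[(x - d) % n] for x in range(n)]
# Frame B, case 2: shifted and color-rotated, (R, G, B) -> (G, B, R).
g_rot = [(0, q[2], q[3], q[1]) for q in g_same]

c_same = qcorr1d(f, g_same)
peak = max(range(n), key=lambda s: c_same[s][0])  # search on the real part
print(peak, c_same[peak])     # -> 3 (40, 0, 0, 0): a purely scalar peak
print(qcorr1d(f, g_rot)[d])   # the nonzero vector part reflects the color change
```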

In this work, we show how quaternion cross-correlation can be applied to PIV image data to determine the displacement of multispectral color images. For single-phase flows with a single velocity component, only one peak needs to be identified in the resulting quaternion correlation plane. For multi-component flows, two (or more) peaks are identified, corresponding to the different tracer types used. The identified displacement peaks are then used to decompose the images into subimages, each containing only a single velocity component and flow tracer type, and these are sorted based on their average color. This technique was originally introduced by Alexiadis and Sergiadis for motion estimation in machine vision (Alexiadis and Sergiadis 2009a, b) and is referred to as velocity phase separation in this paper.

Each of the proposed techniques is validated using synthetic color and monochrome images of flow fields undergoing uniform translations. Both single-phase and multi-component flows are simulated, with images containing two or three distinct particle colors; in addition, the Bayer color filter common to digital color cameras is simulated to determine its effect on the results. Finally, the methods are tested on experimental images of a microchannel flow in which contactless dielectrophoresis (cDEP) is used to separate two particle types as they flow through the device.

2 Methods

2.1 Color image correlation

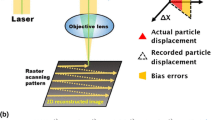

Several new methods that use the information in three-color images were tested: quaternion cross-correlation, in which the RGB color data are encoded as quaternions; color ensemble cross-correlation, in which the correlation planes of the individual color channels are summed; and individual color correlation combined with linear unmixing of the RGB correlation plane. Their relationships are shown schematically in Fig. 1 and described in more detail below. In each case, the methods were adapted to use the phase-only transform and spectral energy filter of the robust phase correlation (RPC) method (Eckstein et al. 2008; Eckstein and Vlachos 2009a, b).

Fig. 1 Schematic of the three proposed color cross-correlation techniques. Color ensemble correlation averages the scalar correlations of the color channels into a single field. Linear unmixing uses knowledge of the color of each source particle to separate the full RGB correlation plane. Quaternion cross-correlation operates on RGB data stored as hypercomplex numbers; its result is then used to separate the source images based on the velocity of each particle rather than its color (the mean RGB values of the separated images are used to sort the measured displacements).

2.1.1 Quaternion cross-correlation

For this approach, diagrammed in the lower left corner of Fig. 1, each RGB color image was encoded as a 2D quaternion matrix using Eq. (1). These quaternion images were then cross-correlated using a quaternion discrete FT. The result of this operation is depicted as \(C_{Q}\) in Fig. 1, with the real part giving the magnitude of the correlation surface and the vectors depicting the direction of the three hypercomplex components of the quaternion field. Because the cross-correlation has both real and hypercomplex components, a scalar must be chosen for the peak search and subpixel fit (here a standard three-point Gaussian fit in each direction): either the magnitude of the resulting quaternion or the absolute value of its real part. Preliminary testing (results not shown) indicated that the latter yields slightly better results, so it was used for the remainder of this work.
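The three-point Gaussian subpixel estimator mentioned above can be sketched as follows, applied separately in each direction around the integer maximum. This is a generic illustration, not the authors' code; it assumes the three samples are positive (for the quaternion case, the input plane would be the absolute value of the real part), and the function names are hypothetical.

```python
import math

def gauss3_offset(c_minus, c_0, c_plus):
    """Three-point Gaussian sub-pixel offset of a peak; all three samples must be > 0."""
    lm, l0, lp = math.log(c_minus), math.log(c_0), math.log(c_plus)
    return (lm - lp) / (2.0 * (lm - 2.0 * l0 + lp))

def subpixel_peak(plane):
    """Integer argmax of a 2D list-of-lists plane, refined separately in y and x."""
    rows, cols = len(plane), len(plane[0])
    iy, ix = max(((y, x) for y in range(rows) for x in range(cols)),
                 key=lambda p: plane[p[0]][p[1]])
    if not (0 < iy < rows - 1 and 0 < ix < cols - 1):
        return float(iy), float(ix)  # peak on the border: no neighbors to fit
    dy = gauss3_offset(plane[iy - 1][ix], plane[iy][ix], plane[iy + 1][ix])
    dx = gauss3_offset(plane[iy][ix - 1], plane[iy][ix], plane[iy][ix + 1])
    return iy + dy, ix + dx

# A sampled Gaussian peak centred at (y, x) = (5.3, 4.7); the estimator is exact for such data.
plane = [[math.exp(-((y - 5.3) ** 2 + (x - 4.7) ** 2) / (2 * 1.2 ** 2)) for x in range(11)]
         for y in range(11)]
print(subpixel_peak(plane))  # approximately (5.3, 4.7)
```

Because the logarithm of a Gaussian is a parabola, fitting through three log-samples recovers the centre exactly for Gaussian peaks; real correlation peaks are only approximately Gaussian.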

When particles of different colors have different displacements, an additional step is required to identify the source color of the particles giving rise to each peak in the correlation plane, because a translation of matching color elements creates a scalar peak regardless of their color. The procedure used for this is described in Sect. 2.3 below.

All quaternion math was carried out using version 1.9 of the Quaternion Toolbox for MATLAB (qtfm), available on SourceForge (Sangwine and Le Bihan 2011).

2.1.2 Color vector cross-correlation

It is also possible to apply traditional scalar methods to each color component separately, yielding three correlation fields, \(C_{R}\), \(C_{G}\), and \(C_{B}\), for the red, green, and blue channels, respectively. These three correlation planes can then be combined into a color image, \(C_{RGB}\), in which the color of each peak corresponds to the average color of the correlating structures that gave rise to it. When each flow tracer is visualized by a single color channel, the displacements can be determined simply by finding the peak in each channel and applying a subpixel fit. For microPIV applications, for example, this would require fluorescent probes whose emission spectra are matched to the camera color filters, with no overlap between filters. However, this is not typical; each flow tracer is often visualized by all three color channels, creating correlation peaks in all three correlation planes (as shown in Fig. 1). In quaternion cross-correlation, by contrast, the peak lies in the scalar direction whenever the color of the object is the same in both frames, regardless of that color. Color vector cross-correlation therefore has a clear advantage when the colors of the flow tracers are known ahead of time.
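A minimal sketch of building \(C_{RGB}\) by correlating each channel independently. Assumptions: plain FFT-based circular cross-correlation rather than the RPC filtering the authors used, synthetic random frames, and hypothetical function names.

```python
import numpy as np

def xcorr2_circular(a, b):
    """FFT-based circular cross-correlation; its peak sits at the displacement of b relative to a."""
    fa = np.fft.fft2(a - a.mean())
    fb = np.fft.fft2(b - b.mean())
    return np.real(np.fft.ifft2(np.conj(fa) * fb))

def color_vector_correlation(frame_a, frame_b):
    """Correlate each color channel independently and stack the planes into an (H, W, 3) plane."""
    return np.stack([xcorr2_circular(frame_a[..., ch], frame_b[..., ch])
                     for ch in range(frame_a.shape[-1])], axis=-1)

def peak_displacement(plane):
    """Integer displacement (dy, dx) of the maximum, wrapped to the range [-N/2, N/2)."""
    iy, ix = np.unravel_index(np.argmax(plane), plane.shape)
    ny, nx = plane.shape
    return (int(iy) - ny if iy >= ny // 2 else int(iy),
            int(ix) - nx if ix >= nx // 2 else int(ix))

rng = np.random.default_rng(1)
frame_a = rng.random((32, 32, 3))                           # made-up color frame
frame_b = np.roll(frame_a, shift=(2, -3), axis=(0, 1))      # uniform shift of (2, -3) pixels
c_rgb = color_vector_correlation(frame_a, frame_b)
print(c_rgb.shape, [peak_displacement(c_rgb[..., ch]) for ch in range(3)])
# -> (32, 32, 3) [(2, -3), (2, -3), (2, -3)]
```

With real mixed-color tracers, each channel plane would contain peaks from every particle type it sees, which is why the unmixing step described next is needed.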

To separate the contribution of each particle color to the correlation plane, linear unmixing (described in detail below) is applied to the \(C_{RGB}\) correlation plane, yielding scalar correlation planes corresponding to each original particle type (planes \(C_{P1}\), \(C_{P2}\), and \(C_{P3}\) in the lower right of Fig. 1) with minimal cross talk from particles of different colors. The peaks are then identified, and a standard three-point Gaussian fit is used to find their subpixel locations.

2.1.3 Color ensemble cross-correlation

When all tracers move uniformly, it is not necessary to consider each color channel separately. Traditionally, if such a flow were recorded with color images, the images would be converted to grayscale before cross-correlation, or only a single color channel would be used. Here, we propose keeping the full-color information until after the correlation step in order to preserve the additional information in the original images. The three color correlation fields are then averaged to yield a scalar field, \(C_{(RGB)}\), in which the peak can be located using standard PIV techniques. This is most appropriate when the group velocities of all tracer types are approximately equal. When particles of different colors move differently, the source of each peak cannot be distinguished from the correlation plane alone, although techniques such as velocity phase separation could be used.
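The color ensemble variant differs only in collapsing the three channel planes into one scalar plane before the peak search. In this sketch (hypothetical names; plain FFT correlation instead of RPC; a made-up particle-like pattern and noise level), the channel planes are averaged; summing them instead, as described in Sect. 2.1, only rescales the plane and does not move the peak.

```python
import numpy as np

def color_ensemble_correlation(frame_a, frame_b):
    """Correlate all color channels at once (FFT over the spatial axes) and average the planes."""
    fa = np.fft.fft2(frame_a - frame_a.mean(axis=(0, 1)), axes=(0, 1))
    fb = np.fft.fft2(frame_b - frame_b.mean(axis=(0, 1)), axes=(0, 1))
    planes = np.real(np.fft.ifft2(np.conj(fa) * fb, axes=(0, 1)))  # (H, W, 3): one plane per channel
    return planes.mean(axis=-1)                                     # scalar ensemble plane

rng = np.random.default_rng(2)
pattern = rng.random((64, 64)) ** 8                 # sparse bright spots standing in for particles
color = np.array([63, 255, 192]) / 255.0            # particle 1 color from Sect. 2.5.1
# Each channel gets its own independent noise, so noise does not correlate across channels.
frame_a = pattern[..., None] * color + 0.05 * rng.standard_normal((64, 64, 3))
frame_b = (np.roll(pattern, (3, 5), axis=(0, 1))[..., None] * color
           + 0.05 * rng.standard_normal((64, 64, 3)))

plane = color_ensemble_correlation(frame_a, frame_b)
iy, ix = np.unravel_index(np.argmax(plane), plane.shape)
print(int(iy), int(ix))  # expected displacement: 3 5
```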

2.2 Linear unmixing of cross-correlation

Linear unmixing was performed by solving the following inverse problem (Zimmermann 2005):

\[ S(\lambda_{j}) = \sum_{i} R_{i}(\lambda_{j})\, A_{i} \qquad (2) \]

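Here S represents the signal as a function of the sampled wavelengths \(\lambda_{j}\), and R is a matrix of the linear response of the sensor at each wavelength to the excitation of each of the \(A_{i}\) input types. In this case, the signal wavelengths are limited to the red, green, and blue color channels of the image, although in general many more wavelengths can be sampled using different filters. The input types \(A_{i}\) correspond to the different particle types in the images. For the problem to be solvable, the number of source types must be less than or equal to the number of signal channels. Under these restrictions, Eq. (2) can be written for the unmixing problem studied here as

\[ \begin{bmatrix} S_{R} \\ S_{G} \\ S_{B} \end{bmatrix} = \begin{bmatrix} R_{1,R} & R_{2,R} & R_{3,R} \\ R_{1,G} & R_{2,G} & R_{3,G} \\ R_{1,B} & R_{2,B} & R_{3,B} \end{bmatrix} \begin{bmatrix} A_{1} \\ A_{2} \\ A_{3} \end{bmatrix} \qquad (3) \]

where \(S_{R} = S(\lambda_{R})\), \(R_{i,R} = R_{i}(\lambda_{R})\), and similarly for the green and blue channels.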

In the presence of noise and other errors in the measured signal, an exact solution is generally not available; instead, the relative intensities of each component \(A_{i}\) were obtained with a least-squares inversion. When only two source components were used to form the final images (i.e., two particle types), the response-matrix coefficients corresponding to the third component were set to dummy values; tests showed that these coefficients could instead be removed without affecting the final results, which turns the inversion into an overdetermined system.
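The least-squares unmixing of a color correlation plane can be sketched with NumPy, treating each pixel of \(C_{RGB}\) as one RGB signal vector. Assumptions: the columns of the response matrix are simply the normalized colors of particles 1 and 2 from Sect. 2.5.1 (the two-particle, overdetermined case), the correlation planes are made up, and `unmix_correlation` is a hypothetical name.

```python
import numpy as np

def unmix_correlation(c_rgb, response):
    """Least-squares linear unmixing of an (H, W, 3) color correlation plane.

    response: (3, P) matrix whose column p is the RGB response to particle type p.
    Returns an (H, W, P) stack with one scalar correlation plane per particle type."""
    h, w, nch = c_rgb.shape
    signal = c_rgb.reshape(-1, nch).T                             # (3, H*W): one RGB vector per pixel
    amounts, *_ = np.linalg.lstsq(response, signal, rcond=None)   # (P, H*W)
    return amounts.T.reshape(h, w, -1)

# Response matrix: columns are the particle 1 and particle 2 colors, scaled to [0, 1].
R = np.array([[63, 255],
              [255, 15],
              [192, 127]], dtype=float) / 255.0

# Made-up correlation planes: particle 1 peaks at (3, 4), particle 2 at (10, 2).
p1 = np.zeros((16, 16)); p1[3, 4] = 1.0
p2 = np.zeros((16, 16)); p2[10, 2] = 1.0
c_rgb = p1[..., None] * R[:, 0] + p2[..., None] * R[:, 1]   # the mixed color correlation plane

planes = unmix_correlation(c_rgb, R)
print(np.unravel_index(planes[..., 0].argmax(), p1.shape),
      np.unravel_index(planes[..., 1].argmax(), p2.shape))
```

With noise-free, consistent data the least-squares solution reproduces the two planes exactly; with real correlation planes the residual absorbs noise and any color mismatch in R.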

For tests with synthetic images, the response matrix was determined based on the known input colors of each particle type. For experimental images, approximate values of R were determined by manual inspection of the recorded color images, but might also be recovered more accurately using knowledge of the particle emission and camera filter spectra.

2.3 Velocity phase separation of images

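Because the quaternion cross-correlation yields scalar peaks regardless of the color of the matched patterns, it is ambiguous which particle type created each identified peak. To resolve this, we decomposed the particle image patterns in the Fourier domain into the sum of two or more subimages, each with a unique associated displacement, using Eq. (4). This approach was originally used for motion tracking in machine vision (Alexiadis and Sergiadis 2009b).

\[ F_{t}(\mathbf{u}) = \sum_{m=1}^{M} C_{m}(\mathbf{u})\, e^{-2\pi \mathrm{i}\, t\, \mathbf{u} \cdot \mathbf{d}_{m}}, \qquad t = 0, 1, \ldots \qquad (4) \]

Here \(F_{t}\) is the Fourier transform of frame t, \(C_{m}\) is the Fourier transform of the subimage containing component m in the first frame, \(\mathbf{d}_{m}\) is the displacement of component m per frame, \(\mathbf{u}\) is the spatial frequency, and \(\mathrm{i}\) is the complex imaginary unit.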

The equation follows from the Fourier shift theorem: a shift in image coordinates appears in the Fourier domain as a linearly varying complex phase whose slope is proportional to the shift. Because F and the displacements are known, the C terms can be determined by inversion, one frequency component at a time. Before each inversion, the determinant of the shift matrix is calculated, and wherever the matrix is too close to singular, the source components C are set to zero at that frequency. In principle, the system could be solved for all frequency components simultaneously, but in testing, the resulting system of equations was found to be too ill-conditioned and frequently failed to invert properly.
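The per-frequency inversion can be illustrated in 1D. This sketch assumes two frames, two components with known integer displacements, circular shifts, and a hypothetical singularity threshold `tol`; the paper applies the idea to 2D interrogation regions, one color channel at a time. At zero frequency both phase factors equal 1, so the shift matrix is always singular there and the mean of each component cannot be recovered.

```python
import numpy as np

def separate_two_components(f0, f1, d1, d2, tol=1e-6):
    """Split two 1D frames into two components with known displacements d1, d2 (pixels).

    At each frequency u, solves [F0, F1]^T = [[1, 1], [e1, e2]] [C1, C2]^T,
    with e_m = exp(-2*pi*i*u*d_m). Nearly singular frequencies get C1 = C2 = 0."""
    n = f0.size
    u = np.fft.fftfreq(n)                   # spatial frequency in cycles per pixel
    F0, F1 = np.fft.fft(f0), np.fft.fft(f1)
    e1 = np.exp(-2j * np.pi * u * d1)       # phase ramp produced by shift d1
    e2 = np.exp(-2j * np.pi * u * d2)       # phase ramp produced by shift d2
    det = e2 - e1                           # determinant of the 2x2 shift matrix
    ok = np.abs(det) > tol
    C1 = np.zeros(n, dtype=complex)
    C2 = np.zeros(n, dtype=complex)
    C1[ok] = (e2[ok] * F0[ok] - F1[ok]) / det[ok]
    C2[ok] = (F1[ok] - e1[ok] * F0[ok]) / det[ok]
    return np.real(np.fft.ifft(C1)), np.real(np.fft.ifft(C2))

rng = np.random.default_rng(0)
c1, c2 = rng.random(64), rng.random(64)         # two made-up "particle" subimages
d1, d2 = 3, -2                                  # their known displacements
f0 = c1 + c2                                    # first frame
f1 = np.roll(c1, d1) + np.roll(c2, d2)          # second frame: each component moves on its own
r1, r2 = separate_two_components(f0, f1, d1, d2)
# The u = 0 term is singular (e1 = e2 = 1), so only the zero-mean parts are recovered.
print(np.allclose(r1, c1 - c1.mean()), np.allclose(r2, c2 - c2.mean()))  # -> True True
```

The same singularity occurs at any frequency where u·(d1 − d2) is an integer, which is why close displacements make the separation fragile.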

To apply this method to PIV data, we first divided each image into smaller interrogation regions (regions of interest, ROIs), processed each with quaternion cross-correlation, and identified the two largest peaks in the resulting correlation plane. Each ROI was then decomposed using Eq. (4) into two subimages, each matching one of the identified displacement peaks. As previously mentioned, each subimage was sorted based on its average particle color, and a final flow field was assembled for each tracer type. For efficiency, Eq. (4) was applied separately to each color channel.

2.4 Synthetic image generation

Most digital cameras record color images in one of two ways. In the first, incident light is split using filters into at least three distinct wavelength bands and three or more sensors image every color across the entire scene. These sensors can either be stacked in alignment with the light path (such as the Foveon X3) or split up with the light being directed using prisms or mirrors (so-called “3CCD” or “3MOS” designs). Usually the colors correspond to the red, green, and blue wavelengths typically used in storing or transmitting RGB image data. The second, more common approach is to place a filter at each pixel on a single sensor so that each pixel accepts light from only a single color band, again usually corresponding to either red (R), green (G), or blue (B) light. The most common pattern for such a filter is known as a Bayer filter, and the colors are arranged in a repeating 2 × 2 mosaic pattern with G and R alternating in one row and B and G in the next. Under this arrangement, there are twice as many green pixels as either red or blue. Other arrangements of color filters are possible, but are less common. The full-color image with RGB data at each pixel must then be reconstructed using a demosaicing algorithm, and often a secondary de-aliasing filter is applied to minimize image color artifacts caused by this approach, although this has the effect of reducing resolution as well. Full-color images can also be reconstructed by subsampling the original sensor data so that each reconstructed pixel contains data from at least one complete Bayer filter element, in which case interpolation is not necessary. This reduces the possibility of color artifacts, but reduces the maximum available spatial resolution.
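The GRBG layout and the interpolation-free 2 × 2 reconstruction described above can be sketched as follows. This is illustrative only: the helper names are hypothetical, and the demosaicing algorithm used later (MATLAB's DEMOSAIC()) is not reproduced.

```python
import numpy as np

def grbg_masks(h, w):
    """Boolean masks (red, green, blue) of a GRBG Bayer pattern: rows G R G R ... / B G B G ..."""
    yy, xx = np.mgrid[0:h, 0:w]
    even_row, even_col = (yy % 2 == 0), (xx % 2 == 0)
    green = even_row == even_col      # (even, even) and (odd, odd) sites
    red = even_row & ~even_col        # even rows, odd columns
    blue = ~even_row & even_col       # odd rows, even columns
    return red, green, blue

def bayer_mosaic(rgb):
    """Sample an (H, W, 3) full-color image through the GRBG filter into one (H, W) sensor image."""
    red, green, blue = grbg_masks(*rgb.shape[:2])
    return rgb[..., 0] * red + rgb[..., 1] * green + rgb[..., 2] * blue

def superpixel_rgb(raw):
    """Half-resolution RGB from each complete 2x2 GRBG cell, without interpolation (even H, W)."""
    g1, r = raw[0::2, 0::2], raw[0::2, 1::2]
    b, g2 = raw[1::2, 0::2], raw[1::2, 1::2]
    return np.stack([r, (g1 + g2) / 2.0, b], axis=-1)

red, green, blue = grbg_masks(8, 8)
print(red.sum(), green.sum(), blue.sum())   # -> 16 32 16: twice as many green sites
flat = np.ones((8, 8, 3)) * np.array([10.0, 20.0, 30.0])
half = superpixel_rgb(bayer_mosaic(flat))
print(half.shape)                           # -> (4, 4, 3): half the resolution in each direction
```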

To analyze the performance of the new methods, color synthetic images of PIV particle fields were generated to simulate the above camera imaging processes (see Fig. 2). Because most color cameras use a Bayer filter to image three-color data on a single CCD or CMOS sensor, it was important to model the effect of this procedure on images. Additionally, for the same particle fields, images recreating the grayscale intensity field without color filtering as well as full-color images without the use of a Bayer filter were simulated. The full procedure for the image generation process is detailed below.

Fig. 2 Artificial color image generation procedure. Full-spectrum color images are generated from images of single-color particle fields, and full-spectrum and native grayscale images are recorded. For Bayer-filtered images, the full-spectrum image is subsampled, noise is added, and the images are then demosaiced and saved as either color or grayscale. Solid colored lines indicate data paths for that color, checkered lines indicate Bayer-filtered data, and dashed black lines indicate the addition of noise to the signals.

Image generation began with the creation of one or more pairs of particle image intensity fields (marked P1, P2, and P3 in Fig. 2). Following common practice for simulating PIV images, these fields contained Gaussian particle images with a mean diameter of 3 pixels at the 1/e² intensity level and a standard deviation of 1.0 pixel in that diameter. The recorded intensity of each particle was integrated over a fill factor of 100 %, and intensities were discretized to a dynamic range of 8 bits (intensity counts from 0 to 255). Particles were uniformly distributed across a Gaussian light sheet. Each particle image pair was then assigned an RGB color: the full-intensity color was scaled linearly by the corresponding grayscale intensity field, and the resulting fields were summed to yield a three-color image (“full-spectrum image, noise free” in Fig. 2). Values exceeding the maximum intensity were clipped. This image formed the baseline from which all other versions were derived. Perspective effects on the apparent motion and location of each particle were not simulated.
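The pixel-integrated Gaussian particle images and the color scaling described above can be sketched as follows. Assumptions, none of them taken from the authors' code: an intensity profile peak · exp(−8r²/d²), which falls to 1/e² at r = d/2, integrated exactly over each pixel with the error function; random positions and diameters in the usage example, with diameters clipped at 0.5 pixel to avoid non-positive values; no light-sheet intensity variation; and hypothetical function names.

```python
import math
import numpy as np

def render_particles(shape, xs, ys, diameters, peak=255.0):
    """Sum of Gaussian particle images, each integrated over the full pixel area (100 % fill factor).

    Profile: peak * exp(-8 r^2 / d^2), which falls to 1/e^2 at r = d/2."""
    h, w = shape
    img = np.zeros(shape)
    for xp, yp, d in zip(xs, ys, diameters):
        s = math.sqrt(8.0) / d
        # Exact 1D integrals of the profile over each pixel [x - 0.5, x + 0.5] (separable in x, y).
        ex = np.array([math.erf((x + 0.5 - xp) * s) - math.erf((x - 0.5 - xp) * s) for x in range(w)])
        ey = np.array([math.erf((y + 0.5 - yp) * s) - math.erf((y - 0.5 - yp) * s) for y in range(h)])
        img += peak * (math.pi * d * d / 32.0) * np.outer(ey, ex)
    return img

def colorize(fields, colors):
    """Scale each grayscale field by its full-intensity RGB color, sum, clip, and quantize to 8 bits."""
    rgb = sum(f[..., None] * (np.asarray(c, dtype=float) / 255.0) for f, c in zip(fields, colors))
    return np.clip(np.rint(rgb), 0, 255).astype(np.uint8)

rng = np.random.default_rng(3)
n = 20
field = render_particles((64, 64), rng.uniform(0, 64, n), rng.uniform(0, 64, n),
                         np.clip(rng.normal(3.0, 1.0, n), 0.5, None))
image = colorize([field], [(63, 255, 192)])   # particle 1 color from Sect. 2.5.1
print(image.shape, image.dtype)               # -> (64, 64, 3) uint8
```

For a particle well inside the image, the integrated intensity is peak · πd²/8, independent of its sub-pixel position, which is what makes this rendering a common choice for synthetic PIV images.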

To simulate intensity-only grayscale images, the full-color image field was collapsed to a luminance-only field using the RGB2GRAY() command in MATLAB, which calculates the luminance as

\[ Y = 0.2989\, R + 0.5870\, G + 0.1140\, B \]

This is the same form used to calculate the luminance channel of a three-channel NTSC luminance–chrominance image. At this point, the data are assumed to be equivalent to what the sensor would record, and normally distributed noise with a mean of 5 % and a standard deviation of 5 % of full scale was added to the image (“raw grayscale with noise” in Fig. 2).
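The grayscale conversion and noise model can be sketched in NumPy. The luminance weights are those of MATLAB's rgb2gray; the helper names are hypothetical, and unlike rgb2gray on 8-bit input, this sketch neither rounds nor clips. Applied to the three particle colors of Sect. 2.5.1, it reproduces the intensity ordering (green, then red, then blue) stated there.

```python
import numpy as np

# Luminance weights used by MATLAB's rgb2gray (the NTSC luminance channel).
LUMA_WEIGHTS = np.array([0.2989, 0.5870, 0.1140])

def to_luminance(rgb):
    """Collapse an (..., 3) RGB array to luminance (no rounding or clipping)."""
    return np.asarray(rgb, dtype=float) @ LUMA_WEIGHTS

def add_sensor_noise(img, rng, full_scale=255.0):
    """Add Gaussian noise with mean 5 % and standard deviation 5 % of full scale."""
    return img + rng.normal(0.05 * full_scale, 0.05 * full_scale, size=np.shape(img))

# Luminance of particles 1, 2 and 3 at 100 % intensity (colors from Sect. 2.5.1).
colors = np.array([[63, 255, 192], [255, 15, 127], [15, 95, 255]])
print(to_luminance(colors).round(1))   # green particle brightest, then red, then blue

noisy = add_sensor_noise(np.zeros((200, 200)), np.random.default_rng(0))
print(noisy.mean(), noisy.std())       # both close to 12.75 counts (5 % of 255)
```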

To simulate a full-spectrum image with noise (such as might be acquired by a Foveon X3 camera), the same noise field used for the grayscale image was added to the green channel; this field was then rotated and flipped to create two additional noise fields that, at any given point in the image, are not locally correlated between color channels. These two fields were added to the blue and red channels, so that all three color components have image noise with equivalent statistical properties.
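One way to derive the red and blue noise fields from the green one is sketched below. The specific operations (a 90° rotation for red and an up-down flip for blue) are assumptions, since the text does not say which rotation and flip were used. Both are permutations of the same values, so the statistics are unchanged, while co-located samples come from different pixels and are therefore essentially uncorrelated. `np.rot90` keeps the shape only for square images, such as the 1,024 × 1,024 images used here.

```python
import numpy as np

def rgb_noise_from_green(green_noise):
    """Derive red and blue noise fields by rotating / flipping the green-channel noise.

    Assumed operations: 90-degree rotation for red, up-down flip for blue (square input)."""
    red_noise = np.rot90(green_noise)
    blue_noise = np.flipud(green_noise)
    return red_noise, green_noise, blue_noise

def pixelwise_corr(u, v):
    """Correlation coefficient between co-located samples of two fields."""
    return np.corrcoef(u.ravel(), v.ravel())[0, 1]

rng = np.random.default_rng(0)
green = rng.normal(0.05 * 255, 0.05 * 255, size=(256, 256))  # same statistics as the grayscale noise
red, green, blue = rgb_noise_from_green(green)
print(pixelwise_corr(green, red), pixelwise_corr(green, blue), pixelwise_corr(red, blue))
# all three values are close to zero
```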

To generate Bayer-filtered color images, the noise-free full-color images were subsampled according to a GRBG Bayer filter to create a mosaic single-sensor intensity image. The noise field used in the grayscale images was then added to these synthetic sensor data. The resulting image field was demosaiced using MATLAB’s DEMOSAIC() command, which implements gradient-corrected linear interpolation (Malvar et al. 2004). De-aliasing was not used, in order to preserve the maximum possible spatial resolution. This image was then either used as is (“Bayer color with noise” in Fig. 2) or further converted to grayscale (again using RGB2GRAY()) to represent the output a researcher would obtain by collapsing a color image to grayscale for processing with a traditional PIV algorithm that handles only grayscale images (“RGB to gray with noise” in Fig. 2).

It can be seen qualitatively in Fig. 2 that the final images which went through the Bayer filter/demosaicing steps show increased color and intensity noise. As will be shown later in the Results section, this has a significant impact on the results of the PIV processing.

2.5 Artificial flow fields

The synthetic image generation procedure described above was used to simulate recordings of several simple flow fields. For each image pair and particle type, a flow field was generated and particles were randomly seeded throughout the image. The particle locations were then displaced according to the simulated velocity field, so that the displacements depended on the particle type. In each case, each simulated tracer was assumed to move independently of the surrounding tracers.

For each flow field, RPC-based correlation was used (Eckstein et al. 2008; Eckstein and Vlachos 2009b); each region of interest was 64 × 64 pixels in size and was windowed using a Gaussian function to an effective circular resolution of 32 pixels (Eckstein and Vlachos 2009a). Images were 1,024 × 1,024 pixels in each case. The flow field was sampled on a 32 × 32 pixel grid, giving 0 % overlap of the effective windows so that measurements remained independent, for a total of 1,024 vectors per synthetic flow field. Only uniform flow was simulated, under the assumption that the relative effects of shear and rotation would be similar to those for previously tested PIV algorithms.

2.5.1 Uniform flow

The first image set was designed to test the effect of color correlation techniques on a flow field in which the imaged particles were of multiple colors but all traveled in the same direction. The objective was to determine whether multispectral particle images could improve the accuracy and precision of PIV measurements compared to grayscale images. Three different particle types were simulated, all with equal velocity. Multiple image pairs were generated, each with a single velocity for all particles in the field. Across the whole set, velocities ranged from 0 to 8 pixels/frame in the x direction and were fixed at 0.3 pixels/frame in the y direction. At 100 % intensity, particle 1 had a color of (63, 255, 192), mostly green/cyan in RGB color space; particle 2 was mostly red with a little magenta, (255, 15, 127); and particle 3 was mostly blue, (15, 95, 255). These colors were chosen based on preliminary experimental images of microchannel flow with three different fluorescent particle types approximating red, green, and blue emission spectra.

Because of the chosen colors, the green particles had the highest intensity at 100 % brightness, followed by the red and, finally, the blue. Pure colors were intentionally avoided so that cross talk between color channels would be present, since in real images it is almost never possible to match particle colors precisely to camera color channels. Particles were seeded at an average density of 0.005 particles/pix² for each color, or 0.015 particles/pix² in total, which yielded about 15 particles per 32 × 32 pixel interrogation region.

2.5.2 Two component flow

The second test condition examined the ability of the multispectral methods to distinguish accurately the motion of two independent groups of tracers. For these image sets, only particle types 1 (green) and 2 (red) were used, each at a seeding density of 0.01 particles/pix², for a total density of 0.02 particles/pix². Because each group is resolved independently, it is the seeding density of each particle type, not the total, that controls the success of the PIV correlation. For 32 × 32 pixel ROIs, only about 10 particles per type are visible on average, which is at the lower end of the generally accepted optimal particle density (Adrian and Westerweel 2011). For each image pair, all particles of a given color were assigned a single displacement between 0 and 8 pixels/frame in the x direction, while the displacement in the y direction was fixed at 0.3 pixels/frame for both particle fields. Multiple image pairs were generated so that the set spanned every possible combination of velocities for the two particle types.
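The particle counts quoted in Sects. 2.5.1 and 2.5.2, and the vector count per field, follow from simple arithmetic; a quick check with a hypothetical helper:

```python
def particles_per_window(density_per_px2, window_px=32):
    """Expected number of particle images in a square window for a seeding density in particles/pix^2."""
    return density_per_px2 * window_px ** 2

print(particles_per_window(0.005))   # uniform flow, per color: about 5
print(particles_per_window(0.015))   # uniform flow, all three colors: about 15
print(particles_per_window(0.01))    # two-component flow, per particle type: about 10
print((1024 // 32) ** 2)             # vectors per 1,024 x 1,024 image on a 32-pixel grid: 1024
```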

2.6 Experimental flow in a microchannel

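Contactless dielectrophoresis (cDEP) was selected as a representative application. cDEP is a recently developed technique for particle and cell manipulation and sorting. In conventional DEP, an electric field applied between electrodes inserted into a microfluidic device exerts a force on dielectric particles in the fluid. The dielectrophoretic force depends on the particle radius r, the fluid permittivity \(\varepsilon_{m}\), and the gradient of the squared electric field magnitude, \(\nabla \left| E \right|^{2}\), as well as on the Clausius–Mossotti factor \(f_{CM}\), which describes the relationship between the dielectric properties of the particle and the fluid:

\[ \mathbf{F}_{DEP} = 2\pi \varepsilon_{m} r^{3}\, \mathrm{Re}\left[ f_{CM} \right] \nabla \left| E \right|^{2}, \qquad f_{CM} = \frac{\varepsilon_{p}^{*} - \varepsilon_{m}^{*}}{\varepsilon_{p}^{*} + 2\varepsilon_{m}^{*}} \]

where \(\varepsilon_{p}^{*}\) and \(\varepsilon_{m}^{*}\) are the complex permittivities of the particle and the medium, \(\varepsilon^{*} = \varepsilon - \mathrm{i}\sigma/\omega\), with conductivity σ and angular frequency ω.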

Because the DEP force depends on particle properties, DEP can be used to separate particles of different sizes and electrical properties by tuning the frequency and strength of the electric field. cDEP is a variant of DEP that uses fluid electrode channels isolated from the main microfluidic channel, so that the electrodes never contact the sample; this enables sterile sorting. For further information on cDEP and its applications, see (Shafiee et al. 2009, 2010; Salmanzadeh et al. 2011).
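The size selectivity that lets the small beads act as tracers in the microPIV experiment can be illustrated with the standard DEP expression \(F = 2\pi\varepsilon_m r^3 \mathrm{Re}[f_{CM}] \nabla|E|^2\) balanced against Stokes drag \(6\pi\mu r v\). The sketch below assumes, hypothetically, that both bead types share the same \(\mathrm{Re}[f_{CM}]\) and experience the same field gradient (for real polystyrene beads \(f_{CM}\) may itself depend on size), and all numerical inputs are placeholders, so only the scaling with radius should be read from it.

```python
import math

def dep_force(radius_m, eps_m, re_fcm, grad_e2):
    """Time-averaged DEP force along grad|E|^2: 2*pi*eps_m*r^3*Re[f_CM]*grad|E|^2 (SI units)."""
    return 2.0 * math.pi * eps_m * radius_m ** 3 * re_fcm * grad_e2

def stokes_drift(force_n, radius_m, viscosity_pa_s):
    """Particle velocity relative to the fluid when the DEP force balances Stokes drag."""
    return force_n / (6.0 * math.pi * viscosity_pa_s * radius_m)

# Placeholder values (hypothetical), identical for both bead types.
EPS_M = 80 * 8.854e-12   # water-like permittivity, F/m
RE_FCM = -0.5            # placeholder Re[f_CM] (negative DEP)
GRAD_E2 = 1e13           # placeholder grad|E|^2, V^2/m^3
MU = 1.0e-3              # viscosity, Pa s

r_green, r_red = 5e-6, 0.5e-6   # radii of the 10-um and 1-um beads
f_green = dep_force(r_green, EPS_M, RE_FCM, GRAD_E2)
f_red = dep_force(r_red, EPS_M, RE_FCM, GRAD_E2)

force_ratio = f_green / f_red                      # scales as (r_green / r_red)^3
drift_ratio = (stokes_drift(f_green, r_green, MU)
               / stokes_drift(f_red, r_red, MU))   # scales as (r_green / r_red)^2
print(round(force_ratio), round(drift_ratio))  # 1000 100
```

Under these assumptions the tenfold difference in bead diameter gives a thousandfold difference in force and a hundredfold difference in DEP-induced drift velocity, which is consistent with the small red beads following the flow.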

This experiment was performed using the cDEP system described by Sano et al. (2011) (microfluidic device Design 3). Distilled water containing a mixture of two types of polystyrene beads (1-μm diameter red fluorescent particles and 10-μm diameter green fluorescent particles; Fluoro-Max R0100 and Fluoro-Max G1000, Thermo Scientific) was perfused through the microfluidic device at a rate of 0.005 mL/h using a syringe pump (PhD Ultra, Harvard Apparatus). The conductivity of the particle solution was measured to be 25 μS/cm (SevenGo Pro Conductivity Meter, Mettler-Toledo). The electric field was applied as a 50 kHz sinusoidal signal at 400 V RMS. Images were acquired at 19 Hz with a color camera (Leica DFC420) mounted on a Leica DMI 6000B microscope equipped with fluorescence illumination and filters. The images measured 648 × 864 pixels, with a resolution of 0.83 μm/pix at 5X magnification. A representative image is shown in Fig. 3.
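For reference, displacements in pixels/frame can be converted to physical velocities using the stated image scale and frame rate. The sketch below assumes that correlations are computed between consecutive frames, so that \(\Delta t = 1/19\) s; the `frame_step` parameter is a hypothetical addition for the case where frames are skipped.

```python
def to_um_per_s(disp_px_per_frame, scale_um_per_px=0.83, frame_rate_hz=19.0, frame_step=1):
    """Convert a displacement in pixels/frame to micrometres per second.

    Assumes the correlated frames are `frame_step` frames apart
    (frame_step=1 means consecutive frames)."""
    dt_s = frame_step / frame_rate_hz
    return disp_px_per_frame * scale_um_per_px / dt_s

print(f"{to_um_per_s(1.0):.2f}")  # 15.77
print(f"{to_um_per_s(0.1):.3f}")  # 1.577
```

At this magnification, a bias of 0.1 pixels/frame therefore corresponds to roughly 1.6 μm/s.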

Based on the acquired images, it was determined that the green particles had an apparent size of about 16 pixels in diameter and an average RGB color vector corresponding to (75, 220, 255), almost pure cyan, while the red particles were approximately 2–3 pixels in size and were almost pure red with a color of (255, 68, 55).
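The measured color vectors suggest how a mixing matrix for linear unmixing might be assembled. The sketch below is one possible construction, not necessarily the one used in this work. It assumes that each color channel image is a weighted sum of the per-type particle fields, with weights given by the unit-normalized color vector, and that correlations between different particle types are negligible; each channel's correlation plane is then approximately the sum of the per-type planes weighted by the squared color weights. The per-type planes are recovered at every correlation lag by least squares with `numpy.linalg.lstsq`.

```python
import numpy as np

# Mean particle colors measured in the cDEP images (RGB, 0-255), as reported above.
GREEN_BEAD = np.array([75.0, 220.0, 255.0])
RED_BEAD = np.array([255.0, 68.0, 55.0])

def correlation_mixing_matrix(colors):
    """Hypothetical mixing matrix M (n_channels x n_types) for correlation planes.

    Assumes channel k of the image is sum_j a_kj * P_j, with a_kj the
    unit-normalised color of type j, and that correlations between different
    particle types are negligible, so channel plane R_k ~ sum_j a_kj**2 * R_j."""
    a = np.stack([c / np.linalg.norm(c) for c in colors], axis=1)
    return a ** 2

def unmix_correlation(planes, mixing):
    """Least-squares estimate of per-type planes from per-channel planes.

    planes: (n_channels, H, W); mixing: (n_channels, n_types).
    Returns (n_types, H, W)."""
    n_ch, h, w = planes.shape
    coeffs, *_ = np.linalg.lstsq(mixing, planes.reshape(n_ch, -1), rcond=None)
    return coeffs.reshape(mixing.shape[1], h, w)

# Round trip on synthetic per-type planes (noise-free, so recovery is exact).
rng = np.random.default_rng(0)
true_planes = rng.random((2, 5, 5))
M = correlation_mixing_matrix([GREEN_BEAD, RED_BEAD])
channel_planes = np.einsum("kj,jhw->khw", M, true_planes)
print(np.allclose(unmix_correlation(channel_planes, M), true_planes))  # True
```

With noisy planes or imperfectly known particle colors, some energy leaks between the unmixed planes, which is the kind of cross talk examined in the velocity separation tests.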

3 Results

3.1 Effect of Bayer-filtered images on PIV

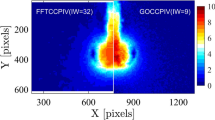

The results of the error analysis of single-component velocity tests for uniform flow using three differently colored particles are shown in Fig. 4. Both Bayer-filtered and full-spectrum RGB images were tested, as well as Bayer-filtered images converted to grayscale. Native grayscale images were also examined as a baseline representing traditional scalar PIV methods.

From the results, it was apparent that every processing type, when applied to image data that had been Bayer filtered and demosaiced (whether RGB or converted to grayscale), showed severe fluctuations in bias and random error as a function of displacement. This effect was similar to classical peak locking, but with a period of 2 pixels instead of 1. This detrimental behavior is attributed to the Bayer filter itself, which also has a period of 2 pixels. Examination of the demosaiced images showed clear checkerboard patterns in the particle image data (see for instance Fig. 5), and these patterns were likely the root cause of the periodic errors. Errors were lowest when the displacement was an even number of pixels, matching the period of the Bayer filter, and highest when it was offset from the Bayer pattern by a single pixel.
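The 2-pixel periodicity can already be seen in the sampling step, before any demosaicing. In the sketch below (assuming an RGGB layout; real sensors may use BGGR, GRBG, or GBRG), mosaicing a scene shifted by an even number of pixels is identical to shifting the mosaic of the unshifted scene, whereas an odd shift changes which color filter samples each point of the particle image. The sketch does not model the demosaicing itself; it only shows that any demosaicing step starts from sampling that is shift-consistent for even displacements only.

```python
import numpy as np

def bayer_mosaic(rgb):
    """Sample an (H, W, 3) RGB image through an RGGB Bayer pattern (assumed layout)."""
    raw = np.empty(rgb.shape[:2], dtype=rgb.dtype)
    raw[0::2, 0::2] = rgb[0::2, 0::2, 0]  # red sites
    raw[0::2, 1::2] = rgb[0::2, 1::2, 1]  # green sites on red rows
    raw[1::2, 0::2] = rgb[1::2, 0::2, 1]  # green sites on blue rows
    raw[1::2, 1::2] = rgb[1::2, 1::2, 2]  # blue sites
    return raw

def mosaic_commutes_with_shift(rgb, shift_x):
    """True if mosaicing a scene shifted by shift_x pixels (circularly, along x)
    gives the same raw image as shifting the mosaic of the unshifted scene."""
    shifted_then_sampled = bayer_mosaic(np.roll(rgb, shift_x, axis=1))
    sampled_then_shifted = np.roll(bayer_mosaic(rgb), shift_x, axis=1)
    return bool(np.array_equal(shifted_then_sampled, sampled_then_shifted))

rng = np.random.default_rng(1)
scene = rng.random((8, 8, 3))   # random colors so that the three channels differ
print([mosaic_commutes_with_shift(scene, s) for s in range(5)])
# [True, False, True, False, True]
```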

Although the magnitudes of the resulting errors remained moderate (below about 0.1 pixels/frame), they were significantly higher than those of correlations computed on unfiltered images, which had bias errors below 0.05 pixels/frame even for large displacements (8 pixels in a 32-pixel window) and random errors between about 0.02 and 0.04 pixels/frame. More sophisticated post-processing of the demosaiced images could probably alleviate this aliasing artifact. Because evaluating different image processing techniques was not the intent of this work, only color images created without the Bayer filter were analyzed in the remainder of the synthetic tests. However, these results highlight an important issue that must be addressed whenever color PIV processing is performed, and additional testing with experimental images and commercial software is needed to quantify how strongly this problem affects color images in practice.

Returning to the comparison of the different color image processing algorithms with a traditional grayscale approach, there appears to be little effect on the bias errors in either the x or y direction (Fig. 4a and c). However, the random error dropped by about half in both directions, falling from about 0.03 pixels/frame to less than 0.02 pixels/frame in x (Fig. 4b) and from about 0.03 to about 0.015 pixels/frame in y (Fig. 4d). This is consistent with the proposed mechanism: noise that is uncorrelated between color channels is suppressed when the full-color information is preserved in the cross-correlation. The color ensemble correlation also performed slightly better than quaternion cross-correlation, though the difference was marginal.
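A minimal sketch of the color ensemble idea follows, under the assumption that the ensemble operation is a plain sum of the per-channel correlation planes: each channel of an (H, W, 3) window pair is correlated independently and the planes are added. For brevity it uses mean-subtracted FFT cross-correlation rather than the Gaussian-windowed RPC applied to the experimental data. Because the particle signal is common to all channels while the noise is not, the displacement peak adds coherently across channels while the noise contributions partially average out.

```python
import numpy as np

def cross_correlation(a, b):
    """Circular cross-correlation of two real 2-D windows via FFT.

    Window means are removed first; if b is a shifted by (dy, dx),
    the peak appears at index (dy mod H, dx mod W)."""
    a = a - a.mean()
    b = b - b.mean()
    return np.real(np.fft.ifft2(np.conj(np.fft.fft2(a)) * np.fft.fft2(b)))

def color_ensemble_correlation(win_a, win_b):
    """Sum of the per-channel correlation planes of two (H, W, C) windows."""
    return sum(cross_correlation(win_a[..., k], win_b[..., k])
               for k in range(win_a.shape[-1]))

def integer_displacement(plane):
    """Signed integer location (dy, dx) of the highest correlation value."""
    h, w = plane.shape
    iy, ix = np.unravel_index(np.argmax(plane), plane.shape)
    return (int(iy) - h if iy > h // 2 else int(iy),
            int(ix) - w if ix > w // 2 else int(ix))

rng = np.random.default_rng(2)
win_a = rng.random((32, 32, 3))
noise = 0.5 * rng.random((32, 32, 3))        # independent noise in each channel
win_b = np.roll(win_a, shift=(1, 3), axis=(0, 1)) + noise
plane = color_ensemble_correlation(win_a, win_b)
print(integer_displacement(plane))  # (1, 3)
```

A subpixel estimator would then be applied around this integer peak exactly as in grayscale PIV.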

Linear unmixing of a color vector cross-correlation was not tested on these images, since it would have produced three separate velocity fields, each computed from a particle field with one third of the seeding density available to the other methods, making the comparison unfair.

3.2 Synthetic image tests of velocity separation

For the two component flow tests, both the quaternion cross-correlation with velocity separation and the color vector cross-correlation with linear unmixing of the correlation plane were tested. Results of the error analysis for each particle type (P1 and P2) for bias and random error in both the x and y directions are plotted in Fig. 6 for all combinations of x displacements between 0 and 8 pixels/frame. For these results, only valid measurements (those with an error less than 0.5 pixels/frame) were counted toward the final statistics. Each point in a subplot indicates the combined bias error, random error, or valid vector count calculated for the 1,024 vectors in a single image pair with a given combination of P1 and P2 u-velocities (represented by the position on the vertical or horizontal axis).
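The statistics in this section can be sketched as follows for one image pair and one particle type: vectors whose error is 0.5 pixels/frame or more are marked invalid, and the bias and random errors are the mean and standard deviation of the remaining errors. The use of the population standard deviation (`ddof=0`) and the function name are our assumptions.

```python
import numpy as np

def error_statistics(measured, true_value, valid_threshold=0.5):
    """Bias, random error and valid fraction for one particle type in one image pair.

    measured: displacement estimates (pixels/frame) for all vectors in the pair.
    true_value: imposed displacement (pixels/frame).
    Vectors with |error| >= valid_threshold are treated as invalid and excluded."""
    error = np.asarray(measured, dtype=float) - true_value
    valid = np.abs(error) < valid_threshold
    if not valid.any():
        return float("nan"), float("nan"), 0.0
    bias = error[valid].mean()
    random_error = error[valid].std()   # population standard deviation (ddof=0), assumed
    return float(bias), float(random_error), float(valid.mean())

# Three valid vectors and one outlier (error of 1.0 pixels/frame).
print(error_statistics([2.1, 2.05, 1.95, 3.0], 2.0))
```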

Fig. 6 Error analysis of multi-component velocity measurements of color data using either quaternion correlation with velocity separation (left column) or color vector correlation with linear unmixing (right column). a and d Bias and b and e random errors on u-displacement measurements for particle 1 and particle 2. c and f Valid vector fraction (errors calculated only on valid measurements)

If the errors on the two particle types were uncorrelated, the error on each particle velocity would be independent of the motion of the other particle type. In other words, the errors on P1 velocity should vary only along the vertical axis, and the errors on P2 velocity only along the horizontal axis. However, as illustrated by the pronounced diagonal stripes in many of the plots (in these regions \(U_{P1} \approx U_{P2}\)), both methods showed some cross talk when the two displacements differed by less than the average particle diameter of 3 pixels. For the quaternion cross-correlation, both particle types showed approximately equal bias errors toward the displacement of the other particle type below this threshold, with slightly higher random errors for particle 2. Examination of the correlation planes showed that once the particle displacements were too close together, the two peaks began to merge into one, distorting the shape of both and biasing the subpixel peak location toward the position of the other peak.
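The peak-merging mechanism can be reproduced with a one-dimensional toy model: two equal Gaussian correlation peaks, the first at 0.3 px and the second a given distance away, with the first located by the common three-point Gaussian subpixel estimator. The peak width σ = 1 px is a hypothetical choice, roughly the correlation-peak width expected for Gaussian particle images about 3 px in diameter. The model ignores noise and the actual quaternion or unmixed planes, so it illustrates only the direction and rough range of the bias.

```python
import numpy as np

LAGS = np.arange(-16, 17)  # integer correlation lags (pixels)

def two_peak_plane(p1, p2, sigma=1.0):
    """1-D model correlation plane with two equal Gaussian peaks at p1 and p2."""
    return (np.exp(-(LAGS - p1) ** 2 / (2 * sigma ** 2))
            + np.exp(-(LAGS - p2) ** 2 / (2 * sigma ** 2)))

def gaussian_subpixel_peak(plane, expected):
    """Three-point Gaussian fit around the highest sample within +/-1 lag of
    round(expected), a simplified stand-in for a full peak search."""
    c = int(np.round(expected)) - LAGS[0]           # array index of the expected lag
    i = c - 1 + int(np.argmax(plane[c - 1:c + 2]))  # highest of the three samples
    lm, l0, lp = np.log(plane[i - 1:i + 2])
    return LAGS[i] + (lm - lp) / (2.0 * (lm - 2.0 * l0 + lp))

def peak1_bias(separation, p1=0.3, sigma=1.0):
    """Subpixel bias on peak 1 when a second peak sits `separation` px away (+x)."""
    est = gaussian_subpixel_peak(two_peak_plane(p1, p1 + separation, sigma), p1)
    return est - p1

for sep in (1.0, 2.0, 3.0, 4.0, 8.0):
    print(f"separation {sep:.0f} px -> bias {peak1_bias(sep):+.3f} px")
```

In this model the bias on peak 1 always points toward peak 2, becomes large once the peaks are within a few σ of each other, and vanishes when they are well separated, which mirrors the diagonal bands in Fig. 6.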

In contrast, with the color vector cross-correlation approach, the bias errors for particle 1 were lower than those for particle 2. Additionally, the bias for particle 1 was toward particle 2, while the bias for particle 2 was away from particle 1. This appears to be because cross talk in the linear unmixing deposited a small amount of the correlation peak energy of particle 2 in the unmixed correlation plane for particle 1, biasing that result toward particle 2. The same leakage removed energy from the side of the particle 2 correlation peak facing particle 1, biasing the peak detection algorithm away from the other correlation peak. Although not tested here, the exact amount and behavior of this error are likely to depend on the specific color values chosen for each particle type, so this behavior will not necessarily generalize to other choices of particle 1 and particle 2.

Overall, the errors for the quaternion cross-correlation (left column, Fig. 6) were considerably worse than those for the color vector processing (right column, Fig. 6). Comparing Fig. 6c and f shows that for the color vector cross-correlation method, the valid vector percentage was very near 100 % for the conditions tested. However, the quaternion cross-correlation failed more than 50 % of the time in many cases and often had a valid detection probability near 0 %. This was due to several factors. The first was the previously mentioned merging of the correlation peaks. Second, selecting the two valid peaks often proved problematic, as the second true signal peak was sometimes confused with noise peaks of similar height. Finally, even when the peaks were correctly selected, the sorting algorithm often had trouble automatically assigning each decomposed subimage to the proper particle type. Even if the last two problems could be addressed, the first is likely insurmountable without significant new development of quaternion cross-correlation theory.

Figure 7 shows a close-up of the results for the color vector cross-correlation method for 0 displacement in x for particle 1, and 0–8 pixels displacement for particle 2. These conditions correspond closely (albeit with different seeding densities) to those used in generating the uniform flow images for which results are plotted in Fig. 4. Therefore, we can compare the results for traditional or color PIV on a field with a single flow component to the results for a flow with two velocity components.

Fig. 7 a Bias and b random error of the color vector cross-correlation measurement of the u-velocity of particle 1 (green) and particle 2 (red) versus increasing displacement of particle 2, for a fixed particle 1 displacement of \(U_{P1} = 0\). Errors are higher where the particle displacements differ by less than the particle width. Values are the same as in Fig. 6d and e. Bias and random errors for the same particle fields correlated with only particle 1 (solid black) or only particle 2 (dashed black) present are also shown for comparison

For particle 1, which has zero velocity in the x direction, the u-bias error shown in Fig. 7a was very close to zero, as would be expected from the single-component v-bias errors plotted in Fig. 4c. For particle 2, the bias error climbed to about 0.15 pixels/frame while the two displacements differed by less than one particle width, but by 3.5 pixels of displacement it had returned to a very close match with the errors expected if particle 2 were the only flow tracer in the image. The random errors shown in Fig. 7b, however, are slightly higher than those of the single-component uniform flow plotted in Fig. 4b. For particle 1, random errors peaked near 0.045 pixels/frame before returning to a baseline near 0.03, while those for particle 2 climbed to between 0.035 and 0.04 pixels/frame and remained there for the range of displacements tested. These values are higher than the roughly 0.02 pixels/frame obtained for single-component flow processed with full-color algorithms, though they compare much more favorably with grayscale processing, whose random errors were nearer 0.03 pixels/frame.

3.3 MicroPIV in cDEP channel

The color vector cross-correlation with linear unmixing was tested on microPIV images from an experiment featuring two particle types of different size and color. cDEP was used to exert a horizontal force on the larger green particles, sorting them from the red particles, which generally behaved as flow tracers because the cDEP force on them was negligible owing to their smaller volume. A field of view near the end of the cDEP region, featuring a symmetric divergence, was selected for analysis. At this point, the separation of the two particle types was near the maximum achieved for this geometry and these field settings. Since the quaternion cross-correlation performed poorly with synthetic images, results for this method on these images are not presented here.

Images were processed using Gaussian windowing with a resolution of 32 × 32 pixels on a 32-pixel grid (0 % overlap) and correlated using single-pass robust phase correlation (RPC). No validation (other than the peak ratio filtering discussed below) was performed on the resulting vector field. Instantaneous results were favorable, but because of the low seeding density of the green particles, especially on the right side of the image, the three-color correlation planes were ensemble averaged over the image sequence to capture the average velocity over a larger region. Additionally, since no green particles ever appeared in some areas near the right wall of the channel, the peak ratio was used to filter out these regions. Based on examination of the results, correlations with a peak ratio below 12 were blanked out of the vector plots displayed in Fig. 8a.
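The time-ensemble averaging and peak-ratio blanking can be sketched as below. Here the peak ratio is taken as the primary peak height divided by the highest value outside a small exclusion zone around it, a simplification of the usual second-highest-local-maximum definition; the exclusion radius and the synthetic planes are hypothetical, and the sketch assumes the highest value outside the exclusion zone is positive. Only the threshold of 12 comes from the text.

```python
import numpy as np

def peak_ratio(plane, exclusion_radius=2):
    """Primary peak height divided by the highest value outside a
    (2*r+1) x (2*r+1) exclusion zone centred on the primary peak."""
    plane = np.asarray(plane, dtype=float)
    iy, ix = np.unravel_index(np.argmax(plane), plane.shape)
    masked = plane.copy()
    masked[max(iy - exclusion_radius, 0):iy + exclusion_radius + 1,
           max(ix - exclusion_radius, 0):ix + exclusion_radius + 1] = -np.inf
    return plane[iy, ix] / masked.max()

def ensemble_average(planes):
    """Average a stack of correlation planes (n_pairs, H, W) from one window location."""
    return np.mean(planes, axis=0)

# Illustrative synthetic planes: zero-mean noise plus a consistent peak at (20, 14).
rng = np.random.default_rng(3)
planes = rng.normal(0.0, 0.1, size=(200, 32, 32))
planes[:, 20, 14] += 1.0

PR_THRESHOLD = 12.0  # blanking threshold quoted in the text
single = peak_ratio(planes[0])
ensemble = peak_ratio(ensemble_average(planes))
print(single < PR_THRESHOLD, ensemble >= PR_THRESHOLD)  # True True
```

Averaging the planes before peak detection lets a weak but consistent displacement peak rise above the uncorrelated background, which is why ensemble processing helps where green particles are sparse.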

Although the cDEP procedure had a significant effect on the particle distribution over the length of the microchannel, the forces involved were small, and it was not possible to isolate a slip velocity of the green particles transverse to the mean flow direction of the smaller red particles (Fig. 8b). However, a slip velocity between the two phases was readily apparent, and only in the region near the right wall did the velocity of the green particles appear to be contaminated by light emitted from the red particles. This was because few green particles reached this region, whereas the red particles were densely and uniformly present throughout the flow, so only a small amount of leakage between the unmixed correlation planes was needed for the red signal to overpower the weak green signal. This can be seen in the patchy areas of disorganized, low-velocity flow near the wall and in the upper right of the image.

More concerning, however, was the blocky appearance of the slip velocity vector field, which had the typical characteristics of peak locking. Examination of the raw x and y displacement fields for the red particles (P1) showed this more clearly; Fig. 9 shows contours of these values for the red particle field. Qualitatively, the measured displacements appeared to be grouped around even integers, with sharp transitions between regions of different velocity. A histogram of all measurements (Fig. 10) helped to quantify the problem. For displacements of the red P1 particles in either x or y, there were almost no values near odd integers and very large peaks at even values. For the much larger (16-pixel diameter) green particles, little periodic grouping was seen; the larger particle size evidently helped to counteract this effect. Although peak locking is a common problem for PIV cross-correlation, and ensemble averaging of the correlation planes tends to exacerbate it, it typically has a period of one pixel, not two. This suggests that the error was introduced by the demosaicing software for features similar in size to the Bayer filter pattern, as previously demonstrated by the synthetic image tests (Fig. 4).
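A simple way to quantify the period-2 grouping seen in the histogram is to fold the displacements modulo 2 pixels and measure how many lie near an even integer. The sketch below is our own diagnostic, not the procedure used to produce Fig. 10; the tolerance and the synthetic data are illustrative assumptions. Without period-2 locking, displacements spread over several pixels give a fraction close to the tolerance itself.

```python
import numpy as np

def distance_to_even(d):
    """Distance (px) from each displacement to the nearest even integer, in [0, 1]."""
    return np.abs(((np.asarray(d, dtype=float) + 1.0) % 2.0) - 1.0)

def even_locking_fraction(displacements, tol=0.25):
    """Fraction of displacements within +/-tol px of an even integer.

    For unlocked displacements spread over several pixels this is close to tol;
    much larger values indicate clustering at even integers (period-2 locking)."""
    return float(np.mean(distance_to_even(displacements) <= tol))

def folded_histogram(displacements, bins=8):
    """Histogram of displacements folded modulo 2 px, over the range [0, 2)."""
    d = np.asarray(displacements, dtype=float) % 2.0
    return np.histogram(d, bins=bins, range=(0.0, 2.0))

# Illustrative data: unbiased estimates versus estimates pulled to even integers.
rng = np.random.default_rng(4)
true_disp = rng.uniform(0.0, 8.0, 10_000)
unbiased = true_disp + rng.normal(0.0, 0.05, true_disp.size)
locked = 2.0 * np.round(true_disp / 2.0) + rng.normal(0.0, 0.1, true_disp.size)
print(even_locking_fraction(unbiased), even_locking_fraction(locked))
```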

Despite the shortcomings introduced by the Bayer filter, it is clear from these results that both velocity components could be resolved, even under challenging microPIV conditions. Additional work on image dealiasing and filtering may provide a solution to the peak-locking problem created by the Bayer filter.

4 Discussion and conclusions

Synthetic image tests on flows with only a single velocity component demonstrated an advantage of color correlation methods over traditional single-channel images. For the two methods tested (quaternion cross-correlation and color ensemble cross-correlation), the bias errors were similar to those of traditional grayscale image processing, but the random errors were reduced by almost a factor of two (Fig. 4), with the color ensemble method slightly better than the quaternion method. From examination of the correlation planes and images, it is hypothesized that this improvement occurs because noise tends to be uncorrelated between color channels, while the displacement signal is the same in all of them. As a result, the signal-to-noise ratio of the resulting correlation plane increases when multispectral image data are used to find the displacements. Using low-noise, high-bit-depth cameras would reduce the noise in both grayscale and color image results, but is not expected to change the relative improvement, assuming a color sensor of similar quality is available.

However, this improvement held only for images generated without a Bayer filter, that is, without mosaic sampling followed by demosaicing to reconstruct the full-color image. The use of this 2 × 2 color mosaic pattern introduced clear periodic variations of both the bias and random errors with displacement, with a period of 2 pixels. Errors increased in magnitude by a factor of two to three or more compared with images without this problem. These results send a clear warning to any researcher using a color camera, because even software conversion of color images back to grayscale is not sufficient to eliminate the problem.

One potential limitation of this work is that only the built-in MATLAB demosaicing function was applied to our synthetic images, and no additional de-aliasing or filtering steps were performed. Such filtering steps are nearly ubiquitous in commercial camera software and hardware, though they often sacrifice spatial resolution to eliminate the so-called color moiré or aliasing artifacts seen here. One potential problem with the filtering schemes chosen by manufacturers is that they were likely designed to improve the aesthetic appeal of the final image rather than to ensure that no 2 × 2 patterned noise remains. However, it is difficult to make universal judgments about whether a particular camera will suffer from these problems, since the exact schemes are typically proprietary and not publicly disclosed. The user of any given camera would therefore be wise to evaluate the severity of this error under the exact acquisition settings of their experiment before relying on the images for sensitive research. The microPIV experiment performed here provides partial confirmation that this error occurs in practice: for images acquired with this camera (Leica DFC420), cross-correlation revealed a dramatic bias error that severely contaminated the resulting vector fields (Figs. 8 and 10). Workarounds may include 2 × 2 subsampling of the acquired raw images to avoid the need for Bayer demosaicing, or the use of cameras that directly measure all three colors at every pixel.
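The 2 × 2 subsampling workaround can be sketched as follows, assuming access to the raw sensor mosaic and an RGGB layout (other layouts need different offsets). Each 2 × 2 cell becomes one full-color pixel with the two green samples averaged, so no demosaicing interpolation is involved. The trade-offs are that the image resolution is halved, so small particle images shrink toward the size range where classical one-pixel peak locking becomes a concern, and that the red, green, and blue samples of each output pixel still come from slightly different positions within the cell.

```python
import numpy as np

def split_rggb(raw):
    """Half-resolution R, G, B planes from a raw RGGB mosaic without demosaicing.

    Each 2x2 cell (R G / G B) becomes one output pixel and the two green
    samples are averaged. An odd trailing row or column is discarded.
    Returns an array of shape (H//2, W//2, 3)."""
    raw = np.asarray(raw, dtype=float)
    h, w = raw.shape[0] // 2 * 2, raw.shape[1] // 2 * 2
    raw = raw[:h, :w]
    r = raw[0::2, 0::2]
    g = 0.5 * (raw[0::2, 1::2] + raw[1::2, 0::2])
    b = raw[1::2, 1::2]
    return np.stack([r, g, b], axis=-1)

raw = np.arange(16).reshape(4, 4)
print(split_rggb(raw)[0, 0])  # [0.  2.5 5. ]
```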

Beyond the potential for enhancing measurement quality in single-component vector fields, the methods described here also make it possible to use multiple flow tracers to sample several types of flow behavior simultaneously. Such situations are common in multiphase flows, and the results show that the independent behavior of each particle type can be separated with minimal cross talk between signals and with accuracy and precision similar to those achievable by measuring each flow component individually. Although quaternion cross-correlation paired with velocity separation performed poorly because it could not differentiate closely spaced displacements, color vector correlation did not suffer from the same problem, and a linear unmixing approach proved very successful in resolving two simultaneous flow components (Fig. 6). This measurement capability is not attainable with a conventional grayscale PIV processing approach. Application of the method to experimentally acquired microPIV images showed that it could successfully detect the velocity signals of two different particle types in real images using both instantaneous and time-ensemble correlations (Fig. 8). However, as previously discussed, severe peak locking was observed in the time-ensemble correlation data, likely as a result of the camera's Bayer filter, as seen in the synthetic data tests. This compromises the accuracy of the velocity measurements, even though the displacement peaks are readily detectable.

In conclusion, this paper introduced and explored three correlation methods for using color data in PIV processing. For single-component flow data seeded with particles of multiple colors, multispectral image data reduced the random error of PIV by about a factor of two compared with grayscale processing of the same images. Additionally, linear unmixing and velocity phase separation were tested for separating the individual signals of two flow tracers with differing velocities. Quaternion cross-correlation paired with velocity phase separation failed because it could not distinguish displacements separated by less than a single particle diameter, whereas color vector correlation avoided this problem, and linear unmixing of the resulting correlation planes yielded results similar to measuring each flow component independently. Finally, the Bayer filter was shown to cause a severe peak-locking error in PIV fields measured from color data, for both synthetic and experimental images. Whether this error is common to all commercially available color camera systems needs to be determined before color PIV processing can become routine.

References

Adrian RJ (1986) Image shifting technique to resolve directional ambiguity in double-pulsed velocimetry. Appl Opt 25(21):3855–3858

Adrian RJ, Westerweel J (2011) Particle image velocimetry. Cambridge University Press, New York

Alexiadis DS, Sergiadis GD (2009a) Estimation of motions in color image sequences using hypercomplex Fourier transforms. IEEE Trans Image Process 18(1):168–187

Alexiadis DS, Sergiadis GD (2009b) Motion estimation, segmentation and separation, using hypercomplex phase correlation, clustering techniques and graph-based optimization. Comput Vis Image Underst 113(2):212–234

Brücker C (1996) 3-D PIV via spatial correlation in a color-coded light-sheet. Exp Fluids 21(4):312–314

Cenedese A, Paglialunga A (1989) A new technique for the determination of the third velocity component with PIV. Exp Fluids 8(3–4):228–230

Eckstein A, Vlachos PP (2009a) Assessment of advanced windowing techniques for digital particle image velocimetry (DPIV). Measur Sci Technol 20(7):075402

Eckstein A, Vlachos PP (2009b) Digital particle image velocimetry (DPIV) robust phase correlation. Measur Sci Technol 20(5):055401

Eckstein A, Charonko J et al (2008) Phase correlation processing for DPIV measurements. Exp Fluids 45(3):485–500

Ell TA, Sangwine SJ (2007) Hypercomplex Fourier transforms of color images. IEEE Trans Image Process 16(1):22–35

Goss LP, Post ME et al (1991) Two-color particle-imaging velocimetry. J Laser Appl 3(1):36–42

Hamilton WR (1866) Elements of quaternions. Longmans, Green, and Co., London

Keane RD, Adrian RJ (1990) Optimization of particle image velocimeters. Part I: double pulsed systems. Measur Sci Technol 1:1202–1215

Keane RD, Adrian RJ (1991) Optimization of particle image velocimeters: II. Multiple pulsed systems. Measur Sci Technol 2:963–974

Landgrebe D (2002) Hyperspectral image data analysis. IEEE Signal Process Mag 19(1):17–28

Lansford R, Bearman G et al (2001) Resolution of multiple green fluorescent protein color variants and dyes using two-photon microscopy and imaging spectroscopy. J Biomed Opt 6(3):311–318

Malvar HS, He L-W et al (2004) High-quality linear interpolation for demosaicing of Bayer-patterned color images. In: Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, 2004 (ICASSP '04)

McGregor TJ, Spence DJ et al (2007) Laser-based volumetric colour-coded three-dimensional particle velocimetry. Opt Lasers Eng 45(8):882–889

Moxey CE, Sangwine SJ et al (2003) Hypercomplex correlation techniques for vector images. IEEE Trans Signal Process 51(7):1941–1953

Pick S, Lehmann F-O (2009) Stereoscopic PIV on multiple color-coded light sheets and its application to axial flow in flapping robotic insect wings. Exp Fluids 47(6):1009–1023

Salmanzadeh A, Shafiee H et al (2011) Microfluidic mixing using contactless dielectrophoresis. Electrophoresis 32(18):2569–2578

Sangwine SJ, Le Bihan N (2011) Quaternion toolbox for Matlab. SourceForge. http://qtfm.sourceforge.net/. Accessed 13 Jan 2012

Sangwine SJ, Ell TA et al (2001) Vector phase correlation. Electron Lett 37(25):1513–1515

Sano MB, Caldwell JL et al (2011) Modeling and development of a low frequency contactless dielectrophoresis (cDEP) platform to sort cancer cells from dilute whole blood samples. Biosens Bioelectron 30(1):13–20

Shafiee H, Caldwell JL et al (2009) Contactless dielectrophoresis: a new technique for cell manipulation. Biomed Microdev 11(5):997–1006

Shafiee H, Sano MB et al (2010) Selective isolation of live/dead cells using contactless dielectrophoresis (cDEP). Lab Chip 10(4):438–445

Towers DP, Towers CE et al (1999) A colour PIV system employing fluorescent particles for two-phase flow measurements. Measur Sci Technol 10(9):824

Tsurui H, Nishimura H et al (2000) Seven-color fluorescence imaging of tissue samples based on Fourier spectroscopy and singular value decomposition. J Histochem Cytochem 48(5):653–662

Watamura T, Tasaka Y et al (2013) LCD-projector-based 3D color PTV. Exp Thermal Fluid Sci 47:68–80

Willert CE, Gharib M (1991) Digital particle image velocimetry. Exp Fluids 10(4):181–193

Zimmermann T (2005) Spectral imaging and linear unmixing in light microscopy. In: Rietdorf J (ed) Microscopy techniques. Springer, Berlin, pp 245–265

Acknowledgments

The authors would like to thank Jaka Cemazar for his help performing cDEP experiments. This work was partially supported by the NSF IDBR 1152304 and NIH 5R21CA158454-02.

Cite this article

Charonko, J.J., Antoine, E. & Vlachos, P.P. Multispectral processing for color particle image velocimetry. Microfluid Nanofluid 17, 729–743 (2014). https://doi.org/10.1007/s10404-014-1355-5

DOI: https://doi.org/10.1007/s10404-014-1355-5