Abstract

The objective of this study was to obtain neural networks that precisely estimate inside-bark diameter (d_ib) and heartwood diameter (d_h), and to compare their results with those obtained by taper models. The database was screened to eliminate inconsistent and biased data and was stratified by minimum d_ib (4, 6 and 8 cm) and minimum d_h (10, 15 and 20 cm). The taper model fitted was the Kozak model. For the fitting of artificial neural networks (ANN), tests were performed to identify the independent variables and the scope of the database. The input variables tested were diameter at breast height (dbh), total height (H), height at diameter d_ib or d_h (h), outside-bark diameter at h (d_ob), bark thickness at 1.3 m and project; the scope was either the entire database or the individual project. The estimates obtained by the neural networks and the Kozak model were evaluated using graphs of residuals as a function of the respective observed diameter and graphs of observed versus estimated values. ANN were found to be more efficient than the Kozak model in estimating inside-bark and heartwood diameters of Tectona grandis trees. The variables that must be used to fit the networks are dbh, H, h and d_ob. Stratification by project results in a gain in precision, and precision is higher for larger commercial diameters. Thus, linear-type artificial neural networks can be efficient in describing the taper of Tectona grandis trees.

Introduction

The main elements of even-aged forest management are land classification, silvicultural treatment prescription, and growth and yield prediction. Prediction means estimating harvest volumes and future growing stock, both of which are essential for forest management.

The variables inside-bark diameter (d_ib) and heartwood diameter (d_h) are fundamental for quantifying forest growth and yield, as they are independent variables of volumetric models. Presently, these diameters are obtained through rigorous cubing. This procedure is onerous in terms of both operational costs and tree losses, and it must be repeated periodically (at intervals of 2 or 3 years) to refit the models and keep them precise.

Taper models allow inside-bark and heartwood diameters to be estimated from diameter at breast height (dbh), total height, commercial height and d_ib or d_h data obtained by cubing.

Teak wood (Tectona grandis Linn) is highly valued in the international market for its beauty, stability, durability and resistance. It is used especially for high-value pieces and fine furniture, as well as in shipbuilding, structures, floor tiles, chips, panels, pillars and railroad crossties. Demand for teak wood has been increasing, with international market price estimates for 2015 varying between US$ 1,480 and 1,850 per m³, depending on diameter class. Because of the high value of teak wood, alternatives are sought to avoid or at least reduce cubing in forest management (Oliveira et al. 2007).

Artificial neural networks (ANN) are mathematical–computational models inspired by the functioning of biological neural networks and their fundamental elements, the neurons of the human brain. Their processing is highly parallel and is executed by units called artificial neurons, “that have the natural tendency to store experimental knowledge, making them available for use” (Aleksander and Morton 1990).

Diamantopoulou (2005) demonstrated the superiority of ANN models over regression models, owing to their ability to overcome problems in forest data. That study used ANN models for accurate bark volume estimation of standing pine trees (Pinus brutia) as an alternative to regression models. Görgens (2006), using data from different forestry companies, tested several forms of data preprocessing and ANN architectures to estimate the volume of eucalyptus and teak trees.

ANN are capable of generalizing the knowledge assimilated from a small set of learned examples and applying it to unknown data. ANN can also extract nonexplicit characteristics from a set of information supplied as examples. Thus, neural networks are an alternative for estimating d_ib and d_h.

This study aims at obtaining artificial neural networks that precisely estimate the inside-bark and heartwood diameters of Tectona grandis Linn (teak) trees. Presently, these diameters are obtained through cubing and subsequent fitting of taper models.

Materials and methods

The data used in this study originate from a stand of the exotic species Tectona grandis, located in the central southern region of the state of Mato Grosso, Brazil. A total of 583 trees, 2 to 14 years old, were measured through a forest inventory comprising 17 projects. The variables used were diameter at breast height (dbh), total height (H), inside-bark (d_ib) and outside-bark (d_ob) diameters, and heartwood diameter (d_h) along the stem, obtained by scaling. Bark thickness at 1.3 m was obtained through the following expression:

where b1.3 m = bark thickness at 1.3 m and d ib1.3 m = inside-bark diameter at 1.3 m.

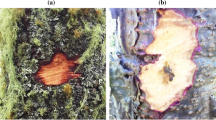

The data were checked for consistency using graphs of d_ib = f(h) and d_h = f(h), where h is the height along the stem, and incompatible or discrepant data were eliminated. Heartwood diameter observations with d_h = 0.0 cm were also eliminated from the database.

For inside-bark diameter estimation, the data were divided into three strata based on minimum d_ib of 4, 6 and 8 cm, since logs of these dimensions that are not sent to the sawmill can still be used as firewood, charcoal, fences, etc. For heartwood diameter estimation, the data were stratified based on minimum d_h of 10, 15 and 20 cm, which are the minimum diameters for sawmill machinery.
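The stratification described above can be sketched as a simple filter. This is a minimal illustration, assuming that a stratum keeps every stem measurement whose diameter is at least the stated minimum; the record layout and the sample values are hypothetical and are not data from the study.

```python
# Hypothetical stem measurements: (tree_id, h in m, d_ib in cm, d_h in cm).
measurements = [
    (1, 0.1, 18.2, 12.5),
    (1, 1.3, 15.9, 10.4),
    (1, 5.0, 9.1, 3.0),
    (1, 9.0, 5.2, 0.0),
    (2, 1.3, 7.4, 0.0),
]

D_IB, D_H = 2, 3  # column positions of d_ib and d_h in each record


def stratum(records, column, minimum):
    """Keep the records whose diameter in `column` is at least `minimum` cm."""
    return [r for r in records if r[column] >= minimum]


# One data set per minimum diameter, as in the text (assumed ">=" rule).
dib_strata = {m: stratum(measurements, D_IB, m) for m in (4, 6, 8)}
dh_strata = {m: stratum(measurements, D_H, m) for m in (10, 15, 20)}

for minimum, rows in dib_strata.items():
    print(f"d_ib >= {minimum} cm: {len(rows)} measurements")
```

With this rule, observations with d_h = 0.0 cm never enter a heartwood stratum, which is consistent with their removal from the database.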

The taper model selected, which is the most widely used in Brazil, was developed by Kozak et al. (1969). The Kozak model was fitted per project and for the entire database to estimate d_ib and d_h using regression analysis (Statistica 7.0).

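\[ \left( \frac{d}{dbh} \right)^{2} = \beta_{0} + \beta_{1} \left( \frac{h}{H} \right) + \beta_{2} \left( \frac{h}{H} \right)^{2} + \varepsilon \qquad (2) \]

where d = diameter at height h (d_ib or d_h); dbh = diameter at breast height (1.3 m); h = height above the ground at which diameter d is taken; H = total height; β_i = regression parameters (i = 0, 1, 2); and ε = random error, with ε ~ N(0, σ²).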

To fit Kozak’s equation for inside-bark and heartwood diameters, d was replaced by d_ib and d_h, respectively.
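As an illustration of how such a taper model can be fitted, the sketch below uses ordinary least squares on the commonly cited quadratic form of the Kozak et al. (1969) model, (d/dbh)² = β0 + β1(h/H) + β2(h/H)². It is not the Statistica procedure used in the study; the function names (`fit_kozak`, `predict_kozak`, `solve_linear`) and the synthetic data are assumptions made only for this example.

```python
import math


def solve_linear(a, b):
    """Solve a small system a.x = b by Gauss-Jordan elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_kozak(d, dbh, h, H):
    """Least-squares estimates of (b0, b1, b2) via the normal equations."""
    x = [hi / Hi for hi, Hi in zip(h, H)]
    y = [(di / dbhi) ** 2 for di, dbhi in zip(d, dbh)]
    rows = [(1.0, xi, xi * xi) for xi in x]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(3)]
    return solve_linear(xtx, xty)


def predict_kozak(beta, dbh, h, H):
    """Diameter at height h; negative squared ratios are clipped to zero."""
    x = h / H
    ratio_sq = beta[0] + beta[1] * x + beta[2] * x * x
    return dbh * math.sqrt(max(ratio_sq, 0.0))


# Synthetic, noise-free data generated from known (hypothetical) parameters.
true_beta = (1.2, -2.0, 0.8)
xs = [0.05 + 0.1 * i for i in range(10)]
dbh = [20.0] * 10
H = [15.0] * 10
h = [x * 15.0 for x in xs]
d = [20.0 * math.sqrt(true_beta[0] + true_beta[1] * x + true_beta[2] * x * x)
     for x in xs]

beta = fit_kozak(d, dbh, h, H)
print([round(b, 4) for b in beta])
```

Because the data are generated without noise, the fit should recover the parameters used to create them; with field data the same code would be run once per project or once for the whole database, replacing d by d_ib or d_h.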

Statistica 7.0® was used to estimate the parameters of the fitted equations for each test. To fit the artificial neural networks, the following tests were carried out in each stratum to estimate d_ib and d_h:

- Input variables: dbh, H, h and d_ob. Scope level: project;
- Input variables: dbh, H, h and d_ob. Scope level: entire database;
- Input variables: dbh, H, h, d_ob and bark thickness at 1.3 m. Scope level: project;
- Input variables: dbh, H, h, d_ob and bark thickness at 1.3 m. Scope level: entire database;
- Input variables: dbh, H, h, d_ob and project. Scope level: entire database.
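The five tests listed above, crossed with the minimum-diameter strata, define the set of fitting runs. The sketch below simply enumerates them; the class name `AnnTest`, the field names and the variable labels are hypothetical and serve only to make the experimental design explicit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnnTest:
    inputs: tuple  # names of the input variables
    scope: str     # "project" or "database"


BASE = ("dbh", "H", "h", "d_ob")

TESTS = [
    AnnTest(BASE, "project"),
    AnnTest(BASE, "database"),
    AnnTest(BASE + ("bark_1.3m",), "project"),
    AnnTest(BASE + ("bark_1.3m",), "database"),
    AnnTest(BASE + ("project",), "database"),
]

# Minimum diameters (cm) of each stratum, per target variable.
STRATA = {"d_ib": (4, 6, 8), "d_h": (10, 15, 20)}

# Every combination of target variable, stratum and test.
runs = [
    (target, d_min, test)
    for target, minima in STRATA.items()
    for d_min in minima
    for test in TESTS
]

print(len(runs), "combinations of target, stratum and test")
```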

An artificial neural network may consist of one or more layers, and each layer may contain one or more neurons (simple processing units). The input layer only receives the (quantitative or qualitative) values of the input variables and transmits them to the next layer; its neurons are called source nodes. The intermediate (hidden) layers and the output layer process information and map knowledge; their neurons are called computation nodes. A computation node k receives the input signals (x_i) and weights them (w_ki): the inputs multiplied by their respective weights are summed, and a bias term (b_k) is added. The resulting sum (v_k) passes through an activation function f(v_k), which provides the neuron output (y_k). An example of a neural network and a computation node is shown in Fig. 1.

Fig. 1 Example of a neural network and one computation node (adapted from Haykin 2001)
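The computation performed by a single node, v_k = Σ w_ki x_i + b_k followed by y_k = f(v_k), can be written in a few lines. The weights, inputs and the choice of activation functions below are hypothetical values chosen for illustration only.

```python
import math


def neuron_output(x, w, b, activation):
    """Weighted sum of the inputs plus bias, passed through the activation."""
    v = sum(wi * xi for wi, xi in zip(w, x)) + b
    return activation(v)


def identity(v):
    """Linear activation, as in a linear (Perceptron-type) output node."""
    return v


def logistic(v):
    """Sigmoid activation, a common choice for hidden nodes."""
    return 1.0 / (1.0 + math.exp(-v))


# Hypothetical normalized inputs (e.g. dbh, H, h, d_ob), weights and bias.
x = [0.5, 0.2, 0.1, 0.4]
w = [0.3, -0.1, 0.8, 0.05]
b = 0.1

print(neuron_output(x, w, b, identity))
print(neuron_output(x, w, b, logistic))
```

With the identity activation and no hidden layer, the network output is a linear combination of the inputs, which is the structure of the linear networks discussed in this paper.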

The application of ANN in this paper falls under the learning task of function approximation; that is, it consists in designing a neural network that approximates the unknown function f(x) describing the mapping of the input–output pairs {(x_1, y_1), (x_2, y_2), …, (x_n, y_n)} of a set of n training patterns. The ANN types suited to this task are radial basis function (RBF), multilayer perceptron (MLP) and linear (Perceptron). An RBF network consists of three layers: an input layer of source nodes; a hidden layer that applies a nonlinear transformation from the input space to a hidden space of high dimensionality, i.e., with a large number of neurons; and a linear output layer that supplies the network response. An MLP contains an input layer, one or more hidden layers and an output layer, and is usually trained by the error backpropagation algorithm. A Perceptron is the simplest form of neural network, consisting of an input layer and an output layer.

To fit the ANN, the Intelligent Problem Solver of Statistica 7.0® was used. This tool normalizes the data to the interval 0–1, tests different architectures and network models, and selects the networks that best represent the data. It divides the data into three parts: 50% for network training, 25% for comparison with the training part during training in order to determine when to stop, and 25% to verify the generalization of the trained network. Thus, a trained network has already had its generalization capacity evaluated.
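The two preprocessing steps described above, scaling to the interval 0–1 and a 50/25/25 partition, can be sketched as follows. This is not the Statistica implementation: min-max scaling and random assignment with a fixed seed are assumptions, and the function names are hypothetical.

```python
import random


def min_max_scale(values):
    """Rescale values linearly so that the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: no spread to rescale
    return [(v - lo) / (hi - lo) for v in values]


def split_50_25_25(items, seed=0):
    """Shuffle and split into training, stopping (selection) and test subsets."""
    shuffled = items[:]
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) // 2
    n_select = len(shuffled) // 4
    train = shuffled[:n_train]
    select = shuffled[n_train:n_train + n_select]
    test = shuffled[n_train + n_select:]
    return train, select, test


dbh_cm = [8.0, 12.0, 16.0, 20.0, 28.0]  # hypothetical dbh values
print(min_max_scale(dbh_cm))

train, select, test = split_50_25_25(list(range(100)))
print(len(train), len(select), len(test))
```

The second subset is only used to decide when training should stop, and the third is held out entirely, which is why its error can be read as a measure of generalization.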

Thirty ANN were fitted for each test, and the three most accurate were selected. The network models tested were radial basis function (RBF), multilayer perceptron (MLP) and linear (Perceptron), with different architectures, i.e., numbers of neurons per layer.

The estimates obtained by the Kozak model and by the ANN were evaluated for precision and ease of fit using graphs of percentage residuals as a function of the observed diameter, graphs of observed versus estimated d_ib and d_h, the coefficient of determination (R²) and the relative root mean squared error (RMSE%) cited by Mehtätalo et al. (2006), whose estimators are presented in formulas (3) and (4). The best alternative was also evaluated in terms of database stratification and input variables.

where \( Y_{i} \) is the i-th observed diameter (d_ib or d_h), \( \hat{Y}_{i} \) is the i-th estimated diameter (d_ib or d_h), \( \bar{Y} \) is the arithmetic mean of the observed diameters (d_ib or d_h) and n is the total number of observations.
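For readers who want to reproduce the two statistics, the sketch below computes them from paired observed and estimated diameters. It assumes the usual definitions built from the symbols defined above, R² = 1 − Σ(Y_i − Ŷ_i)² / Σ(Y_i − Ȳ)² and RMSE% = 100 · sqrt(Σ(Y_i − Ŷ_i)² / n) / Ȳ; the exact estimators used in the study (for example the divisor in the RMSE) may differ, and the sample values are hypothetical.

```python
import math


def r_squared(observed, estimated):
    """Coefficient of determination: 1 - SS_residual / SS_total (assumed form)."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((y - yh) ** 2 for y, yh in zip(observed, estimated))
    ss_tot = sum((y - mean_obs) ** 2 for y in observed)
    return 1.0 - ss_res / ss_tot


def rmse_percent(observed, estimated):
    """Root mean squared error as a percentage of the observed mean (assumed form)."""
    n = len(observed)
    mean_obs = sum(observed) / n
    mse = sum((y - yh) ** 2 for y, yh in zip(observed, estimated)) / n
    return 100.0 * math.sqrt(mse) / mean_obs


observed = [10.0, 12.0, 14.0, 16.0]   # hypothetical observed diameters (cm)
estimated = [11.0, 12.0, 13.0, 16.0]  # hypothetical estimates (cm)

print(round(r_squared(observed, estimated), 4))     # 0.9
print(round(rmse_percent(observed, estimated), 2))  # 5.44
```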

Results and discussion

The coefficient of determination (R²) and the relative root mean squared error (RMSE%) for each test and stratum, obtained by fitting the Kozak model and by training the artificial neural networks, are presented in Tables 1 and 2, respectively. The ANN fitted the data better, i.e., they were more precise. This can be verified by the dispersion of the observed versus estimated values and by the higher R² and lower RMSE% values compared with the Kozak model. RMSE% also shows the effect of stratification by minimum diameter: the higher the minimum diameter, the better the fit. ANN trained at project level were better than ANN trained on the entire database. In general, the linear-type networks (Perceptron) were superior to the MLP and RBF types.

The Kozak model fits had coefficients of determination (R²) between 0.5530 and 0.8649, i.e., 55.30 to 86.49% of the variation in the dependent variable (d_ib or d_h) is explained by the independent variables (dbh, H and h). Only the stratum with minimum d_h of 20 cm could not be fitted, because all the d_h measurements in this condition were at h = 0 m, i.e., at ground level, which does not allow fitting. For inside-bark diameter estimation, the Kozak model behaved in the same way in all strata (minimum d_ib of 4, 6 and 8 cm), overestimating the smallest diameters. For heartwood diameter with minimum d_h of 10 cm, values were overestimated by up to 67% for diameters between 10 and 12 cm. The d_ib and d_h estimates had residuals of around 20% for most of the data (Fig. 2).

The three best networks trained to estimate d_ib and d_h for each test (number of input variables) and stratum (d_min) are presented in Table 3. The number sequence that follows the network type gives the number of neurons per layer; for example, RBF 4-123-1 indicates an RBF network with 4 neurons in the input layer, 123 neurons in the hidden layer and 1 neuron in the output layer.
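The architecture notation can be decoded mechanically. The helper below is a small sketch, assuming labels of the form "TYPE n-…-m" as in the RBF 4-123-1 example; the function name and the label "Linear 4-1" are hypothetical.

```python
def parse_architecture(label):
    """Split a label such as 'RBF 4-123-1' into network type and layer sizes."""
    kind, layers = label.split()
    sizes = [int(s) for s in layers.split("-")]
    return {
        "type": kind,
        "inputs": sizes[0],      # neurons in the input layer
        "hidden": sizes[1:-1],   # neurons in each hidden layer (may be empty)
        "outputs": sizes[-1],    # neurons in the output layer
    }


print(parse_architecture("RBF 4-123-1"))
print(parse_architecture("Linear 4-1"))
```

A linear network has no hidden layer, so its list of hidden sizes is empty.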

The artificial neural networks had difficulty estimating the smallest diameters, located at the tree tops, as shown by the wider spread of the residuals there, which then concentrate in a constant range. Thus, stratification showed that the higher the minimum diameter, the more precise the estimates, for both d_ib and d_h (Fig. 3).

For the d_ib estimates, the percentage residuals were concentrated mostly within ±10% at database level and within ±5% for the project-level tests. The d_h estimates had residuals between +20 and −10% in all strata (10, 15 and 20 cm), with the tendency to overestimate the smallest diameters being more pronounced in the stratum with minimum d_h of 10 cm (Fig. 4).
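The residual ranges quoted above can be checked with a short calculation. The sketch assumes that a percentage residual is 100 · (estimated − observed) / observed, so that positive values indicate overestimation; the paper does not state its sign convention, and the values below are hypothetical.

```python
def percent_residuals(observed, estimated):
    """Percentage residuals; positive means overestimation (assumed convention)."""
    return [100.0 * (yh - y) / y for y, yh in zip(observed, estimated)]


def share_within(residuals, limit):
    """Fraction of residuals whose absolute value does not exceed `limit` percent."""
    return sum(abs(r) <= limit for r in residuals) / len(residuals)


observed = [10.0, 20.0, 40.0]   # hypothetical observed diameters (cm)
estimated = [12.0, 21.0, 39.0]  # hypothetical estimates (cm)

residuals = percent_residuals(observed, estimated)
print(residuals)                    # [20.0, 5.0, -2.5]
print(share_within(residuals, 10))  # two of the three residuals are within ±10%
```

The same absolute error of about 1 to 2 cm gives a much larger percentage residual on a small diameter, which is one reason the smallest diameters show the widest relative spread.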

Bark thickness at 1.3 m did not contribute significantly to network training. Including project as an input variable lengthened training without significant gains in diameter estimation. The other variables, d_ob, dbh, H and h, were important and indispensable for network fitting.

Of the network types trained (RBF, MLP and linear), the linear network gave the best estimates for both d_ib and d_h.

Conclusions

ANN have several benefits, such as input–output mapping, adaptability, tolerance to faults and noise, and neurobiological analogy; they are able to learn from training data and to provide adequate responses to data not used in training (generalization). They also have limitations: they can be seen as “black boxes”, since it is not known why the network generates a certain result and the models do not justify their answers; it is difficult to define the ideal network architecture, as there are no rules for the number of layers or the number of neurons in each layer; and there is no guarantee that the algorithm converges to an optimal solution. However, such limitations do not preclude the use of the technique.

ANN were found to be more efficient than the Kozak model in estimating inside-bark and heartwood diameters of Tectona grandis trees. The variables that must be used to fit the networks are dbh (diameter at breast height), H (total height), h (height at diameter d_ib or d_h) and d_ob (outside-bark diameter at h). Stratification by project results in a gain in precision, and precision is higher for larger commercial diameters. Thus, linear-type artificial neural networks can be efficient in describing the taper of Tectona grandis trees.

References

Aleksander I, Morton H (1990) An introduction to neural computing. Chapman & Hall, London

Diamantopoulou MJ (2005) Artificial neural networks as an alternative tool in pine bark volume estimation. Comput Electron Agric 48:235–244

Görgens E (2006) Estimação do volume de árvores utilizando redes neurais artificiais. Dissertation, Universidade Federal de Viçosa

Haykin S (2001) Redes neurais: princípios e prática. Bookman, Porto Alegre

Kozak A, Munro DD, Smith JGH (1969) Taper functions and their applications in forest inventory. For Chron 45(4):278–283

Mehtätalo L, Maltamo M, Kangas A (2006) The use of quantile trees in the prediction of the diameter distribution of a stand. Silva Fennica 40(3):501–516

Oliveira LC, Angeli A, Stape JL (2007) Teca é a nova opção na indústria mundial. Rev da Madeira 106:81–86

Additional information

Communicated by T. Seifert.

Cite this article

Leite, H.G., da Silva, M.L.M., Binoti, D.H.B. et al. Estimation of inside-bark diameter and heartwood diameter for Tectona grandis Linn. trees using artificial neural networks. Eur J Forest Res 130, 263–269 (2011). https://doi.org/10.1007/s10342-010-0427-7

DOI: https://doi.org/10.1007/s10342-010-0427-7