Abstract

Fast integer ambiguity resolution is usually difficult for GNSS positioning in urban areas because the observations are contaminated by non-line-of-sight signals and multipath. This study proposes a sequential ambiguity selection strategy for partial ambiguity resolution. First, the ambiguities are selected based on the filtered residuals of the phase and code measurements. In addition, the elevation angle and decorrelated variances are used as the metrics for selecting ambiguity subsets. Two kinematic experiments are carried out in urban areas to evaluate the performance of the strategy. Among the three individual strategies, the first one (residual-based selection) performs better than the others, as the dependency of observation quality on the elevation angle is low and the decorrelated variances are prone to contamination by biased ambiguities. When the proposed sequential ambiguity selection strategy is used, the percentage of correctly fixed epochs is increased by approximately 10–20%. The RMS of N/E/U is improved from the decimeter level (for full ambiguity resolution) to the centimeter level. The improvement is more obvious in obstructed areas and during the re-initialization phase.


Introduction

Because of the modernization and development of the new and established Global Navigation Satellite Systems (GNSS), the number of GNSS satellites in orbit already exceeds 100. The use of multi-GNSS signals can improve the positioning accuracy and reliability for real-time kinematic (RTK) users, particularly with respect to integer ambiguity resolution (Odolinski et al. 2015; Liu et al. 2021). Once the ambiguities are correctly fixed, the carrier phase can be regarded as a range measurement with mm accuracy, enabling cm- or mm-level kinematic or static positioning (Odolinski et al. 2015). However, fast, reliable, and correct determination of the integer ambiguities is still a major challenge, particularly in urban areas.

Although multi-GNSS and multifrequency signals provide more redundant observations, they also increase the dimension of the ambiguity vector. It is unnecessary to fix all ambiguities to the correct integers, particularly in a complex urban environment. Once a subset of ambiguities is correctly fixed, highly accurate positioning can be ensured. This is termed partial ambiguity resolution (PAR) (Teunissen et al. 1999). Hence, selecting the ambiguity subset is essential for implementing PAR. In general, the selection methods can be classified into three categories. First, the ambiguity subset is selected based on the characteristics of the observations, e.g., the elevation angle (Li et al. 2014; Teunissen et al. 2014) or the signal-to-noise ratio (SNR) (Parkins 2011). However, the main disadvantage of this method is that some non-line-of-sight (NLOS) and multipath signals may have high elevation angles or SNRs (Hsu 2018), a problem that is particularly severe in urban areas. Second, ambiguity selection is based on the combination of multifrequency observations, e.g., the extra-wide-lane or the wide-lane combination, which can theoretically be viewed as a special case of decorrelation (Teunissen et al. 2002). However, if only (extra) wide-lane ambiguities are fixed, the positioning accuracy can only reach the decimeter level, i.e., it is far from meeting the requirement of centimeter-level accuracy (Feng 2008). Third, the ambiguity subset is selected based on the quality of the ambiguities indicated by various metrics, e.g., the ambiguity dilution of precision (ADOP) (Teunissen 1997; Parkins 2011) or the variance after decorrelation (Wang and Feng 2013). However, poor observations contaminate the estimation of the float ambiguities, so the variances no longer reflect the real quality of the float ambiguities (Henkel and Günther 2010).

Considering the pros and cons of the above methods, a combined strategy is often used for the selection of ambiguity subsets. Parkins (2011) selected the ambiguities based on two ordering methods, i.e., ADOP and SNR. Li et al. (2014) selected a subset of ambiguities by gradually increasing the elevation angle. Li and Zhang (2015) first selected the ambiguities based on elevation and variances and then refined the subsets by using the decorrelated variances. Other strategies for selecting the ambiguity subsets have also been used for ambiguity resolution. Hou et al. (2016) proposed a two-step success rate criterion (TSRC), which selects the subset that maximizes the expected precision gain among a set of preselected subsets while controlling the failure rate. Castro-Arvizu et al. (2021) proposed a precision-driven PAR approach that employs the formal precision of the (potentially fixed) positioning solution as the selection criterion for the ambiguity subset.

The above studies showed good performance with experimental static data. However, for kinematic datasets, especially in urban areas, GNSS observations are prone to interference by more unpredictable factors, such as the frequent loss of lock and cycle slips due to occlusion or rapid changes in satellites, large observation noise and more gross errors (Hsu 2018). Thus, effective and robust methods should be applied to resist these undesirable errors, yielding high-precision and high-reliability float ambiguity. For example, Shi et al. (2019) proposed a quality control algorithm considering both code and phase observation errors, and Liu et al. (2019) proposed an improved robust Kalman filter strategy based on the IGG3 (Institute of Geodesy and Geophysics) (Yang 1994) method for kinematic RTK. However, they did not address the influence of GNSS quality control on ambiguity resolution. Minimizing the influence of outliers to obtain a high-precision float solution is an important prerequisite for ambiguity resolution. Therefore, to improve the positioning performance in urban areas, the PAR strategy needs to be investigated to analyze the impacts of abnormal observations on AR and improve the success rate of AR and the GNSS positioning performance.

The principles of LAMBDA (Least-squares AMBiguity Decorrelation Adjustment) (Teunissen 1995) and PAR are presented following the introduction. Afterward, the proposed ambiguity selection strategy based on the IGG3 quality control method as well as the sequential partial ambiguity resolution are presented. By analyzing two field experiments, we evaluate the effectiveness and the performance of the proposed algorithm. Finally, this study is summarized.

LAMBDA method

The GNSS ambiguity is the unknown integer number of cycles at the time the carrier phase is first tracked. The observation model of the double-difference measurements for RTK can be described as follows:

\[y=Aa+Bb+\varepsilon\]

where \(a{\in {\mathbb{Z}}}^{n}\) is the integer ambiguity parameter vector and \(b\in {\mathbb{R}}^{n}\) is the real-valued parameter vector, mainly comprising the baseline components and atmospheric delay parameters. \(A\) and \(B\) are the design matrices. \(y\in {\mathbb{R}}^{m}\) contains the observed-minus-computed values for the code and carrier-phase observables, and \(\varepsilon\) represents the random noise.

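GNSS precise positioning usually consists of four steps: (1) estimate the float ambiguities and other parameters; (2) fix the float ambiguities to integer values; (3) validate the integer ambiguities; and (4) update the position coordinates using the fixed ambiguities. In the first step, the float estimates of the ambiguities \(\widehat{a}\) and the other parameters \(\widehat{b}\), together with their variance–covariance matrix, are obtained by weighted least-squares estimation, denoted as follows:

\[\begin{bmatrix}\widehat{a}\\ \widehat{b}\end{bmatrix},\quad \begin{bmatrix}{Q}_{\widehat{a}\widehat{a}} & {Q}_{\widehat{a}\widehat{b}}\\ {Q}_{\widehat{b}\widehat{a}} & {Q}_{\widehat{b}\widehat{b}}\end{bmatrix}\]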

The integer estimation maps the real-valued float ambiguities to integers:

\[\check{a}=\mathrm{I}\left(\widehat{a}\right)\]

where \(\mathrm{\rm I}:{\mathbb{R}}^{n}\to {\mathbb{Z}}^{n}\) is the integer mapping from the n-dimensional space of real numbers to the n-dimensional space of integers.

The most extensively used integer estimation methods are integer rounding (IR), integer bootstrapping (IB) and integer least squares (ILS). The ILS method is efficiently implemented in the LAMBDA software, and it has the optimal performance regarding the success rate, i.e., the probability of correctly fixing the integer ambiguities. In this study, we use LAMBDA to solve any subset of ambiguities.

Because the ambiguities are highly correlated, LAMBDA applies the Z-transformation to decorrelate them (Teunissen 1995), transforming the original ambiguities into a new set as follows:

\[\widehat{z}={Z}^{T}\widehat{a}\]

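The corresponding variance–covariance matrices can be obtained as follows:

\[{Q}_{\widehat{z}\widehat{z}}={Z}^{T}{Q}_{\widehat{a}\widehat{a}}Z,\quad {Q}_{\widehat{b}\widehat{z}}={Q}_{\widehat{b}\widehat{a}}Z\]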

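After the transformation, the float solution and its variance–covariance matrix become:

\[\begin{bmatrix}\widehat{z}\\ \widehat{b}\end{bmatrix},\quad \begin{bmatrix}{Q}_{\widehat{z}\widehat{z}} & {Q}_{\widehat{z}\widehat{b}}\\ {Q}_{\widehat{b}\widehat{z}} & {Q}_{\widehat{b}\widehat{b}}\end{bmatrix}\]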

After decorrelation, the search space of the ambiguity vector \(\widehat{z}\) in the Z domain is substantially reduced. Once the search is finished, the integer solution is mapped back to the original space to obtain the integer estimate \(\check{a}\) of \(\widehat{a}\). Afterward, in the third step, the fixed ambiguities \(\check{a}\) are validated using an ambiguity acceptance test, of which the ratio test is the most widely used (Euler and Schaffrin 1991). Once the estimates pass the ambiguity validation, the fixed solution of the baseline components and other parameters can be obtained:

\[\check{b}=\widehat{b}-{Q}_{\widehat{b}\widehat{a}}{Q}_{\widehat{a}\widehat{a}}^{-1}\left(\widehat{a}-\check{a}\right)\]

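If the full-set ambiguity resolution (FAR) cannot be implemented, the float ambiguity vector \(\widehat{z}\) in the Z domain can be divided into two parts, \({\widehat{z}}_{1}\) and \({\widehat{z}}_{2}\), and the corresponding covariance matrix is as follows:

\[{Q}_{\widehat{z}\widehat{z}}=\begin{bmatrix}{Q}_{{\widehat{z}}_{1}{\widehat{z}}_{1}} & {Q}_{{\widehat{z}}_{1}{\widehat{z}}_{2}}\\ {Q}_{{\widehat{z}}_{2}{\widehat{z}}_{1}} & {Q}_{{\widehat{z}}_{2}{\widehat{z}}_{2}}\end{bmatrix}\]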

where \({\widehat{z}}_{1}\) contains the double-difference ambiguities that are hard to fix, and \({\widehat{z}}_{2}\) contains those that are easily fixed according to specific criteria in the Z space. Once \({\widehat{z}}_{2}\) is correctly fixed to its integer value \({\check{z}}_{2}\), the fixed solution \(\check {b}\) can be obtained directly as follows:

\[\check{b}=\widehat{b}-{Q}_{\widehat{b}{\widehat{z}}_{2}}{Q}_{{\widehat{z}}_{2}{\widehat{z}}_{2}}^{-1}\left({\widehat{z}}_{2}-{\check{z}}_{2}\right)\]

where \({Q}_{\widehat{b}{\widehat{z}}_{2}}\) is the submatrix of \({Q}_{\widehat{b}\widehat{z}}\), relating to \({\widehat{z}}_{2}\).

A sequential strategy for partial ambiguity resolution

This section presents a strategy based on the IGG3 quality control algorithm for ambiguity selection. Moreover, a sequential partial ambiguity resolution strategy is further proposed.

Quality control and ambiguity selection based on IGG3

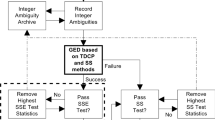

The abnormal observations will bias the float ambiguity estimation. If they are not handled carefully, the selection of ambiguity subsets and ambiguity resolution will be impacted (Teunissen 2001; Henkel and Günther 2010). The filtered residuals usually can reflect the quality of the observations; in particular, the double-difference phase filtered residuals can be used to measure the quality of the ambiguity estimates. Therefore, quality control and an ambiguity selection approach are proposed based on the IGG3 algorithm. Figure 1 shows the workflow, and the following describes it in detail.

Given that the Kalman filter is used to estimate the unknown variable \(X\), the filtered residuals \(V\) of the observations (including code filtered residuals \({v}_{P}\) and phase filtered residuals \({v}_{L}\)) and their variance and covariance matrix \({C}_{vv}\) can be obtained as follows:
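A common way to write these quantities, assuming the sign convention \(V=BX-l\) and writing \({C}_{x}\) for the covariance matrix of the filtered state \(X\) (both are assumptions of this sketch), is:

\[V=BX-l,\quad {C}_{vv}={C}_{l}-B{C}_{x}{B}^{T}\]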

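where \(B\) is the design matrix, \(l\) is the observed-minus-computed (OMC) vector, and \({C}_{l}\) is the variance–covariance matrix of the OMC vector. The normalized filtered residuals \({\overline{v} }_{i}\) are obtained by dividing each residual \({v}_{i}\) by its standard deviation \({\sigma }_{{v}_{i}}\), i.e., the square root of the corresponding diagonal element of \({C}_{vv}\), as follows:

\[{\overline{v} }_{i}=\frac{{v}_{i}}{{\sigma }_{{v}_{i}}}\]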

where the subscript \(i\) denotes the code (\(P\)) or the phase (\(L\)). Shi et al. (2019) showed that the positioning accuracy can be improved by considering the code errors in relative positioning. Hence, we adjust the weight \({\gamma }_{ii}\) for the raw and the normalized filtered residuals of both the code and phase by using the IGG3 algorithm (Yang et al. 2002; Liu et al. 2019):
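In its widely used three-segment form, with \({k}_{0}\) and \({k}_{1}\) the thresholds specified next, the IGG3 weight factor for a residual \(v\) reads as follows (the exact expression is an assumption here, taken from the standard IGG3 scheme):

\[{\gamma }_{ii}=\begin{cases}1, & \left|v\right|\le {k}_{0}\\ \dfrac{{k}_{0}}{\left|v\right|}{\left(\dfrac{{k}_{1}-\left|v\right|}{{k}_{1}-{k}_{0}}\right)}^{2}, & {k}_{0}<\left|v\right|\le {k}_{1}\\ 0, & \left|v\right|>{k}_{1}\end{cases}\]

The three branches correspond to the acceptance, down-weight, and rejection regions, respectively.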

For the code filtered residuals \({v}_{P}\), \({k}_{0}=2\) m and \({k}_{1}=3\) m are taken as the thresholds, whereas \({\overline{k} }_{0}=1.5\) and \({\overline{k} }_{1}=4\) are used for the normalized code filtered residuals \({\overline{v} }_{P}\). Once \({v}_{P}\) or \({\overline{v} }_{P}\) falls in the down-weight or the rejection region, the satellite is checked for a cycle slip, and if one exists, the ambiguity is reset. The observation \(l\) of this satellite is deleted, and \({C}_{l}\) is resized to reduce the influence of abnormal codes on ambiguity initialization. Afterward, the estimation is repeated until all code observations fall in the acceptance region.

For the carrier filtered residuals \({v}_{L}\), \({k}_{0}=3\) cm and \({k}_{1}=9\) cm are taken as the thresholds, whereas \({\overline{k} }_{0}=1.5\) and \({\overline{k} }_{1}=4\) are used for the normalized carrier filtered residuals \({\overline{v} }_{L}\) (Liu et al. 2019). As kinematic urban data are processed in this study, the value of \({\overline{k} }_{1}\) is slightly increased compared to Liu et al. (2019). Once the filtered residuals fall into the down-weight or the rejection region, the phase observations are abnormal with high probability. Hence, the corresponding ambiguities are marked and not used for ambiguity resolution. Similar to the code, the unknown variable \(X\) is then re-estimated iteratively. Furthermore, if the phase filtered residual falls into the rejection region twice, an undetected cycle slip is assumed in the observations. Hence, the ambiguity parameter is reset, and the estimation is repeated until all phase observations pass the test.

Finally, the filtered float solution \(X\) and its corresponding covariance matrix \({C}_{x}\) are obtained, and all the marked ambiguities are not used for ambiguity resolution. For computational efficiency, the maximum number of iterations is set to 5. Considering that the filtered residuals of normal observations are biased by the gross errors, only the one with the largest variance is removed for estimation once more than one observation falls into the rejection domain at the same time (Liu et al. 2019).
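The screening described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the weight function is the standard three-segment IGG3 form, the function names (`igg3_weight`, `region`, `phase_is_marked`) are hypothetical, and combining the raw and normalized tests with a logical "or" is an interpretation of the text. The thresholds are the ones quoted above.

```python
def igg3_weight(v: float, k0: float, k1: float) -> float:
    """Three-segment IGG3 weight factor for one residual (assumed standard form)."""
    a = abs(v)
    if a <= k0:
        return 1.0  # acceptance region: weight unchanged
    if a <= k1:
        return (k0 / a) * ((k1 - a) / (k1 - k0)) ** 2  # down-weight region
    return 0.0  # rejection region: observation gets zero weight


def region(v: float, k0: float, k1: float) -> str:
    """Name of the IGG3 region that a residual falls into."""
    a = abs(v)
    if a <= k0:
        return "accept"
    if a <= k1:
        return "down-weight"
    return "reject"


# Thresholds quoted in the text (raw residuals in meters).
CODE_RAW = (2.0, 3.0)      # k0 = 2 m, k1 = 3 m
PHASE_RAW = (0.03, 0.09)   # k0 = 3 cm, k1 = 9 cm
NORMALIZED = (1.5, 4.0)    # k0 = 1.5, k1 = 4 for normalized residuals


def phase_is_marked(v_raw_m: float, v_norm: float) -> bool:
    """True if a phase ambiguity would be marked and left out of AR.

    Assumption: the ambiguity is marked when either the raw or the
    normalized residual leaves the acceptance region.
    """
    return (region(v_raw_m, *PHASE_RAW) != "accept"
            or region(v_norm, *NORMALIZED) != "accept")
```

For instance, a phase residual of 5 cm exceeds the 3 cm threshold, so `phase_is_marked(0.05, 1.0)` flags the ambiguity even though the normalized residual looks unremarkable.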

With the above approach, most of the abnormal observations are down-weighted for estimation, and the corresponding ambiguities are removed for ambiguity resolution. However, due to the complexity of the urban environment, there are possible undetected outliers. Therefore, the following two empirical criteria are used for abnormal ambiguity identification:

1) Satellites tracked for fewer than 2 consecutive epochs are excluded from ambiguity resolution, as they are potential NLOS signals.

2) If the ratio value decreases substantially (i.e., lower by half) compared to the previous one when an ambiguity is selected for ambiguity resolution, the quality of the corresponding measurement is possibly low. Hence, the satellite is excluded from ambiguity resolution at the current epoch.
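The two empirical criteria above amount to a simple predicate. The sketch below is hypothetical: the function name and parameters are illustrative, and "lower by half" is read as the new ratio falling below 0.5 times the reference ratio.

```python
def exclude_from_ar(tracked_epochs: int,
                    ratio_with: float,
                    ratio_reference: float,
                    min_epochs: int = 2,
                    drop_factor: float = 0.5) -> bool:
    """Return True if a satellite should be left out of ambiguity resolution.

    tracked_epochs  : number of consecutive epochs the satellite has been tracked
    ratio_with      : ratio value obtained when its ambiguity is included
    ratio_reference : the previous ratio value used for comparison
    """
    # Criterion 1: too short a tracking history, potential NLOS signal.
    if tracked_epochs < min_epochs:
        return True
    # Criterion 2: including the ambiguity roughly halves the ratio value
    # (assumed reading of "lower by half").
    return ratio_with < drop_factor * ratio_reference
```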

Sequential partial ambiguity resolution

Due to the high complexity of the urban environment, the selection of ambiguity subsets for PAR needs to consider many more factors to ensure the robustness, stability, reliability, and accuracy of the positioning. The above quality control strategy is used not only to improve the precision of the float solution but also to identify low-quality ambiguities and thereby reduce their contamination of AR. In addition, considering that the dependency of observation quality on elevation is still valid in some cases, ambiguity selection based on the elevation angle is also used (Li et al. 2014; Teunissen et al. 2014). Finally, subset selection based on the decorrelated variance is employed to further improve the fixing success rate, as it fully utilizes the covariance information of the ambiguities (Shi and Gao 2012).

With the above consideration, a sequential partial ambiguity resolution (SPAR) strategy is proposed to improve the ambiguity fixing rate and the positioning accuracy in the urban environment. Figure 2 demonstrates the workflow, and the specific process is presented in the following:

1) Full ambiguity resolution (FAR). The bootstrapping success rate test (BSRT) is calculated with all float ambiguities. Once the BSRT is passed, the LAMBDA algorithm is applied for FAR, and the ratio test is used for ambiguity validation. The fixed solution is obtained when the ratio test is passed. However, if either test fails, step 2 is performed.

2) PAR with ambiguity selection based on IGG3. Procedures similar to those in step 1 are applied to the selected subset, and if either test fails, step 3 is performed.

3) PAR with ambiguity selection based on the elevation angle. In this step, the elevation mask is raised in 5° steps up to at most 35°, which avoids an excessively high mask and poor geometry. Procedures similar to those in step 1 are applied, and if they fail, the final step is performed.

4) Ambiguity selection based on the decorrelated variance. First, BSRT is performed for the selected subsets after removing the ambiguity with the largest variance each time. Then, the LAMBDA algorithm is used to fix the selected ambiguities, and the ratio test is used for validation. Once the ratio test is passed, the fixed solution is obtained. Otherwise, the ambiguities are reselected based on the decorrelated variance until there are no more than 4 ambiguities. In this case, the float solution is output.
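The four steps above form a cascade that can be sketched as follows. This is a minimal illustration, not the authors' implementation: all names are hypothetical, `try_fix` stands in for the BSRT, LAMBDA search, and ratio test applied to one candidate subset, the elevation mask is assumed to start from a 15° mask, and the later stages are assumed to operate on the set already screened by IGG3. In the actual method the variance-based step works on decorrelated ambiguities in the Z domain, whereas this sketch simply uses labeled ambiguities.

```python
from typing import Callable, Dict, Iterator, List, Set, Tuple


def elevation_subsets(elev_deg: Dict[str, float], start: float = 15.0,
                      step: float = 5.0, stop: float = 35.0) -> Iterator[List[str]]:
    """Yield subsets for masks start+step, ..., stop (20, 25, 30, 35 deg by default)."""
    mask = start + step
    while mask <= stop:
        yield [k for k, e in elev_deg.items() if e >= mask]
        mask += step


def variance_subsets(variance: Dict[str, float],
                     min_size: int = 4) -> Iterator[List[str]]:
    """Drop the largest-variance ambiguity each time; stop once only min_size would remain."""
    kept = sorted(variance, key=variance.get)  # ascending variance
    while len(kept) - 1 > min_size:
        kept.pop()  # remove the ambiguity with the largest variance
        yield list(kept)


def sequential_par(ambs: List[str], marked: Set[str],
                   elev_deg: Dict[str, float], variance: Dict[str, float],
                   try_fix: Callable[[List[str]], bool]) -> Tuple[str, List[str]]:
    """Try FAR, then IGG3-, elevation- and variance-based subsets in turn."""
    screened = [a for a in ambs if a not in marked]
    stages = [
        ("FAR", iter([list(ambs)])),
        ("PAR-IGG3", iter([screened])),
        ("PAR-ELE", elevation_subsets({a: elev_deg[a] for a in screened})),
        ("PAR-DCV", variance_subsets({a: variance[a] for a in screened})),
    ]
    for name, subsets in stages:
        for subset in subsets:
            if subset and try_fix(subset):
                return name, subset  # fixed solution from this stage
    return "FLOAT", []  # no subset validated: output the float solution
```

Keeping the validation behind a single `try_fix` callable keeps the ordering logic separate from the numerical work, so the same cascade could be run with different acceptance tests.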

Experiment and analysis

To verify the performance of the proposed algorithm, we carried out two kinematic experiments in urban areas. RTK is employed for positioning, and the length of the baseline is less than 10 km. Table 1 summarizes the basic information of the two experiments. In addition to the buildings along the way, a tunnel and a viaduct are passed in the first experiment, whereas the second is obstructed by trees. In both experiments, a NovAtel OEM7500 receiver is used as the rover to track GPS L1/L2, BDS B1/B2 and GLONASS G1/G2 signals. The smoothed RTK solution from the NovAtel Waypoint 8.9 software package is used as the reference. However, it cannot output a position for all epochs; thus, only the epochs with a quality factor less than 4 are selected for positioning analysis. An ambiguity-fixed solution with a horizontal error greater than 10 cm or a vertical error greater than 15 cm is considered to be fixed incorrectly. In the discussion of ambiguities, the reference integer double-difference ambiguities are obtained from postprocessing solutions.
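The criterion for an incorrectly fixed epoch can be written as a small check. The sketch below is illustrative: the function name is hypothetical, and the horizontal error is assumed to be the 2D norm of the north and east errors.

```python
import math


def is_wrong_fix(err_n_m: float, err_e_m: float, err_u_m: float,
                 h_limit_m: float = 0.10, v_limit_m: float = 0.15) -> bool:
    """Flag a fixed epoch as incorrectly fixed.

    Errors are differences to the reference trajectory in meters.
    Assumption: horizontal error = sqrt(north^2 + east^2).
    """
    horizontal = math.hypot(err_n_m, err_e_m)
    return horizontal > h_limit_m or abs(err_u_m) > v_limit_m
```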

Data processing methods

For ambiguity validation, the ratio test is usually used, and the threshold \(c\) is selected empirically, such as 1.5 (Han and Rizos 1996), 2.0 (Wei and Schwarz 1995), or 3.0 (Leick et al. 2015). If a large threshold, 3.0 for example, is set, correctly fixed ambiguities are prone to be rejected, whereas incorrectly fixed ambiguities may be accepted if a smaller threshold is used. Therefore, it is better to use a varying threshold instead of a fixed one. Teunissen and Verhagen (2009) demonstrated that the Fixed Failure-rate Ratio Test (FFRT) is better than the fixed-threshold ratio test, as it can adapt to the variation in the number of ambiguities. For FFRT, given a bootstrapping success rate and the number of ambiguities, the critical value μ can be found in the look-up table given in Verhagen et al. (2013). In addition, considering that the ratio test cannot reflect the observation condition and the quality of the fixed solution (Teunissen and Verhagen 2009), SPAR uses both BSRT and FFRT for ambiguity validation.
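The two validation quantities mentioned above can be illustrated with a short, dependency-free sketch. It assumes the usual bootstrapping success rate formula, the product over all ambiguities of \(2\Phi (1/(2{\sigma }_{i|I}))-1\), with the conditional variances taken from an \(LD{L}^{T}\) factorization of the (decorrelated) ambiguity covariance matrix, and a ratio test that accepts when the second-best squared distance divided by the best one reaches the threshold. The function names and the conditioning order are choices made for this sketch; the FFRT look-up table is not reproduced.

```python
import math
from typing import List, Sequence


def conditional_variances(Q: Sequence[Sequence[float]]) -> List[float]:
    """Diagonal D of Q = L D L^T with L unit lower triangular."""
    n = len(Q)
    L = [[0.0] * n for _ in range(n)]
    D = [0.0] * n
    for j in range(n):
        D[j] = Q[j][j] - sum(L[j][k] ** 2 * D[k] for k in range(j))
        for i in range(j + 1, n):
            L[i][j] = (Q[i][j] - sum(L[i][k] * L[j][k] * D[k] for k in range(j))) / D[j]
    return D


def bootstrap_success_rate(Q: Sequence[Sequence[float]]) -> float:
    """Product of 2*Phi(1/(2*sigma)) - 1 over the conditional standard deviations."""
    p = 1.0
    for d in conditional_variances(Q):
        sigma = math.sqrt(d)
        # 2*Phi(x) - 1 equals erf(x / sqrt(2)); here x = 1 / (2 * sigma).
        p *= math.erf(1.0 / (2.0 * sigma * math.sqrt(2.0)))
    return p


def ratio_test(best_sq_norm: float, second_sq_norm: float, threshold: float) -> bool:
    """Accept the best integer candidate if second/best >= threshold."""
    return second_sq_norm / best_sq_norm >= threshold
```

With a single ambiguity of unit variance (in cycles squared), the success rate is only about 0.38, which shows why poorly determined ambiguities are removed before fixing is attempted.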

To show the performance of the proposed SPAR strategy and to compare it with FAR and with PAR based on each individual strategy, 5 solutions are determined for each experiment and listed in Table 2. For all of them, the raw dual-frequency observations of GPS, GLONASS, and BDS are used for positioning, and the a priori noises of the code and carrier measurements are set to 30 cm and 0.3 cm, respectively. The weighting is based on the elevation angle, and the elevation masks for data processing and ambiguity resolution are set to 7° and 15°, respectively. The broadcast ephemeris is used for the computation of the satellite positions. The tropospheric and ionospheric delays are corrected using the Saastamoinen and Klobuchar models, respectively. The IGG3 quality control method is used in each solution. To further demonstrate its benefit for float solutions, the positioning errors of the float solutions without (FS) and with the IGG3 quality control algorithm (FS-IGG3) are analyzed for some representative periods. The LAMBDA algorithm is used to fix the ambiguities, and the threshold of the ratio test is set as listed in Table 2.

Analysis of experiment 1

The experiment started from the campus of Wuhan University and finished in the Fozuling area of Wuhan, and Fig. 3 illustrates the trajectory. Figure 4 shows the variation of the number of satellites and the PDOP (Position Dilution of Precision) during the whole experiment. The average number of satellites is approximately 22, and the PDOP value is 0.54 on average owing to the use of multi-GNSS. However, the number of satellites varies rapidly. In the three areas labeled in Fig. 4 as a (viaduct of Luoshi Road), b (Mafangshan underground tunnel), and c (noise barrier of the Xiongchu viaduct), no GNSS signals can be tracked.

Figure 5 shows the time series of the positioning errors of Experiment 1 in the north, east, and up components for the five solutions. It is clear that when the full ambiguity resolution strategy is adopted, there are many points with large positioning errors, resulting in positioning accuracies of only 5.7 cm, 15.9 cm and 13.5 cm in the north, east, and up directions, respectively. With PAR, the positioning performance can be improved. A noticeable improvement can be observed for solution PAR-IGG3, whose accuracy reaches 3.4 cm, 7.5 cm, and 8.4 cm in the north, east, and up directions, respectively. Compared with the FAR solution, the accuracy is improved by 40.4%, 52.8%, and 37.8%, respectively, which indicates that low-accuracy observations have a large negative effect on ambiguity resolution. When only the decorrelated variances are used for ambiguity selection (PAR-DCV solution), the accuracy in the north, east, and up directions is improved by 14.0%, 6.3% and 15.6% to 4.9 cm, 14.9 cm, and 11.4 cm, respectively. This indicates that ambiguity selection based on the decorrelated variances contributes little to PAR if the low-quality observations are not handled properly, as the impacts of these observations on the selection of ambiguity subsets are amplified by the decorrelation process and contaminate the ambiguity resolution (Henkel and Günther 2010). In addition, for solution PAR-ELE, only 14.0%, 3.1%, and 5.9% improvements in positioning accuracy can be obtained in the north, east, and up directions, respectively, which are no higher than those of PAR-DCV. By combining all the strategies for ambiguity selection, the solution PAR-SEQ shows the best performance, and accuracies of 3.4 cm, 5.9 cm and 6.8 cm are achieved in the north, east, and up directions, respectively. Compared with the FAR solution, the positioning accuracy is improved by 40.4%, 62.9% and 49.6%, respectively.
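The improvement percentages quoted above follow from the relative reduction of the error with respect to FAR, i.e., (FAR value minus PAR value) divided by the FAR value. A short check using the values given in the text (the variable and function names are illustrative):

```python
def improvement_percent(baseline_cm: float, improved_cm: float) -> float:
    """Relative error reduction with respect to the baseline, in percent."""
    return 100.0 * (baseline_cm - improved_cm) / baseline_cm


# North, east, up accuracies in cm, as quoted in the text for Experiment 1.
FAR_CM = (5.7, 15.9, 13.5)
PAR_IGG3_CM = (3.4, 7.5, 8.4)
PAR_SEQ_CM = (3.4, 5.9, 6.8)

igg3_gain = [round(improvement_percent(f, p), 1) for f, p in zip(FAR_CM, PAR_IGG3_CM)]
seq_gain = [round(improvement_percent(f, p), 1) for f, p in zip(FAR_CM, PAR_SEQ_CM)]
print(igg3_gain)  # [40.4, 52.8, 37.8]
print(seq_gain)   # [40.4, 62.9, 49.6]
```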

Fig. 5 Time series of the positioning errors of Experiment 1 in the north (N, blue), east (E, green), and up (U, red) components for the five solutions with full ambiguity resolution (FAR), PAR with ambiguity selection based on IGG3 (PAR-IGG3), the elevation angle (PAR-ELE), the decorrelated variances (PAR-DCV), and the SPAR strategy (PAR-SEQ). The green shading represents the marked areas ①, ② and ③ in Fig. 3, respectively

The conditions of Experiment 1 are relatively complicated, so the ambiguities are easily fixed to wrong values. Figure 6 illustrates the float and the incorrectly fixed epochs for the five solutions. Figure 3 also shows the distribution of the float solutions and the incorrectly fixed solutions along the way, and Table 3 lists the corresponding statistical results. Among all the solutions, FAR has the lowest percentage of correctly fixed epochs (approximately 78.83%). Due to the relatively low critical value used for the ratio test (1.5), the proportion of incorrectly fixed epochs reaches 11.70%. With PAR, the percentage of correctly fixed epochs is noticeably improved, particularly for solution PAR-IGG3, for which it increases by approximately 18 percentage points to 96.74%. This again confirms that abnormal observations have a large impact on AR. The greatest percentage of correctly fixed epochs is obtained by solution PAR-SEQ (98.02%), which shows that combining the ambiguity selection strategies can improve the reliability of RTK in complex environments.

By combining all these strategies, the best positioning can be obtained, especially under severe occlusion. For example, in the region marked ① in Fig. 3, the vehicle exits the noise barrier, and the receiver starts to track the GNSS signals again. However, the observation quality is still poor, and the ambiguities need to be re-initialized. Figure 7 shows the time series of the positioning errors of FS and FS-IGG3. When the IGG3 quality control algorithm is adopted, the accuracy of the float solution is improved. The ambiguity selection based on the IGG3 quality control algorithm is also helpful for AR. Figure 8 illustrates the integer ambiguities of C09 at the L1 frequency and C11 at the L2 frequency for FAR and PAR-IGG3. Several incorrect resolutions are found in FAR, and the ambiguity selection based on IGG3 overcomes this problem. For example, at epoch UTC 01:35:05, the FAR integer ambiguities of C09-L1 and C11-L2 are abnormal. In PAR-IGG3, however, the ambiguities of G10, R04 and R13 are excluded from AR because of their poor quality, so the integer ambiguities of C09-L1 and C11-L2 can be fixed correctly. In summary, the quality control method is important for both float estimation and AR, and it helps the accuracy converge quickly after a complete loss of GNSS lock.

The PAR-ELE method is easy to implement and can sometimes make a contribution, particularly under good observation conditions. For example, in area ② in Fig. 3, it can be seen from Fig. 9 (left panel) that the elevation angles of most outliers are relatively low, so the accuracy of the PAR-ELE solution is improved during this period. However, the noise level is not completely correlated with the elevation angle (Hsu 2018), as shown by the blue circle in Fig. 9 (left panel), and PAR-ELE does not perform very well in these epochs. In addition, it can be seen from Fig. 9 (right panel) that the dependency of abnormal observations on the SNR is weak, so ambiguity selection based on the SNR may not be suitable for urban areas.

In area ③ in Fig. 3 (the intersection of the Xiongchu and Guanggu viaducts), in addition to the buildings on both sides, the occlusion from the noise barrier becomes strong. Similar to Fig. 7, Fig. 10 shows that the accuracy of the FS solution is lower than that of FS-IGG3. This indicates that the estimation is biased by abnormal observations. Furthermore, in contrast to area ①, there is no obvious abnormality in the float ambiguities, but Fig. 11 demonstrates that the numbers of fixed ambiguities of PAR-IGG3 and PAR-DCV differ. When low-precision ambiguities are included in the estimation procedure, the stochastic model cannot reflect the real quality of the measurements. As a result, the AR using LAMBDA is contaminated, and the ambiguity fixing rate of PAR-DCV is low, making the PAR-DCV solution inferior to PAR-IGG3 during UTC 01:48:25–01:48:45. In addition, although PAR-DCV fixes more ambiguities than PAR-IGG3 in the epochs marked by the orange circle in Fig. 11, the PAR-IGG3 solution still fixes more than 20 ambiguity parameters in these epochs. Therefore, their performance is almost the same. Overall, in the period of 60 s, PAR-SEQ has only one float epoch, whereas PAR-IGG3 and PAR-DCV each have 4 float epochs. This shows the effectiveness of SPAR.

Analysis of experiment 2

The experiment was carried out in downtown Yizhuang, Daxing District, Beijing, and Fig. 12 illustrates the trajectory of Experiment 2. As the experiment was carried out in the summer, in addition to the buildings, most of the occlusion originated from the trees along the road. Figure 13 shows the variations in the number of satellites and the PDOP during the whole experiment. The average number of tracked satellites is 24, and the PDOP value is 0.49. As the buildings along the trajectory are not too high, the number of tracked satellites is relatively large. However, in some regions, including the beginning of the experiment and the epochs around UTC 3:15, the number of visible satellites decreases considerably, mainly due to the obstruction of the trees.

Figure 14 shows the time series of the five solutions, and the positioning errors in the east, north, and up directions are also given in the legend. It can be observed that the performance of FAR is the worst among them all, particularly in the seriously occluded regions. The positioning accuracy only reaches 15.9 cm, 18.5 cm, and 22.2 cm in the north, east, and up directions, respectively. The other solutions show relatively better performance. For PAR-IGG3, the positioning accuracy in the north, east, and up directions reaches 6.6 cm, 7.1 cm, and 6.6 cm, respectively. The improvement of the PAR-ELE solution is marginal, and only a slight improvement in the east direction can be observed. This is mainly caused by the interference from the trees, which results in a low dependency of the observation noise on the elevation angle. Therefore, ambiguity selection based on the elevation angle does not work well. The positioning accuracy of the PAR-DCV solution reaches 14.9 cm, 16.9 cm, and 20.4 cm in the north, east, and up directions, respectively. The improvement is also low with respect to FAR, as the unremoved abnormal ambiguities contaminate the ambiguity selection based on the decorrelated variance. By combining the advantages of all these strategies, PAR-SEQ is the best solution, with positioning accuracies of 5.9 cm, 6.2 cm and 6.4 cm in the north, east, and up directions, respectively, and the improvement reaches 62.9%, 66.5% and 71.2% with respect to the FAR solution.

Fig. 14 Time series of the positioning errors of Experiment 2 in the north (N, blue), east (E, green), and up (U, red) components for the five solutions with full ambiguity resolution (FAR), PAR with ambiguity selection based on IGG3 (PAR-IGG3), the elevation angle (PAR-ELE), the decorrelated variances (PAR-DCV), and the SPAR strategy (PAR-SEQ). The green shading represents the areas ① and ② in Fig. 12

Figure 15 illustrates the float and the incorrectly fixed epochs for the different solutions. The distribution of the float solutions can be found in Fig. 12, and the statistics are listed in Table 4. The FAR solution still has the lowest proportion of correctly fixed epochs (only 87.54%) and the largest proportion of float epochs (9.34%). Due to the low dependency of the observation noise on the elevation angle, the PAR-ELE solution shows a performance similar to that of FAR. Although the proportion of correctly fixed epochs is slightly increased for solution PAR-DCV, the percentage of incorrectly fixed epochs increases by a factor of two and reaches 6.66%. Compared with these two solutions, the PAR-IGG3 solution increases the proportion of correctly fixed epochs to 96.36%. It is further improved by PAR-SEQ to 97.45%, with the lowest percentages of float and incorrectly fixed epochs. In summary, Experiment 2 confirms again that PAR-SEQ adapts better to complex environments than any single strategy.

It can be concluded from Fig. 14 and Table 4 that the accuracy improvement of PAR-SEQ is more pronounced than that of the other solutions in the shaded areas. For further investigation, the data during UTC 02:37:20–02:42:20 are analyzed, when the car moved from Tianbao Middle Street (Fig. 12 ①) to Tianhua North Street (Fig. 12 ②). The corresponding positioning errors are marked with green shading in Fig. 14. Compared with occlusion by buildings, the interference of trees with GNSS signals is much more serious and random. There is no noticeable correlation between the abnormal observations and the elevation angle or SNR (Fig. 16). Hence, the PAR-ELE solution brings little improvement in Experiment 2.

Figure 17 zooms in on the positioning errors of the FS and FS-IGG3 solutions. A large positioning error can be observed at the beginning of the FS solution, as many cycle slips occur during this period because of the shade of the trees shown in Fig. 18. For FS-IGG3, the abnormal code observations are removed to prevent them from initializing the ambiguity. Moreover, the low-quality measurements also bias the ambiguity estimation, which lengthens the convergence time of FS. Although there are more cycle slips at the end of the experiment, the number of ambiguity initializations is smaller than at the beginning. This explains why the FS solution does not show a noticeable positioning error at the end of the experiment.

Figure 19 shows the number of fixed ambiguities for PAR-IGG3 and PAR-DCV. The two solutions perform similarly when the observation conditions are good, such as in the periods marked by the cyan circle. In the occluded area, however, PAR-IGG3 performs slightly better, as indicated by the number of fixed epochs and ambiguities marked by the orange circle in Fig. 19, whereas PAR-DCV has more incorrectly fixed epochs. Figure 20 illustrates the integer ambiguities of G08 at the L1 frequency and G21 at the L2 frequency for PAR-IGG3 and PAR-DCV. PAR-IGG3 has the better precision and the smaller number of incorrect integer ambiguities. Therefore, a reasonable stochastic model helps to provide an unbiased estimate of the ambiguity and ensures the reliability of the fixed solution. In the marked area, PAR-SEQ has 7 fewer float epochs and 5 fewer incorrectly fixed epochs than PAR-IGG3, and 7 fewer float epochs and 31 fewer incorrectly fixed epochs than PAR-DCV. These results further confirm the effectiveness of SPAR.

Nevertheless, the positioning errors of some epochs are still large for the PAR-SEQ solution because of severe occlusion, which may be difficult to resolve with GNSS alone. When the vehicle crosses an intersection without tree occlusion, such as Fig. 12 ③, the ambiguity can be fixed correctly. If the ambiguities and the state of the vehicle are then propagated with the help of other sensors (such as an inertial navigation system), the positioning performance can be further improved (Chai et al. 2022).

Conclusions

A sequential ambiguity resolution strategy based on the IGG3 quality control algorithm is proposed and applied to improve RTK ambiguity resolution and positioning accuracy in urban areas. In contrast to the traditional partial ambiguity resolution method, the proposed strategy uses the robust quality control algorithm IGG3 to detect abnormal ambiguities, which reduces the contamination of the float ambiguities and the variance–covariance matrix by low-quality observations. In addition, the elevation angle and the decorrelated variance-based strategies are used sequentially for the further selection of ambiguity subsets, which improves the ambiguity resolution performance in urban areas.
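The sequential idea summarized above can be illustrated with a minimal sketch. It is not the authors' implementation: the thresholds `k0` and `k1`, the elevation mask, the variance limit, and the `accept` callback (a stand-in for an ambiguity validation step such as a ratio test) are all assumptions made for illustration. The third stage uses a per-ambiguity variance as a simplified stand-in for the decorrelated variances, which in practice refer to transformed ambiguities. The weight function is the commonly cited three-segment IGG3 form.

```python
def igg3_weight_factor(v, k0=1.5, k1=3.0):
    """Three-segment IGG3 weight factor for a standardized residual v.

    k0 and k1 are assumed thresholds, not values taken from the article.
    """
    a = abs(v)
    if a <= k0:
        return 1.0  # normal observation: keep full weight
    if a <= k1:
        return (k0 / a) * ((k1 - a) / (k1 - k0)) ** 2  # suspicious: down-weight
    return 0.0  # outlier: reject


def candidate_subsets(std_residuals, elevations_deg, variances,
                      elev_mask_deg=30.0, var_limit=0.25):
    """Yield (stage, indices) pairs, each stage stricter than the previous one."""
    keep = [i for i, v in enumerate(std_residuals) if igg3_weight_factor(v) > 0.0]
    yield "IGG3", list(keep)
    keep = [i for i in keep if elevations_deg[i] >= elev_mask_deg]
    yield "ELE", list(keep)
    # Simplification: a plain variance threshold instead of decorrelated variances.
    keep = [i for i in keep if variances[i] <= var_limit]
    yield "DCV", list(keep)


def first_accepted(subsets, accept):
    """Return the first non-empty subset that the validation callback accepts."""
    for stage, subset in subsets:
        if subset and accept(subset):
            return stage, subset
    return None, []


# Toy inputs (hypothetical): standardized residuals, elevations, variances.
residuals = [0.3, 4.2, 1.0, 2.0, 0.5]
elevations = [65.0, 40.0, 12.0, 50.0, 35.0]
variances = [0.01, 0.02, 0.05, 0.40, 0.03]

# Hypothetical acceptance rule standing in for a real validation test.
stage, subset = first_accepted(
    candidate_subsets(residuals, elevations, variances),
    accept=lambda s: len(s) <= 3,
)
print(stage, subset)  # ELE [0, 3, 4]
```

In this toy run the IGG3 stage rejects only the ambiguity with the large residual, the elevation stage then removes the low-elevation one, and the search stops there because the stand-in acceptance rule is satisfied before the variance stage is needed.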

To verify the performance of the algorithm, two sets of kinematic experiments in urban areas were carried out. The results indicate that the sequential ambiguity resolution strategy can substantially improve the ambiguity resolution and GNSS positioning performance, particularly in occluded regions and in the re-initialization stage after loss of lock. The performance of the different ambiguity selection strategies is also evaluated and compared. Among them, the IGG3 approach performs best, as low-quality ambiguities are removed before AR. This confirms that the detection of abnormal observations and ambiguities is essential for PAR. Ambiguity selection based on the elevation angle assumes that the observation noise is related to the satellite elevation angle; however, this assumption is not valid in the urban environment, where NLOS signals and multipath are far more common. Because this strategy is simple to apply, it is still incorporated into the sequential ambiguity resolution strategy. In addition, the decorrelated variance is used for further ambiguity selection. Combining the three strategies gives the best performance. With the proposed strategy, centimeter-level accuracy is obtained, as shown by the two kinematic experiments carried out in urban regions. However, the positioning accuracy still cannot be guaranteed in some areas with GNSS occlusion. Hence, multi-sensor fusion can be used to provide a better solution in the urban environment.

Data availability

The datasets collected for the two field experiments in this study are available from the corresponding author upon request.

References

Castro-Arvizu JM, Medina D, Ziebold R, Vilà-Valls J, Chaumette E, Closas P (2021) Precision-aided partial ambiguity resolution scheme for instantaneous RTK positioning. Remote Sens 13(15):2904. https://doi.org/10.3390/rs13152904

Chai D, Sang W, Chen G, Ning Y, Xing J, Yu M, Wang S (2022) A novel method of ambiguity resolution and cycle slip processing for single-frequency GNSS/INS tightly coupled integration system. Adv Space Res 69(1):359–375. https://doi.org/10.1016/j.asr.2021.10.007

Euler H-J, Schaffrin B (1991) On a measure for the discernibility between different ambiguity solutions in the static-kinematic GPS-mode. In: IAG Symposia no 107, kinematic systems in geodesy, surveying, and remote sensing. Springer, New York, pp 285–295

Feng Y (2008) GNSS three carrier ambiguity resolution using ionosphere-reduced virtual signals. J Geod 82(12):847–862. https://doi.org/10.1007/s00190-008-0209-x

Han S, Rizos C (1996) Integrated method for instantaneous ambiguity resolution using new generation GPS receivers. In: Proceedings of position, location and navigation symposium—PLANS '96. IEEE, Atlanta, GA, USA, pp 254–261

Henkel P, Günther C (2010) Partial integer decorrelation: optimum trade-off between variance reduction and bias amplification. J Geod 84(1):51–63. https://doi.org/10.1007/s00190-009-0343-0

Hou Y, Verhagen S, Wu J (2016) A data driven partial ambiguity resolution: two step success rate criterion, and its simulation demonstration. Adv Space Res 58(11):2435–2452. https://doi.org/10.1016/j.asr.2016.07.029

Hsu L-T (2018) Analysis and modeling GPS NLOS effect in highly urbanized area. GPS Solut 22(1):7. https://doi.org/10.1007/s10291-017-0667-9

Leick A, Rapoport L, Tatarnikov D (2015) GPS satellite surveying. Wiley, Hoboken

Li B, Shen Y, Feng Y, Gao W, Yang L (2014) GNSS ambiguity resolution with controllable failure rate for long baseline network RTK. J Geod 88(2):99–112. https://doi.org/10.1007/s00190-013-0670-z

Li P, Zhang X (2015) Precise point positioning with partial ambiguity fixing. Sensors 15(6):13627–13643. https://doi.org/10.3390/s150613627

Liu W, Li J, Zeng Q, Guo F, Wu R, Zhang X (2019) An improved robust Kalman filtering strategy for GNSS kinematic positioning considering small cycle slips. Adv Space Res 63(9):2724–2734. https://doi.org/10.1016/j.asr.2017.11.041

Liu X, Zhang S, Zhang Q, Zheng N, Zhang W, Ding N (2021) Theoretical analysis of the multi-GNSS contribution to partial ambiguity estimation and R-ratio test-based ambiguity validation. GPS Solut 25(2):52. https://doi.org/10.1007/s10291-020-01080-0

Odolinski R, Teunissen PJG, Odijk D (2015) Combined BDS, Galileo, QZSS and GPS single-frequency RTK. GPS Solut 19(1):151–163. https://doi.org/10.1007/s10291-014-0376-6

Parkins A (2011) Increasing GNSS RTK availability with a new single-epoch batch partial ambiguity resolution algorithm. GPS Solut 15(4):391–402. https://doi.org/10.1007/s10291-010-0198-0

Shi J, Gao Y (2012) A fast integer ambiguity resolution method for PPP. In: Proceedings of the 25th international technical meeting of the satellite division of the institute of navigation (ION GNSS 2012). pp 3728–3734

Shi J, Huang Y, Ouyang C (2019) A GPS relative positioning quality control algorithm considering both code and phase observation errors. J Geod 93(9):1419–1433. https://doi.org/10.1007/s00190-019-01254-w

Teunissen PJG (1995) The least-squares ambiguity decorrelation adjustment: a method for fast GPS integer ambiguity estimation. J Geod 70(1–2):65–82. https://doi.org/10.1007/BF00863419

Teunissen PJG (1997) A canonical theory for short GPS baselines. Part IV: precision versus reliability. J Geod 71(9):513–525. https://doi.org/10.1007/s001900050119

Teunissen PJG, Joosten P, Tiberius C (1999) Geometry-free ambiguity success rates in case of partial fixing. In: Proceedings of the 1999 national technical meeting of the institute of navigation. pp 201–207

Teunissen PJG (2001) Integer estimation in the presence of biases. J Geod 75(7–8):399–407. https://doi.org/10.1007/s001900100191

Teunissen PJG, Joosten P, Tiberius C (2002) A comparison of TCAR, CIR and LAMBDA GNSS ambiguity resolution. In: Proceedings of the 15th international technical meeting of the satellite division of the institute of navigation (ION GPS 2002). pp 2799–2808

Teunissen PJG, Verhagen S (2009) The GNSS ambiguity ratio-test revisited: a better way of using it. Surv Rev 41(312):138–151. https://doi.org/10.1179/003962609X390058

Teunissen PJG, Odolinski R, Odijk D (2014) Instantaneous BeiDou+GPS RTK positioning with high cut-off elevation angles. J Geod 88(4):335–350. https://doi.org/10.1007/s00190-013-0686-4

Verhagen S, Li B, Teunissen PJG (2013) Ps-LAMBDA: ambiguity success rate evaluation software for interferometric applications. Comput Geosci 54:361–376. https://doi.org/10.1016/j.cageo.2013.01.014

Wang J, Feng Y (2013) Reliability of partial ambiguity fixing with multiple GNSS constellations. J Geod 87(1):1–14. https://doi.org/10.1007/s00190-012-0573-4

Wei M, Schwarz K-P (1995) Fast ambiguity resolution using an integer nonlinear programming method. In: Proceedings of the 8th international technical meeting of the satellite division of the institute of navigation (ION GPS 1995). pp 1101–1110

Yang Y (1994) Robust estimation for dependent observations. Manuscr Geod 19(1):10–17

Yang Y, Song L, Xu T (2002) Robust estimator for correlated observations based on bifactor equivalent weights. J Geod 76(6):353–358

Acknowledgements

This study is financially supported by the National Natural Science Foundation of China (41974035, 42030109) and the Young Elite Scientists Sponsorship Program by China Association of Science and Technology (2018QNRC001). The editor and reviewers are acknowledged for their valuable comments and suggestions.

Cite this article

Li, Z., Xu, G., Guo, J. et al. A sequential ambiguity selection strategy for partial ambiguity resolution during RTK positioning in urban areas. GPS Solut 26, 92 (2022). https://doi.org/10.1007/s10291-022-01279-3

DOI: https://doi.org/10.1007/s10291-022-01279-3