Abstract

Reliability testing, namely receiver autonomous integrity monitoring (RAIM), consists of statistical testing of least-squares residuals of observations, e.g., on an epoch-by-epoch basis, with the aim of reliable navigation fault detection and exclusion (FDE). In this paper, classic RAIM and FDE methods are extended with testing of range-rate residuals to find inconsistent velocity solutions. The goal is to improve the reliability of the system, with special focus on degraded signal environments. The reliability-enhancement approaches discussed include a Backward-FDE scheme based on statistical outlier detection and an iteratively reweighted robust estimation technique, a modified Danish method. In addition, the weighting assigned to code and Doppler observations is assessed in order to fit a priori variance models to the estimation processes. The schemes discussed are also computationally suitable for a combined GPS/Galileo system. The objective of this paper is to assess position and velocity reliability testing and enhancement in urban and indoor conditions, and to analyze the resulting navigation accuracy with high-sensitivity GPS (HSGPS) tests. The results show the necessity of weighted estimation and FDE for reliability enhancement in degraded signal-environment navigation.


Introduction

Location-based services in personal and vehicular navigation applications, together with emergency-call positioning requirements, demand navigation capability even in degraded signal environments such as urban areas and indoors. Urban areas pose many challenges for satellite navigation, because buildings, for example, attenuate and block the satellite signals. Therefore, high-sensitivity receiver processing (MacGougan et al. 2002) is necessary to ensure sufficient observation availability. With high-sensitivity GPS (HSGPS), however, the receiver also acquires signals that the surroundings have degraded, e.g., through multipath propagation and cross-correlation effects. These severe interference effects associated with low-power signals result in large measurement errors; hence, reliability monitoring and enhancement schemes are essential.

Integrity and reliability information can be provided with the aid of user range accuracy (URA) information at the signal level, and with receiver autonomous integrity monitoring (RAIM), i.e., statistical reliability testing techniques, at the user level. It is also important to monitor both the GPS-based position and velocity solutions in order to obtain a consistent navigation solution. Reliability monitoring consists of statistical testing of least-squares (LS) residuals of the observations, e.g., on an epoch-by-epoch basis. The aim is to detect and exclude measurement errors with assigned certainty levels and thereby obtain consistency among the observations. Traditional RAIM techniques (Brown 1992; Parkinson and Spilker 1996; Ober 2003) aimed at fault isolation (Van Graas and Farrell 1993) will lead to a computationally demanding user-level integrity monitoring process if they are generalized to future integrated GPS/Galileo observations and multiple faults are considered. Therefore, there is a need to develop methods that lend themselves to combined GPS/Galileo and to multiple-error situations. Such methods should also allow simultaneous monitoring of the pseudorange and pseudorange rate (Doppler) observations in degraded signal environments. Multiple simultaneous signal failures affecting the pseudorange and pseudorange rate observations can easily occur in difficult positioning conditions and require special attention.

In this paper, statistical reliability testing is used to monitor whether the model used for parameter estimation is correct. It consists of a global test for detecting an inconsistent navigation situation and a local fault identification test for localizing and eliminating measurement errors recursively. In addition, a modified Danish estimation method, which enhances position and velocity reliability by deweighting observations iteratively, is assessed for urban navigation. The fault detection and exclusion (FDE) and iteratively reweighted estimation schemes are tested with static and kinematic urban/indoor GPS data collected with a high-sensitivity receiver.

The rest of the paper is organized as follows. The first section discusses LS position and velocity estimation in GPS, measurement weighting, RAIM, and the Backward-FDE scheme for position and velocity reliability monitoring in urban environments. It also discusses an estimation approach with built-in outlier detection, which deweights measurements based on their LS residuals (a modified Danish method). The second section presents results and analyses of the implemented position and velocity reliability-enhancement schemes on real HSGPS data. Finally, conclusions are given with remarks on future research.

Reliability monitoring

Introduction

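The linearized pseudorange measurement equations in LS epoch-by-epoch GPS positioning can be expressed as follows (Kaplan 1996; Parkinson and Spilker 1996):

\[ {\mathbf{\Delta} \mathbf{\rho }} = \mathbf{H}\,{\mathbf{\Delta}\mathbf{x}} + \boldsymbol{\varepsilon} \]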

where \({\mathbf{\Delta} \mathbf{\rho }}\) is the misclosure vector, i.e., the difference between the predicted and measured pseudoranges. \(\boldsymbol{\varepsilon}\) is the vector of pseudorange measurement errors, assumed to be normally distributed with zero mean, and \(\mathbf{H}\) is the design matrix. The array \({\mathbf{\Delta}\mathbf{x}}\) is defined as

\[ {\mathbf{\Delta}\mathbf{x}} = \begin{bmatrix} \Delta x & \Delta y & \Delta z & \Delta b \end{bmatrix}^{\mathrm{T}} \]

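and contains the increments of the unknown user coordinates and clock bias with respect to the linearization point. The unknown array \({\mathbf{\Delta}\mathbf{x}}\) can be estimated as

\[ {\mathbf{\Delta}\hat{\mathbf{x}}} = \left(\mathbf{H}^{\mathrm{T}}\mathbf{C}_l^{-1}\mathbf{H}\right)^{-1}\mathbf{H}^{\mathrm{T}}\mathbf{C}_l^{-1}\,{\mathbf{\Delta}\mathbf{\rho}} \]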

where \(\mathbf{C}_l\) is the covariance matrix of the pseudorange measurements, which is assumed in this paper to be diagonal. The estimates of the unknown user coordinates and clock bias are then obtained by adding the estimated increments to the approximate values, i.e., to the linearization point.
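The sketch below shows the weighted LS step \(\mathbf{\Delta}\hat{\mathbf{x}} = (\mathbf{H}^{\mathrm T}\mathbf{C}_l^{-1}\mathbf{H})^{-1}\mathbf{H}^{\mathrm T}\mathbf{C}_l^{-1}\mathbf{\Delta\rho}\) in pure Python, so it runs without third-party packages. Following the text, it assumes a diagonal covariance matrix, passed here as a list of variances. The function names are hypothetical, and a real receiver implementation would normally rely on a numerical linear-algebra library. The same function serves the Doppler (velocity) case with \(\mathbf{C}_d\).

```python
# Weighted least-squares sketch: x_hat = (H^T C^-1 H)^-1 H^T C^-1 d, with C diagonal.
# Pure Python; function names are illustrative only.

def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        acc = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def weighted_ls(h, misclosure, variances):
    """Weighted LS estimate for design matrix h (list of rows) and C = diag(variances)."""
    p = len(h[0])
    weights = [1.0 / v for v in variances]  # diagonal of C^-1
    normal = [[sum(w * row[i] * row[j] for w, row in zip(weights, h)) for j in range(p)]
              for i in range(p)]            # H^T C^-1 H
    rhs = [sum(w * row[i] * d for w, row, d in zip(weights, h, misclosure))
           for i in range(p)]               # H^T C^-1 d
    return solve_linear(normal, rhs)


# Toy usage: three noise-free observations of a two-parameter model.
h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(weighted_ls(h, [2.0, -1.0, 1.0], [1.0, 4.0, 9.0]))
```

Because the toy data are noise-free, the estimate reproduces the generating parameters (2, -1) whatever the weights. With real data, the weights determine how strongly each observation pulls the solution.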

The linearized Doppler measurement equation leading to a GPS-derived velocity solution (Kaplan 1996; Parkinson and Spilker 1996) can be written as

\[ {\mathbf{\Delta} \dot{\mathbf{\rho}}} = \mathbf{H}\,\mathbf{g} + \boldsymbol{\zeta} \]

where \({\mathbf{\Delta} \dot{\mathbf{\rho}}} = {\hat{\dot{\mathbf{\rho}}}} - \dot{\mathbf{\rho }}\) is the difference between the predicted and measured pseudorange rate observations. \(\boldsymbol{\zeta}\) is the vector of frequency estimate errors, expressed in m/s and assumed to be normally distributed with zero mean. The array \(\mathbf{g}\) is defined as

\[ \mathbf{g} = \begin{bmatrix} v_x & v_y & v_z & \dot{b} \end{bmatrix}^{\mathrm{T}} \]

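and contains the unknown components of the user velocity and the receiver clock drift. The array \(\mathbf{g}\) can be estimated as

\[ \hat{\mathbf{g}} = \left(\mathbf{H}^{\mathrm{T}}\mathbf{C}_d^{-1}\mathbf{H}\right)^{-1}\mathbf{H}^{\mathrm{T}}\mathbf{C}_d^{-1}\,{\mathbf{\Delta}\dot{\mathbf{\rho}}} \]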

where \(\mathbf{C}_d\) is the covariance matrix of the Doppler (measured pseudorange rate) observations and is also assumed to be diagonal.

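If redundant observations are available, the LS residuals of the pseudorange or pseudorange rate measurements can be obtained from the LS estimation as

\[ \hat{\mathbf{r}} = {\mathbf{\Delta}\mathbf{\rho}} - \mathbf{H}\,{\mathbf{\Delta}\hat{\mathbf{x}}} \]

or

\[ \hat{\mathbf{r}} = {\mathbf{\Delta}\dot{\mathbf{\rho}}} - \mathbf{H}\,\hat{\mathbf{g}} \]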

The resulting residual vector \({\hat{\mathbf{r}}}\) can be used to test the internal consistency (Teunissen 1998; Kuang 1996) of the pseudorange or pseudorange rate measurements. In addition, the residuals can be normalized (Teunissen 1998; Baarda 1968) as follows

\[ w_i = \frac{\hat{r}_i}{\sqrt{({\mathbf{C}}_{{\hat{\mathbf{r}}}})_{ii}}}, \qquad i = 1, \ldots, n \]

where n denotes the number of observations and \(({\mathbf{C}}_{{\hat{\mathbf{r}}}} )_{ii} \) is the ith diagonal element of the cofactor matrix \({\mathbf{C}}_{{\hat{\mathbf{r}}}}\). The cofactor matrix \({\mathbf{C}}_{{\hat{\mathbf{r}}}}\), i.e., the covariance matrix of the residuals (Ryan 2002), is

\[ {\mathbf{C}}_{{\hat{\mathbf{r}}}} = \mathbf{C} - \mathbf{H}\left(\mathbf{H}^{\mathrm{T}}\mathbf{C}^{-1}\mathbf{H}\right)^{-1}\mathbf{H}^{\mathrm{T}} \]

where \(\mathbf{C}\) denotes either the covariance matrix of the pseudorange measurements, \(\mathbf{C}_l\), or that of the pseudorange rate measurements, \(\mathbf{C}_d\).
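The following sketch computes the residuals, the diagonal of the cofactor matrix \(\mathbf{C}_{\hat{\mathbf{r}}} = \mathbf{C} - \mathbf{H}(\mathbf{H}^{\mathrm T}\mathbf{C}^{-1}\mathbf{H})^{-1}\mathbf{H}^{\mathrm T}\), and the normalized residuals \(w_i = \hat r_i/\sqrt{(\mathbf{C}_{\hat{\mathbf{r}}})_{ii}}\). It is pure Python and assumes a diagonal \(\mathbf{C}\); the helper names are hypothetical. Only the diagonal of the cofactor matrix is formed, since the normalization needs nothing more.

```python
import math


def invert(a):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        pv = m[col][col]
        m[col] = [v / pv for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n:] for row in m]


def normalized_residuals(h, misclosure, variances):
    """Return (r_hat, w) of the LS fit with C = diag(variances)."""
    n, p = len(h), len(h[0])
    weights = [1.0 / v for v in variances]
    q = invert([[sum(wt * row[i] * row[j] for wt, row in zip(weights, h)) for j in range(p)]
                for i in range(p)])  # (H^T C^-1 H)^-1
    rhs = [sum(wt * row[i] * d for wt, row, d in zip(weights, h, misclosure)) for i in range(p)]
    x_hat = [sum(q[i][j] * rhs[j] for j in range(p)) for i in range(p)]
    r_hat = [d - sum(row[j] * x_hat[j] for j in range(p)) for row, d in zip(h, misclosure)]
    w = []
    for i in range(n):
        # (C_r)_ii = sigma_i^2 - h_i^T (H^T C^-1 H)^-1 h_i; it is positive only with redundancy
        c_rr = variances[i] - sum(h[i][a] * q[a][b] * h[i][b]
                                  for a in range(p) for b in range(p))
        w.append(r_hat[i] / math.sqrt(c_rr))
    return r_hat, w


# Toy usage: one constant observed four times, the last observation biased by 4.
r_hat, w = normalized_residuals([[1.0]] * 4, [0.0, 0.0, 0.0, 4.0], [1.0] * 4)
print(r_hat)  # [-1.0, -1.0, -1.0, 3.0]
```

In the toy case, the LS fit spreads the bias of 4 on the last observation over all four residuals, and the other three become -1. This spreading is why the normalized residuals are tested rather than raw residual sizes.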

Simple height and vertical velocity constraints can be added to the estimation processes. This increases the measurement redundancy for both the solution computation and the reliability monitoring. It is accomplished by adding pseudo-observations of the height and the vertical velocity to the functional models of the estimation processes, together with related standard deviations in the stochastic models that constrain the variances of these states. Basically, this leaves only the horizontal and time components as unknowns to be estimated in the position and velocity computation. The epoch-by-epoch LS approach is used in this research instead of more practical filtering approaches for sensitivity-analysis purposes.
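A small hypothetical helper shows how a constraint raises the redundancy. It appends one pseudo-observation row to the design matrix, one entry to the misclosure vector, and one entry to the variance list. The state ordering [east, north, up, clock] in a local-level frame is an assumption of this example; in an Earth-fixed frame the row would instead contain the local up unit vector. A zero misclosure with a small standard deviation pulls the constrained state toward its a priori value: for position, the linearization-point height, and for velocity, zero vertical velocity.

```python
def add_constraint(h, misclosure, variances, state_index, value, sigma):
    """Append a pseudo-observation that directly observes one state (hypothetical helper).

    The new row selects the constrained state; `value` is its misclosure, using the
    same sign convention as the other observations, and sigma its standard deviation.
    """
    row = [0.0] * len(h[0])
    row[state_index] = 1.0
    return h + [row], misclosure + [value], variances + [sigma ** 2]


# Usage: 5 satellites and 4 unknowns give redundancy 1; a height constraint makes it 2.
h = [[0.1 * i, 0.2, 0.9, 1.0] for i in range(5)]
h2, d2, v2 = add_constraint(h, [0.0] * 5, [25.0] * 5, state_index=2, value=0.0, sigma=1.0)
print(len(h2) - len(h2[0]))  # 2
```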

Observation weighting based on C/N0

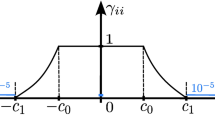

The carrier-to-noise density ratio, C/N0, is an essential measurable signal-quality value in a GPS receiver, and it can be used to weight the measurements (Wieser and Brunner 2000). In degraded signal environments, signal quality often depends on signal power, so C/N0-based weights provide more appropriate a priori estimates of the measurement error distributions. Following the example of Hartinger and Brunner (1999), the covariance matrix of the observations can be constructed as

\[ \mathbf{C} = \operatorname{diag}\left(a + b \cdot 10^{-\frac{(C/N_0)_1}{10}},\; \ldots,\; a + b \cdot 10^{-\frac{(C/N_0)_n}{10}}\right) \]

where \(\mathbf{C}\) refers to either \(\mathbf{C}_l\) or \(\mathbf{C}_d\). The constants a and b can be chosen empirically by assessing the actual observed measurement errors of the equipment used. In this paper, the constants for \(\mathbf{C}_l\) are chosen as a=10 and b=150², and those for \(\mathbf{C}_d\) as a=0.05 and b=50. The resulting dependency between the standard deviations of the observation errors and the C/N0 values is presented in Fig. 1.
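The C/N0-dependent variance model can be sketched as follows. This sketch assumes the functional form \(\sigma^2 = a + b \cdot 10^{-(C/N_0)/10}\), with C/N0 in dB-Hz, i.e., a SIGMA-ε-type term plus a constant floor. It also assumes that the pseudorange constant b is 150². The Doppler constants are the ones quoted in the text.

```python
import math

# Assumed model: sigma^2 = a + b * 10^(-C/N0 / 10), with C/N0 in dB-Hz.
PSEUDORANGE_AB = (10.0, 150.0 ** 2)  # resulting variance in m^2
DOPPLER_AB = (0.05, 50.0)            # resulting variance in (m/s)^2


def cn0_variances(cn0_dbhz, model):
    """Diagonal of C for observations tracked at the given C/N0 values (dB-Hz)."""
    a, b = model
    return [a + b * 10.0 ** (-c / 10.0) for c in cn0_dbhz]


for cn0 in (45.0, 30.0, 15.0):
    var_rho, = cn0_variances([cn0], PSEUDORANGE_AB)
    var_dop, = cn0_variances([cn0], DOPPLER_AB)
    print(f"{cn0:4.0f} dB-Hz: sigma_rho = {math.sqrt(var_rho):5.1f} m, "
          f"sigma_doppler = {math.sqrt(var_dop):4.2f} m/s")
```

The loop prints the a priori standard deviations at 45, 30 and 15 dB-Hz, which shows how quickly the assumed uncertainty grows for weak signals.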

Statistical reliability testing (RAIM)

In order to detect measurement errors, the LS residuals can be tested statistically. The observation errors are assumed to be Gaussian, zero-mean, and uncorrelated. Unfortunately, these assumptions do not necessarily hold in degraded signal environments.

Global test

A global test for detecting an inconsistent position or velocity solution is based on the a posteriori variance factor, \(\hat \sigma _0^2\), and the degree of freedom (n−p): it tests whether \(\hat \sigma _0^2 (n - p)\) is centrally χ² distributed or not. The a posteriori variance factor, \(\hat \sigma _0^2\), and the threshold for the global test, \(\hat \sigma _{0{\text{T}}}^2\), are defined as

\[ \hat\sigma_0^2 = \frac{\hat{\mathbf{r}}^{\mathrm{T}}\mathbf{C}^{-1}\hat{\mathbf{r}}}{n-p}, \qquad \hat\sigma_{0\text{T}}^2 = \frac{\chi^2_{1-\alpha,\,n-p}}{n-p} \]

where p denotes the number of parameters to be estimated, and α represents the false alarm rate. In the global test, the null hypothesis H0 states that the adjustment model is correct and that the distributional assumptions match reality. The alternative hypothesis Ha states that the adjustment model is not correct. If the a posteriori variance factor exceeds the threshold, H0 is rejected and an inconsistency is detected in the adjustment model. Figure 2 presents the central and non-central χ² density functions for eight degrees of freedom, (n−p)=8, which represent the null hypothesis, H0, and the alternative hypothesis, Ha, of the global consistency test. In Fig. 2, β represents the probability of a missed detection and λ the non-centrality parameter of the biased χ² distribution. The hypothesis testing is conducted as

\[ \hat\sigma_0^2 \le \hat\sigma_{0\text{T}}^2 \;\Rightarrow\; \text{accept } H_0, \qquad \hat\sigma_0^2 > \hat\sigma_{0\text{T}}^2 \;\Rightarrow\; \text{reject } H_0 \]

Therefore, the threshold, which depends on the false alarm rate and the degree of freedom, determines whether the null-hypothesis of the global test is accepted or rejected. If it is rejected, i.e. if the global test fails, an inconsistency in the assessed observations is assumed and some action should be taken to identify the potential measurement error.
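A sketch of the global test follows. The Python standard library has no chi-square quantile function, so the sketch computes one from the regularized incomplete gamma function (power series) and bisection. Where SciPy is available, `scipy.stats.chi2.ppf` would normally be used instead. A diagonal \(\mathbf{C}\) is assumed, so \(\hat{\mathbf{r}}^{\mathrm T}\mathbf{C}^{-1}\hat{\mathbf{r}}\) reduces to a weighted sum of squares. The function names are hypothetical.

```python
import math


def reg_lower_gamma(a, x):
    """Regularized lower incomplete gamma P(a, x) by its power series (moderate x only)."""
    if x <= 0.0:
        return 0.0
    term = total = 1.0 / a
    n = 0
    while term > total * 1e-16:
        n += 1
        term *= x / (a + n)
        total += term
    return total * math.exp(a * math.log(x) - x - math.lgamma(a))


def chi2_quantile(prob, dof):
    """Inverse central chi-square CDF, using CDF(x; dof) = P(dof/2, x/2) and bisection."""
    def cdf(x):
        return reg_lower_gamma(dof / 2.0, x / 2.0)

    lo, hi = 0.0, 1.0
    while cdf(hi) < prob:  # grow the bracket until it contains the quantile
        hi *= 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if cdf(mid) < prob:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def global_test(r_hat, variances, n_params, alpha):
    """Return (passed, sigma0_sq, threshold) for C = diag(variances)."""
    dof = len(r_hat) - n_params
    if dof < 1:
        raise ValueError("the global test needs redundancy n - p > 0")
    sigma0_sq = sum(r * r / v for r, v in zip(r_hat, variances)) / dof
    threshold = chi2_quantile(1.0 - alpha, dof) / dof
    return sigma0_sq <= threshold, sigma0_sq, threshold


# Ten residuals, two estimated parameters, one residual of 10 sigma:
print(global_test([0.1] * 9 + [10.0], [1.0] * 10, n_params=2, alpha=0.01))  # (False, ...)
```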

Local test

An attempt to identify the measurement error, in either the position or the velocity computation, may be made if there is enough redundancy to form the residuals. Assume that only one error exists at a time in the pseudorange (or, correspondingly, the pseudorange rate) observations. The error can then be identified by testing each normalized residual, \(w_i\), statistically against a quantile of the normal distribution with a false alarm rate α0. The null hypothesis H0,i, which states that the ith observation is not an outlier, is rejected if the ith normalized residual exceeds the threshold. The underlying assumptions of this local data snooping include that, in the unbiased case, the normalized residuals are normally distributed with zero expectation (Teunissen 1998; Leick 2004). Figure 3 presents the two normal distributions of the local test hypotheses, adapted from Leick (2004). The parameter λ0 in Fig. 3 is the non-centrality parameter relating the unbiased and biased normal distributions. The local testing is conducted as follows

\[ |w_i| \le \mathbf{n}_{1-\frac{\alpha_0}{2}} \;\Rightarrow\; \text{accept } H_{0,i}, \qquad |w_i| > \mathbf{n}_{1-\frac{\alpha_0}{2}} \;\Rightarrow\; \text{reject } H_{0,i} \]

Only the observation with the largest absolute normalized residual \(|w_i|\) is tested and possibly rejected, because an outlier in one observation generally inflates several \(w_i\). The measurement with the largest normalized residual exceeding the threshold is regarded as an outlier and excluded from the solution computation (Teunissen 1998). In other words, the kth observation is suspected to be erroneous when

\[ |w_k| \ge |w_i| \;\; \forall i \quad \text{and} \quad |w_k| > \mathbf{n}_{1-\frac{\alpha_0}{2}} \]
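The single-outlier local test needs only a few lines: it tests the largest \(|w_i|\) against the two-sided normal quantile \(\mathbf{n}_{1-\alpha_0/2}\). The quantile comes from `statistics.NormalDist` in the Python standard library. The function name is hypothetical.

```python
from statistics import NormalDist


def local_test(w, alpha0):
    """Return the index k of the suspected outlier, or None if no H0,i is rejected.

    Only the largest |w_i| is tested, against the two-sided quantile n_{1 - alpha0/2}.
    """
    threshold = NormalDist().inv_cdf(1.0 - alpha0 / 2.0)
    k = max(range(len(w)), key=lambda i: abs(w[i]))
    return k if abs(w[k]) > threshold else None


print(local_test([0.5, -4.0, 1.2], alpha0=0.001))  # 1 (threshold is about 3.29)
print(local_test([0.5, -3.0, 1.2], alpha0=0.001))  # None
```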

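The global and local consistency tests are part of a statistical reliability testing/outlier detection procedure originally proposed by Baarda (1968). If H0 in the global test is rejected, the local test is carried out for fault identification. The parameters α, β, α0, and β0 are interrelated (Baarda 1968; Caspary 1988), and only two of them can be chosen arbitrarily. The risk level α of the global test must be related to the corresponding parameter of the local test, α0, while the probability of missed detection, β0=β, is the same for both tests. An erroneous measurement that causes the global test to fail must, therefore, also be identified by the local test. Given α0 and β0, the α value is obtained by the following procedure:

\[ \lambda_0 = \left(\mathbf{n}_{1-\frac{\alpha_0}{2}} + \mathbf{n}_{1-\beta_0}\right)^2, \qquad P\left(\chi'^{2}(n-p,\lambda_0) \le \chi^2_{1-\alpha,\,n-p}\right) = \beta_0 \]

where \(\chi'^{2}(n-p,\lambda_0)\) denotes a non-central χ² variable with n−p degrees of freedom and non-centrality parameter λ0. In this procedure, λ0 is first computed from α0 and β0 for the one-dimensional local test. α is then chosen so that the global test with n−p degrees of freedom has the same missed-detection probability β0 for this λ0.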
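The coupling of α to α0 and β0 (Baarda's B-method) can be sketched numerically in three steps. First, λ0 is computed from the two normal quantiles. Second, bisection finds the global-test threshold below which the non-central χ² distribution (n−p degrees of freedom, non-centrality λ0) has probability β0. Third, α is taken as the central χ² tail probability beyond that threshold. The non-central CDF is evaluated as a Poisson mixture of central CDFs. The helper names are hypothetical, and the series is intended only for the moderate arguments that occur here.

```python
import math
from statistics import NormalDist


def reg_lower_gamma(a, x):
    """Regularized lower incomplete gamma P(a, x), power-series form (moderate x)."""
    if x <= 0.0:
        return 0.0
    term = total = 1.0 / a
    n = 0
    while term > total * 1e-16:
        n += 1
        term *= x / (a + n)
        total += term
    return total * math.exp(a * math.log(x) - x - math.lgamma(a))


def chi2_cdf(x, dof):
    return reg_lower_gamma(dof / 2.0, x / 2.0)


def noncentral_chi2_cdf(x, dof, lam, terms=100):
    """Non-central chi-square CDF as a Poisson(lam/2) mixture of central CDFs (lam > 0)."""
    half = lam / 2.0
    return sum(math.exp(j * math.log(half) - half - math.lgamma(j + 1))
               * chi2_cdf(x, dof + 2 * j) for j in range(terms))


def global_alpha(alpha0, beta0, dof):
    """Return (alpha, lambda0) for a global test with `dof` = n - p degrees of freedom."""
    nd = NormalDist()
    lam0 = (nd.inv_cdf(1.0 - alpha0 / 2.0) + nd.inv_cdf(1.0 - beta0)) ** 2
    lo, hi = 0.0, 1.0
    while noncentral_chi2_cdf(hi, dof, lam0) < beta0:  # bracket the threshold
        hi *= 2.0
    for _ in range(100):                               # bisection on the threshold
        mid = 0.5 * (lo + hi)
        if noncentral_chi2_cdf(mid, dof, lam0) < beta0:
            lo = mid
        else:
            hi = mid
    return 1.0 - chi2_cdf(0.5 * (lo + hi), dof), lam0


for dof in (1, 2, 4, 8):
    alpha, lam0 = global_alpha(0.001, 0.10, dof)
    print(f"n-p = {dof}: lambda0 = {lam0:.2f}, alpha = {alpha:.4f}")
```

For n−p = 1 the global and local tests coincide, so α equals α0. As the redundancy grows, α increases for the same λ0 and β0.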

Sequential local test

The assumption that only a single error can occur at a given time is not always valid, especially in urban areas. As an approximate procedure for detecting multiple observation errors with the local fault identification test, the single-fault local test may be applied recursively whenever a fault is detected. Thus, if an outlier is found and excluded, the test is repeated on the subsample that remains after the outlier is deleted (Hawkins 1980; Petovello 2003), provided that there is enough redundancy for multiple exclusions. In general, excluding m observations from either the position or the velocity computation requires a redundancy of at least m+1. The local test identifies and excludes measurements sequentially until no more outliers are found, as described in Fig. 4.

Fault detection and exclusion

In an overall FDE procedure, the global test can be performed after the residuals are estimated to determine the consistency status of the solution. If an inconsistency is detected, the sequential local test can be performed and erroneous observations recursively excluded. Detecting a failure requires at least one redundant measurement, (n−p)>0, whereas excluding erroneous observations requires a redundancy of at least two, (n−p)>1. The combined scheme, in which the sequential local test is performed whenever the global test fails, is suitable mainly for single-fault situations. The reason is that LS estimation tends to spread an error, and multiple errors in particular, over all the residuals (Kuusniemi and Lachapelle 2004). The sequential local test may therefore flag the wrong measurements as erroneous, especially when biases are large or multiple (Lu 1991). In addition, in epoch-by-epoch navigation calculations in degraded signal environments such as indoors, all the available measurements are vital for the solution geometry, so the number of exclusions should be kept to a minimum.

If more than one observation is excluded, the global test and the subsequent sequential local test should include reconsideration of observations rejected during earlier iterations (Wieser 2001). The Backward-FDE scheme in Fig. 5 contains such backward testing and is a suitable reliability-enhancement option for degraded signal environments. As presented in Fig. 5, a measurement excluded during the sequential local testing is reinstated in the solution computation if the global test passes with it included. In addition, in the sequential local testing part of the procedure, a measurement is excluded only if the resulting geometry is acceptable in terms of the horizontal dilution of precision (HDOP) value. This backward testing ensures that unnecessary exclusions are avoided.
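The sketch below combines the sequential local test with backward reconsideration on a deliberately simple toy model: one constant observed n times (\(\mathbf{H}\) is a column of ones, p = 1). For this model the LS estimate is the weighted mean and \((\mathbf{C}_{\hat{\mathbf{r}}})_{ii} = \sigma_i^2 - (\sum_j \sigma_j^{-2})^{-1}\). It is not the authors' implementation. The HDOP check is omitted because the toy model has no geometry, and the backward step simply reinstates an excluded observation whenever the global test still passes with it included. All function names are hypothetical.

```python
import math
from statistics import NormalDist


def reg_lower_gamma(a, x):
    """Regularized lower incomplete gamma P(a, x), power-series form (moderate x)."""
    if x <= 0.0:
        return 0.0
    term = total = 1.0 / a
    n = 0
    while term > total * 1e-16:
        n += 1
        term *= x / (a + n)
        total += term
    return total * math.exp(a * math.log(x) - x - math.lgamma(a))


def chi2_quantile(prob, dof):
    """Inverse central chi-square CDF by bisection."""
    lo, hi = 0.0, 1.0
    while reg_lower_gamma(dof / 2.0, hi / 2.0) < prob:
        hi *= 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if reg_lower_gamma(dof / 2.0, mid / 2.0) < prob:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def fit(obs, var, active):
    """LS fit of one constant to the active observations: (estimate, residuals, s)."""
    s = 1.0 / sum(1.0 / var[i] for i in active)  # (H^T C^-1 H)^-1
    x = s * sum(obs[i] / var[i] for i in active)
    return x, {i: obs[i] - x for i in active}, s


def global_ok(r, var, alpha):
    dof = len(r) - 1
    return sum(r[i] ** 2 / var[i] for i in r) / dof <= chi2_quantile(1.0 - alpha, dof) / dof


def backward_fde(obs, var, alpha, alpha0):
    """Return (estimate, sorted excluded indices) for the toy one-parameter model."""
    z = NormalDist().inv_cdf(1.0 - alpha0 / 2.0)
    active, excluded = set(range(len(obs))), []
    while len(active) - 1 > 1:                 # exclusion needs n - p > 1
        x, r, s = fit(obs, var, active)
        if global_ok(r, var, alpha):
            break
        w = {i: r[i] / math.sqrt(var[i] - s) for i in active}
        k = max(w, key=lambda i: abs(w[i]))
        if abs(w[k]) <= z:                     # local test finds no outlier
            break
        active.remove(k)
        excluded.append(k)
    for k in reversed(excluded):               # backward step: reconsider exclusions
        trial = active | {k}
        if global_ok(fit(obs, var, trial)[1], var, alpha):
            active = trial
    return fit(obs, var, active)[0], sorted(set(range(len(obs))) - active)


obs = [10.0, 10.2, 9.9, 10.1, 9.8, 25.0, 10.05, -5.0]
x, excl = backward_fde(obs, [0.04] * len(obs), alpha=0.01, alpha0=0.001)
print(excl)  # [5, 7]
```

With two gross outliers (indices 5 and 7), the forward pass removes them one at a time, largest normalized residual first. The backward pass then confirms that neither can be reinstated.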

Modified Danish method: iteratively reweighted estimation for reliability enhancement

The Danish method (Jørgensen et al. 1985; Wieser 2001) is an iteratively reweighted robust estimation technique used in geodetic applications. Through iterative weighted LS estimation, it aims at consistency between the model and the observations or, more precisely, at more appropriate a priori weights for the observations in the estimation procedure. In this paper, a modification of the Danish method is used in degraded signal environments to identify and deweight outlying observations. This is done by assessing the LS residuals and iteratively deweighting a measurement whenever its normalized residual exceeds a certain threshold. The test statistic of the modified Danish method differs from that of the original Danish method proposed by Jørgensen et al. (1985). In this modified Danish method, the variance of observation i in iteration k+1 of an epoch can be constructed as follows

where \(\sigma_{i,0}^2\) denotes the a priori variance of observation i. It can be obtained from the C/N0 dependency presented in Eq. 11 for either pseudorange or pseudorange rate observations, depending on whether position or velocity estimation is concerned. Consider the ith normalized residual in the kth iteration, \(\frac{{|{\hat{\mathbf{r}}}_{i(k)} |}} {{\sigma _{i,0} }}\). The threshold for this statistic is the normal-distribution quantile \({\mathbf{n}}_{1 - \frac{{\alpha _0 }}{2}} \), as in the statistical local test. If the normalized residual exceeds the threshold, the variance of the ith observation in the (k+1)th iteration of the estimation procedure of an epoch is increased according to an exponential function (Wieser 2001), as shown in Eq. 18. If the normalized residual of the ith observation is acceptable, the a priori variance of that observation is retained in the estimation procedure. The covariance matrix in the (k+1)th iteration of the LS estimation, for both the pseudorange and the pseudorange rate measurements, is constructed as

\[ \mathbf{C}_{(k+1)} = \operatorname{diag}\left(\sigma_{1,k+1}^2,\; \sigma_{2,k+1}^2,\; \ldots,\; \sigma_{n,k+1}^2\right) \]

The iterations of the modified Danish estimation method start with a traditional LS estimation, with either pseudoranges or pseudorange rate observations, that uses the inverse of the covariance matrix of the observations as the weight matrix (Wieser 2001). Then, in each iteration k, three steps follow: the normalized residuals of all the observations are computed from the residuals of the current iteration, the new variances are computed using Eq. 18, and another LS estimation iteration is conducted. When the variance of an observation grows exponentially, its weight in the estimation decreases rapidly, and the observation can be regarded as excluded. The iterations for an epoch are stopped when the variances no longer change significantly and the norm of the estimated unknowns is small enough for the solution to be accepted.
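The iteration can be sketched for the same kind of toy model, one constant observed n times. The sketch assumes the deweighting \(\sigma_{i,k+1}^2 = \sigma_{i,0}^2 \exp\left(|\hat r_{i(k)}|/\sigma_{i,0}\right)\) when the normalized residual exceeds \(\mathbf{n}_{1-\alpha_0/2}\), and \(\sigma_{i,k+1}^2 = \sigma_{i,0}^2\) otherwise. This is one plausible reading of the verbal description above, not necessarily the exact function of Eq. 18. Convergence is declared when both the estimate and the variances stop changing. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def modified_danish(obs, sigma0, alpha0, max_iter=50, tol=1e-9):
    """Iteratively reweighted LS for the toy model 'one constant observed n times'.

    Assumed update: var_i = sigma_i0^2 * exp(|r_i| / sigma_i0) if |r_i| / sigma_i0
    exceeds n_{1 - alpha0/2}; otherwise the a priori variance sigma_i0^2 is used.
    """
    threshold = NormalDist().inv_cdf(1.0 - alpha0 / 2.0)
    var0 = [s * s for s in sigma0]
    var = list(var0)                 # first iteration: ordinary weighted LS
    x_prev = None
    for _ in range(max_iter):
        weights = [1.0 / v for v in var]
        x = sum(wt * o for wt, o in zip(weights, obs)) / sum(weights)
        new_var = []
        for o, v0, s0 in zip(obs, var0, sigma0):
            t = abs(o - x) / s0      # residual normalized by the a priori sigma
            # min(t, 700) only guards math.exp against OverflowError
            new_var.append(v0 * math.exp(min(t, 700.0)) if t > threshold else v0)
        converged = (x_prev is not None and abs(x - x_prev) < tol
                     and all(abs(a - b) <= 1e-9 * b for a, b in zip(new_var, var)))
        x_prev, var = x, new_var
        if converged:
            break
    return x_prev, var


x, var = modified_danish([10.0, 10.2, 9.9, 10.1, 9.8, 25.0, 10.05], [0.2] * 7, alpha0=0.001)
print(round(x, 4))
```

In the first iteration, the gross outlier drags the mean far enough that every observation is deweighted. From the second iteration on, only the outlier keeps an inflated variance, and the estimate settles at the mean of the remaining observations.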

The Danish estimation technique presented here offers a convenient way to enhance reliability within either the position or the velocity estimation through iterative deweighting. It is computationally more convenient than FDE based on statistical reliability testing.

Position and velocity reliability monitoring

In order to assess the monitoring and enhancement capabilities of the Backward-FDE scheme and the Danish method, their performance needs to be compared in both the position and velocity domains. In our comparisons, reliability monitoring is performed on the range residuals to exclude erroneous pseudorange measurements from the position estimation, and on the range-rate residuals to exclude erroneous Doppler observations from the velocity estimation.

In harsh signal environments, availability is fairly low even with HSGPS, so the limited redundancy restricts the use of FDE and Danish estimation for reliability enhancement. Aiding with self-contained sensors or with the future Galileo navigation system increases the redundancy significantly and thus improves the reliability monitoring capabilities. However, in very poor conditions, where severely erroneous measurements outnumber accurate ones, reliability monitoring is simply unable to detect and exclude the errors properly. Robust estimators generally have a breakdown point of at most 50% (Rousseeuw and Leroy 1987), and this limit needs to be taken into account. Proper observation weighting is a difficult task, for example with C/N0 values as in this paper, but it is crucial for the reliability assessment procedures to detect and exclude erroneous measurements properly. Otherwise, the reliability schemes end up detecting only an inconsistency between the observations and the model, and the error detection and exclusion for accuracy enhancement suffers. Occasionally, the reliability enhancement methods fail to detect and exclude measurement errors because the underlying assumptions do not hold. A β value, i.e., a probability of overlooking outliers, is also always set, and it accounts for some of the remaining errors. In addition, the geometry of the system plays an important role in the detection and exclusion capability. Excluding any observation generally weakens the geometry, so errors in the remaining observations can have more influence on the solution.

Static and kinematic indoor and urban HSGPS reliability tests

Static and kinematic indoor and urban HSGPS data were collected and assessed to test the performance of the FDE and reliability enhancement procedures discussed. The tests were performed with a high-sensitivity receiver, the SiRF XTrac-LP. The data were processed with a modified version of the code and Doppler processing software package C3NAVG2, developed at the University of Calgary, with height and vertical velocity constraints applied to obtain the best possible redundancy for reliability monitoring. The certainty levels for the Backward-FDE and the Danish method were chosen as α0=0.1% and β0=β=10%. A position dilution of precision (PDOP) cutoff of 10 was applied. To account for geometry weakening in the Backward-FDE scheme, the sequential local test allowed the exclusion of an erroneous measurement only if the resulting HDOP value was smaller than 5. The approaches compared are raw epoch-by-epoch LS estimation, C/N0-weighted LS, the Danish estimation method, and weighted LS estimation with the Backward-FDE applied. The results shown for the two reliability enhancement schemes, the Danish method and the Backward-FDE, are those that pass the reliability check, in both the position and velocity domains. The reliability check tests whether the model used for parameter estimation is correct. It uses the global test to check the consistency among the observations and between the observations and a priori knowledge, and it also verifies that sufficient redundancy is available.

Static indoor test

First, a test was performed over a period of ca. 12 h inside the residential garage shown in Fig. 6 (Lachapelle et al. 2003). During the test, the wooden garage door was closed. Differential corrections were used in processing the data to eliminate orbital and atmospheric errors. Figure 7a presents the wide range of C/N0 values obtained in the indoor test for different elevation angles, and Fig. 7b shows the number of available satellites and the HDOP values. The overall availability was fairly good, and the signals in all elevation groups included both low-power and fair-power signals. Figure 8 illustrates the pseudorange and pseudorange rate errors during the static indoor test. The error values for each satellite were obtained by post-processing in single-point mode, with the reference position and zero velocity known. The figure also gives the root mean square (RMS) values of the pseudorange and pseudorange rate errors for all the available satellites. On occasion, the pseudorange error exceeded 200 m due to cross-correlation effects indoors. As an example, Fig. 9a, b present the pseudorange and pseudorange rate errors, respectively, versus the signal-to-noise ratios of the indoor test. They confirm that low-power signals generally have larger errors.

Figure 10a presents the raw LS and the C/N0-based weighted LS position solutions of the indoor test in a local level frame around the true position, and Fig. 10b presents the respective horizontal speeds. Since the true speed in the static test is zero, Fig. 10b can be interpreted as showing horizontal speed estimation errors. The figures demonstrate the importance of proper observation weighting: position and velocity accuracy improves significantly when observation weighting is applied. The solution availability over time was 99.9% in the 12-h indoor test.

Figure 11a presents the reliable horizontal position solutions and Fig. 11b the reliable horizontal speed solutions when the Danish and the Backward-FDE methods are applied. Both reliability enhancement methods significantly improve the position and velocity accuracies. However, some significant errors remain with both methods, in epochs where the detection and exclusion of a failure was unsuccessful. These errors nevertheless remain within the assigned level of guaranteed performance. Solution availability decreases slightly when the reliability checks are applied to the Danish method and the Backward-FDE.

Kinematic downtown test

A 10-min vehicular test was performed in a dense downtown area in Calgary, Canada. Figure 12 shows the trajectory of the vehicular test on a map, together with pictures of the test conditions and the structures that blocked the signals. Differential corrections were applied to the observations. Figure 13a presents the C/N0 values of the observations versus elevation angle, and Fig. 13b presents the HDOP values and the number of available satellites. For example, the RMS of the C/N0 values for PRN 9 in the vehicular test is 28.1 dB-Hz. In general, most of the tracked signals are low in power, and the lower the elevation, the lower the power.

Figure 14a presents the raw LS and the C/N0-based weighted LS position solutions of the downtown vehicular test in a local level frame displayed on a map. No reliability monitoring has been applied. Large outliers can be observed with both the raw and the weighted LS approaches, although weighting the observations reduces the errors. Figure 14b presents the respective horizontal speeds from the raw LS and the C/N0-based weighted LS processing. They are compared with a reference horizontal speed from an integrated inertial measurement unit/global positioning system (IMU/GPS), the Black Diamond System by NovAtel, Inc. Weighting the pseudorange rate observations based on C/N0 reduces some of the large outliers in the horizontal speed solution. Overall, the figures again show that proper observation weighting is important for position and velocity accuracy, but they also show that outlier detection and exclusion is needed for further improvement. The solution availability over time was 99.8% in the vehicular test.

Figure 15a presents the reliable horizontal position solutions and Fig. 15b the reliable horizontal speed solutions of the vehicular test when reliability checking with the Danish and the Backward-FDE methods is applied. Figure 15b again includes the reference horizontal speed from the integrated IMU/GPS system to indicate the actual speed of the vehicle. Both reliability enhancement methods significantly improve position and velocity accuracies, although some errors remain with both the Danish method and the Backward-FDE. These errors are, however, within the predetermined level of performance, which depends on the geometry. Solution availability decreases slightly when the reliability checks are applied in the Danish method and the Backward-FDE. Overall, surprisingly good results were obtained in these severely degraded signal environments with effective reliability monitoring and high-sensitivity receiver processing alone.

Conclusions

This paper discussed reliability monitoring and enhancement procedures suitable for HSGPS FDE in poor signal environments, and presented two case studies with static and kinematic indoor and urban HSGPS data. Because redundancy is a limiting factor, FDE methods and reweighted robust estimation schemes can achieve only limited accuracy improvement in HSGPS navigation. Nevertheless, the reliability-checking procedures presented, the Danish estimation and the Backward-FDE methods, prove to be essential indoors and in harsh downtown environments for enhancing both position and velocity accuracy and reliability. The Danish method is computationally more convenient, since the deweighting of observations is performed iteratively during the solution estimation. The paper also discussed the importance of observation weighting in degraded signal environments.

As the lack of sufficient redundancy is a limiting factor for reliability monitoring, self-contained sensors should be included in degraded signal-environment navigation to increase the redundancy and thereby further improve accuracy and reliability. The future Galileo system will also increase the redundancy and thus improve the reliability of navigation, even in urban areas. In this paper, epoch-by-epoch LS estimation was used for position and velocity computation in order to analyze the sensitivity of the implemented reliability enhancement methods. Real applications, however, require suitable filtering approaches, which remain a task for continuing research.

References

Baarda W (1968) A testing procedure for use in geodetic networks. Netherlands Geodetic Commission, Publication on Geodesy, New Series 2, 5, Delft, Netherlands, pp 1–97

Brown RG (1992) A baseline RAIM scheme and a note on the equivalence of three RAIM methods. In: Proceedings of ION NTM 1992, The Institute of Navigation, pp 127–138

Caspary WF (1988) Concepts of network and deformation analysis. UNSW, Australia, School of Surveying, Monograph 11:1–183

Hartinger H, Brunner FK (1999) Variances of GPS phase observations: the SIGMA-ɛ model. GPS Solutions 2(4):35–43

Hawkins DM (1980) Identification of outliers. Chapman and Hall, London, pp 1–188

Jørgensen PC, Kubik K, Frederiksen P, Weng W (1985) Ah, robust estimation! Aust J Geod Photogram Surv 42:19–32

Kaplan ED (ed) (1996) Understanding GPS: principles and applications. Artech House Inc., pp 1–554

Kuang S (1996) Geodetic network analysis and optimal design. Ann Arbor Press, Chelsea, pp 1–368

Kuusniemi H, Lachapelle G (2004) GNSS signal reliability testing in urban and indoor environments. In: Proceedings of ION NTM 2004. The Institute of Navigation, pp 210–224

Lachapelle G, Kuusniemi H, Dao DTH, MacGougan G, Cannon ME (2003) HSGPS signal analysis and performance under various indoor conditions. In: Proceedings of ION GPS 2003. The Institute of Navigation, pp 1171–1184

Leick A (2004) GPS Satellite Surveying, 3rd edn. Wiley, New York, pp 1–435

Lu G (1991) Quality control for differential kinematic GPS positioning. Master’s Thesis, University of Calgary, UCGE Report 20042, pp 1–103

MacGougan G, Lachapelle G, Klukas R, Siu K, Garin L, Shewfelt J, Cox G (2002) Performance analysis of a stand-alone high sensitivity receiver. GPS Solutions 6(3):179–195

Ober PB (2003) Integrity prediction and monitoring of navigation systems. PhD Thesis, Delft University of Technology, Integricom Publishers, The Netherlands, pp 1–146

Parkinson BW, Spilker JJ (eds) (1996) Global positioning system: theory and applications, vol 1 and 2. AIAA Inc., pp 1–793 and pp 1–643

Petovello MG (2003) Real-time integration of a Tactical-Grade IMU and GPS for high-accuracy positioning and navigation. PhD Thesis, University of Calgary, UCGE Report 20173, pp 1–242

Rousseeuw PJ, Leroy AM (1987) Robust regression and outlier detection. Wiley, New York, pp 1–329

Ryan S (2002) Augmentation of DGPS for marine navigation. PhD Thesis, University of Calgary, UCGE Report 20164, pp 1–248

Teunissen PJG (1998) Quality control and GPS. In: Teunissen PJG, Kleusberg A (eds) GPS for Geodesy, 2nd edn. Springer, Berlin Heidelberg, New York, pp 271–318

Van Graas F, Farrell JL (1993) Baseline fault detection and exclusion algorithm. In: Proceedings of ION AM 1993. The Institute of Navigation, pp 413–420

Wieser A (2001) Robust and fuzzy techniques for parameter estimation and quality assessment in GPS. PhD Thesis, TU Graz, pp 1–253

Wieser A, Brunner FK (2000) An extended weight model for GPS phase observations. Earth Planets Space 52:777–782

Acknowledgements

The authors would like to thank Dr. A. Wieser and Ms. Diep Dao from the University of Calgary, Canada for their effort. The Nokia Foundation, the National Technology Agency of Finland, and the Graduate School of Electronics, Telecommunications, and Automation are acknowledged for financial support and SiRF Technologies Inc. for providing the test equipment.


About this article

Cite this article

Kuusniemi, H., Lachapelle, G. & Takala, J.H. Position and velocity reliability testing in degraded GPS signal environments. GPS Solutions 8, 226–237 (2004). https://doi.org/10.1007/s10291-004-0113-7


DOI: https://doi.org/10.1007/s10291-004-0113-7