Abstract

Recent technological innovations have created new opportunities for the increased adoption of virtual reality (VR) and augmented reality (AR) applications in medicine. While medical applications of VR have historically seen greater adoption in patient-as-user applications, the new era of VR/AR technology has created the conditions for wider adoption of clinician-as-user applications. Historically, clinical adoption has been limited in part by whether the technology could achieve a sufficient quality of experience. This article reviews the definitions of virtual and augmented reality and briefly covers the history of their development. Currently available options for consumer-level virtual and augmented reality systems are presented, along with a discussion of technical considerations for their adoption in the clinical environment. Finally, a brief review of the literature of medical VR/AR applications is presented prior to introducing a comprehensive conceptual framework for the viewing and manipulation of medical images in virtual and augmented reality. Using this framework, we outline considerations for placing these methods directly into a radiology-based workflow and show how it can be applied to a variety of clinical scenarios.

Introduction

Virtual and augmented reality have sought to address 3D visualization needs in medicine since the early 1990s [1,2,3]. The early, popular applications focused on visualizing complex anatomy for the planning of and training for surgical procedures [4], including a surgical planning process in virtual reality (VR) using hardware developed by the National Aeronautics and Space Administration (NASA) in the USA [5]. Today, the domain of virtual reality in medicine encompasses medical education, surgical planning, communication facilitation, and a wide range of therapeutic interventions. As of 2012, Pensieri and Pennacchini counted nearly 12,000 publications using common search terms for VR applications in healthcare [6]. Total spending on virtual reality and augmented reality (AR) products is expected to reach USD 215 billion in 2021 [7], with the global healthcare augmented and virtual reality market expected to reach USD 5.1 billion by 2025 [8].

A review and analysis of VR/AR is timely, as a succession of technological breakthroughs has drastically increased the quality and accessibility of compelling, immersive VR and AR in an era of personalized medicine. In parallel, the medical community has quickly adopted a related transformative technology, 3D printing [9]. This review will outline the landscape of VR and AR technologies made accessible within the last 3 years. The effective use of virtual reality in radiological and other medical applications will be discussed, and parallels and differences with medical 3D printing will be illustrated.

Brief History of VR and AR

The visualization of digital information in three dimensions began with early prototypes of VR technologies dating back to the 1950s. The first devices are credited to Morton Heilig, who introduced the idea of multi-sensory cinematic and simulation experiences [10]. In 1960, Heilig was granted a patent for his concept of a head-worn analog display that encompassed the wearer’s periphery and included optical controls, stereophonic sound, and smells [11]. A refined head-mounted display (HMD) design, the Sword of Damocles, was released in 1966 by Ivan Sutherland. It combined two cathode ray tubes to produce a stereoscopic display with a 40° field of view. Too heavy to wear unsupported, this HMD was suspended from the ceiling by cantilevers, which also served to track the wearer’s viewing direction. By 1973, computer-generated graphics had been introduced and had begun to supplant panoramic images and camera feeds. The first 3D “wire-frame” graphics rendered scenes (frames) of 200 to 400 polygons at a rate of 20 frames per second, using processors that were the precursors of the modern graphics processing units (GPUs) on which current VR and AR applications depend.

Developed alongside the first head-mounted displays were peripheral devices that provided some of the earliest examples of haptic feedback. Work in this area was largely driven by NASA and other government agencies interested in developing flight simulators and training systems for space exploration. Eventually, commercial versions of VR systems with integrated haptic feedback peripherals were released in the late 1980s and early 1990s by major video game developers and the entertainment industry, but they were plagued by performance issues and high costs. As such, VR/AR technologies were relegated to large government, academic, and corporate institutions, which nonetheless recognized the potential for medical applications. Over the last two decades, various medical VR/AR technologies have been evaluated for their ability to support visualization, simulation, and guidance of medical interventions, and for their ability to aid in diagnosis, planning, or therapy [12, 13].

Definitions of Virtual Reality (VR) and Augmented Reality (AR)

The terms “virtual reality” and “augmented reality”, while not new, are frequently confused, partly because the media occasionally misused them as new consumer devices grew in popularity. While distinct, both technologies share the hallmarks of real-time simulation, multi-modal sensation of or interaction with virtual elements, and positional tracking [10]. VR and AR differ in whether they incorporate real-world elements. Virtual reality refers to immersion within a completely virtual environment, which is most easily achieved by occupying the participant’s entire field of view, including the periphery, with a head-mounted display (HMD). CAVE Automatic Virtual Environments (CAVEs) can also be used: the virtual environment is created by a series of projectors displaying images on the walls of a room (the CAVE), synchronized with shutter glasses worn by the user to demultiplex the projected stereoscopic views.

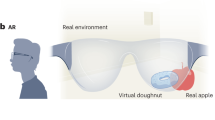

In AR, virtual elements are overlapped onto the surrounding real-world environment, often using an HMD that does not occlude the wearer’s vision. Modern AR systems range from simple, handheld displays showing models superimposed on real-world video images [14] to head-mounted devices with see-through glasses that allow wearers to visualize virtual elements superimposed on the surrounding real-world environment with additive blending [15, 16]. The latter is known as see-through AR. Other implementations consist of video pass-through headsets with front-facing cameras that supply a stereoscopic video feed upon which virtual models are superimposed [14]. This is referred to as pass-through AR. The interaction of real-world images and virtual elements in virtual reality, see-through AR, and pass-through AR implementations is illustrated in Fig. 1.

Given their common elements, the relationship between VR and AR is better conceptualized using the reality-virtuality continuum [17, 18]. Figure 2 illustrates this continuum between two extremes, with the real world (reality) at one end and a completely virtual environment at the other. The space between them, mixed reality, includes combinations of both real and virtual elements. Augmented reality refers to a largely real environment with a few virtual elements, while augmented virtuality (AV), a concept less relevant here, refers to a predominantly virtual environment with some elements of the real world.

Components of VR and AR

The two minimum components required to create a virtual or augmented reality experience are (1) positional tracking of the user’s eyes or head and (2) visualization of virtual elements from the user’s perspective. Together, these two components provide a 3D perspective of virtual elements that maintain an independent position in space, which is a prerequisite for a 3D environment with which the user can interact.
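To make the two components concrete, here is a minimal sketch, not taken from any particular VR system, of how a tracked eye position and orientation determine where a world-fixed virtual point appears. It assumes a pinhole projection, head rotation restricted to yaw (about the vertical y axis), and the OpenGL convention that the eye looks down its own -z axis; the function names `world_to_eye` and `project` are hypothetical.

```python
import math

def world_to_eye(point, eye_pos, yaw_rad):
    """Express a world-space point in the eye's coordinate frame.

    yaw_rad is the tracked head rotation about the world's vertical (y) axis;
    the eye looks down its own -z axis (OpenGL convention).
    """
    # Translate so that the tracked eye position becomes the origin.
    dx = point[0] - eye_pos[0]
    dy = point[1] - eye_pos[1]
    dz = point[2] - eye_pos[2]
    # Undo the head rotation: rotate by -yaw about the y axis.
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return (c * dx - s * dz, dy, s * dx + c * dz)

def project(point, eye_pos, yaw_rad, focal=1.0):
    """Pinhole-project a world point for one eye; None if it is behind the eye."""
    x, y, z = world_to_eye(point, eye_pos, yaw_rad)
    if z >= 0.0:
        return None
    return (focal * x / -z, focal * y / -z)

# A virtual point fixed in space, 1 m in front of the starting head position.
marker = (0.1, 0.0, -1.0)
print(project(marker, (0.0, 0.0, 0.0), 0.0))  # appears right of center
print(project(marker, (0.1, 0.0, 0.0), 0.0))  # after stepping 10 cm right: centered
```

Because the virtual point keeps its world position while the tracked eye moves, its projection shifts, producing motion parallax. Rendering each eye from its own tracked position (offset by the interpupillary distance) gives the stereoscopic pair.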

Positional Tracking

Positional tracking in VR and AR is primarily used to determine, in real time, the image and perspective to display to each of the user’s eyes. Secondarily, tracking is also used to determine the position of the user’s hands or of handheld peripherals (e.g., controllers) to allow 3D interaction with virtual elements. Tracking generally comes in two forms: full positional (6 degrees of freedom) or rotational-only (3 degrees of freedom). Rotational-only tracking can be accomplished with inertial measurement units (IMUs), which combine gyroscopes, accelerometers, and sometimes magnetometers [10] and are found in most smartphones. Full positional tracking additionally requires computer vision [19], laser-based tracking [20], magnetic tracking [10], or a combination of these technologies.
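Rotational-only tracking can be illustrated by integrating gyroscope readings into an orientation quaternion. The sketch below is a simplified illustration under stated assumptions: it uses only the gyroscope, so its estimate drifts over time, whereas real IMU pipelines also fuse accelerometer (gravity) and magnetometer data to correct tilt and heading. The names `quat_mul` and `integrate_gyro` and the 1 kHz sample rate are hypothetical. Nothing here estimates position, which is why IMU-only tracking is limited to 3 degrees of freedom.

```python
import math

def quat_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def integrate_gyro(q, omega, dt):
    """Advance orientation q by body-frame angular velocity omega (rad/s) over dt (s)."""
    wx, wy, wz = omega
    rate = math.sqrt(wx * wx + wy * wy + wz * wz)
    angle = rate * dt
    if angle < 1e-12:
        return q  # no measurable rotation during this sample
    # Incremental rotation of `angle` radians about the unit axis omega / rate.
    s = math.sin(angle / 2.0) / rate
    dq = (math.cos(angle / 2.0), wx * s, wy * s, wz * s)
    w, x, y, z = quat_mul(q, dq)
    n = math.sqrt(w * w + x * x + y * y + z * z)  # renormalize to limit numeric drift
    return (w / n, x / n, y / n, z / n)

# Simulate 1 s of samples at an assumed 1 kHz while the head yaws at 90 deg/s.
q = (1.0, 0.0, 0.0, 0.0)
for _ in range(1000):
    q = integrate_gyro(q, (0.0, math.radians(90.0), 0.0), 0.001)
yaw_deg = math.degrees(2.0 * math.acos(q[0]))
print(round(yaw_deg, 3))  # about 90 degrees of accumulated yaw
```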

Visualization

In current VR head-mounted devices, visualization is provided by small, high-resolution screens. These screens have exceptional capabilities today because the underlying technology was mass-marketed and advanced through the development of the smartphone. Recent advances in low-persistence display technology, i.e., displays that illuminate each image for only a small fraction of the total frame time, have greatly reduced visual blurring during head motion and have been a key factor in the improved sense of immersion of modern systems compared with their predecessors [21]. Specially crafted lenses placed between the eyes and the screens bend the incoming light, both to make the displayed images appear more distant and to cover as much of the user’s field of view as possible.
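A back-of-the-envelope estimate shows why low persistence matters. While the eyes stay fixed on a world-locked object during a head turn, an image that remains lit for the persistence time t slides across the retina by roughly ω·t degrees, where ω is the head's angular speed; multiplying by the display's pixels per degree gives the smear in pixels. This first-order model ignores other blur sources, and the 100°/s head speed, 90 Hz refresh, 2 ms persistence, and 10 pixels/degree below are illustrative assumptions, not specifications of any headset.

```python
def smear_pixels(head_speed_deg_s, persistence_s, pixels_per_degree):
    """First-order retinal smear, in display pixels, for a world-locked image."""
    return head_speed_deg_s * persistence_s * pixels_per_degree

# Illustrative, assumed numbers (not taken from any particular headset).
HEAD_SPEED = 100.0     # deg/s, a moderate head turn
PIXELS_PER_DEG = 10.0  # angular pixel density of the display
FULL = 1.0 / 90.0      # full persistence: image lit for the whole 90 Hz frame
LOW = 0.002            # low persistence: image lit for 2 ms

print(f"full persistence: {smear_pixels(HEAD_SPEED, FULL, PIXELS_PER_DEG):.1f} px")
print(f"low persistence:  {smear_pixels(HEAD_SPEED, LOW, PIXELS_PER_DEG):.1f} px")
```

Under these assumptions, shortening the illumination time from a full frame to 2 ms reduces the smear from about 11 pixels to about 2.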

Environment

Today, virtual reality refers to a number of diverse environments, ranging from pre-recorded 360° video to fully immersive, interactive virtual environments. Two main factors characterize the experience: the level of interaction possible with the visualized environment and the perceived immersion in that environment. Both increase with technical sophistication, so the different approaches are readily categorized by the sophistication of the visualization and tracking technology they employ, as illustrated in Fig. 3.

Modern Virtual and Augmented Reality Technology

Virtual Reality Hardware Technologies

Virtual and augmented reality have recently re-emerged as booming and rapidly changing technologies, catalyzed by advances in smartphone display technology, improvements in graphics processing units (GPUs), and progress in tracking technology, including inertial measurement units [22] and optical tracking systems. The first two widely available, modern PC-based consumer VR platforms were the HTC Vive (HTC Corporation, New Taipei City, Taiwan) and the Oculus Rift (Oculus VR, Menlo Park, CA).

The Oculus Rift, released in March 2016, uses a proprietary tracking system called Constellation which achieves six degrees of freedom positional and rotational tracking using IMUs, USB connected optical cameras, and patterned infrared (IR) LED markers on the tracked devices. Following the initial release of the headset, handheld controllers, called Oculus Touch, were released in December of 2016 to provide a complete, interactive VR system.

The HTC Vive was developed in collaboration between HTC Corporation and Valve Corporation (Bellevue, WA) and was released together with tracked controllers. It uses a full room-scale, 360° tracking system called SteamVR® Tracking [20]. This system combines IMUs with two “base stations” that repeatedly sweep the room with IR lasers, which are detected by photodiodes on the tracked objects, and it can track a rectangular volume whose diagonal, the separation between the two base stations, is 5 m. Both the HTC Vive and the Oculus Rift have two OLED displays with a resolution of 1080 × 1200 pixels per eye. The next-generation Vive system, the HTC Vive Pro, was recently released and features higher-resolution displays (1440 × 1600 pixels per display) and other incremental upgrades to the form factor. Additionally, Valve has released an upgraded version of its SteamVR® Tracking system that covers a larger tracking volume using additional base stations.
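The principle behind sweep-based laser tracking can be sketched as a timing-to-angle conversion followed by triangulation. A photodiode records the delay between a base station's synchronization flash and the moment the rotating laser plane hits it; with a rotor of known period, that delay corresponds to an angle. The sketch below is a simplified 2D (top-down) illustration, not Valve's algorithm: the 60 Hz rotor rate is an assumption, and we hypothetically assume each sweep starts along the room's +x axis so that the sweep angle is directly a room-frame bearing. Real systems use horizontal and vertical sweeps, IMU fusion, and many photodiodes per device.

```python
import math

ROTOR_PERIOD_S = 1.0 / 60.0  # assumed rotor period of a base station

def sweep_angle(delay_s, period_s=ROTOR_PERIOD_S):
    """Angle (rad) swept by the laser plane between the sync flash and the hit."""
    return 2.0 * math.pi * delay_s / period_s

def intersect_bearings(p1, theta1, p2, theta2):
    """Intersect two 2D rays p_i + t_i * (cos theta_i, sin theta_i)."""
    d1 = (math.cos(theta1), math.sin(theta1))
    d2 = (math.cos(theta2), math.sin(theta2))
    # Solve t1 * d1 - t2 * d2 = p2 - p1 with Cramer's rule.
    det = d2[0] * d1[1] - d1[0] * d2[1]
    if abs(det) < 1e-12:
        raise ValueError("bearings are parallel; position is undetermined")
    rx, ry = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (d2[0] * ry - rx * d2[1]) / det
    return (p1[0] + t1 * d1[0], p1[1] + t1 * d1[1])

# Two base stations 4 m apart; a photodiode at (2, 2) m is hit after these delays.
delay_a = (math.pi / 4) / (2 * math.pi) * ROTOR_PERIOD_S      # 45 degree bearing
delay_b = (3 * math.pi / 4) / (2 * math.pi) * ROTOR_PERIOD_S  # 135 degree bearing
x, y = intersect_bearings((0.0, 0.0), sweep_angle(delay_a),
                          (4.0, 0.0), sweep_angle(delay_b))
print(f"({x:.3f}, {y:.3f})")  # recovers the photodiode position
```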

More recently, Windows Mixed Reality has emerged as a third hardware platform choice. This suite of headsets, produced by various partner companies, shares the same inside-out, computer vision tracking and runs on the Windows Mixed Reality software platform. In inside-out tracking, the headset's position is determined by analyzing changes in images of the surrounding environment captured by cameras mounted on the headset, rather than by external cameras tracking markers on the headset. Windows Mixed Reality VR systems tend to be cheaper than the HTC or Oculus alternatives, although their inside-out tracking potentially trades tracking robustness for easier hardware setup.

Mobile vs Tethered Technologies

Apart from PC-connected or so-called “tethered” VR hardware, innovations in modern VR technology have also led to new mobile form factors for VR headsets. Initially, those implementations used compatible smartphones that could be attached to head mounted devices containing lenses. Image rendering and rotational-only tracking were accomplished with the internal hardware of the smartphones. Notable examples of such mobile, smartphone-based VR headsets are the Samsung Gear VR (Samsung, Seoul, South Korea), Bridge Mixed Reality and Positional Tracking VR Headset (Occipital, San Francisco, CA), Google Cardboard (Google, Mountain View, CA) which is simply a handheld cardboard shell with lenses, and Google Daydream [23].

Next-generation mobile headsets will feature all-in-one form factors, in which the headsets are stand-alone devices that do not require a smartphone to function. Three examples are the Oculus Go, with IMU-based 3-degree-of-freedom tracking, and the HTC Vive Focus and Lenovo Mirage Solo (Lenovo, Hong Kong, China), with 6-degree-of-freedom computer vision tracking.

Mobile VR platforms are likely to see limited clinical adoption beyond certain roles in the near future, largely because their computational power is considerably lower than that of high-end PC-based solutions. Rendering most medical datasets requires displaying fine detail at high spatial resolution and is thus likely to be too challenging for current mobile processors. However, wireless transmitters that allow consumer VR headsets to communicate with computers without cables may offer a short-term solution, allowing clinical adopters of virtual or augmented reality technologies to combine the computational power of high-end PCs with the convenience of an untethered form factor.
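A quick calculation illustrates the scale of the data involved. The sketch below computes the uncompressed memory footprint of a CT volume; the 512 × 512 × 1000 matrix and 16-bit voxels are hypothetical example values, not figures from the text, and a real application also needs memory for textures, derived meshes, and rendering buffers.

```python
def volume_bytes(nx, ny, nz, bytes_per_voxel=2):
    """Uncompressed size of a scalar image volume (default: 16-bit voxels)."""
    return nx * ny * nz * bytes_per_voxel

# Hypothetical thin-slice CT: 512 x 512 in-plane matrix, 1000 slices, 16-bit.
size = volume_bytes(512, 512, 1000)
print(size, "bytes =", size / 2**20, "MiB")  # 524288000 bytes = 500.0 MiB
```

This footprint applies before any rendering work begins; each frame must then sample the volume twice, once per eye.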

Augmented Reality Technologies

The first commercially available, and by far the most popular, augmented reality system, the HoloLens, was introduced by Microsoft Corporation (Redmond, WA) in March 2016. This system, in a mobile form factor, uses inside-out computer vision tracking and reflects the output of high-definition light projectors onto the retina [24]. Inside-out computer vision tracking is the method of choice for most augmented reality systems because it senses the surrounding real environment; without it, virtual elements could not be anchored to or interact with the real world, which is the main advantage augmented reality offers.

The mobile form factor and the computational demands of inside-out computer vision tracking currently limit the rendering capability of the HoloLens, which can increase image latency and limit model detail (resolution); both can be detrimental in medical applications. The system also currently has a narrow field of view, and some performance issues have been reported [25]. As such, the current iteration of this technology is likely insufficient for general clinical demands and will require improvements. However, as the technology progresses, we expect that near-eye, optical see-through AR display systems will inevitably be adopted for a host of medical applications.

Hardware and Software Requirements

Modern tethered virtual reality systems require a high-end PC to display high-resolution wireframe and volume renderings at sufficiently high frame rates. Such computers are generally very similar to those designed for video games, with high-end CPUs and graphics cards. Depending on the specifications of the VR system, the PC may need a certain number of Universal Serial Bus (USB) 3.0 ports and at least one High-Definition Multimedia Interface (HDMI) video port.
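The rendering demand can be roughly quantified as the number of pixels that must be produced per second. The sketch below uses the per-eye resolutions given above for the HTC Vive and Vive Pro; the 90 Hz refresh rate and the 256 samples per ray for volume rendering are assumptions for illustration, and the calculation ignores additional overhead such as rendering above native resolution to compensate for lens distortion.

```python
def pixels_per_second(width, height, refresh_hz=90, eyes=2):
    """Pixels that must be rendered each second for a stereo headset."""
    return width * height * eyes * refresh_hz

vive = pixels_per_second(1080, 1200)
vive_pro = pixels_per_second(1440, 1600)
print(f"Vive:     {vive:,} px/s")
print(f"Vive Pro: {vive_pro:,} px/s ({vive_pro / vive:.2f}x)")

# Direct volume rendering casts one ray per pixel; assume 256 samples along each.
SAMPLES_PER_RAY = 256
print(f"Vive Pro volume samples: {vive_pro * SAMPLES_PER_RAY:.2e} per second")
```

Moving from the Vive to the Vive Pro panels raises the pixel load by a factor of 16/9 at the same refresh rate, and volume rendering multiplies that load again by the number of samples taken along each ray.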

Commercial VR systems also include software platforms that mediate between individual applications and the VR hardware. To date, three major software platforms exist: SteamVR (Valve Corporation, Bellevue, WA), which is compatible with all major VR systems through its OpenVR software development kit; the Oculus software platform (Oculus VR, Menlo Park, CA), which is compatible with the Oculus Rift; and the Windows Mixed Reality platform (Microsoft Corporation, Redmond, WA), which supports the suite of Windows Mixed Reality immersive headsets made by third parties. Adopters of virtual reality technology will need to ensure that their PC meets the hardware requirements and to choose a VR system and compatible software platform that suit their needs.

Future Systems

The landscape of VR and AR hardware is rapidly evolving [15]. This evolution is being accelerated by consumer market interest and by the ability of competing vendors to integrate their systems with the SteamVR® or Windows Mixed Reality software platforms. Valve Corporation also freely licenses SteamVR® Tracking to hardware developers [20, 26], allowing third parties to make new HMDs or other accessories that are compatible with its tracking system.

The technological landscape is somewhat fractured at the time of writing (early 2018), and early adopters must decide which platforms to pursue. However, compatibility of future technologies will be greatly facilitated by the development of OpenXR, a cross-platform, open standard for virtual reality and augmented reality applications and devices. This standard is being created under the direction of the Khronos Group in collaboration with a group of companies that includes Microsoft, Valve, Oculus, and HTC, among others.

Developing Applications for AR/VR Systems

Commercial medical virtual reality software is only just starting to emerge [27,28,29,30,31], with few applications and use cases currently available. Current adopters of virtual reality for medical applications may therefore need to develop their own software. Fortunately, the creation of virtual reality applications is greatly facilitated by accessible, well-documented, and user-friendly game engines such as Unity (Unity Technologies, San Francisco, CA) and Unreal Engine (Epic Games, Cary, NC). These engines provide the underlying software that enables developers to quickly create 3D objects, place them in an environment, and handle their interactions. Furthermore, freely available plugins for the various virtual reality software platforms, such as SteamVR, Oculus, or Windows Mixed Reality, make it possible to develop simple applications quickly for the corresponding virtual reality system. These engines and platform-specific plugins create an exciting opportunity for clinicians and researchers to experiment with virtual reality in radiology and medicine, a field ripe with largely unexplored creative potential. We expect both in-house applications and commercial products to extend the impact of this re-emerging technology in the near future.

VR Sickness

An important consideration for adopters of virtual reality technology is the phenomenon of virtual reality sickness, or cybersickness [32, 33]. The most common cause of nausea and discomfort during virtual reality experiences is locomotion in the virtual scene that does not correspond to real-life motion. This causes vestibular mismatch, similar to car sickness but in the opposite “direction”: the eyes receive signals of motion, while the inner ear signals that no motion is present. Vestibular mismatch quickly induces symptoms in some users, while others appear unaffected.

To avoid this phenomenon, most VR environments should be, and often are, designed so that the user’s virtual perspective changes only when the user’s head actually moves. This has design implications when converting non-VR 3D applications to virtual reality. For example, in a VR version of virtual colonography fly-throughs, where the viewpoint travels through the colon without corresponding head movement, a relatively high proportion of users become nauseated. VR systems without full 6-degree-of-freedom positional tracking, such as mobile VR headsets that track only rotation, may carry a higher risk of VR sickness regardless of application design: because they cannot faithfully reproduce head movements in the virtual scene, some disconnect between actual and viewed movement is unavoidable.

Another factor that affects the comfort of VR experiences is the refresh rate (frame rate) of on-screen images. This can be limited by hardware (display technology) or by rendering performance (e.g., a low-end PC); a frame rate of at least 60 frames per second is reported as the minimum to avoid discomfort and nausea over time. This threshold poses significant technical challenges for medical applications, given the rich, high-resolution datasets produced by modern imaging modalities: rendering these data requires significantly more computing power than the simpler objects found in, e.g., video games. Apart from vestibular mismatch and frame rate, other factors may contribute to user discomfort, such as the decoupling of focal distance (which is fixed at a constant depth in VR headsets) from ocular vergence, i.e., the simultaneous movement of both eyes in opposite directions to obtain single binocular vision.
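Two quantities from this paragraph are easy to compute. The per-frame time budget is the reciprocal of the frame rate, and the vergence-accommodation mismatch can be expressed in diopters (inverse meters) as the difference between the distance at which the eyes converge and the fixed distance at which the headset optics place the focal plane. In the sketch below, the 63 mm interpupillary distance, the 2 m focal distance, and the 0.5 m viewing distance are assumed example values, not properties of any specific headset.

```python
import math

def frame_budget_ms(fps):
    """Time available to render each frame, in milliseconds."""
    return 1000.0 / fps

def vergence_deg(ipd_m, distance_m):
    """Angle between the two lines of sight when fixating at distance_m."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))

def vergence_accommodation_conflict(vergence_dist_m, focal_dist_m):
    """Mismatch in diopters between where the eyes converge and where they focus."""
    return abs(1.0 / vergence_dist_m - 1.0 / focal_dist_m)

for fps in (60, 90):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")

IPD_M = 0.063       # assumed interpupillary distance
FOCAL_DIST_M = 2.0  # assumed optical focal distance of the headset
MODEL_DIST_M = 0.5  # a virtual model viewed at half a meter
print(f"vergence: {vergence_deg(IPD_M, MODEL_DIST_M):.1f} deg")
print(f"conflict: {vergence_accommodation_conflict(MODEL_DIST_M, FOCAL_DIST_M):.2f} D")
```

All rendering for both eyes must fit within the frame budget, and the closer a virtual model is brought to the eyes, the larger the mismatch between vergence and the fixed focal distance becomes.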

From a clinical perspective, users should be made aware of the possibility of VR sickness and steps should be taken to avoid its occurrence such as avoiding experiences with vestibular mismatch and ensuring sufficient frame rate through the use of adequate hardware and appropriately optimized software.

Medical Applications of VR and AR

The Role of VR in Medicine

Virtual and augmented reality technologies have many potential medical roles (Fig. 4), which we organize by their proximity to direct patient interaction. When the patient is not present at all, VR and AR serve as a clinician tool or an intervention planning aid. From the patient’s perspective, virtual reality can enhance therapy administered under clinician guidance. Virtual and augmented reality can also be used as tools to educate patients about their health or treatment, or to deliver treatment. Under this schema, medical uses of virtual reality are further categorized into clinician-as-user and patient-as-user applications.

Patient-as-user clinical applications have been more widely pursued to date, likely because VR enabled entirely new domains of therapy that were otherwise not possible. Because there is no reference standard for these applications, there is no “bar” to exceed, and these novel therapeutic experiences can be effective even when limited by the technology of current or past systems. In contrast, clinician-as-user applications must prove to be as good as or better than current clinical care pathways, so their adoption is linked to the technology's ability to deliver a robust and improved experience. In the authors’ opinion, the new wave of virtual reality technology is opening new possibilities in the clinician-as-user domain of medical virtual reality applications.

VR applications were reviewed as early as 1998 [34], with roughly the same classes of applications that we define now, 20 years later. There are three main clinician-as-user categories: training, planning of surgery and other interventions, and medical image interpretation. In addition, VR and AR are being tested and used in patient-as-user applications, i.e., the device is worn by the patient, who undergoes the experience to achieve a therapeutic goal. We now review each of these categories.

Training

Many sophisticated studies supporting the utility of VR in medicine come from the field of medical training. In one study, 12 interventional cardiologists were randomly divided into two equal groups: one group trained in carotid artery procedures using a VR simulator, and the other underwent traditional patient-based training. Both groups had ample skill in intravascular catheter manipulation, although none of the 12 experienced interventionalists had performed a carotid intervention before the study. The group trained with the VR simulator showed overall better performance than the traditionally trained group [35]. Other studies report similarly favorable outcomes. VR has been used successfully for resident procedural training, e.g., to simulate lumbar punctures [36], and to better understand complex imaging anatomy, for example, the ultrasound appearance of spinal anatomy [37]. VR/AR methods appear to hold great promise in interventional radiology, and similar results have been reported in other subspecialties. For example, cadaver studies of endoscopic access with and without an image registration system showed that such a system improved the efficiency of accessing difficult target organs [38].

There is an abundance of other educational applications. For example, investigators have constructed a 3D virtual model of a cell from serial block-face scanning electron microscopy (SBEM) data, opening new opportunities for learning and public engagement [39]. Not all reports of VR/AR in clinician education are positive, however. Among medical students learning skull anatomy, both AR and VR were perceived to have a role as educational tools; however, headaches, dizziness, and blurred vision were reported more commonly with them than with more traditional tablet-based educational applications [14]. Nonetheless, educational applications remain a topical issue, with many new reports appearing at the annual meetings of multiple specialties. In an abstract presented at the 11th Annual Scientific Meeting of Medical Imaging and Radiation Therapy [40], VR was incorporated into a medical school radiology teaching program, and student feedback suggested that virtual radiography simulation helped students develop confidence as well as technical and cognitive skills. In another example, the HoloLens was used to display impacted molars derived from segmented cone-beam CT datasets, leading to a better understanding of these complex 3D relationships in maxillofacial radiology [41].

Surgery

One of the most-studied applications of 3D models in surgical planning is 3D printing, in which 3D models of anatomy and pathology derived from medical images are manufactured using a 3D printer [42, 43]. Such models have been used for multiple procedures and have been evaluated by regulatory bodies [44, 45]. Like AR/VR, 3D printing requires crossing disciplines and engaging users from many specialties [46]. As with all diagnostic tools, guidelines on appropriate use must be supported by scientific studies [47]. Such studies in 3D printing are building increasing acknowledgment that physical models are appropriate for planning complex cases, e.g., congenital heart disease procedures. Because VR and AR can similarly present 3D models derived from medical images, they likely also have significant potential to enhance surgery and promote minimally invasive procedures [12]. Brief reviews of the use of historical mixed reality technologies in support of surgical procedures are provided by Linte et al. [12, 13]. One advantage of AR/VR over 3D printing is that they can additionally display real and virtual images simultaneously [48]. As with 3D printing, the use of AR and VR has focused on complex cases, where a superior appreciation of complex 3D relationships is expected to increase the interventionalist’s confidence. In addition to the methods described in this review, simpler, very low-cost solutions using two smartphones and a VR headset have been used to view slit lamp and surgical videos in 3D [49].

Early testing of the technologies described in this review includes training for laparoscopic surgery [50], neuroimaging for surgical planning [51], and robot-assisted surgery [52]. In a preclinical orthopedic study using video-tracked AR markers, surgeons used an augmented radiolucent drill whose tip position was detected and tracked in real time under video guidance [53].

Image Interpretation

This review includes examples from our lab where VR/AR approaches have been implemented into clinical practice. To our knowledge, our group is the first to place these technologies directly into a radiology-based workflow. However, many other groups have created VR experiences that import medical images and are used for radiology reporting [54]. One example is a depth three-dimensional (D3D) augmented reality system that provides depth perception and focal point convergence that could potentially be used to identify and assess microcalcifications in the breast [55].

In pathology, the HoloLens AR tool has been considered ideally suited for digital pathology and has been tested for autopsy as well as gross and microscopic examination [56]. Similarly, volumetric electron microscopy data have been evaluated in a CAVE environment, including for advanced visualization, segmentation, and advanced analyses of spatial relationships between very small structures within the volume [57]. Finally, preliminary work in ophthalmology has displayed images of the retina, with early reports suggesting high concordance between an experimental VR method and traditional viewing platforms [58].

Patient-Based VR/AR Therapies

A significant portion of the literature on medical applications of VR/AR focuses on the patient experience and on incorporating VR/AR to enhance more traditional care pathways. A systematic review (2005–2015) of virtual reality use among patients in acute inpatient medical settings [59] included 11 studies across 3 general applications: first, pain management or distraction from pain, particularly in children [60,61,62,63,64,65,66]; second, treatment of eating disorders [67, 68]; and third, cognitive and motor rehabilitation [69]. The authors of that review found large heterogeneity among the studies. This partly reflects the novelty of the technologies and partly the many possible ways in which they can be integrated clinically. The diseases and targeted outcomes within these three categories are diverse, as are the hardware/software combinations used to generate the VR patient experience. Thus, while early data show promise for such applications to enhance the overall patient experience, there remains a significant need to focus more detailed trials on a smaller number of strategies.

Not all trials of VR/AR as therapeutic tools have generated positive results. One randomized, multicenter, single-blinded controlled trial in patients after ischemic stroke compared 71 subjects who used a Wii gaming console with a control cohort of 70 patients who underwent more traditional recreational therapy; the Wii group showed no therapeutic benefit in motor function recovery [70]. Whether the method used in that study qualifies as a VR approach has, however, been questioned [71], highlighting the need for additional studies to determine which VR and AR experiences, if any, should be used. In general, the appropriateness of therapeutic applications of VR and AR remains an important open question. Other focused studies have shown great promise for sophisticated simulations as strategies to enhance rehabilitation. In leg amputees, for example, VR interventions may be used to treat persistent phantom limb pain, which is associated with the sensation that the missing extremity is still present [72].

Because VR simulates reality, it can increase access to psychological therapies in which patients repeatedly experience problematic situations and are taught, via evidence-based psychological treatments, how to overcome the difficulties they encounter [73]. Virtual reality exposure therapy has been studied in 156 Iraq and/or Afghanistan veterans with post-traumatic stress disorder [74], and VR methods have also been studied as a treatment option for spider phobia [75]. VR has also been explored as a method to deliver Dialectical Behavior Therapy to patients with Borderline Personality Disorder [76]. A comprehensive review of the experience of one large center, the Virtual Reality Medical Center, in using VR therapy to treat anxiety disorders is provided in [77].

Virtual Reality Visualization of Medical Images and Models

While the therapeutic applications of VR and AR are increasing, in the remainder of this review we focus exclusively on their use for visualizing medical images and segmented models in clinician-as-user applications. The visualization of medical image data in virtual reality can take two main paths: (1) the visualization of derived models (i.e., models segmented from the images), such as those that are 3D-printed for, e.g., surgical planning, and (2) the visualization of unsegmented (raw) image data. Recent improvements in virtual reality technology create the opportunity to combine the intuitive 3D visualization of derived medical models with both traditional and more inventive ways of concurrently viewing the source 3D medical image datasets.

The different workflows leading to possible image visualizations in AR/VR are shown in Fig. 5. The most straightforward application of VR is shown in the leftmost path: image data used for clinical interpretation are used to create segmented models that are subsequently viewed in virtual reality (Fig. 6a). Alternatively, the source image data can be viewed in one of three ways that help the clinician comprehend the three-dimensional nature of the information. In the first, one simply creates a virtual screen or monitor that replicates the way source, thin-section reconstructed image slices (e.g., in the axial plane for an axial acquisition) are currently viewed on a 2D monitor (Fig. 6b). The second is a 2D multiplanar reformation that samples a “slice” of the 3D volume in space; in a VR system, however, the orientation of the plane can also be displayed so that the user can better comprehend its spatial relationship to the 3D image volume (Fig. 6c). The location and orientation of the planar reformation are selected interactively and can be changed in real time by the user. On conventional systems, this is typically done within “3D visualization” [78] software, using either a stand-alone thick client or a thin client that also serves as a radiology workstation. We define this mode of viewing source image data in VR as “2.5D visualization,” described in more detail below. Finally, volume rendering techniques that display the source image data as a 3D rendering (a form of 3D visualization), again similar to a standard radiology 3D workstation, are naturally visualized and manipulated in a VR environment (Fig. 6d). There are numerous approaches to rendering 3D data (Fig. 7). These and other details of the different workflows are described below.

Visualization of Segmented Images

3D visualization refers to the collective methods of viewing a medical imaging volume on a 2D computer screen [79]. The 3D visualization workflow, the subsequent workflow for 3D printing, and the workflow requirements for virtual reality are similar, but some differences exist, as detailed below.

3D models destined for printing are often saved in standard tessellation language (STL), additive manufacturing file (AMF), or 3D manufacturing format (3MF) files [80]. Since these formats may not be compatible with virtual reality or 3D rendering software, models may need to be saved as Wavefront OBJ files, which, in addition to the triangle definitions found in STL files, can specify material properties such as color and shading characteristics.
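To make the format difference concrete, the following is a minimal Python sketch, not a production converter, that turns an STL-style list of triangles (each triangle storing its own three corner coordinates) into Wavefront OBJ text with a shared, de-duplicated vertex list and a reference to a material library. The file name `model.mtl`, the material name `myocardium`, and the color are hypothetical placeholders, and exact-coordinate matching assumes that repeated corners are stored bit-identically, as they usually are in STL exports.

```python
Vec3 = tuple[float, float, float]
Triangle = tuple[Vec3, Vec3, Vec3]


def triangles_to_obj(triangles: list[Triangle],
                     mtl_file: str = "model.mtl",
                     material: str = "myocardium") -> str:
    """Return OBJ text for an STL-style triangle soup."""
    index_of: dict[Vec3, int] = {}      # vertex coordinates -> 1-based OBJ index
    vertex_lines: list[str] = []
    face_lines: list[str] = []
    for tri in triangles:
        ids = []
        for v in tri:
            if v not in index_of:       # store each shared corner only once
                index_of[v] = len(index_of) + 1   # OBJ indices start at 1
                vertex_lines.append(f"v {v[0]} {v[1]} {v[2]}")
            ids.append(index_of[v])
        face_lines.append(f"f {ids[0]} {ids[1]} {ids[2]}")
    # mtllib/usemtl attach a material defined in the companion .mtl file.
    header = [f"mtllib {mtl_file}", f"usemtl {material}"]
    return "\n".join(header + vertex_lines + face_lines) + "\n"


def material_mtl(name: str, rgb: tuple[float, float, float]) -> str:
    """Minimal .mtl entry; Kd is the diffuse color."""
    r, g, b = rgb
    return f"newmtl {name}\nKd {r} {g} {b}\n"
```

Two triangles sharing an edge produce four `v` lines instead of the six corner records an STL file would hold; the `.mtl` text is written alongside the `.obj` file.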

For 3D printing, there are recommendations for the number of triangles used to describe the shape of a model at different anatomical sites [42]; these represent the limit of detail beyond which additional triangles provide no benefit to the provider. The demanding computational requirements of rendering for virtual reality (i.e., two high-definition displays, one for each eye, both updated in a synchronized manner at a high frame rate) may limit the number of triangles/vertices that can be used in a model (or group of models, whenever multiple models must be displayed) before rendering performance suffers and the risk of nauseating the user increases. These limits depend directly on the technical specifications of the computer hardware and on the details of the software implementation but, with current technology, will generally be lower than the number of triangles recommended for 3D printing. Model creators are therefore challenged to include sufficient anatomical detail to maintain clinical accuracy while allowing smooth, real-time interactive visualization. We recommend that early adopters acquire the best-performing computer system available to mitigate this limitation as much as possible.
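One simple way to bring a model under a triangle budget is vertex clustering, sketched below in Python as an illustration only; production pipelines more commonly use quadric-error decimation, and this is not the method of any particular software. Vertices falling into the same cubic grid cell are merged to their mean position, and triangles that collapse are discarded. The `cell_size` parameter is a hypothetical tuning knob: larger cells remove more triangles and more anatomical detail.

```python
from collections import defaultdict

Vec3 = tuple[float, float, float]
Face = tuple[int, int, int]


def cluster_decimate(vertices: list[Vec3], faces: list[Face],
                     cell_size: float) -> tuple[list[Vec3], list[Face]]:
    """Merge all vertices that fall in the same cubic grid cell."""
    # Integer grid cell for every vertex (floor division also handles negatives).
    cell_of = [tuple(int(c // cell_size) for c in v) for v in vertices]
    members: dict[tuple, list[int]] = defaultdict(list)
    for i, cell in enumerate(cell_of):
        members[cell].append(i)

    # One representative per occupied cell: the mean of its member vertices.
    new_index: dict[tuple, int] = {}
    new_vertices: list[Vec3] = []
    for cell, ids in members.items():
        new_index[cell] = len(new_vertices)
        new_vertices.append(tuple(sum(vertices[i][k] for i in ids) / len(ids)
                                  for k in range(3)))

    # Re-index triangles; drop those that collapsed to an edge or a point,
    # and duplicates created by the merge (orientation is ignored here).
    new_faces: list[Face] = []
    seen: set[frozenset] = set()
    for a, b, c in faces:
        fa, fb, fc = (new_index[cell_of[i]] for i in (a, b, c))
        key = frozenset((fa, fb, fc))
        if len(key) < 3 or key in seen:
            continue
        seen.add(key)
        new_faces.append((fa, fb, fc))
    return new_vertices, new_faces
```

Because clustering ignores curvature, it can erase thin structures; any reduced model would still need visual review against the source images.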

Models displayed in 3D rendering have lighting and material parameters that affect how they appear in a scene lit by one or more virtual light sources. Analogous to the selection of printing material and color, the clinical efficacy of VR-visualized models can be improved through careful selection of the displayed model’s material properties, such as color, lighting parameters (e.g., smoothness, glossiness), transparency, or textures. In addition to material properties, the displayed object will look different depending on the technique used to render these properties. For example, models can be rendered with smooth or flat shading; in each shading model, the effects of light interacting with a model (e.g., reflection, refraction) are calculated using normal vectors defined for each vertex of the triangles comprising the model (the same vertices that define the triangles of an STL model). For a given point on the face of a triangle, the effective normal is calculated as a weighted mean of the normals at its three vertices. The vertex normals can be defined as a face-specific parameter (flat shading: one normal direction per face, so the normal is the same at each vertex of the triangle) or as a vertex-specific parameter (smooth shading: one normal per vertex, equal to the mean of the normals of the adjoining faces). Smooth vs. flat normals and their effect on shading are illustrated in Fig. 7. The choice of smooth or flat shading has implications for the amount of detail required in a model’s mesh. A smooth appearance can be achieved with sufficient model detail (i.e., number of triangles in the model), but it can also be achieved with a lower-detail model using smooth shading normals. With a low-detail model, one must take care that the resulting visualization still reflects the anatomy with high fidelity.
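The flat and smooth normal definitions above can be sketched in a few lines of Python. This is an illustrative CPU version under simple assumptions (counter-clockwise winding, every vertex used by at least one face, unweighted averaging); rendering engines compute the same quantities on import or in shaders, sometimes with area- or angle-weighted averaging instead.

```python
import math

Vec3 = tuple[float, float, float]
Face = tuple[int, int, int]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v) -> Vec3:
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def face_normals(vertices: list[Vec3], faces: list[Face]) -> list[Vec3]:
    """Flat shading: one unit normal per triangle, shared by its three corners."""
    return [_normalize(_cross(_sub(vertices[b], vertices[a]),
                              _sub(vertices[c], vertices[a])))
            for a, b, c in faces]


def vertex_normals(vertices: list[Vec3], faces: list[Face]) -> list[Vec3]:
    """Smooth shading: unweighted mean of the adjoining face normals."""
    acc = [[0.0, 0.0, 0.0] for _ in vertices]
    for (a, b, c), n in zip(faces, face_normals(vertices, faces)):
        for i in (a, b, c):
            for k in range(3):
                acc[i][k] += n[k]
    return [_normalize(v) for v in acc]   # assumes every vertex belongs to a face


def interpolated_normal(n0: Vec3, n1: Vec3, n2: Vec3,
                        w0: float, w1: float, w2: float) -> Vec3:
    """Normal at a point inside a triangle with barycentric weights w0 + w1 + w2 = 1."""
    return _normalize(tuple(w0 * n0[k] + w1 * n1[k] + w2 * n2[k] for k in range(3)))
```

At a vertex shared by two faces meeting at a right angle, the smooth normal points midway between the two face normals, which is what hides the crease in a low-triangle model.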

For 3D printing, segmentation typically includes only those tissues directly relevant to the model the clinician will hold. This choice is driven by the permanent, fixed nature of a 3D printed model: additional structures generally distract from or obscure the anatomical features the model is meant to highlight. Since segmented models in virtual reality may be resized, clipped, faded, or removed interactively in real time through a software user interface, parsimonious anatomical inclusion is no longer required. This enables clinicians, should they collectively decide, to include more surrounding or contextual anatomy in their models. The benefit of a larger amount of segmented tissue must be weighed against the performance limits of the rendering engine with respect to the total number of vertices in a scene. Judicious use of detail in each segmented model, for example, sacrificing some detail in models of less direct relevance, can improve overall responsiveness and the interactive experience. Notably, the flexibility of virtual reality may even be a powerful tool to guide and assess the selection of tissues to include in 3D printed models themselves, particularly given the current absence of clear guidelines on which tissues should be included for a given indication.
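The trade-off of sacrificing detail in less relevant models can be expressed as simple arithmetic. The Python sketch below splits a scene-wide triangle budget across models in proportion to a relevance weight, never exceeding a model’s full-resolution triangle count and redistributing any unused share. The budget value, the weights, and the model names are hypothetical; a real budget would have to be measured on the target hardware.

```python
def allocate_triangle_budget(models: dict[str, tuple[int, float]],
                             total_budget: int) -> dict[str, int]:
    """models maps name -> (full-resolution triangle count, relevance weight > 0).

    Returns a per-model triangle target whose sum never exceeds total_budget.
    """
    alloc: dict[str, int] = {}
    remaining = dict(models)
    budget = total_budget
    while remaining:
        total_weight = sum(w for _, w in remaining.values())
        # Models whose full mesh fits inside their weighted share are kept whole.
        fits = {name: full for name, (full, w) in remaining.items()
                if full <= budget * w / total_weight}
        if not fits:
            # Everyone left must be decimated: split what remains by weight
            # (rounding down keeps the total within budget).
            for name, (_, w) in remaining.items():
                alloc[name] = int(budget * w / total_weight)
            break
        for name, full in fits.items():
            alloc[name] = full
            budget -= full
            del remaining[name]
    return alloc
```

For example, with a hypothetical 500,000-triangle budget, a small high-relevance valve model is kept whole, and the leftover is divided 3:1 between a high-relevance aorta and low-relevance ribs.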

To be compatible with 3D printer software, the polygon meshes defining models to be printed must be closed (watertight) surfaces with no open faces or holes, so that the printer can fill each volume enclosed by the mesh with solid or porous material. A segmented polygon mesh viewed with 3D rendering in VR has no such requirement; however, the illusion of a solid object is maintained only insofar as its defined surfaces remain fully rendered. One of the immediate benefits of using virtual reality to visualize and manipulate 3D models is the ability to intuitively manipulate handheld cutting planes that reveal cross sections of the model in real time (Fig. 8).
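A common first check for the closed-surface requirement is that every edge of a triangle mesh is shared by exactly two triangles: an edge used once borders a hole, and an edge used more than twice is non-manifold. The Python sketch below implements only that edge test; it is a necessary condition, not a full printability check (consistent orientation and self-intersections are not examined).

```python
from collections import Counter


def boundary_edges(faces: list[tuple[int, int, int]]) -> list[tuple[int, int]]:
    """Undirected edges that are not shared by exactly two triangles."""
    counts: Counter = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            counts[(min(u, v), max(u, v))] += 1
    return [edge for edge, n in counts.items() if n != 2]


def is_watertight(faces: list[tuple[int, int, int]]) -> bool:
    return not boundary_edges(faces)
```

A tetrahedron passes; deleting any one of its faces leaves the three edges of that face as hole boundaries, which a VR renderer would display without complaint but a printer could not fill.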

However, unless this interaction is properly accounted for, the illusion of viewing a solid object can be disrupted when a cut plane passes through the model, e.g., to cut away anatomy closer to the user in order to see the more distant anatomy it obscures. Without appropriate rendering, cut planes can thus hinder intuitive understanding of the anatomy. Specifically, 3D rendering engines by default generally skip rendering the back faces (inside surfaces) of objects to avoid unnecessary computational overhead. This technique is known as back-face culling; the effect of clipping through an object with back-face culling can be seen in Fig. 9b. If objects are rendered without back-face culling (Fig. 9c, d), the inside surfaces of the object become visible when the object is cut away. This is generally a better representation than back-face culling produces, but depending on the method used to render the back faces, it can be visually confusing to the user (Fig. 9c). The simplest way to maintain the illusion of a solid object is to add a second material, a single unlit color used only to render the back faces of objects (Fig. 9d). This conveniently reproduces the illusion that the cutting plane is interacting with a solid object.

Fig. 9. Illustration of back-face rendering options. (a) Unclipped object. (b) Clipped object with back-face culling; when the front corner is clipped, the white background is seen through the opening. (c) Clipped object with back faces rendered; when the corner is clipped, the inside aspect of the faces in the back of the object is revealed, but the resulting visualization may be confusing to some users. (d) Clipped object with a single, unlit color used to render all back faces; when the corner is clipped, the internal aspect of the faces in the back is rendered as in (c), but the single color gives the illusion of a solid object
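The per-fragment decision behind the unlit back-face approach can be sketched as follows. This is written in Python on the CPU for readability, under stated assumptions: in a real engine it would be a GPU shader with culling disabled, and the GPU decides facing from the screen-space winding order rather than from the dot product with the surface normal used here. The function name, colors, and Lambertian lighting model are illustrative choices, not a specific engine’s API.

```python
from typing import Optional

Vec3 = tuple[float, float, float]
RGB = tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def shade_fragment(point: Vec3, normal: Vec3, camera: Vec3, light_dir: Vec3,
                   base_color: RGB, backface_color: RGB,
                   plane_point: Vec3, plane_normal: Vec3) -> Optional[RGB]:
    """Return the fragment color, or None if the cutting plane removes it.

    normal and light_dir are unit vectors; light_dir points toward the light.
    """
    # 1. Cutting plane: discard everything on the side the plane normal points to.
    if _dot(_sub(point, plane_point), plane_normal) > 0:
        return None
    # 2. Facing test: a normal pointing away from the camera marks a back face,
    #    i.e. an inside surface exposed by the cut.
    if _dot(normal, _sub(camera, point)) <= 0:
        return backface_color            # single unlit color reads as solid material
    # 3. Front face: simple Lambertian diffuse lighting.
    lambert = max(0.0, _dot(normal, light_dir))
    return (base_color[0] * lambert, base_color[1] * lambert, base_color[2] * lambert)
```

Because the back-face branch ignores lighting, every exposed interior surface gets the same flat color, which the eye interprets as the cut face of a solid object.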

The resulting effect of the various rendering options is demonstrated in Fig. 10, which shows a model of the heart interacting with a cutting plane rendered with back-face culling (Fig. 10a), with both front and back faces rendered using the single material selected for the heart (Fig. 10b), with a poorly chosen unlit back-face color (Fig. 10c), and with an appropriately chosen unlit back-face color (Fig. 10d). Despite its simplicity, this technique works remarkably well as long as other objects in the scene do not enter the interior volume of the object. If this happens, the front faces of the other objects will appear to hover inside it (in this example, inside the myocardial wall). With several interfacing models that can be manipulated, a more elaborate, application-specific solution may be required (e.g., a biopsy needle must be seen within the tissue it is entering, whereas a surgical guide merely needs to be shown resting against the tissue and need not be seen within it).

Visualization of Unsegmented Images

2D Visualization

Visualization of unsegmented images in virtual reality can generally be accomplished in three ways, distinguished by how much three-dimensional information they integrate. The first reproduces or simulates the two-dimensional screens typically used for image viewing. In this case, virtual reality removes some limitations of a physical display, such as size, and may help remove distractions, since this “virtual workstation” fully encompasses the user’s field of view and shuts out the real world. In 2D visualization, any spatial information contained in the 3D image volume is unused.

Viewing unsegmented images in virtual reality involves converting DICOM image information to an image texture. In the context of VR and rendering, a texture is a 2D or 3D image used as a property of an object’s material in a 3D rendering scene. The rendering engine renders the texture on the object (in this case, a 2D plane) rather than a single color, as in Figs. 9 and 10. Textures come in various storage formats (e.g., RGBA with 32-bit floating point per channel). The conversion of pixel values (e.g., Hounsfield units for CT images) to texture formats must be carefully designed so that the full gray-scale resolution of the images is preserved.
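The gray-scale concern can be illustrated with a small Python sketch. It applies the DICOM modality rescale (the RescaleSlope and RescaleIntercept attributes) and then normalizes into a 32-bit float texture, contrasting that with an 8-bit texture that collapses thousands of CT values into 256 levels. The slope of 1 and intercept of -1024 in the usage example are common for CT but are assumptions here; real values must be read from each series’ header.

```python
from array import array


def to_hounsfield(stored: list[int], slope: float, intercept: float) -> list[float]:
    """Apply the DICOM modality rescale: HU = stored * slope + intercept."""
    return [v * slope + intercept for v in stored]


def to_float_texture(hu: list[float], lo: float, hi: float) -> array:
    """Normalize to [0, 1] and store as 32-bit floats (typecode 'f')."""
    scale = hi - lo
    return array("f", ((v - lo) / scale for v in hu))


def to_8bit_texture(hu: list[float], lo: float, hi: float) -> bytes:
    """Lossy alternative shown for contrast: only 256 gray levels survive."""
    scale = hi - lo
    return bytes(round(255 * (min(max(v, lo), hi) - lo) / scale) for v in hu)
```

Over a 12-bit range of stored values (-1024 to 3071 HU with the assumed rescale), the float texture keeps all 4,096 values distinct, whereas the 8-bit texture retains only 256; windowing can then be applied later, at display time, without having discarded information.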

Display Resolution in VR and AR

The concept of display resolution in virtual reality requires specific consideration. With a regular radiology workstation, display resolution is a fixed characteristic of the physical display itself (and is distinct from the generally lower resolution of the image sets themselves). In head-mounted display (HMD) mediated virtual reality, the physical displays are viewed through lenses at a fixed distance from the eyes, and with current headsets the detail perceived in the entire virtual scene is limited by the fixed resolution of these displays rather than by the user’s visual acuity. Early developer kits of modern VR headsets were relatively low-resolution, and the individual pixels composing the virtual scene were readily apparent. The sensation of seeing the world composed of individual pixels has been likened to viewing the world through a screen door; the result of lower-resolution displays with less than optimal pixel “fill factors” (i.e., the ratio of light-producing area to total area of an individual pixel) has therefore often been called the “screen-door effect.” This limitation of current HMD technology has implications for the clinical adoption of virtual reality for unsegmented medical image viewing, where image resolution may be subject to image quality guidelines [81, 82]. Fortunately, display technology is quickly advancing toward the point where individual pixels in VR headsets will be unresolvable by the human eye.
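A common way to quantify how far a headset is from that point is angular pixel density in pixels per degree (ppd), compared with normal (20/20) visual acuity of roughly 1 arcminute, i.e., about 60 ppd. The Python sketch below uses an average over the field of view, which is an approximation because HMD optics distribute pixels non-uniformly. The roughly 100° horizontal field of view per eye in the usage example is an assumed round number, not a manufacturer specification.

```python
ACUITY_PPD = 60.0   # ~1 arcminute resolution for 20/20 vision = 60 pixels per degree


def pixels_per_degree(pixels_across: int, fov_degrees: float) -> float:
    """Average angular pixel density across the field of view (ignores lens distortion)."""
    return pixels_across / fov_degrees


def arcmin_per_pixel(ppd: float) -> float:
    """Angular size of one display pixel in arcminutes."""
    return 60.0 / ppd


def pixels_resolvable(ppd: float) -> bool:
    """True while individual pixels are larger than the acuity limit."""
    return ppd < ACUITY_PPD
```

Under these assumptions, 1080 horizontal pixels per eye spread over about 100° give roughly 11 ppd, i.e., pixels about 5.6 arcminutes wide, several times coarser than the acuity limit; this is why the screen-door effect was visible and why display resolution, not acuity, currently bounds perceived detail.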

Because the headset display resolution applies to the entire virtual scene, the display resolution of a medical image is no longer a fixed characteristic, as it is on a physical workstation monitor. On a physical monitor, a 512 × 512 CT image scaled to fill the screen is always displayed using the same fixed set of monitor pixels (e.g., 1200 × 1200 pixels of a 1600 × 1200 monitor). In a VR headset such as the HTC Vive, which has a resolution of 1080 × 1200 pixels per eye, the same image is displayed at any moment during interaction using only a portion of those pixels, depending on the proportion of the user’s field of view that the image covers in the virtual environment. The effective resolution at which the image is displayed is changed not only by the size (scaling) of the image in the virtual environment (just as it would change if the user of a standard monitor scaled the image to occupy only, e.g., half the monitor) but also by the proximity of the image to the user (positioning), while the effect of visual acuity on reading images is nearly eliminated. These concepts are illustrated in Fig. 11.

Fig. 11. Illustration of the considerations for apparent resolution for (a) a traditional radiological display and (b) virtual reality visualization. The effect of distance and scale in a virtual reality visualization is illustrated in (c). In all cases shown, the medical image is composed of a fixed number of pixels, as shown by the gray grid on top of the heart. As shown in (c), depending on how far the user is from the heart in the virtual environment, a different number of display pixels is used to render the heart (the vertical screen is always near the eyes)
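The combined effect of scale and distance can be estimated from the angle a virtual image subtends. The Python sketch below assumes a flat image viewed face-on and centered, and a uniform angular pixel density; the 10.8 ppd figure in the usage example is an illustrative assumption, not a measured headset value.

```python
import math


def display_pixels_across(image_width_m: float, distance_m: float, ppd: float) -> float:
    """Headset pixels spanned by a virtual image of the given width, viewed
    face-on and centered at the given distance (uniform ppd assumed)."""
    angle_deg = math.degrees(2.0 * math.atan(image_width_m / (2.0 * distance_m)))
    return angle_deg * ppd


def display_pixels_per_image_pixel(image_pixels: int, image_width_m: float,
                                   distance_m: float, ppd: float) -> float:
    """Below 1, several image pixels share one display pixel and detail is lost."""
    return display_pixels_across(image_width_m, distance_m, ppd) / image_pixels
```

With these assumptions, a 512-pixel-wide image rendered 0.5 m wide at 1 m covers only about 300 display pixels (the image is effectively downsampled), whereas bringing it to 0.3 m, or doubling its virtual size, raises the coverage above one display pixel per image pixel, which is the behavior shown in Fig. 11c.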

2.5D Visualization

The natural progression from 2D visualization is a method in which the displayed 2D image is positionally connected to the underlying 3D image volume from which it was derived. This is achieved by modeling a movable viewing plane that samples a three-dimensional volume containing the scan set, such that the image displayed on the plane is the cross section where the plane intersects the volume. As a result, the user can re-orient and reposition either the volume or the viewing plane and fluidly view any oblique cross section. We refer to this method as 2.5D visualization because the image seen by the user is a 2D cross section, but the 3D spatial information about where that cross section lies within the scan volume is also visualized. This is analogous to the cross-hairs used in 3D workstations to convey the orientation of a multiplanar reformation. The main benefit of 2.5D visualization is that it allows the user to select oblique views intuitively and quickly while maintaining an understanding of their positional relationship to the 3D volume. Displaying the images in this familiar form factor also aligns with clinicians’ considerable training in interpreting such images.
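Sampling an arbitrary plane through the volume amounts to evaluating the volume at points spanned by two in-plane directions, using trilinear interpolation between voxel centers. A GPU 3D texture performs this interpolation in hardware; the Python sketch below spells it out in software for illustration. Coordinates are in voxel units, `u` and `v` are assumed to be orthonormal in-plane directions, and points outside the volume return `None`.

```python
def trilinear(volume, x: float, y: float, z: float):
    """Interpolate volume[z][y][x] at continuous voxel coordinates; None outside."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    if not (0 <= x <= nx - 1 and 0 <= y <= ny - 1 and 0 <= z <= nz - 1):
        return None
    x0, y0, z0 = int(x), int(y), int(z)      # floor, since coordinates are >= 0 here
    x1, y1, z1 = min(x0 + 1, nx - 1), min(y0 + 1, ny - 1), min(z0 + 1, nz - 1)
    fx, fy, fz = x - x0, y - y0, z - z0

    def lerp(a, b, t):
        return a + (b - a) * t

    c00 = lerp(volume[z0][y0][x0], volume[z0][y0][x1], fx)
    c10 = lerp(volume[z0][y1][x0], volume[z0][y1][x1], fx)
    c01 = lerp(volume[z1][y0][x0], volume[z1][y0][x1], fx)
    c11 = lerp(volume[z1][y1][x0], volume[z1][y1][x1], fx)
    return lerp(lerp(c00, c10, fy), lerp(c01, c11, fy), fz)


def sample_plane(volume, origin, u, v, width: int, height: int, spacing: float = 1.0):
    """Resample an oblique plane starting at origin (a corner of the plane)."""
    image = []
    for r in range(height):
        row = []
        for c in range(width):
            p = [origin[k] + c * spacing * u[k] + r * spacing * v[k] for k in range(3)]
            row.append(trilinear(volume, *p))
        image.append(row)
    return image
```

Moving the virtual plane in VR simply changes `origin`, `u`, and `v`; no new slices need to be reconstructed from the DICOM files.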

As with 2D visualization, the image information from DICOM files must be converted to an image texture, but now a 3D texture is required so that the computer can quickly render the arbitrary plane as the user moves it interactively. (In contrast, if a 2D texture on a plane object were used, the texture would have to be recalculated on the fly from the image dataset whenever the plane is repositioned.) The 3D texture must be associated with a rectangular prism object that has the dimensions, position, and orientation of the original scan volume. This necessitates a preprocessing step beyond those required for 2D visualization, in which the spatial information of the scan is extracted from the DICOM headers.
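The spatial information in question is mainly carried by three standard DICOM attributes: Image Position (Patient) (0020,0032), Image Orientation (Patient) (0020,0037), and Pixel Spacing (0028,0030). The Python sketch below builds the voxel-to-patient mapping that places the rectangular prism in space. It assumes the values have already been read from the headers (for example via pydicom’s `ImagePositionPatient`, `ImageOrientationPatient`, and `PixelSpacing` keywords, which are not called here), that slices are uniformly spaced, and that they have been sorted by position along the slice normal.

```python
Vec3 = tuple[float, float, float]


def _cross(a, b) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def slice_normal(iop) -> Vec3:
    """Image-plane normal from Image Orientation (Patient): row cosines x column cosines."""
    return _cross(iop[:3], iop[3:])


def slice_position(ipp, iop) -> float:
    """Signed distance of a slice along the normal; use it as the sort key for slices."""
    return sum(p * c for p, c in zip(ipp, slice_normal(iop)))


def voxel_to_patient(ipp_first, ipp_last, iop, pixel_spacing, n_slices: int):
    """Map voxel indices (column i, row j, slice k) to patient coordinates in mm.

    pixel_spacing is (row spacing, column spacing), as stored in Pixel Spacing.
    """
    if n_slices < 2:
        raise ValueError("need at least two slices to define the slice step")
    row_dir, col_dir = iop[:3], iop[3:]     # directions of increasing i and of increasing j
    row_spacing, col_spacing = pixel_spacing
    step = [(ipp_last[a] - ipp_first[a]) / (n_slices - 1) for a in range(3)]

    def to_patient(i: float, j: float, k: float) -> Vec3:
        return tuple(ipp_first[a] + i * col_spacing * row_dir[a]
                     + j * row_spacing * col_dir[a] + k * step[a] for a in range(3))
    return to_patient
```

Evaluating `to_patient` at the eight corner indices of the volume gives the corners of the prism to which the 3D texture is attached; for an oblique or tilted acquisition the prism is rotated accordingly.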

3D Visualization: Volume Rendering

The most three-dimensionally integrated method of visualizing medical images is volume rendering [83, 84], in which the image is displayed as a 3D object in space. As in 2.5D visualization, a 3D image texture is used, but in this case the image is not a cross-sectional sampling of the texture; instead, it is generated by raycasting through the volume [85]. In raycasting, for each display pixel covered by the rectangular prism containing the image volume, a ray of sight is cast through the volume. Along the ray, the image volume is sampled at equidistant points using interpolation. The sampled values (isovalues) are converted into a material color and opacity, and consecutive samples are composited to produce the pixel’s final color and opacity.
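The per-ray loop just described can be sketched as front-to-back alpha compositing. The Python below is a simplified CPU illustration of what a volume rendering shader does for each pixel: `sample_at` stands in for an interpolated 3D texture lookup and `transfer` for the mapping from isovalue to color and opacity (both are placeholders supplied by the caller). Production renderers also correct each sample’s opacity for the step size; that correction is omitted here.

```python
def cast_ray(origin, direction, step: float, n_steps: int, sample_at, transfer):
    """Front-to-back compositing along one ray.

    sample_at(point) -> interpolated scalar at a 3D point
    transfer(value)  -> (r, g, b, alpha), each in [0, 1]
    direction is a unit vector pointing away from the viewer.
    """
    color = [0.0, 0.0, 0.0]
    alpha = 0.0
    for n in range(n_steps):
        p = tuple(origin[k] + n * step * direction[k] for k in range(3))
        r, g, b, a = transfer(sample_at(p))
        weight = (1.0 - alpha) * a   # share not already hidden by nearer samples
        color[0] += weight * r
        color[1] += weight * g
        color[2] += weight * b
        alpha += weight
    return tuple(color), alpha
```

Two samples of 50% opacity thus yield a combined opacity of 0.75 rather than 1.0, because the second sample is seen only through the part of the ray the first one leaves uncovered.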

Volume rendering allows considerable choice in how isovalues are interpreted and can produce many different volumetric images. For example, the rendering can reflect the underlying grayscale of CT numbers, or only bone could be rendered with realistic coloring and lighting. These options are produced by transfer functions, which map isovalues to the opacity (alpha) and color accumulated in each raycasting step [86, 87].
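A frequently used form of transfer function is a piecewise-linear lookup defined by a few control points, each pairing an isovalue with an RGBA value. The Python sketch below implements that lookup; the two example functions (a grayscale ramp and a bone-only preset) use illustrative Hounsfield unit thresholds and colors chosen as assumptions, not clinical presets.

```python
from bisect import bisect_right


def make_transfer_function(control_points):
    """control_points: list of (isovalue, (r, g, b, a)) sorted by isovalue.

    Returns a function that interpolates linearly between control points and
    clamps to the first/last entry outside their range.
    """
    values = [v for v, _ in control_points]
    rgba = [c for _, c in control_points]

    def transfer(x):
        if x <= values[0]:
            return rgba[0]
        if x >= values[-1]:
            return rgba[-1]
        i = bisect_right(values, x)               # values[i-1] <= x < values[i]
        t = (x - values[i - 1]) / (values[i] - values[i - 1])
        return tuple(a + (b - a) * t for a, b in zip(rgba[i - 1], rgba[i]))
    return transfer


# Illustrative presets (thresholds and colors are assumptions):
grayscale_tf = make_transfer_function([(-1000, (0.0, 0.0, 0.0, 0.0)),
                                       (1000, (1.0, 1.0, 1.0, 1.0))])
bone_tf = make_transfer_function([(150, (0.9, 0.8, 0.7, 0.0)),
                                  (700, (1.0, 1.0, 0.95, 1.0))])
```

Swapping `grayscale_tf` for `bone_tf` in the compositing loop changes the rendered image completely without touching the underlying 3D texture, which is what makes interactive preset switching inexpensive.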

As discussed previously, a compelling and immersive virtual reality experience depends on a high frame rate (> 60 FPS) rendered at high resolution on two displays. These requirements pose a particular challenge for virtual reality implementations of volume rendering, which carries a high computational burden. As a result, volume rendering shaders (i.e., the code provided to graphics processing units for image display) must be optimized more carefully than non-VR implementations require. Techniques such as early ray termination and empty-space leaping [88] are among the tools that can be used to achieve a high-performance implementation. These techniques may require pre-processing of the underlying scan data to generate optimization textures that are used in addition to the isovalue 3D textures. Additionally, GPU shader calculations that generate lighting effects on volume-rendered images may require associated 3D gradient textures to be pre-computed.
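Both optimizations can be shown on a single ray, as in the following Python sketch, which simplifies the 3D case to one dimension for readability. A pre-computed per-brick maximum plays the role of the optimization texture: a brick whose maximum lies below the first visible isovalue is skipped entirely (empty-space leaping), and compositing stops once accumulated opacity passes a threshold (early ray termination). The brick size, the `transparent_below` threshold, and the 0.95 cutoff are hypothetical parameters; real implementations store per-brick statistics in a 3D texture.

```python
def build_brick_max(values: list[float], brick: int) -> list[float]:
    """Pre-computed optimization data: the maximum scalar value in each brick."""
    return [max(values[i:i + brick]) for i in range(0, len(values), brick)]


def composite_accelerated(values, transfer, brick_max, brick: int,
                          transparent_below: float, stop_alpha: float = 0.95):
    """Front-to-back compositing with empty-space leaping and early ray termination.

    Assumes transfer(v) has zero opacity for every v < transparent_below.
    Returns (rgb, alpha, number of samples actually composited).
    """
    rgb = [0.0, 0.0, 0.0]
    alpha = 0.0
    evaluated = 0
    i = 0
    while i < len(values):
        b = i // brick
        if brick_max[b] < transparent_below:
            i = (b + 1) * brick           # empty-space leaping: skip the whole brick
            continue
        *color, a = transfer(values[i])
        evaluated += 1
        w = (1.0 - alpha) * a
        rgb = [acc + w * c for acc, c in zip(rgb, color)]
        alpha += w
        if alpha >= stop_alpha:
            break                          # early ray termination: the rest is hidden
        i += 1
    return tuple(rgb), alpha, evaluated
```

For a ray that crosses eight empty samples and then enters dense tissue, only two of sixteen samples are composited in this example; savings of this kind, multiplied across millions of rays per eye per frame, are what make VR frame rates achievable.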

Manipulation of Data in VR and AR

Virtual reality not only differs from traditional 2D or 3D modes of visualization but also enables new and intuitive methods of interaction. As with 3D printing, an immersive virtual reality experience offers a true 3D perspective for interacting with medical information, but it builds on the benefit of 3D printing by including an entire surrounding environment for 3D interaction. The immersive element of virtual reality, created by incorporating input from tracked controllers or hands and the position of the user’s head, opens previously unavailable ways of interacting with 3D medical information. Basic user interaction tools include the ability to grab and reposition models and 3D image datasets, change their scale through hand gestures, manipulate virtual handheld measurement tools, and adjust dataset transparency and window/level. More complex interactions, such as handheld clipping planes (Fig. 12) that may use transparency, blending of fused image datasets, or even time, belong to a new, creative domain of interaction with medical data. In the authors’ experience, the quality of an intuitive user-interface design has a considerable impact on how easily medical information in virtual reality is understood.
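As one example of a hand-gesture interaction, a two-handed grab-and-scale can be implemented by scaling the object about the midpoint between the two controllers by the ratio of the current to the initial hand separation, while the object follows that midpoint. The Python sketch below is a hypothetical minimal version (translation and uniform scale only; rotation from the hand axis is omitted) and does not reproduce any particular VR toolkit’s API.

```python
import math


def two_hand_manipulate(obj_pos, obj_scale, left0, right0, left1, right1):
    """Update an object's position and uniform scale from two tracked controllers.

    left0/right0: controller positions when both grips were pressed;
    left1/right1: current controller positions.
    """
    s = math.dist(left1, right1) / math.dist(left0, right0)   # spread ratio = scale change
    m0 = [(a + b) / 2 for a, b in zip(left0, right0)]         # grab midpoint
    m1 = [(a + b) / 2 for a, b in zip(left1, right1)]         # current midpoint
    # Scale the object's offset from the grab midpoint, then follow the hands.
    new_pos = tuple(m1[k] + s * (obj_pos[k] - m0[k]) for k in range(3))
    return new_pos, obj_scale * s
```

Spreading the hands from 1 m to 2 m apart doubles the model’s size while the point between the hands stays anchored, which tends to feel natural when enlarging, e.g., a coronary model for closer inspection.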

VR as a Tool for Collaboration

Virtual reality creates a wide variety of collaborative opportunities. We actively design and use experiences in which clinicians and other health care experts inhabit the same virtual space and discuss the same medical data, presented either as a shared interactive object in front of them or as the shared virtual environment itself. This can be done with participants in the same physical space but, more interestingly, also over a network between two remote locations. Although we have implemented this at our institution, its potential has not yet been fully exploited.

The main technical challenge for remotely connected applications is the sharing of large medical datasets. For a fluid experience, the datasets being visualized will likely need to be stored locally, with only the shared interactions (positioning, orienting, etc.) passed over the network. Cloud rendering may be another interesting alternative [89].
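To illustrate the local-data approach, the Python sketch below defines a hypothetical interaction message that each site would send when a shared object moves. Only a dataset identifier, a pose (position, rotation quaternion, scale), and a sequence number cross the network, while the image data stay on each local machine. The message fields and JSON encoding are assumptions for illustration, not an established protocol.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class PoseUpdate:
    """One shared-interaction event; image data are never transmitted."""
    dataset_id: str                                 # hypothetical ID of each site's local copy
    position: tuple[float, float, float]            # meters, shared room coordinates
    rotation: tuple[float, float, float, float]     # unit quaternion (x, y, z, w)
    scale: float
    sequence: int                                   # lets receivers ignore stale updates

    def to_bytes(self) -> bytes:
        return json.dumps(asdict(self)).encode("utf-8")

    @staticmethod
    def from_bytes(data: bytes) -> "PoseUpdate":
        d = json.loads(data.decode("utf-8"))
        return PoseUpdate(d["dataset_id"], tuple(d["position"]),
                          tuple(d["rotation"]), d["scale"], d["sequence"])
```

Such a message is on the order of a hundred bytes, whereas a single 512 × 512 × 500 CT volume stored at 16 bits per voxel occupies about 262 MB; sending poses at the display frame rate is therefore practical where streaming the volume is not.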

Summary

Virtual and augmented reality are poised for increased adoption in a variety of applications in radiology, surgery, and other therapeutic interventions. Historical applications in medicine have largely emphasized patient-as-user applications with VR/AR being used as the intervention itself. Recent breakthroughs have enabled expansion of clinician-as-user applications, where adoption has been historically limited by the ability of the technology to achieve a sufficient quality of experience.

Going beyond the definitions of virtual and augmented reality, we have set the stage for clinicians and other interested parties to distinguish between technologies and to understand how these relate to their use of real and virtual elements. The various experiences commonly referred to as virtual reality have been categorized by their level of technological sophistication. The options for consumer-level virtual and augmented reality systems are growing, as is the literature. This work introduces a comprehensive conceptual framework for viewing medical images in virtual reality and outlines a number of considerations for placing these technologies directly into a radiology-based workflow.

References

Sutherland J, La Russa D: Virtual reality. In: Rybicki FJ, Grant GT Eds. 3D Printing in Medicine: A Practical Guide for Medical Professionals. Cham: Springer International Publishing, 2017, pp. 125–133

Satava RM: Virtual reality surgical simulator. Surg Endosc 7:203–205, 1993

Phillips JR: Virtual reality: A new vista for nurse researchers? Nurs Sci Q 6:5–7, 1993

Chinnock C: Virtual reality in surgery and medicine. Hosp Technol Ser 13:1–48, 1994

Rosen JM, Soltanian H, Redett RJ, Laub DR: Evolution of virtual reality. IEEE Eng Med Biol Mag 15:16–22, 1996

Pensieri C, Pennacchini M, Sivan Y, Gefen D, Boulos MK, Claudio P, Maddalena P: Overview: Virtual reality in medicine. J Virtual Worlds Res 7:1–34, 2014

Worldwide Spending on Augmented and Virtual Reality. Available at https://www.idc.com/getdoc.jsp?containerId=prUS42959717. Accessed January 2018.

Healthcare Augmented & Virtual Reality Market Worth $5.1 Billion By 2025. Available at https://www.grandviewresearch.com/press-release/global-augmented-reality-ar-virtual-reality-vr-in-healthcare-market. Accessed January 2018.

Rybicki FJ, Grant GT: 3D Printing in Medicine. Cham: Springer International Publishing, 2017

Burdea GC, Coiffet P: Virtual Reality Technology. New York: Wiley, 2003

Heilig ML: Stereoscopic-television apparatus for individual use. US Patent 2,955,156, 1960

Linte CA, Davenport KP, Cleary K, Peters C, Vosburgh KG, Navab N, Eddie P, Jannin P, Peters TM, Holmes DR, Robb RA: On mixed reality environments for minimally invasive therapy guidance: Systems architecture, successes and challenges in their implementation from laboratory to clinic. Comput Med Imaging Graph 37:83–97, 2013

Yaniv Z, Linte CA: Applications of augmented reality in the operating room. Fundam Wearable Comput Augment Real 485–510, 2016

Moro C, Štromberga Z, Raikos A, Stirling A: The effectiveness of virtual and augmented reality in health sciences and medical anatomy. Anat Sci Educ 1–11, 2017

Plasencia DM: One step beyond virtual reality: Connecting past and future developments. Crossroads ACM Mag Stud 22:18–23, 2015

Abrash M: Why You Won’t See Hard AR Anytime Soon. Available at http://blogs.valvesoftware.com/abrash/why-you-wont-see-hard-ar-anytime-soon/. Accessed May 2017

Milgram P, Takemura H, Utsumi A, Kishino F: Augmented reality: A class of display on the reality-virtuality continuum. Proc SPIE 2351:282–292, 1995

Milgram P, Kishino F: A taxonomy of mixed reality visual displays. IEICE Trans Inf Syst 77:1–15, 1994

Foxlin E, Harrington M, Pfeifer G: Constellation: A wide-range wireless motion-tracking system for augmented reality and virtual set applications. In: Proc. 25th Annu. Conf. Comput. Graph. Interact. Tech. (SIGGRAPH '98), pp. 371–378, 1998

Valve: SteamVR Tracking. Available at https://partner.steamgames.com/vrtracking. Accessed May 2017

Valve Reveals Timeline of Vive Prototypes, We Chart it For You. Available at http://www.roadtovr.com/valve-reveals-timeline-of-vive-prototypes-we-chart-it-for-you/. Accessed May 2017

Tazartes D: An historical perspective on inertial navigation systems. In: Proc. Int. Symp. Inertial Sensors Syst, pp. 1–5, 2014

Riva G, Wiederhold BK, Gaggioli A: Being different: The transformative potential of virtual reality. Annu Rev Cybern Therapy Telemed 14:3–6, 2016

Tepper OM, Rudy HL, Lefkowitz A, Weimer KA, Marks SM, Stern CS, Garfein ES: Mixed reality with HoloLens: Where virtual reality meets augmented reality in the operating room. Plast Reconstr Surg 140:1066–1070, 2017

Wang S, Parsons M, Stone-McLean J, Rogers P, Boyd S, Hoover K, Meruvia-Pastor O, Gong M, Smith A: Augmented reality as a telemedicine platform for remote procedural training. Sensors 17:1–21, 2017

Lee N: LG’s SteamVR headset is a bulky yet promising HTC Vive alternative. Available at https://www.engadget.com/2017/03/02/lg-steamvr-headset/. Accessed May 2017

Bharathan R, Vali S, Setchell T, Miskry T, Darzi A, Aggarwal R: Psychomotor skills and cognitive load training on a virtual reality laparoscopic simulator for tubal surgery is effective. Eur J Obstet Gynecol Reprod Biol 169:347–352, 2013

3D Systems Extends Leadership in Precision Healthcare with Technology Advancements to Quickly Create 3D Models. Available at https://www.3dsystems.com/press-releases/3d-systems-extends-leadership-precision-healthcare-technology-advancements-quickly. Accessed April 2018

3D Systems Leverages Virtual Reality to Advance Surgical Training. Available at https://www.3dsystems.com/press-releases/3d-systems-leverages-virtual-reality-advance-surgical-training. Accessed April 2018

Interactive Virtual Reality. Available at http://www.echopixeltech.com/interactive-virtual-reality/. Accessed April 2018

TeraRecon Launches Augmented Reality 3-D Imaging at HIMSS17. Available at https://www.itnonline.com/content/terarecon-launches-augmented-reality-3-d-imaging-himss17. Accessed April 2018

Rebenitsch L, Owen C: Review on cybersickness in applications and visual displays. Virtual Real 20:101–125, 2016

Rebenitsch L: Managing cybersickness in virtual reality. Crossroads ACM Mag Stud 22:46–51, 2015

Satava RM, Jones SB: Current and future applications of virtual reality for medicine. Proc IEEE 86:484–489, 1998

Cates CU, Lönn L, Gallagher AG: Prospective, randomised and blinded comparison of proficiency-based progression full-physics virtual reality simulator training versus invasive vascular experience for learning carotid artery angiography by very experienced operators. BMJ Simul Technol Enhanc Learn 2:1–5, 2016

Ali S, Qandeel M, Ramakrishna R, Yang CW: Virtual simulation in enhancing procedural training for fluoroscopy-guided lumbar puncture: A pilot study. Acad Radiol 25:235–239, 2018

Ramlogan R, Niazi AU, Jin R, Johnson J, Chan VW, Perlas A: A virtual reality simulation model of spinal ultrasound: Role in teaching spinal sonoanatomy. Reg Anesth Pain Med 42:217–222, 2017

Azagury DE, Ryou M, Shaikh SN, San José Estépar R, Lengyel BI, Jagadeesan J, Vosburgh KG, Thompson CC: Real-time computed tomography-based augmented reality for natural orifice transluminal endoscopic surgery navigation. Br J Surg 99:1246–1253, 2012

Johnston APR, Rae J, Ariotti N, Bailey B, Lilja A, Webb R, Ferguson C, Maher S, Davis TP, Webb RI, McGhee J, Parton RG: Journey to the centre of the cell: Virtual reality immersion into scientific data. Traffic 105–110, 2017

Shanahan M: Use of a virtual radiography simulation enhances student learning. J Med Radiat Sci 63:27, 2016

Syed AZ, Zakaria A, Lozanoff S: Dark room to augmented reality: Application of HoloLens technology for oral radiological diagnosis. Oral Surg Oral Med Oral Pathol Oral Radiol 124:e33, 2017

Mitsouras D, Liacouras P, Imanzadeh A, Giannopoulos AA, Cai T, Kumamaru KK, George E, Wake N, Caterson EJ, Pomahac B, Ho VB, Grant GT, Rybicki FJ: Medical 3D printing for the radiologist. RadioGraphics 35:1965–1988, 2015

Giannopoulos AA, Mitsouras D, Yoo S-J, Liu PP, Chatzizisis YS, Rybicki FJ: Applications of 3D printing in cardiovascular diseases. Nat Rev Cardiol 13:701–718, 2016

Christensen A, Rybicki FJ: Maintaining safety and efficacy for 3D printing in medicine. 3D Print Med 3:1, 2017

Di Prima M, Coburn J, Hwang D, Kelly J, Khairuzzaman A, Ricles L: Additively manufactured medical products—The FDA perspective. 3D Print Med 2(1), 2015

Rybicki FJ: Medical 3D printing and the physician-artist. Lancet 391:651–652, 2018

Rybicki FJ: Message from Frank J. Rybicki, MD, incoming chair of ACR appropriateness criteria. J Am Coll Radiol 14:723–724, 2017

Douglas DB, Wilke CA, Gibson D, Petricoin EF, Liotta L: Virtual reality and augmented reality: Advances in surgery. Biol Eng Med 2:1–8, 2017

Gallagher K, Jain S, Okhravi N: Making and viewing stereoscopic surgical videos with smartphones and virtual reality headset. Eye 30:503–504, 2016

Huber T, Wunderling T, Paschold M, Lang H, Kneist W, Hansen C: Highly immersive virtual reality laparoscopy simulation: Development and future aspects. Int J Comput Assist Radiol Surg 13:281–290, 2018

Kersten-Oertel M, Gerard I, Drouin S, Mok K, Sirhan D, Sinclair DS, Collins DL: Augmented reality in neurovascular surgery: Feasibility and first uses in the operating room. Int J Comput Assist Radiol Surg 10:1823–1836, 2015

Moglia A, Ferrari V, Morelli L, Ferrari M, Mosca F, Cuschieri A: A systematic review of virtual reality simulators for robot-assisted surgery. Eur Urol 69:1065–1080, 2016

Londei R, Esposito M, Diotte B, Weidert S, Euler E, Thaller P, Navab N, Fallavollita P: Intra-operative augmented reality in distal locking. Int J Comput Assist Radiol Surg 10:1395–1403, 2015

King F, Jayender J, Bhagavatula SK, Shyn PB, Pieper S, Kapur T, Lasso A, Fichtinger G: An immersive virtual reality environment for diagnostic imaging. J Med Robot Res 1:1640003, 2016

Douglas DB, Petricoin EF, Liotta L, Wilson E: D3D augmented reality imaging system: Proof of concept in mammography. Med Devices Evid Res 9:277–283, 2016

Hanna MG, Ahmed I, Nine J, Prajapati S, Pantanowitz L: Augmented reality technology using Microsoft HoloLens in anatomic pathology. Arch Pathol Lab Med, 2018. https://doi.org/10.5858/arpa.2017-0189-OA

Calì C, Baghabra J, Boges DJ, Holst GR, Kreshuk A, Hamprecht FA, Srinivasan M, Lehväslaiho H, Magistretti PJ: Three-dimensional immersive virtual reality for studying cellular compartments in 3D models from EM preparations of neural tissues. J Comp Neurol 524:23–38, 2016

Zheng LL, He L, Yu CQ: Mobile virtual reality for ophthalmic image display and diagnosis. J Mob Technol Med 4:35–38, 2015

Dascal J, Reid M, Ishak WW, Spiegel B, Recacho J, Rosen B, Danovitch I: Virtual reality and medical inpatients: A systematic review of randomized, controlled trials. Innov Clin Neurosci 14:14–21, 2017

Schmitt YS, Hoffman HG, Blough DK, Patterson DR, Jensen MP, Soltani M, Carrougher GJ, Nakamura D, Sharar SR: A randomized, controlled trial of immersive virtual reality analgesia during physical therapy for pediatric burn injuries. Burns 37:61–68, 2011

Carrougher GJ, Hoffman HG, Nakamura D, Lezotte D, Soltani M, Leahy L, Engrav LH, Patterson DR: The effect of virtual reality on pain and range of motion in adults with burn injuries. J Burn Care Res 30:785–791, 2009

Kipping B, Rodger S, Miller K, Kimble RM: Virtual reality for acute pain reduction in adolescents undergoing burn wound care: A prospective randomized controlled trial. Burns 38:650–657, 2012

Morris LD, Louw QA, Crous LC: Feasibility and potential effect of a low-cost virtual reality system on reducing pain and anxiety in adult burn injury patients during physiotherapy in a developing country. Burns 36:659–664, 2010

Hoffman HG, Patterson DR, Seibel E, Soltani M, Jewett-Leahy L, Sharar SR: Virtual reality pain control during burn wound debridement in the hydrotank. Clin J Pain 24:299–304, 2008

Patterson DR, Jensen MP, Wiechman SA, Sharar SR: Virtual reality hypnosis for pain associated with recovery from physical trauma. Int J Clin Exp Hypn 58:288–300, 2010

Li WH, Chung JO, Ho EK: The effectiveness of therapeutic play, using virtual reality computer games, in promoting the psychological well-being of children hospitalised with cancer. J Clin Nurs 20:2135–2143, 2011

Cesa GL, Manzoni GM, Bacchetta M, Castelnuovo G, Conti S, Gaggioli A, Mantovani F, Molinari E, Cárdenas-López G, Riva G: Virtual reality for enhancing the cognitive behavioral treatment of obesity with binge eating disorder: Randomized controlled study with one-year follow-up. J Med Internet Res 15:1–13, 2013

Manzoni GM, Pagnini F, Gorini A, Preziosa A, Castelnuovo G, Molinari E, Riva G: Can relaxation training reduce emotional eating in women with obesity? An exploratory study with 3 months of follow-up. J Am Diet Assoc 109:1427–1432, 2009

Larson EB, Ramaiya M, Zollman FS, Pacini S, Hsu N, Patton JL, Dvorkin AY: Tolerance of a virtual reality intervention for attention remediation in persons with severe TBI. Brain Inj 25:274–281, 2011

Saposnik G, Cohen LG, Mamdani M, Pooyania S, Ploughman M, Cheung D, Shaw J, Hall J, Nord P, Dukelow S, Nilanont Y, De los Rios F, Olmos L, Levin M, Teasell R, Cohen A, Thorpe K, Laupacis A, Bayley M: Efficacy and safety of non-immersive virtual reality exercising in stroke rehabilitation (EVREST): A randomised, multicentre, single-blind, controlled trial. Lancet Neurol 15:1019–1027, 2016

Silver B: Virtual reality versus reality in post-stroke rehabilitation. Lancet Neurol 15:996–997, 2016

Ambron E, Miller A, Kuchenbecker KJ, Laurel B, Coslett HB: Immersive low-cost virtual reality treatment for phantom limb pain: Evidence from two cases. Front Neurol 9:1–7, 2018

Freeman D, Reeve S, Robinson A, Ehlers A, Clark D, Spanlang B, Slater M: Virtual reality in the assessment, understanding, and treatment of mental health disorders. Psychol Med 47:2393–2400, 2017

Maples-Keller JL, Price M, Rauch S, Gerardi M, Rothbaum BO: Investigating relationships between PTSD symptom clusters within virtual reality exposure therapy for OEF/OIF veterans. Behav Ther 48:147–155, 2017

Miloff A, Lindner P, Hamilton W, Reuterskiöld L, Andersson G, Carlbring P: Single-session gamified virtual reality exposure therapy for spider phobia vs. traditional exposure therapy: Study protocol for a randomized controlled non-inferiority trial. Trials 17:1–8, 2016

Navarro-Haro MV, Hoffman HG, Garcia-Palacios A, Sampaio M, Alhalabi W, Hall K, Linehan M: The use of virtual reality to facilitate mindfulness skills training in dialectical behavioral therapy for borderline personality disorder: A case study. Front Psychol 7:1–9, 2016

Wiederhold BK, Wiederhold MD: Virtual Reality Therapy for Anxiety Disorders: Advances in Evaluation and Treatment. Washington, DC: American Psychological Association, 2005

Chepelev L, Giannopoulos A, Tang A, Mitsouras D, Rybicki FJ: Medical 3D printing: Methods to standardize terminology and report trends. 3D Print Med 3:1–9, 2017

Rybicki FJ: 3D printing in medicine: An introductory message from the editor-in-chief. 3D Print Med 1(1):1, 2015

Mitsouras D, Liacouras PC: 3D printing in medicine: 3D printing technologies. In: Rybicki FJ, Grant GT Eds. 3D Printing in Medicine: A Practical Guide for Medical Professionals. Cham: Springer International Publishing, 2017, pp. 5–22

American Association of Physicists in Medicine: Assessment of display performance for medical imaging systems. Med Phys:1–156, 2005

Norweck JT, Seibert JA, Andriole KP, Clunie DA, Curran BH, Flynn MJ, Krupinski E, Lieto RP, Peck DJ, Mian TA, Wyatt M: ACR-AAPM-SIIM technical standard for electronic practice of medical imaging. J Digit Imaging 26:38–52, 2013

Drebin RA, Carpenter L, Hanrahan P: Volume rendering. In: Proc. 15th Annu. Conf. Comput. Graph. Interact. Tech. (SIGGRAPH ’88) 22, pp. 65–74, 1988

Levoy M: Volume rendering: Display of surfaces from volume data. IEEE Comput Graph Appl 8:29–37, 1988

Stegmaier S, Strengert M, Klein T, Ertl T: A simple and flexible volume rendering framework for graphics-hardware-based raycasting. Fourth Int. Work. Vol. Graph, pp. 187–241, 2005

Ljung P, Krüger J, Gröller E, Hadwiger M, Hansen CD, Ynnerman A: State of the art in transfer functions for direct volume rendering. Comput Graph Forum 35:669–691, 2016

Arens S, Domik G: A survey of transfer functions suitable for volume rendering. IEEE/EG Int. Symp. Vol. Graph., pp. 77–83, 2010

Krüger J, Westermann R: Acceleration techniques for GPU-based volume rendering. IEEE Vis, pp. 287–292, 2003

Vizua3D. Available at https://vizua3d.com. Accessed February 2018

Cite this article

Sutherland, J., Belec, J., Sheikh, A. et al. Applying Modern Virtual and Augmented Reality Technologies to Medical Images and Models. J Digit Imaging 32, 38–53 (2019). https://doi.org/10.1007/s10278-018-0122-7

DOI: https://doi.org/10.1007/s10278-018-0122-7