“There is no theory for the initial value problem for compressible flows in two space dimensions once shocks show up, much less in three space dimensions. This is a scientific scandal and a challenge.”

P. D. Lax, 2007 Gibbs Lecture [48].

Abstract

Entropy solutions have been widely accepted as the suitable solution framework for systems of conservation laws in several space dimensions. However, recent results in De Lellis and Székelyhidi Jr (Ann Math 170(3):1417–1436, 2009) and Chiodaroli et al. (2013) have demonstrated that entropy solutions may not be unique. In this paper, we present numerical evidence that state-of-the-art numerical schemes need not converge to an entropy solution of systems of conservation laws as the mesh is refined. Combining these two facts, we argue that entropy solutions may not be suitable as a solution framework for systems of conservation laws, particularly in several space dimensions. We advocate entropy measure-valued solutions, first proposed by DiPerna, as the appropriate solution paradigm for systems of conservation laws. To this end, we present a detailed numerical procedure which constructs stable approximations to entropy measure-valued solutions, and provide sufficient conditions that guarantee that these approximations converge to an entropy measure-valued solution as the mesh is refined, thus providing a viable numerical framework for systems of conservation laws in several space dimensions. A large number of numerical experiments that illustrate the proposed paradigm are presented and are utilized to examine several interesting properties of the computed entropy measure-valued solutions.

1 Introduction

A large number of problems in physics and engineering are modeled by systems of conservation laws

\[ \partial_t u + \nabla_x \cdot f(u) = 0, \tag{1a} \]

\[ u(x,0) = u_0(x). \tag{1b} \]

Here, the unknown \(u = u(x,t): \mathbb {R}^d\times \mathbb {R}_+ \rightarrow \mathbb {R}^N\) is the vector of conserved variables and \(f = (f^1, \ldots , f^d): \mathbb {R}^N \rightarrow \mathbb {R}^{N\times d}\) is the flux function. We denote \(\mathbb {R}_+ := [0,\infty )\).

The system (1a) is hyperbolic if the flux Jacobian \(\partial _u(f \cdot n)\) has real eigenvalues for all \(n \in \mathbb {R}^d\) with \(|n| = 1\). Examples of hyperbolic systems of conservation laws include the shallow water equations of oceanography, the Euler equations of gas dynamics, the magnetohydrodynamics (MHD) equations of plasma physics, the equations of nonlinear elastodynamics and the Einstein equations of general relativity. We refer to [16, 37] for more theory on hyperbolic conservation laws.
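To make the hyperbolicity condition concrete, the following minimal sketch checks it for the one-dimensional shallow water equations, one of the examples listed above. It assumes conserved variables \((h, hu)\), the standard flux \((hu,\ hu^2/h + g h^2/2)\) and \(g = 9.81\); the function names are illustrative and not taken from any particular code.

```python
import math


def shallow_water_jacobian(h, hu, g=9.81):
    """Flux Jacobian of the 1D shallow water equations in conserved variables (h, hu)."""
    u = hu / h
    return [[0.0, 1.0],
            [g * h - u * u, 2.0 * u]]


def eigenvalues_2x2(a):
    """Eigenvalues of a real 2x2 matrix, or None if they are not real."""
    trace = a[0][0] + a[1][1]
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    disc = trace * trace - 4.0 * det
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    return ((trace - root) / 2.0, (trace + root) / 2.0)


# Water depth h = 2 and momentum hu = 1, i.e. velocity u = 0.5.
lam = eigenvalues_2x2(shallow_water_jacobian(h=2.0, hu=1.0))
```

For positive depth the discriminant equals \(4gh > 0\), so the eigenvalues \(u \pm \sqrt{gh}\) are real and the system is hyperbolic in this one-dimensional setting.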

1.1 Mathematical Framework

It is well known that solutions of the Cauchy problem (1) can develop discontinuities such as shock waves in finite time, even when the initial data is smooth. Hence, solutions of hyperbolic systems of conservation laws (1) are sought in the weak (distributional) sense.

Definition 1

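A function \(u\in L^\infty (\mathbb {R}^d\times \mathbb {R}_+,\mathbb {R}^N)\) is a weak solution of (1) if it satisfies (1) in the sense of distributions:

\[ \int_{\mathbb{R}_+}\int_{\mathbb{R}^d} \bigl(\partial_t\varphi\, u + \nabla_x\varphi\cdot f(u)\bigr)\,\hbox{d}x\,\hbox{d}t + \int_{\mathbb{R}^d} \varphi(x,0)\,u_0(x)\,\hbox{d}x = 0 \tag{2} \]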

for all test functions \(\varphi \in C^1_c(\mathbb {R}^d\times \mathbb {R}_+)\).

Weak solutions are in general not unique: Infinitely many weak solutions may exist after the formation of discontinuities. Thus, to obtain uniqueness, additional admissibility criteria have to be imposed. These admissibility criteria take the form of entropy conditions, which are formulated in terms of entropy pairs.

Definition 2

A pair of functions \((\eta ,q)\) with \(\eta :\mathbb {R}^N\rightarrow \mathbb {R}\), \(q:\mathbb {R}^N\rightarrow \mathbb {R}^d\) is called an entropy pair if \(\eta \) is convex and q satisfies the compatibility condition \(q' = \eta ' \cdot f'\).

Definition 3

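A weak solution u of (1) is an entropy solution if the entropy inequality

\[ \partial_t \eta(u) + \nabla_x\cdot q(u) \leqslant 0 \]

is satisfied for all entropy pairs \((\eta ,q)\), that is, if

\[ \int_{\mathbb{R}_+}\int_{\mathbb{R}^d} \bigl(\partial_t\varphi\, \eta(u) + \nabla_x\varphi\cdot q(u)\bigr)\,\hbox{d}x\,\hbox{d}t + \int_{\mathbb{R}^d} \varphi(x,0)\,\eta(u_0(x))\,\hbox{d}x \geqslant 0 \tag{3} \]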

for all nonnegative test functions \(0\leqslant \varphi \in C^1_c(\mathbb {R}^d\times \mathbb {R}_+)\).

For the special case of scalar conservation laws (\(N=1\)), every convex function \(\eta \) gives rise to an entropy pair by letting \(q(u) := \int ^u \eta '(\xi ) f'(\xi ) \hbox {d}\xi \). This rich family of entropy pairs was used by Kruzkov [45] to obtain existence, uniqueness and stability of solutions for scalar conservation laws.
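As a worked instance of this construction (an illustration chosen here, not an example taken from the references), consider Burgers' flux \(f(u) = u^2/2\) together with the convex entropy \(\eta (u) = u^2/2\). The formula for the entropy flux gives

\[ q(u) = \int_0^u \eta'(\xi)\, f'(\xi)\, \hbox{d}\xi = \int_0^u \xi^2\, \hbox{d}\xi = \frac{u^3}{3}, \]

and for a smooth solution the chain rule yields

\[ \partial_t \eta(u) + \partial_x q(u) = u\,\partial_t u + u^2\,\partial_x u = u\,\bigl(\partial_t u + u\,\partial_x u\bigr) = 0. \]

Hence the entropy inequality holds with equality wherever u is smooth, and it can be strict only where u fails to be smooth, for instance across shocks.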

Corresponding (global) well-posedness results for systems of conservation laws are much harder to obtain. Lax [47] showed existence and stability of entropy solutions for one-dimensional systems of conservation laws for the special case of Riemann initial data. Existence results for the Cauchy problem for one-dimensional systems were obtained by Glimm [35] using the random choice method and by Bianchini and Bressan [5] with the vanishing viscosity method. Uniqueness and stability results for one-dimensional systems were shown by Bressan et al. [9]. All of these results rely on an assumption that the initial data is “sufficiently small,” i.e., lies sufficiently close to a constant state.

On the other hand, no global existence and uniqueness (stability) results are currently available for a generic system of conservation laws in several space dimensions. In fact, recent results (see [17–19] and references therein) provide counterexamples which illustrate that entropy solutions for multi-dimensional systems of conservation laws are not necessarily unique. These results raise serious questions as to whether the notion of entropy solutions is too restricted to serve as the standard solution framework for systems of conservation laws. It can be argued that one needs to impose even further admissibility criteria, in addition to the entropy inequality (3), to single out a solution among the infinitely many solutions constructed in [17–19]. One possible approach in determining these selection criteria is to employ suitable numerical schemes and observe which, if any, of the entropy solutions are approximated by these schemes.

1.2 Numerical Schemes

Numerical schemes have played a leading role in the study of systems of conservation laws, and a wide variety of numerical methods for approximating (1) are currently available. These include the very popular and highly successful numerical framework of finite volume and finite difference schemes, based on approximate Riemann solvers or on Riemann-solver-free central differencing (see [10, 13, 37, 50]) which utilize TVD [38], ENO [39] or WENO [42] non-oscillatory reconstruction techniques and strong stability preserving (SSP) Runge–Kutta time integrators [34]. Another popular alternative is the discontinuous Galerkin finite element method [14].

The primary goal in the analysis of numerical schemes approximating (1) is proving convergence to an entropy solution as the mesh is refined. This issue has been addressed in the special case of (first-order) monotone schemes for scalar conservation laws (see [15] for the one-dimensional case and [12] for multiple dimensions) using the TVD property. Corresponding convergence results for (formally) arbitrarily high-order accurate finite difference schemes for scalar conservation laws were obtained recently in [28], see also [27]. Convergence results for (arbitrarily high order) space time DG discretization for scalar conservation laws were obtained in [41] and for the spectral viscosity method in [62].

The question of convergence of numerical schemes for systems of conservation laws is significantly more difficult. Currently, there are no rigorous proofs of convergence for any kind of finite volume (difference) and finite element methods to the entropy solutions of a generic system of conservation laws, even in one space dimension. Convergence aside, even the stability of numerical approximations to systems of conservation laws is mostly open. The only notion of numerical stability for systems of conservation laws that has been analyzed rigorously so far is that of entropy stability—the design of numerical approximations that satisfy a discrete version of the entropy inequality. Such schemes have been devised in [27, 40, 60, 61]. However, entropy stability may not suffice to ensure the convergence of approximate solutions.

1.3 Two Numerical Experiments

Given the lack of rigorous stability and convergence results for systems of conservation laws, it has become customary in the field to rely on numerical benchmark tests to demonstrate the convergence of the scheme empirically. One such benchmark test case is the radial Sod shock tube [50].

1.3.1 Sod Shock Tube

In this test, we consider the compressible Euler equations of gas dynamics in two space dimensions (see Sect. 6) as a prototypical hyperbolic system of conservation laws. The initial data for the two-dimensional version of the well-known Sod shock tube problem is given by

with \(\rho _L = p_L = 3\), \(\rho _R=p_R=1\), \(w^1= w^2= 0\). The computational domain is \([-0.5,0.5]^2\), with \(r_0 = 0.15\), and we use periodic boundary conditions.

To begin with, we consider a perturbed version of the Sod shock tube by setting the initial data

where \(\varepsilon >0\) is a small amplitude of the perturbation \(X(\cdot )\) associated with the following state variables—\(\rho ,p\) and \(w=(w^1,w^2)^\top \),

First we set \(\varepsilon = 0.01\) and compute the approximate solutions of the two-dimensional Euler equations (31) with the second-order TeCNO2 finite difference scheme of [27]. In Fig. 1, we present the computed densities at time \(t=0.24\) for three different mesh resolutions. The figure clearly indicates convergence as the mesh is refined. To further quantify this convergence, we compute the difference in the approximate solutions on two successive mesh resolutions:

and plot the results for density in Fig. 2a. The results clearly indicate that the numerical approximations form a Cauchy sequence in \(L^1\) and hence converge. The same numerical experiment was performed with a different scheme: a second-order high-resolution scheme based on an HLLC solver using the MC limiter, implemented in the FISH code [44]. Similar convergence results were obtained (omitted here for brevity).
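The successive-resolution comparison described above can be sketched as follows. This is a minimal illustration under stated assumptions: the displayed formula (7) is taken to be a discrete \(L^1\) norm of the difference between solutions on two consecutive grids, the fine solution is transferred to the coarse grid by averaging 2×2 blocks of cells, and a smooth analytic field (`sample`, a hypothetical stand-in) replaces the computed density. None of the function names come from the codes cited in the text.

```python
import math


def restrict(fine):
    """Average 2x2 blocks of a fine cell-average array onto the next coarser grid."""
    n = len(fine) // 2
    return [[0.25 * (fine[2 * i][2 * j] + fine[2 * i + 1][2 * j]
                     + fine[2 * i][2 * j + 1] + fine[2 * i + 1][2 * j + 1])
             for j in range(n)] for i in range(n)]


def l1_difference(coarse, fine, domain_area=1.0):
    """Discrete L^1 distance between a coarse field and the restricted fine field."""
    n = len(coarse)
    cell_area = domain_area / (n * n)
    restricted = restrict(fine)
    return cell_area * sum(abs(coarse[i][j] - restricted[i][j])
                           for i in range(n) for j in range(n))


def sample(n):
    """Stand-in for a computed density: a smooth field at the cell centers of an n x n grid."""
    return [[math.sin(2.0 * math.pi * (i + 0.5) / n) * math.cos(2.0 * math.pi * (j + 0.5) / n)
             for j in range(n)] for i in range(n)]


resolutions = [8, 16, 32, 64]
# One difference per pair of consecutive resolutions (n, 2n).
cauchy_diffs = [l1_difference(sample(n), sample(2 * n)) for n in resolutions[:-1]]
```

For a sequence that converges in \(L^1\), these differences shrink as the grid is refined; for the smooth stand-in used here they shrink roughly by a factor of four per refinement. A sequence whose differences stagnate or grow shows no sign of forming a Cauchy sequence at the tested resolutions.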

Fig. 2 \(L^1\) differences in density \(\rho \) at time \(t=0.24\) for the Sod shock tube problem with initial data (5). (a) \(L^1\) Cauchy rates (7) (y-axis) in the density at time \(t=0.24\) versus number of gridpoints (x-axis). (b) \(L^1\) error with respect to the unperturbed solution (4) (y-axis) versus the perturbation parameter \(\varepsilon \) (x-axis)

Next, we investigate numerically the issue of stability of this system with respect to perturbations in the initial data. To this end, we use exactly the same setup as in the previous numerical experiment but let the perturbation amplitude \(\varepsilon \rightarrow 0\) in (5) and plot in Fig. 2b the error in computed density (at a fixed mesh resolution of \(1024^2\) points) for successively lower values of \(\varepsilon \). The reference solution is computed with the finest mesh resolution of \(1024^2\) using the unperturbed initial data (4). The results clearly show convergence to the unperturbed solution in the \(\varepsilon \rightarrow 0\) limit.

The above numerical example suggests convergence of the approximate numerical solutions to an entropy solution, at least for some benchmark test cases. The computed solutions were observed to be stable with respect to perturbations of the initial data. In the literature, it is not uncommon to extrapolate from benchmark test cases like the Sod shock tube and expect that the numerical approximations converge as the mesh is refined for all possible sets of flow configurations.

1.3.2 Kelvin–Helmholtz Problem

We question the universality of the above observed empirical convergence and stability results by considering the following set of initial data for the two-dimensional Euler equations (see Sect. 6):

with \(\rho _L = 2\), \(\rho _R = 1\), \(w^1_L = -0.5\), \(w^1_R = 0.5\), \(w^2_L=w^2_R=0\) and \(p_L=p_R=2.5\). It is readily seen that this is a steady state, i.e., that \(u(x,t) \equiv u_0(x)\) is an entropy solution.

Next, we add the same perturbation (5) to the initial data (8) and compute approximate solutions in the computational domain \([0,1]^2\) with periodic boundary conditions, for different \({\Delta x}>0\). A series of approximate solutions using the TeCNO2 scheme of [27] and perturbation amplitude \(\varepsilon =0.01\) is shown in Fig. 3. The results show that there is no sign of any convergence as the mesh is refined. As a matter of fact, structures at smaller and smaller scales are formed with mesh refinement. This lack of convergence is quantified by plotting the differences between successive mesh levels (7) for the density in Fig. 4a. The results show that as the mesh is refined, the approximate solutions do not seem to form a Cauchy sequence in \(L^1\) (at least for the mesh resolutions that have been tested) and hence may not converge. The results presented in Figs. 3 and 4a are computed with the TeCNO2 scheme of [27]. Very similar results were also obtained with the FISH code [44] and the ALSVID finite volume code [31]. Furthermore, convergence in even weaker \(W^{-1,p}\), \(1<p\leqslant \infty \), norms was also not observed. Thus, one cannot deduce convergence of even bulk properties of the flow, such as the average domain temperature, in this particular case.

Fig. 4 \(L^1\) differences in density \(\rho \) at time \(t=2\) for the Kelvin–Helmholtz problem (8). (a) \(L^1\) Cauchy rates (7) (y-axis) versus number of gridpoints (x-axis) for the perturbed problem (5), (8) with \(\varepsilon = 0.01\). (b) \(L^1\) error with respect to the steady-state solution (8) of the unperturbed Kelvin–Helmholtz problem (y-axis) versus perturbation parameter \(\varepsilon \), at a fixed mesh with \(1024^2\) points

Finally, we check stability of the numerical solutions as the perturbation parameter \(\varepsilon \rightarrow 0\). We compute numerical approximations at a fixed fine grid resolution of \(1024^2\) points with successively lower values of \(\varepsilon \). These results are compared with the steady-state solution (8) and are presented in Fig. 4b. The \(L^1\) difference results clearly show that there is no convergence to the steady-state solution (8) as \(\varepsilon \rightarrow 0\).

1.4 A Different Notion of Solutions

The above experiment clearly demonstrates that in general, a whole host of state-of-the-art numerical schemes do not seem to converge (even for very fine mesh resolutions) to an entropy solution for multi-dimensional systems of conservation laws. In fact, structures at smaller and smaller scales are formed as the mesh is refined. This fact does not imply that the numerical approximations are at fault (given that all the tested schemes, based on different design philosophies, behaved in the same manner), but rather, that the notion of entropy solutions does not adequately describe the complex flow phenomena that are modeled by systems of conservation laws such as the compressible Euler equations.

When combined with the recent results on the non-uniqueness of entropy solutions of systems of conservation laws ([17, 18] and references therein), our numerical evidence strongly suggests, in more than one way, that entropy solutions need not be an appropriate solution framework for systems of conservation laws. In particular, entropy solutions may not suffice to characterize the limits of numerical approximations to conservation laws in a stable manner.

Based on the fact that oscillations persist on finer and finer scales in numerical approximations of (1) shown in Fig. 3, we focus on the alternative concept of entropy measure-valued solutions, introduced by DiPerna in [22], see also [23]. In this framework, solutions of the system of conservation laws (1) are no longer integrable functions, but parameterized probability measures, or Young measures, which are able to represent the limit behavior of sequences of oscillatory functions. This solution concept builds on the work of Tartar [63] on characterizing the weak limits of bounded sequences of functions. More recently, Glimm and co-workers ([11, 51] and references therein) have also hypothesized that entropy measure-valued solutions are the appropriate notion of solutions for hyperbolic conservation laws, particularly in several space dimensions.

1.5 Aims and Scope of the Current Paper

In the current paper:

- We consider entropy measure-valued solutions for the Cauchy problem (1), in the sense of DiPerna [22]. We study the existence and stability of the entropy measure-valued solutions.

- The main aim of the current paper is to approximate entropy measure-valued solutions numerically. To this end, we propose an algorithm based on the realization of Young measures as the law of random fields and approximate the solution random fields with suitable finite difference (volume) numerical schemes. We propose a set of sufficient conditions that a scheme has to satisfy in order to converge to an entropy measure-valued solution as the mesh is refined. Examples of such convergent schemes are also provided. This provides a viable and rigorous numerical framework for multi-dimensional systems of conservation laws, within the framework of entropy measure-valued solutions.

- We present a large number of numerical experiments to validate the proposed theory. The numerical approximations are also employed to study the stability as well as other interesting properties of entropy measure-valued solutions.

The rest of this paper is organized as follows: In Sect. 2, we provide a short but self-contained description of Young measures (see also Appendix 1) and then define entropy measure-valued solutions for a generalized Cauchy problem, corresponding to the system of conservation laws (1). The well posedness of the entropy measure-valued solutions is discussed in Sect. 3. In Sect. 4, we discuss finite difference schemes approximating (1) and propose abstract criteria that these schemes have to satisfy in order to converge to entropy measure-valued solutions. Two schemes satisfying the abstract convergence framework are presented in Sect. 5. In Sect. 6, we present numerical experiments that illustrate the convergence properties of the schemes and discuss the stability and related properties of entropy measure-valued solutions.

2 Young Measures and Entropy Measure-Valued Solutions

A Young measure on a set \(D\subset \mathbb {R}^k\) (in our setting, \(D=\mathbb {R}^d\times \mathbb {R}_+\) will represent space–time) is a function \(\nu \) which assigns to every point \(y\in D\) a probability measure \(\nu _y \in \mathcal {P}(\mathbb {R}^N)\) on the phase space \(\mathbb {R}^N\). The set of all Young measures from D to \(\mathbb {R}^N\) is denoted by \(\mathbf {Y}(D,\mathbb {R}^N)\). We can compose a Young measure with a continuous function g by defining \(\left\langle \nu _y, g\right\rangle := \int _{\mathbb {R}^N}g(\xi )\hbox {d}\nu _y(\xi )\), the expectation of g with respect to the probability measure \(\nu _y\). Note that this defines a real-valued function of \(y\in D\).

Every measurable function \(u : D \rightarrow \mathbb {R}^N\) gives rise to a Young measure by letting

\[ \nu_y := \delta_{u(y)}, \qquad y \in D, \]

where \(\delta _\xi \) is the Dirac measure centered at \(\xi \in \mathbb {R}^N\). Such Young measures are called atomic.

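If \(\nu ^1,\nu ^2,\ldots \) is a sequence of Young measures, then there are two notions of convergence. We say that \(\nu ^n\) converges weak* to a Young measure \(\nu \) (written \(\nu ^n \rightharpoonup \nu \)) if \(\left\langle \nu ^n, g\right\rangle \overset{*}{\rightharpoonup }\left\langle \nu , g\right\rangle \) in \(L^\infty (D)\) for all \(g\in C_0(\mathbb {R}^N)\), that is, if

\[ \int_D \varphi(y) \left\langle \nu^n_y, g\right\rangle \hbox{d}y \rightarrow \int_D \varphi(y) \left\langle \nu_y, g\right\rangle \hbox{d}y \quad \text{for all } \varphi \in L^1(D) \text{ and } g \in C_0(\mathbb{R}^N). \]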

By the fundamental theorem of Young measures (see Theorem 13), any suitably bounded sequence of Young measures has a weak* convergent subsequence.
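The following minimal sketch illustrates why Young measures, rather than functions, describe the limits of oscillatory sequences. The sequence \(u_n(x) = \mathrm{sign}(\sin (2\pi n x))\) on \([0,1]\) is an illustrative choice made here, not one of the experiments above. Its weak* limit is the zero function, but for a nonlinear g the local averages of \(g(u_n)\) approach \(\left\langle \nu _x, g\right\rangle \) with \(\nu _x = \tfrac{1}{2}\delta _{-1} + \tfrac{1}{2}\delta _{1}\), not \(g(0)\). The function names and the midpoint quadrature are assumptions of this sketch.

```python
import math


def u_n(x, n):
    """Square wave with n periods on [0, 1], taking the values +1 and -1."""
    return 1.0 if math.sin(2.0 * math.pi * n * x) >= 0.0 else -1.0


def local_average(g, n, a, b, samples=20000):
    """Midpoint-rule approximation of the average of g(u_n) over the interval [a, b]."""
    h = (b - a) / samples
    return sum(g(u_n(a + (k + 0.5) * h, n)) for k in range(samples)) / samples


def young_measure_expectation(g):
    """<nu, g> for the limit measure nu = (delta_{-1} + delta_{+1}) / 2."""
    return 0.5 * (g(-1.0) + g(1.0))


avg_identity = local_average(lambda v: v, 64, 0.2, 0.5)
avg_square = local_average(lambda v: v * v, 64, 0.2, 0.5)
avg_exp = local_average(math.exp, 64, 0.2, 0.5)
```

Here `avg_identity` is close to 0 while `avg_square` equals 1, so the weak* limit of \(u_n^2\) is not the square of the weak* limit of \(u_n\); the Young measure records exactly this extra information.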

We say that the sequence \(\{\nu ^n\}\) converges strongly to \(\nu \) (written \(\nu ^n \rightarrow \nu \)) if

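for some \(p\in [1,\infty )\), where \(W_p\) is the p-Wasserstein distance

\[ W_p(\mu,\rho) := \inf_{\pi \in \varPi(\mu,\rho)} \Bigl( \int_{\mathbb{R}^N\times\mathbb{R}^N} |\xi - \zeta|^p \,\hbox{d}\pi(\xi,\zeta) \Bigr)^{1/p}, \]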

which metrizes the topology of weak convergence on the set \(\mathcal {P}^p(\mathbb {R}^N):=\left\{ \mu \in \mathcal {P}(\mathbb {R}^N) : \right. \left. \langle \mu , |\xi |^p\rangle <\infty \right\} \). Here, \(\varPi (\mu ,\rho )\) is the set of probability measures on \(\mathbb {R}^N\times \mathbb {R}^N\) with marginals \(\mu ,\rho \in \mathcal {P}^p(\mathbb {R}^N)\) (see also Appendix A.1.3).
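For measures on the real line the Wasserstein distance can be computed explicitly, which gives a simple way to experiment with this notion. The sketch below covers only the special case \(N=1\) with two empirical measures that have the same number of equally weighted atoms, for which the optimal coupling pairs the sorted samples. The function name `wasserstein_1d` is hypothetical.

```python
def wasserstein_1d(xs, ys, p=1):
    """p-Wasserstein distance between two empirical measures on the real line.

    Both measures are assumed to consist of equally weighted atoms, with the
    same number of atoms in each; the optimal coupling then matches sorted samples.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two non-empty samples of equal size")
    if p < 1:
        raise ValueError("p must be at least 1")
    pairs = zip(sorted(xs), sorted(ys))
    cost = sum(abs(a - b) ** p for a, b in pairs) / len(xs)
    return cost ** (1.0 / p)


# Shifting every atom by one unit moves the measure a distance of exactly one.
shift_distance = wasserstein_1d([0.0, 1.0], [1.0, 2.0], p=1)
# Distance between a two-point measure and a Dirac mass at its mean.
spread_distance = wasserstein_1d([0.0, 2.0], [1.0, 1.0], p=2)
```

In the second call the distance equals the standard deviation of the two-point measure, which reflects the general fact that \(W_2\) between a measure and the Dirac mass at its mean is the standard deviation.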

We refer to Appendix 1 for a more rigorous and detailed introduction to Young measures.

2.1 The Measure-Valued (MV) Cauchy Problem

As mentioned in the introduction, we will seek a more general (weaker) notion of solutions to the Cauchy problem for a system of conservation laws (1) by requiring that the solutions be Young measures, instead of integrable functions. Equipped with the notation of the previous section, we propose the following generalized Cauchy problem (corresponding to the system (1)): find a \(\nu \in \mathbf {Y}(\mathbb {R}^d\times \mathbb {R}_+,\mathbb {R}^N)\) such that

\[ \partial_t \left\langle \nu, {{\mathrm{id}}}\right\rangle + \nabla_x \cdot \left\langle \nu, f\right\rangle = 0, \qquad \nu_{(x,0)} = \sigma_x, \tag{11} \]

where \(\sigma \in \mathbf {Y}(\mathbb {R}^d,\mathbb {R}^N)\) is the initial measure-valued data and \({{\mathrm{id}}}(\xi )=\xi \) is the identity function on \(\mathbb {R}^N\). The above MV Cauchy problem is interpreted as follows.

Definition 4

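(DiPerna [22]) A Young measure \(\nu \in \mathbf {Y}(\mathbb {R}^d\times \mathbb {R}_+,\mathbb {R}^N)\) is a measure-valued (MV) solution of (11) if (11) holds in the sense of distributions, i.e.,

\[ \int_{\mathbb{R}_+}\int_{\mathbb{R}^d} \bigl(\partial_t\varphi \left\langle \nu_{(x,t)}, {{\mathrm{id}}}\right\rangle + \nabla_x\varphi\cdot \left\langle \nu_{(x,t)}, f\right\rangle\bigr)\,\hbox{d}x\,\hbox{d}t + \int_{\mathbb{R}^d} \varphi(x,0) \left\langle \sigma_x, {{\mathrm{id}}}\right\rangle \hbox{d}x = 0 \tag{12} \]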

for all test functions \(\varphi \in C^1_c(\mathbb {R}^d\times \mathbb {R}_+)\).

Definition 5

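(DiPerna [22]) A Young measure \(\nu \in \mathbf {Y}(\mathbb {R}^d\times \mathbb {R}_+,\mathbb {R}^N)\) is an entropy measure-valued (EMV) solution of (11) if in addition to being a measure-valued solution (satisfying (12)), it also satisfies

\[ \partial_t \left\langle \nu, \eta\right\rangle + \nabla_x \cdot \left\langle \nu, q\right\rangle \leqslant 0 \tag{13} \]

for every entropy pair \((\eta ,q)\), that is, if

\[ \int_{\mathbb{R}_+}\int_{\mathbb{R}^d} \bigl(\partial_t\varphi \left\langle \nu_{(x,t)}, \eta\right\rangle + \nabla_x\varphi\cdot \left\langle \nu_{(x,t)}, q\right\rangle\bigr)\,\hbox{d}x\,\hbox{d}t + \int_{\mathbb{R}^d} \varphi(x,0) \left\langle \sigma_x, \eta\right\rangle \hbox{d}x \geqslant 0 \tag{14} \]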

for all nonnegative test functions \(0\leqslant \varphi \in C^1_c(\mathbb {R}^d\times \mathbb {R}_+)\).

Remark 1

The formulations (12) and (14) impose the initial data \(\sigma \) in a very weak manner. The weak formulation (12) requires, roughly speaking, that \(\lim _{t\rightarrow 0} \left\langle \nu _{(x,t)}, {{\mathrm{id}}}\right\rangle = \left\langle \sigma _x, {{\mathrm{id}}}\right\rangle \), i.e., that the barycenters (or mean) of \(\nu _{(x,0)}\) and \(\sigma _x\) should coincide. The inequality (14) implies that \(\limsup _{t\rightarrow 0} \left\langle \nu _{(x,t)}, \eta \right\rangle \leqslant \left\langle \sigma _x, \eta \right\rangle \) (in Theorem 2, we require a slightly stronger form of this inequality). The requirement that the (strictly) \(\eta \)-weighted barycenters of two measures should “coincide” (up to the entropic inequality) will imply that the measures themselves coincide only if \(\sigma _x\) is a Dirac mass. Correspondingly, our condition for initial data implies equality at \(t=0\) only when the initial data is atomic. This is precisely the setting which we choose to focus on in the present paper. In a forthcoming paper [25], we consider the question of uniqueness when the initial data is non-atomic, and how to interpret the initial condition in this more complex setting.

We denote by \(\mathcal {E}(\sigma )\) the set of all entropy MV solutions of the MV Cauchy problem (11) with initial MV data \(\sigma \). It is readily seen that every entropy solution u of (1) gives rise to an EMV solution of (11) with \(\sigma = \delta _{u_0}\), by defining \(\nu _{(x,t)} := \delta _{u(x,t)}\), the atomic Young measure concentrated at u. Thus, the set \(\mathcal {E}(\sigma )\) is at least as large as the set of entropy solutions of (1) whenever \(\sigma \) is atomic, \(\sigma = \delta _{u_0}\).

Remark 2

Although our focus in the current paper will be on the specific case of atomic initial data, we still consider the more general setting of the MV Cauchy problem (11) as it enables us to formulate numerical approximations in a unified manner.

In practice, the initial data \(u_0\) in (1a) is obtained from a measurement or observation process. Since measurements (observations) are intrinsically uncertain, it is customary to model this initial uncertainty statistically by considering the initial data \(u_0\) as a random field. Given the fact that the law of a random field is a Young measure, we can also model this initial uncertainty with non-atomic initial measures in the measure-valued (MV) Cauchy problem (11). Thus, our formulation suffices to include various formalisms for uncertainty quantification of conservation laws, i.e., the determination of solution uncertainty given uncertain initial data. See [52–54] and references therein for an extensive discussion on uncertainty quantification for conservation laws.
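The link between random fields and Young measures suggests a simple sampling procedure, sketched below under explicit assumptions. Realizations of a random initial field are drawn, each is evolved with a finite volume scheme, and the empirical measure of the resulting ensemble at each grid cell serves as an approximation of \(\nu _{(x,t)}\). Everything specific here is an assumption of this sketch and is not taken from the text above: the Monte Carlo sampling, the scalar Burgers flux, the Rusanov numerical flux, the phase-shifted sine wave used as random initial data, and all function names.

```python
import math
import random


def rusanov_step(u, dx, dt):
    """Advance Burgers' equation by one forward-Euler Rusanov step on a periodic grid."""
    n = len(u)
    flux = []
    for i in range(n):
        ul, ur = u[i], u[(i + 1) % n]
        speed = max(abs(ul), abs(ur))
        # Numerical flux at the interface between cell i and cell i + 1.
        flux.append(0.25 * (ul * ul + ur * ur) - 0.5 * speed * (ur - ul))
    # For i = 0, flux[i - 1] wraps around to the last interface (periodicity).
    return [u[i] - dt / dx * (flux[i] - flux[i - 1]) for i in range(n)]


def solve(u0, t_end, dx, cfl=0.4):
    """Evolve the cell averages u0 up to time t_end with a CFL-limited time step."""
    u, t = list(u0), 0.0
    while t < t_end:
        max_speed = max(abs(v) for v in u)
        dt = t_end - t if max_speed == 0.0 else min(cfl * dx / max_speed, t_end - t)
        u = rusanov_step(u, dx, dt)
        t += dt
    return u


def sample_initial_data(n, eps, rng):
    """One realization: a sine wave shifted by eps * omega, with omega uniform in [-1, 1]."""
    omega = rng.uniform(-1.0, 1.0)
    return [math.sin(2.0 * math.pi * ((i + 0.5) / n + eps * omega)) for i in range(n)]


def ensemble_statistics(n=64, samples=40, eps=0.05, t_end=0.3, seed=0):
    """Per-cell mean and variance of the empirical measure of the ensemble at time t_end."""
    rng = random.Random(seed)
    runs = [solve(sample_initial_data(n, eps, rng), t_end, 1.0 / n) for _ in range(samples)]
    mean = [sum(run[i] for run in runs) / samples for i in range(n)]
    var = [sum((run[i] - mean[i]) ** 2 for run in runs) / samples for i in range(n)]
    return mean, var


mean, var = ensemble_statistics()
```

The mean corresponds to \(\left\langle \nu _{(x,t)}, {{\mathrm{id}}}\right\rangle \) and the variance to \(\left\langle \nu _{(x,t)}, \xi ^2\right\rangle - \left\langle \nu _{(x,t)}, {{\mathrm{id}}}\right\rangle ^2\); a vanishing variance at a point indicates that the approximate measure there is close to atomic.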

3 Well Posedness of EMV Solutions

The questions of existence, uniqueness and stability of EMV solutions of (11) are of fundamental significance. We start with a discussion of the scalar case.

3.1 Scalar Conservation Laws

The question of existence of EMV solutions for scalar conservation laws was considered by DiPerna [22]. We slightly generalize his result for a non-atomic initial data as follows.

Theorem 1

Consider the MV Cauchy problem (11) for a scalar conservation law. If the initial data \(\sigma \) is uniformly bounded (see Appendix A.2.2), then there exists an EMV solution of (11).

Proof

By Proposition 2 in Appendix A.3.2, there exists a probability space \((\varOmega ,\mathcal {F},P)\) and a random field \(u_0:\varOmega \times \mathbb {R}^d\rightarrow \mathbb {R}\) with law \(\sigma \). By the uniform boundedness of \(\sigma \), we have \(\Vert u_0\Vert _{L^\infty (\varOmega \times \mathbb {R}^d)} < \infty \).

For each \(\omega \in \varOmega \), let \(u(\omega ;x,t)\) be the entropy solution of (1) with initial data \(u_0(\omega )\), and define \(\nu \) as the law of u. Then for every entropy pair \((\eta ,q)\) and every test function \(0\leqslant \varphi \in C^1_c(\mathbb {R}^d\times \mathbb {R}_+)\), we have by Fubini’s theorem and the entropy stability of \(u(\omega )\) for each \(\omega \),

This proves the entropy inequality (14). \(\square \)

Although EMV solutions exist for scalar conservation laws with non-atomic measure-valued initial data, they may not be unique. Here is a simple counter-example (see also Schochet [58]).

Example 1

Consider Burgers’ equation

\[ \partial_t u + \partial_x \Bigl( \frac{u^2}{2} \Bigr) = 0. \]

Denote by \(\lambda \) the Lebesgue measure on \(\mathbb {R}\) and by \(\lambda _A\) the restriction of \(\lambda \) to a subset \(A\subset \mathbb {R}\), i.e., \(\lambda _A(B) = \lambda (A\cap B)\). We define \(\varOmega =[0,1]\), \(\mathcal {F}=\mathcal {B}([0,1])\) (the Borel \(\sigma \)-algebra on [0, 1]) and \(P=\lambda _{[0,1]}\). Let \(u_0\) and \(\tilde{u}_0\) be the random fields

It is readily checked that the law of both \(u_0\) and \(\tilde{u}_0\) in \((\varOmega ,\mathcal {F},P)\) equals

Note that both of the random fields \(u_0\) and \(\tilde{u}_0\) model Burgers’ equation with uncertainty in the initial shock location, a setting which is widely considered in the UQ literature, see [52] and references therein. Although their laws are the same, i.e., the initial Young measure is the same in both cases, the resulting two-point correlations (particularly for points left and right of the origin) differ.

The entropy solutions \(u(\omega )\) and \(\tilde{u}(\omega )\) of the Riemann problems with initial data \(u_0(\omega )\) and \(\tilde{u}_0(\omega )\) are given by

To compute the law \(\nu \) of u, we rewrite u as

Hence, if […], then \(\nu _{(x,t)} = \lambda _{[1,2]}\), whereas if […], then \(\nu _{(x,t)} = \lambda _{[0,1]}\). When […], we have for every \(g\in C_0(\mathbb {R}^N)\)

After a similar calculation for \(\tilde{\nu }\), we find that

Note that in fact \(\nu _{(x,t)}\) and \(\tilde{\nu }_{(x,t)}\) converge to \(\sigma _x\) strongly as \(t\rightarrow 0\) for all \(x\ne 0\). Thus, \(\nu \) and \(\tilde{\nu }\) are EMV solutions with the same initial MV data \(\sigma \), but do not coincide.\(\square \)

The above example clearly illustrates that the MV Cauchy problem (11) may not have unique solutions, even for the scalar case, when the initial data is a non-atomic Young measure. Hence, it raises serious questions as to whether the notion of an entropy measure-valued solution is useful. However, the following result shows that when restricting attention to the relevant class of atomic initial data, EMV solutions of the scalar MV Cauchy problem (11) are stable.

Theorem 2

Consider the scalar case \(N=1\). Let \(u_0\in L^1\cap L^\infty (\mathbb {R}^d)\) and let \(\sigma \in \mathbf {Y}(\mathbb {R}^d)\) be uniformly bounded. Let \(u\in L^1\cap L^\infty (\mathbb {R}^d\times \mathbb {R}_+)\) be the entropy solution of the scalar conservation law (1) with initial data \(u_0\). Let \(\nu \) be any EMV solution of (11) which satisfies

Then for all \(t>0\),

or equivalently,

In particular, if \(\sigma = \delta _{u_0}\) then \(\nu = \delta _{u}\).

Proof

We follow DiPerna [22] who proved the uniqueness of scalar MV solutions subject to atomic initial data. Here, we quantify stability in terms of the \(W_1\)-metric, which is related to the \(L^1(x,{v})\)-stability of kinetic solutions associated with (1), see [57].

For \(\xi \in \mathbb {R}\), let \((\eta (\xi ,u), q(\xi ,u))\) be the Kruzkov entropy pair, defined as

\[ \eta(\xi,u) := |\xi - u|, \qquad q(\xi,u) := \mathrm{sgn}(\xi - u)\bigl(f(\xi) - f(u)\bigr). \]

By [22, Theorem 4.1] we know that for any entropy solution u of (1) and any entropy MV solution \(\nu \) of (11), we have

that is,

for all test functions \(0\leqslant \varphi \in C^1_c\bigl (\mathbb {R}^d\times (0,\infty )\bigr )\). Setting \(\varphi (x,t) = \theta (t)\) for a \(\theta \in C_c^\infty \bigl ((0,\infty )\bigr )\), we get

Letting \(\theta \) be a smooth approximation of the indicator function on an interval \([0,t_0]\), we find in light of (15) that \(V(t_0) \leqslant \int _{\mathbb {R}^d} \left\langle \sigma _x, |u_0(x)-\xi |\right\rangle \ \hbox {d}x\) for almost every \(t_0>0\). \(\square \)

3.2 Systems of Conservation Laws

It is clear from the above discussion that non-atomic initial data might lead to multiple EMV solutions, see also the discussion in Remark 1. However, the scalar results also suggest some possible stability with respect to perturbations of atomic initial data. Based on these considerations, we propose the following (weaker) notion of stability.

Terminology 3

The MV Cauchy problem (11) is MV stable if the following property holds.

For every \(u_0 \in L^\infty (\mathbb {R}^d,\mathbb {R}^N)\) and \(\sigma \in \mathbf {Y}(\mathbb {R}^d,\mathbb {R}^N)\) such that

there exists an EMV solution \(\nu \in \mathcal {E}(\delta _{u_0})\) such that

for every EMV solution \(\nu ^{\sigma } \in \mathcal {E}(\sigma )\) (or a subset thereof).

(Recall that \(\mathcal {E}(\sigma )\) denotes the set of all entropy MV solutions to the MV Cauchy problem (11).) We have intentionally left out several details in the above definition: the admissible set of initial data; the subset of \(\mathcal {E}(\cdot )\) for which the MV Cauchy problem is stable; and the distance \({\mathscr {D}}\) on the set of Young measures. Still, the concept of MV stability carries one of the main messages in this paper: Despite the well-documented instability of entropic weak solutions, as shown for example in the introduction and in Sect. 6, one could still hope for a stable solution of systems of conservation laws, when it is interpreted as a measure-valued solution, subject to atomic initial data.

Carrying out the full scope of this paradigm for general systems of conservation laws is currently beyond reach. Instead, we examine the question of whether EMV solutions of selected systems of conservation laws are stable or not with the aid of numerical experiments reported in Sect. 6. As for the analytical aspects, we recall that in the scalar case, measure-valued perturbations of atomic initial data are stable (Theorem 2). In the following theorem, we prove the MV stability in the case of systems, provided we further limit ourselves to MV perturbations of classical solutions of (11). The proof, along the lines of [20, Theorem 2.2], implies weak–strong uniqueness, as in [8]. In particular, the theorem provides consistency of EMV solutions with classical solutions of (1), as long as the latter exists.

Theorem 4

Assume that there exists a classical solution \(u\in W^{1,\infty }(\mathbb {R}^d\times \mathbb {R}_+,\mathbb {R}^N)\) of (1) with initial data \(u_0\), both taking values in a compact set \(K\subset \mathbb {R}^N\). Let \(\nu \) be an EMV solution of (11) such that the supports of both \(\nu \) and its initial MV data \(\sigma \) are contained in K. Assume that \(\eta \) is uniformly convex on K. Then for all \(t>0\), there exists a constant C depending on u, such that

or equivalently,

In particular, if \(\sigma = \delta _{u_0}\) then \(\nu = \delta _{u}\), and so any (classical, weak or measure-valued) solution must coincide with u.

Proof

Denote \(\overline{u}:= \left\langle \nu , {{\mathrm{id}}}\right\rangle \) and \(\overline{u}_0 := \left\langle \sigma , {{\mathrm{id}}}\right\rangle \). Define the entropy variables \({v}= {v}(x,t) := \eta '(u(x,t))\) and denote \({v}_0 := {v}(x,0) = \eta '(u_0)\). It is readily verified that \({v}_t = -(f^i)'(u) \partial _{i}{v}\) (where \(\partial _i = \frac{\partial }{\partial x_i}\)). Here and in the remainder of the proof, we use the Einstein summation convention.

Subtracting (12) from (2) and putting \(\varphi (x,t) = {v}(x,t)\theta (t)\) for some \(\theta \in C_c^1(\mathbb {R}_+)\) gives

Next, note that since u is a classical solution, the entropy inequality (3) is in fact an equality. Hence, subtracting (14) from (3) and putting \(\varphi (x,t) = \theta (t)\) gives

Subtracting these two expressions thus gives

where

Let \(\delta >0\), and let \(t>0\) be a Lebesgue point for the function \(s \mapsto \int _\mathbb {R}\hat{\eta }(x,s)\ \hbox {d}x\). We define

Taking the limit \(\delta \rightarrow 0\) in (16) then gives

Since \(\nu _{(x,s)}\) is a probability distribution, it follows from the uniform convexity of \(\eta \) that

Similarly, by the \(L^\infty \) bound on both u and \(\partial _i{v}\), we have

Hence,

By the integral form of Grönwall’s lemma, we obtain the desired result. \(\square \)
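For reference, the integral form of Grönwall's lemma invoked in the last step can be stated as follows. This is the standard constant-coefficient formulation; the symbols \(\phi \), \(a\) and \(C\) are generic placeholders introduced here for illustration and are not the notation of the proof above.

```latex
% Integral form of Gronwall's lemma (constant coefficients).
% Assumptions: \phi \ge 0 is integrable on [0,T]; a \ge 0 and C \ge 0 are constants.
\phi(t) \le a + C \int_0^t \phi(s)\,\mathrm{d}s
\quad \text{for a.e. } t \in [0,T]
\qquad\Longrightarrow\qquad
\phi(t) \le a\, e^{C t}
\quad \text{for a.e. } t \in [0,T].
```

In particular, \(a = 0\) forces \(\phi \equiv 0\), which is the mechanism behind the conclusion \(\nu = \delta _u\) when \(\sigma = \delta _{u_0}\).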

Remark 3

In addition to proving consistency of entropy measure-valued solutions with classical solutions (when they exist), the above theorem also provides local (in time) uniqueness of MV solutions in the following sense. Let \(u_0 \in W^{1,\infty }(\mathbb {R}^d,\mathbb {R}^N)\) be the initial data in (1). Then, by standard results [16], we have local (in time) existence of a unique classical solution \(u \in W^{1,\infty }(\mathbb {R}^d \times \mathbb {R}_+,\mathbb {R}^N)\). By the above theorem, \(\delta _u\) is also the unique EMV solution of the MV Cauchy problem (11) with initial data \(\delta _{u_0}\), for as long as the classical solution exists. However, uniqueness can break down once this MV solution develops singularities.

4 Construction of Approximate EMV Solutions

Although existence results for specific systems of conservation laws such as polyconvex elastodynamics [20], two-phase flows [32, 33] and transport equations [11] are available, there exists no global existence result for a generic system of conservation laws. We pursue a different approach by constructing approximate EMV solutions and proving their convergence. A procedure for constructing approximate EMVs is outlined in the present section. It provides a constructive proof of existence of EMV solutions for a generic system of conservation laws, and it is implemented in the numerical simulations reported in Sect. 6.

4.1 Numerical Approximation of EMV Solutions

The construction of approximate EMV solutions consists of several ingredients. It begins with a proper choice of a numerical scheme for approximating the system of conservation laws (1).

4.1.1 Numerical Schemes for One- and Multi-dimensional Conservation Laws

For simplicity, we begin with the description of a numerical scheme for a one-dimensional system of conservation laws, (1) with \(d=1\). We discretize our computational domain into cells \(\mathcal {C}_i\) with mesh size \({\Delta x}\) and midpoints \(x_i\).

Note that we consider a uniform mesh size \({\Delta x}\) only for the sake of simplicity of the exposition. Next, we discretize the one-dimensional system, \(\partial _t u+\partial _x f(u)=0\), with the following semi-discrete finite difference scheme for \(u^{\Delta x}_i(t)\equiv u^{\Delta x}(x_i,t)\) (cf. [37, 50]):

Here, \(u_0^{{\Delta x}}\) is an approximation to the initial data \(u_0\). Henceforth, the dependence of u and F on \({\Delta x}\) will be suppressed for notational convenience. The numerical flux function \(F\) is a function depending on \(u(x_j,t)\) for \(j=i-p+1,\ldots ,i+p\) for some \(p\in \mathbb {N}\). It is assumed to be consistent with f and locally Lipschitz continuous, i.e., for every compact \(K\subset \mathbb {R}^N\) there is a \(C>0\) such that

whenever \(u(x_j,t)\in K\) for \(j=i-p+1,\ldots ,i+p\).

The semi-discrete scheme (17a) needs to be integrated in time to define a fully discrete numerical approximation. Again for simplicity, we will use an exact time integration, resulting in

The function \(t \mapsto u(x_i,t)\) is then Lipschitz, that is,

In particular, for all \({\Delta x}>0\) and \(i\in \mathbb {N}\), the function \(t \mapsto u(x_i,t)\) is differentiable almost everywhere. We denote the evolution operator associated with the one-dimensional scheme (17) with mesh size \({\Delta x}\) by \(\mathcal {S}^{\varDelta x}\), so that \(u^{\Delta x}= \mathcal {S}^{\varDelta x}u_0\).
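The structure of such a scheme can be sketched in a few lines. The following is only an illustration under stated assumptions: the flux is that of Burgers' equation, the two-point Rusanov flux stands in for the generic numerical flux \(F\) (so \(p=1\)), the grid is periodic, and forward Euler steps replace the exact time integration used in the text. All function names are hypothetical.

```python
import numpy as np

def burgers_flux(u):
    """Flux f(u) = u^2 / 2 of the inviscid Burgers equation (illustrative choice)."""
    return 0.5 * u * u

def rusanov_flux(ul, ur):
    """Two-point numerical flux F(ul, ur); consistent because F(u, u) = f(u)."""
    speed = np.maximum(np.abs(ul), np.abs(ur))  # bound on |f'(u)| for Burgers
    return 0.5 * (burgers_flux(ul) + burgers_flux(ur)) - 0.5 * speed * (ur - ul)

def semi_discrete_rhs(u, dx):
    """Right-hand side -(F_{i+1/2} - F_{i-1/2}) / dx on a periodic grid."""
    f_right = rusanov_flux(u, np.roll(u, -1))  # flux through the right cell face
    f_left = np.roll(f_right, 1)               # flux through the left cell face
    return -(f_right - f_left) / dx

def evolve(u0, dx, t_end, cfl=0.2):
    """Stand-in for the evolution operator: forward Euler with a fixed step."""
    max_speed = max(np.max(np.abs(u0)), 1e-12)
    n_steps = max(int(np.ceil(t_end * max_speed / (cfl * dx))), 1)
    dt = t_end / n_steps
    u = u0.copy()
    for _ in range(n_steps):
        u = u + dt * semi_discrete_rhs(u, dx)
    return u

n = 200
dx = 1.0 / n
x = (np.arange(n) + 0.5) * dx      # cell midpoints
u0 = np.sin(2.0 * np.pi * x)       # approximate initial data
u = evolve(u0, dx, t_end=0.3)
```

The flux-difference form makes the update conservative: on a periodic grid the sum of the cell values is preserved up to round-off, whatever numerical flux is used.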

A similar framework applies to systems of conservation laws in several space dimensions. To simplify the notation, we restrict ourselves to the two-dimensional case (with the usual relabeling \((x_1,x_2) \mapsto (x,y)\)), \(\partial _t u+\partial _x f^x(u)+\partial _y f^y(u)=0\).

We discretize our two-dimensional computational domain into cells with mesh size \(\varDelta :=({\Delta x}_1,{\Delta x}_2)\). With the usual relabeling \(({\Delta x}_1,{\Delta x}_2) \mapsto ({\Delta x},\Delta y)\), these two-dimensional cells are assumed to have a fixed mesh ratio, such that \(\Delta y= c {\Delta x}\) for some constant c. Let

denote the mid-cells. We end up with the following semi-discrete finite difference scheme for \(u^{\varDelta }_{ij}= u^{\varDelta }(x_i,y_j,t)\) (cf. [37, 50]):

Here, \(u_0^{\varDelta }\approx u_0\) is the approximate initial data, and the numerical flux functions are locally Lipschitz and assumed to be consistent with the flux function \(f = \left( f^x,f^y\right) \). We integrate the semi-discrete scheme (18a) exactly in time to obtain

We denote the evolution operator corresponding to (18) and associated with the two-dimensional mesh size \(\varDelta :=({\Delta x},\Delta y)\) by \(\mathcal {S}^\varDelta \).
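The dimension-by-dimension structure of the two-dimensional scheme can be illustrated as follows. This is a sketch under simplifying assumptions, not the scheme used in the experiments: a scalar linear advection equation with constant positive speeds, first-order upwind fluxes, periodic boundaries, and forward Euler time stepping. The names `A_X`, `A_Y` and `rhs_2d` are hypothetical.

```python
import numpy as np

A_X, A_Y = 1.0, 0.5  # constant advection speeds (illustrative, both positive)

def rhs_2d(u, dx, dy):
    """Flux differences in x and in y, each divided by its own mesh size."""
    fx = A_X * u   # upwind flux through the right x-face (valid since A_X > 0)
    fy = A_Y * u   # upwind flux through the upper y-face (valid since A_Y > 0)
    return (-(fx - np.roll(fx, 1, axis=0)) / dx
            - (fy - np.roll(fy, 1, axis=1)) / dy)

n = 64
dx = 1.0 / n
dy = 1.0 * dx                       # fixed mesh ratio dy = c * dx, here c = 1
x = (np.arange(n) + 0.5) * dx
y = (np.arange(n) + 0.5) * dy
X, Y = np.meshgrid(x, y, indexing="ij")
u0 = np.exp(-100.0 * ((X - 0.5) ** 2 + (Y - 0.5) ** 2))

dt = 0.4 / (A_X / dx + A_Y / dy)    # CFL-limited forward Euler step
u = u0.copy()
for _ in range(50):
    u = u + dt * rhs_2d(u, dx, dy)
```

Under the stated CFL restriction each update is a convex combination of neighbouring cell values, so this particular sketch satisfies a discrete maximum principle.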

4.1.2 Weak\(^*\) Convergent Schemes

The next ingredient in the construction of approximate EMV solutions for (11) is to employ the above numerical schemes in the following three-step algorithm.

Algorithm 5

- Step 1: Let \(u_0: \varOmega \mapsto L^{\infty } (\mathbb {R}^d)\) be a random field on a probability space \((\varOmega ,\mathcal {F},P)\) such that the initial Young measure \(\sigma \) in (11) is the law of the random field \(u_0\) (see Proposition 2).

- Step 2: We evolve the initial random field by applying the numerical scheme (17a) for every \(\omega \in \varOmega \) to obtain an approximation \(u^{\varDelta x}(\omega ) := \mathcal {S}^{{\varDelta x}}u_0(\omega )\) to the solution random field \(u(\omega )\), corresponding to the initial random field \(u_0(\omega )\).

- Step 3: Define the approximate measure-valued solution \(\nu ^{\varDelta x}\) as the law of \(u^{\varDelta x}\) with respect to P (see Appendix A.3.1).

By Proposition 1 in Appendix A.3.1, \(\nu ^{{\varDelta x}}\) is a Young measure. This sequence of Young measures \(\nu ^{{\varDelta x}}\) serves as approximations to the EMV solutions of (11).
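The three steps can be made concrete on a finite probability space, where the law of a random field is a weighted sum of Dirac masses at every grid point. The sketch below is illustrative only: the three-outcome probability space, the shifted step profiles, and the identity map used as a stand-in for \(\mathcal {S}^{\varDelta x}\) are all assumptions made for the example.

```python
import numpy as np

def law_pairing(samples, probs, g):
    """<nu_x, g> = sum_k P(omega_k) * g(u(omega_k, x)) at every grid point x."""
    return np.tensordot(probs, g(samples), axes=1)

# Step 1: a random field u_0(omega) on a probability space with three outcomes.
x = np.linspace(0.0, 1.0, 101)
probs = np.array([0.2, 0.5, 0.3])              # P(omega_k)
shifts = np.array([-0.05, 0.0, 0.05])          # one jump location per outcome
u0 = np.where(x[None, :] < 0.5 + shifts[:, None], 1.0, 0.0)

# Step 2: evolve every realization with the same solution operator.
def solution_operator(u_init):
    """Hypothetical stand-in for the numerical evolution: the identity map."""
    return u_init

u = np.stack([solution_operator(u_k) for u_k in u0])

# Step 3: the approximate Young measure is the law of u; pair it with g.
mean = law_pairing(u, probs, lambda xi: xi)
second_moment = law_pairing(u, probs, lambda xi: xi ** 2)
variance = second_moment - mean ** 2
```

Where all realizations agree, the variance vanishes and the measure is atomic; in the band around \(x = 0.5\) where the realizations differ, it is a genuine convex combination of Dirac masses.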

Next, we show that if the numerical scheme (17a) satisfies a set of criteria, then the approximate Young measures \(\nu ^{{\varDelta x}}\) generated by Algorithm 5 will converge weak* to an EMV solution of (11). Specific examples for such weak* convergent schemes are provided in Sect. 5. To simplify the presentation, we restrict attention to the one-dimensional case; the argument is readily extended to the general multi-dimensional case, and the details can be found in [28] (see Sect. 3.2, in particular Lemmas 3.4 and 3.5).

Theorem 6

Assume that the approximate solutions \(u^{\varDelta x}\) generated by the one-dimensional numerical scheme (17) satisfy the following.

- Uniform boundedness:

$$\begin{aligned} \left\| u^{{\Delta x}}(\omega )\right\| _{L^{\infty }(\mathbb {R}\times \mathbb {R}_+)} \leqslant C, \quad \forall \ \omega \in \varOmega ,\ {\Delta x}> 0. \end{aligned}$$(19a)

- Weak BV: There exists \(1\leqslant r< \infty \) such that

$$\begin{aligned} \lim _{{\Delta x}\rightarrow 0}\int _0^T \sum _i \left| u^{\Delta x}_{i+1}(\omega ,t) - u_i^{\Delta x}(\omega ,t)\right| ^r{\Delta x}\hbox {d}t = 0 \quad \forall \ \omega \in \varOmega \end{aligned}$$(19b)

- Entropy consistency: The numerical scheme (17a) is entropy stable with respect to an entropy pair \((\eta ,q)\), i.e., there exists a numerical entropy flux, consistent with the entropy flux q and locally Lipschitz, such that computed solutions satisfy the discrete entropy inequality (19c).

- Consistency with initial data: If \(\sigma ^{\Delta x}\) is the law of \(u_0^{\Delta x}\), then

$$\begin{aligned} \lim _{{\Delta x}\rightarrow 0}\int _{\mathbb {R}}\psi (x)\left\langle \sigma ^{\Delta x}_x, {{\mathrm{id}}}\right\rangle \ \hbox {d}x = \int _{\mathbb {R}}\psi (x)\left\langle \sigma _x, {{\mathrm{id}}}\right\rangle \ \hbox {d}x \quad \forall \ \psi \in C_c^1(\mathbb {R}). \end{aligned}$$(19d)

and

$$\begin{aligned} \limsup _{{\Delta x}\rightarrow 0}\int _{\mathbb {R}}\psi (x) \left\langle \sigma ^{\Delta x}_x, \eta \right\rangle \ \hbox {d}x \leqslant \int _{\mathbb {R}}\psi (x) \left\langle \sigma _x, \eta \right\rangle \ \hbox {d}x \quad \forall \ 0\leqslant \psi \in C_c^1(\mathbb {R}) \end{aligned}$$(19e)

Then the approximate Young measures \(\nu ^{{\Delta x}}\) converge weak* (up to a subsequence) as \(\varDelta x \rightarrow 0\), to an EMV solution \(\nu \in \mathbf {Y}(\mathbb {R}\times \mathbb {R}_+,\mathbb {R}^N)\) of (11).

Proof

From the assumption (19a) that \(u^{\Delta x}\) is \(L^\infty \)-bounded, it follows that \(\nu ^{\Delta x}\) is uniformly bounded, in the sense that its support \({{\mathrm{supp}}}\nu ^{\Delta x}_{(x,t)}\) lies in a fixed compact subset of \(\mathbb {R}^N\) for every (x, t); see Appendix A.2.2. The fundamental theorem of Young measures (see Appendix A.2.6) gives the existence of a \(\nu \in \mathbf {Y}(\mathbb {R}^d\times \mathbb {R}_+,\mathbb {R}^N)\) and a subsequence of \(\nu ^{\Delta x}\) such that \(\nu ^{\Delta x}\rightharpoonup \nu \) weak*.

First, we show that the limit Young measure \(\nu \) satisfies the entropy inequality (14). To this end, let \(\varphi \in C_c^1(\mathbb {R}\times [0,T))\). Then

by the weak* convergence \(\nu ^{\Delta x}\rightharpoonup \nu \). Denote \(\eta ^{\Delta x}(\omega ,x,t) := \eta (u^{\Delta x}(\omega ,x,t))\). Then for every \({\Delta x}>0\) we have

(We have written \(\overline{\varphi }^{\Delta x}_i(t) := \frac{1}{{\Delta x}}\int _{\mathcal {C}_i}\varphi (x,t)\ \hbox {d}x\).) The first term can be written as

The second term goes to zero:

by (19b), where \(r'\) is the conjugate exponent of \(r\). In conclusion, the limit \(\nu \) satisfies (14).

The proof that the limit measure \(\nu \) satisfies (12) follows from the above by setting \(\eta = \pm {{\mathrm{id}}}\) and \(q = \pm f\). \(\square \)
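To get a feeling for the weak BV condition (19b), it helps to evaluate its spatial part on a simple profile. The sketch below does this for a single jump sampled at cell midpoints; the helper names and the choice of profile are assumptions made for illustration, and the time integral in (19b) is omitted.

```python
import numpy as np

def weak_bv_sum(u, dx, r):
    """Spatial part of (19b): sum_i |u_{i+1} - u_i|^r * dx at one time level."""
    return np.sum(np.abs(np.diff(u)) ** r) * dx

def step_profile(n, jump=2.0):
    """Cell-midpoint samples on [0, 1] of a single jump of size `jump`."""
    x = (np.arange(n) + 0.5) / n
    return np.where(x < 0.5, jump, 0.0)

for n in (100, 200, 400, 800):
    u = step_profile(n)
    print(n, weak_bv_sum(u, 1.0 / n, r=1), weak_bv_sum(u, 1.0 / n, r=2))
```

For this profile the sum equals the jump size to the power \(r\) times \({\Delta x}\), so it vanishes as the mesh is refined. More generally, for \(r=1\) the sum is the discrete total variation multiplied by \({\Delta x}\), which is why a uniform total variation bound implies (19b) with \(r=1\).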

A similar construction can be readily performed in several space dimensions. To this end, we replace \(\mathcal {S}^{\Delta x}\) in Step 2 of Algorithm 5 with the two-dimensional solution operator \(\mathcal {S}^{\varDelta }\), and the corresponding approximate solution \(u^{\Delta x}\) with \(u^{\varDelta }\). The weak* convergence of the resulting approximate Young measure \(\nu ^{\varDelta }\) is described below.

Theorem 7

Assume that the approximate solutions \(u^{\varDelta }\) generated by scheme (18a) satisfy the following.

-

Uniform boundedness:

$$\begin{aligned} \Vert u^{\varDelta }(\omega )\Vert _{L^{\infty }(\mathbb {R}^2 \times \mathbb {R}_+)} \leqslant C, \quad \forall \omega \in \varOmega , {\Delta x},\Delta y> 0. \end{aligned}$$(20) -

Weak BV: There exists \(1\leqslant r< \infty \) such that \( \forall \ \omega \in \varOmega \)

$$\begin{aligned}&\lim _{{\Delta x},\Delta y\rightarrow 0}\int _0^T \sum _{i,j} \left( \left| u^\varDelta _{i+1,j}(\omega ,t) - u_{i,j}^\varDelta (\omega ,t)\right| ^r\right. \nonumber \\&\quad \left. + \, \left| u^\varDelta _{i,j+1}(\omega ,t) - u_{i,j}^\varDelta (\omega ,t)\right| ^r\right) {\Delta x}\Delta y\hbox {d}t = 0. \end{aligned}$$(21) -

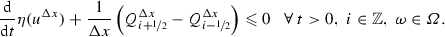

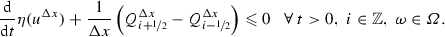

Entropy consistency: The numerical scheme (18a) is entropy stable with respect to an entropy pair \((\eta ,q)\), in the sense that there exist locally Lipschitz numerical entropy fluxes

, consistent with the entropy flux \(q = (q^x,q^y)\), such that computed solutions satisfy the discrete entropy inequality, namely \(\forall \ t>0,\ i,j\in \mathbb {Z},\ \omega \in \varOmega \)

, consistent with the entropy flux \(q = (q^x,q^y)\), such that computed solutions satisfy the discrete entropy inequality, namely \(\forall \ t>0,\ i,j\in \mathbb {Z},\ \omega \in \varOmega \)

(22)

(22) -

Consistency with initial data: Let \(\sigma ^{\varDelta }\) be the law of the random field \(u_0^{\varDelta }\) that approximates the initial random field \(u_0\). Then, the consistency conditions (19d) and (19e) hold.

Then, the approximate Young measures \(\nu ^{\varDelta }\) converge weak* (up to a subsequence) to a Young measure \(\nu \in \mathbf {Y}(\mathbb {R}^2 \times \mathbb {R}_+, \mathbb {R}^N)\) as \({\Delta x},\Delta y\rightarrow 0\) and \(\nu \) is an EMV solution of (11), i.e.,

The proof of the above theorem is a simple generalization of the proof of Theorem 6; see Section 3.2 of [28] (in particular Lemmas 3.4 and 3.5) for details. The above construction can also be readily extended to three spatial dimensions.

Remark 4

The uniform \(L^{\infty }\) bound (19a), (20) is a technical assumption that we require in this article. This assumption can be relaxed to only an \(L^p\) bound. This extension is described in a forthcoming paper [29].

Remark 5

The conditions (19d) and (19e), which say that \(\sigma ^{\Delta x}\rightarrow \sigma \) in a certain sense, are weaker than weak* convergence. It is readily checked that a sufficient condition for this is that \(u_0 \in L^1(\mathbb {R};\mathbb {R}^N)\cap L^\infty (\mathbb {R};\mathbb {R}^N)\) and \(u_0^{\Delta x}(\omega ,\cdot ) \rightarrow u_0(\omega ,\cdot )\) in \(L^1(\mathbb {R}^d;\mathbb {R}^N)\) for all \(\omega \in \varOmega \) (which in fact implies that \(\sigma ^{\Delta x}\rightarrow \sigma \) strongly).

4.1.3 Weak\(^*\) Convergence with Atomic Initial Data

In view of the non-uniqueness example 1, one cannot expect a unique construction of EMV solutions for general MV initial data. Instead, as argued before, we focus our attention on perturbations of atomic initial data \(\sigma = \delta _{u_0}\) for some \(u_0 \in L^1(\mathbb {R}^d,\mathbb {R}^N) \cap L^{\infty }(\mathbb {R}^d,\mathbb {R}^N)\). We construct approximate EMV solutions of (11) in this case using the following specialization of Algorithm 5.

Algorithm 8

Let \((\varOmega , \mathcal {F}, P)\) be a probability space and let \(X: \varOmega \rightarrow L^1(\mathbb {R}^d)\cap L^\infty (\mathbb {R}^d)\) be a random variable satisfying \(\Vert X\Vert _{L^1(\mathbb {R}^d)} \leqslant 1\) P-almost surely.

- Step 1 Fix a small number \(\varepsilon >0\). Perturb \(u_0\) by defining \(u_0^{\varepsilon }(\omega ,x) := u_0(x) + \varepsilon X(\omega ,x)\). Let \(\sigma ^{\varepsilon }\) be the law of \(u_0^{\varepsilon }\).

- Step 2 For each \(\omega \in \varOmega \), let \(u^{{\varDelta x},\varepsilon }(\omega ) := \mathcal {S}^{\varDelta x}u_0^{\varepsilon }(\omega )\), with \(\mathcal {S}^{{\varDelta x}}\) being the solution operator corresponding to the numerical scheme (17).

- Step 3 Let \(\nu ^{{\varDelta x},\varepsilon }\) be the law of \(u^{{\varDelta x},\varepsilon }\) with respect to P. \(\square \)
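Step 1 of this construction is easy to realize in practice. The following sketch generates a perturbed ensemble around a step profile; the random sine perturbation, the grid, and the helper names are assumptions chosen for illustration, with the normalization ensuring that the discrete \(L^1\) norm of each perturbation is at most one.

```python
import numpy as np

rng = np.random.default_rng(0)

def l1_norm(v, dx):
    """Discrete L^1 norm on a uniform grid."""
    return np.sum(np.abs(v)) * dx

def sample_perturbation(x, dx, rng):
    """One realization X(omega) with discrete L^1 norm <= 1 (random sine wave)."""
    phase = rng.uniform(-np.pi, np.pi)
    raw = np.sin(2.0 * np.pi * x + phase)
    return raw / max(l1_norm(raw, dx), 1.0)

n = 256
dx = 1.0 / n
x = (np.arange(n) + 0.5) * dx
u0 = np.where(x < 0.5, 1.0, 0.0)     # unperturbed initial datum
eps = 1e-2

# Step 1: perturbed ensemble u_0^eps(omega) = u_0 + eps * X(omega).
ensemble = [u0 + eps * sample_perturbation(x, dx, rng) for _ in range(20)]
distances = [l1_norm(u_eps - u0, dx) for u_eps in ensemble]
```

Every member of the ensemble lies within \(L^1\) distance \(\varepsilon \) of \(u_0\), so the law \(\sigma ^{\varepsilon }\) concentrates on the atomic datum as \(\varepsilon \rightarrow 0\).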

Theorem 9

Let \(\{\nu ^{{\Delta x},\varepsilon }\}\) be the family of approximate EMV solutions constructed by Algorithm 8. Then there exists a subsequence \(({\Delta x}_n,\varepsilon _n) \rightarrow 0\) such that

that is, \(\nu ^{{\Delta x}_n,\varepsilon _n}\) converges weak* to an EMV solution \(\nu \) with atomic initial data \(u_0\).

Proof

By Theorem 6, we know that for every \(\varepsilon > 0\) there exists a subsequence \(\nu ^{{\Delta x}_n,\varepsilon }\) which converges weak* to an EMV solution \(\nu ^{\varepsilon }\) of (11) with initial data \(\sigma ^{\varepsilon }\). Thus, (14) holds with \((\nu ,\sigma )\) replaced by \((\nu ^{\varepsilon },\sigma ^\varepsilon )\); we abbreviate the corresponding entropy statement as (14)\({}_\varepsilon \). The convergence of the sequence \(\nu ^{\varepsilon _n}\) as \(\varepsilon _n \rightarrow 0\) is a consequence of the fundamental theorem of Young measures: By Theorem 13, there exists a weak* convergent subsequence \(\nu ^{\varepsilon _n} \rightharpoonup \nu \). The fact that \(\nu \) is an EMV solution follows at once by taking the limit \(\varepsilon _n \rightarrow 0\) in (14)\({}_{\varepsilon _n}\). \(\square \)

4.2 What are We Computing? Weak* Convergence of Space–Time Averages

We begin by quoting [48, p. 143]: “Just because we cannot prove that compressible flows with prescribed initial values exist doesn’t mean that we cannot compute them.” The question is which computable quantities are encoded in the EMV solutions.

According to Theorems 6 and 9, the approximations generated by Algorithms 5 and 8 converge to an EMV solution in the following sense: For all \(g \in C_0(\mathbb {R}^N)\) and \(\psi \in L^1(\mathbb {R}^d \times \mathbb {R}_+)\),

As we assume that the approximate solutions are \(L^\infty \)-bounded (property (19a)), any \(g \in C(\mathbb {R}^N)\) can serve as a test function in (23), see Appendix A.2.6. In particular, we can choose \(g(\xi ) = \xi \) to obtain the mean of the measure-valued solution. Similarly, the variance can be computed by choosing the test function \(g(\xi ) = \xi \otimes \xi \). Higher statistical moments can be computed analogously.

In practice, the goal of any numerical simulation is to accurately compute statistics of space–time averages or statistics of functionals of interest of solution variables and to compare them to experimental or observational data. Thus, the weak* convergence of approximate Young measures, computed by Algorithms 5 and 8, provides an approximation of exactly these observable quantities of interest.

4.2.1 Monte Carlo Approximation

In order to compute statistics of space–time averages in (23), we need to compute phase space integrals with respect to the measure \(\nu ^{{\Delta x}}\):

The last ingredient in our construction of EMV solutions, therefore, is the numerical approximation of these phase space integrals. To this end, we utilize the equivalent representation of the measure \(\nu ^{{\Delta x}}\) as the law of the random field \(u^{{\Delta x}}\):

We will approximate this integral by a Monte Carlo sampling procedure:

Algorithm 10

Let \({\Delta x}> 0\) and let M be a positive integer. Let \(\sigma ^{{\Delta x}}\) be the initial Young measure in (11) and let \(u_0^{\Delta x}\) be a random field \(u_0^{\Delta x}:\varOmega \times \mathbb {R}^d \rightarrow \mathbb {R}^N\) such that \(\sigma ^{{\Delta x}}\) is the law of \(u_0^{\Delta x}\).

- Step 1 Draw M independent and identically distributed random fields \(u_0^{{\Delta x},k}\) for \(k=1,\ldots , M\).

- Step 2 For each k and for a fixed \({\omega }\in \varOmega \), use the finite difference scheme (17a) to numerically approximate the conservation law (1) with initial data \(u_0^{{\Delta x},k}({\omega })\). Denote \(u^{{\Delta x},k}({\omega }) = \mathcal {S}^{{\Delta x}}u_0^{{\Delta x},k}({\omega }).\)

- Step 3 Define the approximate measure-valued solution \(\nu ^{{\Delta x},M} := \frac{1}{M}\sum _{k=1}^M \delta _{u^{{\Delta x},k}({\omega })}\).

For every \(g\in C(\mathbb {R}^N)\), we have

Thus, the space–time average (23) is approximated by

Note that, as in any Monte Carlo method, the approximation \(\nu ^{{\Delta x},M}\) depends on the choice of \(\omega \in \varOmega \), i.e., the choice of seed in the random number generator. However, we can prove that the quality of approximation is independent of this choice, P-almost surely:

Theorem 11

(Convergence for large samples) Algorithm 10 converges, that is,

and, for a subsequence \(M\rightarrow \infty \), P-almost surely. Equivalently, for every \(\psi \in L^1(\mathbb {R}^d \times \mathbb {R}_+)\) and \(g \in C(\mathbb {R}^N)\),

The limits are uniform in \({\Delta x}\).

The proof involves an adaptation of the law of large numbers for the present setup and is provided in Appendix 2. Combining (26) with the convergence established in Theorem 6, we conclude with the following.

Corollary 1

(Convergence with mesh refinement) There are subsequences \({\Delta x}\rightarrow 0\) and \(M\rightarrow \infty \) such that

or equivalently, for every \(\psi \in L^1(\mathbb {R}^d \times \mathbb {R}_+)\) and \(g \in C(\mathbb {R}^N)\),

The limits in \({\Delta x}\) and M are interchangeable.
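The Monte Carlo approximation of Algorithm 10 amounts to replacing the pairing with the Young measure by a sample average. The sketch below illustrates this and the decay of the sampling error with M. It is a toy setting under stated assumptions: the random fields are step profiles with a uniformly distributed jump location, standing in for computed solutions, so that the exact mean is known in closed form; all names are hypothetical.

```python
import numpy as np

def monte_carlo_pairing(samples, g):
    """Sample average (1/M) * sum_k g(u^k), evaluated at every grid point."""
    return np.mean(g(samples), axis=0)

rng = np.random.default_rng(1)
x = np.linspace(0.0, 1.0, 201)

def draw_fields(m):
    """M i.i.d. step profiles; jump location uniformly distributed in [0.4, 0.6]."""
    jump_location = rng.uniform(0.4, 0.6, size=(m, 1))
    return np.where(x[None, :] < jump_location, 1.0, 0.0)

def exact_mean(x):
    """Probability that x lies left of the jump, for the uniform law above."""
    return np.clip((0.6 - x) / 0.2, 0.0, 1.0)

for m in (25, 100, 400, 1600):
    mean_m = monte_carlo_pairing(draw_fields(m), lambda xi: xi)
    err = np.mean(np.abs(mean_m - exact_mean(x)))   # discrete L^1-type error
    print(m, err)
```

Choosing \(g(\xi ) = \xi \) gives the sample mean; passing `lambda xi: xi ** 2` instead gives the second moment, from which the sample variance follows. The printed errors depend on the seed, but should decrease roughly like \(M^{-1/2}\).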

5 Examples of Weak* Convergent Numerical Schemes

In this section, we provide concrete examples of numerical schemes that satisfy the criteria (19) of Theorem 6, for weak* convergence to EMV solutions of (11). We start with scalar conservation laws.

5.1 Scalar Conservation Laws

Monotone finite difference (volume) schemes (see [15, 37] for a precise definition) for scalar equations are uniformly bounded in \(L^{\infty }\) (as they satisfy a discrete maximum principle), satisfy a discrete entropy inequality (using the Crandall-Majda numerical entropy fluxes [15]) and are TVD, i.e., the total variation of the approximate solutions is non-increasing over time. Consequently, the approximate solutions satisfy the weak BV estimate (19b) (resp. (21) in the multi-dimensional case) with \(r= 1\). Thus, monotone schemes, approximating scalar conservation laws, satisfy all the abstract criteria of Theorem 6.
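These properties are easy to observe numerically. The sketch below uses the Lax–Friedrichs scheme for Burgers' equation, a classical monotone scheme under the CFL condition; the choice of scheme, flux, initial data and the fully discrete time stepping are assumptions made for illustration.

```python
import numpy as np

def total_variation(u):
    """Discrete total variation on a periodic grid."""
    return np.sum(np.abs(np.roll(u, -1) - u))

def lax_friedrichs_step(u, lam):
    """One Lax-Friedrichs step for Burgers' equation; lam = dt / dx."""
    f = 0.5 * u * u
    up, um = np.roll(u, -1), np.roll(u, 1)
    fp, fm = np.roll(f, -1), np.roll(f, 1)
    return 0.5 * (up + um) - 0.5 * lam * (fp - fm)

n = 400
x = (np.arange(n) + 0.5) / n
u = np.where((x > 0.25) & (x < 0.75), 1.0, -0.5)
lam = 0.8            # CFL number: lam * max|f'(u)| = 0.8 <= 1
tv_history = [total_variation(u)]
max_history = [np.max(np.abs(u))]
for _ in range(200):
    u = lax_friedrichs_step(u, lam)
    tv_history.append(total_variation(u))
    max_history.append(np.max(np.abs(u)))
```

Both recorded histories are non-increasing up to round-off, reflecting the TVD property and the discrete maximum principle.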

In fact, one can obtain a precise convergence rate for monotone schemes [46]:

where \(u(\omega ) = \lim _{{\Delta x}\rightarrow 0} u^{\Delta x}(\omega )\) denotes the entropy solution of the Cauchy problem for a scalar conservation law with initial data \(u_0(\omega )\). Using this error estimate, we obtain the following strong convergence results for monotone schemes.

Theorem 12

Let \(\nu ^{\Delta x}\) be generated by Algorithm 5, and let \(\nu \) be the law of the entropy solution \(u(\omega )\). If \({{\mathrm{TV}}}(u_0(\omega )) \leqslant C\) for all \(\omega \in \varOmega \), then \(\nu ^{\Delta x}\rightarrow \nu \) strongly as \({\Delta x}\rightarrow 0\).

Proof

Define \(\pi ^{\Delta x}_{z}\in \mathcal {P}(\mathbb {R}^N\times \mathbb {R}^N)\) as the law of the random variable \(\left( u^{\Delta x}(z), u(z)\right) \),

Then \(\pi ^{\Delta x}_{z}\) is a Young measure for all \(z\) and \({\Delta x}>0\). Clearly, \(\pi ^{\Delta x}_{z} \in \varPi \bigl (\nu ^{\Delta x}_{z}, \nu _{z}\bigr )\), and hence

Hence, by Kuznetsov’s error estimate (28),

\(\square \)
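The key step of the proof, bounding the distance between two laws by the cost of one particular coupling, can be checked numerically. The sketch below assumes, as the coupling notation \(\varPi (\cdot ,\cdot )\) suggests, that the distance is of Wasserstein type, and it works with scalar samples at a single point \(z\). The synthetic samples and names are hypothetical stand-ins for \(u(z)\) and \(u^{\Delta x}(z)\).

```python
import numpy as np

def wasserstein_1d(a, b):
    """W_1 distance between two empirical measures on R with equally many atoms."""
    return np.mean(np.abs(np.sort(a) - np.sort(b)))

rng = np.random.default_rng(2)
u_exact = rng.normal(size=1000)                        # samples of u(z)
u_approx = u_exact + 0.1 * rng.uniform(-1, 1, 1000)    # samples of u^{dx}(z)

# Cost of the coupling given by the joint law of (u^{dx}(z), u(z)).
coupling_cost = np.mean(np.abs(u_approx - u_exact))
w1 = wasserstein_1d(u_approx, u_exact)
```

Since the Wasserstein distance is an infimum over all couplings, `w1` can never exceed `coupling_cost`; the latter is in turn controlled by the pointwise error between the approximate and exact samples.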

Remark 6

We can relax the uniform boundedness of \({{\mathrm{TV}}}(u_0(\omega ))\) to just integrability of the function \(\omega \mapsto {{\mathrm{TV}}}(u_0(\omega ))\).

Remark 7

Note that, in light of Theorem 1 and Example 1, the limit entropy measure-valued solution \(\nu \) is unique only if the initial measure-valued data \(\sigma \) is atomic.

5.2 Systems of Conservation Laws

The convergence Theorems 6 and 7 specify that the ensemble-based algorithms converge if the underlying numerical scheme satisfies the criteria (19). We recall from Remark 4 that the \(L^{\infty }\) bound (19a), (20), is a technical assumption that cannot be rigorously verified for any of the existing numerical schemes approximating systems of conservation laws. Although this assumption holds for many numerical examples (for instance in all the numerical experiments presented in the next section), it is unclear whether this bound holds for systems of conservation laws with general \(L^{\infty }\) initial data. However, this assumption can be relaxed to requiring uniform \(L^p\) bounds on numerical schemes with \(1\leqslant p < \infty \), if the underlying systems of conservation laws possess a strictly convex entropy. Then, the solution framework utilizes generalized Young measures that allow for concentrations as well as persistent oscillations in sequences of approximations. Consequently, any entropy stable scheme for conservation laws with strictly convex entropy will satisfy a uniform \(L^2\) bound and will converge to the corresponding (generalized) entropy measure-valued solution. Such an extension is presented in a forthcoming paper [29].

Hence, we will present numerical schemes for approximating systems of conservation laws that satisfy the weak BV bounds (19b), (21) and the discrete entropy inequalities (19c). We present examples of such schemes below.

5.2.1 The ELW Scheme

The ELW (entropy stable Lax–Wendroff) scheme, introduced in [28, Section 4.2], is a finite difference scheme of the form (17a) with flux function

Here, the flux function involves a pth order accurate (\(p\in \mathbb {N}\)) entropy conservative numerical flux (see [49, 61]) and a positive parameter, and \({v}:= \eta '(u)\) is the entropy variable.

This scheme was shown to be (formally) p-th order accurate and entropy stable, to satisfy the weak BV bound (19b), and to converge pointwise for scalar conservation laws [28, Proposition 4.2 and 4.3]. The two-dimensional version of the scheme, with the corresponding discrete entropy inequality and weak BV bound (21), is straightforward to construct. Hence, under the assumptions (19a), (20) that the scheme is bounded in \(L^{\infty }\), the approximate measure-valued solutions generated by the ELW scheme converge to an entropy measure-valued solution of (11).
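To make the notion of an entropy conservative flux concrete, consider the simplest case. The sketch below is for scalar Burgers' equation with the quadratic entropy \(\eta (u) = u^2/2\), for which the entropy variable is \({v}= u\) and the entropy flux is \(q(u) = u^3/3\). It is not the ELW flux itself; the function names are hypothetical. The check uses the standard characterization of two-point entropy conservative fluxes: the jump in \({v}\) times the flux equals the jump in the potential \({v}f - q\).

```python
def burgers_entropy_conservative_flux(ul, ur):
    """Entropy conservative flux for f(u) = u^2/2 with entropy eta(u) = u^2/2."""
    return (ul * ul + ul * ur + ur * ur) / 6.0

def entropy_potential(u):
    """psi = v * f(u) - q(u), with v = u, f(u) = u^2/2 and q(u) = u^3/3."""
    return u * (0.5 * u * u) - u ** 3 / 3.0

def conservation_defect(ul, ur):
    """Jump in v times the flux, minus the jump in psi; zero means conservative."""
    flux = burgers_entropy_conservative_flux(ul, ur)
    return (ur - ul) * flux - (entropy_potential(ur) - entropy_potential(ul))

defect = conservation_defect(0.3, -1.2)
```

The defect vanishes up to round-off for any pair of states, and the flux is consistent because it reduces to \(u^2/2\) when both arguments coincide. Entropy stable schemes then add numerical diffusion on top of such a flux.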

5.2.2 TeCNO Finite Difference Schemes

The TeCNO schemes, introduced in [27, 28], are finite difference schemes of the form (17a) with flux function

Here, the flux function involves an entropy conservative flux as in (29) and a positive definite matrix, and \({v}_j^\pm \) are the cell interface values of a p-th order accurate ENO reconstruction of the entropy variable \({v}\) (see [26, 39]). The multi-dimensional (Cartesian) version was also designed in [27], see also [28]. It was shown in [27, 28] that the TeCNO schemes

- are (formally) p-th order accurate

- are entropy stable, i.e., they satisfy a discrete entropy inequality of the form (19c) (see Theorem 4.1 of [27] for the one-dimensional case and Theorem 6.1 of [27] for the multi-dimensional case)

- have weakly bounded variation when \(p=1\) and \(p=2\), i.e., they satisfy a bound of the form (19b) in the one-dimensional case and (21) in two dimensions (see Theorem 6.6 of [28] and in general Section 3.2 of [28] for the multi-dimensional case).

The weak total variation bound for \(p\geqslant 3\) depends on a conjectured result for the ENO reconstruction method which remains to be proven (see [28, Section 5.5]). In light of these properties, and under the assumption (19a) that the scheme is bounded in \(L^{\infty }\), the approximate measure-valued solutions generated by the TeCNO scheme converge to an EMV solution of (11).

5.2.3 Shock Capturing Space–Time Discontinuous Galerkin (DG) Schemes

Although suitable for Cartesian grids, finite difference schemes of the type (17a) are difficult to extend to unstructured grids in several space dimensions. For problems with complex domain geometry that necessitates the use of unstructured grids (triangles, tetrahedra), an alternative discretization procedure is the space–time discontinuous finite element procedure of [4, 40, 41, 43]. In this procedure, the entropy variables serve as degrees of freedom and entropy stable numerical fluxes like (30) need to be used at cell interfaces. Further stabilization terms like streamline diffusion and shock capturing terms are also necessary. In a recent paper [40], it was shown that a shock capturing streamline diffusion space–time DG method satisfied a discrete entropy inequality and a suitable version of the weak BV bound (19b), see Theorem 3.1 of [40] for the precise statements and results. Hence, this method was also shown to converge to an EMV solution in [40] (see Theorems 4.1 and 4.2 of [40]). We remark that the space–time DG methods are fully discrete, in contrast to semi-discrete finite difference schemes such as (17a).

6 Numerical Results

Our overall goal in this section will be to compute approximate EMV solutions of (11) with atomic initial data using Algorithm 8, as well as to investigate the stability of these solutions with respect to initial data. In Sects. 6.1 and 6.2, we consider the Kelvin–Helmholtz problem (8). In Sect. 6.3, we consider the Richtmyer–Meshkov problem, see, e.g., [36] and the references therein.

For the rest of the section, we will present numerical experiments for the two-dimensional compressible Euler equations

Here, the density \(\rho \), velocity field \((w^1,w^2)\), pressure p and total energy E are related by the equation of state

The relevant entropy pair is given by

with \(s = \log (p) - \gamma \log (\rho )\) being the thermodynamic entropy. The adiabatic constant \(\gamma \) is set to 1.4.
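For orientation, the relations between the primitive and conserved variables can be coded as follows. This is a minimal sketch assuming the standard ideal-gas equation of state, \(E = p/(\gamma -1) + \frac{1}{2}\rho \left( (w^1)^2 + (w^2)^2\right) \); the function names and the sample state are hypothetical.

```python
import numpy as np

GAMMA = 1.4  # adiabatic constant

def primitive_to_conserved(rho, w1, w2, p):
    """(rho, w1, w2, p) -> (rho, rho*w1, rho*w2, E), assuming an ideal gas."""
    energy = p / (GAMMA - 1.0) + 0.5 * rho * (w1 * w1 + w2 * w2)
    return np.array([rho, rho * w1, rho * w2, energy])

def conserved_to_primitive(u):
    """Inverse map; requires positive density."""
    rho, m1, m2, energy = u
    w1, w2 = m1 / rho, m2 / rho
    p = (GAMMA - 1.0) * (energy - 0.5 * rho * (w1 * w1 + w2 * w2))
    return rho, w1, w2, p

def thermodynamic_entropy(rho, p):
    """s = log(p) - gamma * log(rho)."""
    return np.log(p) - GAMMA * np.log(rho)

u_sample = primitive_to_conserved(2.0, -0.5, 0.0, 2.5)   # an illustrative state
s_sample = thermodynamic_entropy(2.0, 2.5)
```

The round trip through `conserved_to_primitive` recovers the primitive state, which is a convenient sanity check when setting up initial data.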

6.1 Kelvin–Helmholtz Problem: Mesh Refinement (\(\varDelta x {\downarrow } 0\))

As our first numerical experiment, we consider the two-dimensional compressible Euler equations of gas dynamics (31) with the initial data

with \(\rho _L = 2\), \(\rho _R = 1\), \(w^1_L = -0.5\), \(w^1_R = 0.5\), \(w^2_L=w^2_R=0\) and \(p_L=p_R=2.5\).

The computational domain is \([0,1]^2\), and we consider periodic boundary conditions. Furthermore, the interface profiles

are chosen to be small perturbations around \(J_1:=0.25\) and \(J_2:=0.75\), respectively, with

Here, \(a_j^n = a_j^n(\omega ) \in [0,1]\) and \(b_j^n = b_j^n(\omega )\in [-\pi ,\pi ]\), \(j=1,2\), \(n=1,\ldots ,m\) are randomly chosen numbers. The coefficients \(a_j^n\) have been normalized such that \(\sum _{n=1}^m a_j^n = 1\) to guarantee that \(|I_j(x,\omega ) - J_j| \leqslant \varepsilon \) for \(j=1,2\). We set \(m=10\).

Observe that by making \(\varepsilon \) small, this \(\omega \)-ensemble of initial data lies inside an arbitrarily small ball centered at \(u_0\). Indeed, it is readily checked that measured in, say, the \(L^p([0,1]^2)\)-norm, every sample \(u_0(\cdot ,\omega )\) is \(O(\varepsilon ^{1/p})\) away from the unperturbed steady state in (8).
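The following sketch generates one realization of such perturbed initial data for the density. Two points are assumptions on our part and are labeled as such in the code: the perturbation is taken to be a normalized sum of cosine modes, and the state with density \(\rho _L\) is placed between the two interfaces. The function names are hypothetical.

```python
import numpy as np

def interface_profile(x, center, eps, m, rng):
    """Assumed form: I(x) = center + eps * sum_n a_n * cos(b_n + 2*pi*n*x)."""
    a = rng.uniform(0.0, 1.0, size=m)
    a = a / a.sum()                                # normalization: sum_n a_n = 1
    b = rng.uniform(-np.pi, np.pi, size=m)
    n = np.arange(1, m + 1)
    phases = b[:, None] + 2.0 * np.pi * n[:, None] * x[None, :]
    return center + eps * np.sum(a[:, None] * np.cos(phases), axis=0)

def density_sample(n, eps, m, rng, rho_l=2.0, rho_r=1.0):
    """Density on an n x n grid; rho_l between the interfaces (an assumption)."""
    x = (np.arange(n) + 0.5) / n
    i1 = interface_profile(x, 0.25, eps, m, rng)
    i2 = interface_profile(x, 0.75, eps, m, rng)
    _, Y = np.meshgrid(x, x, indexing="ij")
    inside = (Y > i1[:, None]) & (Y < i2[:, None])
    return np.where(inside, rho_l, rho_r)

rng = np.random.default_rng(3)
eps = 0.01
rho = density_sample(200, eps, 10, rng)
rho_ref = density_sample(200, 0.0, 10, rng)        # unperturbed interfaces
l1_distance = np.mean(np.abs(rho - rho_ref))       # discrete L^1 distance
```

Because the coefficients sum to one, each interface stays within \(\varepsilon \) of its unperturbed position, so the sample differs from the unperturbed state only on two thin strips whose total area is of order \(\varepsilon \).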

A representative initial datum for the density (a single realization with fixed \(\omega \)) is shown in Fig. 5 (left). The resulting measure-valued Cauchy problem involves a random perturbation of the interfaces between the two streams (jets). This should be contrasted with the initial value problem (8), (5), where the amplitude was randomly perturbed. We note that the law of the above initial datum can readily be written down and serves as the initial Young measure in the measure-valued Cauchy problem (11). Observe that this Young measure is non-atomic at some points in the domain.

6.1.1 Lack of Sample Convergence

We approximate the above MV Cauchy problem with the second-order entropy stable TeCNO2 scheme of [27]. In Fig. 6, we show the density at time \(t = 2\) for a single sample, i.e., for a fixed \(\omega \in \varOmega \), at grid resolutions ranging from \(128^2\) to \(1024^2\) points. The figure suggests that the approximate solutions do not converge as the mesh is refined. In particular, structures at finer and finer scales are formed as the mesh is refined, as already shown in Fig. 3. To further verify this lack of convergence, we compute the \(L^1\) difference of the approximate solutions at successive mesh levels (7) and present the results in Fig. 7. This difference does not go to zero, suggesting that the approximate solutions for a single sample do not form a Cauchy sequence under mesh refinement.
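One way to evaluate such a difference between successive mesh levels is sketched below. It assumes cell-averaged data on uniform grids of \(n^2\) and \((2n)^2\) cells on \([0,1]^2\), and it compares the two piecewise constant functions on the finer grid; the function names are hypothetical and the comparison used for (7) in the actual computations may differ.

```python
import numpy as np

def prolong(u_coarse):
    """Copy each coarse cell value onto its 2x2 block of fine cells."""
    return np.repeat(np.repeat(u_coarse, 2, axis=0), 2, axis=1)

def l1_difference(u_coarse, u_fine):
    """Discrete L^1([0,1]^2) distance between grids of n^2 and (2n)^2 cells."""
    n_fine = u_fine.shape[0]
    dx = 1.0 / n_fine
    return np.abs(prolong(u_coarse) - u_fine).sum() * dx * dx

# Toy illustration: a fine-grid checkerboard oscillation around a flat coarse state.
u_coarse = np.zeros((4, 4))
i, j = np.indices((8, 8))
u_fine = np.where((i + j) % 2 == 0, 1.0, -1.0)
cauchy_rate = l1_difference(u_coarse, u_fine)
```

In the toy example the fine-grid oscillation has zero cell average on the coarse grid, yet its \(L^1\) distance to the coarse state stays of order one. This is the same mechanism by which small-scale structures keep the single-sample differences from decaying.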

Cauchy rates (7) at \(t=2\) for the density (y-axis) for a single sample of the Kelvin–Helmholtz problem, versus different mesh resolutions (x-axis)

6.1.2 Convergence of the Mean and Variance

The lack of convergence of the numerical schemes for single samples is not unexpected, given the results already mentioned in the introduction. Next, we compute statistical quantities of interest for this problem. First, we compute the Monte Carlo approximation of the mean (25), denoted by \(\bar{u}^{{\Delta x}}(x,t)\), at every point (x, t) in the computational domain. This sample mean of the density, computed with \(M=400\) samples and the second-order TeCNO2 scheme, is presented in Fig. 8 for a set of successively refined grid resolutions. The figure clearly suggests that the sample mean converges as the mesh is refined. This stands in stark contrast to the lack of convergence at the level of single samples, as shown in Figs. 3 and 6. Furthermore, Fig. 8 also shows that small-scale structures, present in single sample (realization) computations, are indeed smeared or averaged out in the mean. This convergence of the mean is further quantified by computing the \(L^1\) difference of the mean,

and plotting the results in Fig. 9a. As predicted by the theory presented in Theorems 7 and 9, these results confirm that the sequence of approximate means forms a Cauchy sequence and hence converges to a limit as the mesh is refined. Similar convergence results were also observed for the means of the other conserved variables, namely momentum and total energy (not shown here). Furthermore, Fig. 8 also shows that the mean is varying in the y-direction only. This is completely consistent with the symmetries of the equations, of the initial data and the fact that periodic boundary conditions are employed. This is also in sharp contrast to the situation for single realizations where there is strong variation along both directions (see Fig. 6).

Approximate sample means of the density for the Kelvin–Helmholtz problem (32) at time \(t=2\) and different mesh resolutions. All results are with 400 Monte Carlo samples

Next, we compute the sample variance and show the results in Fig. 10. The results suggest that the variance also converges with grid resolution. This convergence is also demonstrated quantitatively by plotting the \(L^1\) differences of the variance at successive levels of resolution, shown in Fig. 9b. Again, the figure suggests that the sequence forms a Cauchy sequence and hence is convergent. Furthermore, the variance itself shows no small-scale features, even on very fine mesh resolutions (see Fig. 10). This figure also reveals that the variance is higher near the initial mixing layer.
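The pointwise sample statistics used above can be sketched as follows, assuming the M density fields of one mesh level are stacked in an array of shape (M, n, n). The function name is hypothetical, and the \(1/M\) normalization of the variance is an assumption, since the estimator is not specified in the text.

```python
import numpy as np

def sample_mean_and_variance(samples):
    """Pointwise Monte Carlo mean and variance over axis 0 (one field per sample)."""
    mean = samples.mean(axis=0)
    var = samples.var(axis=0)    # 1/M normalization; pass ddof=1 for 1/(M-1)
    return mean, var

# Toy ensemble: two constant fields with values 1 and 3 on a 2x2 grid.
samples = np.stack([np.full((2, 2), 1.0), np.full((2, 2), 3.0)])
mean, var = sample_mean_and_variance(samples)
```

The resulting mean and variance fields can then be compared across mesh levels with the same \(L^1\) difference that is used for single samples.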

Approximate sample variances of the density for the Kelvin–Helmholtz problem (32) at time \(t=2\) and different mesh resolutions. All results are with 400 Monte Carlo samples

6.1.3 Strong Convergence to an EMV Solution

Convergence of the mean and variance (as well as of higher moments, not shown here) is consistent with the weak* convergence predicted by (the multi-dimensional version of) Theorems 6 and 9. Note that the convergence illustrated in Fig. 9 is in \(L^1\) of the spatial domain. Next, we test strong convergence of the numerical approximations by computing the Wasserstein distance between two successive mesh resolutions:

(see Appendix A.1.4). In Fig. 11, we show the \(L^1\)-norm of the Wasserstein distance between successive mesh resolutions

at time \(t=2\); recall that this is the quantity appearing in (10). The figure suggests that this difference between successive mesh resolutions converges to zero. Hence, the approximate Young measures converge strongly in both space–time and phase space to a limit Young measure.

In Fig. 12, we show the pointwise difference in Wasserstein distance (35) between two successive mesh levels. The figure reveals that this distance decreases as the mesh is refined. Moreover, we see that the Wasserstein distance between approximate Young measures at successive resolutions is concentrated at the interface mixing layers. This is to be expected as the variance is also concentrated along these layers (cf. the variance plots in Fig. 10).
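For a scalar quantity such as the density, the 1-Wasserstein distance between two empirical measures with equally many atoms reduces to the mean absolute difference of the sorted samples. The sketch below uses this fact; it is an illustration only, it assumes both ensembles have already been brought onto a common grid, and it is not necessarily the procedure of Appendix A.1.4. The function names are hypothetical.

```python
import numpy as np

def wasserstein1_empirical(a, b):
    """W_1 between two empirical measures on R with the same number of atoms."""
    a = np.sort(np.asarray(a, dtype=float))
    b = np.sort(np.asarray(b, dtype=float))
    if a.shape != b.shape:
        raise ValueError("both samples must have the same size")
    return np.abs(a - b).mean()

def pointwise_wasserstein1(samples_a, samples_b):
    """Cellwise W_1 for ensembles of shape (M, n, n) defined on a common grid."""
    sorted_a = np.sort(samples_a, axis=0)
    sorted_b = np.sort(samples_b, axis=0)
    return np.abs(sorted_a - sorted_b).mean(axis=0)

def l1_norm(field):
    """Discrete L^1([0,1]^2) norm of a cellwise field on an n x n grid."""
    dx = 1.0 / field.shape[0]
    return np.abs(field).sum() * dx * dx

# Toy check: every cell holds a unit mass at 0 versus a unit mass at 1.
W = pointwise_wasserstein1(np.zeros((3, 2, 2)), np.ones((3, 2, 2)))
total = l1_norm(W)
```

Unlike a comparison of means, this distance does not vanish when two ensembles have the same mean but different spread, which is why it probes convergence in phase space as well.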

Wasserstein distances between the approximate Young measure (density) (34) at successive mesh resolutions, at time \(t=2\)

6.2 Kelvin–Helmholtz: Vanishing Variance Around Atomic Initial Data (\(\varepsilon {\downarrow } 0\))

Our aim is to compute the entropy measure-valued solutions of the two-dimensional Euler equations with atomic initial measure, concentrated on the Kelvin–Helmholtz data (8). We utilize Algorithm 8 for this purpose and consider the perturbed initial data (32). Observe that this perturbed initial data converges strongly (cf. (10)) to the initial data (8) as \(\varepsilon \rightarrow 0\). Following Algorithm 8, we wish to study the limit behavior of approximate solutions \(\nu ^{{\Delta x},\varepsilon }\) as \(\varepsilon \rightarrow 0\). To this end, we retain the same setup as in the previous numerical experiment and compute approximate solutions using the TeCNO2 scheme of [27] at a very fine mesh resolution of \(1024^2\) points for different values of \(\varepsilon \).

Results for a single sample at time \(t=2\) and different \(\varepsilon \)’s are presented in Fig. 13. The figures indicate that there is no convergence as \(\varepsilon \rightarrow 0\). The spread of the mixing region seems to remain large even when the perturbation parameter is reduced. This lack of convergence is further quantified in Fig. 14, where we plot the \(L^1\) difference of the approximate density for successively reduced values of \(\varepsilon \). This difference remains large even when \(\varepsilon \) is reduced by an order of magnitude.

Approximate density, computed with the TeCNO2 scheme for a single sample with initial data (32) for different initial perturbation amplitudes \(\varepsilon \) on a grid of \(1024^2\) points

Next, we compute the mean of the density over 400 samples at a fixed grid resolution of \(1024^2\) points and for different values of the perturbation parameter \(\varepsilon \). This sample mean is plotted in Fig. 15. The figure clearly shows pointwise convergence as \(\varepsilon \rightarrow 0\), to a limit different from the steady-state solution (8). This convergence of the mean with respect to decaying \(\varepsilon \) is quantified in Fig. 16a, where we compute the \(L^1\) difference of the mean for successive values of \(\varepsilon \). We observe that the mean forms a Cauchy sequence and hence converges.

Approximate sample means of the density for the Kelvin–Helmholtz problem (32) at time \(t=2\) and different values of perturbation parameter \(\varepsilon \). All the computations are on a grid of \(1024^2\) mesh points and 400 Monte Carlo samples

Cauchy rates for the sample mean and the sample variance of the density (y-axis) for the Kelvin–Helmholtz problem (32) for different values of \(\varepsilon \) (x-axis). All the computations are on a grid of \(1024^2\) mesh points and 400 Monte Carlo samples. a Mean, b variance

Similarly, the computations of the sample variance for different values of \(\varepsilon \) are presented in Fig. 17. This figure, together with the \(L^1\) differences of the variance for successive reductions of the perturbation parameter \(\varepsilon \) (shown in Fig. 16b), clearly shows convergence of the variance as \(\varepsilon \rightarrow 0\). Moreover, Fig. 17 clearly indicates that the limit variance is nonzero. This strongly suggests that the EMV solution can be non-atomic, even for atomic initial data. These results are consistent with the claims of Theorem 9.

To further demonstrate the non-atomicity of the resulting measure-valued solution, we have plotted the probability density functions (approximated by empirical histograms) for density at the points \(x=(0.5,0.7)\) and \(x=(0.5,0.8)\) in Fig. 18 for a fixed mesh of size \(1024^2\). We see that the initial unit mass centered at \(\rho =2\) (\(\rho =1\), respectively) at \(t=0\) is smeared out over time, and at \(t=2\) the mass has spread out over a range of values of \(\rho \) between 1 and 2.
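Such an empirical histogram can be sketched as follows, assuming the M sampled density values at one grid point have been collected into a one-dimensional array. The function name, the number of bins and the value range \([1,2]\) are illustrative choices, not taken from the computations reported here.

```python
import numpy as np

def empirical_pdf(values, bins=20, value_range=(1.0, 2.0)):
    """Histogram estimate of the probability density of samples at one point."""
    pdf, edges = np.histogram(values, bins=bins, range=value_range, density=True)
    centers = 0.5 * (edges[:-1] + edges[1:])
    return centers, pdf

rng = np.random.default_rng(0)
rho_spread = rng.uniform(1.0, 2.0, size=400)   # synthetic stand-in for a non-atomic state
rho_atomic = np.full(400, 2.0)                 # all samples equal: an atomic state

centers, pdf_spread = empirical_pdf(rho_spread)
_, pdf_atomic = empirical_pdf(rho_atomic)
```

For an atomic measure all the mass falls into a single bin, whereas a non-atomic measure spreads over many bins. With only 400 samples and 20 bins, each bin holds about 20 samples on average, so visible noise in the histogram is to be expected.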

Figure 19 shows the same quantities, but for a fixed time \(t=2\) over a series of meshes. Although a certain amount of noise seems to persist on the finer meshes—most likely due to the low number of Monte Carlo samples—it can be seen that the probability density functions seem to converge with mesh refinement.

6.3 Richtmeyer–Meshkov Problem

As a second numerical example, we consider the two-dimensional Euler equations (31) in the computational domain \(x\in [0,1]^2\) with initial data:

The radial density interface \(I(x,\omega ) = 0.25 + \varepsilon Y(\varphi (x),\omega )\) is perturbed with

where \(\varphi (x) = \arccos ((x_1 - 1/2)/|x - (0.5,0.5)|)\) and \(a_n,b_n,k\) are the same as in Sect. 6.1.

As in Sect. 6.1, we use periodic boundary conditions in both directions.

Approximate density for a single sample for the Richtmeyer–Meshkov problem (36) for different grid resolutions at time \(t=4\)

6.3.1 Lack of Sample Convergence

As in the case of the Kelvin–Helmholtz problem, we test whether numerical approximations for a single sample converge as the mesh is refined. To this end, we compute approximate solutions of the two-dimensional Euler equations with initial data (36) using a second-order MUSCL-type finite volume scheme, based on the HLLC solver and implemented in the FISH code [44]. The numerical results, presented in Fig. 20, show the effect of grid refinement on the density for a single sample at time \(t=4\). Note that by this time, the leading shock wave has exited the domain and has reentered from the corners on account of the periodic boundary conditions. Furthermore, this reentrant shock wave interacts with and strongly perturbs the interface, forming a very complex region of small-scale eddy-like structures. As seen from Fig. 20, there seems to be no convergence as the mesh is refined. This lack of convergence is quantified in Fig. 21, where we present differences in \(L^1\) for successive mesh resolutions (7) and see that the approximate solutions for a single sample do not form a Cauchy sequence.

6.3.2 Convergence of the Mean and the Variance

Next, we test for convergence of statistical quantities of interest as the mesh is refined. First, we check the convergence of the mean through the Monte Carlo approximation (25) with \(M=400\) samples. The numerical results for the density at time \(t=4\) at different grid resolutions are presented in Fig. 22. The figure clearly shows that the mean converges as the mesh is refined. This convergence is further verified in Fig. 23a, where we plot the difference in mean (33) for successive resolutions. This figure indicates that the mean of the approximations forms a Cauchy sequence and hence converges. Figure 22 shows that small-scale features are averaged out in the mean and only large-scale structures, such as the strong reentrant shocks (note the periodic boundary conditions) and mixing regions, are retained through the averaging process.

Mean density for the Richtmeyer–Meshkov problem with initial data (36) for different grid resolutions at time \(t=4\). All results are obtained with 400 Monte Carlo samples