Abstract

A spatio-temporal model of housing price trends is developed that focuses on individual housing sales over time. The model allows for both the spatio-temporal lag effects of previous sales in the vicinity of each housing sale, and for general autocorrelation effects over time. A key feature of this model is the recognition of the unequal spacing between individual housing sales over time. Hence the residuals are modeled as a first-order autoregressive process with unequally spaced events. The maximum-likelihood estimation of this model is developed in detail, and tested in terms of simulations based on selected data. In addition, the model is applied to a small data set in the Philadelphia area.

1 Introduction

The present model grew out of an effort to identify the impacts of certain Community Development Corporation (CDC) housing projects on their local housing markets in Philadelphia. While no single measure can effectively capture neighborhood revitalization, it is becoming common practice to use changes in housing prices as a summary measure.Footnote 1 Hence this model focuses on the specific problem of identifying trends in housing prices within a given region based on a time series of individual housing sales transactions. Because our approach to this time-series problem appears to be new to the housing literature, the objective of the present paper is to present a self-contained development of this approach.Footnote 2 The resulting model is illustrated by a small example from the Philadelphia study. A presentation of the full study will be given in a subsequent paper [see Wu and Smith (2009)].

Since housing prices are well known to be influenced by the prices of recent house sales nearby, one must allow for possible spatio-temporal dependencies between such prices. In addition, there are generally a host of other processes occurring over time that result in unobserved temporal autocorrelations among housing prices. But since individual housing sales do not occur at regular time intervals, it is difficult to model such processes in terms of standard discrete time series. An approach to unequally spaced temporal events has been developed for first-order autoregressive [AR(1)] processes by a number of authors (see Wansbeek and Kapteyn 1985; McKenzie and Kapuscinski 1997; Baltagi and Wu 1999). A continuous version of these models [CAR(1)] has also been developed by Jones and Boadi-Boateng (1991) and Jones (1993). The continuous version is certainly the most flexible one, but involves stochastic differential equations that rely on rather sophisticated analytical methods. Moreover, since housing sales are basically recorded on a daily basis, there is no need to consider finer time intervals. Hence we choose to develop a standard AR(1) model on daily time intervals, and then embed the observed sales events within this process. The approach we adopt is most closely related to Baltagi and Wu (1999). But since their formulation is in terms of panel data, it is convenient to give a self-contained development for the present case. Finally, an interesting alternative approach to modeling sales transactions with unequal time intervals was proposed by Pace et al. (2000). Because of its close similarity to the present paper, a detailed comparison of these two models is presented in Sect. 5 below.

We begin in the next section by developing the basic model, and then consider maximum likelihood estimation and testing of its parameters. This is followed by a small simulation study to examine the properties of the estimation procedure. The model is then applied to a selected sample from the Philadelphia CDC study, and is compared with the approach of Pace et al. (2000). Finally, a possible extension is considered in the concluding section of the paper.

2 Development of the model

Consider a sequence of sales prices \( (y_i : i = 1,\ldots,n) \), resulting from the sale of individual houses at distinct time points \( (t_i : i = 1,\ldots,n) \) in a given metropolitan area.Footnote 3 Such prices of course depend on a host of attributes, \( x_i = (x_{i1},\ldots,x_{ik}) \), of each house i, as well as on the sales prices of houses recently sold in the immediate area of house i. Here we model such dependencies by spatio-temporal lag weights, \( w_{ij} \), that are assumed to be positive only if house j is sold prior to house i,Footnote 4 and is “sufficiently close” to i in both time and space to be an influential factor. More precisely, it is here assumed that there is some threshold time interval, Δ, and threshold distance, d, beyond which other housing sales have no direct influence on the price of house i. Hence, if the relevant distance from i to j is denoted by \( d_{ij} \), then it is assumed that only houses in the spatio-temporal neighborhood,

\[ N_i = \{\, j : t_i - \Delta \le t_j < t_i,\; d_{ij} \le d \,\} \tag{1} \]

of i have direct influence on the sales price of house i. If \( n_i \) denotes the cardinality (size) of \( N_i \), then the corresponding lag weights, \( w_{ij} \), are given by

\[ w_{ij} = \begin{cases} 1/n_i, & j \in N_i \\ 0, & \text{otherwise} \end{cases} \tag{2} \]

While this simple space-time threshold assumption plays no substantive role in the analysis to follow, it is used in both the simulations and the empirical application below.Footnote 5 With these conventions, our basic model of housing prices takes the following spatio-temporal lag form:

\[ y_i = \lambda \sum_{j=1}^{n} w_{ij}\, y_j + \beta_0 + \sum_{h=1}^{k} \beta_h x_{ih} + u_i, \quad i = 1,\ldots,n \tag{3} \]

In the first term, λ is an intensity parameter reflecting the strength of price dependencies. Note from the normalization assumption, \( w_{ij} = 1/n_i \), in (2) that this term is simply the average housing price in \( N_i \) weighted by λ.Footnote 6 To ensure that variances in housing prices remain bounded over time, it is required that the dependency of the price of house i on these averages be diminished, i.e., that (Green 2003, p. 255):

\[ |\lambda| < 1 \tag{4} \]

The second and third terms in (3) involve the usual linearity assumption on housing attributes, where \( \beta_h \) is the relevant coefficient for attribute h. (Of particular relevance for our present purposes is the inclusion of time as an attribute of each sale, in order to capture price trend effects.) Finally, the residuals \( (u_i : i = 1,\ldots,n) \) in (3) are assumed to be generated by an underlying autoregressive process, which we now develop.
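The neighborhood rule (1) and the weights (2) can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code. The function names `space_time_weights` and `spatial_lag` are hypothetical, sales are assumed to be sorted by sale day, and Euclidean distance is assumed for \( d_{ij} \).

```python
import math

def space_time_weights(times, coords, delta, d):
    """Return the lag weights w_ij of (1)-(2) as a dense list of lists.

    times  : sale days t_i, assumed sorted in increasing order
    coords : (x, y) location of each sale (Euclidean distance assumed)
    delta  : threshold time interval
    d      : threshold distance
    """
    n = len(times)
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # N_i: earlier sales within delta days and within distance d of sale i
        nbrs = [
            j for j in range(i)
            if times[i] - delta <= times[j] < times[i]
            and math.dist(coords[i], coords[j]) <= d
        ]
        for j in nbrs:
            w[i][j] = 1.0 / len(nbrs)  # w_ij = 1/n_i
    return w

def spatial_lag(w, y):
    """(Wy)_i: average price of the earlier neighbors of sale i (0 if N_i is empty)."""
    return [sum(wij * yj for wij, yj in zip(row, y)) for row in w]
```

For example, with sale days (0, 10, 15, 50), locations (0, 0), (1, 0), (5, 5), (0, 1), Δ = 60 and d = 3, the fourth sale has the first two as neighbors, each with weight 1/2. A dense matrix is used here only for readability. Each row has few nonzero entries, so a sparse row-wise representation would be the natural choice for large sales data sets.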

2.1 Autoregressive process with unequally spaced events

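Consider a discrete process, \( \{u(t) : t \in T\} \), over a sequence of consecutive days, \( T = \{t_1, t_1 + 1, \ldots, t_1 + k, \ldots\} \), which is generated by the following AR(1) process with autocorrelation parameter ρ:

\[ u(t) = \rho\, u(t-1) + \varepsilon(t), \quad t > t_1 \tag{5} \]

where in addition it is assumed that \( u(t_1) \sim N(0, v) \) and that all innovations, \( \{\varepsilon(t) : t > t_1\} \), are independently and identically distributed normal variates, \( \varepsilon(t) \sim N(0, \sigma^2) \). This process is also assumed to be stationary, so that \( u(t) \sim N(0, v) \) holds for all \( t \in T \). Since each u(t) is necessarily normal with zero mean by (5), this is equivalent to requiring that the variance stay constant, i.e., that \( \mathrm{var}[u(t)] \equiv v \). Moreover, since u(t − 1) and ε(t) are independent, this yields the following well-known stationarity condition:

\[ v = \rho^2 v + \sigma^2 \;\Longleftrightarrow\; v = \frac{\sigma^2}{1 - \rho^2} \tag{6} \]

In particular, it follows [as a parallel to (4)] that the residual variance will be finite only if

\[ |\rho| < 1 \tag{7} \]

Within this standard AR(1) setting, we now assume that the first sales event occurs on day \( t_1 \), so that the first residual in (3) is given by \( u_1 = u(t_1) \). Next, if the second sale occurs on day \( t_2 = t_1 + m \), then since \( u(t_1 + 1) = \rho\, u(t_1) + \varepsilon(t_1 + 1) \), and

\[ u(t_1 + 2) = \rho\, u(t_1 + 1) + \varepsilon(t_1 + 2) = \rho^2 u(t_1) + \rho\, \varepsilon(t_1 + 1) + \varepsilon(t_1 + 2), \tag{8} \]

it follows by successive substitutions into (5) for m > 2 that

\[ u_2 = u(t_1 + m) = \rho^m u_1 + \sum_{s=0}^{m-1} \rho^s\, \varepsilon(t_2 - s) \tag{9} \]

More generally, if we now replace \( (u_1, u_2, t_1, t_2) \) by \( (u_{i-1}, u_i, t_{i-1}, t_i) \) and replace \( m = t_2 - t_1 \) by \( \Delta_i = t_i - t_{i-1} \), then exactly the same argument shows that for all i = 2,…,n,

\[ u_i = \rho^{\Delta_i} u_{i-1} + e_i \tag{10} \]

where the (cumulative) innovations, \( e_i \), now have the form

\[ e_i = \sum_{s=0}^{\Delta_i - 1} \rho^s\, \varepsilon(t_i - s) \tag{11} \]

Moreover, since \( \mathrm{var}(u_i) = v \) for all i = 1,…,n, and since \( u_{i-1} \) and \( e_i \) are independent, it also follows from (10) that, in a manner similar to (6),

\[ \mathrm{var}(e_i) = (1 - \rho^{2\Delta_i})\, v \tag{12} \]

[which can also be verified by summing the variances in (11) and using the identity \( v = \sigma^2/(1 - \rho^2) \)]. Following Jones (1993), it is convenient to rescale the innovations, \( e_i \), as

\[ \varepsilon_i = \frac{e_i}{\sqrt{1 - \rho^{2\Delta_i}}} \tag{13} \]

so that \( \mathrm{var}(\varepsilon_i) \equiv \mathrm{var}(u_i) = v \). With this rescaling, it follows that the relevant residual process \( (u_i : i = 1,\ldots,n) \) for (3) can now be summarized as follows:

\[ u_1 = \varepsilon_1 \tag{14} \]

\[ u_i = \rho^{\Delta_i} u_{i-1} + \sqrt{1 - \rho^{2\Delta_i}}\; \varepsilon_i, \quad i = 2,\ldots,n, \quad \text{with } \varepsilon_1,\ldots,\varepsilon_n \overset{iid}{\sim} N(0, v) \tag{15} \]

It is of interest to note that this residual process, [(14), (15)], is essentially identical to the continuous formulation in Jones (1993, p. 62).Footnote 7 Thus, by allowing time intervals to become arbitrarily small, the above formulation provides a somewhat more intuitive motivation of CAR(1) processes.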
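The following sketch makes the embedding in (14) and (15) concrete. It draws one residual vector for a given sequence of sale days. It is an illustrative implementation under the stated model assumptions, and the name `simulate_residuals` is hypothetical.

```python
import math
import random

def simulate_residuals(times, rho, v, rng=None):
    """Draw (u_1, ..., u_n) from (14)-(15) for sorted sale days `times`."""
    if rng is None:
        rng = random.Random()
    sd = math.sqrt(v)
    u = [rng.gauss(0.0, sd)]                        # (14): u_1 = eps_1 ~ N(0, v)
    for i in range(1, len(times)):
        gap = times[i] - times[i - 1]               # Delta_i
        carry = rho ** gap                          # rho^{Delta_i}
        scale = math.sqrt(1.0 - rho ** (2 * gap))   # sqrt(1 - rho^{2 Delta_i})
        u.append(carry * u[-1] + scale * rng.gauss(0.0, sd))  # (15)
    return u
```

As a rough check, averaging \( u_i^2 \) over many draws for sale days (0, 1, 5, 30) with ρ = 0.5 and v = 4 should give values close to 4 at every sale, as stationarity requires. Also, two sales on the same day (a zero gap) receive identical residuals, which is the tie problem taken up below.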

However, there is one additional restriction in the present model that is not shared by the continuous model. In particular, observe that with a minimal time unit (such as 1 day), it is quite possible that more than one event occurs in the same time interval (such as more than one house sold on the same day). It should be clear from (15) that this leads to implausible results: when \( \Delta_i = 0 \), we have \( \rho^{\Delta_i} = 1 \) and \( \sqrt{1 - \rho^{2\Delta_i}} = 0 \), so the residuals \( u_i \) for simultaneous events must be identical.Footnote 8 There are several ways to treat this problem. First, one can simply choose the time unit to be smaller than the closest pair of consecutive events in the given data. While this is possible in the present setting, intervals smaller than a day are at best artificial, and have little meaningful content. A much more satisfactory approach would be to introduce an additional “idiosyncratic” error term in (3) reflecting the unobserved attributes of individual houses that are time independent. This approach is discussed in more detail in the Concluding Remarks. For the present, however, we choose to focus on a single set of errors driven by an underlying AR(1) process, and to examine the behavior of this model in detail. But since there are indeed a number of instances of houses sold on the same day in the application presented below, we have chosen to “jitter” the time sequence enough to allow a well-ordered sequence of sales events. Given that this error model [(14), (15)] is intended only to capture unobserved effects with some degree of stationary temporal dependency, such “tie-breaking” conventions are deemed to have little effect on the overall behavior of the model.
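The specific jittering rule is not given here. The following is therefore only one hypothetical way to break ties, and `jitter_ties` is an illustrative name, not the authors' procedure. Sales recorded on the same day are spread evenly within that day. This makes every \( \Delta_i \) strictly positive, so (15) no longer forces identical residuals.

```python
from collections import Counter

def jitter_ties(days):
    """Spread sales that share a day evenly across that day (hypothetical rule).

    `days` must be sorted. The k-th of m sales on day t (k = 0, ..., m-1) is
    moved to t + k/m, so every gap becomes strictly positive and no sale is
    moved onto or past the following day.
    """
    if any(b < a for a, b in zip(days, days[1:])):
        raise ValueError("sale days must be sorted")
    counts = Counter(days)  # number of sales recorded on each day
    seen = Counter()        # how many sales of each day have been placed so far
    out = []
    for t in days:
        out.append(t + seen[t] / counts[t])
        seen[t] += 1
    return out
```

Because the resulting gaps can be fractional, \( \rho^{\Delta_i} \) is then evaluated at non-integer powers, which is well defined for ρ in [0, 1).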

2.2 Matrix formulation of the model

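By combining (3), (14), and (15) we can give a more compact statement of the above model as follows. If we let \( y = (y_1,\ldots,y_n)' \), \( W = (w_{ij} : i, j = 1,\ldots,n) \), \( \beta = (\beta_0, \beta_1,\ldots,\beta_k)' \), \( u = (u_1,\ldots,u_n)' \), and let \( X = [x_{ij} : i = 1,\ldots,n,\; j = 0, 1,\ldots,k] \) with \( x_{i0} = 1 \), i = 1,…,n, then (3) becomes

\[ y = \lambda W y + X\beta + u \tag{16} \]

Similarly, if we let \( \varepsilon = (\varepsilon_1,\ldots,\varepsilon_n)' \), then [(14), (15)] can also be written in matrix form as

\[ u = D(\rho)\, u + C(\rho)\, \varepsilon \tag{17} \]

where D(ρ) is a (strictly) lower triangular matrix of the form

\[ D(\rho) = \begin{pmatrix} 0 & & & & \\ \rho^{\Delta_2} & 0 & & & \\ & \rho^{\Delta_3} & 0 & & \\ & & \ddots & \ddots & \\ & & & \rho^{\Delta_n} & 0 \end{pmatrix} \tag{18} \]

and C(ρ) is a diagonal matrix of the form

\[ C(\rho) = \mathrm{diag}\left(1,\ \sqrt{1 - \rho^{2\Delta_2}},\ \ldots,\ \sqrt{1 - \rho^{2\Delta_n}}\right) \tag{19} \]

Hence the present spatio-temporal model is now summarized by (16) through (19) [together with the implicit parameter restrictions (4) and (7)].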
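As a numerical check on (17) through (19), the sketch below builds D(ρ) and C(ρ) for a short list of sale days. It then forms \( B = (I_n - D)^{-1}C \) by forward substitution and compares \( vBB' \) with \( v\rho^{|t_i - t_j|} \), the covariance of a stationary daily AR(1) process sampled at those days. The function names are hypothetical, and dense lists are used only for clarity.

```python
import math

def d_and_c(times, rho):
    """Build D(rho) of (18) and C(rho) of (19) as dense lists for sorted sale days."""
    n = len(times)
    D = [[0.0] * n for _ in range(n)]
    C = [[0.0] * n for _ in range(n)]
    C[0][0] = 1.0
    for i in range(1, n):
        gap = times[i] - times[i - 1]
        D[i][i - 1] = rho ** gap
        C[i][i] = math.sqrt(1.0 - rho ** (2 * gap))
    return D, C

def residual_cov(times, rho, v):
    """Return v * B B' with B = (I - D)^{-1} C, the covariance of u implied by (17)."""
    n = len(times)
    D, C = d_and_c(times, rho)
    B = [[0.0] * n for _ in range(n)]
    for k in range(n):
        # Column k of B solves (I - D) b = C[:, k]; I - D is unit lower bidiagonal,
        # so b_i = c_ik + D[i][i-1] * b_{i-1} (forward substitution).
        B[0][k] = C[0][k]
        for i in range(1, n):
            B[i][k] = C[i][k] + D[i][i - 1] * B[i - 1][k]
    return [[v * sum(B[i][k] * B[j][k] for k in range(n)) for j in range(n)]
            for i in range(n)]
```

For sale days (0, 1, 4, 10), ρ = 0.6 and v = 2, every entry of the result should agree with \( 2 \cdot 0.6^{|t_i - t_j|} \) to rounding error. This confirms that the matrix form reproduces the daily process observed only at the sale dates.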

Note that there is a strong similarity between the present model and the well-known “spatial autoregressive model with autoregressive disturbances” summarized, for example, in Anselin and Florax (1995, pp. 22–24). However, it should be clear that the present autocorrelation parameter, ρ, enters in a more complex manner than the autoregressive disturbance parameter of that model. On the other hand, the present model is in many ways simpler to analyze, since there are no simultaneities either in space or time. As a consequence, both the spatial weight matrix, W, and the temporal dependency matrix, D(ρ), are lower triangular matrices, which greatly simplifies the analysis of this model.

2.3 Likelihood and concentrated likelihood functions

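To estimate the present model it is convenient to combine (16) and (17) into a reduced model form as follows. First, for notational simplicity, we write D = D(ρ) and C = C(ρ) and solve for u in terms of ε to obtain

\[ u = (I_n - D)^{-1} C\, \varepsilon = B\varepsilon \tag{20} \]

where \( B = (I_n - D)^{-1} C \).Footnote 9 Next we set \( A = I_n - \lambda W \) and write (16) as

\[ Ay = X\beta + u \tag{21} \]

Hence (20) and (21) are together equivalent to the reduced modelFootnote 10

\[ Ay = X\beta + B\varepsilon, \quad \varepsilon \sim N(0, v I_n) \tag{22} \]

This in turn implies that y is multivariate normal with distribution

\[ y \sim N\!\left(A^{-1}X\beta,\; v\, A^{-1} B B' (A')^{-1}\right) \tag{23} \]

Moreover, since W and D are strictly lower triangular, \( \det(A) = \det(I_n - D) = 1 \), so that \( \det(B) = \det(C) \). Hence the log likelihood function for parameters (β, v, λ, ρ) is given by

\[ L(\beta, v, \lambda, \rho \mid y) = -\frac{n}{2}\ln(2\pi) - \frac{n}{2}\ln v - \ln[\det(C)] - \frac{1}{2v}(Ay - X\beta)'(BB')^{-1}(Ay - X\beta) \tag{24} \]

where implicitly, A = A(λ) and B = B(ρ).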
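Because \( B^{-1} = C^{-1}(I_n - D) \), the quadratic form in (24) equals \( \|C^{-1}(I_n - D)(Ay - X\beta)\|^2 \), which can be computed in a single pass over the sales in time order. The sketch below evaluates (24) this way. It assumes distinct sale days (every \( \Delta_i > 0 \), e.g. after jittering) and a dense weight matrix, and its function names are hypothetical.

```python
import math

def whiten(r, times, rho):
    """Apply C^{-1}(I - D) to r: the rescaled innovations eps_i implied by (14)-(15)."""
    z = [r[0]]
    for i in range(1, len(r)):
        gap = times[i] - times[i - 1]
        z.append((r[i] - rho ** gap * r[i - 1]) / math.sqrt(1.0 - rho ** (2 * gap)))
    return z

def log_likelihood(y, X, w, times, beta, v, lam, rho):
    """Evaluate (24) using det(A) = 1 and (BB')^{-1} = (I - D)' C^{-2} (I - D)."""
    n = len(y)
    # r = Ay - X beta = y - lam * Wy - X beta (w is strictly lower triangular)
    r = [y[i] - lam * sum(w[i][j] * y[j] for j in range(i))
         - sum(xh * bh for xh, bh in zip(X[i], beta))
         for i in range(n)]
    z = whiten(r, times, rho)
    # ln det C = 0.5 * sum of ln(1 - rho^{2 Delta_i}), computed with log1p
    log_det_c = sum(0.5 * math.log1p(-rho ** (2 * (times[i] - times[i - 1])))
                    for i in range(1, n))
    quad = sum(zi * zi for zi in z)  # (Ay - X beta)'(BB')^{-1}(Ay - X beta)
    return (-0.5 * n * math.log(2 * math.pi) - 0.5 * n * math.log(v)
            - log_det_c - quad / (2.0 * v))
```

Without spatial lags or regressors, the result coincides with the Markov factorization of the residual density, \( \ln p(u_1) + \sum_{i \ge 2} \ln p(u_i \mid u_{i-1}) \). Here each conditional is normal with mean \( \rho^{\Delta_i}u_{i-1} \) and variance \( v(1 - \rho^{2\Delta_i}) \).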

In Appendix 1 (see footnote 2 above) it is shown that the associated concentrated likelihood function of ρ is given by:

where

with \( \hat{A}=A\,[\hat{\lambda }(\rho )] \) and where in addition,

and

with

This one-dimensional function can in principle be maximized by a simple line search to obtain the maximum-likelihood estimate, \( \hat{\rho } \). However, there are several practical considerations that should be mentioned at this point. First, in autocorrelation models where time intervals between events are unequal, the value of ρ depends strongly on the underlying time unit. In particular, since (15) shows that the only quantities involving ρ in the analysis are the powers \( \rho^{\Delta} \) (and their squares \( \rho^{2\Delta} \)), it is these values that determine the likelihood function in (25). So if time is rescaled by a factor α, then the identity \( \rho^{\Delta} \equiv \left(\rho^{1/\alpha}\right)^{\alpha\Delta} \) implies that \( \hat{\rho } \to \left(\hat{\rho }\right)^{1/\alpha} \). Hence, even though positive autocorrelation estimates will always lie between zero and one, the actual value of \( \hat{\rho } \) depends on the time units and can only be interpreted in this context.
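The line search can be sketched as follows. This is our own profiling of (24), offered as an assumption-labeled illustration rather than the formulas of Appendix 1. Because det(A) = 1, for fixed ρ the parameters (λ, β) enter (24) as a generalized least-squares regression of y on [Wy, X] with covariance \( vBB' \). The variance v is then replaced by its maximizer, the mean squared whitened residual. A grid over [0, 1) stands in for a proper one-dimensional optimizer, and all names are hypothetical. The sketch assumes at least one sale has earlier neighbors; otherwise the Wy column is zero and the regression is singular.

```python
import math

def _solve(a, b):
    """Solve a small linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x

def _whiten(r, times, rho):
    """Apply C^{-1}(I - D) from (17)-(19) to a vector."""
    z = [r[0]]
    for i in range(1, len(r)):
        g = times[i] - times[i - 1]
        z.append((r[i] - rho ** g * r[i - 1]) / math.sqrt(1.0 - rho ** (2 * g)))
    return z

def concentrated_loglik(rho, y, X, w, times):
    """Profile (24) over (lambda, beta, v) at fixed rho; returns (L_c, lambda, beta, v)."""
    n = len(y)
    wy = [sum(w[i][j] * y[j] for j in range(i)) for i in range(n)]
    cols = [wy] + [[row[h] for row in X] for h in range(len(X[0]))]  # [Wy, X]
    zy = _whiten(y, times, rho)
    zc = [_whiten(col, times, rho) for col in cols]
    xtx = [[sum(a * b for a, b in zip(ci, cj)) for cj in zc] for ci in zc]
    xty = [sum(a * b for a, b in zip(ci, zy)) for ci in zc]
    coef = _solve(xtx, xty)  # GLS estimate of (lambda, beta)
    resid = [zy[i] - sum(coef[k] * zc[k][i] for k in range(len(zc))) for i in range(n)]
    v_hat = sum(e * e for e in resid) / n
    log_det_c = sum(0.5 * math.log1p(-rho ** (2 * (times[i] - times[i - 1])))
                    for i in range(1, n))
    lc = -0.5 * n * (math.log(2 * math.pi) + 1) - 0.5 * n * math.log(v_hat) - log_det_c
    return lc, coef[0], coef[1:], v_hat

def line_search(y, X, w, times, grid=200):
    """Maximize L_c over rho = 0, 1/grid, ..., (grid - 1)/grid, i.e. within [0, 1)."""
    return max((concentrated_loglik(k / grid, y, X, w, times)[0], k / grid)
               for k in range(grid))[1]
```

The time-unit identity above can be checked with this sketch. Doubling all sale days while replacing ρ by \( \rho^{1/2} \) should leave \( L_c \) unchanged up to rounding.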

A second related issue concerns the sign of ρ. While (7) allows negative as well as positive values of ρ, it should be clear that negative dependencies are somewhat problematic in the present setting. In particular, the type of oscillation behavior implied by negative ρ depends crucially on the choice of time unit which, as mentioned above, can be quite arbitrary.Footnote 11 Moreover, since positive dependencies are of primary interest here, we simply restrict ρ to the interval [0, 1), and take all zero values of \( \hat{\rho } \) to mean “no autocorrelation.”Footnote 12

A final consideration that arises with unequally spaced events relates to the degree of inequality between time intervals. In particular, if the difference between the smallest and largest values of Δ is considerable, then numerical precision problems can result. For example, if time units are in days (as in the present application) and there is a 30-day lag between two consecutive sales, then standard double-precision computations of the quantity \( (1 - \rho^{2\Delta_i})^{1/2} = \sqrt{1 - \rho^{60}} \) will be numerically equal to one for all values ρ < 0.5. While most cases are not this extreme, it should be clear that the concentrated likelihood function will tend to be very flat for small values of ρ. The consequences of this flatness are discussed further in Sect. 3.1 below.
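The rounding effect described above is easy to reproduce in double precision. In the sketch below (function names hypothetical), `math.sqrt(1.0 - rho ** 60)` returns exactly 1.0 for ρ = 0.3 and ρ = 0.49 but not for ρ = 0.6. Computing the corresponding log-determinant contribution \( \tfrac{1}{2}\ln(1 - \rho^{2\Delta}) \) with `math.log1p` keeps the tiny value instead of rounding it to zero.

```python
import math

def sqrt_term(rho, gap):
    """sqrt(1 - rho^(2*gap)) computed naively in double precision."""
    return math.sqrt(1.0 - rho ** (2 * gap))

def log_c_term(rho, gap):
    """0.5 * ln(1 - rho^(2*gap)) computed with log1p, which keeps tiny contributions."""
    return 0.5 * math.log1p(-rho ** (2 * gap))
```

The use of `log1p` removes only the rounding artifact. The concentrated likelihood remains genuinely flat for small ρ, because the exact contributions are themselves negligible.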

Given an estimate, \( \hat{\rho } \), of ρ, one can immediately obtain corresponding estimates \( (\hat{\lambda },\hat{\beta },\hat{v}) \) by successive substitution into (29), (28), (27) and (26). Hence whenever \( \hat{\rho } \) is unique, it follows that all estimates will be unique. Here it should be noted that while the concentrated likelihood function, L c (ρ|y), can fail to be concave (as can be shown by numerical examples), the maximum-likelihood estimates, \( \hat{\rho } \), have proved to be unique in all simulated cases studied to date. Thus it appears that non-uniqueness of parameter estimates is not a serious issue in the present model.

Finally, this set of estimates \( (\hat{\beta },\hat{v},\hat{\lambda },\hat{\rho }) \) can be used to estimate asymptotic variances for testing purposes. A complete derivation of the asymptotic covariance matrix is given in Appendix 2 (see footnote 2 above).

3 Selected simulation results

Two types of simulation analyses were done for this model. The first involved an entirely artificial space-time process constructed on a square grid of housing sites. The purpose of these simulations was to examine the effect of sample size on the reliability of parameter estimates. As will be shown below, maximum-likelihood estimates for this model are quite sensitive to sample size, and can be extremely inefficient for small samples. In view of this, it was crucial to determine whether such effects were present in the CDC application developed below. So a second set of simulations was run for that model, using the empirical space-time structure and sample size, together with the parameter estimates obtained from the given data set. The results of these simulations, presented in Sect. 4.3 below, confirmed that the sample size of this application (400 sales) was sufficiently large to achieve reasonably efficient estimates. Moreover, since data sets for housing sales are typically much larger than this, the present results suggest that maximum-likelihood methods should produce reliable results in most applications. But given the wider range of potential applications of this model, it is of interest to consider its small sample behavior.Footnote 13

3.1 A 100-sample case

Here “small sample” behavior is well illustrated by sample sizes as large as 100. To construct such a case, a population of 100 houses was placed on a 10 by 10 unit grid of locations, and these houses were sold in random order with time intervals sampled from an exponential (“memoryless”) distribution with a mean of 4 days. Time intervals were then rounded upward so that the minimal time interval was 1 day. To construct space-time dependencies as in (1), a threshold time interval of Δ = 60 days was chosen, along with a threshold distance of three units, d = 3. This produced a W matrix of space-time influences in which 65% of the houses were influenced by the previous sale of at least one house nearby.Footnote 14 Two housing attribute values \( (x_1, x_2) \) were randomly sampled from a uniform distribution on the unit interval, producing a 100 × 2 matrix, X, of housing data. The parameter values chosen for this simulation were β = (5, 1, 2)′, v = 4, λ = 0.4, and ρ = 0.2. The variance, v, was chosen to be relatively large in comparison to the conditional mean. This was done to maintain some degree of comparability with the application below, which also involves two explanatory variables and thus involves substantial unobserved variation. In addition, the value of ρ was chosen to be low enough to allow substantial unobserved variation between sales occurring only 1 day apart (as discussed at the end of Sect. 2.1 above).
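The simulation design just described can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the authors' code. The first sale is placed on day 0, distance is Euclidean on the grid, and prices are generated sequentially from (3) using the residual recursion (14)-(15). `simulate_design` and its defaults are hypothetical names chosen to match the parameter values above. The share of houses with at least one earlier neighbor depends on the random draw, so it need not equal the 65% reported for the original simulation.

```python
import math
import random

def simulate_design(side=10, mean_gap=4.0, delta=60, d=3.0,
                    beta=(5.0, 1.0, 2.0), v=4.0, lam=0.4, rho=0.2, seed=0):
    """Simulate one price vector on a side x side grid (hypothetical sketch)."""
    rng = random.Random(seed)
    sites = [(float(r), float(c)) for r in range(side) for c in range(side)]
    rng.shuffle(sites)                                    # houses sold in random order
    n = len(sites)
    times = [0]                                           # first sale on day 0 (assumption)
    for _ in range(n - 1):
        gap = math.ceil(rng.expovariate(1.0 / mean_gap))  # exponential gap, rounded up
        times.append(times[-1] + max(1, gap))             # minimal interval of 1 day
    # Space-time neighborhoods (1) and row-normalized weights (2)
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        nbrs = [j for j in range(i)
                if times[i] - times[j] <= delta and math.dist(sites[i], sites[j]) <= d]
        for j in nbrs:
            w[i][j] = 1.0 / len(nbrs)
    # Constant plus two attributes drawn from U(0, 1)
    X = [[1.0, rng.random(), rng.random()] for _ in range(n)]
    # Residuals from (14)-(15)
    sd = math.sqrt(v)
    u = [rng.gauss(0.0, sd)]
    for i in range(1, n):
        g = times[i] - times[i - 1]
        u.append(rho ** g * u[-1] + math.sqrt(1.0 - rho ** (2 * g)) * rng.gauss(0.0, sd))
    # Prices from (3); W is strictly lower triangular, so y_j for j < i is already known
    y = []
    for i in range(n):
        lag = sum(w[i][j] * y[j] for j in range(i))
        y.append(lam * lag + sum(b * x for b, x in zip(beta, X[i])) + u[i])
    return times, sites, w, X, y
```

Repeating this for many seeds and re-estimating each draw would mimic the kind of experiment summarized in Table 1.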

In this setting, 1,000 sales-price vectors, \( y = (y_i : i = 1,\ldots,100) \), were simulated. The resulting distributions of parameter estimates are summarized in Table 1 below.

Here the means of all parameters are close to their true values, but the standard deviations of most parameters are quite high. Recall that the high variance, v = 4, is a contributing factor here, especially for the β parameters. This can be seen in Fig. 1a below, where even the most inefficient estimator, \( \hat{\beta }_{1} \), is symmetrically distributed about its true value, \( \beta_1 = 1 \) (shown by the arrowhead below the figure). The situation for \( \hat{\lambda } \) is surprisingly much better than for the betas, and is seen in Fig. 1b to be much more concentrated around its true value λ = 0.4. But the situation for \( \hat{\rho } \) is quite different, as shown in Fig. 2a below.

Even though the sample mean of \( \hat{\rho } \) seems reasonable, the actual sampling distribution exhibits extreme variation. Notice also that this variation combined with the nonnegativity restriction on ρ (discussed at the end of Sect. 2.3 above) produces a large spike of zero values. Hence it is clear that without this restriction there would also be a severe negative bias in the estimates of ρ. Even with the restriction, there is a slight downward bias in \( \hat{\rho } \), which is directly inherited by \( \hat{v} \) as seen in Table 1 and Fig. 2b. This underestimation of variance is of particular significance for statistical inference, since the primary objective of this model is to account for the unobserved variation that is masked by space-time dependencies.

The main reason for the extreme variation in \( \hat{\rho } \) values turns out to be the relative flatness of the concentrated likelihood function for ρ, as seen in Fig. 3 below. There, a case with \( \hat{\rho } \) close to the mean [in (a)] and a case with \( \hat{\rho } \) truncated to zero [in (b)] are seen to have similar concentrated likelihood functions, both very flat in the range of low ρ values. This can in turn be attributed to the fact that for all time intervals, Δ, that are well above one (say at least 5 days), the values of \( \rho^{\Delta} \) and \( \sqrt{1 - \rho^{2\Delta}} \) are approximately zero and one, respectively, for all low values of ρ. Hence these terms are essentially constant for small ρ. This flatness of course also results in small (absolute) values of the Hessian for the concentrated likelihood function, and hence tends to inflate the asymptotic variance of \( \hat{\rho } \) estimates. (Indeed, for the 1,000 simulations above, only about 25% resulted in a significantly positive value of ρ.)
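The flatness argument can be checked directly by tabulating \( \rho^{\Delta} \) and \( \sqrt{1 - \rho^{2\Delta}} \) for a small ρ. The helper `decay_table` below is a hypothetical name. With ρ = 0.2, the first quantity is already below 0.001 at Δ = 5, and the second is within about \( 10^{-7} \) of one at that gap.

```python
import math

def decay_table(rho, gaps):
    """Return (gap, rho^gap, sqrt(1 - rho^(2*gap))) for each time interval in `gaps`."""
    return [(g, rho ** g, math.sqrt(1.0 - rho ** (2 * g))) for g in gaps]

for gap, carry, scale in decay_table(0.2, [1, 2, 5, 10, 30]):
    print(f"gap={gap:>2}  rho^gap={carry:.2e}  sqrt(1-rho^(2 gap))={scale:.10f}")
```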

Finally, since all other estimates are constructed from estimates of ρ, it should be clear that this instability in ρ estimates will be inherited by all other estimates.Footnote 15 Thus it would appear that for samples this small, autocorrelated errors with unequal spacing can create substantial problems for parameter estimation.

3.2 A 400-sample case

Given these small-sample problems, it is of interest to extend the above example to a grid large enough to allow a sample size comparable to the sample size, n = 400, in the CDC application below. Hence a 20 by 20 grid was used to obtain 400 housing sites. These were again sold in random order, with lag times defined by rounded samples from an exponential distribution with mean equal to 4 days. A 400 × 2 matrix, X, of housing attributes was again sampled from the uniform distribution, and the same parameter values for [β, v, λ, ρ] were used. The results are shown in Table 2 above.

Here we see that there is considerable improvement with respect to all estimates. Not only are the means slightly better, but the sample standard deviations are roughly cut in half. The reason is made clear by the histogram for \( \hat{\rho } \) in Fig. 4a below, which exhibits much better statistical properties.

While a spike at zero is still evident, there is now a clear concentration around the true value, ρ = 0.2, with only a slight downward bias and a much smaller standard deviation. This reduction is again inherited by all parameter estimates constructed from \( \hat{\rho } \). Of particular importance for statistical inference is the improvement in \( \hat{v} \), as seen in Table 2 and Fig. 4b. Not only is the standard deviation cut in half, but also the downward bias has almost disappeared. Hence it appears that for sample sizes of 400 and larger, the maximum-likelihood estimates of all parameters are quite reliable. Additional confirmation of this will be given in Sect. 4.3 below.

4 Application to a community development corporation area in Philadelphia

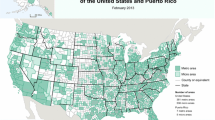

The following application is taken from the Philadelphia study mentioned in the Introduction, and focuses on one of the CDCs in this larger study (to be reported in a subsequent paper, Wu and Smith 2009). The present CDC, designated as the People’s Emergency Center (PEC), consists of nine contiguous block groups located in West Philadelphia, as shown in Fig. 5 below.

The specific objective of this study was to determine whether the overall trend of housing sales prices in each CDC area was significantly greater than that of comparable non-CDC areas in Philadelphia.Footnote 16 Here a control area (CA), consisting of 25 contiguous block groups in North Philadelphia, was identified (also shown in Fig. 5) and used for purposes of comparison. The data for the present illustration consist of all housing sales in these two areas during the 20-month period from January 2004 to September 2005. During this period there were 64 housing sales in PEC, and 336 housing sales in the larger control area, CA.Footnote 17

To compare sales trends, an instance of model (3) was constructed with \( y_i \) denoting the sales price per square foot of each house i (to control for variations in house sizes). To account for space-time dependencies among housing sales, the time and distance thresholds in (1) above were chosen to be Δ = 60 and d = 500. Thus for any two houses, i and j, the corresponding space-time weight, \( w_{ij} \), is nonzero if and only if house j is within 500 feet of house i and is sold no more than 60 days prior to the sale of house i.Footnote 18 Sales in each area were distinguished by a location dummy, \( \delta_i \) (= 1 for PEC houses i), and the sales time, \( t_i \), of each house i was used to capture (linear) sales-price trends. In this context, the relevant instance of model (3) for the present application takes the form:

\[ y_i = \lambda \sum_{j=1}^{n} w_{ij}\, y_j + \beta_0 + \beta_1 t_i + \beta_2 \delta_i + \beta_3 t_i \delta_i + u_i \tag{30} \]

where n = 400 and where \( t_i\delta_i \) denotes the interaction effect between time and location. For our present purposes, the coefficient of this interaction effect \( (\beta_3) \) is the key parameter of interest. This can be seen more clearly by rewriting (30) as follows:

\[ y_i = \begin{cases} \lambda \sum_{j} w_{ij}\, y_j + (\beta_0 + \beta_2) + (\beta_1 + \beta_3)\, t_i + u_i, & i \in \text{PEC} \\ \lambda \sum_{j} w_{ij}\, y_j + \beta_0 + \beta_1 t_i + u_i, & i \in \text{CA} \end{cases} \tag{31} \]

Here it is clear that \( \beta_3 \) represents the difference in slopes between the linear sales-price trends in the PEC and CA areas. Hence a positive value of \( \beta_3 \) would at least be consistent with a positive local effect of the PEC housing projects.
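For concreteness, the rewriting in (31) can be expressed as a small helper. The names `design_row` and `trend_slopes` are hypothetical. The illustrative coefficients are the rounded estimates later used as “true” values in Sect. 4.3. Since prices are per square foot and time is in days, the slopes are in dollars per square foot per day.

```python
def design_row(t, pec):
    """Row of X for (30): constant, sale day t, PEC dummy delta, and interaction t*delta."""
    delta = 1.0 if pec else 0.0
    return [1.0, float(t), delta, t * delta]

def trend_slopes(beta):
    """Daily price-trend slopes implied by (31): (CA slope, PEC slope) = (b1, b1 + b3)."""
    _, b1, _, b3 = beta
    return b1, b1 + b3

ca, pec = trend_slopes((10.52, 0.014, 12.38, 0.05))
print(f"CA: {ca:.3f}, PEC: {pec:.3f} dollars per sq. ft. per day")
```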

4.1 OLS estimation

To analyze this effect we start by treating (30) as a standard linear model. Here it is of interest to observe that if temporal autocorrelation effects are assumed to be absent, i.e., if ρ = 0, then (30) is precisely a standard linear model. To see this, consider the form of the full likelihood function in (24) and note first that if ρ = 0, then by definition, \( C = B = I_n \), and in particular ln[det(C)] = 0. Hence if we let

\[ \tilde{X} = [\,Wy,\; X\,], \qquad \tilde{\beta} = (\lambda, \beta')' \tag{32} \]

so that \( Ay - X\beta = y - \lambda Wy - X\beta = y - \tilde{X}\tilde{\beta} \), and rewrite the reduced likelihood with ρ = 0 as \( L(\beta, v, \lambda) \equiv L(\tilde{\beta}, v) \), then it follows at once from (24) that

\[ L(\tilde{\beta}, v) = -\frac{n}{2}\ln(2\pi) - \frac{n}{2}\ln v - \frac{1}{2v}(y - \tilde{X}\tilde{\beta})'(y - \tilde{X}\tilde{\beta}) \tag{33} \]

which is precisely the standard linear model likelihood function for \( (\tilde{\beta}, v) \).Footnote 19

Hence the ordinary least squares (OLS) estimates of \( (\tilde{\beta },v)\, = \,(\lambda ,\beta ,v) \) for this case are guaranteed to satisfy all the usual optimality properties of maximum-likelihood estimates.Footnote 20 More generally, if temporal autocorrelation effects are present but not too severe (as will be seen to be the case in this application), then OLS should continue to yield quite reasonable estimates. The results for OLS in the present case are summarized in Table 3 below:

Here both the time parameter \( (\beta_1) \) and the key time-location interaction parameter \( (\beta_3) \) are significantly positive, with the interaction being very significant. Moreover, the relative size of these coefficients shows that price increases in PEC are considerably higher than those in CA, with a rate of increase exceeding that of CA by more than 5 cents per square foot per day. Notice also that the spatial effect of nearby previous sales (λ) is significantly positive.

In the next section it is shown that our present spatio-temporal extension of OLS does not change these conclusions in any substantial way. Moreover, while the present analysis is based only on a small data set drawn from the larger Philadelphia CDC study, these same conclusions follow from the larger study as well. Hence it does appear that prices have been rising faster in the CDC areas than can be accounted for by general housing price increases during this period. In so far as increased housing values can be taken to reflect neighborhood revitalization, our results thus suggest that these Philadelphia CDC projects have achieved some degree of success. For a fuller discussion of these points, see Wu and Smith (2009).

However, there are a number of shortcomings of the present analysis that carry over to the larger study as well. The most obvious is the absence of additional housing attributes that should be controlled for (as reflected by the low value of pseudo R-squareFootnote 21). But unfortunately, such attributes were not available in usable form for the current data set.Footnote 22 The other key question for our present purposes relates to possible temporal autocorrelation among residuals that is not captured by the simple time trend in (30). As is well known, such autocorrelation tends to smooth residuals which can in principle inflate the statistical significance of key parameters. Indeed, this was the primary motivation for the present spatio-temporal model.

4.2 Spatio-temporal estimation

Hence we now re-examine these data in terms of the present spatio-temporal model. The results of this estimation are summarized in Table 4 above. Notice first that the key parameter estimates and significance levels are strikingly similar to those in Table 3.

Moreover, as is seen from the pseudo R-square and AIC values, the overall goodness of fit for both models is also very similar.Footnote 23 Hence, in view of the discussion in Sect. 4.1 above, these similarities would seem to suggest that temporal autocorrelation is not present. However, the P-value (0.044) for temporal autocorrelation, ρ, does indicate some statistical significance here. But as noted in Sect. 2.3 above, the estimated value of ρ can only be interpreted relative to the time units used in the analysis. In the present case, \( \hat{\rho } = 0.112 \), with a time unit of 1 day. This implies from (10) that for consecutive housing sales, i − 1 and i, that are more than 1 day apart, the influence of residual \( u_{i-1} \) on \( u_i \) is at most \( \hat{\rho}^{2} u_{i-1} \approx (0.013)\, u_{i-1} \). So in spite of its apparent statistical significance, the autocorrelation impact of ρ in the present context is actually minimal.
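The arithmetic behind this statement is simply the decay of \( \hat{\rho}^{\Delta} \) with the gap between consecutive sales. The short sketch below tabulates it for \( \hat{\rho} = 0.112 \); the variable names are illustrative.

```python
rho_hat = 0.112  # estimated daily autocorrelation reported above

# Weight of u_{i-1} in u_i, i.e. rho_hat ** Delta_i from (10), for gaps of 1 to 7 days
carryover = {gap: rho_hat ** gap for gap in range(1, 8)}
for gap, weight in carryover.items():
    print(f"{gap} day(s): {weight:.6f}")
```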

In summary, the present application of the spatio-temporal model has simply served to verify that the OLS estimates above do not appear to be severely influenced by unobserved temporal autocorrelation effects.

But given the small-sample estimation difficulties illustrated in Sect. 3.1 above, it is still of interest to ask whether the present value of \( \hat{\rho } \) might actually be underestimating the true value. While no definitive answer can be given to this question, it is instructive to simulate the behavior of maximum-likelihood estimates for this application.

4.3 Simulation of spatio-temporal estimates

Recall from Sect. 3.2 that the sample size, n = 400, was chosen specifically to be comparable with the present application. While the results there suggest that the present estimate of ρ should behave reasonably well, both the spatio-temporal dependency structure and the data used for that simulation are considerably different from the present case. Hence it is of interest to carry out the same simulation procedure using the present data set and W matrix. In addition, to gauge how well the model is doing in the region of the current parameter estimates, the “true” parameter values were chosen to be (slightly) rounded versions of the parameter estimates above (\( \beta_0 = 10.52 \), \( \beta_1 = 0.014 \), \( \beta_2 = 12.38 \), \( \beta_3 = 0.05 \), λ = 0.12, ρ = 0.112, and v = 305.01). The results of this simulation are summarized in Table 5 above.

Here it is clear that the maximum-likelihood estimates are behaving quite well for this data and set of parameter values above. Of particular interest are the ρ-estimates, which are shown in more detail in Fig. 6a below.

As in Fig. 4a there is still a noticeable spike at zero. But again there is strong clustering about the true value, ρ = 0.112, resulting in only a small downward bias in \( \hat{\rho } \). As expected, these results for ρ lead to even better results for the estimates of variance, v, as compared to Fig. 4b. In short, these results lend further credibility to the estimate of ρ above, and hence to the minimal nature of temporal autocorrelation in the present application.

5 Comparison with a spatio-temporal lag model approach

An alternative approach to the spatio-temporal analysis of individual housing sales with unequal time intervals was proposed by Pace et al. (2000).Footnote 24 Here housing sales are again ordered by time of occurrence, and the full structure of time dependencies among these sales is specified by a nonnegative matrix, \( T = (\tau_{ij}) \), with \( \tau_{ij} = 0 \) for all \( j \ge i \). Similarly, all spatial dependencies for a given sale are assumed to involve only previous sales, and the structure of such dependencies is represented by a nonnegative matrix, \( S = (s_{ij}) \), with \( s_{ij} = 0 \) for all \( j \ge i \). These are combined into a general linear model designated as the spatio-temporal linear model (STLM). For our present purposes, it is convenient to focus on the special case of STLM given by the following spatio-temporal lag model paralleling (16) above:Footnote 25

Here the simple expression, λWy, in (16) now has a more elaborate form, while the unobserved residuals, u, in (17) have a much simpler form. In essence, all spatio-temporal dependencies are here postulated to be among the observable sales prices, y, and not the residuals, u.Footnote 26 As the authors point out, the appeal of this model is its mathematical simplicity. In particular, since lower triangularity is preserved under products, the spatio-temporal lag matrix in (34) is lower triangular. This, together with the standard OLS specification of residuals, implies that all parameters can be consistently estimated using OLS. Hence this model can be applied to very large data sets, as is common in real estate markets.
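The lower-triangularity argument can be checked numerically. A minimal NumPy sketch, assuming randomly generated nonnegative strictly lower-triangular S and T (hypothetical weights) and hypothetical coefficients for the combined lag operator, since the coefficient names of (34) are not reproduced here:

```python
import numpy as np

def strictly_lower(n, rng, density=0.3):
    """Random nonnegative strictly lower-triangular matrix (hypothetical weights)."""
    M = rng.random((n, n)) * (rng.random((n, n)) < density)
    return np.tril(M, k=-1)

rng = np.random.default_rng(1)
n = 6
S, T = strictly_lower(n, rng), strictly_lower(n, rng)

# Products of strictly lower-triangular matrices are again strictly lower triangular.
print(np.allclose(np.triu(S @ T), 0.0), np.allclose(np.triu(T @ S), 0.0))   # True True

# Hence I minus any linear combination of S, T, ST and TS is unit lower triangular,
# so its determinant is 1 and it can be inverted by forward substitution.
L = 0.2 * S + 0.3 * T + 0.1 * (S @ T) + 0.1 * (T @ S)   # hypothetical coefficients
A = np.eye(n) - L
print(np.isclose(np.linalg.det(A), 1.0))                 # True
```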

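To compare (34) with the present model in [(16), (17)], we begin by considering the types of spatio-temporal interactions that can be captured by the product matrices, TS and ST. Here we choose to focus on ST, and write STy more explicitly as:

\[ [STy]_i = \sum_{h=1}^{n} s_{ih} \sum_{j=1}^{n} \tau_{hj}\, y_j = \sum_{h<i} \sum_{j<h} s_{ih}\, \tau_{hj}\, y_j , \qquad i = 1, \ldots, n . \]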

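Hence the spatio-temporal influence on the ith housing sale, say \( \phi_i(y_1,\ldots,y_{i-1}) \), is seen to be of the form:

\[ \phi_i(y_1,\ldots,y_{i-1}) = \sum_{h=2}^{i-1} \sum_{j=1}^{h-1} s_{ih}\, \tau_{hj}\, y_j . \]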

If we examine a typical term, \( s_{ih}\,\tau_{hj}\,y_j \), then the first point to notice is that by definition, \( j < h < i \). Hence all influences on the price of housing sale i by the prices, \( y_j \), of previous housing sales j involve indirect influences through some other housing sale h that is intermediate in time between i and j. To gain some insight into the nature of such influences, suppose first that both \( s_{ih} \) and \( \tau_{hj} \) are positive, so that event j does exert some influence on event i through h. Suppose further that \( \tau_{ih} = \tau_{ij} = 0 \) (so that events h and j both happen long before i), and similarly that \( s_{ij} = s_{hj} = 0 \) (so that events i and h are both very far from event j in space). Then this spatio-temporal interaction can be given the following interpretation: even though event h happened long before i, it is close enough in time to event j to share some common temporal influences with j. Moreover, even though event i is very far away from j in space, it is close enough to h to share some of the spatial effects of these earlier influences. Similar interpretations can be given to TS, and they show that such spatio-temporal influences are indeed quite meaningful. But the crucial point here is that these influences are necessarily indirect.
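The identity between this double sum and the matrix product STy is easy to confirm numerically. A minimal sketch with random (hypothetical) strictly lower-triangular S and T, using 0-based indices so that h ranges over the sales before i and j over the sales before h:

```python
import numpy as np

def phi(i, S, T, y):
    """phi_i = sum over h < i and j < h of s_ih * tau_hj * y_j (0-based indices)."""
    return sum(S[i, h] * T[h, j] * y[j] for h in range(i) for j in range(h))

rng = np.random.default_rng(2)
n = 8
S = np.tril(rng.random((n, n)), k=-1)   # hypothetical spatial weights, s_ij = 0 for j >= i
T = np.tril(rng.random((n, n)), k=-1)   # hypothetical temporal weights, tau_ij = 0 for j >= i
y = rng.normal(size=n)

explicit = np.array([phi(i, S, T, y) for i in range(n)])
print(np.allclose(explicit, S @ T @ y))   # True
print(explicit[:2])                       # [0. 0.]: the first two sales have no intermediate h
```

The zeros for the first two sales reflect the point made above: an "ST" effect requires at least one sale h strictly between j and i.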

In our present model, for example, it is assumed that housing sale i can be influenced by all previous sales j that are sufficiently close to i in both space and time, i.e., for which both \( \tau_{ij} > 0 \) and \( s_{ij} > 0 \). But with respect to the above interpretation, it is clear that if, for example, all other sales near i happened after i, so that \( s_{ih}\,\tau_{hj} = 0 \) for all \( h < i \), then j can have neither an “ST” effect nor a “TS” effect on i in (34). While it is true that j can still have additive “T” and “S” effects in (34), it should be clear that there is no way to model our particular joint space-time interaction effect in the STLM model.

However, there is another matrix product that does allow such effects. In particular, if one considers the Hadamard product, \( S \cdot T \), defined by simple component-wise multiplication, \( [S \cdot T]_{ij} = s_{ij}\,\tau_{ij} \), then this clearly encompasses the desired joint interactions since, for nonnegative S and T,

\[ [S \cdot T]_{ij} > 0 \iff s_{ij} > 0 \ \text{and} \ \tau_{ij} > 0 . \]

Of equal importance in the present context is the fact that Hadamard products obviously preserve lower triangularity. Hence by broadening the spatio-temporal lag operator in (34) to

one can encompass both direct and indirect spatio-temporal interactions in a manner that still permits consistent OLS estimation.
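The two-sale case makes the contrast between ordinary and Hadamard products concrete. In this hypothetical example, sale 1 follows sale 0 closely in both space and time, and there is no intermediate sale:

```python
import numpy as np

# Two sales, sale 0 then sale 1, close in both space and time (hypothetical weights).
S = np.array([[0.0, 0.0],
              [1.0, 0.0]])   # s_10 > 0: sale 1 is near sale 0 in space
T = np.array([[0.0, 0.0],
              [1.0, 0.0]])   # tau_10 > 0: sale 1 is near sale 0 in time

print(S @ T)   # all zeros: no intermediate sale h, so no "ST" effect
print(T @ S)   # all zeros: no "TS" effect either
print(S * T)   # Hadamard product: entry (1, 0) equals 1, the direct joint effect

# The Hadamard product remains strictly lower triangular, like S and T themselves.
print(np.allclose(np.triu(S * T), 0.0))   # True
```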

Finally, it is of interest to ask whether one can also use these simple weight matrices, S and T, to model spatio-temporal autocorrelation effects in the unobserved residuals, u. As in model [(16), (17)], we focus here on temporal autocorrelation and, as an alternative to (10) and (11), now consider the much simpler linear autoregressive model,

\[ u_1 = \varepsilon_1 , \]

\[ u_i = \rho \sum_{j<i} \tau_{ij}\, u_j + \varepsilon_i , \qquad i = 2, \ldots, n , \]

with independent innovations, \( \varepsilon_i \sim N(0, \sigma^2) \), for all i. Here the unequal time intervals between i and j are assumed to be captured by appropriately chosen values of \( \tau_{ij} \). This yields the matrix form,

\[ u = \rho\, T u + \varepsilon , \]

which is seen to be an instance of expressions (1) and (5) in Pace et al. (2000).

From a theoretical perspective, the major difficulty with this process is that stationarity is not possible without further modification. In particular, if it is assumed that

\[ \operatorname{var}(u_i) = v , \qquad i = 1, \ldots, n , \]

then it is easily shown that (41) and (42) are inconsistent, unless either T = O or ρ ≡ 0. The basic idea can be seen from (39) plus the first instance of (40). For by (39) it follows that

\[ v = \operatorname{var}(u_1) = \operatorname{var}(\varepsilon_1) = \sigma^2 . \]

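Hence by setting i = 2 in (40) we see that

\[ v = \operatorname{var}(u_2) = (\rho\,\tau_{21})^2 \operatorname{var}(u_1) + \operatorname{var}(\varepsilon_2) = (\rho\,\tau_{21})^2\, v + v . \]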

But if ρ ≠ 0, then \( \tau_{21} \ne 0 \) would imply that \( (\rho\,\tau_{21})^2 > 0 \), so that (44) can only hold if v = 0. Since this contradicts (42), we must have \( \tau_{21} = 0 \). Proceeding by induction, this forces T = O whenever ρ ≠ 0.Footnote 27
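The failure of stationarity can also be seen numerically. The sketch below is an illustration with hypothetical values ρ = 0.5 and σ² = 1: it computes the residual variances implied by \( u = (I - \rho T)^{-1}\varepsilon \) when every innovation, including the first, has variance σ², using the AR(1)-type pattern \( \tau_{i,i-1} = 1 \):

```python
import numpy as np

def residual_variances(T, rho, eps_var):
    """Diagonal of cov(u) for u = rho*T*u + eps, i.e. u = (I - rho*T)^{-1} eps,
    with independent innovations whose variances are given by eps_var."""
    n = T.shape[0]
    B = np.linalg.inv(np.eye(n) - rho * T)   # exists since det(I - rho*T) = 1
    cov_u = B @ np.diag(eps_var) @ B.T
    return np.diag(cov_u)

n, rho, sigma2 = 5, 0.5, 1.0
T = np.diag(np.ones(n - 1), k=-1)            # tau_{i,i-1} = 1, zero elsewhere
var_u = residual_variances(T, rho, np.full(n, sigma2))
# var(u_1) = 1.0 but var(u_2) = (rho*tau_21)^2 * sigma2 + sigma2 = 1.25: not constant
print(var_u)
```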

However, it is also well known that by a simple relaxation of the variance of the initial innovation, \( \varepsilon_1 \), this problem can be overcome for the special case of the standard AR(1) model [given by (10) and (11) above with \( \Delta_i \equiv 1 \)].Footnote 28 Here \( T = (\tau_{ij}) \) takes the special form with \( \tau_{i,i-1} = 1 \) for all i = 2,…, n, and \( \tau_{ij} = 0 \) elsewhere, so that by relaxing (43) and setting \( \tau_{21} = 1 \), it now follows from the argument in (44) that [in a manner paralleling (12)],

\[ v = \operatorname{var}(u_2) = \rho^2\, v + \sigma^2 \quad \Longrightarrow \quad v = \frac{\sigma^2}{1 - \rho^2} . \]

Hence by restricting the admissible values of ρ to the open interval (−1, 1) [so that v is defined], and assuming only that the initial variance in (39) takes the form

\[ \operatorname{var}(\varepsilon_1) = \frac{\sigma^2}{1 - \rho^2} , \]

a simple inductive argument shows that this initialization of the AR(1) model yields a well-defined stationary process as in (42), with \( \operatorname{var}(\varepsilon_i) = \sigma^2 \) for all i > 1.Footnote 29 From a practical viewpoint, this larger initial variance is taken to reflect the entire history of the unobserved process prior to i = 1.Footnote 30
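The effect of this single relaxation can be checked directly. With hypothetical values ρ = 0.5 and σ² = 1, setting only the first innovation variance to \( \sigma^2/(1-\rho^2) \) gives constant residual variances, and the covariances take the familiar AR(1) form \( v\rho^k \):

```python
import numpy as np

n, rho, sigma2 = 6, 0.5, 1.0
T = np.diag(np.ones(n - 1), k=-1)     # standard AR(1): tau_{i,i-1} = 1, zero elsewhere
eps_var = np.full(n, sigma2)
eps_var[0] = sigma2 / (1.0 - rho**2)  # relaxed initial innovation variance

B = np.linalg.inv(np.eye(n) - rho * T)
cov_u = B @ np.diag(eps_var) @ B.T    # cov(u) for u = (I - rho*T)^{-1} eps

v = sigma2 / (1.0 - rho**2)
print(np.allclose(np.diag(cov_u), v))                      # True: variance stationary
print(np.allclose(cov_u[0, :], v * rho ** np.arange(n)))   # True: cov(u_1, u_{1+k}) = v rho^k
```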

There remains the interesting question of whether this simple modification for the AR(1) model might also allow variance stationarity (42) for other possible specifications of T. More precisely, one may ask whether there exist (nonzero) specifications of \( T = (\tau_{ij}) \) other than AR(1) [together with an appropriate choice of \( \sigma^2 \)] such that the model:

with independent innovations \( (\varepsilon_i : i = 1,\ldots,n) \) satisfies stationarity condition (42). Here the answer is unfortunately negative. In particular, if one imposes the reasonable assumption that more distant time influences are never greater than more recent influences, i.e., that

\[ \tau_{ij} \le \tau_{ik} \qquad \text{for all } j < k < i , \]

and requires only that model [(45), (50)] hold for ρ in some sufficiently small open interval containing zero (so that full independence is allowed), then it is shown in Appendix 3 (see footnote 2 above) that AR(1) is indeed the only possibility here.Footnote 31 So while model (41) [or its relaxation in (45) and (50)] is very appealing from an analytical viewpoint, such models must always involve non-stationary residuals. Moreover, since the structure of these non-stationarities will depend critically on the particular specification of T, one must justify why this specification together with its implicit non-stationarities is appropriate for the particular time interval (and irregular event sequence) under study.Footnote 32
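A numerical illustration (not a proof) of this negative result, using a hypothetical T that satisfies the monotonicity assumption: each sale depends on the immediately preceding sale with weight 1 and on the one before that with weight 0.5, with ρ = 0.5 and σ² = 1. Matching var(u₁) = var(u₂) forces exactly the AR(1) initial variance, and stationarity then fails at the third sale:

```python
import numpy as np

def variances(T, rho, eps_var):
    """Diagonal of cov(u) for u = (I - rho*T)^{-1} eps with independent eps."""
    n = T.shape[0]
    B = np.linalg.inv(np.eye(n) - rho * T)
    return np.diag(B @ np.diag(eps_var) @ B.T)

n, rho, sigma2 = 6, 0.5, 1.0
# Hypothetical non-AR(1) weights: lag-1 weight 1, lag-2 weight 0.5 (never larger than lag 1)
T = np.diag(np.ones(n - 1), k=-1) + 0.5 * np.diag(np.ones(n - 2), k=-2)

# var(u_1) = var(u_2) forces var(eps_1) = sigma2 / (1 - rho^2), exactly as in AR(1) ...
eps_var = np.full(n, sigma2)
eps_var[0] = sigma2 / (1.0 - rho**2)
var_u = variances(T, rho, eps_var)
print(np.isclose(var_u[0], var_u[1]))   # True
# ... but the extra lag-2 weight then breaks stationarity from the third sale onwards
print(np.isclose(var_u[2], var_u[0]))   # False
```

Only one T and one value of ρ are examined here; the general statement rests on the argument in Appendix 3.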

6 Concluding remarks

In this paper, we have developed a spatio-temporal model that is particularly suitable for the analysis of address-level events occurring sporadically in time. In particular, this model not only avoids the need for temporal aggregation (that is typical of most spatio-temporal regression models), but also allows for the possibility of short-run temporal dependencies (such as changes in asking prices based on very recent sales). Moreover, while the above analysis suggests that more robust estimation procedures may be needed for small-sample applications, the present maximum-likelihood framework does appear to be well suited for the analysis of larger data sets, as typified by housing sales transactions in major urban areas.

There is, however, a more subtle limitation of the present model that does not arise in more standard temporal aggregation schemes. In particular, when events are aggregated with respect to regular time intervals, as in the standard AR(1) model, these aggregate events are by definition well separated in time. But in our present extension of the AR(1) model to individual events, it is quite possible for such events to occur at almost the same time. In particular, it is possible for several house sales to occur on the same day. Moreover, as discussed in Sect. 2.3 above, the steady-state conditions for this model imply that the unobserved residuals for such events must be identical. This would make perfectly good sense if the unobserved variation captured by \( u_i \) were due entirely to time-dependent phenomena affecting all events occurring at time \( t_i \). For then it could be argued by simple continuity that events occurring close in time to \( t_i \) would be similarly affected. But in actuality each residual, \( u_i \), necessarily includes any unobserved attributes of individual house i that influence its sales price but are not shared by other houses sold at times close to \( t_i \).
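The same-day property is immediate if, as described in the Introduction, the sales are embedded in an AR(1) process defined on daily time intervals. A minimal sketch, assuming a stationary daily AR(1) with variance v and hypothetical sale dates:

```python
import numpy as np

rng = np.random.default_rng(3)
rho, v = 0.9, 1.0
n_days = 30
# Stationary daily AR(1): x_t = rho x_{t-1} + e_t with var(e_t) = v (1 - rho^2)
x = np.empty(n_days)
x[0] = rng.normal(0.0, np.sqrt(v))
for t in range(1, n_days):
    x[t] = rho * x[t - 1] + rng.normal(0.0, np.sqrt(v * (1.0 - rho**2)))

sale_days = np.array([3, 3, 10, 17, 17, 17])   # hypothetical sale dates; several share a day
u = x[sale_days]                               # each sale inherits the value of its day
print(u[0] == u[1], u[3] == u[4] == u[5])      # True True: same-day residuals coincide
```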

Hence one important extension of the present model would be to incorporate such effects by the addition of an idiosyncratic residual, \( u_{oi} \), for each sales event i. If it is assumed that these residuals are iid normal variates, say with \( u_o = (u_{oi} : i = 1,\ldots,n)' \sim N(0, v_o I_n) \), and in addition that \( u_o \) is independent of the temporal effects captured by u, then the model in (16) could be extended as follows:

\[ y = \lambda W y + X\beta + u + u_o . \]

Here events occurring simultaneously would exhibit distinct idiosyncratic residuals even though they shared a common temporal residual.
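A small numerical sketch of the extended covariance structure, assuming (hypothetically) that the temporal residuals have stationary correlations \( \rho^{|t_i - t_j|} \) in daily time units, so that \( \operatorname{cov}(u + u_o) = vR + v_o I_n = v_o(\theta R + I_n) \) with \( \theta = v/v_o \). Same-day sales then have correlation θ/(1 + θ) rather than 1:

```python
import numpy as np

def total_covariance(sale_days, rho, v, v_o):
    """cov(u + u_o) = v R + v_o I, assuming R_ij = rho^|t_i - t_j| for the temporal
    residuals u and iid idiosyncratic residuals u_o independent of u."""
    lags = np.abs(sale_days[:, None] - sale_days[None, :])
    R = rho ** lags
    return v * R + v_o * np.eye(len(sale_days)), R

sale_days = np.array([0, 0, 5, 12])   # hypothetical: the first two sales share a day
rho, v, v_o = 0.8, 2.0, 1.0
theta = v / v_o                       # reparameterization theta = v / v_o

C, R = total_covariance(sale_days, rho, v, v_o)
# The same matrix written as v_o (theta R + I): v_o is a pure scale factor.
print(np.allclose(C, v_o * (theta * R + np.eye(len(sale_days)))))   # True

corr = C[0, 1] / np.sqrt(C[0, 0] * C[1, 1])
print(corr, theta / (1 + theta))      # equal: same-day correlation below 1
```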

From a conceptual viewpoint, this extension appears to be rather straightforward. But analytically it is considerably more complex. In particular, it is no longer possible to reduce the estimation problem to a single dimension. However, if this model is reparameterized by the standard technique of setting \( \theta = v/v_o \), then it can be shown that the extended model still exhibits many of the analytical properties developed for model (16) above. This extension will be presented in a subsequent paper.

Notes

The number of affordable housing units provided (built or renovated) by the CDC is also commonly used as a measure of “success.” However, it has been argued by many housing researchers that increased housing supply is only a measure of input, and thus is not a fair assessment of neighborhood revitalization as an outcome (see for example Smith (2003) and the many studies cited therein). Hence the assumption implicit in the present approach is that improved neighborhood quality should increase local demand for housing, and thus local housing prices.

Certain technical appendices have been omitted to save space, and can be found in the Electronic Supplementary Material online.

Alternatively, it may often be more appropriate to use the log of sales price as y i .

Note that there may in fact be some minimal time lag required before a given sales price can influence subsequent prices (such as the time required for this sale to be published in the local paper). Hence the inclusion of all prior sales is a simplifying assumption.

It is worth noting that even if more elaborate spatial kernel functions were to be used, the bandwidth, d, of each kernel is well known to be the single most critical determinant of spatial dependence (see for example Silverman 1986).

Note also that if housing prices are in log form, then this term corresponds to a geometric average of housing prices rather than an arithmetic average.

If time intervals, Δ t , are allowed to become “arbitrarily small,” and are denoted by δt, then in the formulation of Jones (1993), ϕ(δt) = exp(−α 0 δt) = ρ 2δt where ρ = exp(−α 0).

This does not arise in the continuous model where it is natural to assume that simultaneous events occur with probability zero.

It is shown in (A1.2) of Appendix 1 (see footnote 2) that \( \det(I_n - D) = 1 \), so that \( (I_n - D)^{-1} \) always exists and B is well defined.

It is also shown in (A1.2) of Appendix 1 that \( \det(A) = 1 \), so that \( A^{-1} \) always exists.

Indeed, such oscillation behavior loses all meaning in the continuous version of AR(1), where the autocorrelation parameter is required to be nonnegative [as is evident from the positivity restriction, \( \alpha_0 > 0 \), in Jones (1993) for the identity, \( \rho = \exp(-\alpha_0) \), of footnote 7 above].

Following Anselin and Moreno (2003), one might also interpret such negative estimates of ρ to be evidence that the present spatio-temporal specification is simply not supported by the data.

Here it should be emphasized that the following simulations are intended only to illustrate the “typical” small sample properties of this model in a single situation. Systematic simulation studies of model performance under a range of space-time structures and parameter values are left for future work.

Here parameter choices (d, Δ) for W were chosen to yield a degree of space-time interaction among housing sales that roughly matched that of the Philadelphia application below, where about 66% of the houses were influenced by previous sales (based on the space-time bandwidths used).

In view of this, it is somewhat surprising that estimates of λ appear to behave quite well by comparison. Moreover, since this relation persists in all simulations studied thus far, it raises an interesting (open) question as to why the expression for \( \hat{\lambda }(\hat{\rho }) \) in (28) above should remain more stable than \( \hat{\rho } \).

During this time period there was a significant increase in housing prices throughout the entire Philadelphia area.

This address-level sales data was extracted from the Philadelphia Board of Revision of Taxes (BRT) Properties File from 1990 to 2006.

It should be clear from Fig. 5 that the areas PEC and CA are sufficiently far apart to ensure that no space-time dependencies occur between houses in separate areas.

It should be noted here that since this model involves both lagged dependent variables and a time trend term, it is technically an instance of an “autoregressive process around a deterministic time trend.” But while the rates of convergence for OLS estimates are more delicate in this case, it can be shown that the standard model significance tests continue to be asymptotically valid. [See for example Hamilton (1994, Sect. 16.3)].

In particular, OLS estimates in the presence of lagged dependent variables are consistent and asymptotically normally distributed about their true values. However, since the lagged dependent variables and residuals are not fully independent, these estimates are typically biased for small samples. [See for example Davidson and MacKinnon (2004, Sect. 3.2)].

The standard R-square is known to be somewhat problematic in the case of lagged dependent variables. Hence for comparability with the spatio-temporal formulation below, we choose to define pseudo R-square here to be the squared correlation between y and its prediction, \( \hat{y}\, = \,\hat{E}(y|X)\, = \,(I_{n} \, - \,\hat{\lambda }W)^{ - 1} X\hat{\beta } \) with OLS estimates \( \hat{\lambda } \) and \( \hat{\beta } \). However, it is also of interest to note that in this particular application the unadjusted R-square (0.372) is almost the same as the pseudo R-square (0.362).

Here it should be noted that CDCs are local non-profit organizations whose funding is devoted almost entirely to housing projects, and not to data collection. Hence all housing data was drawn from the Philadelphia BRT (footnote 17 above). Moreover, while this BRT data did include provisions for a number of key housing attributes (such as “number of bedrooms” and “interior and exterior condition”), most of this data was either missing or unusable for other reasons.

In the present context of maximum-likelihood estimation, the AIC measure is considered by many to yield more reliable goodness-of-fit comparisons than pseudo R-square. However, the latter is somewhat easier to interpret.

We are indebted to an anonymous referee for pointing out the close similarities between our current model and that of Pace et al. (2000).

It should be remarked that Pace et al. (2000) motivate the form of their model by constructing [in expressions (1), (2) and (5)] a spatio-temporal extension of the spatial Durbin model (Anselin 1988) which does indeed account for spatio-temporal autoregressive dependencies in the unobserved residuals. But this development is somewhat misleading in the sense that their final model, STLM [expression (7)], ignores the crucial “common factor” constraints on coefficients that preserve these autoregressive dependencies. Hence, while STLM could in principle be used to test this “common factor hypothesis” (as implied by their discussion on p. 234), the model itself is simply a more elaborate version of the spatio-temporal lag model in (34) above.

A full argument is given in Appendix 3 (see footnote 2 above).

Proofs of this result can be found in any standard text, such as Hamilton (1994, Sect. 3.4).

For further discussion of this point see Greene (2003, Sect. 12.2).

It is also worth noting that this result depends only on the first two moments of the independent innovations \( (\varepsilon_i : i = 1,\ldots,n) \), so that the normality assumption in (7) is not required.

For example, if ρ > 0, then it is clear from the cumulative nature of (50) that sales residuals \( u_i \) with many sales in the recent past, i.e., with many positive dependencies \( (\tau_{ij} : j < i) \), will tend to have much higher variances than those with very few sales in the recent past.

References

Anselin L (1988) Spatial econometrics: methods and models. Kluwer, Dordrecht

Anselin L, Florax RJGM (1995) Small sample properties of tests for spatial dependence in regression models: some further results. In: Anselin L, Florax RJGM (eds) New directions in spatial econometrics. Springer, New York

Anselin L, Moreno R (2003) Properties of tests for spatial error components. Reg Sci Urban Econ 33:595–618

Baltagi BH, Wu PX (1999) Unequally spaced panel data regressions with AR(1) disturbances. Econom Theory 15:814–823

Davidson R, MacKinnon JG (2004) Econometric theory and methods. Oxford University Press, New York

Greene WH (2003) Econometric analysis, 5th edn. Prentice Hall, Upper Saddle River, NJ

Hamilton JD (1994) Time series analysis. Princeton University Press, Princeton

Hinkley DV, Efron B (1978) Assessing the accuracy of the maximum likelihood estimator: observed versus expected Fisher information. Biometrika 65:457–482

Jones RH (1993) Longitudinal data with serial correlation: a state-space approach. Chapman and Hall, New York

Jones RH, Boadi-Boateng F (1991) Unequally spaced longitudinal data with AR(1) serial correlation. Biometrics 47:161–175

Lindsay BG, Li B (1997) On second-order optimality of the observed Fisher information. Ann Stat 25:2172–2199

McKenzie CR, Kapuscinski CA (1997) Estimation in a linear model with serially correlated errors when observations are missing. Math Comput Simul 44:1–9

Ord K (1975) Estimation methods for models of spatial interaction. J Am Stat Assoc 70:120–126

Pace RK, Barry R, Gilley OW, Sirmans CF (2000) A method for spatial–temporal forecasting with an application to real estate prices. Int J Forecast 16:229–246

Searle SR (1971) Linear models. Wiley, New York

Silverman BW (1986) Density estimation for statistics and data analysis. Chapman and Hall, Boca Raton, FL

Smith BC (2003) The impact of community development corporations on neighborhood housing markets modeling appreciation. Urban Aff Rev 39(2):181–204

Wansbeek T, Kapteyn A (1985) Estimation in a linear model with serially correlated errors when observations are missing. Int Econ Rev 26:469–490

Whittle P (1954) On stationary processes in the plane. Biometrika 41:434–439

Wu P, Smith TE (2009) A spatio-temporal analysis of neighborhood change: impacts of community development projects on local property values. Working Paper (in progress), Department of Electrical and Systems Engineering, University of Pennsylvania

Acknowledgments

We owe special thanks to Gloria Guard (President) and Stephanie Wall (Outcomes Manager) at the People’s Emergency Center in Philadelphia for providing us with their data. We are also indebted to the referees of an earlier draft of this paper for many helpful comments.


Cite this article

Smith, T.E., Wu, P. A spatio-temporal model of housing prices based on individual sales transactions over time. J Geogr Syst 11, 333–355 (2009). https://doi.org/10.1007/s10109-009-0085-9
