Abstract

Generalizing both mixed-integer linear optimization and convex optimization, mixed-integer convex optimization possesses broad modeling power but has seen relatively few advances in general-purpose solvers in recent years. In this paper, we intend to provide a broadly accessible introduction to our recent work in developing algorithms and software for this problem class. Our approach is based on constructing polyhedral outer approximations of the convex constraints, resulting in a global solution by solving a finite number of mixed-integer linear and continuous convex subproblems. The key advance we present is to strengthen the polyhedral approximations by constructing them in a higher-dimensional space. In order to automate this extended formulation we rely on the algebraic modeling technique of disciplined convex programming (DCP), and for generality and ease of implementation we use conic representations of the convex constraints. Although our framework requires a manual translation of existing models into DCP form, after performing this transformation on the MINLPLIB2 benchmark library we were able to solve a number of unsolved instances and on many other instances achieve superior performance compared with state-of-the-art solvers like Bonmin, SCIP, and Artelys Knitro.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Mixed-integer linear programming (MILP) has established itself as a practical framework for optimization problems in scheduling, logistics, planning, and many other areas. Although these problems are in general NP-Hard, more than 50 years of investment in MILP techniques has resulted in powerful commercial and open-source solvers that can solve MILP problems of practical interest within reasonable time limits [30]. The aim of this paper is to develop methodologies for solving the more general class of mixed-integer convex optimization—or mixed-integer convex programming (MICP)—problems by reducing them to a sequence of MILP problems.

In order to employ MILP, we relax the convex constraints by representing them as an intersection of a finite number of half-spaces, that is, polyhedral constraints. Based on this idea, Duran and Grossman [17] and Leyffer [32] developed the outer approximation (OA) algorithm which solves a sequence of MILP and continuous, convex subproblems to deliver a globally optimal solution for MICP problems in a finite number of iterations; we present a generalized version of this algorithm in Sect. 2.

Despite the fact that many MICP approaches, including the OA algorithm, build on MILP approaches, there remains a significant performance gap between the two problem classes. Bonami, Kilinç, and Linderoth [10] note in a recent review that continued advances in MILP have translated into “far more modest” growth in the scale of problems which MICP solvers can solve within reasonable time limits. Hence, despite numerous potential applications (see the reviews [5, 10]), our perception is that MICP has not entered the mainstream of optimization techniques, perhaps with the exception of the special case of mixed-integer second-order cone programming (MISOCP) which we will discuss at length.

The cases in which the OA algorithm and others based on polyhedral approximation perform poorly are those in which the convex set of constraints is poorly approximated by a small number of half-spaces. In Sect. 3, we review a simple example identified by Hijazi et al. [27] where the OA algorithm requires \(2^n\) iterations to solve an MICP instance with n decision variables. Fortunately, [27] also propose a solution based on ideas from [41] that can significantly improve the quality of a polyhedral approximation by constructing the approximation in a higher dimensional space. These constructions are known as extended formulations, which have also been considered by [31, 44]. Although Hijazi et al. demonstrate impressive computational gains by using extended formulations, implementing these techniques within traditional MICP solvers requires more structural information than provided by “black-box” oracles through which these solvers typically interact with nonlinear functions. To our knowledge, MINOTAUR [33] is the only such solver to automate extended formulations. In Sect. 4 we identify the modeling concept of disciplined convex programming (DCP) [22], popularized in the CVX software package [21], as a practical solution to the problem of automatically generating extended formulations based on a user’s algebraic representation of an MICP problem.

Our investigation of DCP leads us in Sect. 5 to consider conic optimization as a representation of convex constraints that compactly encodes all of the information needed to construct extended formulations. This key observation links together a number of streams in convex optimization and MICP research, and in particular explains the increasingly popular role of MISOCP and how it can be extended to cover “general” MICP. Pulling these pieces together, in Sect. 6 we develop the first OA algorithm for mixed-integer conic optimization problems based on conic duality. In Sect. 7, we present Pajarito, a new solver for MICP based on the conic OA algorithm and compare its efficiency with state-of-the-art MICP solvers. We report the solution of a number of previously unsolved benchmark instances.

This paper is meant to be a self-contained introduction to all of the concepts beyond convex optimization and mixed-integer linear optimization needed to understand the algorithm implemented in Pajarito. Following broad interest in our initial work [35], we believe that a primary contribution of this paper is to compile the state of the art for readers and to tell a more detailed story of why DCP and conic representations are a natural fit for MICP. For example, in Sect. 2 we present the OA algorithm in a straightforward yet generic fashion not considered by previous authors that encompasses both the traditional smooth setting and the conic setting. A notable theoretical contribution beyond [35] is an example in Sect. 6 of what may happen when the assumptions of the OA algorithm fail: an MICP instance for which no polyhedral outer approximation is sufficient. Our computational results in Sect. 7 have been revised with more comparisons to existing state-of-the-art solvers, and as a final contribution above [35], our solver Pajarito has now been publicly released along with the data and scripts required to reproduce our experiments.

2 State of the art: polyhedral outer approximation

We state a generic mixed-integer convex optimization problem as

where X is a closed, convex set, and the set \(I \subseteq \{ 1, 2, \ldots , n \}\) indexes the integer-constrained variables, over which we have explicit finite bounds \(l_i\) and \(u_i\) for \(i \in I\). We assume that the objective function is linear. This assumption is without loss of generality because, given a convex, nonlinear objective function f(x), we may introduce an additional variable t, constrain (t, x) to fall in the set \(\{ (t,x) : f(x) \le t \}\), known as the epigraph of f, and then take t as the linear objective to minimize [10]. For concreteness, the convex set of constraints X could be specified as

for some set J where each \(g_j\) is a smooth, convex function, although we do not make this assumption. We refer to the constraints \(x_i \in {\mathbb {Z}}\,\, \forall \, i \in I\) as integrality constraints. Note that when these integrality constraints are relaxed (i.e., removed), MICONV becomes a convex optimization problem.

A straightforward approach for finding the global solution of (MICONV) is branch and bound. Branch and bound is an enumerative algorithm where lower bounds derived from relaxing the integrality constraints in (MICONV) are combined with recursively partitioning the space of possible integer solutions. The recursive partition is based on “branches” such as \(x_i \le k\) and \(x_i \ge k + 1\) for some integer-constrained index \(i \in I\) and some value k chosen between the lower bound \(l_i\) and the upper bound \(u_i\) of \(x_i\). In the worst case, branch and bound requires enumerating all possible assignments of the integer variables, but in practice it can perform much better by effectively pruning the search tree. Gupta and Ravindran [24] describe an early implementation of branch-and-bound for MICP, and Bonami et al. [11] more recently revisit this approach.

On many problems, however, the branch-and-bound algorithm is not competitive with an alternative family of approaches based on polyhedral outer approximation. Driven by the availability of effective solvers for linear programming (LP) and MILP, it was observed in the early 1990s by Leyffer and others [32] that it is often more effective to avoid solving convex, nonlinear relaxations, when possible, in favor of solving polyhedral relaxations using MILP. This idea has influenced a majority of the solvers recently reviewed and benchmarked by Bonami et al. [10].

In this section, we will provide a sketch of an outer approximation (OA) algorithm. We derive the algorithm in a more general way than most authors that will later be useful in the discussion of mixed-integer conic problems in Sect. 6, although for intuition and concreteness of the discussion we illustrate the key points of the algorithm for the case of the smooth, convex representation (1), which is the traditional setting. We refer readers to [1, 9, 17] for a more rigorous treatment of the traditional setting and Sect. 6 for more on the conic setting (i.e., when X is an intersection of convex cone and an affine subspace). We begin by defining polyhedral outer approximations.

Definition 1

A set P is a polyhedral outer approximation of a convex set X if P is a polyhedron (an intersection of a finite number of closed half-spaces, i.e., linear inequalities of the form \(a_i^Tx \le b_i\)) and P contains X, i.e., \(X \subseteq P\).

Note that we have not specified the explicit form of the polyhedron. While the traditional OA algorithm imagines P to be of the form \(\{ x \in {\mathbb {R}} : Ax \le b\}\) for some A and b, it is useful to not tie ourselves, for now, to a particular representation of the polyhedra.

Polyhedral outer approximations of convex sets are quite natural in the sense that every closed convex set can be represented as an intersection of an infinite number of closed half-spaces [29]. For instance, when X is given in the functional form (1) and each \(g_j : {\mathbb {R}}^n \rightarrow {\mathbb {R}}\) is smooth and finite-valued over \({\mathbb {R}}^n\) then the following equivalence holds:

where \(\nabla g_j(x')\) is the gradient of \(g_j\). When some \(g_j\) functions are not defined (or do not take finite values) over all of \({\mathbb {R}}^n\) then these “gradient inequalities” plus additional linear constraints enforcing the domain of each \(g_j\) provide a representation of X as an intersection of halfspaces; see [29] for further discussion.

Hence, in the most basic case, a polyhedral approximation of X can be derived by picking a finite number of points \(S \subset {\mathbb {R}}^n\) and collecting the half-spaces in (2) for \(x' \in S\) instead of for all \(x' \in {\mathbb {R}}^n\). What is perhaps surprising is that a finite number of half-spaces provides a sufficient representation of X in order to solve (MICONV) to global optimality, under some assumptions.Footnote 1 This idea is encompassed by the OA algorithm which we now describe.

Given a polyhedral outer approximation P of the constraint set X, we define the following mixed-integer linear relaxation of (MICONV)

Note that MIOA(P) is a relaxation because any x feasible to (MICONV) must be feasible to MIOA(P). Therefore the optimal value of MIOA(P) provides a lower bound on the optimal value of (MICONV). This bound may be NP-Hard to compute, since it requires solving a mixed-integer linear optimization problem; nevertheless we may use existing, powerful MILP solvers for these relaxations.

For notational convenience, we sometimes split the integer-constrained components and the continuous components of x, respectively, writing \(x = (x_I,x_{\bar{I}})\) where \(\bar{I} = \{1, \ldots , n\} \setminus I\). Given a solution \(x^* = (x_I^*,x_{\bar{I}}^*)\) to MIOA(P), the OA algorithm proceeds to solve the continuous, convex problem CONV(\(x^*_I\)) that results from fixing the integer-constrained variables \(x_I\) to their values in \(x^*_I\):

If CONV(\(x^*_I\)) is feasible, let \(x'\) be the optimal solution. Then \(x'\) is a feasible solution to (MICONV) and provides a corresponding upper bound on the best possible objective value. If the objective value of this convex subproblem equals the objective value of MIOA(P) (i.e., \(c^Tx' = c^Tx^*\)), then \(x'\) is a globally optimal solution of (MICONV). If there is a gap, then the OA algorithm must update the polyhedral outer approximation P and re-solve MIOA(P) with a tighter approximation, yielding a nondecreasing sequence of lower bounds.

To ensure finite termination of OA it is sufficient to prevent repetition of unique assignments of the integer-valued components \(x_I^*\), because there is only a finite number of possible values. The following lemma states a condition on the polyhedral outer approximation P that helps prove finite convergence.

Lemma 1

Fixing \(x_I \in {\mathbb {Z}}^{|I|}\), if \(x = (x_I,x_{\bar{I}}) \in P\) implies \(c^Tx \ge v_{x_I}\) for all \(x_{\bar{I}} \in {\mathbb {R}}^{n-|I|}\) where \(v_{x_I}\) is the optimal value of (CONV(\(x_I\))) then the OA algorithm must terminate if MIOA(P) returns an optimal solution \(x^*\) with integer components matching \(x^*_I = x_I\).

Proof

Assume we solve MIOA(P) and obtain a solution \(x^*\). If the integer part of \(x^*\) matches \(x_I\), by our assumptions we have \(r_P = c^Tx^* \ge v_{x_I}\), where \(r_P\) is the optimal value of MIOA(P). Since MIOA(P) is a relaxation and \(v_{x_I}\) is the objective value of a feasible solution, then we must have \(r_P = v_{x_I}\). Thus, we have proven global optimality of this feasible solution and terminate.

Note that Lemma 1 provides a general condition that does not assume any particular representation of the convex constraints X. In the traditional setting of the smooth, convex representation (1), if \(x'\) is an optimal solution to CONV(\(x^*_I\)) and strong duality holds, e.g., as in Prop 5.1.5 of Bertsekas [7], then the set of constraints

are sufficient to enforce the condition in Lemma 1 for finite convergence. In other words, within the OA loop after solving CONV(\(x^*_I\)), updating P by adding the constraints (3) is sufficient to ensure that the integer solution \(x_I^*\) does not repeat, except possibly at termination. Intuitively, strong duality in CONV(\(x^*_I\)) implies that there are no descent directions (over the continuous variables) from \(x'\) which are feasible to a first-order approximation of the constraints \(g_j(x) \le 0\) for \(j \in J\) [7]. Hence a point \(x = (x_I,x_{\bar{I}})\) sharing the integer components \(x_I = x_I^*\) must satisfy \(c^T(x-x') \ge 0\) or precisely \(c^Tx \ge v_{x_I^*}\). See [1, 17, 32] for further discussion.

If CONV(\(x^*_I\)) is infeasible, then to ensure finite convergence it is important to refine the polyhedral approximation P to exclude the corresponding integer point. That is, we update P so that

In the traditional smooth setting, it is possible in the infeasible case to derive a set of constraints analogous to (3), e.g., by solving an auxiliary feasibility problem where we also assume strong duality holds [1, 9].

To review, the OA algorithm proceeds in a loop between the MILP relaxation MIOA(P) and the continuous subproblem with integer values fixed CONV(\(x^*_I\)). The MILP relaxation provides lower bounds and feeds integer assignments to the continuous subproblem. The continuous subproblem yields feasible solutions and sufficient information to update the polyhedral approximation in order to avoid repeating the same assignment of integer values. The algorithm is stated more formally in Algorithm 1 and illustrated in Fig. 1.

The efficiency of the OA algorithm is derived from the speed of solving the MIOA(P) problem by using state-of-the-art MILP solvers. Indeed, in 2014 benchmarks by Hans Mittelman, the OA algorithm implemented within Bonmin using CPLEX as the MILP solver was found to be the fastest among MICP solvers [37]. In spite of taking advantage of MILP solvers, the traditional OA algorithm suffers from the fact that the gradient inequalities (3) may not be sufficiently strong to ensure fast convergence. In the following section, we identify when these conditions may occur and how to work around them within the framework of OA.

An illustration of the outer approximation algorithm. Here, we minimize a linear objective c over the ball \(x_1^2 + x_2^2 \le 2.5\) with \(x_1\) integer constrained. On the left, the point \(x'\) is the solution of the continuous relaxation, and we initialize the polyhedral outer approximation with the tangent at \(x'\). We then solve the MIOA(P) subproblem, which yields \(x^*\). Fixing \(x_1=2\), we optimize over the circle and update the polyhedral approximation with the tangent at \(x'\) (on the right). In the next iteration of the OA algorithm (not shown), we will prove global optimality of \(x'\)

3 State of the art: outer approximation enhancements

The outer approximation algorithm is powerful but relies on polyhedral outer approximations serving as good approximations of convex sets. The assumptions of the OA algorithm guarantee that there exists a polyhedron P such that the optimal objective value of MIOA(P) matches the optimal objective value of (MICONV), precisely at convergence. In the case that (MICONV) has no feasible solution, these assumptions furthermore guarantee that there exists an outer approximating polyhedron P such that MIOA(P) has no feasible solution. In Sect. 6, we discuss in more detail what may happen when the assumptions fail, although even in the typical case when they are satisfied, these polyhedra may have exponentially many constraints. Indeed, there are known cases where the OA algorithm requires \(2^n\) iterations to converge in \({\mathbb {R}}^n\). In this section, we review an illustrative case where the OA algorithm performs poorly and the techniques from the literature that have been proposed to address this issue.

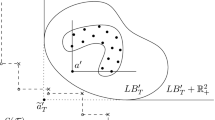

Figure 2 illustrates an example developed by Hijazi et al. [27], specifically the problem,

which, regardless of the objective vector c, has no feasible solutions. Any polyhedral approximation of the single convex constraint, a simple ball, requires \(2^n\) half-spaces until the corresponding outer approximation problem MIOA(P) has no feasible solution. At this point the OA algorithm terminates reporting infeasibility.

The example developed by Hijazi et al. [27] demonstrating the case where the outer approximation algorithm requires \(2^n\) iterations to converge in dimension n. The intersection of the ball with the integer lattice points (in black) is empty, yet any polyhedral outer approximation of the ball in \({\mathbb {R}}^n\) requires \(2^n\) hyperplanes before it has an empty intersection with the integer lattice, because the line segments between any two lattice points (one of which is drawn) intersect the ball. Hence, any hyperplane can separate at most one lattice point from the ball, and we require \(2^n\) of these to prove infeasibility

Hijazi et al. propose a simple yet powerful reformulation that addresses this poor convergence behavior. To motivate their reformulation, we recall a basic example from linear programming. The \(\ell _1\) unit ball, i.e., \(\{ x \in {\mathbb {R}}^n : \sum _{i=1}^n |x_i| \le 1 \}\), is representable as an intersection of half spaces in \({\mathbb {R}}^n\), namely the \(2^n\) half spaces of the form \(\sum _{i=1}^n s_ix_i \le 1\) where \(s_i = \pm 1\). This exponentially large representation of the \(\ell _1\) ball is seldom used in practice, however. Instead, it is common to introduce extra variables \(z_i\) with constraints

It is not difficult to show that \(||x||_1 \le 1\) if and only if there exist z satisfying the constraints (6). Note that these \(2n+1\) constraints define a polyhedron in \({\mathbb {R}}^{2n}\), which we call an extended formulation of the \(\ell _1\) ball because the \(\ell _1\) ball is precisely the projection of this polyhedron defined in (x, z) space onto the space of x variables. It is well known that polyhedra, such as the \(\ell _1\) ball, which require a large description as half-spaces in \({\mathbb {R}}^n\) might require many fewer half-spaces to represent if additional variables are introduced [36]. Note, in this case, that the extended formulation is derived by introducing a variable \(z_i\) to represent the epigraph \(\{ (z,x) : |x| \le z \}\) of each \(|x_i|\) term, taking advantage of the fact that the \(\ell _1\) ball can be represented as a constraint on a sum of these univariate functions.

The solution proposed by Hijazi et al. and earlier by Tawarmalani and Sahinidis [41] follows this line of reasoning by introducing an extended formulation for the polyhedral representation of the smooth \(\ell _2\) ball. Analogously to the case of the \(\ell _1\) ball, Hijazi et al. construct an outer-approximating polyhedron in \({\mathbb {R}}^{2n}\) with \(2n+1\) constraints which contains no integer points. By the previous discussion, we know that the projection of this small polyhedron in \({\mathbb {R}}^{2n}\) must have at least \(2^n\) inequalities in \({\mathbb {R}}^n\). Their solution precisely exploits the separability structure in the definition of the \(\ell _2\) ball, introducing an extra variable \(z_i\) for each term and solving instead

The OA algorithm applied to (7) proves infeasibility in 2 iterations because it constructs polyhedral approximations (based on gradient inequalities (3)) to the constraints in the (x, z) space. More generally, Hijazi et al. and Tawarmalani and Sahinidis propose to reformulate any convex constraint of the form \(\sum _i f_i(x_i) \le k\) as \(\sum _i z_i \le k\) and \(z_i \ge f_i(x_i)\) for each i where \(f_i\) are univariate convex functions. Just by performing this simple transformation before providing the problem to the OA algorithm, they are able to achieve impressive computational gains in reducing the time to solution and number of iterations of the algorithm.

Building on the ideas of Hijazi et al. and Tawarmalani and Sahinidis, Vielma et al. [44] propose an extended formulation for the second-order cone \(\{ (t,x) \in {\mathbb {R}}^{n+1} : ||x||_2 \le t \}\), which is not immediately representable as a sum of univariate convex functions. They recognize that the second-order cone is indeed representable as a sum of bivariate convex functions, i.e., \(\sum _i \frac{x_i^2}{t} \le t\), after squaring both sides and dividing by t. They obtain an extended formulation by introducing auxiliary variables \(z_i \ge \frac{x_i^2}{t}\) and constrain \(\sum _i z_i \le t\). This simple transformation was subsequently implemented by commercial solvers for MISOCP like Gurobi [8], CPLEX [42], and Xpress [4], yielding significant improvements on their internal and public benchmarks.

In spite of the promising computational results of Hijazi et al. first reported in 2011 and the more recent extension by Vielma et al., to our knowledge, MINOTAUR [33] is the only general MICP solver which has implemented these techniques in an automated way. To understand why others like Bonmin [9] have not done so, it is important to realize that MICP solvers historically have had no concept of the mathematical or algebraic structure behind their constraints, instead viewing them through black-box oracles to query first-order and possibly second-order derivative values. The summation structure we exploit, which is algebraic in nature, is simply not available when viewed through this form, making it quite difficult to retrofit this functionality into the existing architectures of MICP solvers. In the following section, we will propose a substantially different representation of mixed-integer convex optimization problems that is a natural fit for extended formulations.

4 Disciplined convex programming (DCP) as a solution

In order to implement the extended formulation proposal of [27] in an automated way, one may be led to attempt a direct analysis of a user’s algebraic representation of the convex constraints in a problem. However, this approach is far from straightforward. First of all, the problem of convexity detection is necessary as a subroutine, because it is only correct to exploit summation structure of a convex function \(h(x) = f(x) + g(x)\) when both f and g are convex. This is not a necessary condition for the convexity of h; consider \(f(x) = x_1^2 - x_2^2\) and \(g(x) = 2x_2^2\). Convexity detection of algebraic expressions is NP-Hard [3], which poses challenges for implementing such an approach in a reliable and scalable way. Ad-hoc approaches [18] are possible but are highly sensitive to the form in which the user inputs the problem; for example, approaches based on composition rules fail to recognize convexity of \(\sqrt{x_1^2 + x_2^2}\) and \(\log (\exp (x_1)+\exp (x_2))\) [41].

Instead of attempting such analyses of arbitrary algebraic representations of convex functions, we propose to use the modeling concept of disciplined convex programming (DCP) first proposed by Grant, Boyd, and Ye [20, 22]. In short, DCP solves the problem of convexity detection by asking users to express convex constraints in such a way that convexity is proven by composition rules, which are sufficient but not necessary. These composition rules are those from basic convex analysis, for example, the sum of convex functions is convex, the point-wise maximum of convex functions is convex, and the composition f(g(x)) is convex when f is convex and nondecreasing and g is convex. The full set of DCP rules is reviewed in [22, 40].

Even though it is possible to write down convex functions which do not satisfy these composition rules, the DCP philosophy is to disallow them and instead introduce new atoms (or basic operations) which users must use when writing down their model. For example, \({\text {logsumexp}}(\left[ \begin{array}{cc} x_1&x_2 \end{array}\right] )\) replaces \(\log (\exp (x_1)+\exp (x_2))\) and \({\text {norm}}(\left[ \begin{array}{cc} x_1&x_2 \end{array}\right] )\) replaces \(\sqrt{x_1^2 + x_2^2}\). Although asking users to express their optimization problems in this form breaks away from the traditional setting of MICP, DCP also formalizes the folklore within the MICP community that the way in which you write down the convex constraints can have a significant impact on the solution time; see, e.g., Hijazi et al. [27] and our example later discussed in Eq. 17.

The success over the past decade of the CVX software package [21] which implements DCP has demonstrated that this modeling concept is practical. Users are willing to learn the rules of DCP in order to gain access to powerful (continuous, convex) solvers, and furthermore the number of basic atoms needed to cover nearly all convex optimization problems of practical interest is relatively small.

Although we motivated DCP as a solution to the subproblem of convexity detection, it in fact provides a complete solution to the problem of automatically generating an extended formulation and encoding it in a computationally convenient form given a user’s algebraic representation of a problem. All DCP-valid expressions are compositions of basic operations (atoms); for example the expression \(\max \{\exp (x^2),-2x\}\) is DCP-valid because the basic composition rules prove its convexity. A lesser-known aspect of DCP is that these rules of composition have a 1-1 correspondence with extended formulations based on the epigraphs of the atoms. Observe, for example, that

if and only if

if and only if there exists s such that

where the validity of the latter transformation holds precisely because \(\exp (\cdot )\) is increasing and therefore \(s \ge x^2\) implies \(\exp (s) \ge \exp (x^2)\). Furthermore, the constraints \(s \ge x^2\) and \(t \ge \exp (s)\) are convex because square and \(\exp \) are convex functions; hence (10) is a convex extended formulation of (8). Note that while we previously discussed extended formulations derived only from disaggregating sums, disaggregating compositions of functions in this form also yields stronger polyhedral approximations [41]. The existence of this extended formulation is no coincidence. Grant and Boyd [20] explain that a tractable representation of the epigraph of an atom is sufficient to incorporate it into a DCP modeling framework. That is, if an implementation of DCP knows how to optimize over a model with the constraint \(t \ge f(x)\) for some convex function f, then f can be incorporated as an atom within the DCP framework and used within much more complex expressions so long as they follow the DCP composition rules.

Our analysis of DCP has led us to the conclusion that DCP provides the means to automate the generation of extended formulations in a way that has never been done in the context of MICP. Users need only express their MICP problem by using a DCP modeling language like CVX or more recent implementations like CVXPY [14] (in Python), or Convex.jl [43] (in Julia). Any DCP-compatible model is convex by construction and emits an extended formulation that can safely disaggregate sums and more complex compositions of functions.

We do note that in some cases it may not be obvious how to write a known convex function in DCP form. In our work described in [35] where we translated MICP benchmark instances into DCP form, we were unable to find a DCP representation of the univariate concave function \(\frac{x}{x+1}\) which is not in DCP form because division of affine expressions is neither convex nor concave in general. Fortunately, a reviewer suggested rewriting \(\frac{x}{x+1} = 1- \frac{1}{x+1}\) where \(\frac{1}{x+1}\) is a DCP-recognized convex function so long as \(x+1 \ge 0\). With this trick we were able to translate all of the benchmark instances we considered into DCP form, as we discuss in more details in the following section.

5 MIDCP and conic representability

While DCP modeling languages have traditionally supported only convex problems, CVX recently added support for mixed-integer convex problems under the name of MIDCP, and the subsequently-developed DCP modeling languages also support integer constraints. We will use the terminology MIDCP to refer to MICP models expressed in DCP form. In the previous section we argued that an MIDCP representation of an MICP problem provides sufficient information to construct an extended formulation, which in turn could be used to accelerate the convergence of the outer approximation algorithm by providing strong polyhedral approximations. However, an MIDCP representation is quite complex, much more so than the “black-box” derivative-based representation that traditional MICP solvers work with. Handling the MIDCP form requires understanding each atom within the DCP library and manipulating the expression graph data structures which are used to represent the user’s algebraic expressions.

It turns out that there is a representation of MIDCP models which is much more compact and convenient for use as an input format for an MICP solver, and this is as mixed-integer conic optimization problems. Before stating the form of these problems, we first consider the standard continuous conic optimization problem:

where \({\mathcal {K}}\subseteq {\mathbb {R}}^n\) is a closed convex cone, that is, a closed convex set \({\mathcal {K}}\) where any nonnegative scaling \(\alpha x\) of a point x in the set remains in the set. A simple example of a cone is the nonnegative orthant \({\mathbb {R}}_+^n = \{ x \in {\mathbb {R}}^n : x \ge 0 \}\). When \(\mathcal {K} = {\mathbb {R}}_+^n\) then (CONE) reduces to a linear programming problem. Typically, \(\mathcal {K}\) is a product of cones \(\mathcal {K}_1 \times \mathcal {K}_2 \times \cdots \times \mathcal {K}_r\), where each \(\mathcal {K}_i\) is one of a small number of recognized cones.

One of Grant et al.’s original motivations for developing the DCP framework was to provide access to powerful solvers for the second-order cone [34],

and the cone of positive semidefinite matrices,

CVX, for example, does not use smooth, derivative-based representations of the epigraphs of atoms but instead uses a conic representation of each of its atoms. For instance, for \(x,y \ge 0\) the epigraph of the negated geometric mean \(f(x,y) = -\sqrt{xy}\) is a convex set representable as \(t \ge -\sqrt{xy}\) iff \(\exists \, z \ge 0\) such that \(-t \le z \le \sqrt{xy}\) iff

where

is the n-dimensional rotated second-order cone, a common cone useful for modeling (e.g., also for functions like \(x^2\)) which itself is representable as a transformation of the second-order cone [6]. While this conic representation of the geometric mean is known in the literature [6], it is arguably unnecessarily complex for modelers to understand, and CVX, for example, provides a geo_mean atom which transparently handles this transformation.

Subsequent to the second-order and positive semidefinite cones, researchers have investigated the exponential cone [39],

and the power cone [26],

which can be used to represent functions like entropy (\(-x\log (x)\)) and fractional powers, respectively. This small collection of cones is sufficient to represent any convex optimization problem which you may input within existing DCP implementations, including CVX.

In the context of MICP, these cones are indeed sufficient from our experience. We classified all 333 MICP instances from the MINLPLIB2 benchmark library [38] and found that 217 are representable by using purely second-order cones (and so fall under the previously mentioned MISOCP problem class), 107 are representable by using purely exponential cones, and the remaining by some mix of second-order, exponential, and power cones. We refer readers to [35] for an extended discussion of conic representability. Of particular note are the trimloss [25] family of instances which have constraints of the form,

Prior to our report in [35], the tls5 and tls6 instances had been unsolved since 2001. By directly rewriting these problems into MIDCP form, we obtained an MISOCP representation because all constraints are representable by using second-order cones, precisely by using the transformation of the geometric mean discussed above. Once in MISOCP form, we provided the problem to Gurobi 6.0, which was able to solve them to global optimality within a day, indicating the value of conic formulations.

Given that DCP provides an infrastructure to translate convex problems into conic form, we may consider mixed-integer conic problems as a compact representation of MIDCP problems. Below, we state our standard form for mixed-integer conic problems,

where \(\mathcal {K}\subseteq {\mathbb {R}}^k\) is a closed convex cone. Without loss of generality, we assume integer variables are not restricted to cones, since we may introduce corresponding continuous variables by equality constraints. In Sect. 6 we discuss solving (MICONE) via polyhedral outer approximation.

6 Outer approximation algorithm for mixed-integer conic problems

The observations of the previous section motivated the development of a solver for problems of the form (MICONE). In [35] we developed the first outer-approximation algorithm with finite-time convergence guarantees for such problems. We note that the traditional convergence theory is generally insufficient because it assumes differentiability, while conic problems have nondifferentiability that is sometimes intrinsic to the model. Nonsmooth perspective functions like \(f(x,y) = x^2/y\), for example, which are used in disjunctive convex optimization [13], have been particularly challenging for derivative-based MICP solvers and have motivated smooth approximations [23]. On the other hand, conic form can handle these nonsmooth functions in a natural way, so long as there is a solver capable of solving the continuous conic relaxations.

In this section, we provide an overview of the key points of the algorithm and refer readers to [35] for the full description. The finite-time convergence guarantees of the outer approximation algorithm depend on an assumption that strong duality holds in certain convex subproblems. Extending [35], we include a discussion on what may happen when this assumption does not hold.

We begin with the definition of dual cones.

Definition 2

Given a cone \(\mathcal {K}\), we define \(\mathcal {K}^* := \{ \beta \in {\mathbb {R}}^k : \beta ^Tz \ge 0 \,\, \forall z \in \mathcal {K}\}\) as the dual cone of \(\mathcal {K}\).

Dual cones provide an equivalent outer description of any closed, convex cone, as the following lemma states. We refer readers to [6] for the proof.

Lemma 2

Let \(\mathcal {K}\) be a closed, convex cone. Then \(z \in \mathcal {K}\) iff \(z^T\beta \ge 0\,\, \forall \beta \in \mathcal {K}^*\).

We note that the second-order cone \(\text {SOC}_n\), the rotated second order cone \(\text {RSOC}_n\) (14), and the cone of positive semidefinite matrices are self-dual, which means that the dual cone and the original cone are the same [6]. While the exponential and power cones are not self-dual, the discussions that follow are valid for them and other general cones.

Based on the above lemma, we state the analogue of the MILP relaxation MIOA(P) for (MICONE) as

Note that if \(T = \mathcal {K}^*\), MICONEOA(T) is an equivalent semi-infinite representation of (MICONE). If \(T \subset \mathcal {K}^*\) and \(|T| < \infty \) then MICONEOA(T) is an MILP outer approximation of (MICONE) whose objective value is a lower bound on the optimal value of (MICONE). In the context of the discussion in Sect. 2, given T, our polyhedral approximation of \(\mathcal {K}\) is \(P_T = \{ z : \beta ^Tz \ge 0\,\,\forall \, \beta \in T\}\), and we explicitly treat the linear equality constraints separately.

In the conic setting, we state the continuous subproblem CONV(\(x^*_I\)) with integer values fixed as

Using conic duality, we obtain the dual of CONE(\(x^*\)) as

In [35] we prove that under the assumptions of strong duality, the optimal solutions \(\beta \) to the dual problem (18) correspond precisely to the half-spaces which ensure the conditions in Lemma 1 when CONE(\(x^*\)) is feasible; hence, we add these solutions to the set T. When CONE(\(x^*\)) is infeasible and (18) is unbounded, the rays of (18) provide solutions that satisfy (4), guaranteeing finite convergence of the OA algorithm.

We previously deferred a discussion of what may happen when the assumption of strong duality fails. We now present a negative result for this case. When the assumption of strong duality fails, it may be impossible for the OA algorithm to converge in a finite number of iterations.

Consider the problem adapted from [28],

Note that \((0,y,z) \in \text {RSOC}_3\) implies \(z = 0\), so the optimal value is trivially zero.

The conic dual of this problem is

The dual is infeasible because there is no \(\beta \) satisfying \(0\beta \ge 1\). So there is no strong duality in this case. The following lemma demonstrates that polyhedral approximations fail entirely. The proof is more technical than the rest of the paper but uses only basic results from linear programming and conic duality.

Lemma 3

There is no polyhedral outer approximation \(P_{\text {RSOC}_3} \supset \text {RSOC}_3\) such that the following relaxation of (19) is bounded:

Proof

Let us assume that \(\text {RSOC}_3 \subset P_{\text {RSOC}_3} := \{ (x,y,z) : A_x x + A_y y + A_z z \ge 0 \}\) for some vectors \(A_x, A_y\), \(A_z\). The right-hand side can be taken to be zero because \(\text {RSOC}_3\) is a cone. Specifically, positive right-hand-side values are invalid because they would cut off the point (0, 0, 0), and negative values can be strengthened to zero. Since (21) is a linear programming problem invariant to positive rescaling, it is bounded iff there exists a feasible dual solution \((\beta ,\alpha )\) satisfying

Suppose, for contradiction, that there exist \((\beta ,\alpha )\) satisfying these dual feasibility conditions. Let \((A_{x,i},A_{y,i},A_{z,i})\) denote the coefficients of the ith linear inequality in \(P_{\text {RSOC}_3}\). Since \(P_{\text {RSOC}_3}\) is a valid outer approximation, we have that

hence \((A_{x,i},A_{y,i},A_{z,i}) \in (\text {RSOC}_3)^* = \text {RSOC}_3\), recalling that \(\text {RSOC}_3\) is self-dual. Therefore we have

for \(\alpha \ge 0\). This follows from the fact that the vector, \((\alpha ^TA_x, \alpha ^TA_y, \alpha ^TA_z)\), is a non-negative linear combination of elements of \(\text {RSOC}_3\) and \(\text {RSOC}_3\) is a convex cone. However, the duality conditions imply \((\beta , 0, 1) \in \text {RSOC}_3\), i.e., \(0 \ge 1\), which is a contradiction. \(\square \)

Lemma 3 implies that the following MISOCP instance cannot be solved by the OA algorithm:

because the optimal value of any MILP relaxation will be \(-\infty \) while the true optimal objective is 0, hence the convergence conditions cannot be satisfied.

This example strengthens the observation by [28] that MISOCP solvers may fail when certain constraint qualifications do not hold. In fact, no approach based on straightforward polyhedral approximation can succeed. Very recently, Gally et al. [19] have studied conditions in the context of mixed-integer semidefinite optimization which ensure that strong duality holds when integer values are fixed.

7 Computational experiments

In this section we extend the numerical experiments performed in our previous work [35]. In that work, we introduced Pajarito. Pajarito is an open-source stand-alone Julia solver, now publicly releasedFootnote 2 at https://github.com/JuliaOpt/Pajarito.jl, that heavily relies on the infrastructure of JuMP [16].

Since [35] we have improved the performance of Pajarito and report revised numerical experiments. We translated 194 convex instances of MINLPLIB2 [38] into Convex.jl [43], a DCP algebraic modeling language in Julia which performs automatic transformation into conic form. Our main points of comparison are Bonmin [9] using its OA algorithm, SCIP [2] using its default LP-based branch-and-cut algorithm, and Artelys Knitro [12] using its default nonlinear branch-and-bound algorithm; all three can be considered state-of-the-art academic or commercial solvers. We further compare our results with CPLEX for MISOCP instances only. All computations were performed on a high-performance cluster at Los Alamos National Laboratory with Intel\(^\circledR \) Xeon\(^\circledR \) E5-2687W v3 @3.10GHz 25.6MB L3 cache processors and 251GB DDR3 memory installed on every node. CPLEX v12.6.2 is used as a MILP and MISOCP solver. Because conic solvers supporting exponential cones were not sufficiently reliable in our initial experiments, we use Artelys Knitro v9.1.0 to solve all conic subproblems via traditional derivative-based methods.

Bonmin v1.8.3 and SCIP v3.2.0 are both compiled with CPLEX v12.6.2 and Ipopt v3.12.3 using the HSL linear algebra library MA97. All solvers are set to a relative optimality gap of \(10^{-5}\), are run on a single thread (both CPLEX and Artelys Knitro), and are given 10 hours of wall time limit (with the exception of gams01, a previously unsolved benchmark instance, where we give 32 threads to CPLEX for the MILP relaxations). The scripts to run these experiments can be found online at https://github.com/mlubin/MICPExperiments.

Comparison performance profiles [15] (solver performs within a factor of \(\theta \) of the best on proportion p of instances) over all instances we tested from the MINLPLIB2 benchmark library. Higher is better. Bonmin is faster than Pajarito often within a small factor, yet Pajarito is able to solve a few more instances overall and with significantly fewer iterations. (a) Solution time, (b) number of OA iterations

Numerical experiments indicate that the extended formulation drastically reduces the number of polyhedral OA iterations as expected. In aggregate across the instances we tested, Bonmin requires 2685 iterations while Pajarito requires 994. We list the full results in Table 1 and summarize them in Fig. 3. In Fig. 4 we present results for the subset of SOC-representable instances, where we can compare with commercial MISOCP solvers. In our performance profiles, all times are shifted by 10 seconds to decrease the influence of easy instances.

Notably, Pajarito is able solve a previously unsolved instance, gams01, whose conic representation requires a mix of SOC and EXP cones and hence was not a pure MISOCP problem. The best known bound was 1735.06 and the best known solution was 21516.83. Pajarito solved the instance to optimality with an objective value of 21380.20 in 6 iterations.

Performance profile [15] (solver is the fastest within a factor of \(\theta \) of the best on proportion p of instances) over the instances representable as mixed-integer second-order cone problems where we can compare with the commercial CPLEX solver. Higher is better. CPLEX is the best overall, since notably it already implements the extended formulation proposed by Vielma et al. [44]

8 Concluding remarks and future work

In this work, we have presented and advanced the state-of-the-art in polyhedral approximation techniques for mixed-integer convex optimization problems, in particular exploiting the idea of extended formulations and how to generate them automatically by using disciplined convex programming (DCP). We explain why the mixed-integer conic view of mixed-integer convex optimization is surprisingly powerful, precisely because it encodes the extended formulation structure in a compact way. We claim that for the vast majority of problems in practice, conic forms using a small number of recognized cones is a sufficient and superior representation to the traditional smooth “black box” view.

Our developments for mixed-integer conic optimization seem to have outpaced the capabilities of existing conic solvers, and we hope that the convex optimization community will continue to develop techniques and publicly available, numerically robust solvers in particular for nonsymmetric cones like the exponential cone. In spite of some numerical troubles when solving the conic subproblems using existing solvers, our new mixed-integer conic solver, Pajarito, has displayed superior performance in many cases to state-of-the-art solvers like Bonmin, including the solution of previously unsolved benchmark problems.

This work has opened up a number of promising directions which we are currently pursuing. In the near term we plan on composing a rigorous report on the technical aspects of implementing the outer-approximation algorithm for mixed-integer conic problems, including aspects we have omitted which are important for the reliability and stability of Pajarito. These will include a larger set of benchmark instances and experiments with a branch-and-cut variant of the algorithm.

We intend to investigate the application of polyhedral approximation in the context of mixed-integer semidefinite optimization, where we expect that failures in strong duality could be a common occurrence based on the reports of [19]. It remains an open question what guidance we can provide to modelers on how to avoid cases where polyhedral approximation can fail, or even if this could be resolved automatically at the level of DCP.

Finally, we note that neither the DCP representation of a problem nor the conic representation of a DCP atom is necessarily unique. Understanding the effects of different formulation choices is an important avenue for future work.

Notes

In Lemma 3 we provide an explicit counterexample.

The results reported here are based on Pajarito version 0.1. The latest release, version 0.4, has been almost completely rewritten with significant algorithmic advances, which will be discussed in upcoming work with Chris Coey.

References

Abhishek, K., Leyffer, S., Linderoth, J.: FilMINT: an outer approximation-based solver for convex mixed-integer nonlinear programs. INFORMS J. Comput. 22(4), 555–567 (2010)

Achterberg, T.: SCIP: solving constraint integer programs. Math. Program. Comput. 1(1), 1–41 (2009)

Ahmadi, A., Olshevsky, A., Parrilo, P., Tsitsiklis, J.: NP-hardness of deciding convexity of quartic polynomials and related problems. Math. Program. 137(1–2), 453–476 (2013)

Belotti, P., Berthold, T., Neves, K.: Algorithms for discrete nonlinear optimization in FICO Xpress. In: 2016 IEEE Sensor Array and Multichannel Signal Processing Workshop (SAM), pp. 1–5 (2016)

Belotti, P., Kirches, C., Leyffer, S., Linderoth, J., Luedtke, J., Mahajan, A.: Mixed-integer nonlinear optimization. Acta Numer. 22, 1–131 (2013)

Ben-Tal, A., Nemirovski, A.: Lectures on Modern Convex Optimization. Society for Industrial and Applied Mathematics (2001)

Bertsekas, D.P.: Nonlinear Programming, 2nd edn. Athena Scientific, Belmont (1999)

Bixby, R., Maes, C., Garcia, R.: Recent developments in the Gurobi optimizer. In: Gurobi Optimization Workshop, INFORMS Annual Meeting, Philadelphia, PA (2015)

Bonami, P., Biegler, L.T., Conn, A.R., Cornuéjols, G., Grossmann, I.E., Laird, C.D., Lee, J., Lodi, A., Margot, F., Sawaya, N., Wächter, A.: An algorithmic framework for convex mixed integer nonlinear programs. Discret. Optim. 5(2), 186–204 (2008)

Bonami, P., KilinÇ, M., Linderoth, J.: Algorithms and software for convex mixed integer nonlinear programs. In: Lee, J., Leyffer, S. (eds.) Mixed Integer Nonlinear Programming, The IMA Volumes in Mathematics and its Applications, vol. 154, pp. 1–39. Springer, New York (2012)

Bonami, P., Lee, J., Leyffer, S., Wächter, A.: On branching rules for convex mixed-integer nonlinear optimization. J. Exp. Algorithm. 18, 2.6:2.1-2.6:2.31 (2013)

Byrd, R.H., Nocedal, J., Waltz, R.: KNITRO: an integrated package for nonlinear optimization. In: di Pillo, G., Roma, M. (eds.) Large-Scale Nonlinear Optimization, pp. 35–59. Springer, Berlin (2006)

Ceria, S., Soares, J.: Convex programming for disjunctive convex optimization. Math. Program. 86(3), 595–614 (1999)

Diamond, S., Boyd, S.: CVXPY: a python-embedded modeling language for convex optimization. J. Mach. Learn. Res. 17(83), 1–5 (2016)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002)

Dunning, I., Huchette, J., Lubin, M.: JuMP: a modeling language for mathematical optimization. SIAM Rev. 59(2), 295–320. doi:10.1137/15M1020575 (2017)

Duran, M., Grossmann, I.: An outer-approximation algorithm for a class of mixed-integer nonlinear programs. Math. Program. 36(3), 307–339 (1986)

Fourer, R., Orban, D.: DrAmpl: a meta solver for optimization problem analysis. CMS 7(4), 437–463 (2009)

Gally, T., Pfetsch, M.E., Ulbrich, S.: A framework for solving mixed-integer semidefinite programs. Available on Optimization Online http://www.optimization-online.org/DB_HTML/2016/04/5394.html (2016)

Grant, M., Boyd, S.: Graph implementations for nonsmooth convex programs. In: Blondel, V., Boyd, S., Kimura, H. (eds.) Recent Advances in Learning and Control, Lecture Notes in Control and Information Sciences, pp. 95–110. Springer, Berlin (2008)

Grant, M., Boyd, S.: CVX: Matlab software for disciplined convex programming, version 2.1. http://cvxr.com/cvx (2014)

Grant, M., Boyd, S., Ye, Y.: Disciplined convex programming. In: Liberti, L., Maculan, N. (eds.) Global Optimization, Nonconvex Optimization and Its Applications, vol. 84, pp. 155–210. Springer, New York (2006)

Günlük, O., Linderoth, J.: Perspective reformulation and applications. In: Lee, J., Leyffer, S. (eds.) Mixed Integer Nonlinear Programming, The IMA Volumes in Mathematics and its Applications, vol. 154, pp. 61–89. Springer, New York (2012)

Gupta, O.K., Ravindran, A.: Branch and bound experiments in convex nonlinear integer programming. Manag. Sci. 31(12), 1533–1546 (1985)

Harjunkoski, I., Westerlund, T., Pörn, R., Skrifvars, H.: Different transformations for solving non-convex trim-loss problems by MINLP. Eur. J. Oper. Res. 105(3), 594–603 (1998)

Hien, L.T.K.: Differential properties of euclidean projection onto power cone. Math. Methods Oper. Res. 82(3), 265–284 (2015). doi:10.1007/s00186-015-0514-0

Hijazi, H., Bonami, P., Ouorou, A.: An outer-inner approximation for separable mixed-integer nonlinear programs. INFORMS J. Comput. 26(1), 31–44 (2014)

Hijazi, H., Liberti, L.: Constraint qualification failure in action. Oper. Res. Lett. 44(4), 503–506 (2016)

Hiriart-Urruty, J.B., Lemaréchal, C.: Convex Analysis and Minimization Algorithms. Springer, Heidelberg (1996). Two volumes - 2nd printing

Jünger, M., Liebling, T.M., Naddef, D., Nemhauser, G.L., Pulleyblank, W.R., Reinelt, G., Rinaldi, G., Wolsey, L.A. (eds.): 50 Years of Integer Programming 1958–2008—From the Early Years to the State-of-the-Art. Springer, Berlin (2010)

Kılınç, M.R.: Disjunctive cutting planes and algorithms for convex mixed integer nonlinear programming. Ph.D. Thesis, University of Wisconsin-Madison (2011)

Leyffer, S.: Deterministic methods for mixed integer nonlinear programming. Ph.D. Thesis, University of Dundee (1993)

Leyffer, S., Linderoth, J., Luedtke, J., Mahajan, A., Munson, T., Sharma, M.: Minotaur: toolkit for mixed integer nonlinear optimization problems. https://wiki.mcs.anl.gov/minotaur/index.php/Main_Page. Accessed 16 April 2017

Lobo, M.S., Vandenberghe, L., Boyd, S., Lebret, H.: Applications of second-order cone programming. Linear Algebra Applications. In: International Linear Algebra Society (ILAS) Symposium on Fast Algorithms for Control, Signals and Image Processing, vol 284(13), pp. 193–228 (1998)

Lubin, M., Yamangil, E., Bent, R., Vielma, J.P.: Extended formulations in mixed-integer convex programming. In: Louveaux Q., Skutella M. (eds.) Integer Programming and Combinatorial Optimization: 18th International Conference, IPCO 2016, Liège, Belgium, June 1–3, 2016, Proceedings, pp. 102–113. Springer International Publishing (2016)

Martin, R.: Large Scale Linear and Integer Optimization: A Unified Approach. Springer, New York (1999)

Mittelmann, H.: MINLP benchmark. http://plato.asu.edu/ftp/minlp_old.html. Accessed 13 May 2016

MINLPLIB2 library. http://www.gamsworld.org/minlp/minlplib2/html/. Accessed 13 May 2016

Serrano, S.A.: Algorithms for unsymmetric cone optimization and an implementation for problems with the exponential cone. Ph.D. thesis, Stanford University, Stanford, CA (2015)

Shen, X., Diamond, S., Gu, Y., Boyd, S.: Disciplined Convex-Concave Programming. ArXiv e-prints. http://arxiv.org/abs/1604.02639 (2016)

Tawarmalani, M., Sahinidis, N.V.: A polyhedral branch-and-cut approach to global optimization. Math. Program. 103(2), 225–249 (2005)

Tramontani, A.: Mixed Integer Programming Workshop, June 1st–4th, 2015. The Gleacher Center, Chicago, IL (2015)

Udell, M., Mohan, K., Zeng, D., Hong, J., Diamond, S., Boyd, S.: Convex optimization in Julia. In: Proceedings of HPTCDL ’14, pp. 18–28. IEEE Press, Piscataway, NJ, USA (2014)

Vielma, J.P., Dunning, I., Huchette, J., Lubin, M. (2017) Extended formulations in mixed integer conic quadratic programming. Math. Program. Comput. 9(3), 369–418 (2017). doi:10.1007/s12532-016-0113-y

Acknowledgements

We thank Chris Coey for proofreading and Madeleine Udell and the anonymous reviewers for their comments. M. Lubin was supported by the DOE Computational Science Graduate Fellowship, which is provided under grant number DE-FG02-97ER25308. The work at LANL was funded by the Center for Nonlinear Studies (CNLS) and was carried out under the auspices of the NNSA of the U.S. DOE at LANL under Contract No. DE-AC52-06NA25396. J.P. Vielma was funded by NSF grant CMMI-1351619.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Lubin, M., Yamangil, E., Bent, R. et al. Polyhedral approximation in mixed-integer convex optimization. Math. Program. 172, 139–168 (2018). https://doi.org/10.1007/s10107-017-1191-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-017-1191-y