Abstract

We consider minimization of nonsmooth functions which can be represented as the composition of a positively homogeneous convex function and a smooth mapping. This is a sufficiently rich class that includes max-functions, largest eigenvalue functions, and norm-1 regularized functions. The bundle method uses an oracle that is able to compute separately the function and subgradient information for the convex function, and the function and derivatives for the smooth mapping. With this information, it is possible to solve approximately certain proximal linearized subproblems in which the smooth mapping is replaced by its Taylor-series linearization around the current serious step. Our numerical results show the good performance of the Composite Bundle method for a large class of problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction and motivation

For some years already, nonsmooth optimization research has focused on exploiting structure in the objective function as a way to speed up numerical methods. Indeed, for convex optimization, complexity results establish that oracle based methods have a sublinear rate of convergence; [28]. The \({\fancyscript{U}}\)-Lagrangian theory [16, 17], and the \({\fancyscript{V}}{\fancyscript{U}}\)-space decomposition [24], can be seen as tools to “extract” smooth structure from general convex functions. The approach was extended to a class of nonconvex functions in [26]. Similar ideas for more general nonconvex functions, having a smooth representative on a certain manifold corresponding to the \({\fancyscript{U}}\)-subspace, are explored in [10, 18]. Another line of work concentrates efforts on identifying classes of functions structured enough to have some type of second-order developments, such as the primal-dual gradient structured functions from [24, 25], or the composite functions in [32]. Composite functions were initially considered in [34]; see also [8, Ch. 14]. Later on these functions were studied in [4, 21], and have been more recently revisited in [19, 29], in the convex and nonconvex settings, respectively.

Most of the work above is conceptual, in the sense that if some algorithmic framework is considered, often it is not implementable. Among the exceptions, we find the convex optimization algorithms in [27, 29, 31], and the \({\fancyscript{V}}\)-space identification method in [6]. In this paper, we develop an implementable bundle method that exploits structure for certain nonconvex composite optimization problems. More precisely, given a smooth mapping \(c:\mathfrak R ^n\rightarrow \mathfrak R ^m\) and a positively homogeneous (of degree 1) convex function \(h:\mathfrak R ^m\rightarrow \mathfrak R \), we consider how to solve the unconstrained problem

In this composite objective, we refer to \(c\) as the inner mapping and to \(h\) as the outer function. Note in particular that the outer function is real-valued on \(\mathfrak R ^m\), an assumption that excludes indicator functions, but still covers a rich enough family of interesting problems; see Sect. 2.

For solving the possibly nonconvex problem (1) we develop a specialized bundle method that takes full advantage of the composite structure of the objective function. Specifically, rather than using composite data:

we suppose the oracle has the ability to make separate computations. More precisely,

While (\(\mathtt {bb}_{h\circ c}\)) would build a classical cutting-plane model for the composite objective, having the richer oracle \({(\mathtt {bb}_{c})}\text{-}{(\mathtt {bb}_{h})}\) at hand, we can make a better approximation and build a cutting-plane model for the so-called conceptual model. This model from [8], considered by [19] for general composite functions, and given by (4) below, defines certain proximal linearized subproblems in which the smooth mapping is replaced by its Taylor-series linearization. The corresponding algorithmic framework, named ProxDescent in [19], is rather broad (outer functions can be extended-valued and prox-regular), but relies heavily on the exact computation of proximal points at each iteration. In this work, we provide a fully implementable variant of such an algorithmic framework for a less general, but still large enough, class of outer functions (real-valued, positively homogeneous, and convex).

In order to deal with approximate proximal computations, our fully implementable variant, given by Composite Algorithm 1 below, incorporates typical features from bundle methods, such as serious and null step iterates. But this is not the only modification of ProxDescent: there is an additional level of approximation, introduced by the need to estimate the conceptual model, which is handled in the implementable algorithm by backtracking. For these reasons, our convergence analysis deviates significantly from that of [19]. In addition, instead of a standard proximal term as in ProxDescent, in our development we consider a variable prox-metric that opens the way to exploiting second-order information of the smooth mapping, when available.

An alternative line of work, based on somewhat different richer oracles (that can compute more than one subgradient at any given point), refers to the proximity control bundle method [30]. These methods, devised for nonconvex optimization problems appearing in eigenvalue optimization and automatic control, use certain “first-order local models” that contain the conceptual model from [19] as a special case.

Our paper is organized as follows. In Sect. 2 we give several classes of functions with composite structure. The conceptual model and the proposed cutting-plane function, that makes the conceptual model computationally tractable, are described in Sect. 3. Sections 4 and 5 contain, respectively, the Composite Bundle method and its convergence results. In Sect. 6 we report our numerical experience, on many functions, including some described in Sect. 2. Our results show the good performance of the composite algorithm, when compared to several solvers, on a large number of problems of various dimensions. In the final section with concluding remarks, we compare the main features of our composite method with the proximity control bundle method from [30].

2 Composite functions in the family

Our class of composite functions includes many examples from [19, 29, 32]. We mention in passing that the wording “composite” means slightly different things for different authors. Specifically, while we refer to a composition that can be nonconvex (as in [19, 32]), composite functions in [29] are the sum of two terms, one convex and the other one smooth.

We suppose the outer function is positively homogeneous and convex, so it is also sublinear. In [13, Lesson C] there are many examples of real-valued sublinear functions, such as norms, quadratic seminorms, gauges of closed convex sets containing 0 in their interior, infimal convolutions of sublinear functions, and support functions of bounded sets. The composition of any of such (outer) function with any smooth (inner) mapping defines a function in our family. We review below some interesting special cases. In what follows, the notation \(c\), with lower capitals, refers to the smooth mapping while \(C\), with upper capitals, denotes an \(m\)-dimensional vector in the mapping image, so that \(C=c(x)\) for some \(x\in \mathfrak R ^n\).

Example 1

(max-functions) The function given by the pointwise maximum of a finite collection of \(C^2\)-functions in \(\mathfrak R ^n\), \(\{c_1(\cdot ),\ldots ,c_m(\cdot )\}\), can be defined by composing the outer function \(h(C)=\max (C_1,\ldots ,C_m)\) with the inner mapping with components \(c_j(\cdot )\), for \(j=1,\ldots , m\).

For any \(x\in \mathfrak R ^n\), the Jacobian mapping \(Dc(x)\) is the \(m\times n\) matrix with rows given by the transposed gradients \(\nabla c_j(x)^{\scriptscriptstyle \top }\). As for the second-order derivative, \(D^2c(x)\), for any \(d,\tilde{d}\in \mathfrak R ^n\), \([D^2c(x)\tilde{d}]d\) is the vector in \(\mathfrak R ^m\) with \(j\)th component given by \(\tilde{d}^{\scriptscriptstyle \top } \nabla ^2 c_j(x) d\). \(\square \)

We now give a more involved function, appearing in semidefinite programming.

Example 2

(eigenvalue optimization) Let \(\fancyscript{S}^p\) denote the linear space of symmetric matrices of order \(p\), and consider the function \(\lambda _{\max }(X)\), given by the maximum eigenvalue of a symmetric matrix \(X\in \fancyscript{S}^p\), whose elements are \(C^2\)-functions of a vector \(x\in \mathfrak R ^n\). Suppose that for a fixed \(X\), \(r\) denotes the multiplicity of the maximum eigenvalue. Then from the analysis in [2, Ex. 3.98], the orthogonal projection onto the eigenspace corresponding to the maximum eigenvalue is an analytic function near \(X\). By applying Gram–Schmidt orthonormalization to the columns of such projection, it is possible to define a “diagonalizing” analytic mapping \(\fancyscript{C}:\fancyscript{S}^p\rightarrow \fancyscript{S}^r\), such that: \(\fancyscript{C}(X)=\lambda _{\max }(X)\mathrm{{I}}_r\) where \(\mathrm{{I}}_r\) is the identity matrix of order \(r\); the Jacobian \(D\fancyscript{C}(X)\) is surjective; and the eigenvalues \(\lambda (\fancyscript{C}(X))\) coincide with \(\lambda _{\max }(X)\). The inner smooth mapping corresponds to \(\fancyscript{C}\), while the outer function is the eigenvalue function \(h(C)=\lambda (C)\), a positively homogeneous convex function. \(\square \)

The next function is typically used in applications such as wavelet-based image restoration, sparse representations, sparse regression, and compressive sensing problems.

Example 3

(Regularized minimization maps) Many signal or image reconstruction problems, as well as approximation problems, minimize a function of the form

for \(x\in \mathfrak R ^n\), \(y\in \mathfrak R ^k\), \(A\) a matrix \(k\times n\), \(\tau \) a positive parameter, and for given appropriate norms. In the expression above, the first data fidelity term is smooth, and the second term corresponds to some regularization or penalty, for example ensuring sparsity of the solution. To fit our composite framework, it suffices to take \(m=n+1\),

and define the outer function \(h(C_1,\ldots ,C_{n+1})=C_{n+1}+\tau |(C_1,\ldots ,C_n)|\).

For any \(x\in \mathfrak R ^n\), the Jacobian mapping \(Dc(x)\) is the \((n+1)\times n\) matrix made by appending to the identity matrix of order \(n\) a row with the transpose of \(A^{\scriptscriptstyle \top } (Ax-y)\). As for the second-order derivative, \(D^2c(x)\), for any \(d,\tilde{d}\in \mathfrak R ^n\), \([D^2c(x)\tilde{d}]d\) is the vector with its first \(n\) components equal to 0, and its last component equal to \(\tilde{d}^{\scriptscriptstyle \top } A^{\scriptscriptstyle \top } A d\), a constant with respect to \(x\).\(\square \)

We now give a possibly nonconvex function, appearing in approximation problems.

Example 4

(sum of Euclidean norms) Given a collection of smooth vector functions, \(\{\phi _1,\ldots ,\phi _J\}\) with \(\phi _j:\mathfrak R ^n\rightarrow \mathfrak R ^{m_j}\) for \(j=1,\ldots ,J\), the function \(\sum _{j=1}^J|\phi _j(x)|\) is the composition of the following smooth mapping with \(m=\sum _{j=1}^J m_j\) components,

and outer function:

When \(n=J=m_1=1\), letting \(\phi _1(x)=0.5 a_2x^2+a_1x+a_0\) for \(a_{2,1,0}\) given scalars with \(a_2\ne 0\), it is easy to see that the function \(f\) is convex if and only if \(a_1^2\le 2a_0a_2\). \({}\square \)

The next function shows how to cast \(\ell _1\)-penalizations of nonlinear programming problems into the composite framework (provided no indicator function is used for polyhedral sets, to preserve finite-valuedness of the outer function).

Example 5

(Uryasev’s exact penalty function) This is an optimal control problem over a discrete horizon with \(n\) time steps. The control variable \(x\in \mathfrak R ^n\) is constrained to the box \([-0.2,0.2]^n\). Given initial values \((\xi _0,\psi _0)\), the state variables \((\xi ,\psi )\in \mathfrak R ^2\) follow a trajectory given by a recursive state equation of the form

for \(i=1,\ldots ,n-1\) and where \(\mathtt{cubic}\ge 0\) is a given parameter. In the expressions above, we use the notation \(x_{1:i}\) to refer to the dependence of the \(i\)th-state variables on the first \(i\)th components of the control variable \(x\). The control problem to be solved is:

The corresponding \(\ell _1^+\)-penalty function uses parameters c \(_1\), c \(_2\), c \(_3>0\), and can be written in composite form by letting \(m=3n+1\),

and taking the outer function

The Jacobian mapping \(Dc(x)\) can be computed using the adjoint state equations, to obtain the trajectory derivatives \(\nabla \xi _i\) and \(\nabla \psi _i\). When the parameter cubic is null, the control problem is linear-quadratic and the composite function \(h\circ c\) is convex. In this case, the second-order derivative, \([D^2c(x)\tilde{d}]d\) is the vector with all of its components equal to 0, except for the \((n+1)\)th component, equal to \(\tilde{d}^{\scriptscriptstyle \top } \sum _{i=1}^n\nabla \xi _i\nabla \xi _i^{\scriptscriptstyle \top } d\) for any \(d,\tilde{d}\in \mathfrak R ^n\). When \(\mathtt cubic>0\), the function is nonconvex, and second-order derivatives are difficult to compute for higher dimensions. For \(n=2\), only \(c_2(x)=\psi _2\) and \(c_3(x)=\frac{1}{2}(\xi _1^2+\xi _2^2)\) have a nonzero Hessian, constantly equal to a scalar factor of the identity matrix of order \(n\) (the corresponding factors of cubic are \(-0.08\) and \(0.0016\), respectively).\(\square \)

Our final function, modifying [18, Sec.7] as in [25] to have a smooth inner mapping, is an example of a composite function having a nonconvex positively homogeneous outer function.

Example 6

(Modified Lewis function) For \(x=(x_1,x_2)^{\scriptscriptstyle \top }\) consider the following function, defined on a partition of \(\mathfrak R ^2\):

This nonconvex function has the origin as unique critical point. The composite form, \(f=h\circ c\), has smooth inner mapping \(c:\mathfrak R ^2\rightarrow \mathfrak R ^2\) defined by \(c_1(x)=x_1^2\) and \(c_2(x)=x_2\); and outer function \(h:\mathfrak R ^2\rightarrow \mathfrak R \) given by

This outer function is posivitely homogeneous, but not convex, so \(h\circ c\) is not included in our family.\(\square \)

3 Outer function and conceptual model

Even though the composite function \(h\circ c\) may be nonconvex, it is always locally Lipschitz continuous and directionally differentiable. Furthermore, at any point \(x\in \mathfrak R ^ n\) such that \(c(x)=0\), the composite function is semismooth [23], and strongly semismooth if the inner mapping is twice continuously differentiable; see [32, Prop.3.2]. Moreover, since the outer function is assumed to be positively homogeneous of degree 1, the relation \(h^{\prime }(0;\cdot )=h(\cdot )\) holds. As a result, whenever \(c(x)=0\), the directional derivative of the composite function satisfies the relation \((h\circ c)^{\prime }(x, d)=h^{\prime }(c(x),Dc(x)d)=h(Dc(x)d)\), [32, Sec.3].

The optimality condition for \(\bar{x}\) to be a critical point of (1) is \(0\in \partial (h\circ c)(\bar{x})\). Being real-valued and convex, the outer function is continuous and, using a chain rule [2, Ch.3.4.1],

In particular, critical points satisfy the inclusion

Another important consequence of positive homogeneity is the generalized Euler formula, stating that

(the result follows from the subgradient inequality \(h((\alpha +1)C)\ge h(C)+G^{\scriptscriptstyle \top }((\alpha +1)C-C)\), using that \(h((\alpha +1)C)=(\alpha +1)h(C)\) for any \(\alpha \ge -1\)). This particular structural property will be exploited in the bundle method to define a cutting-plane approximation of the conceptual model.

The conceptual model from [19] composes the outer function with the linearization of the inner mapping around a point \(x^k\). Specifically, if \(D_k=Dc(x^k)\) denotes the Jacobian mapping at \(x^k\), then for any \(d\in \mathfrak R ^n\), letting

the conceptual model is given by the function

To make tractable this conceptual model, we use a cutting-plane approximation \(\check{h}\) for the outer function and, for each pair \((H^i:=h(C^i),G^i\in \partial h(C^i))\), define the plane

with

by the subgradient inequality. The cutting-plane model for the conceptual model has the form

where the set \(\mathtt{B }\) represents a bundle of information, varying along iterations. By convexity of \(h\), the cutting-plane model is always a lower approximation for the conceptual model.

When \(h\) is positively homogeneous, equality (3) implies in (5) that \(\varDelta _i^k:=h(c(x^k))\), so each plane has the form \(G^i~\!^{\scriptscriptstyle \top } c_k(d)\), and the bundle only needs to keep the subgradient information (\(G^i\)). Accordingly, the composite cutting-plane model can be rewritten as

where the symbolism \(i\in \mathtt{B }\) means for all \(i\) such that \(G^i\) is in the current bundle \(\mathtt{B }\).

The composite model may not be a lower approximation for \(h\circ c\). However, since the conceptual model is a good estimate for \(h\circ c\), eventually the composite model is a good approximation for the objective function. This feature is particularly important for nonconvex bundle methods, which need to handle very carefully cutting-plane models (in some cases a tangent line of a nonconvex function can cut-off a section of the graph of the function, leaving out a critical point, for example), [11].

4 Composite bundle method

At iteration number \(\ell \), the composite model \(\check{h}_\ell (c_k(d))\), given by (7) written with bundle set \(\mathtt{B }=\mathtt{B }_{\ell }\), is used to generate a direction \(d^\ell \). The inner mapping is linearized at a point \(x^k\) with \(k=k(\ell )\). It is possible for \(k\) to be fixed for several consecutive values of \(\ell \). The quadratic programming problem (QP) solved by \(d^\ell \) is nothing but the computation of the proximal point of the composite model, with prox-center \(x^k\) and a variable prox-metric depending on matrices \(M_k^\ell \) detailed below.

The bundle of information \(\mathtt{B }_\ell \) is formed from (some of the) past information, corresponding to past directions \(d^i\) with \(i<\ell \), generated using a prox-center \(x^{k(i)}\) and for which a call to the outer black-box (\(\mathtt {bb}_{h}\)) with \(C^i=c_{k(i)}(d^i)\) gave the value \(H^i=h(C^i)\) and the subgradient \(G^i\in \partial h(C^i)\).

The variable prox-metric is induced by a positive definite matrix \(M_k^\ell \) of order \(n\) having the form

and I the identity matrix of order \(n\). The corresponding primal and dual metrics in \(\mathfrak R ^n\) are

respectively. The eigenvalues of \(M_k^\ell \) are obtained by adding \(\mu _\ell \) to those of \(M_k\), while the eigenvectors of \(M_k^\ell \) coincide with those of \(M_k\). As a result, if for a matrix \(M\) the symbol \(\lambda (M)\) (respectively \(\lambda _{\min /\max }(M)\)) denotes an eigenvalue (resp., minimum/maximum eigenvalues), then \(\lambda (M_k^\ell )=\lambda (M_k)+\mu _\ell \),

In particular, letting \(|\cdot |\) denote the Euclidean norm in \(\mathfrak R ^n\),

Having the elements above, the next direction \(d^\ell \in \mathfrak R ^n\) solves the quadratic programming problem

Letting \(C^\ell =c_{k(\ell )}(d^\ell )\), the corresponding optimality condition with \(k=k(\ell )\) is

The optimal gradient \(\hat{G}^{\ell }~\!\) is a convex combination of active \({G^i}\)’s such that \(\check{h}_\ell (C^\ell )={G^i}^{\scriptscriptstyle \top } C^\ell =\hat{G}^{\ell }~\!^{\scriptscriptstyle \top } C^\ell \).

Without loss of clarity, and depending on the context, we sometimes use the short notations \(k\) and \(C^\ell \), instead of the longer ones \(k(\ell )\) and \(c_{k(\ell )}(d^\ell )\).

Algorithm 1

(Composite Bundle) Inner and outer black-boxes, (\(\mathtt {bb}_{c}\)) and (\(\mathtt {bb}_{h}\)) respectively, are available.

Step 0 (Input and initialization)

-

Select a stopping tolerance \(\mathtt{tol}_\mathtt{stop}\ge 0\), two parameters \(m_1,m_2>0\), and a minimum positive threshold \(0<\mu _{\min }<+\infty \).

-

Initialize the iteration and serious step counters to \(\ell =0\) and \(k=k(\ell )=0\).

-

For a starting point \(x^0\in \mathfrak R ^n\), call (\(\mathtt {bb}_{c}\)) to compute the inner oracle values \(C^0=c(x^0)\) and \(D_0=Dc(x^0)\). Call (\(\mathtt {bb}_{h}\)) to compute the outer oracle values \(H^0=(h\circ c)(x^0)\), \(G^0\in \partial h(C^0)\). Set \(\mathtt{B }_0:=\{G^0\}\).

-

Choose a symmetric matrix \(M_0\) of order \(n\) and a prox-parameter \(\mu _0\ge 0\) ensuring that the matrix \(M_0^0=M_0+\mu _0\mathrm{{I}}\) is positive definite.

Step 1 (Model Generation and QP Subproblem)

-

Having the current serious step iterate \(x^k\), \(c(x^k\)), its associated Jacobian \(D_k\), and the bundle \(\{G^i\}_{i \in \mathtt{B }_{\ell }}\), define the composite cutting-plane model function \({\check{h}_\ell (c_k}(\cdot ))\) from (7). A matrix \(M_k\) and a scalar \(\mu _\ell \ge 0\) such that \(M_k^\ell =M_k+\mu _\ell \mathrm{{I}}\) is positive definite are also available.

-

Compute \(d^{\ell }\) by solving the quadratic program (9). These calculations include finding optimal simplicial multipliers \(\alpha ^\ell \) such that in (10)

$$\begin{aligned} \hat{G}^{\ell }~\!=\sum _{i\in \mathtt{B }_\ell }\alpha ^\ell _i {G^i}\,. \end{aligned}$$Define the aggregate error \( \hat{e}_\ell =(h\circ c)(x^{k})-\hat{G}^{\ell }~\!^{\scriptscriptstyle \top } c(x^k)\), noting that \(\hat{e}_\ell \ge 0\) by (5) and (3). Compute the predicted decrease

$$\begin{aligned} \delta _\ell =(h\circ c)(x^k)-{\check{h}_\ell (c_k}(d^\ell ))-\frac{1}{2}|d^\ell |_{k,\ell }^2 =\hat{e}_\ell +\frac{1}{2} \Vert D_k^{\scriptscriptstyle \top }\hat{G}^{\ell }~\!\Vert _{k,\ell }^2 \,, \end{aligned}$$(11)where the last equality follows from (10).

Step 2 (Stopping test)

-

If \(\max (\hat{e}_\ell ,\Vert D_k^{\scriptscriptstyle \top }\hat{G}^{\ell }~\!\Vert _{k,\ell }^2)\le \mathtt{tol}_\mathtt{stop}\), stop.

-

Otherwise, call (\(\mathtt {bb}_{h}\)) to obtain \(h(c_k(d^\ell ))\) and \(G^{\ell }\in \partial h(c_k(d^\ell ))\).

Step 3 (Serious/backtrack/null step test)

-

Check the descent condition

$$\begin{aligned} h(c_k(d^\ell )) \le (h\circ c)(x^k)- m_1 \delta _\ell \,. \end{aligned}$$(12)If (12) does not hold, declare a null step: take \(\mu _{\ell +1}\ge \mu _\ell \), \(M_k^{\ell +1}=M_k+\mu _{\ell +1}\mathrm{{I}}\), and go to Step 4.

-

If (12) is true, call the inner oracle (\(\mathtt {bb}_{c}\)) to compute \(c(x^k+d^\ell )\). Call the outer oracle (\(\mathtt {bb}_{h}\)) to compute \(\varGamma ^\ell \in \partial h( c(x^k+d^\ell ))\), and check the condition below:

$$\begin{aligned} \varGamma ^\ell ~\!^{\scriptscriptstyle \top } [c_k(d^\ell )- c(x^k+d^\ell )]\ge -m_2\delta _\ell \,. \end{aligned}$$(13)If (13) holds, declare a serious step, and go to Step 4. Otherwise, if (13) does not hold, declare a backtracking step: take \(\mu _{\ell +1}\ge \mu _{\min }+\mu _\ell \), \(M_k^{\ell +1}=M_k+\mu _{\ell +1}\mathrm{{I}}\), \(\mathtt{B }_{\ell +1}=\mathtt{B }_\ell \), set \(k(\ell +1)=k(\ell )\), increase \(\ell \) by 1, and loop to Step 1.

Step 4 (Bundle update and management)

-

If needed, compress \(\mathtt{B }_\ell \) either by keeping all strongly active elements (\(G^i\in \mathtt{B }_\ell : \alpha ^\ell _i>0\)), or by replacing some strongly active subgradients by the aggregate gradient \(\hat{G}^{\ell }~\!\). Define \(\mathtt{B }_{\ell +1}\) by adding to the possibly compressed set the new outer gradient \(G^\ell \).

-

If the step was declared null, set \(k(\ell +1)=k(\ell )\), increase \(\ell \) by 1, and loop to Step 1. If the step was declared serious, set \(k(\ell +1)=k+1\), \(x^{k+1}=x^k+d^\ell \), call (\(\mathtt {bb}_{c}\)) to compute \(D_{k+1}=Dc(x^{k+1})\), compute a new symmetric matrix \(M_{k+1}\) and a prox-parameter \(\mu _{\ell +1}\ge 0\) such that \(M_{k+1}^{\ell +1}=M_{k+1}+\mu _{\ell +1}\mathrm{{I}}\) is positive definite. Increase \(k\) and \(\ell \) each by 1, and loop to Step 1.

In Step 3, the decision to declare a null-step is different from standard bundle methods, and exploits the structure knowledge in the composite case. Namely, while condition (12) checks if there is descent for the outer function, (13) checks adequacy between the inner mapping and its conceptual model.

The prox-parameter increases at backtracking steps, and can be increased at null steps or decreased at serious steps. In this context, the wording “backtrack” may sound odd, since it corresponds to choosing a larger prox-parameter. The explanation comes from noticing that, due to (10) and the definition of \(M_k^\ell \), the value \(1/\mu _\ell \) can be seen as a stepsize. In this sense, when the algorithm detects the need for backtracking, increasing the prox-parameter results indeed in a smaller stepsize. Our setting allows the prox-parameter to be zero if the matrix \(M_k\) is positive definite; for this reason in the backtracking step we force positivity of the prox-parameter by means of the positive threshold \(\mu _{\min }\), set at the Initialization step.

In order to ensure that matrices \(M_k^\ell \) remain positive definite, the prox-parameter update can be done as follows:

We mention that there is a difference between the Composite Algorithm 1 and usual bundle methods, in terms of oracle calls. A standard bundle method makes one call to both the inner and outer oracles per iteration, independent of getting a serious or a null step. Instead, Step 2 in Composite Algorithm 1 makes one call to the outer oracle (\(\mathtt bb _\mathtt{{h}}\)) at all iterations, needing additional calls to both oracles (\(\mathtt bb _\mathtt{{h}/\mathtt{c}}\)) at Step 3, for deciding between serious or backtracking steps. In this case, the additional subgradient \(\varGamma ^\ell \in \partial h(c(x^k+d^\ell ))\) may enter the bundle. For comparisons to be fair, an appropriate measure for (bb)-calls is used in our numerical experience; see Sect. 6.3.

In Step 4 we see that the bundle is formed only by outer gradients, corresponding either to \(G^i\in \partial h(C^i)\) or to some past aggregate gradient \(\hat{G}^i\). By convexity of \(h\), the cutting-plane model is always a lower approximation to \(h\):

Moreover, since \(\hat{G}^{\ell }~\!\in \partial \check{h}_\ell (C^\ell )\) by (10), after some algebra involving \(\delta _\ell \) and \(\hat{e}_\ell \), we see that

In particular, since \(h(C)\ge \hat{G}^{\ell }~\!^{\scriptscriptstyle \top } C\), the definition of \(\hat{e}_{\ell }\) in Step 1 implies that and, hence,

Remark 1

(The trivial composite case) To see how a classical bundle method compares to the Composite Algorithm 1, consider a convex nonsmooth function \(f\), and the, somewhat artificial, outer function \(h\equiv f\) and inner mapping \(c(x)=x\). With respect to our composite structure, \(h\) is not necessarily positively homogeneous, \(Dc={I}\), \(c_k(d)=x^k+d=c(x^k+d)\), and, instead of (7), the cutting-plane model has the form (6). Since the inner mapping is affine, there is no second order information to exploit, and the matrices \(M_k\equiv 0\) in Composite Algorithm 1. The QP optimality condition in (10) becomes

for \(\hat{\varDelta }^k_\ell :=\sum _{i\in \mathtt{B }_\ell }\alpha ^\ell _i \varDelta ^k_i\). The aggregate error is defined by \(\hat{e}_\ell \text{:=}\hat{\varDelta }^k_\ell -\hat{G}^{\ell }~\!^{\scriptscriptstyle \top } x^k\), consistent with the error definition in Step 1, because when \(h\) is positively homogenous, \(\varDelta _i^k=h(x^k)\), by (3). As a result, the expression for the predicted decrease in (11) remains true. Finally, in Step 3, because \(c_k(d^\ell )=x^k+d^\ell =c(x^k+d^\ell )\), there is no backtracking step, because the inequality in (13) trivially holds:

From the above it follows that Composite Algorithm 1 with \(M_k\equiv 0\) is applicable for the trivial composite case, becoming a classical bundle method for convex functions without any particular structure.\(\square \)

5 Convergence results

Since matrices \(M_k^\ell \) are always positive definite, they induce a norm and (8) holds. Together with (11) and (10), this means that the relations

are always satisfied.

We start our convergence analysis showing that there can only be a finite number of consecutive backtracking steps.

Proposition 1

(Finite backtracking loop) Suppose the inner mapping is \(C^2\). If after some iteration \(\hat{\ell }\), Composite Algorithm 1 makes a last serious step and thereafter generates only null and backtracking steps, the following holds:

-

(i)

If the sequence \(\{d^\ell \}_{\ell \ge \hat{\ell }}\) is bounded, then there exists \(\mu _{noBT}>0\) such that (13) holds for any \(\mu _\ell >\mu _{noBT}\).

-

(ii)

There cannot be infinitely many consecutive backtracking steps.

Proof

For convenience, let \(\hat{k}=k(\hat{\ell })\), \(\hat{x}=x^{\hat{k}}(=x^{k(\ell )}\) for all \(\ell \ge \hat{\ell }\)), and \(\hat{M}=M_{\hat{k}}\) denote, respectively, the \(k\)-iteration index, prox-center and matrix corresponding to the last serious step.

Since \(c\) is a \(C^2\)-mapping, a mean-value theorem applies to each component \(c_j\), \(j=1, \ldots , m\):

Recall that \(|\cdot |\) is the Euclidean matrix norm. By assumption, \(\{\hat{x}+d^\ell \}\) is bounded so \(|\varGamma ^\ell |\le L\) for all \(\ell \) and some constant \(L\). Similarly, boundedness of \(\{d^\ell \}\) implies that \(|\nabla ^2c_j(\xi _j)|\le D\) for all \(j=1,\ldots ,m\), for some constant \(D\). By Cauchy–Schwarz inequality,

Using the rightmost inequality in (17)(b), we obtain

In the notation used in this proof, \(c(x^k+d^\ell )=c(\hat{x}+d^\ell )\) and \(c_k(d^\ell )=c(\hat{x})+D_{\hat{k}}d^\ell \). Suppose that (13) is not satisfied and multiply by \(-1\) the corresponding inequality:

Using the bounds above we see that

Therefore, nonsatisfaction of (13) implies \(\mu _\ell <\sqrt{m}LD/m_2-\lambda _{\min }(\hat{M})\). Item (i) follows, by taking \(\mu _\mathrm{{noBT}}\ge \sqrt{m}LD/m_2-\lambda _{\min }(M_k)\).The claim in item (ii) is shown by contradiction, assuming that (13) does not hold, with \(\ell \rightarrow \infty \) due to backtracking. An infinite backtracking loop drives \(\mu _\ell \) to infinity, as well as the minimum eigenvalue of the matrix \(M_{\hat{k}}^{\ell }\), because \(M_{\hat{k}}^{\ell }=\hat{M}+\mu _\ell \mathrm{{I}}\). Since there are only backtracking steps, the bundle does not change, and the composite model \(\check{h}_{\ell }(c_{\hat{k}}(\cdot ))\) is a fixed function, say \(\varphi \). Hence, by [12, Prop. XV.4.1.5], the minimand in (9) converges to \(\varphi (0)\) with \(d^\ell \rightarrow 0\) as \(\ell \rightarrow \infty \). Therefore, \(\hat{x}+d^\ell \rightarrow \hat{x}\) with \(\varphi (d^\ell )+\frac{1}{2}|d^\ell |_{\hat{k},\ell }^2 \rightarrow \varphi (0)\). In particular, this means that the sequence \(\{d^\ell \}\) is bounded, and the contradiction follows from item (i). \(\square \)

As a consequence, if the algorithm loops forever, it generates either an infinite sequence of serious steps, or a finite one, followed by infinitely many null steps (possibly nonconsecutive, due to backtracking). Both situations are considered below, adapting classical developments for bundle methods [12, Ch.XV.3, XV.4] to the composite setting.

We start with the case of infinitely many serious steps.

Lemma 1

(Infinitely many serious steps) Suppose the Composite Algorithm 1 generates an infinite sequence \(\{x^k\}\) of serious steps. Denote by \(\ell _k\) an iteration index yielding a serious step: \(x^{k+1}=x^k+d^{\ell _k}\). The following holds:

-

(i)

If \(0<m_2<m_1\) then, as \(k\rightarrow \infty \), either \((h\circ c)(x^k)\rightarrow -\infty \) or \(\delta _{\ell _k}\rightarrow 0\).

-

(ii)

Suppose \(\delta _{\ell _k}\rightarrow 0\). Then \(\lim \hat{e}_{\ell _k}=0\) and,

$$\begin{aligned} \text{ if} \text{ the} \text{ series}\,\displaystyle \sum _{k}\frac{1}{\mu _{\ell _k}+ \lambda _{\max }(M_k )}\,\text{ is} \text{ divergent}, \end{aligned}$$(18)then \(\lim \inf \Vert D_k^{\scriptscriptstyle \top }\hat{G}^{\ell _k}\Vert _{k,\ell _k}=0\).

-

(iii)

If, in addition, the sequence \(\{x^k\}\) is bounded, then it has at least one accumulation point that is critical for (1).

-

(iv)

If, instead of (18), the stronger condition

$$\begin{aligned} \text{ the} \text{ sequence}\,\{\mu _{\ell _k}+ \lambda _{\max }(M_k )\}\,\text{ is} \text{ bounded} \text{ above,} \end{aligned}$$(18′)holds, then all accumulation points are critical for (1).

Proof

By (13), since \(\varGamma ^{\ell _k}\in \partial h( c(x^k+d^{\ell _k}))\), we have that

Together with satisfaction of (12), this means that \((h\circ c)(x^{k+1}) \le (h\circ c)(x^k)- (m_1-m_2) \delta _{\ell _k}\). The telescopic sum yields that either \((h\circ c)(x^k)\!\!\searrow \!\! -\infty \) or \(0\le \delta _{\ell _k}\rightarrow 0\), because \(m_1-m_2>0\). In the first case, problem (1) does not have a minimizer and there is nothing to prove. The second case is addressed by the next item. The first assertion in (ii) follows from (11). The rightmost inequality in (17)(a), together with our assumption (18) gives the second result. To show item (iii), consider a \(k\)-subsequence such that \( \Vert D_k^{\scriptscriptstyle \top }\hat{G}^{\ell _k}\Vert _{k,\ell _k}\rightarrow 0\) and extract a further subsequence of serious steps with accumulation point \(x^{acc}\). By boundedness of \(\{x^k\}\) and local Lipschitzianity of \(h\), there is an associated subsequence \(\{\hat{G}^{\ell }~\!\}\) with accumulation point \(\hat{G}^{acc}\). Passing to the limit in (16) and using that \(\hat{e}_{\ell _k}\rightarrow 0\), we obtain in the limit that \(\hat{G}^{acc}\in \partial h(C^{acc})\) for \(C^{acc}=c(x^{acc})\). By smoothness of \(c\), \(D_k\rightarrow D_{acc}=Dc(x^{acc})\), and by (2), \(D_{acc}^{\scriptscriptstyle \top }\hat{G}^{acc}\in \partial (h\circ c)(x^{acc})\). The result follows from item (ii). Item (iv) is similar to item (iii), noticing that if \(L>0\) denotes an upper bound for \(\{\mu _{\ell _k}+\lambda _{\max }(M_k)\}\), then the relation \(\frac{1}{2(\mu _{\ell _k}+\lambda _{\max }(M_k)}\ge 1/2L\) in (17)(a) gives that \(|D_k^{\scriptscriptstyle \top } \hat{G}^{\ell _k}|\rightarrow 0\) as \(k\rightarrow \infty \) (for the whole sequence). \(\square \)

The remaining case refers to an infinite null-step loop and makes use of the aggregate linearization

the associated strongly convex function

and the result [12, Lem. XV.4.3.3]:

Finally, and similar to [5, Sec. 4], note that Step 4 in the Composite Algorithm 1 updates the bundle of information in a way ensuring that not only

but also

for all \(d\in \mathfrak R ^n\).

Lemma 2

(Finitely many serious steps) Suppose that, after some iteration \(\hat{\ell }\), the Composite Algorithm 1 makes a last serious step \(\hat{x}=\hat{x}^{k(\ell )}\) and thereafter generates an infinite number of null steps, possibly nonconsecutive, due to intermediate backtracking steps. The following holds:

-

(i)

The sequence \(\{d^\ell \}_{\ell >\hat{\ell }}\) is bounded and there is an iteration \(\ell ^{\prime }>\hat{\ell }\) such that only null steps are done for all \(\ell \ge \ell ^{\prime }\).

-

(ii)

If \(m_1<1\), then \(\delta _{\ell }\rightarrow 0\) and \(\hat{e}_\ell \rightarrow 0\).

-

(iii)

If, in addition, for the (fixed) matrix \(\hat{M}=M_{k(\hat{\ell })}\).

$$\begin{aligned} \text{ the} \text{ series} \displaystyle \sum _{\ell \ge \ell ^{\prime }}\frac{\mu _{\ell -1}+\lambda _{\min }(\hat{M})}{(\mu _{\ell }+ \lambda _{\max }(\hat{M} ))^2} \text{ is} \text{ divergent,} \end{aligned}$$(24)then \(\lim \inf |Dc(\hat{x})^{\scriptscriptstyle \top }\hat{G}^{\ell }~\!|=0\), \(\hat{x}+d^\ell \rightarrow \hat{x}\) for some \(\ell \)-subsequence, and \(\hat{x}\) is critical for (1).

Proof

For convenience, let \(\hat{k}=k(\hat{\ell })\), \(\hat{x}=x^{\hat{k}}(=x^{k(\ell )}\) for all \(\ell \ge \hat{\ell }\)), and \(\hat{M}=M_{\hat{k}}\) denote, respectively, the \(k\)-iteration index, prox-center and matrix corresponding to the last serious step.

Consider \(\ell >\hat{\ell }\) and recall that, since \(M_k^\ell =\hat{M}+\mu _\ell \mathrm{{I}}\) and \(\{\mu _\ell \}\) is nondecreasing at null and backtracking steps,

The sum of this inequality and (22), together with (20) written with \(\ell \) replaced \(\ell -1\), results in the relation

In particular, for \(d=d^\ell \), we obtain that \(\mathtt{H }_{\ell -1}(d^\ell )\le \mathtt{H }_{\ell }(d^\ell )\) from (20), using the identity \(\check{h}_\ell (c_{\hat{k}}(d^\ell ))= h_\ell ^\mathrm{agg}(c_{\hat{k}}(d^\ell ))\). Together with (21), written at \(d=d^\ell \), we see that

By the definitions of \( h_\ell ^\mathrm{agg}\) and \(\mathtt{H }_\ell \), the optimal value in (9) equals \(\mathtt{H }_\ell (d^\ell )\). Hence, (25) implies that the sequence of optimal values in (9) is strictly increasing, with \(\mathtt{H }_\ell (d^\ell )\le \check{h}_\ell (c_k(0))=\check{h}_\ell (c(\hat{x}))\). Since, by (15), \(\check{h}_\ell \le h\), then

So \(\{\mathtt{H }_\ell (d^\ell )\}\uparrow \mathtt{H }_\infty \) for some \(\mathtt{H }_\infty \le (h\circ c)(\hat{x})\), with \(|d^\ell -d^{\ell -1}|^2_{\hat{k},\ell -1}\rightarrow 0\) as \(\ell \rightarrow \infty \), by (25). But \(\mu _\ell \ge \mu _{\hat{\ell }}\) (at null and backtracking steps prox-parameters are nondecreasing), so the left relation in (8) implies that

Using (20) with \(d=0\), we see that \(\mathtt{H }_\ell (0)= h_\ell ^\mathrm{agg}(c_{\hat{k}}(0))\). Together with the definition of \( h_\ell ^\mathrm{agg}\), the left inequality in the second line in (10), and the definition of \(c_k(d^\ell )\), this implies that that \(\mathtt{H }_\ell (0)=\check{h}_\ell (c_{\hat{k}}(d^\ell ))-\hat{G}^{\ell }~\!^{\scriptscriptstyle \top } D_{\hat{k}}d^\ell =\hat{G}^{\ell }~\!^{\scriptscriptstyle \top } c(\hat{x})\). Since \(\hat{G}^\ell \in {\mathop {conv}}\{G^i : i\in \mathtt B _\ell \}\), using (5) and (3) we obtain that \(\mathtt{H }_\ell (0)\le (h\circ c)(\hat{x})\). Therefore, writing (21) with \(\ell -1\) replaced by \(\ell \) at \(d=0\) yields the relations

because the sequence \(\{\mathtt{H }_\ell (d^\ell )\}\) is increasing, by (25), since \(\ell >\hat{\ell }\). Using once more the left relation in (8) we conclude that the sequence \(\{d^\ell \}\) is bounded, and item (i) follows from Proposition 1(i).

To show item (ii), consider iteration indices \(\ell -1,\ell \ge \ell ^{\prime }\), giving two consecutive null steps, and set \(C^{\ell }=c(\hat{x})+Dc(\hat{x}) d^{\ell }\) and \(C^{\ell -1}=c(\hat{x})+Dc(\hat{x}) d^{\ell -1}\). Since \(\delta _\ell \ge 0\), we substract the inequality \(\delta _\ell \le (h\circ c)(\hat{x})-\check{h}_\ell (C^\ell )\), obtained from (11), from nonsatisfaction of (12), both with \(x^k=\hat{x}\), to see that

By (3),

and by (23),

Since \(\{d^\ell \}\) is bounded, any Lipschitz constant \(L\) for \(h\) gives an upper bound for \(\{|G^{\ell }|\}\); as a result,

From (28) and (27) it follows that \(\delta _\ell \rightarrow 0\), and by the right hand side expression in (11), \(\hat{e}_\ell \rightarrow 0\), as stated.

Finally, to see item (iii), the left hand side expression in (11) of \(\delta _\ell \) and the definitions of \( h_\ell ^\mathrm{agg}\) and \(\mathtt{H }_\ell \) give the identity \(\delta _\ell =(h\circ c)(\hat{x})-\mathtt{H }_\ell (d^\ell )\). Therefore, by the right inequality in (25), (21) with \(d=d^\ell \), and the left relation in (8),

From (29) and (28), we obtain that \(\delta _\ell \le \frac{2L|Dc(\hat{x})|}{1-m_1} |d^\ell -d^{\ell -1}|\), so

Letting \(K:=(1-m_1)^2/\Bigl (8 |Dc(\hat{x})|^2L^2\Bigr )\), and summing over \(\ell >\hat{\ell }\),

Furthermore, using (17)(a), the series

converges too. With our assumption (24), this implies that \(\lim \inf |Dc(\hat{x})^{\scriptscriptstyle \top } \hat{G}^{\ell }~\!|^4=0\). Consider indices \(\ell \) in a corresponding convergent subsequence of \(\{d^\ell \}\). Since from the expression for \(d^\ell \) in (10), \(|Dc(\hat{x})^{\scriptscriptstyle \top } \hat{G}^{\ell }~\!|^2=|M_k^\ell d^\ell |^2\ge (\lambda _{\min }(\hat{M})+\mu _{\hat{\ell }})^2|d^\ell |^2\), we see that the subsequence fo \(\{d^\ell \}\) converges to zero and, hence, \(\hat{x}+d^\ell \rightarrow \hat{x}\) on this subsequence. Finally, by boundedness of \(\{C^\ell =c(\hat{x})+Dc(\hat{x})d^\ell \}\), all outer subgradients are bounded and, hence, the subsequence \(\{\hat{G}^\ell \}\) has some accumulation point \(\hat{G}^{acc}\) such that \(Dc(\hat{x})^{\scriptscriptstyle \top } \hat{G}^{acc}=0\). The result follows from passing to the limit in (16) to give \(\hat{G}^{acc}\in \partial h(c(\hat{x}))\), and using the chain rule (2). \(\square \)

Remark 2

(The trivial composite case, suite and end.) In the setting of Remark 1, when \(h\) is not positively homogeneous but merely convex, and \(c\) is the identity, the cutting-plane model having the form (6), then (23) states that

which is consistent with the fact that \(h(x^k)=\varDelta ^k_{\ell -1}\) when \(h\) is positively homogenous, by (5) and (3).

In fact, when \(M_k\equiv 0\) for all \(k\), as considered in the trivial structure, Lemmas 1 and 2 boil down to [12, pp.309 and 311, vol.II, Thms.XV.3.2.2 and XV.3.2.4]. \(\square \)

Putting together Proposition 1 and Lemmas 1 and 2, we can show convergence for objective functions that are inf-compact, i.e., functions having some level set that is nonempty and compact (sometimes also referred to as level-bounded functions). In this case, by lower semicontinuity, \(h\circ c\) always attains its minimum and the sequence of serious steps is bounded.

Theorem 1

(Convergence) Consider solving problem (1) with Composite Algorithm 1 and suppose that in (1) the objective function \(h\circ c\) is inf-compact. If \(0<m_2< m_1<1\), \(\mathtt{tol}_\mathtt{stop}=0\), with both (18) and (24) being satisfied, the following holds.

-

(i)

Either the sequence of serious steps is infinite and bounded, and at least one of its accumulation points is critical for (1).

-

(ii)

Or the last serious step \(\hat{x}\) is critical for (1), with \(\{\hat{x}+d^\ell \}\rightarrow \hat{x}\) for some \(\ell \)-subsequence.

If, instead of (18), the stronger condition (18’) holds, item \((i)\) can be replaced by

-

(i’)

Either the sequence of serious steps is infinite and bounded, and all its accumulation points are critical for (1). \(\square \)

Our conditions (18) and (24), on the variable prox-metric, are fairly general and not difficult to enforce. For (18) to hold, it is enough to take matrices \(M_k^\ell \) that are uniformly bounded from above at serious steps (when \(\ell =\ell _k\)). As for null steps, choosing \(\mu _{\ell +1}\in [\mu _\ell ,\mu _{\max }]\) for some finite bound \(\mu _{\max }>-\lambda _{\min }(\hat{M})\), rule (14) ensures satisfaction of (24). Depending on the particular problem, the more general condition (24) may help in preventing bad choices (too small) for the bound \(\mu _{\max }\).

We finish our analysis by supposing the stopping tolerance is positive. In this case, if (18’) and (24) hold, by Lemmas 1 and 2, the nominal decrease goes to 0. Then, by (11), both \(\hat{e}_\ell \) and \(\hat{G}^{\ell }~\!\) go to 0, and eventually the stopping test will be triggered. For such an iteration index, say \(\ell {\text{ best}}\), (16) gives the following approximate optimality condition for the last serious step \(x^{\mathrm{best}}=x^{k(\ell {\mathrm{best}})}\):

for all \(x\in \mathfrak R ^n\). The relation above ensures approximate optimality for \(x^{\mathrm{best}}\), even when the composite function is nonconvex.

6 Numerical experience

To assess practical performance of the Composite Bundle method, we coded the Composite Algorithm 1 in matlab and ran it on many collections of functions, including some from Sect. 2, using a one 3GHz processor and 1.49GB RAM computer.

6.1 Solvers in the benchmark

We compared the performance of the Composite Bundle method with that of the Matlab HANSO package, implementing a “Hybrid Algorithm for NSO”, and downloadable from http://cs.nyu.edu/overton/software/index.html. In [20] it is explained that, for nonsmooth optimization problems, BFGS may fail theoretically. However, our results below showthe that HANSO package can provide good benchmarks for the purpose of comparison, helping to shed some light on important NSO issues. The HANSO package is organized in two phases:

-

Input to a first phase consists of multiple starting points. For each starting point this phase runs a BFGS method for smooth minimization, with a linesearch that attemps to avoid kinks as explained in [20]. If the termination test is not satisfied at the best point found by BFGS, HANSO continues to the next phase.

-

Starting from the best point found by BFGS, the second phase executes up to three runs of the Gradient Sampling method in [3] with decreasing sampling radii. For initial information, this methods uses BFGS final bundle defined as the last \(\min [100, 2n, n + 10]\) generated points and their (bb) information. For locally Lipschitz functions, the method converges to Clarke critical points in a probabilistic sense; [3].

We also created another hybrid algorithm, the Hybrid Composite Bundle, which after the BFGS phase switches to the Composite Bundle method, starting as does Hanso from BFGS’s final bundle.

Therefore, the benchmark considers the following four solvers:

-

CBun, the Composite Bundle method,

-

BFGS, the first phase in the HANSO package,

-

Hanso, the hybrid variant combining BFGS and Gradient Sampling methods; and

-

HyCB, the hybrid variant combining BFGS and CBun.

6.2 Parameters for the different solvers

Letting \(n\) be the problem dimension, the maximum number of iterations and calls to the oracle were set to \(\mathtt{maxit}=150\min (n,20)\) and \(\mathtt{maxsim}=300\min (n,20)\), respectively. We set the stopping tolerance \(\mathtt{tol}_\mathtt{stop}=10^{-5}\) if \(n<50\) and multiply it by \(\sqrt{n}\) when \(n\ge 50\).

6.2.1 Parameters for CBun and HyCB

The two parameters in (12) and (13) are \(m_1=0.9\) and \(m_2=0.55\), respectively. The minimum and maximum positive thresholds are \(\mu _{\min }=10^{-6}\) and \(\mu _{\max }=10^{8}\). In all of our runs, only strongly active bundle elements are kept at each iteration, but, when there are more than \(|\mathtt{B }\max |=50\) strongly active elements, the bundle is compressed.

If no second-order information is available for the inner mapping, in the variable prox-metric we take \(M_k=0\). Otherwise, we use the combination of Hessian matrices

exploiting sparsity patterns, if they exist. To update the prox-parameter, we use an estimate for \(\lambda _{\min }(M_k)\), obtained by a modified Cholesky factorization.

The prox-parameter was started at

The parameter fact is 0 if the function is convex, and 3 otherwise (such a value constrains the search of the next iterate in a region close to \(x^0\), which makes sense for a nonconvex function). At later iterations, every time a serious step is declared, the update is done as follows with \(k=k(\ell )\):

In these relations, \(\mu _\ell ^\mathtt{qN}\) is computed using the reversal quasi-Newton scalar update in [1, § 9.3.3]:

recalling that \(\ell _k\) and \(\ell _{k-1}\) denote the last two iterations declaring a serious step (\(x^k+d^{\ell _k}\) and \(x^{k-1}+d^{\ell _{k-1}}\), respectively). In all cases, if \(\lambda _{\min }(M_k)<0\), then \(\mu _{\ell +1}\ge -1.1\lambda _{\min }(M_k)\).

Backtracking steps multiply the current prox-parameter by a factor of two. At consecutive null steps, the prox-parameter is defined as

for \(\mathtt nul \) a counter of null steps. This update satisfies the convergence condition (24). If necessary, \(\mu _{\ell +1}\) is projected so that \(\mu _{\ell +1}\in [\mu _{\min },\mu _{\max }]\).

6.2.2 Parameters for BFGS and Hanso

Both tolerances normtol and evaldist are set to \(\sqrt{\mathtt{tol}_\mathtt{stop}}\). In the linesearch, Armijo’s and Wolfe’s parameters were taken equal to 0.01 and 0.5, respectively. The option for a so-called Strong Wolfe criterion is not activated, and the method quits when the linesearch fails. As for the quasi-Newton updates, they are of the full memory type, with scaling of the initial Hessian. Finally, the final bundle keeps \(\min [100, 2n, n + 10]\) past gradients.

6.3 Benchmark rules

In an effort to make comparisons fair, we adopted the following rules:

-

All solvers use the same black-boxes.

-

Each solver has a different computational effort per iteration, which depends not only on the solver, but also on how many times the black-box is called per iteration. The number of iterations is not a meaningful measure for comparison, and the number of (bb) calls is different for each solver. While each iteration of Hanso calls \((\mathtt {bb}_{c})\) and \((\mathtt {bb}_{h})\), CBun calls \((\mathtt {bb}_{h})\) at Step 2, and may or may not call \((\mathtt {bb}_{c})\) and \((\mathtt {bb}_{h})\) at Step 3. Moreover, Hanso always uses gradient values, but CBun requires additional first order information for the inner mapping only at a serious step, to define a new inner model. Striving for fairness, we defined the counters below:

-

For Hanso, one call to both \((\mathtt bb _\mathtt{{c}})\) and \((\mathtt bb _\mathtt{h})\) counted as one \((\mathtt bb )\)-call.

-

For CBun, we kept separate counters for the number of \(c\), \(Dc\), and \(h/G\) evaluations: nc, nDc, nf, respectively. To each counter corresponds a number of scalars needed for the corresponding calculation: \(m\), \(m n\), and \(1+m\), respectively. This gives the number of scalars required for a single evaluation of \(h\circ c\) and a subgradient (as in Hanso):

$$\begin{aligned} \mathtt OneEval =m(1+n)+1+m. \end{aligned}$$Accordingly, the number of oracle evaluations in CBun was defined as

$$\begin{aligned} \#(\mathtt{bb})\text{-calls}=\frac{{\mathtt{nc}m+\mathtt{nDc}mn+\mathtt{nf}(1+m)}}{\mathtt{OneEval}}. \end{aligned}$$

-

At this point a potential advantage of CBun becomes clear: when for a given function the method makes many consecutive null steps, this is not expensive in terms of (bb) calls, since in this case only \((\mathtt bb _h\)) is needed.

-

We also computed total CPU time for each solver to reach its stopping test. This information should mostly be taken as a complement to the counter of (bb) calls. The reason is that CPU times can be misleading, because for almost all the tested functions the (bb) calls take a negligible amount of time, a feature that is rare in real-life problems. For example, for the nuclear generation planning problems in [7, Sec.7], 95 % of the total CPU time is spent in producing the black-box information. By contrast, for all but one instance in all of our runs, (bb) calls represent less than 10 % of the total CPU times (taking up to 40 % for one test-function, written in Fortran and requiring a mex-interface).

-

All solvers use the same quadratic programming packages, for which there are two possibilities: a Fortran library with a mex-interface, or a special Matlab solver. Quadratic programs such as (9) can be solved by quadprog, the built-in matlab QP solver, but we prefer the method in [15], and made a mex-interface for the Fortran code developed by the author. This method is specially tailored for quadratic minimization over a simplex, as it is the case for the problem dual to (9) and, hence, often outperforms quadprog, which is a general solver. Hanso also has a special QP solver, written in Matlab. Since Hanso QP problems amount to setting \(M_k^\ell \equiv 0\) in the inclusion \(0\in \mathop {conv}\{G^i\in \mathtt B _\ell \}+M_k^\ell d^\ell \), which is the optimality condition for (9), we could modify the Hanso QP solver to handle (9). Reciprocally, it is possible to use the Fortran QP solver based on [15] to solve Hanso QP problems.

-

For large-scale instances, Hanso offers a limited memory BFGS method explained in [33]. However, since for the prox-variable metric the calculation of \(M_k\) corresponds to that of a full Hessian, the limited-memory option was not activated in the comparisons.

-

Each solver has specific stopping tests, and since BFGS uses a smooth method, the triggers terminating its runs are only heuristic. For each solver we declare a run a success as follows:

-

For BFGS and Hanso, when the tolerance on the shortest vector in the convex hull of certain subgradients is met (for BFGS, these are the subgradients in its final bundle).

-

For CBun and HyCB, when the stopping test in Step 2 is reached.

-

-

All non-successful runs are declared failures, with a special counter for when a solver reached the maximum number of iterations or calls to the black-box (maxit or maxsim, denoted by \(\mathtt max \) in the tables). Hanso does not check if \(\mathtt max \) is attained during the Gradient Sampling cycles. Other possible reasons for failures are detection of unboundedness in \(x\) or in the function values, errors in the QP solver, and, for BFGS, a nondescent direction, or a problem in the linesearch subroutine.

-

The hybrid variants are not initiated if BFGS detected unboundedness. They are started when BFGS succeeds, reaches maxit, cannot descend from the generated direction, or if the linesearch or the QP solver failed.

-

Both BFGS and CBun use the same starting points, each function was run for 10 different starting points. Unless otherwise specified, the starting points have all components randomly drawn in \([-1,1]\).

-

To measure the accuracy reached by each solver, we only considered test-functions either with a known optimal value \(\bar{f}\), or such that all the solvers converged to the same final value within a tolerance of \(10^{-5}\). In this case, the optimal value \(\bar{f}\) is given by the smallest function value found by all the solvers. Letting \(f^{\mathrm{best}}\) denote the function value of the analyzed case, then

$$\begin{aligned} RA:= - \log _{10} \max \left(10^{-16},\frac{f^{\mathrm{best}} - \bar{f}}{1+|\bar{f}|}\right) \end{aligned}$$measures the number of digits of accuracy achieved by the solver.

-

We exclude from the tables results for those nonconvex cases for which different solvers found different critical points, see Table 8 in the “Appendix” for details.

-

Since full tables are large, for the reader’s convenience in this section we only report the overall results of each full table in the “Appendix”.

6.4 Battery of convex test problems

We first consider the typical functions for convex NSO benchmarking in Table 1.

The composite structure \(h\circ c\) for functions Maxquad and Ury is the one described by the respective examples in Sect. 2. Both TR48 and TSP are the piecewise maximum of affine functions, so the inner mapping is affine. However, our Fortran (bb) code for TSP was too involved to identify the \(c\)-components (corresponding to the minimal 1-trees in the underlying graph), so we just took the trivial composite case for TSP, as in Remark 1. Similarly for BadGuy, because it does not have a positively homogeneous outer mapping.

Table 2 summarizes the Group 1 results from Table 9 in the “Appendix”. In Table 9, each column corresponds to one of the four solvers, CBun, BFGS, Hanso, HyCB, run with the Fortran or Matlab special QP solver (denoted by f and m, respectively). For all of our runs we observed that the Matlab QP special solver is systematically less efficient and/or less reliable than the Fortran one, the shorter Table 2 contains the indicators for the Fortran variants only: CBun f , BFGS f , Hanso f , HyCB f .

To each of the ten functions and each case in Table 1 there corresponds a row in Table 9, reporting the numbers obtained with each solver, averaged over ten runs, with random starting points. All solvers used the same starting points, with components in \([-1,1]\) except for BadGuy, taken in \([-512,512]\). Results are displayed in three columns, with the accuracy RA, the mean CPU time in seconds \(\overline{\mathtt{sec}}\), and the average number of black-box calls \(\overline{\mathtt{(bb) }}\), respectively. At the end of each function family there are two lines. A first line with the mean values averaged for all the considered cases with different \(n\)-values (in this line the second column reports between parenthesis the considered number of runs). The second line gives the number of failures and how many of these failures corresponded to having reached the maximum number allowed for iterations or evaluations (\(\mathtt max \)/fails). Finally, the bottom two lines of Table 9 in the “Appendix” contain the same indicators, averaged over all the problems in the group, as well as of the total number of instances considered for the test. These bottom lines and the average for each function family are reproduced in Table 2 as a summary.

For Group 1, we observe that all methods are very accurate. When compared to CBun, BFGS exhibits a significant increase both in CPU times and number of (bb) calls; failing to trigger its termination criteria 38 % of the times (only thrice the reason was \(\mathtt max \)). For the TSP family, BFGS is 60 % more accurate than CBun, but for getting 5 more digits, BFGS spends 19 (8) times more CPU ((bb) calls) than CBun does. We conjecture that endowing TSP with a nontrivial composite structure can improve CBun’s average figures (we observed a significant change for TR48, when comparing CBun performance on TR48 black-boxes with and without composite structure). Hanso is the slowest solver, and uses the most (bb) calls, but it is not the most accurate method: this hybrid variant did not seem to be adequate for this set of problems, probably because they are all convex. By contrast, HyCB eliminated all of BFGS’s failures, with a relatively low additional computational effort: HyCB extra CPU times and (bb) calls represent less than 5 % of BFGS totals. With respect to CBun, the HyCB gain of 30 % in accuracy is obtained at the cost of an increase of almost 20 and 10 times, respectively, in CPU seconds and (bb) calls. For this group of problems, CBun performs better than all the other solvers.

6.5 Convex and nonconvex problems

The two other groups include a mix of convex and nonconvex problems given in Table 3. Group 2 gathers together functions with low dimensions (\(n\le 50\)), while Group 3 contains high dimensional ones (\(n\in \{100,500\}\)). For each instance in Groups 2 and 3, we give the optimal values when known, or the lowest function value found by all solvers, in Table 8 in the “Appendix”.

For the CPS, MQ, EucSum, and TiltedNorm-collections, matrices and vectors were generated randomly. All \(A\)-matrices are symmetric positive semidefinite, with condition number equal to \(\mathop {rank}A^2\). The \(B\)-matrices in CPS are symmetric positive semidefinite with condition number equal to \(n^2\). To make calculations possible in our computer, for all the sparse matrices the density was set to \(0.1,0.01,0.01,0.001\) for \(n=10,50,100,500\), respectively. For GenModRos, five different starting points were considered, namely \(x^0\in \Bigl \{[-0.1,+0.1],[-1,1],[-2,0],[0,2],[-2,2]\Bigr \}\).

Tables 4 and 5 summarize the results for Groups 2 and 3, respectively. In the appendix, Tables 10 and 11 report the respective full details.

For Group 2 the instances excluded because different solvers found different critical points correspond to two variants of the functions NK and LV, namely F8 and T3 in [33]; and GenModRos with starting point in \([0,2]\) and \([-2,2]\). For this group, the overall results show again that CBun performs better on average than the other three solvers. However, BFGS did better for some instances of LV, as well as for GenModRos and ModRos. CBun had difficulties solving the second instance of LV, corresponding to T3 in [33] with \(n=10\). For the excluded instances of GenModRos, the optimal value (5.337) was found often by BFGS while CBun found only a critical point (with value 9.3283). For the function NesChebRos, nine out of the thirty starting points are very difficult to handle by BFGS, but not by CBun, explaining the huge difference in accuracy obtained by these solvers. For these problems, we observed that BFGS got stuck at a nonoptimal kink and exited having triggered its heuristic stopping test (the projection of zero on its final bundle was smaller than the tolerance). For contrast, the Rosenbrock modifications GenModRos and ModRos put CBun into trouble: these are the only problems for which Cbun systematically makes more (bb) evaluations than BFGS. For these functions, CBun finds a very precise minimizer, after taking many serious steps (very short ones); since for each new serious step the mapping Jacobian \(Dc(\hat{x}^k)\) is computed, this significantly increases the total (bb) counter. Finally, and as observed for Group 1, HyCB seems to be a better hybrid variant than Hanso.

For Group 3 the instances excluded because different solvers found different critical points correspond to four variants of the functions NK and LV, namely F8 and T3, T5, and T6 in [33]; and EucSum with \(n=100\) and \(\mathop {rank}A_j=400\).

As expected, functions in this higher dimensional group are more difficult for all the solvers. The low mean \(\overline{\mathtt{(bb)}}\) for CBun indicates that the methods often stalled making many null/backtracking steps, rather than serious steps. However, the second instance of Ferrier functions (corresponding to outer function \(h(\cdot )=\max (\cdot )\) and \(n=100\)) was difficult for CBun, which made many short serious steps, expensive in terms of (bb) calls. Function MQ with \(n=500\) and \(\mathop {rank}A_j=400\) was very difficult to minimize for all methods. The 100 runs of the NK family did not seem difficult for any solver. CBun exited problem TiltedNorm having reached the maximum number of iterations, while BFGS found a good point and triggered its heuristic stopping test. For Ury, both CBun and BFGS ended by having reached the maximum number of iterations, but BFGS terminated at a point that is much better than the one found by CBun. For NesChebRos, BFGS fails in the linesearch, stuck at a nonoptimal kink, and none of the hybrid variants succeeds in getting away from it.

For this group, and especially for its nonconvex functions, we see a more erratic behaviour of CBun, even though it still has the best performance on average.

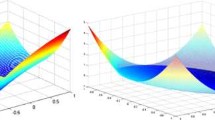

6.6 Performance profiles

Figures 1, 2 and 3 contain performance profiles over all the 920 runs, excluding cases converging to different critical points, but including failures, like in the tables of results. This choice was done not to handicap BFGS, whose heuristic stopping test may sometimes fail to be triggered.

Each curve in a performance profile can be interpreted as a cumulative probability distribution of a resource of interest: accuracy, CPU time, (bb) calls. In general, for a particular solver \(s\), the ordinate \(\phi _s(\theta )\) gives information on the percentage of problems that the solver will solve if given a maximum amount of resource. This maximum amount is equal to \(\theta \) times the minimum amount of resource employed by all solvers. Therefore, for an abscissa \(\theta =\theta _{\min }\) in a graph, the probability \(\phi _s(\theta _{\min })\) of a particular solver is the probability that the solver will win over all the others. As a result, the solver with higher value of \(\phi _s(\theta _{\min })\) should be preferred if a user is only interested in the number of actual wins. On the other hand, for large values of \(\theta \), the probability \(\phi _s(\theta )\) informs if a solver actually solves a problem. Thus, if a user is concerned only in the probability that a solver will be succesful, the solver with highest \(\phi _s(\theta )\) as \(\theta \) becomes large should be considered. The above explanation supposes that “smaller” values of \(\theta \) mean “better performance” of the considered resource. Since for accuracy such is not the case, for this indicator we plotted the reciprocal of the figures obtained by each solver. In this manner in all the profiles below, the solver with the highest curve is the best one for the given indicator of performance.

The first profile, in Fig. 1, shows the performance in terms of accuracy. Looking at the highest value for the leftmost abscissa, we conclude that the hybrid variant HyCB is the most precise solver in 72 % of the runs. The three other solvers, CBun, Hanso, and BFGS, are the most accurate solvers in 37, 36, 31 % runs, respectively.

Profile 2 measures the performance in terms of CPU time in seconds, and shows that CBun is the fastest solver in 56 % of the runs, followed by BFGS, which was fastest in 33 % of the runs.

The final profile, in Fig. 3, measures the performance of the different solvers in terms of (bb) calls, and shows a clear superiority of CBun, which appears as the most economic solver in 88 % of the cases. Hanso makes extensive use of (bb) calls, so it should mostly be used for unstructured nonconvex functions that are not too difficult to evaluate (possibly like the matrix problems in [33, Sec.5.3]). The curves for BFGS and HyCB practically coincide, making both methods indistinguishable in terms of (bb) calls. Since BFGS is faster and HyCB is more precise, the choice between these two solvers should be driven by the user’s preference (speed or accuracy), keeping in mind that HyCB is more reliable in terms of stopping test.

Finally, by examining the right end of the curves in the three profiles, we conclude that all solvers can be deemed similarly successful in solving the considered battery of problems.

6.7 Determining \(\fancyscript{V}\)-dimension

Many composite functions are partly smooth [18], a notion that generalizes to the nonconvex setting the \({\fancyscript{V}}{\fancyscript{U}}\)-space decomposition for convex functions in [16, 24].

Identification of the \({\fancyscript{V}}{\fancyscript{U}}\) subspaces can be used to determine directions along which the function behaves smoothly so that a (manifold restricted) Newton-like method is likely to succeed. Such smooth directions lie in the \({\fancyscript{U}}\)-subspace; its orthogonal complement, the \({\fancyscript{V}}\)-subspace, concentrates all the relevant nonsmoothness of the function, at least locally. At a critical point \(\bar{x}\), the \({\fancyscript{V}}\)-subpace is spanned by the subdifferential \(Dc(\bar{x})^{\scriptscriptstyle \top }\partial h(\bar{C})\), with \(\bar{C}=c(\bar{x})\), and the \({\fancyscript{U}}\)-subspace is the orthogonal complement of \({\fancyscript{V}}\). Alternatively, in the wording of [18], the \({\fancyscript{U}}\)-subspace is the subspace tangent to the smooth activity manifold at \(\bar{x}\), and \({\fancyscript{V}}={\fancyscript{U}}^\perp \).

In [20, 33] it is observed that BFGS can retrieve \({\fancyscript{V}}{\fancyscript{U}}\)-information by analyzing the eigenvalues of the inverse Hessian used to define a new iterate. For comparison purposes, we consider CBun and BFGS only and estimate the dimension of the respective generated \({\fancyscript{V}}\)-subspaces as follows:

-

For CBun we compute the dimension of the subspace spanned by the final strongly active gradients in the bundle:

$$\begin{aligned} \mathop {dim}{\fancyscript{V}}_{\text{ CBun}}:=\mathop {rank}\left\{ Dc(x^k)^{\scriptscriptstyle \top } ({G^i}-\hat{G}^{\ell }~\!) : i\in \mathtt{B }_\ell \text{ with} \alpha _i^\ell >0\right\} , \end{aligned}$$for \(x^k\) the last generated serious step and \(\ell \) the iteration triggering the stopping test; recalling that in Step 4 of Composite Algorithm 1 the bundle sizes are kept controlled by a parameter \(|\mathtt{B }\max |\),

-

For BFGS we count how many eigenvalues of the final inverse Hessian \(H\) cluster near 0:

$$\begin{aligned} \mathop {dim}{\fancyscript{V}}_{\text{ BFGS}}:=\mathop {card}\left\{ i\le n: \frac{\lambda _i(H)}{\lambda _{\max }(H)}\le \epsilon \right\} , \end{aligned}$$for \(\epsilon \) a given tolerance.

Table 6 reports the obtained results for some of the problems in Groups 1 and 2, with low dimension and \({\fancyscript{V}}\)-dimensionality depending on the case. The parameter settings were \(|\mathtt{B }\max |=50\) and \(\epsilon \in \{0.1,0.01\}\) (which gave identical results for this group of runs). Each problem was run 10 times with random starting points. For TR48 the exact \(\mathcal V \)-dimension is reported as ??, because it is unknown.

Even though the rules adopted for determining the \({\fancyscript{V}}\)-dimensions are rather rough, both CBun and BFGS estimations are reasonable, with a few exceptions. For both solvers the worst results are those obtained for the nonconvex EucSum functions. The extremely low \({\fancyscript{V}}\)-dimension estimated by BFGS for BadGuy comes from the fact that it is hard to automatically determine when a very small eigenvalue should be considered equal to zero. An a posteriori (visual) examination of the eigenvalues obtained for each starting point shows a rather erratic behaviour of BFGS for this function over the different starting points, even though BFGS’s heuristic stopping test was always triggered. Such oscillation could be explained by a lack of stability of the Hessian with respect to small perturbations, a common phenomenon for a nonsmooth function near a kink.

We made a second group of runs, to determine the impact of smaller or larger \({\fancyscript{V}}\)-dimension, with respect to the dimension of the full space. We considered the CPS function, with dimension \(n\in \{10,50,100\}\) and varying \({\fancyscript{V}}\)-dimension. For this example, the \({\fancyscript{V}}\)-dimension coincides with the rank of the matrix \(A\) (taken with sparse density equal to 0.1 for all cases).

Table 7 reports the \({\fancyscript{V}}\)-dimensions estimated by CBun and BFGS for different parameters. For CBun, the maximum bundle size was set to 50 and 100: we expect results to be worse if \(|\mathtt{B }\max |<\mathop {dim}{\fancyscript{V}}+1\) and the bundle needs to be compressed to an insufficient number of elements. For BFGS, we took two values of \(\epsilon \), as before. In the table, the parameter values appear between parentheses next to the name of each solver.

We observe that problems with larger \({\fancyscript{V}}\)-subspaces are more difficult for both solvers. In general, CBun(100) seems to give a reasonable estimate, but this is not always true, especially when \(n=100\).

We conclude our analysis with Fig. 4, with the real and estimated \({\fancyscript{V}}\)-dimensions for all the 30 different functions considered in this subsection. In general, we observe that BFGS overestimates the size of the \({\fancyscript{V}}\)-space. We emphasize that this set of tests determining \({\fancyscript{V}}\)-dimensionality is only preliminary, and rather crude. For this reason, the conclusions above should not be taken as an indication of goodness or badness of a solver. The subject of determining \({\fancyscript{V}}{\fancyscript{U}}\) subspaces is still rather unexplored, with a few exceptions in [20, 33], and the MQ functions considered in [6].

7 Concluding remarks

The composite bundle method presented in this work makes tractable the algorithm ProxDescent in [19] for a large class of composite functions, having real-valued, positively homogeneous, and convex outer functions. In particular, the method can be applied to minimize some nonconvex nonsmooth functions, a challenging issue for bundle methods. Our composite cutting-plane model, approximating the conceptual model, avoids typical pitfalls in nonconvex bundle methods.

The numerical experience reported in this work shows the good performance of method for problems of moderate size. For large dimensions, the use of variable prox-metrics may increase the solution times too much, even if there are sparse patterns to exploit. The impact of such an increase is problem dependent: for some functions (such as CPS and TSP) there is a clear advantage in applying a bundle method (\(n\le 500\) in CPS, and \(n\le 3038\) in TSP, but \(M_k\equiv 0\)). The advantage is less clear for other functions, especially some of the nonconvex ones in Group 3. Since we sometimes also observed that too many short serious steps made CBun stall, we conjecture that a linesearch (replacing or complementing the curved search modifying \(\mu _\ell \)) can improve the performance of Composite Algorithm 1 for nonconvex functions, but this is a subject of future research.

Although BFGS is accurate and fast (at least for our examples, with computationally light blackboxes), neither BFGS nor Hanso appeared as the best alternative for many classes of functions considered in our runs. However, conclusions can be different for a different set of test-functions. Also, the usefulness of a solver depends on the specific purpose sought by the user: since BFGS descends fast from a starting point, it could be an interesting alternative if not much accuracy is required, or if the user seeks a “better” point, without caring if it is the best one. For some problems, we observed that BFGS got stuck at a nonoptimal kink and exited having triggered the heuristic stopping test (the projection of zero onto its final bundle was smaller than the tolerance). If reliability is a concern, the output of BFGS can be plugged into a bundle method, as in HyCB, to satisfy a theoretical stopping test.

However, if too much accuracy is desired, the hybrid variant is likely to increase the computational effort of BFGS too much (at least when compared to applying directly CBun). As for Hanso, since it makes extensive use of (bb) calls, we think it should mostly be used for unstructured nonconvex functions that are not too difficult to evaluate (possibly like the matrix problems in [33, Sec. 5.3]).

Another important issue to consider for a heavy duty application is that, even in the presence of a composite structure, the resulting smooth mapping may be large, or have no special second order sparse patterns to exploit. In this case, it can be sound to use null or diagonal matrices \(M_k\) in Composite Algorithm 1, or apply the limited memory variants in [9, 33].

We mention the work [14], comparing several NSO general purpose solvers for different type and size of problems, as well as for different (bb) available information. Table 3 therein, analizing the efficiency and reliability of the considered solvers, can be useful as complementary information for the conclusions drawn from our numerical results, keeping in mind that solvers are different and that the test-functions are not exactly the same, although there is some intersection.

Comparison with [30]. The proximity control bundle algorithm [30] for nonconvex optimization considers models for functions such that several Clarke subgradients at one point can be computed at reasonable cost. The proposed scheme is fairly general and bears some resemblance to our composite approach, which we explain next.

Instead of assuming that the objective function enjoys some particular structure, in [30] the authors suppose there is available a certain local model, \(\phi (\cdot ,x^k)\), for the objective function \(f\) at the current iterate serious \(x^k\). In our notation, \(f=h\circ c\), and the local model is \(\phi (x^k+\cdot ,x^k)= h(c_k(\cdot ))\). As explained in [30, Rems. 2.9 and 6.3], such a composite model is both a strong and strict first-order model for \(f\). At each iteration \(\ell \) the local model is approximated by a working model, \(\phi _\ell (\cdot , x)\), which would correspond to our composite cutting-plane model, \(\check{h}_\ell (c_k(\cdot ))\), keeping in mind that \(k=k(\ell )\). Contrary to our model, the cutting-plane model \(\check{h}_\ell \) needs to satisfy both

Such relations (equivalent to the conditions \(\phi _\ell (x,x)=(h\circ c)(x)\) and \(\partial _1 \phi _\ell (x,x)\subset \partial _1 \phi (x,x)\) imposed to the first-order working model in [30, Def. 3.3]) only hold if the outer subgradient information for \(C=c(x^k)\) was kept in the bundle. For our composite bundle method, such is not a requirement for convergence: only the aggregate and the last generated gradient (\(\hat{G}^\ell \) and \(G^{\ell +1}\), respectively) need to enter the bundle.

Furthermore, the method in [30] drops all the accumulated information every time there is a serious step. More precisely, whenever \(k(\ell +1)=k+1\), the next working model \(\phi _{\ell +1}\) uses the singleton bundle \(\mathtt{B }=\{G^{\ell +1}\}\). This procedure, which can be seen as restarting the method from a different initial point, may be justified for general nonconvex functions. However, for our composite functions such a clearing of the bundle may harm efficiency: in our setting the outer function \(h\) is convex, and past information can (and should) be kept along iterations to improve the algorithm’s performance. Indeed, thanks to the convexity of the outer function, our method ends up with a point that is approximately optimal (recall the last paragraphs in Sect. 5).

Another related important difference is that the working model in [30], in addition to (15), (22), and (23), needs to incorporate exact cutting planes, which corresponds to requiring that

Instead, in (13) we use the outer subgradient \(\varGamma ^\ell \in \partial h(C^\ell )\) for \(C^\ell =c(x^k+d^\ell )\) to detect if the linearization of the inner mapping is not good enough and trigger the backtracking process. But if \(x^k+d^\ell \) is declared a serious step, the corresponding subgradient \(\varGamma ^\ell \) does not enter the bundle (but nothing prevents the bundle management step to incorporate this data).