Abstract

We investigate multi-step Chebyshev spectral collocation method for Volterra integro-differential equations. We obtain numerical solution Y(t) and \(Y'(t)\) to approximate unknown function y(t) and its derivative \(y'(t)\) while Y(t) and \(Y'(t)\) keep the relation that \(Y'(t)\) is the derivative of Y(t). We discuss existence and uniqueness of the solution to corresponding discrete system. We provide convergence analysis for proposed method. Numerical experiments are carried out to confirm theoretical results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Volterra integro-differential equations (VIDEs) arise in many problems such as modelling of heredity effects and population dynamics. Due to this, many numerical methods are developed to solve these equations. Linz [13] generalized standard multistep methods to solved VIDEs. Brunner [2] studied implicit Runge–Kutta methods for VIDEs. Makroglou [14] proposed a block-by-block method for nonlinear VIDEs. Lin et al. [12] investigated Petrov–Galerkin Methods for Linear VIDEs. Zhang and Vandewalle [20] developed a numerical method based on the combination of general linear methods with compound quadrature rules for VIDEs with memory. Yi [19] investigated an h–p version of the continuous Petrov–Galerkin finite element method for nonlinear VIDEs. In his monograph, Brunner [3] provided basic principles and the analysis of collocation methods for Volterra integral and integro-differential equations.

Spectral methods are well known for their high precision in differential equations. The monograph [8] by Guo presents the basic algorithms, the main theoretical results, and some applications of spectral methods. In [9], Guo and Wang investigated Jacobi approximations in non-uniformly Jacobi-weighted Sobolev spaces. Some results serve as an important tool in the analysis of numerous quadratures and numerical methods for differential and integral equations. Canuto et al. [4] provided fundamentals of spectral methods on simple domains. In [15], Shen et al. provided a detailed presentation of basic spectral algorithms, a systematical presentation of basic convergence theory and error analysis for spectral methods. Global spectral methods were developed to solve Volterra type integral equations. Tang and his coworkers [16] proposed Legendre spectral collocation method to solve VIEs (Volterra integral equations). Subsequently, Chen and Tang [5–7] proposed Jacobi spectral collocation method to solve weakly singular VIEs. In [11], Li et al. proposed a parallel spectral method to solve VIEs. In [1, 10, 17, 18], global spectral methods were developed to solve VIDEs.

In this paper, we investigate multi-step Chebyshev spectral collocation method for the following VIDEs

We assume that functions describing the above equation all possess continuous derivatives of at least order \(m\ge 1\) on their respective domains, i.e.,

where \(C^{m}([a,b])\) is the space of functions possessing continuous derivatives of at least order \(m\ge 1\) on their domain [a, b].

We divide the interval [0, T] into several subintervals \([\eta _{\mu },\eta _{\mu +1}],\mu =0,1,\ldots ,M\), \(\eta _{0}=0\), \(\eta _{M+1}=T\). In each subinterval \([\eta _{\mu },\eta _{\mu +1}]\), we set \(N+1\) Chebyshev Gauss–Lobatta points as collocation points. We approximate \(y'(t)\) at these collocations points by a continuous piecewise polynomial \(Y'(t)\) of degree N. Then we define \(Y(t):=y_0+\int _{0}^{t}Y'(s)ds\) to approximate y(t). Our method is to find \(Y'(t)\) and Y(t). It is worth noting that \(\frac{d}{dt}Y(t)=Y'(t)\). We discuss existence and uniqueness of the solution to the discrete systems. We provide convergence analysis to show that numerical errors decay at the rate \(h^{m}N^{(1/2)-m}\) in space \(L^{\infty }(0,T)\), where \(h:=\max \{(\eta _{\mu +1}-\eta _{\mu })/2:\mu =0,1,\ldots ,M\}\). This result implies that employing more collocation points (N is larger), and dividing the interval [0, T] into more subintervals (h is smaller), the numerical solution will be more accurate. If given functions possess more regularity (m is larger), numerical errors decay faster. We carry our numerical experiments to confirm these theoretical results.

For the long interval [0, T] (T becomes larger), in order to obtain high precision numerical solution to (1), people using the global spectral collocation method (see [10]) will encounter the difficulty that finding the inverse of a large dimension matrix costs many time on computation. We avoid this problem by dividing the global interval [0, T] into several subintervals. The provided convergence analysis show that dividing the interval [0, T] does not make errors accumulate but even decay faster at the rate \(h^{m}\). We carry out numerical experiment to confirm this result (see Example 3). Numerical experiment shows that multi-step method is more efficient than global method. We also investigate the availability of the proposed method for nonlinear VIDEs.

We choose Chebyshev Gauss–Lobatto points as collocation points because we note that the discrete Chebyshev transforms and the inverse discrete Chebyshev transforms are based on these points (see [4, page 495]). This two transforms enable one to transform freely between physical space and transform space. Fast Fourier transforms can be applied in computing this two transforms to significantly reduce computation. Legendre points are involved in the quadrature formulas in this paper because Gauss quadrature formulas with Legendre points are the best way to approximate the integral terms with smooth kernel.

This paper is organized as follows. In Sect. 2, we introduce the multi-step Chebyshev spectral-collocation method for VIDE (1). The existence and uniqueness of the solution to the discrete system is discussed in Sect. 3. Some useful lemmas for the convergence analysis will be provided in Sect. 4, and the convergence analysis in space \(L^{\infty }\) will be given in Sect. 5. Numerical experiments are carried out in Sect. 6. Finally, in Sect. 7, we end with the conclusion and the future work.

2 Multi-step Chebyshev spectral-collocation method

In this section, we introduce the multi-step Chebyshev spectral collocation method for (1).

Divide the interval [0, T] into \(M+1\) sub intervals \(\delta _{\mu }:=[\eta _{\mu },\eta _{\mu +1}],\mu =0,1,\ldots ,M\), where \(\eta _{0}=0,\eta _{\mu }<\eta _{\mu +1},\eta _{M+1}=T\). In each subinterval \(\delta _{\mu }\), we set \(N+1\) collocation points

where \(h_{\mu }:=\frac{\eta _{\mu +1}-\eta _{\mu }}{2}\), \(\{x_{i}\}_{i=0}^{N}\) is the set of Chebyshev Gauss–Lobatto points in the standard interval \([-1,1]\).

Use \(y'^{\mu }_{i}\) to approximate \(y'(t_{i}^{\mu })\), and

to approximate \(y'|_{\delta _{\mu }}(t)\), i.e., the restriction of \(y'(t)\) to \([\eta _{\mu },\eta _{\mu +1}]\). \(L_{j}^{\mu }(t)\) is the jth Lagrange interpolation basic function, i.e.,

Then \(y'(t)\) can be approximated by a function \(Y'(t)\) defined on \([-1,1]\), i.e.,

which is a continuous functions whose restriction to \([\eta _{\mu },\eta _{\mu +1}]\) is polynomial of degree N. Define

which implies that

We use Y(t) to approximate y(t).

Discrete (1) as

Replace y(t) and \(y'(t)\) with Y(t) and \(Y'(t)\) respectively,

which can be rewritten as

Approximate integral terms by Gauss quadrature formula,

where \(\{v_{k},w_{k}\}_{k=0}^{N}\) is the set of Legendre Gauss–Lobatto points and weights in standard interval \([-1,1]\), and

On the other hand, from (3),

Note that \(Y'_{r}(s),r=0,1,\ldots ,M\) are polynomials of degree N. Then integral terms in (10) can be exactly approximated by Gauss quadrature formula,

which can be written as

where

Note that \(L_{j}^{r}(s_{r}(v))=F_{j}(v)\), where \(F_{j}(v)\) is the jth Lagrange interpolation basic functions associated with Chebyshev Gauss–Lobatto points \(\{x_{i}\}_{i=0}^{N}\) in standard interval \([-1,1]\). Then (11) can be written as

Combining (8) and (13) we obtain the numerical scheme for the proposed method in this paper,

where \(y'^{\mu }_{i},i=0,1,\ldots ,N;\mu =0,1,\ldots ,M\) are unknown elements which are used to construct \(Y'(t)\) and Y(t) approximated to \(y'(t)\) and y(t) respectively.

3 The existence and uniqueness of the solution to discrete system

In this section, we investigate the existence and uniqueness of discrete system (14) and (15). In order to implement the numerical scheme (14) and (15) by computer, we write it in matrix form. From (15),

where

Then

Note that

Then

Note that

Then

From (14) we have

Let

Then the matrix form corresponding to (14) is

which is equal to

Since \(a(t),K(t,s),F_{j}(s)\) are continuous on their definition domain, then elements of matrix \({\mathbb {A}}^{\mu }\) are all uniformly bounded no matter what \(\mu \) is. The Neumann Lemma ([3, page 87]) then shows that the inverse of the matrix

exists whenever

for some matrix norm. This clearly holds whenever \(h_{\mu },\mu =0,1,\ldots ,M\) are uniformly sufficient small. Then the matrix equation (26) possesses a unique solution which implies that so does for discrete system (14) and (15). We conclude this result as the following theorem.

Theorem 1

If \(h_{\mu }(\mu =0,1,\ldots ,M)\) are uniformly sufficient small, then the discrete system (14) and (15) possess unique solution.

4 Useful lemmas

In this section, we give some lemmas which will be used in Sect. 5. For simplicity, let \(\partial _{x}^{k}u(x):=\frac{\text {d}^{k}u}{\text {d}x^{k}}(x)\). Before giving lemmas, we introduce some spaces.

Let (a, b) be a bounded interval in real line, \(\omega (x)\) is the Chebyshev weight function on (a, b). Set \(L^{2}_{\omega }(a,b)\) be the measurable functions space equipped with the norm

Assume that \(m\ge 1\) is an integer. \(H^{m}_{\omega }(a,b)\) is a Sobolev space defined by

Denote by \(L^{\infty }(a,b)\) the measurable functions space with the norm

\(L^{2}(a,b)\) is a space satisfying

\(H^{m}(a,b)\) is a Sobolev space defined by

Let C([a, b]) be the continuous functions space on [a, b].

For \(y\in C([0,T])\), define an interpolation operator \(I_{N}\) as

where \(y|_{\delta _{\mu }}(t)\) is the restriction of y(t) to \(\delta _{\mu }:=[\eta _{\mu },\eta _{\mu +1}]\), \(I_{N}^{\mu }\) is the interpolation operator associated the collocation points \(t_{i}^{\mu },i=0,1,\ldots ,N\) in \([\eta _{\mu },\eta _{\mu +1}]\), i.e.,

Hereafter, C denotes a constant which is independent of N.

Lemma 1

[4] Assume that \(u\in H_{\omega }^{m}(-1,1)(m\) is a positive integer), then

where \(J_{N}\) is the interpolation operator associated with Chebyshev Gauss–Lobatto points \(\{x_{i}\}_{i=0}^{N}\) in standard interval \([-1,1]\).

Lemma 2

Assume that \(y(s)\in C^{m}([0,T])(m\) is a positive integer), then

where \(h:=\max \{h_{\mu }:\mu =0,1,\ldots ,M\}\).

Proof

By the definition of \(I_{N}^{\mu }\), \((I_{N}^{\mu }(y|_{\delta _{\mu }}))(t)\) is a function defined on \([\eta _{\mu },\eta _{\mu +1}]\). The variable transformation \(s_{\mu }(x)\) defined by (9) changes it to be a new function defined on \([-1,1]\),

In the other hand, \(y|_{\delta _{\mu }}(s_{\mu }(x))\) is a function defined on \([-1,1]\). Its Lagrange interpolation polynomial associated with the Chebyshev Gauss–Lobatto points \(x_{j},j=0,1,\ldots ,N\) in \([-1,1]\) is

Note that

Inserting this into (33) yields

By (28)

This is (30).

Now we prove (31). It is obviously that

By (29),

which together with (37) yields

This leads to (31). \(\square \)

Lemma 3

[4] Assume that \(u(x)\in H^{m}(-1,1)(m\) is a positive integer), \(P_{N}(x)\) is a polynomial of degree not exceeding N. Then

Lemma 4

Assume that \(y(t)\in H^{m}(0,T)(m\) is a positive integer), \(Q_{N}(t)\) is function whose restriction to \(\delta _{\mu }:=[\eta _{\mu },\eta _{\mu +1}]\), \(Q_{N}|_{\delta _{\mu }}(t)\), is a polynomial of degree not exceeding N. Then for \(t\in [\eta _{\mu },\eta _{\mu +1}]\),

where \(s^{-1}_{\mu }(t)\) is defined by (12).

Proof

Note that

Use the variable transformation \(s_{r}(v)\) to change the integration interval \([\eta _{r},\eta _{r+1}]\) to standard interval \([-1,1]\),

By Lemma 3,

Therefore

Similarly,

Then

By Cauch’s inequality which is stated as

we have

which together with (43) yields (39). \(\square \)

Lemma 5

[16] Assume that \(e(t)\in C([0,T])\) satisfying

where \(u\in L^{\infty }(0,T),0<c<+\infty \). Then there exist a constant C independent of e(t) and u(t) such that

5 Convergence analysis

Theorem 2

Assume that Y(t) and \(Y'(t)\) obtained by scheme (14) and (15) are approximations to y(t) and \(y'(t)\) which lie in (1) with given functions possessing continuous derivatives of at least m order. Then

where \(e_{0}(t):=y(t)-Y(t),\ e_{1}(t):=y'(t)-Y'(t),\widehat{K}:=\max \{\Vert \partial _{s}^{m}K(t,\cdot )\Vert _{L^{2}(0,t)}:t\in [0,T]\}\).

The error estimation result (47) implies that the delay of errors dependent of N, h, m. Employing more collocation points (N is larger), and dividing the interval [0, T] into more subintervals (h is smaller), the numerical solution will be more accurate. If given functions possess more regularity (m is larger), numerical errors decay faster. It is worth noting that N, h, m are independent of each other. We carry numerical experiments in Sect. 6 to confirm these theoretical results. Note that \(\widehat{K}\), \(\Vert y\Vert _{L^{\infty }(0,T)}\) and \(\Vert \partial ^{m+1}_{t}y\Vert _{L^{\infty }(0,T)}\) will become larger if T becomes larger. This implies that for fixed N and h, a large T is not good for us to obtain high precision numerical solution. We carry out numerical experiment to investigate the effect of T on the decay of errors. For a large T, we investigate the difference between the global spectral collocation method and multi-step spectral collocation method. Numerical result show that multi-step spectral collocation method perform better. All of these numerical experiments will be carried out in Sect. 6.

Proof

where

Multiply \(L_{j}^{\mu }(t)\) to both side of (5) and sum up from \(i=0\) to N,

By the definition of \(I_{N}\),

which can be rewritten as

where

By Lemma 2,

From (4) we know that \(\frac{d}{dt}e_{0}(t)=e_{1}(t)\) or \(e_{0}(t)=\int _{0}^{t} e_{1}(s)ds\) which implies that \(\Vert e_{0}\Vert _{L^{\infty }(0,T)}\le T\Vert e_{1}\Vert _{L^{\infty }(0,T)}\). Then by Lemma 2 with \(m=1\),

Similarly,

By Lemma 4,

Let

which implies that

We have

which together with (51) and (53) yields

Let

Then (60) becomes

which can be rewritten as

By Dirichlet formula,

Then there exist constants c independent of \(e_{1}(t)\), N and h such that

Note that \(e_{1}(t)\) is a function dependent of h, N. At this points, \(\Vert e_{1}\Vert _{L^{\infty }(0,T)}\) is a constant dependent of h and N. Fix h and N. Then (65) is a fixed inequality which implies that the relation between \(e_{1}\) and \(u(h,N,\Vert e_{1}\Vert _{L^{\infty }(0,T)})\) is fixed. By Lemma 5, there exist constant \(C_{0}\) independent of \(e_{1}(t)\), \(u(h,N,\Vert e_{1}\Vert _{L^{\infty }(0,T)})\), N and h such that

We can choose sufficiently small h and sufficiently large N such that

Then

which leads to that

This is (47) for \(e_{1}\). By the relation \(\Vert e_{0}\Vert _{L^{\infty }(0,T)}\le T\Vert e_{1}\Vert _{L^{\infty }(0,T)}\) we obtain (47) for \(e_{0}\). \(\square \)

6 Numerical experiments

In this section we carry out numerical experiments to confirm theoretical results obtained in Sect. 5.

Example 1

Consider VIDEs

The corresponding solution is \(y(t)=e^{t},t\in [0,2]\).

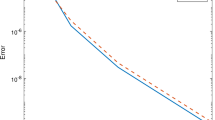

This example is provided to investigate effect of N and 1 / h on the delay of errors. Tables 1 and 2 list errors versus N and h. Figures 1 and 2 plot the behavior of decaying errors. We can see from Figs. 1 and 2 that refining mesh and employing more collocation points will enhance the precision of the numerical solution. This confirms theoretical results.

Example 1: error versus N with \(h=1\)

Example 1: errors versus 1 / h with \( N=3\)

The following numerical example is provided to underline the role of m in the performance of decaying errors.

Example 2

Consider VIDEs

The corresponding solution is \(y(t)=t^{m+\frac{1}{2}},t\in [0,2]\)

It is worth noting that the kernel function is \(K(t,s)=s^{m+\frac{1}{2}}\) whose derivative of \(m+1\) order is singular at \(s=0\). Tables 3 and 4 display errors versus N and 1 / h for \(m=1,3\). The corresponding performance of errors are plotted in Figs. 3 and 4 from which we can see that errors decay faster if m is larger. This result is consistent with theoretical results.

Example 2: errors versus N with \(h=1\)

Example 2: errors versus 1 / h with \(N=3\)

Example 3

Consider VIDEs

The corresponding solution is \(y(t)=\sin t,t\in [0,T]\)

From (47) we know that \(\widehat{K}\), \(\Vert y\Vert _{L^{\infty }(0,T)}\) and \(\Vert \partial ^{m+1}_{t}y\Vert _{L^{\infty }(0,T)}\) will become larger if T becomes larger. This implies that a large T goes against the decay of errors. Example 3 is provide to investigate the effect of T on the decay of errors. By the way, we compare the multi-step spectral collocation method with global spectral collocation method provided that T changes from 1 to 20. For the global method (h \(=\) T/2), we let the number of collocation points in interval [0, T] be 11T. For the multi-step method (h \(=\) 1/2), we let the number of collocation points in subinterval \([\mu ,\mu +1]\) be 11, which implies that the number of collocation points on interval [0, T] is 11T. Our goal in doing so is to maintain the same number (11T) of collocation points in interval [0, T] for both methods. The errors and time cost versus T are listed in Table 5. The performance of errors along with the change of T is plotted in Fig. 5 which shows that for both methods errors increase as T increases. This agrees with the theoretical result (47) in which \(\widehat{K}\) and \(\Vert \partial ^{m+1}_{t}y\Vert _{L^{\infty }(0,T)}\) increase as T increases. The behavior of time costs versus T for both method is plotted in Fig. 6 from which we can see that the multi-step method performs better than global method including global Legendre spectral collocation method.

Example 3: errors versus T

Example 3: time cost versus T

For nonlinear VIDEs of the following form

we can also design a numerical scheme similar to the linear case

Example 4

Consider nonlinear VIDEs

The corresponding solution is \(y(t)=\sin t,t\in [0,2]\)

Example 4: errors versus N with \(h=1\)

Example 4: errors versus 1 / h with \(N=3\)

Errors versus N and 1 / h are listed in Tables 6 and 7. The behavior of decay errors is plotted in Figs. 7 and 8 from which we can see that errors decay at power type. This shows that our method can handle the nonlinear VIDEs.

7 Conclusion and future work

We investigate multi-step Chebyshev spectral collocation method for Volterra integro-differential equations. We discuss existence and uniqueness of corresponding discrete system. We provide convergence analysis for proposed method. Numerical experiments are carried out to confirm theoretical results.

Our future work will focus on multi-step Chebyshev spectral collocation method for Volterra type integral equations with non-vanishing delay and partial Volterra integro-differential equations.

References

Ali, I.: Convergence analysis of spectral methods for integro-differential equations with vanishing proportional delays. J. Comput. Math. 29, 49–60 (2011)

Brunner, H.: Implicit Runge–Kutta methods of optimal order for volterra integro-differential equations. Math. Comput. 42(165), 95–109 (1984)

Brunner, H.: Collocation Methods for Volterra Integral and Related Functional Differential Equations, vol. 15. Cambridge University Press, London (2004)

Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods Fundamentals in Single Domains. Springer, Berlin (2006)

Chen, Y., Li, X., Tang, T.: A note on Jacobi spectral-collocation methods for weakly singular Volterra integral equations with smooth solutions. J. Comput. Math. 31, 47–56 (2013)

Chen, Y., Tang, T.: Spectral methods for weakly singular Volterra integral equations with smooth solutions. J. Comput. Appl. Math. 233(4), 938–950 (2009)

Chen, Y., Tang, T.: Convergence analysis of the Jacobi spectral-collocation methods for Volterra integral equations with a weakly singular kernel. Math. Comput. 79(269), 147–167 (2010)

Goo, B.Y.: Spectral Methods and Their Applications. World Scientific, Singapore (1998)

Guo, B., Wang, L.: Jacobi approximations in non-uniformly Jacobi-weighted sobolev spaces. J. Approx. Theory 128(1), 1–41 (2004)

Jiang, Y.-J.: On spectral methods for Volterra-type integro-differential equations. J. Comput. Appl. Math. 230(2), 333–340 (2009)

Li, X., Tang, T., Xu, C.: Parallel in time algorithm with spectral-subdomain enhancement for Volterra integral equations. SIAM J. Numer. Anal. 51(3), 1735–1756 (2013)

Lin, T., Lin, Y., Rao, M., Zhang, S.: Petrov–Galerkin methods for linear Volterra integro-differential equations. SIAM J. Numer. Anal. 38(3), 937–963 (2000)

Linz, P.: Linear multistep methods for Volterra integro-differential equations. J. ACM (JACM) 16(2), 295–301 (1969)

Makroglou, A.: Convergence of a block-by-block method for nonlinear Volterra integro-differential equations. Math. Comput. 35(151), 783–796 (1980)

Shen, J., Tang, T., Wang, L.-L.: Spectral Methods: Algorithms, Analysis and Applications, vol. 41. Springer Science and Business Media, Berlin (2011)

Tang, T., Xiang, X., Cheng, J.: On spectral methods for Volterra integral equations and the convergence analysis. J. Comput. Math. 26(6), 825–837 (2008)

Tao, X., Xie, Z., Zhou, X.: Spectral Petrov–Galerkin methods for the second kind Volterra type integro-differential equations. Numer. Math. Theory Methods Appl. 4, 216–236 (2011)

Wei, Y., Chen, Y.: Legendre spectral collocation methods for pantograph Volterra delay integro-differential equations. J. Sci. Comput. 53(3), 672–688 (2012)

Yi, L.: An h-p version of the continuous Petrov-Galerkin finite element method for nonlinear Volterra integro-differential equations. J. Sci. Comput. 65(2), 715–734 (2015)

Zhang, C., Vandewalle, S.: General linear methods for Volterra integro-differential equations with memory. SIAM J. Sci. Comput. 27(6), 2010–2031 (2006)

Author information

Authors and Affiliations

Corresponding author

Additional information

This work is supported by the Foundation for Distinguished Young Teachers in Higher Education of Guangdong Province (YQ201403), and the Provincial Foundation of Guangdong University of Finance for Maths Models Teaching Team (0000-E205010014157).

Rights and permissions

About this article

Cite this article

Gu, Z. Multi-step Chebyshev spectral collocation method for Volterra integro-differential equations. Calcolo 53, 559–583 (2016). https://doi.org/10.1007/s10092-015-0162-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10092-015-0162-z

Keywords

- Volterra integro-differential equations

- Chebyshev Gauss–Lobatto points

- Multi-step spectral collocation method

- Convergence analysis

- Numerical experiments