Abstract

Dogs are an ideal species to investigate phylogenetic and ontogenetic factors contributing to face recognition. Previous research has found that dogs can recognise their owner using visual information about the person’s face, presented live. However, a thorough investigation of face processing mechanisms requires the use of graphical representations, and it currently remains unclear whether dogs are able to spontaneously recognise human faces in photographs. To test this, pet dogs (N = 60) were briefly separated from their owners and, to achieve reunion, needed to select the location indicated by a photograph of their owner’s face rather than that of an unfamiliar person presented concurrently. Photographs were taken under optimal and suboptimal (non-frontally oriented and unevenly illuminated faces) conditions. Results revealed that dogs approached their owner significantly above chance level when presented with photos taken under optimal conditions. Further analysis revealed no difference in the probability of choosing the owner between the optimal and suboptimal conditions. Dogs were more likely to choose the owner if they directed a higher percentage of looking time towards the owner’s photograph than towards the stranger’s. In addition, the longer the total viewing time of both photos, the higher the probability that dogs chose the stranger. A main effect of dogs’ sex was also obtained, with male dogs more likely to choose the owner’s photograph. This study provides direct evidence that dogs are able to recognise their owner’s face from photographs. The results imply that motion and three-dimensional information are not necessary for recognition. The findings also support the ecological validity of such stimuli and strengthen previous investigations into dog cognition that used two-dimensional representations of faces.
The effects of attention may reflect differences at the individual level in attraction towards novel faces or in the recruitment of different face processing mechanisms.



Introduction

The recognition of individuals is a widespread, and well-studied, adaptive ability (Mateo 2004; Yorzinsky 2017). For some species this extends to the recognition of heterospecifics, which may be advantageous when specific individuals represent sources of threat (e.g. Staples et al. 2008), but also when they are part of the animal’s social context. A specific case of the latter is the recognition of people by animals that live in anthropogenic environments. We predominantly recognise other people using visual information about their faces (Barton and Corrow 2016), and the same ability has been reported in a few domestic species, including dogs (Mongillo et al. 2017), sheep (Knolle et al. 2017), horses (Proops et al. 2018) and homing pigeons (Dittrich et al. 2010). Surprisingly, the process seems less efficient in primates; for example, Martin-Malivel and Okada (2007) found that chimpanzees need extensive exposure to recognise human faces. Additional support for the effect of experience was provided by Sugita (2008), who showed that infant Japanese monkeys (which had not previously seen faces) needed exposure to human or monkey faces to discriminate members of that species, and afterwards found it difficult to discriminate members of the unexposed species. On the other hand, Sugita also found that the infant monkeys showed a sensitivity towards pictures of human and monkey faces before being exposed to faces, and such an innate capacity has also been suggested in avian species (Rosa-Salva et al. 2010) and human neonates (Buiatti et al. 2019). Limited human face recognition abilities have also been reported for rhesus macaques and other monkeys (Dufour et al. 2006 and references therein).

Overall, these studies suggest that two main factors interact in determining this ability: an adaptive predisposition (most likely resulting from the evolutionary pressures of domestication) and an ontogenetic role of exposure to human faces. However, how exactly these factors and their interaction contribute to human face recognition abilities in animals remains unclear. Dogs may be the ideal species to disentangle the roles of these factors: the species has shared the same environment as humans for arguably as long as 33,000 years, thus partly sharing similar selective pressures (Ovodov et al. 2011). In western countries, most dogs live with human families, forming enduring relationships with humans (Payne et al. 2015). However, there is large variability in the dog population in the degree of exposure to human beings, which represents an ideal condition for assessing the effect of experience on the ontogeny of face recognition. At the same time, phylogenetic aspects can be investigated by comparing dogs and wolves, an approach that has already characterised the study of canids’ ability to understand the communicative nature of some human gestures (Kaminski and Nitzschner 2013).

Dogs’ ability to recognise individual human faces was suggested by a recent experiment by our group, in which dogs located their owner and expressed a discrete behavioural response (i.e. approach) when presented with their owner’s and a stranger’s faces protruding through openings of a test apparatus (Mongillo et al. 2017). This study also showed that the ability is impaired if head contours are not visible, but is unaffected by moderate changes in perspective (e.g. a three-quarter view or a head tilted upwards or downwards, rather than full frontal) or by uneven illumination. However, a more thorough investigation of face recognition processes requires systematic manipulation of the stimuli used in assessment procedures, allowing fine control over relevant perceptual features such as movement, illumination, orientation, or the visibility of specific face parts. Such alterations are easily (and often exclusively) achieved using graphical representations rather than live stimuli. Indeed, several studies have used photographs to investigate different aspects of human face processing by dogs, including: the contribution of the perception of face parts, or of configural/holistic processing, to face discrimination (Huber et al. 2013; Pitteri et al. 2014); the characteristics of looking patterns when viewing faces (Guo et al. 2009; Somppi et al. 2012, 2014); the discrimination of human emotional expressions (Nagasawa et al. 2011; Müller et al. 2015; Albuquerque et al. 2016; Barber et al. 2016); the cross-modal identification of human features such as gender and familiarity (Adachi et al. 2007; Yong and Ruffman 2015); and the neurofunctional correlates of face perception (Cuaya et al. 2016).

Although these studies inform us about dogs’ ability to process photographs of human faces, their ecological validity would be increased by a demonstration that dogs recognise the real stimuli in such representations. Evidence of recognition of objects in photographs has come from experiments in a wide range of species (Bovet and Vauclair 2000). However, this ability may not extend to all species, nor to all classes of stimuli. For instance, in pigeons, responses learned to real objects can be successfully transferred to photographs of the same objects (Cabe 1976), implying recognition of those items in their graphical representations. However, pigeons proved unable to recognise human faces in photographs, despite using face information to recognise the same individuals when presented live (Dittrich et al. 2010). Therefore, species- and object-specific assessments are required to ascertain an animal’s ability to recognise real items in two-dimensional (2D) representations. Regarding dogs, only one study has provided some indication that dogs may be able to recognise human faces in photographs, by observing biases in the attention paid to the owner’s photograph when it was presented with an unfamiliar person’s voice (or vice versa), compared with coherent voice-face pairs (Adachi et al. 2007). However, some authors question whether quantitative differences in viewing times constitute sound evidence of recognition (Bovet and Vauclair 2000). Stronger evidence of recognition would be provided by a qualitative difference in behaviour in response to the presentation of photographs of the owner’s face, under a variety of viewing conditions.

Therefore, the objective of the current experiment was to determine whether dogs can recognise human faces in photographs as they do live faces. To this end, we employed a procedure similar to the one we previously used to demonstrate recognition of live human faces (Mongillo et al. 2017), which involved presenting a photograph of the owner’s face along with that of an unfamiliar person, in two separate locations of a test apparatus and under a variety of viewing conditions. Dogs’ ability to locate the owner in the different conditions, as indicated by a spontaneous approach response, was taken as evidence of individual recognition.

Methods

Subjects

Sixty-five owners and their pet dogs were initially recruited for the study through the database of volunteers of the University of Padua’s Laboratory of Applied Ethology. The only recruitment criteria were that dogs had lived with their current owner for at least the last 6 months and were in good health. Five dogs that did not show an approach response in the test (see Procedure below) were excluded, resulting in a final sample of 60 owners (21 men and 39 women) and their pet dogs (31 males and 29 females; mean age ± SD = 5.1 ± 2.8 years). The length of cohabitation between dogs and their current owner ranged from 0.5 to 9.2 years (mean ± SD = 4.3 ± 2.4 years). Details about the dogs’ age, breed, length of the relationship and owners’ sex are reported in Table S1.

Apparatus

The experiment took place in a white room (4.7 × 5.8 m), with the test apparatus erected in the centre (Fig. 1). The apparatus consisted of a white plastic panel (5 × 2 m) with six openings: three in a row at ground level and three in a row above them at head height (bottom edge 1.5 m from the ground). The upper windows measured 20 × 30 cm and the lower openings 50 × 70 cm; in both rows, the centre-to-centre distance between the middle opening and those to its left and right was 1 m. All openings could be covered by curtains, which a person standing behind the apparatus, at either its left or right side, could open and close through a system of ropes and pulleys.

Stimuli

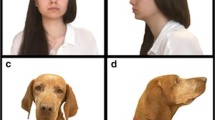

The stimuli consisted of photographs of the dog’s owner’s face and of a stranger’s face, taken under different conditions. Strangers were matched to each owner on features such as gender, hair colour, hair length, presence of a beard and whether they wore glasses. Photographs were taken with a camera placed at the head height of a medium-sized dog, pointing upwards towards the face of the person standing in front of it. The experimental conditions were defined according to the real-life conditions that allowed recognition in the study by Mongillo et al. (2017).

- In the pre-trial condition, a photo of the owner was taken with full flash, oriented frontally and looking slightly above the dog’s head, with a smiling expression (Fig. 2a).

- In the optimal condition, both the owner and a stranger were photographed with neutral expressions, oriented frontally and looking slightly above the dog’s head, and with even illumination (Fig. 2b).

- In the suboptimal condition, both the owner and a stranger (different from the one photographed in the optimal condition for any given dog) were photographed with neutral expressions, in one of four possible orientations (i.e. towards the left, right, upwards or downwards) and with light provided from one of four possible directions (from the left, right, above or below) (Fig. 2c, d). The combinations of illumination and orientation were balanced within the sample.

Procedure

Dogs were given 10 min to familiarise themselves with the testing room, during which time their owners received instructions about the procedure. Next, in plain sight of the dog, the owner and a person unfamiliar to the dog (the stranger) put on identical white disposable all-in-one suits and blue plastic shoe covers, so that dogs could not recognise their owner by their clothes. An experimenter then led the dog out of the room.

Each dog received a pre-trial (see below) followed by a single test trial; condition and side of presentation were counterbalanced across dogs. The pre-trial was meant to accustom dogs to the fact that their owner’s face could appear in the upper windows of the apparatus, and to show them that they could reach their owner through the lower opening. It also provided an indication of the dog’s motivation to be reunited with their owner. The test trial featured the optimal, suboptimal or control condition, as described below.
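Counterbalancing of this kind can be sketched as follows. This is a hypothetical illustration, not the procedure actually used in the study: it assumes that the three conditions were fully crossed with the two owner sides, giving six cells filled equally across 60 dogs, and that the order of testing was randomised. The function name and the seed are placeholders.

```python
import random
from itertools import product

# Hypothetical labels for the fully crossed design assumed here.
CONDITIONS = ("optimal", "suboptimal", "control")
SIDES = ("left", "right")


def balanced_schedule(n_dogs: int, seed: int = 0) -> list[tuple[str, str]]:
    """Return one (condition, owner_side) pair per dog, with every cell equally filled.

    Assumes n_dogs is a multiple of the number of cells (3 x 2 = 6).
    """
    cells = list(product(CONDITIONS, SIDES))
    if n_dogs % len(cells) != 0:
        raise ValueError(f"n_dogs must be a multiple of {len(cells)}")
    schedule = cells * (n_dogs // len(cells))
    # Shuffling changes only the testing order; the cell counts stay fixed.
    random.Random(seed).shuffle(schedule)
    return schedule


schedule = balanced_schedule(60)
```

With 60 dogs this yields 10 dogs per cell, hence 20 per condition. Under this scheme, a dog replaced after a null trial would simply inherit the replaced dog’s cell, which is one way to keep each condition at 20 dogs.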

Pre-trials

During pre-trials, all of the windows were initially closed and the owner waited silently behind the central openings. The stranger also waited silently behind the apparatus, off-centre, ready to operate the curtains covering the central openings. One experimenter led the dog into the room and positioned it centrally, facing the apparatus. When the dog was looking forwards, the experimenter said “Okay” and the stranger slid open the upper middle curtain, revealing a photograph of the owner’s smiling face. After 10 s, the stranger opened the lower middle opening, revealing the owner’s legs and feet. At this point, the experimenter said “Go!” and released the dog. When the dog reached the owner, the owner greeted it as they normally would for approximately 10 s, before the experimenter collected the dog and took it back out of the room. Dogs that did not approach their owner through the central opening were excluded from further testing.

Optimal and suboptimal condition

During these trials, all of the windows were initially closed. The owner waited silently behind one set of side openings and the stranger behind the other, with a barrier placed between them, perpendicular to the apparatus wall, so that dogs could neither see nor reach their owner if they passed through the apparatus on the stranger’s side. When the dog was led into the room, the experimenter positioned it centrally and said “Okay” when the dog was looking straight ahead. The stranger then opened the curtains of the left and right upper windows, revealing both photos at exactly the same time. After 10 s, the stranger simultaneously revealed the owner’s and the stranger’s real legs. At this point, the experimenter said “Go!” and released the dog, which was free to approach either the owner or the stranger through the lower openings. If a dog did not approach either person within 30 s of being released, the trial was considered null and the dog was replaced with another subject, until each condition had been presented to 20 dogs.

Control condition

This condition was included to ascertain that dogs were not using any other cues from their owner (e.g. olfactory or auditory) to determine their location. The procedure was identical to that of the optimal and suboptimal trials described above, except that the stranger did not open the curtains of the upper windows, so neither the owner’s nor the stranger’s photograph was revealed.

Data collection and analysis

Behavioural data were extracted from videos recorded through ceiling-mounted CCTV cameras, using the Observer XT software (version 12.5, Noldus, Wageningen, The Netherlands). The dog’s choice in each trial was coded as a binary variable: 1 for choosing the owner and 0 for choosing the stranger. Continuous sampling was used to record the dog’s head orientation (i.e. right, left or elsewhere) from the moment the curtains were opened until the dog started to move towards the apparatus. From these data, two variables were calculated: the total time the dog spent looking at either photograph before moving, and the percentage of that time in which the dog was oriented towards the owner’s photograph. Inter-observer reliability for dogs’ choices was assessed using data collected by a second observer on all videos, and the two observers agreed completely. Reliability for head orientation data was assessed using data collected by a second observer on a randomly selected subset of videos (N = 18, ~ 30% of the total); a Pearson’s correlation coefficient of 0.89 between the two observers’ data supported the reliability of data collection. The statistical analyses described hereafter were performed on the data collected by the first observer.
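The two attention variables and the inter-observer check can be sketched as follows. This is a hypothetical illustration, assuming that head orientation is exported as a list of (start, end, orientation) bouts in seconds with the labels "left", "right" and "elsewhere"; the actual Observer XT export format may differ, and the function names are placeholders.

```python
from math import sqrt

# Hypothetical export format: (start_s, end_s, orientation), coded continuously
# from curtain opening until the dog starts moving.
Bout = tuple[float, float, str]


def attention_measures(bouts: list[Bout], owner_side: str) -> tuple[float, float]:
    """Return (total s looking at either photo, % of that time on the owner's photo)."""
    if owner_side not in ("left", "right"):
        raise ValueError("owner_side must be 'left' or 'right'")
    on_photo = {"left": 0.0, "right": 0.0}
    for start, end, orientation in bouts:
        if end < start:
            raise ValueError("bout ends before it starts")
        if orientation in on_photo:  # "elsewhere" bouts are ignored
            on_photo[orientation] += end - start
    total = on_photo["left"] + on_photo["right"]
    pct_owner = 100.0 * on_photo[owner_side] / total if total > 0 else float("nan")
    return total, pct_owner


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two observers' measurements of the same videos."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


bouts = [(0.0, 1.5, "left"), (1.5, 2.0, "elsewhere"), (2.0, 3.0, "right")]
print(attention_measures(bouts, owner_side="left"))  # → (2.5, 60.0)
```

In practice, `pearson_r` would be applied to the paired values produced by the two observers for the same subset of videos.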

A two-tailed binomial test was used to test, for each condition, the null hypothesis (H0) that dogs’ choices did not differ from a chance level of 0.5, both when face photographs were visible (optimal and suboptimal conditions) and when they were not (control condition).
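For reference, an exact two-tailed binomial test against a chance level of 0.5 can be computed directly from binomial coefficients. The sketch below is a minimal Python illustration, not the SPSS routine used in the study, and the counts in the example are hypothetical rather than taken from Table 1. SciPy users could obtain the same P with `scipy.stats.binomtest`.

```python
from math import comb


def binomial_two_tailed_p(successes: int, n: int) -> float:
    """Exact two-tailed binomial test of H0: P(choose owner) = 0.5.

    Under H0 the distribution is symmetric, so the two-tailed P is twice the
    smaller tail probability, capped at 1.
    """
    if not 0 <= successes <= n:
        raise ValueError("successes must lie between 0 and n")
    total = 2 ** n  # number of equally likely choice sequences under H0
    lower = sum(comb(n, i) for i in range(0, successes + 1)) / total  # P(X <= k)
    upper = sum(comb(n, i) for i in range(successes, n + 1)) / total  # P(X >= k)
    return min(1.0, 2 * min(lower, upper))


# Hypothetical example: 15 of 20 dogs choose the owner.
print(round(binomial_two_tailed_p(15, 20), 4))  # → 0.0414
```

Doubling the smaller tail is exact here only because the chance level is 0.5; for other chance levels the two tails would have to be handled separately.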

A generalised linear model (GLM) was then used to assess the role of various factors in dogs’ probability of choosing the owner. Specifically, a binary logistic GLM was built with the dog’s choice as the binary dependent variable. The following terms were fitted as fixed factors: condition (optimal, suboptimal, control), the side on which the owner was presented (left, right), the owner’s sex (male, female) and the dog’s sex (male, female). The dog’s sex was included because an effect of sex had been found in the previous experiment using the owner’s real-life face (Mongillo et al. 2017). The interaction between the dog’s and the owner’s sex was also included, to explore whether a same-sex bias in recognition exists in dogs, as previously reported in humans (Herlitz and Lovèn 2012). The amount of time dogs looked at either photograph, and the percentage of that time dogs were oriented towards the owner’s photograph, were included as covariates to explore whether overall inspection time and the allocation of attention between the two stimuli affected dogs’ choices. Finally, the length of cohabitation between the dog and its current owner was included as a covariate, to assess whether the extent of exposure to the stimulus affected the probability of recognition. All first-order interactions were also included in the initial model. The final model was obtained through backwards stepwise elimination of non-significant interaction terms. Sequential Bonferroni-corrected comparisons were performed between the levels of factors for which a significant effect was found.
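Sequential Bonferroni correction is commonly implemented as Holm’s step-down procedure. The sketch below is a generic Python illustration under that assumption, not a reproduction of the SPSS output; the P values in the example are hypothetical.

```python
def holm_adjust(p_values: list[float]) -> list[float]:
    """Holm (sequential Bonferroni) adjusted P values, returned in input order.

    The smallest P is multiplied by m, the next by m - 1, and so on; a running
    maximum keeps the adjusted values monotone, and each is capped at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[i])
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted


# Hypothetical raw P values for three pairwise comparisons between conditions.
print(holm_adjust([0.01, 0.04, 0.03]))
```

An adjusted value below 0.05 then corresponds to a significant comparison at the study’s 0.05 level.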

All statistical analysis was conducted using SPSS (ver. 24, IBM, Armonk, New York, USA), with statistical significance level set at 0.05.

Results

All of the dogs initially recruited for the study readily approached the owner in the pre-trial and were presented with the choice trial. In the latter, five dogs did not approach either lower opening within 30 s of being released. Table 1 summarises how often the 60 dogs eventually included in the experiment approached their owner or the stranger in the optimal, suboptimal and control conditions (details of which condition each dog underwent and which person it chose are reported in Table S1).

Results of the binomial test rejected the null hypothesis that dogs’ choices were at chance level during the optimal condition (P = 0.043); conversely, dogs’ choices in the suboptimal condition (P = 0.263) and control condition (P = 1.000) were not different from chance.

Results of the GLM, summarised in Table 2, indicate the factors influencing dogs’ choices across conditions. The model revealed a main effect of condition, with a higher probability of choosing the owner in both the optimal condition (estimated mean ± SE: 0.86 ± 0.08; lower–upper 95% Confidence Intervals = 0.61–0.96) and the suboptimal condition (0.74 ± 0.11; 0.48–0.90) than in the control condition (0.31 ± 0.13; 0.12–0.60; vs. optimal: P < 0.004; vs. suboptimal: P = 0.041). The difference between the optimal and suboptimal conditions was non-significant (P = 0.382). The model also revealed an effect of dogs’ sex, with male dogs having a higher probability of choosing the owner (estimated mean ± SE: 0.81 ± 0.08; lower–upper 95% Confidence Intervals = 0.60–0.93) than female dogs (0.47 ± 0.12; 0.26–0.69).
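For a binary logistic model, confidence intervals for estimated probabilities are typically computed on the logit (linear predictor) scale and then back-transformed, which makes them asymmetric around the estimate. The Python sketch below approximates such an interval from a rounded mean and SE. It assumes a Wald interval on the logit scale and uses the delta-method approximation SE(logit p) ≈ SE(p) / (p(1 − p)); it is not the SPSS computation itself, and because the inputs are rounded, the bounds can only be expected to agree to about two decimals.

```python
from math import exp, log
from statistics import NormalDist


def logit(p: float) -> float:
    return log(p / (1 - p))


def expit(x: float) -> float:
    return 1 / (1 + exp(-x))


def back_transformed_ci(p: float, se_p: float, level: float = 0.95) -> tuple[float, float]:
    """Approximate CI for a probability, built on the logit scale and back-transformed.

    Assumes se_p is the SE on the probability scale; the delta method converts it
    to the logit scale as se_p / (p * (1 - p)).
    """
    if not 0 < p < 1:
        raise ValueError("p must lie strictly between 0 and 1")
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    se_logit = se_p / (p * (1 - p))
    centre = logit(p)
    return expit(centre - z * se_logit), expit(centre + z * se_logit)


print([round(v, 2) for v in back_transformed_ci(0.74, 0.11)])  # → [0.48, 0.9]
```

For example, an estimated mean of 0.74 with SE 0.11 gives roughly 0.48–0.90, and 0.31 with SE 0.13 gives roughly 0.12–0.60; in both cases the interval extends further towards 0.5 than towards the nearer boundary.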

A significant effect was found for the percentage of looking time that dogs directed towards the owner’s photo, with higher attention resulting in a higher probability of choosing the owner (B = 0.038, 95% Confidence Intervals = 0.007–0.069) (Fig. 3). In addition, the total duration (s) of attention directed towards either stimulus significantly affected dogs’ accuracy, with shorter total looking times associated with higher accuracy (B = 0.576, 95% Confidence Intervals = 0.143–1.008) (Fig. 4). No effect was found for either the length of the relationship or the owner’s sex.
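Logistic-model coefficients are on the log-odds scale, so exponentiating them gives odds ratios. The following is a minimal sketch, assuming that B = 0.038 (95% CI 0.007–0.069) is the slope of the percentage of looking time directed at the owner’s photo on the log-odds of choosing the owner, as the direction of the effect described above implies; the 10-percentage-point step is an arbitrary choice for illustration.

```python
from math import exp


def odds_ratio(b: float, delta: float = 1.0) -> float:
    """Multiplicative change in the odds of the modelled outcome when a
    covariate with log-odds slope b increases by delta units."""
    return exp(b * delta)


# Slope and 95% CI for the percentage of looking time on the owner's photo.
estimates = {"B": 0.038, "lower": 0.007, "upper": 0.069}
print({k: round(odds_ratio(v, delta=10), 2) for k, v in estimates.items()})
# → {'B': 1.46, 'lower': 1.07, 'upper': 1.99}
```

Under that assumption, each additional 10 percentage points of looking time on the owner’s photo multiplies the odds (not the probability) of choosing the owner by about 1.46, with a 95% interval of roughly 1.07 to 1.99.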

Discussion

In the current experiment, dogs were simultaneously presented with photographs of their owner’s and a stranger’s face, in different orientations and illuminations, and were required to use this information to locate their owner, who was concealed behind their image. The binomial tests showed that dogs approached the owner’s location significantly above the 0.5 chance level only in the optimal condition; however, the GLM showed that dogs chose the owner with a higher probability than in the control condition in both the optimal and suboptimal conditions, which did not differ significantly from each other. The results for the suboptimal condition are therefore somewhat conflicting, possibly because recognition is more difficult under suboptimal than under optimal conditions, as facial features are less clear. This pattern differs from the real-life version of the experiment by Mongillo et al. (2017), in which dogs approached their owner significantly above chance also in the suboptimal condition. However, it should be noted that the study using live faces included a larger number of dogs than the current experiment, because it used a repeated-measures design. In any case, the GLM is a more complete and informative analysis, because it also allows us to assess the influence of attentional data; for this reason, its results will be discussed preferentially. We will not further discuss the differences between the optimal and suboptimal conditions, which were covered extensively in the previous study (Mongillo et al. 2017). Overall, these results indicate that dogs are able to recognise their owner’s face from photographs. This corroborates previous evidence of dogs’ ability to recognise their owner’s face obtained by exposing dogs to real-life faces (Mongillo et al. 2017), and supports the ecological validity of face photographs in the study of face processing by dogs.

While previous research had already demonstrated dogs’ ability to recognise their owner’s face, photographs differ from real-life faces in important ways. For instance, in the experiment by Mongillo et al. (2017), faces protruded through the windows after the curtains were lifted, and therefore moved. Knight and Johnston (1997) found that movement enhances recognition compared with still faces, because it facilitates perception of the face’s three-dimensional (3D) structure. Although dogs’ face recognition abilities may benefit from motion cues, the results of the current experiment indicate that such information is not required for recognition. Moreover, the current experiment suggests that presentation of the actual 3D stimulus is not necessary for a dog to recognise a human face. The extent to which dogs’ depth perception is based on stereopsis (i.e. the disparity between the visual information about the same object or scene provided by the two eyes) has not been scientifically explored. However, dogs’ limited binocular overlap, as determined by their skull morphology (Miller and Murphy 1995), may imply that stereopsis is of little relevance to depth perception in this species.

Although dogs clearly recognised their owner’s face in photographs, our results do not imply that dogs realised the photographs were representations of their owner’s face. Indeed, we should consider the more parsimonious explanation that dogs perceived the photograph as if it were their owner’s actual face rather than a representation of it, the so-called ‘confusion mode’ (Fagot et al. 2000). Some evidence that dogs misinterpret 2D representations as real stimuli comes from a study by Fox (1971), who found that dogs made socially appropriate responses to a life-size painting of a dog, spending more time sniffing at specific body regions (e.g. the groin, tail or ear region). This mode of perception also explains responses to photographs in other species, such as picture-naive baboons (Parron et al. 2008) and tortoises (Wilkinson et al. 2013). Although the ability to perceive photographs as representations has been demonstrated in different taxa, including macaques (Dasser 1987), sheep (Kendrick et al. 1996) and pigeons (Aust and Huber 2006; Wilkinson et al. 2010), we cannot take for granted that dogs have the same cognitive ability. Moreover, even if dogs had such an ability, our data cannot tell whether they used it in our experiment, since the size, colours and location of the photographs were designed to emulate the real-life object. Future research could investigate this question, for instance by presenting dogs with photos of their owner’s face that differ in size from the real face or are presented in impossible situations.

Studies conducted in other species, namely sheep (Knolle et al. 2017), horses (Proops et al. 2018), pigeons (Dittrich et al. 2010) and primates (e.g. chimpanzees and rhesus macaques) (Dufour et al. 2006; Martin-Malivel and Okada 2007), highlighted the potential roles of domestication, of exposure to humans and, for recognition from photographs specifically, of experience with 2D representations. Our results cannot isolate the effect of any of these factors: dogs are the species with the longest history of domestication, our subjects had extensive exposure to a variety of humans, and they were likely also exposed to 2D representations of reality to some, hardly quantifiable, extent. However, the present demonstration that dogs are in principle able to recognise faces from 2D representations of human faces is a crucial step towards conducting thorough experiments on the mechanisms underlying face recognition in animals.

Analysis of dogs’ looking behaviour revealed that visual attention to the photographs influenced the dogs’ approach choice: the longer a dog looked at either photograph, and the smaller the proportion of that time it was oriented towards the owner’s photograph rather than the stranger’s, the lower the probability that the dog eventually approached the owner. On the one hand, these results may be explained by motivational factors: a strong neophilic or exploratory motivation would lead both to an increased probability of approaching the stranger, a novel stimulus, and to a higher motivation to visually explore the stimuli, particularly the novel one. Earlier research indicates that neophilia may be a relevant trait in dogs, and suggests that it helped them adapt to life with humans (Kaulfuß and Mills 2008). The behavioural manifestations of neophilia include both preferential approach (Kaulfuß and Mills 2008) and preferential orientation towards novel stimuli (Racca et al. 2010). The latter study specifically reports dogs’ preference for looking at photographs of novel rather than familiar human faces (Racca et al. 2010), supporting a link between visual attention and approach choice in our experiment. As only a minority of dogs eventually approached the stranger, such motivation may not be equally strong in all dogs, and/or may only emerge in dogs that were sufficiently at ease in the experimental situation despite being separated from the owner. On the other hand, our results may also be grounded in the efficiency of visual processing rather than in motivational factors. It is well known that humans can encode face information through highly efficient holistic processing (Taubert et al. 2011); indeed, individual differences in face recognition abilities have been linked to people’s ability to resort to this mechanism (Wang et al. 2012). There is evidence that dogs can also process human faces configurally (Pitteri et al. 2014). Therefore, the shorter time spent looking at the photographs may reflect dogs’ ability to use quick and effective configural processing, which would increase their probability of recognising the owner, and hence of paying more attention to her/his photograph and eventually approaching her/him. Differences among the dogs in our sample in the recruitment of such mechanisms may be attributed to different factors. For instance, the likelihood of resorting to configural processing depends on extensive experience with the specific class of stimuli, as shown extensively in humans (Richler and Gauthier 2014) and, in a previous study by our group, also in dogs (Pitteri et al. 2014). Thus, our results may reflect dogs’ experience in using human faces as a relevant source of information for recognition.

Regarding experience with the stimulus being recognised, this study included only dogs that had lived with their current owner for at least six months; the length of the relationship between dogs and owners ranged from 6 months to about 9 years. Although the study was not specifically designed to assess the effects of experience, this variable was included in our analysis to control for a possible effect of exposure to the stimulus on dogs’ recognition abilities. The lack of an effect suggests that recognition does not improve as a function of specific experience with the person’s face, at least once a certain amount of exposure has been attained.

Beyond experience, the observer’s sex can also contribute to differences in face processing and recognition. Sex differences in face recognition have been repeatedly reported in humans, with females generally outperforming males, especially when recognising same-sex faces (for a review: Herlitz and Lovèn 2012). Sex differences were also found in the current experiment, although opposite to those reported for adult humans: male dogs had a greater probability of recognising the owner than female dogs. We found no effect of the owner’s sex or of the interaction between the dog’s and the owner’s sex on face recognition, and thus no evidence of a same-sex bias. The male advantage observed in the present study replicates earlier findings by our group with live faces rather than photographs (Mongillo et al. 2017). In that study, we tentatively attributed the male advantage to different processing styles adopted by males and females. In this sense, a parallel exists with human infants, where male infants are believed to adopt a more holistic processing style than females (Rennels and Cummings 2013). A similar explanation fits well with the findings of the present experiment: males’ better performance in face recognition could be rooted in their greater likelihood of recruiting an efficient, holistic face-processing mechanism, which would in turn support the relationship between attention patterns and approach choice suggested above.

Conclusions

The results of the current study provide the first clear demonstration that dogs are able to spontaneously recognise their owner from photographs of the owner's face. This is an important finding, since it supports the ecological valence of such stimuli and increases the validity of previous investigations into dog cognition that have used pictorial representations of human faces. Our results also raise a number of questions that should be addressed in future studies. For instance, it remains unclear whether dogs recognised the photographs as representations of real faces or mistook the photographs for the real faces, and to what extent this ability relies on dogs' experience with humans in general, with the specific person, and with 2D stimuli. Moreover, it remains to be clarified whether dogs' recognition abilities extend to other classes of stimuli, such as conspecifics or non-living objects.

References

Adachi I, Kuwahata H, Fujita K (2007) Dogs recall their owner's face upon hearing the owner's voice. Anim Cogn 10:17–21. https://doi.org/10.1007/s10071-006-0025-8

Albuquerque N, Guo K, Wilkinson A, Savalli C, Otta E, Mills D (2016) Dogs recognize dog and human emotions. Biol Lett 12:20150883. https://doi.org/10.1098/rsbl.2015.0883

Aust U, Huber L (2006) Picture-object recognition in pigeons: evidence of representational insight in a visual categorization task using a complementary information procedure. J Exp Psychol Anim B 32:190. https://doi.org/10.1037/0097-7403.32.2.190

Barber AL, Randi D, Müller CA, Huber L (2016) The processing of human emotional faces by pet and lab dogs: evidence for lateralization and experience effects. PLoS ONE 11:e0152393. https://doi.org/10.1371/journal.pone.0152393

Barton JJS, Corrow SL (2016) Recognizing and identifying people: a neuropsychological review. Cortex 75:132–150. https://doi.org/10.1016/j.cortex.2015.11.023

Bovet D, Vauclair J (2000) Picture recognition in animals and humans. Behav Brain Res 109:143–165. https://doi.org/10.1016/S0166-4328(00)00146-7

Buiatti M, Di Giorgio E, Piazza M, Polloni C, Menna G, Taddei F, Baldo E, Vallortigara G (2019) Cortical route for facelike pattern processing in human newborns. Proc Natl Acad Sci USA 116:4625–4630. https://doi.org/10.1073/pnas.1812419116

Cabe PA (1976) Transfer of discrimination from solid objects to pictures by pigeons: a test of theoretical models of pictorial perception. Percept Psychophys 19:545–550. https://doi.org/10.3758/BF03211224

Cuaya LV, Hernández-Pérez R, Concha L (2016) Our faces in the dog's brain: functional imaging reveals temporal cortex activation during perception of human faces. PLoS ONE 11:e0149431. https://doi.org/10.1371/journal.pone.0149431

Dasser V (1987) Slides of group members as representations of the real animals (Macaca fascicularis). Ethology 76:65–73. https://doi.org/10.1111/j.1439-0310.1987.tb00672.x

Dittrich L, Adam R, Ünver E, Güntürkün O (2010) Pigeons identify individual humans but show no sign of recognizing them in photographs. Behav Process 83:82–89. https://doi.org/10.1016/j.beproc.2009.10.006

Dufour V, Pascalis O, Petit O (2006) Face processing limitation to own species in primates: a comparative study in brown capuchins, Tonkean macaques and humans. Behav Process 73:107–113. https://doi.org/10.1016/j.beproc.2006.04.006

Fagot J, Martin-Malivel J, Dépy D (2000) What is the evidence for an equivalence between objects and pictures in birds and nonhuman primates? In: Fagot J (ed) Picture perception in animals. Psychology Press, New York, pp 295–320

Fox MW (1971) Integrative development of brain and behavior in the dog. University of Chicago Press, Chicago

Guo K, Meints K, Hall C, Hall S, Mills D (2009) Left gaze bias in humans, rhesus monkeys and domestic dogs. Anim Cogn 12:409–418. https://doi.org/10.1007/s10071-008-0199-3

Herlitz A, Lovèn L (2012) Sex differences and the own-gender bias in face recognition: a meta-analytic review. Vis Cogn 21:1306–1336

Huber L, Racca A, Scaf B, Virányi Z, Range F (2013) Discrimination of familiar human faces in dogs (Canis familiaris). Learn Motiv 44:258–269. https://doi.org/10.1016/j.lmot.2013.04.005

Kaminski J, Nitzschner M (2013) Do dogs get the point? A review of dog–human communication ability. Learn Motiv 44:294–302. https://doi.org/10.1016/j.lmot.2013.05.001

Kaulfuß P, Mills DS (2008) Neophilia in domestic dogs (Canis familiaris) and its implication for studies of dog cognition. Anim Cogn 11:553–556. https://doi.org/10.1007/s10071-007-0128-x

Kendrick KM, Atkins K, Hinton MR, Heavens P, Keverne B (1996) Are faces special for sheep? Evidence from facial and object discrimination learning tests showing effects of inversion and social familiarity. Behav Process 38:19–35. https://doi.org/10.1016/0376-6357(96)00006-X

Knight B, Johnston A (1997) The role of movement in face recognition. Vis Cogn 4:265–273. https://doi.org/10.1080/713756764

Knolle F, Goncalves RP, Morton AJ (2017) Sheep recognize familiar and unfamiliar human faces from 2D images. R Soc Open Sci 4:171228. https://doi.org/10.1098/rsos.171228

Martin-Malivel J, Okada K (2007) Human and chimpanzee face recognition in chimpanzees (Pan troglodytes): role of exposure and impact on categorical perception. Behav Neurosci 121:1145. https://doi.org/10.1037/0735-7044.121.6.1145

Mateo JM (2004) Recognition systems and biological organization: the perception component of social recognition. Ann Zool Fenn 41:729–745. https://doi.org/10.2307/23736140

Miller PE, Murphy CJ (1995) Vision in dogs. J Am Vet Med Assoc 207:1623–1634

Mongillo P, Scandurra A, Kramer RS, Marinelli L (2017) Recognition of human faces by dogs (Canis familiaris) requires visibility of head contour. Anim Cogn 20:881–890. https://doi.org/10.1007/s10071-017-1108-4

Müller CA, Schmitt K, Barber AL, Huber L (2015) Dogs can discriminate emotional expressions of human faces. Curr Biol 25:601–605. https://doi.org/10.1016/j.cub.2014.12.055

Nagasawa M, Murai K, Mogi K, Kikusui T (2011) Dogs can discriminate human smiling faces from blank expressions. Anim Cogn 14:525–533. https://doi.org/10.1007/s10071-011-0386-5

Ovodov ND, Crockford SJ, Kuzmin YV, Higham TF, Hodgins GW, van der Plicht J (2011) A 33,000-year-old incipient dog from the Altai Mountains of Siberia: evidence of the earliest domestication disrupted by the Last Glacial Maximum. PLoS ONE 6:e22821. https://doi.org/10.1371/journal.pone.0022821

Parron C, Call J, Fagot J (2008) Behavioural responses to photographs by pictorially naive baboons (Papio anubis), gorillas (Gorilla gorilla) and chimpanzees (Pan troglodytes). Behav Process 78:351–357. https://doi.org/10.1016/j.beproc.2008.01.019

Payne E, Bennett PC, McGreevy PD (2015) Current perspectives on attachment and bonding in the dog–human dyad. Psychol Res Behav Manag 8:71. https://doi.org/10.2147/PRBM.S74972

Pitteri E, Mongillo P, Carnier P, Marinelli L, Huber L (2014) Part-based and configural processing of owner's face in dogs. PLoS ONE 9:e108176. https://doi.org/10.1371/journal.pone.0108176

Proops L, Grounds K, Smith AV, McComb K (2018) Animals remember previous facial expressions that specific humans have exhibited. Curr Biol 28:1428–1432. https://doi.org/10.1016/j.cub.2018.03.035

Racca A, Amadei E, Ligout S, Guo K, Meints K, Mills D (2010) Discrimination of human and dog faces and inversion responses in domestic dogs (Canis familiaris). Anim Cogn 13:525–533. https://doi.org/10.1007/s10071-009-0303-3

Rennels JL, Cummings AJ (2013) Sex differences in facial scanning: similarities and dissimilarities between infants and adults. Int J Behav Dev 37:111–117. https://doi.org/10.1177/0165025412472411

Richler JJ, Gauthier I (2014) A meta-analysis and review of holistic face processing. Psychol Bull 140:1281. https://doi.org/10.1037/a0037004

Rosa-Salva O, Regolin L, Vallortigara G (2010) Faces are special for newly hatched chicks: evidence for inborn domain-specific mechanisms underlying spontaneous preferences for face-like stimuli. Dev Sci 13:565–577. https://doi.org/10.1111/j.1467-7687.2009.00914.x

Somppi S, Törnqvist H, Hänninen L, Krause C, Vainio O (2012) Dogs do look at images: eye tracking in canine cognition research. Anim Cogn 15:163–174. https://doi.org/10.1007/s10071-011-0442-1

Somppi S, Törnqvist H, Hänninen L, Krause CM, Vainio O (2014) How dogs scan familiar and inverted faces: an eye movement study. Anim Cogn 17:793–803. https://doi.org/10.1007/s10071-013-0713-0

Staples LG, Hunt GE, van Nieuwenhuijzen PS, McGregor IS (2008) Rats discriminate individual cats by their odor: possible involvement of the accessory olfactory system. Neurosci Biobehav Rev 32:1209–1217. https://doi.org/10.1016/j.neubiorev.2008.05.011

Sugita Y (2008) Face perception in monkeys reared with no exposure to faces. Proc Natl Acad Sci USA 105:394–398. https://doi.org/10.1073/pnas.0706079105

Taubert J, Apthorp D, Aagten-Murphy D, Alais D (2011) The role of holistic processing in face perception: evidence from the face inversion effect. Vision Res 51:1273–1278. https://doi.org/10.1016/j.visres.2011.04.002

Wang R, Li J, Fang H, Tian M, Liu J (2012) Individual differences in holistic processing predict face recognition ability. Psychol Sci 23:169–177. https://doi.org/10.1177/0956797611420575

Wilkinson A, Specht HL, Huber L (2010) Pigeons can discriminate group mates from strangers using the concept of familiarity. Anim Behav 80:109–115. https://doi.org/10.1016/j.anbehav.2010.04.006

Wilkinson A, Mueller-Paul J, Huber L (2013) Picture-object recognition in the tortoise Chelonoidis carbonaria. Anim Cogn 16:99–107. https://doi.org/10.1007/s10071-012-0555-1

Yong MH, Ruffman T (2015) Domestic dogs match human male voices to faces, but not for females. Behaviour 152:1585–1600. https://doi.org/10.1163/1568539X-00003294

Yorzinski JL (2017) The cognitive basis of individual recognition. Curr Opin Behav Sci 16:53–57. https://doi.org/10.1016/j.cobeha.2017.03.009

Acknowledgements

We are very grateful to Carlo Poltronieri and Sabina Callegari for their technical assistance, and to all the dogs’ owners for volunteering their time. CJE is supported by a post-doc grant from the University of Padua (Grant Nr. BIRD178748/17) and ML is supported by a PhD grant from Fondazione Cariparo. This study was funded by the University of Padua (Grant Nr. DOR1927411/19, granted to PM).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Eatherington, C.J., Mongillo, P., Lõoke, M. et al. Dogs (Canis familiaris) recognise our faces in photographs: implications for existing and future research. Anim Cogn 23, 711–719 (2020). https://doi.org/10.1007/s10071-020-01382-3

DOI: https://doi.org/10.1007/s10071-020-01382-3