Abstract

Often captured images are not focussed everywhere. Many applications of pattern recognition and computer vision require all parts of the image to be well-focussed. The all-in-focus image obtained, through the improved image fusion scheme, is useful for downstream tasks of image processing such as image enhancement, image segmentation, and edge detection. Mostly, fusion techniques have used feature-level information extracted from spatial or transform domain. In contrast, we have proposed a random forest (RF)-based novel scheme that has incorporated feature and decision levels information. In the proposed scheme, useful features are extracted from both spatial and transform domains. These features are used to train randomly generated trees of RF algorithm. The predicted information of trees is aggregated to construct more accurate decision map for fusion. Our proposed scheme has yielded better-fused image than the fused image produced by principal component analysis and Wavelet transform-based previous approaches that use simple feature-level information. Moreover, our approach has generated better-fused images than Support Vector Machine and Probabilistic Neural Network-based individual Machine Learning approaches. The performance of proposed scheme is evaluated using various qualitative and quantitative measures. The proposed scheme has reported 98.83, 97.29, 98.97, 97.78, and 98.14 % accuracy for standard images of Elaine, Barbara, Boat, Lena, and Cameraman, respectively. Further, this scheme has yielded 97.94, 98.84, 97.55, and 98.09 % accuracy for the real blurred images of Calendar, Leaf, Tree, and Lab, respectively.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Image fusion is a process of integrating useful information from two or more images to get an image which contains more information [1, 2]. Often captured images are not well-focussed everywhere due to limited field depth of optical lens of camera [3, 4]. When image is captured, only objects at a particular distance are focussed while other objects at larger distance than specified by the lens formula are not well-focussed. Thus, the captured image does not provide clear information, which is necessary for human visual perception as well as in the applications of pattern recognition and machine vision. To solve this problem image fusion approaches are developed. These approaches combine complementary information contained in the blurred images to provide more informative and clear images.

Previously, researchers have proposed several image fusion techniques in spatial and transform domains. In spatial domain, image fusion has been performed at pixel level, feature level, and decision level. The simplest technique is the pixel-level fusion that constructs the fused image by calculating pixel-by-pixel average of the blocks of input images. However, feature-level fusion techniques use the features information of the source images and construct the fused image using some fusion rule. Features are extracted from principal component analysis (PCA) and Wavelet transform domains [5]. PCA-based fusion technique transformation performs conversion of the correlated variable to uncorrelated variables named as the principal components. It computes the compact dataset representation. The first principal component represents the most important data sample as it accounts for the maximum variance in the dataset and each succeeding component represents as much of the rest of the variance as possible.

Discrete Wavelet transform and its variant-based image fusion techniques are also proposed [6–9]. Multi-resolution transform-based fusion techniques are also developed using Laplacian pyramid [7], morphological pyramid [8], bi-orthogonal wavelet transform [6]. The performance of multi-resolution transform-based techniques is degraded due to down sampling. To minimize this effect dual-tree frame Wavelet-based technique has been developed [10]. The multi-band vector Wavelet-based fusion technique is proposed that computes the coefficients of high and low frequency bands. However, this technique produces some blocking effect in the fused image. Therefore, anisotropic diffusion arithmetic is also suggested as post-processing to obtain improved image [11]. The multi-band Wavelet is more generic compared to the two-band Wavelet transformation. Its performance is found superior than two-band Wavelet, due to its compact support, orthogonal aspects, and decomposition properties [11]. Recently, Goodman et al. [12] have developed vector Wavelet image fusion technique. This Vector Wavelet-based approach provides both synthesis and analysis operators. Therefore, this decomposition offers more design flexibility. It provides more benefits over scalar Wavelets with respect to short symmetry, support, and orthogonally [13].

At decision level, image fusion techniques have been developed using individual Machine Learning (ML) approaches like Support Vector Machine (SVM) and Artificial Neural Network (ANN). SVM-based image fusion technique has been developed using dual tree complex Wavelet transform [10]. Zhao et al. have suggested SVMs for multi-source image fusion in [14]. SVM-based models perform binary classification by finding the decision surface that has maximum margin between the two closest points. SVM constructs an optimal hyper-plane that minimizes the error for unseen test data samples. ANN based fusion technique is developed using feature-level fusion approach in [15, 16]. Probabilistic Neural Network (PNN) [17] and Radial Basis Function Neural Network (RBFN) [18], two well-known variants of ANN, are proposed for multi-focus image fusion. PNN approach develops the decision of focussed/de-focussed image blocks using Spatial Frequency (SF), visibility, and edge features [17]. PNN approach based on Bayes theory utilizes the computational power efficiently. It has more flexible characteristic than back-propagation neural networks. PNN organizes its functional structure into four layers; input, pattern, summation, and decision layer. However, the fusion decision of ML approaches is limited for diverse type of images.

To address the issue, ensemble models are developed for better decision making. In ensemble scheme, individual models and their diversities can yield better decision. The deficiency in one model can be replaced with the advantages of others. The ensemble decision is considered more accurate and generalized than single machine learning approach [19]. In this study, we proposed random forest (RF)-based ensemble approach for multi-focus image fusion. This approach has been used effectively in different classification and prediction problems [20].

The advantage of RF approach is the generation of diverse types of random trees with reduced variance, i.e. weak learners. Such type of weak learners is primarily required for ensemble [21]. RF-based ensemble approach could compensate for the deficiency of one tree with the advantages of other trees. Therefore, RF algorithm can effectively utilize the diversity of random trees as compared to individual approaches. RF algorithm randomly generates many tree classifiers and aggregates their predictions. The generated trees are trained on bootstrap samples of the training dataset and employs random sub-sampling of features. This strategy offers resistance to over training and over-fitting on input data [22]. RF approach has performed well in high-dimensional feature space, especially, when dataset is small and its structure is complex.

The combined decision obtained through RF is used for the construction of decision map for image fusion. That is why; the proposed RF-based scheme has performed better on diverse types of blurred images. In the proposed scheme, useful features information is extracted from both spatial and transforms domains. These features are then used to train randomly generated trees by RF algorithm. The predicted labels of random trees are aggregated to construct the decision map for better image fusion. The proposed scheme is evaluated in terms of accuracy, specificity, sensitivity, F-score, and Mathew Correlation Coefficient (MCC). However, the quality of fused images is assessed using Peak Signal to Noise Ratio (PSNR), Mutual Information (MI), Root Mean Square (RMSE), correlation, Structure Similarity Index Measure (SSIM), Spatial Frequency (SF), Mean Gradient (MG), and standard deviation (STD). Performance of the proposed scheme is compared to previous image fusion approaches for synthesized and real blurred images. It is found that our proposed scheme has better quality-fused images. The main novelty of our scheme is the effective development of RF-based ensemble scheme for multi-focus image problems. Secondly, this scheme has incorporated both the feature and decision levels information to fuse multi-focus images.

The remaining part of this paper is organized as: In Sect. 2, we have presented various stages of the proposed scheme, in detail. Experimental setup is explained in Sect. 3. Results are discussed in Sect. 4. Finally, in Sect. 5 conclusions are given.

2 Proposed scheme

The block diagram of RF-based proposed scheme is given in Fig. 1. The input dataset is formed by decomposing each input image into blocks of size M × N (Implementation detail about the blocks is given in Sect. 3). The nine useful features are extracted from each block of the blurred/un-blurred images. These useful features provide blur information contained in these images. The detail information about these features is given in Sect. 2.3. RF algorithm efficiently utilized the input dataset to generate decision/classification trees. The predicted labels of randomly generated trees of random forest are combined using majority voting to construct the decision map for the fused image. Finally, various quality measures are employed to report the performance of fused images. Now, we will describe different stages of the proposed scheme.

2.1 Dataset formulation

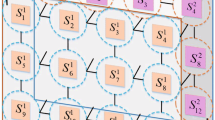

Input dataset is formed by decomposing two differently blurred images I1 and I2 into blocks of size M × N pixels. For each image block, \(B_{{I_{1} }}^{(i)} \in I_{1} \quad {\text{and}}\quad B_{{I_{2} }}^{(i)} \in I_{2}\), nine-dimensional feature vector is constructed, i.e. \(V_{{I_{1} }}^{(i)} = \left( {v_{11} ,v_{12} ,v_{13} , \ldots ,v_{19} } \right)\) and \(V_{{I_{2} }}^{(i)} = \left( {v_{21} ,v_{22} ,v_{23} , \ldots ,v_{29} } \right)\). Next, we assigned target labels \(t^{(i)}\) for ith feature vector of focussed/unfocussed blocks. For this purpose, a binary array is created as:

These values in the array, \(A^{(i)} = \left\{ {0,1} \right\}_{j = 1}^{j = 9}\), belong to one of two classes of focussed/unfocussed blocks, i.e. \(A^{(i)} \in \left\{ {c_{1} ,c_{2} } \right\}\). Target labels \(t^{(i)}\) are assigned using the majority class votes, i.e.

where, \(\Delta \left( {d_{k}^{(i)} ,c^{j} } \right) = \left\{ {\begin{array}{*{20}l} 1 \hfill & {{\text{if}}\quad d_{k}^{(i)} = c_{j} } \hfill \\ 0 \hfill & {\text{otherwise}} \hfill \\ \end{array} } \right.\). The image block is categorized to the class that receives the maximum votes.

2.2 Working of RF algorithm

Figure 2 demonstrates the working diagram of RF algorithm using bootstrap subspace data sampling technique. During forest growing process, bootstrap technique randomly selects samples without replacement from the input dataset of n b blocks, \(S = \left\{ {\left( {V^{(i)} ,\,t^{(i)} } \right)} \right\}_{i = 1}^{{i = n_{b} }}\). We investigated the performance of RF algorithm by selecting different number of random trees. However, we found the optimal performance for \(n_{t} = 500\) random trees. Each tree is built by randomly selecting 2/3rds of the input dataset with replacement called training dataset \(S_{tr} = \left\{ {\left( {V^{(i)} ,\,t^{(i)} } \right)} \right\}_{i = 1}^{{i = n_{r} }}\). The remaining 1/3rds testing dataset of ns blocks, \(S_{ts} = \left\{ {\left( {V^{(i)} ,\,t^{(i)} } \right)} \right\}_{i = 1}^{{i = n_{s} }}\), is kept for the evaluation.

To determine the best node for split, we selected a random subset (subspace) of m variables from training data. In general, the value of m variables is determined from D-dimensional feature vector, i.e. \(m\,\, \approx \sqrt D\). However, in the proposed scheme, for nine-dimensional feature vector, the value of \(m\,\, \approx 3\). These selected variables subset is used to compute the best node split according to Gini criteria [22]. This fitness criterion estimates the importance of variables. This measures the degree of association between variables and classification results. The lowest value of this measure is taken as the best split of each node. Gini measure is computed using the following equation as:

where, p represents the number of children at node d. Here, \(n_{s}\) shows the number of input feature vectors used for training. The Gini impurity \(I(d_{{v_{i} }} )\) gives the distribution of class label in the node. For a feature variable \(v_{i} \in V\) with p values at node d, \(v_{i} = \left\{ {u_{1} ,u_{2} , \ldots ,u_{p} } \right\}\) the value of \(I(d_{{u_{i} }} )\) is computed as:

where, \(n_{{c_{i} }}\) is the number of samples with value u i belong to class c i and \(a_{i}\) indicates the number of samples with value u i at that node d.

After the construction of classification trees on training dataset, out-of-bag (OOB) testing data \(S_{ts} = \left\{ {\left( {V^{(i)} ,\,t^{(i)} } \right)} \right\}_{i = 1}^{{i = n_{s} }}\) is used to evaluate the predictions performance. To build diverse type of weak classifiers, for good generalization, we have constructed 500 random classification trees with average accuracy is kept in the range of 60–70 %. In the next stage, the whole input dataset of n b blocks, \(S = \left\{ {\left( {V^{(i)} ,\,t^{(i)} } \right)} \right\}_{i = 1}^{{i = n_{b} }}\), is provided to 500 classification trees for the construction of depth map.

Figure 3 demonstrates an exemplary ensemble of random tress with associated features and predicted labels. In this figure, for each vector of input dataset, \(S = \left\{ {\left( {V^{(i)} ,\,t^{(i)} } \right)} \right\}_{i = 1}^{{i = n_{b} }}\), the predicted labels are extracted using 500 RF tress as: \(\hat{t}_{1}^{i} = f_{1} \left( {V_{{}}^{i} } \right)\), \(\hat{t}_{2}^{i} = f_{2} \left( {V_{{}}^{i} } \right) ,\ldots\) \(\hat{t}_{500}^{i} = f_{500} \left( {V_{{}}^{i} } \right)\), where \(\hat{t}_{j}^{i} = f_{j} \left( {V_{{}}^{i} } \right)\) indicates the predicted value of \(f_{j}\) tree for ith feature vector\(V_{{}}^{i}\). The predicted labels are aggregated using well-known majority voting (MV) technique i.e., \(\hat{t}^{i} = \text{MV} \left( {\hat{t}_{1}^{i} ,\hat{t}_{2}^{i} , \ldots ,\hat{t}_{500}^{i} } \right)\). The predicted values of \(\hat{t}^{i}\) are used to construct the decision map.

2.3 Image fusion using decision map

After construction of decision map, each block of fused image \(B_{F}^{(i)}\) is selected from input images I1 and I2 using the corresponding value of the decision map as:

White and black regions in the binary images of decision maps, in Figs. 4 and 5, show the complimentary information to be picked from two source images. In Fig. 4c, the black regions indicate the focussed parts of the image in Fig. 4a and the white regions of the image in the Fig. 4b. Similar is the case for the Calendar image in Fig. 5. Both these figures demonstrate that our scheme has accurately selected the focussed image blocks from the input images.

2.4 Feature extraction stage

To form diverse type of training data for RF model, we have selected the most informative/discriminative features both from spatial and frequency domain. These futures are used effectively in the literature to measure the image blur [18, 23–27]. The dataset contains nine features in which five features are taken from the spatial domain and remaining four features are extracted from the frequency domain. Spatial domain features consist of visibility, spatial frequency, energy of gradient, variance, and edges. The remaining four frequency domain features include one discrete cosine transform (DCT) high frequency and three detail bands of DWT. The details of these features are given as:

Visibility (VIS): This feature is derived from human visual system [23]. It measures the difference among intensities of the block pixels and mean intensity value of the image block and shows the clarity of image block. It is given by:

where \(\alpha\) is a constant and \(\mu_{\beta }\) is mean intensity value of image block.

Spatial Frequency (SF): This is a measure of activity level in the image [24]. It computes the difference of frequency among the rows and columns of image blocks. The larger value of SF shows greater focus in the image block. This frequency is given as:

Here, RF and CF are the row and column frequencies, respectively. The RF and CF are computed as:

Edges: Edge feature indicates focus of an image. The focussed image blocks consist of more edges than the corresponding de-focussed image blocks. In our current work we have used Canny edge detection algorithm, as proposed in [25], to detect the edges in the image blocks.

Energy of Gradient (EOG): EOG is used to measure focus of an image. The smaller value of EOG shows the block is de-focussed and focussed image block has the greater value of EOG [26]. It is calculated as:

where, \(B_{i} = B\left( {i + 1,j} \right) - B\left( {i,j} \right)\) and \(B_{j} = B\left( {i,j + 1} \right) - B\left( {i,j} \right)\)

Variance: Variance measures focus of the image by computing the gray level contrast between the pixels of image block and mean value of block. The value of variance is given as:

where, \(\mu_{\beta }\) is mean value of the image block.

Discrete Wavelet Transform (DWT): DWT coefficients show the focus of image. The activity level of this transform can be measured at coefficients level by treating each coefficient separately [27]. Another approach computes window-based activity (WBA) by averaging the coefficients over blocks of the image [18, 27]. DWT decomposition of image blocks gives three detail sub-bands and one approximation sub-band. The approximation sub-band does not contain much of the edge information. Therefore, it does not contain useful information about the focus of the image. Hence, the approximation band is not included in the feature set for RF training. Only three detail sub-bands HL, LH, and HH are used in the construction of feature space. The activity level is computed by averaging the Wavelet coefficients over image blocks for each detail sub-band.

High Frequency Energy: Discrete Cosine Transform (DCT) coefficients highlight the variation in frequency information of the image. The high frequency coefficients of this transform give the focus measure of the image blocks, which can be obtained by removing the direct components. The input image \(B(u,v)\) in DCT domain is defined as:

here, c(0)=\(1/\sqrt 2\), c(u) = 1, and u, v = 0,1,…L-1.

DCT high frequency energy (E) is computed as:

2.5 Performance measures

To demonstrate the comparative performance of multi-resolution transforms, individual learners, and combine decision model, we used several performance measures. They are explained as follows:

Accuracy (Acc): The prediction Acc of a classifier is calculated as:

where, TP (True Positive) is number of correctively predicted labels of positive class and FP is number of incorrectly predicted labels of positive class. TN is number of correctively predicted labels of negative class and FN is number of incorrectly predicted labels of negative class.

Sensitivity (Sn) and Specificity (Sp): These measures show the capability of classifier to predict the positive (negative) class. These measures are calculated as:

Mathew Correlation Coefficients (MCC): This measure is discrete form of Pearson’s correlation coefficient. It accounts for both overprediction and underprediction. Its value varies between -1 and 1. If MCC value is 1, it indicates the classifier makes no mistakes in prediction and if -1 then it shows the classifier prediction is wrong. However 0 MCC value means random prediction. MCC is calculated as:

F-Score: F-score evaluates the statistical tests. It uses Precision and Recall to compute the prediction accuracy and can be considered as weighted average of precision and recall. Precision is number of correct prediction divided by total number of returned predictions and recall is correct predictions divided by number of predictions. Its value is computed as:

where, \({\text{Precision}} = \frac{\text{TP}}{{{\text{TP}} + {\text{FP}}}}\) and \({\text{Recall}} = \frac{\text{TP}}{{{\text{TP}} + {\text{FN}}}}\)

Standard Deviation (STD): STD measures the contrast in the fused image. An image with high contrast would have a greater STD value. Its value is computed as:

where, L is the number of gray levels and \(i^{{\prime }} = \sum\limits_{i = 1}^{L} {ih_{F} }\) and \(h_{F}\) is the normalized histogram of fused image.

Mean Gradient (MG): MG is the mean value of the gradient of the final fused image. Gradient usually represents the image details and clear image regions. Then, a larger value of mean gradient indicates that the image contains more image details and clear image regions. The mean gradient is calculated as:

Spatial Frequency (SF): It is an important measure of the image details. The higher spatial frequency value indicates the more image details and further details are given in previous Sect. 2.3.

Root Mean Square Error (RMSE): The RMSE measure calculates the deviation of fused image from the original image. The lesser deviation shows the more similarity between the fused image and the original image. The RMSE value is calculated as:

where, M, N represents the size of the original image O and the fused image F.

Mutual Information (MI): MI calculates the similarity between the fused image and the original Image. The larger MI value indicates greater similarity between the fused and original images. MI is computes as:

where, \(h_{O} , { }h_{F} ,{\text{and}}\,h_{O,F}\) are original, fused, and joint, image histograms, respectively. L is number of intensity levels in the original and fused images.

Peak Signal to Noise Ratio (PSNR): PSNR value is used to measure the resemblance between the original and fused image. PSNR value is computed as:

Correlation (Corr.): The Corr value indicates the similarity between the original image and fused image. Its values vary between 0 and 1. The larger correlation value indicates the greater similarity between the original and fused images. The value of Corr is calculated as:

Structural Similarity Index Measure (SSIM): SSIM measure computes the similarity between two images. The SSIM is calculated by combining a comparison of luminance, contrast, and structure. It is applied locally in an 8 × 8 square window. The window is moved pixel-by-pixel over the whole image. At each step, the local statistics and the SSIM index are calculated within the window. SSIM values range between [0 1]. Values close to 1 show the highest similarity between original and fused Images [28]. SSIM is calculated as:

\(\mu_{0} \,{\text{and}}\,\mu_{f}\) are mean intensities of original and fused images, respectively. \(\sigma_{O} \,{\text{and}}\,\sigma_{F}\) are the standard deviations of original and fused images, respectively. \(\sigma_{OF}\) is combine standard deviation of fused and original image. Here, C 1 = 6.5 and C 2 = 58.5 are constants.

3 Experimental setup

Several experiments are conducted using a pair of multi-focus images to analyze the performance of the proposed scheme. Performance is evaluated using both synthesized and real blurred images. To obtain the synthesized blurred images, we blurred the left half of original image to form image I1, and right half of original image to form image, I2 with the Gaussian blur of radius 5. Further, we blurred blocks of different sizes (80 × 80 and 64 × 64) of the original image to form the image I1 and I2, with Gaussian blur of radius 7. Real blurred images are obtained from www.imagefusion.org. Since multi-focus images, have non-uniform magnitude of focussed pixels, as a result, the block-based fusion techniques may affect the quality of the fused image at the block boundary. To minimize this effect, we used suitable block size of 3 × 3 for real blurred images. Further, block size of 8 × 8 pixels is used for the synthetic blurred images. If we further reduce block size, the computational time increases without appreciable increase in image quality. To develop SVM-based fusion model, we computed the optimal value of model parameters (c = 70) that controls the model complexity and \(\gamma\) = 0.05 of RBF function. PNN-based fusion model develops using the optimal value of spread parameter (\(\sigma\) = 0.9). PCA-based image fusion technique is employed by arranging input images into two column vectors as proposed in [5]. The values of empirical means, eigenvalues, and eigenvectors are calculated. The eigenvectors that have the larger eigen values are obtained. The normalized components P 1 and P 2 (i.e., P 1 + P 2 = 1) are calculated to obtain the fused image as:

Fusion techniques are developed in Wavelet domain by decomposing the input images into detail and approximation bands as proposed in [5]. These details and approximation coefficients are fused according to the fusion rule. The fused image is obtained by applying the inverse transform. Image fusion in Wavelet domain is performed using stationary Wavelet transform with “symlet” function of 2nd order. Input images are decomposed to first level.

4 Results and discussion

In this section, we have compared the qualitative and quantitative performance of the proposed fusion scheme with other approaches based on Wavelet [27], PCA [27], SVM [29], and PNN [17].

The qualitative performance is demonstrated in the Figs. 6, 7, 8, 9 for the images Barbara, Boat, Elaine, and Couple, respectively. These figures show that, for synthesized blurred images, the fused images using the proposed scheme have produced better quality and they have greater similarity with the original images. Further, Figs. 10, 11, 12 highlight the visual quality of the real blurred images fused using our RF-based scheme. It is observed that the fused image obtained through our scheme have better quality than the Wavelet-, PNN-, and PCA- based fusion techniques. From Fig. 10 it is observed that the fused Leaf’s image obtained through PCA approach keep the blurring effects. On the other hand, our scheme has produced better quality fused image than the images fused using PNN- and Wavelet-based approaches. We observed in Fig. 12 the blurring effects in fused images obtained through the Wavelet and PCA approach. However, it is noted from this figure that the proposed scheme has better quality-fused images than previous Wavelet-, PCA-, and SVM-based approaches. It is clear from Table 1 that our scheme has exhibited sufficient higher Acc values of 98.97, 97.78, 98.14, and 96.48 for Elaine, Barbara, Boat, Boat, Lena, Cameraman, and Couple images, respectively. However, for these images, PNN and SVM approaches give relatively lower values of Acc measure.

Our scheme has demonstrated higher Sn values of 98.88, 97.41, 98.88, 97.95, 98.44, and 98.25 for these images. On the other hand, for these images, PNN and SVM approaches give relatively lower values of Sn measure.

Similarly, our scheme has sufficient improved values than SVM and PNN approaches in terms of Sp, F-score, and MCC measures. It is pertinent to mention that proposed scheme observe little bit lower Sp and MCC values of 97.85 and 0.698 for Cameraman and Barbara images, respectively. However, values of Acc, Sn, and F-Score calculated by proposed scheme are much higher than that of PNN for the same images.

It can also be observed from Table 1 that for Elaine, Boat, and Lena images the performance of proposed scheme is much better than PNN and SVM. Overall result of our proposed scheme is better.

Table 2 mimics the performance comparison of proposed scheme with previous PCA-, Wavelet-, PNN-, and SVM-based approaches for different blurred images. It is depicted from Table 2 that our proposed scheme has sufficient higher PSNR values of 47.87, 38.13, 46.57, 41.39, 42.74, and 41.62 for Elaine, Barbara, Boat, Cameraman, Lena, and Couple images, respectively. However, for these images, PCA-, Wavelet-, PNN-, and SVM-based approaches give relatively lower values of PSNR measure. Our scheme has yielded quite lower RMSE values for these images, as well. On the other hand, for these images, PCA-, Wavelet-, PNN-, and SVM-based approaches have given relatively higher values of RMSE. Similarly, in terms of MI, Corr, and SSIM measures, our scheme has demonstrated significant improvement than that of PCA-, Wavelet-, PNN-, and SVM-based approaches. The higher values of MI and Corr highlight that our scheme has transferred more effectively useful information from multi-focus images to fused images. The higher value of SSIM indicates that images fused through the proposed scheme preserve structure similarity more effectively. Figure 14 visually shows that our proposed scheme has higher MI value for various images.

Table 3 shows the comparison of proposed scheme with PCA, Wavelet, PNN, and SVM approaches for real blurred images. It is observed from the table that, our proposed scheme has sufficient higher SF values of 27.01, 20.51, 23.91 and 32.96, for Leafs, Lab, Calendar, and Tree, images, respectively. However, for these images, PCA, Wavelet, PNN, and SVM approaches give relatively lower values of SF measure. This indicates our scheme preserves spatial information more effectively than PCA, Wavelet, PNN, and SVM approaches. Our scheme has given quite higher MG values for these images. While, for the same images, PCA, Wavelet, PNN, and SVM approaches observed relatively lower values of MG. Similarly, proposed scheme has significant improved values of STD than that of PCA, Wavelet, PNN, and SVM approaches. Figure 13 shows that our proposed scheme has more accuracy value as compared to SVM and PNN approaches for various real blurred images.

Table 4 shows the performance comparison of the proposed scheme with recent Wavelet-based image fusion approaches of four-band Wavelet, vector Wavelet, and multi-band vector Wavelet proposed in [11]. It is observed from Table that proposed scheme outperforms these Wavelet-based approaches in terms of PSNR, MI, and RMSE measures.

It is clear from Table 4 that our proposed scheme has noteworthy higher PSNR values of 41.39 and 42.74 for Cameraman and Lena images, respectively. However, for these images, four-band Wavelet, vector Wavelet, and multi-band vector Wavelet approaches give relatively lower values of PSNR. Similarly proposed scheme has provided higher MI values that that of four-band Wavelet, vector Wavelet, and multi-band vector Wavelet approaches. The proposed scheme has yielded lowest RMSE values of 2.16 and 1.79 for the same images. On the other hand, for these images, Wavelet-based recent approaches, give relatively higher values of RMSE. Figure 14 indicates that our proposed scheme has produced very good results in terms of MI than previous fusion approaches.

5 Conclusion

In this paper, we have studied the performance of RF based ensemble scheme for multi-focus image fusion in terms of various quality measures. The proposed scheme has effectively incorporated both feature and decision level information. The feature-level information is extracted from both spatial and frequency domains. These informative features are tailored, during RF algorithm, for useful ensemble of diverse type of random trees. The predicted labels of ensemble trees are aggregated to construct the image fusion. The proposed scheme has yielded improved performance on various standard images and real blurred images. Our scheme has demonstrated better-fused image than feature-level approaches in PCA and wavelet transform. Moreover, our novel ensemble approach has given better-fused images than SVM- and PNN-based approaches under various qualitative and quantitative measures.

It is anticipated that that our scheme can be used as a useful tool for other applications of multi-focus image fusion. In future, we intend to extend our study for medical image fusion.

References

Mitianoudis N, Stathaki T (2007) Joint fusion and blind restoration for multiple image scenarios with missing data. Comput J 50(6):660–673

Susperregi L, Arruti A, Jauregi E, Sierrac B, Martínez-Otzetaa JM, Lazkanoc E, Ansuategui A (2013) Fusing multipleIimage transformations and a thermal sensor with Kinect to improve person detection ability. Eng Appl Artif Intell 26(8):1980–1991

Zhu H, Liu M, Ji H, Li Y (2010) Combined invariants to blur and rotation using Zernike moment descriptors. Pattern Anal Appl. doi:10.1007/s10044-009-0159-9:309-319

Dai X, Zhang H, Liu T, Shu H, Luo L (2014) Legendre moment invariants to blur and affine transformation and their use in image recognition. Pattern Anal Appl 17(2):311–326

Naidu VPS, Raol JR (2008) Pixel-level image fusion using wavelets and principal component analysis. Def Sci J 58(3):338–352

Hamzaa AB, Heb Y, Krimc H, Willskyd A (2005) A multiscale approach to pixel-level image fusion. Integr Comput Aided Eng 12:135–146

Burt PJ, Adelson EH (1983) The Laplacian pyramid as a compact image code. IEEE Trans Commun 31(4):532–540

Matsopoulos GK, Marshall S, Brunt JNH (1994) Multiresolution morphological fusion of MR and CT images of the human brain. IEE Proc Vision Image Signal Proc 141(3):137–142

Burt PJ, Kolczynski RJ (1993) Enhanced image capture through fusion. In: Fourth international conference on computer vision 1993, pp 173–182

Mumtaz A, Choi TS, Majid A, Mumtaz A (2010) Image fusion algorithm based on dual tree complex wavelet transform and support vector machine. In: International Bhurban conference on applied sciences & technology, Islamabad, Pakistan, pp 197–202

Lan Y, Ren H, Zhang Y (2013) Multi-band vector wavelet transformation based multi-focus image fusion algorithm. J Softw 8(1):208–217

Goodman TNT, Lee SL, Tang WS (1993) Wavelets in wandering subspaces. Trans Am Math Soc 338(2):639–654

Piella G (2003) A general framework for multiresolution image fusion: from pixels to regions. Inf Fusion 4:259–280

S-h Zhao, Xue-zhi F, Kang G-d, Ramadan E (2002) Multi-source remote sensing image fusion based on support vector machine. Chin Geogr Sci 12:244–248

Mamatha SG, Rahim SA, Raj CP (2012) Feature-level multi-focus image fusion using neural network and image enhancement. Global J Comput Sci Technol Graph Vis 12(10):16–23

Pagidimarry M, Babu KA (2011) An all approach for multi-focus image fusion using neural network. Int J Comput Sci Telecommun 2(8):23–29

Li S, Kwok JT, Wang Y (2002) Multifocus image fusion using artificial neural networks. Pattern Recogn Lett 23:985–997

Li S, Kwok JT, Wang Y (2004) Fusing images with multiple focuses using support vector machines. IEEE Trans Neural Netw 15(6):1555–1561

Wang X-Y, Zhang B-B, Yang H-Y (2012) Active SVM-based relevance feedback using multiple Classifiers ensemble and features reweighting. Eng Appl Artif Intell 26(2013):368–381

Ali S, Majid A, Khan A (2014) IDM-PhyChm-Ens: intelligent decision-making ensemble methodology for classification of human breast cancer using physicochemical properties of amino acids. Amino Acids 46(4):977–993

Breiman L (1996) Bagging predictors. Mach Learn 26:123–140

Breiman L (2001) Random forests. Mach Learn 45(1):5–32

Jiwu H, Shi YQ, Xianhua D (1999) A segmentation-based image coding algorithm using the features of human vision system. J Image Graph 4(5):400–404

Eskicioglu AM, Fisher PS (1993) Image quality measures and their performance. IEEE Trans Commun 43(12):2959–2965

Canny J (1986) A computational approach to edge detection. IEEE Trans Pattern Anal Mach Intell 8(6):679–698

Zhang Y, Ge L (2009) Efficient fusion scheme for multi-focus images by using blurring measure. Digit Signal Proc 19(2):186–193

Naidu VPS, Raol JR (2008) Pixel-level Image fusion using wavelets and principal component analysis. Defen Sci J 58(3):338–352

Klonus S, Ehlers M (2009) Performance of evaluation methods in image fusion. In: 12th International conference on information fusion, Seattle, WA, USA, July 6–9 2009

Heng C, LI Jie, Weile Z (2006) A novel support vector machine-based multifocus image fusion algorithm. In: International conference on communications, circuits and systems proceedings, 2006, pp 500–504

Acknowledgments

This research work is financially supported by the Higher Education Commission of Pakistan under the indigenous PhD scholarship No. 17-5-4(Ps4-101) HEC/Ind-Sch-2007.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Kausar, N., Majid, A. Random forest-based scheme using feature and decision levels information for multi-focus image fusion. Pattern Anal Applic 19, 221–236 (2016). https://doi.org/10.1007/s10044-015-0448-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10044-015-0448-4